1. Introduction

Light detection and ranging (LIDAR) is a critical sensor for autonomous vehicles, as it provides a dense pointcloud with exceptional angular resolution, enabling mapping as well as the detection and classification of moving objects in the environment [1]. Each point in the pointcloud is a detection event: the LIDAR sensor emits energy, receives some portion of the reflected energy, and uses the time delay between these two events to calculate an accurate estimate of distance.
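As a minimal sketch of this ranging relation (illustrative only, and assuming propagation in air with a refractive index of approximately 1), the one-way distance is half the round-trip optical path, d = cΔt/2:

```python
# Speed of light in vacuum (m/s); we assume the refractive index of air is ~1.
C = 299_792_458.0


def tof_distance(delay_s: float) -> float:
    """Convert a round-trip time delay (s) to a one-way target distance (m).

    The emitted light travels to the target and back, so the distance to the
    target is half of the total optical path c * delay.
    """
    return C * delay_s / 2.0


# A 100 ns round trip corresponds to a target roughly 15 m away.
print(f"{tof_distance(100e-9):.3f} m")
```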

Next-generation LIDAR will use homodyne, or coherent, detection in the receiver hardware; this approach has several advantages over direct detection systems, such as the ability to instantaneously measure the Doppler velocity of moving targets [1]. This is possible because a homodyne detection system measures both the amplitude and the phase of the reflected light, which provides the LIDAR sensor with additional information about objects in the environment. In contrast, direct detection systems are sensitive only to the intensity of the received signal, which is sufficient for ranging but cannot reveal the Doppler shift from a moving object.
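As an illustrative sketch of how phase-sensitive detection yields velocity: for a monostatic LIDAR, a target moving with radial velocity v shifts the return frequency by f_D = 2v/λ, so v = f_D·λ/2. The example uses the 1545.3 nm wavelength from Section 4; the sign convention (positive for an approaching target) is our assumption.

```python
WAVELENGTH_M = 1545.3e-9  # laser wavelength used in Section 4 (m)


def radial_velocity(doppler_shift_hz: float, wavelength_m: float = WAVELENGTH_M) -> float:
    """Radial velocity (m/s) from a measured Doppler shift (Hz).

    The round trip doubles the shift (f_D = 2 v / wavelength), so
    v = f_D * wavelength / 2. Positive means approaching (assumed convention).
    """
    return doppler_shift_hz * wavelength_m / 2.0


# A target approaching at 10 m/s shifts the return by about 12.94 MHz.
shift_hz = 2 * 10.0 / WAVELENGTH_M
print(f"shift = {shift_hz / 1e6:.2f} MHz -> v = {radial_velocity(shift_hz):.2f} m/s")
```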

Autonomous vehicles use sensors to understand the world around them, and in many applications, understanding the physical properties of the environment can greatly improve their functionality, for example when a sensor can classify a detection by its material type or structure; we call this ability “material classification”. This capability has been demonstrated in several autonomous applications, such as using feedback from force sensors in robotic excavators [2], using robotic arms and optical sensors in recycling plants [3], or capturing infrared (IR) spectra of biomass on a production line to understand the composition of the feedstock [4]. Other methods of active sensing for material classification have been demonstrated with thermal sensors [5] as well as millimeter-wave vibrometry [6].

Material classification using laser sensors has shown tremendous potential. Unlike camera-based methods, which depend on lighting and rely on visible color [7], lasers provide a stimulus to the material and the sensor receiver records the response. Typically, the reflection from an object is treated as coming from an ideal Lambertian surface, i.e., a perfectly diffuse reflector, but real-world objects have complicated behaviour that can be characterized and used to identify materials [8]. Kirchner et al. demonstrated the ability to classify five materials using the depth error over angle and the intensity from a commercial laser rangefinder [9]. Similarly, intensity histograms have been used in aerial LIDAR to classify different types of forest, as well as surfaces such as water, gravel and low vegetation, using a simple decision tree classifier [10].
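For reference, the ideal Lambertian model mentioned above is commonly written, for an extended target that fills the beam of a monostatic LIDAR, as a received power P_r that scales with the transmitted power P_t, the target reflectivity ρ, the cosine of the angle of incidence θ, and the inverse square of the range R. This proportional form omits system constants such as receiver aperture area and optical efficiency:

```latex
P_r \propto \frac{P_t \, \rho \, \cos\theta}{R^2}
```

Departures from this cosine dependence, such as specular lobes or angle-dependent depolarization, are the kind of complicated behaviour that carries material information.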

Several authors have used off-the-shelf time-of-flight (ToF) cameras to exploit depth errors for classifying materials in an image, independent of the material color [7, 11]; Tanaka et al. also demonstrated that sweeping the modulation frequency greatly improves the accuracy, from 55.0% to 89.9% [7].

The wavelength response of objects in the environment is a highly effective means of discriminating materials; it underpins the large field of spectroscopy and is exploited in diverse methods, such as hyperspectral cameras for material identification [12, 13], optical absorbance sensors for detecting heavy metals in water [7], and many others. For the purpose of this article, we will focus on single-wavelength LIDAR systems.

Polarization is an additional property of light that describes the orientation of the oscillation of an electromagnetic wave; when light is reflected from an object, its polarization state may change in a manner related to the physical structure of the object's surface [14]. This insight led to investigations into using polarization LIDAR to measure the depolarization of returns from different particles. Simply stated, Mie scattering from spherical particles results in backscattered light that maintains the polarization of the transmit beam; when the particles are non-spherical, some proportion of the reflected light is depolarized [14]. In a specific example, Sassen et al. used polarization LIDAR to measure the ash size distribution from a volcanic eruption off the coast of Alaska [15]. Alternatively, simply augmenting LIDAR with a passive polarimetric sensor was shown to provide over 90% accuracy in classifying materials, even in low-SNR conditions [16]; in that work, the authors demonstrated a large improvement in classification accuracy from combining polarization with the LIDAR information.
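Polarization LIDAR commonly summarizes this effect with the linear depolarization ratio δ = P⊥/P∥: the received power in the polarization orthogonal to the transmit beam divided by the power in the transmitted (co-polarized) state. The short sketch below computes it; the power values are made up for illustration and are not taken from [14] or [15].

```python
def linear_depolarization_ratio(p_cross: float, p_co: float) -> float:
    """Ratio of cross-polarized to co-polarized received power.

    Close to zero for backscatter from spherical particles (polarization is
    preserved); larger for non-spherical particles such as volcanic ash.
    """
    if p_co <= 0:
        raise ValueError("co-polarized power must be positive")
    return p_cross / p_co


# Hypothetical received powers (arbitrary units).
print(linear_depolarization_ratio(p_cross=0.01, p_co=1.0))  # sphere-like return
print(linear_depolarization_ratio(p_cross=0.35, p_co=1.0))  # non-spherical return
```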

Using polarization-coded LIDAR, Nunes-Pereira et al. demonstrated that polarization can be used effectively to classify common materials observed in the operational domains of autonomous vehicles. To understand the effect, they conducted extensive examinations of the polarization-dependent reflectance of materials, and then used optical coherence tomography (OCT) to determine the material cross-section of automotive car paints [17]. To reconstruct the degree of polarization, the authors used a pulsed ToF LIDAR with a linear polarizer placed in front of the optics. To capture the orthogonal polarization, they rotated the polarizer and repeated the capture, synthesizing a material-coded pointcloud by processing both polarizations.

In this paper, we demonstrate a polarization-diverse LIDAR using random modulated continuous wave (RMCW) ranging with a polarization-diverse homodyne receiver, enabling material classification on an instantaneous, shot-by-shot basis using only the data acquired by the LIDAR sensor during the acquisition time. Our polarization-diverse receiver uses an integrated photonic chip to produce the received signals for ranging and for calculating the polarization parameters required by our machine learning model. To our knowledge, this is the first polarization-diverse RMCW LIDAR system demonstrated to perform instantaneous material classification.

4. Experimental Setup and Method

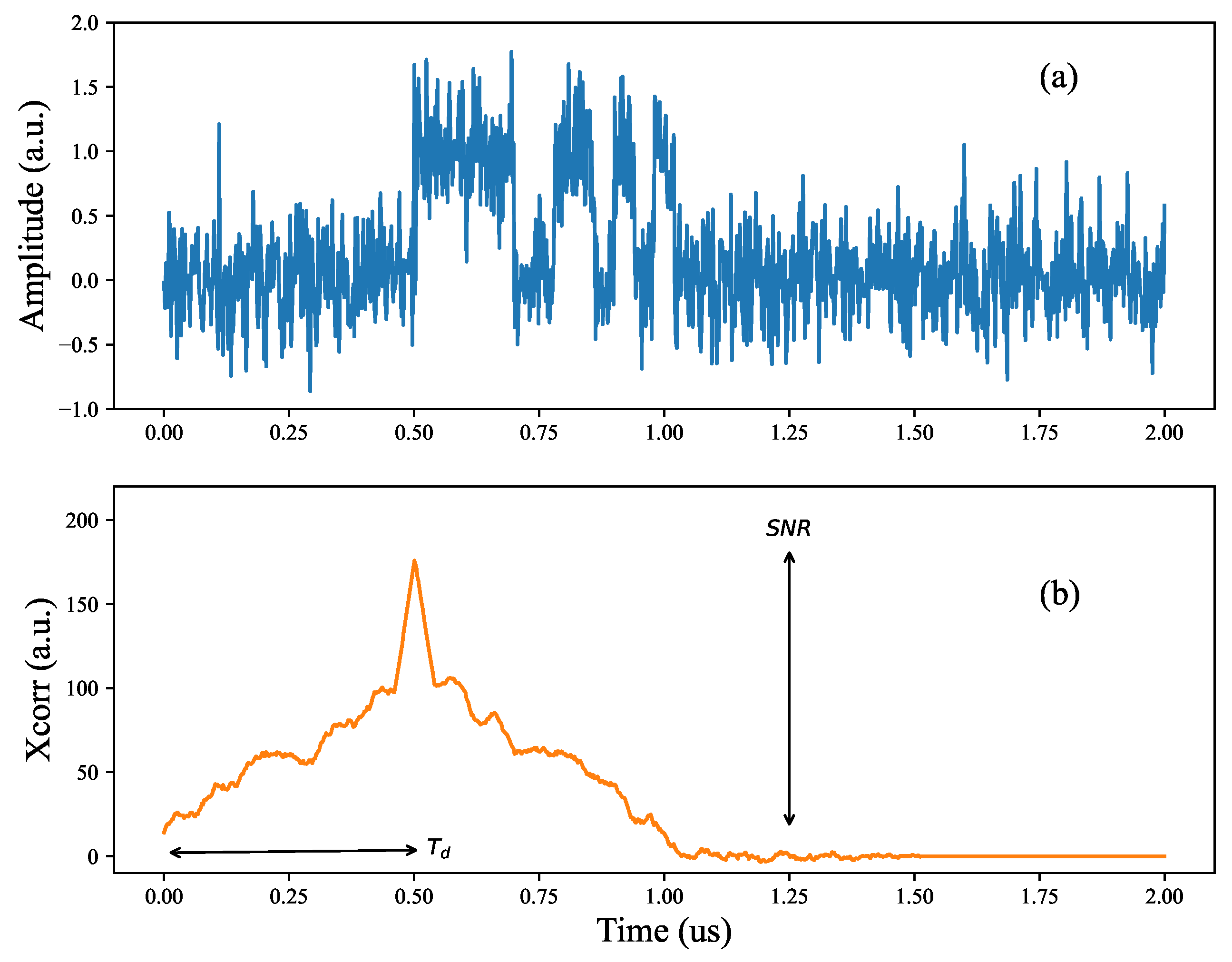

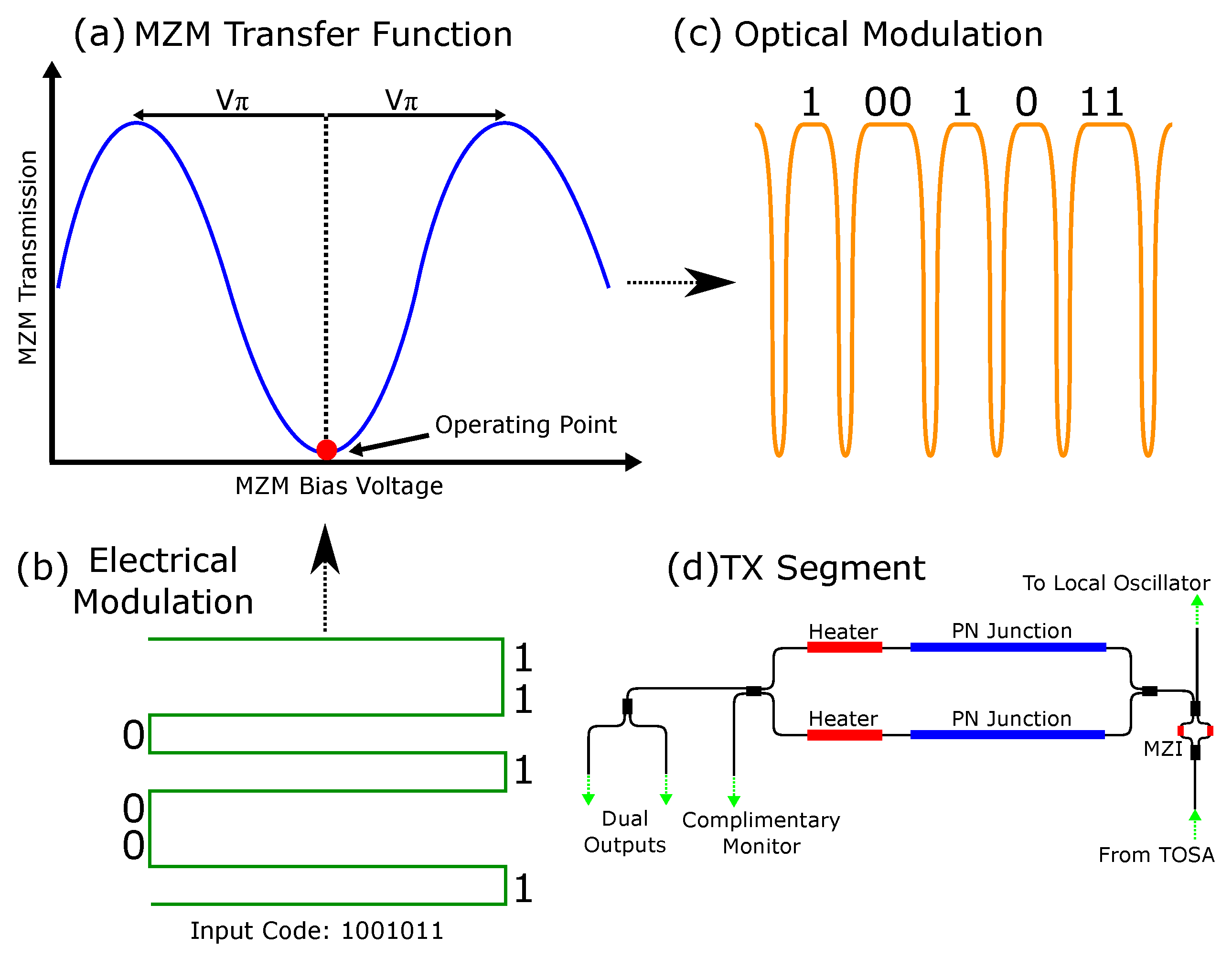

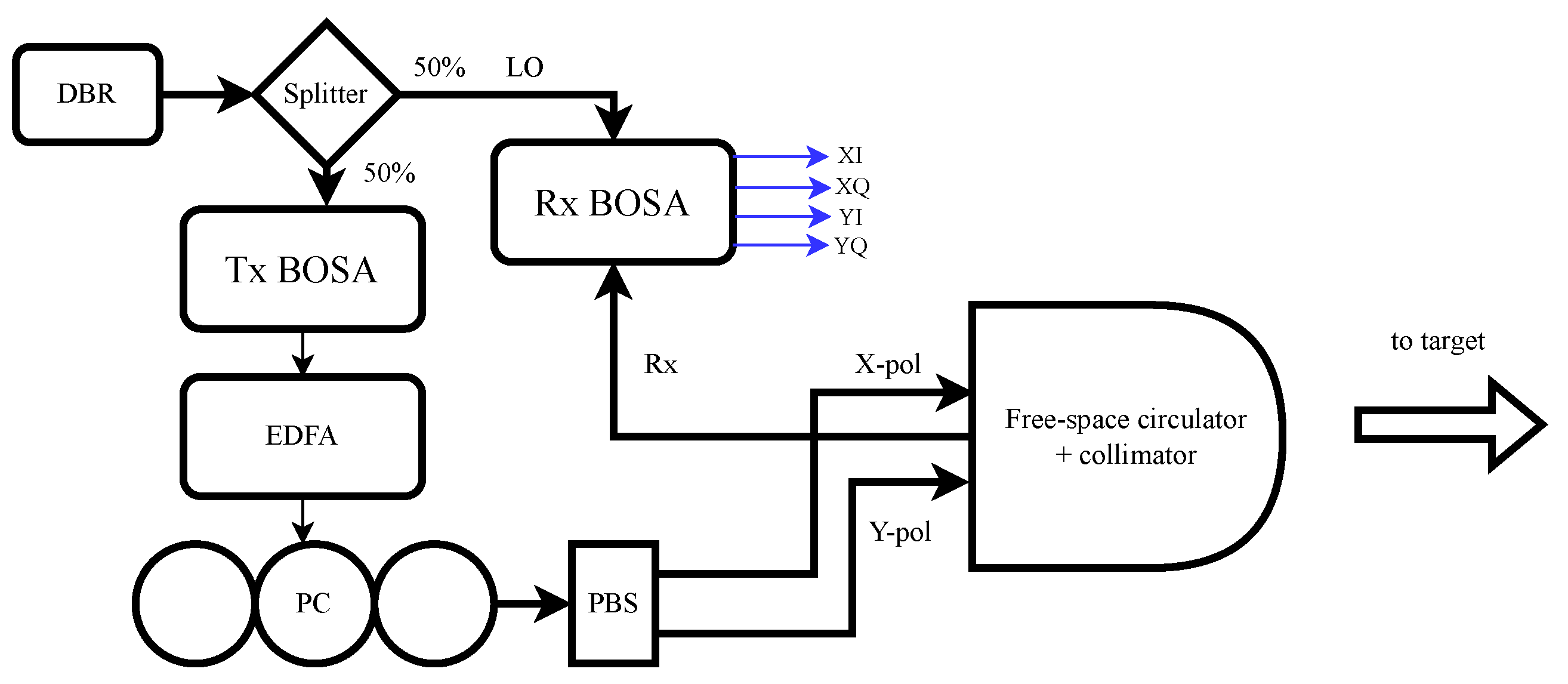

Figure 4 shows the experimental setup used to validate our material classification approach with a single-point RMCW LIDAR system. We use a wavelength-tunable DBR laser (Oclaro TL3000) set to 1545.3 nm with +12 dBm output power. The laser is connected to the input of our custom silicon photonic BOSA, described in Section 3.1, which phase-modulates the laser signal with a 512-bit Gold code, with the MZM biased as shown in Figure 2(a). An erbium-doped fiber amplifier (EDFA) then boosts the transmitted power to +27 dBm.
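The power levels above are quoted in dBm (decibels relative to 1 mW). The illustrative conversion below puts the laser output at about 15.8 mW and the EDFA output at about 501 mW. The 15 dB difference is only the net gain from the laser to the EDFA output; because it includes the insertion loss of the BOSA and modulator, which is not quantified here, the EDFA's own gain is larger.

```python
import math


def dbm_to_mw(p_dbm: float) -> float:
    """Convert optical power from dBm to milliwatts."""
    return 10 ** (p_dbm / 10)


def mw_to_dbm(p_mw: float) -> float:
    """Convert optical power from milliwatts to dBm."""
    return 10 * math.log10(p_mw)


laser_dbm, edfa_out_dbm = 12.0, 27.0
print(f"laser output: {dbm_to_mw(laser_dbm):.1f} mW")
print(f"EDFA output: {dbm_to_mw(edfa_out_dbm):.0f} mW")
print(f"net gain, laser to EDFA output: {edfa_out_dbm - laser_dbm:.0f} dB")
```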

To control the polarization of the transmitted beam, we connect a fiber polarization controller to the output of the EDFA and align the polarization to 45° linear relative to the input of the polarization beam splitter (PBS). This gives equal power in the X- and Y-polarizations in separate fibers, which are assembled together into a fiber array. Because our design of experiments varies the transmitted polarization, we connect or disconnect the inputs of the PBS to create approximately 0°, 45° and 90° linear polarizations.
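For an ideal PBS, a linearly polarized input at angle θ to the PBS axis splits its power as cos²θ into the X output and sin²θ into the Y output (Malus's law), which is why a 45° input gives equal power in both fibers. This idealized sketch ignores PBS insertion loss and finite extinction ratio:

```python
import math


def pbs_split(total_power: float, angle_deg: float) -> tuple[float, float]:
    """Return (X-port, Y-port) power for an ideal PBS.

    angle_deg is the input linear-polarization angle relative to the PBS
    X axis; losses and finite extinction ratio are ignored.
    """
    theta = math.radians(angle_deg)
    return total_power * math.cos(theta) ** 2, total_power * math.sin(theta) ** 2


# A 45-degree input splits the power evenly between the X and Y fibers.
x_pow, y_pow = pbs_split(1.0, 45.0)
print(f"X: {x_pow:.3f}, Y: {y_pow:.3f}")
```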

The fiber array closely positions both Tx fibers and a single Rx fiber in parallel behind a birefringent and magneto-optic crystal stack. Such non-reciprocal devices have been used in optics for decades to separate forward- and backward-travelling waves independently of polarization; we direct the reader to the literature for more detail on these devices [26, 27]. A collimation system that projects both the Tx and Rx fibers onto the same optical axis is called a coaxial LIDAR system, and has several benefits, such as ensuring that the Tx and Rx paths remain aligned at all times. In this manner, the system resembles a free-space optical circulator with a single collimating lens that focuses onto both the Tx and the Rx fibers. The resulting collimated beam has a … intensity diameter of … × ….

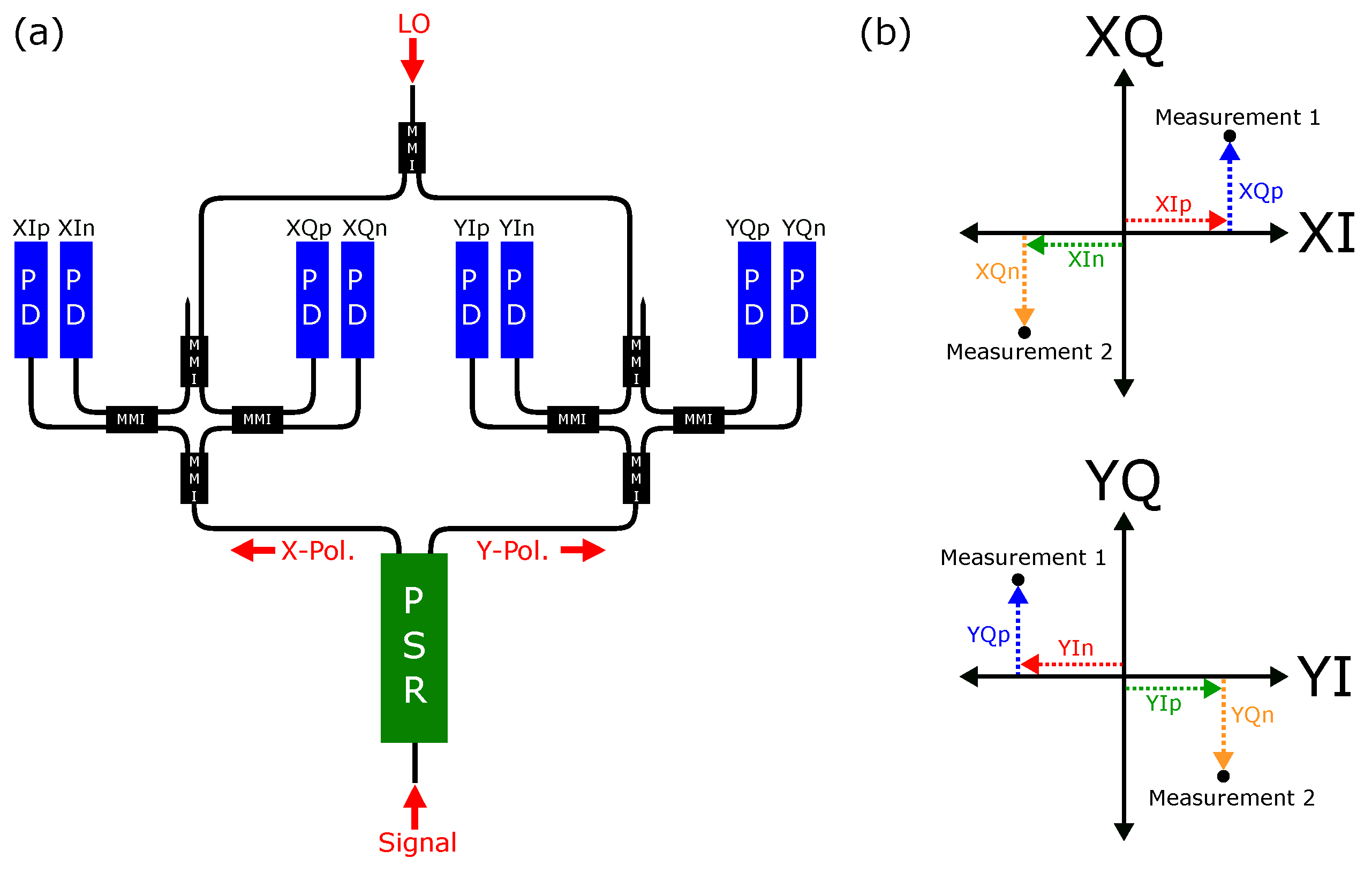

This beam is directed to the target of interest and is reflected back along the same path to the collimation optics. The free-space optical circulator collects the received signal and couples it into a single-mode fiber, which is edge-coupled to the Rx BOSA described in Section 3.2. The photonic integrated circuit mixes the local oscillator with the weak reflected LIDAR signal from the environment and creates four photocurrents, for two polarizations and two quadratures. These photocurrents are converted to voltages and then sampled by four 500 MSps ADCs, producing a polarization-diverse field reconstruction of the received LIDAR signal, labelled XI, XQ, YI and YQ, where X and Y refer to horizontal and vertical polarization, and I and Q denote the in-phase and quadrature photocurrents.

These digitized signals are processed in two steps. First, they are combined into a single measurement and digitally correlated with the ideal RMCW code, producing a correlation signal similar to Figure 1(b). From this, we calculate the distance to the target and the SNR.
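The sketch below illustrates correlation-based RMCW ranging under several assumptions that are ours rather than details of our processing chain: a random ±1 code stands in for the 512-bit Gold code; the code is sampled at one sample per chip at the 500 MSps ADC rate; the X and Y channels are combined by summing their correlation powers, which makes the peak insensitive to the received polarization; and SNR is defined as the peak correlation power over the mean off-peak power. Because the code is transmitted continuously, a circular (FFT-based) cross-correlation is used, and lag k maps to a distance of ck/(2f_s).

```python
import numpy as np

C = 299_792_458.0  # speed of light (m/s)
FS = 500e6         # ADC sample rate (samples/s); one sample per chip assumed


def circular_xcorr(rx: np.ndarray, code: np.ndarray) -> np.ndarray:
    """Circular cross-correlation via FFT; lag k means a delay of k samples."""
    return np.fft.ifft(np.fft.fft(rx) * np.conj(np.fft.fft(code)))


def range_and_snr(x_field, y_field, code, fs=FS):
    """Distance (m) and SNR (dB) from the polarization-diverse received fields.

    Assumption: the X and Y channels are combined by summing correlation powers.
    """
    power = (np.abs(circular_xcorr(x_field, code)) ** 2
             + np.abs(circular_xcorr(y_field, code)) ** 2)
    k = int(np.argmax(power))
    noise_floor = np.mean(np.delete(power, k))  # mean off-peak power
    snr_db = 10 * np.log10(power[k] / noise_floor)
    distance_m = C * (k / fs) / 2               # round trip -> one way
    return distance_m, snr_db


def complex_noise(rng, n, sigma=0.5):
    """Circular complex Gaussian noise with std sigma per quadrature."""
    return rng.normal(scale=sigma, size=n) + 1j * rng.normal(scale=sigma, size=n)


rng = np.random.default_rng(0)
code = rng.choice([-1.0, 1.0], size=512)  # stand-in for the Gold code
true_delay = 67                            # samples (134 ns at 500 MSps)
echo = np.roll(code, true_delay)
x_field = 0.2 * np.exp(1j * 0.3) * echo + complex_noise(rng, code.size)  # XI + j*XQ
y_field = 0.2 * np.exp(1j * 1.2) * echo + complex_noise(rng, code.size)  # YI + j*YQ
distance, snr = range_and_snr(x_field, y_field, code)
print(f"distance = {distance:.2f} m, SNR = {snr:.1f} dB")
```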

Second, we process the polarization measurements to calculate the Stokes time series of the received signal, as in Section 2.2. We then take the mean of each Stokes parameter over the duration of the codeword and use the scalar values S0, S1, S2 and S3 as inputs to our machine learning model; combined with the distance and SNR, this gives six inputs to our neural network model.
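A minimal sketch of this feature extraction is shown below, assuming the fields are formed as Ex = XI + jXQ and Ey = YI + jYQ (a convention adopted here for illustration; the exact definitions are those of Section 2.2). Because the phase code multiplies both polarizations equally, it cancels in |Ex|², |Ey|² and Ex·Ey*, so the Stokes parameters can be averaged directly over the codeword.

```python
import numpy as np


def stokes_timeseries(xi, xq, yi, yq):
    """Per-sample Stokes parameters (S0..S3) from the four digitized channels.

    Assumes Ex = XI + j*XQ and Ey = YI + j*YQ, and uses the convention
    S3 = -2*Im(Ex * conj(Ey)); some references (possibly including
    Section 2.2) use the opposite sign for S3.
    """
    ex = np.asarray(xi, dtype=float) + 1j * np.asarray(xq, dtype=float)
    ey = np.asarray(yi, dtype=float) + 1j * np.asarray(yq, dtype=float)
    cross = ex * np.conj(ey)
    s0 = np.abs(ex) ** 2 + np.abs(ey) ** 2
    s1 = np.abs(ex) ** 2 - np.abs(ey) ** 2
    s2 = 2.0 * cross.real
    s3 = -2.0 * cross.imag
    return np.stack([s0, s1, s2, s3])


def feature_vector(xi, xq, yi, yq, distance_m, snr_db):
    """Six classifier inputs: mean S0..S3 over the codeword, distance, SNR."""
    s_mean = stokes_timeseries(xi, xq, yi, yq).mean(axis=1)
    return np.concatenate([s_mean, [distance_m, snr_db]])


# 45-degree linear polarization: equal, in-phase X and Y fields that share
# the same +/-1 phase code.
code = np.array([1.0, -1.0, 1.0, 1.0, -1.0])
zeros = np.zeros_like(code)
print(feature_vector(code, zeros, code, zeros, distance_m=12.0, snr_db=15.0))
```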

4.1. Data Collection Methodology

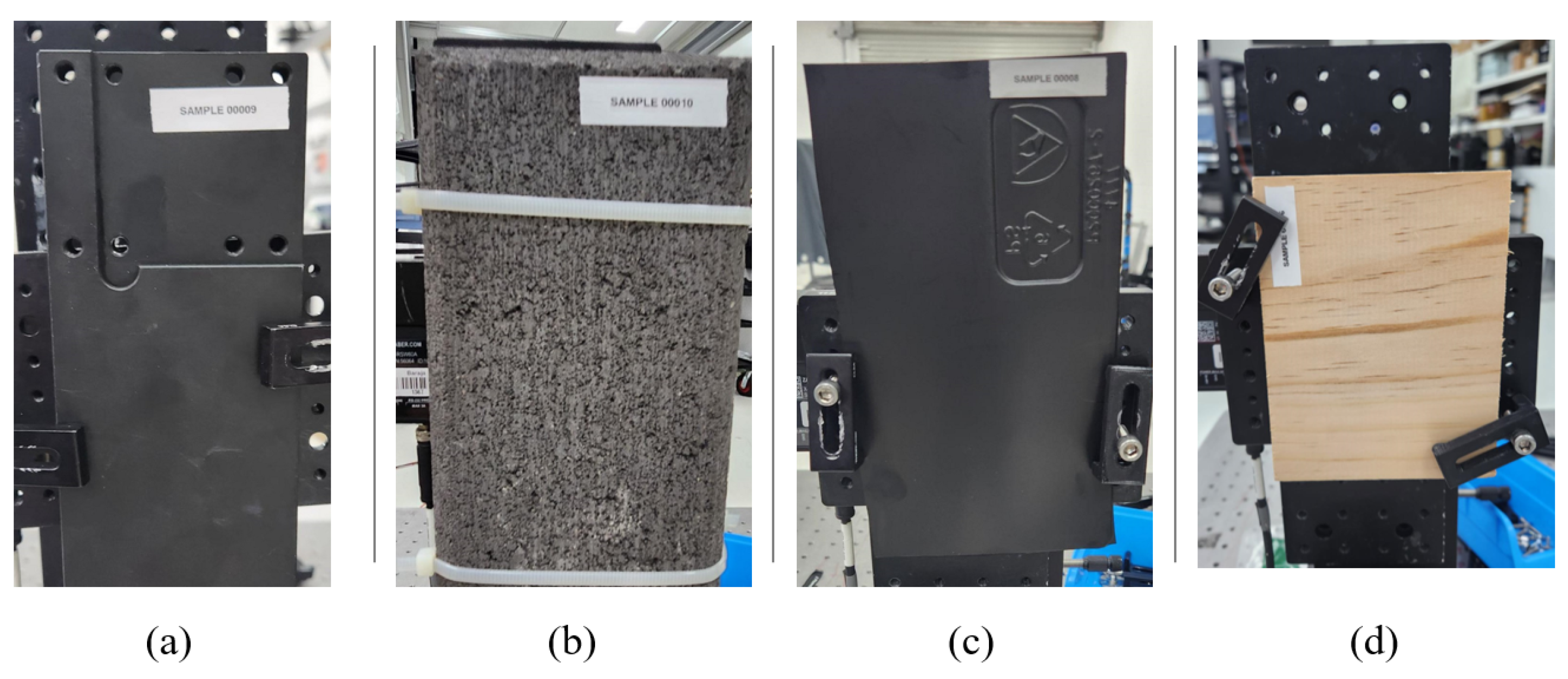

The set of materials used in our experiment consists of four that would occur in an urban environment: black powder-coated aluminum, concrete, engineered wood and black plastic; photos of the samples are shown in Figure 5. The samples are large enough for the entire laser beam to fit on them without clipping. To assess the classification performance of the polarization-diverse RMCW LIDAR, we sought to collect data under controlled experimental conditions, but with predetermined variations that mimic a real environment. To this end, we collected data for every material sample while varying the target distance, the angle of incidence to the LIDAR (which we call yaw), the rotation of the material in the plane perpendicular to the LIDAR optical path (which we call roll), and the polarization of the transmit signal from the RMCW LIDAR. These deliberate variations explore the range of polarizations received by the BOSA and validate the classifier against these variations.

The variations are explored using a full-factorial design of experiments (DOE), and the levels used for each input factor are listed in Table 1. For each of the 108 combinations, we collect roughly 830 measurements, for a total of approximately 89 640 measurements per material. Using our RMCW reference code, we correlate the return signal and use a constant false alarm rate (CFAR) algorithm, with a false alarm rate of 10⁻⁴, to determine whether we have a valid peak [28]. If no peak passes the CFAR test, we reject the measurement completely.
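The specific CFAR variant is given in [28]; as an illustrative assumption, the sketch below uses cell-averaging CFAR (CA-CFAR) on the correlation-power trace. For exponentially distributed noise power (square-law detection of complex Gaussian noise) and N training cells, the threshold scale factor α = N(P_fa^(−1/N) − 1) yields the requested false-alarm probability P_fa. The training and guard cell counts are hypothetical, and if the two polarization channels are summed the noise is no longer exponential, so α is then only approximate. A measurement with no detections is rejected, mirroring the rule above.

```python
import numpy as np


def ca_cfar(power, num_train=16, num_guard=2, pfa=1e-4):
    """Cell-averaging CFAR on a circular 1-D correlation-power trace.

    Each cell's noise estimate is the mean of num_train training cells on
    each side, skipping num_guard guard cells next to the cell under test.
    Returns the indices of cells that exceed their adaptive threshold.
    """
    power = np.asarray(power, dtype=float)
    n_cells = power.size
    n_train = 2 * num_train
    # Threshold factor for exponentially distributed noise power.
    alpha = n_train * (pfa ** (-1.0 / n_train) - 1.0)
    # Offsets of the training cells relative to the cell under test.
    offsets = np.r_[-(num_guard + num_train):-num_guard,
                    num_guard + 1:num_guard + num_train + 1]
    hits = []
    for i in range(n_cells):
        noise = power[(i + offsets) % n_cells].mean()  # wraps around circularly
        if power[i] > alpha * noise:
            hits.append(i)
    return np.array(hits, dtype=int)


def is_valid_measurement(power, **cfar_kwargs):
    """Keep the measurement only if CFAR finds at least one peak."""
    return ca_cfar(power, **cfar_kwargs).size > 0


rng = np.random.default_rng(1)
trace = rng.exponential(scale=1.0, size=512)  # noise-only correlation power
trace[100] += 200.0                           # add a strong target peak
print(ca_cfar(trace), is_valid_measurement(trace))
```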

5. Results

As described in Section 4.1, each material was tested according to the DOE plan, with the polarization-diverse quadrature signals digitized and recorded for every configuration. Thus, we store more than 89 000 measurements for each material, and each measurement is processed to produce a distance to the target, the SNR of the cross-correlation peak, and the four Stokes parameters. In total, we collected 356 000 measurements to train and validate our classifier, with the DOE ensuring significant real-world variation in the dataset.

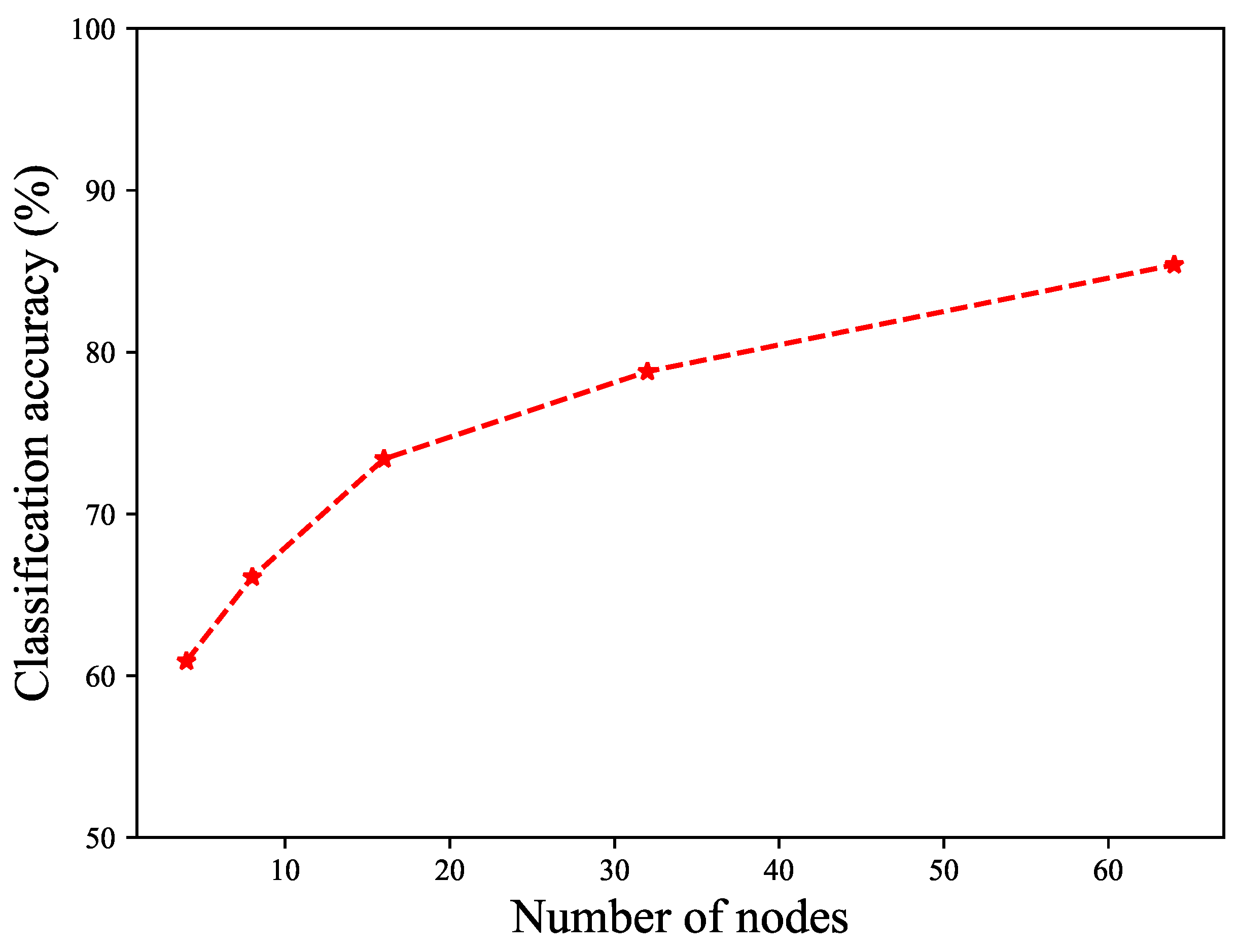

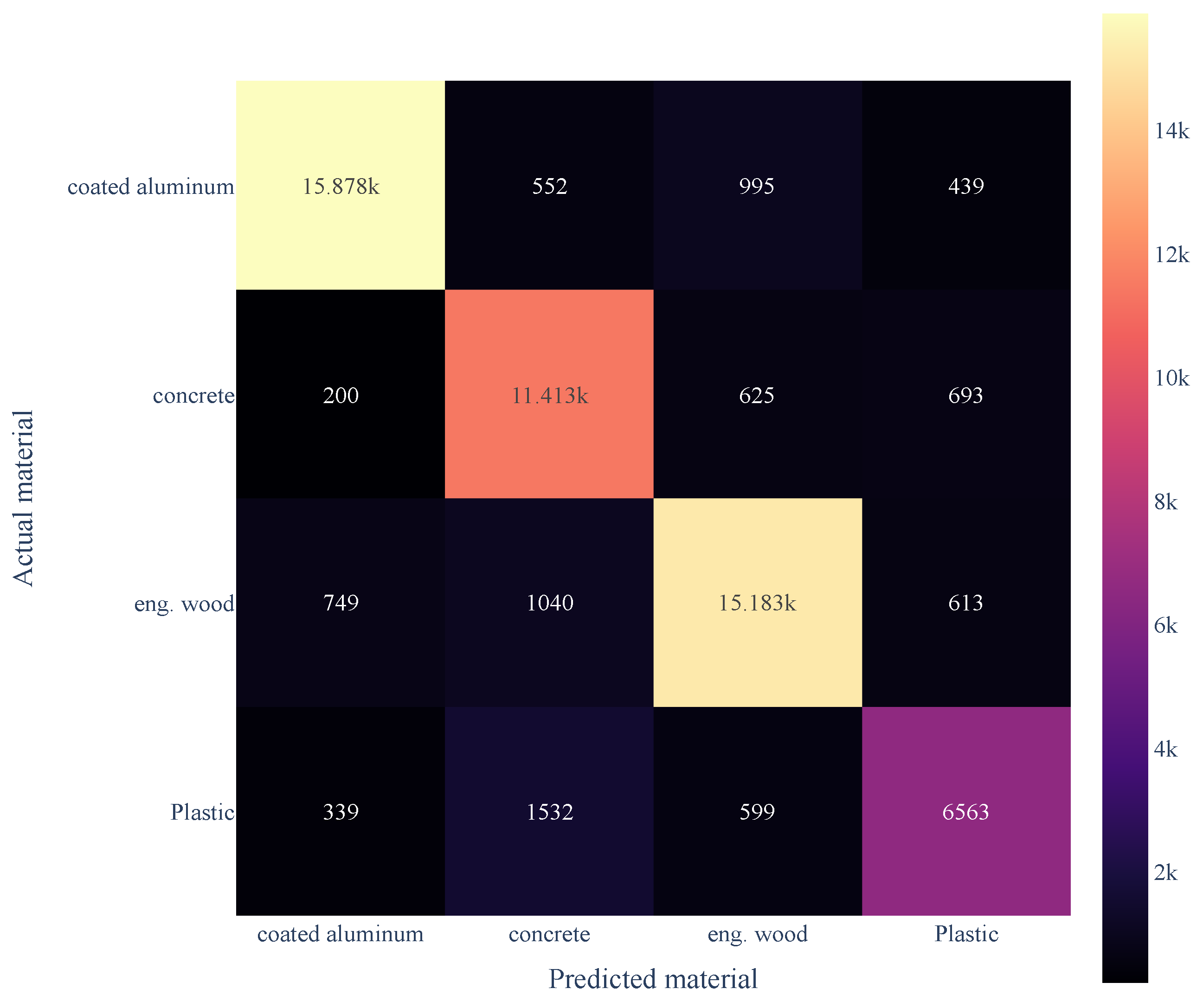

These six measurement outputs are the six inputs to our neural network. We trained a single-hidden-layer neural network with 64 nodes, as described in Section 2.3, and then ran the resulting model on the validation dataset. We express the performance of the classifier with a confusion matrix, shown in Figure 6. The overall classification accuracy is 85.4%; however, the confusion matrix shows that the majority of the misclassifications come from plastic, which has a classification accuracy of just 72.6%.
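For reference, overall and per-class accuracies follow directly from a confusion matrix: the overall accuracy is the trace divided by the total count, and the per-class accuracy is each diagonal entry divided by its row sum (assuming rows are true classes). The small matrix in this sketch is hypothetical and unrelated to Figure 6. Note that the overall figure weights each class by its number of validation samples, which can differ between materials.

```python
import numpy as np


def accuracies(confusion):
    """Overall and per-class accuracy from a confusion matrix.

    Rows are true classes and columns are predicted classes.
    """
    cm = np.asarray(confusion, dtype=float)
    overall = np.trace(cm) / cm.sum()
    per_class = np.diag(cm) / cm.sum(axis=1)
    return overall, per_class


# Hypothetical two-class example (not data from Figure 6).
overall, per_class = accuracies([[80, 20],
                                 [10, 90]])
print(f"overall = {overall:.3f}, per class = {per_class}")
```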

To explore how classifier performance depends on the number of neurons in the hidden layer, we repeated the training and validation for a selection of hidden layer sizes and measured the overall classification accuracy, shown in Figure 8.
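The network itself is described in Section 2.3. Purely as an illustration of the experiment behind Figure 8, the NumPy sketch below trains a single-hidden-layer classifier with a configurable number of hidden nodes and reports validation accuracy. The ReLU activation, full-batch gradient descent, learning rate, epoch count, feature standardization and the synthetic six-feature, four-class data are all assumptions made for this example, not the settings used for our results.

```python
import numpy as np


def train_mlp(X, y, n_hidden=64, n_classes=4, lr=0.1, epochs=500, seed=0):
    """Train a one-hidden-layer classifier (ReLU hidden layer, softmax output)
    with full-batch gradient descent on the mean cross-entropy loss."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W1 = rng.normal(scale=np.sqrt(2.0 / d), size=(d, n_hidden))
    b1 = np.zeros(n_hidden)
    W2 = rng.normal(scale=np.sqrt(1.0 / n_hidden), size=(n_hidden, n_classes))
    b2 = np.zeros(n_classes)
    Y = np.eye(n_classes)[y]                         # one-hot targets
    for _ in range(epochs):
        h = np.maximum(X @ W1 + b1, 0.0)             # hidden activations
        logits = h @ W2 + b2
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        p = np.exp(logits)
        p /= p.sum(axis=1, keepdims=True)            # softmax probabilities
        g = (p - Y) / n                              # gradient w.r.t. logits
        grad_W2, grad_b2 = h.T @ g, g.sum(axis=0)
        gh = (g @ W2.T) * (h > 0)                    # backpropagate through ReLU
        grad_W1, grad_b1 = X.T @ gh, gh.sum(axis=0)
        W1 -= lr * grad_W1
        b1 -= lr * grad_b1
        W2 -= lr * grad_W2
        b2 -= lr * grad_b2
    return W1, b1, W2, b2


def predict(params, X):
    """Predicted class index for each row of X."""
    W1, b1, W2, b2 = params
    return np.argmax(np.maximum(X @ W1 + b1, 0.0) @ W2 + b2, axis=1)


# Synthetic data with the same shape as our six inputs (hypothetical values).
rng = np.random.default_rng(42)
centers = rng.normal(scale=3.0, size=(4, 6))
y = rng.integers(0, 4, size=2000)
X = centers[y] + rng.normal(size=(2000, 6))
X = (X - X.mean(axis=0)) / X.std(axis=0)  # standardize each feature
train, val = slice(0, 1500), slice(1500, None)

for n_hidden in (4, 16, 64):
    params = train_mlp(X[train], y[train], n_hidden=n_hidden)
    acc = np.mean(predict(params, X[val]) == y[val])
    print(f"{n_hidden:3d} hidden nodes: validation accuracy = {acc:.3f}")
```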

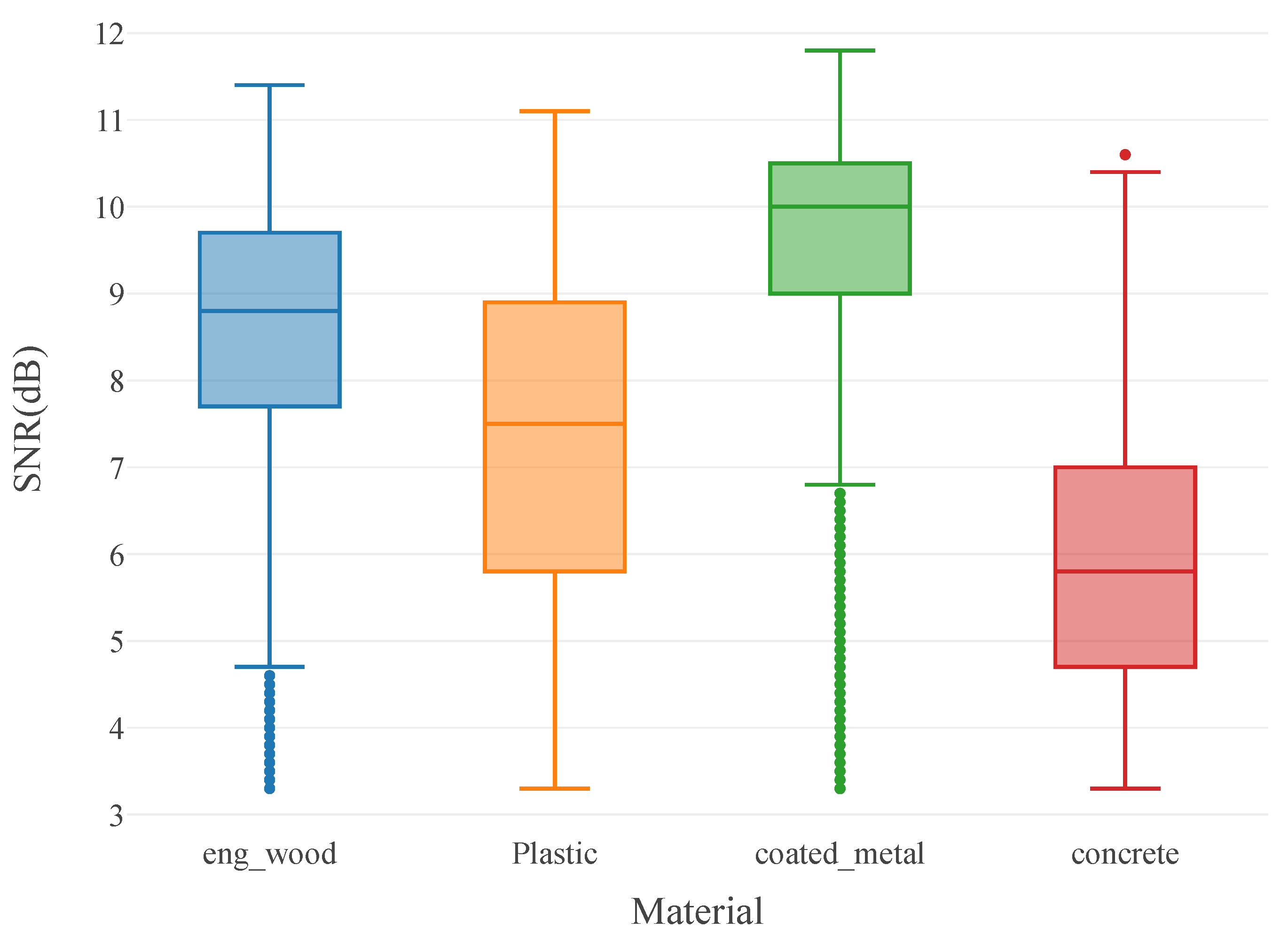

Figure 7.

Distribution of SNR values for all materials in the dataset.


Figure 8.

Classification accuracy as the number of nodes in the hidden layer increases.


We use a 3D scatter plot of the Stokes parameters S1, S2 and S3 to determine whether the materials show differing polarization responses; we remind the reader that the launch conditions were 0°, 45° and 90° linear polarization relative to the output window of the LIDAR sensor. As shown in Figure 9a, the measured Stokes parameters of all materials are well clustered, with two caveats: concrete (in purple) is clustered but not visible in this view, and coated metal is split into many smaller groupings. This would suggest that classification of concrete would have poor accuracy and that most of the false classifications would be for coated aluminum. In contrast, the confusion matrix in Figure 6 indicates a different conclusion: the accuracy for concrete is 88.3%, and the misclassification rate is highest for plastic.

Additional insight is available by plotting the relationship between SNR and the Stokes parameters; in Figure 9b, we see a clear distinction between concrete and the other materials, as concrete is strongly confined in S2 but widely dispersed in SNR. Similarly, we note that coated metal only has measurement points with SNR > 8 dB.