1. Introduction

The advancement of cloud computing has given businesses unprecedented flexibility and scalability [1]. However, alongside its benefits of cost reduction, increased efficiency, and above all agility, this progress brings new challenges, including resource optimization, cost management, and performance assurance [2]. In particular, the two migration strategies considered here, refactoring to a Microservices Architecture (MSA) and simple rehosting (Rehost), are directly linked to these challenges and complicate operational strategies in the cloud environment.

Many companies face problems such as inaccurate predictions of resource usage and excessive allocation of unnecessary resources, which lead to a surge in costs during the cloud transition process [3]. To address this, businesses have been adopting artificial intelligence (AI)-based automation tools to optimize cloud resources [4]. By leveraging AI technology, they can monitor resource usage in real time and automatically adjust resources as needed, thus reducing costs while maintaining performance [5,6].

This study utilizes IBM’s Turbonomic ARM and Instana APM tools to empirically compare the effectiveness of resource optimization and cloud cost management between cloud-native systems that have applied MSA and systems that have adopted simple rehosting strategies. Through this, it aims to explore the role and potential of AI in cloud resource management. Moreover, by comparing the refactored MSA systems with the simply rehosted systems, it seeks to evaluate the actual impact of AI tools on application performance and cloud cost reduction. The research findings are expected to expand the scope of cloud resource optimization and contribute to the establishment of more efficient cloud strategies by companies and institutions.

2. Method

This study adopts a systematic approach for the comparative analysis of resource optimization in the cloud environment. To ensure the reliability and reproducibility of the research, a detailed experimental design and data collection and analysis methods were implemented.

2.1. Tool Selection and Environment Setup

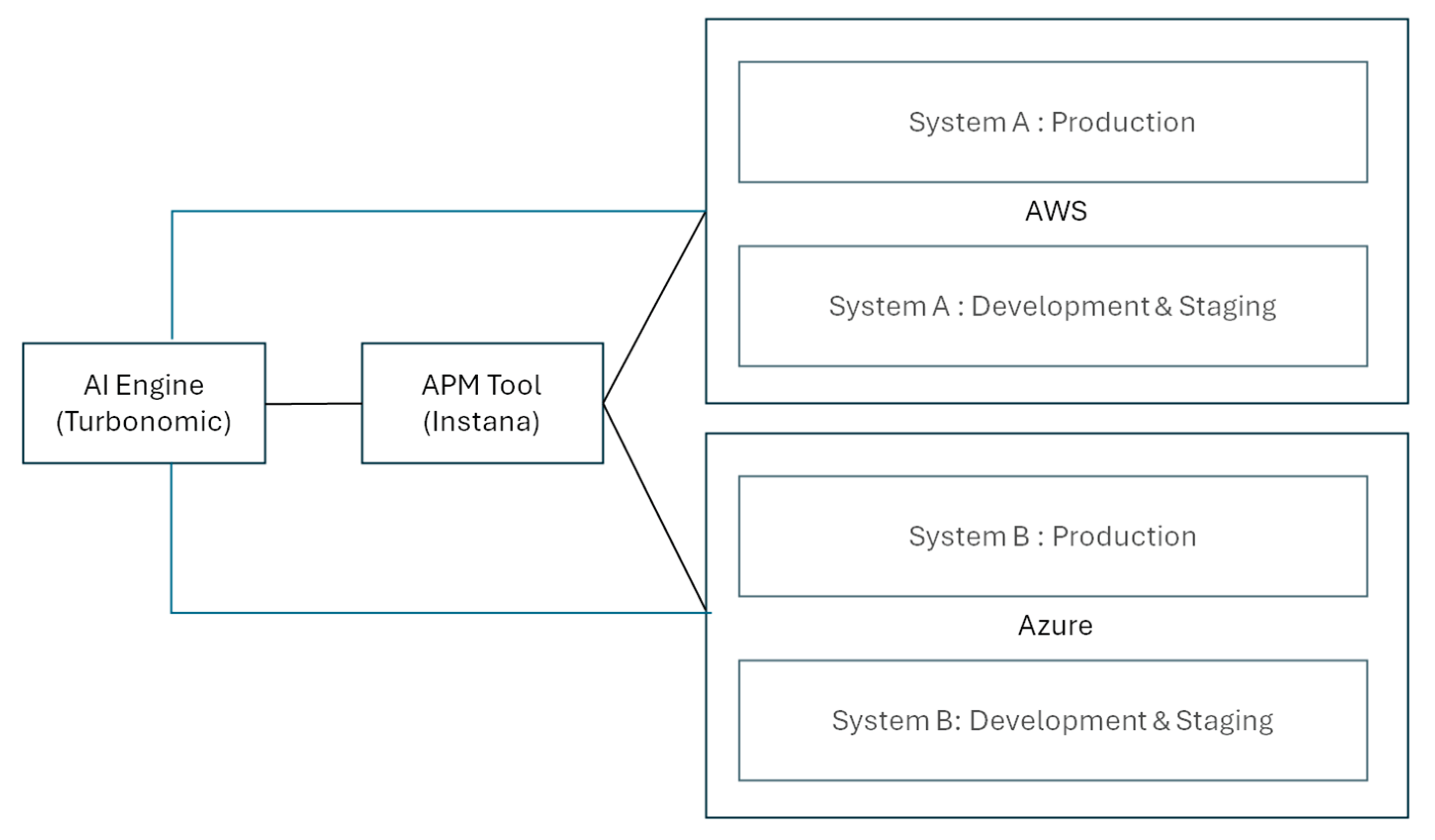

IBM Turbonomic ARM and Instana APM were chosen for this study because of their advanced resource optimization algorithms and real-time performance monitoring capabilities [7,8]. Turbonomic ARM automates workload placement, scaling, and resource allocation in both virtual and physical environments, which helps manage cloud costs [9]. Instana APM detects performance degradation in real time through application performance monitoring and provides insights for improvement [10,11]. Both tools offer deep integration with major cloud service providers such as AWS and Azure, enabling effective operation in complex cloud environments. These capabilities are central to the study’s objectives of cloud resource optimization and performance enhancement.

2.2. Experimental Design

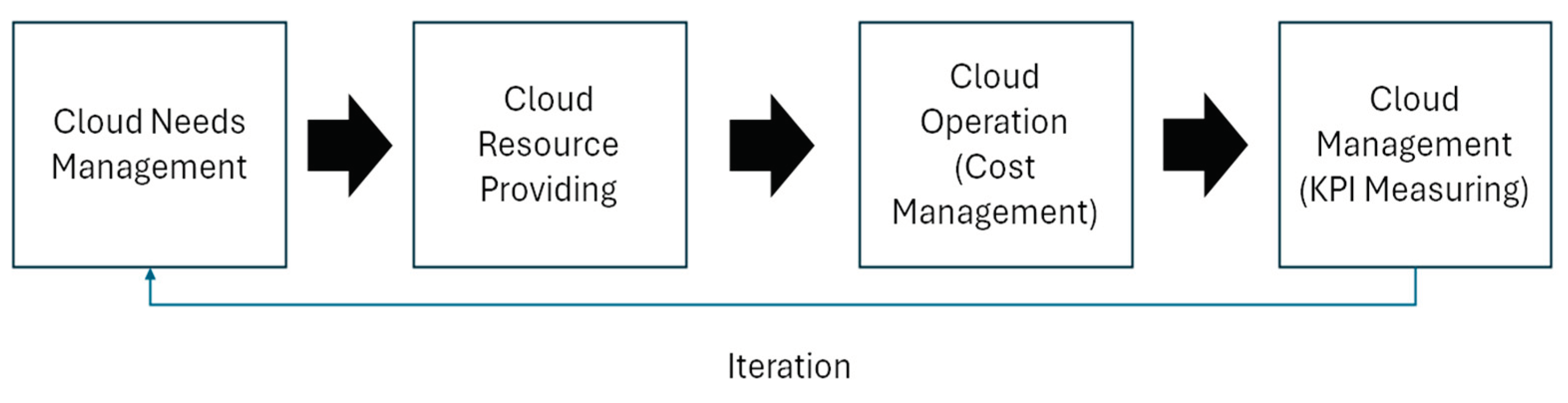

The procedure was designed to apply resource optimization and cost optimization solutions in real time to each system and to verify their effectiveness [12]. This process aims to develop resource allocation strategies that can respond to dynamic changes in the cloud environment and to identify optimized cost management methodologies. The experimental design covers cloud resource allocation, performance monitoring, and cost analysis; the data and analysis results obtained at each stage feed into the study’s main conclusions.

Figure 1. AI PoC Configuration Environment and Application Plan Summary.

2.3. Performance Evaluation

Application performance is continuously monitored and analyzed using IBM Turbonomic ARM and Instana APM. Key Performance Indicators (KPIs) such as application throughput, response time, and availability are measured. These performance data are needed to understand clearly how resource optimization affects performance within the cloud environment [13,14]. The effectiveness of the AI-based resource optimization tools is verified empirically by comparing performance before and after optimization for System A (the cloud-native system with MSA) and System B (the system migrated through Rehost).
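
To make the before/after comparison concrete, the following minimal sketch computes the three KPIs named above from a list of request records and reports the relative change between two measurement windows. The record layout (`RequestRecord`), the function names, and the sample values are illustrative assumptions; they are not data from the study or interfaces of Turbonomic or Instana.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RequestRecord:
    """One observed request (hypothetical layout, not an Instana export format)."""
    latency_ms: float  # response time of the request
    ok: bool           # True if the request succeeded


def kpis(records: list[RequestRecord], window_s: float) -> dict[str, float]:
    """Return throughput (req/s), mean response time (ms), and availability (%)."""
    if not records or window_s <= 0:
        raise ValueError("need at least one record and a positive window")
    return {
        "throughput_rps": len(records) / window_s,
        "mean_latency_ms": mean(r.latency_ms for r in records),
        "availability_pct": 100.0 * sum(r.ok for r in records) / len(records),
    }


def pct_change(before: float, after: float) -> float:
    """Relative change from before to after, in percent."""
    return 100.0 * (after - before) / before


# Made-up sample windows of 2 seconds each, for illustration only.
before = [RequestRecord(200.0, True), RequestRecord(300.0, False)]
after = [RequestRecord(100.0, True), RequestRecord(150.0, True),
         RequestRecord(125.0, True), RequestRecord(125.0, True)]

k_before, k_after = kpis(before, 2.0), kpis(after, 2.0)
for name in k_before:
    print(name, round(pct_change(k_before[name], k_after[name]), 1))
```

Reporting each KPI as a percentage change keeps the comparison independent of units, so throughput, latency, and availability can be read side by side for each system.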

2.4. Cost Analysis

The cost analysis is performed using cloud cost management tools. The cost impact of resource optimization tasks on cloud infrastructure is evaluated through a detailed analysis of monthly and annual cloud consumption costs [15]. The cost reduction before and after applying the optimization tool is measured from the collected resource usage and cost data [16]. This cost reduction analysis provides data-driven insights that companies can use to establish cloud resource management strategies.
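
As a rough illustration of this comparison, the sketch below summarizes monthly bills from the periods before and after optimization. The function name `cost_summary`, the dictionary keys, and the sample bills are assumptions made for the example, and the annualized figure assumes that the average monthly saving persists for twelve months.

```python
from statistics import mean


def cost_summary(before: list[float], after: list[float]) -> dict[str, float]:
    """Compare monthly bills (same currency) before and after optimization."""
    if not before or not after:
        raise ValueError("both periods need at least one monthly bill")
    avg_before, avg_after = mean(before), mean(after)
    saving = avg_before - avg_after
    return {
        "avg_monthly_before": avg_before,
        "avg_monthly_after": avg_after,
        "avg_monthly_saving": saving,
        "reduction_pct": 100.0 * saving / avg_before,
        # Assumes the monthly saving persists for 12 months.
        "annualized_saving": 12 * saving,
    }


# Hypothetical monthly bills in USD, not figures from the study.
summary = cost_summary(before=[1000.0, 1200.0, 800.0],
                       after=[700.0, 800.0, 750.0])
print(summary)
```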

Figure 2. Cyclical Process Flowchart for Cloud Resource Management.

2.5. Results Comparison and Analysis

The resource optimization results of System A and System B are compared and analyzed. The actual impact of the AI-based resource optimization tool on cloud infrastructure management is assessed based on quantitative results of performance improvement and cost reduction [17]. Multivariate and time series analyses are used to verify the effectiveness of the optimization tool from multiple angles [18].
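
The text does not specify which time series method is applied. One simple possibility, sketched here under that caveat, is to smooth a metric with a moving average and compare its level before and after the point at which optimization is applied. The function names, the window size, and the utilization values are hypothetical, and the sketch covers only the time series side of the analysis.

```python
from statistics import mean


def rolling_mean(series: list[float], window: int) -> list[float]:
    """Moving average; the output has len(series) - window + 1 points."""
    if window < 1 or window > len(series):
        raise ValueError("window must be between 1 and len(series)")
    return [mean(series[i:i + window]) for i in range(len(series) - window + 1)]


def level_shift(series: list[float], change_index: int, window: int) -> float:
    """Mean smoothed level after change_index minus the level before it."""
    before = rolling_mean(series[:change_index], window)
    after = rolling_mean(series[change_index:], window)
    return mean(after) - mean(before)


# Hypothetical hourly CPU utilization (%); optimization applied at index 4.
cpu = [80.0, 82.0, 78.0, 80.0, 60.0, 62.0, 58.0, 60.0]
print(rolling_mean(cpu, 2))
print(level_shift(cpu, change_index=4, window=2))
```

Smoothing before comparing reduces the influence of short spikes, which matters when the two measurement periods are short.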

2.6. Future Improvement Tasks

Based on the research findings, future improvement tasks for cloud resource optimization and cost management methodologies are identified. Suggestions for additional research needs are presented based on identified limitations, including proposals for enhancing the functionality and application scope of AI-based optimization tools. Directions for developing best practices in cloud infrastructure management are also proposed.

This methodology systematically verifies the effectiveness of AI-based tools in solving resource optimization and cost management problems in the cloud environment, presenting practical improvement measures.

3. Results

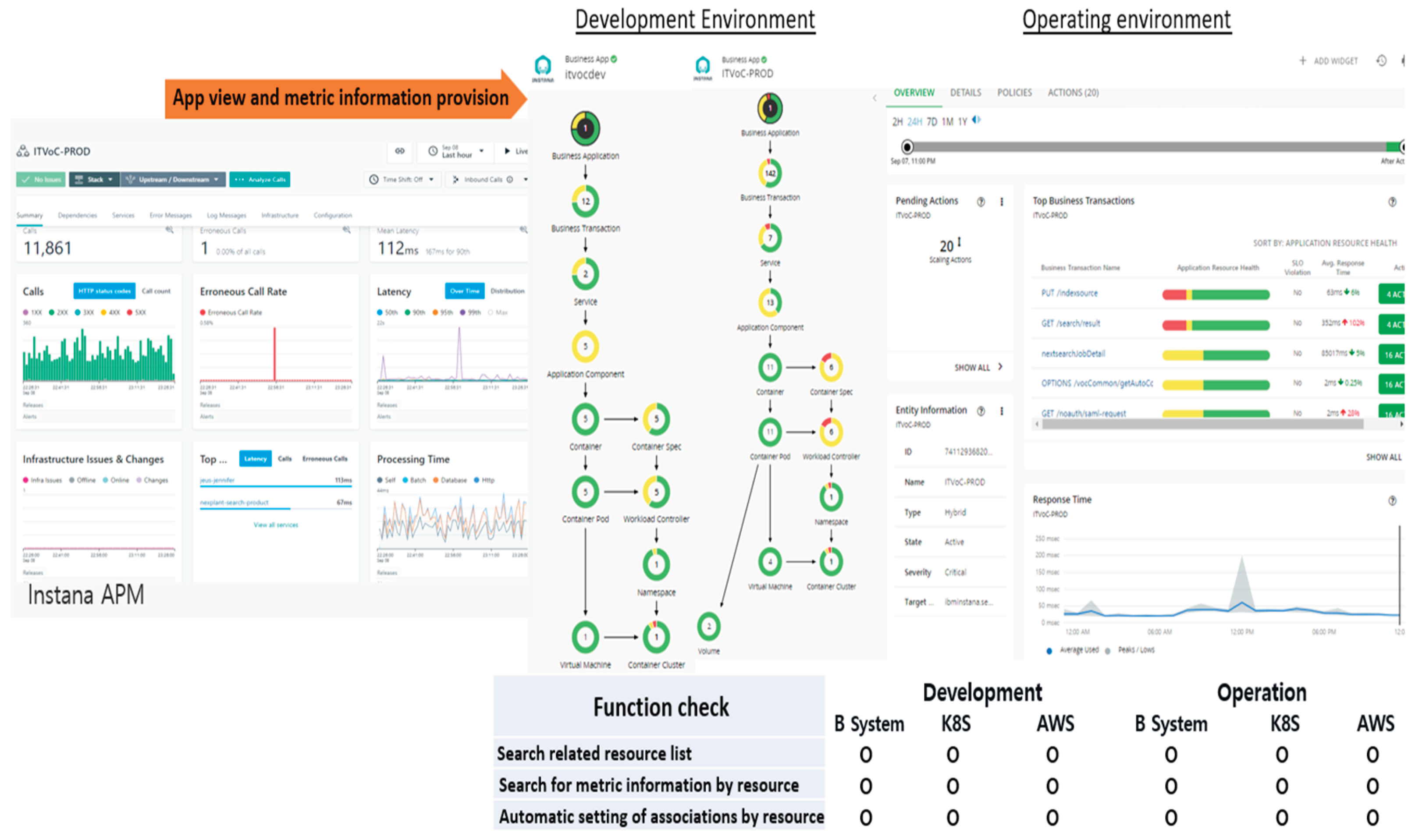

3.1. Performance Improvement

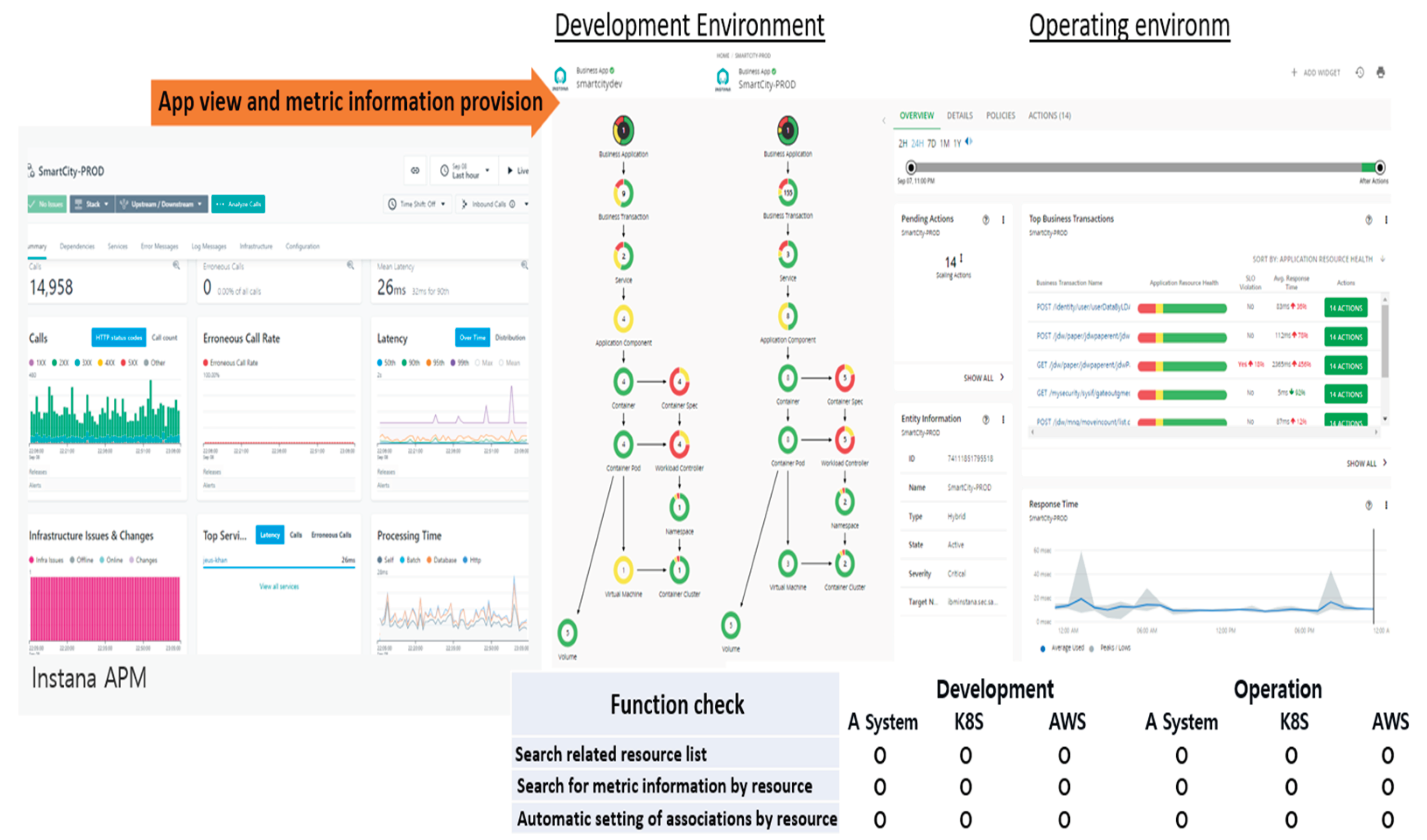

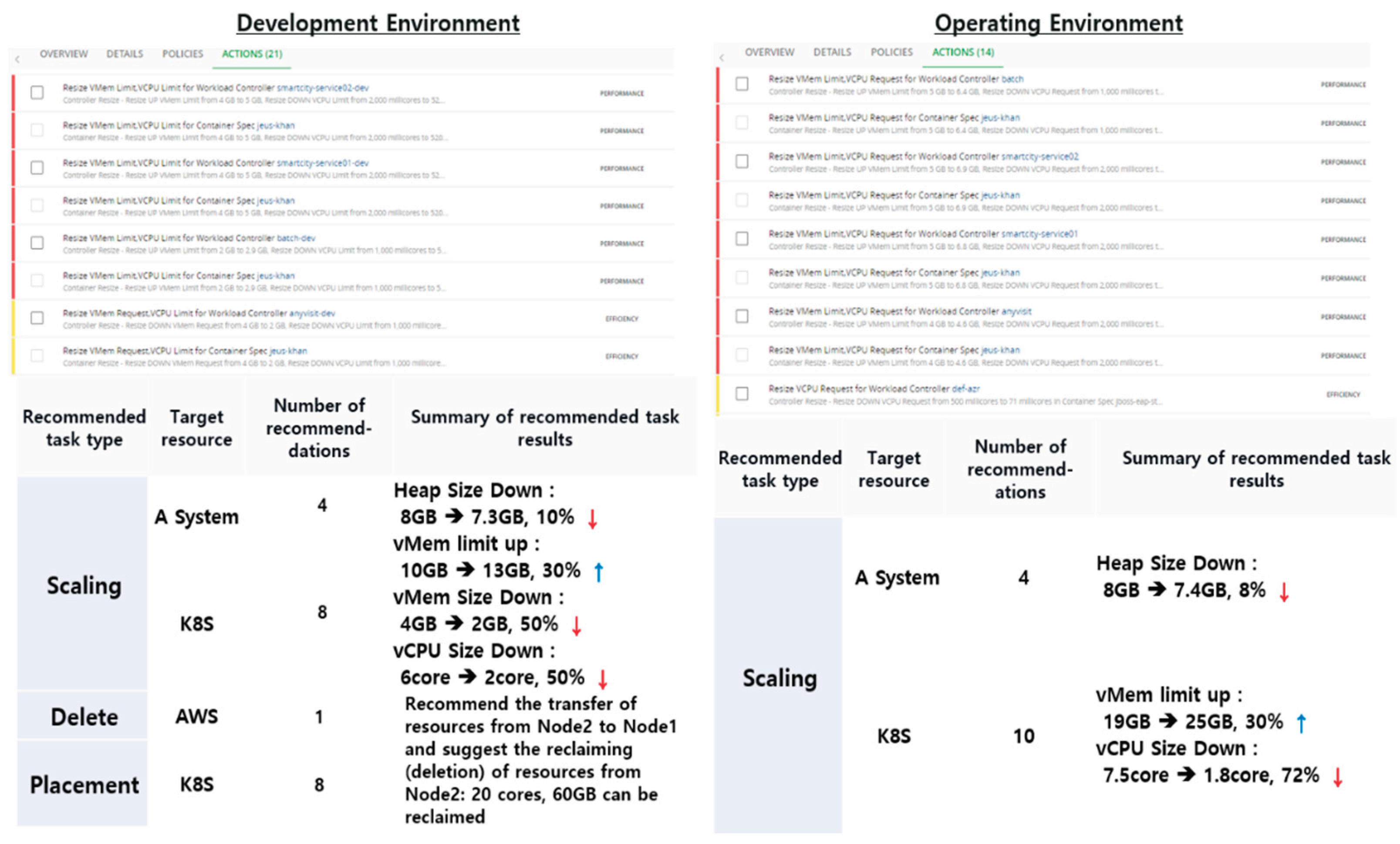

The performance improvement effects of IBM Turbonomic ARM and Instana APM were demonstrated through a comparative analysis of Systems A and B. For System A, which implemented MSA, dynamic resource adjustment in a containerized environment improved throughput and response times. This result was facilitated in particular by integration with Kubernetes (K8S) clusters, and it shows how efficient resource allocation positively affects application performance [19].

Figure 3. PoC Results Comparison (System A: Cloud Native System with MSA).

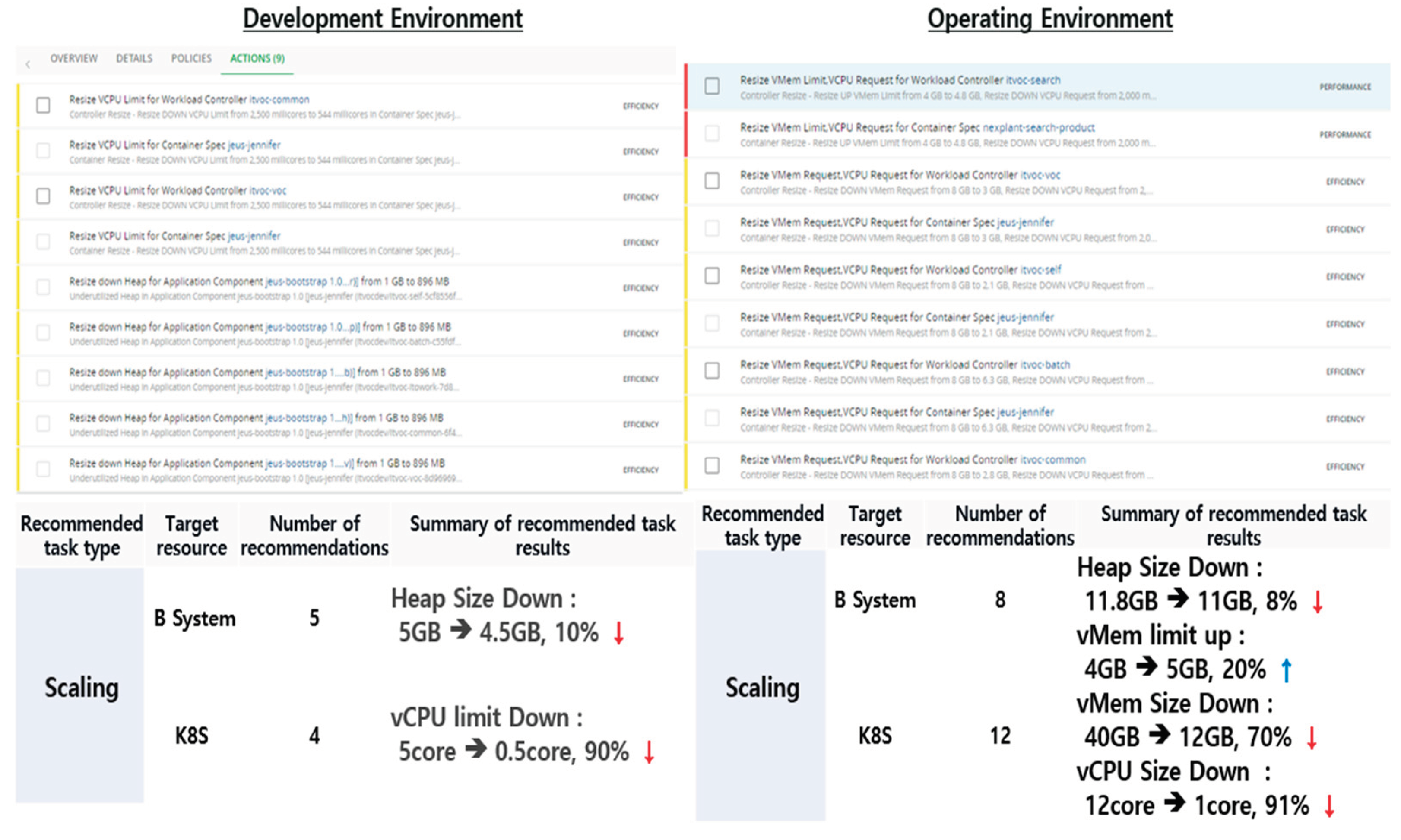

Figure 4. PoC Results (System A: Resource Optimization Task Recommendations).

In the case of System B, even after migration to the cloud using the Rehost strategy, the resource optimization approach utilizing Turbonomic and Instana proved effective in maintaining availability and performance. Adjusting resources in real time plays a crucial role in minimizing potential performance degradation.

Figure 5. PoC Results Comparison (System B: Migration through Rehost).

Figure 6. PoC Results (System B: Resource Optimization Task Recommendations).

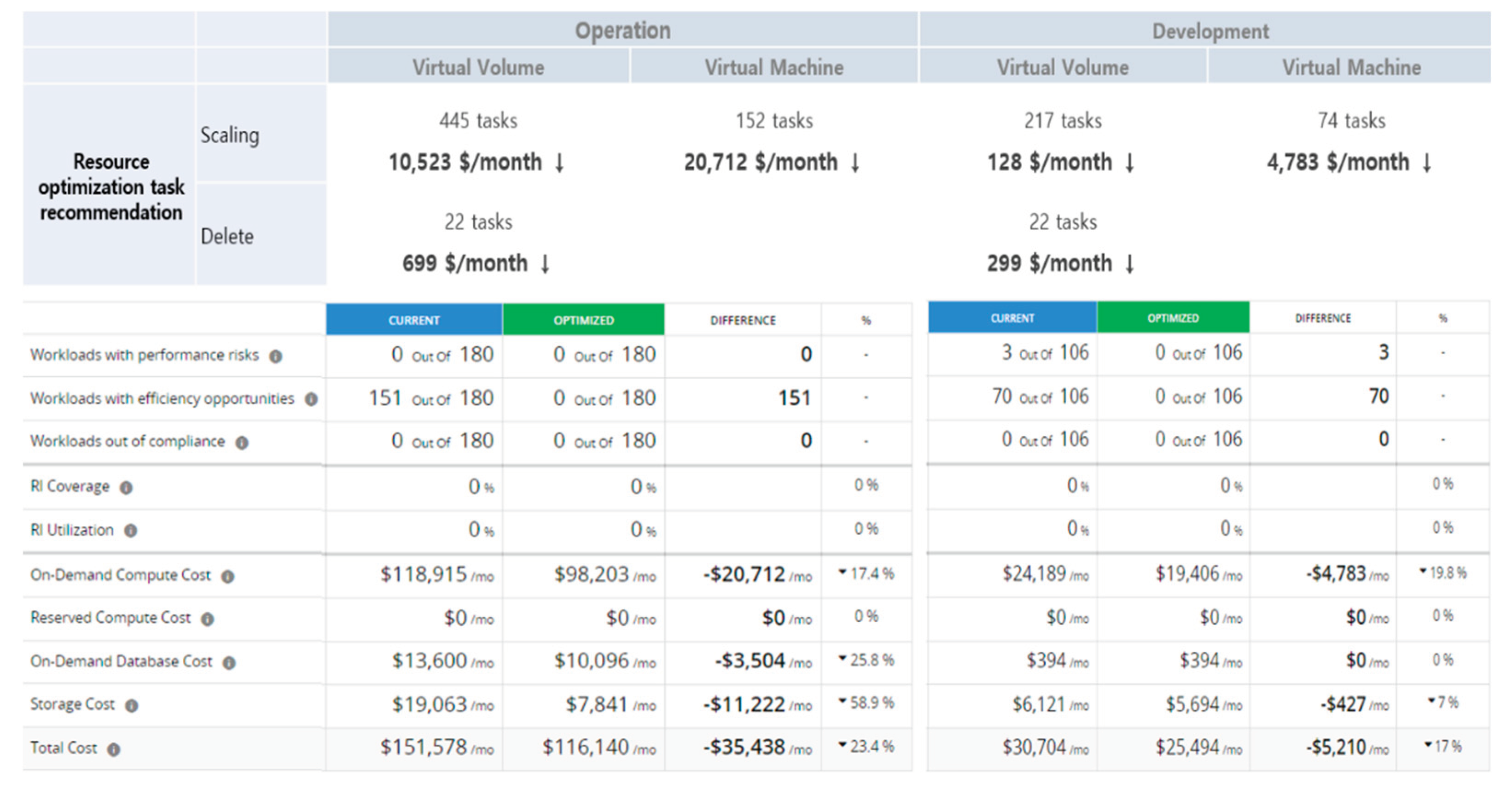

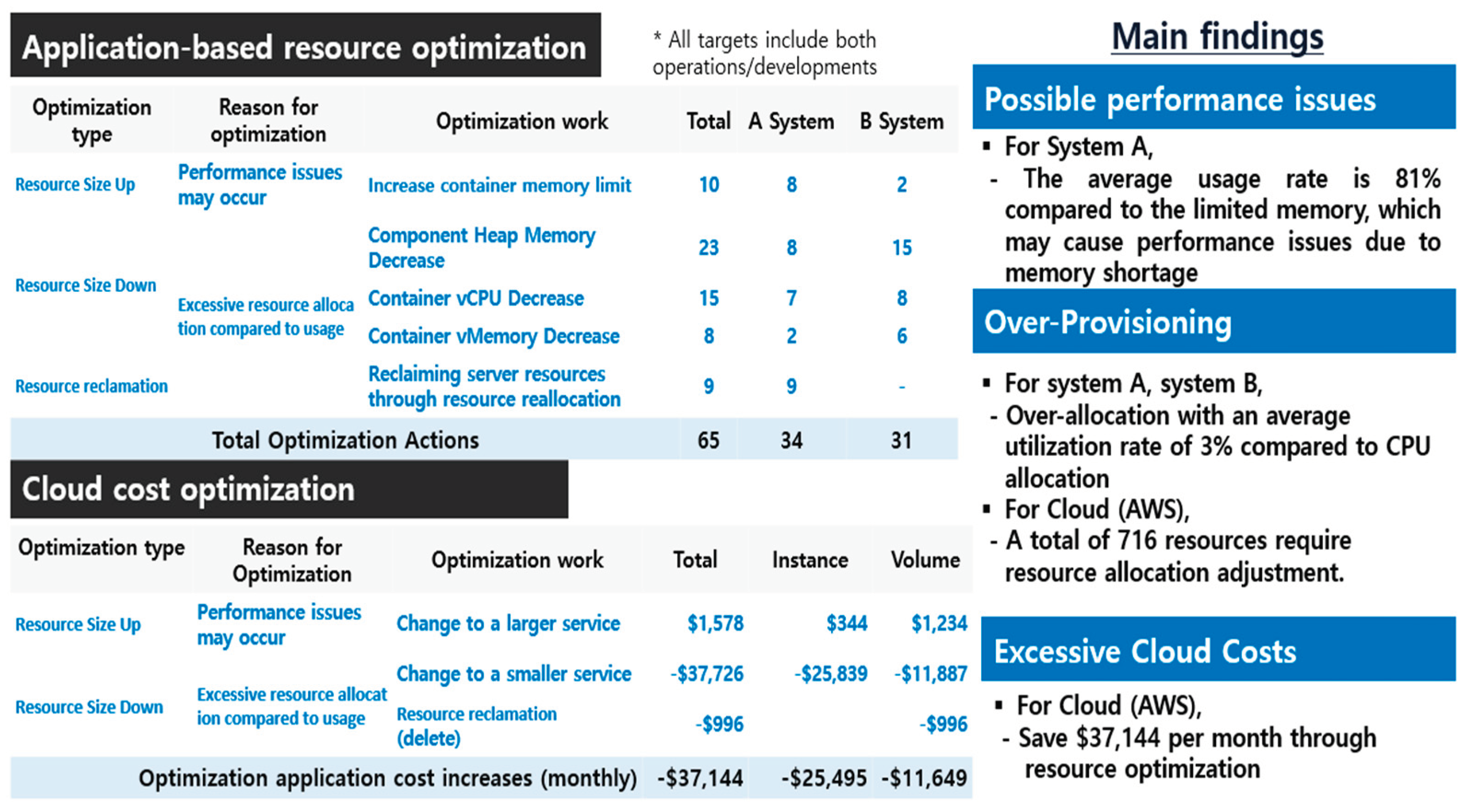

3.2. Cost Reduction

Cost reduction was one of the significant findings of this study. The resource optimization tasks for Systems A and B in AWS and Azure environments showed noticeable cost-saving effects. Specifically, System A achieved an average monthly cloud cost reduction of $37,144 by reducing unnecessary resource allocations. This is directly linked to a decrease in operational expenses and contributes to long-term cost efficiency. Similarly, System B reduced monthly cloud costs through improved cost management, achieved by minimizing excessive provisioning and deleting unused instances and storage [20,21].

Figure 7. PoC Results Comparison (AWS Cost Analysis).

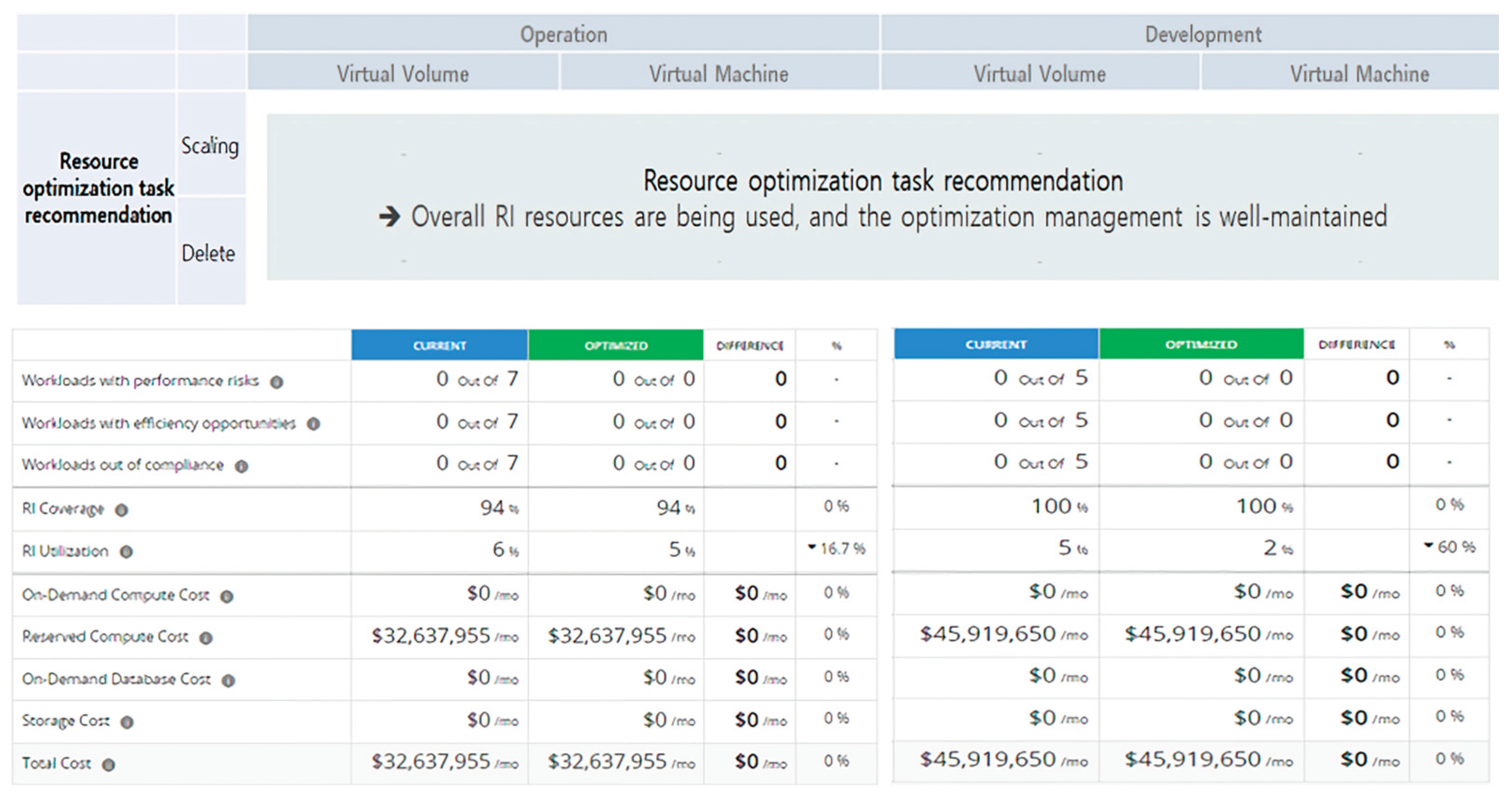

Figure 8. PoC Results Comparison (Azure Cost Analysis).

3.3. Detailed Results

The specific results of the research provide an in-depth analysis of the resource optimization tasks for both systems and their impact on application performance and cost reduction. In System A, application performance was enhanced while preventing over-provisioning of cloud resources and significantly improving cost efficiency based on usage patterns. System B realized resource optimization that ensures performance maintenance along with effective cost management.

These results clearly demonstrate that applying AI tools for cloud resource optimization can provide significant advantages to businesses from both technical and financial perspectives. Consequently, AI-based tools prove to be essential for companies that want to manage cloud resources with greater agility and efficiency and to develop sustainable cost-reduction strategies [22,23].

4. Discussion

4.1. Major Findings

This study examines in depth how applying AI tools for cloud resource optimization affects application performance and cost. In particular, the comparative analysis of System A, a refactored cloud-native application, and System B, a legacy system migrated to the cloud, demonstrated that applying AI tools can produce significant results in different scenarios.

For System A, the introduction of IBM Turbonomic ARM and Instana APM led to a radical improvement in resource allocation and usage efficiency. Such optimization enabled dynamic resource management, allowing for automatic scaling based on real-time traffic changes, significantly reducing unnecessary resource usage. Consequently, it was confirmed that System A maintained higher performance and lower latency compared to traditional legacy systems.

Conversely, System B, which adopted a simple cloud migration approach (Rehost), retained the constraints and inefficiencies of the existing infrastructure. Although the AI tools achieved some level of resource optimization in System B, the performance improvement and cost reduction effects were relatively limited compared to System A. This underscores the importance of refactoring and transitioning to a cloud-native environment, and it highlights the advantages of proactive cloud environment adjustment with AI-based optimization tools over simple migration [24].

4.2. Interpretation of Results

The application of AI-based resource optimization tools in this study provided significant performance and cost benefits in the cloud environment. In particular, the combined use of IBM Turbonomic ARM and Instana APM was confirmed to make a major contribution [25,26].

Dynamic Adjustment of Resource Allocation: Through Turbonomic’s analysis, System A was able to dynamically adjust resource allocation based on real-time data analysis. This contributed to cost savings by automatically allocating additional computing resources or reducing allocated resources according to changes in application usage. This process played an important role in optimizing resource use and preventing waste due to over-provisioning [27,28].
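
The idea of adding or removing resources as usage changes can be illustrated with a proportional scaling rule of the kind documented for the Kubernetes Horizontal Pod Autoscaler. This is a minimal sketch under that substitution and is not Turbonomic’s algorithm; the function name, the default bounds, and the tolerance value are assumptions chosen for the example.

```python
import math


def desired_replicas(current: int, utilization_pct: float, target_pct: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Proportional rule: scale the replica count by utilization / target.

    utilization_pct and target_pct are average CPU percentages (e.g. 90.0).
    """
    if current < 1 or target_pct <= 0:
        raise ValueError("current must be >= 1 and target_pct must be > 0")
    ratio = utilization_pct / target_pct
    # Ignore small deviations so the replica count does not flap.
    if abs(ratio - 1.0) <= tolerance:
        return current
    wanted = math.ceil(current * ratio)
    # Clamp to the allowed range (hypothetical bounds).
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(current=4, utilization_pct=90.0, target_pct=60.0))  # scale out
print(desired_replicas(current=4, utilization_pct=15.0, target_pct=60.0))  # scale in
print(desired_replicas(current=4, utilization_pct=62.0, target_pct=60.0))  # unchanged
```

Rounding up and clamping to a minimum keeps the rule conservative when scaling in, which is one way to limit the risk of under-provisioning while still releasing idle capacity.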

Performance Monitoring and Optimization: The Instana APM Tool continuously monitored application performance, identifying causes of performance degradation. Based on this, it preemptively addressed performance issues and optimized application response times, thereby improving user experience [29].
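
A simple way to picture degradation detection is to compare recent response times against a baseline threshold. The sketch below flags samples that exceed the baseline mean by more than `k` standard deviations. It is a generic statistical check with hypothetical names and values and does not describe how Instana identifies degradation.

```python
from statistics import mean, stdev


def degraded_samples(baseline_ms: list[float], recent_ms: list[float],
                     k: float = 3.0) -> list[int]:
    """Return indices of recent samples above baseline mean + k * stdev."""
    if len(baseline_ms) < 2:
        raise ValueError("baseline needs at least two samples")
    threshold = mean(baseline_ms) + k * stdev(baseline_ms)
    return [i for i, value in enumerate(recent_ms) if value > threshold]


# Hypothetical response times in milliseconds.
baseline = [100.0, 110.0, 90.0, 100.0]
recent = [105.0, 180.0, 98.0, 250.0]
print(degraded_samples(baseline, recent))
```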

Specific Examples of Cost Reduction: Through the implementation of System A, the research team achieved an average monthly cloud cost reduction of $37,144 by minimizing unnecessary resource allocations. This result, derived from applying a resource allocation strategy based on actual usage, also contributed to cost savings by selecting cost-efficient resources considering the price volatility of cloud resources.

Insights through Comparative Analysis: The comparison between Systems A and B clearly showed how the transition to a cloud-native architecture and the application of AI-based resource optimization tools contribute to resource use efficiency and operational cost reduction. The case of System B, where applying AI tools yielded only limited performance and cost benefits relative to traditional resource management approaches, confirms this.

The study detailed the specific methods by which the application of AI-based resource optimization tools contributes to performance improvement and cost reduction in the cloud environment [30]. This provides practical guidelines for companies using cloud services and is expected to serve as an important reference for future cloud infrastructure management strategy development.

Figure 9. Summary of Final Results.

5. Conclusion

This study empirically analyzed the impact of applying AI-based resource optimization tools on application performance improvement and cost reduction in the cloud environment. The integrated use of IBM Turbonomic ARM and Instana APM tools has confirmed significant advantages in various aspects, including dynamic adjustment of resource allocation, performance monitoring and optimization, and specific examples of cost reduction. The comparative analysis of Systems A and B clearly demonstrated how the transition to a cloud-native architecture and the application of AI-based optimization tools contribute to the efficiency of resources and reduction of operational costs.

The results contribute to the advancement of cloud computing and AI technology, providing practical guidelines for establishing and implementing cloud resource management strategies in actual business environments. The AI-based resource optimization approach developed and validated in this study can be applied across various cloud environments and application types, helping businesses to effectively manage cloud costs and maximize operational efficiency.

The limitations of this study include its focus on specific tools and cloud environments, necessitating further research across a wider range of cloud service models and application types. Future research should aim to expand the application scope of AI-based resource optimization tools and seek answers to the following key questions:

What are the advantages of AI-based resource optimization over traditional rule-based resource adjustment methods?

How do AI-based tools recommend resource adjustments, and how accurate are their predictions?

Can system performance be ensured even when resources are scaled down, and to what extent are guarantees provided?

What methods support communication with application managers and improve the efficiency of the resource optimization decision process?

Besides resource scaling recommendations through ARM, what other use cases are possible?

In-depth investigation and analysis of these questions will further advance cloud resource optimization methodologies and make a significant contribution to realizing cost management and performance optimization in actual cloud service environments through AI technology.

Author Contributions

Conceptualization, D.Y. and C.S.; methodology, D.Y.; software, C.S.; validation, D.Y., C.S.; formal analysis, D.Y.; investigation, C.S.; resources, D.Y.; data curation, C.S.; writing—original draft preparation, D.Y.; writing—review and editing, C.S.; visualization, D.Y.; supervision, C.S.; project administration, D.Y.; funding acquisition, C.S. Both authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Motahari-Nezhad, H.R.; Stephenson, B.; Singhal, S. Outsourcing Business to Cloud Computing Services: Opportunities and Challenges. IEEE Internet Comput. 2009, 10, 1–17.

2. Moghaddam, S.K.; Buyya, R.; Ramamohanarao, K. Performance-Aware Management of Cloud Resources: A Taxonomy and Future Directions. ACM Comput. Surv. 2019, 52, 1–37.

3. Khan, T.; Tian, W.; Zhou, G.; Ilager, S.; Gong, M.; Buyya, R. Machine learning (ML)-centric resource management in cloud computing: A review and future directions. J. Netw. Comput. Appl. 2022, 204, 103405.

4. Gill, S.S.; Xu, M.; Ottaviani, C.; Patros, P.; Bahsoon, R.; Shaghaghi, A.; Golec, M.; Stankovski, V.; Wu, H.; Abraham, A.; et al. AI for next generation computing: Emerging trends and future directions. Internet Things 2022, 19.

5. Wamba-Taguimdje, S.-L.; Wamba, S.F.; Kamdjoug, J.R.K.; Wanko, C.E.T. Influence of artificial intelligence (AI) on firm performance: the business value of AI-based transformation projects. Bus. Process. Manag. J. 2020, 26, 1893–1924.

6. Arora, R.; Devi, U.; Eilam, T.; Goyal, A.; Narayanaswami, C.; Parida, P. Towards Carbon Footprint Management in Hybrid Multicloud. In Proceedings of the 2nd Workshop on Sustainable Computer Systems, July 2023; pp. 1–7.

7. Weinberg, N. Las 10 Mejores Empresas de Gestión de Redes. Computerworld Spain, 2022.

8. Sabir, A.; Shahid, A. Effective Management of Hybrid Workloads in Public and Private Cloud Platforms. Master’s Thesis, 2023.

9. Kleehaus, M.; Matthes, F. Automated Enterprise Architecture Model Maintenance via Runtime IT Discovery. In Architecting the Digital Transformation: Digital Business, Technology, Decision Support, Management, 2021; pp. 247–263.

10. Heger, C.; Van Hoorn, A.; Okanović, D.; Siegl, S.; Wert, A. Expert-Guided Automatic Diagnosis of Performance Problems in Enterprise Applications. 2016 12th European Dependable Computing Conference (EDCC), IEEE, September 2016; pp. 185–188.

11. Chemashkin, F.Y.; Drobintsev, P.D. Kubernetes Operators as a Control System for Cloud-Native Applications. Technical Report, Peter the Great St. Petersburg Polytechnic University, 2021.

12. Khriji, S.; Benbelgacem, Y.; Chéour, R.; El Houssaini, D.; Kanoun, O. Design and implementation of a cloud-based event-driven architecture for real-time data processing in wireless sensor networks. J. Supercomput. 2022, 78, 3374–3401.

13. Ige, T.; Sikiru, A. Implementation of Data Mining on a Secure Cloud Computing Over a Web API Using Supervised Machine Learning Algorithm. Computer Science On-line Conference, April 2022; pp. 203–210.

14. Hameed, A.; Khoshkbarforoushha, A.; Ranjan, R.; Jayaraman, P.P.; Kolodziej, J.; Balaji, P.; Zeadally, S.; Malluhi, Q.M.; Tziritas, N.; Vishnu, A.; et al. A survey and taxonomy on energy efficient resource allocation techniques for cloud computing systems. Computing 2016, 98, 751–774.

15. Zhang, F.; Cao, J.; Li, K.; Khan, S.U.; Hwang, K. Multi-objective scheduling of many tasks in cloud platforms. Future Gener. Comput. Syst. 2014, 37, 309–320.

16. Aslanpour, M.S.; Gill, S.S.; Toosi, A.N. Performance evaluation metrics for cloud, fog and edge computing: A review, taxonomy, benchmarks and standards for future research. Internet Things 2020, 12, 100273.

17. Everman, B.; Gao, M.; Zong, Z. Evaluating and reducing cloud waste and cost—A data-driven case study from Azure workloads. Sustain. Comput. Informatics Syst. 2022, 35, 100708.

18. Aqasizade, H.; Ataie, E.; Bastam, M. Kubernetes in Action: Exploring the Performance of Kubernetes Distributions in the Cloud. arXiv preprint, 2024.

19. Al-Sayyed, R.M.H.; Hijawi, W.A.; Bashiti, A.M.; AlJarah, I.; Obeid, N.; Al-Adwan, O.Y.A. An Investigation of Microsoft Azure and Amazon Web Services from Users’ Perspectives. Int. J. Emerg. Technol. Learn. (iJET) 2019, 14, 217–241.

20. Khan, A.A.; Zakarya, M. Energy, performance and cost efficient cloud datacentres: A survey. Comput. Sci. Rev. 2021, 40, 100390.

21. Walia, G.K.; Kumar, M.; Gill, S.S. AI-Empowered Fog/Edge Resource Management for IoT Applications: A Comprehensive Review, Research Challenges, and Future Perspectives. IEEE Commun. Surv. Tutorials 2023, 26, 619–669.

22. Robertson, J.; Fossaceca, J.M.; Bennett, K.W. A Cloud-Based Computing Framework for Artificial Intelligence Innovation in Support of Multidomain Operations. IEEE Trans. Eng. Manag. 2021, 69, 3913–3922.

23. Amiri, Z.; Heidari, A.; Navimipour, N.J.; Unal, M. Resilient and dependability management in distributed environments: a systematic and comprehensive literature review. Clust. Comput. 2023, 26, 1565–1600.

24. Tuli, S.; Gill, S.S.; Xu, M.; Garraghan, P.; Bahsoon, R.; Dustdar, S.; Sakellariou, R.; Rana, O.; Buyya, R.; Casale, G.; et al. HUNTER: AI based holistic resource management for sustainable cloud computing. J. Syst. Softw. 2022, 184, 111124.

25. Yathiraju, N. Investigating the use of an Artificial Intelligence Model in an ERP Cloud-Based System. Int. J. Electr. Electron. Comput. 2022, 7, 01–26.

26. Sangaiah, A.K.; Javadpour, A.; Pinto, P.; Rezaei, S.; Zhang, W. Enhanced resource allocation in distributed cloud using fuzzy meta-heuristics optimization. Comput. Commun. 2023, 209, 14–25.

27. Beloglazov, A.; Buyya, R. Energy Efficient Resource Management in Virtualized Cloud Data Centers. In Proceedings of the 2010 10th IEEE/ACM International Conference on Cluster, Cloud and Grid Computing, Melbourne, VIC, Australia, 17–20 May 2010; pp. 826–831.

28. Heinrich, R.; Van Hoorn, A.; Knoche, H.; Li, F.; Lwakatare, L.E.; Pahl, C.; ... Wettinger, J. Performance engineering for microservices: research challenges and directions. 8th ACM/SPEC on International Conference on Performance Engineering Companion, April 2017; pp. 223–226.

29. Boudi, A.; Bagaa, M.; Poyhonen, P.; Taleb, T.; Flinck, H. AI-Based Resource Management in Beyond 5G Cloud Native Environment. IEEE Netw. 2021, 35, 128–135.

30. Aryal, R.G.; Altmann, J. Dynamic application deployment in federations of clouds and edge resources using a multiobjective optimization AI algorithm. 2018 Third International Conference on Fog and Mobile Edge Computing (FMEC), IEEE, April 2018; pp. 147–154.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).