One of the most well-known disorders affecting women worldwide is Carcinoma of the cervix. It is one of the illnesses that threatens the welfare of women all over the world and is difficult to detect at early stages. One of the cancer forms that affect the cells of the cervix is cervical cancer [

1]. It is common cancer in the female population. The two most common cancers in men are lung and oral cancer, whereas the two most common cancers in women are breast and cervical cancer [

2]. One of the main factors contributing to the significantly higher prevalence of cervical cancer in poor nations is the absence of efficient screening programs for identifying and treating precancerous diseases. In 2018 there were ~569,000 new cases of cervical cancer diagnosed worldwide. Of these, between 84% and 90% occurred in (LMICs) such as South Africa, India, China, and Brazil [

3]. As an example, low-income women do not undergo screening for cervical cancer (such as Pap tests), and thus require attention from health administrations, but some people choose to ignore the manifestations out of modesty [

4]. The majority of cervical cancer cases start as an increase in women between the ages of 20 and 29, reach their peak, turn grey between the ages of 55 and 64, and get even more grey after the age of 65 [

5]. Cervical cancer has many profound consequences, the most important of which is that it causes infertility. Increased cervical cancer risk and cause of cancer death are causes to be in low-income countries. The cause of 275,000 deaths in 2008 was cervical cancer. Moreover, about 88% of these incidents have a place in developing nations. Cervical cancer prevention may be programmed through techniques like acetic acid visual examination, Pap test, human papillomavirus (HPV) Testing, and colposcopy [

6]. In a concept of multifactorial, stepwise carcinogenesis at the cervix uteri, smoking and human papillomavirus (HPV) are now significant problems, thus preventative and control strategies based on society, screening procedures, and HPV vaccination are advised as it is the main cause of cervical cancer [

7]. However, due to the presence of some social obstacles and the importance of early detection of cervical cancer, researchers directed to identify cervical cancer based on behavior. Cervical cancer can be caused by a variety of factors [

8] such as smoking, low socioeconomic position, and long-term use of oral contraceptives (birth control pills: Long-term usage of oral contraceptives (OCs) has been linked to an increased risk of cervical cancer), several sexual partners, early marriage (partners in young age at first sex significantly raise the risk of acquiring cervical cancer, according to most studies) [

9], a weaker immune system such as human immunodeficiency virus (HIV) [

10], the virus that causes AIDS, affects the immune system, putting persons at risk for HPV infections, Chlamydia infection [

11], and numerous full-term pregnancies (cervical cancer is more likely to develop in women who have had three or more full-term pregnancies). Moreover, an independent risk factor for clear cell adenocarcinoma, a kind of cervical cancer, is exposure to the medication such as diethylstilbesterol (DES) during pregnancy. Several pregnant women in the United States received DES between 1940 and 1971 in order to avoid miscarriage (the premature birth of a fetus that cannot survive) and early birth. Clear cell adenocarcinoma of the vagina and cervix, as well as cervical cell abnormalities, are more common in women whose mothers used DES while they were expecting [

12]. Furthermore, hormonal changes during pregnancy have been linked to women being more susceptible to HPV infection or cancer progression. Certain families may have a history of cervical cancer. The likelihood of getting cervical cancer is higher if mother or sister had it as compared to no one in the family that has it. Some experts believe that a hereditary issue that makes certain women less able to fight off HPV infection than other women may be the root cause of some uncommon cases of this familial tendency. In other cases, women in a patient's immediate family may be more likely to have one or more of the additional non-genetic risk factors than women who are not related to the patient [

13]. Since the mid-1970s, the death rate has decreased by about 50%, in part due to improved screening that has allowed for the early detection of cervical cancer. From 1996 to 2003, the death rate was over 4% per year; from 2009 to 2018, it was less than 1% [

14]. Many studies on cervical cancer have been carried out recently employing modern techniques that offer early-stage prediction. It is noteworthy to mention that applying machine learning has contributed to early prediction [

15]. Thus, lack of knowledge, lack of access to resources and medical facilities, and the expense of attending regular examination in some countries are the main reasons of this disease among female populations [

16] Based on behavior, there are significant factors that can be used to estimate the risk of Ca Cervix illness. Behavior is extensively researched in social science theories, including psychology and the study of health. The Health Belief Model (HBM), Protective Motivation Theory (PMT), Theory of Planned Behavior (TPB), Social Cognitive Theory (SCT), etc., are all examples of common behavior-related theories or models. Two cognitive processes—perceiving the threat of sickness and evaluating the threat-reduction behaviors—determine HBM [

17]. Several theories in social and health psychology make the assumption that intentions are what motivate and direct conduct [

18]. Whereas PMT notes that the main factor influencing behavior is protection motivation, or the desire to engage in preventive action [

19]. One of the factors influencing organizational preventative behavior is motivation [

20]. According to TPB, attitudes, and perceived behavioral control (PBC) are thought to be the three components that influence intention, which in turn affects performance [

19]. In such a way that both were stronger indicators of intention, attitude and subjective norm interacted with perceived control [

21]. According to SCT, three factors—goals, result expectations, and self-efficacy—determine prevention behavior [

17]. Participants' behavior in preventing cervical cancer may be improved by emphasizing social support [

22]. The ability to make choices, gain access to information, and utilize personal and societal resources to engage in behaviors that prevent cervical cancer may be described to as empowerment [

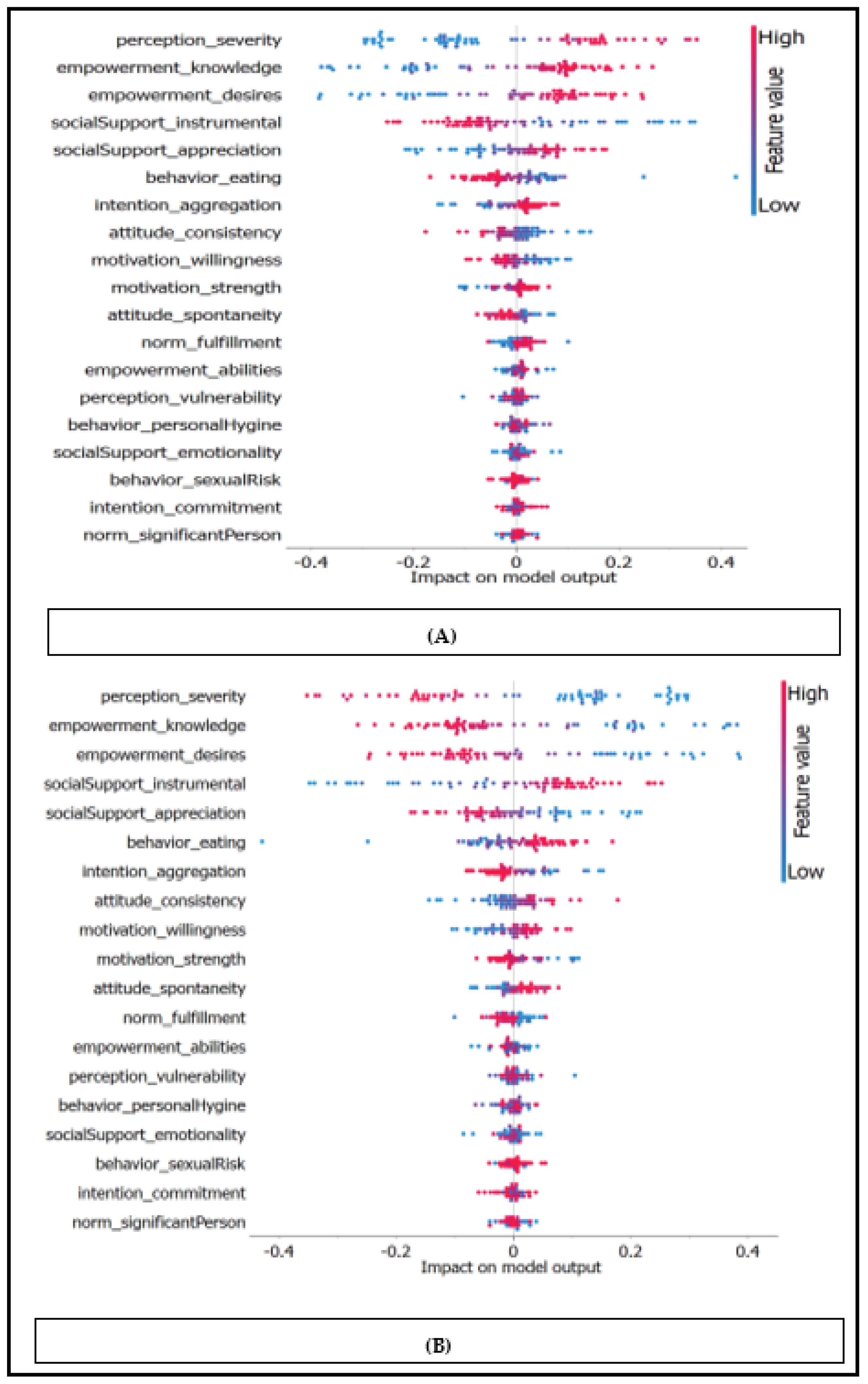

23]. According to those views, there are seven factors that influence behavior: attitude, social support, empowerment, motivation, subjective norm, and perception. These eight variables—seven determinants and the behavior itself—were translated into questionnaires in this study, each of which had nine questions. The questionnaire was subsequently delivered to 72 responders, 22 of whom were Ca Cervix sufferers and 50 of whom were not [

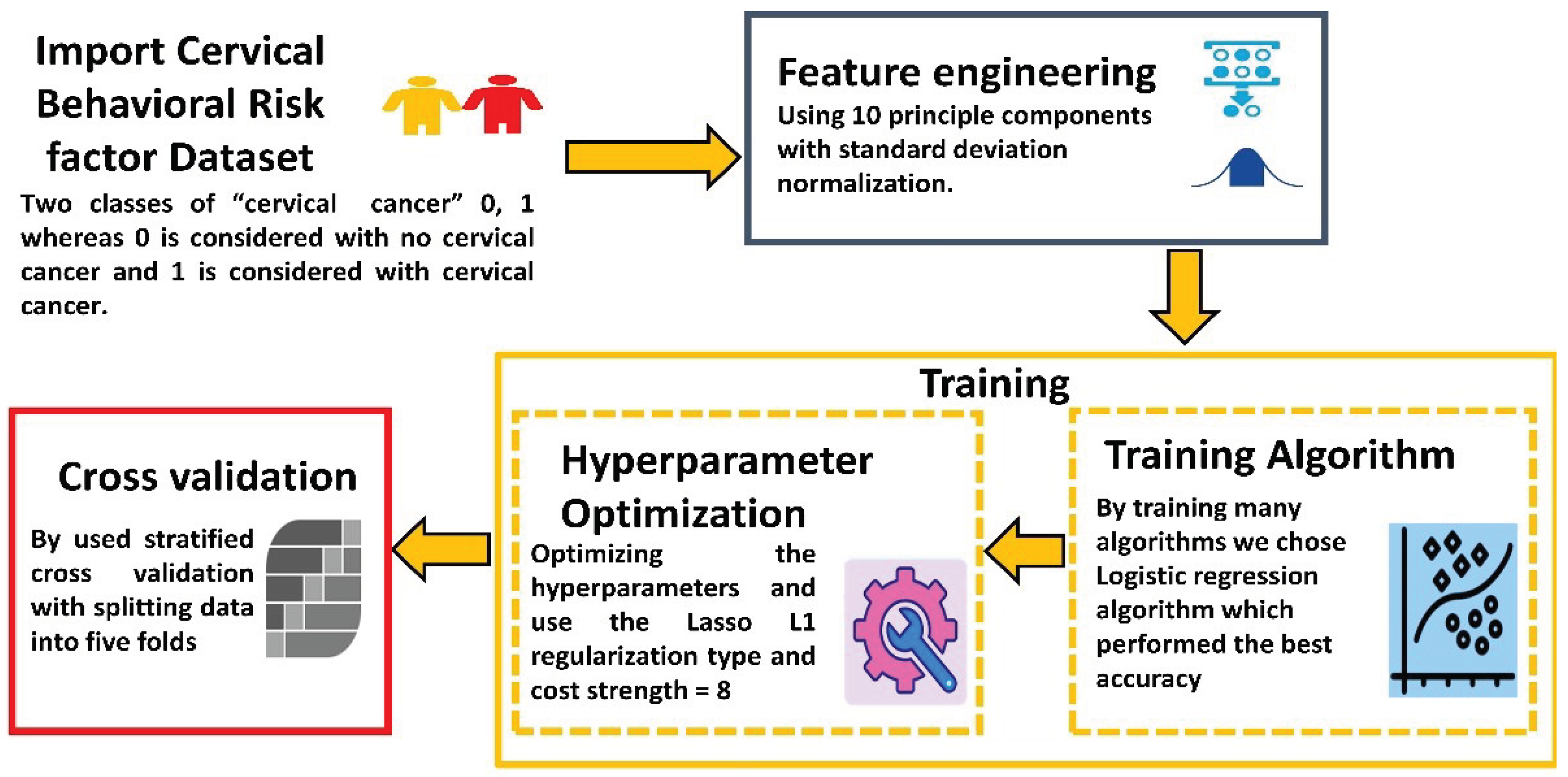

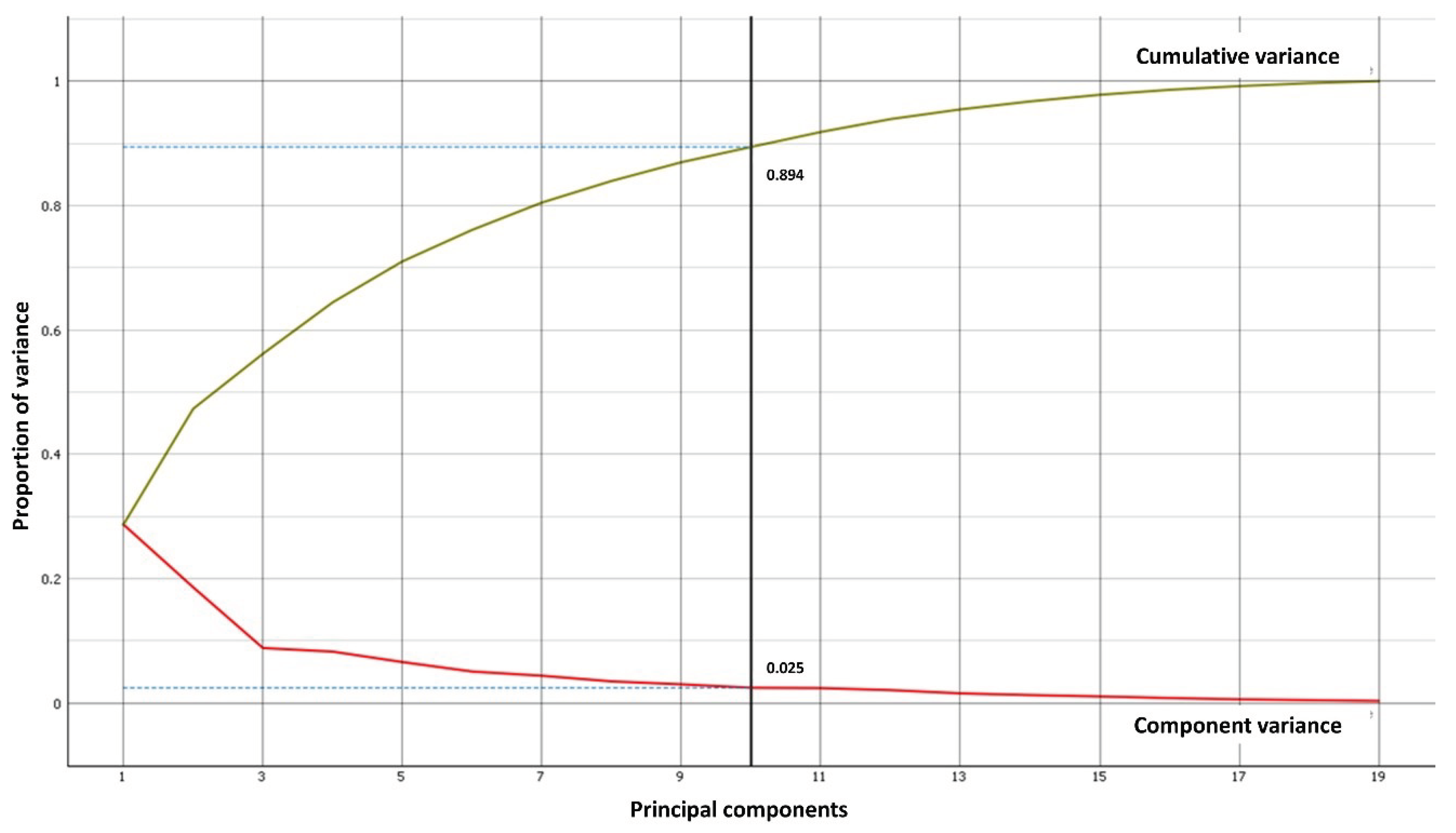

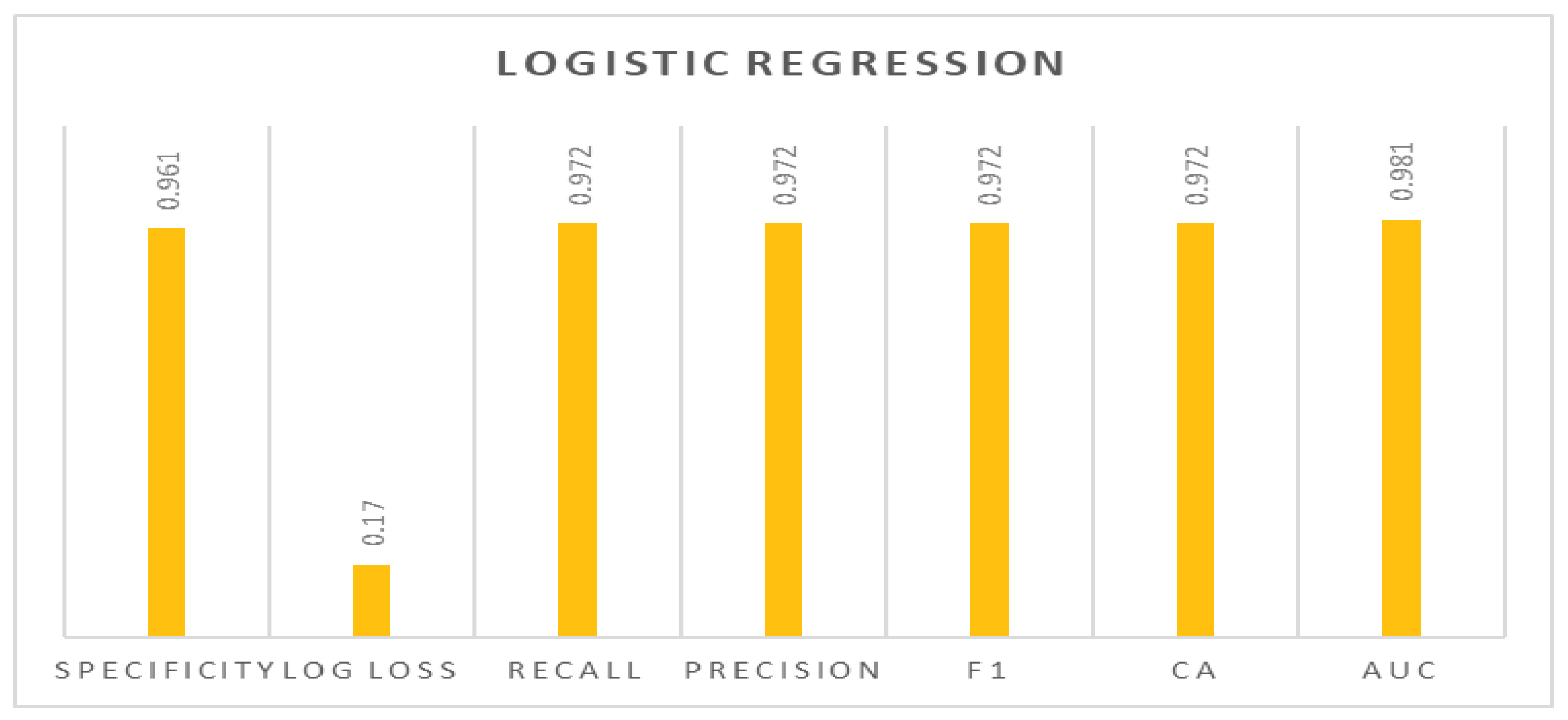

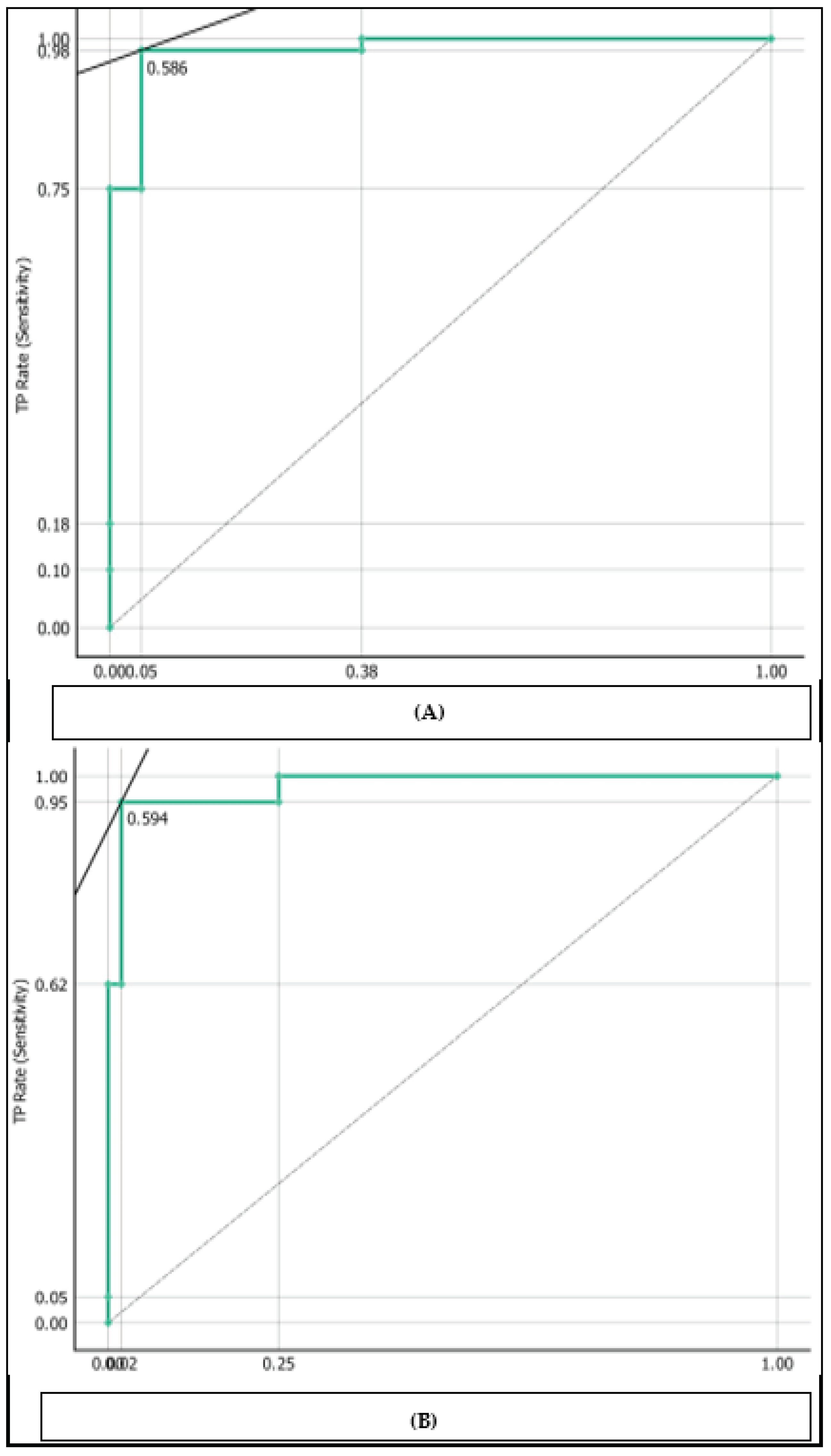

24]. Every single respondent is a city person in Jakarta, Indonesia. This set of seven factors plus the behavior itself are used as features or attributes to build a classification model for Ca Cervix risk early detection using machine learning. The efficiency of studies and the production of precise patient data have both improved due to machine learning. The recent research oriented towards using text mining, machine learning, and econometric technologies to improve the quality of screening and prediction. This paper proposed a pipeline for improving classification to help early prediction of cervical cancer from the behavioral risk factor perspective by employing the logistic regression algorithm. Our proposed method has achieved better classification accuracy than existing methods.
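As an illustration of the classifier family named above, the following is a minimal sketch of binary logistic regression over the eight behavioral variables, trained by batch gradient descent on the mean log-loss in plain Python. It is not the authors' pipeline: the feature names, the aggregation of each variable into a single score in [0, 1], the learning rate, the epoch count, the optional L2 penalty, the 0.5 decision threshold, and the toy rows are all hypothetical, and no study data are reproduced.

```python
import math
from typing import List, Sequence

# Hypothetical feature order: the seven determinants plus the behavior itself,
# each assumed to be aggregated into one questionnaire score rescaled to [0, 1].
FEATURES = ["behavior", "intention", "attitude", "norm",
            "perception", "motivation", "social_support", "empowerment"]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w: Sequence[float], x: Sequence[float]) -> float:
    """Estimated probability of the positive (Ca Cervix) class; w[0] is the bias."""
    return sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], x)))


def predict(w: Sequence[float], x: Sequence[float], threshold: float = 0.5) -> int:
    """Hard label using an assumed decision threshold (0.5 by default)."""
    return int(predict_proba(w, x) >= threshold)


def fit_logistic(X: Sequence[Sequence[float]], y: Sequence[int],
                 lr: float = 0.1, epochs: int = 2000, l2: float = 0.0) -> List[float]:
    """Batch gradient descent on the mean log-loss, with an optional L2 penalty
    on the non-bias weights. Returns [bias, w_1, ..., w_d]."""
    n, d = len(X), len(X[0])
    w = [0.0] * (d + 1)
    for _ in range(epochs):
        grad = [0.0] * (d + 1)
        for xi, yi in zip(X, y):
            err = predict_proba(w, xi) - yi  # derivative of log-loss w.r.t. the logit
            grad[0] += err
            for j, xij in enumerate(xi):
                grad[j + 1] += err * xij
        w[0] -= lr * grad[0] / n  # the bias is not regularized
        for j in range(1, d + 1):
            w[j] -= lr * (grad[j] / n + l2 * w[j])
    return w


# Hypothetical toy rows for illustration only; NOT the study's data.
# Label 1 (Ca Cervix) is assumed here to go with low behavioral scores.
X_toy = [
    [0.9, 0.8, 0.9, 0.7, 0.8, 0.9, 0.8, 0.9],
    [0.8, 0.9, 0.7, 0.8, 0.9, 0.8, 0.9, 0.7],
    [0.7, 0.8, 0.8, 0.9, 0.7, 0.8, 0.7, 0.8],
    [0.2, 0.1, 0.3, 0.2, 0.1, 0.2, 0.3, 0.1],
    [0.1, 0.2, 0.2, 0.1, 0.3, 0.1, 0.2, 0.2],
    [0.3, 0.2, 0.1, 0.3, 0.2, 0.3, 0.1, 0.2],
]
y_toy = [0, 0, 0, 1, 1, 1]

w_toy = fit_logistic(X_toy, y_toy)
for name, coef in zip(FEATURES, w_toy[1:]):
    print(f"{name:>15}: {coef:+.3f}")
```

The returned weights give one coefficient per behavioral variable plus a bias, which is one reason logistic regression suits a small questionnaire dataset: each coefficient can be read as the change in the log-odds of the positive class per unit change in that score.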

As shown in Table 1, we compared previous studies that predicted cervical cancer from behavioral risk factors. L. Akter et al. [4] applied three machine learning models, Random Forest, Decision Tree, and XGBoost, to a dataset of 72 records with 19 attributes and reached 93.33% accuracy with all three classifiers. The same study [4] focused mainly on data pre-processing and exploratory data analysis, which mainly helped to select the most important features; however, the method is complex and relies heavily on exploratory data analysis. Sobar et al. [24], who also worked on the behavioral risk factor dataset, reached 91.67% and 87.55% accuracy with Naive Bayes and logistic regression classifiers, respectively. That paper introduced the dataset used in our proposed method, made it publicly available, and described the psychology-based risk factors in detail. On the other hand, its accuracy was limited by the lack of a pre-processing stage. Asadi F. et al. [25] used a dataset of 145 patients with 23 attributes and machine learning classification algorithms including SVM, obtaining 79% accuracy, 67% precision, and an area under the curve (AUC) of 85%. The main advantage of that paper is that it reduced the number of significant predictors used in the analysis, which lowered the computational cost of the model; however, its literature review was not clearly presented. Xiaoyu Deng et al. [26] used XGBoost, SVM, and Random Forest to analyze data on cervical disease. The dataset came from the UCI Machine Learning Repository and included 32 risk factors and four target variables from the clinical histories of 858 individuals. To handle class imbalance in the dataset, they employed Borderline-SMOTE, a variant of the Synthetic Minority Oversampling Technique (SMOTE). Applying SMOTE in the pre-processing stage and selecting the top five risk factors helped improve their results. B. Nithya et al. [27] used machine learning to investigate the risk variables for cervical cancer on a dataset of 858 rows and 27 attributes. They employed five algorithms, SVM, C5.0, rpart, Random Forest, and K-NN, with ten-fold cross-validation, reaching accuracies of 97.9%, 96.9%, 96.9%, 88.8%, and 88.8%, respectively. This paper mainly introduced feature selection techniques, which helped increase accuracy, and used a variety of classifiers, but it did not focus on predicting cervical cancer from behavioral risk factors. C.-J. Tseng et al. [28] used SVM, C5.0, and extreme learning machines (ELM) to identify significant risk factors for predicting the recurrence-proneness of cervical cancer; SVM and C5.0 reached accuracies of 68.00% and 96.00%, respectively. S. K. Suman et al. [29] proposed a model for predicting the risk of cervical cancer using SVM, AdaBoost, Bayes Net, Random Forest, Neural Network, and Decision Tree. For the Bayes Net method, the error rate, FP rate, TP rate, F1-score, AUC, and Matthews correlation coefficient (MCC) were 3.61, 0.32, 0.96, 0.96, 0.95, and 0.68, respectively. Although S. K. Suman et al. [29] reached high accuracies, their study relied on analyzing tissue slides and a range of risk factors, so it did not perform early prediction.

Therefore, the biggest gap in the literature is the neglect of behavioral risk factors as a basis for cervical cancer prediction. Although several studies examined feature selection, exploratory data analysis, and data pre-processing approaches, they did not specifically address predicting cervical cancer from behavioral risk factors. Additionally, some studies [24, 25] showed lower accuracy due to the absence of a pre-processing stage or did not clearly present a literature review. Moreover, there is little work on the early prediction of cervical cancer from behavioral risk factors that selects the most suitable algorithm and improves prediction accuracy through hyperparameter tuning. Our focus in this research is therefore to fill these gaps by building a robust model with optimized hyperparameters, based on behavioral risk factors, to better detect cervical cancer at earlier stages.
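Because the studies compared above report different metrics (accuracy, TP rate, FP rate, F1-score, MCC), and several rely on k-fold cross-validation, the sketch below shows how these quantities can be computed from a confusion matrix, together with a stratified k-fold splitter suited to a small, imbalanced sample such as 22 positive and 50 negative respondents. It is a minimal sketch, not any cited study's code: the function names are hypothetical, undefined ratios are reported as 0.0 by assumption, the error rate is returned as a fraction rather than a percentage, and folds are assigned deterministically (round-robin within each class, no shuffling). Candidate hyperparameter settings could then be compared by averaging a metric such as MCC over these folds.

```python
import math
from typing import Dict, List, Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (tp, fp, tn, fn), treating label 1 as the positive (cancer) class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, fp, tn, fn


def _ratio(num: float, den: float) -> float:
    # Assumed convention: report 0.0 when a ratio is undefined (zero denominator).
    return num / den if den else 0.0


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, error rate, TP/FP rates, precision, F1, and MCC for binary labels."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    n = tp + fp + tn + fn
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)  # TP rate, also called sensitivity
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": _ratio(tp + tn, n),
        "error_rate": _ratio(fp + fn, n),  # a fraction, not a percentage
        "tp_rate": recall,
        "fp_rate": _ratio(fp, fp + tn),
        "precision": precision,
        "f1": _ratio(2 * precision * recall, precision + recall),
        "mcc": _ratio(tp * tn - fp * fn, mcc_den),
    }


def stratified_kfold(y: Sequence[int], k: int = 10) -> List[Tuple[List[int], List[int]]]:
    """Split row indices into k (train, test) pairs. Each class is dealt
    round-robin across folds, so every test fold keeps roughly the overall
    class ratio. Deterministic (no shuffling) for simplicity."""
    folds: List[List[int]] = [[] for _ in range(k)]
    for label in sorted(set(y)):
        idx = [i for i, t in enumerate(y) if t == label]
        for pos, i in enumerate(idx):
            folds[pos % k].append(i)
    splits = []
    for f in range(k):
        test = sorted(folds[f])
        train = sorted(i for g in range(k) if g != f for i in folds[g])
        splits.append((train, test))
    return splits
```

MCC uses all four confusion-matrix cells, so on an imbalanced sample it is less easily inflated than accuracy by a classifier that favors the majority class.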