Submitted: 01 April 2024

Posted: 01 April 2024

Abstract

Keywords:

1. Introduction

2. Materials and Methods

Patient Selection

Radiographic Data

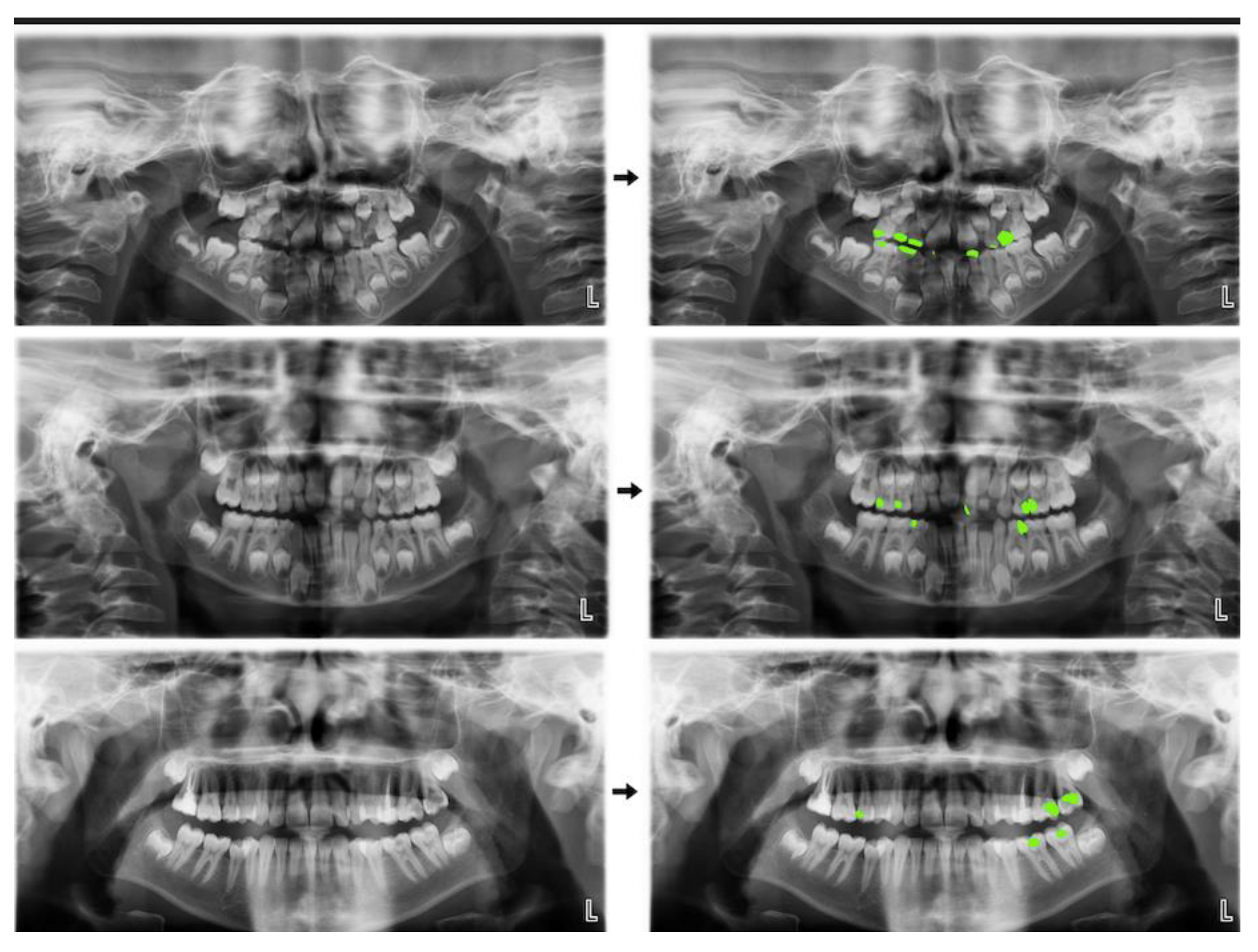

Image Evaluation

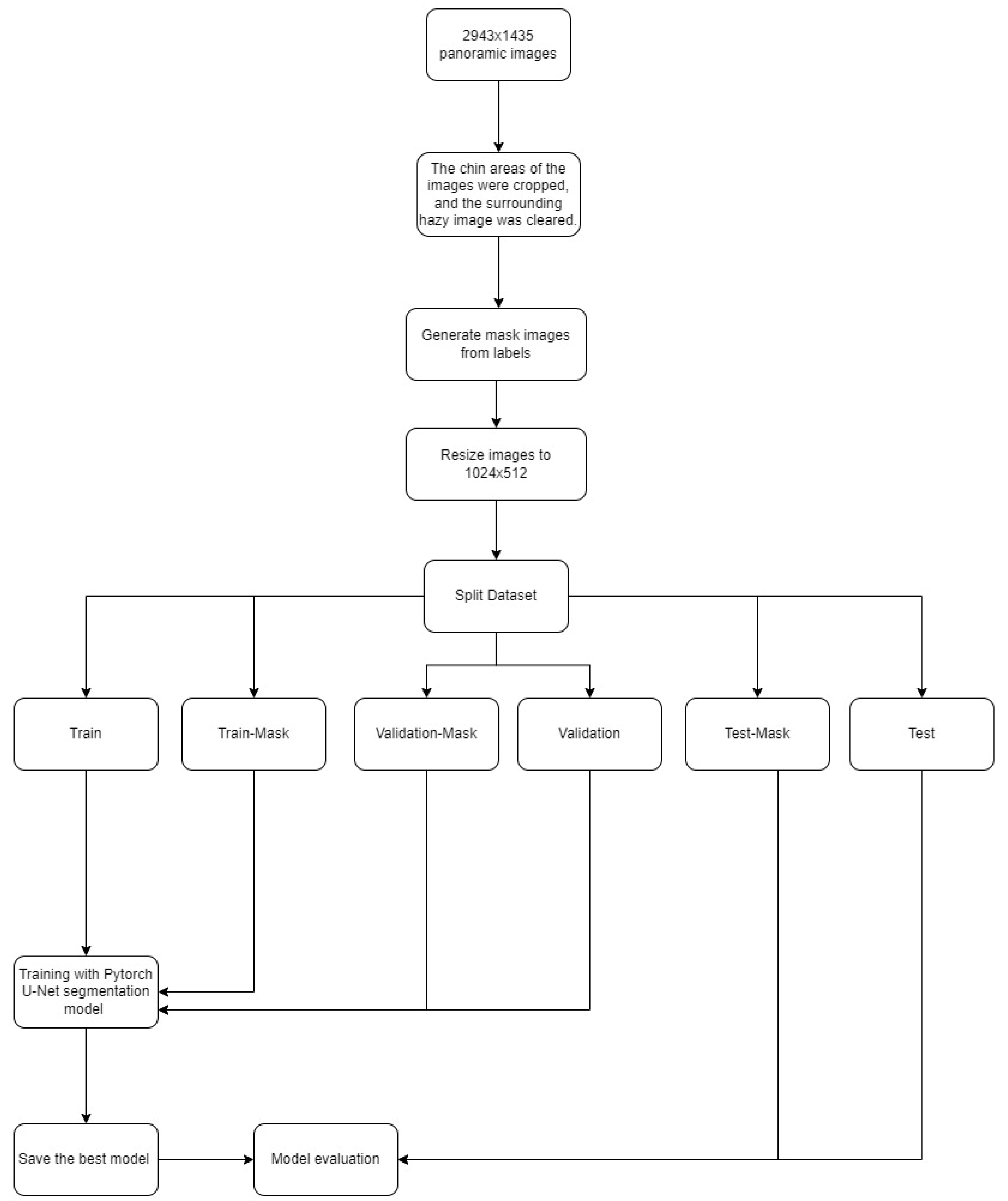

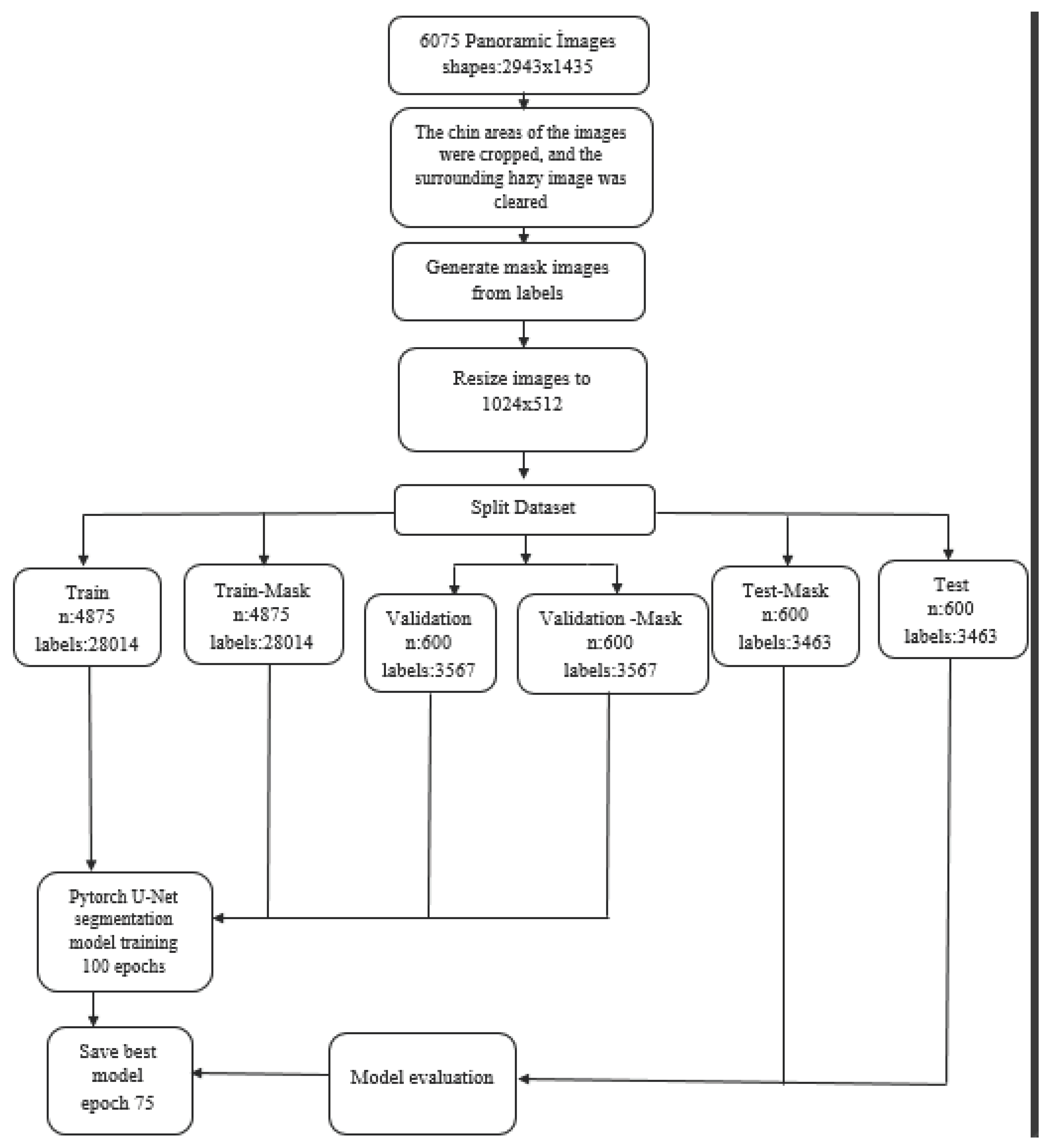

Model Pipeline

Primary Dentition:

Mixed Dentition:

Permanent Dentition:

Total (Primary Dentition + Mixed Dentition + Permanent Dentition):

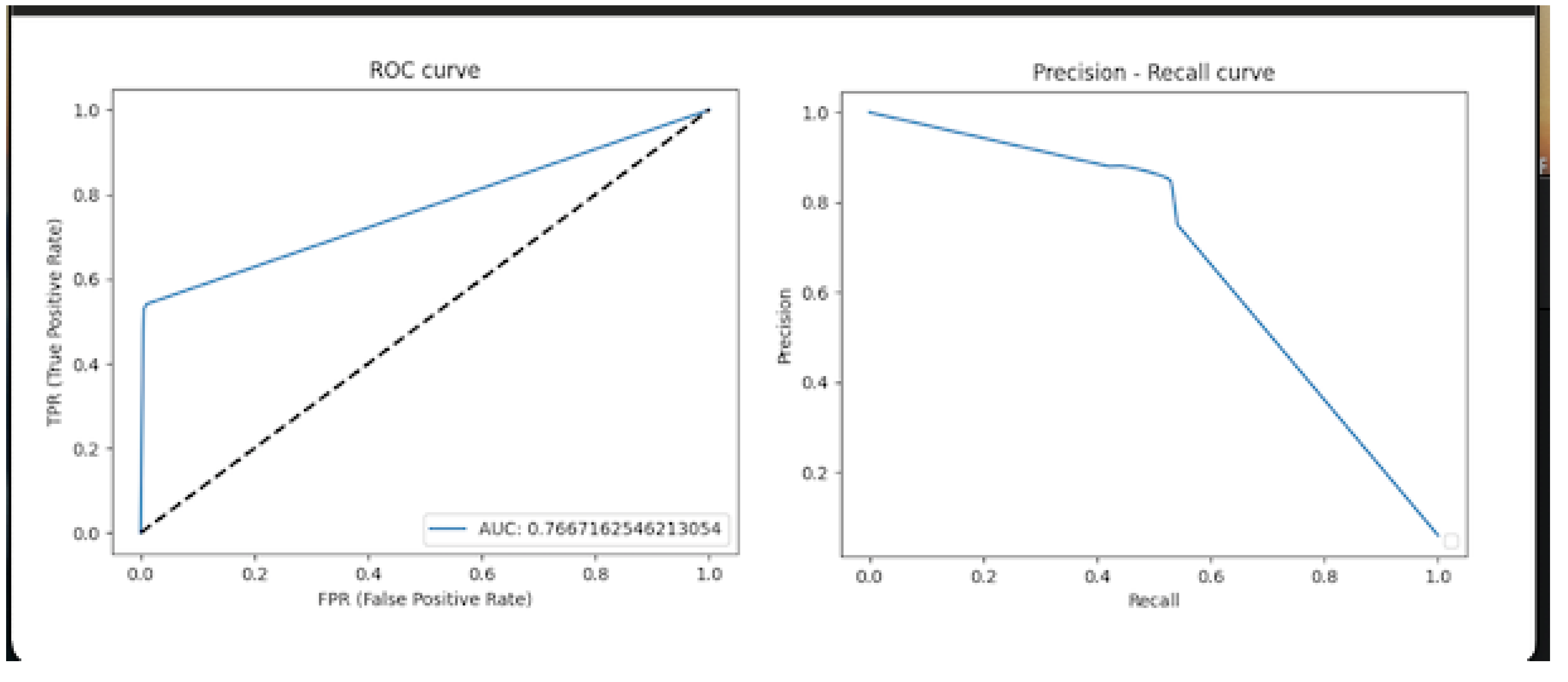

Statistical Analysis

3. Results

Primary Dentition:

Mixed Dentition:

Permanent Dentition:

Total (Primary Dentition + Mixed Dentition + Permanent Dentition):

4. Discussion

5. Conclusions

Main Points

Author Contributions

Funding

Institutional Review Board Statement

Conflicts of Interest

| Metrics and Measurements | Primary Dentition | Mixed Dentition | Permanent Dentition | Total (Primary + Mixed + Permanent) |
|---|---|---|---|---|
| True positive (TP) | 1006 | 467 | 866 | 2653 |
| False positive (FP) | 96 | 41 | 83 | 255 |
| False negative (FN) | 174 | 166 | 181 | 555 |
| Sensitivity | 0.8525 | 0.7377 | 0.8271 | 0.8269 |
| Precision | 0.9128 | 0.9192 | 0.9125 | 0.9123 |
| F1 score | 0.8816 | 0.8185 | 0.8677 | 0.8675 |
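The sensitivity, precision and F1 values in the table follow from the TP, FP and FN counts through the standard definitions: sensitivity = TP / (TP + FN), precision = TP / (TP + FP), and F1 = 2 · precision · sensitivity / (precision + sensitivity). The Python sketch below recomputes them as a consistency check. The names `COUNTS`, `detection_metrics` and `truncate` are hypothetical and are not part of the authors' pipeline. The printed values appear to be cut, rather than rounded, to four decimals (for example, 1006 / 1102 = 0.91289 is listed as 0.9128), so the sketch assumes truncation.

```python
import math

# TP, FP and FN counts copied from the results table above.
COUNTS = {
    "Primary Dentition": (1006, 96, 174),
    "Mixed Dentition": (467, 41, 166),
    "Permanent Dentition": (866, 83, 181),
    "Total": (2653, 255, 555),
}


def detection_metrics(tp, fp, fn):
    """Return sensitivity (recall), precision and F1 from detection counts.

    True negatives are not required: none of these three metrics uses them.
    """
    sensitivity = tp / (tp + fn)
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {"sensitivity": sensitivity, "precision": precision, "f1": f1}


def truncate(value, digits=4):
    """Hypothetical helper: cut a non-negative value to `digits` decimals without rounding."""
    factor = 10 ** digits
    return math.floor(value * factor) / factor


if __name__ == "__main__":
    for subset, (tp, fp, fn) in COUNTS.items():
        metrics = detection_metrics(tp, fp, fn)
        row = "  ".join(f"{name}={truncate(v):.4f}" for name, v in metrics.items())
        print(f"{subset}: {row}")
```

Run as a script, it prints one line per dentition group, and with truncation applied each line reproduces the corresponding column of the table. Rounding instead would change several last digits (for example, primary-dentition precision would become 0.9129).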

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).