Submitted: 01 April 2024

Posted: 03 April 2024


Abstract

Keywords:

1. Introduction

2. Classical Psychophysical Assumptions Relevant to CPS Acceptance as a Psychological Reality

3. A View on the Psychophysical Distance between the Robot and Human Agent Stimuli

4. Conclusion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

¹ Italics are ours.

References

- Losano, C.V.; Vijayan, K.K. Literature review on Cyber Physical Systems Design. Procedia Manuf. 2020, 45, 295–300.

- Wang, S.; Gu, X.; Chen, J.J.; Chen, C.; Huang, X. Robustness improvement strategy of cyber-physical systems with weak interdependency. Reliab. Eng. Syst. Saf. 2023, 229.

- Lawhead, P.B.; Duncan, M.E.; Bland, C.G.; Goldweber, M.; Schep, M.; Barnes, D.J.; Hollingsworth, R.G. A road map for teaching introductory programming using LEGO© mindstorms robots. ACM SIGCSE Bull. 2002, 35, 191–201.

- Collins, E.C.; Prescott, T.J.; Mitchinson, B. Saying it with light: A pilot study of affective communication using the MIRO robot. In Biomimetic and Biohybrid Systems: 4th International Conference, Living Machines 2015, Barcelona, Spain, 28–31 July 2015, Proceedings 4; Springer International Publishing: Berlin/Heidelberg, Germany, 2015; pp. 243–255.

- Bachrach, L.L. Psychosocial rehabilitation and psychiatry in the care of long-term patients. Am. J. Psychiatry 1992, 149, 1455–1463.

- Dimitrova, M.; Garate, V.R.; Withey, D.; Harper, C. Implicit Aspects of the Psychosocial Rehabilitation with a Humanoid Robot. In International Conference in Methodologies and Intelligent Systems for Technology Enhanced Learning; Springer Nature: Cham, Switzerland, 2023; pp. 119–128. https://link.springer.com/chapter/10.1007/978-3-031-42134-1_12.

- Robinson, N.L.; Cottier, T.V.; Kavanagh, D.J. Psychosocial health interventions by social robots: Systematic review of randomized controlled trials. J. Med. Internet Res. 2019, 21, e13203.

- Dimitrova, M.; Kostova, S.; Lekova, A.; Vrochidou, E.; Chavdarov, I.; Krastev, A.; Ozaeta, L. Cyber-physical systems for pedagogical rehabilitation from an inclusive education perspective. BRAIN. Broad Res. Artif. Intell. Neurosci. 2021, 11, 187–207. https://brain.edusoft.ro/index.php/brain/article/view/1135.

- Wolbring, G.; Diep, L.; Yumakulov, S.; Ball, N.; Yergens, D. Social robots, brain machine interfaces and neuro/cognitive enhancers: Three emerging science and technology products through the lens of technology acceptance theories, models and frameworks. Technologies 2013, 1, 3–25.

- Ghazali, A.S.; Ham, J.; Barakova, E.; Markopoulos, P. The influence of social cues in persuasive social robots on psychological reactance and compliance. Comput. Hum. Behav. 2018, 87, 58–65.

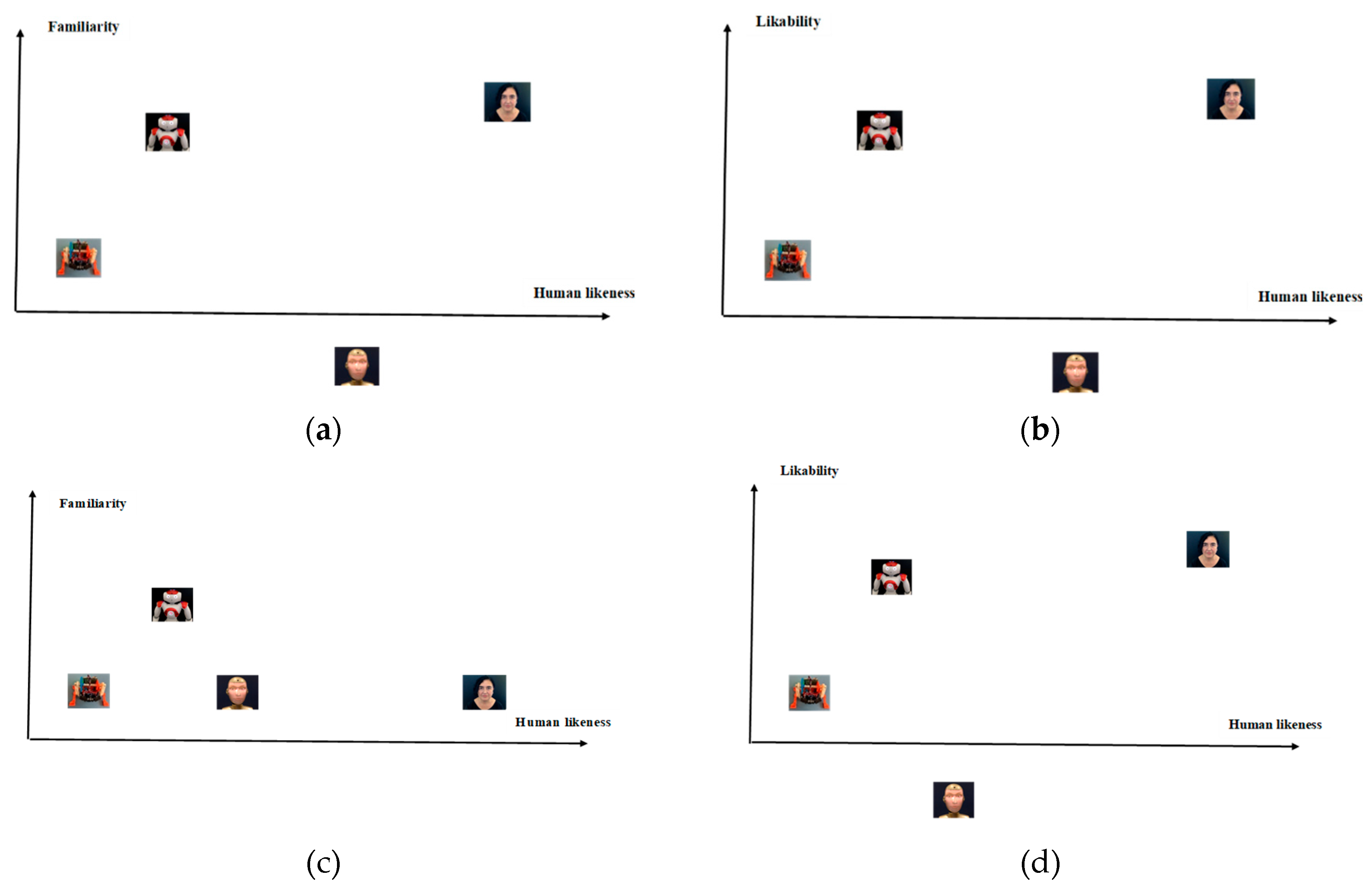

- Mori, M.; MacDorman, K.F.; Kageki, N. The uncanny valley [from the field]. IEEE Robot. Autom. Mag. 2012, 19, 98–100.

- Saygin, A.P.; Chaminade, T.; Ishiguro, H.; Driver, J.; Frith, C. The thing that should not be: Predictive coding and the uncanny valley in perceiving human and humanoid robot actions. Soc. Cogn. Affect. Neurosci. 2011, 7, 413–422.

- Urgen, B.A.; Plank, M.; Ishiguro, H.; Poizner, H.; Saygin, A.P. EEG theta and Mu oscillations during perception of human and robot actions. Front. Neurorobotics 2013, 7, 19.

- Urgen, B.A.; Kutas, M.; Saygin, A.P. Uncanny valley as a window into predictive processing in the social brain. Neuropsychologia 2018, 114, 181–185.

- MacDorman, K.F.; Chattopadhyay, D. Reducing consistency in human realism increases the uncanny valley effect; increasing category uncertainty does not. Cognition 2016, 146, 190–205.

- Moore, R.K. A Bayesian explanation of the ‘Uncanny Valley’ effect and related psychological phenomena. Sci. Rep. 2012, 2, 864.

- Xu, J.; Zhang, C.; Cuijpers, R.H.; IJsselsteijn, W.A. How Might Robots Change Us? Mechanisms Underlying Health Persuasion in Human-Robot Interaction from A Relationship Perspective: A Position Paper, Persuasive 2023. In Proceedings of the 18th International Conference on Persuasive Technology, CEUR Workshop Proceedings, Eindhoven, The Netherlands, 19–21 April 2023. https://ceur-ws.org/Vol-3474/paper20.pdf.

- El-Haouzi, H.B.; Valette, E.; Krings, B.J.; Moniz, A.B. Social dimensions in CPS & IoT based automated production systems. Societies 2021, 11, 98.

- Dimitrova, M.; Wagatsuma, H. (Eds.) Cyber-Physical Systems for Social Applications; IGI Global: Hershey, PA, USA, 2019; ISBN 9781522578796. https://www.igi-global.com/book/cyber-physical-systems-social-applications/210606.

- Naneva, S.; Sarda Gou, M.; Webb, T.L.; Prescott, T.J. A systematic review of attitudes, anxiety, acceptance, and trust towards social robots. Int. J. Soc. Robot. 2020, 12, 1179–1201.

- Tulving, E.; Wiseman, S. Relation between recognition and recognition failure. Bull. Psychon. Soc. 1975, 6, 79–82.

- Prescott, T.J. Robots are not just tools. Connect. Sci. 2017, 29, 142–149.

- Jackson, R.B.; Williams, T. A theory of social agency for human-robot interaction. Front. Robot. AI 2021, 8, 687726.

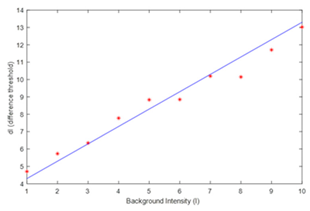

- Deco, G.; Rolls, E.T. Decision-making and Weber's law: A neurophysiological model. Eur. J. Neurosci. 2006, 24, 901–916.

- Laming, D. Weber’s Law. In Inside Psychology: A Science over 50 Years; Rabbitt, P., Ed.; Oxford University Press: Oxford, UK, 2009; pp. 177–189. ISBN 9780199228768.

- Stevens, S.S. On the psychophysical law. Psychol. Rev. 1957, 64, 153–181.

- Sanford, E.M.; Halberda, J. A Shared Intuitive (Mis)understanding of Psychophysical Law Leads Both Novices and Educated Students to Believe in a Just Noticeable Difference (JND). Open Mind 2023, 7, 785–801.

- Lindskog, M.; Nyström, P.; Gredebäck, G. Can the Brain Build Probability Distributions? Front. Psychol. 2021, 12, 596231.

- Johnson, K.O.; Hsiao, S.S.; Yoshioka, T. Neural coding and the basic law of psychophysics. Neuroscientist 2002, 8, 111–121.

- Thurstone, L.L. A law of comparative judgment. Psychol. Rev. 1927, 34, 273.

- Torgerson, W.S. Theory and methods of scaling; Wiley: Oxford, UK, 1958.

- Thurstone, L.L. Attitudes can be measured. Am. J. Sociol. 1928, 33, 529–554.

- https://www.cis.rit.edu/people/faculty/montag/vandplite/pages/chap_3/ch3p1.html.

- Wilkins, L. The Statistical Foundation of Colour Space. bioRxiv 2019, 849984. https://www.researchgate.net/publication/337444621_The_Statistical_Foundation_of_Colour_Space/figures?lo=1.

- https://www.cis.rit.edu/people/faculty/montag/vandplite/pages/chap_6/ch6p10.html.

- George, G. Testing for the independence of three events. Math. Gaz. 2004, 88, 568.

- Osorina, M.V.; Avanesyan, M.O. Lev Vekker and His Unified Theory of Mental Processes. Eur. Yearb. Hist. Psychol. 2021, 7, 265–281.

- Rosenthal-Von der Pütten, A.M.; Krämer, N.C.; Maderwald, S.; Brand, M.; Grabenhorst, F. Neural mechanisms for accepting and rejecting artificial social partners in the uncanny valley. J. Neurosci. 2019, 39, 6555–6570.

- Milivojevic, B.; Clapp, W.C.; Johnson, B.W.; Corballis, M.C. Turn that frown upside down: ERP effects of thatcherization of misorientated faces. Psychophysiology 2003, 40, 967–978.

- Stock, R.M.; Merkle, M. A service Robot Acceptance Model: User acceptance of humanoid robots during service encounters. In 2017 IEEE International Conference on Pervasive Computing and Communications Workshops (PerCom Workshops), 2017; pp. 339–344. https://ieeexplore.ieee.org/abstract/document/7917585/.

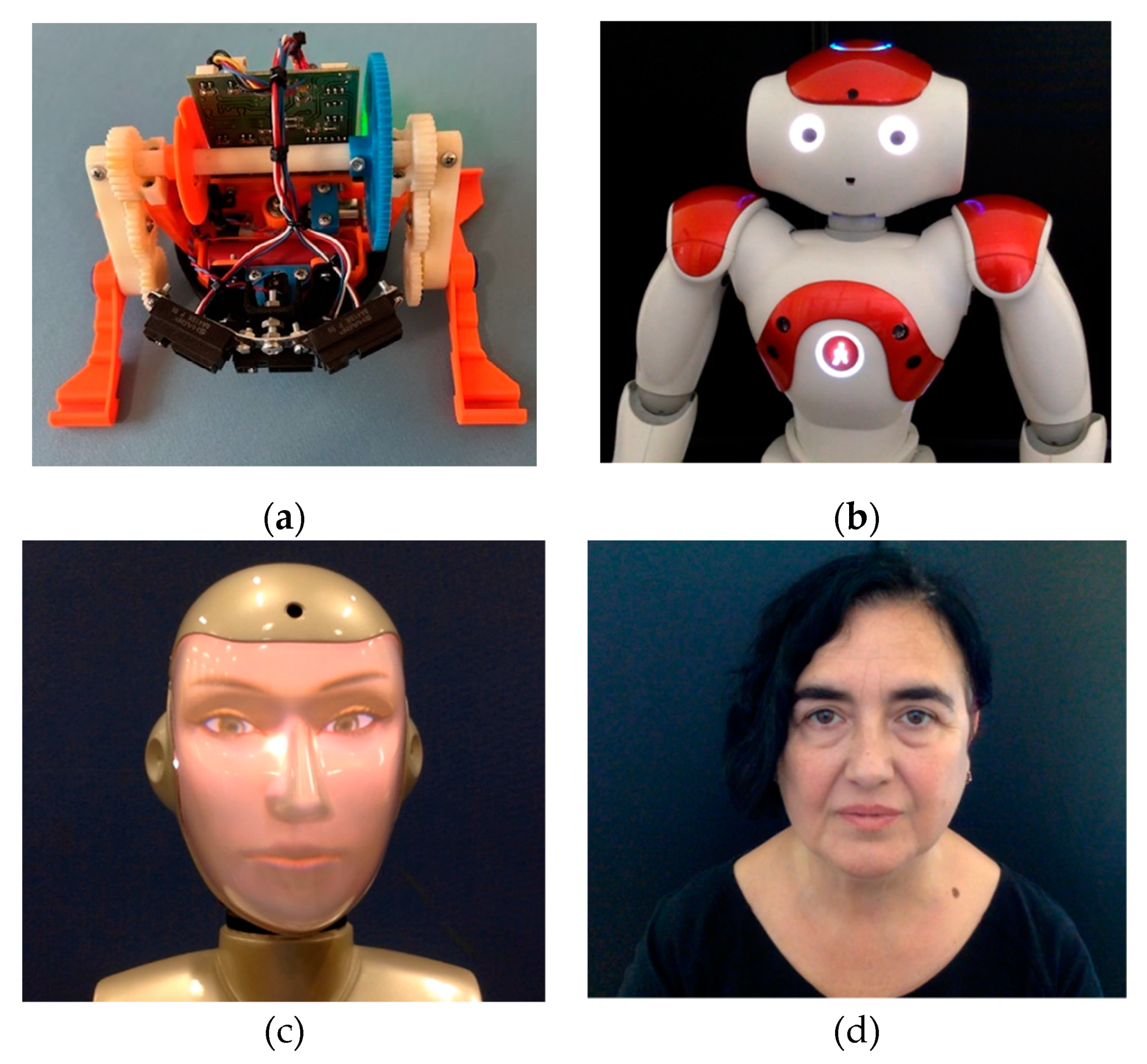

- Nikolov, V.; Dimitrova, M.; Chavdarov, I.; Krastev, A.; Wagatsuma, H. Design of educational scenarios with BigFoot walking robot: A cyber-physical system perspective to pedagogical rehabilitation. In International Work-Conference on the Interplay between Natural and Artificial Computation; Springer International Publishing: Cham, Switzerland, 2022; pp. 259–269. https://link.springer.com/chapter/10.1007/978-3-031-06242-1_26.

- Dimitrova, M.; Wagatsuma, H.; Tripathi, G.N.; Ai, G. Learner Attitudes towards Humanoid Robot Tutoring Systems: Measuring of Cognitive and Social Motivation Influences. In Cyber-Physical Systems for Social Applications; Dimitrova, M., Wagatsuma, H., Eds.; IGI Global: Hershey, PA, USA, 2019; pp. 1–24.

- https://wiki.engineeredarts.co.uk/SociBot.

| Name | Definition | Relevance to HRI |

|---|---|---|

| Weber’s law | “If X is a stimulus magnitude and X + ∆X is the next greater magnitude that can just be distinguished from X, then Weber’s law states that ∆X bears a constant proportion to X data” (33, p. 177).  Adapted from [33]. |

The JND depends on the psychological effect of the background stimuli, meaning that sometimes a small incremental change of a stimulus can lead to a bigger response in comparison to the same change of a larger stimulus (i.e. noise). On an abstract decision making level small JNDs may invoke large responses depending on the contexts, i.e. larger to perceptually confusing, than to unambiguous, human or robot parts (faces, hands, etc.) |

| Fechner’s law | “… if ∆X bears a constant proportion to X, so also does X + ∆X, and ln(X + DX) – lnX = constant” (20, p= 177). Therefore, the sensation is a logarithmic function of the stimulus intensity: S = lnX + constant.  Reproduced from [34] under Creative Commons Attribution (CC BY) license. |

Fechner’s law accounts best for the (almost) linear part of any measurable in the lab “stimulus-response” dependency of midrange intensity. On an abstract decision making level it supports the assumption that any cognitive system, as function of the underlying neurological brain processing, is a measuring device best adapted to an Euclidean topology of representation of the external environment [28]. |

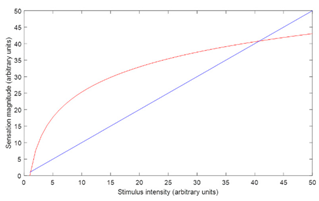

| Steven’s law | “… sensation was correctly reflected in magnitude estimation and was related to stimulus magnitude by a power law, S = aXβ … not a log law data” [35], (p.178).  Adapted from [35]. |

Steven’s law assumes that the human cognitive system is capable of mapping adequately the ratios of the responses to the ratios of the stimulus intensities, i.e. of higher level assessment of mathematical dependencies, existing in the environment. Therefore, it translates beyond the (physical/electro-chemical) properties of the sensor to the complex analyser abilities of the integrative function of the brain. It also states that, apart from the linear part of the power function, small increments of intensity result in an exponentially high increment of the response (i.e. pain, where the power degree is > 1). With strong light, for example, the power degree is < 1 (as in the figure to the left). |

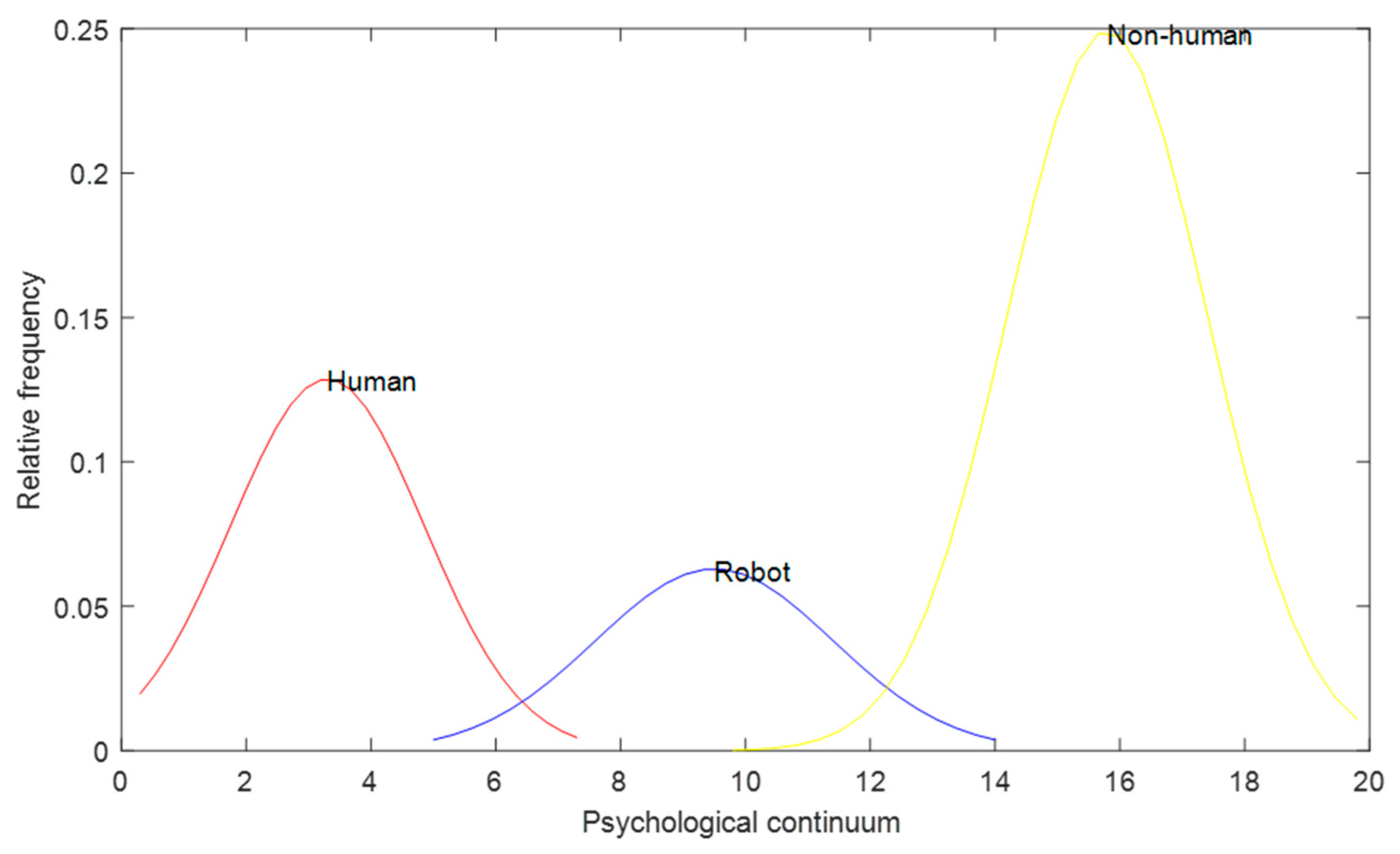

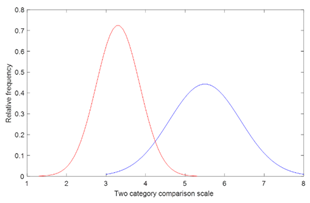

| Thurstone law | “the distribution of attitude of a group (of people*) on a specified issue may be represented in the form of a frequency distribution…limited to those aspects of attitudes for which one can compare individuals by the "more and less" type of judgment...The scale is so constructed that two opinions separated by a unit distance on the base line seem to differ as much in the attitude variable involved as any other two opinions on the scale which are also separated by a unit distance [32] (p.529). Adapted from [32]. |

Thurstone demonstrated that Weber’s and Fechner’s laws are independent from each other [27]. He proposed a method of indirect scaling, allowing to devise an interval scale of seemingly non-measurable qualities such as social attitudes. This method is therefore appropriate to formally represent in quantitative ways the distances between characteristics of radically different complex items like robots or people. Moreover, it reflects the ability of the brain to perform processing over complex multidimensional probabilistic representations - physical and social. |

| Tulving’s law of recognition failure | Tulving’s law of recognition failure postulates a slight distortion of the independence assumption of 2 cognitive processes – recognition Rn and recall Rc - operating over the mental representation of one and the same stimulus [21]. The probability of jointly recognizing and recalling a stimulus is expected to slightly violate the independence assumption, i.e. P(Rn|Rc) = P(Rn)P(Rc) + δ, or P(Rn|Rc)/P(Rc) = PRn + δ.  A hypothetical plot of the above function. A hypothetical plot of the above function. |

It is well known that when the conditional probability of an event P(Rn|Rc) in respect to another event P(Rc) equals its probability of occurence of P(Rn), the two processes are independent [36]. In the theory of Tulving and Wiseman [21], this independence relation is slightly violated by a fraction δ, where δ = c[1-P(Rn)], c is a coefficient in the range (0, 1]. Tulving’s theory of ‘trace independence’ when memorizing a stimulus demonstrates that what is learnt depends of the surrounding context of the learning situation, on the one hand, and the multiple, almost independent memory traces, created during learning, on the other [21].This may apply to memorizing complex stimuli with sophisticated behavior, like robots, as well. |
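The five laws in the table can be made concrete with a short numerical sketch. The Python below is illustrative only and is not the authors’ method: the function names, the Weber fraction `k = 0.1`, the Stevens exponents and the Thurstone scale values are all hypothetical. The Thurstone function implements the standard Case V solution (equal, uncorrelated discriminal dispersions), which is only one case of the law of comparative judgment. The recognition-failure function follows Tulving and Wiseman’s published form, P(Rn | Rc) = P(Rn) + c[P(Rn) − P(Rn)²], with c = 0.5 as a default, the value usually cited for their fitted function.

```python
"""Illustrative sketch of the psychophysical laws summarized in the table.

All parameter values are hypothetical and chosen only for demonstration.
"""
import math
from statistics import NormalDist


def weber_jnd(x: float, k: float = 0.1) -> float:
    """Weber's law: the just noticeable increment is a constant fraction k of x.

    k = 0.1 is a hypothetical Weber fraction, not a measured value.
    """
    return k * x


def fechner_sensation(x: float, const: float = 0.0) -> float:
    """Fechner's law: S = ln X + constant (x must be positive)."""
    return math.log(x) + const


def stevens_sensation(x: float, a: float = 1.0, beta: float = 1.0) -> float:
    """Stevens' power law: S = a * X**beta."""
    return a * x ** beta


def thurstone_case_v(p: list[list[float]]) -> list[float]:
    """Thurstone's law of comparative judgment, Case V solution.

    p[i][j] is the proportion of judgments in which item j was preferred
    to item i (diagonal = 0.5; all entries strictly between 0 and 1).
    Each proportion is converted to a unit-normal deviate z, and the scale
    value of item j is the mean of column j. The result is shifted so that
    the lowest item sits at 0, since an interval scale has an arbitrary origin.
    """
    z = NormalDist().inv_cdf
    n = len(p)
    means = [sum(z(p[i][j]) for i in range(n)) / n for j in range(n)]
    origin = min(means)
    return [m - origin for m in means]


def tulving_wiseman(p_rn: float, c: float = 0.5) -> float:
    """Recognition failure: P(Rn|Rc) = P(Rn) + c * [P(Rn) - P(Rn)**2]."""
    return p_rn + c * (p_rn - p_rn ** 2)


if __name__ == "__main__":
    # Weber: the JND grows in proportion to the stimulus magnitude.
    print([weber_jnd(x) for x in (10.0, 100.0)])
    # Fechner: equal stimulus ratios (2:1) give equal sensation differences.
    print(fechner_sensation(20.0) - fechner_sensation(10.0),
          fechner_sensation(200.0) - fechner_sensation(100.0))
    # Stevens: doubling X multiplies S by 2**beta, whatever the starting X.
    for beta in (0.5, 2.0):  # hypothetical exponents below and above 1
        print(beta, stevens_sensation(20.0, beta=beta) / stevens_sensation(10.0, beta=beta))
    # Thurstone: build proportions from hypothetical true scale values, then recover them.
    true_scale = [0.0, 0.5, 1.2]
    phi = NormalDist().cdf
    p = [[phi(sj - si) for sj in true_scale] for si in true_scale]
    print(thurstone_case_v(p))
    # Tulving-Wiseman: P(Rn|Rc) never falls below P(Rn) when c > 0.
    print([round(tulving_wiseman(q), 3) for q in (0.2, 0.5, 0.8)])
```

The Thurstone demo generates the preference proportions from known scale values and checks that Case V recovers them. With real paired-comparison data from robot and human stimuli, the recovered values would be interval-scale distances only to the extent that the Case V assumptions hold.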

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).