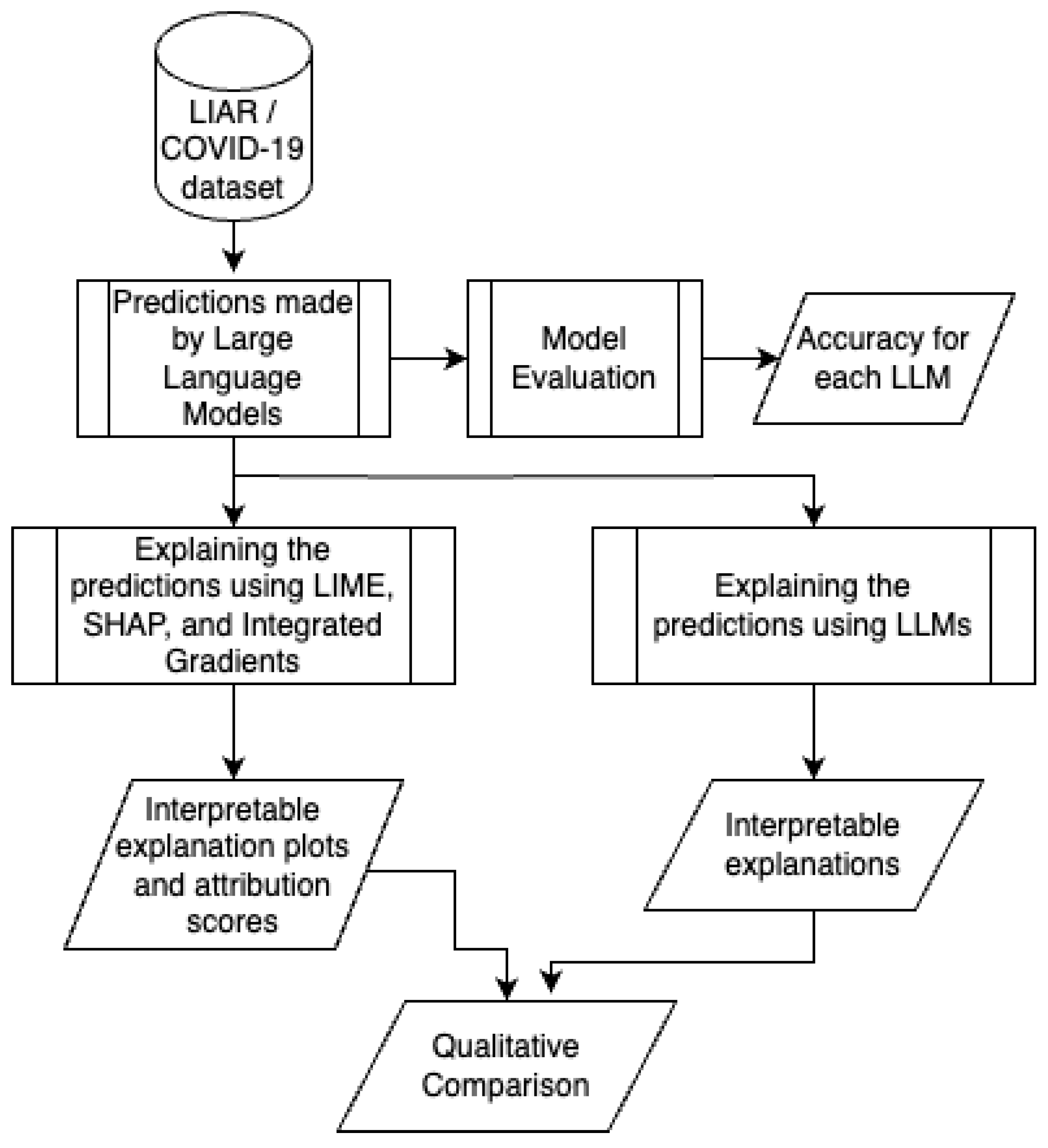

Given the poor performance of LLMs on the LIAR dataset, the next set of experiments explores which features the LLMs relied on when making their predictions. While simply prompting an LLM to explain its answer is an option, we explore using known explainability techniques: Integrated Gradients, LIME, and SHAP.

3.1. Integrated Gradients

Integrated Gradients [20] attributes a model’s output to its input features by integrating the gradient of the output with respect to each feature along a path from a baseline to the input. In the context of the experiments, features, words, and tokens all refer to the constituents of the prompt. The integrated gradients serve as the attribution scores. As such, the attributions should sum to the model’s output (more precisely, to the difference between the output at the input and the output at the baseline), and the gap between the two, the convergence delta, gives an intuition for how accurate the attributions are, as described by the axioms in the original paper [20].

Sundararajan et al. [20] formally defined the Integrated Gradients attribution for the i-th feature by the following equation:

$$\mathrm{IG}_i(x) = (x_i - x'_i) \int_{0}^{1} \frac{\partial f\bigl(x' + \alpha (x - x')\bigr)}{\partial x_i} \, d\alpha$$

In the equation, $x$ represents the input data item and $x'$ is the baseline reference item. Thus, the aim of Integrated Gradients is to explain the shift in the model’s output from the baseline ($x'$) to the actual input ($x$). To do so, the method uses a variable $\alpha$, which ranges from 0 to 1, to progress along a straight-line path between $x'$ and $x$. Along this path, the gradient of the model’s output ($f$) with respect to the input is integrated. When proposing Integrated Gradients, Sundararajan et al. [20] also proposed approximating the integral with a Riemann sum according to the following equation, which is used in our experiments:

$$\mathrm{IG}_i^{\mathrm{approx}}(x) = (x_i - x'_i) \sum_{k=1}^{m} \frac{\partial f\bigl(x' + \tfrac{k}{m} (x - x')\bigr)}{\partial x_i} \cdot \frac{1}{m}$$

where $m$ is the number of steps in the approximation.
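As a worked illustration (not taken from the original paper), consider a hypothetical two-feature function $f(x) = x_1 x_2$ with the all-zero baseline. The derivation below shows that the exact attributions sum to $f(x) - f(x')$, and that a right-endpoint Riemann sum with $m$ steps leaves a convergence delta of $x_1 x_2 / m$ for this particular function.

```latex
\begin{aligned}
f(x) &= x_1 x_2, \qquad x' = (0, 0), \\
\frac{\partial f}{\partial x_1}\bigl(x' + \alpha (x - x')\bigr) &= \alpha x_2,
\qquad
\frac{\partial f}{\partial x_2}\bigl(x' + \alpha (x - x')\bigr) = \alpha x_1, \\
\mathrm{IG}_1(x) &= (x_1 - 0) \int_0^1 \alpha x_2 \, d\alpha = \tfrac{1}{2} x_1 x_2, \\
\mathrm{IG}_2(x) &= (x_2 - 0) \int_0^1 \alpha x_1 \, d\alpha = \tfrac{1}{2} x_1 x_2, \\
\mathrm{IG}_1(x) + \mathrm{IG}_2(x) &= x_1 x_2 = f(x) - f(x'), \\[4pt]
\text{Riemann sum with } m \text{ steps:}\quad
\sum_{k=1}^{m} \frac{k}{m} \cdot \frac{1}{m} &= \frac{m + 1}{2m}
\;\Longrightarrow\;
\mathrm{IG}_1^{\mathrm{approx}} + \mathrm{IG}_2^{\mathrm{approx}} = x_1 x_2 \, \frac{m + 1}{m}, \\
\text{convergence delta} &= \left| x_1 x_2 \, \frac{m + 1}{m} - x_1 x_2 \right| = \frac{|x_1 x_2|}{m}.
\end{aligned}
```

In this toy case the delta shrinks in proportion to $1/m$, which is why more steps give attributions that sum more closely to the change in the output.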

3.2. LIME

Local Interpretable Model-agnostic Explanations [19], abbreviated LIME, is another explainability approach that aims to provide localized interpretations of each feature’s contribution to the model’s output. LIME is a perturbation-based approach that produces an explanation based on the following formula:

$$\xi(x) = \underset{g \in G}{\arg\min} \; L(f, g, \pi_x) + \Omega(g)$$

where $G$ is the family of explanation functions, $L$ is a fidelity function, $x$ is the input features, $\pi_x$ is a proximity measure that weights perturbed samples by their similarity to $x$, $\Omega$ is a complexity measure, and $f$ is the function to approximate.

When proposing LIME, Ribeiro et al. [19] explored using an approximation model as $g$, where $g$ is trained to predict the target model’s output ($f$) based on which input features are removed or masked, thereby approximating how features contribute to the target model’s output as per the above equation. In the experiments for this work, Captum [26] and its implementation of LIME are used.
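The procedure can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the Captum implementation used in the experiments: `black_box` is a hypothetical stand-in for the target model, the proximity weighting is an exponential kernel on the cosine distance between mask vectors with an arbitrarily chosen width, and the surrogate is fitted with weighted, lightly ridge-regularized least squares rather than a Lasso model.

```python
import math
import random


def black_box(mask):
    """Hypothetical target model f: its output when only unmasked features are kept."""
    return 0.1 + 0.5 * mask[1] + 0.3 * mask[3] - 0.2 * mask[4]


def similarity(mask, kernel_width=0.75):
    """Exponential kernel on the cosine distance to the unperturbed input (all ones)."""
    cosine = math.sqrt(sum(mask) / len(mask))  # cosine between a 0/1 mask and all ones
    distance = 1.0 - cosine
    return math.exp(-(distance ** 2) / (kernel_width ** 2))


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def lime(f, n_features, n_samples=512, ridge=1e-6, seed=0):
    """Fit a weighted linear surrogate g on random maskings of the input."""
    rng = random.Random(seed)
    masks = [[rng.randint(0, 1) for _ in range(n_features)] for _ in range(n_samples)]
    weights = [similarity(mask) for mask in masks]
    outputs = [f(mask) for mask in masks]
    rows = [[1.0] + [float(v) for v in mask] for mask in masks]  # leading 1 = intercept
    d = n_features + 1
    # Weighted normal equations: (X^T W X + ridge * I) beta = X^T W y
    xtwx = [
        [
            sum(w * row[i] * row[j] for w, row in zip(weights, rows))
            + (ridge if i == j else 0.0)
            for j in range(d)
        ]
        for i in range(d)
    ]
    xtwy = [sum(w * row[i] * y for w, row, y in zip(weights, rows, outputs)) for i in range(d)]
    beta = solve(xtwx, xtwy)
    return beta[1:]  # per-feature attributions, intercept dropped


attributions = lime(black_box, n_features=5)
for index, value in enumerate(attributions):
    print(f"feature {index}: {value:+.3f}")
```

Because the toy `black_box` is itself linear in the mask, the surrogate's coefficients closely match the weights that were built into it; with a real LLM the surrogate is only a local approximation.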

3.4. Setup and Hyperparameters

For the experiments, 150 random samples were selected from the LIAR dataset and converted to prompts as described above. The above explainability methods were then run on each prompt, using their implementations in the Captum library.

Integrated Gradients was performed in the embedding space using n_steps = 512, where n_steps is the number of rectangles in the Riemann sum approximation, since smaller numbers of steps led to greater discrepancies between the integrated gradients and the actual model outputs. Using n_steps = 512 was sufficient to consistently reduce the convergence delta, that is, the difference between the sum of the attributions and the actual model output, to less than 10 percentage points. Increasing the number of steps to 1024 and above yielded only slightly more accurate approximations at the cost of much more computing power. Therefore, for efficiency, all experiments used 512 steps.
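The trade-off between step count and convergence delta can be illustrated with a small, self-contained sketch. It assumes a hypothetical three-feature logistic `model` with an analytic gradient and an all-zero baseline, and uses a right-endpoint Riemann sum; it is not the Captum configuration from the experiments, and the deltas it prints are specific to this toy function.

```python
import math

WEIGHTS = (1.5, -2.0, 0.5)  # hypothetical model weights, chosen for illustration


def model(x):
    """Hypothetical stand-in for a model: logistic output over a weighted sum."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x))
    return 1.0 / (1.0 + math.exp(-z))


def gradient(x):
    """Analytic gradient of `model` with respect to each input feature."""
    p = model(x)
    return [p * (1.0 - p) * w for w in WEIGHTS]


def integrated_gradients(x, baseline, n_steps):
    """Right-endpoint Riemann-sum approximation of Integrated Gradients."""
    totals = [0.0] * len(x)
    for k in range(1, n_steps + 1):
        alpha = k / n_steps
        # Point on the straight-line path from the baseline to the input.
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(gradient(point)):
            totals[i] += g
    return [(xi - b) * t / n_steps for xi, b, t in zip(x, baseline, totals)]


def convergence_delta(x, baseline, n_steps):
    """Gap between the summed attributions and the change in model output."""
    attributions = integrated_gradients(x, baseline, n_steps)
    return abs(sum(attributions) - (model(x) - model(baseline)))


x = [2.0, -1.0, 3.0]
baseline = [0.0, 0.0, 0.0]
deltas = {n: convergence_delta(x, baseline, n) for n in (8, 64, 512, 1024)}
for n, delta in deltas.items():
    print(f"n_steps={n:5d}  convergence delta={delta:.6f}")
```

In this sketch the delta keeps shrinking as the step count grows, while the cost grows linearly with the number of gradient evaluations, which mirrors the diminishing returns described above.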

LIME and SHAP were each performed with 512 perturbation samples. The approximation model used for LIME was an SKLearnLasso model with . The similarity function for token sequences was the cosine similarity of the sequences in the embedding space. The perturbation function for LIME masked tokens whose indexes i were sampled from a discrete uniform distribution over the positions of the tokenized prompt, excluding the first token. When the first token was also allowed to be masked, model outputs were sometimes NaN. This may be because LLM tokenization prepends a start token, which was found to be crucial for maintaining meaningful outputs.
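A perturbation function and similarity function of this kind might look roughly like the sketch below. Everything specific in it is an assumption made for illustration: the toy `EMBEDDINGS` table, the `<mask>` token with a zero embedding, the number of masked positions, sampling without replacement, and the choice to represent a sequence as the concatenation of its token embeddings. None of these details are confirmed by the experiments.

```python
import math
import random

# Hypothetical toy embedding table; a real model would supply these vectors.
EMBEDDINGS = {
    "<s>": [1.0, 0.0],
    "Please": [0.5, 0.5],
    "rate": [0.2, 0.9],
    "this": [0.7, 0.1],
    "claim": [0.3, 0.8],
    "<mask>": [0.0, 0.0],
}


def sample_masked_indexes(n_tokens, n_masked, rng, protect_first=True):
    """Draw distinct token positions uniformly, optionally never position 0."""
    start = 1 if protect_first else 0
    return sorted(rng.sample(range(start, n_tokens), n_masked))


def apply_mask(tokens, masked_indexes, mask_token="<mask>"):
    """Replace the tokens at the given positions with the mask token."""
    masked = set(masked_indexes)
    return [mask_token if i in masked else token for i, token in enumerate(tokens)]


def embed(tokens):
    """Concatenate per-token embeddings into one flat vector (an assumption)."""
    return [value for token in tokens for value in EMBEDDINGS[token]]


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors; 0.0 if either is zero."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


rng = random.Random(0)
tokens = ["<s>", "Please", "rate", "this", "claim"]
indexes = sample_masked_indexes(len(tokens), n_masked=2, rng=rng)
perturbed = apply_mask(tokens, indexes)
score = cosine_similarity(embed(tokens), embed(perturbed))
print(perturbed, round(score, 3))
```

Keeping position 0 out of the sampling range is what protects the start token; setting `protect_first=False` reproduces the variant in which the first token may also be masked.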

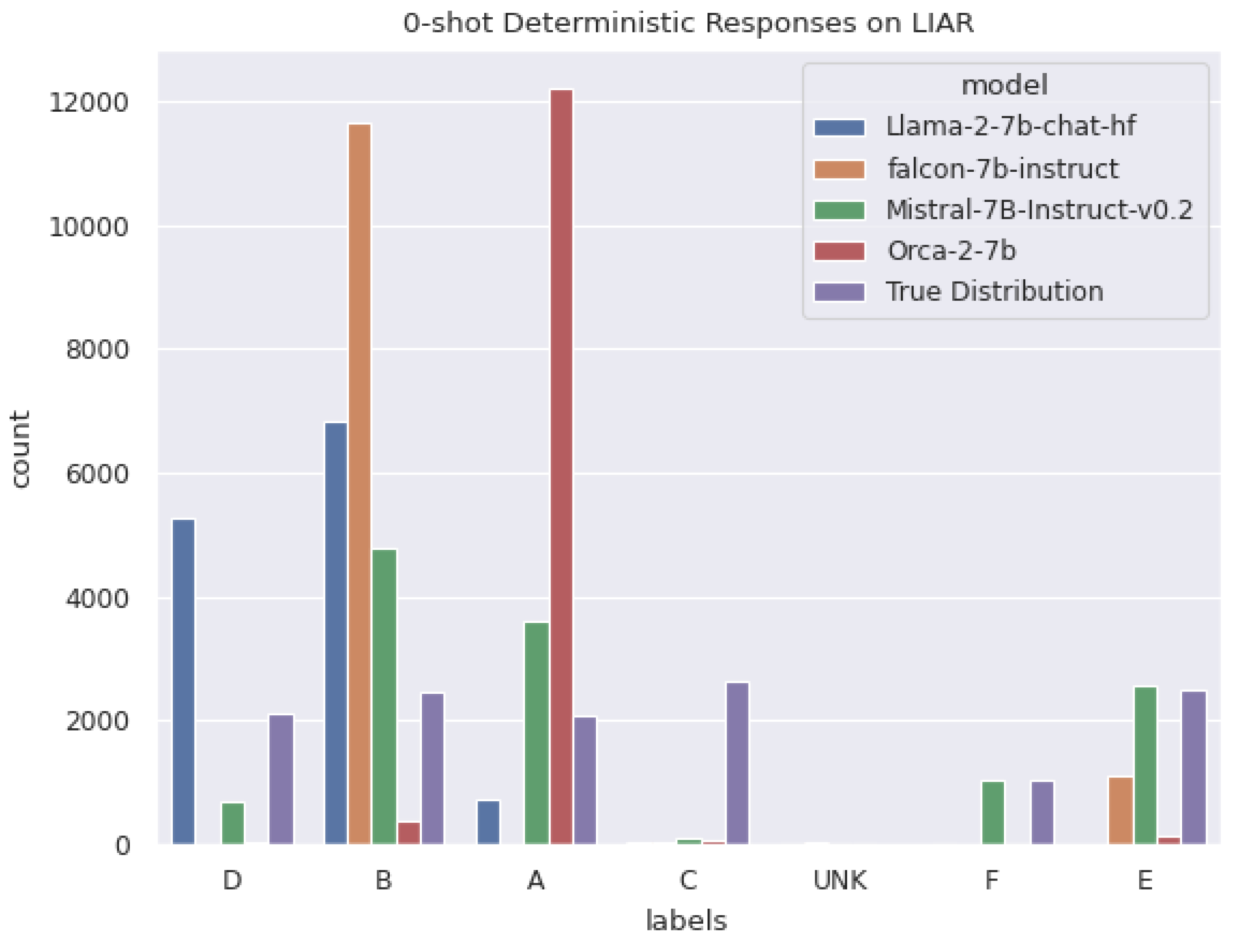

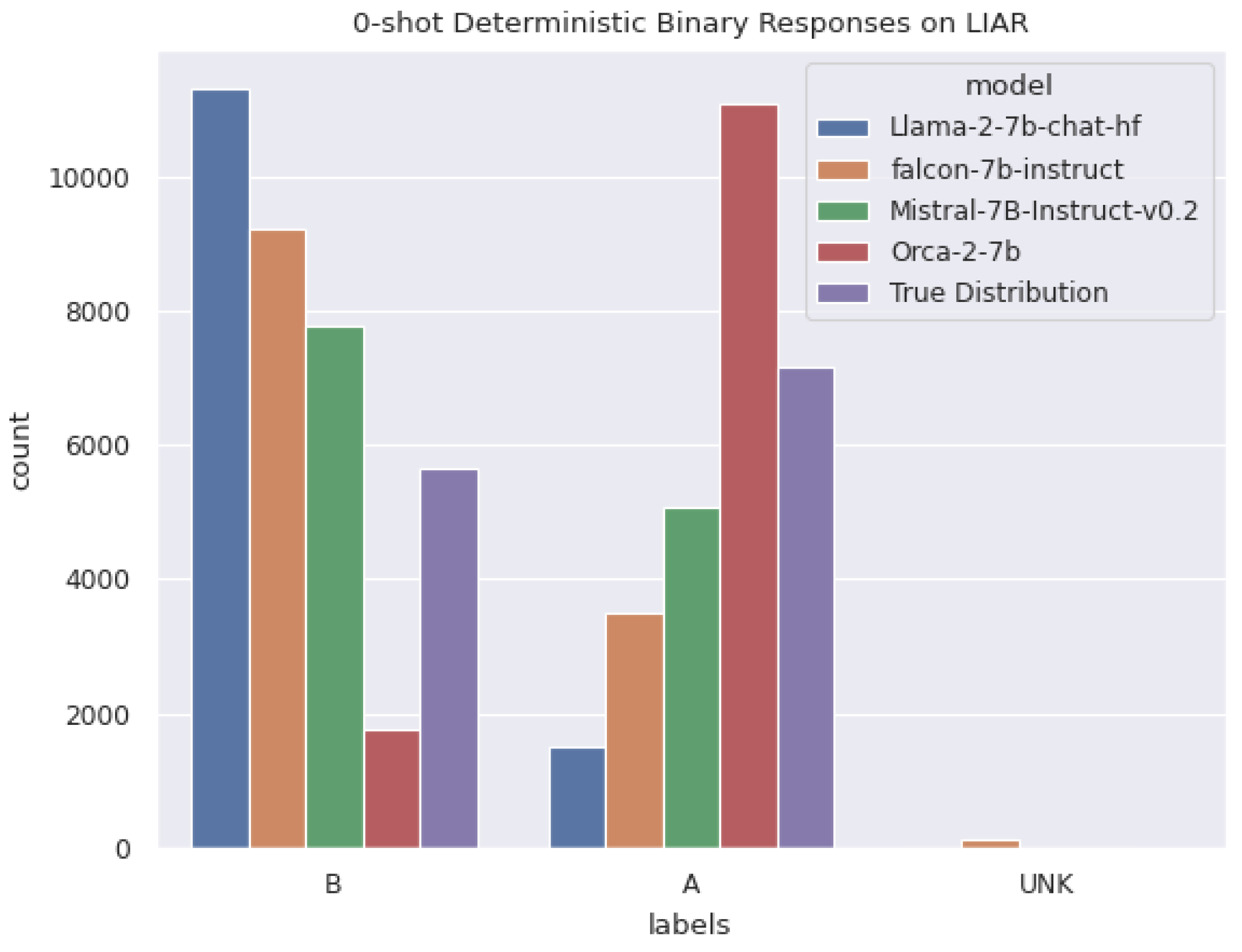

3.5. Interpretation of the Model Performance

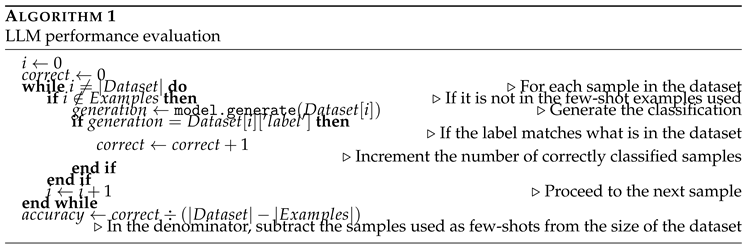

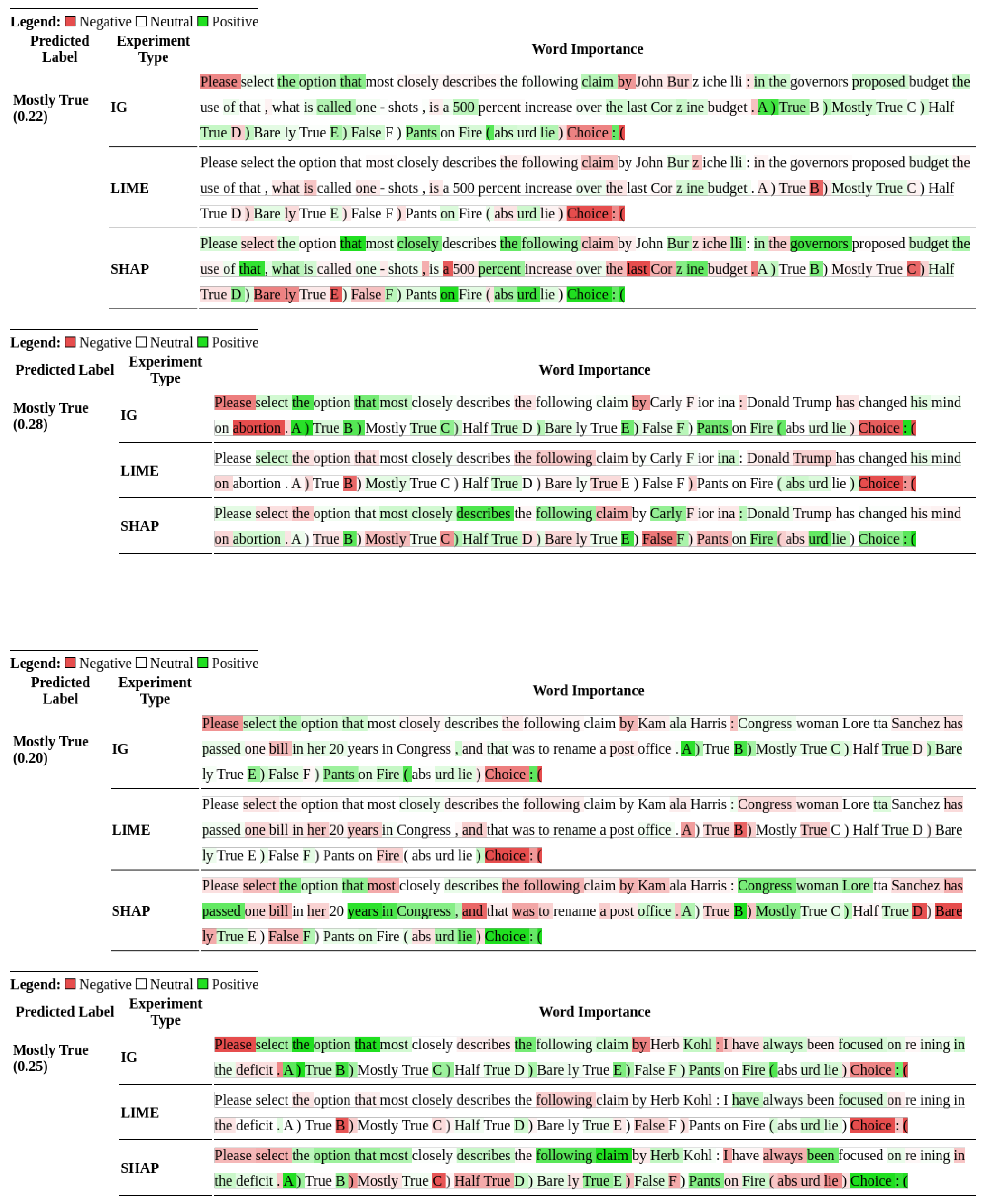

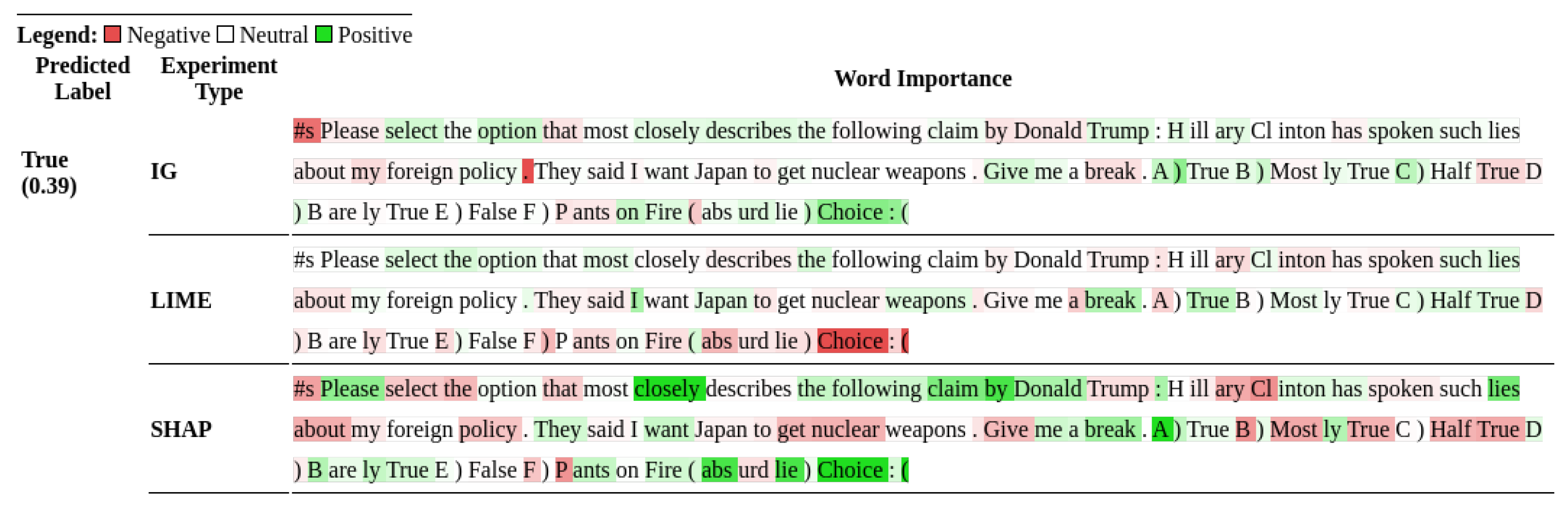

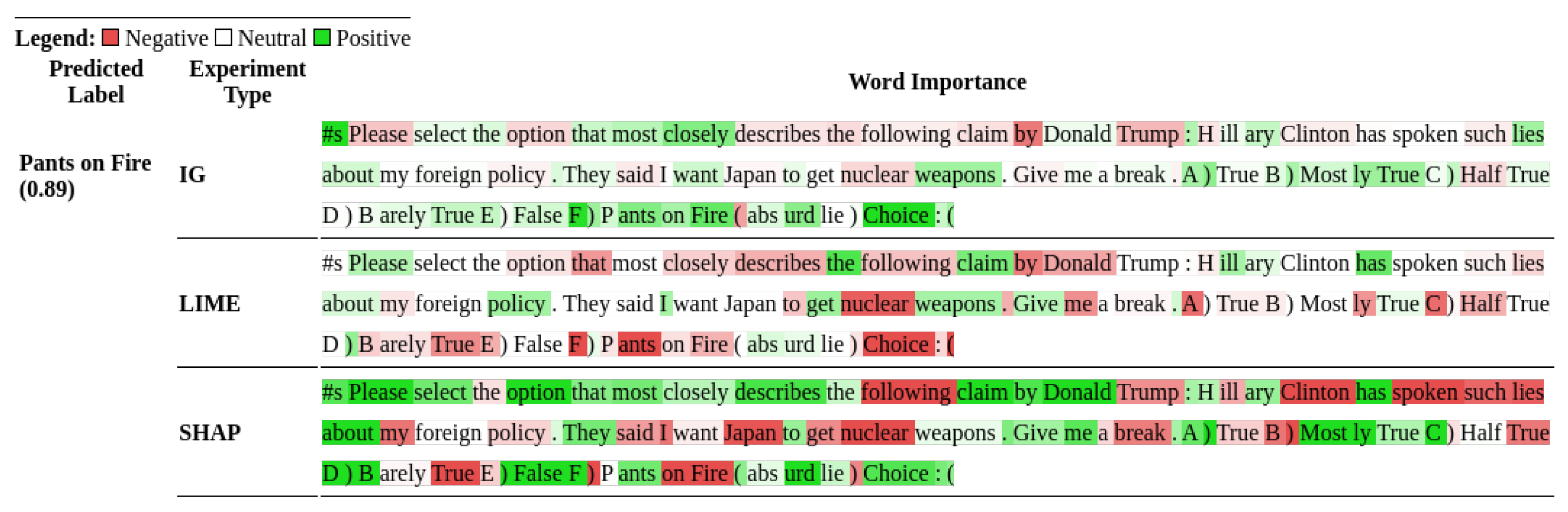

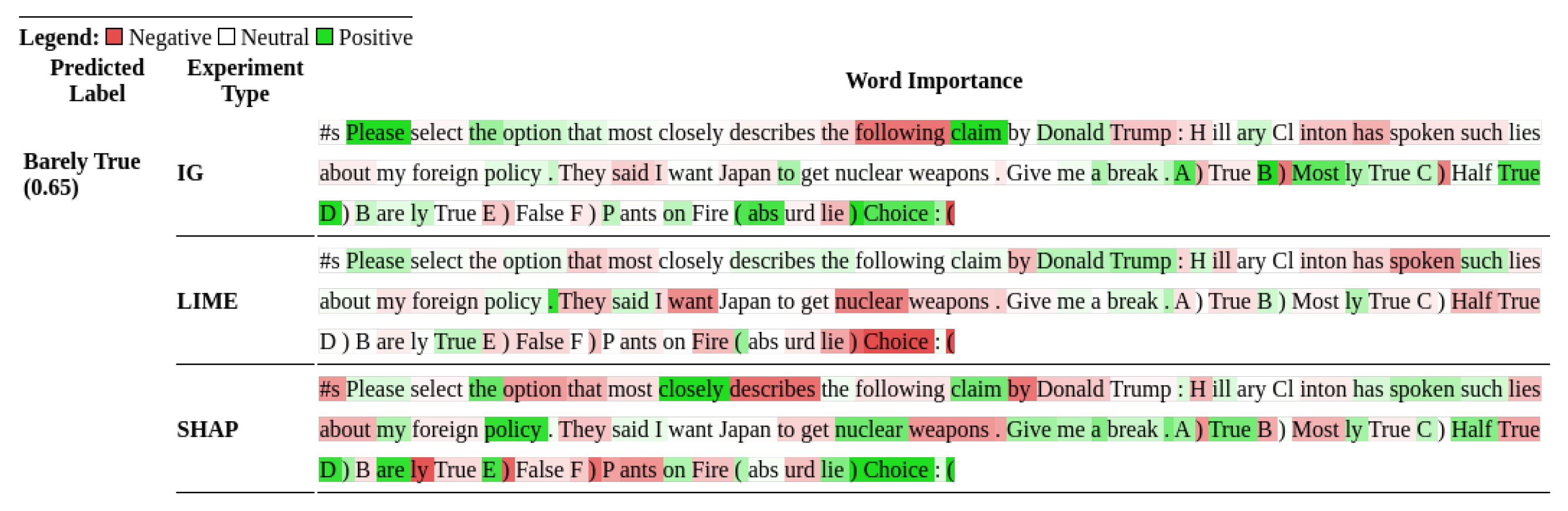

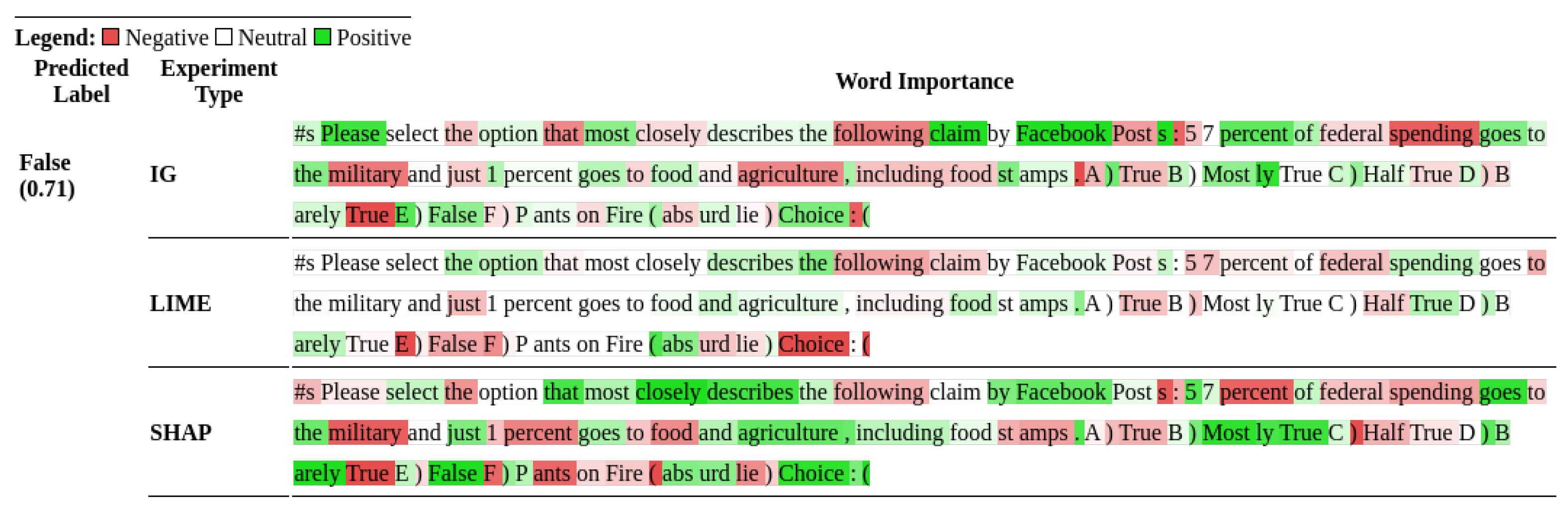

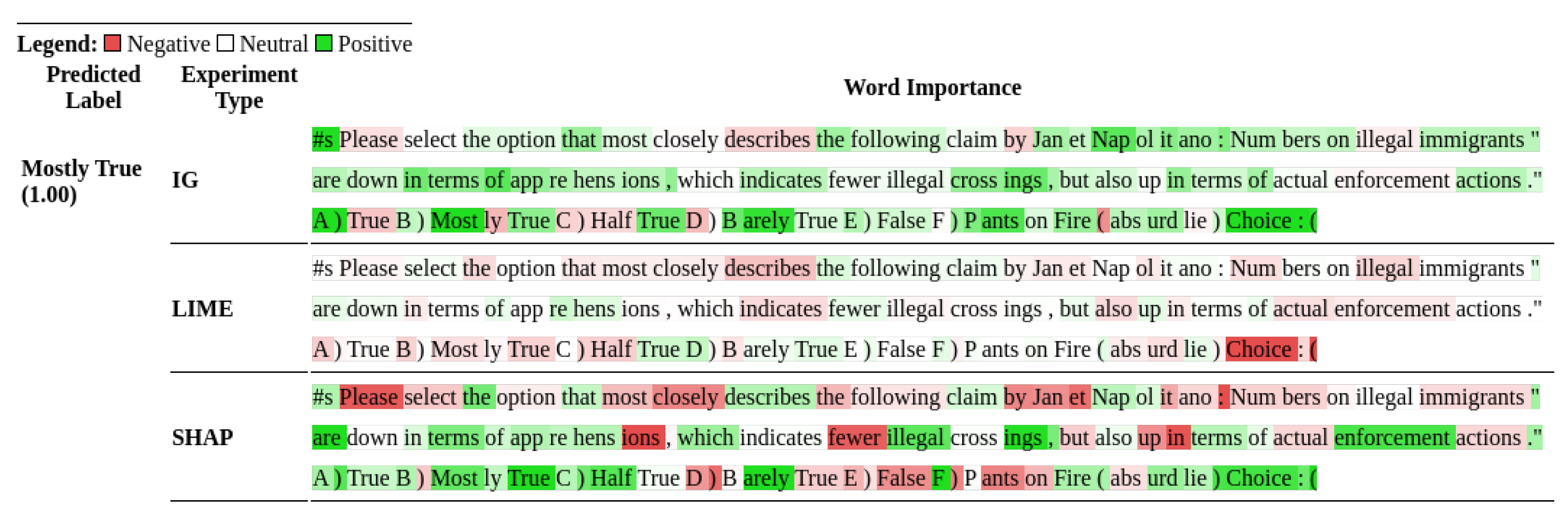

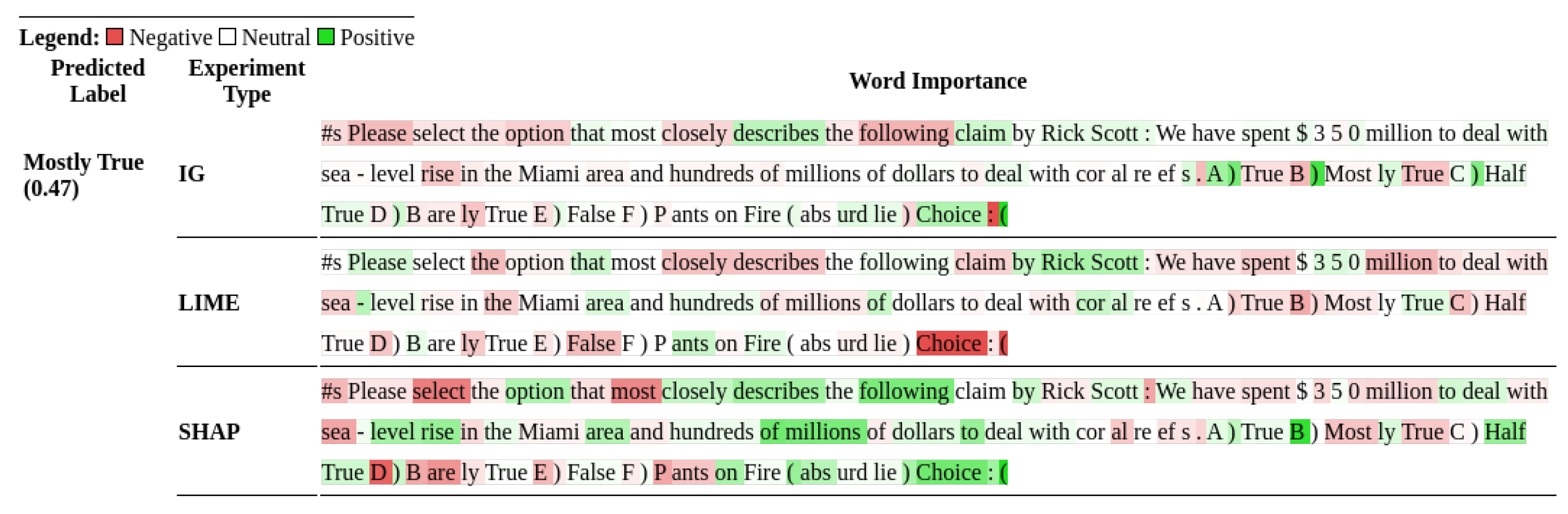

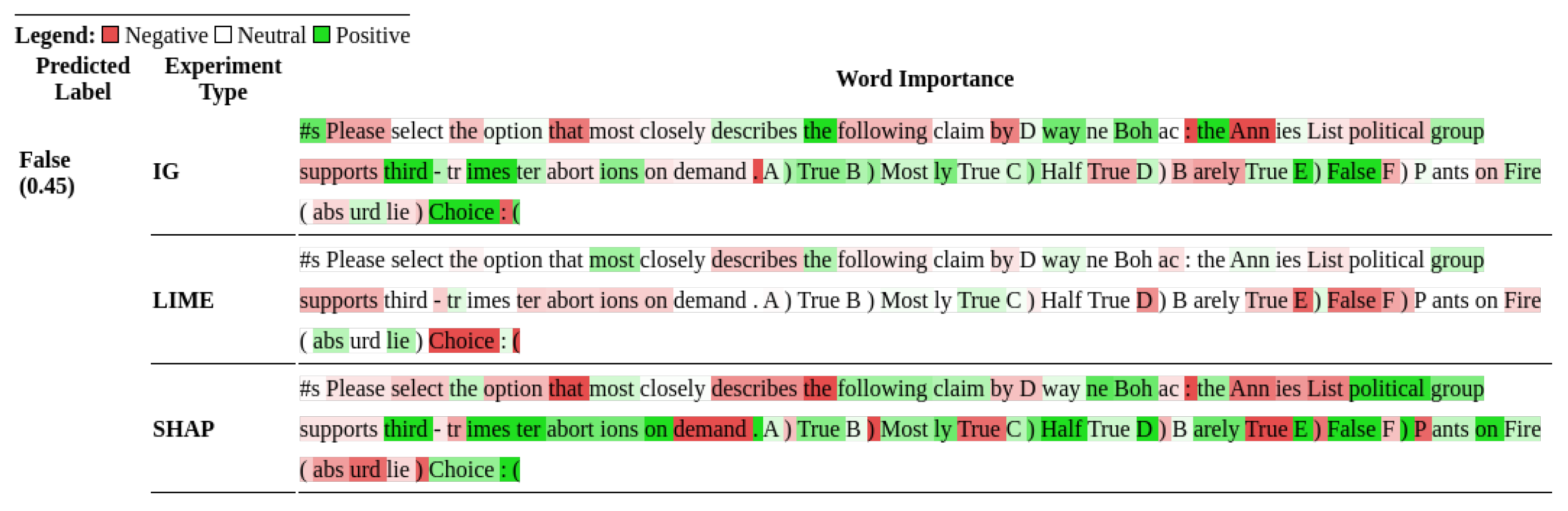

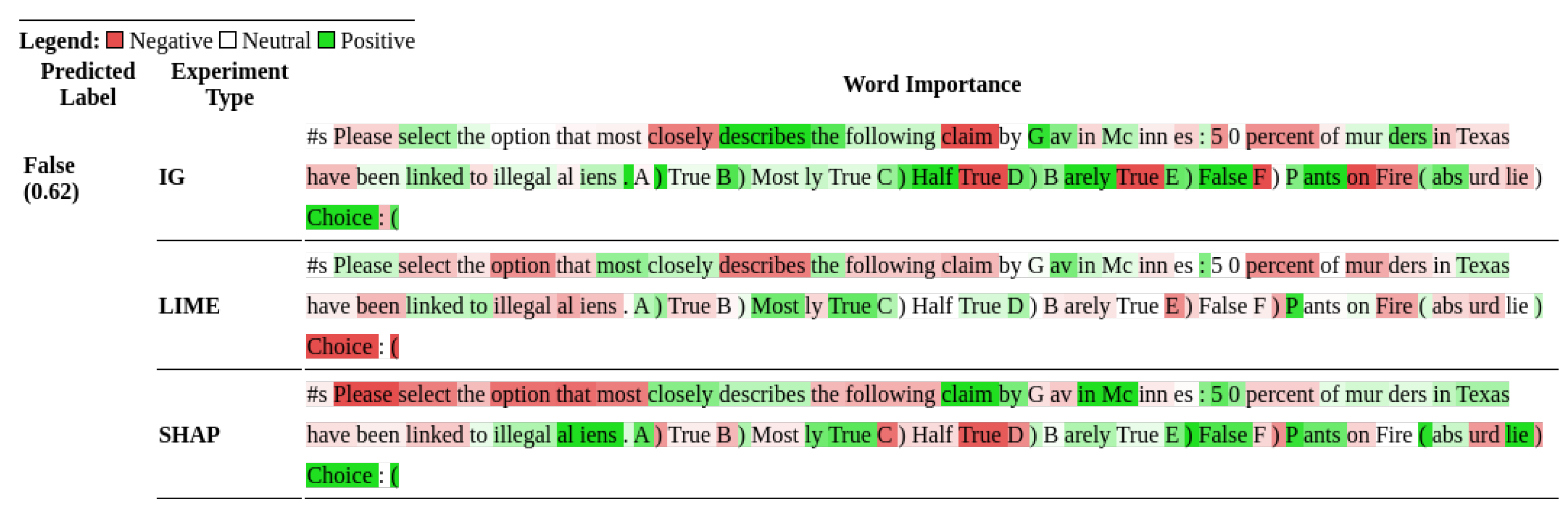

Figure 4,

Figure 5,

Figure 6 and

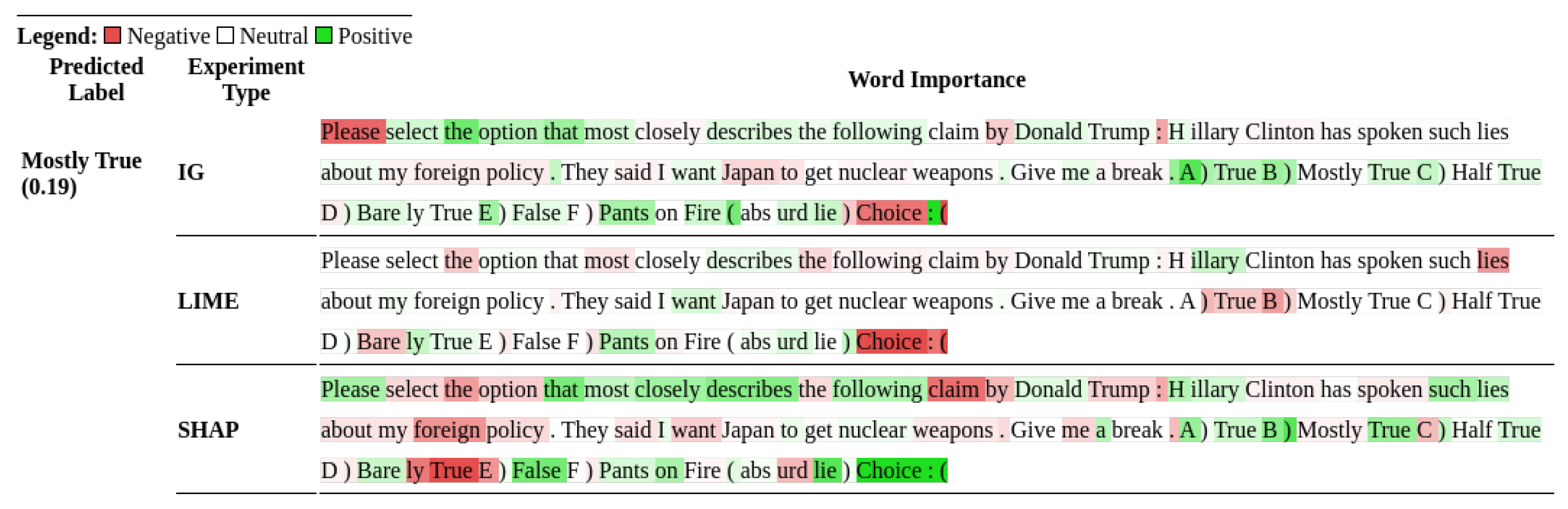

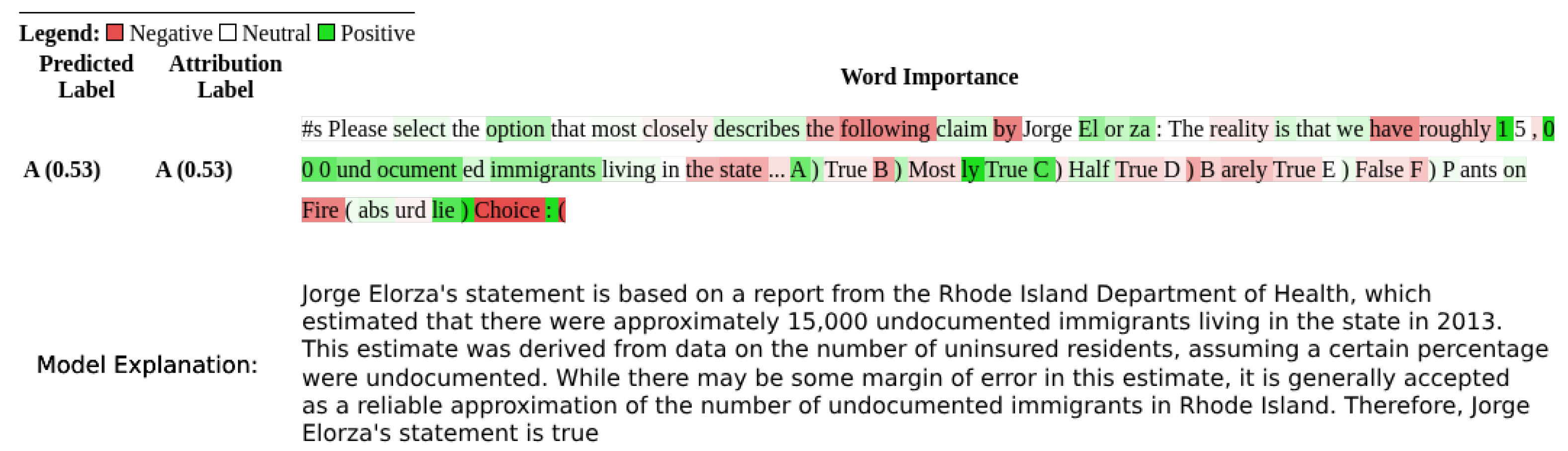

Figure 7 show the output from the explainability algorithms generated for a random sample from the LIAR dataset. The figures compare the results of the different explainability techniques across different models. The figures show the classification that the model produced along with the probability attached to it. For example, Mostly True (0.07) indicates that the probability of outputting "B", which corresponds to Mostly True, was 0.07, and the highlighted text that goes with it indicates what tokens increased or decreased that probability. The highlighted token’s impact on the overall model output is reflected in the highlight’s intensity.

The importance an LLM attaches to the words in the prompt to arrive at the classification is indicated by the highlighting and the color legend. Most of the attributions in the figures seem similar to what humans would highlight if they were to classify the same claims. For instance, in Figure 4, the highlighting using SHAP shows that the LLM’s decision to classify the statement as "B) Mostly True" was positively influenced by the words highlighted in green, such as "that most closely describes, Hillary, such lies,...", and negatively influenced by the words highlighted in red, such as "claim by, Trump, about, foreign policy,...". More such attributions and classifications for other snippets from the dataset can be viewed in the Appendix in Figure A1.

To aid visualization of the contributions of each token, attributions were scaled by a factor of

. These attributions were then used solely for visualizing, and the original attributions were used in the rest of the experiments. Brightnesses of colors, ranging from 0 to 100, were calculated by the Captum package, which calculates intensity as

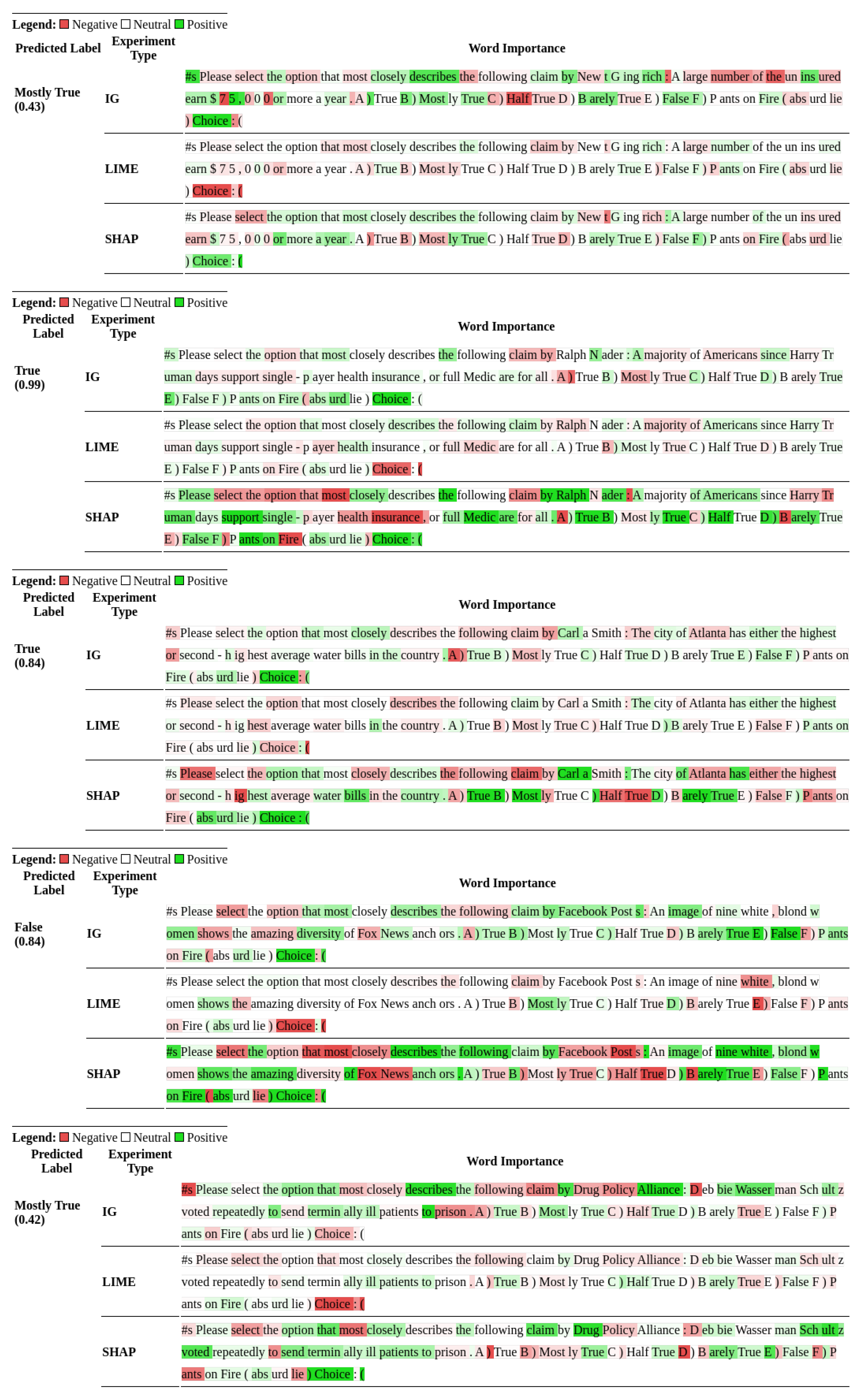

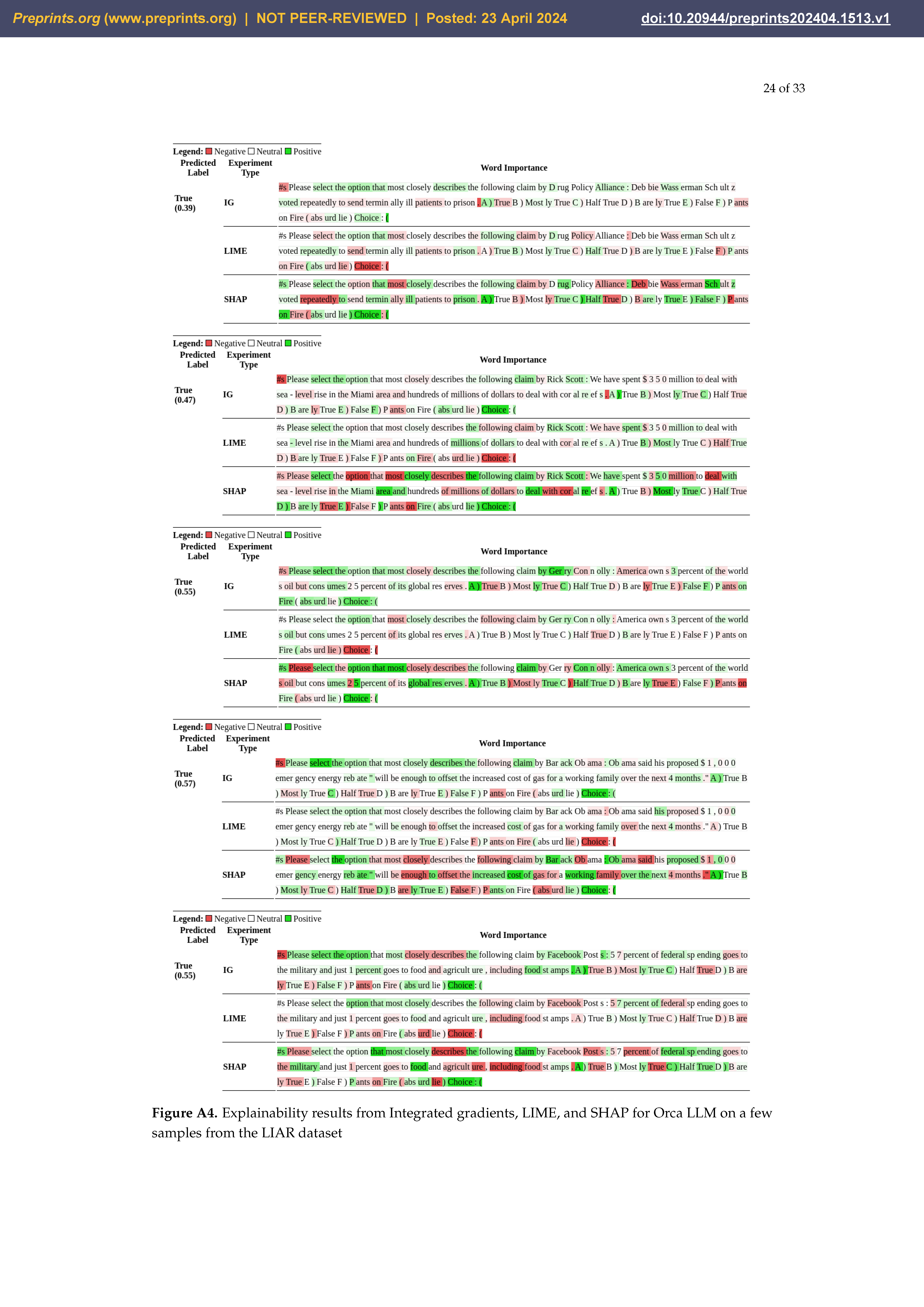

Consistently, the word "Please" at the beginning of each prompt and the word "Choice" at the end of each prompt were found to be important contributors to the model’s output. LIME and Integrated Gradients produced sparser attributions on average, although LIME curiously did not highlight the word "Please" nearly as often as Integrated Gradients. As can be seen from Table 8, out of the three explainability methods used, SHAP produced by far the most varied attributions. This is confirmed by the fact that words besides "Choice" appeared highlighted far more often than with the other two methods.

Despite these non-intuitive observations, LIME, SHAP, and Integrated Gradients do highlight features, which in this case are words or parts of words, in the prompt that humans would categorize as important. Hot topics, such as "third-trimester abortion" and "illegal aliens," as well as large quantities, such as "billions" or "millions," are often labeled as important features by at least one of the explainability methods, as seen in Figure 8, Figure 9, Figure 10 and Figure 11. The speaker and any named entities in the claim are also frequent contributors to model outputs. Similarly, strong actions or verbs within the claims, such as "murder," are also labeled as important features (Figure 12). More such information from the dataset is analyzed in the Appendix in Figure A2–Figure A4.

The results from the explainability methods do not entirely align with the quantitative results since they suggest that most of the models do focus on the correct aspects of prompts. In other words, these results suggest that LLMs understand the prompts and know what to focus on, but lack the correct knowledge, possibly because the LLMs were not trained on the corresponding facts.

The problem is further examined by analyzing differences in results between models. First, a comparison is made between numerical attribution values, which varied significantly amongst models.

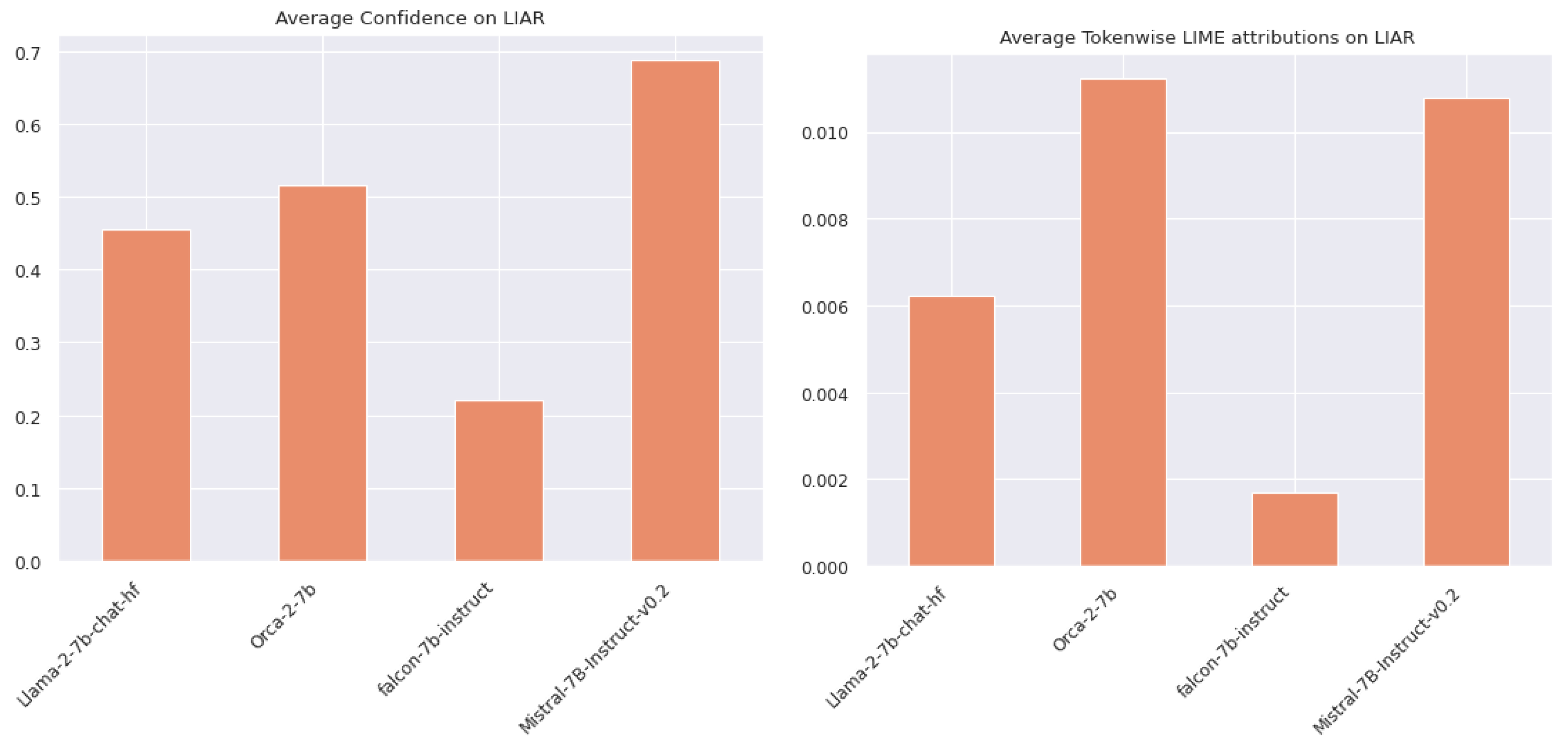

Figure 13 shows the summary of the comparison. Falcon showed the lowest average token-wise importance. This is likely because Falcon showed the lowest level of confidence in its predictions out of the four models that were compared. Confidence is indicated by the probabilities output by the softmax function when the model predicts the next token. Falcon exhibited an average confidence of less than fifty percent for predicting the next token. This explains why Falcon has low token-wise importance under explainability methods like SHAP and Integrated Gradients, whose attributions add up to the model’s prediction. Interestingly, the same trend holds for LIME attributions as well, as visible in Figure 13, even though those attributions are not additive. The opposite holds true as well: models that were more confident in their results, such as Orca and Mistral, exhibited higher token-wise importance, as seen in Figure 13. Strangely, while Orca’s mean confidence is at least 20 percentage points less than Mistral’s, Orca’s mean LIME token-wise attribution is slightly greater than Mistral’s.

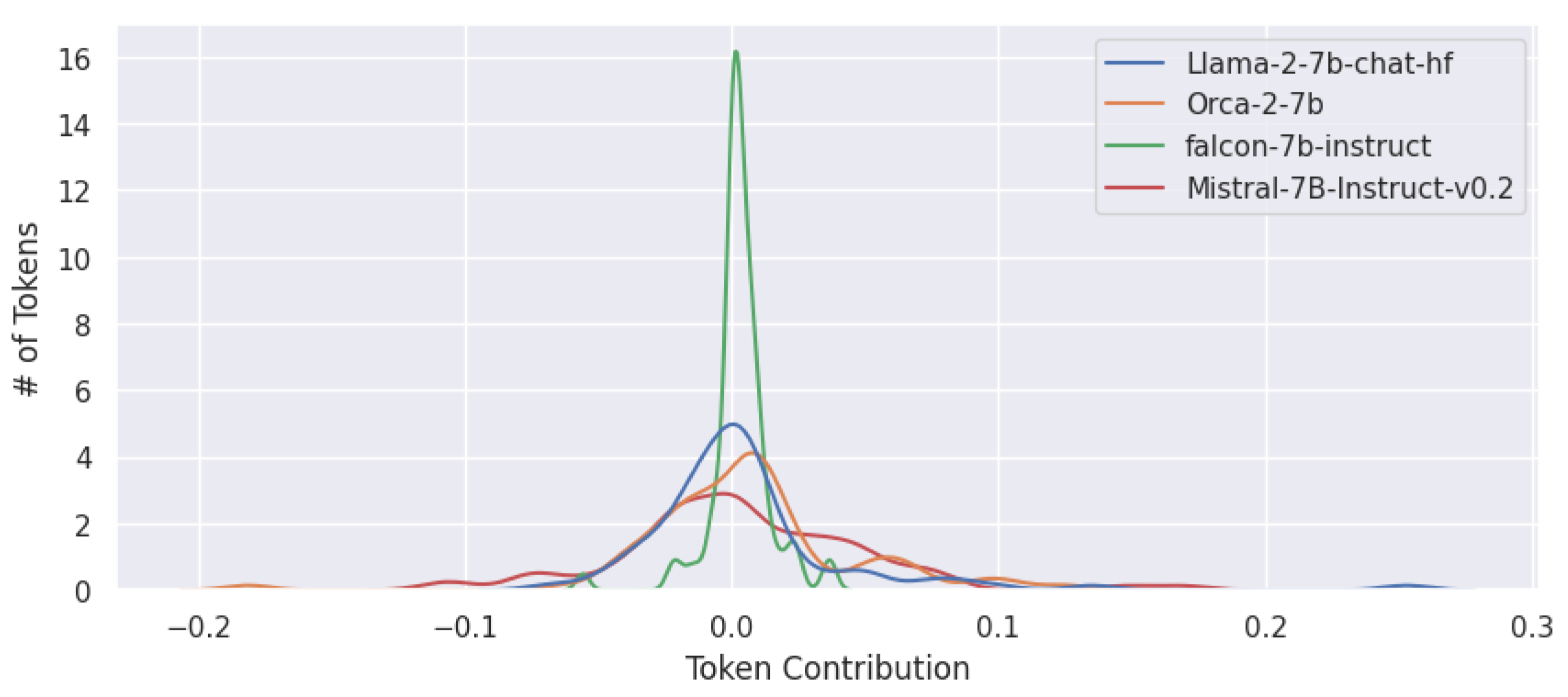

The distribution of the attributions plotted in Figure 14 showed that Falcon’s token-wise importance distribution was tightly centered around 0, with a smaller standard deviation than those of the other models. In contrast, Mistral, which performed the best out of the models evaluated, shows a different pattern: its curve revealed a token-wise importance distribution with more fluctuations and a higher standard deviation. This may be a key factor, from an explainability perspective, in why Mistral outperforms its competitors. The distributions in the plot suggest that Mistral makes prominent use of more tokens in the prompt than its competitors do, allowing it to better respond to the prompt.

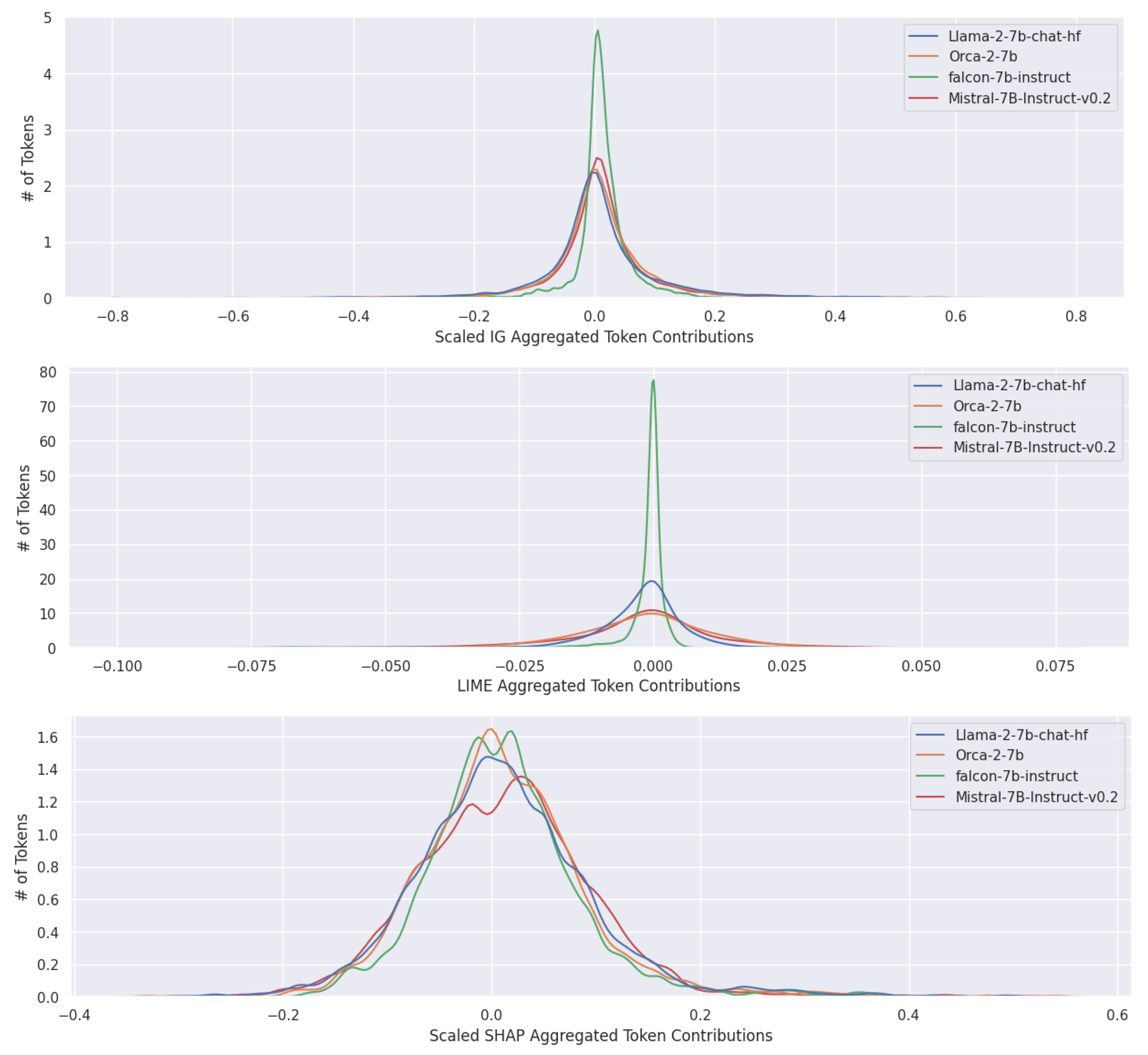

The initial plots used for comparisons were based on LIME, SHAP, and Integrated Gradients’ token-wise attributions. However, SHAP and Integrated Gradients attributions are additive and sum to the predicted probability. Therefore, the raw token-wise attributions may not be a fair comparison given the varying levels of confidence exhibited by different models. To account for this, each attribution is scaled by a factor of

. The scaled graphs showed similar trends, as seen in Figure 15.

The plots reflect token-wise importance for specific entries within the LIAR dataset. The attributions produced by Integrated Gradients, LIME, and SHAP are all highly local. An aggregation of the attributions, despite losing information about local fluctuations, maintains most of the characteristics of the overall distributions. Accordingly, the aggregated scaled attributions are plotted in Figure 16. Notably, Falcon’s distribution curve still has the smallest standard deviation and the highest peak of all the models, which supports the suspicion that Falcon does not pay enough attention to most of the tokens to answer prompts with the same accuracy as its peers. In contrast, models that perform better have wider distribution curves.

To determine how well models could explain their predictions, the next step is to compare the results from the explainability methods with explanations from the models themselves. When asked to explain their answers, models frequently pointed to background information regarding the topic and any named entities important to and appearing in the claim. Notably, while the speaker may be highlighted by explainability methods, models rarely cite information specific to the speaker. Perhaps more interestingly, models cited different evidence and provided different levels of detail when supporting their claims. Mistral’s and Orca’s explanations were on average longer and more detailed than those of their competitors. Moreover, Mistral and Orca often produced explanations without explicitly being prompted for them. Llama sometimes produced explanations without explicit prompting, and Falcon often required explicit prompting, at which point it would provide the shortest explanations, if any.

Nevertheless, side-by-side comparisons (Figure 17) suggest that model explanations and current explainability algorithms complement each other, as the latter reveal keywords in the text that appear to trigger models’ world knowledge, which the former reveal explicitly. Once again, this suggests that models are capable of focusing on the right words and are only failing to answer correctly because they were trained on insufficient, outdated, or inaccurate information.