Submitted: 30 April 2024

Posted: 01 May 2024


Abstract

Keywords:

1. Introduction

- The dataset images are augmented with Gaussian noise and color-space adjustments to improve the model's generalization and its robustness to weed edge features in agricultural environments.

- Model scales compatible with laser weeding equipment are selected, and pre-trained weights better suited to agricultural settings are developed, which improves both the accuracy and the speed of model training.

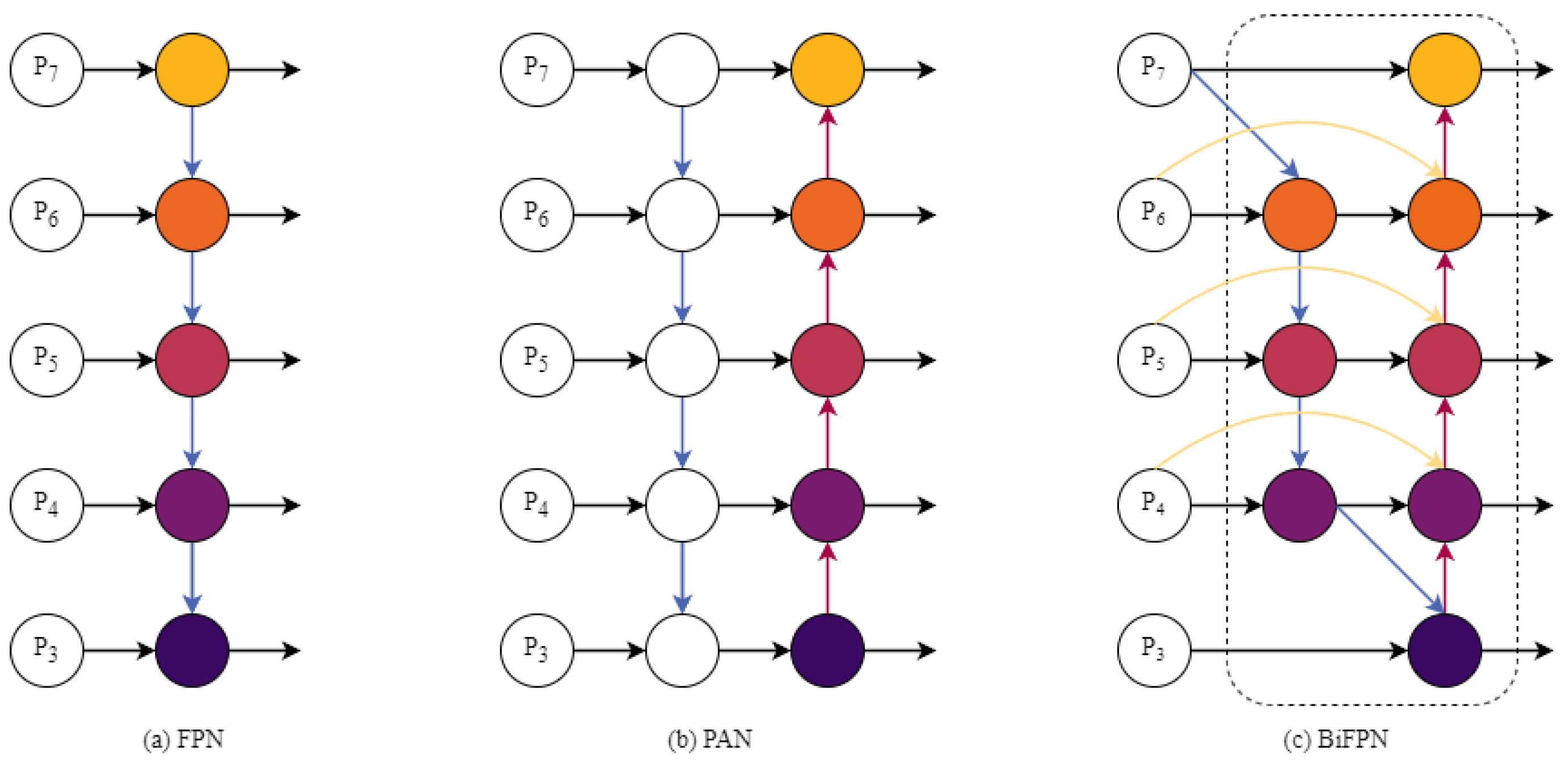

- During the feature fusion process, a Bidirectional Feature Pyramid Network (BiFPN) is introduced. BiFPN's weighted multi-scale feature fusion improves the network's focus on small targets, addressing the issue of inconspicuous features in complex backgrounds.

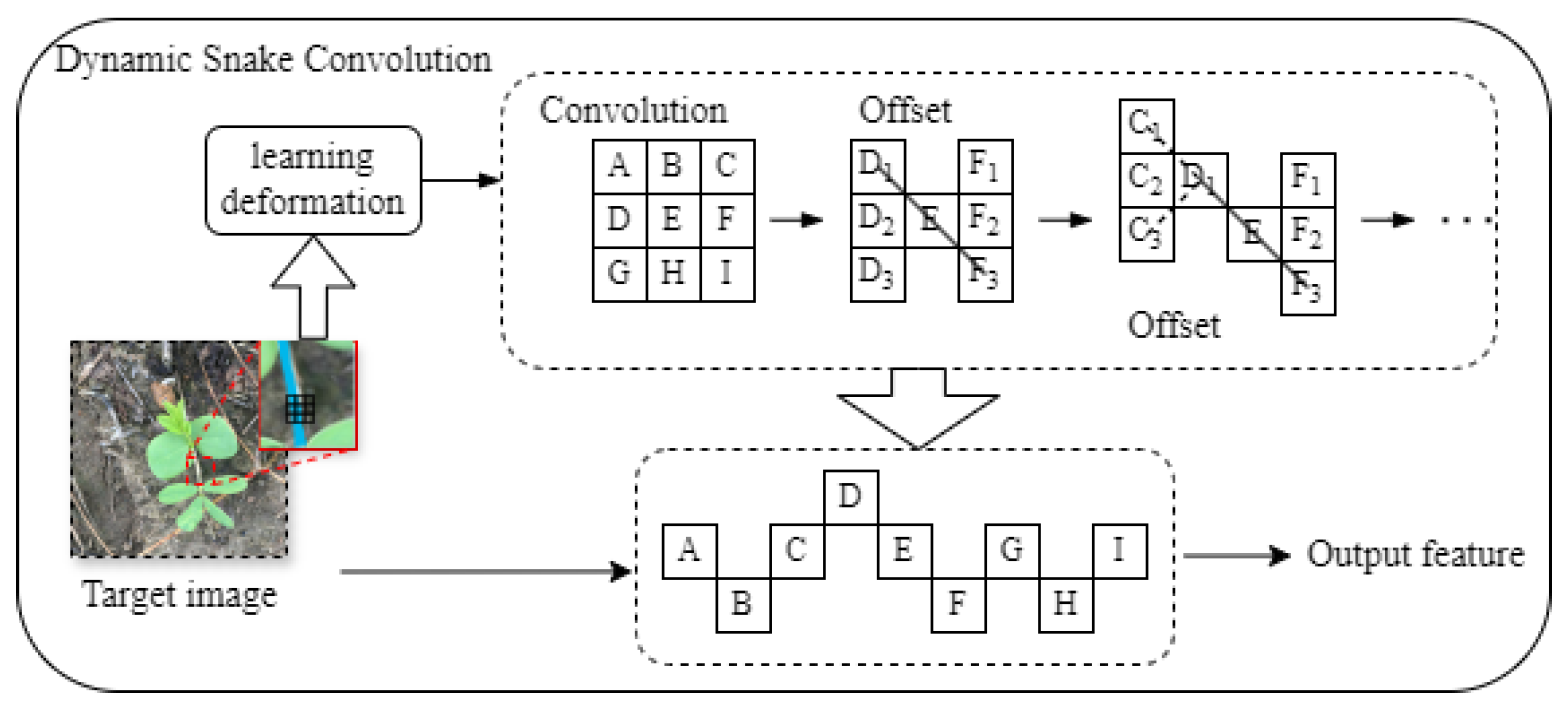

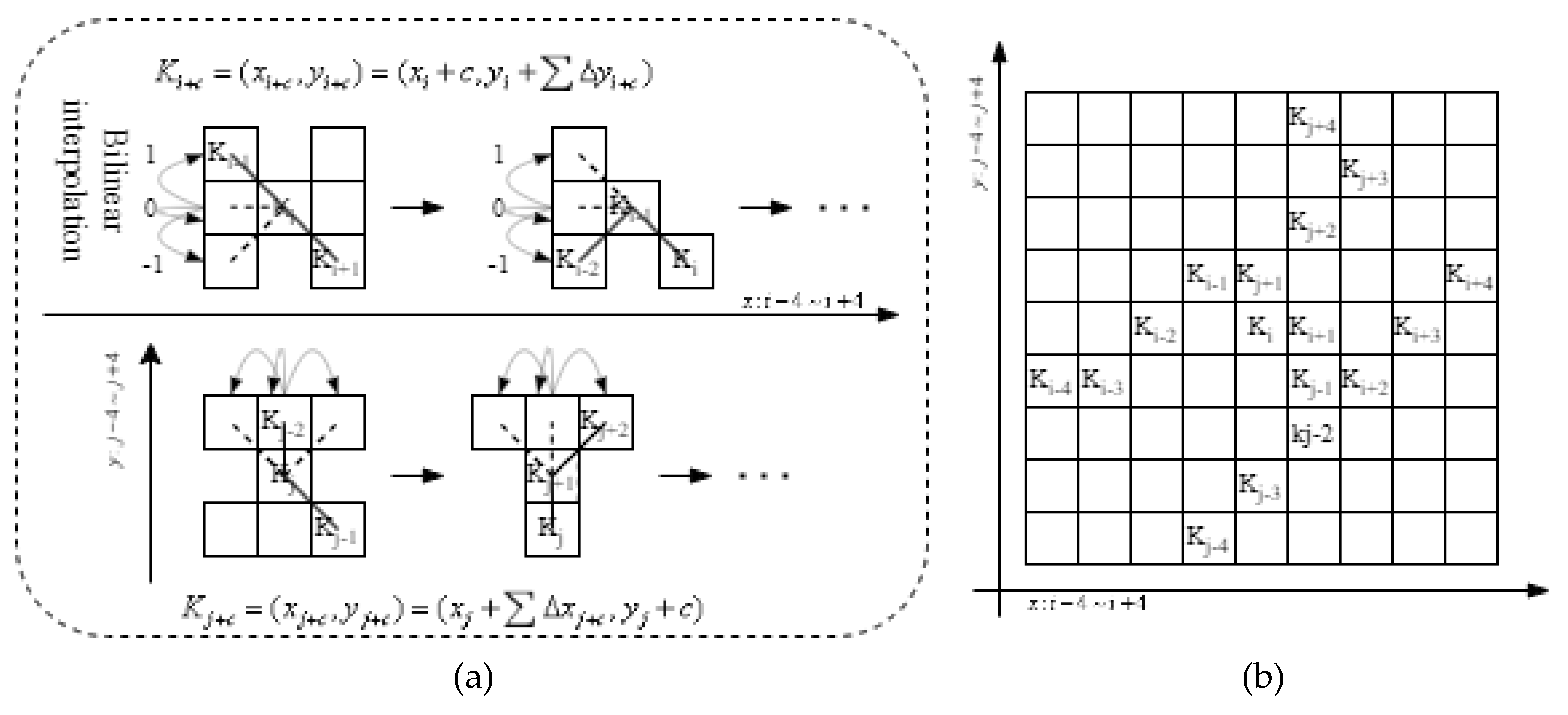

- DSConv is integrated to enhance the network's capability to segment irregular edges of plant stems and leaves, enabling accurate weed segmentation.
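
The weighted multi-scale fusion attributed to BiFPN above can be illustrated with the "fast normalized fusion" rule described in the EfficientDet paper, where each input feature map $I_i$ receives a learnable non-negative weight $w_i$ and the output is $O = \sum_i \frac{w_i}{\epsilon + \sum_j w_j} I_i$. The following is only a minimal sketch: whether the network here uses exactly this variant is an assumption, and the function name, toy feature maps, and weight values are all hypothetical.

```python
from typing import List, Sequence

Feature = List[List[float]]  # a toy single-channel H x W feature map


def fast_normalized_fusion(
    features: Sequence[Feature],
    weights: Sequence[float],
    eps: float = 1e-4,
) -> Feature:
    """Fuse same-sized feature maps as sum_i (w_i / (eps + sum_j w_j)) * F_i."""
    if len(features) != len(weights):
        raise ValueError("one weight per input feature map is required")
    # ReLU keeps the learnable weights non-negative before normalization.
    relu_w = [max(w, 0.0) for w in weights]
    norm = eps + sum(relu_w)
    height, width = len(features[0]), len(features[0][0])
    fused = [[0.0] * width for _ in range(height)]
    for feat, w in zip(features, relu_w):
        for r in range(height):
            for c in range(width):
                fused[r][c] += (w / norm) * feat[r][c]
    return fused


# Hypothetical inputs: a fine-scale map and an (already resized) coarse-scale map.
p_fine = [[1.0, 2.0], [3.0, 4.0]]
p_coarse = [[10.0, 10.0], [10.0, 10.0]]
fused = fast_normalized_fusion([p_fine, p_coarse], weights=[3.0, 1.0])
```

With weights of 3 and 1, the fine-scale map contributes roughly three quarters of each fused value, which is the sense in which a learned weighting can shift emphasis toward the scale that carries small-target detail.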

2. Materials and Methods

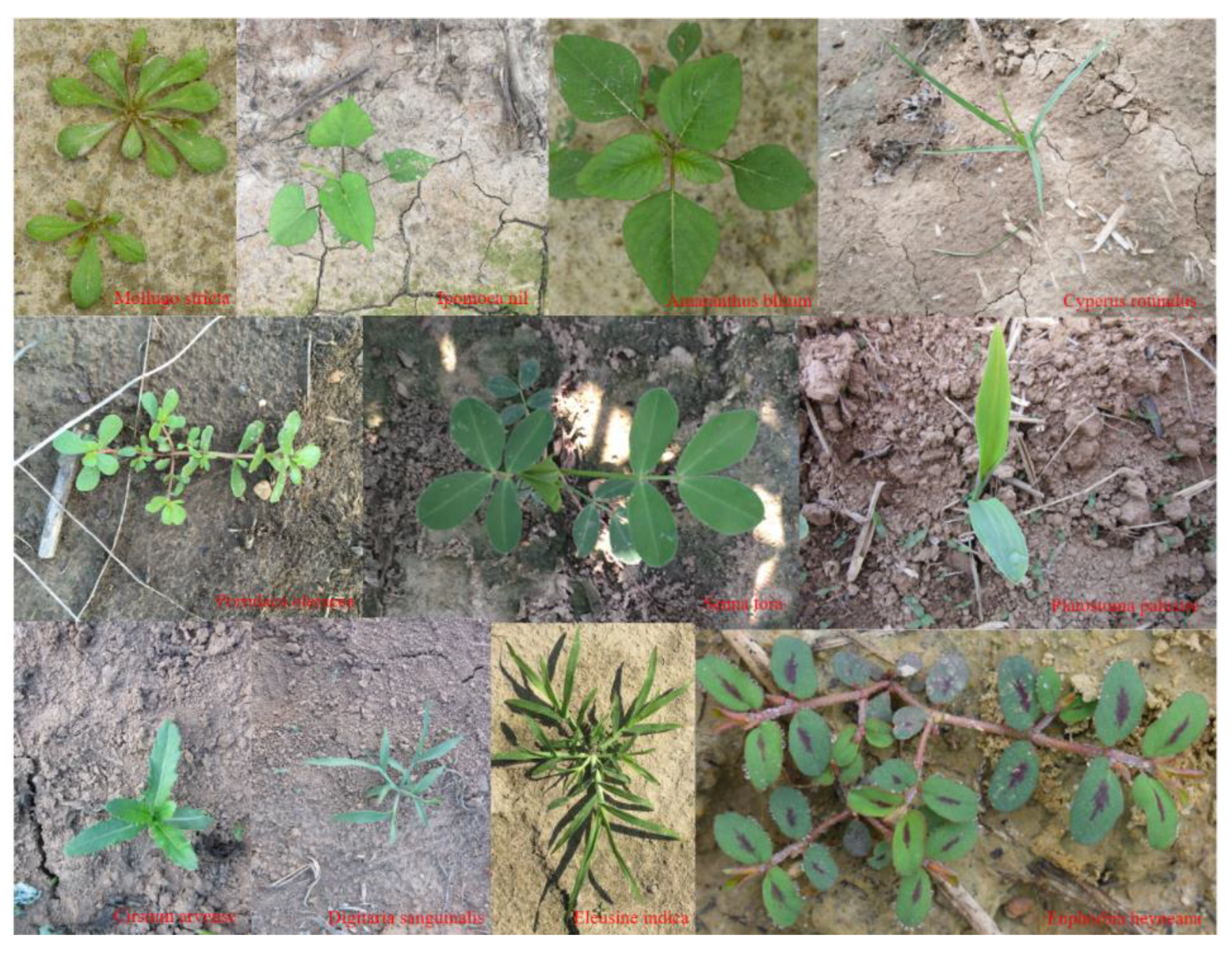

2.1. Image Collection and Dataset Construction

2.1.1. Data Collection and Annotation

2.1.2. Dataset Augmentation and Construction

2.2. Network Model Construction

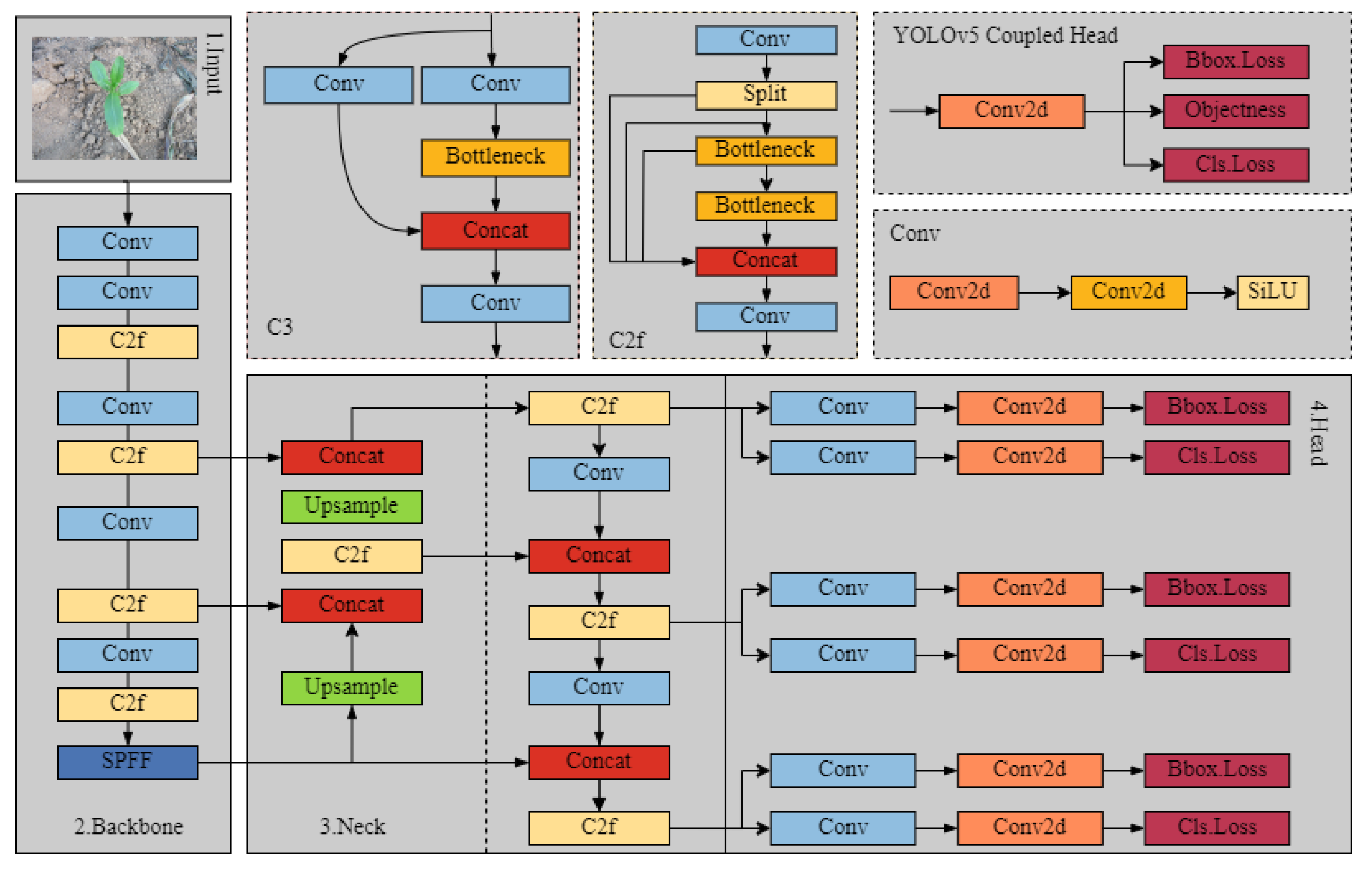

2.2.1. Structure of the YOLOv8-seg Network

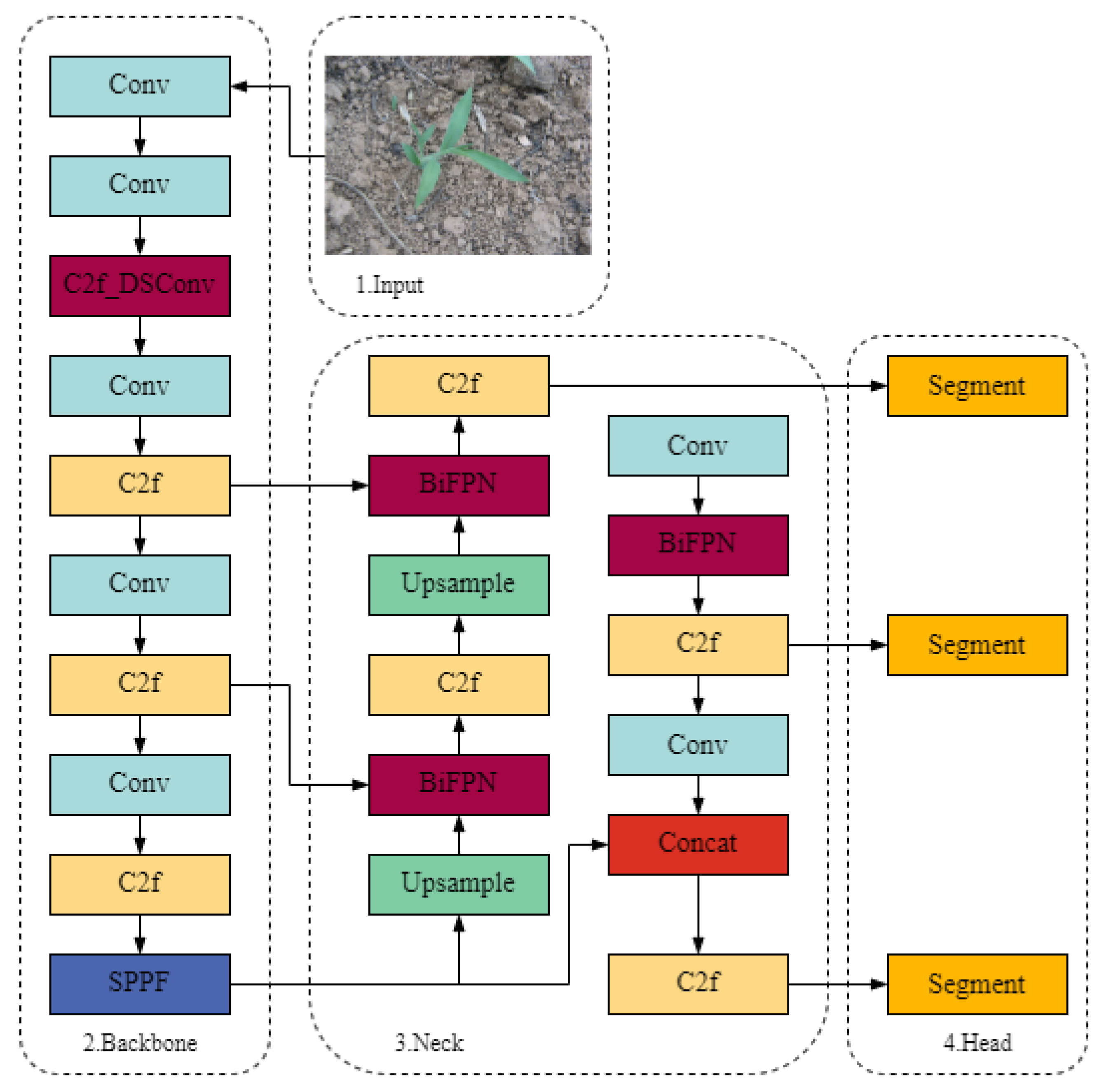

2.2.2. Structure of the BFFDC-YOLOv8-seg Network

- Appropriate weight files and scales

- Multi-scale feature fusion

- Deformable convolution

2.3. Model Training and Outputs

2.4. Model Evaluation Criteria

3. Results

3.1. Ablation Experiments and Model Training Details

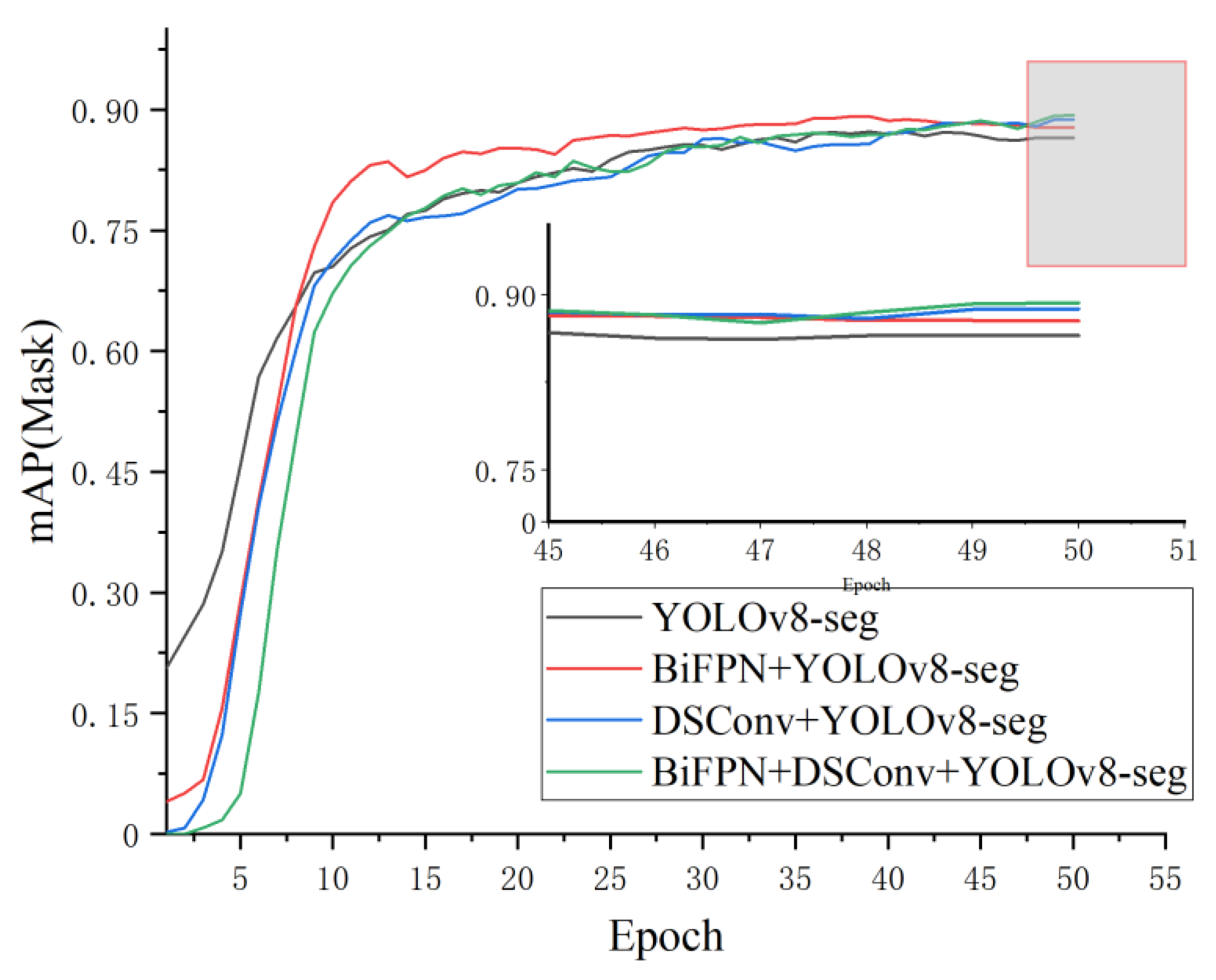

3.1.1. Ablation Experiments

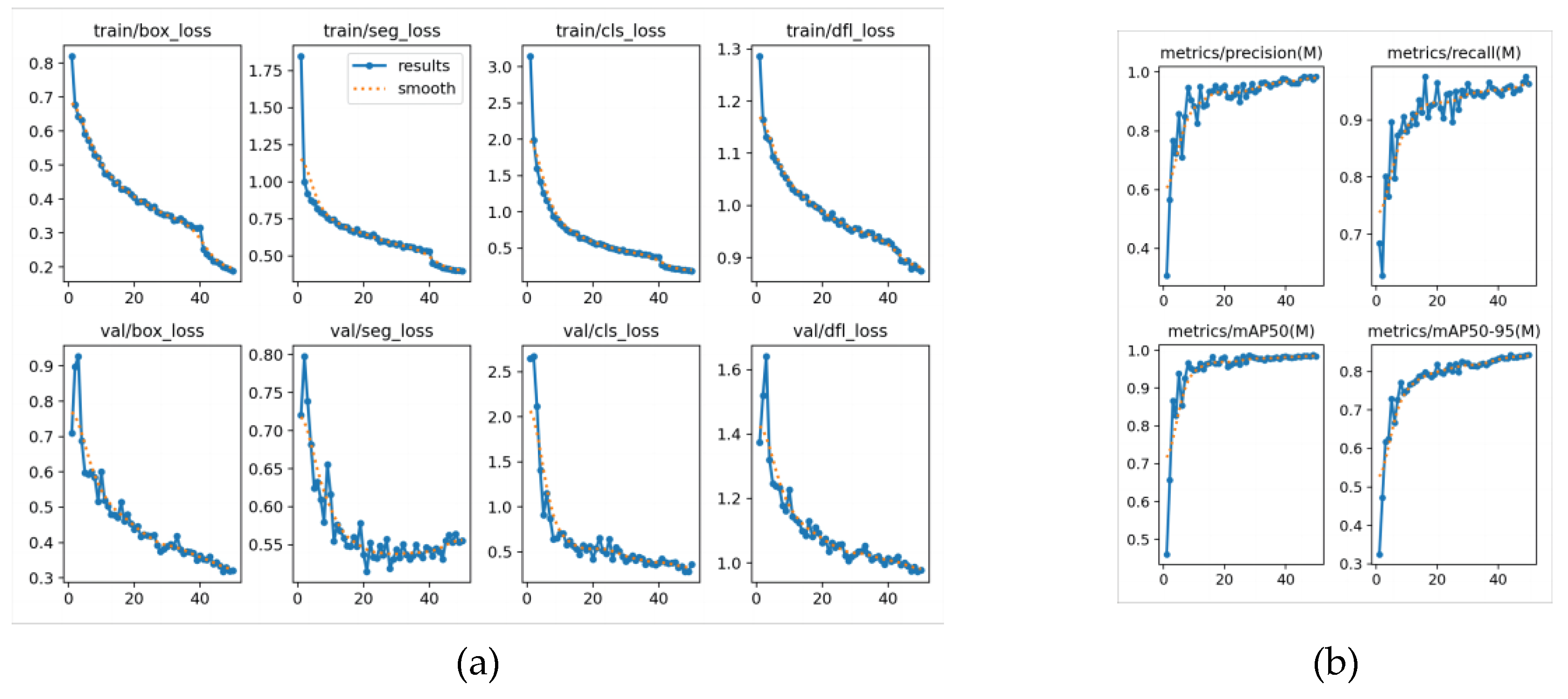

3.1.2. Training Results for the BFFDC-YOLOv8-seg

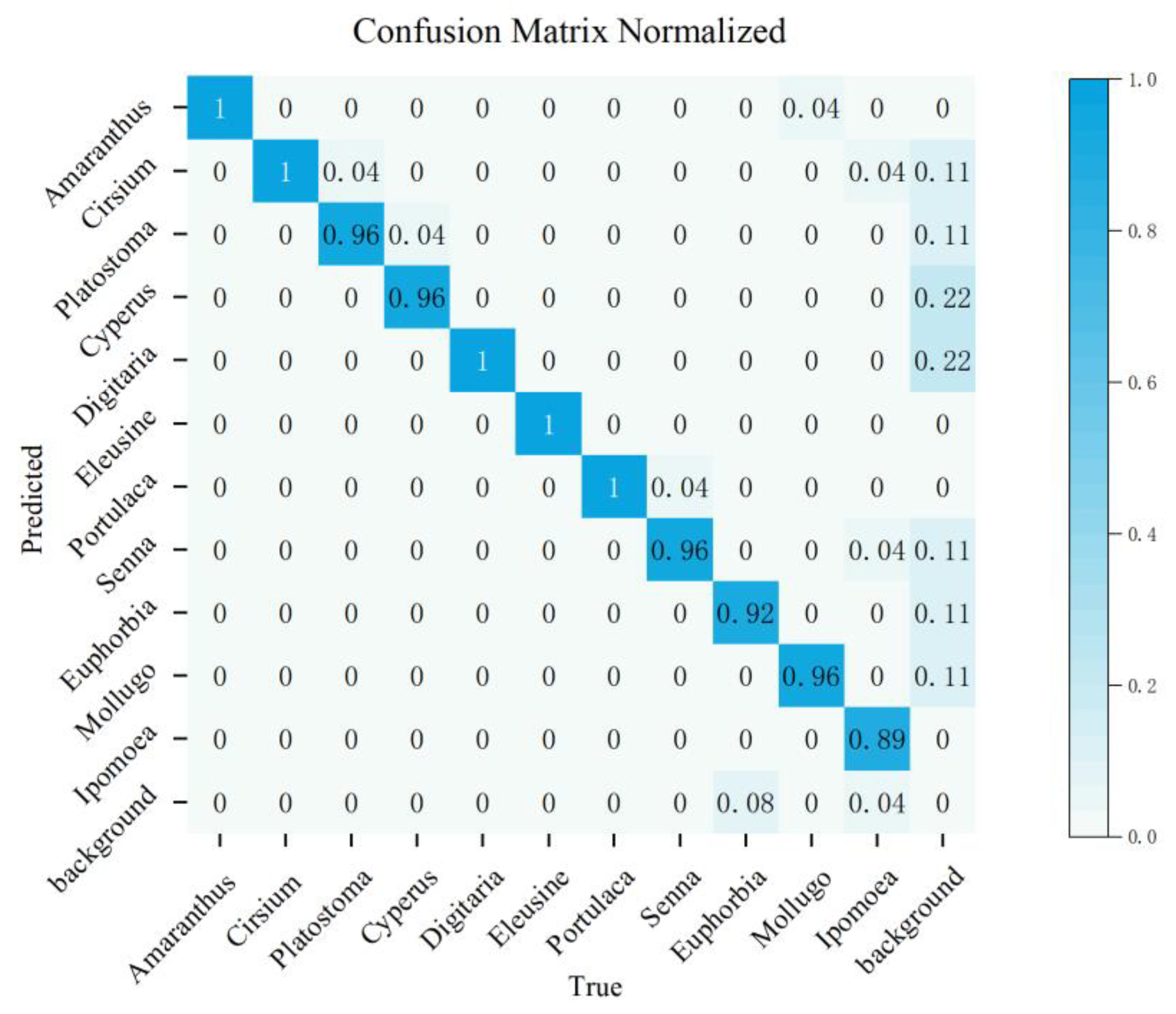

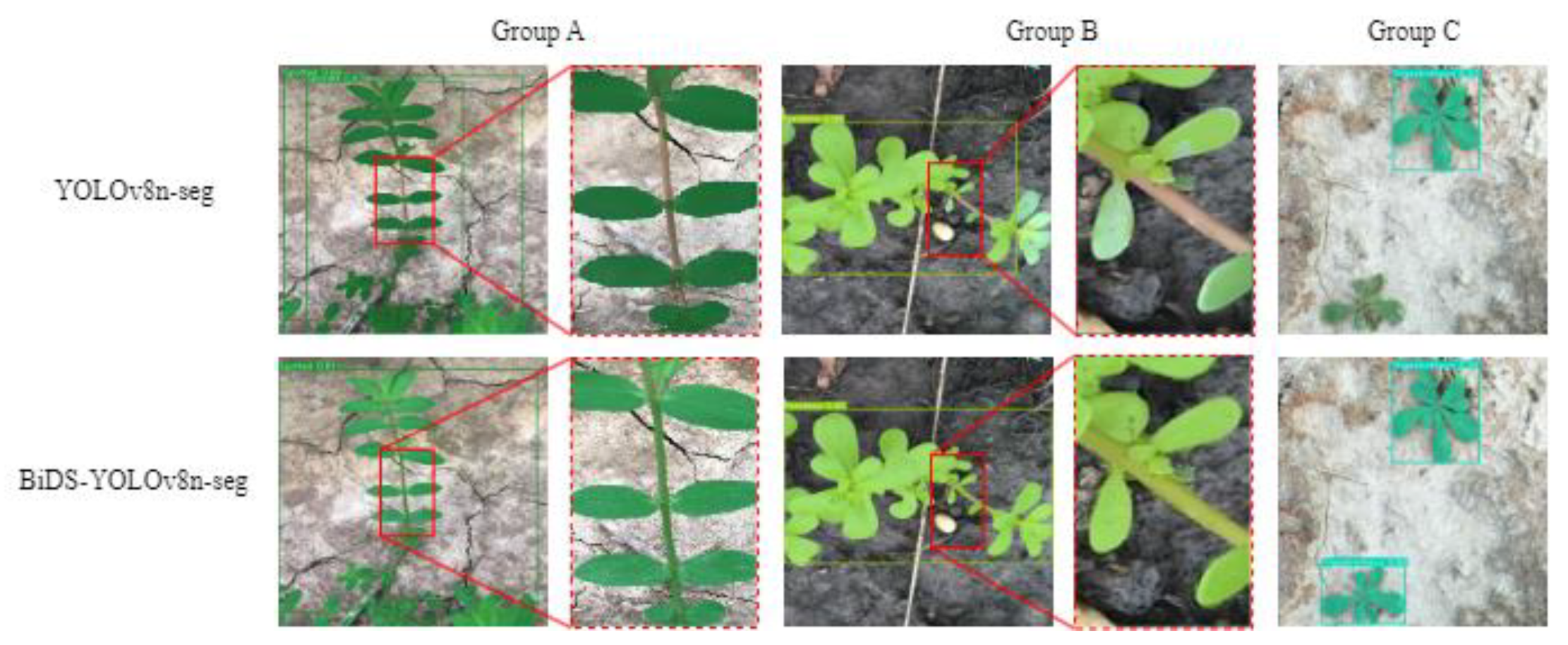

3.1.3. BFFDC-YOLOv8-seg Detection and Segmentation Effect

3.2. Comparison of the Performance with the Other Segmentation Models

3.3. Testing on Standalone Devices

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | Training Images | Validation Images | Test Images | Total Images |
|---|---|---|---|---|
| Before Augmentation | 924 | 264 | 132 | 1320 |
| After Augmentation | 2772 | 264 | 132 | 3168 |
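
The training split grows from 924 to 2772 images, a threefold increase, while the validation and test splits are unchanged. That would be consistent with each training image contributing the original plus two augmented copies, although the source does not state this. A minimal sketch of the two operations named in the introduction (Gaussian noise and a color-space adjustment) is given below using only the Python standard library. The noise level, the HSV scaling factors, and the nested-list image representation are all assumptions made for illustration; a real pipeline would more likely use NumPy or OpenCV.

```python
import colorsys
import random
from typing import List, Tuple

Pixel = Tuple[int, int, int]          # (R, G, B), each 0..255
Image = List[List[Pixel]]


def _clip(value: float) -> int:
    """Round and clamp a channel value to the valid 0..255 range."""
    return max(0, min(255, int(round(value))))


def add_gaussian_noise(image: Image, sigma: float, rng: random.Random) -> Image:
    """Add zero-mean Gaussian noise independently to every channel."""
    return [
        [tuple(_clip(ch + rng.gauss(0.0, sigma)) for ch in px) for px in row]
        for row in image
    ]


def adjust_hsv(image: Image, sat_scale: float, val_scale: float) -> Image:
    """Scale saturation and brightness in HSV space, then convert back to RGB."""
    out = []
    for row in image:
        new_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            s, v = min(1.0, s * sat_scale), min(1.0, v * val_scale)
            nr, ng, nb = colorsys.hsv_to_rgb(h, s, v)
            new_row.append((_clip(nr * 255), _clip(ng * 255), _clip(nb * 255)))
        out.append(new_row)
    return out


def augment(image: Image, seed: int = 0) -> List[Image]:
    """Return the original plus two augmented copies (a 3x expansion)."""
    rng = random.Random(seed)
    noisy = add_gaussian_noise(image, sigma=10.0, rng=rng)       # assumed sigma
    recolored = adjust_hsv(image, sat_scale=1.3, val_scale=0.8)  # assumed factors
    return [image, noisy, recolored]


# Hypothetical 2 x 2 RGB image standing in for a field photograph.
toy = [[(40, 120, 30), (200, 180, 90)], [(10, 60, 20), (255, 255, 255)]]
variants = augment(toy)
```

Only the training images would be passed through `augment`; leaving the validation and test images untouched, as the table shows, keeps the evaluation data representative of unmodified field images.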

| Scale | Depth | Width | mAP50 | FPS | Size (MB) |
|---|---|---|---|---|---|
| N | 0.33 | 0.25 | 0.859 | 277.7 | 6.8 |
| S | 0.33 | 0.50 | 0.872 | 147.0 | 23.9 |
| M | 0.67 | 0.75 | 0.875 | 33.2 | 54.9 |
| L | 1.00 | 1.00 | 0.876 | 5.4 | 92.3 |
| X | 1.00 | 1.25 | 0.878 | 1.2 | 548 |
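
The depth and width columns in the scale table above are multipliers applied to a base architecture: depth scales how many times a block is repeated within a stage, and width scales the channel counts. The sketch below shows that arithmetic under assumed rounding conventions (repeats rounded with a floor of one, channels rounded up to a multiple of 8), which are common in YOLO-family configurations but are not confirmed by the source. The base stage of 6 blocks and 256 channels is hypothetical, and any per-scale channel cap a real implementation may apply is omitted.

```python
import math
from typing import Dict, Tuple

# (depth, width) multipliers as listed in the scale table above.
SCALES: Dict[str, Tuple[float, float]] = {
    "N": (0.33, 0.25),
    "S": (0.33, 0.50),
    "M": (0.67, 0.75),
    "L": (1.00, 1.00),
    "X": (1.00, 1.25),
}


def scale_repeats(base_repeats: int, depth: float) -> int:
    """Scale how many times a block is stacked; never drop below one."""
    return max(round(base_repeats * depth), 1)


def scale_channels(base_channels: int, width: float, divisor: int = 8) -> int:
    """Scale a channel count and round up to a multiple of `divisor`."""
    return math.ceil(base_channels * width / divisor) * divisor


def describe(base_repeats: int, base_channels: int) -> Dict[str, Tuple[int, int]]:
    """Map each scale letter to its (repeats, channels) for one stage."""
    return {
        name: (scale_repeats(base_repeats, d), scale_channels(base_channels, w))
        for name, (d, w) in SCALES.items()
    }


# Hypothetical base stage: 6 stacked blocks with 256 output channels.
for name, (repeats, channels) in describe(6, 256).items():
    print(f"{name}: repeats={repeats}, channels={channels}")
```

Because both multipliers compound across every stage, moving from N to X increases parameter count and compute much faster than the multipliers alone suggest, which matches the sharp drop in FPS and growth in model size reported in the table.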

| Configuration | Allocation |
|---|---|
| CUDA version | 11.3 |
| Python version | 3.8 |
| PyTorch version | 1.12 |

| Network | Precision | Recall | mAP50 | mAP50-95 |
|---|---|---|---|---|
| YOLOv8-seg | 0.904 | 0.811 | 0.875 | 0.637 |
| BiFPN+YOLOv8-seg | 0.914 | 0.836 | 0.889 | 0.641 |
| DSConv+YOLOv8-seg | 0.912 | 0.811 | 0.887 | 0.636 |
| BiFPN+DSConv+YOLOv8-seg | 0.917 | 0.835 | 0.893 | 0.640 |
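
The columns of the ablation table above are standard segmentation metrics. Precision and recall are computed from true-positive, false-positive, and false-negative counts; mAP50 treats a prediction as correct when its intersection over union (IoU) with the ground truth is at least 0.5, and mAP50-95 averages over IoU thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below illustrates the building blocks only, with hypothetical toy masks and counts. A full mAP computation also ranks predictions by confidence and integrates the precision-recall curve, which is not shown.

```python
from typing import List, Tuple

Mask = List[List[int]]  # binary mask: 1 = weed pixel, 0 = background


def mask_iou(pred: Mask, truth: Mask) -> float:
    """Intersection over union of two equally sized binary masks."""
    inter = union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            inter += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    return inter / union if union else 0.0


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def map_iou_thresholds() -> List[float]:
    """The ten IoU thresholds averaged by mAP50-95: 0.50, 0.55, ..., 0.95."""
    return [round(0.50 + 0.05 * i, 2) for i in range(10)]


# Toy example: prediction and ground truth share 2 pixels; their union has 4.
pred = [[1, 1, 0], [0, 1, 0]]
truth = [[0, 1, 1], [0, 1, 0]]
iou = mask_iou(pred, truth)
is_hit_at_50 = iou >= 0.5  # would count as a true positive under mAP50
p, r = precision_recall(tp=9, fp=1, fn=3)  # hypothetical counts
```

A mask that just clears the 0.5 threshold, as in this toy case, counts toward mAP50 but fails every stricter threshold, which is why mAP50-95 values in the table are markedly lower than mAP50.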

| Model | Precision | Recall | mAP50 | mAP50-95 | FPS | Size (MB) |
|---|---|---|---|---|---|---|
| Mask RCNN | 0.895 | 0.876 | 0.88 | 0.682 | 34 | 228 |
| YOLOv5-seg | 0.701 | 0.781 | 0.854 | 0.593 | 227 | 4.2 |
| YOLOv7-seg | 0.917 | 0.95 | 0.975 | 0.749 | 18.3 | 76.4 |
| YOLOv8-seg | 0.926 | 0.894 | 0.96 | 0.776 | 270 | 6.8 |
| Ours | 0.975 | 0.975 | 0.988 | 0.842 | 101 | 6.8 |

| Box Precision | Box Recall | Box mAP50 | Box mAP50-95 | Mask Precision | Mask Recall | Mask mAP50 | Mask mAP50-95 | FPS |
|---|---|---|---|---|---|---|---|---|
| 0.974 | 0.924 | 0.958 | 0.916 | 0.974 | 0.924 | 0.958 | 0.817 | 24.8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).