Methodology

Research Design

This study adopts a mixed-methods research design to evaluate the impact of integrating ChatGPT on the academic writing performance and engagement of 32 EFL undergraduate students in Delhi, India. The mixed-methods approach enables the triangulation of data from both quantitative and qualitative sources, facilitating a more robust and nuanced understanding of the research questions.

Participants

The participants for this study are meticulously selected based on specific inclusion criteria. They are currently enrolled in the Academic Writing course during the first semester of the academic year 2024/2025, ensuring their active involvement in academic writing tasks throughout the research duration. The inclusion criteria also take into account the participants' diverse levels of English proficiency, reflecting the typical variability observed in EFL student populations.

Table 1 presents a detailed breakdown of the demographic characteristics of the participants, providing essential insights into their backgrounds and ensuring a representative sample for the study.

The demographic breakdown provided in Table 1 offers insight into the makeup of the participant group, which is crucial for gauging the potential impact of integrating ChatGPT on student academic writing and engagement. The age distribution shows that 26 of the 32 students (81.25%) fall within the 18-24 bracket, mirroring the typical profile of undergraduates refining their academic writing skills, while the 25-34 age group comprises 6 students (18.75%). There are no participants in the 35+ age category, so the sample is a predominantly young cohort. Gender-wise, females are more represented, with 20 female students (62.50%) compared to 12 males (37.50%). This gender distribution could influence engagement levels and writing practices, a nuance relevant to understanding student interactions with academic tasks. Regarding English proficiency, 18 students (56.25%) are beginners, whereas 14 (43.75%) are at an intermediate level, indicating a sample geared toward foundational and intermediate writing skills development. No advanced-level students are included, so the study examines ChatGPT’s efficacy at the beginner and intermediate tiers. Considering these demographic facets supports a well-rounded examination of ChatGPT’s effectiveness within EFL settings in Delhi, India, and of its pedagogical implications.
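The percentages reported for Table 1 follow directly from the category counts and the sample size of 32. The short Python sketch below reproduces that arithmetic; the counts come from the text above, while the dictionary layout and the function name `percentages` are illustrative assumptions rather than part of the study's procedure.

```python
# Counts are taken from the demographic description; names are illustrative.
TOTAL = 32

demographics = {
    "age": {"18-24": 26, "25-34": 6, "35+": 0},
    "gender": {"female": 20, "male": 12},
    "proficiency": {"beginner": 18, "intermediate": 14, "advanced": 0},
}


def percentages(counts, total=TOTAL):
    """Return each category's share of the sample as a percentage."""
    if sum(counts.values()) != total:
        raise ValueError("category counts do not add up to the sample size")
    return {label: 100 * n / total for label, n in counts.items()}


for variable, counts in demographics.items():
    print(variable, percentages(counts))
```

The check inside `percentages` also confirms that each variable's categories account for all 32 participants.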

Instruments

A meticulously crafted survey, derived from Liu (2023) and Zhai (2023), plays a pivotal role in the data gathering endeavor. Tailored to gauge students’ viewpoints and encounters concerning academic writing prowess, engagement levels, and perspectives on technology integration, this survey is divided into three distinct dimensions. Each dimension comprises 10 items rated on a Likert scale from 1 to 5, elucidating varying degrees of agreement ranging from "strongly disagree" to "strongly agree." These dimensions encompass Writing Proficiency (WP), Engagement Factors (EF), and Attitudes Towards Technology (ATT). WP scrutinizes coherence, organization, linguistic adeptness, and other facets pertinent to writing proficiency. EF delves into motivation, interest, and the perceived utility of AI-driven writing aids like ChatGPT. Meanwhile, ATT investigates students’ stances regarding technology's integration into learning. This survey stands as an invaluable instrument for quantitatively gauging students’ outlooks and standpoints concerning academic writing and the amalgamation of technology. The survey's reliability, gauged via Cronbach’s alpha coefficient, manifests as 0.912 for WP, 0.896 for EF, and 0.906 for ATT, signifying robust internal consistency and dependability in capturing the intended constructs. Furthermore, drafts of academic writing tasks serve as tangible benchmarks for participants’ writing advancements, evaluated meticulously through qualitative rubrics founded on established criteria. These rubrics comprehensively evaluate writing elements such as content relevance, structural coherence, argumentative lucidity, linguistic adeptness, and adherence to conventions. Through systematic analysis, profound insights into participants’ strengths and areas for enhancement emerge, ensuring uniform and impartial evaluations. 
Additionally, semi-structured interviews conducted with a subset of participants deepen insights into their interactions with ChatGPT and other AI writing tools, unraveling perspectives on technology, ChatGPT’s impact on writing workflows, and suggestions for augmenting EFL education support. These interviews’ findings complement quantitative data, enriching the overall comprehension of the study.
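For readers unfamiliar with the reliability coefficient reported above, the following Python sketch shows how Cronbach's alpha is conventionally computed from item-level responses: the number of items k, the sum of the item variances, and the variance of the respondents' total scores. The study's own values were presumably produced in SPSS; the function name and the response matrix here are hypothetical and serve only to illustrate the formula.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Compute Cronbach's alpha.

    responses: list of rows, one per respondent, each a list of item scores.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item (column) across respondents.
    item_variance_sum = sum(variance(column) for column in zip(*responses))
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical 1-5 Likert responses: 5 respondents x 3 items (not study data).
hypothetical_responses = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]
print(round(cronbach_alpha(hypothetical_responses), 3))
```

In the study, each dimension (WP, EF, ATT) has 10 items, so the same computation would be applied to a 32 x 10 response matrix per dimension.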

Data Collection Procedure

The methodology employed for this research is intricately crafted to capture nuanced perspectives on participants’ writing abilities, engagement dynamics, and perceptions regarding ChatGPT. The process begins with the recruitment of 32 EFL undergraduate students from Delhi, India, who are currently enrolled in the Academic Writing course during the first semester of the academic year 2024/2025. A comprehensive orientation session familiarizes participants with the study’s aims, methodologies, potential risks, and advantages. Following this, informed consent is obtained from each participant prior to their active involvement in the research. Next, a questionnaire is distributed to participants via a WhatsApp group. This questionnaire incorporates Likert-scale items to quantitatively measure participants’ views on academic writing proficiency, engagement factors, and attitudes toward ChatGPT, using a scale ranging from 1 (strongly disagree) to 5 (strongly agree) for nuanced responses. Participants are then tasked with submitting their initial academic writing drafts electronically alongside the completed questionnaire, providing baseline metrics for their writing proficiency. These drafts undergo evaluation using qualitative rubrics that assess content relevance, coherence, argument clarity, language proficiency, and adherence to writing conventions. The rubrics are meticulously designed and scored to ensure uniformity and reliability in assessment.

During the intervention phase, participants independently interact with ChatGPT for draft revisions, utilizing its feedback and language correction functionalities. Revised drafts are reevaluated using the same rubrics to gauge improvements in quality and coherence following ChatGPT utilization. Additionally, six EFL students are selected for in-depth analysis, considering a range of factors to ensure a comprehensive grasp of ChatGPT’s impact. These participants are chosen based on their diverse writing capabilities, engagement levels, and experiences with ChatGPT during the intervention phase. Criteria such as the depth of insights gleaned from interactions with ChatGPT, the complexity of writing tasks tackled using the platform, and its potential influence on their writing processes are taken into consideration. Semi-structured interviews are then conducted with these selected students, employing audio recording to facilitate detailed discussions about their perceptions, challenges, and benefits associated with ChatGPT usage. Through probing questions and extensive exploration, these interviews yield valuable insights into how ChatGPT shapes participants’ writing skills, engagement dynamics, and attitudes toward technology, contributing significantly to the overarching research outcomes.

Data Analysis

The investigation leverages SPSS 26 for quantitative exploration, specifically delving into Likert scale responses to assess participants’ sentiments concerning writing proficiency, technology integration, and their experiences with ChatGPT. The quantitative analysis employs descriptive statistics to encapsulate questionnaire findings, emphasizing the prevalence of various response choices and delineating the spectrum of agreement levels. Through this examination, a deeper understanding emerges regarding participants’ viewpoints and their levels of involvement. Moreover, qualitative inputs derived from academic writing drafts and interviews undergo a rigorous thematic analysis, uncovering pivotal themes, recurring patterns, and the nuanced perspectives of participants regarding ChatGPT's utilization. This comprehensive scrutiny delves into recurrent themes, hurdles encountered, and recommendations for enhancement, with the overarching goal of deciphering ChatGPT's impact on writing methodologies, educational experiences, and technological attitudes within the realm of EFL education. Furthermore, the study conducts an assessment of students’ work both pre and post ChatGPT intervention, employing qualitative rubrics as benchmarks. The initial drafts are instrumental in gauging writing proficiency across various dimensions such as content relevance, coherence, argumentative clarity, linguistic adeptness, and adherence to writing norms. Following revision aided by ChatGPT, the revised drafts are evaluated using the same rubrics to quantify enhancements in writing quality, coherence, and compliance with writing standards. This juxtaposed analysis yields tangible insights into the transformative influence of ChatGPT on writing efficacy.
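The descriptive statistics described above (response frequencies, percentages, and standard deviations per item) were produced in SPSS 26. As an illustration of what that summary involves, the Python sketch below computes the same quantities for a single Likert item. The response list is hypothetical, and the function name `describe_item` and the abbreviation "SD" for "strongly disagree" are assumptions made for this example.

```python
from collections import Counter
from statistics import mean, stdev

# 1-5 scale as described in the questionnaire; "SD" is an assumed abbreviation.
LABELS = {1: "SD", 2: "D", 3: "N", 4: "A", 5: "SA"}


def describe_item(scores):
    """Frequency, percentage, mean, and sample standard deviation for one item."""
    counts = Counter(scores)
    n = len(scores)
    table = {
        label: (counts.get(value, 0), 100 * counts.get(value, 0) / n)
        for value, label in LABELS.items()
    }
    return {"n": n, "table": table, "mean": mean(scores), "sd": stdev(scores)}


# Hypothetical responses to one item (not study data).
hypothetical_scores = [5, 4, 4, 3, 5, 2, 4, 5]
summary = describe_item(hypothetical_scores)
for label, (count, percent) in summary["table"].items():
    print(f"{label}: n = {count}, {percent:.2f}%")
print(f"mean = {summary['mean']:.2f}, sd = {summary['sd']:.2f}")
```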

Ethical Considerations

The ethical framework underpinning this study begins with securing informed consent from every participant prior to their engagement. Participants are briefed on the study's objectives, methodologies, potential risks, and anticipated benefits, fostering transparency and voluntary involvement. Confidentiality and anonymity are preserved throughout the research, with stringent measures in place to store data securely and restrict access solely to authorized personnel. Respecting participants' autonomy, the study upholds their right to withdraw at any point without repercussion. Moreover, ethical requirements governing data protection are observed, ensuring that all data gathered are used exclusively for research purposes and handled with integrity and respect for participants' rights and privacy. This ethical stance underscores the study's commitment to the welfare of its participants and bolsters the credibility and integrity of its findings.

Results and Findings

The implementation of ChatGPT has brought about significant improvements in the academic writing prowess and active participation of EFL Indian undergraduate students. By analyzing the influence of this implementation on writing abilities and engagement, this research seeks to offer profound perspectives on the revolutionary capabilities of ChatGPT in elevating the academic writing journey for EFL learners.

RQ1: WHAT IS THE PERCEPTION OF EFL STUDENTS REGARDING THE IMPACT OF CHATGPT INTEGRATION ON THEIR ACADEMIC WRITING PERFORMANCE?

Exploring the perceptions of EFL students concerning the integration of ChatGPT reveals a multifaceted relationship between technology and writing proficiency. This investigation delves into the nuanced viewpoints of students regarding ChatGPT's effectiveness in augmenting their writing skills, offering valuable insights into the implications of AI tools within academic settings.

Table 2 presents quantitative data that encapsulates students' perspectives on the integration of ChatGPT for enhancing writing proficiency.

The insights gleaned from Table 2 shed light on how users perceive the influence of ChatGPT on their writing skills, particularly in terms of coherence and related aspects. Analyzing the ten criteria assessed, we find a range of opinions, showcasing a nuanced perspective on ChatGPT's efficacy in improving different facets of writing. In the initial criterion (WP.1), 31.25% of respondents express strong agreement (SA) regarding ChatGPT's enhancement of clarity in writing (n = 10, Std. Dev = 0.92). This positive feedback implies a tangible boost to coherence, making written material more lucid and comprehensible. However, there are dissenting voices (n = 3, 9.38%) and neutrals (n = 5, 15.63%), indicating that the perceived clarity enhancement of ChatGPT might not be consistent across all users, potentially impacting coherence in diverse ways. Transitioning to WP.2, a majority (59.38%) either agree (A) or strongly agree (SA) that ChatGPT aids in structuring ideas effectively (n = 19, Std. Dev = 0.87), thus contributing to coherence in organizing content and thoughts. This collective positive sentiment resonates with ChatGPT's role in enhancing coherence. However, there are dissenting views (n = 4, 12.5%) and neutral responses (n = 6, 18.75%), indicating varying user experiences and potential effects on coherence in content organization. Within WP.3, 31.25% strongly agree (SA) that ChatGPT facilitates clear expression of complex ideas (n = 10, Std. Dev = 0.91), which positively impacts coherence when conveying intricate concepts. This observation highlights ChatGPT's potential to bolster coherence in handling complex content. Nevertheless, some respondents disagree (n = 4, 12.5%) or remain neutral (n = 4, 12.5%), revealing a blend of perspectives and experiences concerning its impact on coherence.
Moving to WP.4, where no definitive consensus emerges, the standard deviation of 0.92 reflects varied insights into ChatGPT's influence on coherence through the use of academic vocabulary. Responses are distributed across categories (n = 4, 12.5% for D; n = 5, 15.63% for N; n = 6, 18.75% for A; n = 7, 21.88% for SA), indicating diverse user experiences and potential impacts on coherence in academic writing.

In WP.5, a substantial portion (37.50%) strongly agrees (SA) that their writing skills have seen improvement with ChatGPT (n = 12, Std. Dev = 0.94), which positively impacts overall coherence in writing proficiency. The variability in responses (n = 2, 6.25% for D; n = 4, 12.5% for N; n = 5, 15.63% for A) indicates diverse impacts on coherence, highlighting individual experiences in skill enhancement and its coherence implications. WP.6 presents a standard deviation of 0.86, reflecting mixed insights into ChatGPT’s influence on coherence in source citation practices. While some users may find it moderately helpful for coherence in referencing (n = 6, 18.75% for A; n = 8, 25% for SA), others may not perceive the same level of support (n = 5, 15.63% for D; n = 5, 15.63% for N), signifying varied experiences and potential impacts on coherence in citing sources. WP.7 indicates that 28.13% strongly agree (SA) that ChatGPT aids in producing well-structured paragraphs (n = 9, Std. Dev = 0.97), contributing positively to coherence in written organization. However, the high standard deviation suggests diverse views (n = 3, 9.38% for D; n = 6, 18.75% for N; n = 7, 21.88% for A), affecting coherence differently for users based on their perceptions of structured writing. In WP.8, 34.38% strongly agree (SA) that ChatGPT helps maintain coherence in writing (n = 11, Std. Dev = 0.90), with varied responses (n = 5, 15.63% for D; n = 3, 9.38% for N; n = 9, 28.13% for A). This suggests that while some users experience significant coherence maintenance support, others may not perceive the same level of impact. WP.9 shows that 37.50% heavily rely on ChatGPT for grammar tasks (n = 12, Std. Dev = 0.95). While some users find ChatGPT highly beneficial for coherence in grammar usage, others may not rely as heavily on it, affecting coherence maintenance in writing.
Lastly, WP.10 reveals no clear majority in any category (Std. Dev = 0.91), with varied perceptions of feedback quality and usefulness (n = 3, 9.38% for D; n = 6, 18.75% for N; n = 6, 18.75% for A; n = 8, 25% for SA). This indicates that the quality and usefulness of feedback provided by ChatGPT may influence coherence in writing improvement to varying extents among users.

The data paints a nuanced picture of ChatGPT's influence on coherence in writing. It illuminates a spectrum of effects ranging from positive outcomes like improved clarity, enhanced skills, and better organizational structure to varying experiences and viewpoints, hinting at the diverse impact of ChatGPT on coherence among users. These results underscore the need to tailor assessments of ChatGPT's coherence-enhancing abilities to individual user experiences and requirements in writing scenarios.
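Because every percentage in this section is a count divided by the sample size of 32, the reported (n, %) pairs can be cross-checked mechanically. The Python sketch below does this for a handful of the Writing Proficiency figures quoted above. The dictionary keys, the function name `mismatches`, and the tolerance (chosen to allow for two-decimal rounding in either direction) are assumptions made for illustration.

```python
N = 32  # sample size stated in the research design

# (count, reported percentage) pairs quoted in the discussion of Table 2.
reported = {
    "WP.1 SA": (10, 31.25),
    "WP.1 D": (3, 9.38),
    "WP.1 N": (5, 15.63),
    "WP.2 A+SA": (19, 59.38),
    "WP.5 SA": (12, 37.50),
    "WP.7 SA": (9, 28.13),
    "WP.8 SA": (11, 34.38),
}


def mismatches(pairs, total=N, tolerance=0.0051):
    """Return labels whose reported percentage disagrees with count / total."""
    return [
        label
        for label, (count, percent) in pairs.items()
        if abs(100 * count / total - percent) > tolerance
    ]


print(mismatches(reported))
```

An empty list indicates that each quoted percentage agrees with its count to two decimal places.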

Examining the Engagement Factors (EF) outlined in Table 3 based on questionnaire responses, insights emerge regarding participants' attitudes and perceptions regarding ChatGPT's utility in writing assignments. The analysis brings to light aspects such as motivation, enjoyment, curiosity across different subjects, feedback appreciation, and the level of effort invested, reflecting an overall positive engagement with ChatGPT among the surveyed individuals.

The data from Table 3 provides insights into how participants engage with ChatGPT across various dimensions like motivation, enjoyment, interest in diverse topics, confidence, feedback appreciation, effort, active utilization, focus, belief in its importance for academic writing, and goal-setting effectiveness. In EF.1, a significant portion (31.25%) strongly agrees (SA) that ChatGPT is a motivator for writing more frequently (n = 10, Std. Dev = 0.92), indicating a positive impact on motivation and engagement. However, there are also instances of disagreement (n = 3, 9.38%) and neutrality (n = 4, 12.5%), showcasing varying levels of motivation influenced by ChatGPT. Moving to EF.2, a substantial percentage (40.62%) strongly agrees (SA) that ChatGPT enhances the enjoyment of writing (n = 13, Std. Dev = 0.90), signaling a significant positive effect on engagement and enjoyment. Nevertheless, a small fraction (3.12%) disagrees (D), while some respondents remain neutral (n = 3, 9.38%), reflecting diverse experiences in terms of enjoyment and engagement. EF.3 indicates that 34.38% strongly agree (SA) that ChatGPT ignites their interest in exploring diverse topics (n = 11, Std. Dev = 0.91), indicating a positive impact on engagement with varied content. However, some respondents express disagreement (n = 4, 12.5%) or neutrality (n = 4, 12.5%), suggesting differing levels of interest and engagement in exploring different topics facilitated by ChatGPT. In EF.4, 31.25% strongly agree (SA) that ChatGPT enhances their confidence in writing effectively (n = 10, Std. Dev = 0.87), signaling a positive impact on confidence and engagement. Nevertheless, some respondents disagree (n = 5, 15.63%) or are neutral (n = 3, 9.38%), indicating varied levels of confidence and engagement influenced by ChatGPT. Furthermore, EF.5 reveals that 34.38% of respondents highly value the feedback and suggestions provided by ChatGPT (n = 11, Std. Dev = 0.86), showcasing a notable positive impact on engagement through feedback appreciation. However, a segment of respondents disagrees (n = 5, 15.63%) or remains neutral (n = 4, 12.5%), indicating diverse levels of appreciation and engagement with ChatGPT’s feedback.

Within EF.6, 37.5% strongly agree (SA) that ChatGPT motivates them to invest more effort into their writing tasks (n = 12, Std. Dev = 0.90), suggesting a tangible positive influence on effort and engagement. Nevertheless, some respondents disagree (n = 4, 12.5%) or are neutral (n = 4, 12.5%), revealing varying degrees of effort and engagement influenced by ChatGPT. EF.7 illustrates that 34.38% of respondents actively seek opportunities to utilize ChatGPT for writing practice (n = 11, Std. Dev = 0.88), pointing towards a positive impact on engagement and proactive usage. However, some respondents disagree (n = 4, 12.5%) or are neutral (n = 4, 12.5%), indicating differing levels of active utilization and engagement with ChatGPT. In EF.8, 37.5% strongly agree (SA) that ChatGPT aids in maintaining focus and engagement during writing sessions (n = 12, Std. Dev = 0.89), indicating a beneficial effect on concentration and engagement. Nonetheless, some respondents disagree (n = 4, 12.5%) or remain neutral (n = 4, 12.5%), showcasing varied experiences regarding focus and engagement during writing with ChatGPT. EF.9 demonstrates that 37.5% strongly believe in ChatGPT’s significance for enhancing their academic writing skills (n = 12, Std. Dev = 0.88), underscoring a positive impact on engagement and perceived importance for skill enhancement. However, some respondents disagree (n = 4, 12.5%) or are neutral (n = 4, 12.5%), highlighting differing levels of belief in ChatGPT’s importance for improving academic writing skills. Lastly, in EF.10, 34.38% strongly agree (SA) that establishing effective goals with ChatGPT has boosted their writing engagement (n = 11, Std. Dev = 0.90), signaling a positive impact on engagement through effective goal setting. Nevertheless, some respondents disagree (n = 4, 12.5%) or are neutral (n = 3, 9.38%), indicating varied experiences regarding goal-setting effectiveness and engagement influenced by ChatGPT.

The analysis of Table 3 reveals a consistently positive influence of ChatGPT across various dimensions of participant engagement, including motivation, enjoyment, interest in diverse topics, confidence, feedback appreciation, effort, active utilization, focus, belief in its importance for academic writing, and goal-setting effectiveness. Nonetheless, variations in responses highlight the diversity of experiences and perceptions among users regarding ChatGPT's impact on their engagement in writing tasks.

Turning to Table 4, which explores Attitudes Towards Technology (ATT), the findings illuminate participants' perceptions of and attitudes towards utilizing ChatGPT for writing tasks. The data indicates predominantly positive sentiments regarding comfort, skill enhancement, preference over traditional methods, utility of features, openness to learning, trust in feedback, usability, confidence, impact of feature exploration, and overall attitude improvement towards technology for writing tasks. However, these attitudes are tempered by diverse perspectives and experiences evident in the responses.

Table 4 offers an intricate analysis of participants’ attitudes toward technology, focusing particularly on their perceptions of employing ChatGPT for writing tasks. ATT.1 shows that 31.25% of respondents strongly agree (SA) that they are comfortable using ChatGPT for writing tasks (n = 10, Std. Dev = 0.89), showcasing a positive inclination toward technology adoption and utilization. However, there are also expressions of disagreement (n = 3, 9.38%) or neutrality (n = 4, 12.5%), indicating differing levels of comfort and acceptance regarding ChatGPT. Moving to ATT.2, we find that 50% of respondents either agree (A) or strongly agree (SA) that ChatGPT significantly enhances their writing skills (n = 16, Std. Dev = 0.86), signaling a positive outlook on technology’s role in skill enhancement. Nevertheless, some respondents also express disagreement (n = 5, 15.63%) or neutrality (n = 7, 21.88%), showcasing varied perceptions regarding ChatGPT’s impact on improving writing skills. ATT.3 reveals that 59.38% prefer using ChatGPT over traditional methods for writing assistance (n = 19, Std. Dev = 0.88), indicating a strong inclination towards technology-based writing support. However, there are also expressions of disagreement (n = 4, 12.5%) or neutrality (n = 6, 18.75%), showcasing differing preferences and attitudes towards technology adoption in writing tasks. Additionally, ATT.4 demonstrates that a majority (53.13%) agree (A) or strongly agree (SA) that ChatGPT’s features are helpful and contribute to better writing outcomes (n = 17, Std. Dev = 0.87), underscoring a positive perception of technology’s utility and its contribution to enhancing writing quality. Nonetheless, some respondents also express disagreement (n = 5, 15.63%) or neutrality (n = 6, 18.75%), indicating diverse perspectives on ChatGPT’s features and their impact on writing outcomes.
Moreover, ATT.5 reveals that 59.38% are open to learning and utilizing new technologies like ChatGPT (n = 19, Std. Dev = 0.88), indicating a positive attitude towards technology adoption and a willingness to explore new tools for writing assistance. However, there are also expressions of disagreement (n = 4, 12.5%) or neutrality (n = 6, 18.75%), suggesting varying levels of openness and willingness to adopt new technologies. In ATT.6, half of the respondents (50%) express agreement (A) or strong agreement (SA) in their trust in ChatGPT to provide accurate feedback (n = 16, Std. Dev = 0.87), indicating a positive perception of the technology's reliability in feedback provision. Conversely, a notable segment expresses disagreement (n = 5, 15.63%) or neutrality (n = 7, 21.88%), showcasing varying levels of trust in ChatGPT’s feedback accuracy. Moving to ATT.7, we observe that 59.38% agree (A) or strongly agree (SA) that ChatGPT has streamlined writing tasks, making them more efficient (n = 19, Std. Dev = 0.89), highlighting a favorable perception of the technology’s usability and efficiency. However, there are also expressions of disagreement (n = 4, 12.5%) or neutrality (n = 6, 18.75%), indicating diverse experiences and views regarding ChatGPT’s ease of use and efficiency. In ATT.8, 50% agree (A) or strongly agree (SA) that they are confident in using ChatGPT for various writing purposes (n = 16, Std. Dev = 0.86), demonstrating a positive attitude towards the technology’s versatility and their proficiency in utilizing it. Nevertheless, dissenting opinions are present, with some expressing disagreement (n = 5, 15.63%) or neutrality (n = 7, 21.88%), reflecting differing levels of confidence in using ChatGPT. ATT.9 shows that 53.13% agree (A) or strongly agree (SA) that exploring ChatGPT’s features has positively impacted their writing experience (n = 17, Std. Dev = 0.87), indicating a favorable perception of the technology’s impact on writing experience through feature exploration. However, there are also expressions of disagreement (n = 5, 15.63%) or neutrality (n = 6, 18.75%), suggesting varying experiences and perspectives regarding feature exploration and its impact. Lastly, in ATT.10, 59.38% agree (A) or strongly agree (SA) that ChatGPT has improved their overall attitude towards using technology for writing (n = 19, Std. Dev = 0.87), illustrating a positive overall impact of technology on their attitudes towards writing tasks. Nonetheless, differing viewpoints are evident, with some expressing disagreement (n = 4, 12.5%) or neutrality (n = 6, 18.75%), reflecting diverse attitudes and experiences concerning technology’s role in writing.

The findings from Table 4 underline positive attitudes towards technology, particularly ChatGPT, across various dimensions such as comfort, skill enhancement, preference over traditional methods, feature utility, openness to learning, trust in feedback, usability, confidence, feature exploration impact, and overall attitude improvement towards technology for writing tasks. However, the responses also reflect diverse perspectives and experiences, emphasizing the importance of considering individual preferences and experiences when evaluating attitudes towards technology adoption in writing contexts.

RQ2: HOW DOES THE INTEGRATION OF CHATGPT IMPACT STUDENT ENGAGEMENT IN ACADEMIC WRITING TASKS AMONG EFL STUDENTS?

Delving into the realm of academic writing, our second research question (RQ) seeks to unravel the influence of integrating ChatGPT on the engagement levels of EFL students. Analyzing the works of these students, especially focusing on their initial and revised drafts, has uncovered several pivotal insights to comprehensively address this RQ.

At the genesis of our study, the evaluation of students' initial drafts served as a cornerstone for assessing their writing prowess. Utilizing meticulous qualitative rubrics covering content relevance, structural coherence, argument clarity, language proficiency, and adherence to writing norms, we established a robust and impartial evaluation framework. Transitioning into the intervention phase with ChatGPT, participants actively harnessed the tool to refine their drafts, leveraging its feedback, language correction, coherence enhancement, and improvement suggestions. This phase emphasized participant autonomy in engaging with ChatGPT, fostering a tailored and adaptive writing revision process. Following the ChatGPT intervention, participants submitted their revised drafts for evaluation using the same qualitative rubrics as the initial drafts, enabling a nuanced exploration of improvements in writing quality, coherence, and adherence to conventions.
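The before-and-after comparison described above amounts to scoring each draft on the same rubric criteria and comparing the two sets of scores. The Python sketch below illustrates one way such a comparison could be tabulated. The five criteria are those named in the text, but the 1-5 scoring scale, the scores themselves, and the function names are hypothetical, since the rubric's numeric scale is not specified here.

```python
# Criteria named in the rubric description; the scoring scale is an assumption.
CRITERIA = [
    "content relevance",
    "structural coherence",
    "argument clarity",
    "language proficiency",
    "adherence to writing norms",
]


def rubric_gains(initial, revised):
    """Per-criterion change from the initial draft to the revised draft."""
    return {name: revised[name] - initial[name] for name in CRITERIA}


def mean_gain(gains):
    """Average change across all rubric criteria."""
    return sum(gains.values()) / len(gains)


# Hypothetical scores for one student on an assumed 1-5 scale (not study data).
initial_scores = dict(zip(CRITERIA, [3, 2, 3, 2, 3]))
revised_scores = dict(zip(CRITERIA, [4, 4, 4, 3, 4]))

gains = rubric_gains(initial_scores, revised_scores)
for name, change in gains.items():
    print(f"{name}: {change:+d}")
print(f"mean gain: {mean_gain(gains):.2f}")
```

Tabulating gains per criterion, rather than only an overall score, shows which aspects of writing changed most between drafts.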

The findings from this process unveiled significant enhancements across various dimensions of academic writing. Notably, there was a substantial improvement in the structural coherence and logical flow of ideas in the revised drafts, showcasing ChatGPT’s efficacy in refining organization and argumentation. Moreover, participants demonstrated advancements in language proficiency, showcasing improvements in academic vocabulary usage, grammar accuracy, and adherence to conventions. This underscores ChatGPT’s role in refining linguistic expression in academic contexts.

Despite these positive outcomes, challenges such as potential over-reliance on ChatGPT and maintaining originality surfaced. Some participants grappled with balancing ChatGPT’s assistance while preserving their unique writing style and voice. This highlights the significance of nurturing critical thinking and autonomy alongside technological integration in academic writing processes. Our study emphasizes not just the impact of ChatGPT on writing outcomes but also the importance of cultivating students’ individuality and critical thought in their academic endeavors.

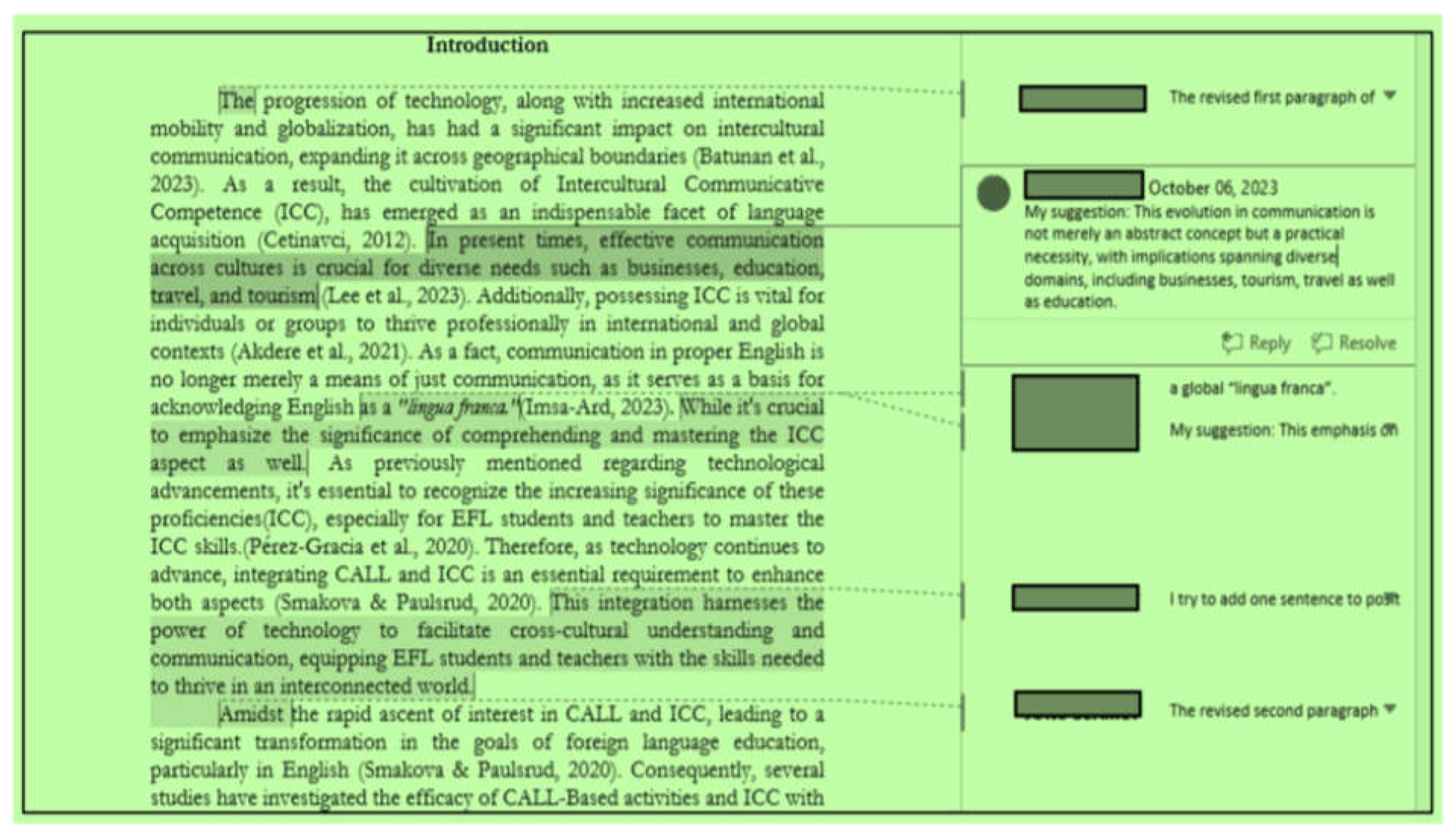

To provide a concrete illustration, Figure 1 shows a segment from a student's initial draft. Through analysis employing the established qualitative rubrics, we can pinpoint precise areas where enhancements were needed in coherence, organization, language proficiency, and adherence to writing standards. This detailed examination serves as a baseline for demonstrating how integrating ChatGPT can elevate the academic writing skills of English as a Foreign Language (EFL) students.

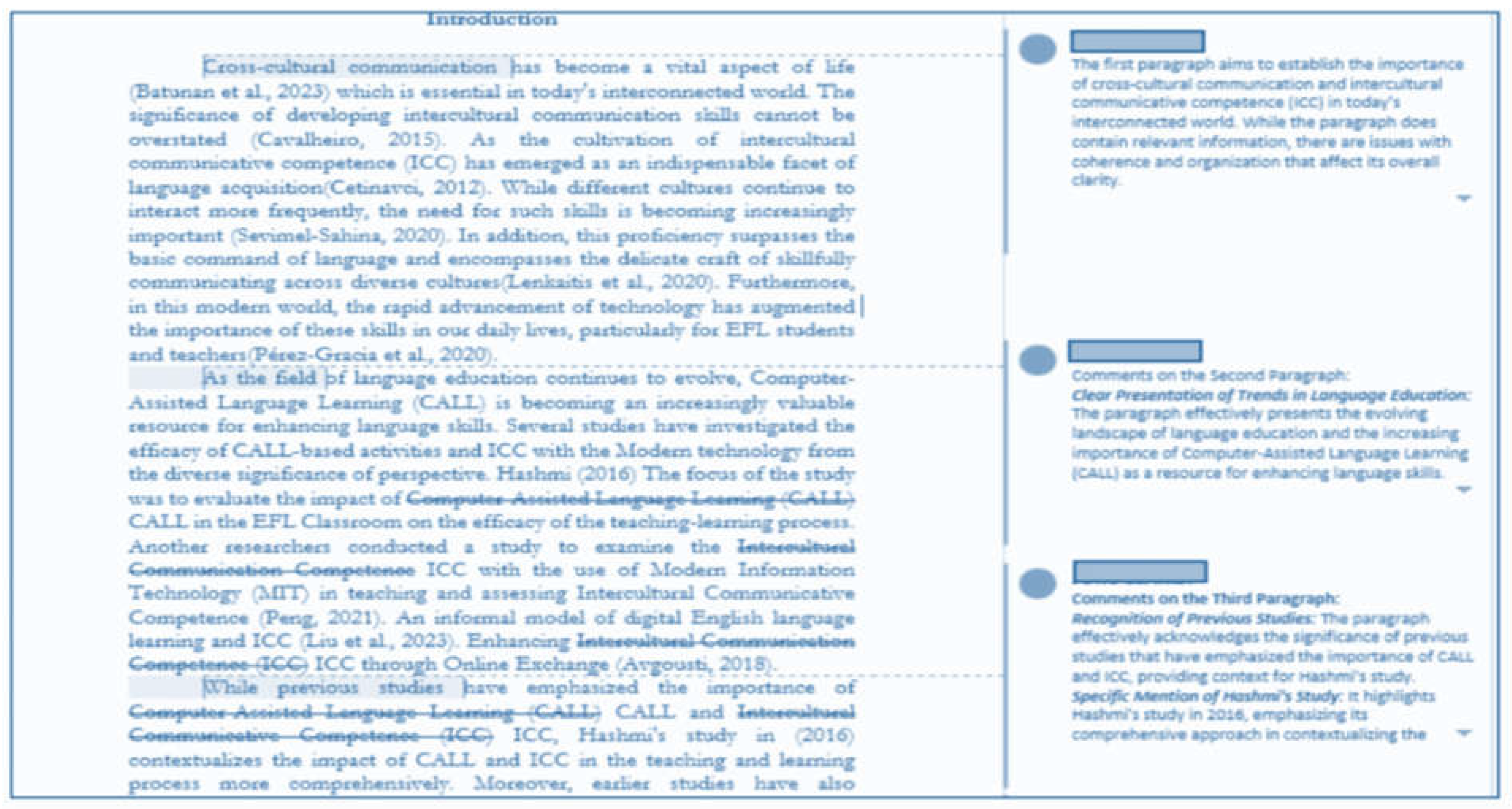

Figure 2 depicts a juxtaposition between the initial and revised versions of a student's academic composition, showcasing the discernible improvements attributed to the integration of ChatGPT. In the preliminary iteration (Figure 1), the text exhibited various deficiencies, notably in coherence and organization. The paragraphs lacked a discernible structure, presenting ideas disjointedly and impeding a seamless flow. Moreover, language proficiency issues, including grammatical inaccuracies and inconsistencies in academic vocabulary employment, were apparent.

Following revision aided by ChatGPT, substantial enhancements emerged in the subsequent draft (Figure 2). The revised passages showcased a more cohesive progression of ideas, with enhanced coherence and structural arrangement. Refinements in transitions between sentences and paragraphs contributed to a clearer and more unified argumentation.

ChatGPT's feedback mechanisms proved instrumental in addressing language proficiency concerns, leading to improved grammatical accuracy, refined academic vocabulary utilization, and adherence to writing conventions.

Figure 2 exemplifies the integration of ChatGPT's feedback, resulting in the elimination of redundant phrases, sentence streamlining, and overall text readability enhancement. The revisions signify a deeper exploration of the topic, evident through expanded analyses, increased evidence integration, and a more nuanced presentation of ideas. Furthermore, the student effectively maintained originality while leveraging ChatGPT's suggestions, striking a harmonious balance between technological assistance and preserving their unique writing style and voice.

Figure 2 underscores the tangible impact of ChatGPT integration on the quality of academic writing among English as a Foreign Language (EFL) students. The improvements in coherence, organization, language proficiency, and adherence to writing conventions collectively contribute to a polished final draft, demonstrating the efficacy of technology in fostering student engagement and proficiency in academic writing.

Additionally, insights gleaned from interviews conducted with six chosen EFL students (referred to as P1-P6 for anonymity) offer a nuanced perspective on the influence of ChatGPT on their writing proficiency, engagement, and technological perception. Thematic analysis unveiled a spectrum of viewpoints encompassing both favorable and unfavorable observations across various pivotal themes.

Theme 1: Writing Improvement

The interviews with the selected EFL students provided valuable insights into how ChatGPT influenced their writing skills, particularly focusing on improvement. The participants shared a mix of positive and negative experiences regarding the tool’s feedback and suggestions.

Participant 1 (P1) emphasized, “ChatGPT’s feedback helped me improve the structure of my essays, leading to clearer arguments.” This indicates that ChatGPT’s feedback played a crucial role in enhancing the organization and coherence of P1’s writing, resulting in more effective communication of ideas. Similarly, Participant 4 (P4) expressed, “Using ChatGPT’s language suggestions improved the fluency and coherence of my writing.” This suggests that P4 found ChatGPT’s language assistance beneficial in enhancing the flow and coherence of their written work, contributing to overall writing improvement. These positive insights underscore the value of ChatGPT in providing targeted feedback and language suggestions that contribute to structural and linguistic enhancements in the students’ writing. The specific examples provided by P1 and P4 demonstrate the tangible impact of ChatGPT on enhancing the clarity and coherence of their written compositions.

However, Participant 3 (P3) mentioned, “While ChatGPT offered helpful suggestions, I sometimes struggled to incorporate them effectively into my writing.” This indicates that despite the usefulness of ChatGPT’s suggestions, P3 faced challenges in seamlessly integrating them into their writing, possibly due to issues such as adapting suggestions to fit their writing style or context. Similarly, Participant 6 (P6) also noted, “I found that ChatGPT’s corrections were sometimes too general, and I needed more specific feedback.” This suggests that P6 encountered limitations with ChatGPT’s feedback, particularly in terms of its specificity, which may have impacted their ability to address specific areas for improvement. These insights highlight potential challenges and limitations associated with ChatGPT’s feedback and corrections. P3’s experience underscores the importance of ensuring that AI-driven feedback aligns well with individual writing styles and contexts to facilitate effective incorporation. Likewise, P6’s feedback emphasizes the need for more tailored and specific feedback from ChatGPT to address nuanced aspects of writing, indicating areas for potential enhancement in the tool’s functionality.

Theme 2: Engagement and Motivation

The interviews conducted with the chosen EFL students provided valuable insights into their levels of engagement and motivation while utilizing ChatGPT for writing tasks. Each participant's experience varied, showcasing a blend of positive and negative encounters with the tool.

Participant 2 (P2) expressed, “Interacting with ChatGPT motivated me to write more frequently and explore new topics.” This highlights how ChatGPT’s interactive elements played a pivotal role in boosting P2’s motivation, fostering a keen interest in writing and a desire to delve into diverse subjects. Similarly, Participant 5 (P5) mentioned, “ChatGPT’s interactive features kept me engaged during writing sessions.” This indicates that P5 found ChatGPT’s interactive aspects effective in maintaining focus and sustaining interest, leading to prolonged engagement during writing sessions. These observations underline ChatGPT’s positive impact on enhancing engagement and motivation among EFL students. The experiences shared by P2 and P5 underscore the tool’s ability to spark curiosity, promote frequent writing, and sustain engagement through interactive functionalities, thereby enriching the writing experience.

Despite these positive experiences, Participant 1 (P1) expressed, “I sometimes felt overwhelmed by the amount of feedback from ChatGPT, which affected my motivation.” This suggests that the volume or nature of ChatGPT’s feedback occasionally overwhelmed P1, reducing their motivation to continue writing. Participant 4 (P4) also noted, “While ChatGPT was helpful, I still preferred human feedback for more personalized guidance.” This indicates that P4 valued human feedback over ChatGPT’s feedback, reflecting a preference for tailored guidance in the writing process. These insights shed light on potential challenges in maintaining optimal levels of motivation and engagement with ChatGPT. P1’s experience emphasizes the need to strike a balance in feedback volume to prevent overwhelming students, while P4’s preference for human feedback highlights the significance of considering individual preferences for guidance in writing tasks.

Theme 3: Attitudes Towards Technology

The interviews conducted also yielded valuable insights into the participants’ attitudes towards technology, particularly in relation to their experiences with ChatGPT during writing tasks.

Participant 3 (P3) mentioned, “Using ChatGPT improved my confidence in using technology for writing tasks.” This indicates that P3 perceived ChatGPT as a tool that boosted their confidence in employing technology for writing purposes, showcasing a positive attitude towards technology integration. Participant 6 (P6) stated, “ChatGPT introduced me to new writing tools and made me more open to technology in education.” This suggests that P6’s interaction with ChatGPT resulted in a broader acceptance and receptiveness towards technology in educational settings, indicating a favorable shift in attitudes. These insights highlight ChatGPT’s role in fostering positive attitudes towards technology among EFL students. P3’s enhanced confidence in technology use and P6’s openness to new tools exemplify the tool’s potential to influence perceptions and attitudes towards technology integration in educational contexts.

However, not all experiences were entirely positive. Participant 2 (P2) expressed, “I had concerns about the reliability of ChatGPT’s suggestions, which affected my trust in the tool.” This indicates that P2 harbored doubts regarding the reliability of ChatGPT’s suggestions, leading to a decrease in trust in the tool’s capabilities. Participant 5 (P5) noted, “While ChatGPT was useful, I still prefer traditional writing methods for certain tasks.” This suggests that despite finding ChatGPT beneficial, P5 maintained a preference for traditional writing approaches in specific contexts. These insights highlight challenges related to trust in AI tools and a persistent preference for traditional methods despite the utility of ChatGPT. P2’s concerns about reliability stress the importance of ensuring accuracy and dependability in AI-driven tools to build and maintain trust among users, while P5’s preference underscores the ongoing relevance of traditional writing methods alongside technological advancements.

The interviews with the six selected EFL students provided a holistic view of their encounters with ChatGPT in academic writing tasks. Across themes of writing enhancement, engagement and motivation, and attitudes towards technology, a spectrum of positive and negative insights emerged. While participants acknowledged the benefits of ChatGPT’s feedback in enhancing writing quality and motivation, challenges such as feedback effectiveness, trust in AI suggestions, and balancing technology with traditional teaching methods were also highlighted. These findings underscore the nuanced nature of integrating AI tools like ChatGPT into academic writing contexts. They provide valuable guidance for optimizing the use of such tools to support and enhance, rather than replace, writing instruction in EFL environments.

Discussion

This study endeavors to conduct a comprehensive analysis of the impacts of integrating ChatGPT into academic writing tasks among EFL students, focusing on writing enhancement, engagement levels, and attitudes toward technology.

Consistent with prior research, our study reaffirms the significant role of AI tools such as ChatGPT in improving writing quality by providing feedback on dimensions such as structure, coherence, and fluency. These outcomes resonate with participants' recognition of the advantages of AI-driven feedback in refining their writing skills (Bašić et al., 2023; Yan, 2023a; Zheng & Zhan, 2023). However, a noteworthy departure from past studies was observed regarding the effectiveness of incorporating AI suggestions into the writing process. While some participants found ChatGPT's feedback beneficial and easy to integrate, others faced challenges in effectively utilizing the suggestions, echoing the difficulties noted by Baskara (2023) and Steiss et al. (2024) in their AI-assisted writing research.

Additionally, our study investigates engagement and motivation, a key focus in prior research on AI integration in education. Consistent with Dergaa et al. (2023) and Song and Song (2023), our participants reported heightened motivation and engagement due to their interactions with ChatGPT. In particular, the tool's interactive features received praise for sustaining student engagement during writing sessions. However, conflicting viewpoints emerged concerning the impact of feedback overload on motivation, an aspect not extensively explored in earlier studies (Aljanabi et al., 2023; Yan, 2023a).

Regarding attitudes toward technology, our study aligns with Cooper (2023) and Zheng and Zhan (2023) in revealing a positive shift in participants' attitudes toward utilizing AI tools for writing tasks. Participants expressed increased confidence in using technology and a willingness to explore new writing tools. This positive trend mirrors findings from previous studies on technology adoption in educational environments (Adiguzel et al., 2023; Javaid et al., 2023). However, concerns about the reliability of AI suggestions and preferences for traditional methods for certain tasks were evident, indicating a nuanced perspective warranting further exploration.

A key contribution of our study lies in the detailed exploration of specific challenges and opportunities arising from ChatGPT's integration into academic writing tasks. For example, while the tool's feedback was generally beneficial, participants highlighted challenges in interpreting and effectively applying the suggestions, especially in complex writing tasks. This finding contrasts with earlier studies emphasizing the ease of use and immediate impact of AI feedback on writing quality (Bašić et al., 2023; Zheng & Zhan, 2023). Moreover, our study extends the discourse on technology attitudes by examining the interplay between perceived usefulness, trust, and skepticism toward AI tools. While participants generally recognized ChatGPT's benefits, concerns about data privacy, algorithmic bias, and the limitations of AI-generated feedback were expressed. This nuanced perspective underscores the importance of addressing ethical and technical considerations in AI integration initiatives to build trust and ensure responsible technology use in education (Baskara, 2023; Steiss et al., 2024).

Our findings present a nuanced view of ChatGPT's integration into academic writing tasks among EFL students, both confirming and departing from prior research. Consistent with existing literature, the study confirms the significant impact of AI tools like ChatGPT in improving writing quality, with participants acknowledging the benefits of AI-driven feedback. However, differing from previous studies, our research emphasizes the need for cautious integration of AI suggestions into the writing process for maximum effectiveness. Furthermore, the study highlights ChatGPT's positive influence on student engagement and motivation, in line with findings on AI integration in education. Nonetheless, concerns about feedback overload and preferences for traditional methods indicate a complex relationship between AI support and student motivation. These findings stress the importance of adopting nuanced approaches to AI integration and suggest areas for further research to explore the long-term effects and ethical considerations of AI use in educational settings.