1. Introduction

In recent years, growing demand for remote sensing technology has broadened the scope of in-situ spectral measurements of vegetation and of the identification of individual species. This interest is driven by the need to identify and monitor plant species more precisely, which in turn improves our understanding of plant ecology and biology. One notable challenge in remote sensing is the taxonomic classification of the very large number of plant species: recent estimates put the number of plant species on Earth at approximately 374,000 to 435,000 [1,2], of which around 308,312 are vascular plants [1]. Hyperspectral measurements have the potential to identify these vascular plants and could thereby drive new research in remote sensing and vegetation science [3].

In addition, remote sensing enables forest trees to be counted and analyzed through image processing of satellite or drone imagery [4]. By fusing data from multi-channel cameras, it becomes possible to detect trees, determine their species, and analyze their spatial distribution. Likewise, this technology is invaluable for conserving endangered and critically endangered plant species by continuously monitoring their growth, habitats, and health. By combining spectral information with high-resolution satellite or drone imagery and new algorithms, it is possible to calculate the growth area of these plants and to distinguish healthy from diseased specimens.

Using a hyperspectral camera equipped with a liquid crystal tunable filter (LCTF), researchers have achieved vegetation classification accuracies of up to 94.5% from spectral reflectance with machine learning algorithms [5]. Hyperspectral imaging, a rapidly evolving technique, provides detailed information on the Earth's surface reflectance over a wide range of wavelengths [6]. This imaging modality has already proven valuable in botany and plant science for reliably detecting and identifying plant stress and diseases and quantifying their severity [7,8,9,10], for classifying plant species precisely [7,11,12,13,14], and for mapping their distribution [15,16,17,18]. The high spatial and spectral resolution of hyperspectral data is increasingly important for advancing these fields. Hyperspectral imaging involves both hardware, particularly hyperspectral cameras that capture spatial and spectral information together, and algorithms for processing the resulting data. First deployed for Earth remote sensing in the 1980s [19], hyperspectral imaging (HSI) substantially extended the capabilities of its predecessor, fiber probe-based spectroscopy, by adding a spatial dimension. HSI now enables simultaneous acquisition of data in hundreds of spectral bands for each pixel of an image [20].

This paper presents the results of field measurements made with a custom-developed hyperspectral imaging camera based on a Liquid Crystal Tunable Filter (LCTF) in a grassland ecosystem in Mongolia. The technology, first implemented in space-borne instruments by Hokkaido University and Tohoku University, captures spectral data over the 460 to 780 nm wavelength range with a 1 nm sampling interval. Switching between spectral bands takes at most 300 ms, and the exposure time ranges from 5 to 50 ms depending on the object's reflectance and the solar zenith angle. The primary goal of this study is to use the LCTF camera for detailed vegetation analysis, focusing on accurately distinguishing vegetated from non-vegetated areas using spectral data obtained in the field.

2. Methods

2.1. Field Measurements

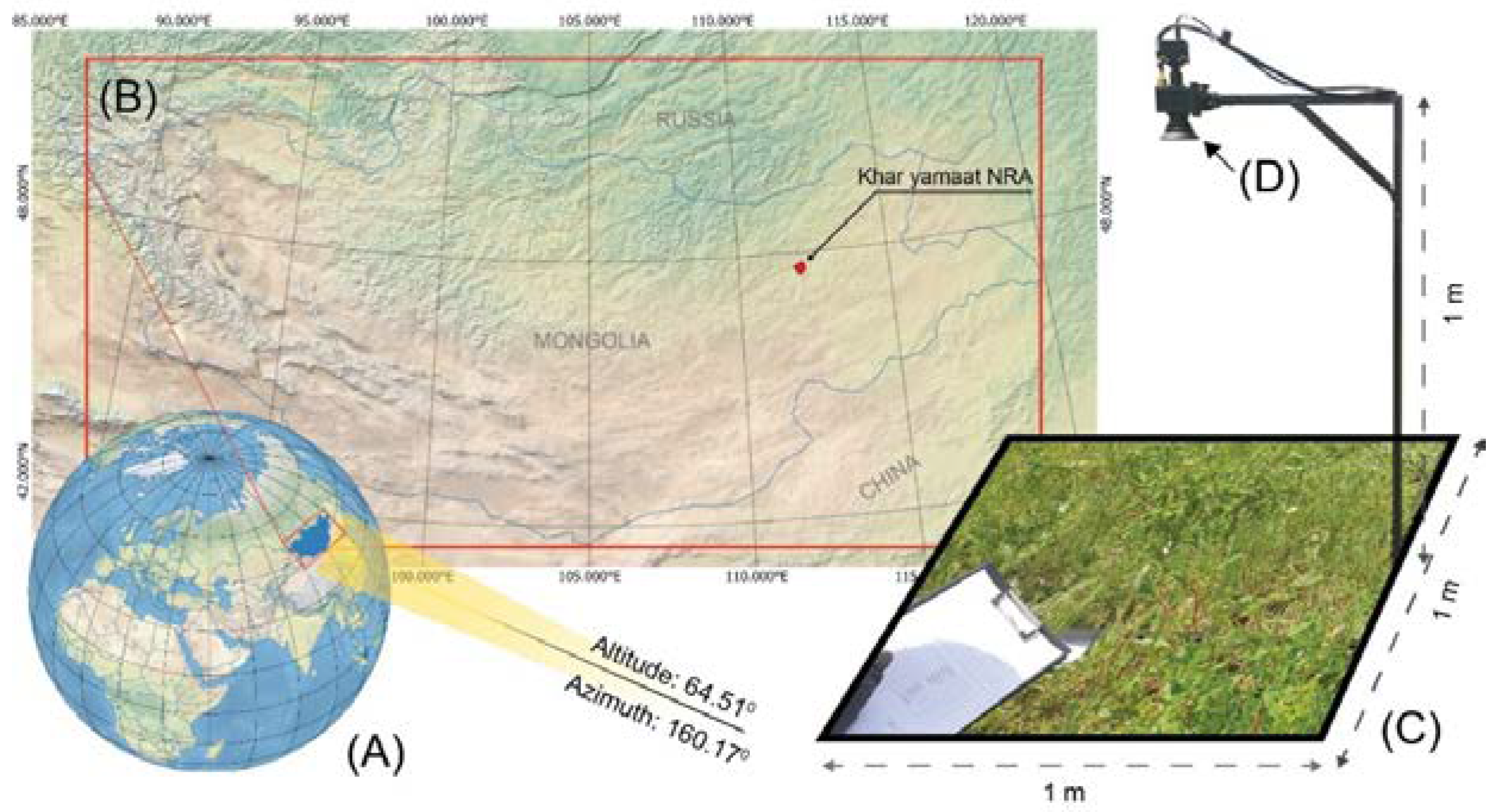

In this study, the LCTF camera [5,21] was used to capture vegetation spectra and images during a field survey (Figure 1). The camera was mounted on a 1 m × 1 m metal frame, pointing vertically down at the ground from a height of 1 m. It produces two output files: an 8-bit bitmap and a 10-bit comma-separated values (CSV) spreadsheet. Images were acquired over the 460-780 nm range in 65 channels at 5 nm intervals. We established six sampling sites for capturing spectral images, each representing a different habitat within the Khar Yamaat NRA.

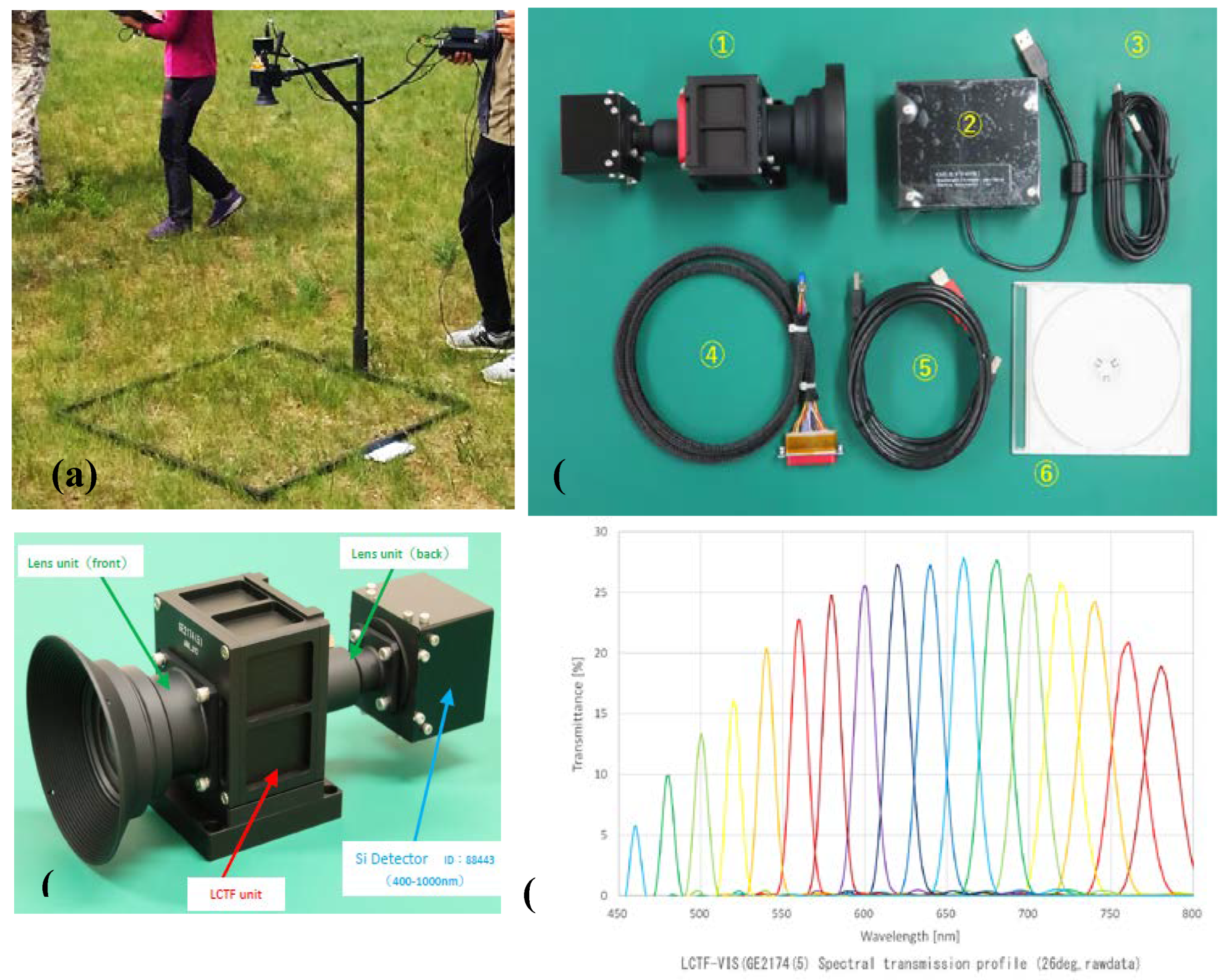

The LCTF camera consists of a series of lenses, a liquid crystal filter, and a CCD array sensor, and connects to a computer via USB. Because the tunable filter requires an external power source, a portable battery pack powers it during field operations. The filter is controlled by a Field Programmable Gate Array (FPGA) connected to a USB port on the PC (Figure 2).

2.2. Image Processing: A New “Slope Coefficients on the Red Edge” Algorithm for Image Classification

Calculations on the image data from the hyperspectral LCTF camera were performed with scripts written in MATLAB. The camera operates over the 460 nm to 780 nm wavelength band with a 1 nm sampling interval, and its images are 656 × 494 pixels.

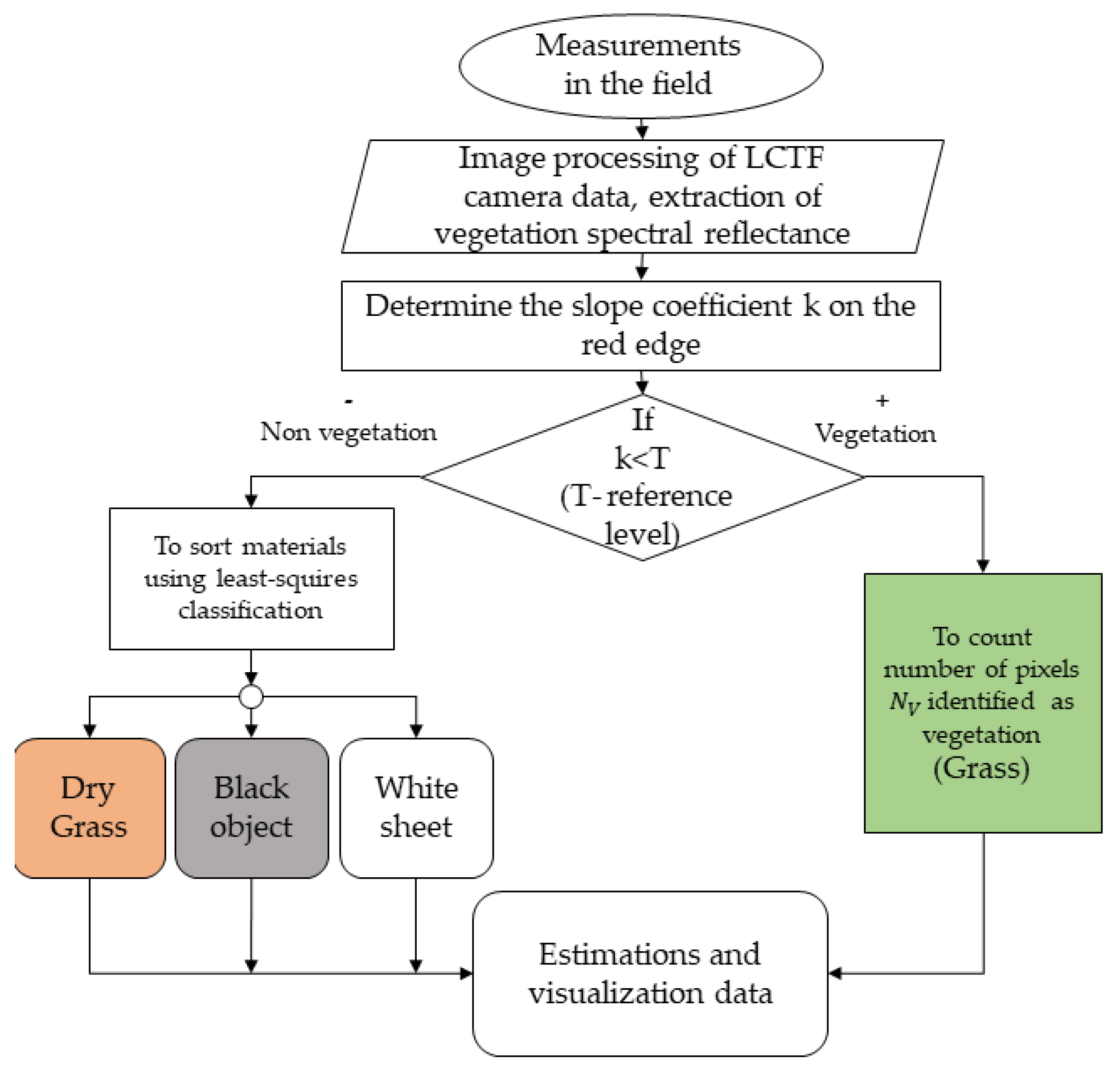

The step-by-step process of classification using camera data is summarized in the algorithmic flowchart presented in Figure 3.

The proposed algorithm uses the high-resolution spectral data from the LCTF camera to separate vegetation from non-vegetation elements within a given area. By combining spectral data analysis with classification techniques, the method is designed to provide accurate and detailed assessments of vegetation cover.

Each field plot typically contains several species within a 1 m² area. Reflectance varies because leaves shade one another and because the angle of sunlight changes.

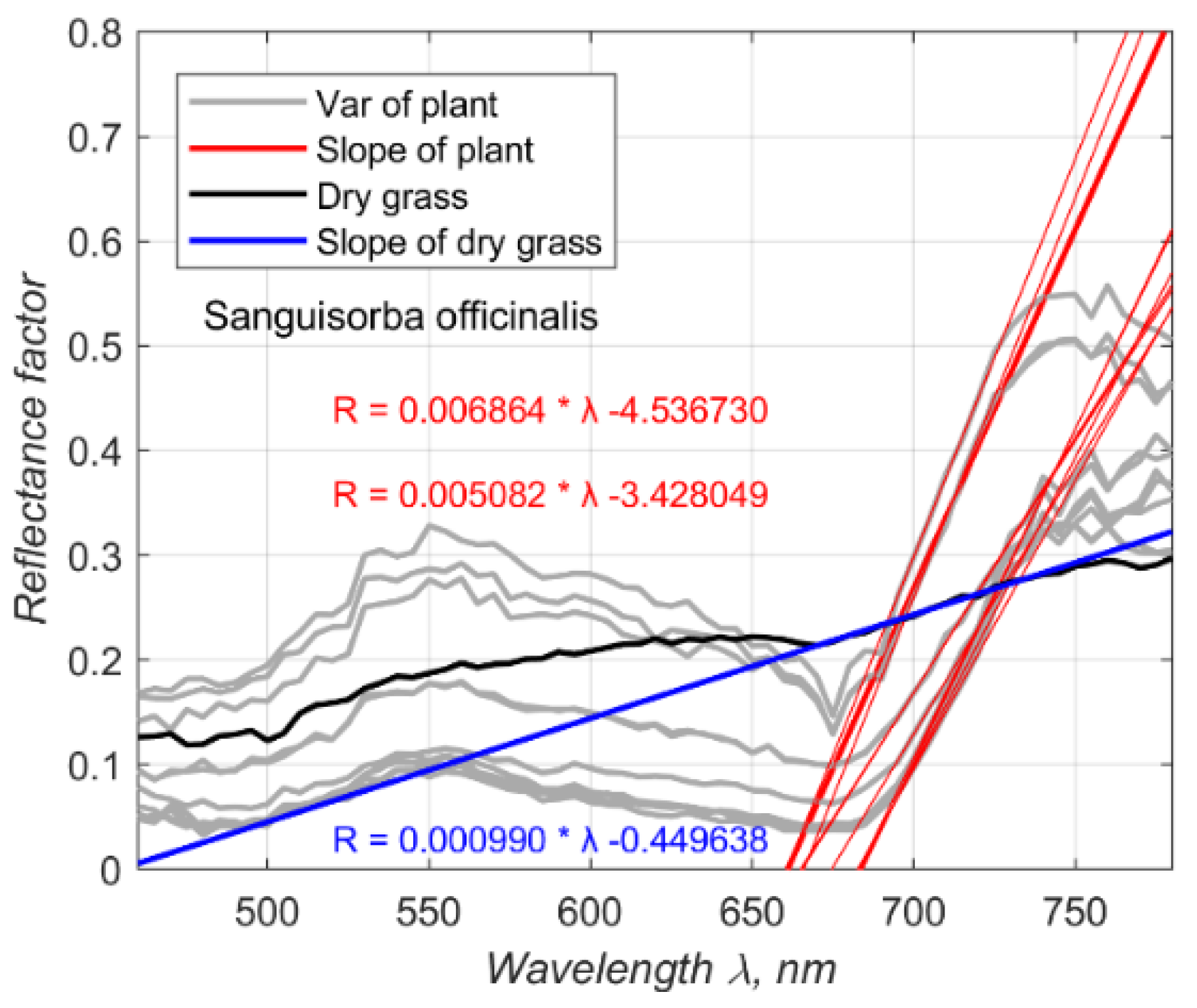

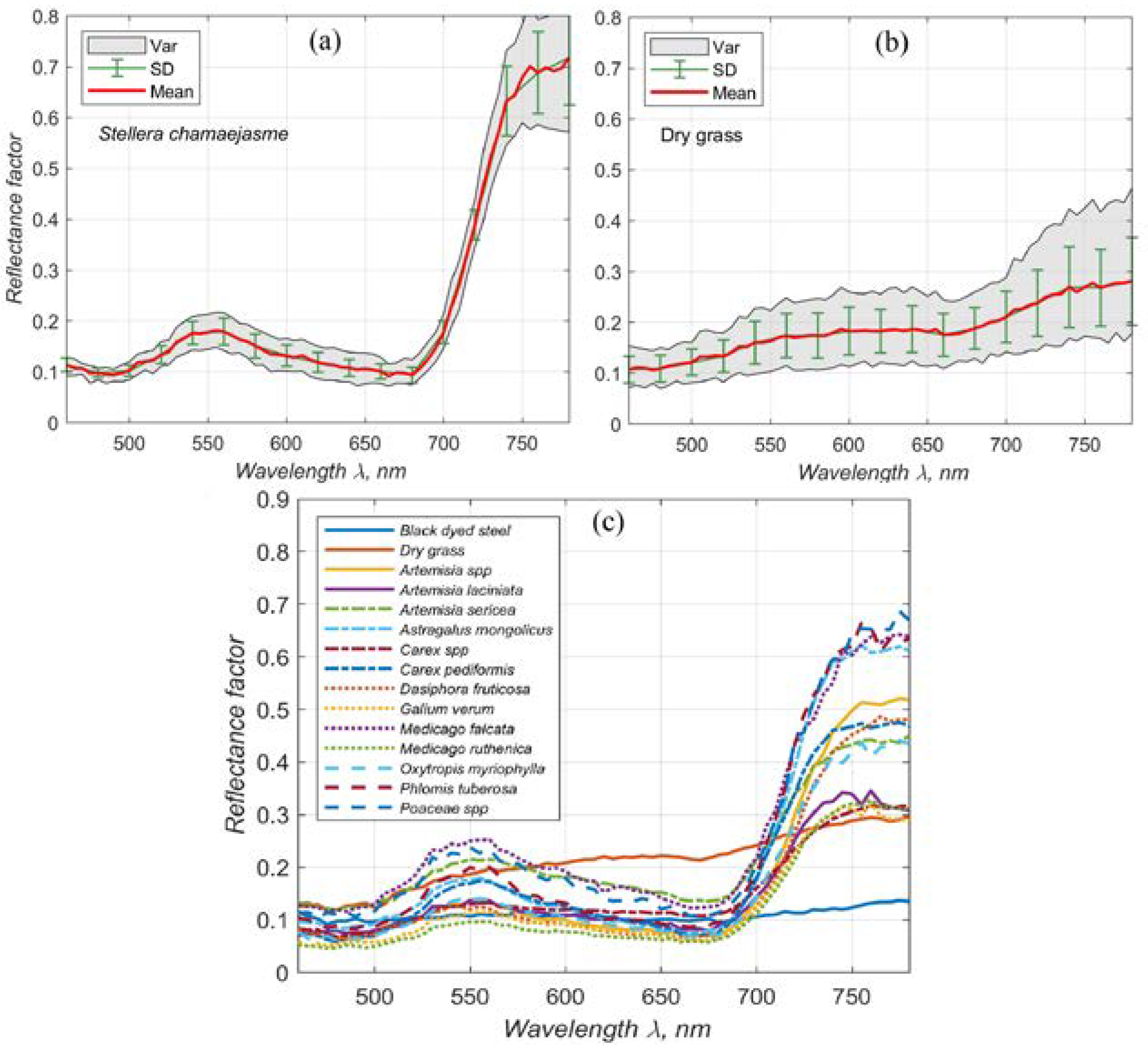

Figure 4 shows the spectra of leaves from different species under different lighting conditions. Shadows or reduced illumination consistently lowered the reflection factor.

Spectra were extracted from 10 × 10 pixel windows on individual leaves of vegetation carefully selected within the images. Because the camera viewed the scene from directly overhead, the viewing angle and relative azimuth were effectively zero, so the measured reflection did not depend on the viewing or azimuth angle [21]. The reflection factor (RF) for the LCTF camera was therefore defined as:

$$RF(\lambda) = \frac{DN(\lambda) - DN_{b}(\lambda)}{DN_{w}(\lambda) - DN_{b}(\lambda)}$$

where $DN(\lambda)$ is the pixel value of the measured sample, $DN_{w}(\lambda)$ is the pixel value of the white reference material, $\lambda$ is the wavelength, and $DN_{b}(\lambda)$ is the value of the optical background.
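A minimal sketch of the reflection-factor calculation and of the 10 × 10 pixel window, written in Python rather than the MATLAB used by the authors. It assumes each pixel spectrum is a list of per-band digital numbers (for example, 10-bit values from the CSV output) and that the white-reference and background spectra are supplied separately. The function names and the nested-list `cube[row][col]` layout are hypothetical, and averaging the window's spectra is our reading of how a window spectrum is formed.

```python
from statistics import fmean


def reflectance_factor(dn, dn_white, dn_background):
    """Per-band RF = (DN - DN_b) / (DN_w - DN_b) for one pixel spectrum."""
    if not (len(dn) == len(dn_white) == len(dn_background)):
        raise ValueError("dn, dn_white and dn_background need one value per band")
    rf = []
    for sample, white, dark in zip(dn, dn_white, dn_background):
        span = white - dark  # white-reference signal above the optical background
        if span <= 0:
            raise ValueError("white reference must exceed the background level")
        rf.append((sample - dark) / span)
    return rf


def window_mean_spectrum(cube, row, col, size=10):
    """Mean spectrum of the size x size window whose top-left pixel is (row, col).

    cube[r][c] is assumed (hypothetically) to hold the per-band spectrum of pixel (r, c).
    """
    spectra = [cube[r][c] for r in range(row, row + size) for c in range(col, col + size)]
    n_bands = len(spectra[0])
    return [fmean(s[b] for s in spectra) for b in range(n_bands)]


# Two-band toy example with made-up digital numbers.
print(reflectance_factor([300, 620], [900, 1000], [60, 60]))
```

Subtracting the background from both numerator and denominator removes the sensor's dark offset before normalizing by the white reference, which is why the sketch rejects a white reference that does not exceed the background.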

The spectral shape of the vegetation remained distinguishable even in shadow. Another hallmark of green plants is the red edge, a sharp rise in reflectance from the red into the near-infrared region: chlorophyll absorbs most visible light, particularly red light, but becomes transparent beyond about 700 nm, where the leaf's internal structure strongly scatters light.

Pixels with a red-edge slope coefficient higher than the reference value are classified as vegetation; pixels with a slope coefficient below this threshold are classified as non-vegetation. Non-vegetation pixels are then further classified by a least-squares method applied to their spectral signatures to identify the specific material: dry grass, soil, other materials, or white sheets. The final step of the algorithm estimates and visualizes the proportion of pixels identified as vegetation relative to the total number of pixels in the captured image. This proportion provides an index of vegetation canopy density, a key variable in many ecological and agricultural applications.
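The text does not spell out the least-squares step, so the following is only a sketch of one common reading: each non-vegetation spectrum is assigned to the reference spectrum (dry grass, soil, white sheet, and so on) with the smallest sum of squared differences. The reference spectra and their values are hypothetical placeholders; in practice they would come from field measurements of each material.

```python
def classify_by_least_squares(spectrum, references):
    """Return the label whose reference spectrum minimizes the sum of squared differences."""
    best_label, best_sse = None, float("inf")
    for label, ref in references.items():
        if len(ref) != len(spectrum):
            raise ValueError(f"reference {label!r} has {len(ref)} bands, expected {len(spectrum)}")
        sse = sum((s - r) ** 2 for s, r in zip(spectrum, ref))
        if sse < best_sse:
            best_label, best_sse = label, sse
    return best_label


# Hypothetical three-band reference spectra (reflection factors), for illustration only.
REFERENCES = {
    "dry grass": [0.20, 0.25, 0.30],
    "soil": [0.15, 0.17, 0.19],
    "white sheet": [0.90, 0.90, 0.90],
}

print(classify_by_least_squares([0.16, 0.18, 0.19], REFERENCES))  # soil
```

Because the comparison uses absolute differences, a material seen under much weaker illumination than its reference could be mismatched; normalizing spectra first is one possible variant.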

Building on these spectral characteristics, a novel algorithm was formulated for calculating the LAI and discerning plant species. The algorithm processes images from the LCTF camera and extracts the vegetation reflection factor spectra. Pixels with a sufficiently large slope coefficient at the red edge are labeled as vegetation. The LAI is then computed as the ratio of the number of pixels classified as vegetation to the total number of pixels.

$$LAI = \frac{N_{v}}{N_{v} + N_{n}}$$

Here $N_{v}$ and $N_{n}$ are the numbers of vegetation and non-vegetation pixels, respectively.
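The ratio can be computed directly from a classification mask. This sketch assumes a nested list of booleans (True for vegetation) in which every pixel is labeled either vegetation or non-vegetation, so that the two counts sum to the total pixel count (324,064 for a full 656 × 494 frame). The function name is hypothetical.

```python
def vegetation_pixel_ratio(mask):
    """LAI as defined in the text: vegetation pixels / (vegetation + non-vegetation pixels)."""
    n_veg = sum(1 for row in mask for is_veg in row if is_veg)
    n_total = sum(len(row) for row in mask)
    if n_total == 0:
        raise ValueError("mask contains no pixels")
    n_non_veg = n_total - n_veg  # every pixel is assumed to be labeled one way or the other
    return n_veg / (n_veg + n_non_veg)


# 2 x 3 toy mask: 4 of 6 pixels classified as vegetation.
toy_mask = [[True, True, False],
            [True, False, True]]
print(vegetation_pixel_ratio(toy_mask))
```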

To evaluate the newly developed “slope coefficients on the red edge” algorithm, we compared it with a supervised classifier, the Support Vector Machine (SVM), and an unsupervised one, K-means clustering. SVM was chosen as a control because of its robustness and high classification accuracy: it is widely used in many fields, and in terrain classification from hyperspectral remote sensing images it performed slightly better than other classifiers, with an overall accuracy of 90% and Kappa coefficients between 0.76 and 0.94 [22]. Classification accuracy was assessed with a confusion matrix, using overall accuracy, producer's accuracy, user's accuracy, and the Kappa coefficient, a commonly used metric for evaluating image classification accuracy.
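For reference, the accuracy measures listed above can be computed from a confusion matrix as follows. The sketch assumes rows hold the reference (ground-truth) classes and columns the classified map classes; with that convention, producer's accuracy divides each diagonal entry by its row total and user's accuracy by its column total. The matrix in the example is made up and is not the study's data.

```python
def accuracy_report(confusion):
    """Overall, producer's and user's accuracy plus Cohen's kappa from a square confusion matrix.

    confusion[i][j] counts samples whose reference class is i and whose mapped class is j.
    """
    k = len(confusion)
    n = sum(sum(row) for row in confusion)
    if n == 0:
        raise ValueError("confusion matrix is empty")
    row_totals = [sum(confusion[i]) for i in range(k)]  # reference totals
    col_totals = [sum(confusion[i][j] for i in range(k)) for j in range(k)]  # map totals
    correct = sum(confusion[i][i] for i in range(k))

    overall = correct / n
    producers = [confusion[i][i] / row_totals[i] if row_totals[i] else float("nan") for i in range(k)]
    users = [confusion[j][j] / col_totals[j] if col_totals[j] else float("nan") for j in range(k)]
    # Chance agreement expected from the marginal totals.
    expected = sum(row_totals[i] * col_totals[i] for i in range(k)) / n ** 2
    kappa = (overall - expected) / (1 - expected) if expected < 1 else float("nan")
    return overall, producers, users, kappa


# Made-up 2-class matrix (vegetation, non-vegetation); not the study's data.
print(accuracy_report([[40, 10], [5, 45]]))
```

For this toy matrix, overall accuracy is 0.85 and the chance agreement is (50 × 45 + 50 × 55) / 100² = 0.5, so κ = (0.85 − 0.5) / (1 − 0.5) = 0.7.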

3. Results

Spectral reflectance data were successfully collected for approximately 230-250 leaves of 14 plant species.

A slope coefficient was determined from the reflection factors measured at wavelengths near the red edge (700, 705, 710, 715, and 720 nm). This coefficient effectively separates living green vegetation from dead grass, as illustrated in Figure 5. Figure 5a shows spectra of Sanguisorba officinalis leaves in gray, with the tangent at the red edge of each spectrum drawn as a straight red line. The slope coefficients of the vegetation spectra vary between a lowest value of … and a highest value of …, whereas the slope coefficient of dry grass is significantly lower, at … (Figure 5b).
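The slope coefficient can be sketched as an ordinary least-squares line fitted to the five red-edge reflection factors. Whether the authors fitted a line or took a tangent from fewer points is not stated, so the least-squares fit is our assumption, as is expressing the reflection factor in percent and wavelength in nanometres; the numerical scale of the slope, and hence of any threshold, depends on these units. The spectra below are hypothetical.

```python
RED_EDGE_NM = (700, 705, 710, 715, 720)


def red_edge_slope(wavelengths, reflectance):
    """Ordinary least-squares slope of reflectance against wavelength."""
    n = len(wavelengths)
    if n != len(reflectance) or n < 2:
        raise ValueError("need matching wavelength/reflectance lists with at least 2 points")
    mean_x = sum(wavelengths) / n
    mean_y = sum(reflectance) / n
    sxx = sum((x - mean_x) ** 2 for x in wavelengths)
    if sxx == 0:
        raise ValueError("wavelengths must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(wavelengths, reflectance))
    return sxy / sxx


# Hypothetical spectra: a steep red edge (green leaf) and a nearly flat one (dry grass).
green_leaf = [10.0, 20.0, 30.0, 40.0, 50.0]
dry_grass = [30.0, 31.0, 32.0, 32.0, 33.0]
print(red_edge_slope(RED_EDGE_NM, green_leaf), red_edge_slope(RED_EDGE_NM, dry_grass))
```

A least-squares fit over all five bands is less sensitive to noise in any single band than a two-point difference, which is one reason to prefer it when several red-edge channels are available.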

Figure 5c shows the spectra of 14 plant species, dried grass, and black-dyed iron. This difference in slope coefficients allows healthy green vegetation to be distinguished from dead grass, showing that the slope coefficient is an effective indicator of vegetation vitality.

Our field research takes advantage of the LCTF camera's ability to capture images in five distinct spectral channels within the red-edge band (700-720 nm). This spectral resolution enables calculation of a slope coefficient, a quantitative measure of vegetation growth in the red-edge region. Such fine-grained analysis makes the LCTF camera particularly useful in this study, as it captures subtle spectral variations in vegetation health and vigor that multispectral satellite sensors, with few or no bands in the red edge, cannot resolve.

The reflection factor at each wavelength was computed using a relative reference method. Figure 5 shows the spectral reflectance factor and the variation in spectral signatures of different plant species and metal objects.

Table 1 shows a significant difference in slope coefficients between vegetation and non-vegetation materials. Across the 14 plant species, the slope coefficients ranged from a minimum of 3 to a maximum of about 14. The table presents a subset of these species, in groups of three, to illustrate the variation within vegetation samples. Non-vegetation materials, by contrast, have markedly lower slope coefficients. As Table 1 shows, this clear disparity is crucial for accurately distinguishing living vegetation from non-vegetation elements in spectral data analysis.

Results of the Novel Algorithm and Comparisons

The method used in this study is a systematic algorithm that processes and analyzes LCTF camera data to distinguish healthy vegetation from dry grass and other materials. First, field measurements capture the spectral data of the vegetation with the LCTF camera. These data are then processed to extract the spectral reflectance of each pixel, which forms the dataset for the subsequent analysis. From the spectral reflectance, the algorithm determines the slope coefficient at the red edge of the spectrum, a region indicative of chlorophyll content and therefore of vegetation health. Each pixel's slope coefficient is compared with a predetermined reference value to classify the pixel as vegetation or non-vegetation. In this study, the reference threshold for distinguishing vegetation from non-vegetation was set at 2.0.

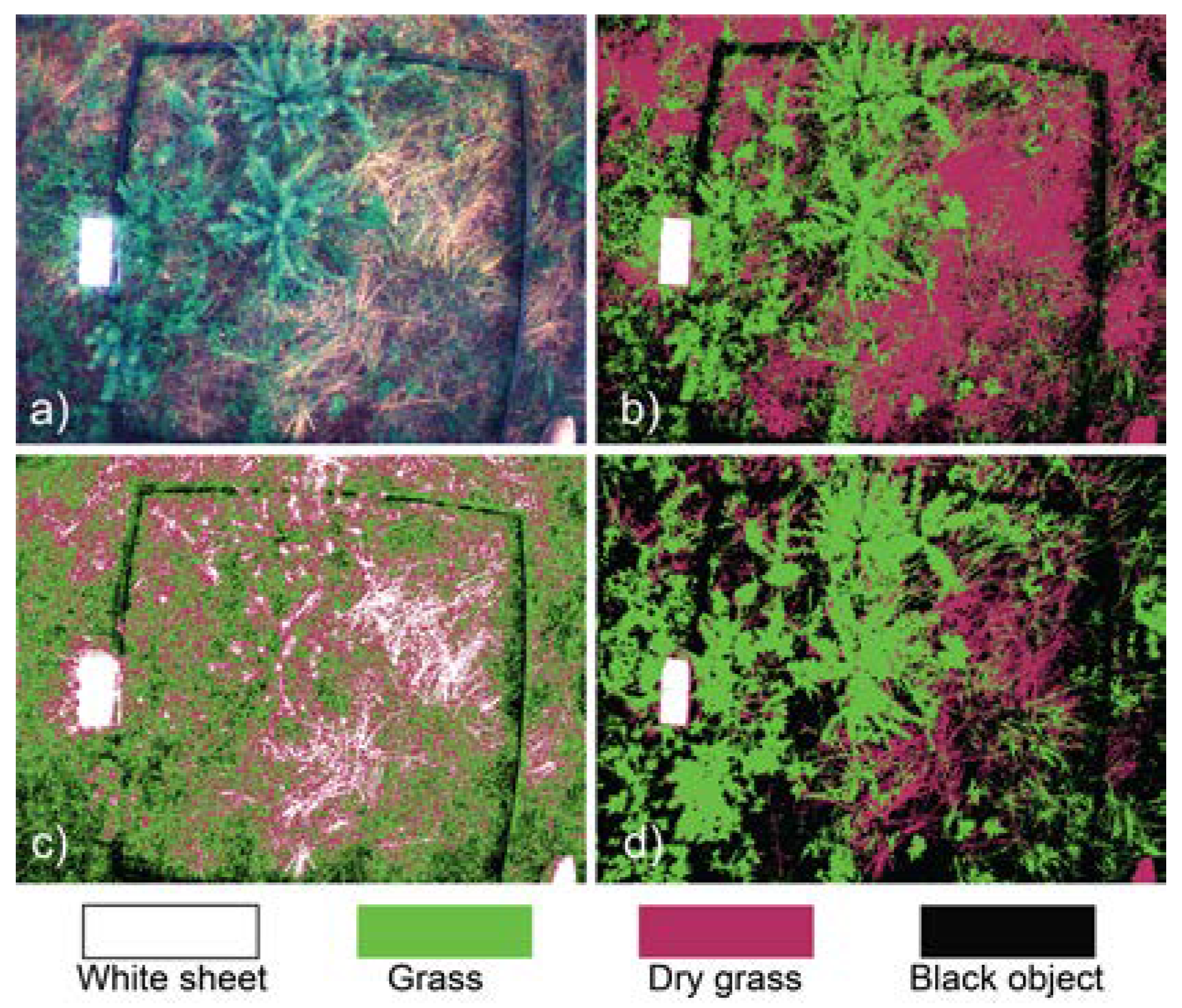

The performance of our novel algorithm, which is based on the red-edge spectral feature, was compared with that of the established SVM and K-means clustering algorithms (Figure 6).

Our red-edge approach achieved an overall accuracy of 90% and a Kappa coefficient of 0.86, showing that it classifies vegetation effectively. SVM achieved the highest accuracy, 97%, with a Kappa coefficient of 0.96, indicating reliable performance in complex scenes. K-means, although easy to use because it is unsupervised, reached a lower overall accuracy of 61% and a Kappa coefficient of 0.47, indicating that it needs refinement for vegetation classification.

This comparison shows that the red-edge approach performs well, clearly outperforming K-means though not matching SVM, and underscores the need to choose classification techniques that fit specific project goals and available resources.

Table 2 presents a detailed accuracy assessment, showing that our algorithm achieves high accuracy while offering greater computational efficiency, a significant advantage for processing large hyperspectral datasets.

This comparison highlights the ability of our red-edge algorithm to classify vegetation accurately by relying on the red-edge slope coefficient, which is closely linked to vegetation health. This targeted approach differs from the broader spectral analysis used by SVM and K-means, which can fail to separate materials with similar spectral properties.

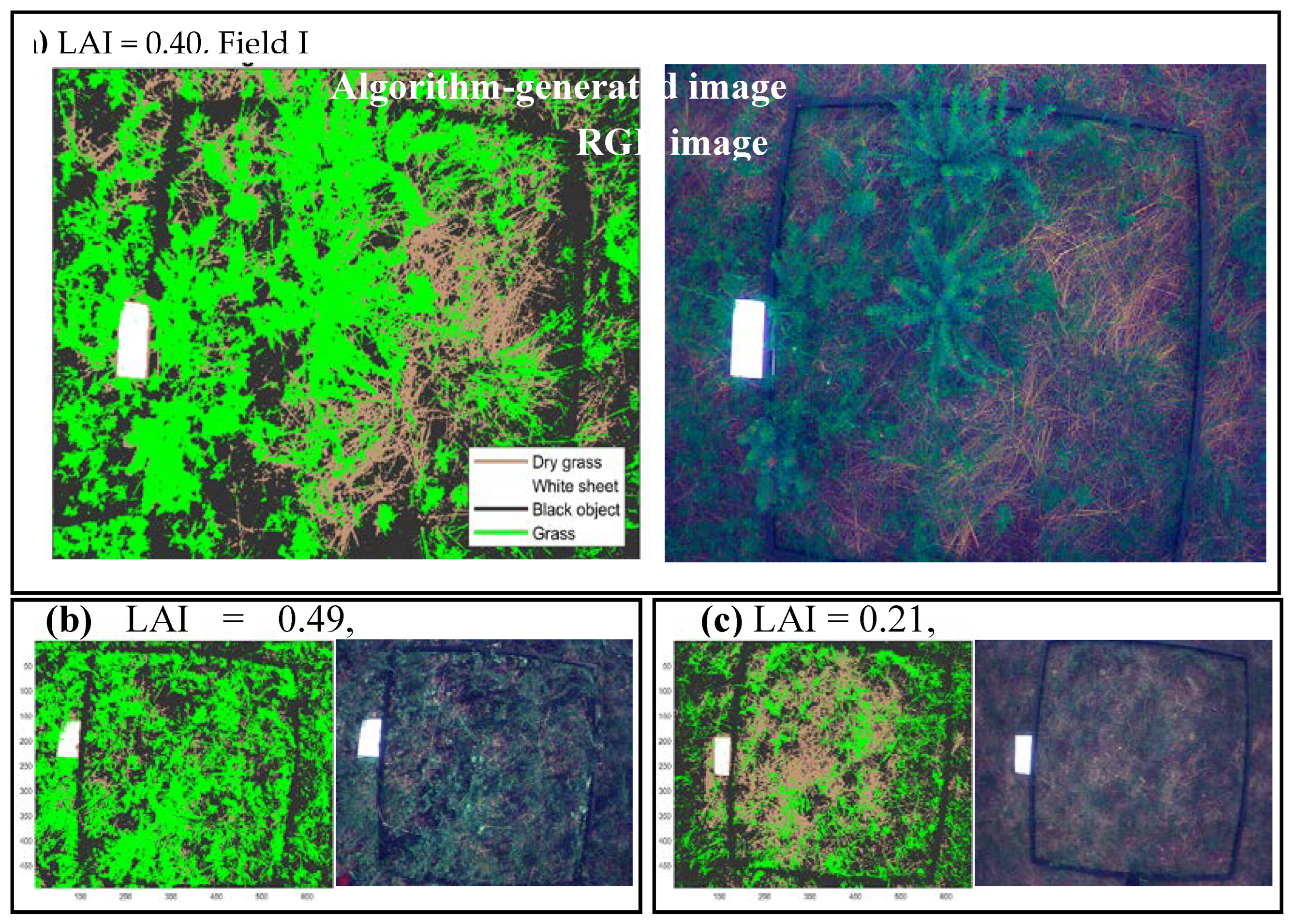

LAI Estimation

Across the six sites, the estimated LAI values ranged from 0.21 to 0.71, reflecting marked differences in vegetation density between sites with different ecological characteristics. The minimum value of 0.21 indicates sparse vegetation, possibly reflecting limited vegetation growth or disturbed habitats. The maximum value of 0.71 indicates dense vegetation cover, likely in areas with rich biodiversity or well-preserved ecosystems (Figure 7).

The range of LAI values indicates that the algorithm captures and quantifies differences in vegetation density, which may have environmental and ecological implications. Both the minimum and maximum LAI values are plausible given the expected variation in natural landscapes, supporting the ability of our approach to reflect the heterogeneity of vegetation cover.

4. Discussion

The reflection of sunlight from the leaf surface, which can make some pixels appear shiny, must also be considered. When light strikes a leaf, its waxy surface layer produces specular reflection that alters the spectral signature [23,24,25]. We expect our algorithm to reduce misclassification of such pixels because it decides whether vegetation is present from the slope coefficient at the red edge rather than from the reflectance level: specular reflection from the surface adds a largely wavelength-independent component, which raises the reflectance but changes its slope little. The algorithm is likewise expected to be less sensitive to the varied orientations of leaves within the same plant.

Notably, the leaf's outer layer is a transparent cuticle [25,26,27,28] whose optical properties resemble those of glass. Although the upper epidermis and mesophyll are visible through this layer, the light reflected at the leaf surface depends on the angle of incidence of the incoming light, so the polarization of the reflected light must also be considered. Polarized reflectance arises from reflection at the interface between different media. Existing research confirms that the main component of light reflected from leaves originates at the surface, particularly the waxy cuticle, rather than from diffuse reflection [28,29,30,31]. The reflection spectrum of leaves therefore depends not only on the plant's internal composition but also on its external layers. For close-range observations with hyperspectral cameras, incorporating polarization techniques could thus improve vegetation classification based on visible leaf attributes.

Close-range measurements with hyperspectral cameras at spatial resolutions finer than 3 mm remain largely unexplored in the existing literature, so the present study may be among the first to distinguish vegetation at such a fine spatial resolution. Related studies [5,22,31,32,33] have used drone and satellite data for remote sensing at broader scales.

The hyperspectral LCTF camera was central to the methodology of this study. In a parallel study, Hokkaido University and research institutions in the Philippines used a drone carrying a similar camera for field measurements [5]. That study noted that areas shaded by mango trees were not sufficiently detected and appeared black in the images. This suggests that measurement precision could be improved by incorporating the slope coefficient method developed in the present study.

5. Conclusions

This study, carried out at the Khar Yamaat Nature Reserve in collaboration with a research group focusing on ecology and nature conservation, used a hyperspectral LCTF camera to acquire images of field vegetation at diverse locations. The camera effectively acted as a two-dimensional spectrophotometer, with each pixel operating as an individual spectrophotometer. The results indicate that incorporating the red-edge slope estimation technique improves vegetation classification accuracy. Building on this method, an innovative algorithm was developed to calculate the LAI rapidly, streamlining the evaluation and identification of ecological resources in the field. The main contributions of this study are as follows:

- Spectral reflectance data were acquired from approximately 230-250 leaves, representing 14 distinct species common in Mongolia, over the 460-780 nm wavelength range at 5 nm intervals.

- Using the spectral reflectance data, and mainly the red-edge slope coefficient, we developed a method that accurately separates vegetation from non-vegetation elements across various landscapes.

- Our novel red-edge algorithm was compared with the established SVM and K-means algorithms. It classified vegetation with an overall accuracy of 90% and a Kappa coefficient of 0.86, clearly outperforming K-means (61%, 0.47), although SVM achieved higher accuracy (97%, 0.96).

- Field analyses across six distinct locations yielded LAI values ranging from 0.21 to 0.71, showing that the algorithm captures the diversity of vegetation density.

Furthermore, the algorithm's expected robustness against misclassification caused by specular reflection or leaf orientation makes it practical for real-world applications. The implications of this research extend beyond academic interest, offering valuable insights for ecological assessments, conservation efforts, and resource management. In the future, integrating this algorithm with satellite and drone image analysis could enhance our ability to monitor and understand ecological systems on a global scale. This study contributes to the advancement of environmental monitoring techniques and sets the stage for future research to refine and expand the use of hyperspectral imaging in assessing vegetation health and distribution.

Author Contributions

B.U. spearheaded the experimental efforts and played a pivotal role in overseeing the field measurements. B.T. and B.O. were responsible for devising the estimation methodology and analyzing data. B.O. meticulously processed and generated graphical representations using MATLAB and other software tools. B.T. took the lead in compiling the initial manuscript and facilitated discussions among the co-authors. Y.T. provided invaluable methodological insights and guidance throughout the research process. E.D., Q.W., H.B. and T.T. provided supervision and oversight, ensuring the rigor and integrity of the findings. All authors actively engaged in fruitful discussions on the results and collectively contributed to refining the final manuscript.

Funding

This research received partial support from a high-level research project at the National University of Mongolia, project number P2020-3983, titled “Measurement and Database Creation of Spectro-polarimetric Light Depending on the Direction of Reflection”. We acknowledge and appreciate the funding provided for this study. This research was performed in collaboration with the National University of Mongolia and WWF-Mongolia, with the support of the GEF-funded “Promoting Dryland Sustainable Landscapes and Biodiversity Conservation in the Eastern Steppe of Mongolia” project, co-implemented by FAO and WWF.

Acknowledgments

The authors extend their gratitude to G. Anar, M. Baasanjargal, S. Enkhchimeg, and S. Bizyaa for their invaluable assistance during field measurements and with transportation. We also acknowledge the support of WWF-Mongolia and the Higher Engineering Education Development Project, which played a crucial role in acquiring the LCTF camera from Japan.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Christenhusz, M.J.M.; Byng, J.W. The number of known plants species in the world and its annual increase. Phytotaxa 2016, 261, 201-217. [CrossRef]

- Enquist, B.J.; Feng, X.; Boyle, B.; Maitner, B.; Newman, E.A.; Jørgensen, P.M.; Roehrdanz, P.R.; Thiers, B.M.; Burger, J.R.; Corlett, R.T.; et al. The commonness of rarity: Global and future distribution of rarity across land plants. Science Advances 2019, 5. [CrossRef]

- Guo, Y.; Graves, S.J.; Flory, S.L.; Bohlman, S.A. Hyperspectral Measurement of Seasonal Variation in the Coverage and Impacts of an Invasive Grass in an Experimental Setting. Remote Sensing 2018. [CrossRef]

- Yao, L.; Liu, T.; Qin, J.; Lu, N.; Zhou, C. Tree counting with high spatial-resolution satellite imagery based on deep neural networks. Ecological Indicators 2021, 125. [CrossRef]

- Ishida, T.; Kurihara, J.; Viray, F.A.; Namuco, S.B.; Paringit, E.C.; Perez, G.J.; Takahashi, Y.; Marciano, J.J. A novel approach for vegetation classification using UAV-based hyperspectral imaging. Computers and Electronics in Agriculture 2018, 144, 80-85. [CrossRef]

- Gao, B.C.; Montes, M.J.; Davis, C.O.; Goetz, A. Atmospheric Correction Algorithms for Hyperspectral Remote Sensing Data of Land and Ocean. Remote Sensing of Environment 2009. [CrossRef]

- Lowe, A.; Harrison, N.; French, A.P. Hyperspectral image analysis techniques for the detection and classification of the early onset of plant disease and stress. Plant Methods 2017, 13, 80. [CrossRef]

- Bock, C.H.; Poole, G.H.; Parker, P.E.; Gottwald, T.R. Plant Disease Severity Estimated Visually, by Digital Photography and Image Analysis, and by Hyperspectral Imaging. Critical Reviews in Plant Sciences 2010, 29, 59-107. [CrossRef]

- Mahlein, A.-K.; Steiner, U.; Hillnhütter, C.; Dehne, H.-W.; Oerke, E.-C. Hyperspectral imaging for small-scale analysis of symptoms caused by different sugar beet diseases. Plant Methods 2012, 8, 3. [CrossRef]

- Mahlein, A.K.; Rumpf, T.; Welke, P.; Dehne, H.W.; Plümer, L.; Steiner, U.; Oerke, E.C. Development of spectral indices for detecting and identifying plant diseases. Remote Sensing of Environment 2013, 128, 21-30. [CrossRef]

- Hartfield, K.; Gillan, J.K.; Norton, C.L.; Conley, C.; van Leeuwen, W.J.D. A Novel Spectral Index to Identify Cacti in the Sonoran Desert at Multiple Scales Using Multi-Sensor Hyperspectral Data Acquisitions. Land 2022, 11, 786. [CrossRef]

- Iqbal, I.M.; Balzter, H.; Firdaus e, B.; Shabbir, A. Identifying the Spectral Signatures of Invasive and Native Plant Species in Two Protected Areas of Pakistan through Field Spectroscopy. Remote Sensing 2021, 13, 4009. [CrossRef]

- Paz-Kagan, T.; Caras, T.; Herrmann, I.; Shachak, M.; Karnieli, A. Multiscale mapping of species diversity under changed land use using imaging spectroscopy. Ecological Applications 2017, 27, 1466-1484. [CrossRef]

- Huang, W.; Lamb, D.W.; Niu, Z.; Zhang, Y.; Liu, L.; Wang, J. Identification of yellow rust in wheat using in-situ spectral reflectance measurements and airborne hyperspectral imaging. Precision Agriculture 2007, 8, 187-197. [CrossRef]

- Andrew, M.; Ustin, S. The role of environmental context in mapping invasive plants with hyperspectral image data. Remote Sensing of Environment 2008, 112, 4301-4317. [CrossRef]

- Lawrence, R.L.; Wood, S.D.; Sheley, R.L. Mapping invasive plants using hyperspectral imagery and Breiman Cutler classifications (randomForest). Remote Sensing of Environment 2006, 100, 356-362. [CrossRef]

- Yu, K.-Q.; Zhao, Y.-R.; Li, X.-L.; Shao, Y.-N.; Liu, F.; He, Y. Hyperspectral Imaging for Mapping of Total Nitrogen Spatial Distribution in Pepper Plant. PLoS ONE 2014, 9, e116205. [CrossRef]

- Underwood, E. Mapping nonnative plants using hyperspectral imagery. Remote Sensing of Environment 2003, 86, 150-161. [CrossRef]

- Goetz, A.F.H.; Vane, G.; Solomon, J.E.; Rock, B.N. Imaging Spectrometry for Earth Remote Sensing. Science 1985, 228, 1147-1153. [CrossRef]

- Kamruzzaman, M.; Sun, D.W. Introduction to Hyperspectral Imaging Technology. In Computer Vision Technology for Food Quality Evaluation; Elsevier: 2016; pp. 111-139.

- Tumendemberel, B.; Takahashi, Y. Study of Spectro-Polarimetric Bidirectional Reflectance Properties of Leaves. Hokkaido University, 2019. [CrossRef]

- Ahmad, A.M.; Minallah, N.; Ahmed, N.; Ahmad, A.M.; Fazal, N. Remote Sensing Based Vegetation Classification Using Machine Learning Algorithms. In Proceedings of the 2019 International Conference on Advances in the Emerging Computing Technologies (AECT), 10 Feb. 2020; pp. 1-6.

- Comar, A.; Baret, F.; Obein, G.; Simonot, L.; Meneveaux, D.; Viénot, F.; de Solan, B. ACT: A leaf BRDF model taking into account the azimuthal anisotropy of monocotyledonous leaf surface. Remote Sensing of Environment 2014, 143, 112-121. [CrossRef]

- Kallel, A. Leaf polarized BRDF simulation based on Monte Carlo 3-D vector RT modeling. Journal of Quantitative Spectroscopy and Radiative Transfer 2018, 221, 202-224. [CrossRef]

- Tulloch, A.P. Chemistry of waxes of higher plants. Chemistry and Biochemistry of Natural Waxes 1976.

- Baker, E.A. Chemistry and morphology of plant epicuticular waxes. Linnean Society symposium series 1982.

- Schieferstein, R.H.; Loomis, W.E. Wax Deposits on Leaf Surfaces. Plant Physiology 1956, 31, 240-247.

- Grant, L. Diffuse and specular characteristics of leaf reflectance. Remote Sensing of Environment 1987, 22, 309-322. [CrossRef]

- Grant, L.; Daughtry, C.S.T.; Vanderbilt, V.C. Polarized and specular reflectance variation with leaf surface features. Physiologia Plantarum 1993, 88, 1-9. [CrossRef]

- Liu, W.; Wu, E.Y. Comparison of non-linear mixture models: sub-pixel classification. Remote Sensing of Environment 2005, 94, 145-154. [CrossRef]

- Ryan, J.S.; Brianna, M.C.; Pamela, C.Z.; Jason, C.G. An Analysis of the Accuracy of Photo-Based Plant Identification Applications on Fifty-Five Tree Species. Arboriculture & Urban Forestry (AUF) 2022, 48, 27. [CrossRef]

- Fu, B.; Zuo, P.; Liu, M.; Lan, G.; He, H.; Lao, Z.; Zhang, Y.; Fan, D.; Gao, E. Classifying vegetation communities karst wetland synergistic use of image fusion and object-based machine learning algorithm with Jilin-1 and UAV multispectral images. Ecological Indicators 2022, 140, 108989. [CrossRef]

- Bousquet, L.; Lachérade, S.; Jacquemoud, S.; Moya, I. Leaf BRDF measurements and model for specular and diffuse components differentiation. Remote Sensing of Environment 2005, 98, 201-211. [CrossRef]

Figure 1.

Overview of Research Location and Field Equipment Schematic. (A, B) Location of Khar Yamaat NRA, with solar altitude (64.51°) and azimuth (160.17°) on July 3rd, 2022 (created using Natural Earth's free vector and raster map data, available at naturalearthdata.com). (C) Custom camera holder measuring 1 m × 1 m × 1 m. The inset photo within the frame shows various grass and herb species growing in Khar Yamaat NRA.

Figure 2.

Field Equipment and Hyperspectral Camera. (a) Measurements being conducted in the field. (b) The hyperspectral LCTF camera at the National University of Mongolia (NUM): (b.1) LCTF camera, (b.2) tunable filter's electric driver, (b.3-5) connection cables. (c) Configuration of the hyperspectral LCTF camera. (d) Spectral transmission profile of the LCTF. Panels b, c, and d are taken from the user manual provided by Genesia Corporation.

Figure 3.

Flowchart of the novel classification algorithm applied to hyperspectral LCTF camera data.

Figure 4.

Comparative Analysis of Spectral Reflectance under Different Lighting Conditions. Spectral reflectance of shaded and sunlit leaves and of dry grass, with their respective slope coefficients.
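The slope coefficients in this figure (and in Table 1) describe how steeply reflectance rises across the red edge. One common way to obtain such a coefficient is an ordinary least-squares slope of reflectance against wavelength inside a red-edge window. The sketch below assumes that definition; the 680–740 nm window, the function name, and the absence of any scale factor are hypothetical choices rather than the authors' settings.

```python
import numpy as np


def red_edge_slope(wavelengths_nm, reflectance, window=(680.0, 740.0)):
    """OLS slope of reflectance vs. wavelength inside a red-edge window.

    `reflectance` may be one spectrum (B,) or a cube (..., B) whose last axis
    matches `wavelengths_nm`. The default window is a hypothetical choice.
    Returns the slope in reflectance units per nm, with no scale factor applied.
    """
    wl = np.asarray(wavelengths_nm, dtype=float)
    refl = np.asarray(reflectance, dtype=float)
    mask = (wl >= window[0]) & (wl <= window[1])
    if mask.sum() < 2:
        raise ValueError("need at least two bands inside the red-edge window")
    x = wl[mask]
    y = refl[..., mask]
    # Closed-form least-squares slope, vectorised over all leading (pixel) axes
    xc = x - x.mean()
    yc = y - y.mean(axis=-1, keepdims=True)
    return (yc * xc).sum(axis=-1) / (xc ** 2).sum()
```

Vectorising over the leading axes lets the same function produce a per-pixel slope map from an H × W × B cube, which is the form a pixel-wise vegetation/non-vegetation decision would need.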

Figure 5.

Spectral Reflectance and Variation Analysis. (a) Spectral reflectance factor, variation of spectral signatures, and standard deviations for Stellera chamaejasme L. (b) Reflectance factor, spectral variation, and standard deviations for dry grass. (c) Average reflectance values for different plant species: Artemisia laciniata Willd. 1803, Artemisia sericea Weber ex Stechm. 1775, Astragalus mongholicus Bunge 1868, Carex pediformis C.A. Mey. 1831, Dasiphora fruticosa (L.) Rydb. 1898, Galium verum L. 1753, Medicago falcata L. 1753, Medicago ruthenica (L.) Ledeb. 1841, Oxytropis myriophylla (Pall.) DC. 1802, and Phlomis tuberosa L. 1753, plus three unidentified species.
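Reflectance factors such as those plotted here are commonly derived by normalising raw camera counts against a white reference and a dark frame, after which per-band means and standard deviations summarise the spectral variation of each species. The sketch below assumes a reference panel of unit reflectance and uses hypothetical function names; the calibration procedure actually used in the study is not described in this caption.

```python
import numpy as np


def reflectance_factor(raw, white, dark):
    """Normalise raw counts to a reflectance factor: (raw - dark) / (white - dark).

    Assumes `white` is a reference panel of unit reflectance imaged under the
    same illumination; bands where white == dark are set to 0 instead of
    dividing by zero.
    """
    raw, white, dark = (np.asarray(a, dtype=float) for a in (raw, white, dark))
    num = raw - dark
    den = white - dark
    out = np.zeros(np.broadcast_shapes(num.shape, den.shape))
    return np.divide(num, den, out=out, where=den != 0)


def spectral_summary(spectra):
    """Per-band mean and sample standard deviation of an N x B array of spectra (N >= 2)."""
    s = np.asarray(spectra, dtype=float)
    return s.mean(axis=0), s.std(axis=0, ddof=1)
```

Applying `spectral_summary` to all pixels sampled from one species gives a mean curve and a ±SD envelope of the kind shown in panels (a) and (b).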

Figure 6.

Comparative visualization of vegetation and non-vegetation classification: (a) RGB image from the LCTF camera; (b) results of the SVM algorithm; (c) results of the K-means algorithm; and (d) results of our novel algorithm.
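The K-means baseline in panel (c) is an unsupervised clustering of pixel spectra. A minimal NumPy sketch of Lloyd's k-means follows; the number of clusters, the random initialisation, and the iteration limit are hypothetical, since the authors' configuration is not given in the caption. The resulting clusters are unlabelled and still have to be mapped to classes before they can be scored as in Table 2.

```python
import numpy as np


def kmeans(pixels, k, n_iter=100, seed=0):
    """Lloyd's k-means on an N x B array of pixel spectra; returns (labels, centroids)."""
    x = np.asarray(pixels, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialise with k distinct pixels chosen at random (hypothetical choice)
    centroids = x[rng.choice(len(x), size=k, replace=False)]

    def assign(c):
        # Squared Euclidean distance of every pixel to every centroid -> N x k
        d2 = ((x[:, None, :] - c[None, :, :]) ** 2).sum(axis=-1)
        return d2.argmin(axis=1)

    for _ in range(n_iter):
        labels = assign(centroids)
        # Move each centroid to the mean of its pixels; keep it if the cluster is empty
        new = np.array([
            x[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new, centroids):
            break
        centroids = new
    # Final assignment so labels always match the returned centroids
    return assign(centroids), centroids
```

Because k-means groups pixels purely by spectral distance, shaded vegetation and dark non-vegetation objects can fall into the same cluster, which is one plausible reason an unsupervised baseline scores lower than a supervised or physically motivated rule.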

Figure 7.

Comparative analysis of algorithm-generated and RGB images.

Table 1.

Slope Coefficients for Red-Edge Calculation of Vegetation and Non-Vegetation Objects. These numbers are multiplied by and the units are

| № | Example species or object from the range group | Mean | SD | Range group |
|---|---|---|---|---|
| Vegetation | | | | |
| 1 | Artemisia laciniata | 4.36 | 0.82 | 3–5 |
| 2 | Dasiphora fruticosa | 6.98 | 0.79 | 6–8 |
| 3 | Astragalus mongholicus | 10.58 | 0.68 | 9–11 |
| 4 | Polygonum divaricatum | 14.32 | 1.23 | 12–14 |
| Non-vegetation | | | | |
| 5 | Dry grass | 0.86 | 0.41 | 0.37–1.46 |
| 6 | White sheet | 0.12 | 0.23 | 0.33–0.47 |
| 7 | Black dyed steel | 0.34 | 0.20 | 0.08–0.34 |
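Because the vegetation ranges in Table 1 start at 3 while the non-vegetation ranges stay at or below 1.46, a single cut-off between the two can separate vegetation from non-vegetation, and a vegetation pixel can then be assigned to the nearest range group. The sketch below illustrates this idea only: the cut-off of 2.0 and the nearest-centre rule are assumptions for illustration, not the decision rule of the proposed algorithm, and slopes are taken to be in the table's scaled units.

```python
import numpy as np

# Vegetation range groups from Table 1 (slope coefficients in the table's scaled units)
VEG_GROUPS = [(3.0, 5.0), (6.0, 8.0), (9.0, 11.0), (12.0, 14.0)]
# HYPOTHETICAL cut-off between non-vegetation (<= 1.46) and vegetation (>= 3) ranges
VEG_THRESHOLD = 2.0


def classify_slopes(slopes):
    """Return 0 for non-vegetation, or 1..4 for the nearest vegetation range group."""
    s = np.asarray(slopes, dtype=float)
    centres = np.array([(lo + hi) / 2.0 for lo, hi in VEG_GROUPS])
    # Nearest group centre (1-based); values between groups go to the closer one
    group = np.abs(s[..., None] - centres).argmin(axis=-1) + 1
    return np.where(s >= VEG_THRESHOLD, group, 0)
```

Applied to the mean values in Table 1, the sketch labels dry grass, the white sheet, and the black dyed steel as 0 and the four vegetation examples as groups 1 to 4.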

Table 2.

Accuracy assessment comparing different image classification algorithms.

| Type | Novel red-edge algorithm PA/% | Novel red-edge algorithm UA/% | SVM PA/% | SVM UA/% | K-means PA/% | K-means UA/% |
|---|---|---|---|---|---|---|
| Grass | 99.97 | 97.25 | 94.03 | 95.94 | 28.69 | 40.05 |
| Dry grass | 60.03 | 99.46 | 96.93 | 97.56 | 39.32 | 45.59 |
| White sheet | 99.72 | 100 | 100 | 100 | 100 | 61.78 |
| Black object | 98.7 | 71.63 | 96.58 | 94.13 | 67.26 | 96.72 |
| Overall accuracy/% | 90 | | 97.02 | | 60.56 | |
| Kappa coefficient | 0.86 | | 0.96 | | 0.47 | |

PA, producer's accuracy; UA, user's accuracy.
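Producer's accuracy (PA), user's accuracy (UA), overall accuracy, and the kappa coefficient in Table 2 are all derived from a confusion matrix. The sketch below uses the standard definitions; the function name, the row/column convention, and the example matrix are assumptions, as the confusion matrices behind Table 2 are not reported here.

```python
import numpy as np


def accuracy_metrics(confusion):
    """PA, UA, overall accuracy and Cohen's kappa from a confusion matrix.

    Convention assumed here: rows = reference (ground-truth) classes,
    columns = classified (map) classes.
    """
    cm = np.asarray(confusion, dtype=float)
    total = cm.sum()
    diag = np.diag(cm)
    producers = diag / cm.sum(axis=1)  # PA: correct / reference total per class
    users = diag / cm.sum(axis=0)      # UA: correct / classified total per class
    overall = diag.sum() / total
    # Chance agreement from the products of row and column marginals
    expected = (cm.sum(axis=1) * cm.sum(axis=0)).sum() / total ** 2
    kappa = (overall - expected) / (1.0 - expected)
    return producers, users, overall, kappa
```

For the illustrative matrix `[[45, 5], [10, 40]]`, overall accuracy is 0.85 and kappa is 0.70: chance agreement is 0.5, so kappa credits only the agreement beyond chance. This is why a high overall accuracy can still come with a noticeably lower kappa.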

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).