Submitted: 18 May 2024

Posted: 30 May 2024

Abstract

Keywords:

1. Introduction and Motivation

- We propose a robust approach to automatic pain detection from facial expressions.

- The framework uses multilinear principal component analysis for facial feature extraction and dimension reduction, which improves discriminative power and raises the detection rate relative to previous studies reported in the literature.

- We evaluate the system on the UNBC-McMaster Shoulder Pain Expression Archive database, where it outperforms state-of-the-art methods.

| Input: A face in pain from the UNBC-McMaster database, the learned space projection matrices from Multilinear Whitened Principal Component Analysis (MWPCA), and a fixed threshold. Initialize: Type = (a pair of images). |
|---|
| For to 4. = Calculate Compute (equation 1) End. |
| Output: A representation of the image that contains five scales, with the convergence parameters. |
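The projection step named in the algorithm can be illustrated with a minimal sketch. It assumes a simplified, single-pass form of multilinear PCA with per-mode whitening (each retained eigenvector is scaled by the inverse square root of its eigenvalue), not the authors' exact MWPCA procedure. The function names, the `ranks` parameter, and the choice of height, width and scale as the three tensor modes are assumptions made for illustration only.

```python
import numpy as np

def unfold(tensor, mode):
    """Mode-n unfolding: move axis `mode` to the front and flatten the rest."""
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)

def fit_whitened_mode_projections(samples, ranks, eps=1e-8):
    """Return the sample mean and one whitened projection matrix per mode.

    samples: array of shape (M, I1, ..., IN), one tensor per face image.
    ranks:   number of components kept in each mode (hypothetical choice).
    """
    mean = samples.mean(axis=0)
    centered = samples - mean
    projections = []
    for mode, rank in enumerate(ranks):
        # Mode-n scatter matrix accumulated over all centered samples.
        scatter = sum(unfold(x, mode) @ unfold(x, mode).T for x in centered)
        eigvals, eigvecs = np.linalg.eigh(scatter)  # eigenvalues ascending
        top = np.argsort(eigvals)[::-1][:rank]      # indices of largest ones
        # Whitening: scale each kept eigenvector by 1 / sqrt(eigenvalue).
        scale = 1.0 / np.sqrt(np.maximum(eigvals[top], 0.0) + eps)
        projections.append(eigvecs[:, top] * scale)
    return mean, projections

def project(sample, mean, projections):
    """Apply the mode-n products with U_n^T to a centered sample tensor."""
    out = sample - mean
    for mode, u in enumerate(projections):
        # Contract mode `mode` of `out` with U_n^T, then restore axis order.
        out = np.moveaxis(np.tensordot(u.T, out, axes=(1, mode)), 0, mode)
    return out
```

Under these assumptions, a face tensor of shape (height, width, scales) is reduced to a small core tensor of shape `ranks`, which can then be flattened and passed to a classifier or compared against a threshold.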

2. Related Works

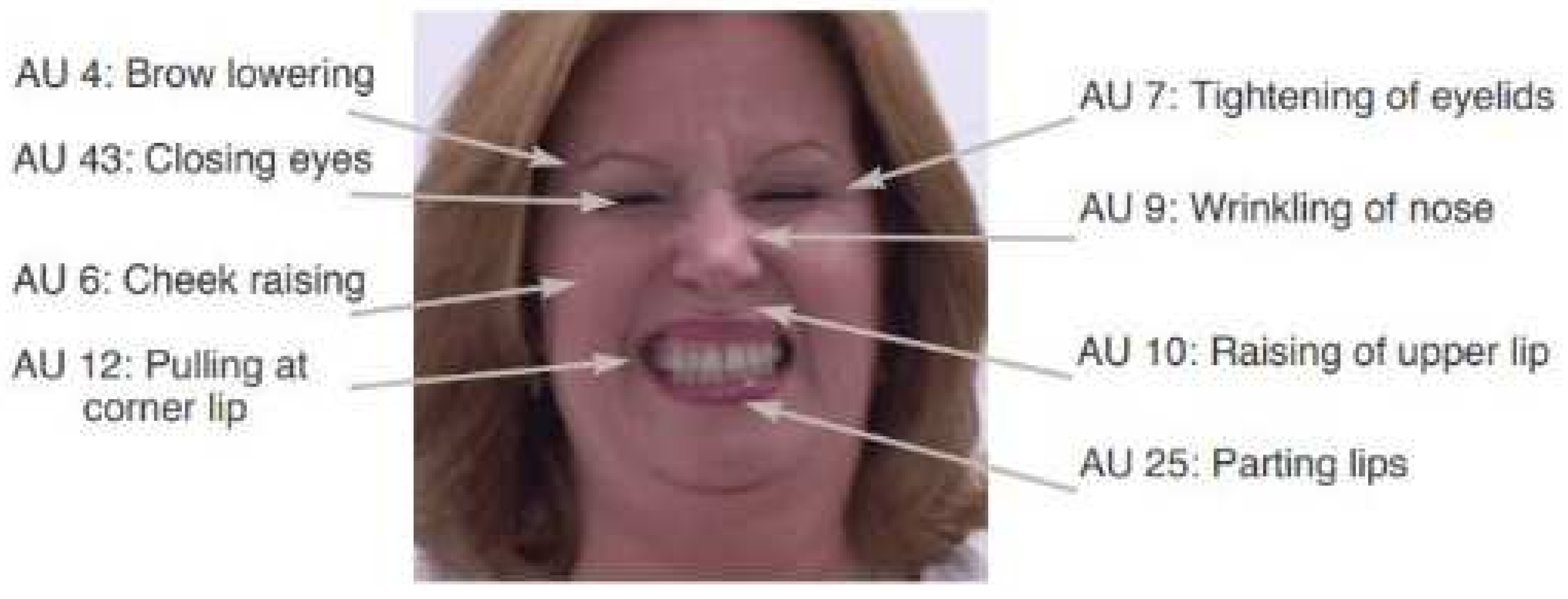

2.0.1. Prkachin and Solomon Pain Intensity Metric (PSPI)
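For orientation, the PSPI score is commonly computed from Facial Action Coding System action-unit (AU) intensities as AU4 + max(AU6, AU7) + max(AU9, AU10) + AU43. The sketch below is a minimal illustration of that standard definition; the function name `pspi` and its argument names are hypothetical and not taken from this paper.

```python
def pspi(au4, au6, au7, au9, au10, au43):
    """Prkachin and Solomon Pain Intensity from action-unit intensities.

    au4, au6, au7, au9, au10 are intensities on the 0-5 FACS scale;
    au43 (eye closure) is binary, 0 or 1. The score ranges from 0 to 16.
    """
    return au4 + max(au6, au7) + max(au9, au10) + au43
```

For example, a frame coded with AU4 = 2, AU6 = 3, AU7 = 1, AU9 = 0, AU10 = 4 and closed eyes (AU43 = 1) scores 2 + 3 + 4 + 1 = 10.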

2.0.2. UNBC Database

3. Proposed Architecture and Method

3.1. Data Preprocessing

3.2. Feature Extraction

4. Multilinear Subspace Learning

5. Training of the Model

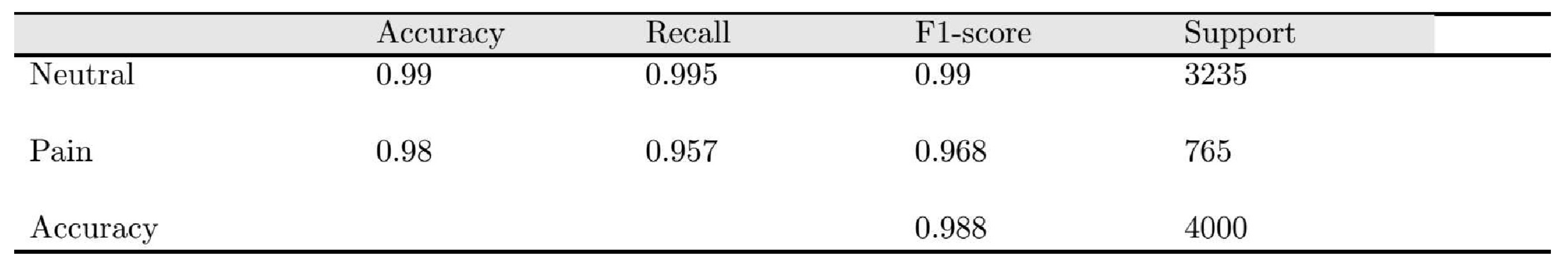

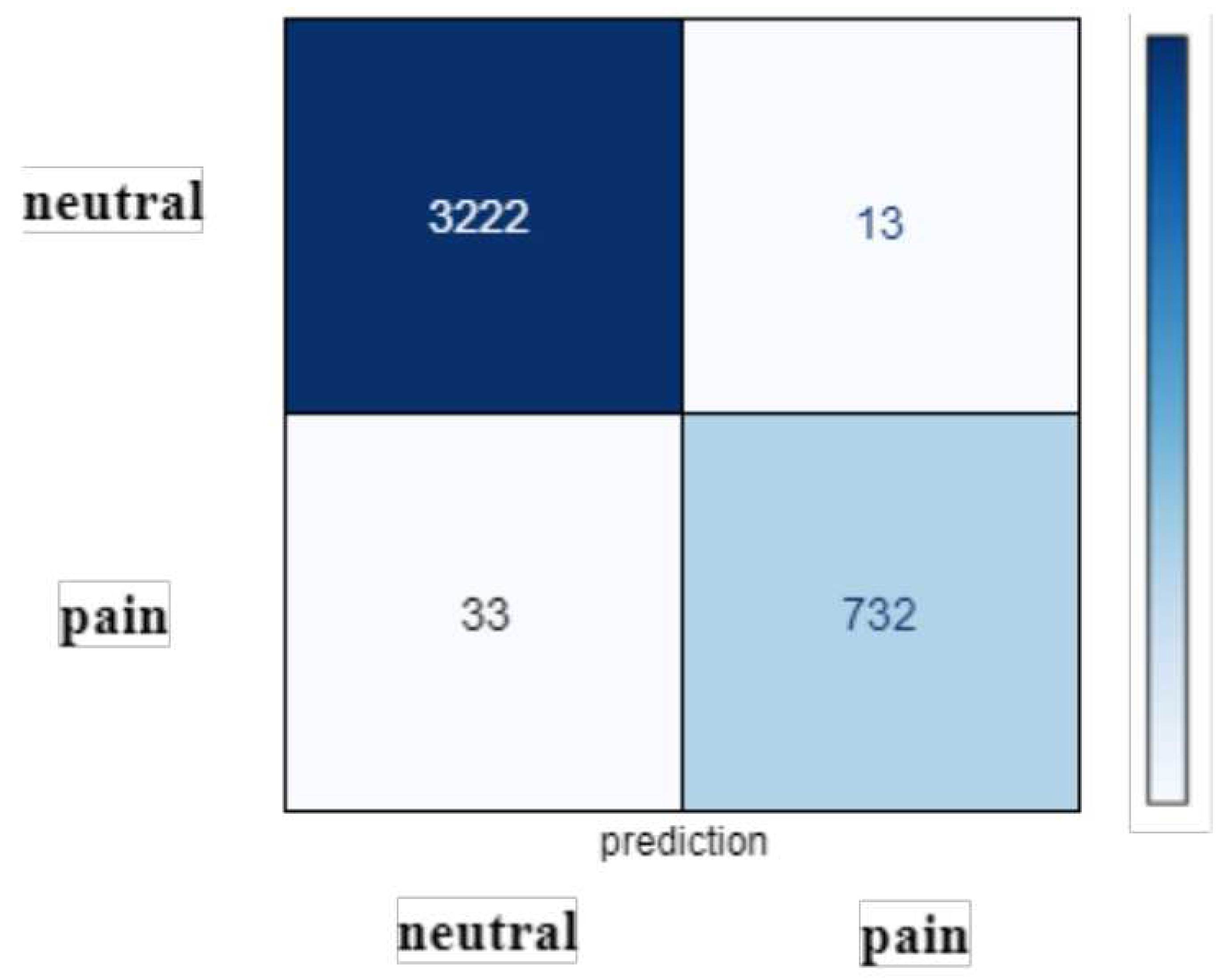

6. Results Analysis

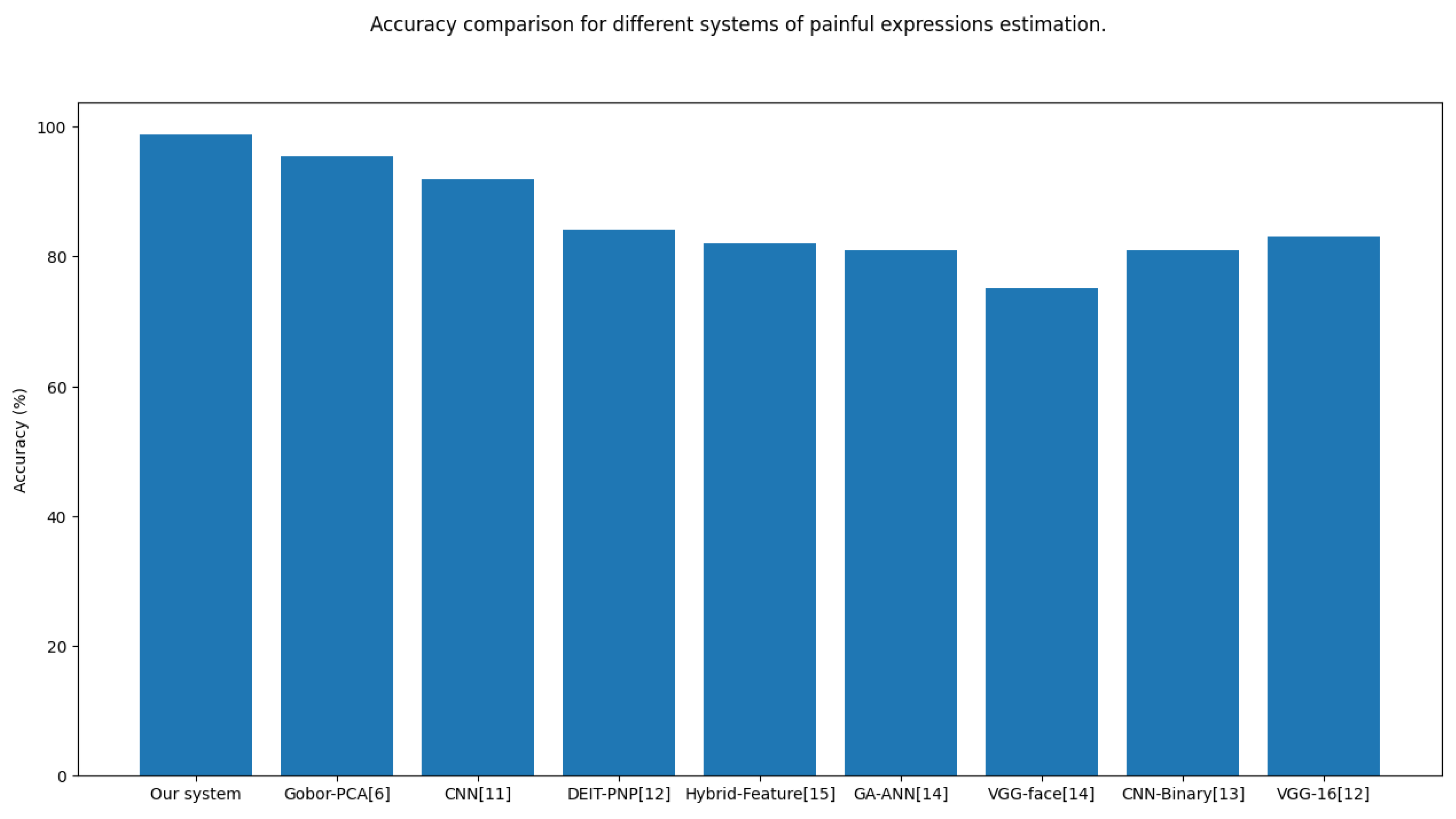

| Work/Year | Methods | Dataset | Classifier | Best Result |
|---|---|---|---|---|
| Rodriguez et al. [12] | Features: VGG-16 | UNBC-McMaster Shoulder Pain | LSTM | MSE 0.74 AUC Accuracy |
| Tavakolian et al. [13] | Features: CNN | UNBC-McMaster Shoulder Pain | Deep Binary | PCC 81% |
| Bargshady et al. [14] | Features: VGG-face | UNBC-McMaster Shoulder Pain | RNN | MSE |
| Wang et al. [39] | Features: GA-ANN | UNBC-McMaster Shoulder Pain | ANN | MSE |
| Huang et al. [15] | Features: spatiotemporal and geometric | UNBC-McMaster Shoulder Pain | Hybrid network | MAE MSE |
| Fat et al. [43] | Features: Gabor-Adaboost | UNBC-McMaster Shoulder Pain | KNN | |
| El-Morabit et al. [2] | Features: DEIT-PNP | UNBC-McMaster Shoulder Pain | SVR | Accuracy |
| Semwal et al. [11] | Features: Deep-CNN | UNBC-McMaster Shoulder Pain | SVM | |
| Ghosh et al. [6] | Features: Gabor-PCA | UNBC-McMaster Shoulder Pain | SVM | |
| Our system | Features: SVM | UNBC-McMaster Shoulder Pain | SVC | MAE , and |
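The error measures named in the table (MAE, MSE and PCC) follow their usual definitions and can be computed as in the sketch below. This is an illustration only, with a hypothetical function name and made-up labels; it does not reproduce any result reported in the table.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """Return MAE, MSE and Pearson correlation (PCC) for two 1-D sequences."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true
    return {
        "MAE": float(np.mean(np.abs(err))),             # mean absolute error
        "MSE": float(np.mean(err ** 2)),                # mean squared error
        "PCC": float(np.corrcoef(y_true, y_pred)[0, 1]) # Pearson correlation
    }
```

Lower MAE and MSE indicate predictions closer to the ground-truth pain intensity, while a PCC near 1 indicates that predictions track the ground truth linearly.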

6.1. Defining the Time Cost

6.2. Comparison with Existing Methods

6.3. Discussions

6.4. Extensive Experimental Comparison

7. Conclusion and Future Work

Conflicts of Interest

References

- Wang, L., Guo, Y., Dalip, B., Xiao, Y., Urman, R.D. and Lin, Y., 2022. An experimental study of objective pain measurement using pupillary response based on genetic algorithm and artificial neural network. Applied Intelligence, 52(2), pp.1145-1156.

- El Morabit, S., Rivenq, A., Zighem, M.E.N., Hadid, A., Ouahabi, A. and Taleb-Ahmed, A., 2021. Automatic pain estimation from facial expressions: a comparative analysis using off-the-shelf CNN architectures. Electronics, 10(16), p.1926.

- Bendjillali, R.I.; Beladgham, M.; Merit, K.; Taleb-Ahmed, A. Improved facial expression recognition based on DWT feature for deep CNN. Electronics 2019, 8, 324.

- Amrane, A., Mellah, H., Aliradi, R., Amghar, Y. (2014). Semantic indexing of multimedia content using textual and visual information. International Journal of Advanced Media and Communication, 5(2-3), 182-194.

- Prkachin, K.M., Lucey, P. and Cohn, J.F., 2011. Painful data: The UNBC-McMaster Shoulder Pain Expression Archive Database.

- Solomon, P.E. and Prkachin, K.M., 2009. The structure, reliability and validity of pain expression: Evidence from patients with shoulder pain.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Ashraf, A.B., Lucey, S., Cohn, J.F., Chen, T., Ambadar, Z., Prkachin, K., Solomon, P. and Theobald, B.J., 2007, November. The painful face: Pain expression recognition using active appearance models. In Proceedings of the 9th international conference on Multimodal interfaces (pp. 9-14).

- Lucey, P., Cohn, J., Lucey, S., Matthews, I., Sridharan, S. and Prkachin, K.M., 2009, September. Automatically detecting pain using facial actions. In 2009 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops (pp. 1-8). IEEE.

- Kaltwang, S., Rudovic, O. and Pantic, M., 2012. Continuous pain intensity estimation from facial expressions. In Advances in Visual Computing: 8th International Symposium, ISVC 2012, Rethymnon, Crete, Greece, July 16-18, 2012, Revised Selected Papers, Part II 8 (pp. 368-377). Springer Berlin Heidelberg.

- Hammal, Z. and Cohn, J.F., 2012, October. Automatic detection of pain intensity. In Proceedings of the 14th ACM international conference on Multimodal interaction (pp. 47-52).

- Roy, S.D., Bhowmik, M.K., Saha, P. and Ghosh, A.K., 2016. An approach for automatic pain detection through facial expression. Procedia Computer Science, 84, pp.99-106.

- Semwal, A. and Londhe, N.D., 2021. Computer aided pain detection and intensity estimation using compact CNN based fusion network. Applied Soft Computing, 112, p.107780.

- Elgendy, F., Alshewimy, M. and Sarhan, A.M., 2021. Pain detection/classification framework including face recognition based on the analysis of facial expressions for E-health systems. Int. Arab J. Inf. Technol., 18(1), pp.125-132.

- Chen, J., Chi, Z. and Fu, H., 2017. A new framework with multiple tasks for detecting and locating pain events in video. Computer Vision and Image Understanding, 155, pp.113-123.

- Karamitsos, I., Seladji, I. and Modak, S., 2021. A modified CNN network for automatic pain identification using facial expressions. Journal of Software Engineering and Applications, 14(8), pp.400-417.

- Virrey, R.A., Liyanage, C.D.S., Petra, M.I.B.P.H. and Abas, P.E., 2019. Visual data of facial expressions for automatic pain detection. Journal of Visual Communication and Image Representation, 61, pp.209-217.

- Alghamdi, T. and Alaghband, G., 2022. Facial expressions based automatic pain assessment system. Applied Sciences, 12(13), p.6423.

- Lucey, P., Cohn J.F., Prkachin K.M., Solomon P.E., Matthews I. Painful data: The UNBC-McMaster shoulder pain expression archive database. Face and Gesture 2011 (2011), pp. 57-64.

- H. Jung, S. Lee, J. Yim, S. Park, J. Kim, Joint fine-tuning in deep neural networks for facial expression recognition, in: Proc. IEEE Int. Conf. Comput. Vis., 2015, pp. 2983–2991.

- Aliradi, R., Belkhir, A., Ouamane, A. and Elmaghraby, A.S., 2018. DIEDA: discriminative information based on exponential discriminant analysis combined with local features representation for face and kinship verification. Multimedia Tools and Applications, pp.1-18.

- Aliradi, R., Belkhir, A., Ouamane, A., Aliane, H., Sellam, A., Amrane, A. and Elmaghraby, A.S., 2018, April. Face and kinship image based on combination descriptors-DIEDA for large scale features. In 2018 21st Saudi Computer Society National Computer Conference (NCC) (pp. 1-6). IEEE.

- Othman, E.; Werner, P.; Saxen, F.; Al-Hamadi, A.; Gruss, S.; Walter, S. Automatic Vs. Human Recognition of Pain Intensity from Facial Expression on the X-ITE Pain Database. Sensors 2021, 21, 3273.

- Zhou, J.; Hong, X.; Su, F.; Zhao, G. Recurrent Convolutional Neural Network Regression for Continuous Pain Intensity Estimation in Video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Las Vegas, NV, USA, 27–30 June 2016; pp. 84–92.

- H. Pedersen, Learning appearance features for pain detection using the UNBC-McMaster shoulder pain expression archive database, in: Int. Conf. Comput. Vis. Syst., 2015, pp. 128–136.

- Ferroukhi, M., Ouahabi, A., Attari, M., Habchi, Y. and Taleb-Ahmed, A., 2019. Medical video coding based on 2nd-generation wavelets: Performance evaluation. Electronics, 8(1), p.88.

- Ghosh, A., Umer, S., Khan, M.K., Rout, R.K. and Dhara, B.C., 2023. Smart sentiment analysis system for pain detection using cutting edge techniques in a smart healthcare framework. Cluster Computing, 26(1), pp.119-135.

- Hamouchene, I. and Aouat, S., 2016. Efficient approach for iris recognition. Signal, Image and Video Processing, 10(7), pp.1361-1367.

- Zhang, G., and Wang, Y. (2011). Robust 3D face recognition based on resolution invariant features. Pattern Recognition Letters, 32(7), 1009-1019.

- Yusoff, Nooraini, and Loai Alamro. "Implementation of feature extraction algorithms for image tampering detection." International Journal of Advanced Computer Research 9.43 (2019): 197-211.

- Hoemmen, Mark. Communication-avoiding Krylov subspace methods. University of California, Berkeley, 2010.

- Wu Yi, Xiaoyan Jiang, Zhijun Fang, Yongbin Gao, and Hamido Fujita. "Multi-modal 3D object detection by 2D-guided precision anchor proposal and multi-layer fusion." Applied Soft Computing 108 (2021): 107405.

- Dutta, K., Bhattacharjee, D., Nasipuri, M., and Krejcar, O. (2021). Complement component face space for 3D face recognition from range images. Applied Intelligence, 51(4), 2500-2517.

- Liu, Tingting, et al. "Flexible FTIR spectral imaging enhancement for industrial robot infrared vision sensing." IEEE Transactions on Industrial Informatics 16.1 (2019): 544-554.

- Wang, Ben, Shuhan Chen, Jian Wang, and Xuelong Hu. "Residual feature pyramid networks for salient object detection." The visual computer 36, no. 9 (2020): 1897-1908.

- Hai Liu, Hanwen Nie, Zhaoli Zhang, and You-Fi Li. "Anisotropic angle distribution learning for head pose estimation and attention understanding in human-computer interaction." Neurocomputing, Volume 433, April 2021: 310-322.

- Almuashi, Mohammed, et al. "Siamese convolutional neural network and fusion of the best overlapping blocks for kinship verification." Multimedia Tools and Applications (2022): 1-32.

- Y. Luo, D. Tao, K. Ramamohanarao, C. Xu, Tensor canonical correlation analysis for multi-view dimension reduction, IEEE Trans. Knowl. Data Eng., 27 (11) (2015), pp. 3111-3124.

- Wang, L., Guo, Y., Dalip, B., Xiao, Y., Urman, R.D. and Lin, Y., 2022. An experimental study of objective pain measurement using pupillary response based on genetic algorithm and artificial neural network. Applied Intelligence, 52(2), pp.1145-1156.

- Zhang, J., Gao, K., Fu, K. and Cheng, P., 2020. Deep 3D Facial Landmark Localization on position maps. Neurocomputing, 406, pp.89-98.

- Laiadi, O., Ouamane, A., Benakcha, A., Taleb-Ahmed, A., and Hadid, A. (2020). Tensor cross-view quadratic discriminant analysis for kinship verification in the wild. Neurocomputing, 377, 286-300.

- Guo, Y., Huang, J., Xiong, M., Wang, Z., Hu, X., Wang, J. and Hijji, M., 2022. Facial expressions recognition with multi-region divided attention networks for smart education cloud applications. Neurocomputing, 493, pp.119-128.

- Othman, E., Werner, P., Saxen, F., Al-Hamadi, A., Gruss, S. and Walter, S., 2021. Automatic vs. human recognition of pain intensity from facial expression on the X-ITE pain database. Sensors, 21(9), p.3273.

- Aliradi, R. and Ouamane, A.A., 2023. BSIF features learning using TXQEDA tensor subspace for kinship verification. In The First International Conference on Advances in Electrical and Computer Engineering (ICAECE’2023), Tebessa, Algeria, 15-16 May 2023.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).