Submitted:

21 May 2024

Posted:

22 May 2024

You are already at the latest version

Abstract

Keywords:

1. Introduction

- information system dependence.

- medical device connections.

- multiple software usage.

- multi-user shared devices.

- Suggestion of the electronically stored and need to be protected minimum and maximum data sets.

- Access mechanisms to these data sets

- Authorization mechanisms of healthcare staff

- Education/Awareness/informing mechanisms of healthcare staff about patient privacy

2. Materials and Methods

2.1. Ethical Considerations

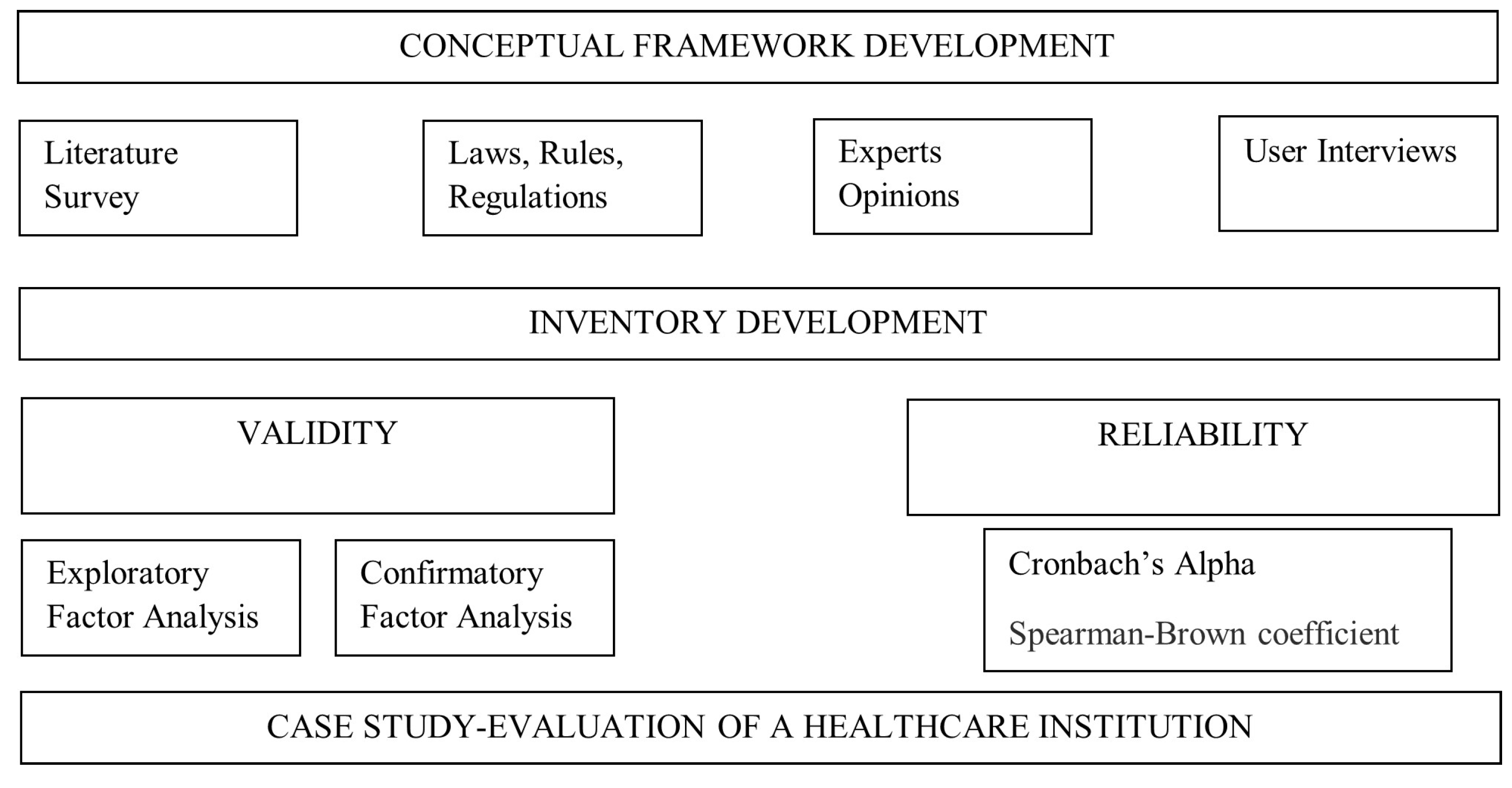

2.2. Study Design

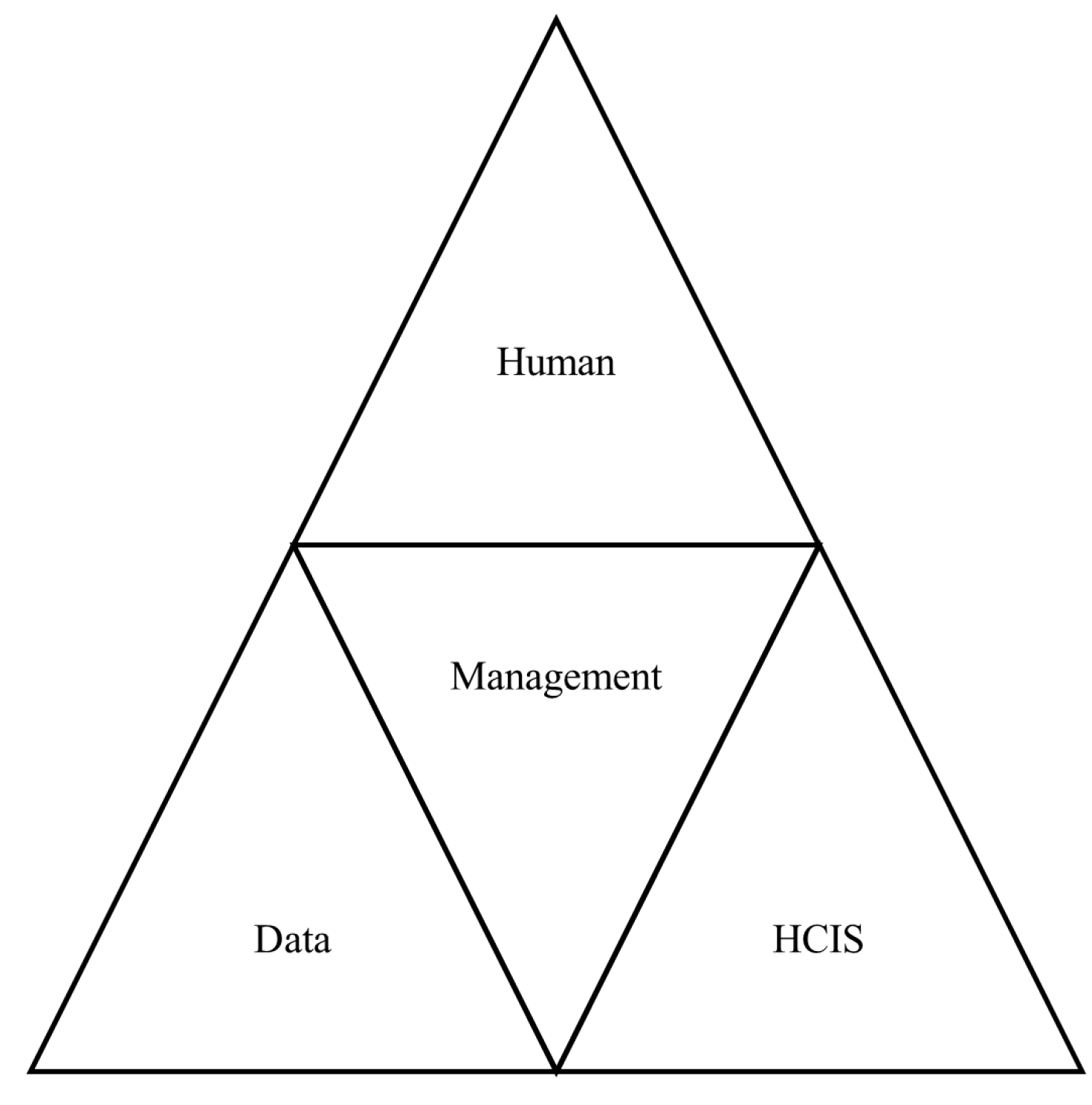

2.3. Conceptual Framework

- Management

- Users

- Patient

- Data

- Healthcare Information System (HCIS)

- Names.

- All elements of dates (except year).

- Phone numbers.

- Fax numbers.

- E-mail addresses.

- Social security numbers.

- Medical record numbers.

- Health plan numbers.

- Account numbers.

- Certificate/license numbers.

- All means of vehicle numbers.

- All means of device identifiers.

- Web Universal Resource Locators (URLs).

- Internet Protocol (IP) addresses.

- All means of biometric identifiers.

- Any comparable images.

- Any other unique identifying numbers.

| Data | Hierarchy level |

|---|---|

| Physician | 7 |

| Nurse | 5 |

| Technician | 2 |

| Lab technician | 2 |

| Office Worker | 1 |

2.4. Data Collection

2.5. Statistical Analysis

2.5.1. Content Validity

2.5.2. Structure Validity

2.5.3. Reliability

2.5.4. Evaluation of the Hospital

- wi is the answer given by i-th participant

- ∑Wi is the sum of the answers given to ith inventory item

- wi / ∑Wi is the weight of the i-th participant.

- Xi is the corresponding fuzzy set of the i-th respondents (if the answer is “Moderately disagree” then Xi is (0,0.25,0.5))

- Fj is the jth inventory item.

- n is the total number of answers.

- Ri (yj, A) is the fuzzy set determined by 2 (formula 2)

- F (xj, l) is the standard fuzzy sets defined (Table 1)

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Sun, W.; Cai, Z. Li; Y., Liu F.; Fang, S.; Wang, G. Security and privacy in the medical internet of things: A review. Security and Communication Networks 2018, vol 2018,1-9. [CrossRef]

- Tertulino, R.; Antunes, N.; Morais, H. Privacy in electronic health records: A systematic mapping study. Journal of Public Health 2024, 32, 435–454. [Google Scholar] [CrossRef]

- Yayla, A.; İlgin, V.E.; Özlü, Z.K. Development of the Perioperative Privacy Scale: A Validity and Reliability Study. Journal of PeriAnesthesia Nursing 2022, 37, 227–233. [Google Scholar] [CrossRef] [PubMed]

- Goodman, K.W. Confidentiality and Privacy. In Hester Micah D., & Schonfeld T.L.(Eds), Guidance for Healthcare Ethics Committees. Cambridge University Press: Cambridge, London. 2022, p. 85-94.

- Hurst, W.; Boddy, A.; Merabti, M.; Shone, N. Patient privacy violation detection in healthcare critical infrastructures: An investigation using density-based benchmarking. Future Internet 2020, 12, 100. [Google Scholar] [CrossRef]

- Wallace, I.M. Is Patient Confidentiality Compromised With the Electronic Health Record?: A Position Paper. Computers, Informatics, Nursing 2016, 33, 58–62. [Google Scholar] [CrossRef] [PubMed]

- Jimmy, F.N. U. Cyber security Vulnerabilities and Remediation Through Cloud Security Tools. Journal of Artificial Intelligence General science (JAIGS) 2024, 2, 129–171. [Google Scholar]

- Ak, B.; Tanrıkulu, F.; Gündoğdu, H.; Yılmaz, D.; Öner, Ö.; Ziyai, N.Y.; Dikmen, Y. Cultural viewpoints of nursing students on patient privacy: A qualitative study. Journal of religion and health 2021, 60, 188–201. [Google Scholar] [CrossRef] [PubMed]

- Akar, Y.; Özyurt, E.; Erduran, S.; Uğurlu, D.; Aydın, İ. Hasta mahremiyetinin değerlendirilmesi [Evaluation of patient confidentiality]. Sağlık Akademisyenleri Dergisi [health Care Academician Journal] 2021, 6, 18–24. [Google Scholar]

- Hartigan, L.; Cussen, L.; Meaney, S.; O’Donoghue, K. Patients’ perception of privacy and confidentiality in the emergency department of a busy obstetric unit. BMC Health Services Research 2018, 18, 1–6. [Google Scholar] [CrossRef] [PubMed]

- Aslan, A.; Greve, M; Diesterhöft T.; Kolbe, L. Can Our Health Data Stay Private? A Review and Future Directions for IS Research on Privacy-Preserving AI in Proceedings of Healthcare Wirtschaftsinformatik 2022, 8. Retrieved from https://aisel.aisnet.org/wi2022/digital_health/digital_health/8.

- Kaur, R.; Shahrestani, S.; Ruan, C. Security and Privacy of Wearable Wireless Sensors in Healthcare: A Systematic Review. Computer Networks and Communications 2024, 24–48. [Google Scholar] [CrossRef]

- Zhang, C.; Shahriar, H.; Riad, A.K. Security and Privacy Analysis of Wearable Health Device. In Proceedings of the IEEE 44th Annual Computers, Software, and Applications Conference (COMPSAC), Madrid, Spain (pp. 1767-1772). IEEE. 13-17 July 2020.

- Haris, M.; Haddadi, H.; Hui, P. Privacy leakage in mobile computing: Tools, methods, and characteristics. Available online: http://arxiv.org/abs/1410.4978. 2020.

- Shen, N.; Sequeira, L.; Silver, M.P.; Carter-Langford, A; Strauss, J.; Wiljer, D. Patient privacy perspectives on health information exchange in a mental health context: Qualitative study. JMIR mental health 2019, 6, 1–17. [Google Scholar] [CrossRef]

- Murdoch, B. Privacy and artificial intelligence: Challenges for protecting health information in a new era. BMC Medical Ethics 2021, 22, 1–5. [Google Scholar] [CrossRef] [PubMed]

- George, A.S.; Baskar, T.; Srikaanth, P.B. Cyber Threats to Critical Infrastructure: Assessing Vulnerabilities Across Key Sectors. Partners Universal International Innovation Journal 2024, 2, 51–75. [Google Scholar]

- Goldberg, S.G.; Johnson, G.A.; Shriver, S.K. Regulating privacy online: An economic evaluation of the GDPR. American Economic Journal: Economic Policy 2024, 16, 325–358. [Google Scholar] [CrossRef]

- Öztürk, H.; Torun Kılıç, Ç.; Kahriman, İ.; Meral, B.; &, *!!! REPLACE !!!*; Çolak, B.; Çolak, B. Assessment of nurses’ respect for patient privacy by patients and nurses: A comparative study. Journal of Clinical Nursing 2021, 30, 1079–1090. [Google Scholar] [CrossRef] [PubMed]

- Wu, H.; Dwivedi, A.D.; Srivastava, G. Security and privacy of patient information in medical systems based on blockchain technology. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM) 2021, 17, 1–17. [Google Scholar] [CrossRef]

- Miao, J.; Wang, Z.; Wu, Z.; Ning, X.; Tiwari, P. A blockchain-enabled privacy-preserving authentication management protocol for Internet of Medical Things. Expert Systems with Applications 2024, 237, 121329. [Google Scholar] [CrossRef]

- Raj, A.; Prakash, S. Privacy preservation of the internet of medical things using blockchain. Health Services and Outcomes Research Methodology 2024, 24, 112–139. [Google Scholar] [CrossRef]

- Li, F. Research on the Legal Protection of User Data Privacy in the Era of Artificial Intelligence. Science of Law Journal 2024, 3, 35–40. [Google Scholar]

- Chavali, D.; Baburajan, B.; Gurusamy, A.; Dhiman, V.K.; Katari, S.C. Regulating Artificial Intelligence: Developments And Challenges. Int. J. of Pharm. Sci 2024, 2, 1250–1261. [Google Scholar]

- Rychik, J. You can only change what you measure: An argument for more detailed characterization in the Fontan circulation. European Heart Journal 2022, 1–3. [Google Scholar] [CrossRef]

- Sebetci, Ö. Enhancing end-user satisfaction through technology compatibility: An assessment on health information system. Health Policy and Technology 2018, 7, 265–274. [Google Scholar] [CrossRef]

- Bastug, M. The structural relationship of reading attitude, reading comprehension and academic achievement. International Journal of Social Sciences and Education 2014, 4, 931–946. [Google Scholar]

- Ilhan, M.; Cetin, B. Comparing the analysis results of the structural equation models (SEM) conducted using LISREL and AMOS. Journal of Measurement and Evaluation In Education And Psychology-Epod 2014, 5, 26–42. [Google Scholar]

- Sofian, S.S.; Rambely, A.S. Measuring perceptions of students toward game and recreational activity using fuzzy conjoint analysis. Indonesian J. Electr. Eng. Comput. Sci 2020, 20, 395–404. [Google Scholar] [CrossRef]

- Turksen, I.B.; Willson, I.A. A fuzzy set preference model for consumer choice. Fuzzy Sets and Systems 1994, 68, 253–266. [Google Scholar] [CrossRef]

- Leech, N.; Barrett, K.; Morgan, G.A. SPSS for intermediate statistics: Use and interpretation. Lawrence Erlbaum Associates Inc: New Jersey, U.S.A. 2013; pp.154-158.

- Huang, M; Liu, A. Green data gathering under delay differentiated services constraint for internet of things. Wireless Communications and Mobile Computing 2018, vol. 2018, 1–23. [CrossRef]

| Data | Hierarchy level |

|---|---|

| Name | 7 |

| Phone Number | 6 |

| Certificate number | 4 |

| Plate Number | 4 |

| Social Security Number | 7 |

| Perfect fit | Acceptable fit | |

|---|---|---|

| AGFI | 0.90 ≤ AGFI ≤ 1.00 | 0.85 ≤ AGFI ≤ 0.90 |

| GFI | 0.95 ≤ GFI ≤ 1.00 | 0.90 ≤ GFI ≤ 0.95 |

| CFI | 0.95 ≤ CFI ≤ 1.00 | 0.90 ≤ CFI ≤ 0.95 |

| NFI | 0.95 ≤ NFI ≤ 1.00 | 0.90 ≤ NFI ≤ 0.95 |

| RMSEA | 0.00 ≤ RMSEA ≤ 0.05 | 0.05 ≤ RMSEA ≤ 0.08 |

| χ2/df | 2 ≤ χ2/df ≤ 3 | 3 ≤ χ2/df ≤ 5 |

| Data | Hierarchy level |

|---|---|

| Strongly Agree | 0.75, 1, 1 |

| Moderately Agree | 0.5, 0.75, 1 |

| Not Sure | 0.25 ,0.5, 0.75 |

| Moderately Disagree | 0, 0.25, 0.5 |

| Strongly Disagree | 0, 0 ,0.25 |

| Dimension | Cronbach’s Alpha | Spearman-Brown | Guttman's |

|---|---|---|---|

| Management | 0.929 | 0.870 | 0.930 |

| User | 0.834 | 0.830 | 0.834 |

| Patient | 0.853 | 0.768 | 0.856 |

| Data | 0.930 | 0.906 | 0.930 |

| Information system | 0.925 | 0.903 | 0.926 |

| Perfect fit | Acceptable fit | Study Value | |

|---|---|---|---|

| AGFI | 0.90 ≤ AGFI ≤ 1.00 | 0.85 ≤ AGFI ≤ 0.90 | 0.876 |

| GFI | 0.95 ≤ GFI ≤ 1.00 | 0.90 ≤ GFI ≤ 0.95 | 0.954 |

| CFI | 0.95 ≤ CFI ≤ 1.00 | 0.90 ≤ CFI ≤ 0.95 | 0.969 |

| NFI | 0.95 ≤ NFI ≤ 1.00 | 0.90 ≤ NFI ≤ 0.95 | 0.963 |

| RMSEA | 0.00 ≤ RMSEA ≤ 0.05 | 0.05 ≤ RMSEA ≤ 0.08 | 0.497 |

| χ2/df | 2 ≤ χ2/df ≤ 3 | 3 ≤ χ2/df ≤ 5 | 2.87 |

| Variable | Similarity | Strongly Disagree |

Moderately Disagree | Not Sure | Moderately Agree | Strongly Agree |

|---|---|---|---|---|---|---|

| M1 | Moderately Disagree | 0.718218 | 0.784835 | 0.519829 | 0.426298 | 0.384119 |

| M2 | Moderately Disagree | 0.699176 | 0.80075 | 0.532724 | 0.43434 | 0.390028 |

| M3 | Moderately Disagree | 0.650733 | 0.843053 | 0.564349 | 0.449726 | 0.398236 |

| M4 | Moderately Disagree | 0.709998 | 0.788945 | 0.52799 | 0.430863 | 0.386971 |

| M5 | Moderately Disagree | 0.684141 | 0.818907 | 0.540279 | 0.437499 | 0.39033 |

| M6 | Moderately Disagree | 0.628925 | 0.828878 | 0.587218 | 0.461348 | 0.403716 |

| M7 | Moderately Disagree | 0.668136 | 0.844412 | 0.546092 | 0.439264 | 0.390171 |

| M8 | Moderately Disagree | 0.66005 | 0.842132 | 0.556088 | 0.444531 | 0.393638 |

| M9 | Moderately Disagree | 0.668635 | 0.827864 | 0.552673 | 0.443886 | 0.393705 |

| U1 | Moderately Disagree | 0.733799 | 0.766653 | 0.514777 | 0.424011 | 0.382616 |

| U2 | Moderately Disagree | 0.650898 | 0.837291 | 0.565584 | 0.451363 | 0.39905 |

| U3 | Moderately Disagree | 0.642923 | 0.8332 | 0.572724 | 0.456872 | 0.403466 |

| U4 | Moderately Disagree | 0.668369 | 0.834325 | 0.550549 | 0.442125 | 0.393091 |

| P1 | Moderately Disagree | 0.663697 | 0.827674 | 0.557226 | 0.446755 | 0.396578 |

| P2 | Moderately Disagree | 0.691294 | 0.807406 | 0.538052 | 0.436991 | 0.391008 |

| P3 | Moderately Disagree | 0.642528 | 0.843339 | 0.571963 | 0.452661 | 0.398293 |

| P4 | Moderately Disagree | 0.624436 | 0.823395 | 0.590312 | 0.466845 | 0.409217 |

| P5 | Moderately Disagree | 0.698044 | 0.801263 | 0.533885 | 0.434788 | 0.388898 |

| D1 | Moderately Disagree | 0.6586 | 0.840305 | 0.558345 | 0.445075 | 0.393691 |

| D2 | Moderately Disagree | 0.62853 | 0.838854 | 0.585223 | 0.459092 | 0.402227 |

| D3 | Moderately Disagree | 0.647867 | 0.861636 | 0.561251 | 0.446213 | 0.393621 |

| D4 | Moderately Disagree | 0.630309 | 0.831065 | 0.585698 | 0.45986 | 0.403015 |

| D5 | Moderately Disagree | 0.650272 | 0.857155 | 0.560497 | 0.445853 | 0.393649 |

| D6 | Moderately Disagree | 0.648802 | 0.847849 | 0.564973 | 0.448839 | 0.396096 |

| HCIS1 | Moderately Disagree | 0.627952 | 0.860448 | 0.579846 | 0.455302 | 0.39888 |

| HCIS2 | Moderately Disagree | 0.671541 | 0.832493 | 0.547898 | 0.440915 | 0.39221 |

| HCIS3 | Moderately Disagree | 0.663280 | 0.839029 | 0.553546 | 0.444322 | 0.394377 |

| HCIS4 | Moderately Disagree | 0.624389 | 0.836324 | 0.589115 | 0.461753 | 0.404283 |

| HCIS5 | Moderately Disagree | 0.637784 | 0.851736 | 0.573845 | 0.452763 | 0.397918 |

| HCIS6 | Moderately Disagree | 0.654471 | 0.847441 | 0.559831 | 0.445748 | 0.394069 |

| Dimension | Similarity | Strongly Disagree |

Moderately Disagree | Not Sure | Moderately Agree | Strongly Agree |

|---|---|---|---|---|---|---|

| Management | Moderately Disagree | 0.676446 | 0.819975 | 0.547471 | 0.440862 | 0.392324 |

| User | Moderately Disagree | 0.673997 | 0.817867 | 0.550909 | 0.443593 | 0.394556 |

| Patient | Moderately Disagree | 0.664 | 0.820615 | 0.558287 | 0.447608 | 0.396799 |

| Data | Moderately Disagree | 0.644063 | 0.846144 | 0.569331 | 0.450822 | 0.39705 |

| Information System | Moderately Disagree | 0.64657 | 0.844579 | 0.567347 | 0.450134 | 0.396956 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).