1. Introduction

Tremor, a prevalent neurological disorder, is characterized by involuntary oscillations of various body parts and affects millions of individuals worldwide [1]. Tremors can be broadly categorized into two primary types according to when they occur: resting tremors and action tremors [2]. Resting tremors, commonly seen in Parkinson’s and Wilson’s diseases, occur when the affected body parts are at rest. Parkinson's disease, for instance, is marked by resting tremors that affect not only the limbs but also the head, hindering fine motor skills and coordinated movements such as stable walking or precise actions [2,3]. Wilson's disease often leads to uncontrollable ‘wing-beating’ resting tremors [4]. In contrast, action tremors occur primarily during voluntary movements or while maintaining certain postures; Essential Tremor (ET) is a prime example. ET mainly causes rhythmic oscillation of the upper limbs and hands, typically at 4 to 12 Hz, and may progress to involve the voice, head, or other body parts [2,5,6]. Regardless of type, tremors significantly disrupt patients' daily lives. Involuntary shaking can hinder walking or manipulating objects, and hand tremors can interfere with using everyday items such as remote controls or switches. These physical limitations often reduce quality of life and increase dependence on others, driving a growing demand for innovative solutions that help these individuals maintain independence and improve their quality of life.

Although various medical treatments, such as medication, deep brain stimulation (DBS), and physical therapy, have been proposed to alleviate tremor symptoms, each comes with its own challenges. Medications may cause side effects such as dizziness, fatigue, and nausea [7,8]. DBS, while effective, carries surgical risks and can affect speech and balance [9]. Physical therapy, although beneficial, may not fully counteract tremor symptoms. Given these challenges, assistive technologies serve not only as a vital complement to traditional treatments but also as a crucial means of helping patients lead more autonomous and fulfilling lives.

Augmented Reality (AR) fuses the real and virtual worlds, offering a visualization in which physical and digital entities coexist [10]. With features such as immersive experience, real-time interaction, and high adaptability, AR holds great potential for assistive technology, particularly for individuals with movement disorders. Recent research highlights AR's role in supporting rehabilitation for movement disorders. Wang et al. [11,12] demonstrated AR's application in helping individuals with tremor type, showing its viability for improving human-computer interaction. Compared with conventional tremor-assistance methods such as mechanical aids and functional electrical stimulation, which may cause physical strain and muscle fatigue, AR offers patients a more natural mode of interaction.

In this research, we introduce a spatial augmented reality (SAR) system based on eye-tracking technology. The system is designed to enable patients with tremor to control a virtual hand through their eye movements, allowing them to interact with remote objects and household appliances and thereby increasing their independence in daily life. Our approach builds on the observation that tremors do not necessarily affect the head. Even in patients with Parkinson's disease who exhibit head tremors, the extent of these tremors falls within a predictable range and can be compensated for with straightforward virtual stabilization techniques. Our methodology is captured by the principle ‘interact where you look’, which provides efficient, simplified control for individuals with tremor and improves their ability to interact with their environment. The system also has the potential to assist individuals with other movement disorders, such as amyotrophic lateral sclerosis (ALS), or with hand impairments such as claw hand, broadening its scope of application.

2. Related Studies

The application of eye tracking in human-computer interaction has centered predominantly on integrating eye movements into multimedia communication, with a focus on creating a seamless and intuitive user experience [13]. Eye movements are increasingly recognized as a crucial real-time input medium, especially for individuals with movement disorders such as ALS [14]. As eye-tracking technology has advanced, the range of gaze-based interaction methods has expanded, leading to an array of innovative applications [15]. Nehete et al. [16] developed an eye-tracking mouse that lets users interact with computers through eye or nose gestures. Similarly, Missimer et al. [17] developed a system that uses head position to control the mouse cursor, with eye blinks simulating left or right mouse clicks. The cheek switch famously used by Stephen Hawking detected facial muscle twitches with an infrared sensor to select letters when typing [18]; it has proven to be a vital communication alternative for individuals with tetraplegia or complete paralysis. Despite their significant utility for controlling computers, however, eye-movement-based interfaces have limitations when it comes to manipulating physical objects in the real world.

For individuals with tremor, motor stability during object manipulation is often severely compromised by intrinsic hand tremors. Addressing this challenge, Plaumann et al. [19] applied filtering techniques to reduce or eliminate the effect of tremors when patients use smartphones. On the hardware side, they created a motion sensor that significantly improves the precision of tremor sufferers on smartphone interfaces; on the software side, they used data from smartphones' built-in motion sensors to improve input accuracy and shorten operation time for these patients. Research on hand interaction by Wacharamanotham et al. [20] showed that, in the presence of hand tremors, patients interact more effectively with touch screens using swipe gestures than by tapping. However, current research still focuses predominantly on smartphone interaction, with relatively limited exploration of other electronic devices.

In applying AR technology to the rehabilitation of patients with tremor, Wang et al. developed a rehabilitation training system for patients with Parkinson’s tremor that uses augmented reality to provide professional rehabilitation guidance at home [21]. Empirical studies confirmed the method’s effectiveness and its potential to lower training costs. In addition, Ueda et al. introduced the ‘Extend Hand’ system, which lets users control a virtual hand via a touch panel to interact with distant objects. This approach holds significant potential for individuals with mobility impairments whose walking difficulties make it hard to reach and manipulate items around them. However, this hand-based interaction is not universally applicable. For patients with severe tremors, such as ‘wing-beating’ tremor, or with Parkinson’s-related slowed movement, hand-controlled methods may be impractical. Prolonged tablet use by patients with tremor and limited mobility also poses operational challenges and a risk of fatigue. Future research should therefore identify more natural and accessible methods for remote interaction.

3. Pilot System

To transcend the physical limitations of individuals with tremor and to expand their range of activity, we have introduced a pilot AR system specifically designed to assist individuals with tremor in interacting with objects and devices in their immediate environment. Recognizing that head tremors, irrespective of tremor type, typically remain within a relatively manageable instability range, we have developed an eye-controlled projection AR system. This system harnesses eye movement tracking technology to pinpoint the user’s gaze target, integrating it with IoT technology tailored for physical objects. Consequently, users can seamlessly manipulate objects in the real world through simple eye movements, circumventing the physical strain and burden associated with conventional tremor assistance technologies.

3.1. System Configuration

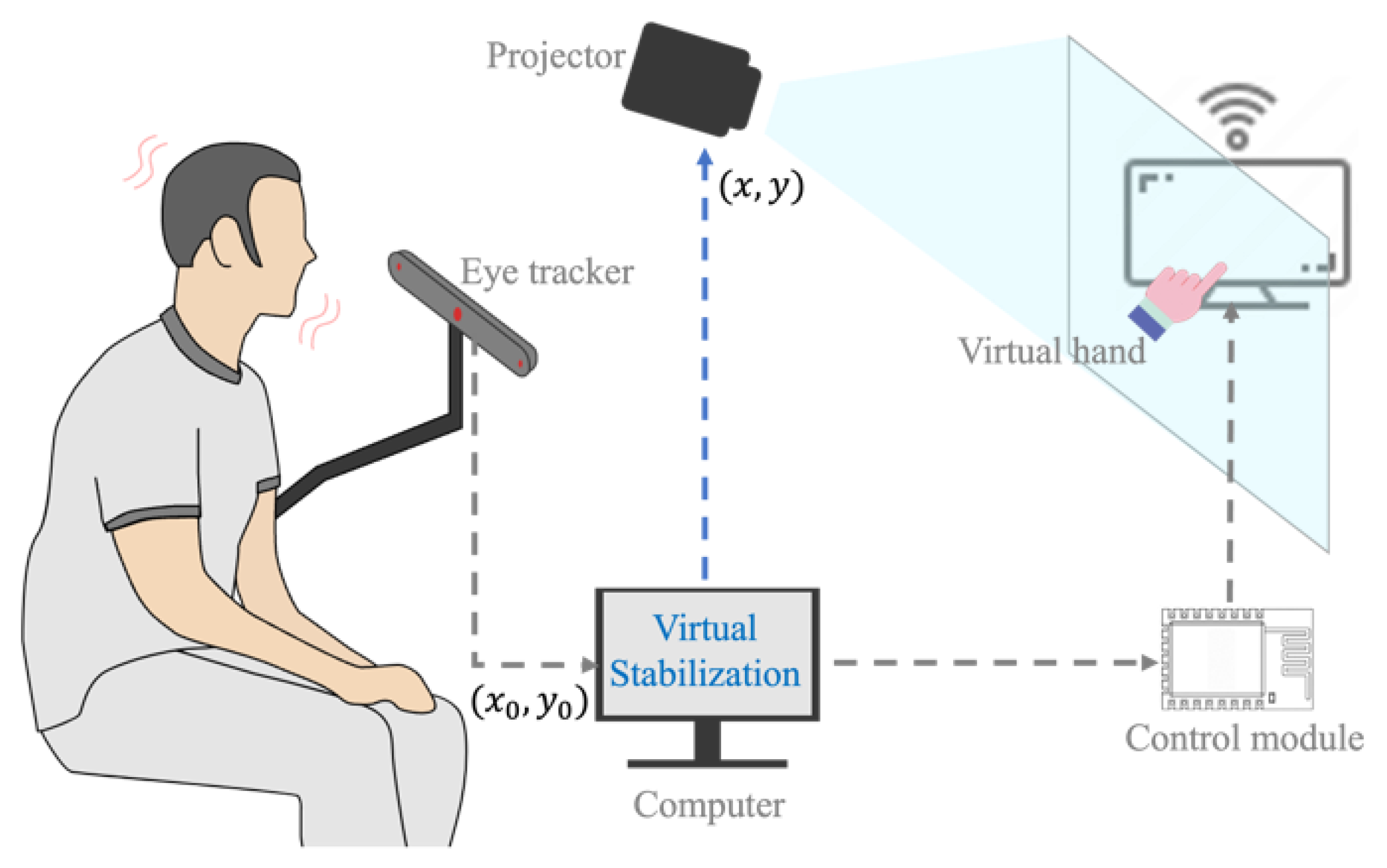

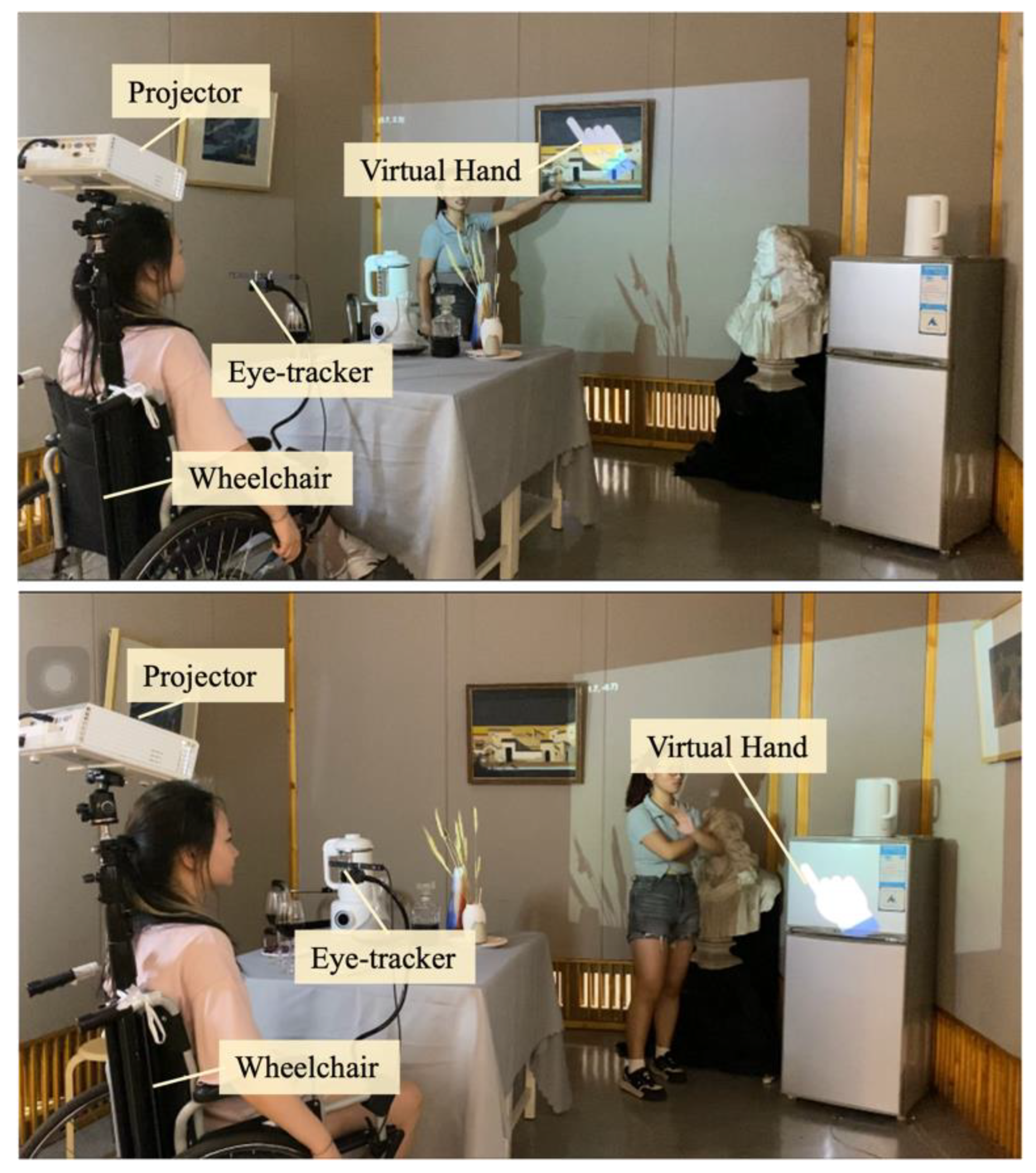

As illustrated in Figure 1, the hardware of the system comprises several key components: a computer, a wheelchair-compatible projector, an eye-tracking module (Tobii Eye Tracker 5), and a control module (Arduino Nano, ESP-8266 IoT module, HX1838 infrared receiver, infrared emitter, etc.).

The system uses the eye tracker to capture eye-movement data in real time, specifically accounting for users affected by head tremors. The captured data undergo virtual stabilization processing to identify the stable gaze position. The system also provides interactive interfaces for various tasks, such as the virtual hand and bubble, which enable individuals with tremor to interact with distant targets solely through eye movements.

Figure 1. Configuration of the SAR system.


3.2. Assistive User Interface

3.2.1. Virtual Hand

Using the fingers for direct interaction is intuitive and natural. However, individuals with tremor often have reduced manual dexterity because of involuntary tremors, which can significantly hinder their ability to engage with objects, particularly at a distance. Using a substitute limb for interaction can help overcome these limitations and enable stable remote interaction.

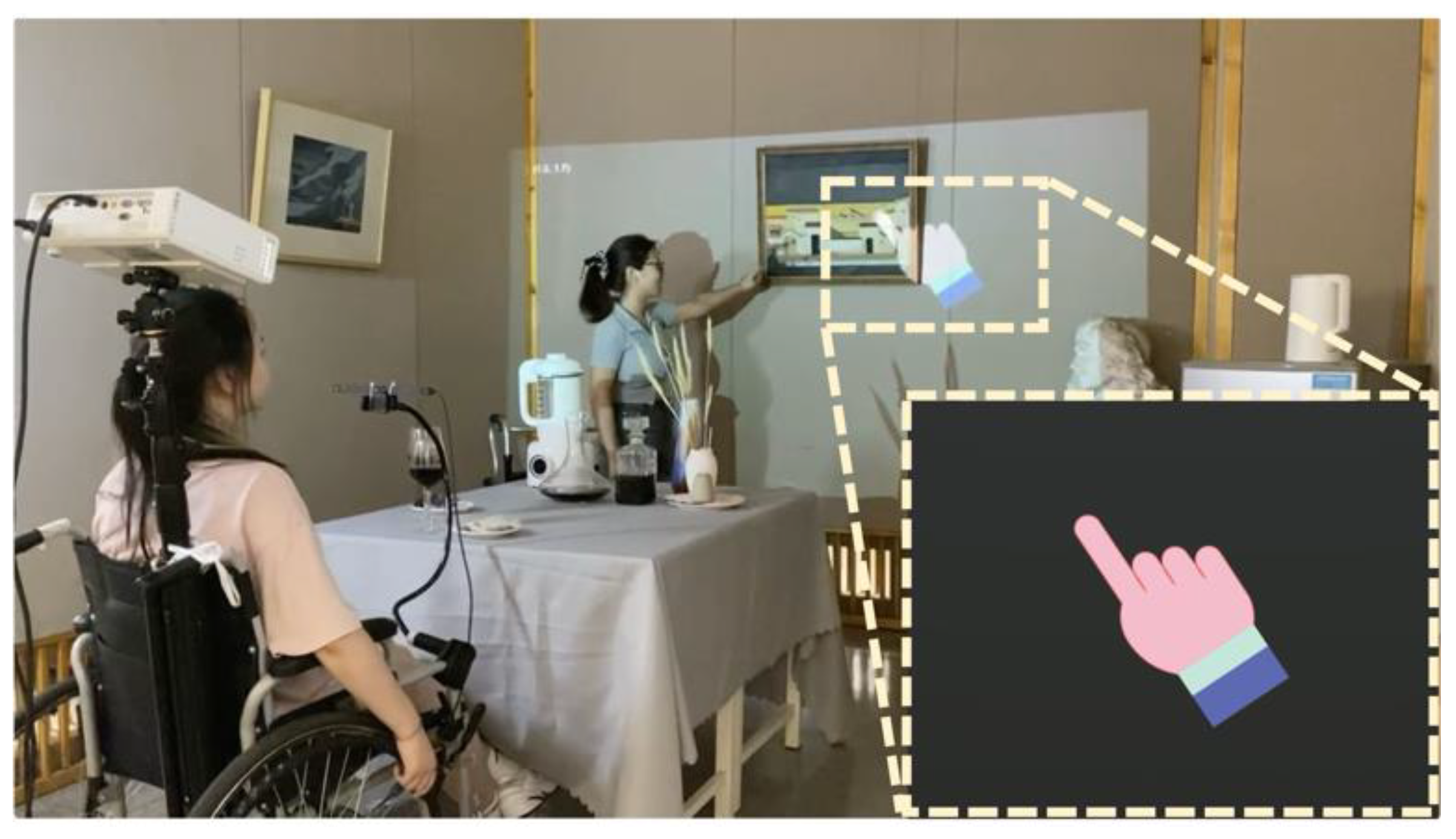

To this end, we designed a system in which a projected virtual hand acts as a surrogate for the physical limb, helping individuals with tremor interact with remote objects. As depicted in Figure 2, an individual with tremor is seated in a wheelchair equipped with both a projector and an eye-tracking device. By adjusting the wheelchair's orientation and using the eye tracker, the user can control the virtual hand with eye movements and thereby interact with distant objects.

It is important to note that the eye tracker records the coordinates of the user's pupil, from which the coordinates of the virtual hand are determined through a spatial transformation. This mapping between the virtual hand and the physical environment registers the virtual hand in physical space. However, head tremors can introduce noise into the signals captured by the eye tracker; once transmitted to the virtual hand, this noise may be amplified and make the virtual hand visibly unstable. To mitigate the effect of head tremors on virtual-hand stability, we incorporated a virtual stabilization algorithm, described in Section 3.3. With this technique, users can control a stable virtual hand, and combined with the wheelchair-mounted configuration, the approach substantially extends the interaction radius available to individuals with tremor.
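The paper does not specify which spatial transformation maps eye-tracker coordinates to the virtual hand's position. One common choice for mapping points from one plane (the tracker's coordinate frame) onto another (the projection surface) is a planar homography, and the sketch below assumes that model. The function `apply_homography` and the example matrix are hypothetical; in practice `H` would come from a calibration step (for example, four or more known point correspondences), which is not described in the paper.

```python
from typing import Sequence, Tuple

# A 3x3 matrix given as three rows of three floats.
Matrix3 = Sequence[Sequence[float]]


def apply_homography(H: Matrix3, x: float, y: float) -> Tuple[float, float]:
    """Map a gaze point (x, y) to projector coordinates using homography H.

    Hypothetical helper: assumes the gaze-to-projection mapping is a
    planar homography obtained from a separate calibration step.
    """
    # Multiply H by the homogeneous point (x, y, 1).
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity under H")
    # Divide by w to return to Cartesian projector coordinates.
    return u / w, v / w


if __name__ == "__main__":
    # Illustrative H: scale by 2, then shift by (10, 20).
    H = [[2.0, 0.0, 10.0], [0.0, 2.0, 20.0], [0.0, 0.0, 1.0]]
    print(apply_homography(H, 5.0, 5.0))  # (20.0, 30.0)
```

A homography also covers the perspective distortion that arises when the projector is not perpendicular to the surface, which a plain scale-and-offset mapping would not.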

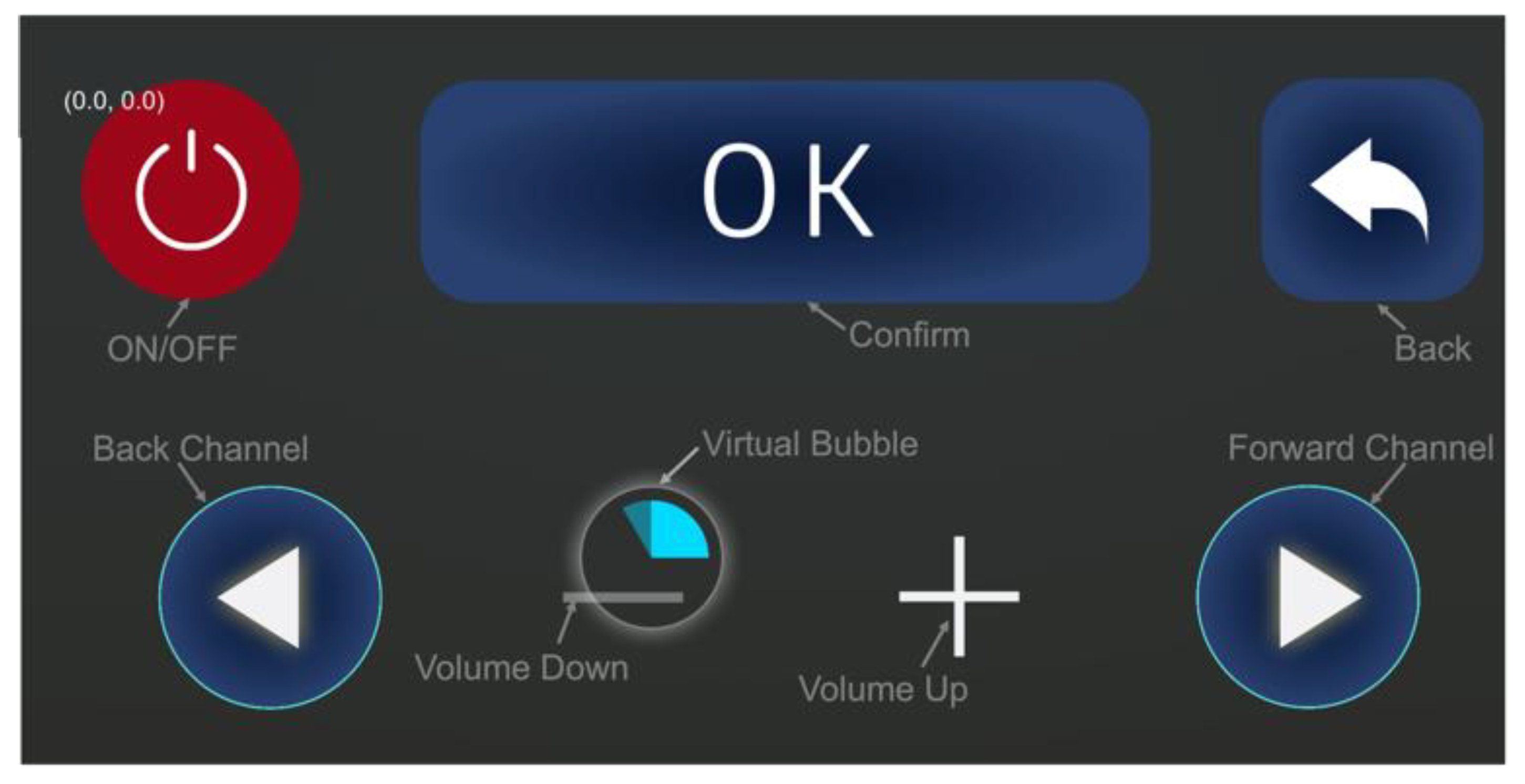

3.2.2. Virtual Confirmation

To improve the precision of everyday inputs, we designed a ‘bubble confirmation’ mechanism. It mirrors standard physical operations such as pressing a button, where users confirm an input by pressing and holding. In our system, a bubble prompt appears when the virtual hand or pointer hovers over a target. As illustrated in Figure 3, once the virtual hand or pointer is positioned over a target, a bubble encircles it, and a blue dial gradually fills the bubble in a sector-filling fashion over a set period. For example, when interacting with a television, this period is approximately 3 seconds, a duration chosen to balance input accuracy with fluid interaction. If the virtual hand or pointer leaves the target, the bubble reverts to its original state. The bubble mechanism is thus a key component of the system, providing reliable confirmation of gaze input when interacting with virtual objects.
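The bubble behaves like a dwell-time confirmation: progress accumulates while the pointer stays on the same target and resets as soon as it leaves. The following is a minimal sketch of that logic, assuming a per-frame update with the elapsed time `dt`; the class `DwellConfirmer` and its methods are hypothetical names, and only the roughly 3-second dwell for the television comes from the text.

```python
from typing import Hashable, Optional


class DwellConfirmer:
    """Hypothetical dwell-time confirmation ('bubble') state machine.

    The bubble fills while the pointer stays on the same target and
    resets as soon as the pointer leaves it.
    """

    def __init__(self, dwell_time: float = 3.0) -> None:
        self.dwell_time = dwell_time  # seconds needed to confirm (about 3 s for the TV)
        self.elapsed = 0.0
        self.target: Optional[Hashable] = None

    def update(self, target: Optional[Hashable], dt: float) -> Optional[Hashable]:
        """Advance by dt seconds; return the target once the bubble is full."""
        if target is None or target != self.target:
            # Pointer left the target or moved to a new one: reset the bubble.
            self.target = target
            self.elapsed = 0.0
            return None
        self.elapsed += dt
        if self.elapsed >= self.dwell_time:
            self.elapsed = 0.0  # restart so the command fires once per full dwell
            return target
        return None

    @property
    def progress(self) -> float:
        """Filled fraction of the bubble's dial, from 0.0 to 1.0."""
        return min(self.elapsed / self.dwell_time, 1.0)
```

In use, the interface would call `update` once per frame with the identifier of the element under the pointer (or `None`) and draw the dial sector from `progress`.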

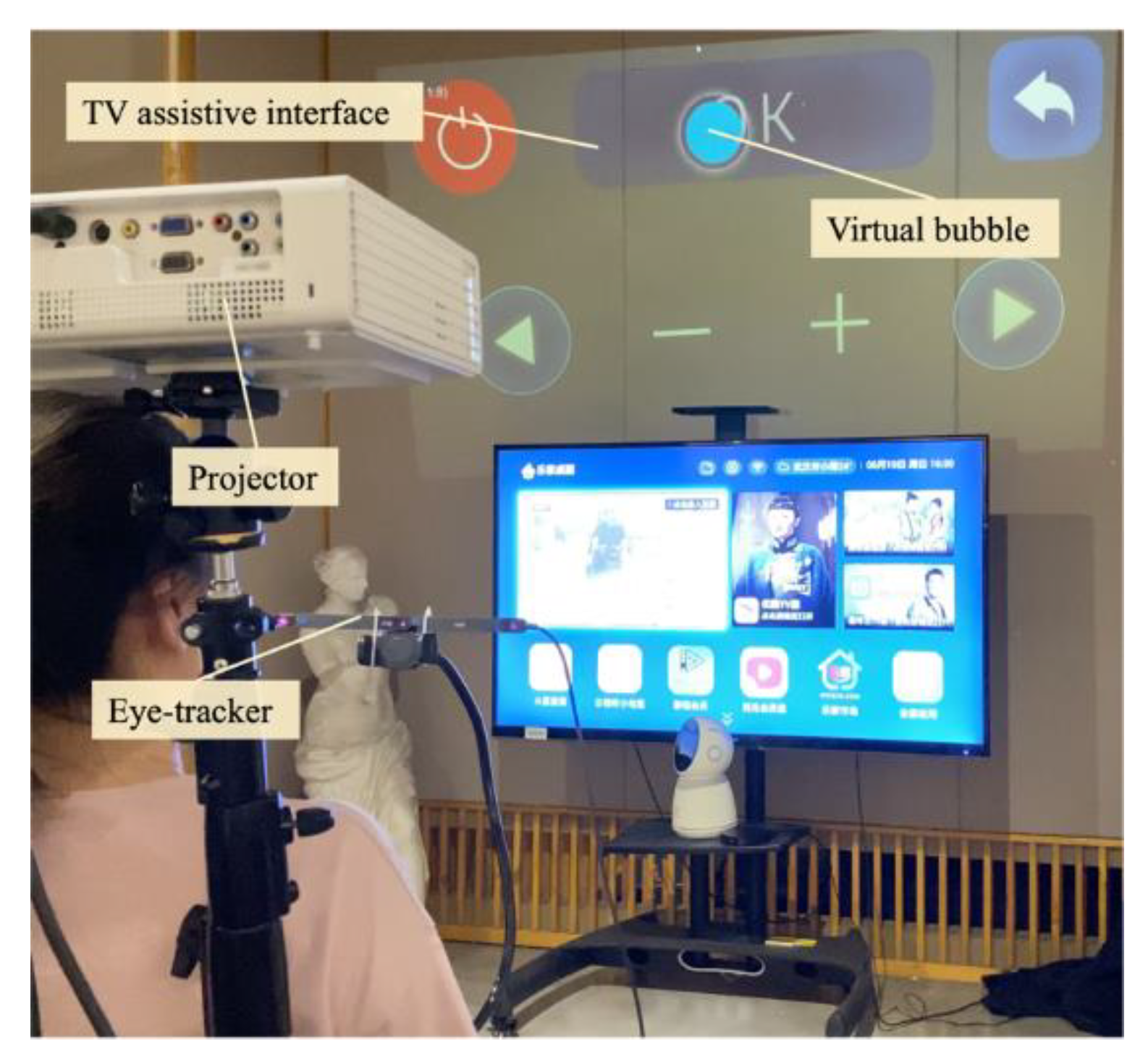

3.2.3. Assistive Interfaces

To make household appliances easier to operate for individuals with tremor, we designed a set of assistive interfaces. The suite aims to reduce the number of interaction steps and make operation more intuitive. Taking television operation as an example, we created the assistive interface shown in Figure 3. To simplify operation, it retains only frequently used functions, such as volume adjustment, channel switching, and a confirmation button. The interface is projected around the television by the wheelchair-mounted projector. Individuals with tremor interact with it by controlling the virtual hand and bubbles through eye movements. All interaction commands are transmitted in real time to the television via the microcontroller and IoT modules. This design can replace a conventional remote control for individuals with tremor who find traditional controls difficult to operate.

Figure 3. Virtual bubbles and the TV assistive interface.


While such assistive interfaces represent a significant advance, they still require careful customization based on eye-movement interaction patterns, environmental context, and the functions of each household appliance, with particular attention to the needs of individuals with tremor. Such tailoring is essential for enabling them to control various home appliances effortlessly, thereby enhancing their daily living and independence.

3.3. Virtual Stabilization

Our objective was to develop a virtual stabilization algorithm that minimizes the impact of head tremors on eye tracking. The algorithm must distinguish involuntary head tremors from intentional head movements: by doing so, the system can respond accurately to the user's intended movements while filtering out tremor-induced disturbances, ensuring smooth and stable control.

Initially, the user's gaze coordinates at time $t$ are recorded as $P_t = (x_t, y_t)$, along with the three preceding coordinates $P_{t-1}$, $P_{t-2}$, and $P_{t-3}$. Weights $a$, $b$, and $c$ are assigned to these three coordinates, respectively, to calculate the weighted average coordinates $\bar{P}_t$. Experimental testing determined that the optimal values of $a$, $b$, and $c$ are 0.1, 0.8, and 0.1, respectively (so that $a + b + c = 1$). The weighted average is computed as:

$$\bar{P}_t = a\,P_{t-1} + b\,P_{t-2} + c\,P_{t-3}$$

We then set a threshold distance $d$. The system compares the Euclidean distance between the current coordinates $P_t$ and the weighted average coordinates $\bar{P}_t$ with this threshold. If the distance is greater than $d$, the system outputs the original coordinates $P_t$; if the distance is less than or equal to $d$, it outputs the weighted average coordinates $\bar{P}_t$:

$$P_t^{\mathrm{out}} = \begin{cases} P_t, & \lVert P_t - \bar{P}_t \rVert > d \\ \bar{P}_t, & \lVert P_t - \bar{P}_t \rVert \le d \end{cases}$$
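The weighting-and-threshold rule can be sketched as a small streaming filter. This is an illustration under stated assumptions, not the authors' implementation: it assumes the weights a, b, c (0.1, 0.8, 0.1) apply to the three preceding raw samples, that raw (unsmoothed) samples are kept in the history, and that samples pass through unchanged until three are available. The threshold value of 30 pixels and the class name `VirtualStabilizer` are hypothetical, since the paper does not report a value for d.

```python
import math
from collections import deque
from typing import Deque, Tuple

Point = Tuple[float, float]


class VirtualStabilizer:
    """Weighted-average gaze stabilizer with a distance threshold (sketch)."""

    def __init__(self, weights=(0.1, 0.8, 0.1), threshold: float = 30.0) -> None:
        # weights[0] -> P_{t-1}, weights[1] -> P_{t-2}, weights[2] -> P_{t-3}
        self.weights = weights
        self.threshold = threshold  # hypothetical value of d, in pixels
        # Most recent raw sample first; maxlen drops the oldest automatically.
        self.history: Deque[Point] = deque(maxlen=3)

    def update(self, p: Point) -> Point:
        if len(self.history) < 3:
            # Not enough history yet: pass the raw sample through.
            self.history.appendleft(p)
            return p
        a, b, c = self.weights
        p1, p2, p3 = self.history
        avg = (a * p1[0] + b * p2[0] + c * p3[0],
               a * p1[1] + b * p2[1] + c * p3[1])
        dist = math.dist(p, avg)
        self.history.appendleft(p)  # keep the raw sample for the next step
        # Large jump: treat as intentional movement and follow it at once.
        # Small deviation: treat as tremor and output the smoothed point.
        return p if dist > self.threshold else avg
```

The threshold is what lets the filter separate the two kinds of motion: small jitter around the weighted average is absorbed, while a deliberate gaze shift larger than d is passed through without lag.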

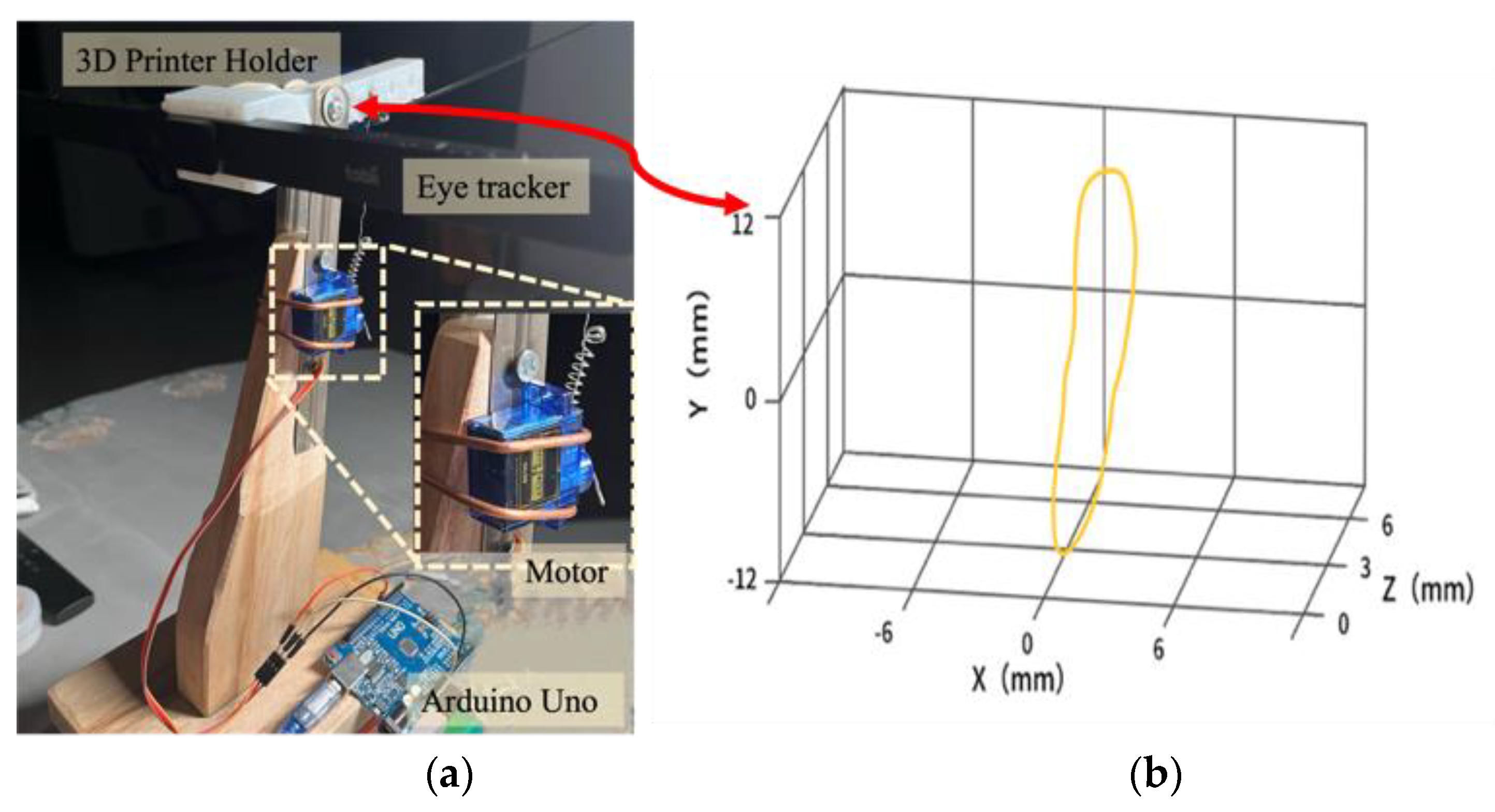

3.4. Tremor Simulation

Given the early stage of the system and the associated ethical considerations, it is not advisable to conduct experiments directly on patients with tremor at this stage. In addition, a simulation approach makes it easier to obtain objective usage data and to understand how different tremor characteristics interact with the system. We therefore designed a tremor simulator that enables individuals without tremor to mimic individuals with tremor during system testing. The underlying idea is that shaking the head in front of a fixed eye tracker produces effects analogous to shaking the eye tracker in front of a still head. Accordingly, our design only needs to make the eye tracker oscillate at specified tremor frequencies and amplitudes.

The tremor simulator consists of a wooden stand, a 3D-printed fixed head, an Arduino, and a jitter motor, which together form an eye-tracker stand (Figure 4(a)). Swinging the fixed head of the stand up and down simulates the head tremors seen in patients with Parkinson's disease. According to Xu’s experiment [14], the average tremor amplitude in patients with Parkinson's disease is 1-2 cm, with a frequency of 5-6 Hz. To imitate the characteristics of this population, the motor-driven swing of the eye tracker was therefore kept within an amplitude of 2 cm and a frequency range of 4-8 Hz. To ensure that the simulated motion matched these tremor characteristics, we used an OptiTrack system to track the center point of the eye tracker and monitor its trajectory, as shown in Figure 4(b).
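The following sketch generates a target displacement profile within the simulator's stated limits (4-8 Hz, within 2 cm) and checks a recorded trace's dominant frequency, similar in spirit to monitoring the trajectory. It assumes a pure sinusoidal swing and treats "amplitude" as the peak displacement; both are assumptions, as the paper does not state the motor's waveform or how amplitude is measured. The function names are hypothetical.

```python
import math
from typing import List


def simulate_tremor(freq_hz: float, amplitude_cm: float,
                    duration_s: float, rate_hz: float) -> List[float]:
    """Vertical displacement (cm) of the eye tracker, assuming a pure sine."""
    # Limits taken from the simulator settings: 4-8 Hz, within 2 cm.
    if not 4.0 <= freq_hz <= 8.0:
        raise ValueError("frequency outside the simulated 4-8 Hz band")
    if not 0.0 < amplitude_cm <= 2.0:
        raise ValueError("amplitude outside the simulated 2 cm range")
    n = int(duration_s * rate_hz)
    return [amplitude_cm * math.sin(2 * math.pi * freq_hz * i / rate_hz)
            for i in range(n)]


def estimate_frequency(samples: List[float], rate_hz: float) -> float:
    """Estimate the oscillation frequency from upward zero crossings."""
    crossings = [i for i in range(1, len(samples))
                 if samples[i - 1] < 0.0 <= samples[i]]
    if len(crossings) < 2:
        raise ValueError("need at least two full cycles")
    # Average number of samples per cycle between the first and last crossing.
    samples_per_cycle = (crossings[-1] - crossings[0]) / (len(crossings) - 1)
    return rate_hz / samples_per_cycle
```

Zero-crossing counting is a simple choice that works for a clean, roughly sinusoidal trace; a noisy motion-capture recording would usually need filtering or a spectral method instead.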

4. Experiments and Results

This study comprises three experiments designed to assess the practicality and assistive benefits of the developed system. Experiment 1 evaluates the virtual stabilization algorithm within the AR system: we test the stability and efficiency with which individuals with tremor interact with target objects when the AR system includes virtual stabilization, and assess how well the system adapts to eye-movement control by these individuals. Experiment 2 examines the fluidity and stability of operation when individuals with tremor use the system for relatively complex interactive tasks, evaluating how well the system assists with tasks of higher operational complexity. Experiment 3 investigates pointing precision and the effectiveness of remote interaction in complex physical environments, in particular whether the system improves users' ability to interact with distant targets and their communication efficiency within the AR environment. For these experiments, volunteers participated in simulated tests, and both subjective ratings and objective data were collected and analyzed.

4.1. Evaluating the Virtual Stabilization of the SAR System

This experiment aims to validate the virtual stabilization algorithm by examining how effectively it helps individuals with tremor interact stably and efficiently with remote objects through eye-controlled elements such as the virtual hand, pointer, and bubble.

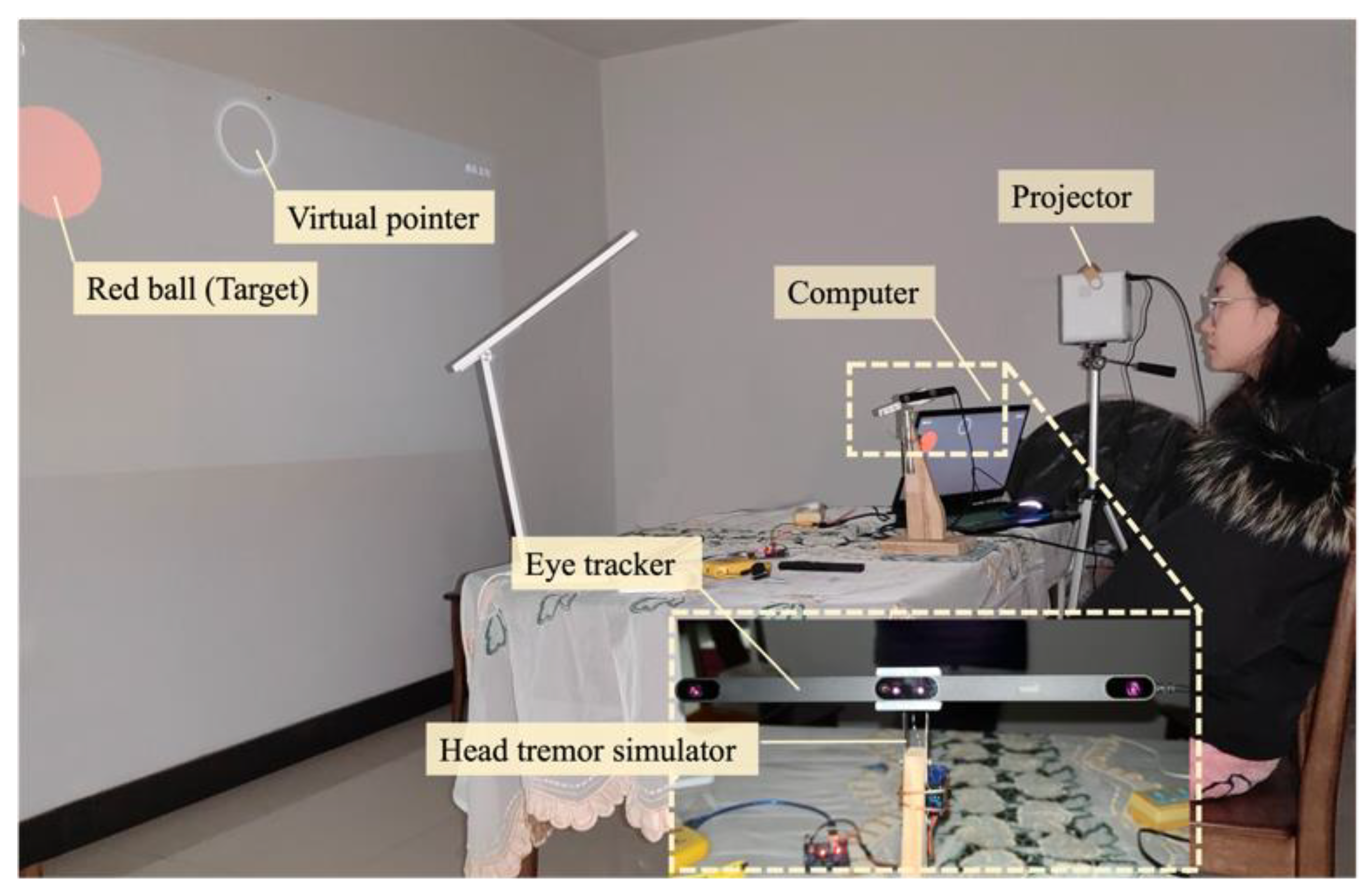

4.1.1. Experimental Setup

As illustrated in Figure 5, participants controlled a virtual pointer with their eyes, aiming to align it quickly and accurately with red balls appearing at random positions in physical space. At the beginning of each trial, a red ball was randomly generated on the interface. Participants had to keep the eye-controlled bubble pointer within the collision range of the red ball for three seconds, after which the ball disappeared and reappeared at a new location. Each participant repeated this task five times. Ten participants aged 20 to 80 years were recruited. Before the experiments, the simulator's vibration frequency and amplitude were calibrated to mimic the characteristics of real head tremors.

Two experimental conditions were compared: the system with the virtual stabilization algorithm and the system without it. The system automatically recorded the pointer and target coordinates as well as the task completion time for each trial. After the experiments, participants completed subjective assessments of their experience, rated on a seven-point Likert scale from -3 (strongly disagree) to +3 (strongly agree). The assessment questions were:

Q1: The movement of the virtual pointer was entirely controlled by my eyes.

Q2: I experienced stability while controlling the movement of the virtual pointer with my eyes.

Q3: I was capable of accurately targeting objects with the eye-controlled virtual pointer.

4.1.2. Result

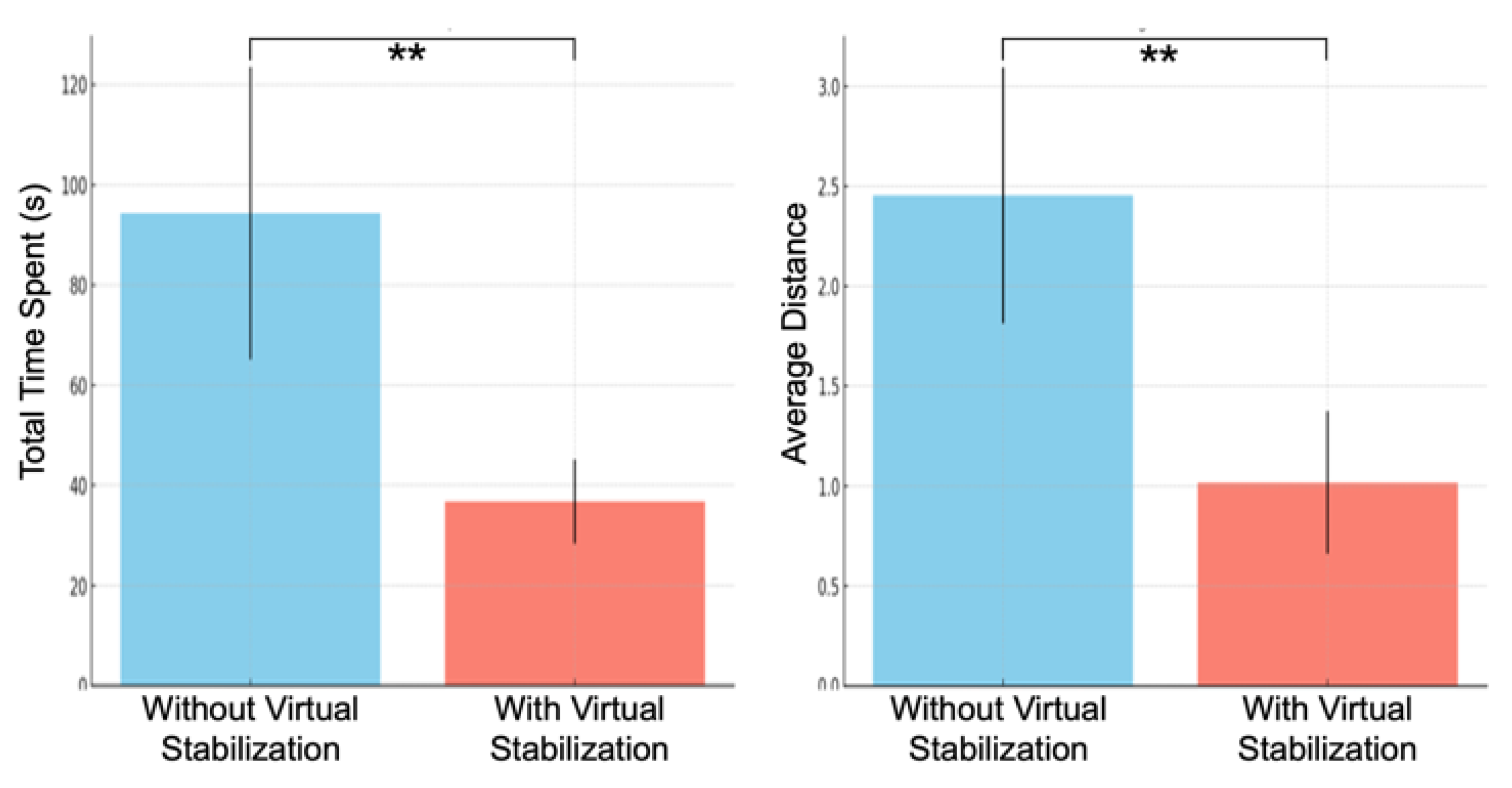

Efficiency with virtual stabilization: A key finding was a significant reduction in task time when virtual stabilization was enabled. A paired-sample t-test showed a statistically significant decrease (p = 0.000247). As illustrated in Figure 6(a), the mean time without stabilization was 94.31 s (SD = 29.21), compared with 36.72 s (SD = 8.43) with stabilization. The effect size (Cohen's d) was 2.68, indicating a large reduction in task completion time.
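The text does not state which standardizer was used for Cohen's d. As a consistency check, the reported value of 2.68 is reproduced from the reported means and SDs if the mean difference is divided by the root mean square of the two SDs, which equals the pooled SD when both conditions have the same number of participants. This is an assumption about the authors' calculation; the function name below is hypothetical.

```python
import math


def cohens_d_pooled(mean_1: float, sd_1: float, mean_2: float, sd_2: float) -> float:
    """Cohen's d with the root mean square of the two SDs as the standardizer."""
    pooled_sd = math.sqrt((sd_1 ** 2 + sd_2 ** 2) / 2.0)
    return (mean_1 - mean_2) / pooled_sd


# Summary statistics reported for Figure 6(a).
d = cohens_d_pooled(94.31, 29.21, 36.72, 8.43)
print(round(d, 2))  # 2.68
```

For a paired design, an alternative effect size (often written d_z) divides by the SD of the per-participant differences; it cannot be recomputed from the summary values reported here.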

Stability with virtual stabilization: The mean distance between the pointer and the target was obtained by randomly sampling the pointer position while users were targeting the red ball. A Wilcoxon signed-rank test showed a statistically significant reduction in this distance when virtual stabilization was active (p = 0.0039) (Figure 6(b)). The mean pointer-to-target distance was notably smaller with the virtual stabilization algorithm than without it, further confirming its role in improving operational precision and stability.

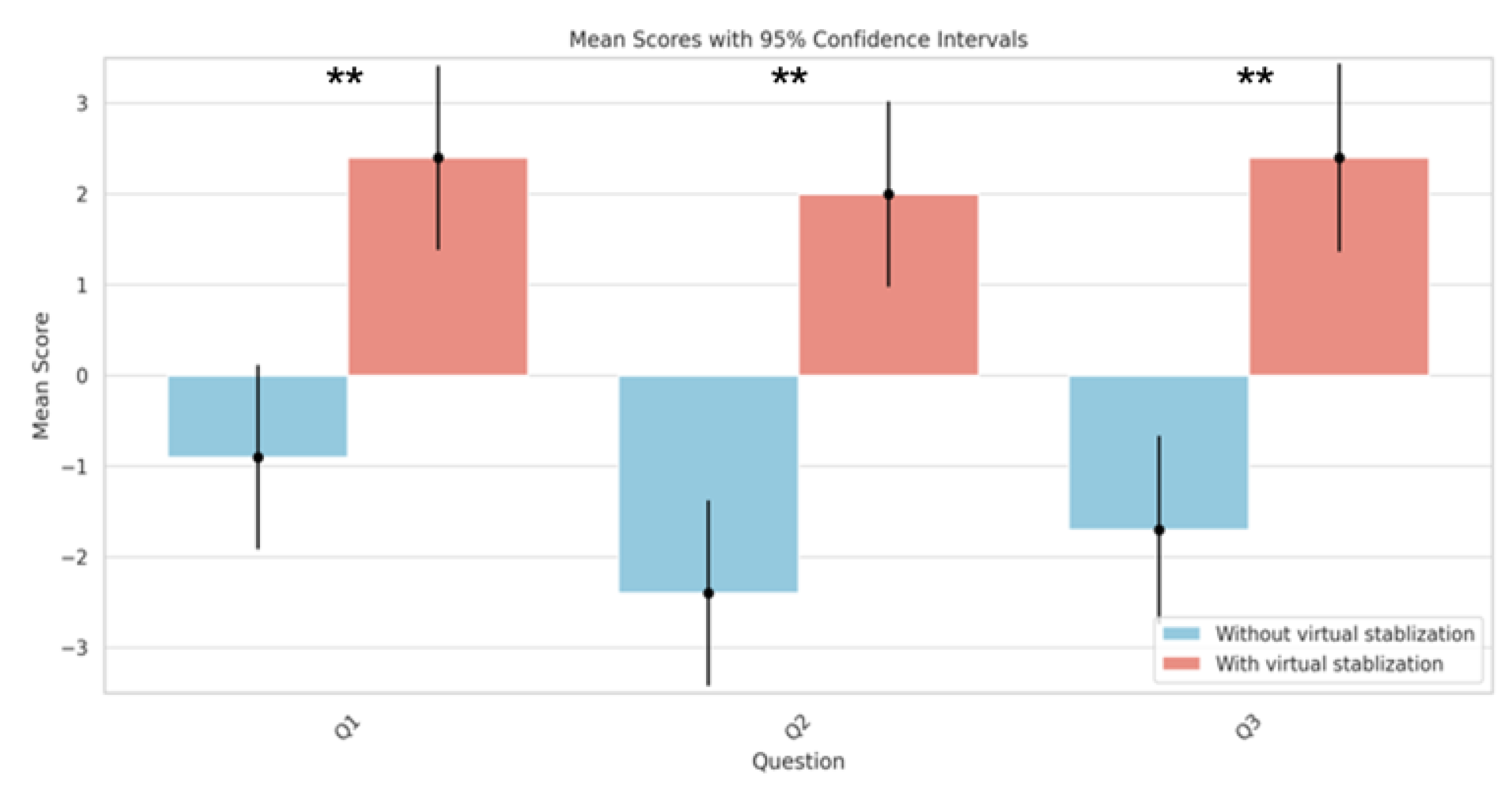

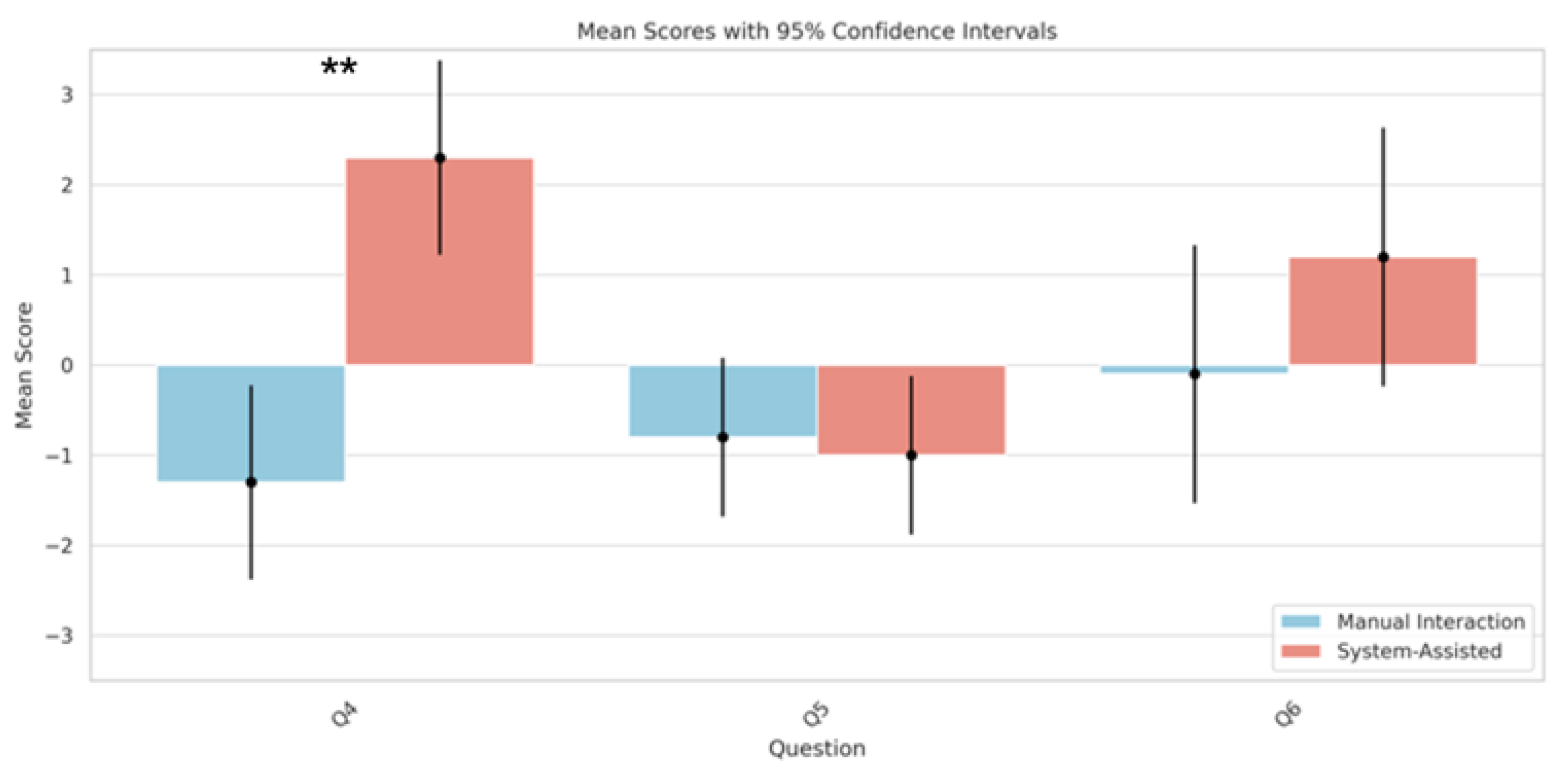

Analysis of participant responses to the three questions further indicated that virtual stabilization improved the user experience in terms of control, stability, and accuracy. For Question 1 (Q1), which assessed the extent to which participants felt that the movement of the virtual pointer was controlled by their eyes, the mean difference was 3.3 (95% CI: 0.09 to 6.51; p = 0.000043) (Figure 7), indicating a considerably stronger sense of control with virtual stabilization.

Similarly, for Question 2 (Q2), regarding the stability experienced while controlling the virtual pointer, the mean difference was 4.4 (95% CI: 1.17 to 7.63; p = 0.0000045) (Figure 7), indicating a marked improvement in perceived stability with the stabilization feature.

Question 3 (Q3), which focused on participants' ability to target objects accurately with the eye-controlled virtual pointer, also showed a significant mean difference of 4.1 (95% CI: 0.82 to 7.38; p = 0.000009) (Figure 7), suggesting that virtual stabilization substantially improved the accuracy of eye-controlled targeting.

Figure 6. Comparison of (a) mean time spent and (b) mean distance between the pointer and the target, with and without virtual stabilization (** p < 0.01).


Figure 7. Comparison of mean scores for Q1 to Q3 with and without virtual stabilization (** p < 0.01).


4.2. Evaluating the System-Assisted Effectiveness in Complex Interactive Tasks

This experiment aims to validate the effectiveness of the AR system in helping individuals with tremor operate everyday household appliances, with a particular focus on the fluidity and stability of operation. Although controlling a television may seem a common and simple daily task, it in fact comprises a variety of interactive subtasks, such as switching functions, confirming selections, and returning to previous menus. Patients with tremor disorders often struggle to complete these tasks smoothly with conventional devices such as TV remotes because of hand instability. Given how representative and ubiquitous television control is, it serves as an ideal test case for assessing the AR system in everyday interactive tasks.

4.2.1. Experimental Setup

The experiment was designed as follows. We selected several common TV control tasks, such as volume adjustment, channel switching, and confirmation, and arranged them in random order to create 10 TV control task sequences. We recruited 10 participants aged 20 to 65 years. Each participant used both the AR system and a conventional remote control to complete a randomly selected task sequence. For clarity, interactions using the AR system are termed ‘System-Assisted’, and those using the conventional remote control are termed ‘Manual Interaction’. To simulate the trembling finger movements typical of patients with tremor using a remote control, we employed a hand tremor simulator described in “a dual channel electrical stimulation instrument for simulating trembling limbs” [22].

Upon completing the tasks, participants answered a series of subjective questions about their experience, rated on a 7-point scale from -3 (very poorly matched) to 3 (perfectly matched). These responses were used to assess the potential of the AR system to improve operational accuracy and user experience.

Q4: In this condition, channel-switching and volume adjustment could be performed with few mis-operations.

Q5: In this condition, channel-switching and volume adjustment could be easily performed.

Q6: In this condition, channel-switching and volume adjustment were performed stably.

4.2.2. Result

To investigate whether the AR system provides smooth and stable control for individuals with tremor during complex interactive tasks, we compared the ‘System-Assisted’ and ‘Manual Interaction’ conditions using a paired t-test. Participants reported significantly fewer mis-operations during channel switching and volume adjustment when using the AR system than when using a conventional remote control, as reflected in responses to Question 4 (Q4) (mean difference: 3.6, 95% CI: 0.19 to 7.01, p = 0.000035) (Figure 8). This underscores the potential of the AR system to improve precision in control tasks that are typically challenging for individuals with tremor.

However, for the ease of performing the same tasks, assessed in Question 5 (Q5), the analysis showed no significant difference between the two conditions (mean difference: -0.2, 95% CI: -2.98 to 2.58, p = 0.619) (Figure 8). This suggests that participants did not perceive a change in difficulty when using the AR system compared with the manual method.

Regarding operational stability, assessed in Question 6 (Q6), the mean difference of 1.3 suggests an improvement in the ‘System-Assisted’ condition, but the result did not reach statistical significance (95% CI: -3.23 to 5.83, p = 0.070) (Figure 8). This points to a possible trend toward greater stability with the AR system, but the data do not allow a definitive conclusion; further research with a larger sample or refined experimental conditions is needed.

Figure 8. Comparison of mean scores for Q4 to Q6 under the ‘System-Assisted’ and ‘Manual Interaction’ conditions (** p < 0.01).


4.3. Evaluating the System-Assisted Effectiveness in Distant Interactive Environments

This experiment explores the potential of AR technology to assist individuals with tremor in remote communication and interaction. For individuals whose tremor affects hand stability, accurately indicating and interacting with distant objects is a significant challenge. Limited in physical mobility, these individuals often rely on others to interact with objects out of reach, for example by asking for help retrieving distant items. Real-life environments, where objects may be obscured, closely packed, or small, further complicate this task. We designed a pointing-and-guessing game to test the effectiveness of the AR system in such complex situations. The main objectives were to determine whether the AR system improves users' ability to point at objects accurately and efficiently, and how it supports their communication with others.

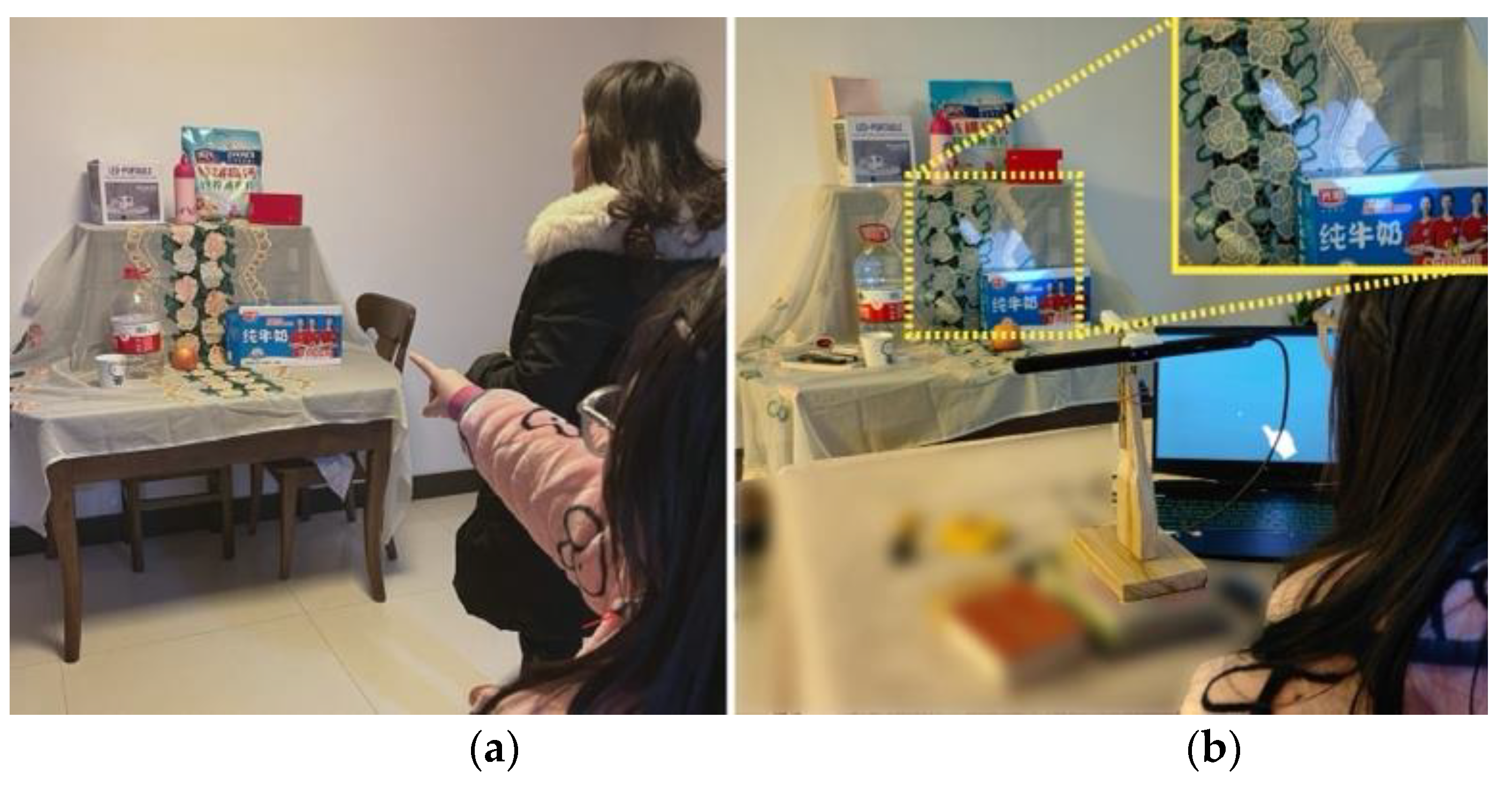

4.3.1. Experimental Setup

In our setup, we selected 10 household objects of various sizes, including cardboard boxes, bottled water, apples, and a wall painting (Figure 9). Each object was assigned a unique number. Five objects were placed on an upper tier, four on a lower tier, and the wall painting was hung on an adjacent wall. To increase task complexity and better simulate a real-life environment, smaller objects were placed in front of larger ones on both tiers, partially obscuring them. All objects were positioned 5 m from the participants, spanning a horizontal range of 0 to 0.5 m and a vertical range of 0 to 1 m.

The task used sequences of numbered objects presented in randomized order. As illustrated in Figure 9, the experiment was conducted in 10 rounds, each involving two participants. One participant pointed to the distant objects, either directly while wearing a hand tremor simulator or with the AR system. The other participant guessed and recorded the number of each object indicated. At the end of each round, we compared the two participants' recorded sequences, focusing on discrepancies and on the total time taken for each pointing task. At the end of the experiment, participants also rated their experience on a 7-point scale ranging from -3 (very poorly matched) to 3 (perfectly matched), guided by the following questions:

Q7: In this case, I think I can more easily point to distant objects.

Q8: In this situation, I feel that I can stably point to the object.

Q9: In this case, I can easily understand the object being referred to.

Q10: In this situation, I feel that communication is easy.
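The round-by-round comparison of the pointer's target sequence with the guesser's recorded sequence can be scored as sketched below. As an illustrative assumption, accuracy is taken to be the fraction of positions where the guessed identifier matches the intended one, and both sequences are assumed to have equal length; the function name and the example data are hypothetical, not the study's analysis code.

```python
from typing import List, Sequence, Tuple


def score_round(targets: Sequence[int], guesses: Sequence[int]) -> Tuple[float, List[int]]:
    """Return (accuracy, mismatch positions) for one round.

    Assumes both sequences list object identifiers in the order they were indicated.
    """
    if len(targets) != len(guesses):
        raise ValueError("target and guess sequences must have the same length")
    # Positions where the guesser recorded a different object than the one indicated.
    mismatches = [i for i, (t, g) in enumerate(zip(targets, guesses)) if t != g]
    accuracy = (len(targets) - len(mismatches)) / len(targets)
    return accuracy, mismatches


# Illustrative round: the guesser confuses object 4 with object 5 once.
acc, errs = score_round([3, 7, 1, 4, 10], [3, 7, 1, 5, 10])
print(acc, errs)  # 0.8 [3]
```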

Figure 9.

Evaluation of system assistance in a distant interaction environment: (a) a participant directly indicating distant items; (b) a participant using system assistance to indicate distant items.

4.3.2. Result

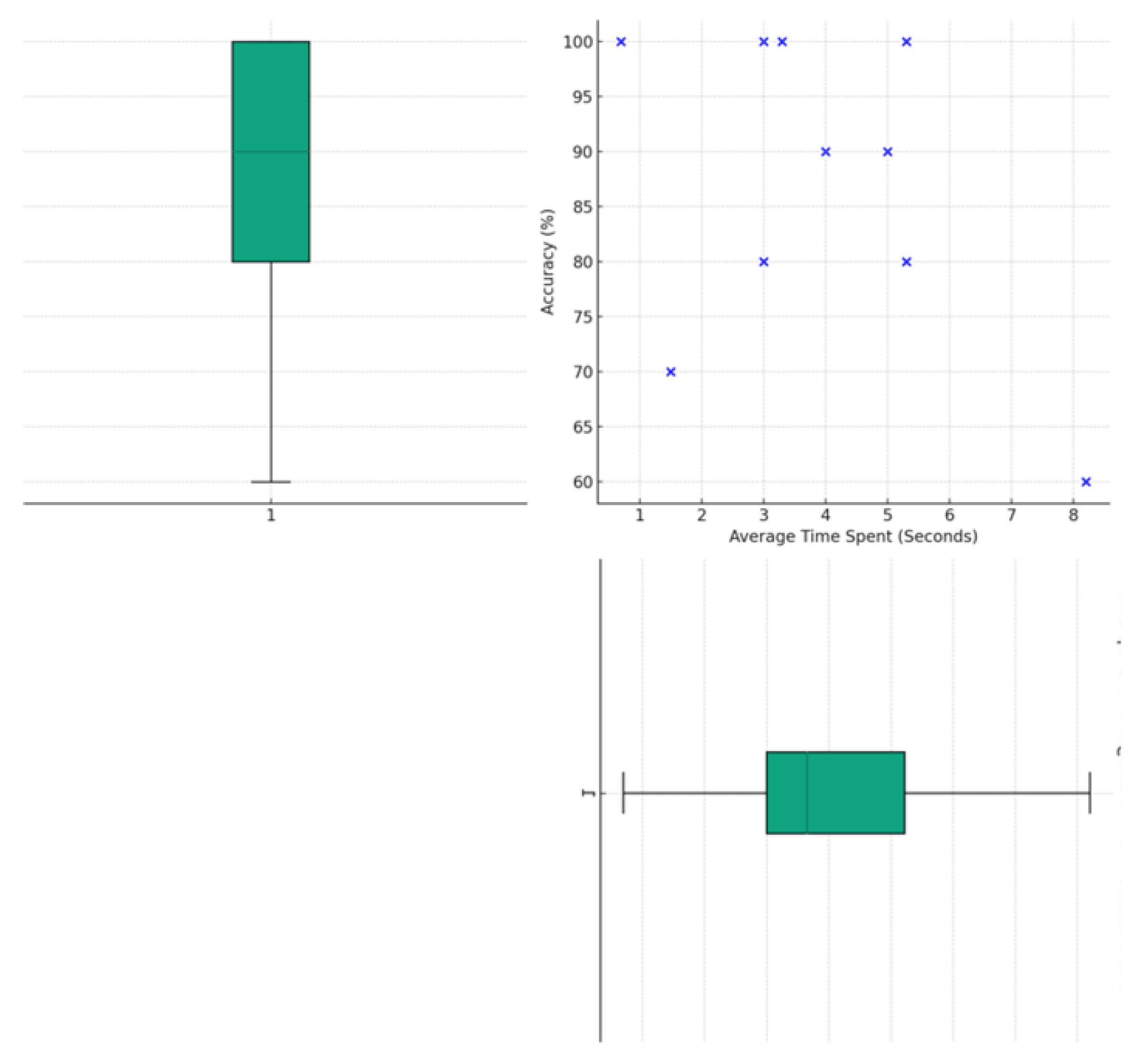

As illustrated in Figure 10, with system support participants achieved a median accuracy of 90%, with a first quartile of 80% and a third quartile of 100%. This high accuracy suggests that the system helped participants overcome the challenges posed by obscured, closely packed, or small objects. The average time per pointing task had a median of 3.65 seconds, with the middle half of the rounds falling between 3.0 seconds (first quartile) and 5.225 seconds (third quartile). These relatively short completion times indicate that the system supports efficient interaction.
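A median with first and third quartiles, as reported above, can be computed from per-round values with Python's standard library, as sketched below. Quartile conventions differ between tools, and the analysis software is not stated, so the choice of the "inclusive" method of `statistics.quantiles` (linear interpolation between order statistics) is an assumption. The times are hypothetical placeholders, not the study's data.

```python
import statistics
from typing import Sequence, Tuple


def median_and_quartiles(values: Sequence[float]) -> Tuple[float, float, float]:
    """Return (Q1, median, Q3) using linear interpolation between order statistics."""
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return q1, q2, q3


# Hypothetical per-round average pointing times in seconds (10 rounds).
times = [2.6, 2.9, 3.1, 3.3, 3.5, 3.9, 4.4, 5.1, 5.8, 6.2]
q1, med, q3 = median_and_quartiles(times)
print(f"Q1 = {q1:.3f} s, median = {med:.3f} s, Q3 = {q3:.3f} s")
```

With the "exclusive" method (the library default) the quartiles of the same data would generally differ slightly, which is worth keeping in mind when comparing reported quartiles across studies.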

Notably, our analysis did not find a significant correlation between the average time spent on tasks and the accuracy achieved, indicating that the observed accuracy was not obtained at the expense of longer interaction times.
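One common way to check for a linear relationship between per-round average time and accuracy is Pearson's correlation coefficient r, sketched below in plain Python. The paper does not state which correlation measure or significance test was used, so Pearson's r is an assumption here, and the example inputs are hypothetical. For n = 10 pairs, |r| must exceed about 0.63 to reach p < 0.05 (two-tailed), so a small study can only detect fairly strong associations.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two samples of equal length, at least 2 each")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))  # co-deviation
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical per-round values: average pointing time (s) and accuracy (fraction correct).
times = [3.2, 3.0, 4.1, 5.6, 3.5, 2.9, 4.8, 3.9, 5.3, 3.3]
acc = [0.9, 1.0, 0.8, 0.9, 1.0, 0.8, 0.9, 1.0, 0.8, 0.9]
print(f"r = {pearson_r(times, acc):.3f}")
```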

In the condition without the system, participants were unable to complete the tasks successfully. This contrast highlights the critical role of the system in enabling precise and efficient interaction with distant objects. Overall, the experimental results indicate that the system substantially enhances remote communication for individuals with tremors.

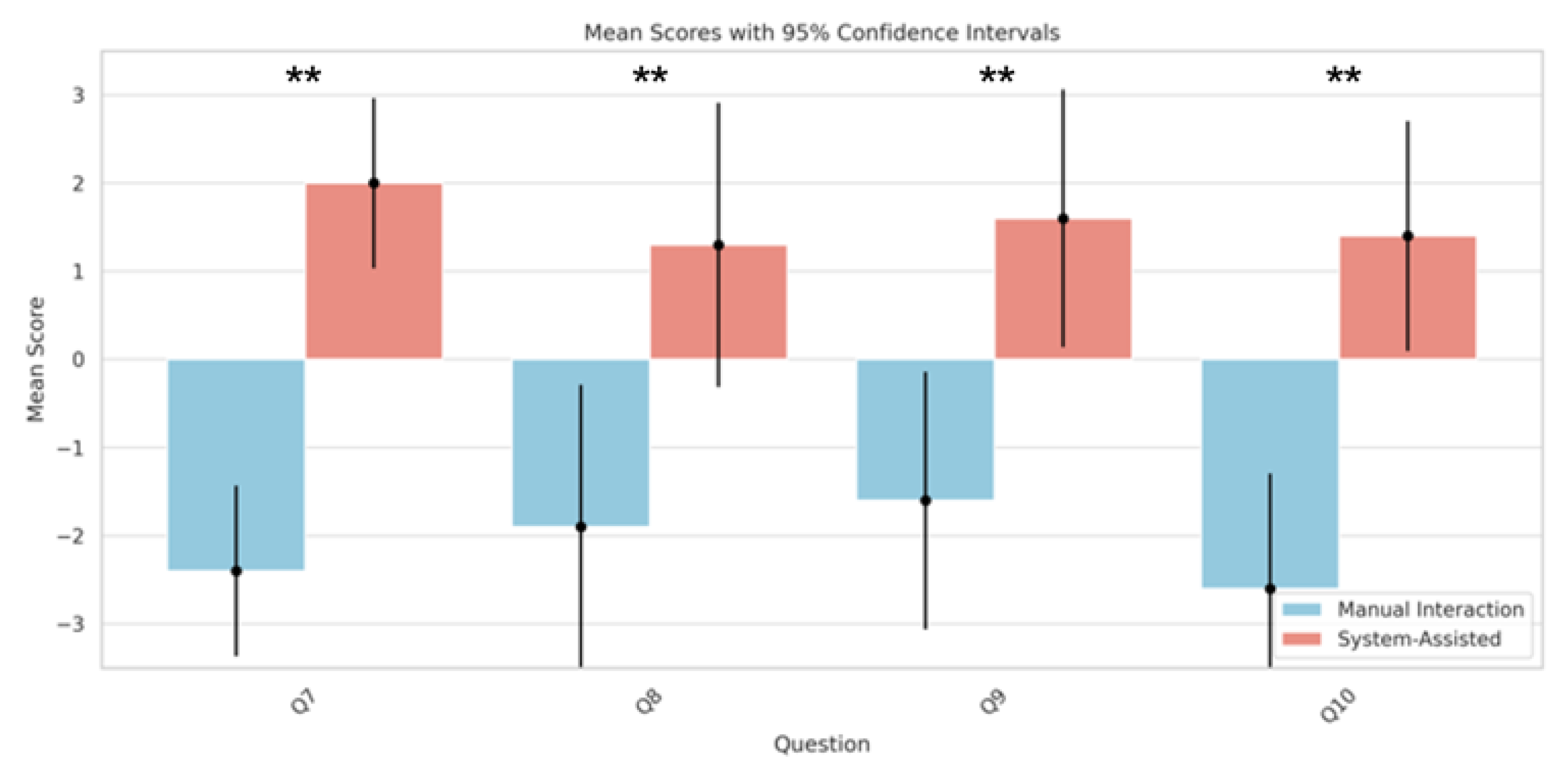

Question 7 (Q7) addressed the ease of performing remote pointing tasks. Participants reported that pointing at distant targets was easier with the AR-assisted system. Statistical analysis revealed a significant improvement in remote pointing ability with system assistance, with a mean difference of 4.4 (95% CI: 1.35 to 7.45, p = 0.00000278) (Figure 11). This suggests that the system made it easier for individuals with tremor to locate and indicate distant objects.

Question 8 (Q8) focused on participants' stability while pointing at objects. The results indicated that the system significantly helped individuals with tremor perform stable pointing actions (mean difference of 3.2, 95% CI: -1.89 to 8.29, p = 0.0015) (Figure 11). This suggests that individuals with tremor can steadily control the virtual hand and pointer interface offered by the system.

Question 9 (Q9) explored the clarity of the pointing actions. Participants reported that the indications given by individuals with tremor were easier to understand in the system condition (mean difference of 3.2, 95% CI: -1.42 to 7.82, p = 0.00079) (Figure 11). This highlights the effectiveness of a virtual hand for remote interaction and pointing.

Question 10 (Q10) compared the ease of remote communication using the AR system with that of traditional methods. Responses indicated that communication became significantly more convenient with AR technology (mean difference of 4.0, 95% CI: -0.13 to 8.13, p = 0.0000685) (Figure 11), suggesting that the system facilitates clearer communication in interactive tasks. Together, these results emphasize the important role of AR systems in improving interaction precision and convenience in complex environments.
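For paired ratings such as Q7 to Q10, a common summary is the mean of the within-participant differences (system-assisted minus manual) with a t-based 95% confidence interval, mean ± t·s/√n. The paper does not state which test produced its p-values, so the paired t-test framing here is an assumption, and the critical value t must be supplied for the sample size at hand (for example 2.262 for n = 10, i.e. 9 degrees of freedom). Under this framing, a 95% interval that excludes zero corresponds to a two-tailed p < 0.05. The ratings below are hypothetical values on the -3 to 3 scale.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_mean_diff_ci(
    assisted: Sequence[float], manual: Sequence[float], t_crit: float
) -> Tuple[float, float, float, float]:
    """Return (mean difference, CI lower, CI upper, t statistic) for paired ratings."""
    if len(assisted) != len(manual) or len(assisted) < 2:
        raise ValueError("need paired samples of equal length, at least 2 each")
    diffs = [a - m for a, m in zip(assisted, manual)]  # per-participant differences
    mean_d = statistics.mean(diffs)
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))  # standard error of the mean
    if se == 0:
        raise ValueError("differences have zero variance")
    half = t_crit * se
    return mean_d, mean_d - half, mean_d + half, mean_d / se


# Hypothetical ratings from 10 participants for one question.
assisted = [3, 2, 3, 1, 2, 3, 2, 3, 2, 1]
manual = [-2, -1, -3, -1, 0, -2, -3, -1, -2, -1]
mean_d, lo, hi, t_stat = paired_mean_diff_ci(assisted, manual, t_crit=2.262)
print(f"mean diff {mean_d:.2f}, 95% CI {lo:.2f} to {hi:.2f}, t = {t_stat:.2f}")
```

Because the scale runs from -3 to 3, a paired difference can range only from -6 to 6, which is a useful sanity check on reported means and intervals.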

Figure 10.

Assessing participants’ performance in complex remote interaction tasks under system support: accuracy and average time spent.

Figure 11.

Comparison of mean scores for Q7 to Q10 under the ‘System-Assisted’ and ‘Manual Interaction’ conditions (** p < 0.01).

5. Discussion

The results of Experiment 1 provided strong evidence for the benefits of the virtual stabilization algorithm. With its assistance, the stability and efficiency with which tremor patients interacted with remote objects via eye movement control were significantly enhanced. Specifically, virtual stabilization led to a substantial reduction in task completion time and a significant improvement in operational precision. These findings not only demonstrate the efficacy of the system in assisting with fine motor tasks but also offer guidance for designing assistive technologies for individuals with tremor, particularly in scenarios that require eye movement or other non-traditional control methods.

In Experiment 2, we observed that the system-assisted condition significantly outperformed traditional manual interaction in reducing operational errors (Q4). This finding highlights the importance of the AR system in enhancing operational precision, especially in situations where individuals with tremor are prone to errors when using traditional control devices such as TV remotes. However, no significant difference was observed in ease of operation (Q5), possibly because the system interface is not yet fully adapted to the characteristics of eye movement interaction. Additionally, the result for operational stability (Q6) was not statistically significant, which might be attributed to an insufficient sample size or to the need for more refined measurement tools to capture this effect.

The outcomes of Experiment 3 underscored the effectiveness of the AR system for remote pointing and interaction in complex physical environments. The system notably enhanced the ability of individuals with tremors to point at distant targets (Q7) and improved communication efficiency (Q10). These results not only demonstrate the potential of AR technology to enhance remote interaction capabilities but also provide insight into how such technologies can improve the interaction experience for users with specific needs.

Overall, these three experiments collectively highlight the potential value of the AR system in assisting individuals with tremors with everyday tasks and complex interactions. The virtual stabilization algorithm, in particular, showed significant effects in reducing task completion time and improving operational precision, offering important guidelines for the future development of assistive technologies for individuals with tremor. However, while the AR system demonstrated significant advantages in certain aspects (such as reducing operational errors), its effectiveness in areas such as ease of use in complex interactive tasks has not yet met expectations. This suggests that future research should focus on interface designs that are better suited to the characteristics of eye movement interaction.

Additionally, limitations of this study include a relatively small sample size and the possibility that the experimental design did not cover all interaction scenarios relevant to the daily lives of tremor patients. Therefore, future research should involve a broader range of participants and consider a more diverse array of application scenarios and task types to comprehensively assess the practicality and benefits of the AR system in various environments.

In conclusion, our research provides valuable insights into the use of AR technology to enhance the quality of life and independence of tremor patients and points the way for future research and applications in this field.

6. Application

This system demonstrates significant potential in aiding individuals with tremors, helping them overcome physical limitations and facilitating remote interaction. In the following sections, we illustrate through a series of examples how individuals with tremors can use this system for controlling home appliances and conducting daily communication within a household setting.

6.1. Light Control

Operating light switches is a commonplace interaction within households. However, for individuals affected by tremors, even the seemingly simple task of turning lights on and off can become challenging due to their physical constraints. Our system seamlessly integrates into the household lighting infrastructure, enabling users to control lights through their gaze instead of physical movements.

In terms of system design, the light switch is first connected to a relay. This relay is controlled by an Arduino mainboard equipped with a Wi-Fi module (ESP8266), which allows the system to control the lighting. During interaction, if the user fixes their gaze on the virtual switch for three seconds, the system recognizes and logs the selection and immediately sends a control signal to the Arduino mainboard. On receiving this signal, the mainboard drives the relay to switch the light on or off. This gives users an indirect but precise way to control the lights through the virtual switch (Figure 12).
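The three-second gaze dwell that triggers the virtual switch can be sketched as a small state machine: it tracks which target the gaze currently rests on, fires once when the dwell reaches the threshold, and re-arms only after the gaze leaves the target. The class name, the timestamp interface, and the toggle callback standing in for the Wi-Fi command to the ESP8266 board are hypothetical; the paper does not describe its implementation at this level.

```python
from typing import Callable, Optional


class DwellSelector:
    """Fire a callback once when the gaze stays on the same target for dwell_s seconds."""

    def __init__(self, on_select: Callable[[str], None], dwell_s: float = 3.0) -> None:
        self.on_select = on_select
        self.dwell_s = dwell_s
        self._target: Optional[str] = None
        self._since = 0.0
        self._fired = False

    def update(self, target: Optional[str], t: float) -> None:
        """Feed the target under the gaze point (None if none) at time t in seconds."""
        if target != self._target:
            # Gaze moved to another target or off all targets: restart the timer.
            self._target, self._since, self._fired = target, t, False
            return
        if target is not None and not self._fired and t - self._since >= self.dwell_s:
            self._fired = True  # fire once per dwell; re-arms after the gaze leaves
            self.on_select(target)


# Usage: toggle a simulated relay state when the virtual light switch is selected.
light_on = False


def toggle_light(target: str) -> None:
    global light_on
    light_on = not light_on
    # In the real system, a control signal would be sent to the Arduino mainboard here.


sel = DwellSelector(toggle_light)
for t in [0.0, 1.0, 2.0, 3.0, 4.0]:
    sel.update("light_switch", t)
print(light_on)  # True
```

Requiring the gaze to leave and return before a second activation prevents a long stare from toggling the light repeatedly.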

6.2. TV Control

Using television remotes can be a daunting task for individuals with tremor due to the precision and steadiness required. Our innovative system seamlessly integrates with televisions, allowing individuals with tremor to effortlessly control TVs without the need for physical remotes, simply by using their gaze.

During initial setup, an Arduino mainboard is connected to an infrared transmitter configured to emit the same signals as the TV's conventional remote, so that the TV can be controlled through our system. As shown in Figure 13, users interact with the system by fixing their gaze on projected virtual buttons for three seconds to activate commands such as "confirm", "back", "channel", and "volume". This user-centered design gives individuals with tremor a straightforward, accessible way to operate the TV accurately through virtual controls.
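Before a dwell timer can run, each gaze sample has to be mapped to the virtual button it falls on. A minimal hit test against axis-aligned button rectangles is sketched below; the button layout, the normalized coordinate system, and the command names (such as `channel_up`) are illustrative assumptions, and translating a command into the TV's infrared code is left to the Arduino side described above.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Button:
    command: str  # hypothetical command name, e.g. "confirm" or "channel_up"
    x: float      # left edge, normalized display coordinates in [0, 1]
    y: float      # top edge
    w: float      # width
    h: float      # height

    def contains(self, gx: float, gy: float) -> bool:
        # Half-open intervals so that a point on a shared edge belongs to one button only.
        return self.x <= gx < self.x + self.w and self.y <= gy < self.y + self.h


def hit_test(buttons: Sequence[Button], gx: float, gy: float) -> Optional[str]:
    """Return the command of the first button containing the gaze point, or None."""
    for b in buttons:
        if b.contains(gx, gy):
            return b.command
    return None


# Hypothetical 2x2 layout of projected buttons.
LAYOUT = [
    Button("confirm", 0.0, 0.0, 0.5, 0.5),
    Button("back", 0.5, 0.0, 0.5, 0.5),
    Button("channel_up", 0.0, 0.5, 0.5, 0.5),
    Button("volume_up", 0.5, 0.5, 0.5, 0.5),
]
print(hit_test(LAYOUT, 0.7, 0.2))  # back
```

A practical interface might also leave small gaps between buttons so that gaze jitter near an edge does not flicker between targets; that refinement is not shown here.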

6.3. Accessible Communication

Individuals with tremors often have difficulty moving around and reaching items at height because of involuntary body shaking and limited mobility (for example, wheelchair use), which significantly affects their daily communication. Discussing items that are far away or placed high up is particularly difficult without assistance. For instance, it can be hard for someone with tremor to discuss a painting hung high on a wall or to ask someone to retrieve an item from a distant location.

Our system can substantially reduce these communication barriers. As illustrated in Figure 14, an individual with tremor can control a stable virtual hand through eye movements to communicate about a painting hung high on a wall. As shown in Figure 9, they can also direct others to retrieve items by controlling the virtual hand. Additionally, when our system is integrated with a wheelchair, individuals with tremors can more easily reach different parts of the home, facilitating barrier-free communication. This spatial prompting approach simplifies communication and makes it clearer and more efficient.

The application of the system extends beyond the aforementioned scenarios to a wider range of living situations, including air conditioning temperature adjustment, automated curtain control, and kitchen appliance management. The system can be deeply customized and optimized based on users' specific needs, eliminating many interaction barriers in daily life and expanding the interaction range for individuals with tremors. By reducing the difficulty of daily tasks and decreasing reliance on external assistance, this system opens a new path for tremor patients towards a more independent, efficient, and comfortable lifestyle.

Figure 14.

An individual with tremor engaging in daily communication with a companion using the SAR System, discussing distant objects.

7. Conclusion

This study demonstrates the significant assistive role of the SAR system for individuals with tremor, enabling them to interact more independently and efficiently with their surrounding environment. The virtual stabilization algorithm played a crucial role in enhancing the system's ability to assist individuals with tremors in stable and effective interaction with remote objects, even in complex interactive tasks and environments. However, the study also identified shortcomings in the system's usability and interface design, indicating a need for future research to further optimize eye-tracking interfaces to better accommodate the unique motion interaction needs of individuals with tremor disorders. Additionally, future research should validate the system's practical benefits in a more diverse range of application scenarios and user groups to fully assess its potential and value in real-world settings.

Author Contributions

Conceptualization, K.W. and Z.S.; methodology, K.W., MJ.W. and Z.S.; software, MJ.W.; validation, K.W., MJ.W. and Z.S.; formal analysis, K.W. and Z.S.; investigation, K.W., MJ.W. and Z.S.; resources, Z.S.; data curation, MJ.W. and Z.S.; writing (original draft preparation), K.W. and MJ.W.; writing (review and editing), K.W.; visualization, K.W.; supervision, Z.S. and Q.H.; project administration, Z.S.; funding acquisition, K.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by National Natural Science Foundation of China, grant number 61902287.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Louis E D; Faust P L. Essential tremor: the most common form of cerebellar degeneration. Cerebellum & Ataxias, 2020, 7, 1-10. [CrossRef]

- Lenka A; Jankovic J. Tremor syndromes: an updated review. Frontiers in Neurology, 2021, 12, 684835. [CrossRef]

- Bain P G. Tremor. Parkinsonism & Related Disorders, 2007, 13, S369-74. [CrossRef]

- Louis E D; McCreary M. How common is essential tremor? Update on the worldwide prevalence of essential tremor. Tremor and Other Hyperkinetic Movements, 2021, 11. [CrossRef]

- Welton T; Cardoso F; Carr J A, et al. Essential tremor. Nature Reviews Disease Primers, 2021, 7(1), 83. [CrossRef]

- National Institute of Neurological Disorders and Stroke. Tremor. https://www.ninds.nih.gov/health-information/disorders/tremor. Accessed 10 Dec 2023.

- Louis E D. Treatment of essential tremor: are there issues we are overlooking? Frontiers in Neurology, 2012, 2, 91. [CrossRef]

- Zesiewicz T A; Elble R J; Louis E D, et al. Evidence-based guideline update: treatment of essential tremor: report of the Quality Standards subcommittee of the American Academy of Neurology. Neurology, 2011, 77(19), 1752-1755. [CrossRef]

- Dallapiazza R F; Lee D J; De Vloo P, et al. Outcomes from stereotactic surgery for essential tremor. Journal of Neurology, Neurosurgery & Psychiatry, 2019, 90(4), 474-482. [CrossRef]

- Carmigniani J; Furht B. Augmented reality: an overview. Handbook of Augmented Reality, 2011, 3-46.

- Wang K; Takemura N; Iwai D, et al. A typing assist system considering involuntary hand tremor. Transactions of the Virtual Reality Society of Japan, 2016, 21(2), 227-233.

- Wang K; Iwai D; Sato K. Supporting trembling hand typing using optical see-through mixed reality. IEEE Access, 2017, 5, 10700-10708. [CrossRef]

- Zhang X; Liu X; Yuan S M, et al. Eye tracking based control system for natural human-computer interaction. Computational Intelligence and Neuroscience, 2017, 2017. [CrossRef]

- Donaghy C; Thurtell M J; Pioro E P, et al. Eye movements in amyotrophic lateral sclerosis and its mimics: a review with illustrative cases. Journal of Neurology, Neurosurgery & Psychiatry, 2011, 82(1), 110-116. [CrossRef]

- De Gaudenzi E; Porta M. Towards effective eye pointing for gaze-enhanced human-computer interaction. 2013 Science and Information Conference. IEEE, 2013, 22-27.

- Nehete M; Lokhande M; Ahire K. Design an eye tracking mouse. International Journal of Advanced Research in Computer and Communication Engineering, 2013, 2(2), 1118-1121.

- Missimer E; Betke M. Blink and wink detection for mouse pointer control. Proceedings of the 3rd international conference on pervasive technologies related to assistive environments. 2010, 1-8.

- Shahid E; Rehman M Z U; Sheraz M, et al. Eye Monitored Wheelchair Control. 2019 International Conference on Electrical, Communication, and Computer Engineering (ICECCE). IEEE, 2019, 1-6. [CrossRef]

- Plaumann K; Babic M; Drey T, et al. Improving input accuracy on smartphones for persons who are affected by tremor using motion sensors. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, 2018, 1(4), 1-30. [CrossRef]

- Wacharamanotham C; Hurtmanns J; Mertens A, et al. Evaluating swabbing: a touchscreen input method for elderly users with tremor. Proceedings of the SIGCHI conference on human factors in computing systems. 2011, 623-626.

- Wang K; Tan D; Li Z, et al. Supporting Tremor Rehabilitation Using Optical See-Through Augmented Reality Technology. Sensors, 2023, 23(8), 3924. [CrossRef]

- Xu C; Zhang X; Guan Q, et al. Analysis of the electrophysiological features of tremor and essential tremor in Parkinson’s disease. Chinese Journal of Practical Nervous Diseases, 2017, 20(21),16-20.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).