1. Introduction

Exploring new approaches to innovating tertiary education is a worldwide pursuit driven by the increasing demand for graduates with competencies, as researchers have found that there is a “skill gap” between labor market requirements and the educational readiness of graduates in the past few years (Tee et al., 2024). Additionally, the labor market is constantly evolving due to the development of information technology and globalization, as are the competencies required for graduates (Rakowska & de Juana-Espinosa, 2021). Indeed, the primary causes of job loss are the changes in job requirements driven by digital transformation and automation (Kimball, 2021). Hence, higher education institutions are reexamining their current educational systems and practices to produce graduates better prepared for the workforce, thereby fostering the sustainability of higher vocational education.

In recent years, outcome-based education (OBE) has gained global recognition for its innovative approach to learning (Gurukkal, 2018). OBE creates an educational environment where students are motivated by what they can learn and apply to solve real-world challenges. It is characterized by its curriculum design, teaching and learning activities (TLAs), and assessment strategies (ASs), all driven by the exit learning outcomes that students are expected to achieve upon completing a program (Harden, 2009; Ortega & Cruz, 2016). The primary objective of OBE is to provide students with a focused and coherent academic program that enhances student engagement and develops the skills and competencies required by the market. Therefore, OBE teaching is inductive, which many academic researchers argue is more effective in motivating students to learn. Currently, the OBE approach is highly regarded and widely accepted as a strategy to reform and renew education policy worldwide (Gurukkal, 2018).

Despite strong advocacy and comprehensive implementation in global higher education, OBE has faced criticism from educators and academics. Challenges have arisen due to a lack of understanding among educators about the operational principles of OBE, particularly the alignment between course learning outcomes (CLOs) and program learning outcomes (Ling et al., 2021). Large-scale changes in long-practiced teaching and learning methods are difficult to implement (Guzman et al., 2017). In addition, assessments often prioritize course content and grades over the intended learning outcomes (ILOs) (Erdem, 2019). These issues highlight the need for a systematic and comprehensive evaluation of OBE implementation to identify the reasons for its varied outcomes and explore opportunities for enhancement (Glasser & Glasser, 2014).

Constructive alignment (CA) is an instructional concept that prioritizes the implementation of OBE, ensuring that TLAs and ASs are aligned with ILOs to enhance the achievement of various competencies (Biggs, 2014; Thian et al., 2018). This alignment is crucial for enhancing the attainment of graduate competencies (AGC). Project-based learning (PjBL), as a systematic teaching method, enables students to achieve different levels of competencies by linking projects with their professions (Mateos Naranjo et al., 2020). It is a student-centered process that caters to students’ needs and encourages active involvement in their learning (Mahedo & Bujez, 2014; Pradana, 2023). PjBL aligns with the principles of OBE, which emphasize student-centeredness, competency development, and real-world relevance. As Tang (2021) noted, implementing OBE with PjBL enhances student learning engagement. Therefore, drawing on the theory of CA, this study integrates PjBL as a TLA into OBE implementation and investigates how these aspects of OBE implementation are correlated and contribute to AGC in higher vocational education. The target population of this study comprises learners majoring in Cross-border E-commerce (CBEC) in Nanjing, Jiangsu province, China. Specifically, this study addresses the following research questions:

Q1. What is the relationship between ILOs and AGC among the CBEC learners?

Q2. What is the relationship between PjBL and AGC among the CBEC learners?

Q3. What is the relationship between ASs and AGC among the CBEC learners?

Q4. How does OBE implementation affect AGC among the CBEC learners?

The subsequent sections of this study are structured as follows:

Section 2 provides a comprehensive literature review and outlines the hypothesis development;

Section 3 introduces the research methodology employed in the study;

Section 4 details the results derived from the data analysis;

Section 5 engages in a discussion of the findings;

Section 6 explores the theoretical, practical, and managerial implications arising from the findings of the study; and

Section 7 ends with the conclusions and limitations of the study.

2. Literature Review and Hypothesis Development

2.1. Outcome-Based Education: Background and Practice

Outcome-based education (OBE) can be traced back to the early 1990s when William G. Spady (1994) proposed it as a reformative measure to enhance the quality of the American school system. Since then, OBE has been widely promoted and extended to higher education, leading to profound changes in quality assurance mechanisms (Pham & Nguyen, 2023).

At its core, OBE prioritizes attaining specific, desired learning outcomes. Spady (1994) defined OBE as “clearly focusing and organizing everything in an educational system around what is essential for all students to be able to do successfully at the end of their learning experiences.” This definition is supported by King and Evans (1991), who describe outcomes as the end products of the instructional process. Similarly, Syeed et al. (2022) advocate for OBE as a way of designing, developing, and documenting instruction centered on goals and outcomes. In the OBE model, the teaching and learning process is oriented toward the ends, purposes, accomplishments, and results that students can achieve by the end of a program. Therefore, OBE is an educational process involving students in their learning journey to achieve well-defined outcomes.

Although the concept of OBE is straightforward, its implementation presents various challenges. Firstly, ILOs should be precisely articulated and communicated to all stakeholders, including students, educators, entrepreneurs, and academic institutions. Secondly, course contents, educational strategies, teaching methods, and assessment procedures must be aligned with the learning outcomes. Lastly, the learning environment should be tailored to facilitate student engagement and support the achievement of the learning outcomes, with all modifications adequately documented. All in all, OBE requires a comprehensive strategy to teach, measure, and track well-defined outcomes that are crucial for students’ professional development.

Therefore, OBE represents a significant departure from traditional education, where teachers determine instruction content. Instead, OBE focuses on what students should be able to do at the end of a program. According to Ortega and Ortega-Dela Cruz (2016), the paradigm shift challenged educators to engage and act as learning facilitators rather than mere conveyors of knowledge. Transitioning from conventional education to OBE necessitates focusing on desired outcomes, ensuring that instructors and learners align with the educational objectives (Orfan, 2021). Therefore, a fundamental aspect of OBE is the shift towards a student-centered approach in teaching and learning processes. OBE prioritizes students’ needs, aims, and learning outcomes over traditional teacher-oriented methods. It emphasizes active learning, direct or indirect assessment of student progress, and the practical application of knowledge. This approach fosters an environment where students take ownership of their education and are better prepared for real-world challenges.

2.2. The Relationship between ILOs and PjBL

Studies have demonstrated the direct effect of ILOs on teaching and learning activities (Orfan, 2021). Libba et al. (2020) found that mission statements, learning objectives, and course outcomes can vary significantly across institutions, even within the same subject area. This variation leads to differences in teaching materials and methodologies, with some programs excelling in specific teaching domains while others focus on generic competencies. Precise and reasonable objectives are essential for effectively guiding all teaching activities, ensuring that the design of these activities closely aligns with the ILOs (Wu et al., 2023).

OBE emphasizes the importance of clear ILOs to help students achieve desired outcomes. Learning is effectively transferred to students through well-defined outcomes formulated for specific programs (Akramy, 2021). Therefore, teachers must first consider the pre-determined outcomes of the program when designing and preparing classroom activities, guiding students toward these goals (Orfan, 2021). This approach aligns with the concept of CA, proposed by Biggs and Tang (2007), where ILOs guide the development and implementation of teaching approaches that facilitate knowledge acquisition and skill development.

Currently, PjBL is considered one of the most effective teaching and learning activities for enhancing the achievement of ILOs. This hands-on approach not only aligns with OBE guidelines but also strengthens students’ learning engagement. In OBE, ILOs are strategically crafted to promote more effective learning at all levels (Driscoll & Wood, 2023).

Given these insights, we can hypothesize that:

H1: ILOs have a significant positive and direct effect on PjBL.

2.3. The Relationship between ILOs and ASs

The design and selection of ASs are deeply rooted in the ILOs (Rao, 2020). The effectiveness of assessment methods depends on how well they measure these outcomes (Alonzo et al., 2023). Alonzo et al. (2023) emphasized the importance of aligning assessment tasks with learning outcomes to accurately evaluate student achievements. CA further highlights the importance of developing ASs that can measure student learning relative to desired outcomes. Therefore, ILOs are crucial in shaping curriculum design, TLAs, and the overall assessment approach (Glasson, 2009).

Given this evidence, the following hypothesis is proposed:

H2: ILOs have a positive and direct effect on ASs.

2.4. The Relationship between ILOs and AGC

ILOs refer to the level of understanding and performance that students are expected to achieve at the end of a learning experience (Biggs & Tang, 2007; Rao, 2020; Tam, 2014). They provide verifiable statements of what learners are expected to know, understand, and/or be able to do (Spady, 1994). Previous research has shown that different requirements of ILOs are positively related to achieving graduate employability (Kim, 2015; Tong & Gao, 2022). Clear teaching objectives and predefined expectations can inspire students to become creative and innovative thinkers, fostering successful implementation of OBE. Additionally, Soares et al. (2017) highlight that clearly defined learning outcomes can enhance students’ learning experiences and subsequently improve their employability prospects.

Therefore, the following hypothesis is proposed:

H3: ILOs have a positive and direct effect on AGC.

2.5. The Relationship between PjBL and AGC

Previous studies have demonstrated that PjBL directly affects the development of graduate competence (Lozano et al., 2017; Lozano et al., 2019). PjBL provides students with opportunities to engage in activities that foster competence development, such as sustainability assessment or stakeholder engagement, within a safe learning environment (Cörvers et al., 2016; Heiskanen et al., 2016). Empirical research has shown that PjBL enhances students’ IT skills (Hron & Friedrich, 2003), academic performance (Baran & Maskan, 2011), and perception of the learning profession (Lavy & Shriki, 2008). By leveraging their existing knowledge, skills, and attitudes, PjBL promotes student competence and a sense of accomplishment (Lake et al., 2016; Gallagher & Savage, 2020).

Furthermore, PjBL integrates learning objectives into long-term projects related to real-world business practices and designed to solve actual business tasks and problems (Almulla, 2020). Through PjBL, different levels and types of graduate competence are incorporated into projects where students either work with actual clients or initiate and launch concepts they have created. Therefore, PjBL enables students to develop various professional competencies aligned with the ILOs required in the competence framework. This indicates that PjBL plays a significant role in mediating the relationship between the ILOs and AGC.

The design of ASs is informed by TLAs (Gunarathne et al., 2019; Tam, 2014). Jones et al. (2021) highlight the diverse dimensions of learning, indicating that a single assessment method may not fully capture the assessment needs of the curriculum, its objectives, and outcomes. A comprehensive evaluation encompasses various types of assessment: formative and summative, direct and indirect, course-focused and longitudinal, as well as authentic and course-embedded (Inés et al., 2020). A PjBL environment is particularly well suited to these types of assessment. Additionally, according to Guerrero-Roldán et al. (2018), selecting teaching and learning activities is essential for achieving and assessing competencies.

Based on the above analysis, the following hypotheses are proposed:

H4: PjBL has a positive and direct effect on AGC.

H4a: PjBL plays a mediating role in the relationship between the formulation of ILOs and AGC.

H5: PjBL has a positive and direct effect on ASs.

2.6. The Relationship between ASs and AGC

The design of ASs influences AGC (Jackson, 2016). ASs that are demanding, reliable, and engaging can enhance valuable student learning (Carless et al., 2017; Sadler, 2016). They have been widely acknowledged as essential instruments for improving the readiness of undergraduate students for future employment (Colthorpe et al., 2021). These findings support the notion that aligning assessments with ILOs can enhance AGC.

Furthermore, authentic assessment can positively impact students by elevating their aspirations and boosting their motivation by showcasing the connection between curriculum activities and their desired graduate outcomes (Frey et al., 2012). Similarly, teachers can use assessment to engage students in teaching and learning activities (Black & Wiliam, 2009), as any approach that helps students learn is critical in increasing student learning gains (Lau & Ho, 2016). Alonzo et al. (2023) have emphasized the importance of effectively aligning assessment tasks with learning outcomes to evaluate students’ achievement of those outcomes. Cifrian et al. (2020) suggest that aligning assessments with learning objectives increases students’ opportunities to learn and practice the knowledge and skills required for successful assessment performance.

Besides, Biggs (2014) has proposed that constructive alignment involves defining the learning outcomes that students are expected to achieve before the teaching process begins. Subsequently, teaching and assessment methods are designed to effectively facilitate attaining those outcomes and assess the level of achievement. In line with this, assessment gathers evidence that validates and documents meaningful learning in the classroom (Wiggins & McTighe, 2005). The related literature confirms the desirability of using diverse assessment methods within active learning (Lynam & Cachia, 2018), which leads to better grades and helps students achieve educational learning goals and improve performance (Day et al., 2018). Studies have shown that aligning learning with learning outcomes and competencies can make assessment more transparent for students while assisting in quality assurance and course design (Guerrero-Roldán & Noguera, 2018). Therefore, it is believed that PjBL has a direct impact on the design of ASs, and PjBL and ASs play a chain mediating role in the relationship between the formulation of ILOs and AGC.

Hence, the following hypotheses are proposed:

H6: ASs have a positive and direct effect on AGC.

H6a: ASs play a mediating role in the relationship between ILOs and AGC.

H6b: PjBL and ASs play a chain mediating role in the relationship between ILOs and AGC.

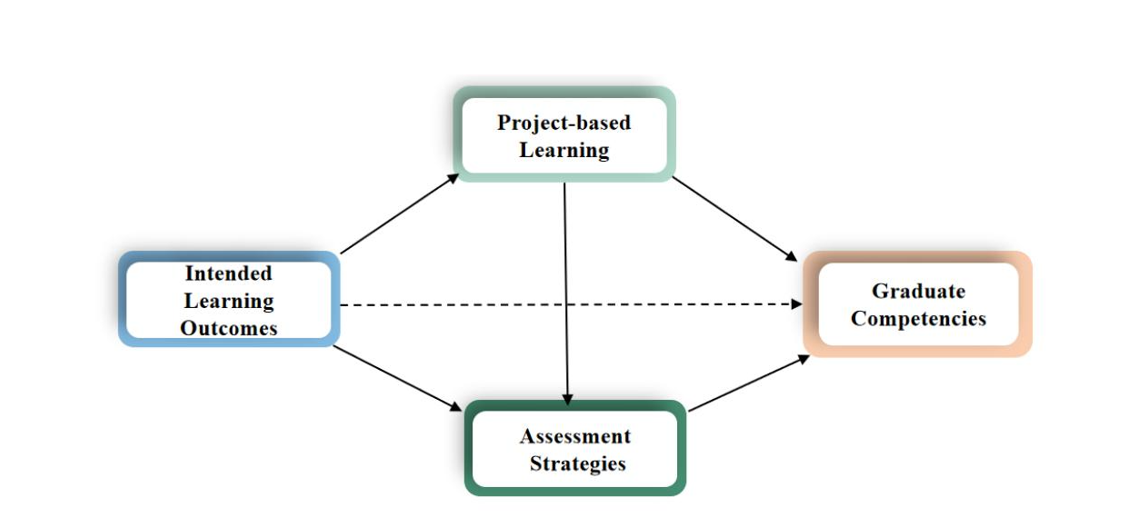

Informed by the theory of CA and the discussions above, a hypothesized framework was developed to examine the relationship between OBE implementation and AGC and the mediating roles of PjBL and ASs. This framework is depicted in Figure 1.

3. Research Methodology

3.1. Sampling and Protocol

The population for this study was purposively selected from four higher vocational colleges in Nanjing, the capital of Jiangsu province, located in eastern China. The researchers chose these colleges as research sites because they had implemented OBE at the time of the research. Therefore, respondents from these colleges could perceive the effect of OBE implementation and assess the extent to which they had already attained the competencies expected upon completing a program of study.

The sample size was determined according to the criterion of SEM, which recommends a minimum sample size of ten times the total number of observed variables (Zhang et al., 2020). With 30 observed variables in this survey, 320 respondents were randomly selected from the higher vocational colleges through a lottery system to participate in the survey. Informal interviews were conducted to validate the responses of the participating CBEC learners. Ultimately, 301 valid samples with a retrieval rate of 94% were adopted for data analysis.
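The arithmetic behind the sampling figures above can be checked with a short sketch. The function names are illustrative only; the inputs (30 observed variables, 320 distributed questionnaires, 301 valid responses) are the values reported in the text.

```python
def minimum_sample_size(n_observed_variables: int, ratio: int = 10) -> int:
    """Rule-of-thumb minimum N: `ratio` respondents per observed variable."""
    return ratio * n_observed_variables


def response_rate(valid: int, distributed: int) -> float:
    """Valid questionnaires as a percentage of those distributed."""
    return 100.0 * valid / distributed


required = minimum_sample_size(30)   # 30 observed variables, as reported
rate = response_rate(301, 320)       # 301 valid responses out of 320

print(f"minimum N = {required}, valid N = 301, retrieval rate = {rate:.1f}%")
```

Under the ten-to-one rule, the 301 valid responses sit just above the minimum of 300.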

Before initiating the online survey, the researchers sought permission from the Human Research Ethics Committee of Universiti Sains Malaysia (USM). Additionally, consent was obtained from the deputy deans of the involved schools at the four higher vocational colleges. Afterward, the survey was introduced to the respondents to ensure their understanding of the study. To facilitate data collection, the questionnaire link or QR code, generated automatically using a network platform (i.e., Sojump), was shared with the class groups via WeChat by their CBEC practitioners. Finally, participants were informed of the study’s topic, purpose, and significance, and were guided to complete the online questionnaire anonymously and voluntarily.

3.2. Instrument Design and Development

The questionnaire was designed based on the previous instruments whose validity and reliability had already been validated by the original researchers. The questionnaire had two primary sections. The initial section comprised demographic inquiries, encompassing gender, school affiliation, major, and the duration of exposure to OBE. The subsequent section, constituting the core of the questionnaire, featured four latent variables along with 24 measurement items.

Selecting previous questionnaires was guided by two key factors: their proven validity and reliability, and their adherence to OBE guidelines, which were deemed suitable for this investigation. Additionally, since the targeted respondents primarily consisted of students, the chosen instruments were deemed relevant to this study. Authorization to adapt the questionnaire was obtained from the original researchers, ensuring ethical compliance.

Respondents were tasked with rating the measurement items on a 7-point Likert scale, ranging from 1 (strongly disagree) to 7 (strongly agree). For ILOs, seven items were adapted from Custodio et al. (2019); for PjBL, six items were sourced from Grossman et al. (2019); for ASs, seven items were drawn from Baguio (2019) and Custodio et al. (2019); and for AGC, four items were adapted from Custodio et al. (2019).

To ensure content validity, two expert panels were assembled to scrutinize the adapted questionnaire items, identifying any potential issues such as irrelevance, ambiguity, or unclear wording. Following their recommendations, two additional demographic questions were incorporated into the initial section: respondents' age and the duration of their involvement in PjBL. Subsequently, language translators were engaged to assess the clarity and appropriateness of the items in the Chinese version, resulting in minor adjustments for linguistic accuracy.

As suggested by language experts, a brief introduction to OBE guidelines was included at the head of the questionnaire to enhance respondents’ understanding of OBE and help them assess its effect on attaining graduate competence. Lastly, to assess the face validity of the adapted instrument (Boateng et al., 2018), six students from the target demographic participated in a voluntary pretest of the initial questionnaire. Their feedback revealed no ambiguities or concerns requiring further clarification. Further details regarding the measurement items can be found in Appendix A.

3.3. Statistical Analysis

The data analysis was conducted using SPSS 23.0 and Amos 24.0. First, the Harman single-factor test was carried out to test for common method bias. Second, descriptive analysis was conducted to examine the sample characteristics. Third, structural equation modeling (SEM) was performed to investigate the measurement and structural models. Specifically, confirmatory factor analysis was performed to examine reliability and validity through factor loadings, composite reliability (CR), and average variance extracted (AVE), and goodness-of-fit (GoF) indices and path coefficients were used to assess the structural model. Lastly, the bootstrapping method was used to evaluate the statistical significance of the hypothesized mediating effects.

4. Empirical Analysis

4.1. Common Method Bias (CMB)

All data were collected from a single source, CBEC learners in public higher vocational colleges, through self-reported questionnaires. This uniform data collection method may lead to consistent responses, potentially resulting in common method bias (CMB) and affecting the study’s validity and reliability (Podsakoff et al., 2012). To address this issue, the Harman single-factor test was conducted using SPSS 23.0 to check for CMB (Podsakoff et al., 2003). Employing exploratory factor analysis (EFA) for the Harman single-factor test (Podsakoff & Organ, 1986), the results indicated that four factors with eigenvalues exceeding 1 accounted for 79.800% of the total explained variance. Moreover, the first factor explained 46.444% of the variance, below the critical threshold of 50% (Hair et al., 2010). This suggests that CMB was not a serious concern in the collected data and was unlikely to affect the observed relationships among variables.
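The logic of the single-factor check described above can be sketched as follows. This is a minimal illustration, not the SPSS procedure used in the study: it approximates the unrotated first factor by the largest eigenvalue of the item correlation matrix, and it runs on synthetic data (301 respondents, 24 items in four equal blocks) generated purely for demonstration.

```python
import numpy as np


def first_factor_share(responses: np.ndarray) -> float:
    """Proportion of total variance captured by the first unrotated component."""
    corr = np.corrcoef(responses, rowvar=False)     # item-by-item correlations
    eigenvalues = np.linalg.eigvalsh(corr)[::-1]    # sorted largest first
    return float(eigenvalues[0] / eigenvalues.sum())


# Synthetic stand-in for survey data (NOT the study's data): four independent
# latent factors, each driving six of the 24 items, plus item-level noise.
rng = np.random.default_rng(0)
factors = rng.normal(size=(301, 4))
loadings = np.kron(np.eye(4), np.ones((1, 6)))      # shape (4, 24)
responses = factors @ loadings + 0.5 * rng.normal(size=(301, 24))

share = first_factor_share(responses)
print(f"first factor explains {share:.1%} of the variance")
print("below the 50% threshold:", share < 0.5)
```

A first-factor share below 50% is read, as in the text, as an indication that a single common factor does not dominate the responses.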

4.2. Sample Characteristics

As depicted in Table 1, the gender distribution of the respondents in this study was relatively balanced, with males accounting for 55.1% and females for 44.9%. Respondents aged 20-22 formed the largest group in the sample (82.4%), followed by those aged 23-25 (16.6%) and those aged 26 and above (1.0%). The respondents were distributed relatively evenly among the four institutions, which accounted for 22.3%, 26.2%, 27.2%, and 24.3% of the sample, respectively. Regarding their enrolled programs, about half of the participants specialized in cross-border e-commerce (50.5%), while smaller percentages specialized in e-commerce, international trade, and international business, comprising 8.6%, 13.6%, and 13.3%, respectively. Among these respondents, 27.2% had two years of experience in OBE learning, and 72.8% had three years of OBE learning experience. Furthermore, respondents were asked to specify how long they had been engaged in PjBL: 77.7% had two years of engagement in PjBL, while 22.3% had three years of PjBL experience. No respondents reported having only one year of experience in project-based engagement.

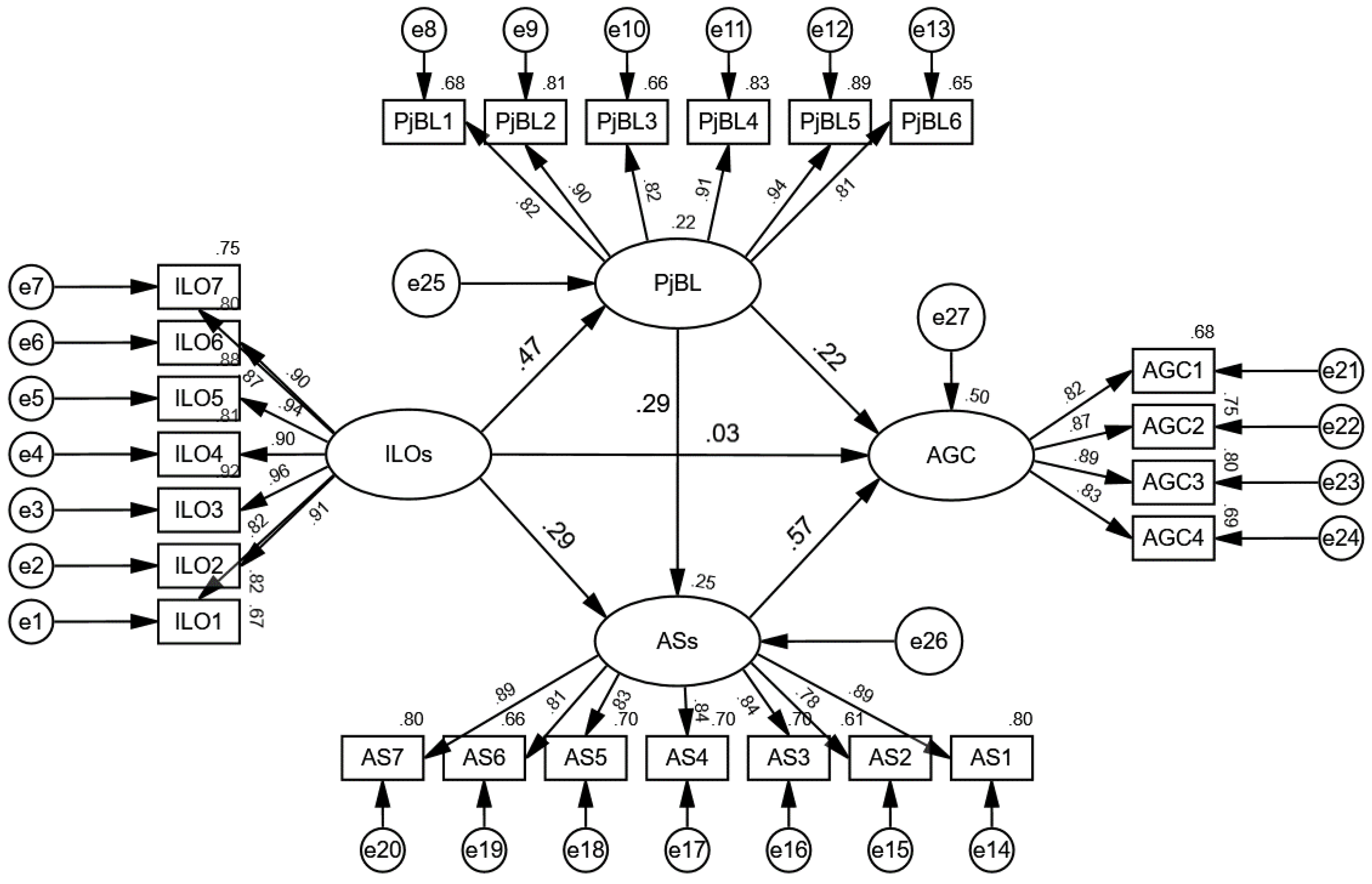

4.3. Measurement Model Verification

Anderson and Gerbing (1988) advocate for using confirmatory factor analysis (CFA) to validate the measurement model. One widely accepted reliability measure is Cronbach’s alpha coefficient (α). Field (2009) asserts that a Cronbach’s alpha value exceeding 0.7 indicates satisfactory reliability of measurement items. Additionally, factor loading, composite reliability (CR), and average variance extracted (AVE) are commonly employed to assess convergent validity (Chen & Liu, 2019; Huang & Chang, 2020; Jo, 2023). Hair et al. (2019) suggest that factor loadings above 0.5 are considered significant, while values below 0.45 may warrant item deletion (Hooper et al., 2008). Doval (2017) suggests a minimum threshold of 0.70 for composite reliability. Furthermore, Hair et al. (2019) recommend using AVE as a measure of convergent validity in structural equation modeling (SEM), with values of 0.5 or higher considered acceptable. The reliability and validity values of the four latent variables are presented in Table 2.

As illustrated in Table 2, the Cronbach’s alpha values for the four latent variables ranged between 0.914 and 0.967. All the values exceeded the cut-off value of 0.70, indicating excellent reliability across the measured constructs. In addition, all standardized factor loadings were above 0.5 and significant at p < 0.001, with t-values ranging from 15.442 to 22.017, surpassing the suggested value of 1.96. This indicates that the factor loadings of the individual items in the four-factor model were all significant, supporting the reliability of all constructs. Additionally, the CR scores ranged from 0.915 to 0.967, and the AVE scores ranged from 0.710 to 0.808, above the suggested cut-off values of 0.70 and 0.50, respectively. These results provide strong evidence for convergent validity.
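For readers who wish to reproduce CR and AVE from standardized factor loadings, the standard formulas can be sketched as below. The loadings are hypothetical values chosen for illustration and are not taken from the study's tables.

```python
def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)   # error variance of each item
    return total ** 2 / (total ** 2 + error)


def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


# Hypothetical standardized loadings for a four-item construct.
hypothetical_loadings = [0.85, 0.88, 0.90, 0.82]

cr = composite_reliability(hypothetical_loadings)
ave = average_variance_extracted(hypothetical_loadings)

print(f"CR = {cr:.3f} (threshold 0.70), AVE = {ave:.3f} (threshold 0.50)")
```

With these illustrative loadings, CR is about 0.921 and AVE about 0.745, so both cut-offs would be met.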

Furthermore, discriminant validity was assessed to determine the extent to which each construct in the model differed from the others (Hair et al., 2019). This was done by comparing the square root of each construct’s AVE with its correlations with the other latent variables. According to Fornell and Larcker (1981), discriminant validity is established when the square root of a construct’s AVE is higher than its Pearson correlation coefficients with the other constructs.

Table 3 presents the discriminant validity test results. The values below the diagonal represent the Pearson correlation coefficients between constructs, all of which are smaller than the square root of the AVE values on the diagonal. In conclusion, all research constructs met the requirements for discriminant validity.
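The Fornell-Larcker comparison described above can be expressed as a small check. The AVE values and the correlation matrix below are hypothetical placeholders, since the table itself is not reproduced here; only the comparison rule follows the text.

```python
import numpy as np


def fornell_larcker_ok(ave, corr) -> bool:
    """True if sqrt(AVE) of every construct exceeds its correlations with all others."""
    root_ave = np.sqrt(np.asarray(ave, dtype=float))
    corr = np.asarray(corr, dtype=float)
    off_diag = np.abs(corr - np.diag(np.diag(corr)))   # zero out the diagonal
    return bool(np.all(root_ave > off_diag.max(axis=1)))


# Hypothetical inputs for four constructs (ILOs, PjBL, ASs, AGC).
hypothetical_ave = [0.71, 0.75, 0.78, 0.80]
hypothetical_corr = [
    [1.00, 0.45, 0.40, 0.35],
    [0.45, 1.00, 0.42, 0.50],
    [0.40, 0.42, 1.00, 0.65],
    [0.35, 0.50, 0.65, 1.00],
]

passes = fornell_larcker_ok(hypothetical_ave, hypothetical_corr)
print("discriminant validity (Fornell-Larcker):", passes)
```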

4.4. Structural Model Analysis

This study evaluated the structural model through goodness-of-fit (GoF) indices and path coefficients. Firstly, a GoF test aims to see how well the empirical data fit the hypothesized model. Many researchers have explored using model fit indices to assess overall model quality (Clark & Bowles, 2018; Montoya & Edwards, 2021; Shi et al., 2019). These indices include the Tucker-Lewis Index (TLI), the Comparative Fit Index (CFI), the Incremental Fit Index (IFI), the Goodness-of-Fit Index (GFI), and the Adjusted Goodness-of-Fit Index (AGFI), with values above 0.9 indicating good model fit. In contrast, for the Root Mean Square Error of Approximation (RMSEA) and the Standardized Root Mean Square Residual (SRMR), values below 0.08 signify a good fit (Bagozzi & Yi, 2012). Moreover, the chi-square (χ²) value, degrees of freedom (df), and the ratio χ²/df were used to assess overall model fit.

However, the chi-square value is sensitive to sample size and may not always produce precise results (Tong & Bentler, 2013). As Bollen et al. (1992) noted, when the sample size is larger than 200 in SEM, it is common for the chi-square value to be excessively large, suggesting a poor model fit. To mitigate this, the GoF values can be adjusted through the Bootstrap correction, which provides an alternative way to obtain a better result (Bollen et al., 1992). The ratio of chi-square to degrees of freedom should ideally be less than 3.0 (Kline, 2004). More importantly, other fit indices can provide a more rigorous standard for verifying structural model fit.

The recommended threshold reference values of these indices, alongside the tested results from this study, are shown in Table 4. As illustrated in Table 4, the results indicated a good fit for the structural model with χ²/df = 1.79, IFI = 0.97, CFI = 0.97, TLI = 0.97, GFI = 0.94, AGFI = 0.93, SRMR = 0.03, and RMSEA = 0.05. All the model fit indices met the suggested threshold standards, confirming that the proposed structural model was acceptable. This indicates a strong alignment between the collected data and the proposed structural model.
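The comparison of the reported indices against the thresholds stated above can be made explicit with a short sketch. The dictionary layout and function name are illustrative; the numbers are the reported values and the cut-offs given in the text.

```python
# Cut-offs as described in the text: "min" means the index should exceed the
# value, "max" means it should fall below it.
THRESHOLDS = {
    "chi2/df": ("max", 3.0),
    "IFI": ("min", 0.90),
    "CFI": ("min", 0.90),
    "TLI": ("min", 0.90),
    "GFI": ("min", 0.90),
    "AGFI": ("min", 0.90),
    "SRMR": ("max", 0.08),
    "RMSEA": ("max", 0.08),
}

# Fit indices reported for the structural model.
REPORTED = {
    "chi2/df": 1.79, "IFI": 0.97, "CFI": 0.97, "TLI": 0.97,
    "GFI": 0.94, "AGFI": 0.93, "SRMR": 0.03, "RMSEA": 0.05,
}


def check_fit(values, thresholds=THRESHOLDS):
    """Return {index name: True if the value satisfies its cut-off}."""
    results = {}
    for name, value in values.items():
        kind, cutoff = thresholds[name]
        results[name] = value > cutoff if kind == "min" else value < cutoff
    return results


results = check_fit(REPORTED)
for name, ok in results.items():
    print(f"{name:8s} {REPORTED[name]:5.2f}  {'ok' if ok else 'outside threshold'}")
```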

In addition to assessing the GoF indices, SEM can be used to examine the significance and strength of the path relationships among the variables.

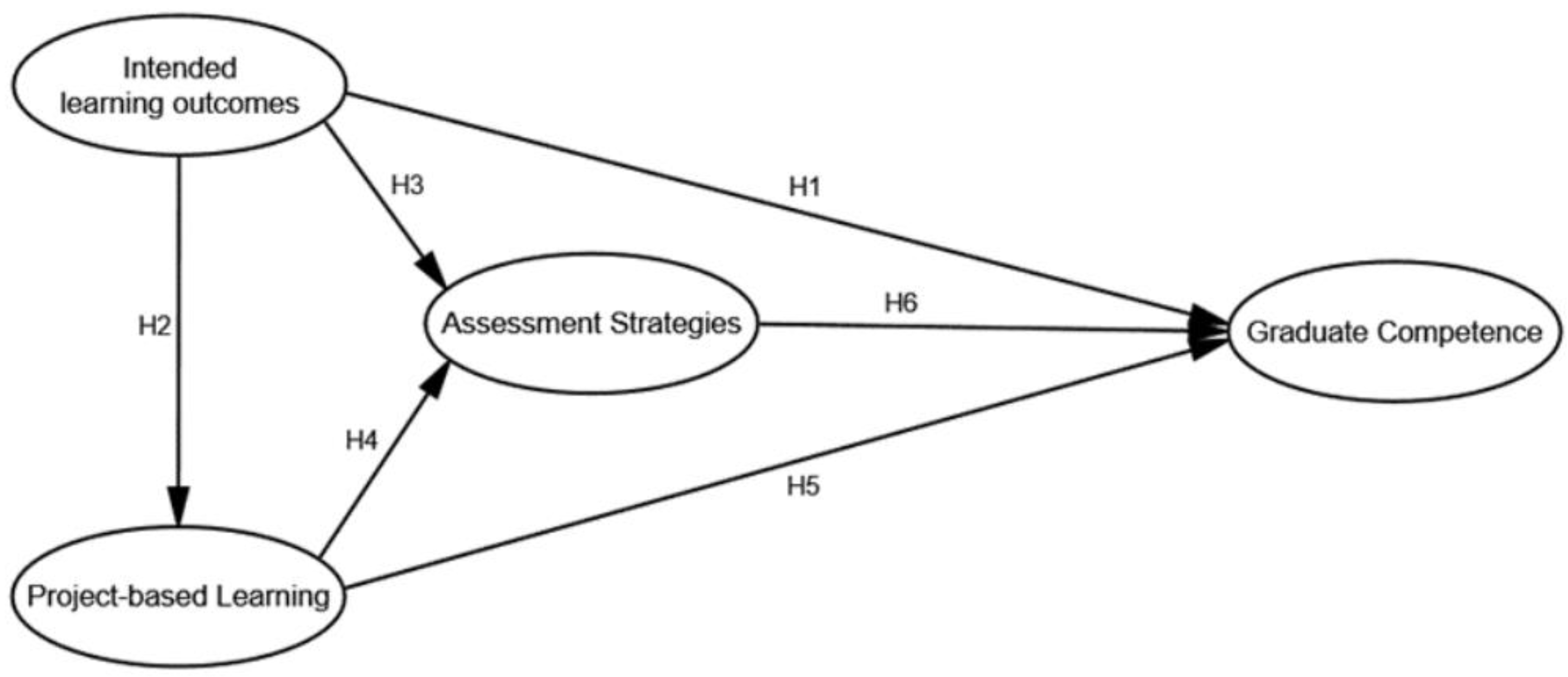

Figure 2 presents the explanatory variance and path coefficients with standardized parameter estimates.

In the hypothesized model, ILOs accounted for 22% of the variance in PjBL, with a standardized regression coefficient of 0.468, indicating a significant positive relationship between the two constructs. The combined influence of ILOs and PjBL explained 25% of the variance in ASs, with coefficients of 0.290 and 0.295, respectively, suggesting that both are positively associated with ASs. Moreover, ILOs, PjBL, and ASs collectively explained 50% of the variance in AGC, with standardized regression coefficients of 0.026, 0.224, and 0.566, respectively, indicating satisfactory predictive strength within the hypothesized model. However, the standardized path coefficient of 0.026 for the direct path from ILOs to AGC falls below the recommended threshold value (0.20), suggesting a minimal direct contribution (Chin, 1998). This weak direct relationship underscores the roles of PjBL and ASs in mediating the indirect effect of ILOs on AGC within the hypothesized framework.
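As a consistency check, the explained variances quoted above can be approximately recovered from the standardized path coefficients alone. The sketch below assumes a recursive path model with standardized variables and ILOs as the only exogenous construct; it is an illustration of the arithmetic, not the Amos estimation itself, and the variable names are hypothetical.

```python
import numpy as np

# Standardized path coefficients as reported in the text.
b_ilo_pjbl = 0.468
b_ilo_as, b_pjbl_as = 0.290, 0.295
b_ilo_agc, b_pjbl_agc, b_as_agc = 0.026, 0.224, 0.566

# Model-implied correlations among the predictors (path tracing rules).
r_ilo_pjbl = b_ilo_pjbl
r_ilo_as = b_ilo_as + b_pjbl_as * r_ilo_pjbl
r_pjbl_as = b_pjbl_as + b_ilo_as * r_ilo_pjbl


def r_squared(betas, predictor_corr) -> float:
    """Explained variance of a standardized outcome: beta' R beta."""
    betas = np.asarray(betas, dtype=float)
    return float(betas @ np.asarray(predictor_corr, dtype=float) @ betas)


r2_pjbl = r_squared([b_ilo_pjbl], [[1.0]])
r2_as = r_squared([b_ilo_as, b_pjbl_as], [[1.0, r_ilo_pjbl], [r_ilo_pjbl, 1.0]])
r2_agc = r_squared(
    [b_ilo_agc, b_pjbl_agc, b_as_agc],
    [[1.0, r_ilo_pjbl, r_ilo_as],
     [r_ilo_pjbl, 1.0, r_pjbl_as],
     [r_ilo_as, r_pjbl_as, 1.0]],
)

print(f"R2(PjBL) = {r2_pjbl:.2f}, R2(ASs) = {r2_as:.2f}, R2(AGC) = {r2_agc:.2f}")
```

Under these assumptions the implied values round to 0.22, 0.25, and 0.50, in line with the percentages reported in the text.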

4.5. Hypotheses Tested

Applying SEM in AMOS with maximum likelihood estimation provided robust results for evaluating the hypothesized constructs in this study. As detailed in Table 5, the empirical results supported five of the six direct-effect hypotheses. ILOs were found to have a positive and significant influence on PjBL (β = 0.468, p < 0.001), affirming H1. ILOs also had a significant positive relationship with ASs (β = 0.290, p < 0.001), confirming H2. PjBL was significantly linked to AGC (β = 0.224, p < 0.001), validating H4, and PjBL was positively related to ASs (β = 0.295, p < 0.001), supporting H5. ASs were strongly associated with AGC (β = 0.566, p < 0.001), confirming H6. However, the direct effect of ILOs on AGC was not significant (β = 0.026, p > 0.05), leading to the rejection of H3. This suggests that ILOs may exert an indirect influence on AGC, potentially mediated by PjBL and ASs.

4.6. Mediating Effects of Project-based Learning and Assessment Strategies

To analyze the mediating effects within the hypothesized model, the bootstrap method with 1,000 resamples and 95% confidence intervals, as suggested by MacKinnon (2008), was employed to verify the mediating roles of PjBL and ASs. A mediating effect is recognized as significant when the Z value exceeds 1.96 and the 95% bias-corrected CI does not include 0 (Shao & Kang, 2022).

Table 6 presents the direct, indirect, and total effects of the hypothesized model. For the total effect, both the bias-corrected and the percentile 95% confidence intervals excluded 0 (Z = 3.359, 95% bias-corrected CI [0.156, 0.511], 95% percentile CI [0.155, 0.510]), indicating a significant total effect. Similarly, the total indirect effect was significant (Z = 4.235, 95% bias-corrected CI [0.171, 0.442], 95% percentile CI [0.174, 0.448]). However, the direct effect of ILOs on AGC was not significant, with both the bias-corrected and percentile confidence intervals including 0 (Z = 0.375), indicating that PjBL and ASs fully mediate the relationship between ILOs and AGC.
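The bootstrap logic behind these confidence intervals can be illustrated with a simplified sketch. It makes several assumptions that differ from the study: it uses ordinary least squares on observed scores rather than a latent-variable SEM, a single mediator, a percentile interval only (no bias correction), and synthetic data generated for the example. All function and variable names are hypothetical.

```python
import numpy as np


def ols_slopes(y, *predictors):
    """Slopes from an OLS regression of y on the predictors (intercept dropped)."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef[1:]


def indirect_effect(x, m, y):
    """Product-of-coefficients estimate a*b for the path X -> M -> Y."""
    a = ols_slopes(m, x)[0]        # effect of X on M
    b = ols_slopes(y, x, m)[1]     # effect of M on Y, controlling for X
    return a * b


def bootstrap_ci(x, m, y, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = np.random.default_rng(seed)
    n = len(x)
    estimates = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)       # resample cases with replacement
        estimates[i] = indirect_effect(x[idx], m[idx], y[idx])
    lower, upper = np.percentile(estimates, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return float(lower), float(upper)


# Synthetic data with a built-in mediated effect (NOT the study's data).
rng = np.random.default_rng(1)
n = 301
x = rng.normal(size=n)
m = 0.5 * x + rng.normal(size=n)
y = 0.6 * m + rng.normal(size=n)

lower, upper = bootstrap_ci(x, m, y)
print(f"95% percentile CI for the indirect effect: [{lower:.3f}, {upper:.3f}]")
print("interval excludes 0:", lower > 0 or upper < 0)
```

An interval that excludes 0 is interpreted, as in the text, as evidence of a significant indirect effect.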

To examine the mediating effects of PjBL and ASs specifically, three alternative structural models were developed and tested.

Model 1: PjBL as a Mediator

This model assessed the indirect effect of ILOs on AGC through PjBL. The fit indices demonstrated a satisfactory fit (χ²/df = 2.400, IFI = 0.970, CFI = 0.970, TLI = 0.965, GFI = 0.908, AGFI = 0.879, SRMR = 0.031, RMSEA = 0.068), and the confidence intervals for the indirect effect did not include 0, supporting hypothesis 5a.

Model 2: ASs as a Mediator

This model assessed the indirect effect of ILOs on AGC through ASs, also exhibiting satisfactory fit indices (χ²/df = 2.619, IFI = 0.962, CFI = 0.961, TLI = 0.955, GFI = 0.893, AGFI = 0.861, SRMR = 0.036, RMSEA = 0.073). The confidence intervals for the indirect effect did not include 0, indicating that the indirect effect of ILOs on AGC through ASs was significant, thereby verifying hypothesis 6a.

Model 3: PjBL and ASs as Chain Mediators

This integrated model examined the sequential mediating effect of PjBL and ASs in the relationship between ILOs and AGC, yielding satisfactory fit indices. The results confirmed a positive and significant sequential mediating effect (Z = 2.031, 95% bias-corrected CI [0.020, 0.157], 95% percentile CI [0.019, 0.148]), supporting hypothesis 6b.
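To make the phrase "satisfactory fit" concrete, the sketch below screens the indices reported for Models 1 and 2 against commonly cited rule-of-thumb cutoffs. The cutoffs are assumptions chosen for illustration: conventions vary across sources, and this section does not restate the thresholds the authors applied. The names `CUTOFFS`, `MODEL_1`, `MODEL_2`, and `screen_fit` are hypothetical.

```python
import operator

# Assumed rule-of-thumb cutoffs (not taken from this section of the paper).
CUTOFFS = {
    "chi2/df": (operator.lt, 3.0),
    "IFI": (operator.gt, 0.90),
    "CFI": (operator.gt, 0.90),
    "TLI": (operator.gt, 0.90),
    "GFI": (operator.gt, 0.90),
    "AGFI": (operator.gt, 0.80),
    "SRMR": (operator.lt, 0.08),
    "RMSEA": (operator.lt, 0.08),
}

# Fit indices as reported for the two single-mediator models.
MODEL_1 = {"chi2/df": 2.400, "IFI": 0.970, "CFI": 0.970, "TLI": 0.965,
           "GFI": 0.908, "AGFI": 0.879, "SRMR": 0.031, "RMSEA": 0.068}
MODEL_2 = {"chi2/df": 2.619, "IFI": 0.962, "CFI": 0.961, "TLI": 0.955,
           "GFI": 0.893, "AGFI": 0.861, "SRMR": 0.036, "RMSEA": 0.073}


def screen_fit(indices, cutoffs=CUTOFFS):
    """Return {index name: True if the value satisfies its cutoff}."""
    return {name: compare(indices[name], bound)
            for name, (compare, bound) in cutoffs.items()}


for label, indices in (("Model 1", MODEL_1), ("Model 2", MODEL_2)):
    flagged = [name for name, ok in screen_fit(indices).items() if not ok]
    print(label, "indices outside the assumed cutoffs:", flagged or "none")
```

Under these assumed cutoffs, the only index flagged is the GFI of Model 2 (0.893 against 0.90). More lenient GFI cutoffs are also used in the literature, so the flag depends on the chosen convention.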

Finally, it may be of interest to explore the differences in mediating effects among the three models. The researchers examined the percentage of the total indirect effect accounted for by each mediator. The mediating effect of ASs (ILO-AS-AGC) was the greatest, accounting for 44.2% of the total indirect effect. This was followed by PjBL (ILO-PjBL-AGC), which accounted for 30.2%, and the sequential mediation of PjBL and ASs (ILO-PjBL-AS-AGC), which accounted for 22.6%. These results highlight the critical mediating roles of PjBL and ASs in the relationship between ILOs and AGC, demonstrating their importance in enhancing graduate competence through structured educational strategies.
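A decomposition of this kind can be approximated from the standardized path coefficients reported with Table 5, using the product-of-coefficients rule: each indirect effect is the product of the coefficients along its route. This is a rough sketch, not the authors' computation. It uses rounded coefficients, so the resulting shares are approximate and need not match the reported percentages, which rest on the bootstrap estimates in Table 6. The names `PATHS` and `path_product` are hypothetical.

```python
# Standardized path coefficients as reported with Table 5 (rounded values).
PATHS = {
    ("ILO", "PjBL"): 0.468,
    ("PjBL", "AS"): 0.295,
    ("ILO", "AS"): 0.29,
    ("PjBL", "AGC"): 0.224,
    ("AS", "AGC"): 0.566,
    ("ILO", "AGC"): 0.026,
}


def path_product(*nodes):
    """Multiply the coefficients along a route such as ILO -> PjBL -> AGC."""
    product = 1.0
    for source, target in zip(nodes, nodes[1:]):
        product *= PATHS[(source, target)]
    return product


indirect = {
    "ILO-PjBL-AGC": path_product("ILO", "PjBL", "AGC"),
    "ILO-AS-AGC": path_product("ILO", "AS", "AGC"),
    "ILO-PjBL-AS-AGC": path_product("ILO", "PjBL", "AS", "AGC"),
}
total_indirect = sum(indirect.values())
total_effect = total_indirect + PATHS[("ILO", "AGC")]
shares = {route: effect / total_indirect for route, effect in indirect.items()}

for route, effect in indirect.items():
    print(f"{route}: effect = {effect:.3f}, share of indirect = {shares[route]:.1%}")
print(f"total indirect = {total_indirect:.3f}, total effect = {total_effect:.3f}")
```

The three shares sum to one by construction, which gives a quick consistency check on any reported breakdown.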

5. Discussion

This section presents comprehensive quantitative findings addressing each research question. The main contribution of this study lies in the development of a hypothesized model that presents the relationship among the different elements of OBE implementation and how these elements, individually and sequentially, influence AGC among CBEC learners. The following subsections discuss the findings related to the research questions.

5.1. Discussion of Research Question 1

The results revealed that ILOs had no statistically significant direct effect on AGC. This finding suggests that merely identifying specific ILOs does not guarantee the attainment of the desired graduate competence. It is consistent with previous studies on OBE implementation, such as Akhmadeeva et al. (2013), Rao (2020), and Wang (2011), which suggest that mechanically pursuing outcomes without deliberately revising pedagogy and assessment fails to attain graduate competence.

While a significant direct effect may be lacking, prioritizing ILOs in OBE implementation remains crucial for ensuring effective graduate competence development. The ILOs increase the potential for enhancing students’ employability upon program completion. In OBE, the product determines the process. Essential to the successful implementation of OBE is the clarity of program goals and objectives, the relevance of instruction to the desired competencies, and the deployment of diverse assessment procedures that can reliably ascertain the achievement of the desired outcomes. This aligns with the suggestions of Thian et al. (2018) that the essence of OBE lies in clearly specifying learning outcomes, aligning them across educational levels, and strategically planning teaching methods and assessments.

Additionally, ILOs include institutional, program, and course outcomes, each specifying the competencies students should acquire by the end of their educational experience. Ensuring alignment of these outcomes across different levels is crucial, as it guarantees that students’ learning trajectories are geared towards graduate competence. The findings align with the research of Syeed et al. (2023), which suggests that explicit tracking of learning outcomes allows students’ competences to be identified quantitatively. This, in turn, helps determine students’ career paths and supports stakeholders in selecting candidates that meet their needs.

5.2. Discussion of Research Question 2

The study’s results highlight the positive and direct effect of PjBL on AGC among CBEC learners. This finding aligns with previous research by Zhang and Ma (2023), emphasizing that competencies can be effectively developed through active learning methodologies like PjBL. Unlike traditional educational models, PjBL offers a holistic, student-centered learning process. It fosters high levels of motivation, interest, and active engagement in learning, leading to enhanced academic performance and competencies relevant to the labor market. PjBL helps students better understand the statements of learning outcomes and fosters a conducive learning environment, thereby promoting the development of higher-order competencies for their future careers and lives. Hence, this study confirms the direct effect of PjBL on the development of graduate competence among CBEC learners.

Beyond this direct effect, the current study extends previous research by investigating the mediating role of PjBL in the relationship between ILOs and AGC. The findings confirmed that PjBL mediates the pathway from ILOs to AGC, consistent with the previous studies by Aji et al. (2023) and Upadhye et al. (2022). Specifically, ILOs are associated with PjBL, allowing students to clearly identify and state the aims and objectives of projects. The relationship indicates that formulating clear and specific ILOs at the initial stage of the course or program stimulates students’ motivation, engagement, and self-regulation through planning, organization, and monitoring in PjBL. ILOs provide students with a clear understanding of the expectations and criteria for their project work, which helps them improve their learning engagement to attain graduate competence.

Therefore, ILOs have a direct and positive effect on PjBL, which, in turn, enhances the development of graduate competence. PjBL acts as a mediator, creating a more effective learning environment aligned with ILOs, thus contributing to the development of graduate competence. The findings revealed that integrating PjBL as a TLA into OBE implementation fosters students’ achievement of graduate competence.

5.3. Discussion of Research Question 3

The results revealed that the design of ASs had a significant positive and direct effect on AGC. This finding is supported by the previous studies of Mandinach and Gummer (2016) and Alonzo et al. (2023), which highlighted that well-designed ASs could motivate individual students’ achievement. When students start an assessment task, the clear description and assessment criteria help them understand what competencies they will attain, how to perform the activity, and how they will be assessed. This clarity makes students aware of the competencies that can be attained through each activity and of their level of competence attainment. Understanding their achieved competencies boosts students’ comfort and confidence, further enhancing their focus on attaining competencies (Alonzo et al., 2023).

The results also demonstrated that ASs are another significant mediating factor, echoing Guerrero-Roldán and Noguera’s (2018) findings that highlight the critical role of ASs in the relationship between ILOs and AGC. Learning outcomes guide the design of ASs in the classroom. Teachers utilize suitable ASs to determine the final learning outcomes and enhance students’ learning gains (Lau & Ho, 2016). This aligns with Bagban et al. (2017), who stated that outcome-based assessment processes should be tailored to the specific outcomes being assessed. Bagban et al. (2017) added that various assessment techniques cultivate different course outcomes. When the assessments align with the learning outcomes, teachers can effectively monitor the development of graduate attributes and allow formative assessment to guide this development (Spracklin-Reid & Fisher, 2013).

Additionally, the findings support the work of Yusof et al. (2017), which highlighted the importance of using multiple instruments and ASs that give each student opportunities to perform by allowing adequate time and support. These elements are key to evaluating the implementation level of OBE in higher education. Designing the assessment process requires selecting the most appropriate assessment criteria and tools to help students develop competencies and achieve the desired learning outcomes. In sum, the findings indicated that ASs play a crucial mediating role in the relationship between ILOs and AGC.

Furthermore, the findings confirmed that ILOs affected AGC through the chain-mediating effect of PjBL and ASs. This finding is in line with Zhang and Ma (2023), who found that ILOs define the expected outcomes students must achieve, while TLAs enhance student engagement and facilitate the achievement of these outcomes. Finally, assessment measures students’ performance and provides the input for continuous quality improvement of higher vocational education.

5.4. Discussion of Research Question 4

The final research question investigated the effect of OBE implementation (specifically ILOs, PjBL, and ASs) on AGC among CBEC learners. The analysis of the hypothesized model revealed that the standardized path coefficient for the direct pathway from ILOs to AGC was 0.026, which is below the recommended threshold of 0.2, indicating a minimal direct effect of ILOs on AGC. However, ILOs had an indirect effect on AGC through PjBL and ASs, suggesting that the two constructs completely mediated the relationship.

The investigation of the three alternative models showed that, among the different aspects of OBE implementation, the mediating effect of ASs was the largest, followed by PjBL, which accounted for 30.2% of the total indirect effect, and the sequential mediation of PjBL and ASs, which accounted for 22.6%. Pairwise contrasts of the indirect effects showed that, although PjBL and ASs are correlated, their individual mediating effects are larger than their effect as a chain in the model. This indicates that each single mediator contributes more to AGC than the sequential mediation does.

6. Theoretical Contributions and Implications

6.1. Theoretical Contributions

This study contributed to the existing literature on OBE implementation in two aspects. Firstly, this study aimed to optimize OBE implementation to promote AGC by applying the theory of CA (Biggs & Tang, 2011). Proper educational alignment occurs when TLAs help students build the knowledge targeted by the module, which is then accurately measured through assessment tasks (Jaiswal, 2019).

This study employed PjBL as the key TLA to engage students in active learning, with the results indicating that such engagement significantly boosts their likelihood of achieving the desired outcomes. Well-defined assessment tasks, coupled with assessment criteria and rubrics tailored to the specific learning outcomes, were instrumental in assessing precisely how well the ILOs are achieved and can serve as the input for continuous quality improvement. Therefore, the findings reveal that PjBL and ASs not only have a direct and positive impact on AGC but also play mediating roles in the relationship between ILOs and AGC, enriching our understanding of how OBE is operationalized.

Secondly, this study utilized Bloom’s Taxonomy to craft clear and constructively aligned learning outcomes, clarifying what learners are expected to achieve by the end of a study program, the anticipated standard of achievement, and how they should demonstrate their learning. The findings confirmed the pivotal role of Bloom’s Taxonomy in organizing ILOs and aligning them with program and course outcomes, thus promoting the attainment of advanced competencies pertinent to the job market. Therefore, this study enhances the existing body of literature on Bloom’s Taxonomy by illustrating how it serves as a foundational element in aligning ILOs with teaching and learning activities, as well as with ASs.

6.2. Practical Implications

This study offers several practical implications for higher vocational practitioners planning to implement OBE in higher vocational colleges. Firstly, proper training and tailored education for practitioners are essential so that they better understand OBE guidelines and can constructively align ILOs with PjBL, assessment, and performance measurement, which are deemed essential to enhancing students’ learning experience and improving the competencies of graduates (Kaliannan & Chandran, 2012).

Secondly, this study emphasizes that vocational educators should build a positive environment for active learning. This can be achieved by incorporating PjBL practices such as engaging students in a challenging task, promoting sustained inquiry, facilitating the discovery of answers to authentic questions, assisting in project selection, reflecting on the process, reviewing and revising the work, and developing a final product for a specific audience, all of which increase students’ opportunities to attain ILOs. This is supported by Nagarajan (2019), who proposes that the PjBL model can assist educators in creating a pleasant learning environment so that students can connect their ideas and skills, leading to improved learning outcomes and effective engagement in the learning process.

Thirdly, educators should design ASs that allow students to select appropriate assessment tasks aligned with previously defined ILOs. Using standardized rubrics and clearly defined learning outcomes can clarify program expectations and encourage students to take greater responsibility for their academic pursuits.

Additionally, this research revealed that within the OBE framework, ASs have the strongest mediating influence on the relationship between ILOs and AGC, exceeding that of PjBL. This underscores the importance of having a diverse array of ASs that are well suited to measuring learning outcomes effectively. The findings align with Alonzo’s 2020 work, which highlights the growing emphasis on ASs in contemporary educational programs.

6.3. Managerial Implications

For policymakers and accreditation bodies, this study presents a comprehensive OBE framework that complies with the guidelines and processes of OBE guided by the national and international accreditation bodies (e.g., the Washington Accord and NBA) to ensure national and international academic equivalency and accreditation. The standardized and achievable expectations and benchmarks of these bodies will ensure the effectiveness of the OBE process and minimize resistance from education institutions during the implementation process (Gunarathne et al., 2019).

Moreover, due to the dynamic nature of the global business landscape, e-commerce companies will have to update their competency standards continuously. This ensures that graduates possess the skills to thrive in the international business environment. Meanwhile, these updated competency requirements are crucial for ensuring the continuous quality improvement of academic programs. Gurukkal (2020) highlighted the potential of OBE in the broader global context, emphasizing that higher education institutions must adapt to the outcome-based education system because the ongoing economic transformation and worldwide market growth call for standardized indicators and international accreditation measures.

7. Conclusions and Limitations

This study addresses the urgent need to implement OBE in higher vocational education to bridge the gap between employment requirements and graduates’ readiness. By quantitatively assessing the impact of OBE implementation on the AGC among CBEC learners, the study utilizes the theory of CA to construct a framework. This framework, validated empirically with a sample from four public vocational colleges already implementing OBE and PjBL, examines the effects of different aspects of OBE implementation (ILOs, PjBL, and ASs) on AGC.

The study identifies significant direct and indirect effects of OBE implementation on AGC. While ILOs do not directly impact AGC, PjBL and ASs do, with ASs having a more substantial direct effect. ASs also exhibit a more robust mediating effect than PjBL, highlighting the importance of sophisticated and authentic assessments aligned with ILOs. Additionally, the study identifies a significant chain mediating effect of PjBL and ASs in the relationship between ILOs and AGC, providing insights into assessment design and alignment within the OBE framework.

Effective implementation of the OBE framework relies on alignment, ensuring that teaching and learning activities and ASs align with learning outcomes. The study demonstrates the utility of alignment for enhancing teaching methods and AGC attainment, suggesting its importance for Higher Education Institutions (HEIs) to optimize OBE implementation based on the theory of CA.

Several limitations of this study should be acknowledged, and directions for future studies are suggested. Firstly, the survey was limited to candidates from public vocational colleges in Nanjing, Jiangsu province, China, potentially limiting the generalizability of the findings. Future research should encompass a broader range of institutions across China to provide a more comprehensive evaluation of OBE’s impact. Secondly, the study focused on a relatively small number of students, excluding educators, which may limit the depth of analysis. Future studies should expand participant numbers and include various student groups from different disciplines and program types while considering educators' perspectives to provide a more holistic understanding of OBE's impact. Thirdly, the study primarily explored the OBE process concerning ILOs, TLAs, ASs, and AGC, overlooking other factors such as curriculum design and institutional support. Future research should incorporate these variables to offer more robust recommendations for effective OBE implementation and competency enhancement in higher vocational education. Lastly, the study's cross-sectional design hinders the establishment of causal relationships. Longitudinal research is recommended to track changes over time and provide insights into the long-term efficacy of OBE implementation. Continuous evaluation aligns with the principles of OBE's continuous quality improvement approach, ensuring the sustainability of higher vocational education.

Conflicts of Interest

The authors declared that there was no potential conflict of interest concerning the research, authorship, and/or publication of this article.

Appendix A

Research Questionnaire

Dear students:

To better understand your attitudes and perceptions regarding the effect of implementing outcome-based education in the Cross-border E-commerce program, the researchers developed this questionnaire and welcome your answers. We kindly ask you, as a respondent, to take about 10-15 minutes to complete this online questionnaire. The data are exclusively for academic research use and are strictly confidential. Please choose the number that best matches the degree to which you agree or disagree with each statement.

1= Strongly Disagree (StrDA)

2= Disagree (DA)

3= Slightly Disagree (SigDA)

4= Neither Agree nor Disagree (NAD)

5= Slightly Agree (SigA)

6= Agree (A)

7= Strongly Agree (StrA).

Section 1: Demographic Information

1. Gender

① Male ② Female

2. Age

① 19 ② 20 ③ 21 ④ 22 ⑤ 23 ⑥ 24 ⑦ 25 or above

3. Institute: ______________________________

4. Major: _______________________________

5. Duration of outcome-based education learning experience

① One year ② Two years ③ Three years

6. Duration of engagement in project-based learning

① One year ② Two years ③ Three years

Section 2:

| Item Code | Statement | Strongly Disagree | Disagree | Slightly Disagree | Neither Agree nor Disagree | Slightly Agree | Agree | Strongly Agree |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Intended learning outcomes (ILO) | | | | | | | | |
| ILO 1 | The intended learning outcomes are introduced to students upon admission into the program. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| ILO 2 | The intended learning outcomes are clear and understandable. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| ILO 3 | The intended learning outcomes are relevant to future professions. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| ILO 4 | The intended learning outcomes are attainable. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| ILO 5 | The intended learning outcomes are carefully developed based on what your college requires in terms of the competences (knowledge, skills, and attitude) the program must possess. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| ILO 6 | The intended learning outcomes are carefully developed based on what the industry expects from the graduates of the program prior to their entry into the labor force. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| ILO 7 | The intended learning outcomes are carefully developed based on what students and parents expect from the program. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| Project-based learning (PjBL) | | | | | | | | |
| PjBL 1 | Teaching and learning activities in project-based learning are in line with the intended learning outcomes. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| PjBL 2 | Project-based learning motivates me to understand the learning outcomes they are meant to achieve. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| PjBL 3 | Project-based learning increases the motivation for the subject. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| PjBL 4 | Project-based learning helps in developing the learning process to improve learning performance. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| PjBL 5 | Opportunities for practical application of professional skills in project-based learning are adequate. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| PjBL 6 | Teaching and learning activities in project-based learning are organized appropriately for students to achieve the graduate competences. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| Assessment strategies (AS) | | | | | | | | |
| AS 1 | The design of assessment tasks is closely linked to the intended learning outcomes. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| AS 2 | Assessment tasks can align with the teaching and learning activities in project-based learning. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| AS 3 | The design of assessment tasks offers me the opportunity to improve my performance (e.g., knowledge, skills, and attitude). | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| AS 4 | The teachers use different assessment tools to evaluate students’ progress. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| AS 5 | I can assess how well I have acquired the expected knowledge, skills, and attitudes after the teaching and learning process. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| AS 6 | Assessment criteria and rubrics for assessing learning outcomes are explained to students at the beginning of the teaching practice. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| AS 7 | Criteria and rubrics for assessing the attainment of graduate competences are appropriate. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| Attainment of graduate competences (AGC) | | | | | | | | |
| AGC 1 | The attainment of graduate competences can contribute to employability. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| AGC 2 | The attainment of graduate competences can prepare me better for the workplace. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| AGC 3 | The OBE approach is the best solution to address skill mismatches in the workplace. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
| AGC 4 | I think OBE will lead to greater efficiency and quality in attaining graduate competences. | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
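For readers who want to tabulate responses to the instrument above, the sketch below groups the 24 items by construct and averages the 1 to 7 ratings within each construct. It is an illustrative assumption only: the study analyzed the items as indicators of latent constructs in a structural model, not as simple averages, and the function name and the sample respondent are hypothetical.

```python
from statistics import mean

# Number of items per construct, following the item codes in the questionnaire.
ITEMS_PER_CONSTRUCT = {"ILO": 7, "PjBL": 6, "AS": 7, "AGC": 4}


def construct_scores(responses):
    """Average the 7-point ratings of one respondent within each construct.

    `responses` maps item codes such as "ILO 1" to integer ratings from 1 to 7.
    """
    scores = {}
    for construct, n_items in ITEMS_PER_CONSTRUCT.items():
        ratings = [responses[f"{construct} {i}"] for i in range(1, n_items + 1)]
        if any(not 1 <= rating <= 7 for rating in ratings):
            raise ValueError(f"{construct}: ratings must lie between 1 and 7")
        scores[construct] = mean(ratings)
    return scores


# Hypothetical respondent: rates every item 6 (Agree) except AGC 4, rated 4.
example = {
    f"{construct} {i}": 6
    for construct, n_items in ITEMS_PER_CONSTRUCT.items()
    for i in range(1, n_items + 1)
}
example["AGC 4"] = 4
print(construct_scores(example))
```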

References

- Aji, G. S., Darmadi, D., & Rohmawati, Y. I. I. (2023). Improving Learning Outcomes and Student Responses Through Project Based Learning Model On Light And Optical Instruments. JPPIPA (Jurnal Penelitian Pendidikan IPA), 8(1), 35-42. [CrossRef]

- Akhmadeeva, L., Hindy, M., & Sparrey, C. J. (2013). Overcoming obstacles to implementing an outcome-based education model: Traditional versus transformational OBE. In Proceedings of the 2013 Canadian Engineering Education Association (CEEA13) Conference (pp. 1-5). [CrossRef]

- Akramy, S. A. (2021). Implementation of Outcome-Based Education (OBE) in Afghan Universities: Lecturers' voices. International Journal of Quality in Education, 5(2), 27-47.

- Almulla, M. A. (2020). The effectiveness of the Project-Based Learning (PBL) approach as a way to engage students in learning. SAGE Open, 10(3). [CrossRef]

- Alonzo, D., Bejano, J., & Labad, V. (2023). Alignment between teachers’ assessment practices and principles of outcomes-based education in the context of Philippine education reform. International Journal of Instruction, 16(1), 489-506. [CrossRef]

- Anderson, J. C., & Gerbing, D. W. (1988). Structural equation modeling in practice: A review and recommended two-step approach. Psychological Bulletin, 103(3), 411–423. [CrossRef]

- Anderson, L. W., & Krathwohl, D. R. (Eds.). (2002). A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives. New York, NY, USA: Longman.

- Bagban, T. I., Patil, S. R., Gat, A., & Shirgave, S. K. (2017). On Selection of Assessment Methods in Outcome Based Education (OBE). Journal of Engineering Education Transformations, 30(3), 327–332. [CrossRef]

- Bagozzi, R. P., & Yi, Y. (2012). Specification, evaluation, and interpretation of structural equation models. Journal of the academy of marketing science, 40, 8-34. [CrossRef]

- Baguio, J. B. (2019). Outcomes-based education: teachers’ attitude and implementation. University of Bohol Multidisciplinary Research Journal, 7(1), 110-127. [CrossRef]

- Baran, M., & Maskan, A. (2011). The effect of project-based learning on pre-service physics teachers electrostatic achievements. Cypriot Journal of Educational Sciences, 5(4), 243–257.

- Biggs, J. (2014). Constructive alignment in university teaching. HERDSA Review of Higher Education, 1, 5-22. http://www.herdsa.org.au/herdsa-review-higher-education-vol-1/5-22.

- Biggs, J., & Tang, C. (2011). Teaching for quality learning at university (4th ed.). The Society for Research into Higher Education & Open University Press. http://www.openup.co.uk.

- Biggs, J.B. & Tang, C. (2007). Teaching for quality learning at university. (3rd Ed.). McGraw Hill Education & Open University Press.

- Black, P., & Wiliam, D. (2009). Developing the theory of formative assessment. Educational Assessment, Evaluation and Accountability, 21(1), 5-31. [CrossRef]

- Boateng, G. O., Neilands, T. B., Frongillo, E. A., Melgar-Quiñonez, H. R., & Young, S. L. (2018). Best practices for developing and validating scales for health, social, and behavioral research: A primer. Frontiers in Public Health, 6(149). [CrossRef]

- Bollen, K. A., & Stine, R. A. (1992). Bootstrapping goodness-of-fit measures in structural equation models. Sociological Methods & Research, 21, 205–229.

- Carless, D., Bridges, S. M., Chan, C. K. Y., & Glofcheski, R. (Eds.). (2017). Scaling up assessment for learning in higher education. Springer.

- Chen, S., & Lin, C. (2019). Understanding the effect of social media marketing activities: The mediation of social identification, perceived value, and satisfaction. Technological Forecasting and Social Change, 140, 22–32. [CrossRef]

- Chin, W. W. (1998). The partial least squares approach to structural equation modelling. Modern Methods in Business Research, 295(2), 295–336.

- Cifrian, E., Andrés, A., Galán, B., & Viguri, J. R. (2020). Integration of different assessment approaches: application to a project-based learning engineering course. Education for Chemical Engineers, 31, 62–75. [CrossRef]

- Clark, D. A., & Bowles, R. P. (2018). Model fit and item factor analysis: Overfactoring, underfactoring, and a program to guide interpretation. Multivariate behavioral research, 53(4), 544-558. [CrossRef]

- Cörvers, R., Wiek, A., de Kraker, J., Lang, D. J., & Martens, P. (2016). Problem-based and project-based learning for sustainable development. Sustainability Science: An Introduction, 349-358. [CrossRef]

- Custodio, P. C., Espita, G. N., & Siy, L. C. (2019). The implementation of outcome-based education at a Philippine University. Asia Pacific Journal of Multidisciplinary Research, 7(4), 37–49.

- Day, I. N. Z., van Blankenstein, F. M., Westenberg, M., & Admiraal, W. (2018). A review of the characteristics of intermediate assessment and their relationship with student grades. Assessment and Evaluation in Higher Education, 43(6), 908–929. [CrossRef]

- Driscoll, A., & Wood, S. (2023). Developing outcomes-based assessment for learner-centered education: A faculty introduction. Taylor & Francis.

- Erdem, Y. S. (2019). Teaching and learning at tertiary-level vocational education: A phenomenological inquiry into administrators', teachers', and students' perceptions and experiences (Unpublished doctoral dissertation). Middle East Technical University, Turkey.

- Field, A. (2009). Discovering statistics using SPSS (3rd Ed.). Sage Publications Ltd.

- Fornell, C., & Larcker, D. F. (1981). Structural equation models with unobservable variables and measurement error: Algebra and statistics. [CrossRef]

- Frey, B. B., Schmitt, V. L., & Allen, J. P. (2012). Defining authentic classroom assessment. Practical Assessment, Research and Evaluation, 17(1), 2. [CrossRef]

- Gallagher, S. E., & Savage, T. (2020). Challenge-based learning in higher education: an exploratory literature review. Teaching in Higher Education, 1-23. [CrossRef]

- Glasser, S. P., & Glasser, P. (2014). Essentials of clinical research. Springer. [CrossRef]

- Glasson, T. (2009). Improving student achievement: A practical guide to assessment for learning. Curriculum Press.

- Guerrero-Roldán, A. E., & Noguera, I. (2018). A model for aligning assessment with competences and learning activities in online courses. The Internet and Higher Education, 38, 36-46. [CrossRef]

- Gunarathne, N., Senaratne, S., & Senanayake, S. (2019). Outcome-based education in accounting. Journal of Economic and Administrative Sciences, 36(1), 16–37. [CrossRef]

- Gurukkal, R. (2018). Towards outcome-based education. Higher Education for the Future, 5(1), 1-3. [CrossRef]

- Gurukkal, R. (2020). Outcome-based education: an open framework. Higher Education for the Future, 7(1), 1-4. [CrossRef]

- Guzman, M.F.D. De, Edaño, D. C., & Umayan, Z. D. (2017). Understanding the Essence of the Outcomes-Based Education (OBE) and Knowledge of its Implementation in a Technological University in the Philippines. Asia Pacific Journal of Multidisciplinary Research, 5(4), 64–71.

- Hair, J. F., Black, W. C., Babin, B. J., & Anderson, R. E. (2010). Multivariate Data Analysis. Prentice Hall.

- Hair, J. F., Black, W. C., Babin, B. J., & Anderson, R. E. (2019). Multivariate Data Analysis (8th ed.). Cengage Learning.

- Harden, R. M. (2009). AMEE Guide No. 14: Outcome-based education: Part 1-An introduction to outcome-based education. Medical Teacher, 21, 7-14. [CrossRef]

- Heiskanen, E., Thidell, Å., & Rodhe, H. (2016). Educating sustainability change agents: The importance of practical skills and experience. Journal of Cleaner Production, 123, 218-226. [CrossRef]

- Hooper, D., Coughlan, J., & Mullen, M. (2008). Structural equation modelling: Guidelines for determining model fit. Electronic Journal of Business Research Methods, 6, 53–60.

- Hron, A., & Friedrich, H. F. (2003). A review of web-based collaborative learning: Factors beyond technology. Journal of Computer Assisted Learning, 19(1), 70-79. [CrossRef]

- Huang, S. W., & Chang, T. Y. (2020). Social image impacting attitudes of middle-aged and elderly people toward the usage of walking aids: An empirical investigation in Taiwan. Healthcare, 8(4), 543. [CrossRef]

- Inés, J., Daniel, D., & Belén, B. (2020). Learning outcomes-based assessment in distance higher education: A case study. Open Learning: The Journal of Open, Distance and e-Learning. [CrossRef]

- Jackson, D. (2016). Skill mastery and the formation of graduate identity in Bachelor graduates: evidence from Australia. Studies in Higher Education, 41(7), 1313-1332. [CrossRef]

- Jaiswal, P. (2019). Using Constructive Alignment to Foster Teaching Learning Processes. English Language Teaching, 12(6), 10. [CrossRef]

- Jo, H. (2023). Understanding AI tool engagement: A study of ChatGPT usage and word-of-mouth among university students and office workers. Telematics and Informatics, 85, 102067. [CrossRef]

- Jones, M. D., Hutcheson, S., & Camba, J. D. (2021). Past, present, and future barriers to digital transformation in manufacturing: A review. Journal of Manufacturing Systems, 15. [CrossRef]

- Kaliannan, M., & Chandran, S. D. (2012). Empowering students through outcome-based education (OBE). Research in Education, 87(1), 50–63. [CrossRef]

- Kim, S. U. (2015). Exploring the knowledge development process of English language learners at a high school: how do English language proficiency and the nature of research task influence student learning? J. Assoc. Inform. Sci. Technol. 66, 128-143. [CrossRef]

- King, J. A., & Evans, K. M. (1991). Can we achieve outcome-based education? Educational Leadership, 49(2), 73–75.

- Kline, R. B. (2004). Principles and practice of structural equation modeling (2nd ed.). The Guilford Press.

- Lake, N. J., Compton, A. G., Rahman, S., & Thorburn, D. R. (2016). Leigh syndrome: one disorder, more than 75 monogenic causes. Annals of Neurology, 79(2), 190-203. [CrossRef]

- Lau, K. L., & Ho, E. S. C. (2016). Reading performance and self-regulated learning of Hong Kong students: What we learnt from PISA 2009. The Asia-Pacific Education Researcher, 25, 159-171. [CrossRef]

- Lavy, I., & Shriki, A. (2008). Investigating changes in prospective teachers’ views of a “good teacher” while engaging in computerized project-based learning. Journal of Mathematics Teacher Education, 11(4), 259–284. [CrossRef]

- Libba, M., Tanya, J., et al. (2020). Improving student learning outcomes through a collaborative higher education partnership. International Journal of Teaching and Learning in Higher Education, 32(1). http://www.isetl.org/ijtlhe/

- Ling, Y., Chung, S. J., & Wang, L. (2021). Research on the reform of management system of higher vocational education in China based on personality standard. Current Psychology, 1-13. [CrossRef]

- Lozano, R., Barreiro-Gen, M., Lozano, F., & Sammalisto, K. (2019). Teaching sustainability in European higher education institutions: Assessing the connections between competences and pedagogical approaches. Sustainability, 11(6), 1602. [CrossRef]

- Lozano, R., Merrill, M., Sammalisto, K., Ceulemans, K., & Lozano, F. (2017). Connecting competences and pedagogical approaches for sustainable development in higher education: A literature review and framework proposal. Sustainability, 9(10), 1889. [CrossRef]

- Lynam, S., & Cachia, M. (2018). Students’ perceptions of the role of assessments at higher education. Assessment and Evaluation in Higher Education, 43(2), 223–234. [CrossRef]

- MacKinnon, D. P. (2008). Introduction to Statistical Mediation Analysis. Mahwah: Erlbaum.

- Mahedo, M. T. D., & Bujez, A. V. (2014). Project based teaching as a didactic strategy for the learning and development of basic competence in future teachers. Procedia - Social and Behavioral Sciences, 141, 232-236. [CrossRef]

- Mandinach, E. B., & Gummer, E. S. (2016). What does it mean for teachers to be data literate: Laying out the skills, knowledge, and dispositions. Teaching and Teacher Education, 60, 366-376. [CrossRef]

- Mateos Naranjo, E., Redondo Gómez, S., Serrano Martín, L., Delibes Mateos, M., & Zunzunegui González, M. (2020). Implantación de una metodología docente activa en la asignatura de Redacción y Ejecución de Proyectos del Grado en Biología. Revista de estudios y experiencias en educación, 19(39), 259-274. [CrossRef]

- Matthews, K. E., & Mercer-Mapstone, L. D. (2018). Toward curriculum convergence for graduate learning outcomes: academic intentions and student experiences. Studies in Higher Education, 43(4), 644-659. [CrossRef]

- Montoya, A. K., & Edwards, M. C. (2021). The poor fit of model fit for selecting number of factors in exploratory factor analysis for scale evaluation. Educational and Psychological Measurement, 81(3), 413-440. [CrossRef]

- Nikolov, R., Shoikova, E., & Kovatcheva, E. (2014). Competence based framework for curriculum development. Sofia: Za bukvite, O'pismeneh.

- Orfan, S. N. (2021). Political participation of Afghan Youths on Facebook: A case study of Northeastern Afghanistan. Cogent Social Sciences, 7(1), 1857916. [CrossRef]

- Ortega, R. A. A., & Cruz, R. A. O.-D. (2016). Educators’ Attitude towards Outcomes-Based Educational Approach in English Second Language Learning. American Journal of Educational Research, 4(8), 597–601. [CrossRef]

- Pham, H. T., & Nguyen, P. V. (2023). ASEAN quality assurance scheme and Vietnamese higher education: a shift to outcomes-based education? Quality in Higher Education, 1-28. [CrossRef]

- Podsakoff, P. M., & Organ, D. W. (1986). Self-reports in organizational research: Problems and prospects. Journal of Management, 12(4), 531–544. [CrossRef]

- Podsakoff, P. M., MacKenzie, S. B., & Podsakoff, N. P. (2012). Sources of method bias in social science research and recommendations on how to control it. Ann. Rev. Psychol. 63, 539–569. [CrossRef]

- Podsakoff, P. M., MacKenzie, S. B., Lee, J. Y., & Podsakoff, N. P. (2003). Common method biases in behavioral research: a critical review of the literature and recommended remedies. J. Appl. Psychol. 88, 879–903. [CrossRef]

- Pradana, H. D. (2023). Project-Based Learning: Lecturer Participation and Involvement in Learning in Higher Education. Journal for Lesson and Learning Studies, 6(2). [CrossRef]

- Rakowska, A., & de Juana-Espinosa, S. (2021). Ready for the future? Employability skills and competencies in the twenty-first century: The view of international experts. Human Systems Management, 40(5), 669-684. [CrossRef]

- Rao, N. J. (2020). Outcome-based education: An outline. Higher Education for the Future, 7(1), 5–21. [CrossRef]

- Sadler, D. R. (2016). Three in-Course Assessment Reforms to Improve Higher Education Learning Outcomes. Assessment & Evaluation in Higher Education, 41(7), 1081–1099. [CrossRef]

- Shao, Y., & Kang, S. (2022). The association between peer relationship and learning engagement among adolescents: The chain mediating roles of self-efficacy and academic resilience. Frontiers in Psychology, 13, 938756. [CrossRef]

- Shi, D., Lee, T., & Maydeu-Olivares, A. (2019). Understanding the model size effect on SEM fit indices. Educational and Psychological Measurement, 79(2), 310-334. [CrossRef]

- Soares, I., Dias, D., Monteiro, A., & Proença, J. (2017). Learning outcomes and employability: a case study on management academic programmes. In INTED2017 Proceedings. [CrossRef]

- Spady, W. G. (1994). Choosing outcomes of significance. Educational Leadership, 51(6), 18-22.

- Spracklin-Reid, D., & Fisher, A. (2013). Course-based learning outcomes as the foundation for assessment of graduate attributes-an update on the progress of Memorial University. Proceedings of the Canadian Engineering Education Association (CEEA). [CrossRef]

- Syeed, M. M., Shihavuddin, A. S. M., Uddin, M. F., Hasan, M., & Khan, R. H. (2022). Outcome based education (OBE): Defining the process and practice for engineering education. IEEE Access, 10, 119170-119192. [CrossRef]

- Tam, M. (2014). Outcomes-based approach to quality assessment and curriculum improvement in higher education. Quality Assurance in Education, 22(2), 158–168. [CrossRef]

- Tang, D. K. H. (2021). A Case Study of Outcome-based Education: Reflecting on Specific Practices between a Malaysian Engineering Program and a Chinese Science Program. Innovative Teaching and Learning, 3(1), 86-104. https://www.researchgate.net/publication/352864157.

- Tee, P. K., Wong, L. C., Dada, M., Song, B. L., & Ng, C. P. (2024). Demand for digital skills, skill gaps and graduate employability: Evidence from employers in Malaysia. F1000Research, 13, 389. [CrossRef]

- Thian, L. B., Ng, F. P., & Ewe, J. A. (2018). Constructive alignment of graduate capabilities: Insights from implementation at a private university in Malaysia. Malaysian Journal of Learning and Instruction, 15(2), 111–142. [CrossRef]

- Tong, M., & Gao, T. (2022). For sustainable career development: Framework and assessment of the employability of Business English graduates. Front. Psychol. 13, 847247. [CrossRef]

- Tong, X., & Bentler, P. M. (2013). Evaluation of a new mean scaled and moment adjusted test statistic for SEM. Structural Equation Modeling, 20, 148-156. [CrossRef]

- Upadhye, V., Madhe, S., & Joshi, A. (2022). Project based learning as an active learning strategy in engineering education. Journal of Engineering Education Transformations, 36(1). [CrossRef]

- Wang, L. (2011). Designing and implementing outcome-based learning in a linguistics course: A case study in Hong Kong. Procedia - Social and Behavioral Sciences, 12, 9–18. [CrossRef]

- Wiggins, G., & McTighe, J. (2005). Understanding by design. Association for Supervision and Curriculum Development.

- Wu, Y., Xu, L., & Philbin, S. P. (2023). Evaluating the Role of the Communication Skills of Engineering Students on Employability According to the Outcome-Based Education (OBE) Theory. Sustainability, 15(12), 9711. [CrossRef]

- Yusof, R., Othman, N., Norwani, N. M., Ahmad, N. L. B., & Jalil, N. B. A. (2017). Implementation of outcome-based education (OBE) in accounting programme in higher education. International Journal of Academic Research in Business and Social Sciences, 7(6), 1186-1200. [CrossRef]

- Zhang, L., & Ma, Y. (2023). A study of the impact of project-based learning on student learning effects: A meta-analysis study. Frontiers in Psychology, 14, 1202728. [CrossRef]

- Zhang, W., Xu, M., & Su, H. (2020). Dance with Structural Equations. Xiamen: Xiamen University Press.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).