1. Introduction

Periacetabular osteotomy (PAO) is an effective approach for surgical treatment of developmental dysplasia of the hip (DDH) [1,2]. By detaching the acetabulum from the pelvis and then re-orienting it, the femoral coverage can be improved, which reduces pressure between the femoral head and the acetabular cartilage, alleviating patients’ pain [1,2,3,4]. However, PAO is technically demanding due to the complex anatomical structure around the hip and the limited field of view (FoV) during surgery [5]. In conventional PAO surgeries, surgeons have to mentally match preoperative images with the patient anatomy, and then perform a freehand osteotomy, resulting in low osteotomy accuracy and limited surgical outcomes.

To solve this challenge, computer-assisted PAO has been developed, providing surgeons with both preoperative assistance and intraoperative guidance [4,6,7,8]. Specifically, computer-assisted surgical planning allows surgeons to analyze the femoral head coverage, to define osteotomy planes, and to determine the optimal reorientation angle based on preoperative computed tomography (CT) or magnetic resonance (MR) images [6]. Additionally, navigation systems are employed during surgeries. By visualizing surgical instruments and osteotomy planes with respect to preoperatively acquired images, surgeons are provided with visual guidance during osteotomy and acetabulum reorientation [4,6,7]. Furthermore, surgical robots have been applied in osteotomy surgeries, providing physical guidance during the procedure [9,10,11].

In recent years, augmented reality (AR) technology has been employed in computer-assisted orthopedic surgeries (CAOS) [12,13,14,15,16,17]. Compared with conventional surgical navigation, AR guidance can reduce surgeons’ sight diversion [18]. Specifically, when the surgeon wears an optical see-through head-mounted display (OST-HMD), virtual models of surgical planning, patient anatomy, and instruments are superimposed on the corresponding physical counterparts. Thus, surgeons no longer need to switch their focus between the patient anatomy and visualization interfaces. In the literature, AR guidance in CAOS can be roughly divided into two categories: ArUco marker-based methods [12,13] and external tracker-based methods [14,15,16,17]. For methods belonging to the former category, ArUco markers are rigidly attached to both patient anatomy and surgical instruments. Thus, they can be tracked by cameras on the OST-HMD, and then aligned with the corresponding virtual models [12,13]. In contrast, external tracker-based methods utilize an optical or electromagnetic (EM) tracker, which has a larger tracking range than cameras on the OST-HMD [14,15,16,17]. By performing a virtual-physical registration, a transformation between the tracker coordinate system (COS) and the virtual space COS is estimated [14,15,17]. Then, virtual models can be aligned with the corresponding physical counterparts tracked by the tracker. However, to the best of the authors’ knowledge, previous AR guidance systems for PAO have not been integrated with robot assistance. Thus, despite AR guidance, surgeons still have to perform a freehand osteotomy without any physical guidance, which limits both accuracy and safety.

To tackle this issue, in this paper, we propose a robot-assisted AR-guided surgical navigation system for PAO. The main contributions are summarized as follows:

- We propose a robot-assisted AR-guided surgical navigation system for PAO using Microsoft HoloLens 2, which is a state-of-the-art (SOTA) OST-HMD. After calibration and registration, the proposed system provides surgeons with not only AR guidance but also robot assistance during the osteotomy procedure.

- We propose an optical marker-based virtual-physical registration method. Specifically, we control a robot arm to align an optical marker attached to the robot flange with pre-defined virtual models, collecting point sets in the optical tracker COS and the virtual space COS, respectively. The transformation is then estimated based on paired-point matching.

- Comprehensive experiments were conducted to evaluate both the virtual-physical registration accuracy and the osteotomy accuracy of the proposed system. Experimentally, the proposed virtual-physical registration method accurately aligned virtual models with the corresponding physical counterparts, while the navigation system achieved accurate osteotomy on sheep pelvises.

2. Related Works

2.1. Surgical Navigation in PAO

In recent years, surgical navigation has been employed in PAO to provide surgeons with surgical guidance [4,6,7,8]. Liu et al. introduced a computer-assisted planning and navigation system for PAO, involving preoperative planning, reorientation simulation, intraoperative instrument calibration, and surgical navigation for both osteotomy and acetabular re-orientation [6]. Pflugi et al. developed a cost-effective navigation system for PAO, utilizing gyroscopes instead of an optical tracker to measure acetabular orientation [4]. Furthermore, Pflugi et al. proposed a hybrid navigation system, combining gyroscopic visual tracking with Kalman filtering to facilitate accurate acetabular reorientation [7]. However, conventional surgical navigation systems for PAO exhibit several limitations: (1) Surgeons need to frequently switch their view between visualization interfaces and the patient anatomy. This can be inconvenient, and may increase the potential for inadvertent errors; (2) Despite visual guidance, surgeons still need to perform a freehand osteotomy, which limits surgical accuracy.

2.2. Robot Assistance in Osteotomy

Past decades have witnessed the rapid development of robot-assisted osteotomy [9,10,11,19]. Sun et al. proposed an EM navigation-based robot-assisted system for mandibular angle osteotomy, where a robot arm is employed to position a specially designed template for osteotomy guidance [9]. Bhagvath et al. developed an image-guided robotic system for automated robotic spine osteotomy [19]. Tian et al. proposed a virtual fixture-based shared control method for curve-cutting in robot-assisted mandibular angle split osteotomy [10]. Shao et al. proposed a robot-assisted navigation system based on optical tracking for mandibular reconstruction surgery, where an osteotomy slot was installed on the robot flange to provide physical guidance [11]. However, to the best of the authors’ knowledge, no robot-assisted system has been reported in the literature for PAO. Thus, in this paper, we aim to introduce robot assistance to the PAO procedure.

2.3. AR Guidance in CAOS

AR technology has significantly contributed to various orthopedic surgeries [12,13,14,15,17]. Liebmann et al. proposed an AR guidance system for pedicle screw placement [12]. Hoch et al. developed an AR guidance system for PAO [13]. Both methods used ArUco markers to achieve real-time tracking based on cameras on the HoloLens. In contrast, Sun et al. developed an external tracker-based AR navigation system for maxillofacial surgeries, where the virtual-physical registration was performed by digitizing virtual points using a trackable pointer [14]. Tu et al. proposed a virtual-physical registration method based on a registration cube attached to an EM sensor, which was applied to EM tracker-based AR navigation for distal interlocking [15]. Furthermore, to enhance the depth perception, Tu et al. proposed a multi-view interactive virtual-physical registration method for placement of guide wires based on AR assistance [17]. However, few AR guidance systems have been integrated with surgical robots. Despite visual assistance, surgeons still have to perform surgeries without any physical guidance. Thus, a robot-assisted AR-guided surgical navigation system is highly desired for the PAO procedure.

3. Method

3.1. Overview of the Proposed Navigation System

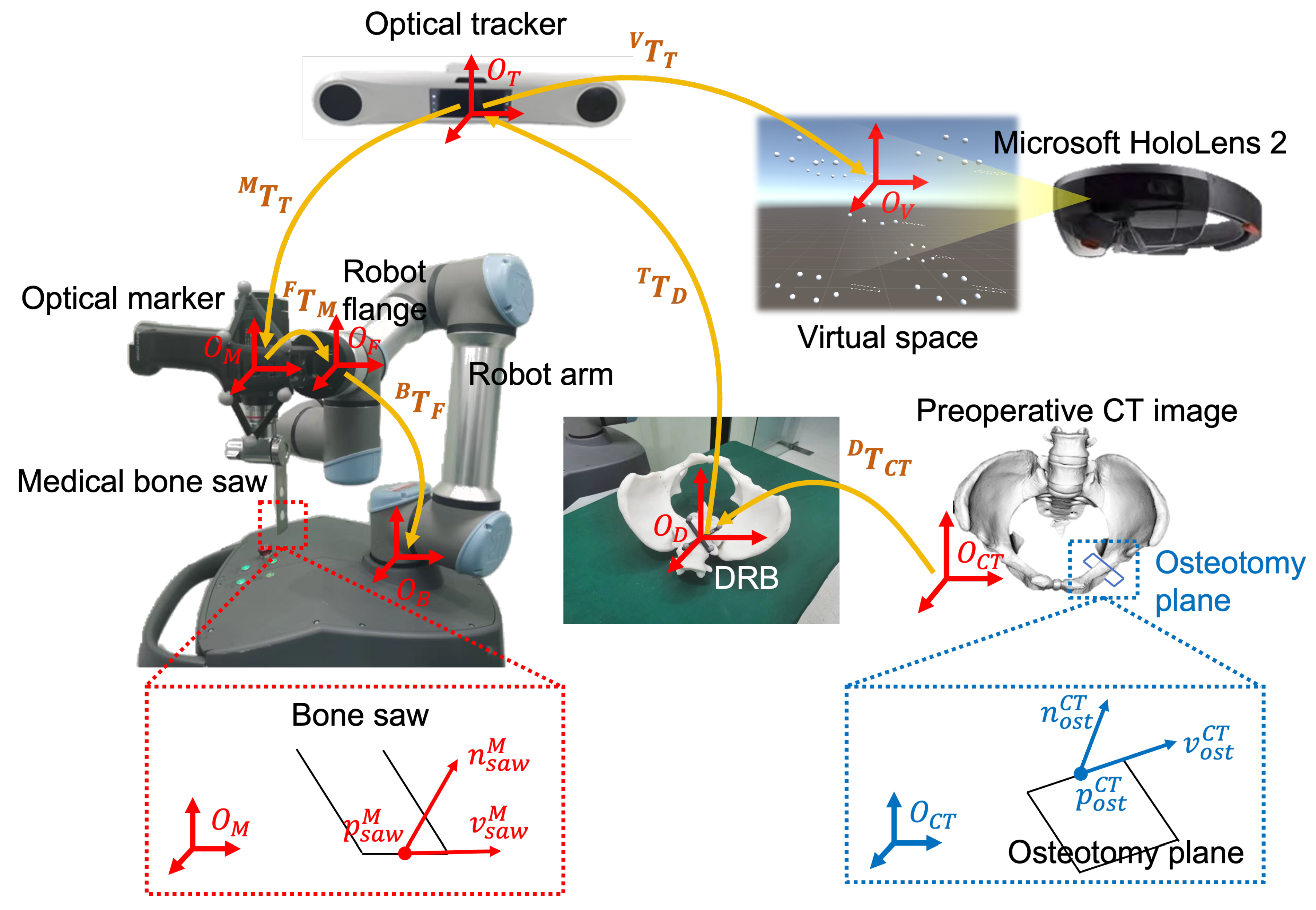

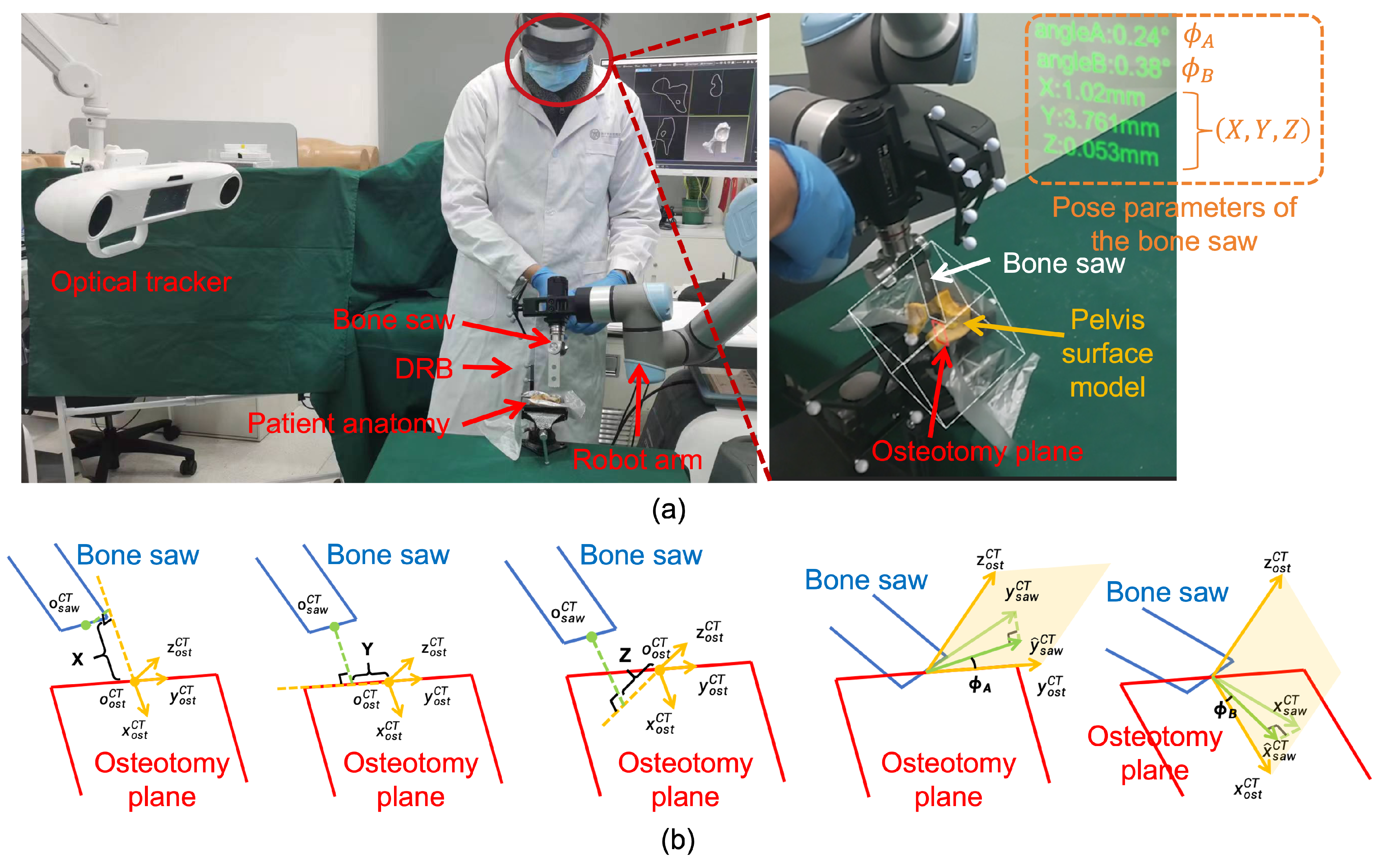

An overview of the proposed surgical navigation system setup is illustrated in Figure 1. As shown in the figure, the proposed system consists of an optical tracker (OP-M620, Guangzhou Aimooe Technology Co., Ltd., Guangzhou, China), a Microsoft HoloLens 2, a robot arm (UR 5e, Universal Robots Inc., Odense, Denmark), a medical bone saw (BJ5101, Bojin Medical Inc., Shanghai, China), an optical marker, a dynamic reference base (DRB), and a master computer. Specifically, the medical bone saw and the optical marker are rigidly attached to the flange of the robot arm. The DRB is rigidly attached to the patient anatomy. The optical tracker is fixed in the environment. The master computer communicates with the optical tracker to obtain poses of optical markers, with the remote controller of the robot arm for robot movement and feedback, and with the HoloLens for AR guidance.

Coordinate systems (COSs) involved in the proposed navigation system are summarized as follows. The three-dimensional (3D) COS of the preoperative CT image is represented by

. The 3D COS of the DRB is by

. The 3D COS of the optical tracker is by

. For the robot arm, the 3D COS of the optical marker is by

. The 3D COS of the robot flange is by

. The 3D COS of the robot base is by

. For the Microsoft HoloLens 2, once a HoloLens application is launched, a virtual space is defined and anchored to the environment. In this paper, we use

to represent the 3D COS of the virtual space. During the surgery, the pose of the Microsoft HoloLens 2 relative to the virtual space COS

can be tracked based on the HoloLens-SLAM algorithm [15]. Thus, a virtual model can maintain its pose in the environment when the HoloLens moves around.

Before a PAO procedure, preoperative planning is performed to generate a pelvis surface model

and to define osteotomy planes in the CT COS

(will be introduced in

Section 3.2). As shown in

Figure 1, an osteotomy plane

is defined by a starting point

, a normal vector

, and a horizontal vector

, written as:

During the PAO procedure, the proposed system aims for two tasks: one is to align the bone saw with the planned osteotomy plane, and the other is to provide AR guidance for surgeons. For the first task, we first transform

from the CT COS

to the robot base COS

using following transformation chain:

In this transformation chain,

is the transformation from the CT COS

to the DRB COS

, which is estimated using a surface-based patient-image registration algorithm [20].

is the transformation from the DRB COS

to the optical tracker COS

.

is the transformation from the optical tracker COS

to the optical marker COS

. Both

and

can be derived from the application programming interface (API) of the optical tracker at any time.

is the transformation from the optical marker COS

to the robot flange COS

, which can be estimated using our previously published hand-eye calibration method [21].

is the transformation from the robot flange COS

to the robot base COS

, which can be derived from the API of the robot arm at any time.
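The transformation chain described above can be sketched with 4×4 homogeneous matrices. This is only an illustrative sketch: the function and variable names are hypothetical, and the naming convention `T_a_b` (a matrix that maps coordinates from COS `b` to COS `a`) is an assumption introduced here, not the paper's notation.

```python
import numpy as np


def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = np.asarray(rotation, dtype=float)
    T[:3, 3] = np.asarray(translation, dtype=float)
    return T


def ct_to_robot_base(T_drb_ct, T_tracker_drb, T_marker_tracker,
                     T_flange_marker, T_base_flange):
    """Compose CT -> DRB -> tracker -> marker -> flange -> robot base."""
    return (T_base_flange @ T_flange_marker @ T_marker_tracker
            @ T_tracker_drb @ T_drb_ct)


def transform_plane(T, start_point, normal, horizontal):
    """Map a plane (point plus two direction vectors) through a rigid transform.

    The point is rotated and translated; direction vectors are only rotated.
    """
    R, t = T[:3, :3], T[:3, 3]
    p = R @ np.asarray(start_point, dtype=float) + t
    n = R @ np.asarray(normal, dtype=float)
    h = R @ np.asarray(horizontal, dtype=float)
    return p, n, h
```

In practice, the two tracker-dependent matrices would be refreshed from the tracker at each control cycle, while the patient-image registration and hand-eye calibration results stay fixed.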

Then, the robot arm is controlled to align the medical bone saw with the transformed osteotomy plane

. Specifically, as shown in

Figure 1, the medical bone saw

is defined by the blade center

and a normal vector

, and a horizontal vector

(will be introduced in

Section 3.3), written as:

Thus, the alignment between the medical bone saw and the osteotomy plane is formulated as:

where

is the target pose of the robot arm.

For the second task, the goal is to determine the poses of the osteotomy plane, the pelvis model, and the medical bone saw in the virtual space, such that virtual models rendered by the HoloLens 2 are superimposed on the corresponding physical counterparts. The poses of the osteotomy plane and the pelvis model in the virtual space COS

are calculated by:

where

is the transformation from the optical tracker COS

to the virtual space COS

, which is calculated using a virtual-physical registration (will be introduced in

Section 3.4). Meanwhile, we can calculate the pose of the bone saw in the virtual space COS by:

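During the AR guidance, we update the poses of the DRB and the optical marker from the API of the optical tracker at every moment. Thus, the poses of the osteotomy plane, the pelvis model, and the bone saw in the virtual space COS are dynamically updated, allowing the virtual models to follow the motion of both the patient anatomy and the medical bone saw.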

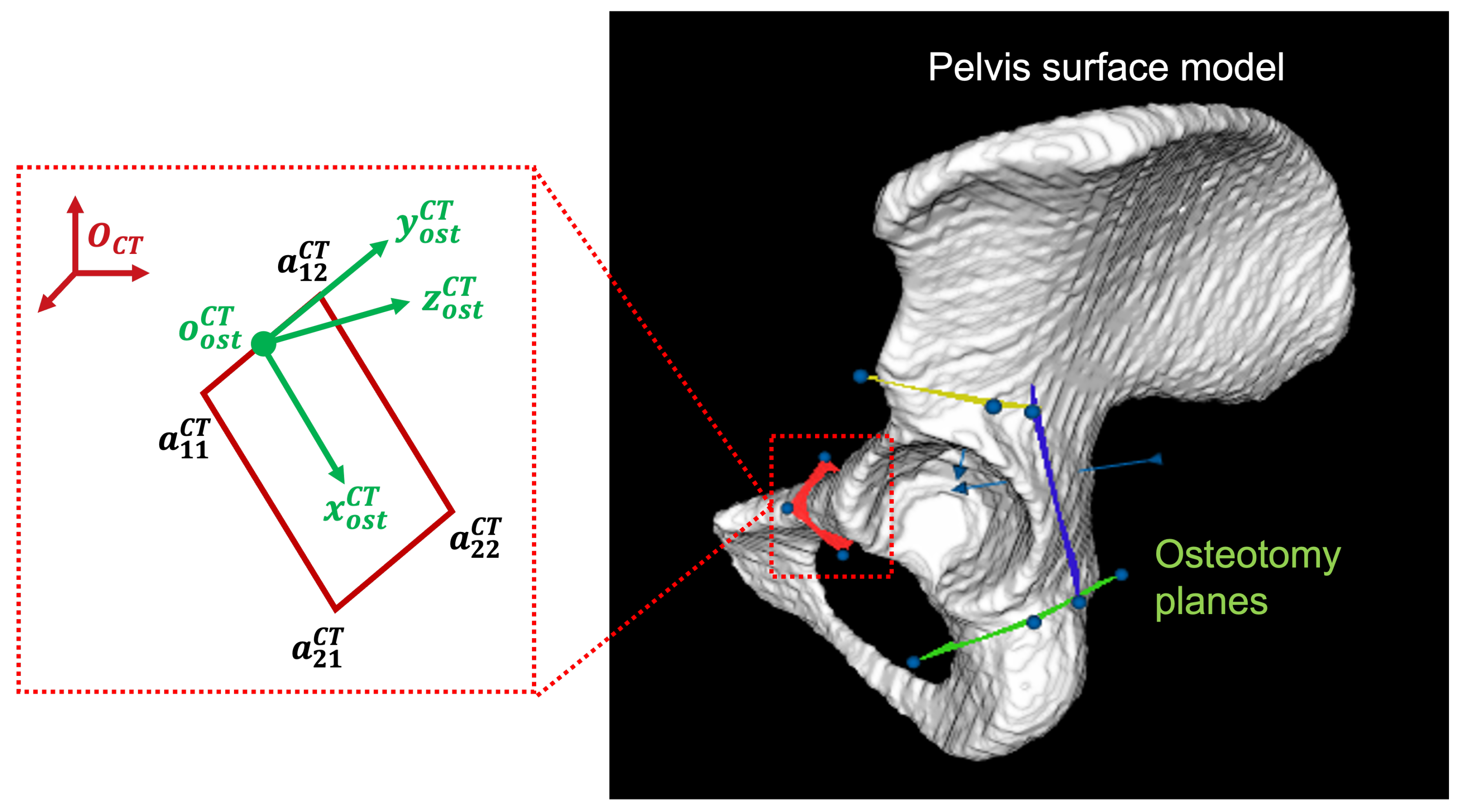

3.2. Preoperative Planning

The goal of preoperative planning is to generate the pelvis model

and osteotomy planes from the preoperative CT image. We first segment the pelvis in the CT image using threshold-based segmentation combined with a region-growing algorithm [17]. Then, the pelvis surface model is generated using the marching cubes algorithm [22]. Subsequently, osteotomy planes are manually defined on the pelvis model, as shown in Figure 2. For each plane, we denote its four corner points as

,

,

, and

, respectively. We then define a local COS, whose origin and three axes are calculated by:

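We can then define the osteotomy plane by taking its starting point, normal vector, and horizontal vector from the origin and axes of this local COS.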
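As an illustration of how a local COS can be built from four corner points, the sketch below uses one plausible construction. The specific choices (origin at the centroid, x-axis along the first edge, z-axis as the plane normal) and the corner ordering are assumptions made for this example and may differ from the definition used in the paper.

```python
import numpy as np


def local_frame_from_corners(c1, c2, c3, c4):
    """Build an orthonormal frame from four corners of a planar quadrilateral.

    Assumed corner ordering: c1 -> c2 runs along one edge and c1 -> c4 runs
    along the adjacent edge (corners listed around the boundary).
    """
    corners = np.array([c1, c2, c3, c4], dtype=float)
    origin = corners.mean(axis=0)              # centroid of the four corners

    x_axis = corners[1] - corners[0]           # in-plane direction along first edge
    x_axis /= np.linalg.norm(x_axis)

    edge = corners[3] - corners[0]             # adjacent in-plane edge
    z_axis = np.cross(x_axis, edge)            # normal to the plane
    z_axis /= np.linalg.norm(z_axis)

    y_axis = np.cross(z_axis, x_axis)          # completes a right-handed frame
    return origin, x_axis, y_axis, z_axis
```

Under these assumptions, a plane could be described by the returned origin as its reference point, `z_axis` as its normal vector, and `x_axis` as its horizontal vector.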

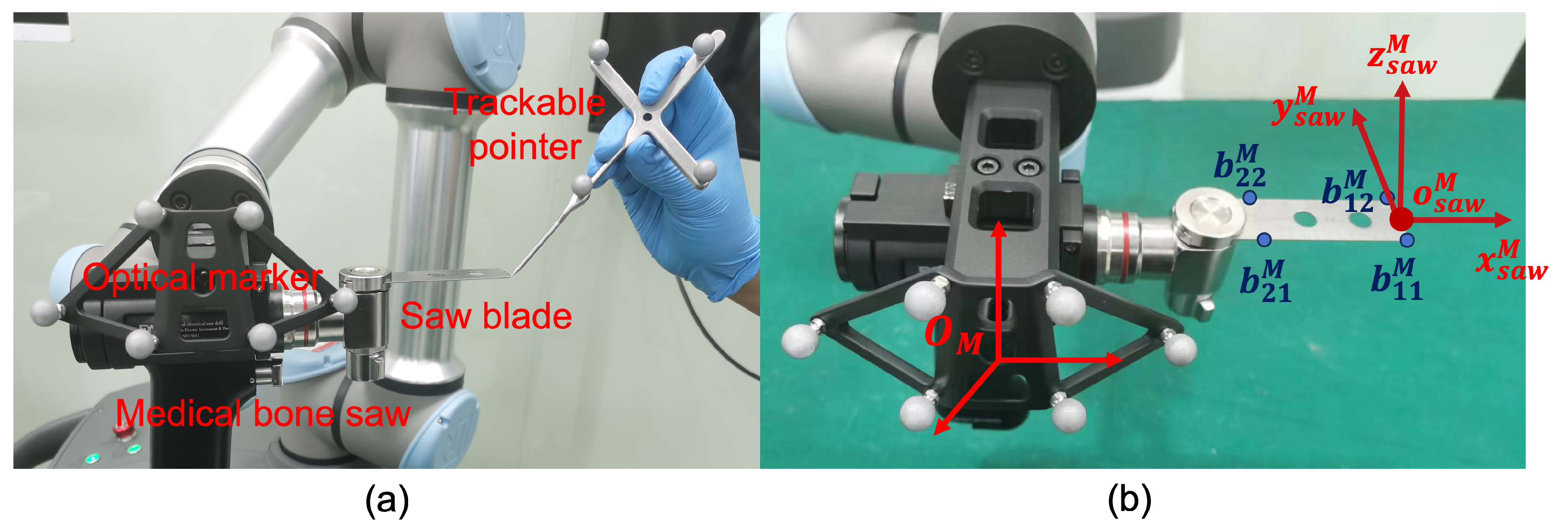

3.3. Bone Saw Calibration

In bone saw calibration, we aim to calibrate the blade center

, the normal vector

, and the horizontal vector

of the medical bone saw

. Specifically, as shown in

Figure 3-(a), we digitize the four corner points of the saw blade using a trackable pointer, whose coordinates in the optical marker COS

are denoted as

,

,

, and

, respectively. Then, as shown in

Figure 3-(b), a local COS can be built based on the four points, where we define its origin and three axes by:

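Subsequently, the bone saw can be defined by taking its blade center, normal vector, and horizontal vector from the origin and axes of this local COS.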

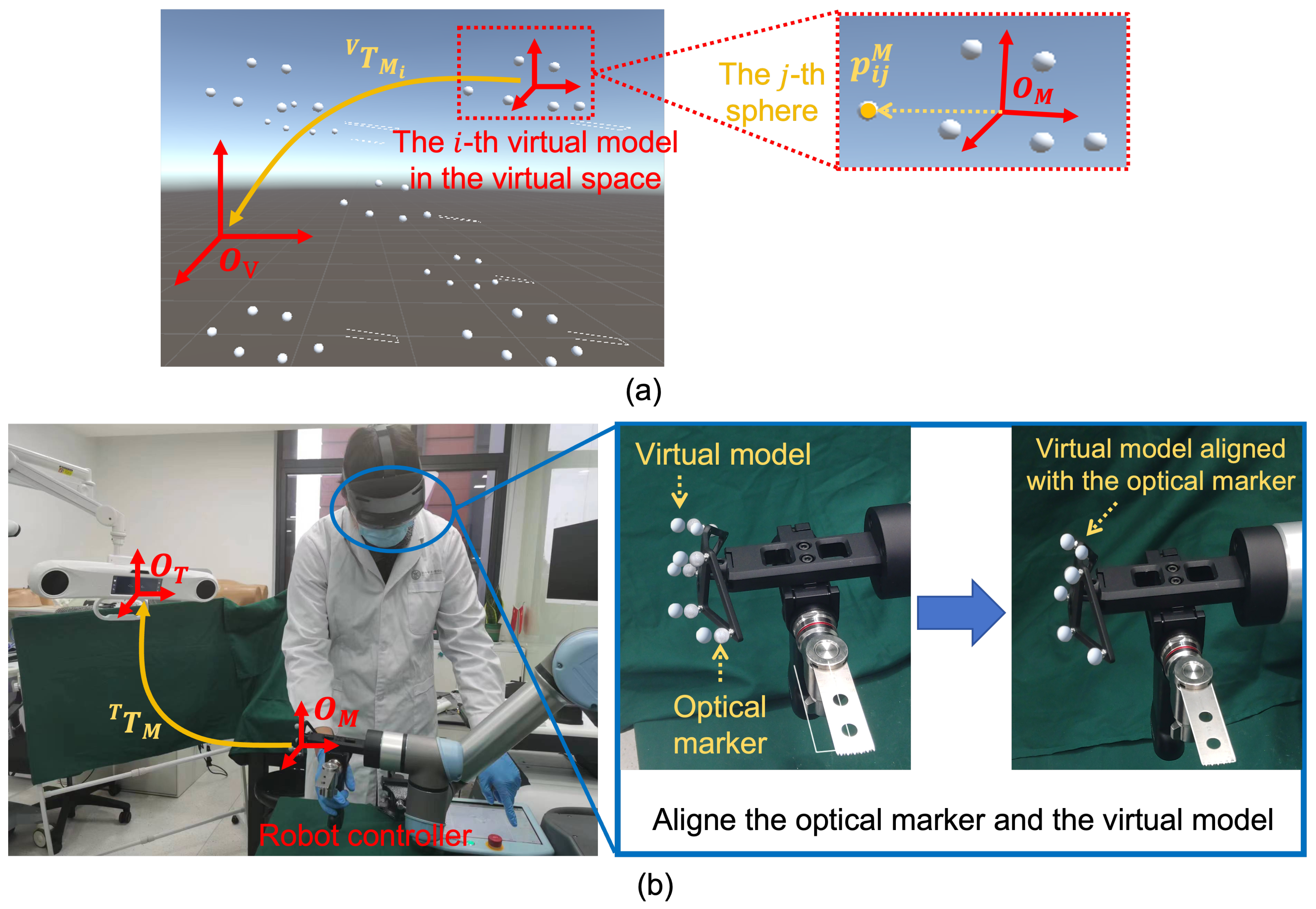

3.4. Virtual-Physical Registration

The goal of virtual-physical registration is to estimate the transformation from the optical tracker COS to the virtual space COS. To this end, we propose an optical marker-based method via paired-point matching, which consists of three steps:

Step 1: Multiple virtual models of the optical marker are loaded in the virtual space using different poses, as shown in Figure 4-(a). We denote the pose of the

i-th virtual model (

) in the virtual space COS

as

. Then, for the

j-th infrared reflective sphere of this virtual model (

, where

is the number of spheres of a marker), we can calculate its coordinate in the virtual space COS

by:

where

is the coordinate of the

j-th sphere in the optical marker COS

. Thus, we can collect a point set in the virtual space COS

, denoted as

(

,

).

Step 2: We align the optical marker attached to the robot flange with each virtual model, as shown in Figure 4-(b). Specifically, for the

i-th virtual model (

), we adjust the robot pose using the robot controller, aiming to minimize the misalignment between the optical marker and the virtual model. Compared with freehand alignment, the robot arm-based alignment has two advantages: (1) Due to the accurate movement and stability of the robot arm, no hand tremor is involved during the virtual-physical alignment; (2) Since the marker is held by the robot arm, surgeons can observe the misalignment from different directions. Thus, compared with freehand alignment, where surgeons hold the marker and can only observe the misalignment from one direction, multi-view observation enhances depth perception, which can reduce the misalignment along the depth direction. After the virtual model is aligned with the optical marker, we derive

from the API of the optical tracker. Then, we can calculate the

j-th sphere center of the

i-th virtual model (

,

) in the optical tracker COS

by:

After aligning all virtual models and calculating all the sphere centers, we collect another point set in the optical tracker COS.

Step 3: After collecting

and

,

is estimated by:

where

and

(

,

). Specifically, the transformation is solved using the paired-point matching algorithm [23].
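The three steps above can be sketched as follows. The example uses a common closed-form SVD-based solution for rigid paired-point matching; whether this matches the exact algorithm cited in [23] is an assumption. All function names are hypothetical, and marker poses are assumed to be given as 4×4 homogeneous matrices.

```python
import numpy as np


def sphere_points(marker_poses, spheres_in_marker):
    """Map sphere centers (M x 3, marker COS) through each 4x4 marker pose.

    Returns an (N*M) x 3 array, ordered pose by pose, so that point sets
    built from virtual poses and tracker poses are paired row by row.
    """
    spheres = np.asarray(spheres_in_marker, dtype=float)
    homog = np.hstack([spheres, np.ones((len(spheres), 1))])
    return np.vstack([(T @ homog.T).T[:, :3] for T in marker_poses])


def paired_point_registration(source, target):
    """Least-squares rigid transform T such that T * source ~= target."""
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    src_c, tgt_c = source.mean(axis=0), target.mean(axis=0)

    # Cross-covariance of the centered point sets.
    H = (source - src_c).T @ (target - tgt_c)
    U, _, Vt = np.linalg.svd(H)

    # Guard against a reflection so that the result is a proper rotation.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) > 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_c - R @ src_c

    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T
```

Here the source set would be the sphere centers in the optical tracker COS and the target set the corresponding centers in the virtual space COS, so the returned matrix maps tracker coordinates to virtual space coordinates.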

3.5. Robot-Assisted Augmented Reality-Guided Osteotomy

After surgical planning, calibration, and registration, the proposed system can provide surgeons with both robot assistance and AR guidance during the PAO procedure.

3.5.1. Robot Assistance

During the PAO procedure, the robot assistance consists of two parts: (1) Bone saw alignment: Given an initial pose of the robot arm, a target robot pose is calculated using equation (4). Then, the robot arm is controlled to move towards the target robot pose, aligning the medical bone saw with the planned osteotomy plane; (2) Osteotomy plane constraint: After the alignment, the robot arm allows surgeons to drag the bone saw freely on the osteotomy plane, but does not allow movement along the normal vector or rotation about any axis. This is done by setting the robot arm into a force mode [24]. Specifically, we first transform

,

, and

defined in

Section 3.2 from the CT COS

to the robot base COS

:

Then, using the API of the robot arm, we set the robot arm to be compliant along the two in-plane directions of the osteotomy plane, while non-compliant along its normal vector. We also set the rotation about any axis to be non-compliant. By doing so, the motion of the bone saw is restricted to the planned plane, providing physical guidance for osteotomy.
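The plane constraint can be illustrated by building a task frame whose x- and y-axes lie in the osteotomy plane and whose z-axis is the plane normal, together with a six-element selection vector. This is a sketch only: the helper name is hypothetical, no robot API is called, and the convention that 1 marks a compliant axis and 0 a non-compliant axis is an assumption that should be checked against the robot controller's documentation.

```python
import numpy as np


def plane_task_frame(start_point, normal, horizontal):
    """Return a 4x4 task frame: x, y in the osteotomy plane, z along its normal."""
    z = np.asarray(normal, dtype=float)
    z = z / np.linalg.norm(z)

    # Remove any component of the horizontal vector along the normal.
    h = np.asarray(horizontal, dtype=float)
    x = h - (h @ z) * z
    x = x / np.linalg.norm(x)

    y = np.cross(z, x)  # second in-plane direction (right-handed frame)

    T = np.eye(4)
    T[:3, 0], T[:3, 1], T[:3, 2] = x, y, z
    T[:3, 3] = np.asarray(start_point, dtype=float)
    return T


# Assumed ordering: (x, y, z, rx, ry, rz) in the task frame.
# Translation in the plane is compliant; motion along the normal and all
# rotations are non-compliant.
PLANE_SELECTION = [1, 1, 0, 0, 0, 0]
```

With such a frame expressed in the robot base COS, the surgeon could slide the saw within the plane while the controller resists motion along the normal and any rotation.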

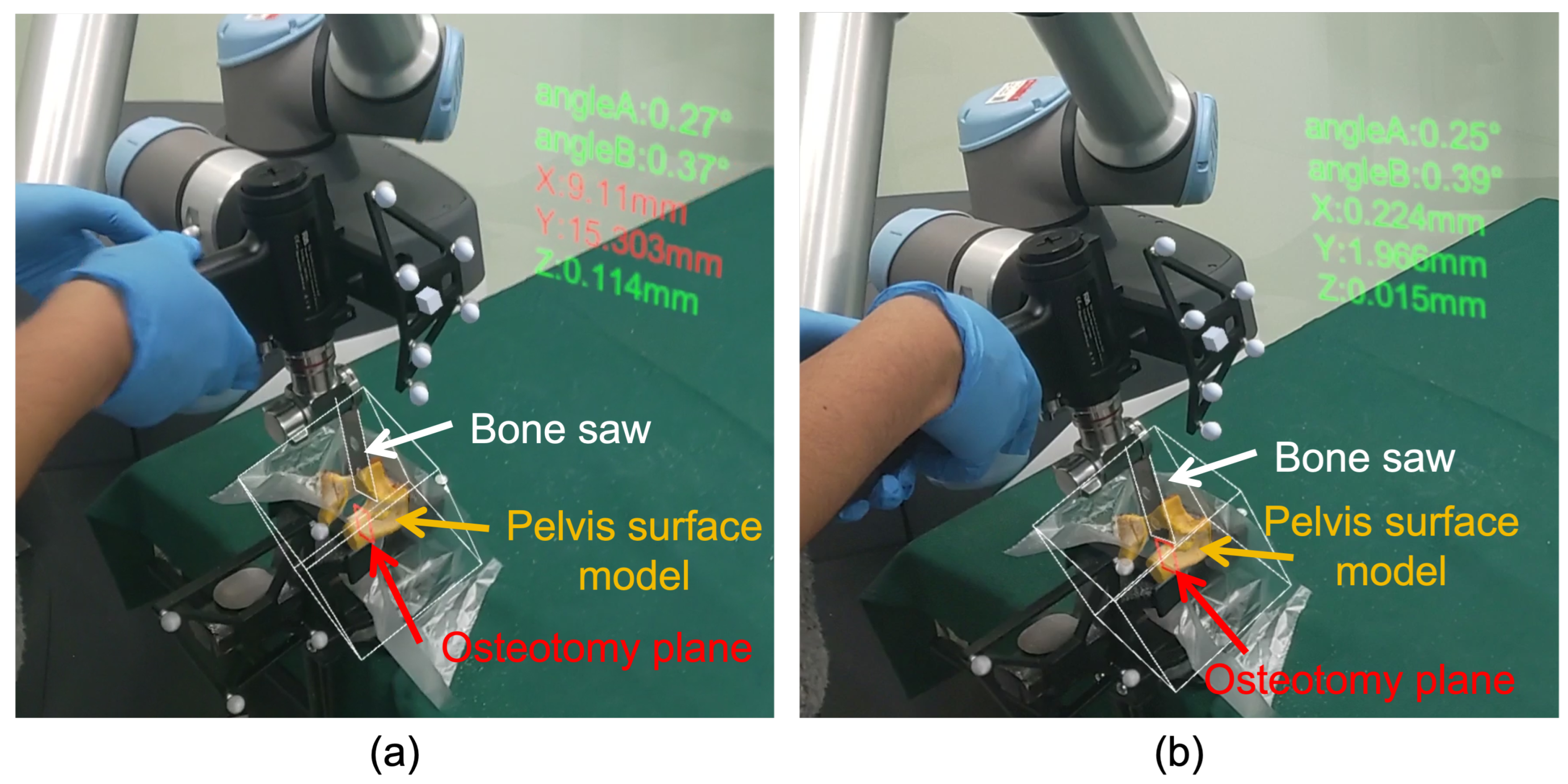

3.5.2. AR Guidance

In our system, two types of AR guidance are provided for surgeons: (1) Visualization of virtual models: We transform the osteotomy plane and the pelvis surface model using equation (5), and the bone saw using equation (6). Then, as shown in Figure 5-(a), virtual models can be overlaid on the corresponding physical counterparts for AR visualization, providing surgeons with visual guidance; (2) Display of pose parameters: It is critical for surgeons to know the translation and orientation of the bone saw relative to the osteotomy plane. To this end, we also display several pose parameters of the bone saw relative to the osteotomy plane, as shown in Figure 5-(a). Specifically, we first transform

,

, and

defined in

Section 3.3 to the CT COS

:

Then, the following parameters are defined as shown in

Figure 5-(b): (a)

: the deviation between

and

along

,

, and

directions; (b)

: the angle between

and

, where

is the projection of

on the

plane; (c)

: the angle between

and

, where

is the projection of

on the

plane. By displaying these parameters in the HoloLens, surgeons can enhance their awareness of the bone saw pose relative to the planned osteotomy plane, leading to an accurate osteotomy.
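A possible way to compute such pose parameters is sketched below. Because the exact definitions depend on the paper's notation, the choices here are assumptions: the positional deviation is the offset of the blade center from the plane's starting point, resolved along the plane's horizontal, second in-plane, and normal directions, and the two angles are measured between the plane normal and the projections of the saw normal onto the two planes containing the plane normal. Function and parameter names are hypothetical.

```python
import numpy as np


def _unit(v):
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)


def saw_pose_parameters(plane_point, plane_normal, plane_horizontal,
                        saw_center, saw_normal):
    """Return (deviation_xyz, tilt_xz_deg, tilt_yz_deg) of the saw w.r.t. the plane."""
    # Orthonormal plane frame: x = horizontal, z = normal, y = z cross x.
    z = _unit(plane_normal)
    h = np.asarray(plane_horizontal, dtype=float)
    x = _unit(h - (h @ z) * z)
    y = np.cross(z, x)

    # Positional deviation of the blade center, resolved in the plane frame.
    offset = np.asarray(saw_center, dtype=float) - np.asarray(plane_point, dtype=float)
    deviation = np.array([offset @ x, offset @ y, offset @ z])

    # Angles between the plane normal and the saw normal projected onto
    # the x-z plane and the y-z plane of the plane frame, respectively.
    n = _unit(saw_normal)
    tilt_xz = float(np.degrees(np.arctan2(abs(n @ x), n @ z)))
    tilt_yz = float(np.degrees(np.arctan2(abs(n @ y), n @ z)))
    return deviation, tilt_xz, tilt_yz
```

Values like these could then be compared against display thresholds to color the on-screen numbers.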

4. Experiments and Results

4.1. Tasks and Evaluation Metrics

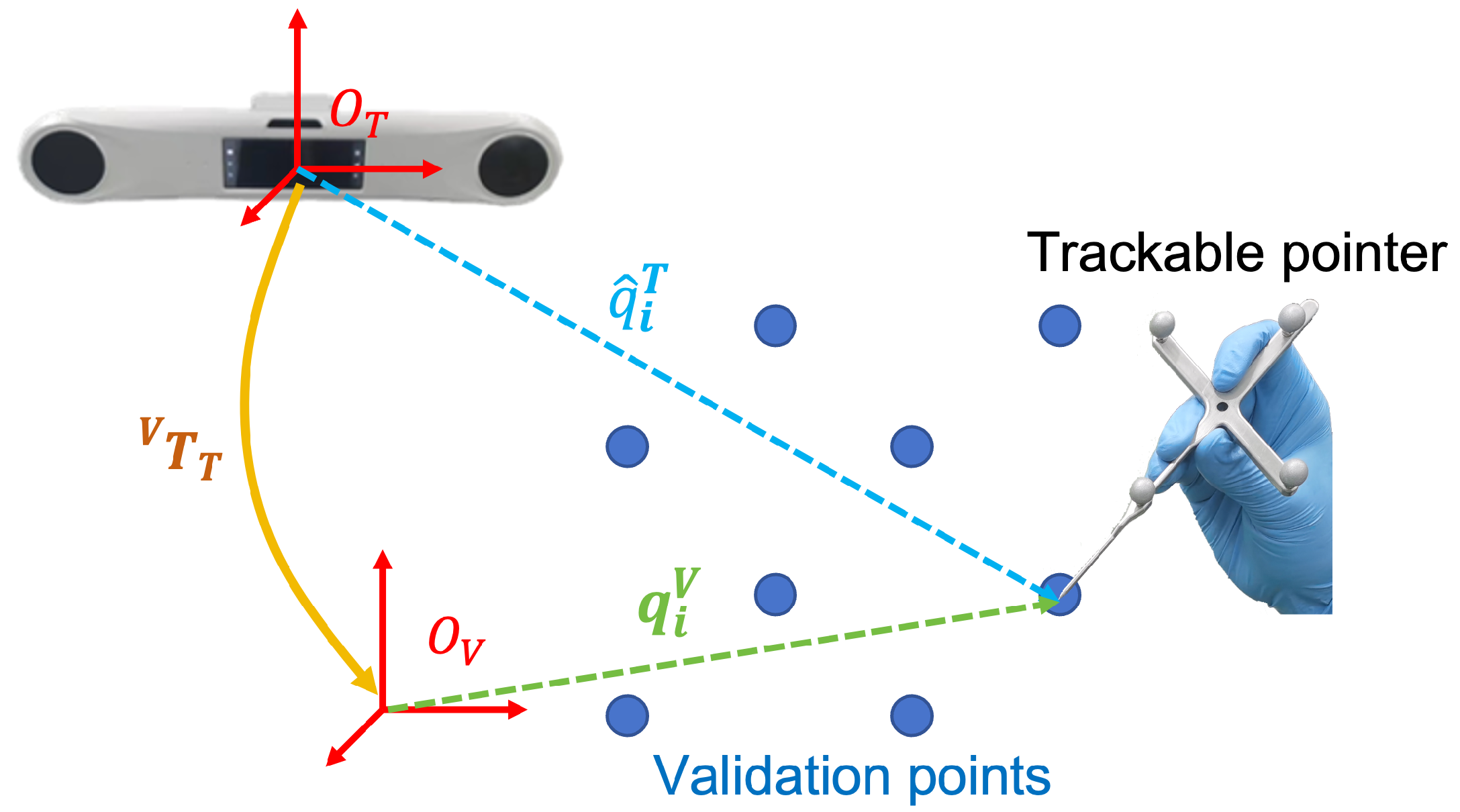

4.1.1. Evaluation of Virtual-Physical Registration Accuracy

To evaluate the accuracy of the proposed virtual-physical registration, we compared our method with SOTA methods [14,15,17]. Figure 6 illustrates the experimental setup. Specifically, we first defined eight validation points

(

) in the virtual space. Then, for each method, we estimated a

, and then used a trackable pointer to digitize the validation points rendered in the HoloLens, obtaining their coordinates in the optical tracker COS

, denoted as

(

). We used mean absolute distance error (mADE) as a metric to evaluate registration accuracy, which was calculated by:

For each method, the experiment was repeated ten times. Thus, we calculated the average value, maximum, and minimum of mADE achieved by each method for comparison.
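The mADE metric can be sketched as the mean Euclidean distance between the validation points in the virtual space and the digitized points mapped from the optical tracker COS by the estimated registration. This formulation is inferred from the description above rather than copied from the paper's equation, and the function name is hypothetical.

```python
import numpy as np


def mean_absolute_distance_error(T_virtual_tracker, points_tracker, points_virtual):
    """Mean distance between mapped tracker points and their virtual counterparts.

    T_virtual_tracker: 4x4 transform from the tracker COS to the virtual space COS.
    points_tracker:    K x 3 digitized points in the tracker COS.
    points_virtual:    K x 3 validation points in the virtual space COS.
    """
    T = np.asarray(T_virtual_tracker, dtype=float)
    pts = np.asarray(points_tracker, dtype=float)
    mapped = pts @ T[:3, :3].T + T[:3, 3]
    errors = np.linalg.norm(mapped - np.asarray(points_virtual, dtype=float), axis=1)
    return float(errors.mean())
```

Repeating the experiment then yields one mADE value per trial, from which the average, maximum, and minimum can be reported.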

Additionally, we conducted a qualitative evaluation using a pelvis phantom attached to a DRB. Specifically, the phantom was manufactured by 3D printing based on a pelvis surface model extracted from a CT image of a patient. Patient-image registration was performed before the experiment [20], transforming the surface model to the DRB COS. Then, for each method, we estimated the virtual-physical registration transformation, and rendered the virtual model of the pelvis in the HoloLens. For qualitative evaluation of the registration accuracy, we compared the virtual-physical misalignment achieved by each method.

4.1.2. Ablation Study

We further conducted an ablation study to investigate the effectiveness of two strategies adopted in the proposed virtual-physical registration: (1) controlling a robot arm to align the optical marker; and (2) observing misalignment from different directions. In the ablation study, we followed the experimental setup introduced in Section 4.1.1, while the proposed method was compared with the following two approaches: (1) Freehand registration (denoted as FR): The optical marker was detached from the robot flange, and then held by hand to align with virtual models; (2) Single-view robotic registration (denoted as SRR): In this approach, the optical marker was attached to the robot flange, and aligned with virtual models by controlling the robot arm, while the misalignment could only be observed from one direction. In this experiment, we also adopted mADE as a metric to evaluate the registration accuracy.

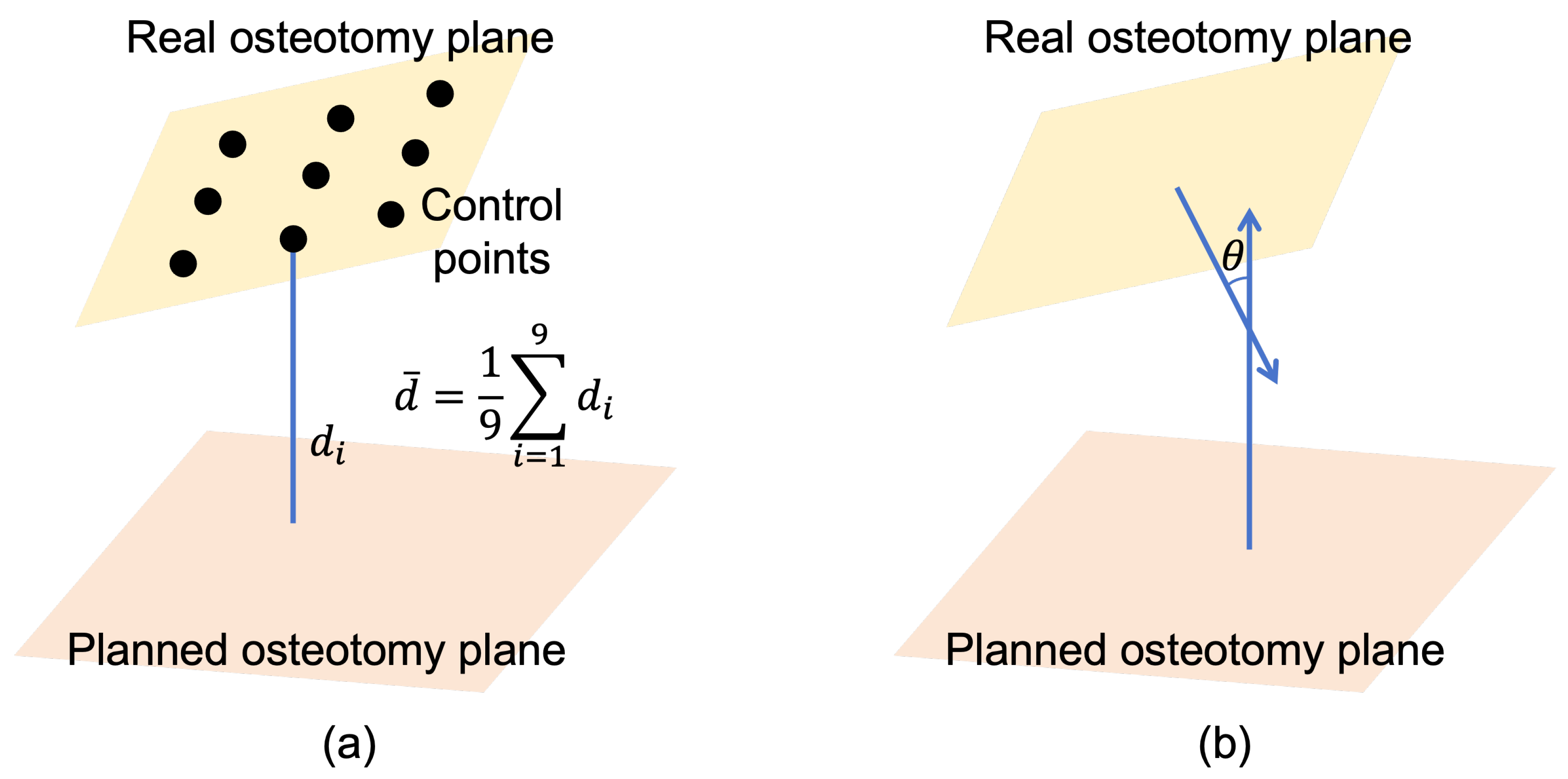

4.1.3. Evaluation of Osteotomy Accuracy

We finally conducted an experiment on five sheep pelvises to evaluate the osteotomy accuracy of the proposed system. For each pelvis, the experiment was carried out based on the following steps: (1) A preoperative CT image was acquired, where pelvis segmentation and surgical planning were performed; (2) We calibrated the robot system, and performed patient-image registration as well as virtual-physical registration; (3) Osteotomy was then performed under AR guidance and robot assistance; (4) After osteotomy, a postoperative CT image was acquired, where we could annotate the real osteotomy plane. By registering the preoperative CT image and the postoperative CT image, surgical planning was transformed to the postoperative CT image. Thus, we could measure deviations between the real osteotomy plane and the planned osteotomy plane.

To evaluate osteotomy accuracy, both distance deviation

and angular deviation

were employed as metrics.

Figure 7 illustrates the definitions of

and

. For each osteotomy plane,

was an average distance from nine control points to the planned plane, where the control points were uniformly sampled on the real osteotomy plane.

was the angle between the normal vector of the real osteotomy plane and that of the planned plane.
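The two metrics can be sketched as below, assuming the control points and both planes are expressed in the same (postoperative CT) COS. Treating the two normals as unoriented, so that the angle always falls between 0° and 90°, is an assumption made here; the function name is hypothetical.

```python
import numpy as np


def osteotomy_deviations(control_points, real_normal, planned_point, planned_normal):
    """Return (mean point-to-plane distance, angle between normals in degrees).

    control_points: K x 3 points sampled on the real osteotomy plane.
    planned_point:  any point on the planned plane.
    """
    pts = np.asarray(control_points, dtype=float)
    n_plan = np.asarray(planned_normal, dtype=float)
    n_plan = n_plan / np.linalg.norm(n_plan)
    n_real = np.asarray(real_normal, dtype=float)
    n_real = n_real / np.linalg.norm(n_real)

    # Unsigned distance from each control point to the planned plane.
    distances = np.abs((pts - np.asarray(planned_point, dtype=float)) @ n_plan)

    # Angle between the normals, ignoring their orientation (sign).
    cos_angle = np.clip(abs(n_real @ n_plan), 0.0, 1.0)
    angle_deg = float(np.degrees(np.arccos(cos_angle)))
    return float(distances.mean()), angle_deg
```

For nine control points sampled on a 3×3 grid, the first returned value corresponds to the distance deviation and the second to the angular deviation described above.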

4.2. Experimental Results

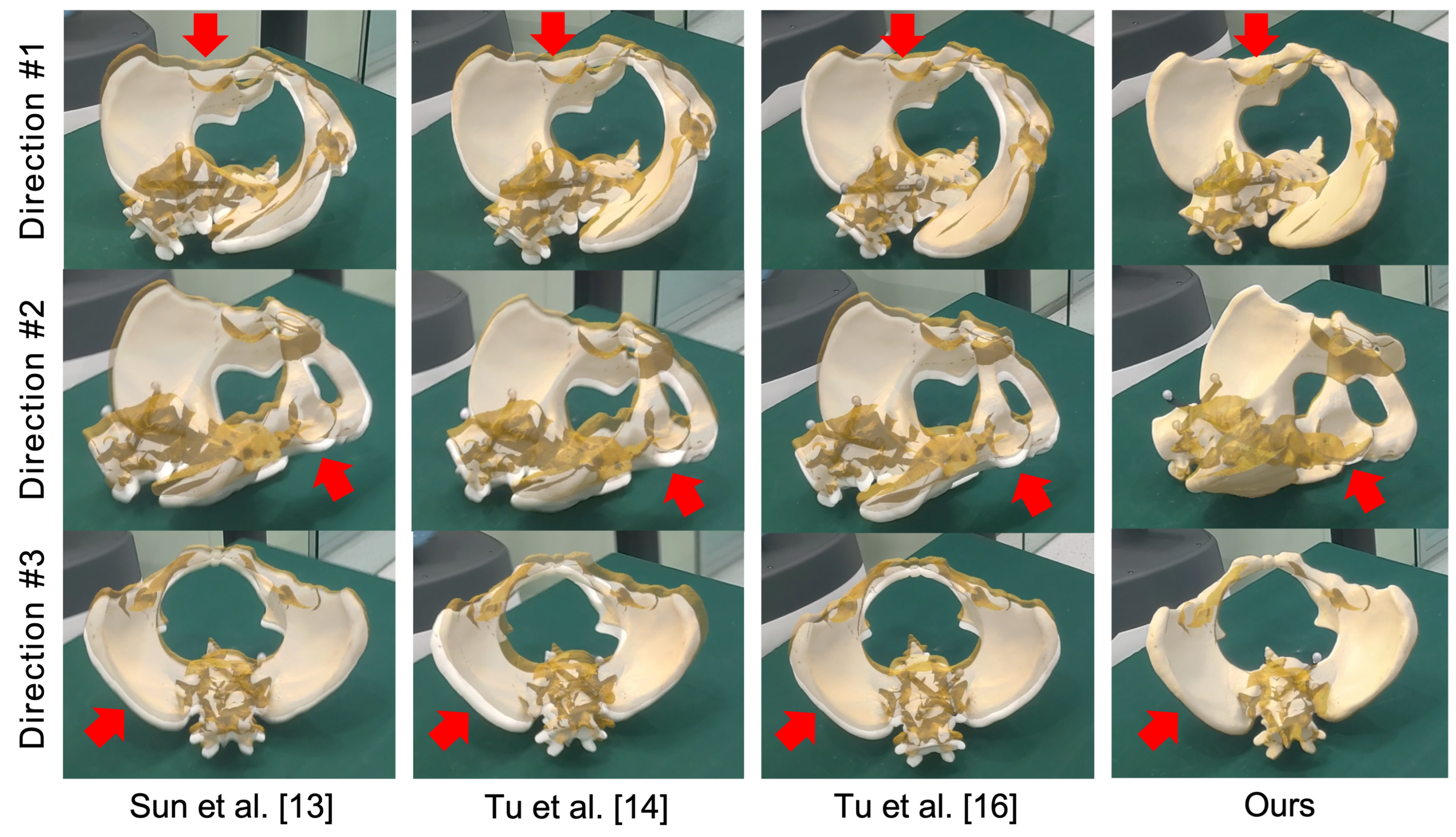

4.2.1. Accuracy of Virtual-Physical Registration

Experimental results of virtual-physical registration are presented in Table 1 and Figure 8. Table 1 reports the average value, maximum, and minimum of mADE achieved by different virtual-physical registration methods [14,15,17]. The proposed method achieved an average mADE of 1.96 ± 0.43 mm and a maximum mADE of 0.27 mm. Compared with the second-best method, our method achieved a 4.45 mm improvement in terms of average mADE. Additionally, we illustrate qualitative results in Figure 8, where virtual models (yellow) were overlaid on the pelvis phantom (white) using the registration transformations estimated by the different methods. In this figure, red arrows highlight the misalignment. Compared with other methods, our method achieved the best alignment accuracy. Experimental results demonstrated the virtual-physical registration accuracy of the proposed method.

4.2.2. Ablation Study

We summarize quantitative results of the ablation study in Table 2. Compared with freehand registration (referred to as FR), single-view robotic registration (referred to as SRR) achieved a slight improvement, demonstrating the effectiveness of the robot arm when aligning virtual models with the optical marker. Additionally, when using multi-view observation, the average mADE was improved by a margin of 4.58 mm compared with single-view robotic registration. Such improvement demonstrated the importance of observing misalignment from different directions during the virtual-physical registration.

4.2.3. Osteotomy Accuracy

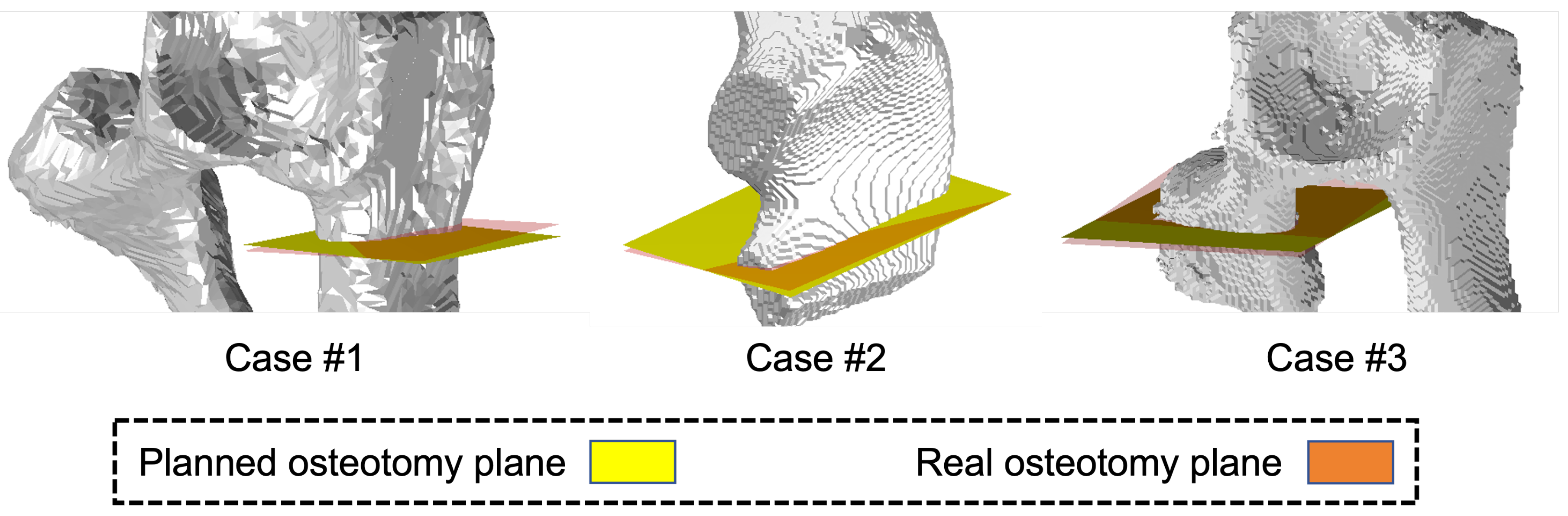

Experimental results of osteotomy accuracy are presented in Table 3, Figure 9, and Figure 10. As shown in Table 3, the proposed system achieved an average distance deviation of 0.82 ± 0.17 mm and an average angular deviation of 3.77 ± 0.85°. Qualitative visualization results are shown in Figure 9. The sub-millimeter distance deviation demonstrated the osteotomy accuracy achieved by the proposed system.

Additionally, in Figure 10, we show the AR guidance during the osteotomy procedure. As shown in the figure, virtual models of the sheep pelvis, the bone saw, and the planned osteotomy plane are accurately superimposed on their corresponding physical counterparts. At the same time, pose parameters of the bone saw are visualized in the HoloLens, serving as extra visual feedback. The numbers turn green whenever deviations are below the corresponding thresholds (5 mm for distance deviation, and 3° for angular deviation). Otherwise, they turn red, serving as a warning message. Thus, the proposed system can provide surgeons with AR guidance during the PAO procedure.

5. Discussion and Conclusion

In this paper, we proposed a robot-assisted AR-guided surgical navigation system for PAO, which mainly consists of a robot arm holding a medical bone saw, an optical marker, and a Microsoft HoloLens 2. In order to provide AR guidance, an optical marker-based virtual-physical registration method was proposed to estimate a transformation from the optical tracker COS to the virtual space COS, allowing virtual models to be aligned with the corresponding physical counterparts. Additionally, for osteotomy guidance, the proposed system automatically aligned the bone saw with planned osteotomy planes, and then provided surgeons with both robot assistance and AR guidance. Comprehensive experiments were conducted to evaluate both virtual-physical registration accuracy and system accuracy. In the experiments, the proposed virtual-physical registration method achieved an average mADE of 1.96 ± 0.43 mm, while the proposed system achieved an average distance deviation of 0.82 ± 0.17 mm and an average angular deviation of 3.77 ± 0.85°. Experimental results demonstrated the effectiveness of the proposed registration method. The sub-millimeter distance deviation demonstrated the osteotomy accuracy of the navigation system.

It is worth comparing the proposed virtual-physical registration method with other SOTA methods. Our method has three advantages: (1) We control a robot arm to align virtual models with the optical marker. Compared with freehand alignment [15,17], no hand tremor is involved; (2) During alignment, the optical marker is held by the robot arm. Thus, the proposed method allows for multi-view observation during the virtual-physical alignment, improving the depth perception. Compared with single-view methods [14,15], our method can achieve higher virtual-physical registration accuracy; (3) Different from the methods introduced in [15] and [17], our method does not require a registration tool that needs to be calibrated before the virtual-physical registration. Thus, calibration error does not propagate into the registration in our method. These advantages are confirmed by the experimental results shown in Table 1 and Figure 8, where the proposed method achieved the best virtual-physical registration accuracy with an average mADE of 1.96 ± 0.43 mm.

Additionally, the proposed robot-assisted AR-guided surgical navigation system has several advantages. First, the robot arm automatically aligns the bone saw with the osteotomy plane defined in the preoperative image, offering an accurate initial pose to perform the osteotomy. Second, the movement of the bone saw is restricted to the osteotomy plane, serving as physical guidance. Third, the proposed navigation system visualizes not only virtual models, but also pose parameters of the bone saw relative to the planned osteotomy plane, providing surgeons with multiple forms of visual feedback. In the experiments, our system achieved an average distance deviation of 0.82 ± 0.17 mm and an average angular deviation of 3.77 ± 0.85°. The sub-millimeter distance deviation demonstrated the high osteotomy accuracy of our system.

It is also worth discussing the limitations of the proposed robot-assisted AR-guided surgical navigation system. First, we observed that the saw blade bends during osteotomy, causing angular deviation. Our future work will focus on developing a compensation strategy to reduce the angular deviation, where the blade bending can be detected and then compensated. Second, the transformation estimated by the proposed virtual-physical registration is only valid for the current HoloLens application. Once a new HoloLens application is launched, the registration needs to be performed again. Nevertheless, during the experiments, we found that the average time required by the proposed virtual-physical registration step was less than three minutes. Thus, such a time cost is acceptable and will not interrupt the surgical workflow.

In summary, we proposed a robot-assisted AR-guided surgical navigation system for PAO. Experimental results demonstrated the efficacy of the proposed system.

Funding

This research is supported by the National Key R&D Program of China (Grant No. 2023YFB4706302) and by the National Science Foundation of China (U20A20199).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the Institutional Review Board of School of Biomedical Engineering, Shanghai Jiao Tong University, China (Approval No. 2020031, approved on May 08, 2020).

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| API | Application Programming Interface |
| AR | Augmented Reality |
| CAOS | Computer-assisted Orthopedic Surgeries |
| COS | Coordinate System |
| CT | Computed Tomography |
| DDH | Developmental Dysplasia of the Hip |
| DRB | Dynamic Reference Base |
| EM | Electromagnetic |
| FoV | Field of View |
| PAO | Periacetabular Osteotomy |
| MR | Magnetic Resonance |
| mADE | mean Absolute Distance Error |
| OST-HMD | Optical See-through Head-mounted Display |
| SOTA | State-of-the-art |
| 3D | Three-dimensional |

References

- Ahmad, S.S.; Giebel, G.M.; Perka, C.; Meller, S.; Pumberger, M.; Hardt, S.; Stöckle, U.; Konrads, C. Survival of the dysplastic hip after periacetabular osteotomy: A meta-analysis. Hip International 2023, 33, 306–312. [Google Scholar] [CrossRef] [PubMed]

- Troelsen, A. Assessment of adult hip dysplasia and the outcome of surgical treatment. Dan Med J 2012, 59, B4450. [Google Scholar] [PubMed]

- Liu, L.; Ecker, T.M.; Siebenrock, K.A.; Zheng, G. Computer assisted planning, simulation and navigation of periacetabular osteotomy. In Proceedings of the International Conference on Medical Imaging and Augmented Reality. Springer; 2016; pp. 15–26. [Google Scholar]

- Pflugi, S.; Liu, L.; Ecker, T.M.; Schumann, S.; Larissa Cullmann, J.; Siebenrock, K.; Zheng, G. A cost-effective surgical navigation solution for periacetabular osteotomy (PAO) surgery. International journal of computer assisted radiology and surgery 2016, 11, 271–280. [Google Scholar] [CrossRef] [PubMed]

- Grupp, R.B.; Hegeman, R.A.; Murphy, R.J.; Alexander, C.P.; Otake, Y.; McArthur, B.A.; Armand, M.; Taylor, R.H. Pose estimation of periacetabular osteotomy fragments with intraoperative X-ray navigation. IEEE transactions on biomedical engineering 2019, 67, 441–452. [Google Scholar] [CrossRef] [PubMed]

- Liu, L.; Siebenrock, K.; Nolte, L.P.; Zheng, G. Computer-assisted planning, simulation, and navigation system for periacetabular osteotomy. Intelligent Orthopaedics: artificial intelligence and smart image-guided Technology for Orthopaedics.

- Pflugi, S.; Vasireddy, R.; Lerch, T.; Ecker, T.M.; Tannast, M.; Boemke, N.; Siebenrock, K.; Zheng, G. Augmented marker tracking for peri-acetabular osteotomy surgery. International journal of computer assisted radiology and surgery 2018, 13, 291–304. [Google Scholar] [CrossRef] [PubMed]

- Inaba, Y.; Kobayashi, N.; Ike, H.; Kubota, S.; Saito, T. Computer-assisted rotational acetabular osteotomy for patients with acetabular dysplasia. Clinics in orthopedic surgery 2016, 8, 99. [Google Scholar] [CrossRef] [PubMed]

- Sun, M.; Lin, L.; Chen, X.; Xu, C.; Zin, M.A.; Han, W.; Chai, G. Robot-assisted mandibular angle osteotomy using electromagnetic navigation. Annals of Translational Medicine 2021, 9.

- Tian, H.; Duan, X.; Han, Z.; Cui, T.; He, R.; Wen, H.; Li, C. Virtual-fixtures based shared control method for curve-cutting with a reciprocating saw in robot-assisted osteotomy. IEEE Transactions on Automation Science and Engineering 2023.

- Shao, L.; Li, X.; Fu, T.; Meng, F.; Zhu, Z.; Zhao, R.; Huo, M.; Xiao, D.; Fan, J.; Lin, Y.; et al. Robot-assisted augmented reality surgical navigation based on optical tracking for mandibular reconstruction surgery. Medical Physics 2024, 51, 363–377.

- Liebmann, F.; Roner, S.; von Atzigen, M.; Scaramuzza, D.; Sutter, R.; Snedeker, J.; Farshad, M.; Fürnstahl, P. Pedicle screw navigation using surface digitization on the Microsoft HoloLens. International Journal of Computer Assisted Radiology and Surgery 2019, 14, 1157–1165.

- Hoch, A.; Liebmann, F.; Carrillo, F.; Farshad, M.; Rahm, S.; Zingg, P.O.; Fürnstahl, P. Augmented reality based surgical navigation of the periacetabular osteotomy of Ganz–a pilot cadaveric study. In Proceedings of the International Workshop on Medical and Service Robots. Springer; 2020; pp. 192–201.

- Sun, Q.; Mai, Y.; Yang, R.; Ji, T.; Jiang, X.; Chen, X. Fast and accurate online calibration of optical see-through head-mounted display for AR-based surgical navigation using Microsoft HoloLens. International Journal of Computer Assisted Radiology and Surgery 2020, 15, 1907–1919.

- Tu, P.; Gao, Y.; Lungu, A.J.; Li, D.; Wang, H.; Chen, X. Augmented reality based navigation for distal interlocking of intramedullary nails utilizing Microsoft HoloLens 2. Computers in Biology and Medicine 2021, 133, 104402.

- Tu, P.; Qin, C.; Guo, Y.; Li, D.; Lungu, A.J.; Wang, H.; Chen, X. Ultrasound image guided and mixed reality-based surgical system with real-time soft tissue deformation computing for robotic cervical pedicle screw placement. IEEE Transactions on Biomedical Engineering 2022, 69, 2593–2603.

- Tu, P.; Wang, H.; Joskowicz, L.; Chen, X. A multi-view interactive virtual-physical registration method for mixed reality based surgical navigation in pelvic and acetabular fracture fixation. International Journal of Computer Assisted Radiology and Surgery 2023, 18, 1715–1724.

- Qian, L.; Wu, J.Y.; DiMaio, S.P.; Navab, N.; Kazanzides, P. A review of augmented reality in robotic-assisted surgery. IEEE Transactions on Medical Robotics and Bionics 2019, 2, 1–16.

- Bhagvath, P.V.; Mercier, P.; Hall, A.F. Design and Accuracy Assessment of an Automated Image-Guided Robotic Osteotomy System. IEEE Transactions on Medical Robotics and Bionics 2023.

- Low, K.L. Linear least-squares optimization for point-to-plane ICP surface registration. Chapel Hill, University of North Carolina 2004, 4, 1–3.

- Sun, W.; Liu, J.; Zhao, Y.; Zheng, G. A Novel Point Set Registration-Based Hand–Eye Calibration Method for Robot-Assisted Surgery. Sensors 2022, 22, 8446.

- Lorensen, W.E.; Cline, H.E. Marching cubes: A high resolution 3D surface construction algorithm. Computer Graphics 1987, 21, 163–169.

- Sorkine-Hornung, O.; Rabinovich, M. Least-squares rigid motion using SVD. Computing 2017, 1, 1–5.

- Yan, B.; Zhang, W.; Cai, L.; Zheng, L.; Bao, K.; Rao, Y.; Yang, L.; Ye, W.; Guan, P.; Yang, W.; et al. Optics-guided robotic system for dental implant surgery. Chinese Journal of Mechanical Engineering 2022, 35, 55.

Figure 1.

An overview of the proposed robot-assisted AR surgical navigation system for PAO.

Figure 2.

A schematic illustration of preoperative planning, where , , , and are calculated based on , , , and .

Figure 3.

A schematic illustration of bone saw calibration. (a) Digitizing the four corner points using a trackable pointer; (b) Calculating , , , and based on , , , and .

Figure 4.

The proposed virtual-physical registration. (a) Virtual models of the optical marker are loaded in the virtual space using different poses; (b) The optical marker attached to the robot flange is aligned with each virtual model rendered in the HoloLens.

Figure 5.

AR guidance during the PAO procedure. (a) The proposed AR navigation system provides not only visualization of virtual models, but also display of the pose parameters of the bone saw relative to the osteotomy plane. (b) The definitions of the pose parameters.

Figure 6.

Experimental setup of the evaluation of virtual-physical registration accuracy. In this experiment, we defined validation points in the virtual space. After virtual-physical registration, we used a trackable pointer to digitize these validation points, and calculated mADE as a metric to evaluate the virtual-physical registration accuracy.
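The accuracy metric in this caption can be illustrated with a minimal sketch. It assumes that mADE is the mean Euclidean distance between each validation point defined in the virtual space and its digitized counterpart, with both point sets expressed in the same coordinate frame and in millimetres. The function name `made` and the sample coordinates are hypothetical and are not taken from the paper.

```python
import math


def made(planned_points, digitized_points):
    """Mean Euclidean distance between paired 3D points.

    Assumption: both lists are ordered so that element i of one list
    corresponds to element i of the other, and share one coordinate frame.
    """
    if not planned_points or len(planned_points) != len(digitized_points):
        raise ValueError("point lists must be non-empty and of equal length")
    # math.dist computes the Euclidean distance between two points (Python 3.8+).
    distances = [math.dist(p, q) for p, q in zip(planned_points, digitized_points)]
    return sum(distances) / len(distances)


# Hypothetical example: two validation points and their digitized positions (mm).
planned = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0)]
digitized = [(0.0, 0.0, 3.0), (10.0, 4.0, 0.0)]
error_mm = made(planned, digitized)  # distances are 3 mm and 4 mm
```

The per-point distances can also be kept to report the maximum and minimum alongside the average.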

Figure 7.

The definition of evaluation metrics for osteotomy accuracy. (a) The distance deviation, calculated as the average distance from the nine control points to the planned osteotomy plane; (b) The angular deviation, calculated as the angle between the normal vector of the real osteotomy plane and that of the planned plane.
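The two metrics in this caption can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the planned plane is represented by a point on the plane and a normal vector, point-to-plane distances are unsigned, and the angle is folded into 0 to 90 degrees because the sign of a plane normal is arbitrary. All function names and sample values are hypothetical.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _norm(a):
    return math.sqrt(_dot(a, a))


def distance_deviation(control_points, plane_point, plane_normal):
    """Average unsigned distance from control points to the planned plane."""
    n_len = _norm(plane_normal)
    distances = []
    for p in control_points:
        offset = [pi - oi for pi, oi in zip(p, plane_point)]
        # Projection of the offset onto the unit normal gives the distance.
        distances.append(abs(_dot(offset, plane_normal)) / n_len)
    return sum(distances) / len(distances)


def angular_deviation(real_normal, planned_normal):
    """Angle in degrees between two plane normals, ignoring normal sign."""
    cos_theta = _dot(real_normal, planned_normal) / (
        _norm(real_normal) * _norm(planned_normal)
    )
    # abs() treats n and -n as the same plane; min() guards against rounding.
    cos_theta = min(1.0, abs(cos_theta))
    return math.degrees(math.acos(cos_theta))


# Hypothetical example: planned plane z = 0, two control points, tilted real plane.
d_mm = distance_deviation([(0.0, 0.0, 1.0), (1.0, 0.0, -3.0)],
                          (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
theta_deg = angular_deviation((0.0, 1.0, 1.0), (0.0, 0.0, 1.0))
```

In the experiment described by the caption, `control_points` would hold the nine control points and `real_normal` the normal of the real osteotomy plane.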

Figure 8.

Visualization of the alignment between the virtual model (yellow) and the pelvis phantom (white) using different methods. In this figure, misalignment is highlighted using red arrows. Compared with other methods, the proposed method achieved the most accurate virtual-physical registration.

Figure 9.

Visualization of qualitative results of osteotomy, where real osteotomy planes and planned osteotomy planes are visualized in orange and yellow, respectively.

Figure 10.

AR guidance during the osteotomy procedure: (a) AR display when the bone saw was far away from the planned osteotomy plane, where pose parameters turned red; (b) AR display when the deviations between the bone saw and the osteotomy plane were low.

Table 1.

Comparison with SOTA methods for virtual-physical registration.

| Method | mADE (mm): Average | mADE (mm): Max | mADE (mm): Min |
|---|---|---|---|
| Sun et al. [14] | | 20.53 | 1.83 |
| Tu et al. [15] | | 10.91 | 3.41 |
| Tu et al. [17] | | 9.53 | 3.94 |
| Ours | | 2.71 | 1.38 |

Table 2.

Experimental results of the ablation study. (FR: freehand registration; SRR: single-view robotic registration)

| Method | Controlling robot arm | Multi-view observation | mADE (mm): Average | mADE (mm): Max | mADE (mm): Min |
|---|---|---|---|---|---|
| FR | | | | 9.55 | 3.66 |
| SRR | √ | | | 11.65 | 2.23 |
| Ours | √ | √ | | 2.71 | 1.38 |

Table 3.

Osteotomy accuracy achieved by the proposed robot-assisted AR surgical navigation system.

| Case | | |
|---|---|---|
| 1 | | 4.77 |
| 2 | | 3.14 |
| 3 | | 4.79 |
| 4 | | 2.75 |
| 5 | | 3.41 |
| Average | | |

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).