Submitted: 14 June 2024

Posted: 17 June 2024


Abstract

Keywords:

1. Introduction

2. Related Works

2.1. Dynamic Sparse Training

2.2. Cannistraci-Hebb Theory and Network Shape Intelligence

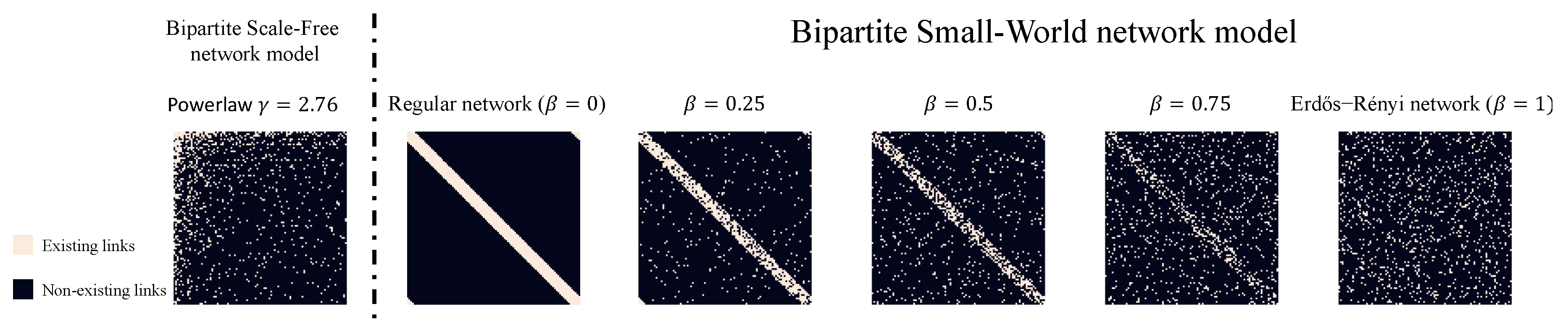

2.3. Bipartite Scale-Free Model and Bipartite Small-World Model

2.4. Correlated Sparse Topological Initialization

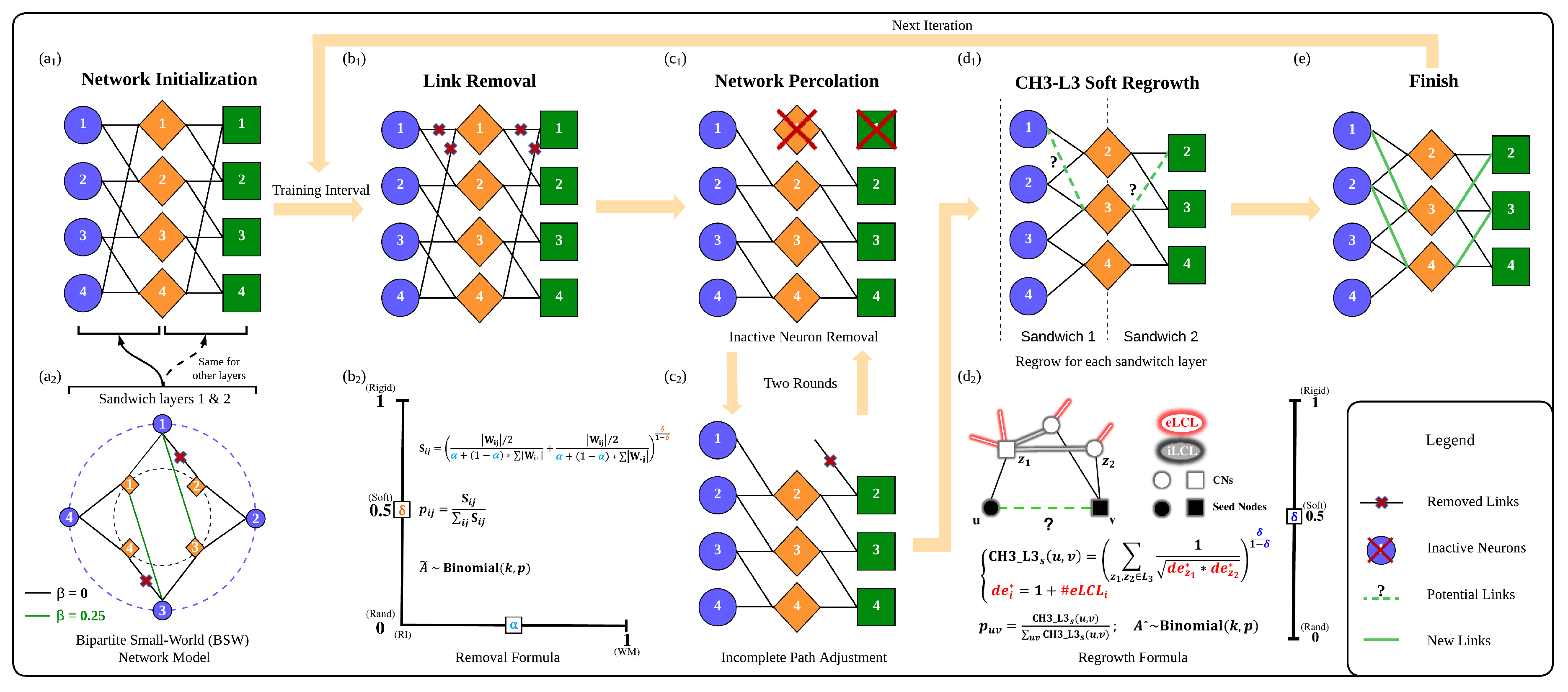

3. Network Science Modeling Via Cannistraci-Hebb Training Soft Rule

3.1. Sparse Topological Initialization with Network Science Models

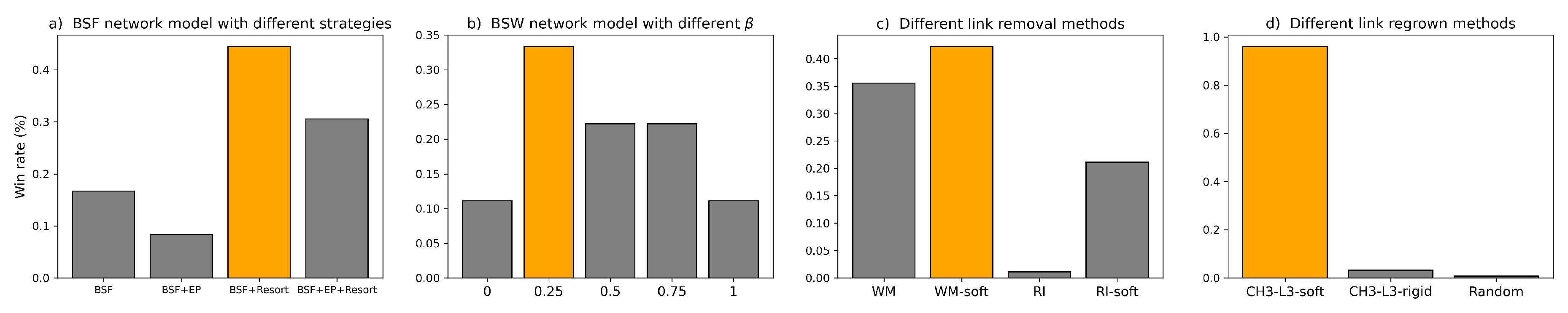

3.1.1. Equal Partition and Neuron Resorting to Enhance BSF Initialization

- The BSF model generates hub nodes randomly. This random assignment can place hub nodes on less significant inputs, which leads to a less effective initialization. The effect is particularly detrimental in CHT, which relies solely on topology information to regrow new links.

- As demonstrated in CHT, in the final network the hub nodes on the output side of one layer should correspond to the hub nodes on the input side of the subsequent layer. In other words, hub nodes should have a high degree on both sides of a layer. The random hub selection of the BSF model disrupts this correspondence and significantly reduces the number of Credit Assignment Paths (CAP) [9] in the model. A CAP is defined as a chain of transformations from input to output. The CAP count measures the number of links that pass through the hub nodes in the middle layers.

- Equal Partitioning of the First Layer: We begin by generating a BSF model and then rewire the connections from the input layer to the first hidden layer. We keep the out-degrees of the output neurons fixed and randomly sample new connections to the input neurons until the in-degree of every input neuron reaches the input layer's average in-degree. This ensures that all input neurons are assigned equal importance, while the output neurons retain their power-law degree distribution.

- Resorting Middle-Layer Neurons: Because the hub nodes of consecutive layers do not match, we propose permuting the neurons between the output of one layer and the input of the next according to their degree. After resorting, a high-degree output neuron is more likely to coincide with a high-degree input neuron of the subsequent layer, which increases the number of CAPs.
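The two fixes above can be sketched on small bipartite layers stored as sets of (source, destination) links. This is a minimal pure-Python illustration under stated assumptions, not the authors' implementation:

- `toy_hub_layer` is a hypothetical stand-in for the BSF generator. Its Zipf-like sampling only mimics randomly placed hubs; it is not the BSF model.
- `equal_partition` fills each output neuron's links greedily, Havel–Hakimi style, so that every input neuron ends at the average in-degree.
- `path_count` counts input-to-output paths through the hidden layer, i.e. the sum over hidden neurons of (in-degree × out-degree). It is only an assumed proxy for the CAP count.

```python
import random

# A sparse layer is a set of (src, dst) links between two neuron groups.


def degrees(edges, n, side):
    """Degree of each of n neurons on one side (0 = source, 1 = destination)."""
    deg = [0] * n
    for e in edges:
        deg[e[side]] += 1
    return deg


def toy_hub_layer(n_src, n_dst, n_links, rng):
    """HYPOTHETICAL stand-in for a BSF layer (not the BSF model itself):
    links are drawn with Zipf-like weights whose hubs sit at random positions."""
    w_src = [1.0 / (k + 1) for k in range(n_src)]
    w_dst = [1.0 / (k + 1) for k in range(n_dst)]
    rng.shuffle(w_src)  # hubs land on random neurons
    rng.shuffle(w_dst)
    edges = set()
    while len(edges) < n_links:
        i = rng.choices(range(n_src), weights=w_src)[0]
        j = rng.choices(range(n_dst), weights=w_dst)[0]
        edges.add((i, j))
    return edges


def equal_partition(edges, n_in, n_out, rng):
    """Rewire the first layer: every output neuron keeps its degree, while
    every input neuron gets the layer's average degree (floor or ceil)."""
    out_deg = degrees(edges, n_out, 1)
    total = len(edges)
    cap = [total // n_in] * n_in  # remaining slots per input neuron
    for i in rng.sample(range(n_in), total % n_in):
        cap[i] += 1  # spread the remainder so capacities sum to `total`
    new_edges = set()
    for j in range(n_out):
        order = list(range(n_in))
        rng.shuffle(order)  # random tie-breaking among equal capacities
        order.sort(key=lambda i: cap[i], reverse=True)  # stable sort
        # Linking to the inputs with the most spare slots (Havel-Hakimi style)
        # never gets stuck when every output degree is <= n_in.
        for i in order[:out_deg[j]]:
            new_edges.add((i, j))
            cap[i] -= 1
    return new_edges


def resort_hidden(layer1, layer2, n_hidden):
    """Relabel layer2's input neurons so that the k-th highest-degree output
    of layer1 occupies the k-th highest-degree input slot of layer2."""
    d1 = degrees(layer1, n_hidden, 1)
    d2 = degrees(layer2, n_hidden, 0)
    by_d1 = sorted(range(n_hidden), key=lambda h: d1[h], reverse=True)
    by_d2 = sorted(range(n_hidden), key=lambda h: d2[h], reverse=True)
    perm = dict(zip(by_d2, by_d1))  # old input label -> new label
    return {(perm[h], o) for h, o in layer2}


def path_count(layer1, layer2, n_hidden):
    """Assumed CAP proxy: input-to-output paths via the hidden layer."""
    d1 = degrees(layer1, n_hidden, 1)
    d2 = degrees(layer2, n_hidden, 0)
    return sum(a * b for a, b in zip(d1, d2))


rng = random.Random(0)
n_in, n_hid, n_out = 20, 30, 10
l1 = toy_hub_layer(n_in, n_hid, 120, rng)
l2 = toy_hub_layer(n_hid, n_out, 90, rng)
l1_eq = equal_partition(l1, n_in, n_hid, rng)
l2_sorted = resort_hidden(l1_eq, l2, n_hid)
print(path_count(l1_eq, l2, n_hid), "->", path_count(l1_eq, l2_sorted, n_hid))
```

Pairing the two hidden-layer degree sequences in the same sorted order maximizes the sum of their products (the rearrangement inequality). Under this proxy, therefore, the resorting step can only increase the path count.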

3.1.2. Why Is the BSW Model the Best Network Science-Based Initialization Approach?

3.2. Cannistraci-Hebb Soft Removal and Regrowth

3.2.1. Soft Link Removal Alternating between Weight Magnitude and Relative Importance

3.2.2. Network Percolation and Extension to Transformer

3.2.3. Soft Link Regrowth Based on CH3-L3 Network Automata

4. Experiments

4.1. Setup

4.2. Baseline Methods

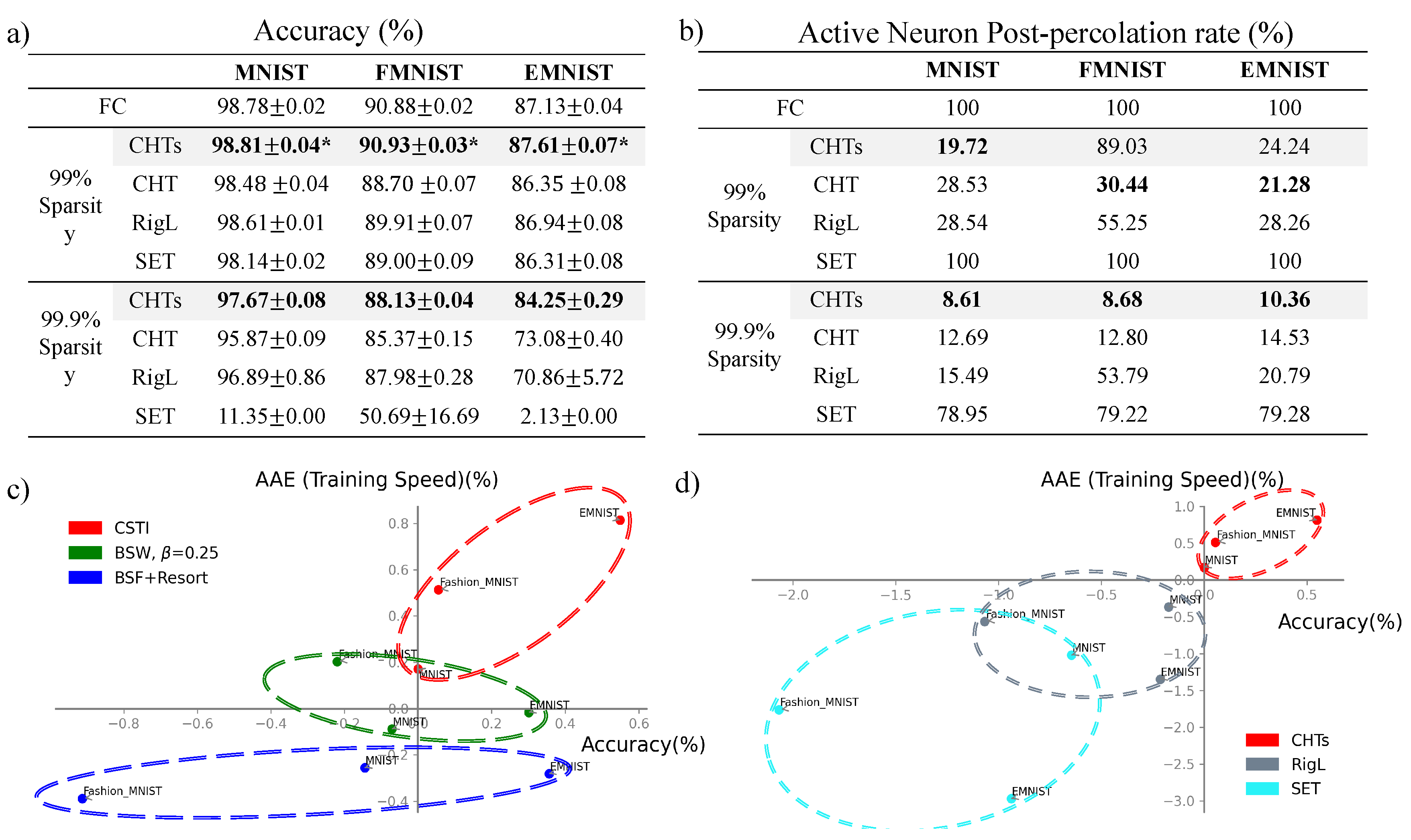

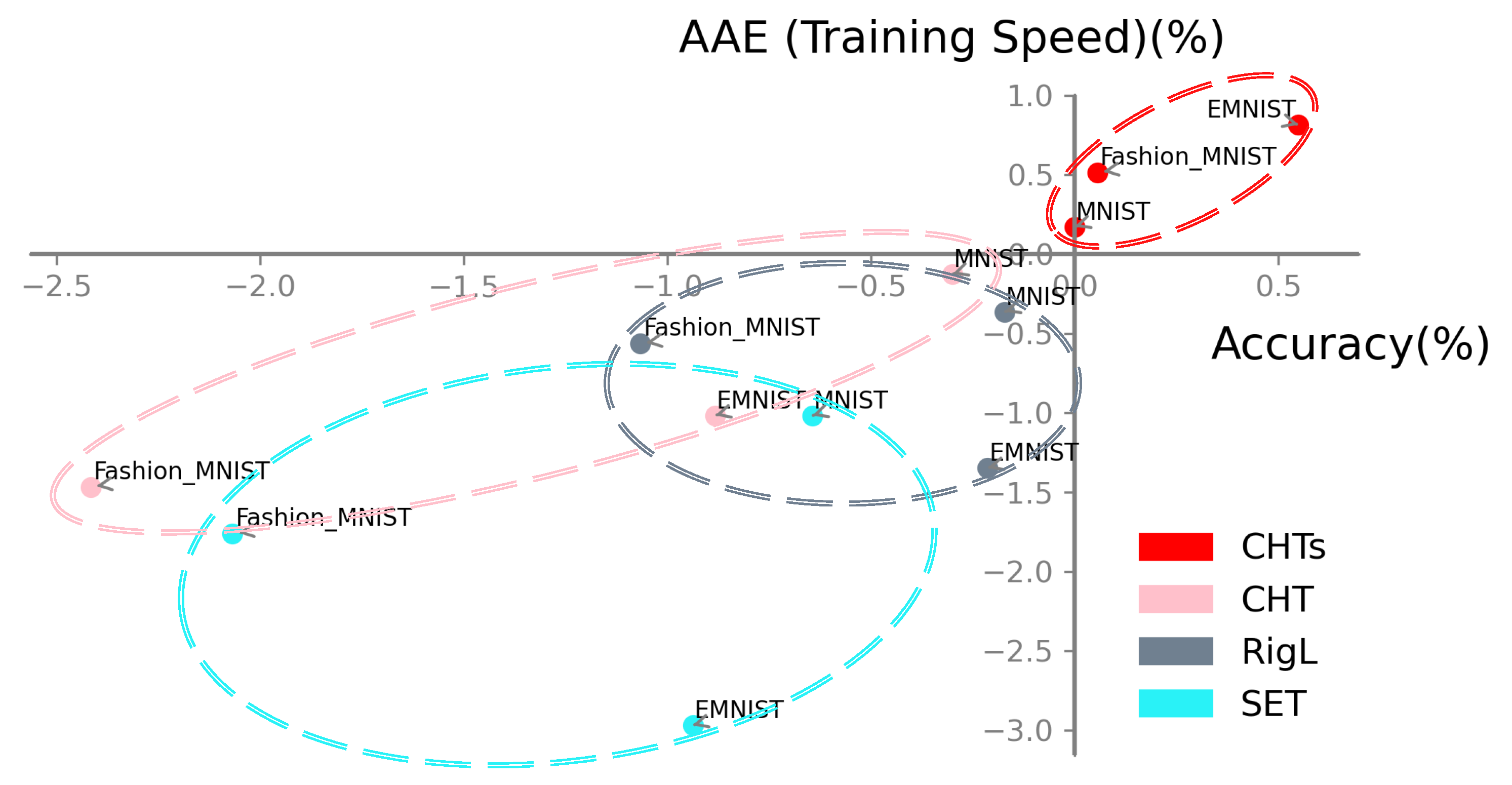

4.3. Results on MLP

4.3.1. Ablation Test

4.3.2. Main Results

4.4. Results on Transformer

5. Conclusion and Discussion

Appendix A. Limitations and Future Work

Appendix B. Broader Impact

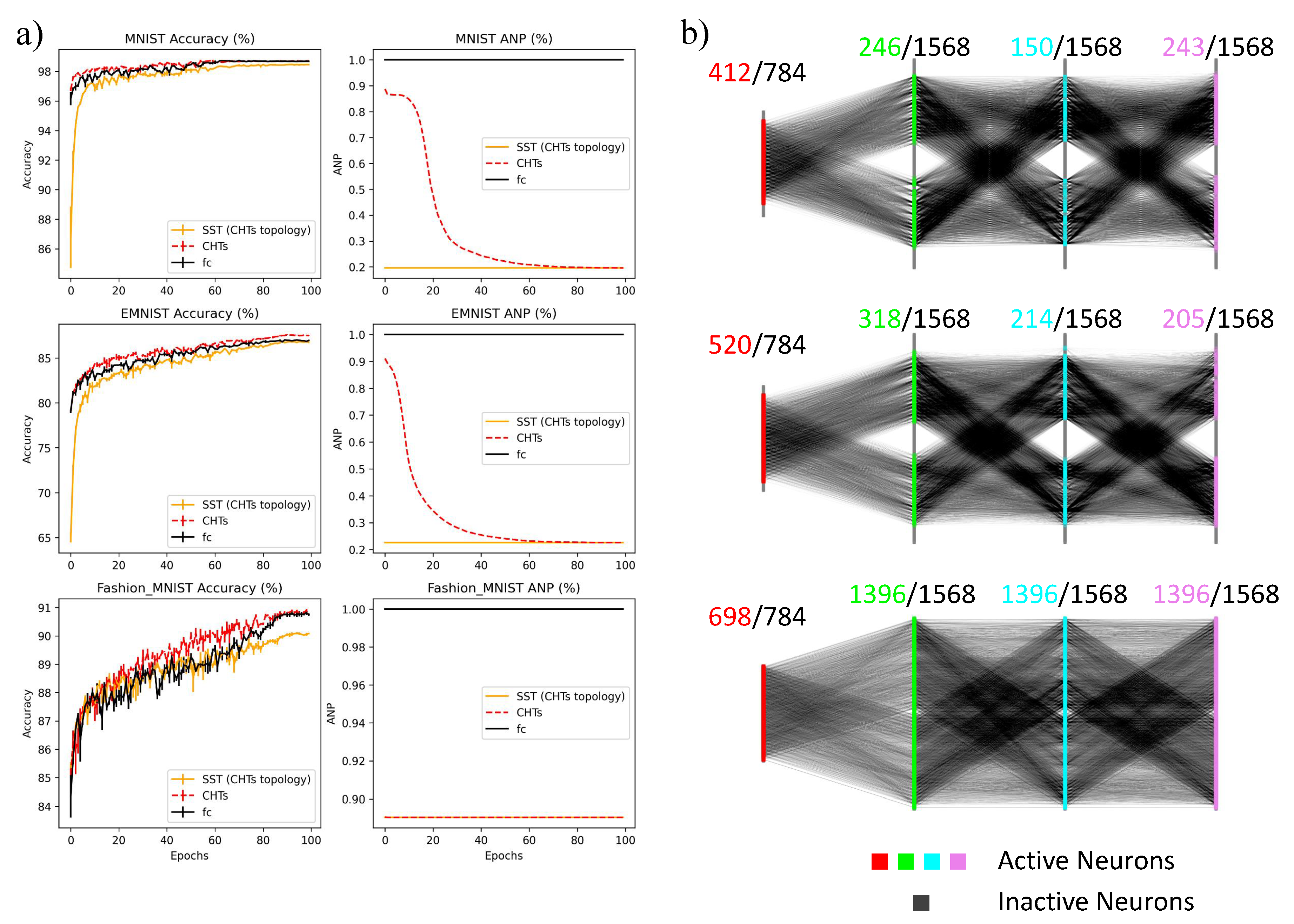

Appendix C. Comparative Analysis of CHTs and SST Using CHTs’ Topology

| Hyper-parameter | MLP |
|---|---|
| Hidden Dimension | 1568 |
| # Hidden Layers | 3 |
| Batch Size | 32 |
| Training Epochs | 100 |
| LR Decay Method | Linear |
| Learning Rate | 0.025 |
| Update Interval (for DST) | 1 |
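Reading the MLP settings together, a linear decay starting from the listed learning rate can be sketched as follows. The table does not state the end value or the update granularity. This sketch therefore assumes a per-epoch decay from 0.025 toward 0 over the 100 training epochs, and `linear_lr` is a hypothetical helper.

```python
def linear_lr(epoch, base_lr=0.025, total_epochs=100):
    """Learning rate for a 0-indexed epoch, ASSUMING a linear decay from
    base_lr toward 0 over total_epochs (not taken from the paper's code)."""
    if not 0 <= epoch < total_epochs:
        raise ValueError("epoch must be in [0, total_epochs)")
    return base_lr * (1 - epoch / total_epochs)


for epoch in (0, 50, 99):
    print(epoch, linear_lr(epoch))
```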

| Hyper-parameter | Multi30k | IWSLT14 | WMT17 |
|---|---|---|---|
| Embedding Dimension | 512 | 512 | 512 |
| Feed-forward Dimension | 1024 | 2048 | 2048 |
| Batch Size | 1024 tokens | 10240 tokens | 12000 tokens |
| Training Steps | 20000 | 20000 | 80000 |
| Dropout | 0.1 | 0.1 | 0.1 |
| Attention Dropout | 0.1 | 0.1 | 0.1 |
| Max Gradient Norm | 0 | 0 | 0 |
| Warmup Steps | 3000 | 6000 | 8000 |
| Decay Method | inoam | inoam | inoam |
| Label Smoothing | 0.1 | 0.1 | 0.1 |
| Layer Number | 6 | 6 | 6 |
| Head Number | 8 | 8 | 8 |
| Learning Rate | 0.25 | 2 | 2 |
| Update Interval (for DST) | 200 | 100 | 100 |
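The Transformer learning rate, embedding dimension and warmup steps interact through the decay schedule. This sketch rests on two assumptions, neither confirmed by the source. First, it assumes that `inoam` denotes the Noam schedule of Vaswani et al. (2017), i.e. linear warmup followed by inverse-square-root decay. Second, it assumes the table's Learning Rate acts as a multiplicative scale factor. Under those assumptions, the per-step rate can be written as below; `noam_lr` is a hypothetical helper.

```python
def noam_lr(step, base_lr, d_model=512, warmup=8000):
    """Noam schedule (Vaswani et al., 2017) scaled by base_lr (ASSUMED meaning
    of 'inoam'): linear warmup for `warmup` steps, then step ** -0.5 decay."""
    step = max(step, 1)  # avoid 0 ** -0.5 at the very first step
    return base_lr * d_model ** -0.5 * min(step ** -0.5, step * warmup ** -1.5)


# (embedding dimension, learning rate, warmup steps) from the table above.
settings = {
    "Multi30k": (512, 0.25, 3000),
    "IWSLT14": (512, 2.0, 6000),
    "WMT17": (512, 2.0, 8000),
}
for name, (d_model, base_lr, warmup) in settings.items():
    peak = noam_lr(warmup, base_lr, d_model, warmup)
    print(f"{name}: peak lr {peak:.2e} at step {warmup}")
```

Under this reading, the rate peaks exactly at the last warmup step. There the two terms inside `min` coincide, giving base_lr × (d_model × warmup)^-0.5.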

References

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and efficient foundation language models. arXiv preprint arXiv:2302.13971, 2023.

- Zhang, S.; Roller, S.; Goyal, N.; Artetxe, M.; Chen, M.; Chen, S.; Dewan, C.; Diab, M.; Li, X.; Lin, X.V.; et al. OPT: Open pre-trained transformer language models. arXiv preprint arXiv:2205.01068, 2022.

- Drachman, D.A. Do we have brain to spare? 2005.

- Walsh, C.A. Peter Huttenlocher (1931–2013). Nature 2013, 502, 172.

- Mocanu, D.C.; Mocanu, E.; Stone, P.; Nguyen, P.H.; Gibescu, M.; Liotta, A. Scalable training of artificial neural networks with adaptive sparse connectivity inspired by network science. Nature Communications 2018, 9, 1–12.

- Jayakumar, S.; Pascanu, R.; Rae, J.; Osindero, S.; Elsen, E. Top-KAST: Top-K always sparse training. Advances in Neural Information Processing Systems 2020, 33, 20744–20754.

- Evci, U.; Gale, T.; Menick, J.; Castro, P.S.; Elsen, E. Rigging the Lottery: Making All Tickets Winners. Proceedings of the 37th International Conference on Machine Learning, ICML 2020, 13–18 July 2020, Virtual Event; Proceedings of Machine Learning Research, Vol. 119; PMLR, 2020; pp. 2943–2952.

- Yuan, G.; Ma, X.; Niu, W.; Li, Z.; Kong, Z.; Liu, N.; Gong, Y.; Zhan, Z.; He, C.; Jin, Q.; et al. MEST: Accurate and fast memory-economic sparse training framework on the edge. Advances in Neural Information Processing Systems 2021, 34, 20838–20850.

- Zhang, Y.; Zhao, J.; Wu, W.; Muscoloni, A.; Cannistraci, C.V. Epitopological learning and Cannistraci-Hebb network shape intelligence brain-inspired theory for ultra-sparse advantage in deep learning. The Twelfth International Conference on Learning Representations, 2024.

- Han, S.; Mao, H.; Dally, W.J. Deep Compression: Compressing Deep Neural Network with Pruning, Trained Quantization and Huffman Coding. 4th International Conference on Learning Representations, ICLR 2016, San Juan, Puerto Rico, May 2-4, 2016, Conference Track Proceedings; Bengio, Y.; LeCun, Y., Eds., 2016.

- Frantar, E.; Alistarh, D. Massive language models can be accurately pruned in one-shot. arXiv preprint arXiv:2301.00774, 2023.

- Zhang, Y.; Bai, H.; Lin, H.; Zhao, J.; Hou, L.; Cannistraci, C.V. Plug-and-Play: An Efficient Post-training Pruning Method for Large Language Models. The Twelfth International Conference on Learning Representations, 2024.

- Cannistraci, C.V.; Alanis-Lobato, G.; Ravasi, T. From link-prediction in brain connectomes and protein interactomes to the local-community-paradigm in complex networks. Scientific Reports 2013, 3, 1613.

- Daminelli, S.; Thomas, J.M.; Durán, C.; Cannistraci, C.V. Common neighbours and the local-community-paradigm for topological link prediction in bipartite networks. New Journal of Physics 2015, 17, 113037.

- Durán, C.; Daminelli, S.; Thomas, J.M.; Haupt, V.J.; Schroeder, M.; Cannistraci, C.V. Pioneering topological methods for network-based drug–target prediction by exploiting a brain-network self-organization theory. Briefings in Bioinformatics 2017, 19, 1183–1202.

- Cannistraci, C.V. Modelling Self-Organization in Complex Networks Via a Brain-Inspired Network Automata Theory Improves Link Reliability in Protein Interactomes. Scientific Reports 2018, 8.

- Narula, V.; et al. Can local-community-paradigm and epitopological learning enhance our understanding of how local brain connectivity is able to process, learn and memorize chronic pain? Applied Network Science 2017, 2.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Cohen, G.; Afshar, S.; Tapson, J.; Van Schaik, A. EMNIST: Extending MNIST to handwritten letters. 2017 International Joint Conference on Neural Networks (IJCNN); IEEE, 2017; pp. 2921–2926.

- Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-MNIST: A novel image dataset for benchmarking machine learning algorithms. arXiv preprint arXiv:1708.07747, 2017.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Advances in Neural Information Processing Systems 2017, 30.

- Elliott, D.; Frank, S.; Sima’an, K.; Specia, L. Multi30K: Multilingual English-German Image Descriptions. Proceedings of the 5th Workshop on Vision and Language; Association for Computational Linguistics, 2016; pp. 70–74.

- Cettolo, M.; Niehues, J.; Stüker, S.; Bentivogli, L.; Federico, M. Report on the 11th IWSLT evaluation campaign. Proceedings of the 11th International Workshop on Spoken Language Translation: Evaluation Campaign; Federico, M.; Stüker, S.; Yvon, F., Eds.; 2014; pp. 2–17.

- Bojar, O.; Chatterjee, R.; Federmann, C.; Graham, Y.; Haddow, B.; Huang, S.; Huck, M.; Koehn, P.; Liu, Q.; Logacheva, V.; et al. Findings of the 2017 Conference on Machine Translation (WMT17). Association for Computational Linguistics, 2017.

- Abdelhamid, I.; Muscoloni, A.; Rotscher, D.M.; Lieber, M.; Markwardt, U.; Cannistraci, C.V. Network shape intelligence outperforms AlphaFold2 intelligence in vanilla protein interaction prediction. bioRxiv, 2023.

- Prabhu, A.; Varma, G.; Namboodiri, A. Deep expander networks: Efficient deep networks from graph theory. Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 20–35.

- Lee, N.; Ajanthan, T.; Torr, P.H.S. SNIP: Single-Shot Network Pruning based on Connection Sensitivity. 7th International Conference on Learning Representations, ICLR 2019, New Orleans, LA, USA, May 6-9, 2019. OpenReview.net, 2019.

- Dao, T.; Chen, B.; Sohoni, N.S.; Desai, A.; Poli, M.; Grogan, J.; Liu, A.; Rao, A.; Rudra, A.; Ré, C. Monarch: Expressive structured matrices for efficient and accurate training. International Conference on Machine Learning. PMLR, 2022, pp. 4690–4721.

- Stewart, J.; Michieli, U.; Ozay, M. Data-free model pruning at initialization via expanders. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 4518–4523.

- Bellec, G.; Kappel, D.; Maass, W.; Legenstein, R. Deep rewiring: Training very sparse deep networks. arXiv preprint arXiv:1711.05136, 2017.

- Lasby, M.; Golubeva, A.; Evci, U.; Nica, M.; Ioannou, Y. Dynamic Sparse Training with Structured Sparsity. arXiv preprint arXiv:2305.02299, 2023.

- Hebb, D. The Organization of Behavior. New York, 1949.

- Muscoloni, A.; Michieli, U.; Zhang, Y.; Cannistraci, C.V. Adaptive Network Automata Modelling of Complex Networks. Preprints 2022.

- Barabási, A.L.; Albert, R. Emergence of scaling in random networks. Science 1999, 286, 509–512.

- Watts, D.J.; Strogatz, S.H. Collective dynamics of ‘small-world’ networks. Nature 1998, 393, 440–442.

- Erdős, P.; Rényi, A. On random graphs I. Publ. Math. Debrecen 1959, 6, 290–297.

- Sun, M.; Liu, Z.; Bair, A.; Kolter, J.Z. A Simple and Effective Pruning Approach for Large Language Models. arXiv preprint arXiv:2306.11695, 2023.

- Li, M.; Liu, R.R.; Lü, L.; Hu, M.B.; Xu, S.; Zhang, Y.C. Percolation on complex networks: Theory and application. Physics Reports 2021, 907, 1–68.

- Touvron, H.; Martin, L.; Stone, K.; Albert, P.; Almahairi, A.; Babaei, Y.; Bashlykov, N.; Batra, S.; Bhargava, P.; Bhosale, S.; et al. Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv:2307.09288, 2023.

- Lialin, V.; Muckatira, S.; Shivagunde, N.; Rumshisky, A. ReLoRA: High-Rank Training Through Low-Rank Updates. The Twelfth International Conference on Learning Representations, 2024.

- Thangarasa, V.; Gupta, A.; Marshall, W.; Li, T.; Leong, K.; DeCoste, D.; Lie, S.; Saxena, S. SPDF: Sparse pre-training and dense fine-tuning for large language models. Uncertainty in Artificial Intelligence. PMLR, 2023, pp. 2134–2146.

- Frankle, J.; Carbin, M. The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks. International Conference on Learning Representations, 2019.

| Method | Multi30k | IWSLT14 | WMT17 |
|---|---|---|---|
| FC | 31.51 | 24.11 | 25.20 |
| CHTs | 29.97 | 21.98 | 22.43 |
| RigL | 28.52 | 20.73 | 21.20 |
| SET | 28.98 | 20.09 | 20.66 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).