Submitted: 17 June 2024

Posted: 17 June 2024

Abstract

Keywords:

1. Introduction

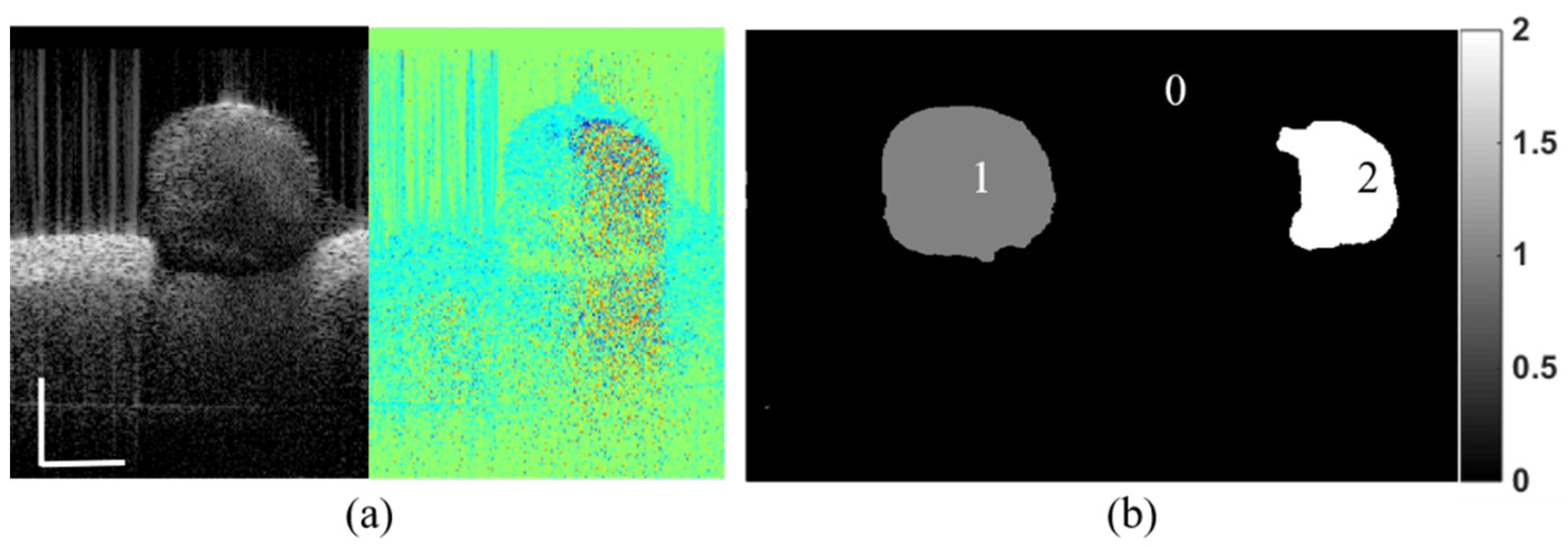

2. Methods

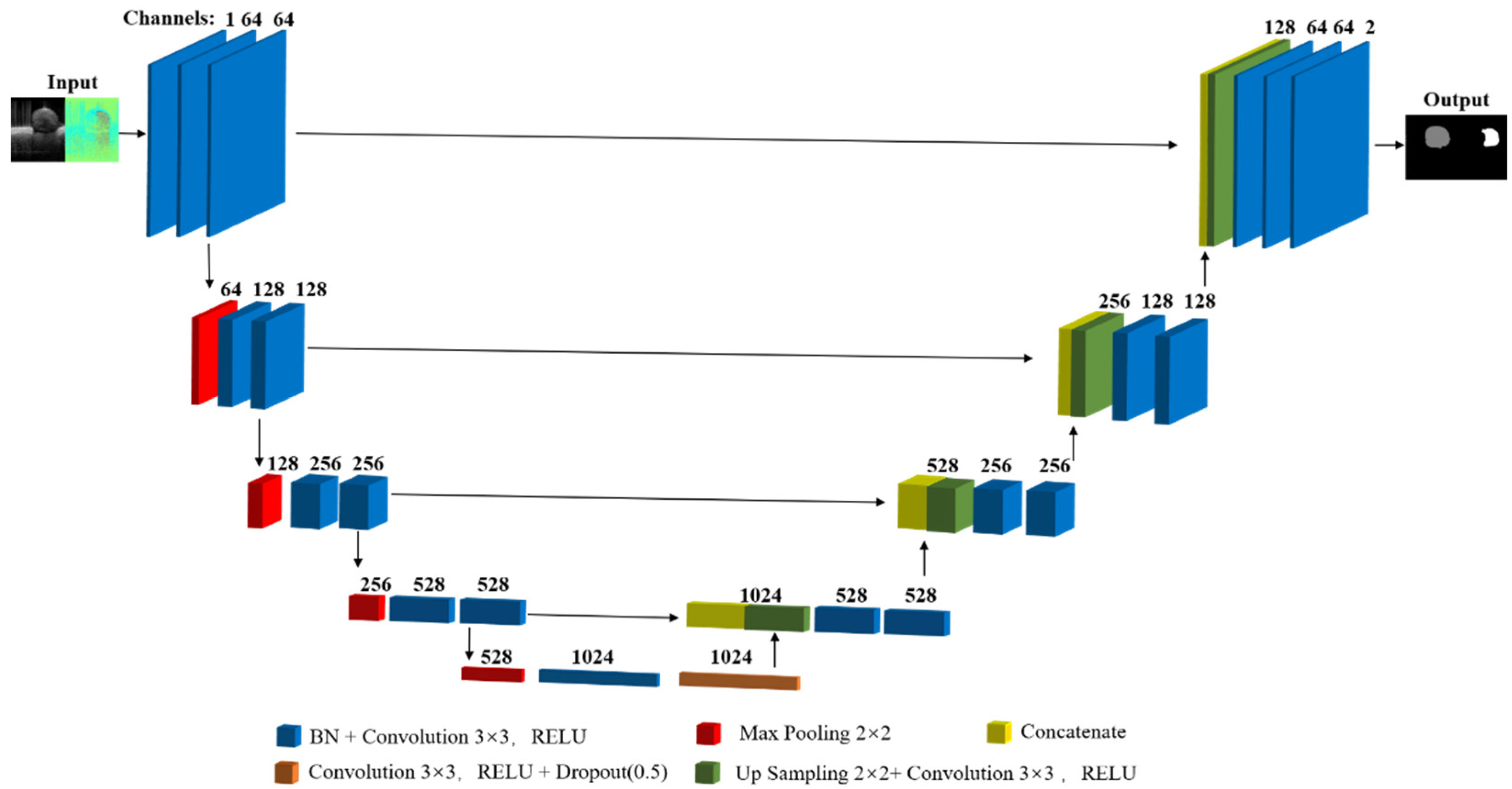

2.1. Model Architecture

2.2. Dataset and Implementation

3. Results

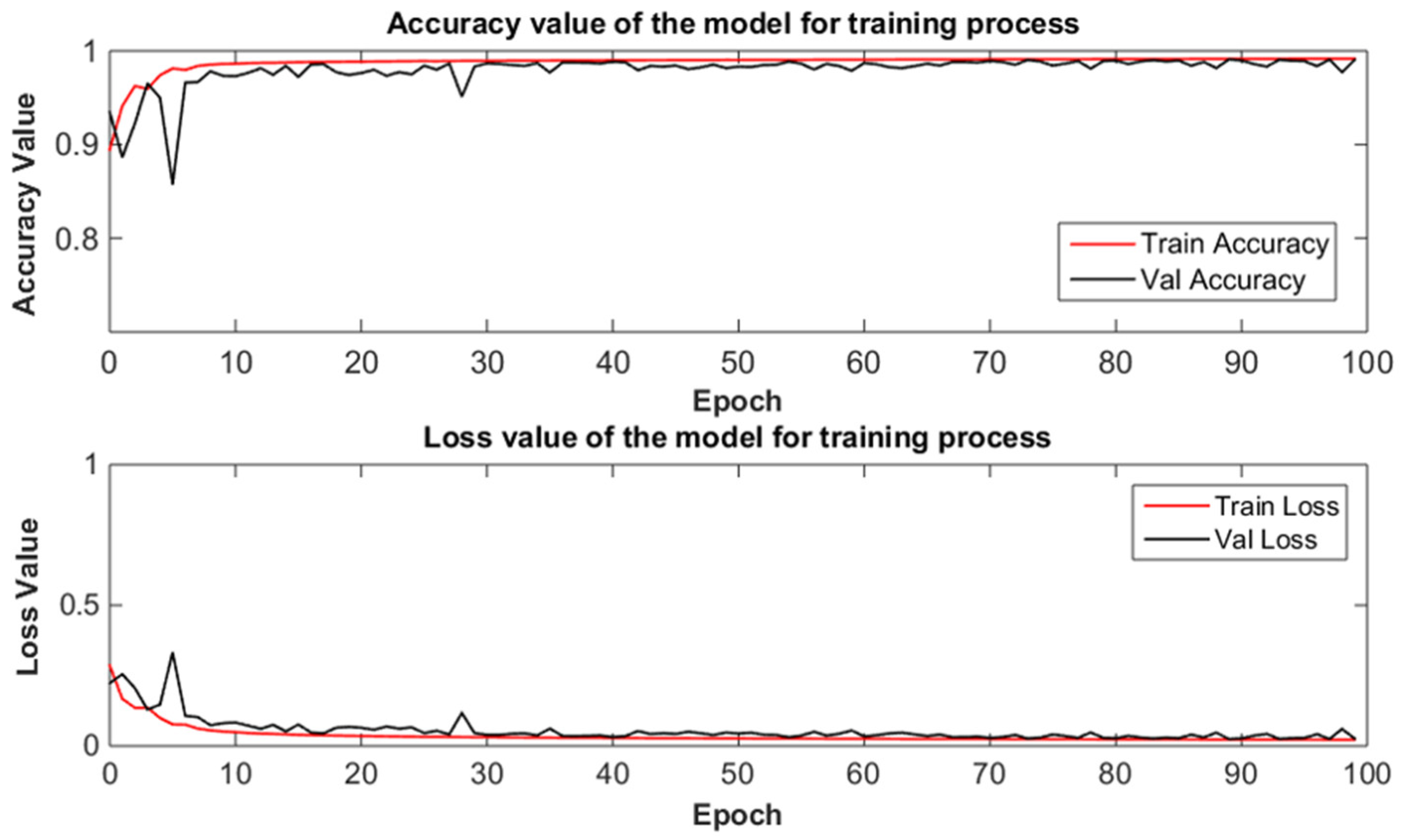

3.1. Training Procedure

3.2. Quantitative Evaluation

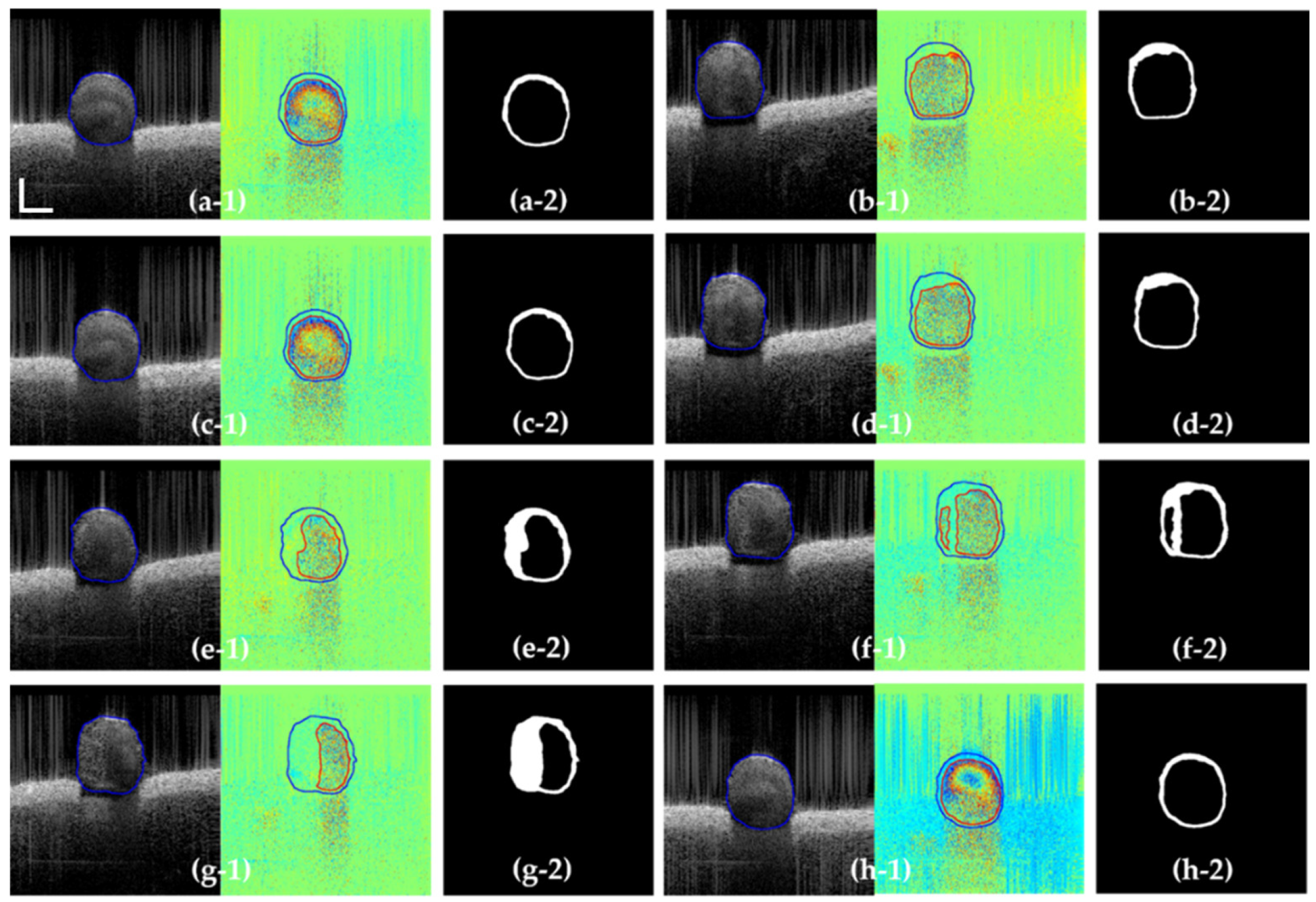

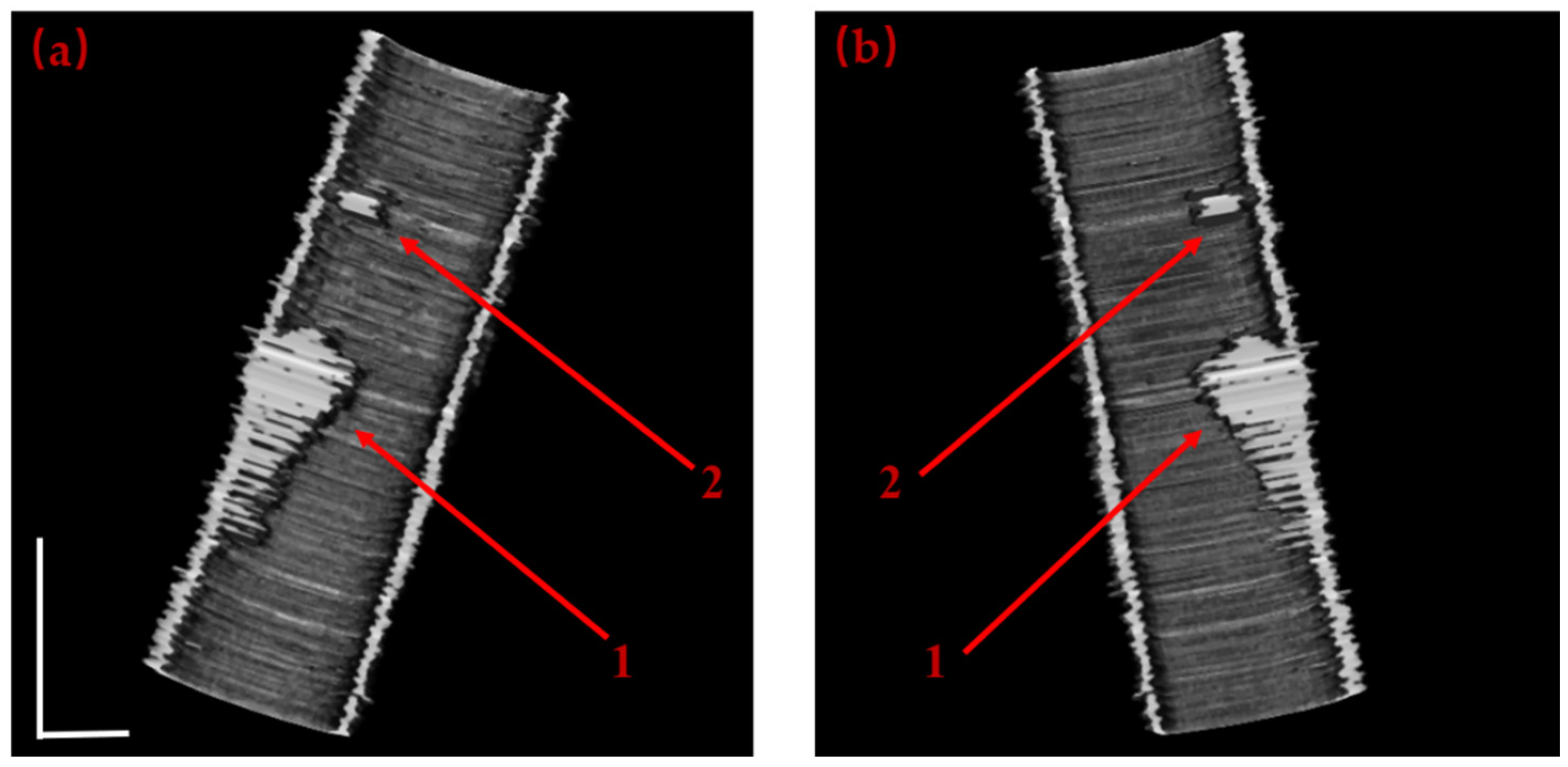

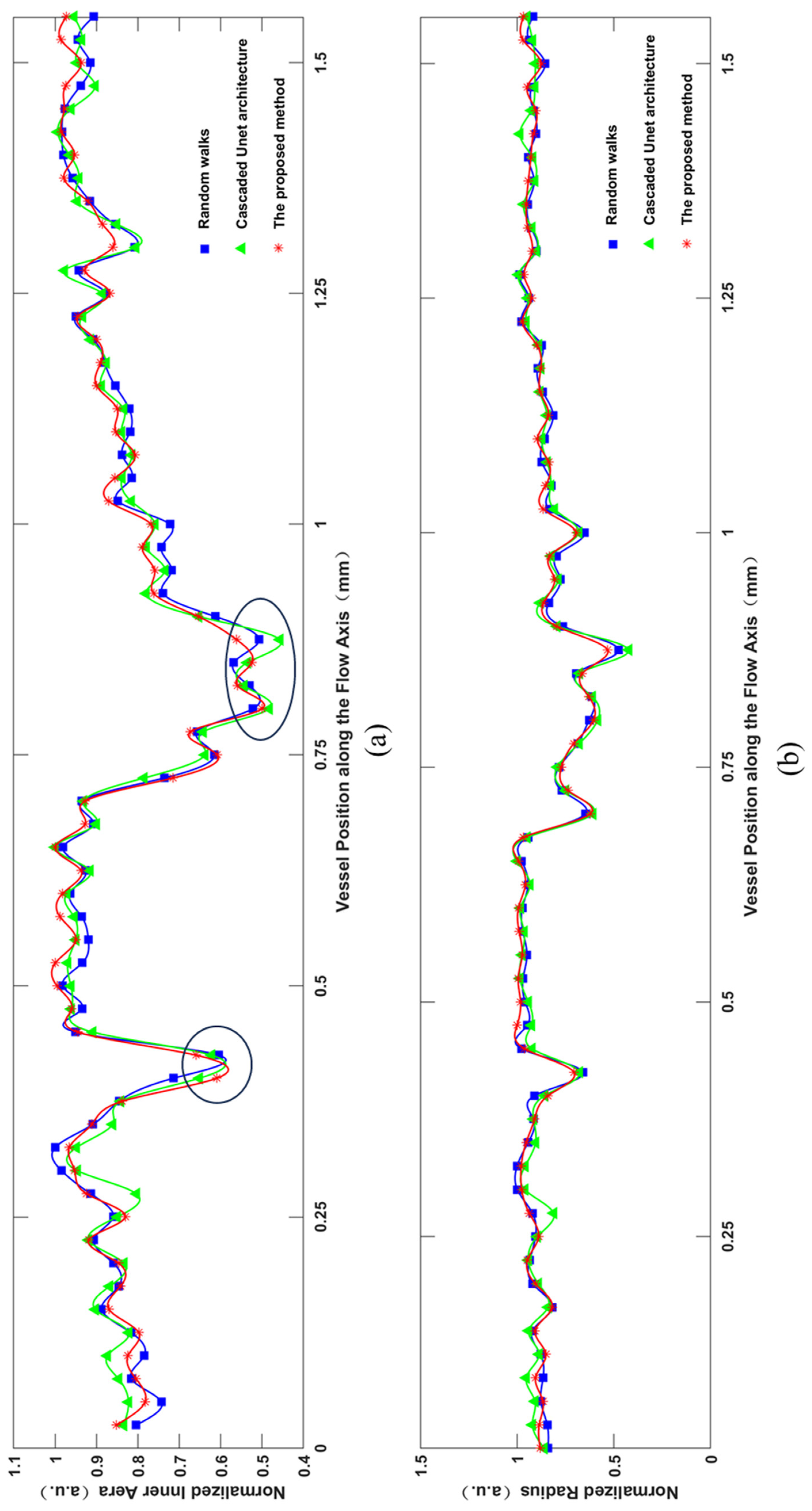

3.3. Segmentation Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Frame from Test Dataset | (a-1) | (b-1) | (c-1) | (d-1) |
|---|---|---|---|---|
| Precision | 0.977 | 0.964 | 0.971 | 0.947 |
| Recall | 0.985 | 0.979 | 0.982 | 0.962 |
| F1 | 0.963 | 0.958 | 0.965 | 0.953 |
| Dice | 0.968 | 0.965 | 0.976 | 0.954 |

| Frame from Test Dataset | (e-1) | (f-1) | (g-1) | (h-1) |
|---|---|---|---|---|
| Precision | 0.967 | 0.954 | 0.963 | 0.977 |
| Recall | 0.975 | 0.969 | 0.983 | 0.982 |
| F1 | 0.968 | 0.945 | 0.965 | 0.978 |
| Dice | 0.964 | 0.960 | 0.966 | 0.974 |
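The tables above report Precision, Recall, F1, and Dice per test frame, but this excerpt does not state how the authors computed them. As a minimal sketch only, assuming the usual pixel-wise definitions on binary masks, the quantities could be obtained as follows. The function name `segmentation_metrics` and the toy masks are hypothetical and are not taken from the paper.

```python
def segmentation_metrics(pred, truth):
    """Pixel-wise metrics for two binary masks.

    Both masks are equally sized nested lists of 0/1 values.
    These are the textbook definitions, assumed here for illustration;
    the paper's own evaluation code is not available in this excerpt.
    """
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1          # foreground in both masks
            elif p and not t:
                fp += 1          # predicted foreground, actually background
            elif t and not p:
                fn += 1          # missed foreground pixel

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)

    # Dice written as region overlap: 2|A ∩ B| / (|A| + |B|)
    pred_area = tp + fp
    truth_area = tp + fn
    dice = (2 * tp / (pred_area + truth_area)
            if (pred_area + truth_area) else 0.0)

    return {"precision": precision, "recall": recall, "f1": f1, "dice": dice}


# Toy 3x4 masks (hypothetical data): the prediction misses one foreground pixel.
pred = [[0, 1, 1, 0],
        [0, 1, 1, 0],
        [0, 1, 0, 0]]
truth = [[0, 1, 1, 0],
         [0, 1, 1, 0],
         [0, 1, 1, 0]]

metrics = segmentation_metrics(pred, truth)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Under these assumed definitions, F1 and Dice reduce to the same expression for a single binary mask pair, so a pipeline that reports different values for the two presumably uses a different averaging or definition for one of them.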

| Metric | Random Walks (Intensity) | Random Walks (Phase) | CU-Net (Intensity) | CU-Net (Phase) | The proposed method |
|---|---|---|---|---|---|
| Dice | 0.966 | 0.945 | 0.954 | 0.948 | 0.967 |
| Time (s) | 19.08 | 19.03 | 0.31 | 0.37 | 0.63 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).