1. Introduction

Brain-computer interfaces (BCIs) measure brain signals and translate them into commands for output devices that carry out specific tasks, without relying on neuromuscular output pathways [1].

The primary objective of BCI is to replace or restore function for people affected by neuromuscular conditions such as ALS and cerebral palsy. Since the first demonstrations of BCI-based spelling and single-neuron control, scientists have used electroencephalographic (EEG), intracortical, electrocorticographic, and other brain signals to control cursors, robotic legs, robotic arms, prosthetics, wheelchairs, TV remote controls, and several other devices. BCIs have the potential to assist in the rehabilitation of individuals affected by stroke and other diseases, and to enhance the performance of surgeons and other medical professionals [4]. According to the World Health Organization (WHO), more than one billion people (about 15% of the global population) live with a disability, and half of them lack the financial means to get adequate medical treatment [5].

The rapid growth of research and development in BCI technology generates enthusiasm among scientists, engineers, clinicians, and the public. BCIs need signal-acquisition technology that is portable, safe, and reliable in any situation, and practical approaches are required for the widespread deployment of these technologies. To match the normal functioning of muscles, BCI performance must be consistently reliable from day to day and from moment to moment.

The concept of incorporating sensors and intelligence into physical objects was first introduced in the 1980s by students from Carnegie Mellon University, who modified a juice vending machine so that they could remotely monitor its contents [6].

In the last decade, EEG-based BCI has been successfully combined with convolutional neural networks (CNNs) to detect diseases such as epilepsy [7]. EEG-based BCI technology has also been used to control a prosthetic lower limb [8], [9] or a prosthetic upper limb [10]. In the future, BCI is expected to become widespread in daily life, improving quality of life, especially for people with disabilities, who may be able to perform various activities using speech imagery alone [11].

The Internet of Things (IoT) refers to a network of physical objects, vehicles, appliances, and other things that are fitted with sensors, software, and network connections [12], which allow them to collect and share information. These devices, sometimes referred to as "smart objects", span a wide range of technologies: simple smart home devices such as smart thermostats, wearable devices such as smartwatches and apparel with Radio Frequency Identification (RFID) technology, and complex industrial machinery and transportation systems.

IoT technology enables communication among internet-connected devices, as well as with other devices such as smartphones and gateways. The result is a vast interconnected system of devices that can autonomously exchange data and perform a diverse array of tasks, such as monitoring environmental conditions, improving traffic flow through intelligent cars and other sophisticated automotive equipment, and tracking inventory and shipments in storage facilities. For people with severe motor disabilities, a smart home is now a necessity: it allows them to manage not only everyday household devices but also the security of the home [15].

In recent years, many approaches have been proposed for controlling a smart object or a software application using EEG-based BCI signals. The following paragraphs present several related works on BCI-based home automation and security.

In 2018, Qiang Gao et al. [16] proposed a safe and cost-effective online smart home system based on BCI to give elderly and paralyzed people a new, supportive way to control home appliances. They used the Emotiv EPOC EEG headset to acquire EEG signals, which were denoised, processed, and converted into commands. The system can identify several instructions for controlling four smart devices: a web camera, a lamp, intelligent blinds, and a guardianship telephone. Power over Ethernet (PoE) technology provides both power and connectivity to these devices. The experimental results showed that the proposed system achieved an average classification accuracy of 86.88 ± 5.30%.

In 2020, K. Babu and P. Vardhini [17] implemented a system for controlling a software application that can be further used in home automation. They used a NeuroSky headset, an Arduino, an ATMEGA328P, and a laptop. A Neuro software application was used to create three virtual objects, represented by three icons, which the headset user controls by blinking. Three Arduino ports were dedicated to these three objects to simulate controlling a fan and a motor and switching between the two. The status of the home appliances is changed by running MATLAB code.

Other work has produced prototypes for controlling home appliances such as an LED and a fan, for example the system presented by Lanka et al. [18]. They used a dedicated neural headset, a laptop, an ESP32 microcontroller, an LED, and a fan. The headset was connected to the laptop via Bluetooth, and the laptop was in turn connected to the microcontroller, to which the fan and LED were wired. In this way, they developed a system that lets a healthy, disabled, or paralysed user control a fan and an LED with their brain waves.

In 2022, Eyhab Al-Masri et al. [19] described the development of a BCI framework that allows people with motor disabilities to control Philips Hue smart lights and a Kasa Smart Plug using a dedicated neural headset. The hardware consisted of an EMOTIV EEG headset, a Raspberry Pi, a Kasa Smart Plug, and Philips Hue smart lights. The headset is connected to the Raspberry Pi via Bluetooth, and the commands are configured and transmitted from the Raspberry Pi to the Kasa Smart Plug and the Philips Hue smart lights using Node-RED. The experimental results showed the efficacy and practicability of using EEG signals to operate IoT devices, with a reported precision of 95%.

In 2023, Danish Ahmed et al. [20] used BCI technology to control a light and a fan via a dedicated neural headset. The implemented system consists of an EMOTIV EPOC headset, a laptop, an Arduino platform, and a box that contains a light and a fan. The headset is connected to the laptop via Bluetooth, the laptop uses a WebSocket server and the JSON-RPC protocol to communicate with the Arduino, and the Arduino is wired to the light and fan. The user trains the headset and then controls the prototype with his or her thoughts.

A more recent challenge addressed in home automation is controlling a TV using brainwaves, and several papers have discussed this issue. One such system was presented in 2023 by Haider Abdullah et al. [21], who implemented it and tested it on 20 participants. The proposed system includes an EMOTIV Insight headset, a laptop (connected to the headset via Bluetooth), a Raspberry Pi 4 (connected to the laptop through SSH), and a TV remote control circuit (wired to the Raspberry Pi). Three different brands of TVs were used in the system testing: SONY®, SHOWINC® and SAMIX®. This EEG-based TV remote control supported four commands: turning the TV on and off, changing the volume, and changing the channel. The tests showed promising results, with a system accuracy of approximately 74.9%.

The use of BCI technology for controlling different devices represents a new direction in both hardware and software development. In this context, this paper presents the design and implementation of a real-time BCI-IoT system for home security, in which a dedicated neural headset is used to control a door lock and a light through speech imagery. The proposed system enables disabled and paralysed people to lock or unlock a door and to turn an LED ON/OFF, and to receive status notifications. The proposed system has been tested on 20 participants. It has been simulated using the Unity engine and implemented in hardware using a Raspberry Pi and other components, as discussed in the following sections.

3. Results and Discussion

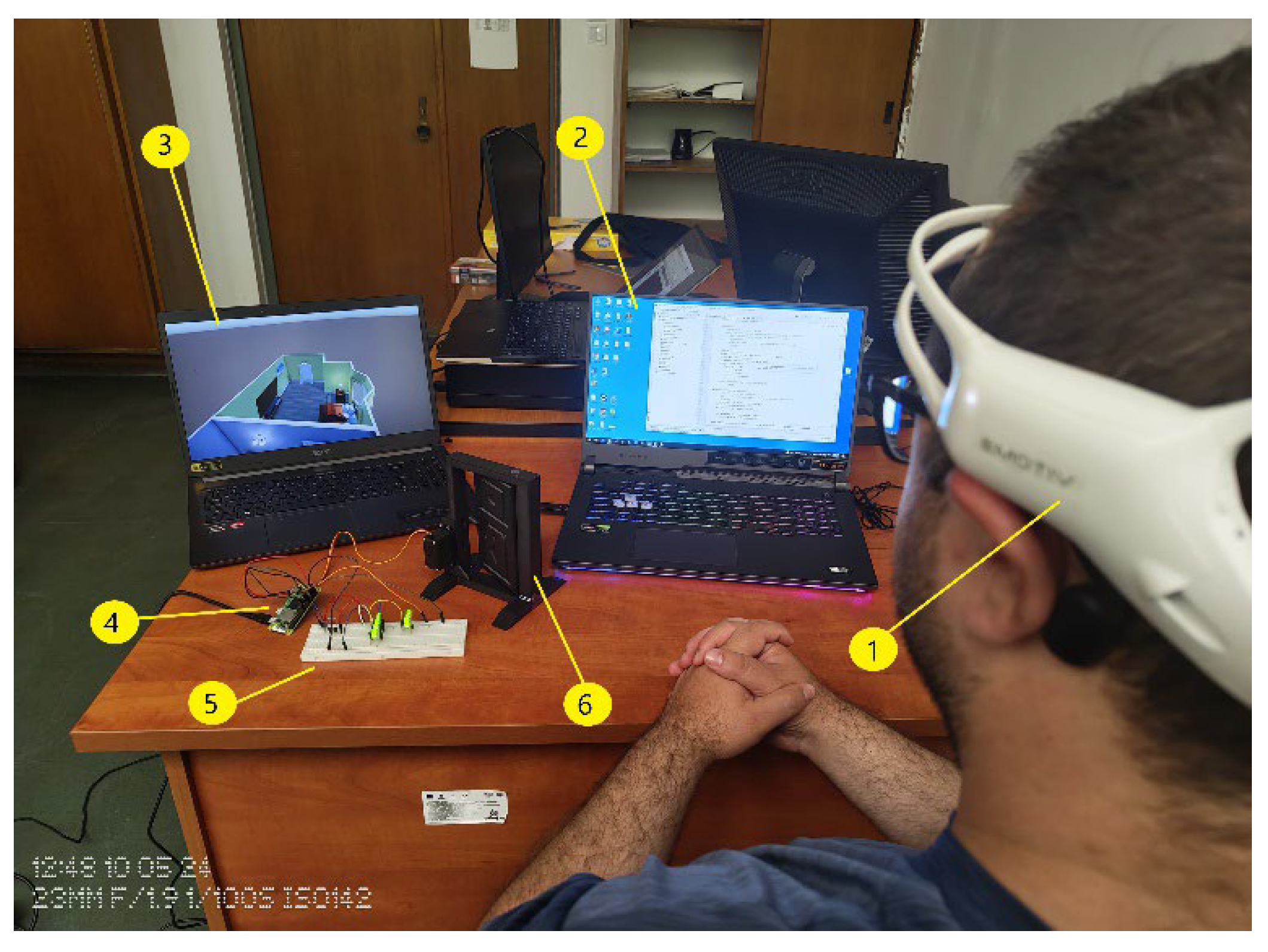

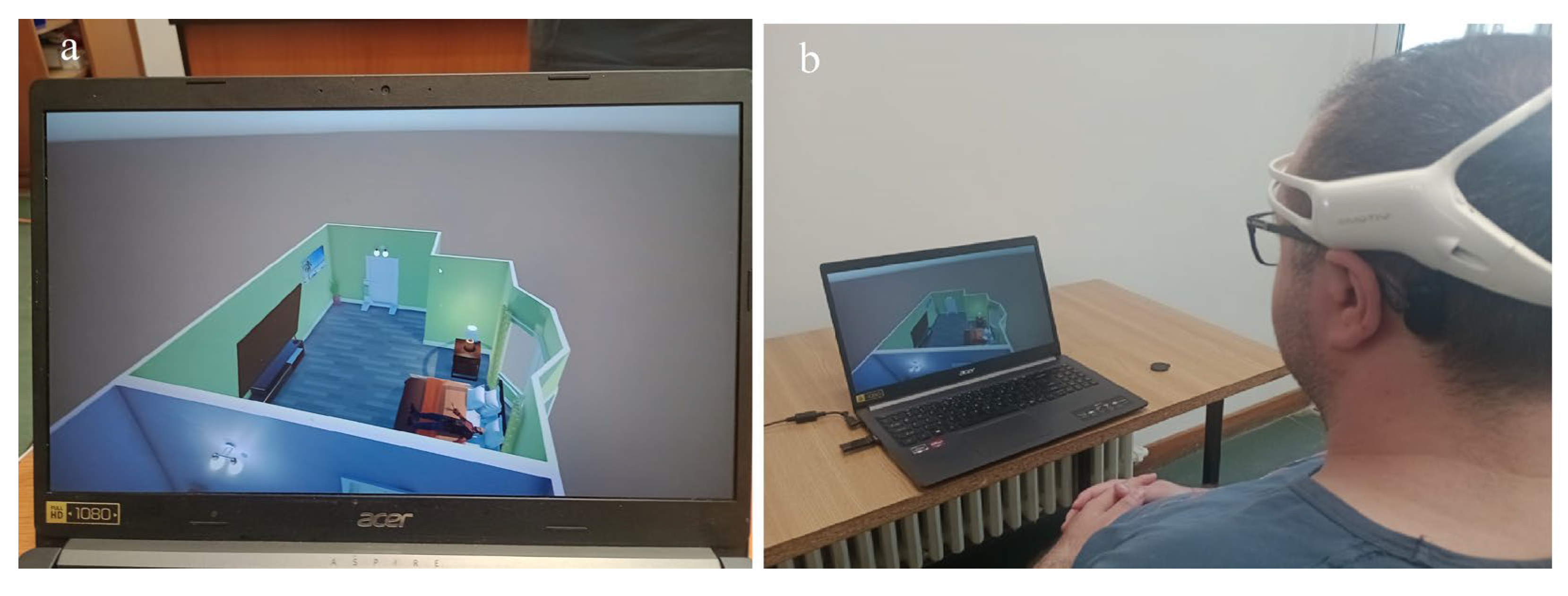

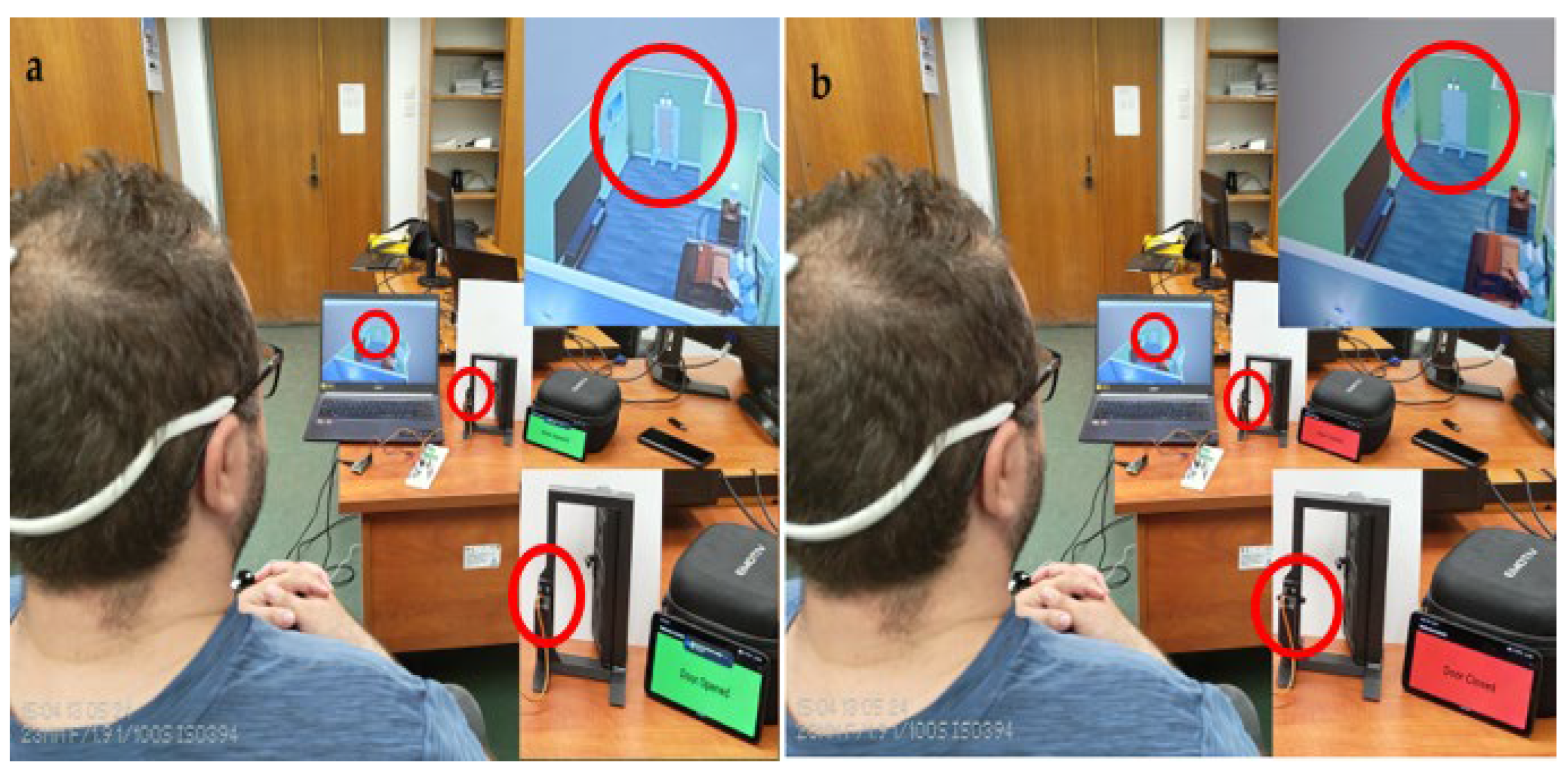

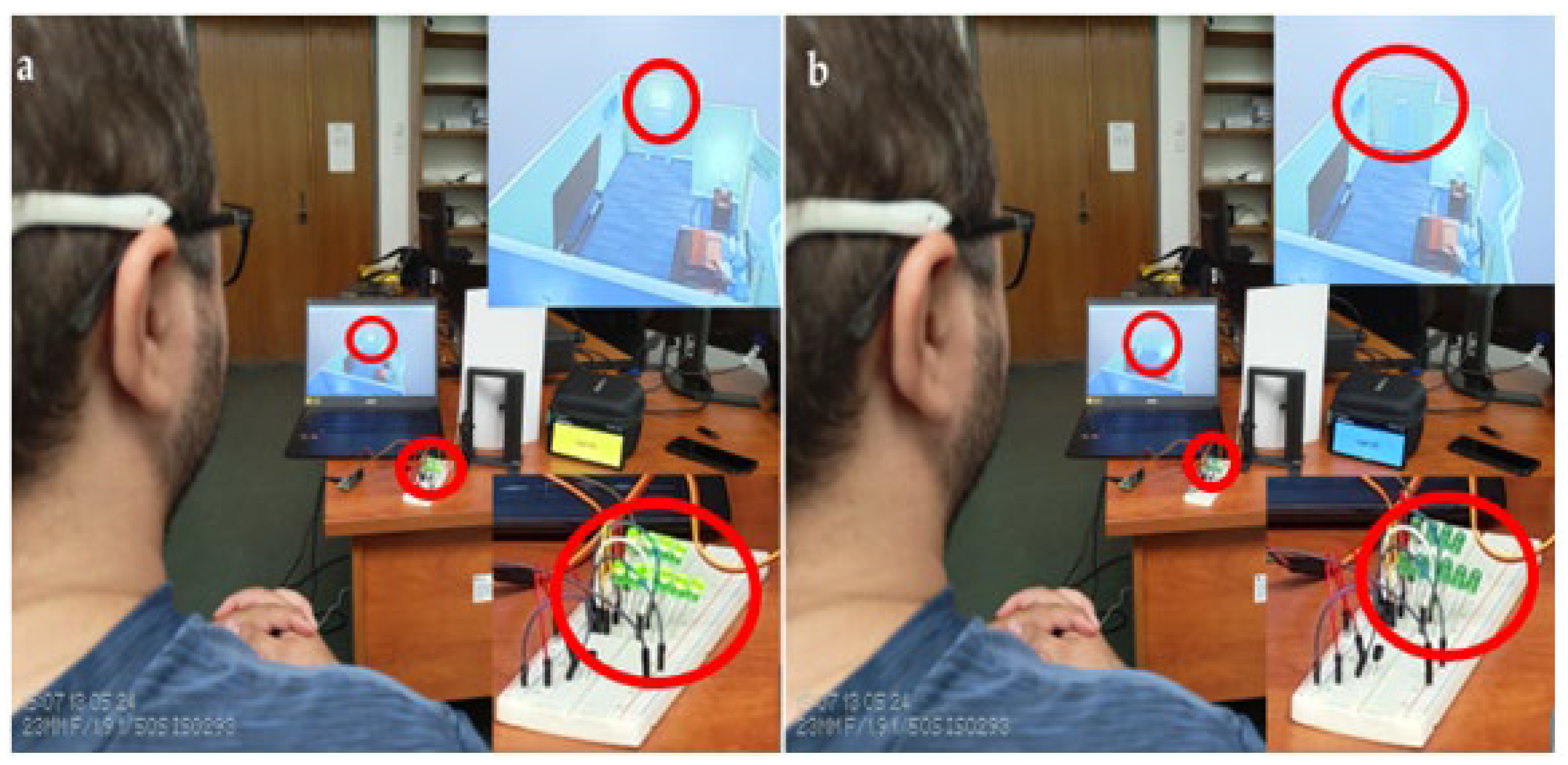

Twenty people participated in the study by having their EEG signals recorded. To control the video simulation and the implemented system, two commands were trained on the EMOTIV Insight neural headset for each participant. Every participant was required to put on the dedicated neural headset and sit in front of the computer (for the simulation) and in front of the implemented hardware system (see Figure 18 and Figure 19).

Initially, each participant was instructed to turn on the light by mentally focusing on the specific image or phrase that they had been trained to associate with it. Once the light was on, the participant was instructed to turn it off. Similarly, the participant was asked to open and close the door. Each participant was instructed to attempt to control the light and the door fifteen times for each command, and the number of successfully executed commands (the attempts that resulted in successful control of the light or door) was recorded. Whenever the user thinks about one of these commands, the user's PC sends a command through Bluetooth to the Raspberry Pi to execute the required Python file. This script contains the instructions for transmitting the appropriate signal to the light and the door. All these changes are reported in real time on a localhost server, which sends the notification to a Flutter application developed for use on the phone.
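The command flow described above can be illustrated with a minimal, hardware-free sketch. It is not the authors' script: it assumes that each of the two trained commands acts as a toggle on its device, and the command labels (`"light"`, `"door"`), the `HomeState` class, and the `notify` callback are hypothetical names introduced for illustration. On the real system, the handlers would drive the GPIO pins and the servo, and `notify` would push the message to the notification server.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class HomeState:
    """In-memory stand-in for the LED and the servo-driven door lock."""
    light_on: bool = False
    door_locked: bool = True  # assumed initial state
    log: List[str] = field(default_factory=list)


def toggle_light(state: HomeState) -> str:
    """Flip the LED state and return the status message to notify."""
    state.light_on = not state.light_on
    return "LED ON" if state.light_on else "LED OFF"


def toggle_door(state: HomeState) -> str:
    """Flip the lock state and return the status message to notify."""
    state.door_locked = not state.door_locked
    return "Door locked" if state.door_locked else "Door unlocked"


# Hypothetical labels; the real ones depend on the headset training profile.
HANDLERS: Dict[str, Callable[[HomeState], str]] = {
    "light": toggle_light,
    "door": toggle_door,
}


def handle_command(state: HomeState, command: str,
                   notify: Callable[[str], None]) -> bool:
    """Run the handler for a detected command; ignore unknown labels."""
    handler = HANDLERS.get(command)
    if handler is None:
        return False
    notify(handler(state))
    return True


state = HomeState()
for command in ["light", "door", "neutral", "light"]:
    handle_command(state, command, state.log.append)
print(state.log)
```

Keeping the dispatch table separate from the actuator code means the same command stream could, in principle, drive both a simulation and the physical hardware by swapping the handlers.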

Table 6 displays the number of successfully executed commands for each participant during the trial. Examination of the results suggests that lapses in concentration during the experiment may have contributed to some of the failed attempts. The somewhat better success rate of male participants compared with female participants may be explained by marginally worse EEG signal quality in some female participants owing to their longer hair.

The overall average is 10.52 successful attempts out of 15, which corresponds to an overall system accuracy of 70.16%. The dispersion was determined by calculating, separately for each participant, the standard deviation of the two results (light and door). The highest and lowest values obtained were 1.41 and 0, respectively. The standard deviation is generally low, with an average of 0.8131 over all participants. The largest dispersion is seen for participants 4, 6, 13 and 14, whose numbers of successful attempts differed by two between the two trained commands.
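The statistics above can be reproduced from the per-participant counts in Table 6. The sketch below is one way to do so, assuming the reported standard deviation is the sample standard deviation of each participant's two results (which matches the tabulated values of 0, 0.7071 and 1.4142); the variable names are illustrative.

```python
from statistics import mean, stdev

ATTEMPTS_PER_COMMAND = 15

# (light, door) successful attempts per participant, copied from Table 6.
RESULTS = [
    (11, 12), (9, 10), (8, 7), (12, 10), (9, 10),
    (11, 9), (10, 11), (12, 13), (13, 14), (11, 10),
    (12, 12), (7, 8), (9, 7), (11, 9), (9, 10),
    (13, 12), (11, 10), (10, 11), (12, 11), (12, 13),
]

# Per-participant mean and sample standard deviation of the two commands.
per_participant_avg = [mean(pair) for pair in RESULTS]
per_participant_sd = [stdev(pair) for pair in RESULTS]

overall_avg = mean(per_participant_avg)
accuracy_pct = overall_avg / ATTEMPTS_PER_COMMAND * 100
avg_sd = mean(per_participant_sd)

print(f"overall average: {overall_avg:.3f} of {ATTEMPTS_PER_COMMAND}")
print(f"accuracy: {accuracy_pct:.2f}%")
print(f"average standard deviation: {avg_sd:.4f}")
```

This gives an overall average of 10.525, an accuracy of about 70.17%, and an average standard deviation of about 0.8132, in agreement with the reported 70.16% and 0.8131 up to rounding of the last digit.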

Mental commands sent from the neural headset successfully controlled the video simulation and the physical system in parallel, with both versions synchronised to respond at nearly the same time, as can be seen in Figure 18 and Figure 19.

This procedure was applied to the implemented hardware system.

Author Contributions

Conceptualization, M.-V.D.; methodology, I.N., A.F. and A.-M.T.; software, I.N., A.F. and A.-M.T.; validation, M.-V.D., I.N., A.F. and A.-M.T.; formal analysis, M.-V.D. and A.H.; investigation, M.-V.D., I.N. and A.F.; resources, M.-V.D., I.N. and A.F.; data curation, M.-V.D., I.N. and A.F.; writing – original draft preparation, M.-V.D.; writing – review and editing, M.-V.D., A.H. and C.-P.S.; visualization, M.-V.D., A.H., T.-G.D., C.-P.S. and A.-R.M.; supervision, M.-V.D., A.H., T.-G.D. and A.-R.M.; project administration, M.-V.D. All authors have read and agreed to the published version of the manuscript.

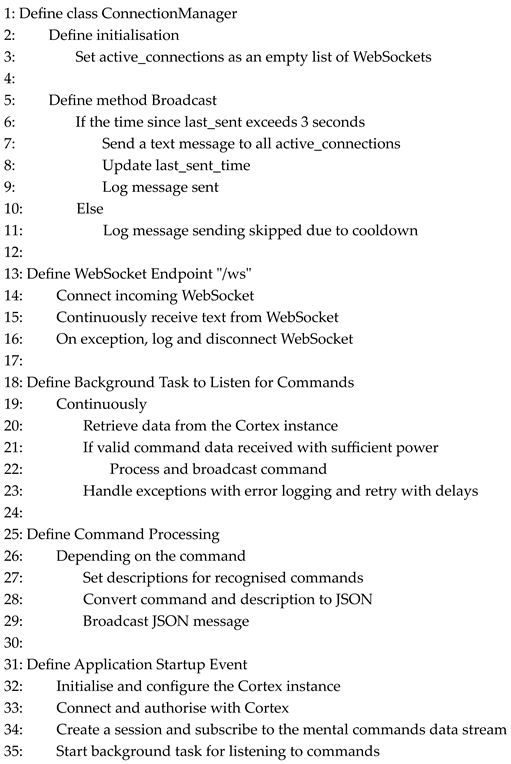

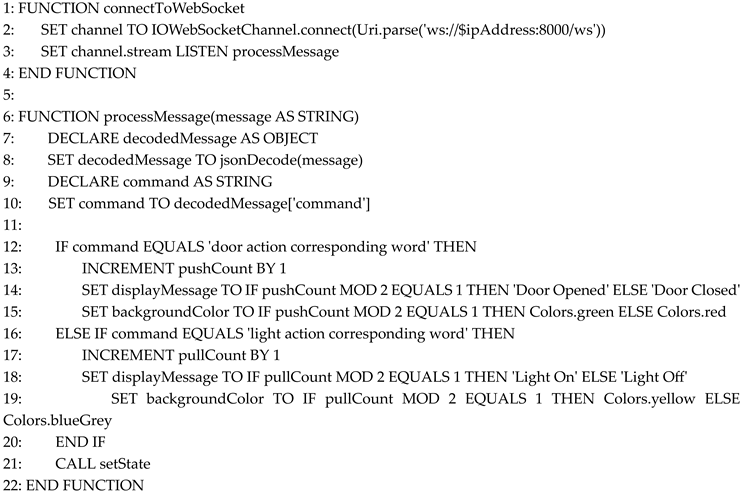

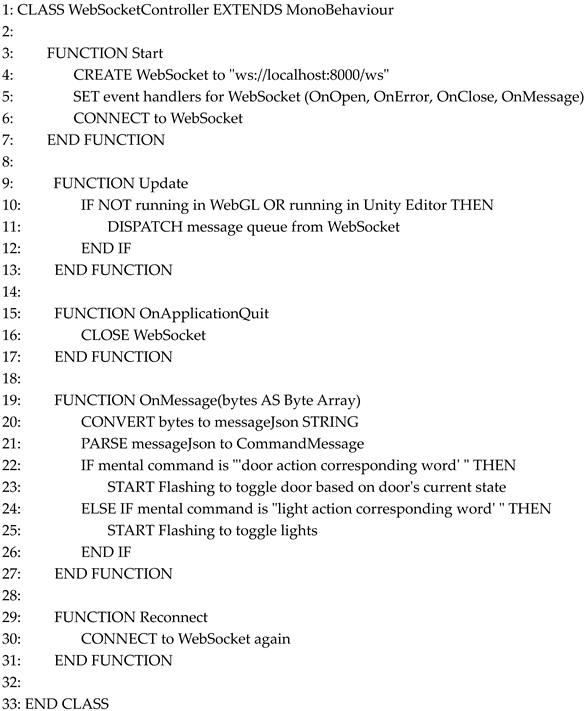

Figure 1. Real-time home automation system and video simulation: (1) EMOTIV Insight neural headset; (2) Cortex API and FastAPI server running; (3) the simulated system (Unity Engine); (4) Raspberry Pi Zero 2 W; (5) breadboard with 5 V LEDs; (6) PLA door and frame, equipped with a digital MG996 servo motor.

Figure 2. Creality Ender 3 S1 PRO 3D printer [24].

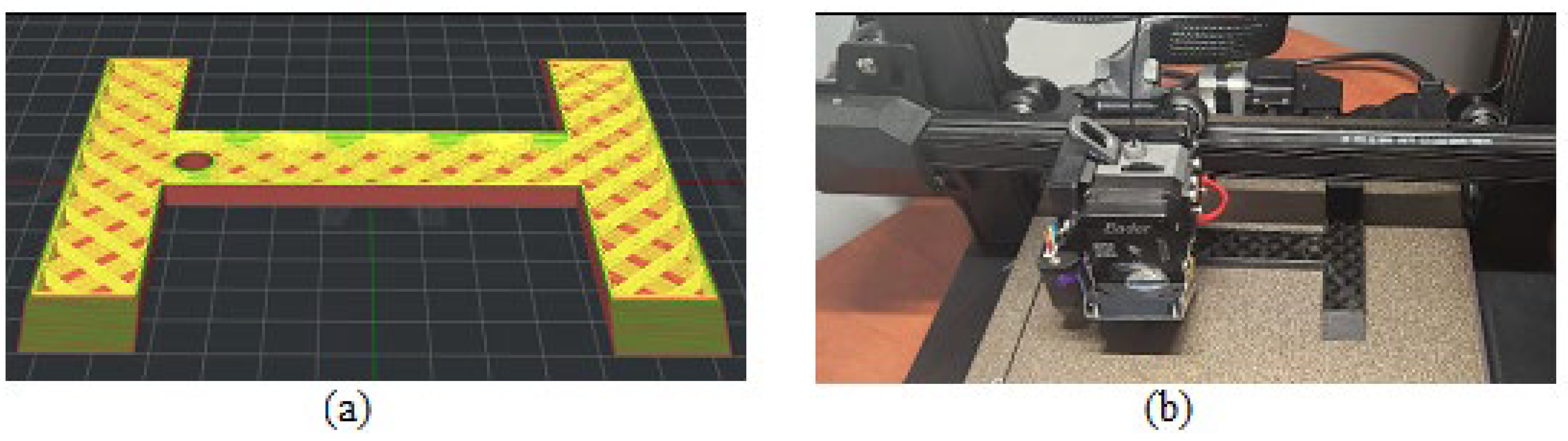

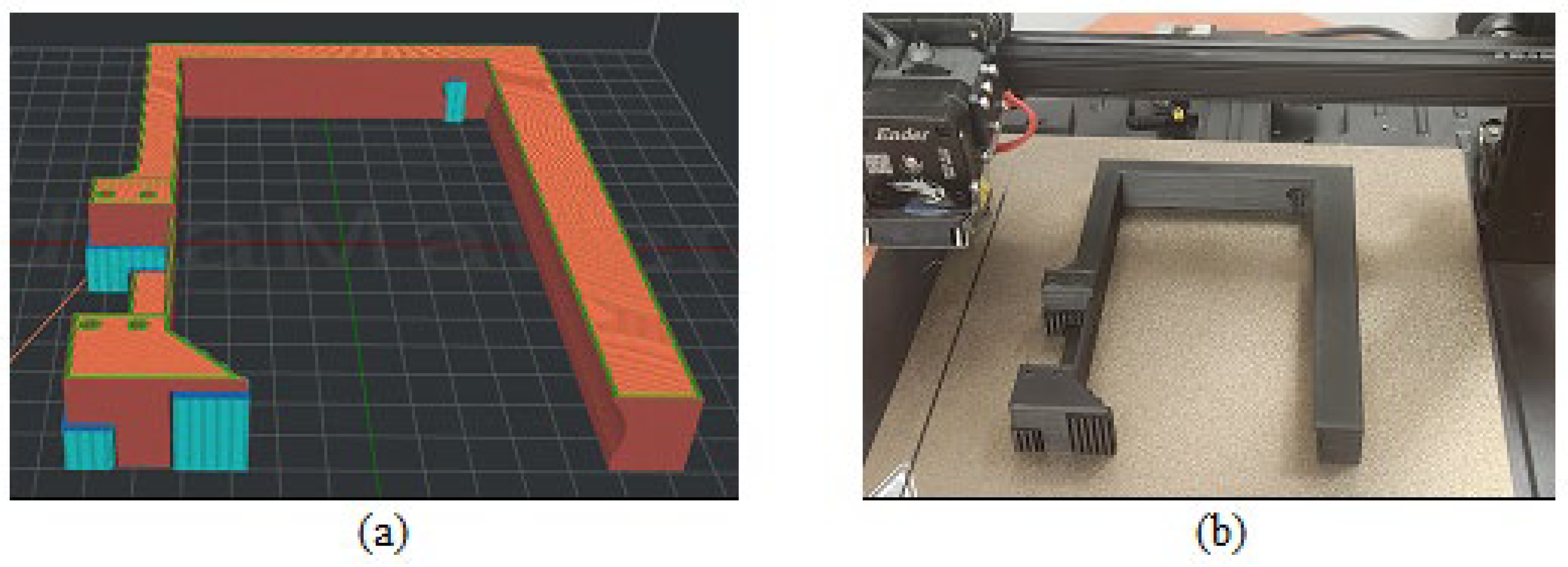

Figure 3. Door model at 10% (layer 42): (a) software; (b) 3D printer.

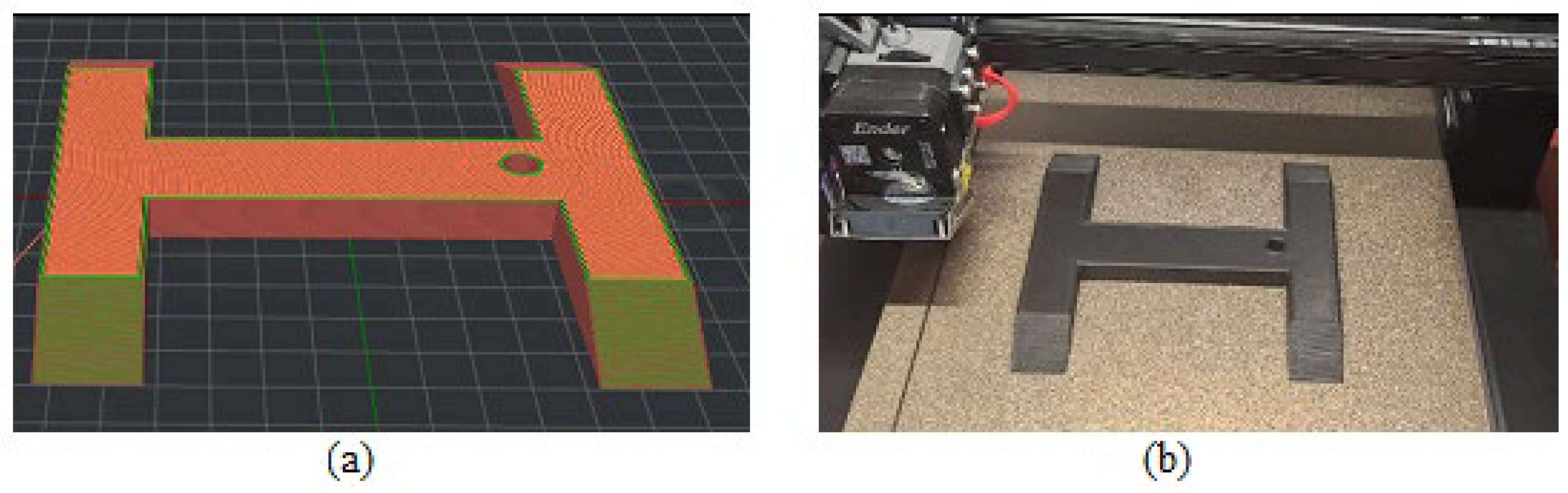

Figure 4. Door model at 60% (layer 225): (a) software; (b) 3D printer.

Figure 5. Door model at 100% (layer 425): (a) software; (b) 3D printer.

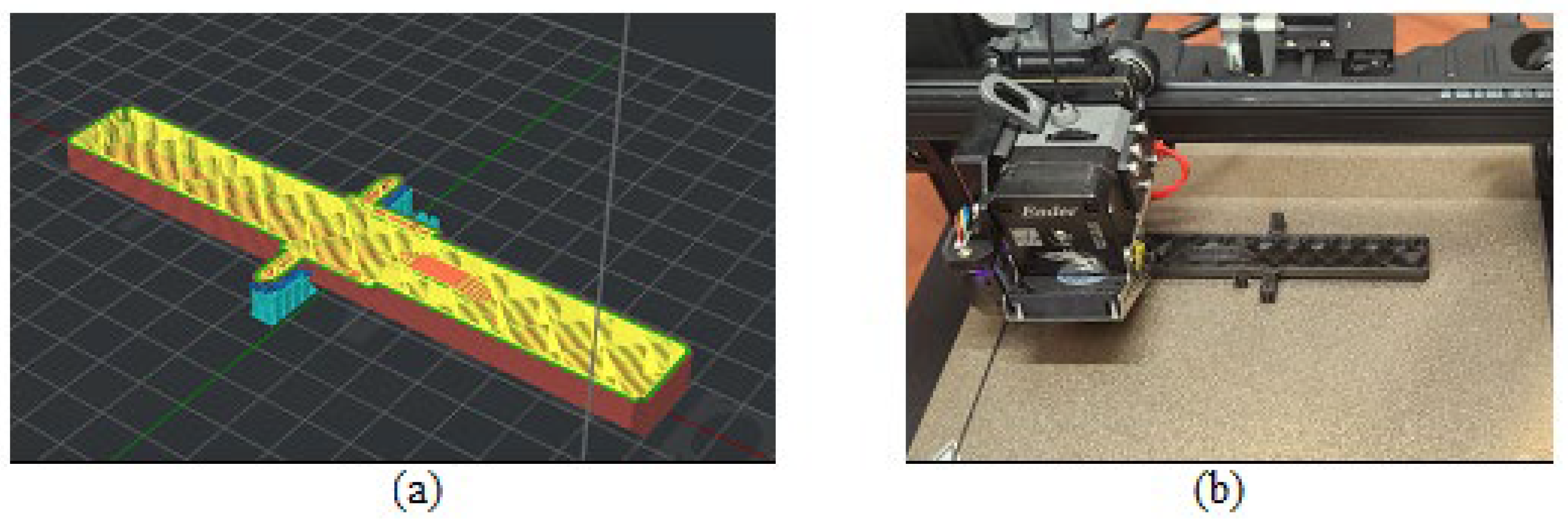

Figure 6. Frame model, first component at 10% (layer 5): (a) software; (b) 3D printer.

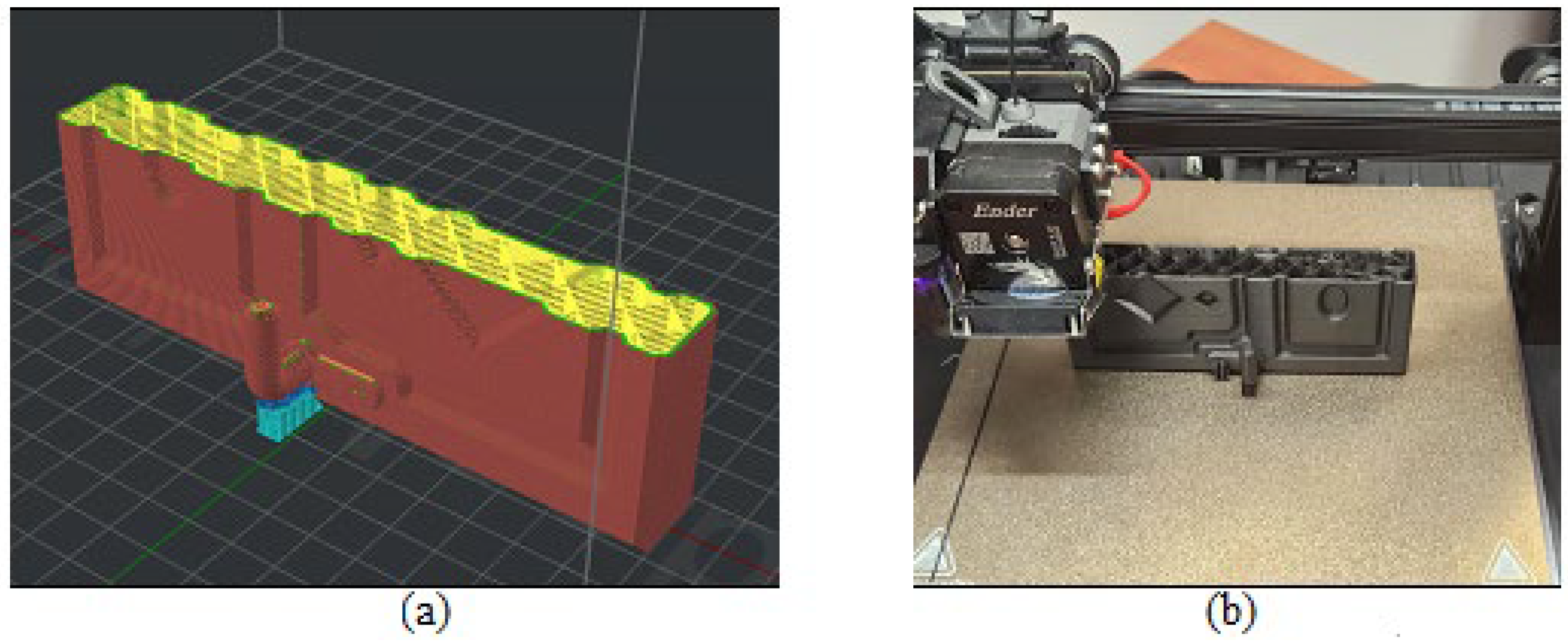

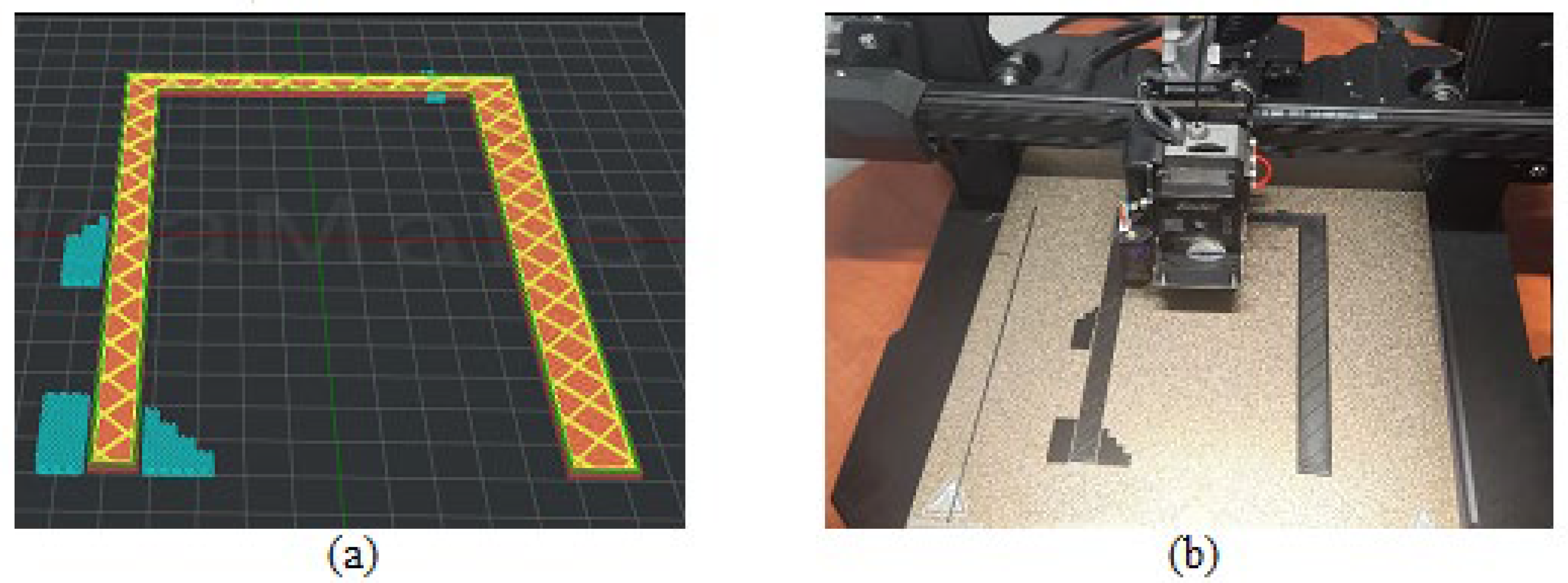

Figure 7. Frame model, first component at 60% (layer 30): (a) software; (b) 3D printer.

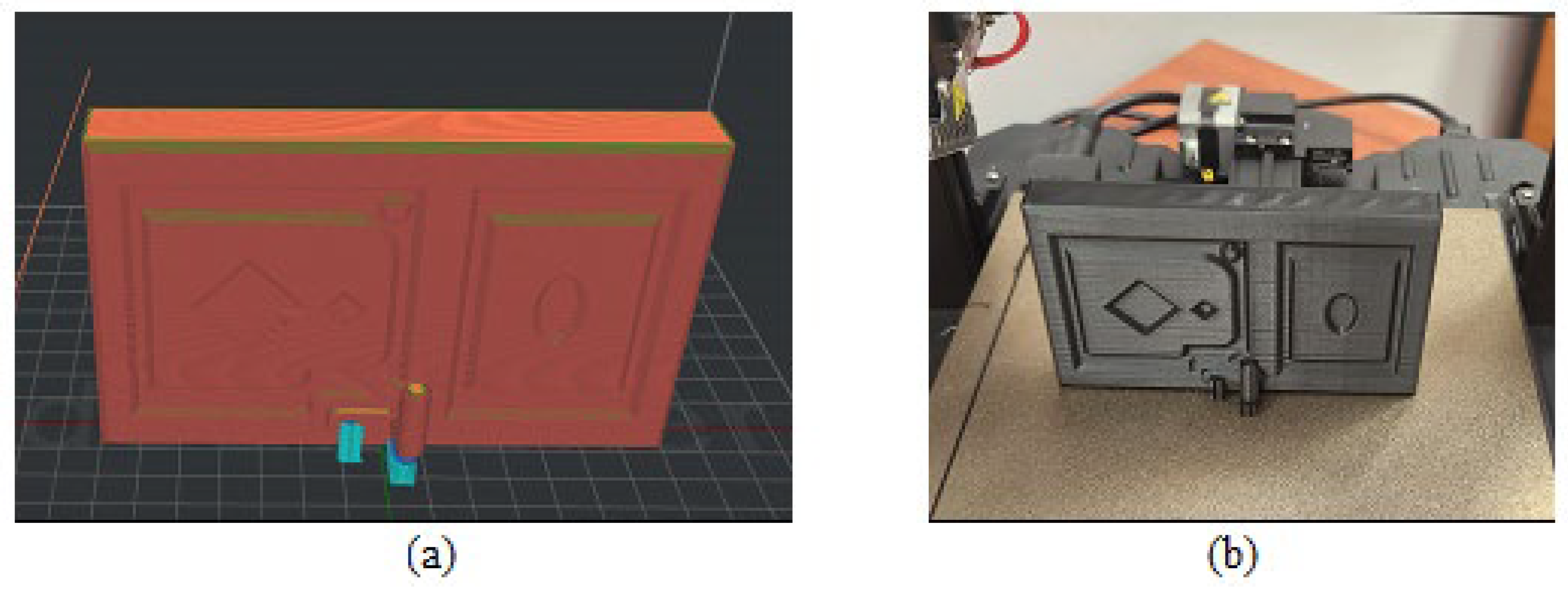

Figure 8. Frame model, first component at 100% (layer 50): (a) software; (b) 3D printer.

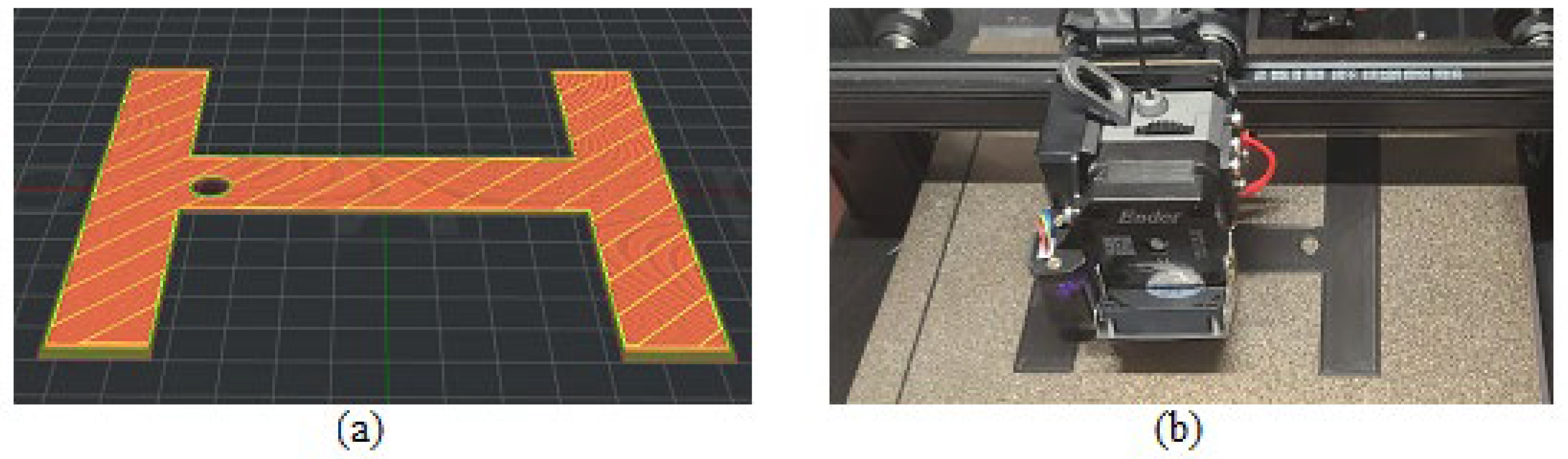

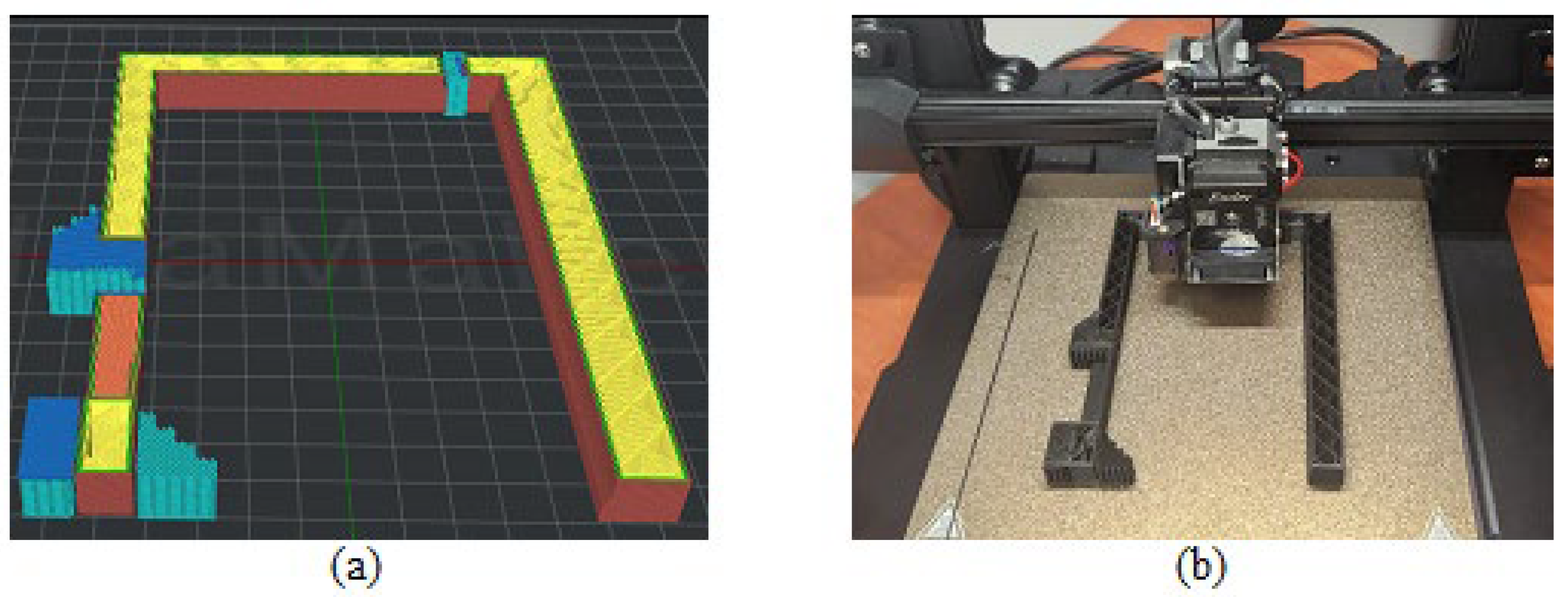

Figure 9. Frame model, second component at 10% (layer 12): (a) software; (b) 3D printer.

Figure 10. Frame model, second component at 60% (layer 72): (a) software; (b) 3D printer.

Figure 11. Frame model, second component at 100% (layer 120): (a) software; (b) 3D printer.

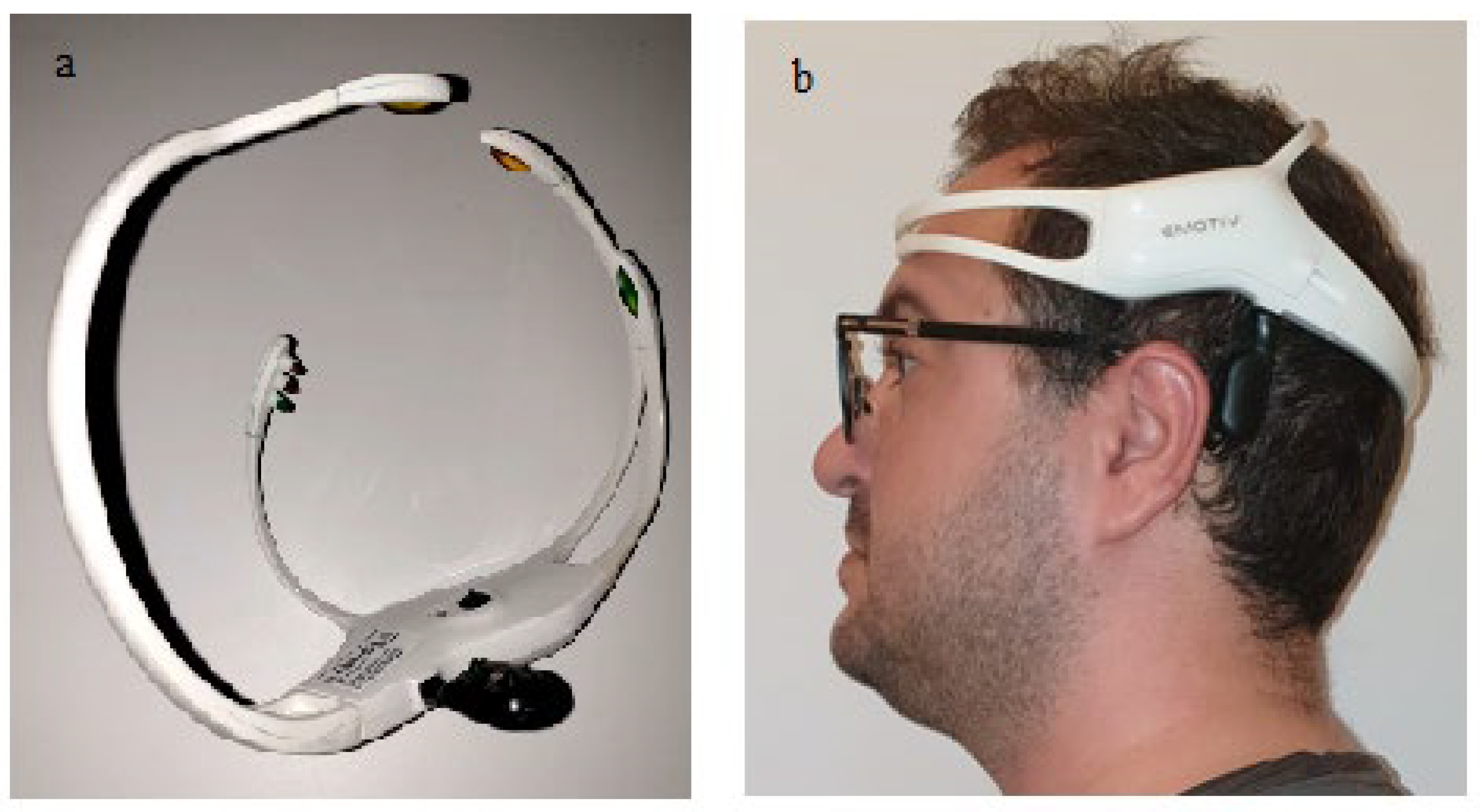

Figure 12. Emotiv™ Insight neuro-headset: (a) semi-dry polymer sensors of the headset; (b) the headset on a user's head.

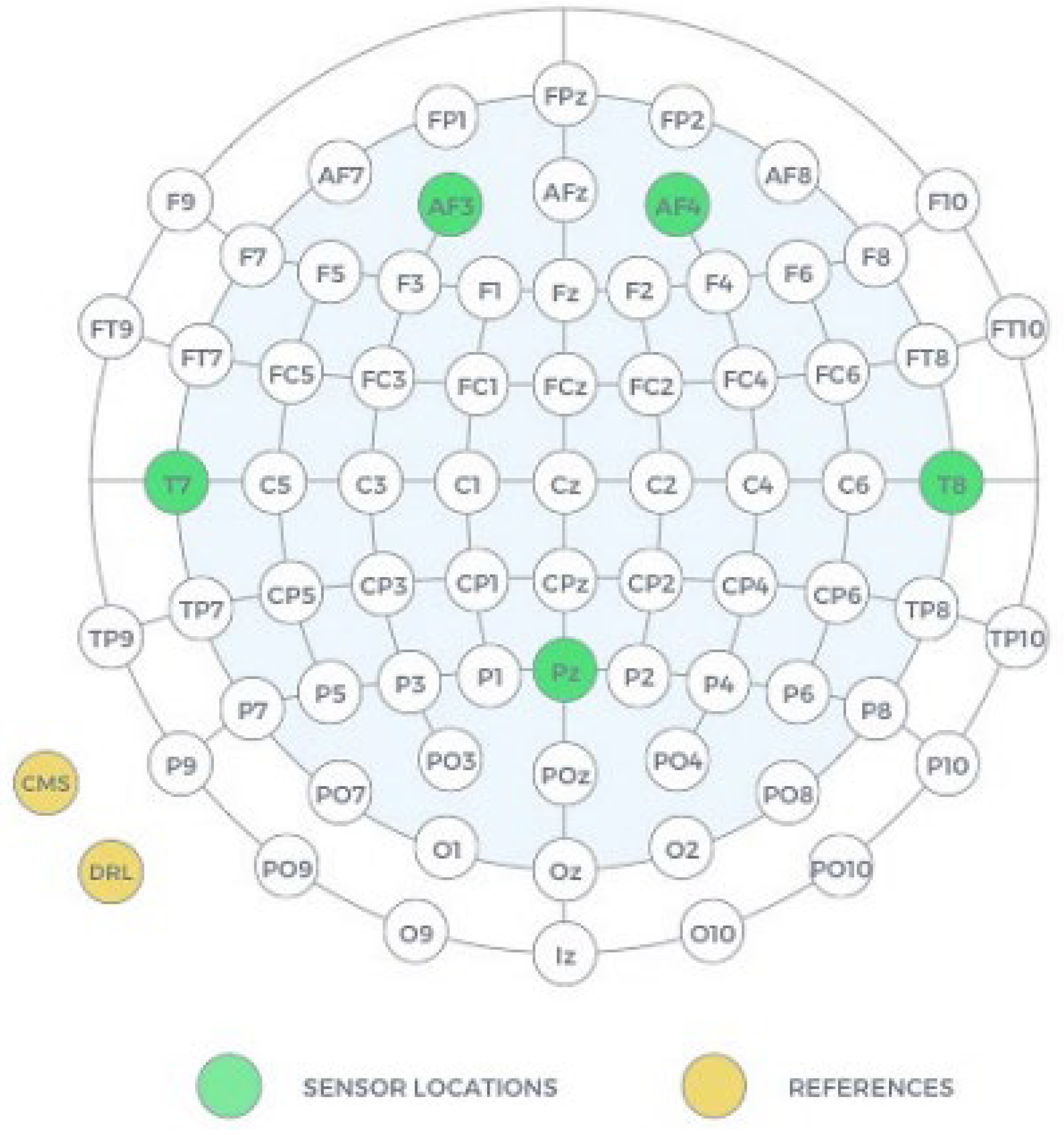

Figure 13. Sensor locations in the Emotiv Insight headset [25].

Figure 14. Real-time simulation in Unity using Emotiv Insight: (a) simulation of the home view; (b) use of the neuro-headset to control the video simulation.

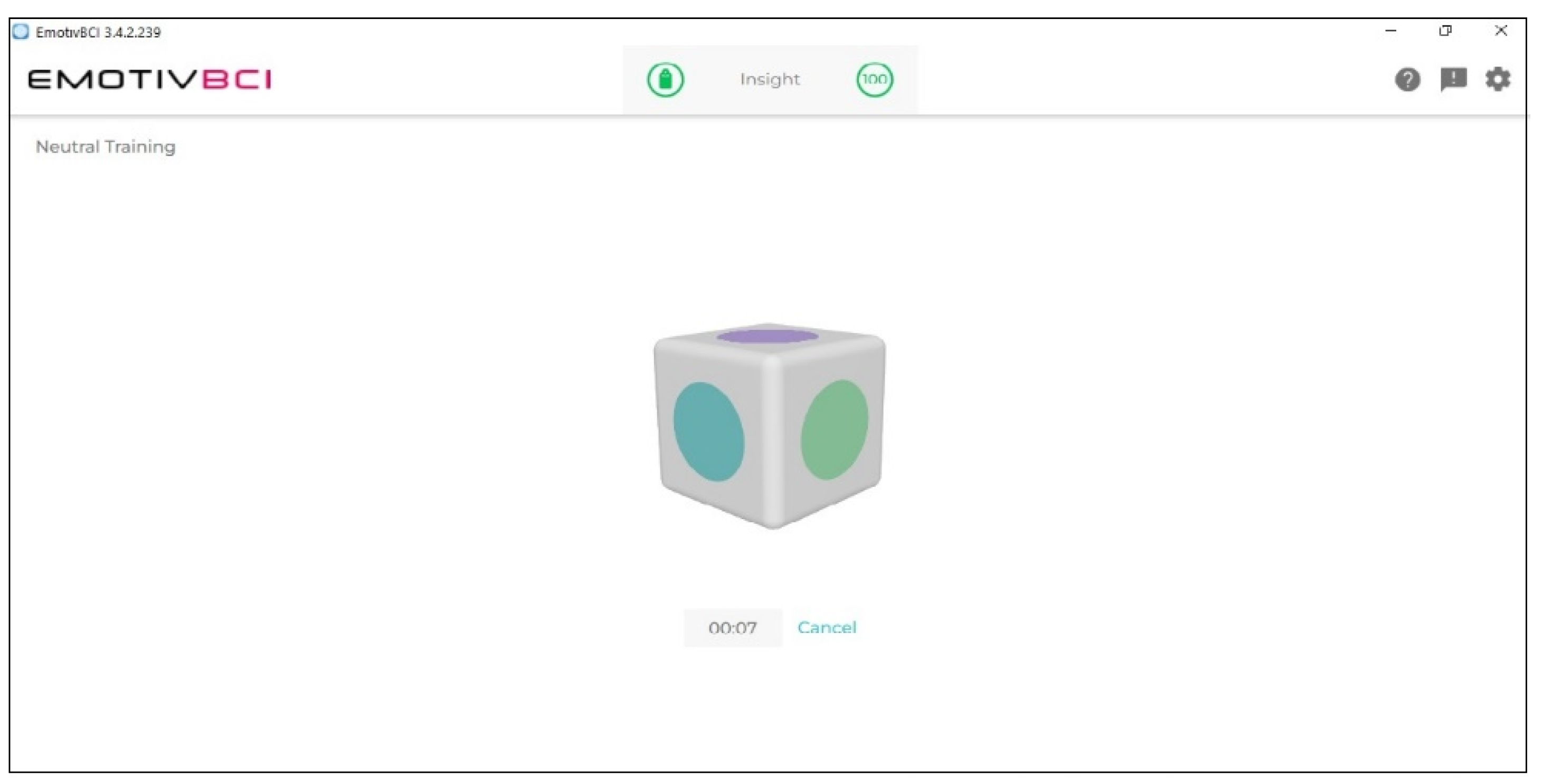

Figure 15. Training session.

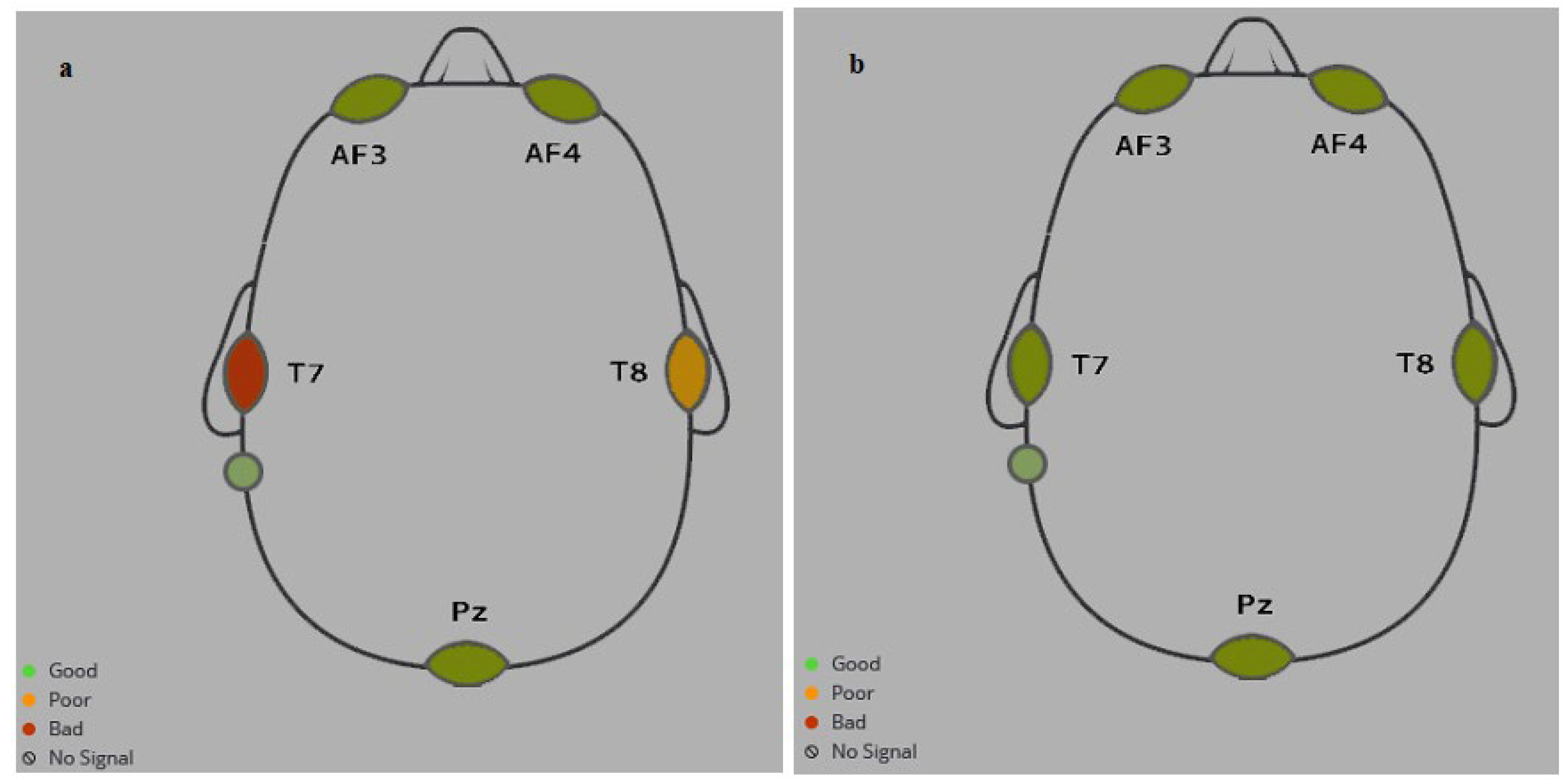

Figure 16. EMOTIV Insight sensor quality: (a) bad quality; (b) good quality.

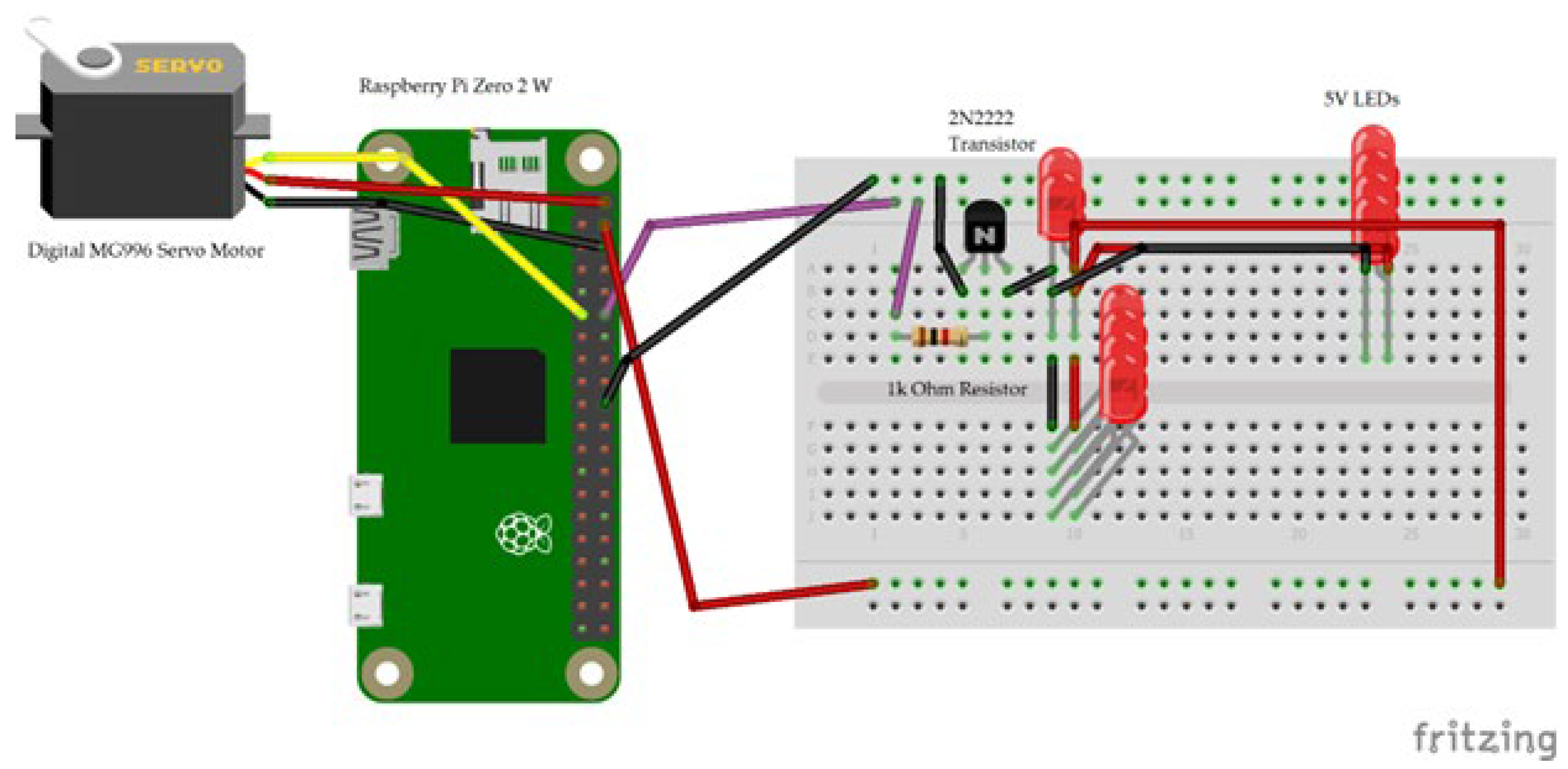

Figure 17. Circuit diagram made with Fritzing [35].

Figure 18. Locking and unlocking the door using brain signals, with real-time notifications on the video simulation and the phone: (a) door unlocked; (b) door locked.

Figure 19. Switching the LED ON/OFF using brain signals, with real-time notifications on the video simulation and the phone: (a) LED ON; (b) LED OFF.

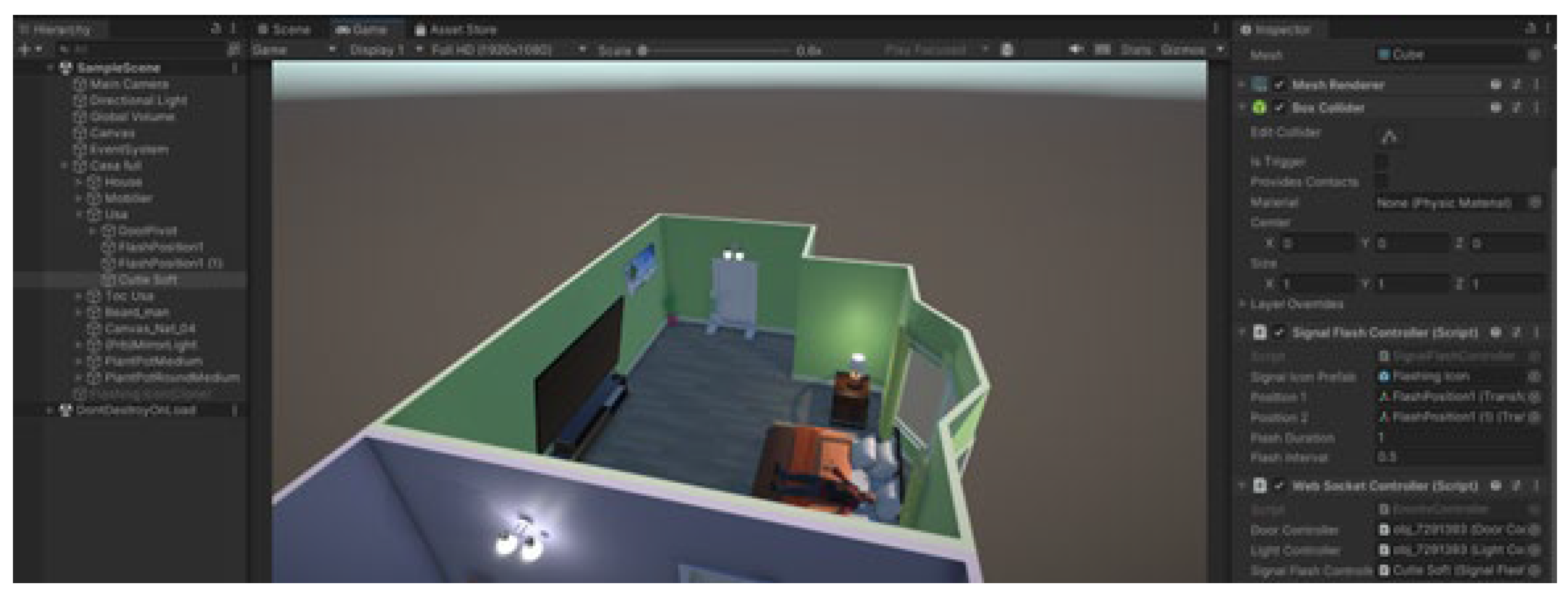

Figure 20. Home automation system simulated with Unity Engine.

Table 1. Printing parameters.

| Field | Value |
| --- | --- |
| Layer height | 0.2 mm |
| Layers on contour | 2.5 |
| Filling density | 5% |
| Filling type | Straight |
| Printing plate temperature | 60 °C |
| Printing head temperature | 210 °C |

Table 2. Time, quantity, and estimated price for 3D printing the door.

| Description | Value |
| --- | --- |
| 3D printing time | 8 hours, 15 minutes, and 50 seconds |
| Amount of material used [g] | 81.9 |
| Estimated price [$] | 19.49 |

Table 3. Time, quantity, and estimated price for 3D printing the frame (first component).

| Description | Value |
| --- | --- |
| 3D printing time | 2 hours, 52 minutes, and 42 seconds |
| Amount of material used [g] | 25.9 |
| Estimated price [$] | 6.16 |

Table 4. Time, quantity, and estimated price for 3D printing the frame (second component).

| Description | Value |
| --- | --- |
| 3D printing time | 5 hours and 29 minutes |
| Amount of material used [g] | 52.6 |
| Estimated price [$] | 12.53 |

Table 5. Total time, quantity, and estimated price for 3D printing the door and frame.

| Description | Value |
| --- | --- |
| 3D printing time | 16 hours, 37 minutes, and 32 seconds |
| Amount of material used [g] | 175.8 |
| Estimated price [$] | 41.84 |
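As a small worked check of the printing figures, the sketch below adds up the three printing times from Tables 2 to 4 and derives the price per gram implied by each table. The dictionary layout and variable names are illustrative assumptions; only the numbers come from the tables.

```python
from datetime import timedelta

# (printing time, material in grams, estimated price in $) from Tables 2-4.
PARTS = {
    "door": (timedelta(hours=8, minutes=15, seconds=50), 81.9, 19.49),
    "frame, first component": (timedelta(hours=2, minutes=52, seconds=42), 25.9, 6.16),
    "frame, second component": (timedelta(hours=5, minutes=29), 52.6, 12.53),
}

# Sum the printing times, starting from a zero-length duration.
total_time = sum((time for time, _, _ in PARTS.values()), timedelta())

# Price per gram implied by each table.
price_per_gram = {
    name: price / grams for name, (_, grams, price) in PARTS.items()
}

print("total printing time:", total_time)
for name, value in price_per_gram.items():
    print(f"{name}: {value:.3f} $/g")
```

The summed time equals the 16 hours, 37 minutes, and 32 seconds reported in Table 5, and each part works out to roughly $0.238 per gram of filament.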

Table 6. Testing results (successful attempts out of 15 per command).

| Participants | Light On/Off | Door Locked/Unlocked | AVG | STDEV |
| --- | --- | --- | --- | --- |
| 1 | 11 | 12 | 11.5 | 0.7071 |
| 2 | 9 | 10 | 9.5 | 0.7071 |
| 3 | 8 | 7 | 7.5 | 0.7071 |
| 4 | 12 | 10 | 11 | 1.4142 |
| 5 | 9 | 10 | 9.5 | 0.7071 |
| 6 | 11 | 9 | 10 | 1.4142 |
| 7 | 10 | 11 | 10.5 | 0.7071 |
| 8 | 12 | 13 | 12.5 | 0.7071 |
| 9 | 13 | 14 | 13.5 | 0.7071 |
| 10 | 11 | 10 | 10.5 | 0.7071 |
| 11 | 12 | 12 | 12 | 0 |
| 12 | 7 | 8 | 7.5 | 0.7071 |
| 13 | 9 | 7 | 8 | 1.4142 |
| 14 | 11 | 9 | 10 | 1.4142 |
| 15 | 9 | 10 | 9.5 | 0.7071 |
| 16 | 13 | 12 | 12.5 | 0.7071 |
| 17 | 11 | 10 | 10.5 | 0.7071 |
| 18 | 10 | 11 | 10.5 | 0.7071 |
| 19 | 12 | 11 | 11.5 | 0.7071 |
| 20 | 12 | 13 | 12.5 | 0.7071 |
| Total AVG | | | 10.525 | |
| STDEV AVG | | | | 0.8131 |