1. Introduction

It is common to think of landscape as a specific arrangement of objects in space. These objects can then be measured, inventoried, and mapped for purposes of environmental planning and natural resource management. To shift the perspective to a process-oriented view, anthropologist Tim Ingold [1] coined the term landscape temporality. According to Ingold, this concept encompasses both the human viewer component and the physical manifestation of objects in space and time. Landscape temporality can therefore refer to both human and phenomenal change. This is similar to concepts in landscape and urban planning, where ‘experiential’ approaches aim to describe how people perceive and interact with the landscape [2]. It is generally accepted that both human and phenomenal change can significantly influence human-environment interactions and the perceived meaning and value of landscapes [3]. However, the human viewer component in particular complicates the assessment of landscape scenic resources. Landscape and environmental planners need to assess not only physical changes (including ephemeral features), but also how people respond to these changes, which in turn affect landscapes. This includes temporal characteristics, trends, and collective perceptions of landscape change. Consequently, both the human viewer and the landscape are important issues in landscape scenic resource assessment. In recent years, algorithms and the global spread of information have increasingly influenced the behavior of large groups of people and how they engage with the landscape and its scenic resources [4]. For this reason, social media and the dissemination of information have become a new component that planners need to consider.

To systematize these three components for landscape change assessment, we propose the application of the Social-Ecological-Technological System (SETS) framework [

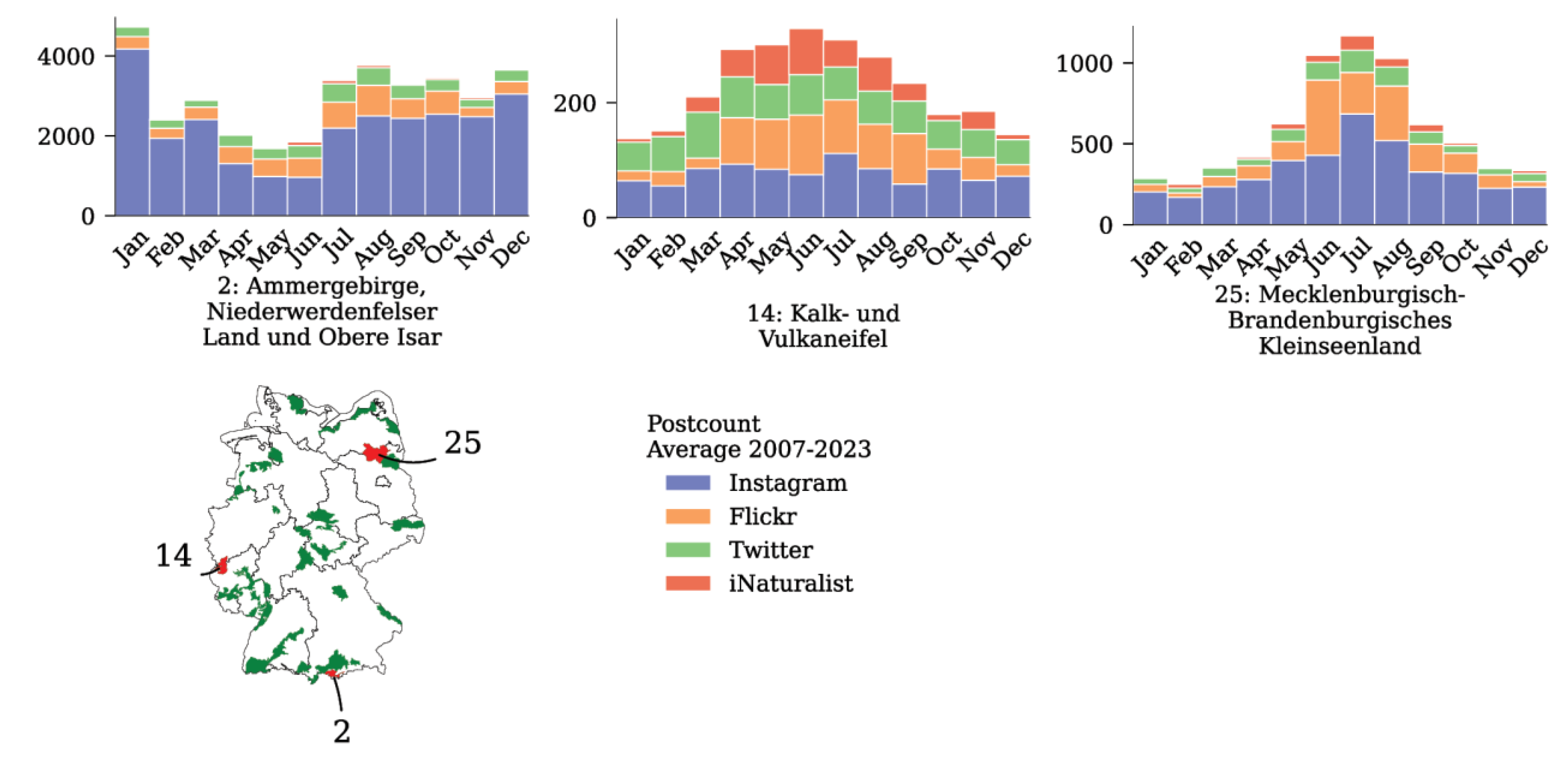

5] to temporal geosocial media analysis. As a means to demonstrate and discuss a variety of situations, we examine temporal patterns from five platforms (Reddit, Flickr, Twitter, Instagram and iNaturalist) and for five case studies. In particular, we interpret the results from a human-centered perspective, with the aim of disentangling the human viewer component from several other superimposed patterns in the data, such as algorithmic bias, platform dynamics, or shifting perceptual preferences. The results can help to corroborate or complement traditional scenic resource assessments. The presented approach can also extend the means to include newer phenomena resulting from changing communication patterns in a globally connected world.

2. Literature Review

In an attempt to improve the empirical assessment of ephemeral landscape features, Hull & McCarthy [6] proposed a concept they called “change in the landscape”. While the authors give a specific focus to wildlife, they describe a wide range of processes associated with change: “[…] day changes to night, autumn to winter and flowers to fruit; there is plant succession, bird migration, wind, rain, fire and flood [...]” (ibid., p. 266). These changes are characterized by nine types, such as slow changes (gentrification of neighborhoods, growth of vegetation), sudden changes (weather fluctuations), regular changes (seasonal in plants, animal migration, sunrises), frequent (presence of wildlife, wind, sounds), infrequent (fire, floods), long duration (buildings, roads, consequences of natural disasters), medium duration (harvesting of trees, seasons), ephemeral-irregular, -occasional, and -periodic (wildlife, weather, hiking, evidence of other hikers). In their conclusion, the authors warn that ignoring these conditions leads to biased assessments of landscape quality. In practice, however, common temporal assessments continue to focus on physical manifestations of change, such as those observed in biotopes [7], which are often assessed using remote sensing technologies [8].

A number of approaches investigate people's perceptions, attitudes, and responses to environmental change and how people engage with the landscape over time [9]. With the emergence of large collections of user-generated content shared on the Internet, several studies have attempted to assess temporal aspects. Juhász & Hochmair [10] compare temporal activity patterns between geolocated posts shared on Snapchat, Twitter, and Flickr, and find that the different active groups on these platforms are responsible for significant differences in the observed spatial patterns. Better understanding the source and nature of these differences has become a central focus of research around VGI. Paldino et al. [11] study the temporal distribution of activity by domestic tourists, foreigners, and residents in New York City, analyzing daily, weekly, and monthly activity patterns and differences between these groups. Mancini et al. [12] compare time series collected from social media and survey data. They conclude that day trips have the greatest impact on the differences between survey and social media data. Tenkanen et al. [13] show how Instagram, Flickr, and Twitter can be used to monitor visitation to protected areas in Finland and South Africa. Their findings suggest that the amount and quality of data varies considerably across the three platforms.

In a relatively new direction, ecologists are increasingly relying on unstructured VGI for biodiversity monitoring [14]. Rapacciuolo et al. [15] demonstrate a workflow to separate measures of actual ecosystem change from observer-related biases such as changes in online communities, user location or species preferences, or platform dynamics. In particular, they find that trends in biodiversity change are difficult to separate from changes in online communities. In a recent study, Dunkel et al. [16] examined reactions to sunset and sunrise expressed in the textual metadata of 500 million photographs from Instagram and Flickr. Despite significant differences in data sampling, both datasets revealed a strong consistency in spatial preference patterns for global views of these two events. Platform biases were observed in locations where user groups differed significantly, such as for the Burning Man festival in Nevada. The festival location ranked second globally for sunrise viewing on Instagram, while Flickr users shared very few photos, a pattern that is explained by the different user composition of these platforms.

As the above review shows, a key task in analyzing user-generated content is to reduce bias in the data to increase representativeness. Bias can include factors such as uneven data sampling affected by population density, or highly active individual users skewing patterns through mass uploads, as well as changes in platform incentives that affect how and what content is shared [12]. There are a number of methods that can help compensate for these effects. However, these methods can also introduce bias and further reduce the amount of data available, making interpretation more difficult. For this reason, Rapacciuolo et al. [15] divide approaches into two broad categories that are not mutually exclusive but tend to have opposite effects: filtering and aggregation (ibid.). Filtering increases precision, which helps to derive more reliable and useful inferences but also tends to reduce the available variance, richness, and representativeness of the data. Aggregation, on the other hand, minimizes bias in the overall data by, for example, increasing quantity through sampling from a larger, more representative number of observers and by integrating data from different platforms. This comes at the expense of precision. Aggregation and filtering approaches can be combined [17].
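The interplay of filtering and aggregation can be illustrated with a minimal Python sketch. It is not the workflow used in the cited studies; the record layout (`platform`, `user`, `date`, `text`) and both function names are assumptions made for illustration. Filtering is shown as a simple term match, and aggregation as a count of distinct users per month across platforms, which is one common way to limit the influence of mass uploads by individual users.

```python
from collections import defaultdict


def filter_posts(posts, term):
    """Filtering: keep only posts whose text mentions the term (case-insensitive)."""
    term = term.lower()
    return [p for p in posts if term in p["text"].lower()]


def users_per_month(posts):
    """Aggregation: count distinct (platform, user) pairs per month.

    Counting users instead of posts means that a user who uploads many
    posts in one month contributes only once to that month.
    """
    seen = defaultdict(set)
    for p in posts:
        month = p["date"][:7]  # "YYYY-MM" prefix of an ISO date string
        seen[month].add((p["platform"], p["user"]))
    return {month: len(users) for month, users in sorted(seen.items())}


# Hypothetical example records (not taken from the study data)
posts = [
    {"platform": "flickr", "user": "a", "date": "2020-04-01", "text": "Cherry blossom in the park"},
    {"platform": "flickr", "user": "a", "date": "2020-04-02", "text": "cherry blossom again"},
    {"platform": "twitter", "user": "b", "date": "2020-04-03", "text": "Cherry blossom season"},
    {"platform": "twitter", "user": "c", "date": "2020-05-10", "text": "Hiking today"},
]

all_users = users_per_month(posts)
blossom_users = users_per_month(filter_posts(posts, "cherry blossom"))
```

In this toy example the filtered series is more specific but is based on fewer observations, while the unfiltered, cross-platform series covers more observers at the cost of thematic precision.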

A gap in the current literature is how to systematize the application of filtering and aggregation approaches for new studies. The number of possible biases in data is large (e.g., [14]), and it is not possible to know a priori which biases affect the data. There is a lack of a categorization scheme to help understand the phenomena that affect sampling at specific times and places. A first step in this direction is the consideration of any user generated data as ‘opportunistic’ sampling and the contributing users as ‘observers’. Both terms are increasingly used in biodiversity monitoring [14,18]. Opportunistic in this case refers to the degree to which data are sampled without predefined systematic contribution rules or objectives. The classification is not abrupt, and a continuum of platforms ranges from fully standardized and rigorous survey protocols at one end (e.g., the United Kingdom Butterfly Monitoring Scheme, [19]), to semi-structured data (iNaturalist or eBird as volunteered geographic information aimed at collecting data for a specific purpose), to fully crowdsourced data at the other end (Flickr, Twitter, Reddit, Instagram as geosocial media) [20]. The ranking of platforms along this continuum can be judged by the homogeneity of contributing user groups and contribution rules. In summary, the above research suggests that opportunistic data tend to better reflect the user's own value system, including individual preferences for activities and observational behavior, making them suitable for assessing landscape perception and scenic resources.

This openness typically results in larger volumes of observations than are available from more systematized field surveys, but also leads to more biases that can negatively affect the reliability and validity of the data. In species monitoring, Rapacciuolo et al. [15] propose several solutions to reduce bias, such as (1) reverse engineering the ‘survey structure’, (2) finding the lowest common denominator for comparison, (3) modeling the observation process, and (4) comparing to standardized data sources. Applying these solutions to landscape perception, however, requires a broader set of considerations for disentangling results. While ecological changes are critical for landscape and urban planners, changes in the observer and the observation process itself are equally important. The latter covers effects introduced by the use of global social media and information spread. Examples include mass invasions [4] and algorithmic bias [21], which can have negative effects on landscapes and their perception.

This research presents five case studies. We discuss a system for categorizing three broad umbrella biases found in opportunistic data: Ecological, Social, and Technological. These biases are used to assess perceived landscape change from different perspectives. Rather than looking at a single dataset in detail, the cross section allows us to test the system under different parameters. The categories are borrowed from the Social-Ecological-Technological System (SETS). We demonstrate how the framework can help analysts disentangle three major system domains when interpreting and making sense of temporal patterns in community-contributed opportunistic data sources.

3. Materials and Methods

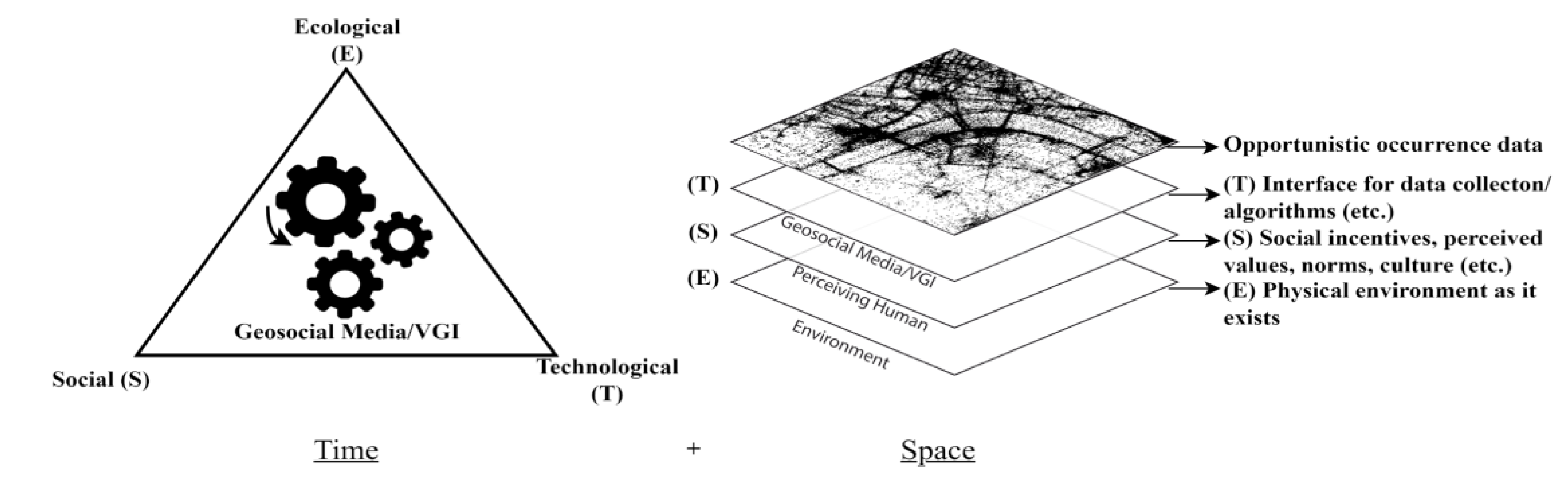

The SETS framework is a system consisting of three poles, the social (S), ecological (E), and technological (T) pole [22]. So-called couplings exist between these poles. Couplings can be thought of as a ‘lens’ for understanding the dynamics between different parts of complex ecosystems. Perceptions of landscape change are part of such a system. To date, research on landscape perception has mainly focused on two of these poles: the physical landscape and the perceiving human (see [23]). The third technological pole of the SETS framework has usually been subsumed under physical landscape assessment, which may include changes such as infrastructure. However, Rakova & Dobbe [24] emphasize that algorithms have become a critical part of the technological pole. Algorithms increasingly affect the interactions between society and ecosystems on a global scale. From this perspective, it makes sense to consider technology as a separate third component. Using geosocial media or VGI as an interface for data collection means that technological couplings can be identified as imprints in data (shown on the right side of Figure 1). Conversely, people communicating on these platforms use their senses and social context (the social dimension, S) to choose what to share and when to share it. Lastly, scenic resources and the environment (the ecological dimension, E) provide incentives that affect people's agency and their ability to perceive and respond in a particular way.

At the same time, more complex feedback loops exist between these poles that require special attention. In particular, technological phenomena such as algorithms influence individual social-ecological interactions [25]. People gather information from many sources when making travel arrangements, for example. Their choices may be influenced by physical characteristics of the landscape, such as scenic quality, as an ecological coupling (hereafter referred to as E), or by reports, reviews, and recommendations from other travelers, which can be seen as an example of a social coupling (S). Such a spatial discourse has effects over time on perceived values, norms, or the ways cultures perceive scenic beauty [2]. Finally, algorithms that promote some information while downgrading others can be described as a technological coupling (T). Especially in the latter case and for geosocial media, many algorithms and platform incentives have known and unknown effects on user behavior [26,27]. The sum of these experiences defines how information about the environment is perceived and communicated. Geosocial media and VGI therefore can have a profound influence on long-term dynamics. Through repetition and reinforcement, algorithmic couplings increasingly manifest as actual changes in the social or ecological domain. Van Dijck [25] already argued that networks such as Flickr "actively construct connections between perspectives, experiences, and memories", but also warned that "the culture of connectivity [...] leads to specific ways of 'seeing the world'" (p. 402). For example, by rewarding particularly stunning landscape photographs with "user reach" on social media, some landmarks are already under unusual visitation pressure [4].

Figure 1 illustrates geosocial media and VGI as a core component and as indistinct from SETS. This concept helps to consider these algorithms together with their social (including institutional) and ecological couplings that define the broader ecosystem in which they operate [24,28]. To draw useful conclusions and derive actionable knowledge, planners need to assess all three poles. However, approaches to disentangling the effects of these poles vary widely depending on the data source and analysis context. To explore these different analytical contexts and data characteristics for assessing perceived landscape change, we use data from five platforms in five small case studies. The case studies illustrate a variety of tasks, challenges, and pitfalls in early exploratory parts of analyses. We discuss these case studies from a SETS perspective. The discussion is sorted based on the complexity of identified data couplings, from less complex to more complex. Table 1 lists platforms and number of observations collected for each study.

Data collection for these studies was performed using the official application programming interfaces (APIs) provided by the platforms. Only publicly shared content was retrieved. With the exception of the Reddit data, we only selected content that was either geotagged or contained some other form of explicit reference to a location or coordinate. To reduce the effort of cross-platform analysis, we mapped the different data structures and attributes of all platforms to a common structure for comparison. In addition, the data were transformed into a privacy-friendly format that allows quantitative analysis without the need to store raw data [29]. As a result of this data abstraction process, all measures reported in this paper are estimates, with guaranteed error bounds of ±2.30%. To assess temporal patterns, we used either photo timestamps (Flickr), time of observation (iNaturalist), or post publication date as a proxy (Twitter, Instagram, Reddit). In the following, we keep the discussion of data collection and processing steps to a necessary minimum and refer readers to Supplementary Materials S1-S9 for commented code, data collection, processing, and visualization.
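As an illustration of the mapping step, the following Python sketch shows how platform-specific records could be reduced to one common structure with a single temporal attribute. It is not the structure used in the Supplementary Materials: the class `Record`, the function `to_record`, and all raw field names (`date_taken`, `observed_on`, `created_at`, `user_id`, `lat`, `lon`) are hypothetical. The sketch also does not implement the privacy-friendly abstraction [29]; it only makes explicit which timestamps are direct observations and which are publication-date proxies.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional

# Hypothetical raw field that carries the temporal reference on each platform
TIME_FIELD = {
    "flickr": "date_taken",        # photo timestamp
    "inaturalist": "observed_on",  # time of observation
    "twitter": "created_at",       # publication date (proxy)
    "instagram": "created_at",     # publication date (proxy)
    "reddit": "created_at",        # publication date (proxy)
}
# Platforms where the publication date only approximates the time of experience
PROXY_PLATFORMS = {"twitter", "instagram", "reddit"}


@dataclass(frozen=True)
class Record:
    """Common structure shared by all platforms (illustrative only)."""
    platform: str
    user_id: str
    timestamp: datetime
    timestamp_is_proxy: bool
    lat: Optional[float] = None
    lon: Optional[float] = None


def to_record(platform: str, raw: dict) -> Record:
    """Map a raw, platform-specific dict to the common structure."""
    return Record(
        platform=platform,
        user_id=str(raw["user_id"]),
        timestamp=datetime.fromisoformat(raw[TIME_FIELD[platform]]),
        timestamp_is_proxy=platform in PROXY_PLATFORMS,
        lat=raw.get("lat"),  # missing for content without coordinates
        lon=raw.get("lon"),
    )


photo = to_record("flickr", {"user_id": 1, "date_taken": "2021-04-03T10:15:00",
                             "lat": 51.0, "lon": 13.7})
comment = to_record("reddit", {"user_id": "u42", "created_at": "2021-04-05T08:00:00"})
```

Keeping the proxy flag with each record allows later analyses to treat publication dates more cautiously than photo or observation timestamps.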

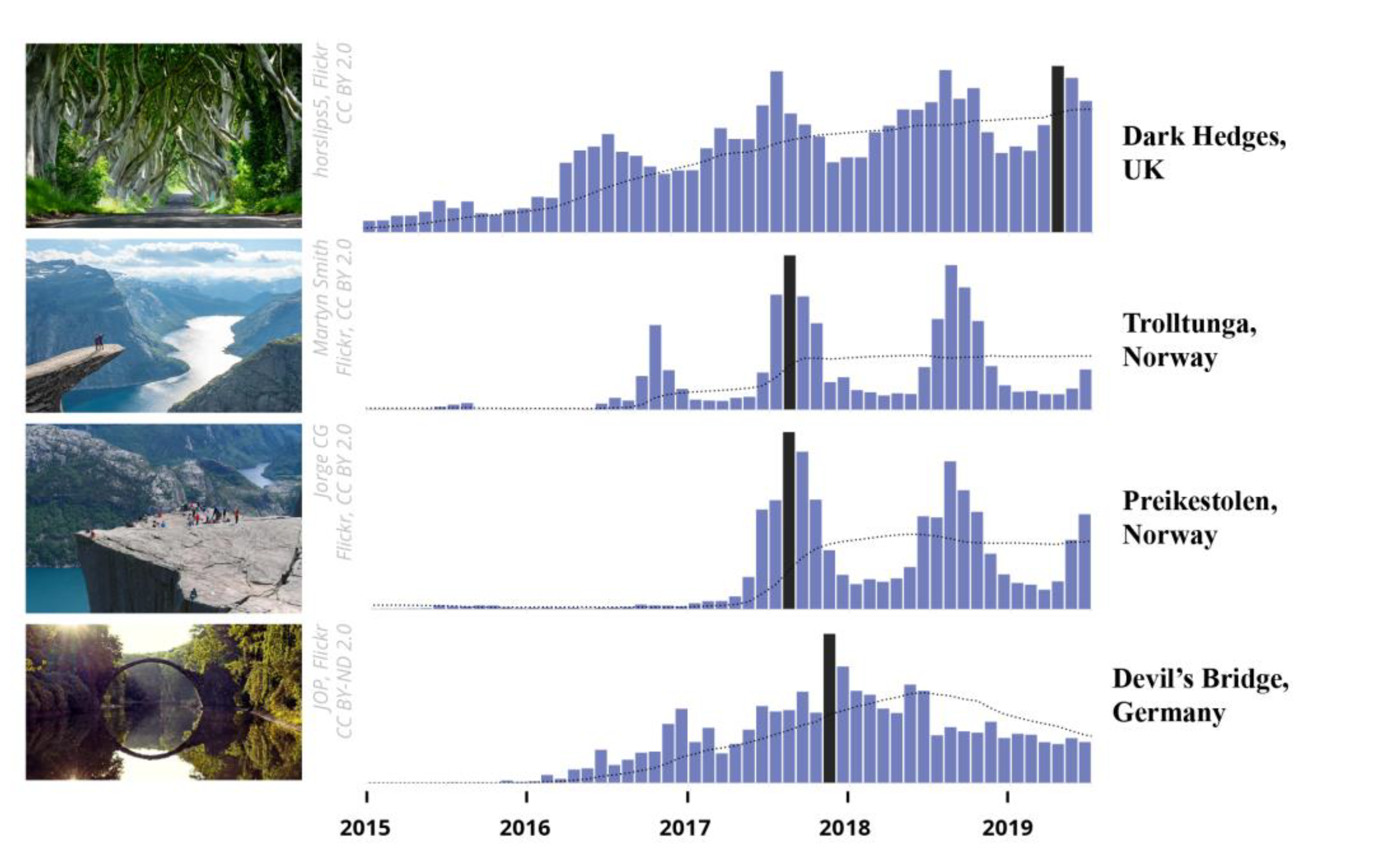

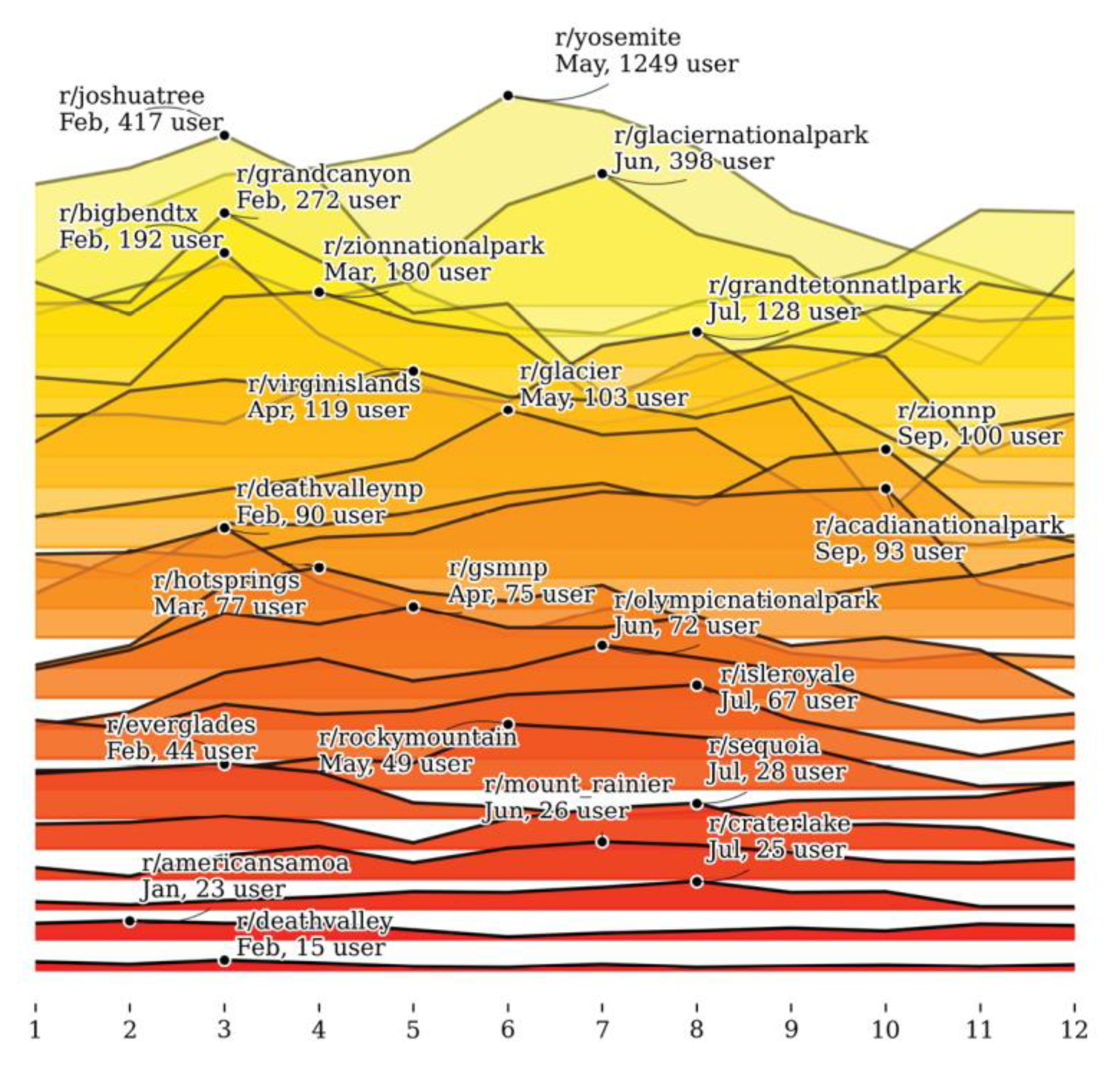

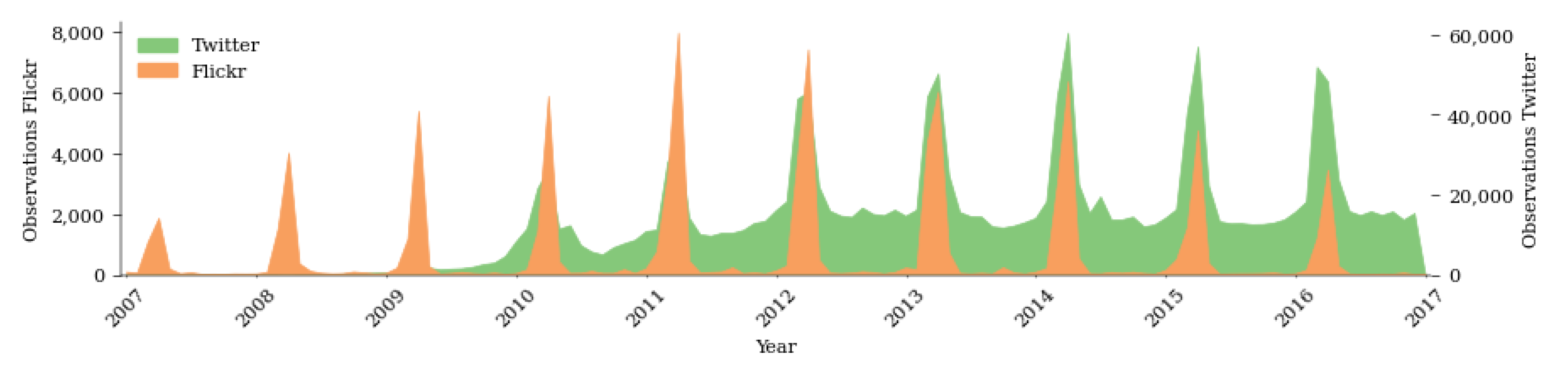

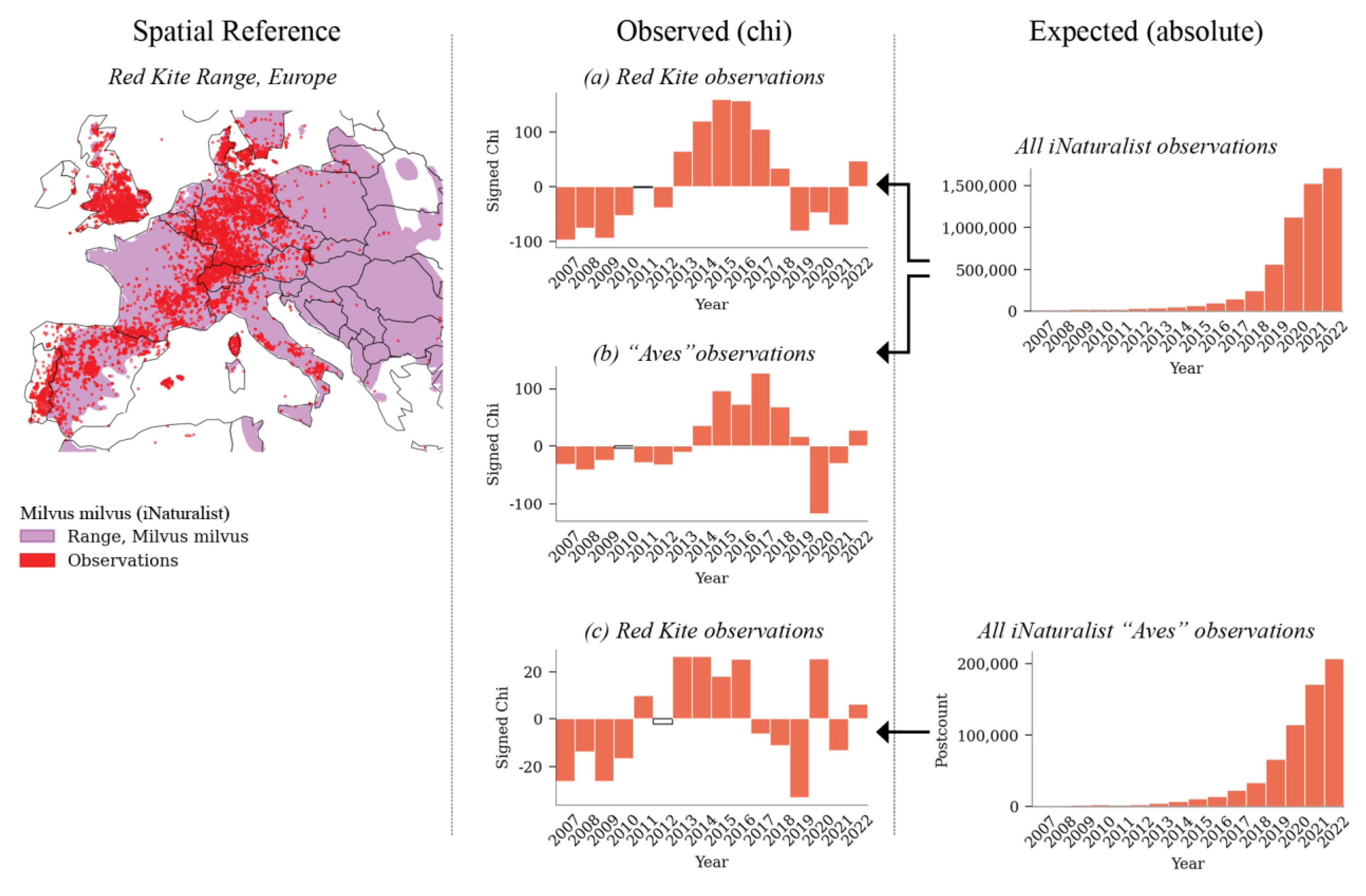

The first study focuses on data from Instagram, as a single data source, and a specific phenomenon related to landscape change that is observed at 14 selected vantage points across Europe. As a second example, we looked at Reddit, a discussion platform that does not support explicit georeferencing. However, spatial information can be inferred, for example, from subreddits that refer to different spatial regions. We manually matched 46 subreddits related to US national parks and collected comments and posts from 2010 to 2022 (S1-S4). This dataset contains 53,491 posts and 292,404 comments. Due to significant differences in data availability, we limit our analysis to the 20 national parks that receive the most communication exposure. The third study focuses on a single ecological phenomenon (cherry blossoming) and examines seasonal and long-term variation across two platforms, Flickr and Twitter (S5). The fourth study illustrates cross-platform analysis by sampling and aggregating data from Instagram, Flickr, Twitter, and iNaturalist for 30 biodiversity hotspots in Germany. The total number of photos and observations is 2,289,722. In this case study, we do not apply any filtering techniques, and the results show the absolute frequencies of photos, tweets, and animal and plant observations, respectively (S6). In the last case study, we look at global observations of the Red Kite (Milvus milvus) and use a variety of filtering techniques to examine temporal patterns (S7-S9). Specifically, we apply the signed chi normalization to temporal data. This equation was originally developed by Visvalingam [30] to visualize overrepresentation and underrepresentation in spatial data.

Applying this normalization allows analysts to distinguish properties of filtered subsets of data from phenomena or biases found in the entire data set [30]. The two components can also be described as a generic query (expected) and a specific query (observed). A specific query might be the frequency of photographs related to a particular topic or theme (e.g., all photographs of the Red Kite). A generic query, on the other hand, ideally requires a random sample of data. Observed and expected values are usually evaluated for individual "bins", which can be spatial grid cells or temporally delimited time periods. Based on the global ratio of frequencies between observed and expected (norm), individual bins are normalized. Positive chi values indicate overrepresentation and negative values indicate underrepresentation of observations in a given time interval. Randomness of the generic query is typically difficult to achieve due to the opaque nature of APIs. For example, it is not always clear how data has been pre-filtered by algorithms before being served to the user [16]. The easiest way to ensure randomness is to sample all data from a platform. For Flickr and iNaturalist, this was possible, and all geotagged photos and observations were queried for the period from 2007 to 2022. The resulting dataset we use for “expected” frequencies consists of metadata for 9 million iNaturalist observations. Observed frequencies are based on 22,075 Flickr photos and 20,561 iNaturalist observations. All data and code used to generate the graphs are made available in a separate data repository [31].
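The normalization described above can be sketched in a few lines of Python. The equation itself is not reproduced in this section, so the sketch assumes the commonly cited form of the signed chi statistic, chi = (obs × norm − exp) / sqrt(exp), with norm taken as the ratio of total expected to total observed frequencies. The function name `signed_chi` and the handling of empty bins are assumptions made for illustration.

```python
import math


def signed_chi(observed, expected):
    """Signed chi value per bin for two equally binned frequency series.

    Assumed form: chi = (obs * norm - exp) / sqrt(exp), where
    norm = sum(expected) / sum(observed) scales the specific query
    (observed) to the volume of the generic query (expected).
    Bins with an expected frequency of zero are returned as None.
    """
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same number of bins")
    total_obs = sum(observed)
    if total_obs == 0:
        raise ValueError("observed frequencies sum to zero")
    norm = sum(expected) / total_obs
    chi = []
    for obs, exp in zip(observed, expected):
        if exp <= 0:
            chi.append(None)  # undefined without expected data
        else:
            chi.append((obs * norm - exp) / math.sqrt(exp))
    return chi


# Hypothetical example: two time bins with equal generic activity
chi_values = signed_chi(observed=[10, 30], expected=[100, 100])
```

In this toy example the first bin is underrepresented (negative value) and the second overrepresented (positive value), although the generic activity is identical in both bins.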

5. Conclusions

Many of the relationships between visual perception, photo-based communication, and collective social behavior have been known since Urry wrote about "the tourist gaze" [33]. Since then, geosocial media and online communication have radically altered the technological counterpart. Geosocial media and algorithms now influence, distort, and modify the way people perceive their environment. This has given rise to new phenomena, such as mass invasions or cyber cascades, which cannot be explained without considering the global spread of information. Trends such as fake news [46], social bubbles [45], and GenAI are creating an "era of artificial illusions" [47] in which the senses are increasingly challenged to distinguish between the real and the imagined. On the other hand, masses of data on how people perceive their environment are readily available online as what we call opportunistic occurrence data. Assessing perceived landscape change from this data requires disentangling multiple superimposed patterns in the data. For biodiversity monitoring and species observation, Rapacciuolo et al. [15] refer to this process as "reverse engineering survey structure" (p. 1226). Their goal is to identify changes in the physical world (species trends) based on data collected online. However, unlike species modeling, landscape perception analysis requires equal consideration of the human observer and the physical landscape. Both poles are important subjects of analysis. In this paper, we introduce technology as a third pole. Based on the SETS framework, we distinguish three main domains in which change can occur: the ecological (E), social (S), and technological (T) domain. We discuss the application of the SETS framework in five case studies and show how couplings between these domains can be used to disentangle relationships.

In terms of scenic resource assessment, the five case studies can be grouped based on how they address two common tasks: (1) identifying temporal characteristics for a given area or region (national parks 4.2, biodiversity hotspots 4.4), and (2) characterizing and identifying temporal trends for selected scenic resources or phenomena (mass invasions 4.1, cherry blossoms 4.3, red kites 4.5). Generic queries and the integration of multiple data sources can reduce bias and increase representativeness, which helps to gain confidence in the data. In particular, comparisons between data from different platforms help to better understand tourist flows for different user groups. However, only unspecific and broad interpretations are possible, such as identifying and confirming common, recurring seasonal visitation patterns for selected areas and regions. Our results show this for two case studies of US national parks and for 30 biodiversity hotspots in Germany. On the other hand, it proved difficult to identify trends for selected themes or scenic resources. Our interpretation is that overall platform changes (e.g., popularity) or changes in subcommunities (e.g., bird photographers or the group of "red kite photographers" on Flickr and iNaturalist) have a stronger influence on the observed patterns than phenomenal changes, such as the actual growth of the red kite population. As an exception, observations of cherry blossom, as a globally perceived ecological event, are found to be very stable and seem to be less affected by changes in communities. One possible interpretation is that the phenomenon is valued equally across many cultures and communities. Such events may therefore be useful as “benchmark events” to compensate for within-community variation in the study of more localized aspects of landscape change.

Our results show that platform biases exist toward individual poles that affect their suitability for assessing some contexts of landscape change better than others. iNaturalist or Flickr, for example, feature metadata that appears more directly linked to the actual perceived environment. This makes these platforms better suited for monitoring actual ecological change (E), such as the timing of events like flowers, fruits, and leaf color change. Other aspects related to broader societal behavior, human preferences, and collective spatiotemporal travel footprints (S) may require consideration of a broader set of platforms, such as Instagram or Twitter. Due to the rules and incentives on these platforms, not all aspects are captured equally. In our study, we observed that charged discussions with positive and negative reaction sentiments, associations, metaphors, and political couplings are primarily found on X (formerly Twitter) and Reddit. The influence of technology and algorithms further varies, as shown in our case studies and confirmed by other authors [37]. Capturing these different perspectives and conditions of opportunistic data contribution helps planners gain a more holistic understanding of the dynamics influencing visual perception and behavior observed in the field. Cross-platform comparisons, such as in case study 4.4, are found to be particularly useful in reducing bias and gaining actionable knowledge for decision making. Results can be used, for example, to increase environmental justice or reduce socio-spatial inequality [24]. They can also help develop techniques to counteract phenomena associated with the technological domain, such as crowd bias toward certain visual stimuli and imitative photo behavior.

When evaluating scenic resources through the “lens” of user-generated content from geosocial media, we urge planners to consider the following three situations. First (1), some ecological features (E) may be valuable even if they are not perceived by anyone. This applies to ecological phenomena that are rare, take a long time to occur, or cannot be recreated or replaced once lost. Such features may be difficult to detect in user-generated content and with quantitative analysis. Second (2), some content may be shared online for social purposes (S) even if the original experience was not perceived as scenic or valuable. We observed this effect for places affected by "mass invasions" (4.1). Here, users appear to selectively share photos that show few people or solitary scenes from what are actually crowded vantage points. Tautenhahn explains this phenomenon as a “self-staging” in the landscape [32, p. 9]. Finally (3), even in those cases where people share their original, unaltered experiences, for example through photographs of crowded scenes, geosocial media ranking algorithms (T) may prevent these experiences from ever gaining a wider user "reach", for example by downgrading unaesthetic or negatively perceived content. These algorithmic effects may make it difficult for planners to interrupt feedback loops, such as mass invasions, with negative consequences for infrastructure, ecology, and human well-being (see [4]).

From a broader perspective, we see variable specificity as a key challenge in capturing landscape change through user-generated content, including ephemeral features and how people respond to these changes. Depending on the definition, events can range from simple atomic changes that people perceive and respond to, such as a single rumble of thunder or a sunset, to more complex events or collections of events arranged in a particular pattern or sequence [35]. The level at which events and landscape change need to be assessed can vary widely. From an analyst's perspective, integrating and comparing data from multiple sources can increase representativeness, but it can also produce only generic results, leading to broad and non-specific interpretations that are difficult to translate into decision making. Conversely, specific queries can produce results of higher specificity, with the trade-off of increased bias and reduced representativeness. Rapacciuolo et al. [15] propose individual data workflows to reduce bias in selective biodiversity monitoring. Specifically, they recommend the use of "benchmark species" to normalize observed data for the species under investigation, such as the Red Kite. Applying this concept to landscape change monitoring could mean first considering observations from umbrella communities, such as all "bird photographers" on iNaturalist (case study 4.5), as the expected value in the signed chi equation. This generic query can then be used to compensate for within-community variation to visualize corrected trends for specific observations (e.g., to normalize observations of specific bird species). In the fields of landscape and urban planning, such normalized observations over time can help to better understand the unique transient characteristics of places, areas and landscapes, to protect and develop specific ephemeral scenic values, or to propose actions to change negative influences.