1. Introduction

Hyperspectral images (HSIs) are widely used in land cover mapping, agriculture, urban planning, and other applications [1]. The high spectral resolution of HSIs enables the detection of a wide range of small and specific targets through spatial analysis or visual inspection [2]. In HSIs, the definition of a small target typically depends on the application scenario and task requirements. For instance, if the task is to detect traffic signs, small targets may be defined as objects with traffic-sign characteristics, with their size determined by the actual dimensions of traffic signs. These small targets usually range from a single pixel to a few dozen pixels. Although they are minuscule compared to the entire image, they are highly important in specific contexts. Additionally, the semantic content of small targets may carry particular significance; for example, HSIs may include various objects such as vehicles, buildings, and people.

In this topical area, the most challenging targets in HSIs have the following characteristics: 1) Weak contrast: HSIs, with their high spectral resolution but lower spatial resolution, depict spatially and spectrally complex scenes affected by sensor noise and material mixing; the targets are immersed in this context, which usually leads to weak contrast and low signal-to-noise ratio (SNR) values. 2) Limited numbers: Due to the coarse spatial resolution, a target often occupies only one or a few pixels in a scene, which provides limited training samples. 3) Spectral complexity: HSIs contain abundant spectral information, which leads to a very high-dimensional feature space, strong correlation between bands, extensive data redundancy, and eventually long computational times.

In hyperspectral target detection (HSTD), the background typically refers to the parts of the image that do not contain the target. Compared to the target, the background usually has a higher similarity in color, texture, or spectral characteristics with the surrounding area, resulting in lower contrast within the image; that is, it does not display distinct characteristics or significant differences from the surrounding regions. Background areas generally exhibit a certain level of consistency, with neighboring pixels having highly similar attributes, as in a green grass field, a blue sky, or a gray building wall. This consistency means that the background may show relatively uniform spectral characteristics. Additionally, the background usually occupies a larger portion of the image and may have characteristics related to the environment, such as terrain, vegetation types, and building structures. These characteristics help distinguish the background from the target area. Accurately defining and modeling the background is crucial for improving the accuracy and robustness of target detection.

Based on these characteristics, the algorithms for HSI target extraction may be roughly subdivided into two big families: more traditional signal detection methods and more recent data-driven pattern recognition and machine learning methods.

Traditionally, detection involves transforming the spectral characteristics of target and background pixels into a specific feature space based on predefined criteria [4]. Targets and backgrounds occupy distinct positions within this space, allowing targets to be extracted using threshold or clustering techniques. In this research domain, diverse descriptions of background models have led to the development of various mathematical models [5, 6, 7, 8] for characterizing spectral pixel changes.

A first category of algorithms in this family comprises spectral information-based models, in which the target and background spectral signatures are assumed to be generated by a linear combination of endmember spectra. The Orthogonal Subspace Projection (OSP) [9] and the Adaptive Subspace Detector (ASD) [10] are two representative subspace-based target detection algorithms. OSP employs a signal detection method to remove background features by projecting each pixel’s spectral vector onto a subspace. However, the same object may have distinct spectra, and the same spectrum may appear in different objects, because of spectral variation caused by the atmosphere, sensor noise, and the mixing of multiple spectra; together with imaging technology limitations, this makes target identification more challenging in practice [11]. To tackle this issue, Chen et al. proposed an adaptive target pixel selection approach based on spectral similarity and spatial relationship characteristics [12], which addresses the pixel selection problem.

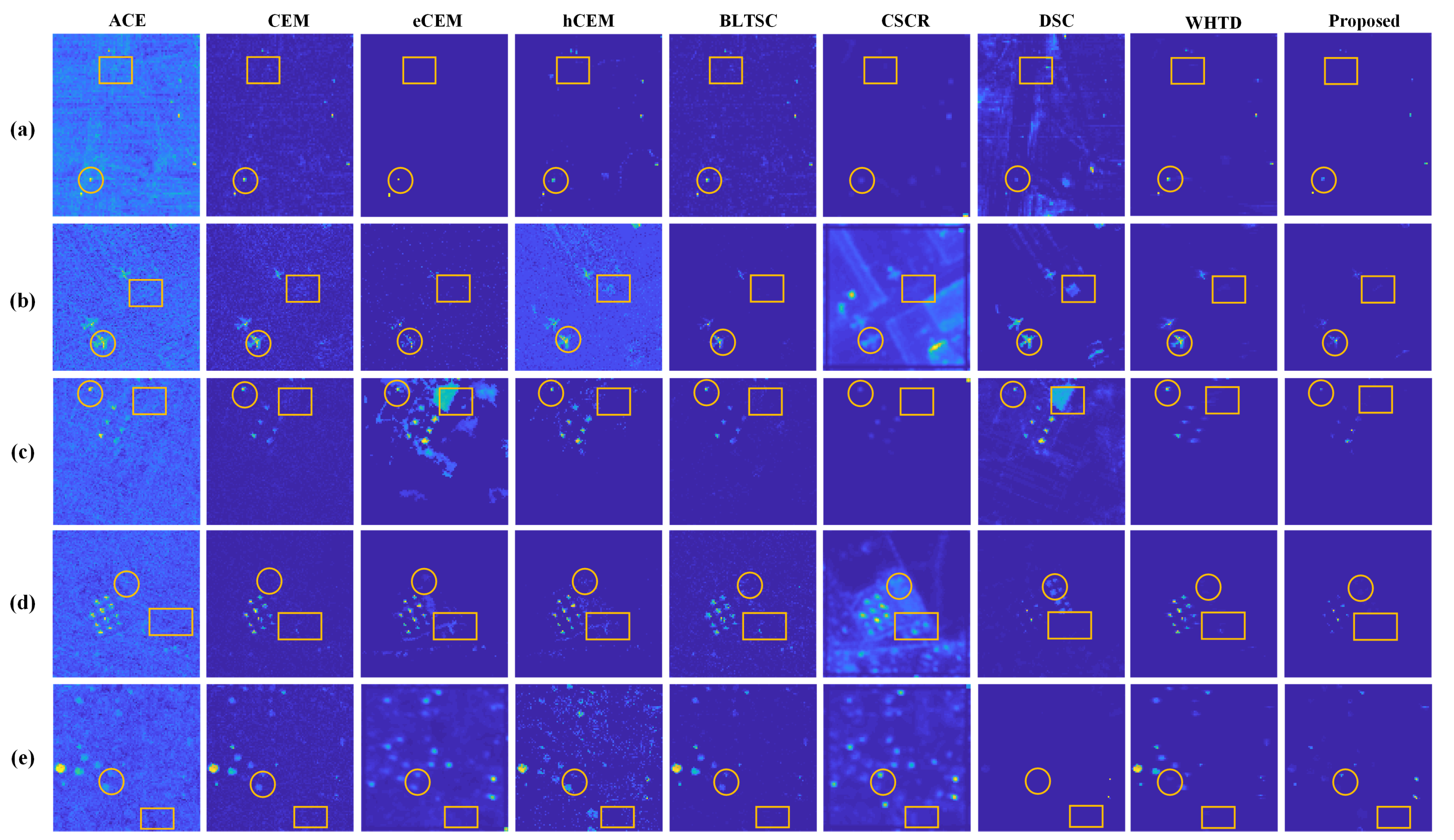

A second category is statistical methods. These approaches presume a background that follows a specified distribution and then establish whether or not the target exists by looking for outliers with respect to this distribution. The adaptive coherence (cosine) estimator (ACE) [13] and the adaptive matched filter (AMF) [14] are among the techniques in this group, and both are based on the generalized likelihood ratio test (GLRT) [15].

Both ACE and AMF are spectral detectors that measure the distance between target features and data samples. ACE can be considered a special case of a spectral angle-based detector, while AMF was designed according to hypothesis testing under a Gaussian distribution.

The third category is the representation-based methods, which make no assumption on the data distribution, e.g., constrained energy minimization (CEM) [16, 17], hierarchical CEM (hCEM) [18], ensemble-based CEM (eCEM) [19], sCEM [20], the target-constrained interference-minimized filter (TCIMF), and sparse representation (ST)-based methods [21]. Among these approaches, the classic and foundational CEM method constrains the target response while minimizing the output energy of the data samples, and the TCIMF method combines CEM and OSP.
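To make the difference between the statistical and representation-based detectors concrete, the following NumPy sketch implements the textbook forms of AMF, ACE, and CEM. It is only an illustration under stated assumptions: the function names, the squared form of AMF, the use of global image statistics as the background estimate, and the small ridge term are choices made here, not details taken from the cited works.

```python
import numpy as np


def background_stats(pixels):
    """Return the mean, inverse covariance and inverse correlation of pixels (N x C)."""
    mean = pixels.mean(axis=0)
    centered = pixels - mean
    cov = centered.T @ centered / pixels.shape[0]
    corr = pixels.T @ pixels / pixels.shape[0]
    # Assumption: a small ridge keeps the inverses stable for correlated bands.
    ridge = 1e-6 * np.eye(pixels.shape[1])
    return mean, np.linalg.inv(cov + ridge), np.linalg.inv(corr + ridge)


def amf(pixels, target, mean, cov_inv):
    """Adaptive matched filter score (squared form) for every pixel."""
    x = pixels - mean
    s = target - mean
    return (x @ cov_inv @ s) ** 2 / (s @ cov_inv @ s)


def ace(pixels, target, mean, cov_inv):
    """Adaptive coherence estimator: squared cosine in the whitened space."""
    x = pixels - mean
    s = target - mean
    num = (x @ cov_inv @ s) ** 2
    # einsum evaluates x_i^T cov_inv x_i for every pixel i.
    den = (s @ cov_inv @ s) * np.einsum("ij,jk,ik->i", x, cov_inv, x)
    return num / den


def cem(pixels, target, corr_inv):
    """CEM filter: minimise the output energy subject to w^T d = 1."""
    w = corr_inv @ target / (target @ corr_inv @ target)
    return pixels @ w
```

ACE is bounded in [0, 1] because it is a squared cosine, whereas the CEM output is exactly 1 for a pixel equal to the prior target spectrum because of the unit-gain constraint.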

The most recent deep learning methods for target detection are mainly based on data representations involving kernels, sparse representations, manifold learning, and unsupervised learning. Specifically, methods based on sparse representation take into account the connections between samples in the sparse representation space. The Combined Sparse and Collaborative Representation (CSCR) [22] and the Dual Sparsity Constrained (DSC) [23] methods are examples of sparse representation. However, the requirement for exact pixel-wise labeling makes good performance expensive to achieve. To address the challenge of obtaining accurate pixel-level labels, Jiao et al. proposed a semantic multiple-instance neural network with contrastive and sparse attention fusion [24]. Kernel-based transformations [25] are employed to address the linear inseparability between targets and background in the original feature space. Gaussian radial basis function kernels are commonly used, but there is still a lack of rules for choosing the best-performing kernel. In addition, manifold learning is employed in [26] to learn a subspace that encodes discriminative information. Finally, among unsupervised learning methods, an effective feature extraction method based on unsupervised networks has been proposed to mine the intrinsic properties underlying HSIs, in which spectral regularization is imposed on an autoencoder (AE) and a variational AE (VAE) to emphasize spectral consistency [27]. Another novel network block, with region-of-interest feature transformation and a multi-scale spectral-attention module, has also been proposed to reduce the spatial and spectral redundancies simultaneously and provide strong discrimination [28].

While recent advancements have shown increased effectiveness, challenges remain in efficiently tuning a large number of hyperparameters and in obtaining accurate labels [29, 30]. Moreover, the statistical features extracted by GAN-based methods often overlook the potential topological structure information. This greatly limits the ability to capture the non-local topological relationships that better represent the underlying data structure of HSIs. Thus, the representativeness of the features is not fully exploited to preserve the most valuable information across different networks. Detecting the location and shape of small targets with weak contrast against the background remains a significant challenge. Therefore, this paper proposes a deep representative model of the graph and generative learning fusion network with frequency representation. The goal is to learn a stable and robust model based on an effective feature representation. The primary contributions of this study are summarized as follows:

We explore a collaboration framework for HSTD with lower computational cost and high accuracy. Under this framework, the features extracted by graph learning and by generative learning compensate for each other. To the best of our knowledge, this is the first work to explore the collaborative relationship between graph and generative learning in HSTD.

The graph learning module is established for HSTD. The GCN module aims at compensating for the information loss of details caused by the encoder and decoder via aggregating features from multiple adjacent levels. As a result, the detailed features of small targets can be propagated to the deeper layers of the network.

The primary mini-batch GCN branch for HSTD follows an explicit design principle derived from the graph method to reduce the high computational cost. It enables the graph to enhance the features and suppress noise, effectively dealing with background interference and retaining the target details.

A spectral-constrained filter is used to retain the different frequency components. Frequency learning is introduced into data preparation in coarse candidate sample selection, favoring strong relevance among pixels of the same object.

The remainder of this article is structured as follows. Section 2 provides a brief overview of graph learning. Section 3 elaborates on the proposed GCN and introduces the fusion module. Extensive experiments and analyses are given in Section 4. Section 5 provides some conclusions.

2. Graph Learning

A graph describes the one-to-many relations in a non-Euclidean space [31], including directed and undirected patterns. It can be combined with a neural network to design Graph Convolution Networks (GCNs), Graph Attention Networks (GATs), Graph Autoencoders (GAEs), Graph Generative Networks (GGNs), and Graph Spatial-Temporal Networks (GSTNs) [32].

Among the graph-based networks mentioned above, this work focuses on GCNs, a family of neural networks that generalizes convolution from traditional (grid-structured) data to graph data. Specifically, the convolution operation can be applied within a graph structure, where the key operation involves learning a mapping function. In this work, an undirected graph models the relationship between the spectral features and performs feature extraction [33]. In matrix form, the layer-wise propagation rule of the multi-layer GCN is defined as:

$$H^{(l+1)} = h\left(\tilde{D}^{-1/2}\,\tilde{A}\,\tilde{D}^{-1/2}\,H^{(l)}\,W^{(l)} + b^{(l)}\right),$$

where $H^{(l)}$ denotes the output in the $l$-th layer, and $h(\cdot)$ is the activation function with respect to the weights $W^{(l)}$ to be learned and the biases $b^{(l)}$ of all layers. $\tilde{D}$ represents a diagonal matrix of node degrees, with $\tilde{D}_{i,i} = \sum_j \tilde{A}_{i,j}$. $\tilde{A} = A + I$ represents the adjacency matrix $A$ of the undirected graph $G$ added with the identity matrix $I$ of proper size throughout this article.
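The propagation rule described above is commonly written as $h(\tilde{D}^{-1/2}\tilde{A}\tilde{D}^{-1/2}HW + b)$ with $\tilde{A} = A + I$. The NumPy sketch below shows one such layer under that assumption; the function names are illustrative, ReLU is assumed as the activation, and the weights are passed in rather than learned.

```python
import numpy as np


def normalize_adjacency(adj):
    """Return D~^{-1/2} (A + I) D~^{-1/2} for a symmetric, non-negative adjacency."""
    adj_tilde = adj + np.eye(adj.shape[0])   # add self-loops
    deg = adj_tilde.sum(axis=1)              # node degrees of A + I (always >= 1)
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return adj_tilde * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_layer(adj_norm, h, weight, bias):
    """One propagation step: aggregate neighbours, apply weights, then ReLU."""
    return np.maximum(adj_norm @ h @ weight + bias, 0.0)
```

Adding the identity before normalising means every node keeps part of its own feature vector when neighbours are averaged, and the symmetric scaling keeps the spectrum of the propagation matrix bounded across stacked layers.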

Deep learning-based approaches for HSIs based on graph learning are increasingly widely used, especially for detection and classification. For instance, weighted feature fusion of convolutional neural network (CNN) and graph attention network (WFCG) exploits the complementary characteristics of superpixel-based GAT and pixel-based CNN [34, 35]. Finally, a robust self-ensembling network (RSEN) is proposed, comprising a base and an ensemble network, which achieves satisfactory results even with limited sample sizes [36].

3. Proposed Methodology

3.1. Overall Framework

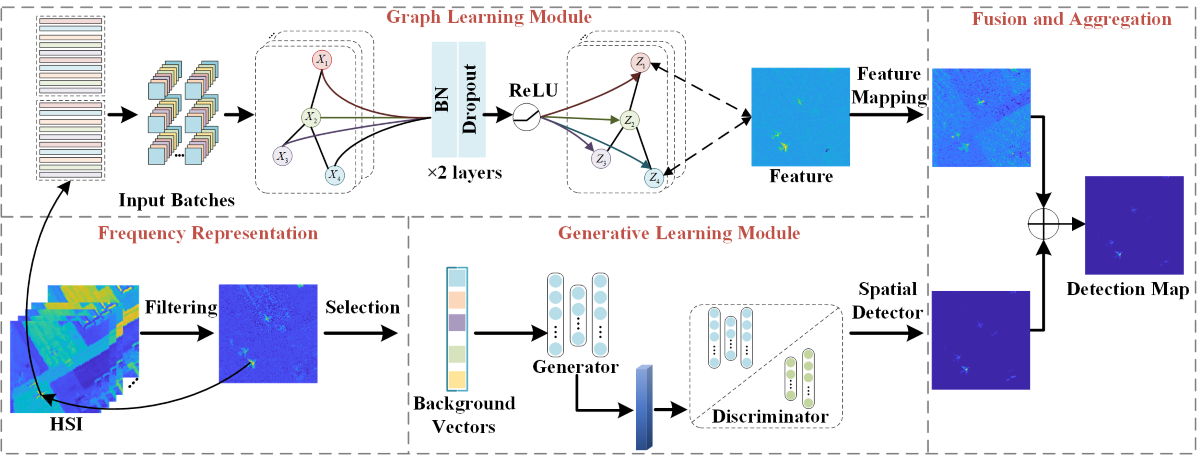

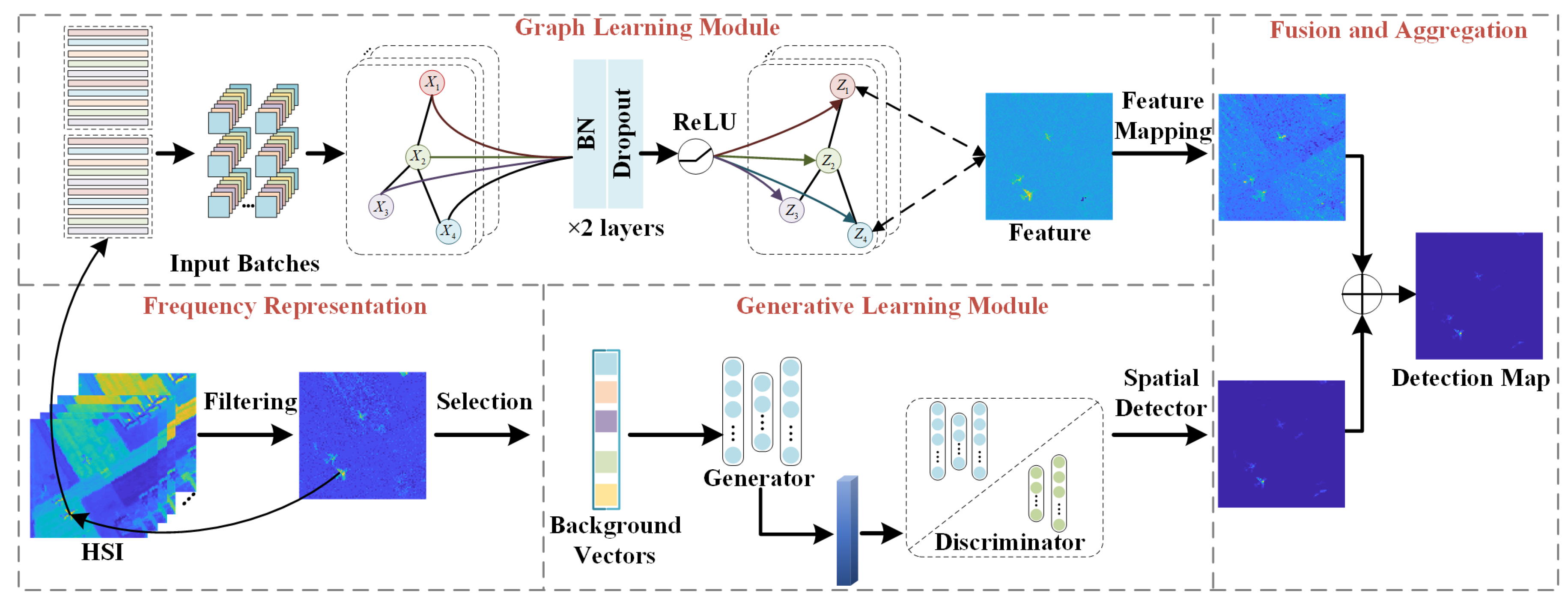

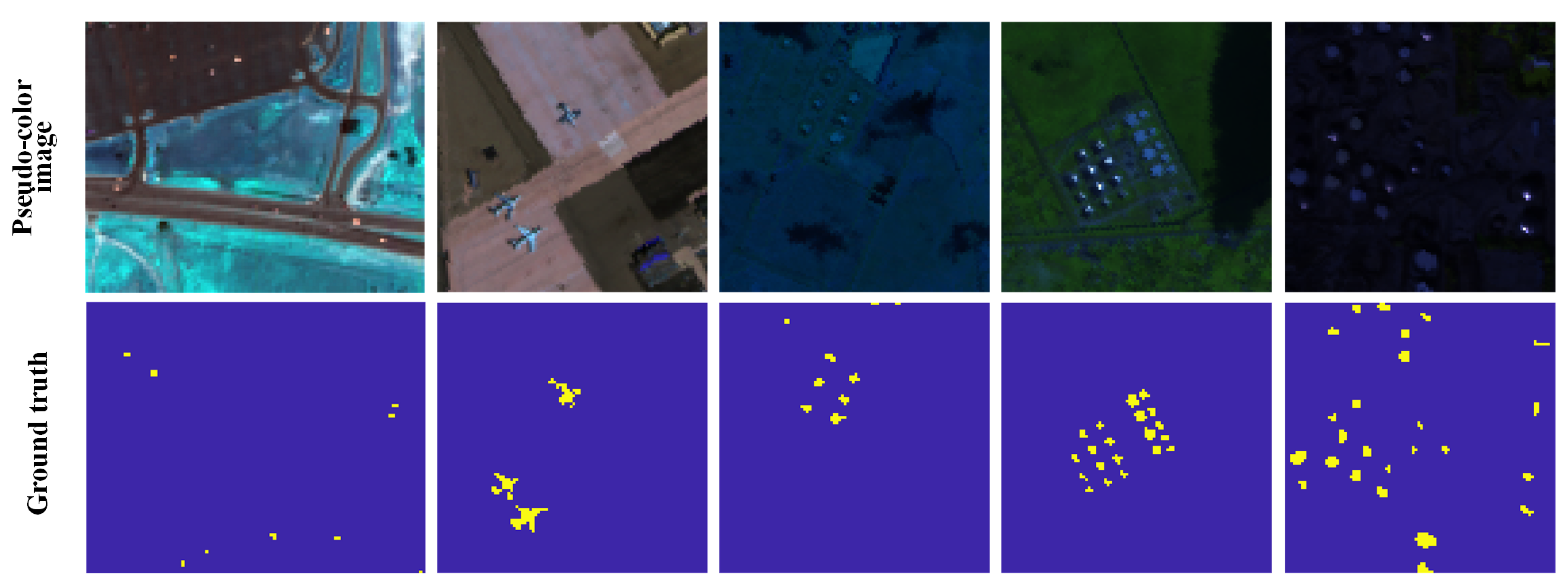

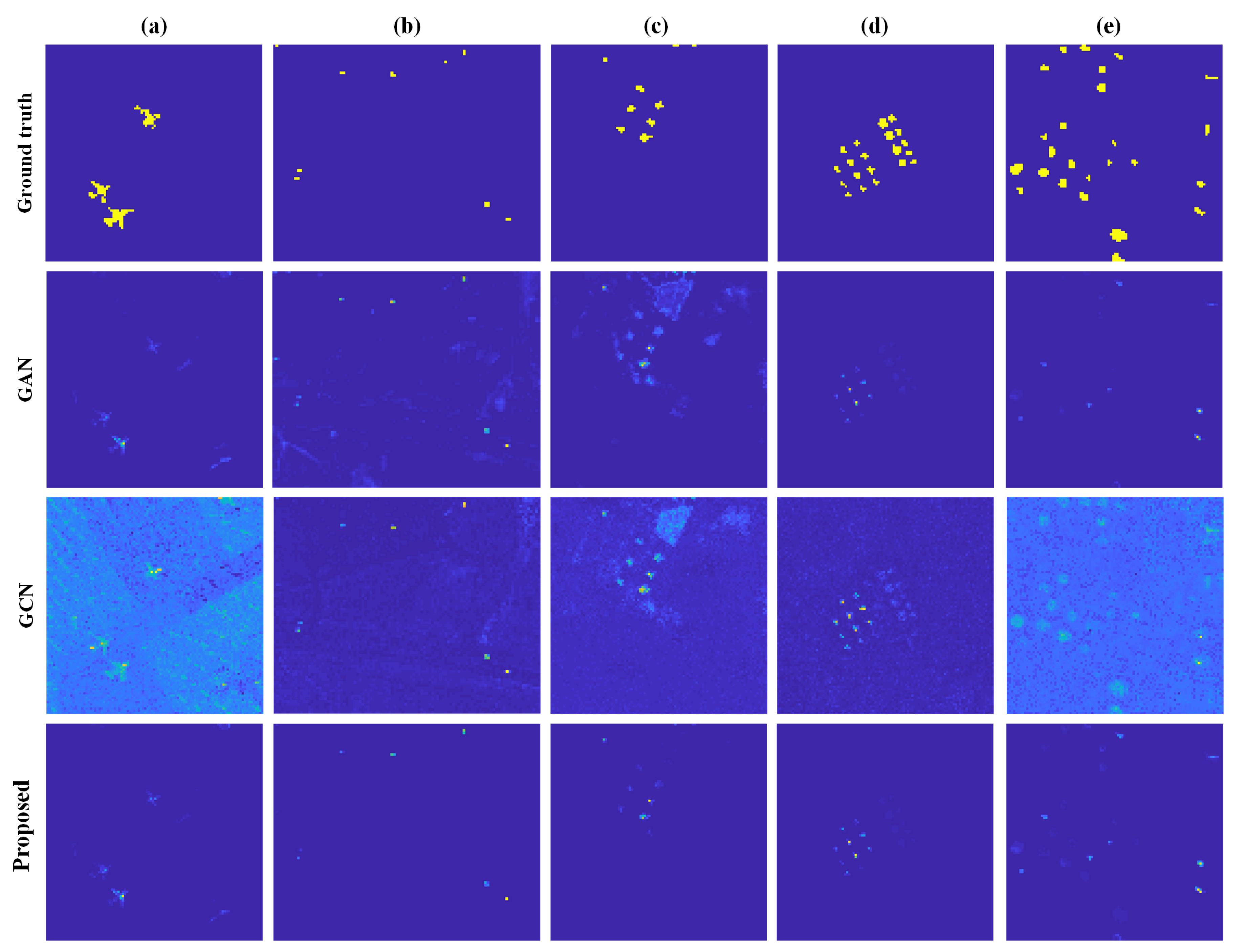

This section proposes a fusion network of graph and generative learning with frequency representation. The architecture of this method is shown in Figure 1. It consists of the GCN and GAN modules, which represent different types of features, with help from frequency learning to select the initial pseudo labels. The graph structure of the graph learning module defines topological interactions in small batches to minimize computational resource requirements and to refine the spatial features from the HSI. The idea is to obtain spatially and spectrally representative information from the latent and reconstructed domains. This information is based on components extracted in the generative learning module to leverage the discriminative capacity of GAN models. A fusion module then exploits the association between these two complementary modules.

The problem is formulated as follows. Let us denote the HSI by

, where

M,

N,

C are the three dimensions of the image. The input HSI is the collection of the background set

and target set

, i.e.

, and

,

. According to these definitions, the establishment of the overall model is

where

is the output of the GCN function

.

is the Laplacian matrix.

is the output of GAN.

and

are the input to the testing and the training phase, respectively.

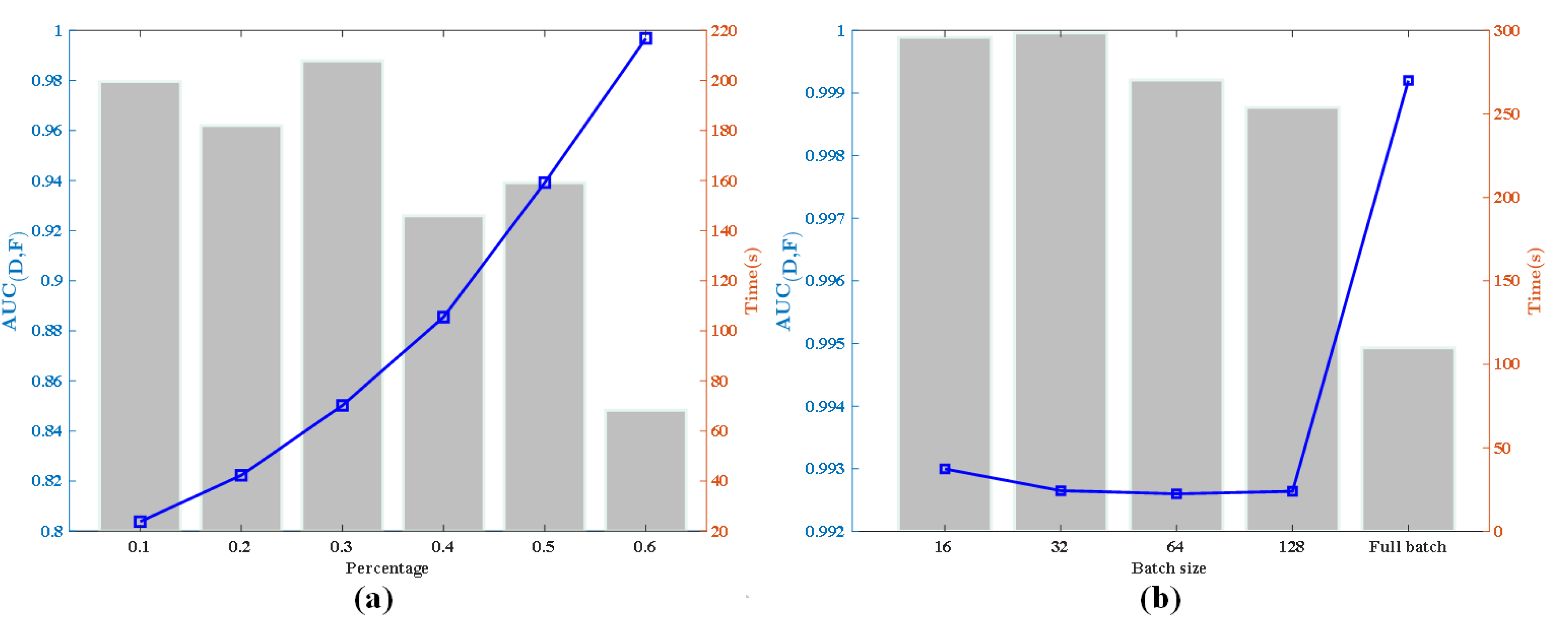

is the coefficient setting the percentage of the training samples

.

3.2. Data Preparation based on Frequency Learning

Prior to any detection step, the first step of the processing chain is a subdivision of the original HSI into frequency representation components. For images, frequency learning can be used to enhance features, reduce noise, and compress data by focusing on the significant frequency components. We analyze the spectral properties of images to extract relevant features. The filter output is the input to the upper and lower branches of the architecture in Figure 1. The idea is that the high-frequency component plays a significant role in depicting smaller targets. Some specific information in high-frequency components, such as object boundaries, can more effectively distinguish different objects. Unlike natural images, HSIs contain rich spectral and spatial information. The low-frequency component refers to the continuous, smooth part of the image in the spatial domain, which indicates the similarity of spectral features between adjacent pixels. Additionally, in the case of original labels, low-frequency components are more generalizable than high-frequency components, which may play an essential role in detecting particular smaller objects. Therefore, detecting objects in the frequency domain may be more suitable for small and sparse hyperspectral targets [37, 38].
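As a rough illustration of what low-frequency and high-frequency components mean for an HSI cube, the sketch below splits each band with a simple moving-average FIR filter. This is an assumption made purely for illustration: the filter used in this work is CEM-inspired, and the box kernel, its odd size, and the function names here are hypothetical stand-ins.

```python
import numpy as np


def box_lowpass(cube, size=3):
    """Apply a size x size moving-average FIR filter to every band of an (M, N, C) cube.

    `size` is assumed to be odd so that the window is centred on each pixel.
    """
    pad = size // 2
    padded = np.pad(cube, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    rows, cols = cube.shape[:2]
    low = np.zeros(cube.shape, dtype=float)
    # Sum the shifted copies of the image covered by the window.
    for dr in range(size):
        for dc in range(size):
            low += padded[dr:dr + rows, dc:dc + cols, :]
    return low / (size * size)


def split_frequencies(cube, size=3):
    """Return (low, high): the smooth part and the residual carrying edges and small objects."""
    low = box_lowpass(cube, size)
    high = cube - low
    return low, high
```

With this split, a one-pixel target on a flat background keeps most of its energy in the high-frequency residual, while a homogeneous region is represented almost entirely by the low-frequency part.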

Based on the above ideas, this work passes the original HSI through a designed linear FIR filter to obtain a pseudo-label map through a CEM-inspired algorithm. The filtered signal includes high-frequency components from image regions with significant gradients, which represent edges. It also includes low-frequency components from image regions where values change relatively slowly. Combining high- and low-frequency components, each representing distinct physical characteristics, as inputs to the network enhances the accuracy of target detection. The filter output serves as input to both the upper and lower branches of the architecture in Figure 1. For the GCN module, graph structure convolution is analogous to a Fourier transform that defines the original frequency signal. The combination of frequency components obtained by filtering is subjected to a Fourier transform in the frequency domain to realize spectral-domain graph convolution. The input of the GCN includes pixel-level samples and an adjacency matrix that models the relationship between samples.

Specifically, the filter’s design is based on an hCEM-inspired function. The traditional CEM algorithm designs a filter such that the energy of the target signal remains constant after passing through it, while the total output energy is minimized under this constraint. However, a single layer of this filter may not achieve the desired detection effect. Therefore, the hCEM algorithm [18] connects multiple layers of CEM detectors in series and uses a nonlinear function to suppress the background spectrum. The output of each CEM layer is used to process the spectra that are input to the subsequent layer, progressively enhancing the detector’s effectiveness. The filter designed in this way helps in isolating the target. With a similar idea, frequency filtering in this work is

where

represents the filter function. The latent variable, denoted as

, is obtained by the following steps. First, the HSI and the prior target spectral signature are fed into the initial network. Then, white Gaussian noise is added to each spectral vector, and the latent variable

is computed. Calculation stops once the output converges to a constant:

, where the initial value of

is the spectral vector of the

, and

represents the number of spectral vectors.

The filter coefficient

is designed to suppress the background spectrum as

where

d represents a prior spectral vector.

The output of the

m-th layer is

and the stopping condition is defined as

In each iteration, the previous output is utilized in the training phase. Iterations conclude upon meeting the stopping condition, yielding final output .
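The layered filtering with a stopping condition can be sketched as follows. This is a minimal sketch of a generic hCEM-style loop, not the exact filter of this work: the closed-form CEM weights, the suppression function 1 - exp(-lam * y), the energy-based stopping rule, and all parameter values (`lam`, `tol`, `max_layers`, `ridge`) are assumptions, and the added white Gaussian noise and latent variable described above are omitted.

```python
import numpy as np


def cem_scores(pixels, target, ridge=1e-6):
    """Single CEM layer: w = R^{-1} d / (d^T R^{-1} d), output y = X w."""
    corr = pixels.T @ pixels / pixels.shape[0]
    corr_inv = np.linalg.inv(corr + ridge * np.eye(pixels.shape[1]))
    w = corr_inv @ target / (target @ corr_inv @ target)
    return pixels @ w


def hierarchical_cem(pixels, target, lam=200.0, tol=1e-6, max_layers=50):
    """Cascade CEM layers, shrinking spectra that the previous layer scored low."""
    x = pixels.astype(float).copy()
    prev_energy = None
    for layer in range(1, max_layers + 1):
        y = cem_scores(x, target)
        energy = float(np.mean(y ** 2))
        # Assumed stopping rule: the mean output energy no longer changes.
        if prev_energy is not None and abs(prev_energy - energy) < tol:
            break
        prev_energy = energy
        # Nonlinear suppression: gain near 1 for target-like pixels, 0 for y <= 0.
        gain = 1.0 - np.exp(-lam * np.maximum(y, 0.0))
        x = x * gain[:, None]
    return np.clip(y, 0.0, 1.0), layer
```

Because each layer keeps the unit-gain constraint on the prior spectrum `target`, pixels equal to it keep a score of 1 while background spectra are progressively scaled towards zero.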

Values in

sort the pixels based on filtering results: values closer to 0 indicate a higher probability of background, while values closer to 1 suggest a higher likelihood of being a target. Relying solely on low frequencies limits discriminative ability in frequency learning [39]. This is because node representations become similar when only low-frequency signals are aggregated from neighbors, regardless of whether the nodes belong to the same class. Therefore, for the GAN, the pseudo-target and pseudo-background vectors are input into the network simultaneously, so that the network learns both the high-frequency and low-frequency parts. The proposed network integrates benefits from both low-frequency and high-frequency signal representations. The output from this frequency learning module serves as input for the training and testing phases; the detailed process and network structure are described as follows.
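The coarse selection of pseudo-background and pseudo-target samples from the filter scores might look like the sketch below. The two thresholds `low` and `high` and the function name are hypothetical; the text only states that scores near 0 indicate background and scores near 1 indicate targets.

```python
import numpy as np


def select_pseudo_samples(scores, low=0.1, high=0.9):
    """Split pixel indices into pseudo-background and pseudo-target sets.

    `low` and `high` are illustrative thresholds on scores assumed to lie in [0, 1];
    pixels with intermediate scores are left unlabeled.
    """
    scores = np.asarray(scores, dtype=float).ravel()
    background_idx = np.flatnonzero(scores <= low)
    target_idx = np.flatnonzero(scores >= high)
    return background_idx, target_idx
```

Leaving the ambiguous middle range unlabeled is one simple way to keep the pseudo labels clean, at the cost of fewer training samples.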

3.3. The Graph Learning Module

This section introduces the graph learning module, which takes the output of the frequency learning module as input in both the training and testing phases.

Owing to its ability to represent the relations between samples, to handle graph-structured data effectively, and to work well with HSIs, a GCN is a natural fit as the basic structure [34]. The traditional discrete convolution of a CNN cannot maintain translation invariance on data with a non-Euclidean structure. Because adjacency varies across the topology graph, convolution operations cannot use kernels of uniform size over each vertex’s adjacent vertices. A CNN therefore cannot handle non-Euclidean data directly, although spatial features are still expected to be extracted effectively on such a topological structure [40]. In the proposed method, the GCN employs an undirected graph to represent the relations between spectral signatures. When building the model, the network is expected to characterize the non-structural feature information between different spectral samples effectively and to complement the representation ability of the traditional spatial-spectral joint features obtained by CNNs. This motivates building the GCN so that multiple updates are achieved in each epoch. In traditional GCNs, pixel-level samples are fed into the network through an adjacency matrix that models the relationship among samples and that must be computed before training begins. However, the huge computational cost caused by the high dimensionality of HSIs limits graph learning performance on these data. This is why this work adopts local-optimum training in a mini-batch pattern (similar to CNNs). The mini-batch strategy decreases the high computational cost of constructing an adjacency matrix on high-dimensional data sets and improves binary detection training and model convergence speed. The theoretical applicability of mini-batch training strategies to GCNs is shown in [41].

Figure 1 illustrates the GCN structure within the proposed target detection framework. Here, $G = (V, E)$ denotes an undirected graph with vertex set $V$ and edge set $E$. HS pixels define the vertex set, with the similarities between them establishing the edge set. Adjacency matrix construction and spectral domain convolution proceed as follows.

The adjacency matrix $A$ defines the relationship between vertexes. Each element in $A$ can be generally computed by $A_{i,j} = \exp\left(-\|x_i - x_j\|^2 / \sigma^2\right)$, where $\sigma$ is a parameter to control the width of the Radial Basis Function (RBF) included in the formula. The vectors $x_i$ and $x_j$ denote the spectral signatures associated with the vertexes $v_i$ and $v_j$. Given $A$, the corresponding graph Laplacian matrix $L$ may be computed as $L = D - A$, in which $D$ is a diagonal matrix representing the degrees of $A$, i.e., $D_{i,i} = \sum_j A_{i,j}$.
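The construction of the adjacency and Laplacian matrices can be sketched in NumPy as follows, assuming the common Gaussian RBF similarity $\exp(-\|x_i - x_j\|^2/\sigma^2)$ and the unnormalized Laplacian (degree matrix minus adjacency). The function names and the default width are illustrative choices.

```python
import numpy as np


def rbf_adjacency(spectra, sigma=1.0):
    """Pairwise RBF similarities between the rows of `spectra` (N x C)."""
    sq_norms = np.sum(spectra ** 2, axis=1)
    sq_dist = sq_norms[:, None] + sq_norms[None, :] - 2.0 * spectra @ spectra.T
    sq_dist = np.maximum(sq_dist, 0.0)   # guard against tiny negative round-off
    return np.exp(-sq_dist / sigma ** 2)


def graph_laplacian(adj):
    """Unnormalized Laplacian: diagonal degree matrix minus adjacency."""
    degree = np.diag(adj.sum(axis=1))
    return degree - adj
```

The adjacency matrix holds one entry per pair of pixels, so its size grows quadratically with the number of pixels; this is the cost that motivates building the graph on small batches instead of the full image.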

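Describing graph convolution involves initially extracting a set of basis functions by computing the eigenvectors of $L$. Then, the spectral decomposition of $L$ is performed according to

$$L = U \Lambda U^{-1},$$

where the columns of $U$ are the eigenvectors of $L$ and $\Lambda$ is the diagonal matrix of its eigenvalues. The convolution between $f$ and $g$ on a graph with coefficient $\theta$ can thus be expressed as

$$f * g_{\theta} \approx \theta\left(I + D^{-1/2} A D^{-1/2}\right) f.$$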

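Accordingly, the propagation rule for GCN can be represented as

$$H^{(l+1)} = h\left(\tilde{D}^{-1/2}\tilde{A}\tilde{D}^{-1/2}H^{(l)}W^{(l)} + b^{(l)}\right),$$

where $\tilde{A} = A + I$ and $\tilde{D}_{i,i} = \sum_j \tilde{A}_{i,j}$ are the re-normalization terms of $A$ and $D$, respectively, used to enhance the stability of the network training, and $W^{(l)}$ and $b^{(l)}$ are the weights and biases of the layer. Additionally, $H^{(l)}$ represents the $l$-th layer’s output, with $h(\cdot)$ serving as the activation function in the final layer (e.g., ReLU).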

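The unbiased estimator of the nodes in the full-batch $l$-th GCN layer is denoted as

$$\tilde{H}_s^{(l+1)} = h\left(\tilde{D}_s^{-1/2}\tilde{A}_s\tilde{D}_s^{-1/2}\tilde{H}_s^{(l)}W^{(l)} + b_s^{(l)}\right),$$

where $s$ is not only the $s$-th sub-graph but also the $s$-th batch in the network training.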
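The mini-batch idea can be sketched as one forward pass in which each batch of pixels forms its own sub-graph. This is a simplified sketch under several assumptions: random batching, an RBF sub-graph per batch, a single layer with ReLU, and fixed rather than trained weights; all function names are hypothetical.

```python
import numpy as np


def batch_adjacency(spectra, sigma=1.0):
    """RBF similarity graph built only from the pixels of one batch."""
    diff = spectra[:, None, :] - spectra[None, :, :]
    return np.exp(-np.sum(diff ** 2, axis=2) / sigma ** 2)


def normalize(adj):
    """Re-normalize: D~^{-1/2} (A + I) D~^{-1/2}."""
    adj_tilde = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(adj_tilde.sum(axis=1))
    return adj_tilde * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def minibatch_gcn_forward(spectra, weight, bias, batch_size, rng):
    """Apply one GCN layer batch by batch; each batch is its own sub-graph."""
    order = rng.permutation(spectra.shape[0])
    out = np.zeros((spectra.shape[0], weight.shape[1]))
    for start in range(0, len(order), batch_size):
        idx = order[start:start + batch_size]
        adj_norm = normalize(batch_adjacency(spectra[idx]))
        out[idx] = np.maximum(adj_norm @ spectra[idx] @ weight + bias, 0.0)
    return out
```

Only a batch-sized adjacency matrix is held in memory at any time, and setting `batch_size` to the number of pixels recovers the full-batch layer.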

Dropout is applied to the first GCN layer to prevent overfitting, thereby avoiding all pixels being misdetected as background during training.

3.4. Generative Learning Module

The GCN focuses on global feature smoothing by aggregating intra-class information and making intra-class features similar. As a complement, a generative learning module is introduced into the model to enhance the discrepancy between the two classes. During the training phase, the generator is pre-trained on pseudo samples. It can therefore be regarded as a function that transforms noise vector samples from the d-dimensional latent space into a generated image in pixel space.

The generator

G minimizes

and generates output samples of the encoder

to match the distribution of

, deceiving discriminator

D. The network’s output can be described as follows:

where

is the extracted feature of the encoder.

are the weights and biases of the encoder and decoder.

When training converges, the discriminator fails to distinguish between generated and target data. serves as the testing and detection network in the generative module, obtaining maps from trained parameters.

3.5. Fusion and Aggregation Module

Extracting statistical features from data often overlooks the potential topological structure information between different land cover categories. GAN-based methods typically model only the local spatial relationships of samples, which greatly limits their ability to capture the non-local topological relationships that better represent the underlying data structure of HSIs. To alleviate this issue, the deep representative model in this work fuses different modules and enhances the feature discrimination ability by combining the advantages of graph and generative learning. Specifically, the proposed GCNs can be combined with standard GAN models as follows:

where

is the detection map of the whole network.

and

are the output of generative and graph learning modules, respectively.

represents the nonlinear aggregation module. The feature mapping function

transforms extracted features through GCN into detection maps, effectively suppressing background.

The outputs from the graph convolution module and the generative training module can be considered as features. Since the generative model is able to extract spatial-spectral features, and graph learning represents topological relations between samples, combining both models should provide better results by exploiting feature diversity. The nonlinear processing after the two modules provides a more robust nonlinear representation of the features, aggregates the small targets that are hard to detect, and suppresses complex background that is similar to the targets.