Submitted: 30 July 2024

Posted: 31 July 2024


Abstract

Keywords:

1. Introduction

2. Current State-of-the-Art

2.1. Definitions and Characterizations of Avatars in Literature

2.2. Object Detection

2.3. Summary

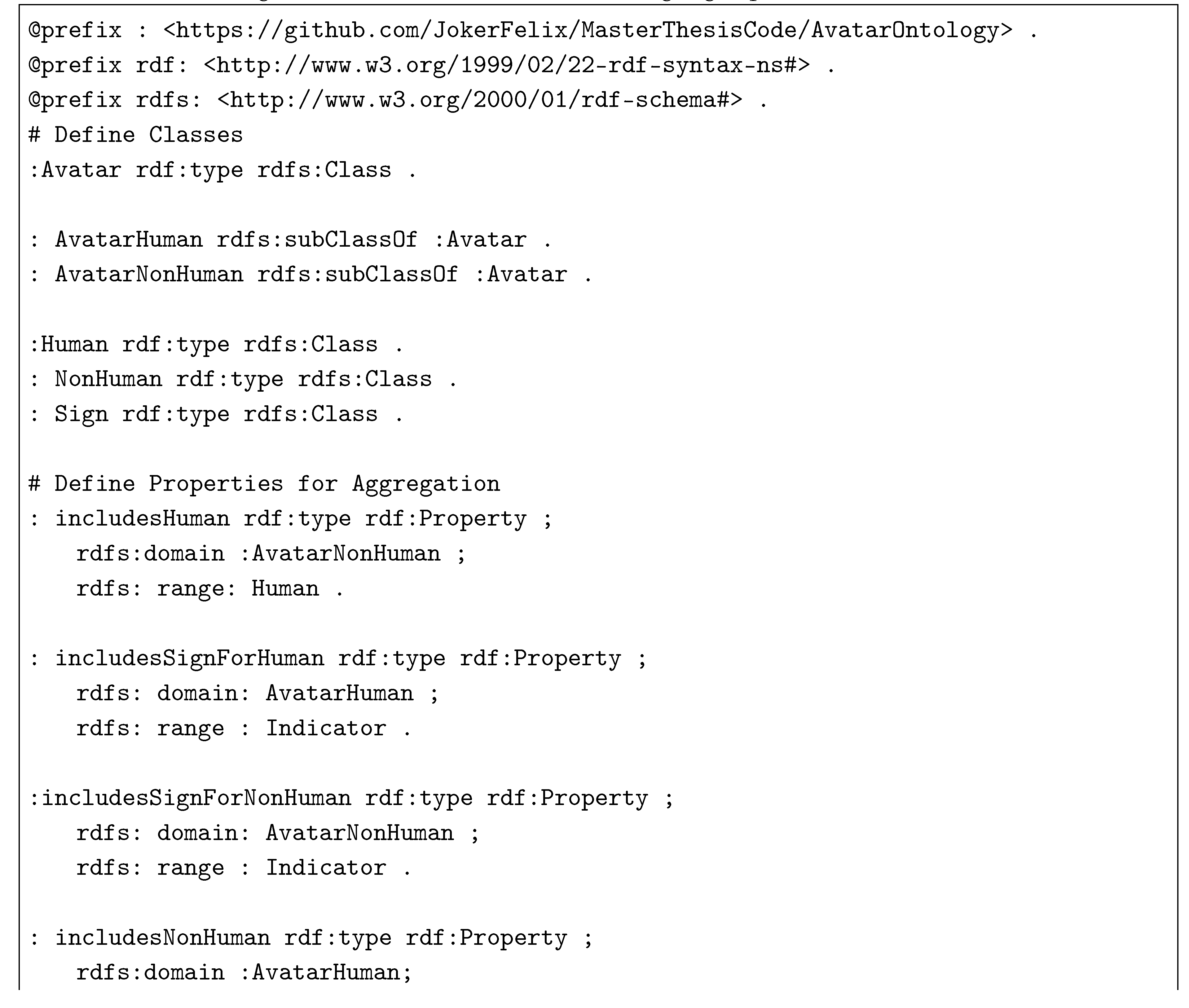

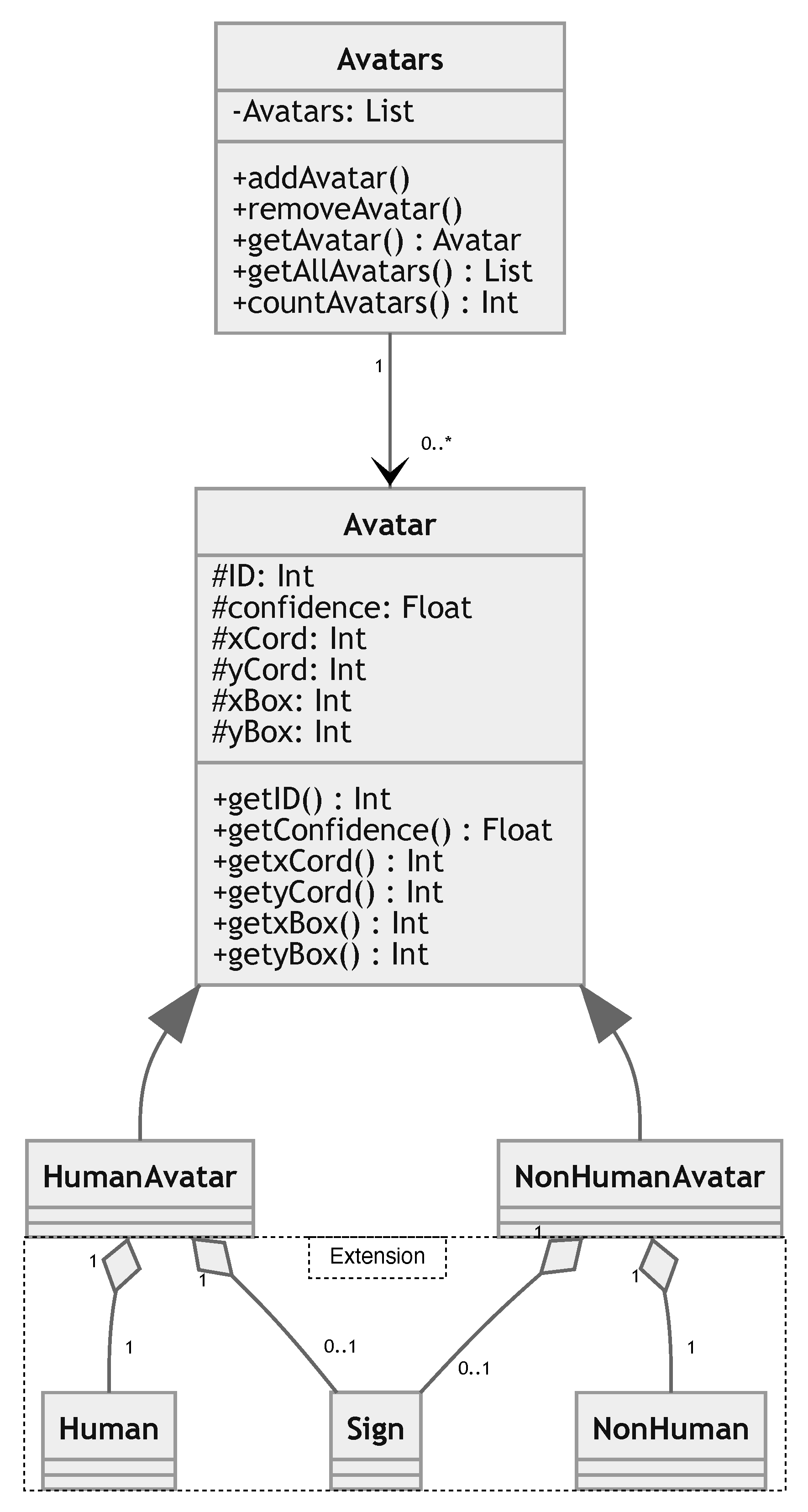

3. Modeling

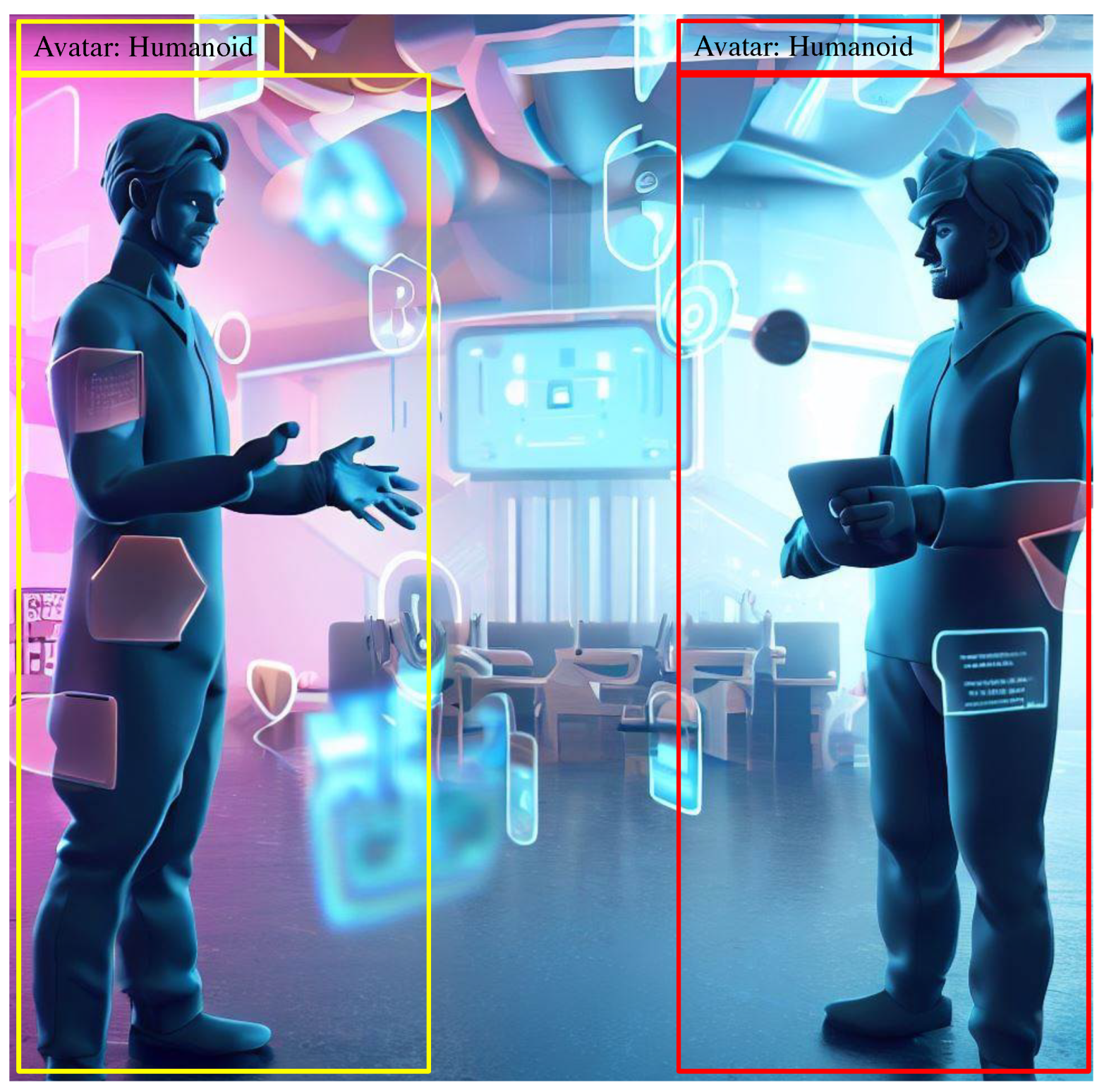

3.1. Avatar Classification

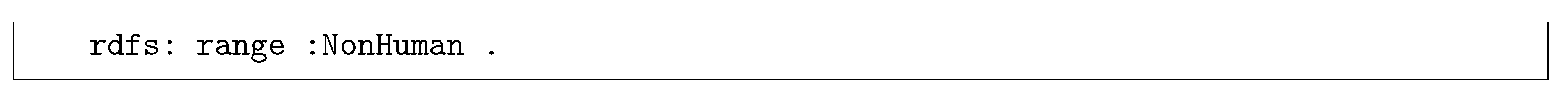

3.2. Avatar Detector Model

4. Implementation

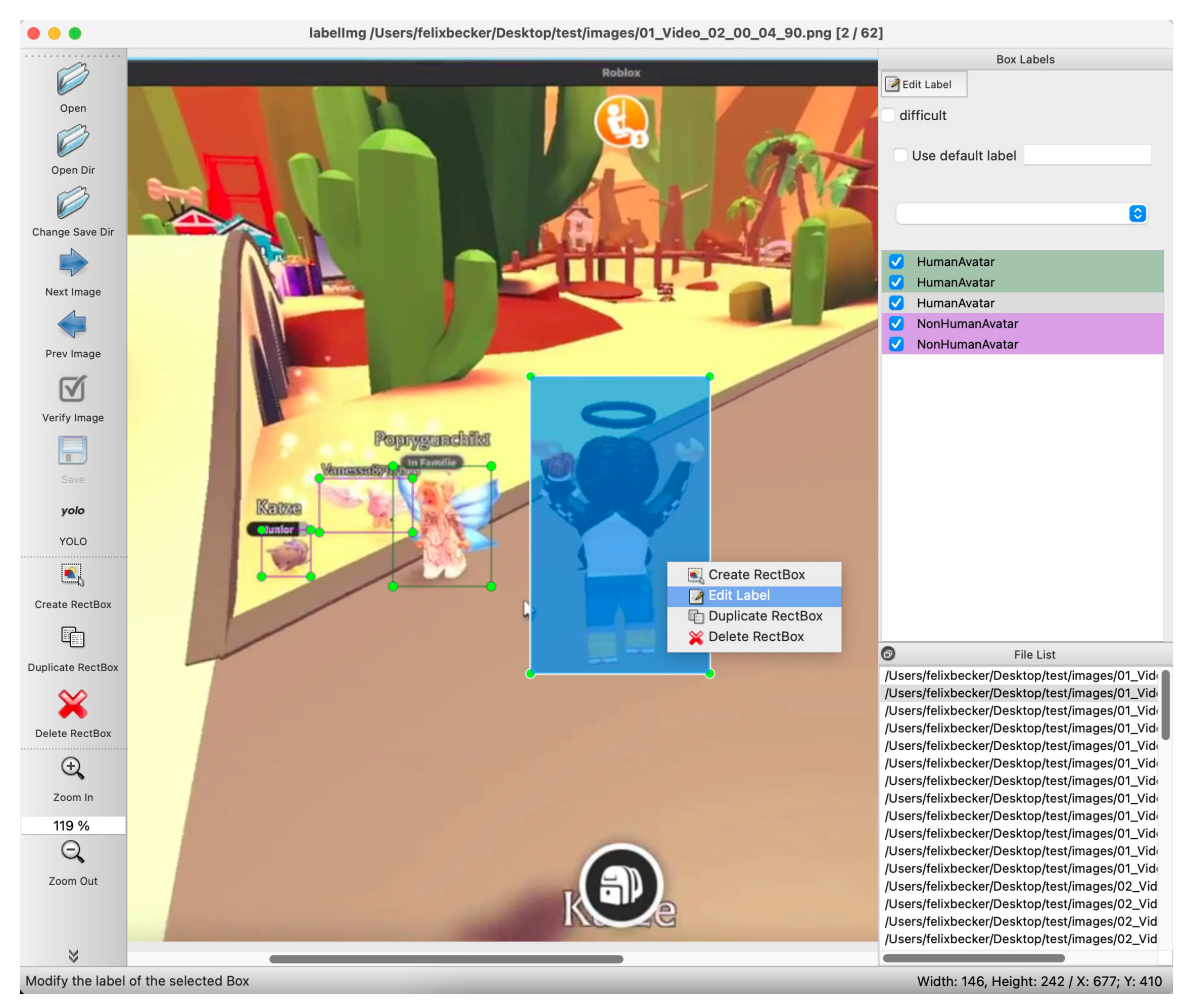

4.1. ADET Dataset

4.2. Avatar Detector

5. Evaluation

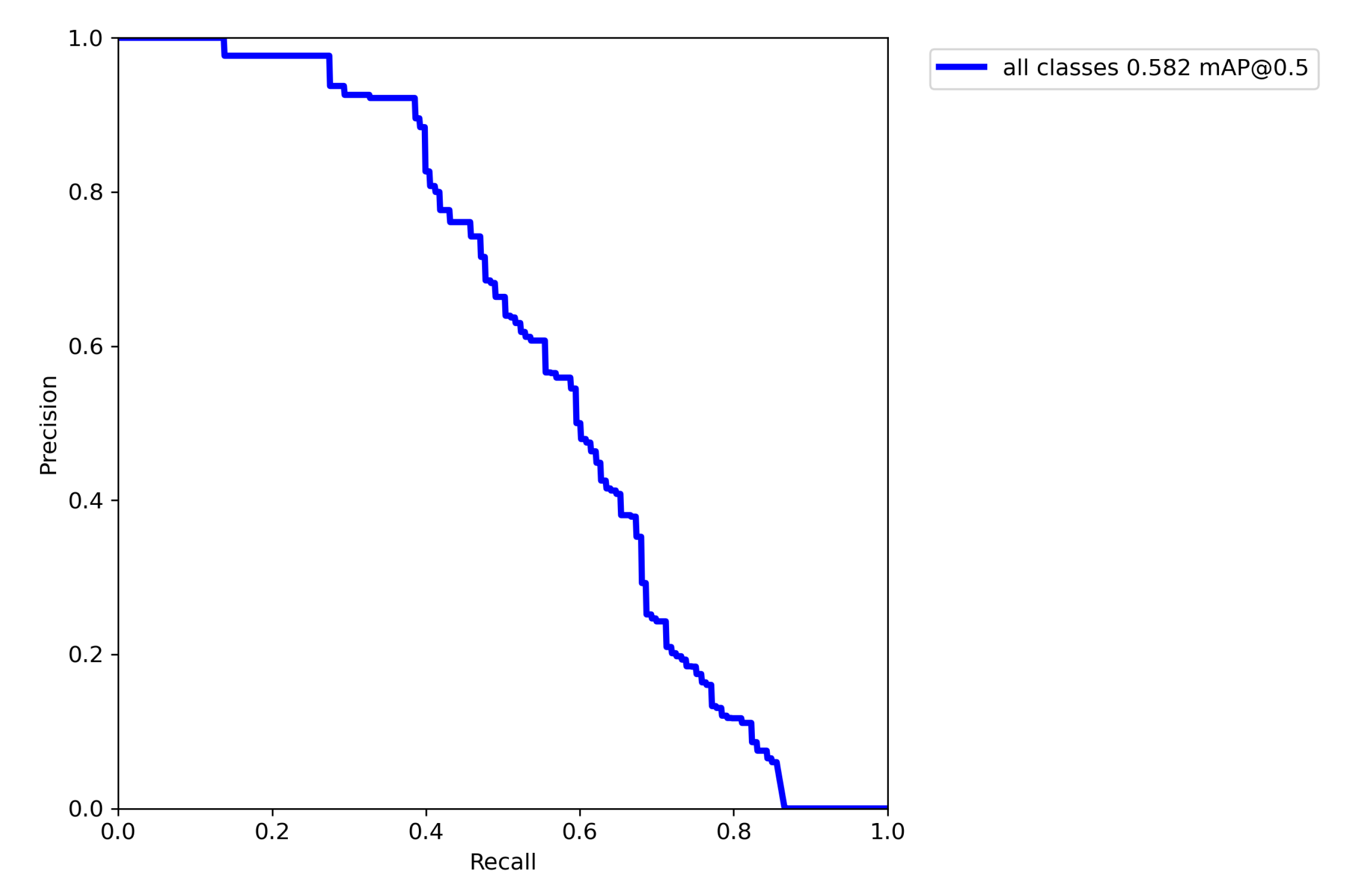

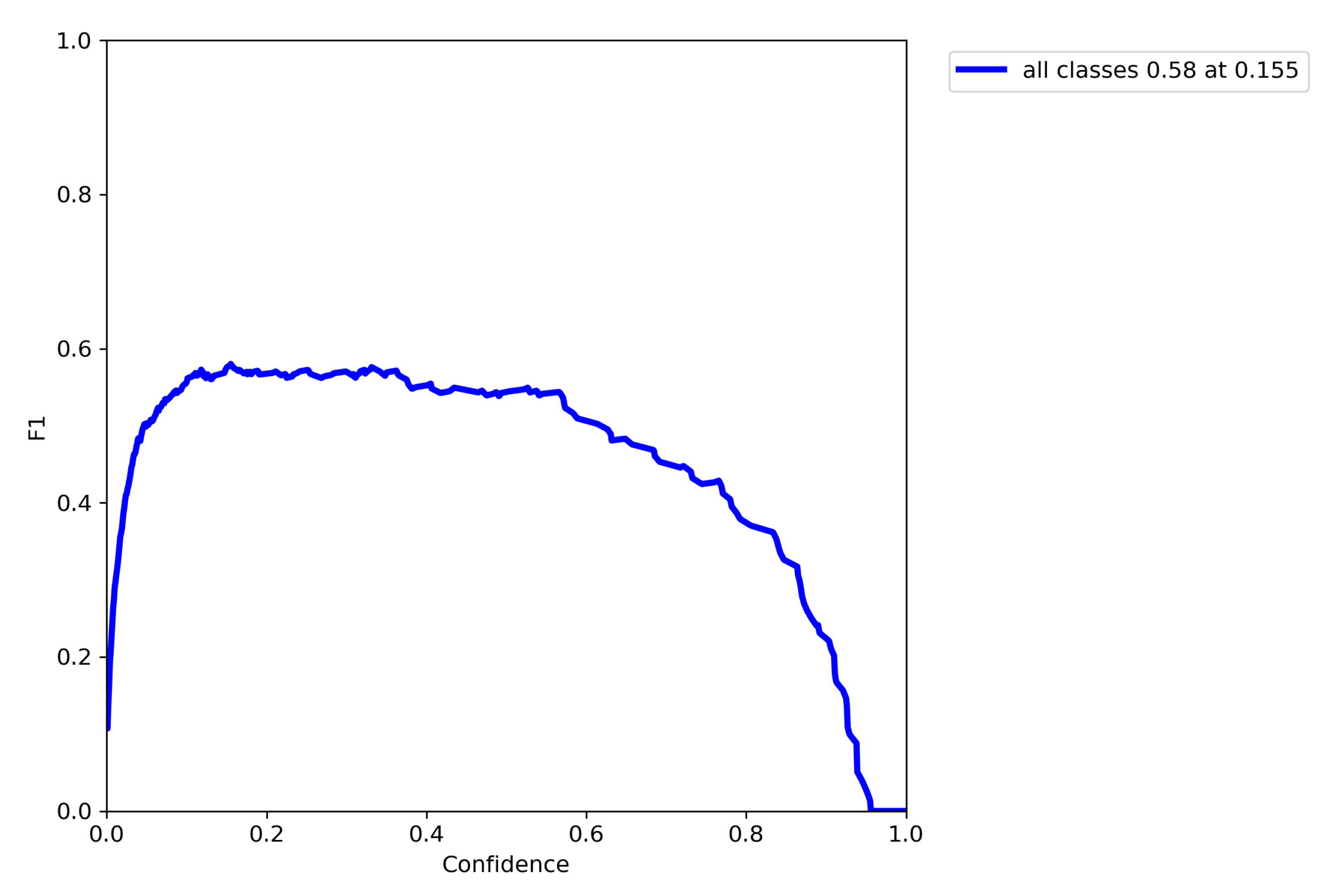

5.1. Evaluation of the Avatar Detection

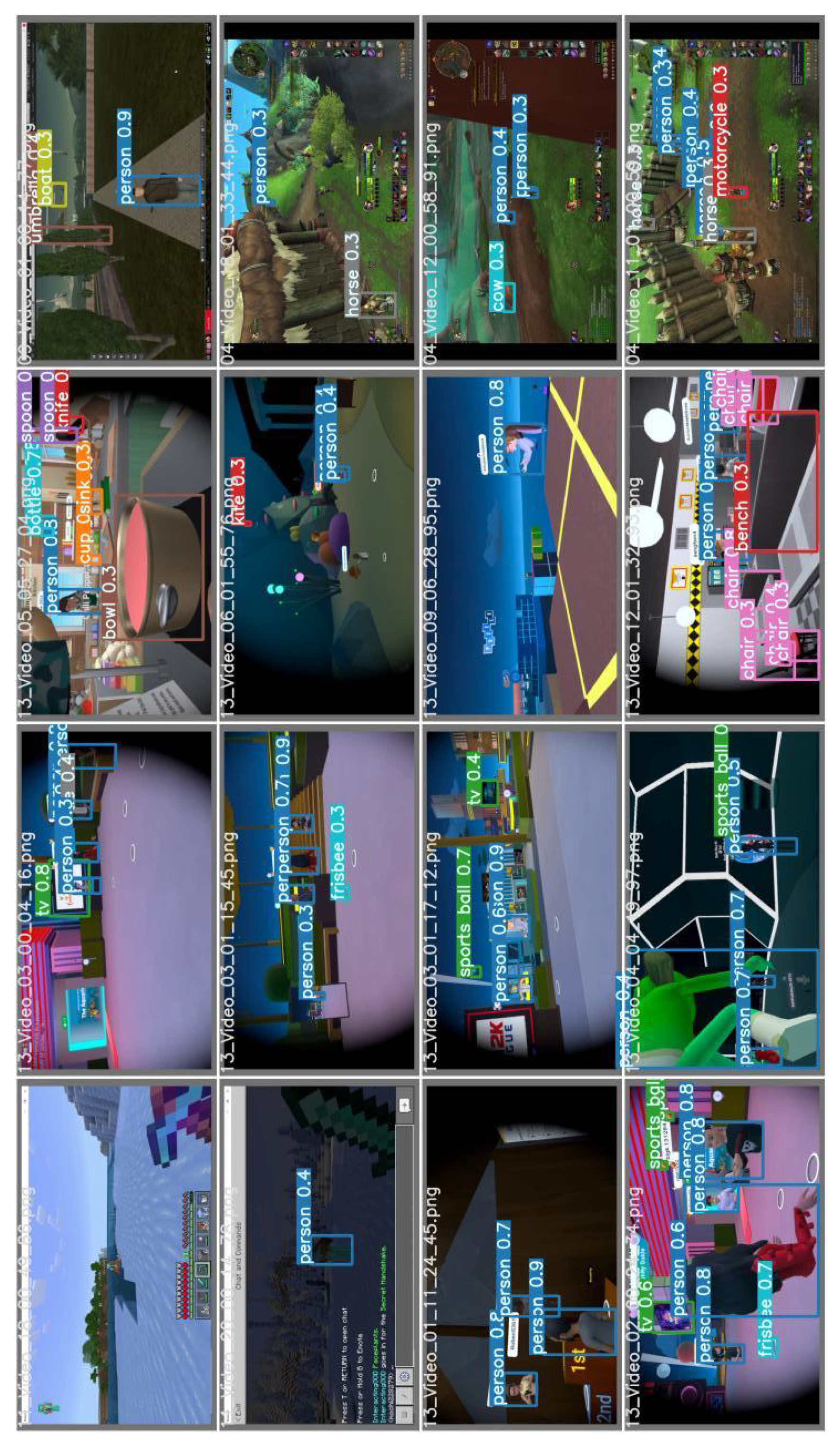
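The "@0.5" in mAP@0.5, the headline measure in the tables of this section, conventionally denotes an intersection-over-union (IoU) threshold of 0.5: a predicted box counts as a true positive only if it has the correct class and overlaps a ground-truth box with IoU of at least 0.5. The following is a minimal sketch of that matching criterion, assuming boxes given as `(x1, y1, x2, y2)` pixel corners; the helper names `iou` and `is_true_positive` are hypothetical and are not taken from the authors' code or from YOLOv7. A full mAP computation additionally matches predictions greedily by confidence and integrates each class's precision-recall curve, which this sketch omits.

```python
from typing import Tuple

# Axis-aligned box as (x1, y1, x2, y2); this layout is an assumption for the sketch.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    # Corners of the overlapping rectangle (may be empty).
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(pred: Box, gt: Box, pred_cls: str, gt_cls: str,
                     thr: float = 0.5) -> bool:
    """Hypothetical helper: class must match and IoU must reach the threshold."""
    return pred_cls == gt_cls and iou(pred, gt) >= thr


# Two 10x10 boxes shifted by 2 px overlap in an 8x10 region: 80 / 120.
print(round(iou((0, 0, 10, 10), (2, 0, 12, 10)), 3))  # 0.667
```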

5.1.1. Baseline

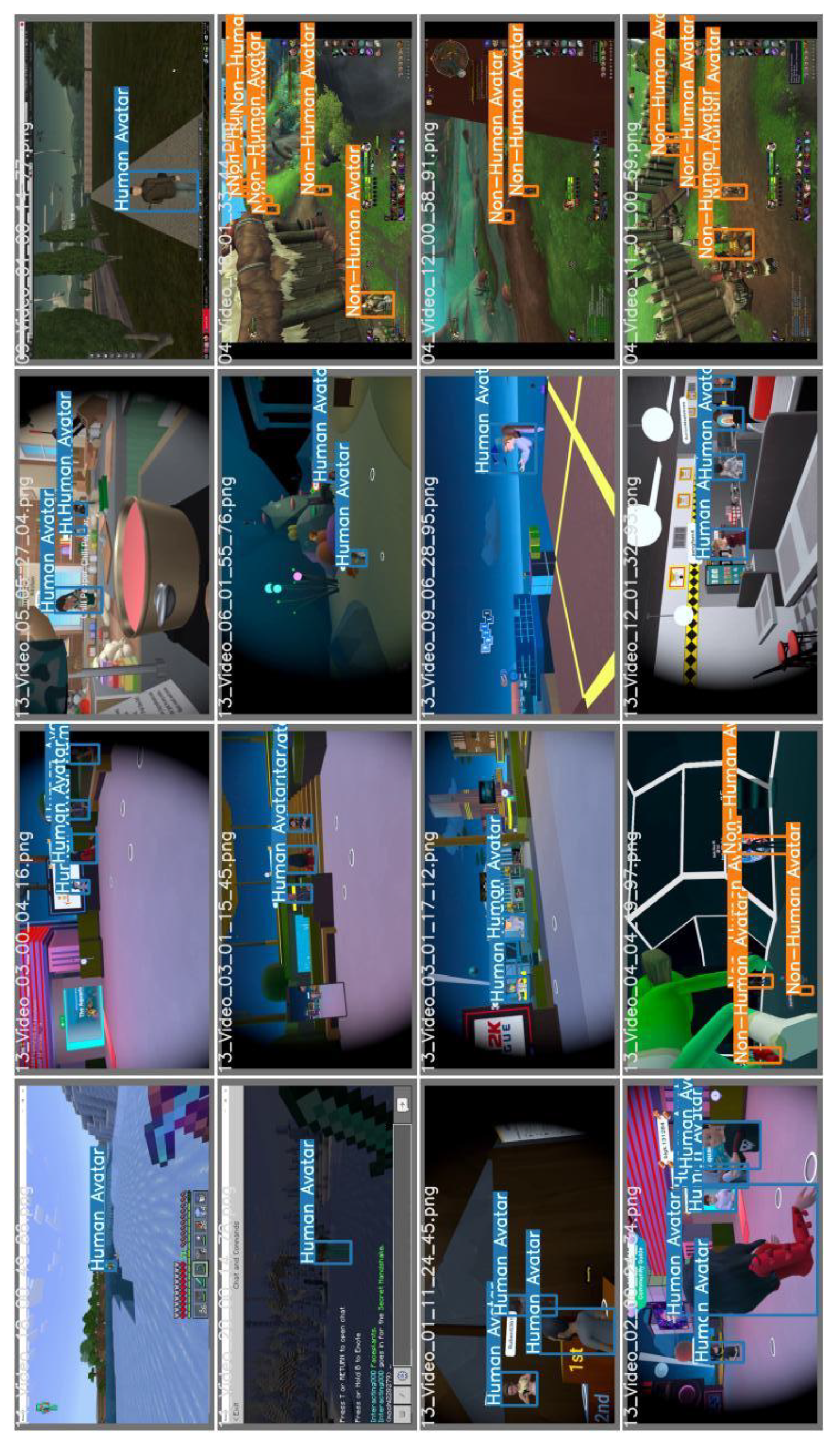

5.1.2. Avatar Detector

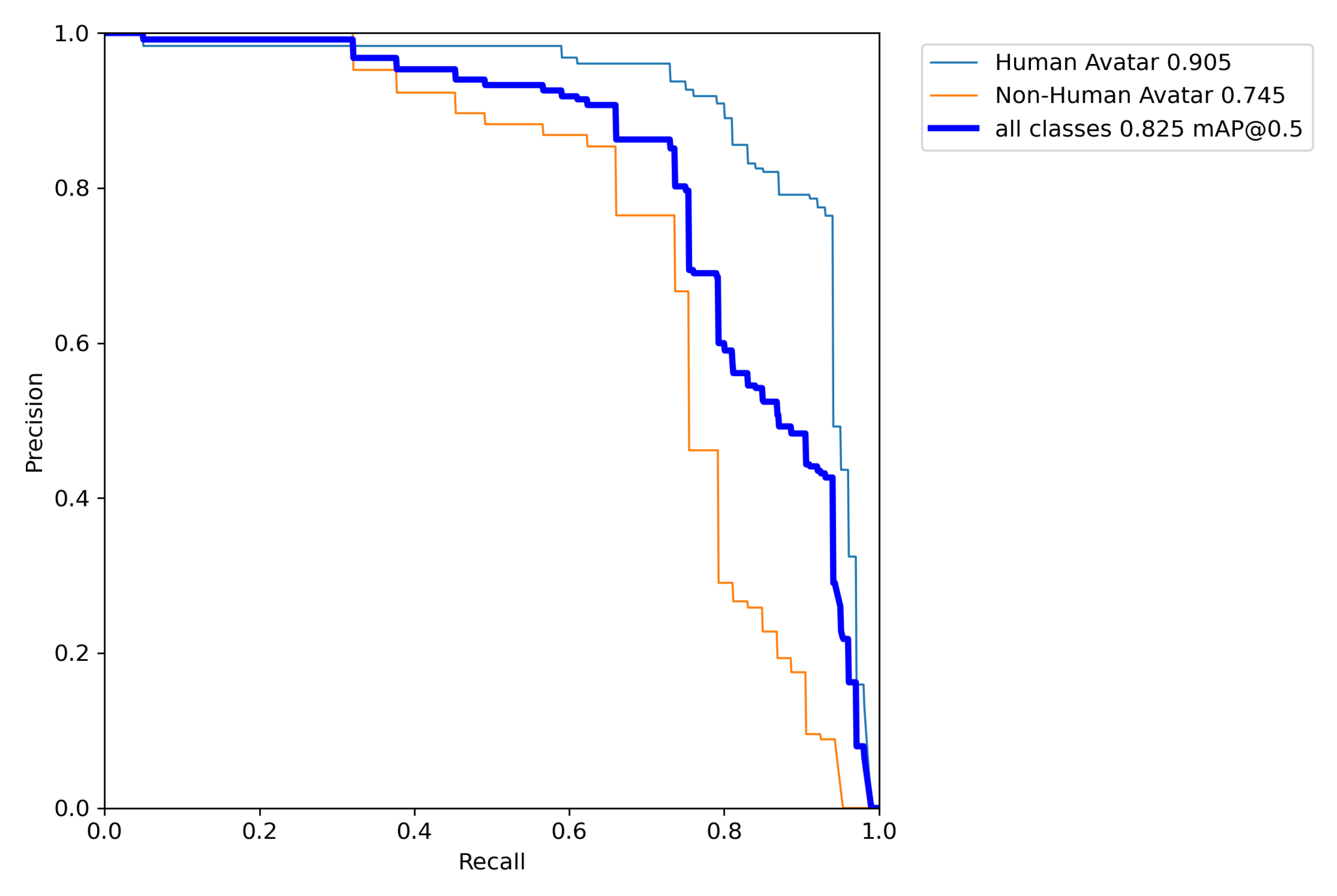

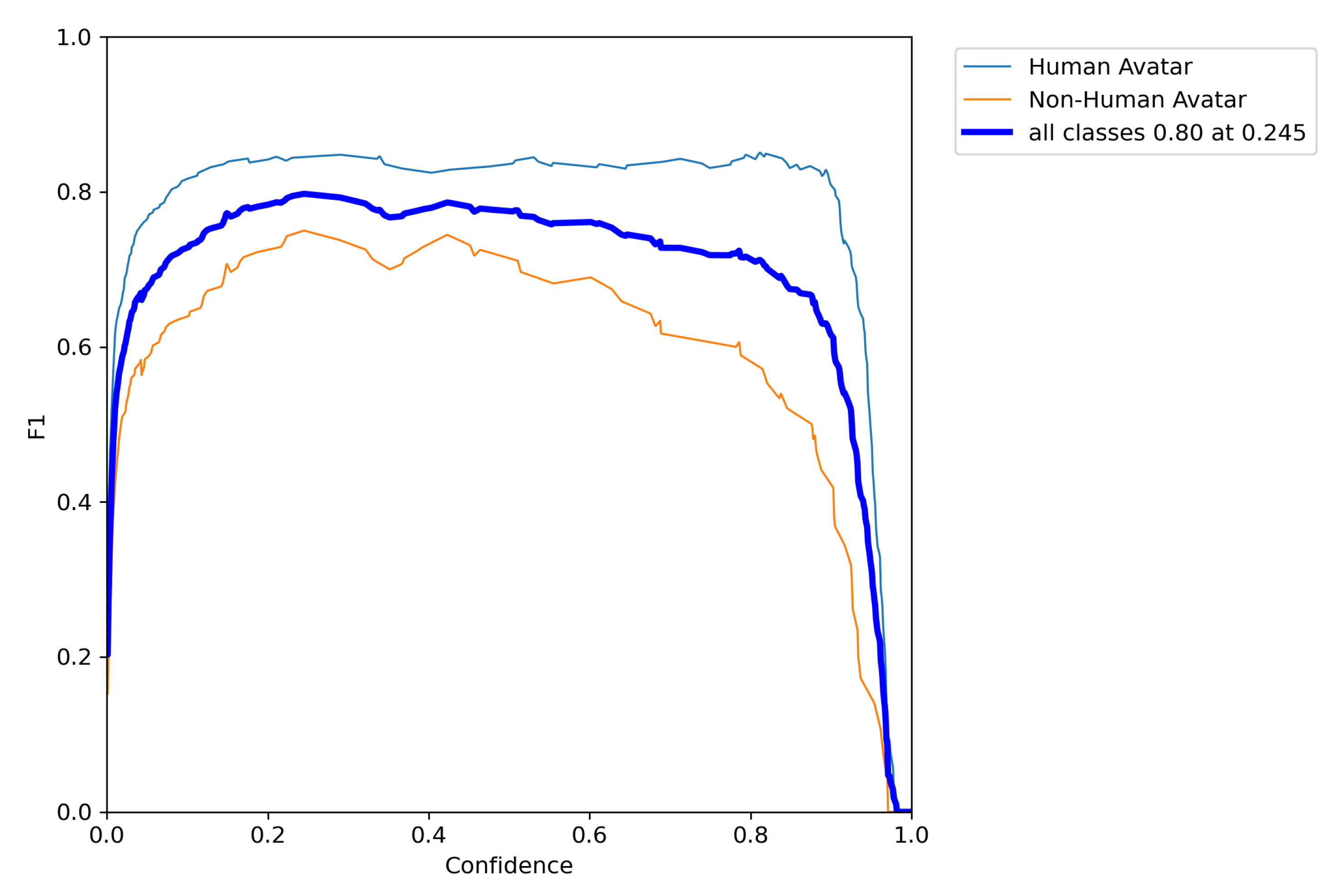

| Measure | Baseline | Avatar Detector | Abs. Delta | Rel. Delta |
|---|---|---|---|---|
| mAP@0.5 | 0.582 | 0.825 | +0.245 | +0.422 |
| AP, class HumanAvatar | – | 0.905 | | |
| AP, class NonHumanAvatar | – | 0.745 | | |
| F1@Optimum | 0.580 | 0.800 | +0.220 | +0.379 |
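The table's aggregate figures follow from two simple relations: the detector's mAP@0.5 is the mean of its two per-class APs, and the relative delta is the absolute delta divided by the baseline value. The sketch below, with hypothetical function names, checks both against the reported numbers; applied to the F1@Optimum row, it reproduces +0.220 and +0.379.

```python
from typing import Dict, Tuple


def mean_ap(per_class_ap: Dict[str, float]) -> float:
    """mAP as the unweighted mean of per-class average precision."""
    return sum(per_class_ap.values()) / len(per_class_ap)


def deltas(baseline: float, model: float) -> Tuple[float, float]:
    """Absolute delta and delta relative to the baseline value."""
    abs_delta = model - baseline
    return abs_delta, abs_delta / baseline


# Per-class AP of the avatar detector, taken from the table above.
adet_ap = {"HumanAvatar": 0.905, "NonHumanAvatar": 0.745}
print(round(mean_ap(adet_ap), 3))  # 0.825

# F1@Optimum row: baseline 0.580, avatar detector 0.800.
abs_d, rel_d = deltas(baseline=0.580, model=0.800)
print(round(abs_d, 3), round(rel_d, 3))  # 0.22 0.379
```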

| Class | AP ↑ | mAP@0.5 ↑ | F1@Optimum ↑ |
|---|---|---|---|
| HumanAvatar | 0.905 | | |
| NonHumanAvatar | 0.745 | | |
| Both classes | | 0.825 | 0.800 |
| Person (baseline) | | 0.582 | 0.580 |
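F1@Optimum is read here, as an assumption, in the usual sense of the highest F1 score (the harmonic mean of precision and recall) over all confidence thresholds of the detector. The sketch below shows that selection step; the function names and the precision/recall values are hypothetical and purely illustrative, not results from this evaluation.

```python
from typing import Sequence, Tuple


def f1(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) > 0 else 0.0


def f1_at_optimum(thresholds: Sequence[float],
                  precision: Sequence[float],
                  recall: Sequence[float]) -> Tuple[float, float]:
    """Return (best F1, confidence threshold at which it is reached)."""
    best_f1, best_t = 0.0, thresholds[0]
    for t, p, r in zip(thresholds, precision, recall):
        score = f1(p, r)
        if score > best_f1:
            best_f1, best_t = score, t
    return best_f1, best_t


# Hypothetical precision/recall at three confidence thresholds
# (illustrative only; not taken from the paper).
thr = [0.1, 0.4, 0.7]
prec = [0.60, 0.80, 0.90]
rec = [0.90, 0.80, 0.50]
best, t = f1_at_optimum(thr, prec, rec)
print(round(best, 3), t)  # 0.8 0.4
```

Raising the threshold trades recall for precision, so F1 typically peaks at an intermediate confidence value, which is why a single "optimum" operating point is reported.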

5.2. Ablation Study: Evaluating the Avatar Indicator

6. Discussion and Future Work


| Model | Train Set | AP HumanAvatar ↑ | AP NonHumanAvatar ↑ | mAP ↑ |
|---|---|---|---|---|
| ADET | With indicator | 0.905 | 0.745 | 0.825 |
| ADET | Without indicator | 0.883 | 0.586 | 0.735 |
| YOLO | COCO | 0.582* | – | |

*Class person.
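The effect of the avatar indicator can be read off the ablation table as the per-measure difference between the two ADET training variants. The short sketch below (hypothetical names, values copied from the table) makes that arithmetic explicit and shows that most of the gain falls on the NonHumanAvatar class.

```python
from typing import Dict, Tuple

# (with indicator, without indicator), copied from the ablation table.
ABLATION: Dict[str, Tuple[float, float]] = {
    "AP HumanAvatar": (0.905, 0.883),
    "AP NonHumanAvatar": (0.745, 0.586),
    "mAP": (0.825, 0.735),
}


def indicator_gain(rows: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """Improvement of each measure when training with the indicator."""
    return {name: round(with_ind - without_ind, 3)
            for name, (with_ind, without_ind) in rows.items()}


print(indicator_gain(ABLATION))
# {'AP HumanAvatar': 0.022, 'AP NonHumanAvatar': 0.159, 'mAP': 0.09}
```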

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).