Submitted: 30 July 2024

Posted: 01 August 2024


Abstract

Keywords:

1. Introduction

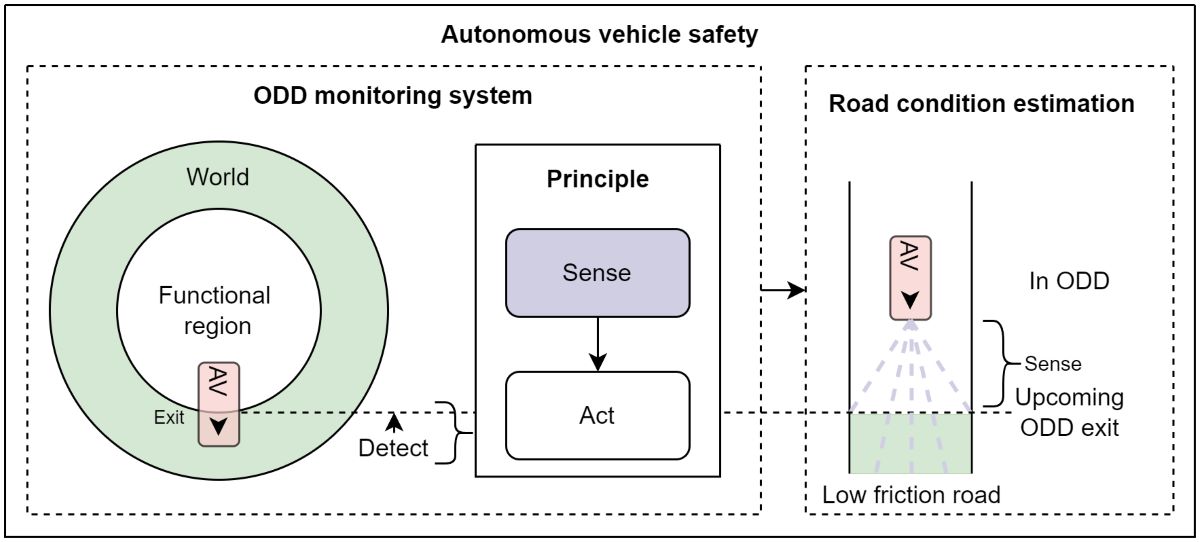

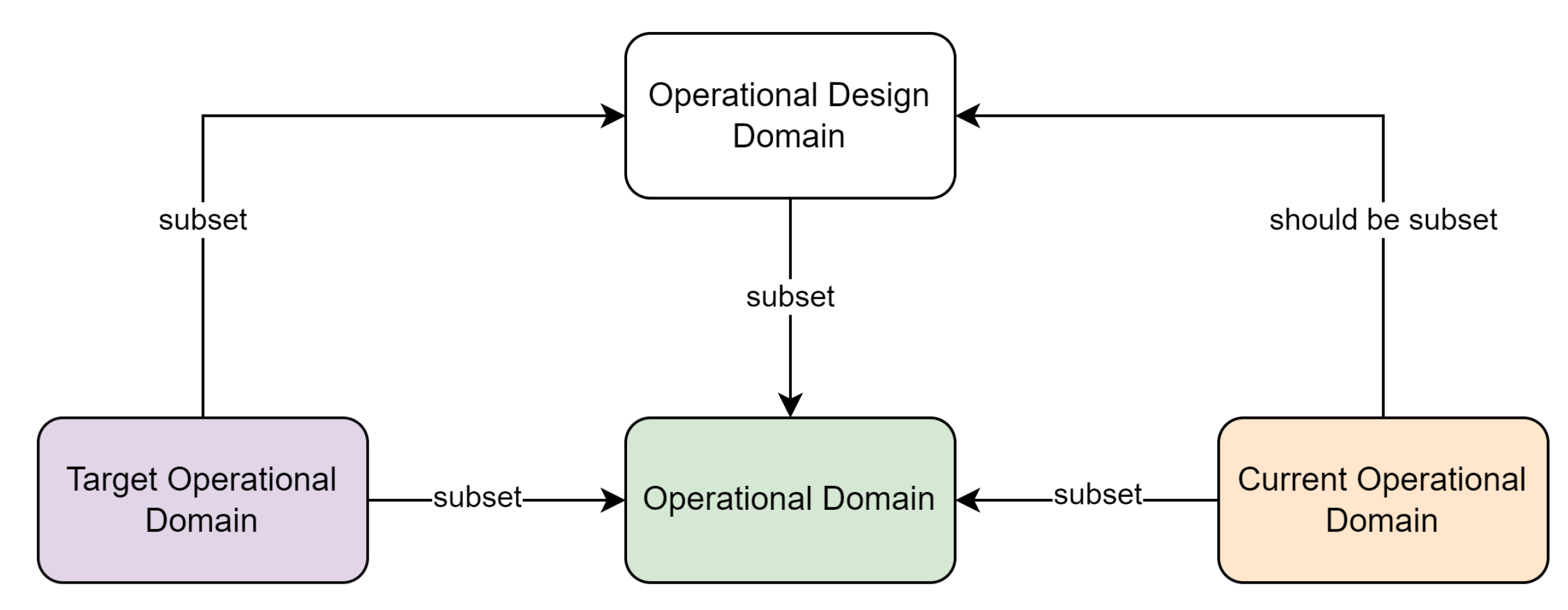

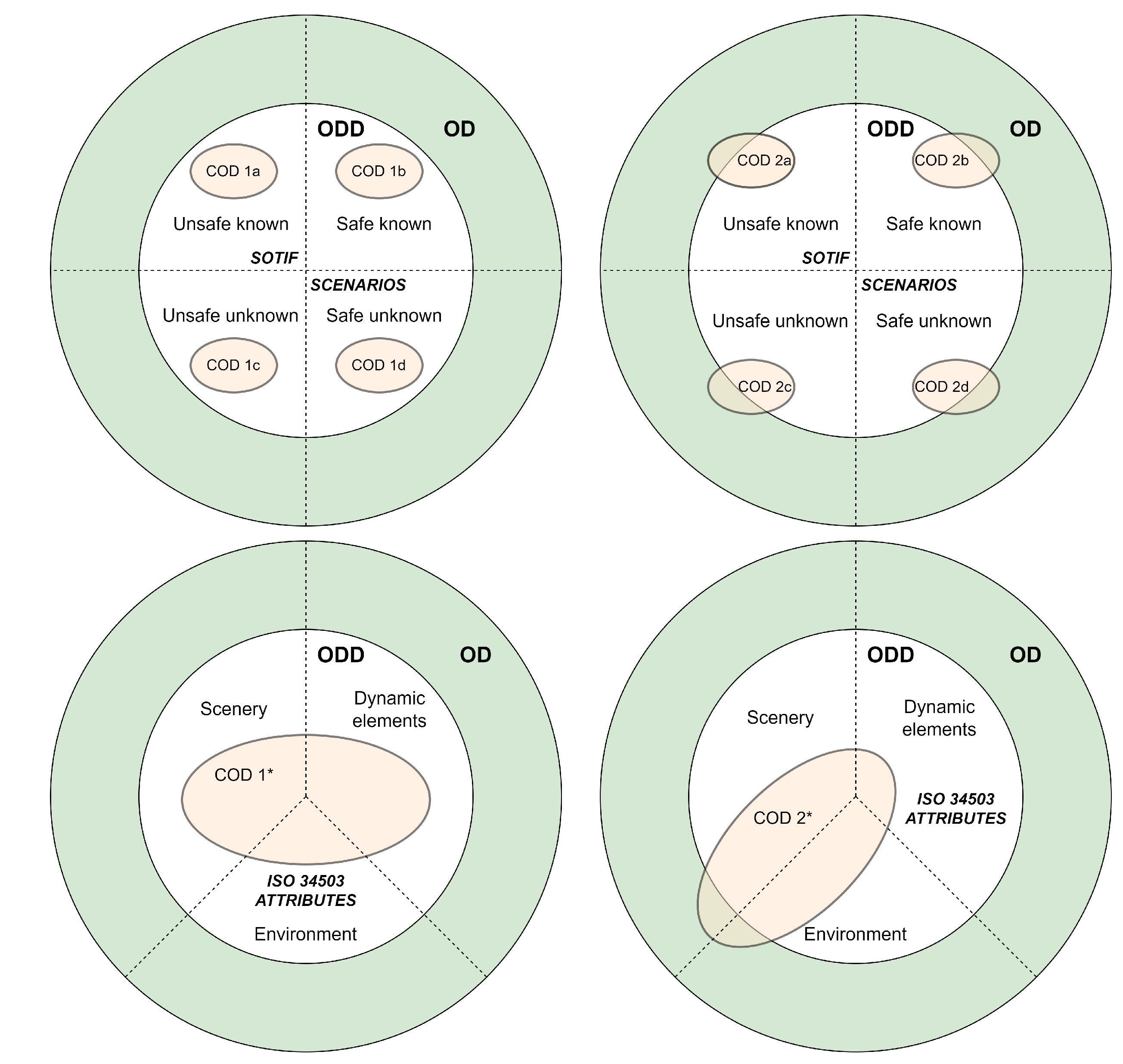

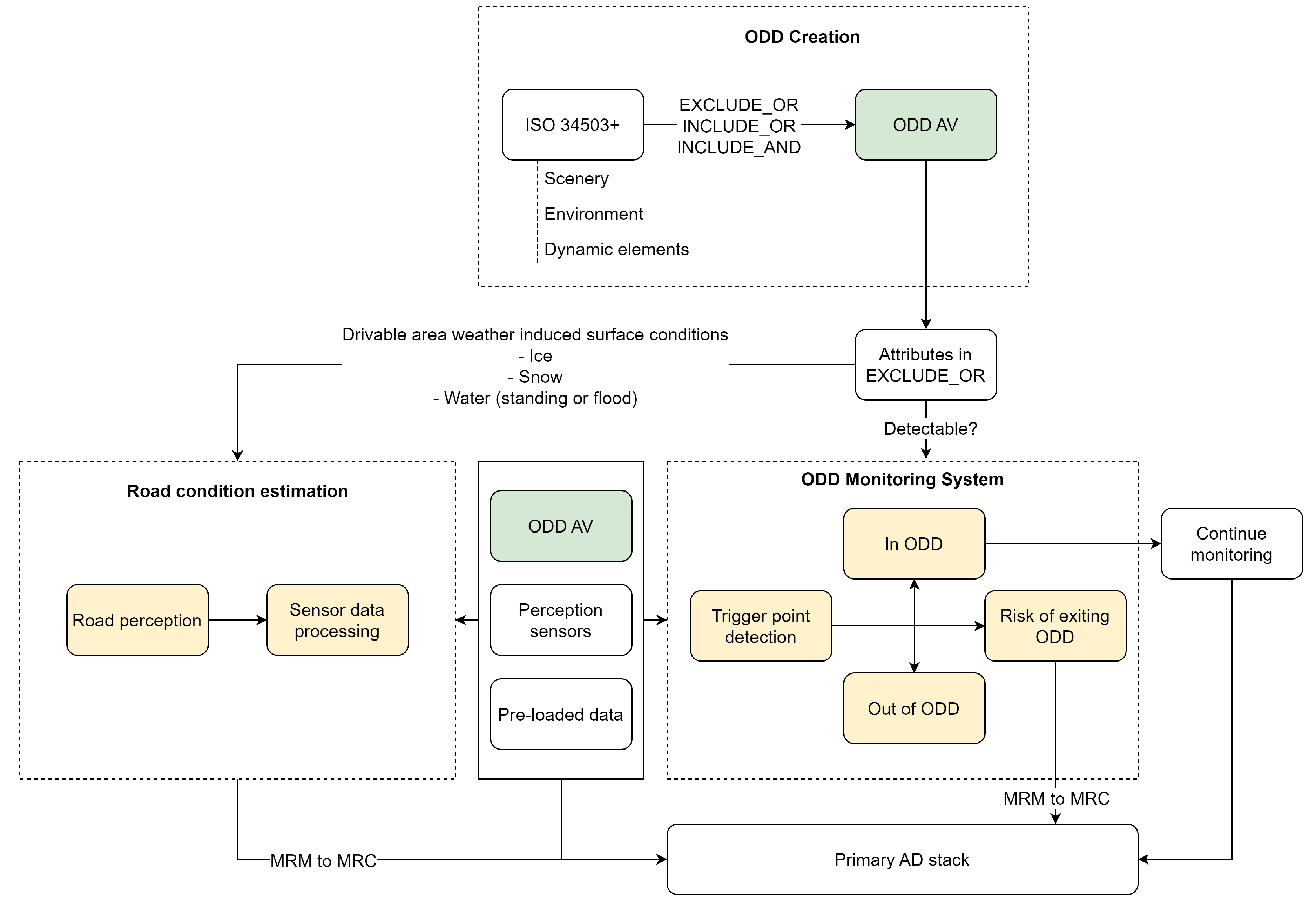

2. ODD Monitoring System

2.1. Related Work

2.2. Framework

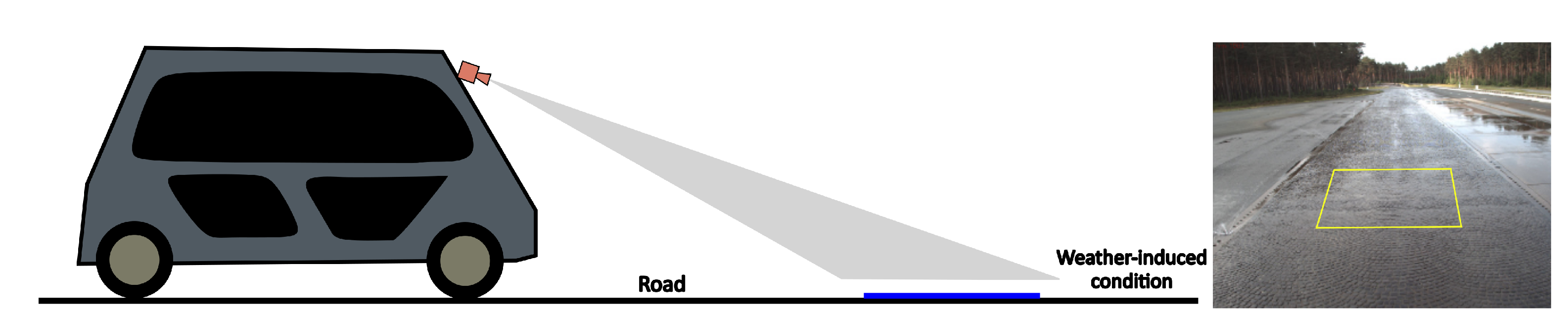

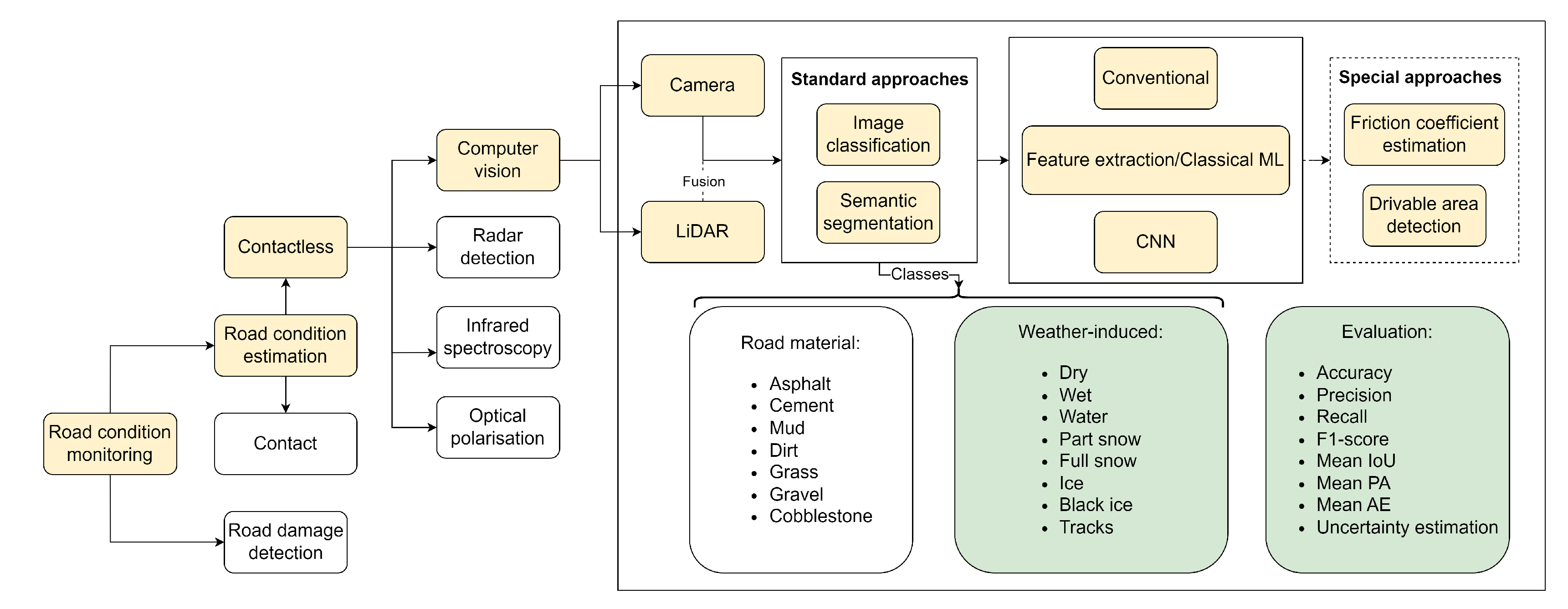

3. Contactless Computer Vision-Based Road Condition Estimation

3.1. Literature Review

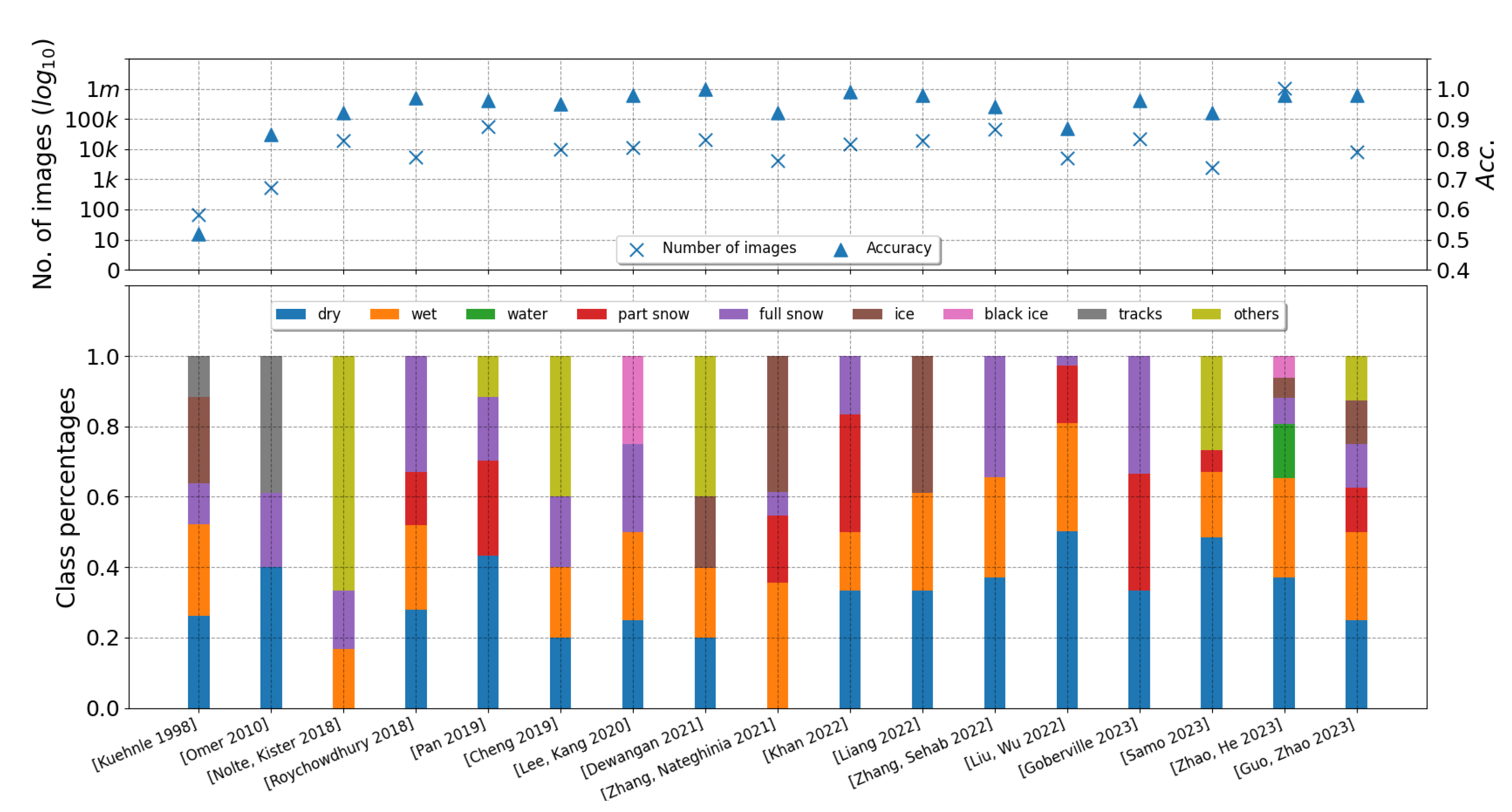

3.1.1. Image Classification

3.1.2. Semantic Segmentation

3.1.3. Drivable Area Detection

3.1.4. Friction Coefficient Estimation

3.2. Discussion

3.3. SOTA Datasets

4. Conclusion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AV | Autonomous vehicle |
| ISO | International Organization for Standardization |
| SOTIF | Safety of the Intended Functionality |
| FI | Functional Insufficiency |
| ODD | Operational Design Domain |
| OD | Operational Domain |
| COD | Current Operational Domain |
| TOD | Target Operational Domain |
| OMS | ODD monitoring system |
| SOTA | State of the art |
| MRM | Minimum risk maneuver |
| MRC | Minimum risk condition |
| RCE | Road condition estimation |
| DDT | Dynamic Driving Task |
| ML | Machine learning |
| ROD | Restricted Operational Domain |
| UC | Use case |
| OC | Operating conditions |
| OOD | Out-of-distribution |
| CNN | Convolutional neural network |
| DNN | Deep neural network |
| ADS | Automated Driving Stack |
| DL | Deep learning |
| RWIS | Road Weather Information System |
| RF | Random forest |
| SVM | Support Vector Machine |
| ViT | Vision Transformer |
| DS | Dempster-Shafer |
| MARWIS | Mobile Advanced Road Weather Information Sensor |

References

- Shi, E.; Gasser, T.M.; Seeck, A.; Auerswald, R. SAE J3016: The Principles of Operation Framework: A Comprehensive Classification Concept for Automated Driving Functions. SAE International Journal of Connected and Automated Vehicles 2020, 3. [CrossRef]

- Gyllenhammar, M.; Johansson, R.; Warg, F.; Chen, D.; Heyn, H.M.; Sanfridson, M.; Söderberg, J.; Thorsén, A.; Ursing, S. Towards an Operational Design Domain That Supports the Safety Argumentation of an Automated Driving System. In Proceedings of the 10th European Congress on Embedded Real Time Software and Systems (ERTS 2020), Toulouse, France, 2020.

- Koopman, P.; Widen, W.H. Redefining Safety for Autonomous Vehicles. ArXiv 2024, abs/2404.16768.

- Road vehicles — Functional safety. Standard, International Organization for Standardization, Geneva, CH, 2018.

- Automotive SPICE Process Assessment / Reference Model. Standard, Verband der Automobilindustrie e. V., Berlin, DE, 2023.

- Road vehicles — Cybersecurity engineering. Standard, International Organization for Standardization, Geneva, CH, 2021.

- Road vehicles — Safety of the intended functionality. Standard, International Organization for Standardization, Geneva, CH, 2022.

- Khastgir, S. The Curious Case of Operational Design Domain: What it is and is not? Available online: https://medium.com/@siddkhastgir/the-curious-case-of-operational-design-domain-what-it-is-and-is-not-e0180b92a3ae (accessed on 25.07.2024).

- Road Vehicles — Test scenarios for automated driving systems — Specification for operational design domain. Standard, International Organization for Standardization, Geneva, CH, 2023.

- Riedmaier, S.; Ponn, T.; Ludwig, D.; Schick, B.; Diermeyer, F. Survey on Scenario-Based Safety Assessment of Automated Vehicles. IEEE Access 2020, 8, 87456–87477. [CrossRef]

- Yu, W.; Li, J.; Peng, L.M.; Xiong, X.; Yang, K.; Wang, H. SOTIF risk mitigation based on unified ODD monitoring for autonomous vehicles. Journal of Intelligent and Connected Vehicles 2022, 5, 157–166. [Google Scholar] [CrossRef]

- Goberville, N.A.; Prins, K.R.; Kadav, P.; Walker, C.L.; Siems-Anderson, A.R.; Asher, Z.D. Snow coverage estimation using camera data for automated driving applications. Transportation Research Interdisciplinary Perspectives 2023, 18, 100766. [Google Scholar] [CrossRef]

- Guo, H.; Yin, Z.; Cao, D.; Chen, H.; Lv, C. A Review of Estimation for Vehicle Tire-Road Interactions Toward Automated Driving. IEEE Transactions on Systems, Man, and Cybernetics: Systems 2019, 49, 14–30. [Google Scholar] [CrossRef]

- Yang, S.; Lei, C. Research on the Classification Method of Complex Snow and Ice Cover on Highway Pavement Based on Image-Meteorology-Temperature Fusion. IEEE Sensors Journal 2024, 24, 1784–1791. [Google Scholar] [CrossRef]

- Ojala, R.; Alamikkotervo, E. Road Surface Friction Estimation for Winter Conditions Utilising General Visual Features, 2024, [arXiv:cs.CV/2404.16578].

- Statistisches Bundesamt (Destatis). General causes of accidents. Available online: https://www.destatis.de/EN/Themes/Society-Environment/Traffic-Accidents/Tables/general-causes-of-accidents-involving-personal-injury.html (accessed on 25.07.2024).

- Ma, Y.; Wang, M.; Feng, Q.; He, Z.; Tian, M. Current Non-Contact Road Surface Condition Detection Schemes and Technical Challenges. Sensors (Basel, Switzerland) 2022, 22. [Google Scholar] [CrossRef]

- Liu, F.; Wu, Y.; Yang, X.; Mo, Y.; Liao, Y. Identification of winter road friction coefficient based on multi-task distillation attention network. Pattern Analysis and Applications 2022, 25, 441–449. [Google Scholar] [CrossRef]

- Morales-Alvarez, W.; Sipele, O.; Léberon, R.; Tadjine, H.H.; Olaverri-Monreal, C. Automated Driving: A Literature Review of the Take over Request in Conditional Automation. Electronics 2020, 9, 2087. [Google Scholar] [CrossRef]

- Emzivat, Y.; Ibanez-Guzman, J.; Martinet, P.; Roux, O.H. Adaptability of automated driving systems to the hazardous nature of road networks. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC). IEEE; 2017; pp. 1–6. [Google Scholar] [CrossRef]

- Colwell, I.; Phan, B.; Saleem, S.; Salay, R.; Czarnecki, K. An Automated Vehicle Safety Concept Based on Runtime Restriction of the Operational Design Domain. 26-30 June 2018; IEEE: Piscataway, NJ, 2018. [Google Scholar]

- Alsayed, Z.; Resende, P.; Bradai, B. Operational Design Domain Monitoring at Runtime for 2D Laser-Based Localization algorithms. In Proceedings of the 2021 20th International Conference on Advanced Robotics (ICAR). IEEE; 2021; pp. 449–454. [Google Scholar] [CrossRef]

- Liu, S.; Zhang, Q.; Li, W.; Yao, S.; Mu, Y.; Hu, Z. Runtime operational design domain monitoring of static road geometry for automated vehicles. In Proceedings of the 2023 IEEE 34th International Symposium on Software Reliability Engineering Workshops (ISSREW). IEEE; 2023; pp. 192–197. [Google Scholar] [CrossRef]

- Jiang, Z.; Pan, W.; Liu, J.; Han, Y.; Pan, Z.; Li, H.; Pan, Y. Enhancing Autonomous Vehicle Safety Based on Operational Design Domain Definition, Monitoring, and Functional Degradation: A Case Study on Lane Keeping System. IEEE Transactions on Intelligent Vehicles. [CrossRef]

- Torfah, H.; Joshi, A.; Shah, S.; Akshay, S.; Chakraborty, S.; Seshia, S.A. Learning Monitor Ensembles for Operational Design Domains. In Runtime Verification; Katsaros, P.; Nenzi, L., Eds.; Springer Nature Switzerland: Cham, 2023; Vol. 14245, Lecture Notes in Computer Science, pp. 271–29. 2023. [Google Scholar] [CrossRef]

- Cheng, C.H.; Luttenberger, M.; Yan, R. Runtime Monitoring DNN-Based Perception. In Proceedings of the Runtime Verification; Katsaros, P.; Nenzi, L., Eds., Cham; 2023; pp. 428–446. [Google Scholar]

- Salvi, A.; Weiss, G.; Trapp, M. Adaptively Managing Reliability of Machine Learning Perception under Changing Operating Conditions. In Proceedings of the 2023 IEEE/ACM 18th Symposium on Software Engineering for Adaptive and Self-Managing Systems (SEAMS). IEEE; 2023; pp. 79–85. [Google Scholar] [CrossRef]

- Mehlhorn, M.A.; Richter, A.; Shardt, Y.A. Ruling the Operational Boundaries: A Survey on Operational Design Domains of Autonomous Driving Systems. IFAC-PapersOnLine 2023, 56, 2202–2213. [Google Scholar] [CrossRef]

- Aniculaesei, A.; Aslam, I.; Bamal, D.; Helsch, F.; Vorwald, A.; Zhang, M.; Rausch, A. 2023, [arXiv:cs.RO/2307.06258].

- Zhao, T.; He, J.; Lv, J.; Min, D.; Wei, Y. A Comprehensive Implementation of Road Surface Classification for Vehicle Driving Assistance: Dataset, Models, and Deployment. IEEE Transactions on Intelligent Transportation Systems 2023, 24, 8361–8370. [Google Scholar] [CrossRef]

- Botezatu, A.P.; Burlacu, A.; Orhei, C. A Review of Deep Learning Advancements in Road Analysis for Autonomous Driving. Applied Sciences 2024, 14, 4705. [Google Scholar] [CrossRef]

- Tabatabai, H.; Aljuboori, M. A Novel Concrete-Based Sensor for Detection of Ice and Water on Roads and Bridges. Sensors (Basel, Switzerland) 2017, 17. [Google Scholar] [CrossRef] [PubMed]

- Roychowdhury, S.; Zhao, M.; Wallin, A.; Ohlsson, N.; Jonasson, M. Machine Learning Models for Road Surface and Friction Estimation using Front-Camera Images. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN). IEEE; 2018; pp. 1–8. [Google Scholar] [CrossRef]

- Jokela, M.; Kutila, M.; Le, L. Road condition monitoring system based on a stereo camera. In Proceedings of the 2009 IEEE 5th International Conference on Intelligent Computer Communication and Processing. IEEE; 2009; pp. 423–428. [Google Scholar] [CrossRef]

- Nolte, M.; Kister, N.; Maurer, M. Assessment of Deep Convolutional Neural Networks for Road Surface Classification 2018. abs 1710 2018, 381–386. [Google Scholar] [CrossRef]

- Jonsson, P.; Casselgren, J.; Thornberg, B. Road Surface Status Classification Using Spectral Analysis of NIR Camera Images. IEEE Sensors Journal 2015, 15, 1641–1656. [Google Scholar] [CrossRef]

- Zhang, H.; Azouigui, S.; Sehab, R.; Boukhnifer, M. Near-infrared LED system to recognize road surface conditions for autonomous vehicles. Journal of Sensors and Sensor Systems 2022, 11, 187–199. [Google Scholar] [CrossRef]

- Zhang, C. Winter Road Surface Conditions Classification using Convolutional Neural Network (CNN): Visible Light and Thermal Images Fusion; McGill University, Civil Engineering and Applied Mechanics, 2021.

- Baby K., C.; George, B. A capacitive ice layer detection system suitable for autonomous inspection of runways using an ROV. In Proceedings of the 2012 IEEE International Symposium on Robotic and Sensors Environments Proceedings. IEEE; 2012; pp. 127–132. [Google Scholar] [CrossRef]

- Kuehnle, A.; Burghout, W. Winter Road Condition Recognition Using Video Image Classification. Transportation Research Record: Journal of the Transportation Research Board 1998, 1627, 29–33. [Google Scholar] [CrossRef]

- Cordes, K.; Reinders, C.; Hindricks, P.; Lammers, J.; Rosenhahn, B.; Broszio, H. RoadSaW: A Large-Scale Dataset for Camera-Based Road Surface and Wetness Estimation. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW); 2022; pp. 4439–4448. [Google Scholar] [CrossRef]

- Raj, A.; Krishna, D.; Priya, H.; Shantanu, K.; Devi, N. Vision based road surface detection for automotive systems. In Proceedings of the 2012 International Conference on Applied Electronics; 2012; pp. 223–228. [Google Scholar]

- Lei, Y.; Emaru, T.; Ravankar, A.A.; Kobayashi, Y.; Wang, S. Semantic Image Segmentation on Snow Driving Scenarios. In Proceedings of the 2020 IEEE International Conference on Mechatronics and Automation (ICMA). IEEE; 2020; pp. 1094–1100. [Google Scholar] [CrossRef]

- Rawashdeh, N.A.; Bos, J.P.; Abu-Alrub, N.J. Camera–Lidar sensor fusion for drivable area detection in winter weather using convolutional neural networks. Optical Engineering 2023, 62. [Google Scholar] [CrossRef]

- Zhao, T.; Guo, P.; Wei, Y. Road friction estimation based on vision for safe autonomous driving. Mechanical Systems and Signal Processing 2024, 208. [Google Scholar] [CrossRef]

- Omer, R.; Fu, L. An automatic image recognition system for winter road surface condition classification. In Proceedings of the 13th International IEEE Conference on Intelligent Transportation Systems. IEEE; 2010; pp. 1375–1379. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition, 2015, [arXiv:cs.CV/1512.03385]. [Google Scholar]

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision, 2015, [arXiv:cs.CV/1512.00567]. [Google Scholar]

- Cheng, L.; Zhang, X.; Shen, J. Road surface condition classification using deep learning. Journal of Visual Communication and Image Representation 2019, 64, 102638. [Google Scholar] [CrossRef]

- Pan, G.; Fu, L.; Yu, R.; Muresan, M. Evaluation of Alternative Pre-trained Convolutional Neural Networks for Winter Road Surface Condition Monitoring. In Proceedings of the 5th International Conference on Transportation Information and Safety, Liverpool, UK, 14–17 July 2019; IEEE: Piscataway, NJ, 2019. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition, 2015, [arXiv:cs.CV/1409.1556].

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions, 2017, [arXiv:cs.CV/1610.02357]. [Google Scholar]

- Du, Y.; Liu, C.; Song, Y.; Li, Y.; Shen, Y. Rapid Estimation of Road Friction for Anti-Skid Autonomous Driving. IEEE Transactions on Intelligent Transportation Systems 2020, 21, 2461–2470. [Google Scholar] [CrossRef]

- Šabanovič, E.; Žuraulis, V.; Prentkovskis, O.; Skrickij, V. Identification of Road-Surface Type Using Deep Neural Networks for Friction Coefficient Estimation. Sensors (Basel, Switzerland) 2020, 20. [Google Scholar] [CrossRef] [PubMed]

- Dewangan, D.K.; Sahu, S.P. RCNet: road classification convolutional neural networks for intelligent vehicle system. Intelligent Service Robotics 2021, 14, 199–214. [Google Scholar] [CrossRef]

- Khan, M.N.; Ahmed, M.M. Weather and surface condition detection based on road-side webcams: Application of pre-trained Convolutional Neural Network. International Journal of Transportation Science and Technology 2022, 11, 468–483. [Google Scholar] [CrossRef]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions, 2014, [arXiv:cs.CV/1409.4842]. [Google Scholar]

- Liang, H.; Zhang, H.; Sun, Z. A Comparative Study of Vision-based Road Surface Classification Methods for Dataset From Different Cities. In Proceedings of the 2022 IEEE 5th International Conference on Industrial Cyber-Physical Systems (ICPS). IEEE; 2022; pp. 1–6. [Google Scholar] [CrossRef]

- Zhang, H.; Sehab, R.; Azouigui, S.; Boukhnifer, M. Application and Comparison of Deep Learning Methods to Detect Night-Time Road Surface Conditions for Autonomous Vehicles. Electronics 2022, 11, 786. [Google Scholar] [CrossRef]

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size, 2016, [arXiv:cs.CV/1602.07360]. [Google Scholar]

- Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks, 2018, [arXiv:cs.CV/1608.06993]. [Google Scholar]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale, 2021, [arXiv:cs.CV/2010.11929]. [Google Scholar]

- Samo, M.; Mafeni Mase, J.M.; Figueredo, G. Deep Learning with Attention Mechanisms for Road Weather Detection. Sensors (Basel, Switzerland) 2023, 23. [Google Scholar] [CrossRef] [PubMed]

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks, 2019, [arXiv:cs.CV/1801.04381]. [Google Scholar]

- Cordes, K.; Broszio, H. Camera-Based Road Snow Coverage Estimation. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW). IEEE; 2023; pp. 4013–4021. [Google Scholar] [CrossRef]

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks, 2020, [arXiv:cs.LG/1905.11946]. [Google Scholar]

- Guo, Y.H.; Zhu, J.R.; Yang, C.C.; Yang, B. An Attention-ReXNet Network for Long Tail Road Scene Classification. In Proceedings of the 2023 IEEE 13th International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER). IEEE; 2023; pp. 1104–1109. [CrossRef]

- Ahmed, T.; Ejaz, N.; Choudhury, S. Redefining Real-time Road Quality Analysis with Vision Transformers on Edge Devices. IEEE Transactions on Artificial Intelligence 2024, pp. 1–12. [Google Scholar] [CrossRef]

- Wu, K.; Zhang, J.; Peng, H.; Liu, M.; Xiao, B.; Fu, J.; Yuan, L. TinyViT: Fast Pretraining Distillation for Small Vision Transformers, 2022, [arXiv:cs.CV/2207.10666]. [Google Scholar]

- Mehta, S.; Rastegari, M. MobileViT: Light-weight, General-purpose, and Mobile-friendly Vision Transformer, 2022, [arXiv:cs.CV/2110.02178]. [Google Scholar]

- Liang, C.; Ge, J.; Zhang, W.; Gui, K.; Cheikh, F.A.; Ye, L. Winter Road Surface Status Recognition Using Deep Semantic Segmentation Network. Proceedings – Int. Workshop on Atmospheric Icing of Structures, 2019. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation, 2015, [arXiv:cs.CV/1505.04597]. [Google Scholar]

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for Semantic Urban Scene Understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR); 2016. [Google Scholar]

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation, 2016, [arXiv:cs.CV/1511.00561].

- Paszke, A.; Chaurasia, A.; Kim, S.; Culurciello, E. ENet: A Deep Neural Network Architecture for Real-Time Semantic Segmentation, 2016, [arXiv:cs.CV/1606.02147].

- Romera, E.; Álvarez, J.M.; Bergasa, L.M.; Arroyo, R. ERFNet: Efficient Residual Factorized ConvNet for Real-Time Semantic Segmentation. IEEE Transactions on Intelligent Transportation Systems 2018, 19, 263–272. [Google Scholar] [CrossRef]

- Zhao, H.; Qi, X.; Shen, X.; Shi, J.; Jia, J. ICNet for Real-Time Semantic Segmentation on High-Resolution Images, 2018, [arXiv:cs.CV/1704.08545].

- Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. BiSeNet: Bilateral Segmentation Network for Real-time Semantic Segmentation, 2018, [arXiv:cs.CV/1808.00897].

- Lee, S.Y.; Jeon, J.S.; Le, T.H.M. Feasibility of Automated Black Ice Segmentation in Various Climate Conditions Using Deep Learning. Buildings 2023, 13, 767. [Google Scholar] [CrossRef]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN, 2018, [arXiv:cs.CV/1703.06870].

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection, 2016, [arXiv:cs.CV/1506.02640].

- Rawashdeh, N.A.; Bos, J.P.; Abu-Alrub, N.J. Drivable path detection using CNN sensor fusion for autonomous driving in the snow. In Proceedings of the Autonomous Systems: Sensors, Processing, and Security for Vehicles and Infrastructure 2021; Dudzik, M.C.; Axenson, T.J.; Jameson, S.M., Eds. SPIE, 12.04.2021 - 17.04.2021, p. 5. [CrossRef]

- Kadav, P.; Sharma, S.; Motallebi Araghi, F.; Asher, Z.D. Development of Computer Vision Models for Drivable Region Detection in Snow Occluded Lane Lines; Springer: Cham, 2023.

- Guo, H.; Zhao, X.; Liu, J.; Dai, Q.; Liu, H.; Chen, H. A fusion estimation of the peak tire–road friction coefficient based on road images and dynamic information. Mechanical Systems and Signal Processing 2023, 189, 110029. [Google Scholar] [CrossRef]

- Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design, 2018, [arXiv:cs.CV/1807.11164].

- Lee, H.; Kang, M.; Song, J.; Hwang, K. Pix2Pix-based Data Augmentation Method for Building an Image Dataset of Black Ice, 2024.

- Lee, H.; Hwang, K.; Kang, M.; Song, J. Black ice detection using CNN for the Prevention of Accidents in Automated Vehicle. In Proceedings of the 2020 International Conference on Computational Science and Computational Intelligence (CSCI). IEEE, 2020, pp. 1189–1192. [CrossRef]

- Lee, H.; Kang, M.; Song, J.; Hwang, K. The Detection of Black Ice Accidents for Preventative Automated Vehicles Using Convolutional Neural Networks. Electronics 2020, 9, 2178. [Google Scholar] [CrossRef]

- Torfah, H.; Seshia, S.A. Runtime Monitors for Operational Design Domains of Black-Box ML Models, 2023.

- Pitropov, M.; Garcia, D.E.; Rebello, J.; Smart, M.; Wang, C.; Czarnecki, K.; Waslander, S. Canadian Adverse Driving Conditions dataset. The International Journal of Robotics Research 2021, 40, 681–690. [Google Scholar] [CrossRef]

- Bijelic, M.; Gruber, T.; Mannan, F.; Kraus, F.; Ritter, W.; Dietmayer, K.; Heide, F. Seeing Through Fog Without Seeing Fog: Deep Multimodal Sensor Fusion in Unseen Adverse Weather.

- Burnett, K.; Yoon, D.J.; Wu, Y.; Li, A.Z.; Zhang, H.; Lu, S.; Qian, J.; Tseng, W.K.; Lambert, A.; Leung, K.Y.K.; et al. Boreas: A Multi-Season Autonomous Driving Dataset.

- Lufft. MARWIS - Mobile Advanced Road Weather Information Sensor. Available online: https://www.lufft.com/products/road-runway-sensors-292/marwis-umb-mobile-advanced-road-weather-information-sensor-2308/ (accessed on 25.07.2024).

- Ojala, R.; Seppänen, A. Lightweight Regression Model with Prediction Interval Estimation for Computer Vision-based Winter Road Surface Condition Monitoring. IEEE Transactions on Intelligent Vehicles 2024, pp. 1–13. [CrossRef]

| Paper (Year) | Attribute | Monitoring theme |
|---|---|---|
| [20] 2017 | Scenery, Dynamic elements | Location- and behaviour-based hazard threshold calculation |
| [21] 2018 | Scenery, Dynamic elements | Restricted Operational Domain |
| [2] 2020 | Scenery, Dynamic elements | Mapping of ODD, COD and TOD |
| [22] 2021 | Scenery | Monitoring ODD of laser-based localization algorithms |
| [11] 2022 | Scenery, Weather, Dynamic elements | Speed calculation, digitization of traffic signs, and road defect detection |
| [23] 2023 | Scenery | Road topology monitoring |
| [28] 2023 | Survey | ODD scope, creation, verification and monitoring |
| [29] 2023 | Scenery | Connected dependability cage monitoring of ADS |
| [26] 2023 | Perception algorithms | Runtime monitoring analysis of DNN-based perception algorithms |
| [27] 2023 | Perception algorithms | Reliability error estimation from mapping of sensor data and OCs |
| [25] 2023 | Perception algorithms | Correlating input feature space with safety specifications using ML |
| [24] 2024 | Perception algorithms | Causal approach to monitor perception and control modules in ADS |
| [3] 2024 | Scenery, Dynamic elements | Lane detection system monitoring |

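| Purpose | Evaluation metric | Definition | Formula |
|---|---|---|---|
| Image classification | Accuracy | Ratio of correctly predicted instances to the total number of instances | $\mathrm{Acc.} = \frac{TP + TN}{TP + TN + FP + FN}$ |
| | Precision | Ratio of true positive predictions to all positive predictions | $P = \frac{TP}{TP + FP}$ |
| | Recall | Ratio of true positive predictions to all positive instances | $R = \frac{TP}{TP + FN}$ |
| | F1-Score | Harmonic mean of precision and recall | $F1 = \frac{2 \cdot P \cdot R}{P + R}$ |
| | Average precision | Sum of the products of P and the recall increment at each threshold | $AP = \sum_{n} (R_n - R_{n-1}) \, P_n$, with $R_0 = 0$ |
| | Average precision at 50 | AP value when IoU is at least 0.50 | $AP_{50} = AP$, when $IoU \geq 0.50$ |
| | Mean average precision | Mean of the APs over all classes | $mAP = \frac{1}{N} \sum_{i=1}^{N} AP_i$ |
| Semantic segmentation | Mean pixel accuracy | Per-class ratio of correctly predicted pixels to the total number of pixels of that class, averaged over all classes | $mPA = \frac{1}{N} \sum_{i=1}^{N} \frac{TP_i}{TP_i + FN_i}$ |
| | Intersection over Union | Ratio of the area of intersection to the area of union of the predicted segment and the ground truth | $IoU = \frac{TP}{TP + FP + FN}$ |
| | Mean Intersection over Union | Mean of the IoU over all classes | $mIoU = \frac{1}{N} \sum_{i=1}^{N} IoU_i$ |
| Regression | Mean absolute error | Average of all absolute errors | $MAE = \frac{1}{n} \sum_{i=1}^{n} \lvert y_i - \hat{y}_i \rvert$ |

TP, TN, FP and FN denote true positives, true negatives, false positives and false negatives; N is the number of classes; $y_i$ and $\hat{y}_i$ are the ground-truth and predicted values of the n samples.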
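The metric formulas can be made concrete with a short implementation. The following Python sketch assumes the usual definitions in terms of true/false positives and negatives (TP, TN, FP, FN). The function names, the (x1, y1, x2, y2) box convention used for the IoU behind AP50, and the choice to skip classes with an empty denominator are illustrative assumptions, not taken from the reviewed papers.

```python
"""Minimal sketch of the evaluation metrics (assumed standard definitions)."""
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed convention: (x1, y1, x2, y2)


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Accuracy, precision, recall and F1-score from binary confusion counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def average_precision(precisions: Sequence[float], recalls: Sequence[float]) -> float:
    """AP = sum_n (R_n - R_{n-1}) * P_n with R_0 = 0.

    Assumes the (P_n, R_n) pairs are ordered so that recall is non-decreasing.
    """
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(aps: Sequence[float]) -> float:
    """mAP: mean of the per-class AP values."""
    return sum(aps) / len(aps)


def box_iou(a: Box, b: Box) -> float:
    """IoU of two axis-aligned boxes; for AP50 a detection counts as a true
    positive only if its IoU with the ground truth is >= 0.50."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def segmentation_metrics(conf: List[List[int]]) -> Dict[str, float]:
    """mPA and mIoU from a pixel confusion matrix.

    conf[i][j] = number of pixels of ground-truth class i predicted as class j.
    Classes with an empty denominator are skipped instead of counted as 0.
    """
    n = len(conf)
    accs: List[float] = []
    ious: List[float] = []
    for i in range(n):
        tp = conf[i][i]
        gt = sum(conf[i])                          # TP_i + FN_i
        pred = sum(conf[j][i] for j in range(n))   # TP_i + FP_i
        union = gt + pred - tp                     # TP_i + FP_i + FN_i
        if gt:
            accs.append(tp / gt)
        if union:
            ious.append(tp / union)
    mpa = sum(accs) / len(accs) if accs else 0.0
    miou = sum(ious) / len(ious) if ious else 0.0
    return {"mPA": mpa, "mIoU": miou}


def mean_absolute_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """MAE = (1/n) * sum_i |y_i - y_hat_i|, e.g. for friction-factor regression."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)
```

For example, `classification_metrics(tp=8, tn=5, fp=2, fn=1)` yields an F1-score of 16/19 ≈ 0.842, the same value as the equivalent form 2TP/(2TP + FP + FN).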

| Main class | Mentioned class |
|---|---|
| Dry | Dry, Bare, None |
| Wet | Wet |
| Water | Water |
| Partially snow | Partly snow covered, Drivable path, Standard, Slush, Melted snow |
| Full snow | Snow, Covered, Fully snow covered, Non-drivable path, Heavy, Fresh fallen snow |
| Ice | Icy |
| Black ice | Black ice |
| Tracks | Tracks |
| Others | Not recognizable, Others, Road materials |
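Comparing results across studies requires folding each dataset's labels into the main classes of the mapping table. A minimal Python sketch of that lookup follows. The names `MAIN_TO_MENTIONED`, `LOOKUP` and `harmonize` are hypothetical. The sketch also assumes two things: labels are matched case-insensitively, and unlisted labels are rejected rather than sent to "Others".

```python
"""Hypothetical helper: harmonize dataset-specific road-condition labels into
the main classes (mapping copied from the class mapping table)."""
from typing import Dict, List

MAIN_TO_MENTIONED: Dict[str, List[str]] = {
    "Dry": ["Dry", "Bare", "None"],
    "Wet": ["Wet"],
    "Water": ["Water"],
    "Partially snow": ["Partly snow covered", "Drivable path", "Standard",
                       "Slush", "Melted snow"],
    "Full snow": ["Snow", "Covered", "Fully snow covered", "Non-drivable path",
                  "Heavy", "Fresh fallen snow"],
    "Ice": ["Icy"],
    "Black ice": ["Black ice"],
    "Tracks": ["Tracks"],
    "Others": ["Not recognizable", "Others", "Road materials"],
}


def _build_lookup(table: Dict[str, List[str]]) -> Dict[str, str]:
    """Invert the table into a case-insensitive label -> main class lookup."""
    lookup: Dict[str, str] = {}
    for main, mentioned in table.items():
        for label in mentioned:
            key = label.strip().lower()
            if key in lookup and lookup[key] != main:
                raise ValueError(f"label {label!r} maps to two main classes")
            lookup[key] = main
    return lookup


LOOKUP = _build_lookup(MAIN_TO_MENTIONED)


def harmonize(label: str) -> str:
    """Return the main class for a dataset label.

    Unknown labels raise KeyError instead of being silently mapped to 'Others'.
    """
    key = label.strip().lower()
    if key not in LOOKUP:
        raise KeyError(f"no main class defined for label {label!r}")
    return LOOKUP[key]
```

Raising on unknown labels keeps a new dataset's classes from being silently misfiled. A combined label such as "Snow/Ice" is one example: it needs an explicit decision before it can be mapped.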

| Paper (Year) | Network | No. of images | No. of classes | Evaluation metric | Result |
|---|---|---|---|---|---|
| [40] 1998 | Feature extraction and simple NN | 69 | 5: Dry, Wet, Tracks, Snow, Icy | Acc. | 0.52 |
| [46] 2010 | SVM | 516 | 3: Bare, covered, tracks | Acc. | 0.85 |
| [35] 2018 | ResNet50 | 19000 | 6: Asphalt, dirt, grass, wet asphalt, cobblestone, snow | Acc. | 0.92 |
| [33] 2018 ³ | Custom CNN | 5300 | 4: Dry asphalt, Wet/Water, Slush, Snow/Ice | Acc. | 0.97 |
| [72] 2019 ¹ | D-UNet | 2080 | 7: Background, dry, wet, snow, ice, water, tracks | mIoU | 0.79 |
| [50] 2019 | ResNet50 | 54,808 | 4: Bare, partly snow covered, fully snow covered, not recognizable | Acc. | 0.96 |
| [49] 2019 | Custom CNN and ReLU | 10,000 | 5: Dry, wet, snow, mud, other | Acc. | 0.95 |
| [89] 2020 | Custom CNN | 11000 | 4: Road, Wet road, Snow road, Black ice | Acc. | 0.98 |
| [54] 2020 | Custom CNN | 1200 | 2: Dry, wet | Acc. | 0.92 |
| [53] 2020 | Custom CNN: TLDKNet | 1000 | 3: Great resistance, medium resistance, weak resistance | Acc. | 0.80 |
| [43] 2020 ¹ | ICNet | 1075 | 11 | mIoU | 0.66 |
| [83] 2021 | CNN fusion | 1000 | 2: Drivable and non-drivable path | mIoU | 0.87 |
| [55] 2021 | RCNet | 20757 | 5: Curvy, dry, icy, rough and wet | Acc. | 1.0 |
| [38] 2021 | Custom CNN | 4244 | 4: Snowy, icy, wet, slushy | F1-Score | 0.92 |
| [56] 2022 | ResNet18 | 15000 | 3: Dry, snowy, wet/slushy | Acc. | 0.99 |
| [58] 2022 | ResNet50 | 18835 | 3: Dry, wet, icy; Time: day, night | Acc. | 0.98 |
| [59] 2022 | DenseNet121 | 45,200 | 3: Dry, wet, snowy | Acc. | 0.94 |
| [41] 2022 | MobileNetV2 | 720,000 | 12: RoadSAW | F1-Score | 0.64 |
| [18] 2022 ²,³ | ResNet50+ | 5061 | 6: Dry, wet, partly snow, melted snow, fully packed snow, and slush | Acc. | 0.87 |
| [12] 2023 | RF | 21,375 | 3: Snow=None, standard, heavy | Acc. | 0.96 |
| [65] 2023 | MobileNetV2 | 90,759 | 3: Fresh fallen snow, fully packed snow, partially covered snow | F1-Score | 0.97 |
| | | 810,759 | 15: RoadSAW/RoadSC | F1-Score | 0.71 |
| [80] 2023 ¹ | Mask R-CNN | 800 | Black ice | AP50 | 0.93 |
| [63] 2023 | ViT-B/16 | 2498 | 8: Clear, Sunny, Cloudy, Wet, Snowy, Rainy, Foggy, Icy | Acc. | 0.92 |
| [30] 2023 | EfficientNet-B0+ | 1 mill. | 27: RSCD | Acc. | 0.98 |
| [67] 2023 | Attention-ReXNet | 1 mill. | 27: RSCD | Acc. | 0.88 |
| [85] | ShuffleNetV2 | 8000 | 8 | Acc. | 0.98 |
| [84] 2023 ² | Recurrent U-Net | 1500 | Drivable tracks | Acc. | 0.89 |
| [44] 2023 ² | Custom CNN | 1000 | 2: Drivable and non-drivable region | mIoU | 0.89 |
| [68] 2024 | EdgeFusionViT | 1 mill. | 27: RSCD | Acc. | 0.90 |
| [15] 2024 ³ | Custom CNN: WCamNet | 48791 | Friction factors | MAE | 0.15 |
| [95] 2024 | Custom: SIWNet | 4330; SeeingThroughFog (cite) | Friction factors | Average internal score | 0.48 |
| [14] 2024 | CNN Meteorological Fusion | 600 | 5: Dry, fresh snow, transparent ice, granular snow, mixed ice | Average precision | 0.78 |
| [45] 2024 ³ | EfficientNet-B0+ | 1 mill. | RSCD | Acc. | 0.95 |

| Parameters | RSCD | RoadSAW/RoadSC |
|---|---|---|
| Number of images | 1,030,000 | 810,759 |
| Classes | 27 | 15 |
| Road material type | Asphalt, Concrete, Mud, Gravel | Asphalt, Cobblestone, Concrete |
| Wetness conditions | Dry, wet, water | Dry, damp, wet, very wet |
| Winter conditions | Fresh snow, melted snow, ice | Fresh fallen snow, fully packed snow, partially covered snow |
| Unevenness | Smooth, slightly uneven, severely uneven | None |
| Class imbalance | Yes | No |
| Image/patch size | 360 × 240 px | 2.56 m², 7.84 m², 12.96 m² |
| Patch distance from camera | 10 m | 7.5 m, 15 m, 22.5 m, 30 m |
| Image calibration | None | Transformation to bird's-eye view |
| Annotation | Manual | Wetness: MARWIS; snow: manual |
| Best reported result | Acc.: 89.76% | F1-Score: 70.92% |
| Generalization analysis | Confidence estimation from multiple overlapping images | Deterministic Uncertainty Quantification, CtD datasets implementation |
| Velocity measurements | No | Yes |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).