Submitted:

05 August 2024

Posted:

06 August 2024

Abstract

Keywords:

1. Introduction

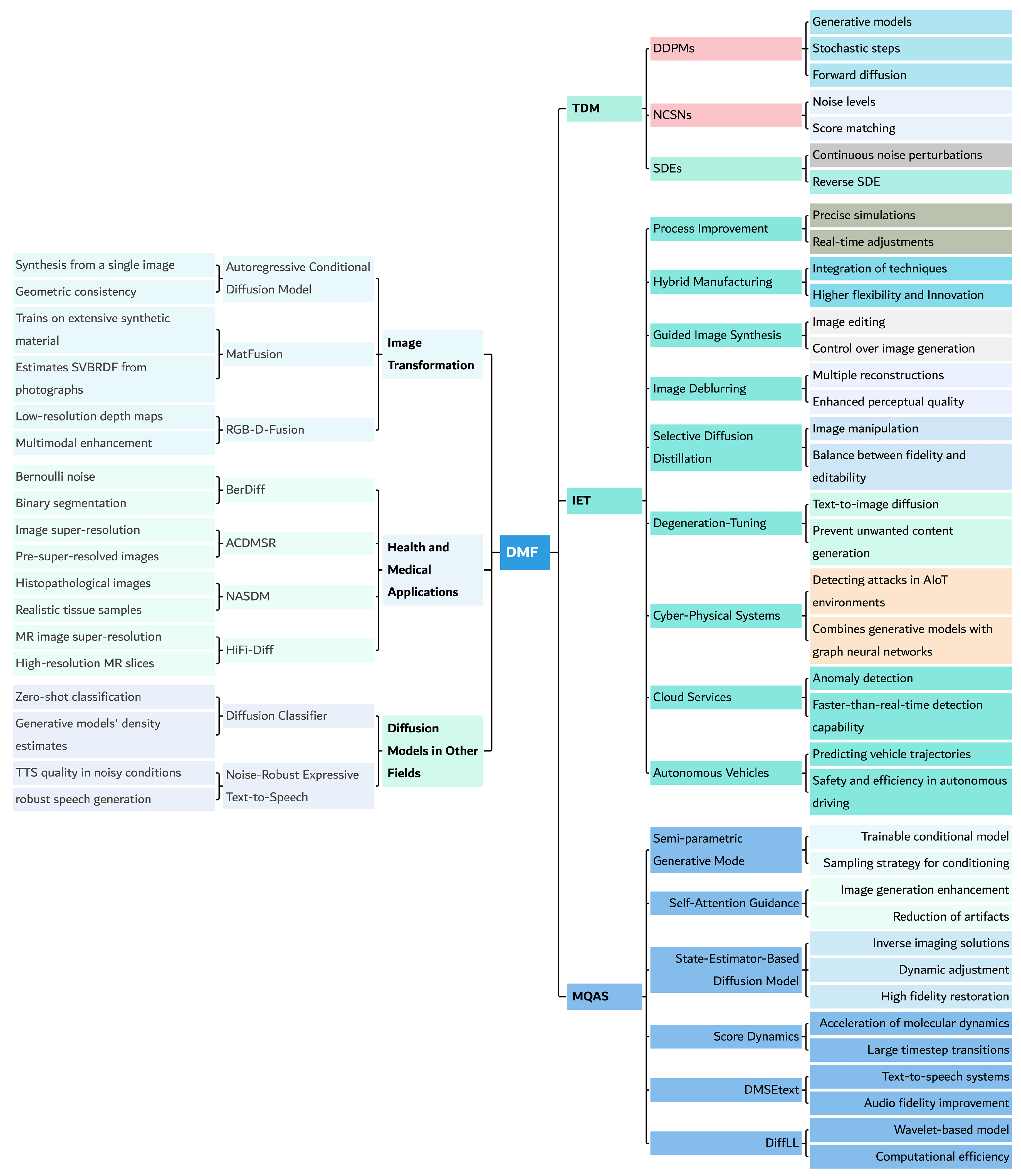

1.1. Motivation and Uniqueness of This Survey

- This survey considers several key aspects of DMs, including theory, algorithms, innovations, media quality, image transformation, healthcare applications, and more. We provide an overview of relevant literature up to March 2024, highlighting the latest techniques and advancements.

- We categorize DMs into three main types: Denoising Diffusion Probabilistic Models (DDPMs), Noise-Conditioned Score Networks (NCSNs), and Stochastic Differential Equations (SDEs), which aids in understanding their theoretical foundations and algorithmic variations.

- We highlight novel approaches and experimental methodologies relevant to the application of DMs, considering data types, algorithms, applications, datasets, evaluations, and limitations.

- Finally, we discuss the findings, identify open issues, and raise questions about future research directions in DMs, aiming to guide researchers and practitioners.

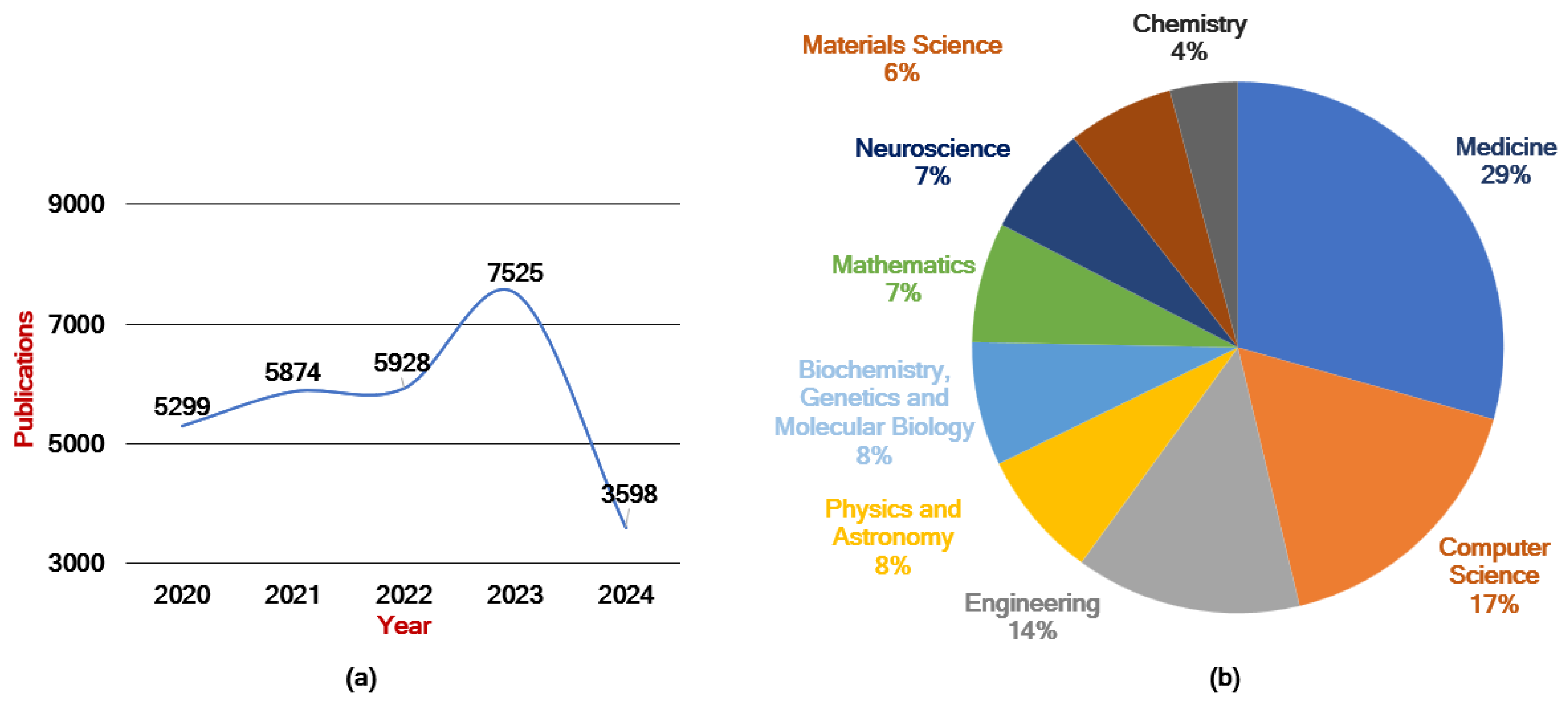

1.2. Search Strategy

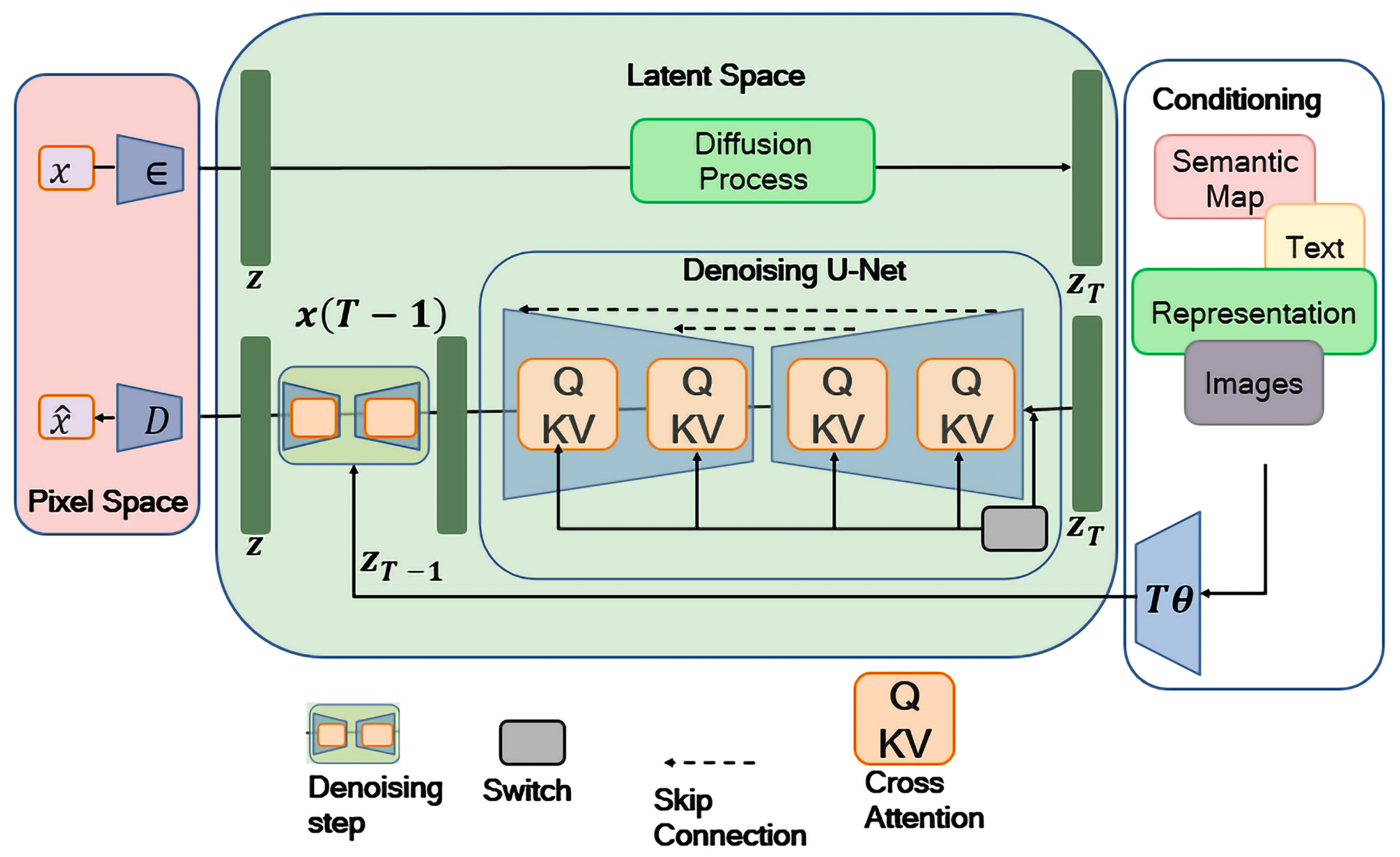

2. General Overview of DMs

- Initialization: Start with data in its original form, $x_0$.

- Forward Process (Noise Addition): Gradually add noise over $T$ timesteps, transforming the data from $x_0$ to $x_T$ based on a predefined noise schedule $\beta_t$.

- Reverse Process (Denoising): Sequentially estimate $x_{t-1}$ from $x_t$ using the learned parameters $\theta$, effectively reversing the noise addition to either reconstruct the original data or generate new data samples.

- Input: Original data $x_0$, total timesteps $T$, noise schedule $\beta_t$.

- Output: Denoised or synthesized data $x_0$.

- Training: Train the model to approximate the reverse noise addition process by learning the conditional distributions $p_\theta(x_{t-1} \mid x_t)$ for each timestep $t$, from $T$ down to 1.

- Data Synthesis: Begin with a sample of random noise $x_T$ and iteratively apply the learned reverse process, $x_{t-1} \sim p_\theta(x_{t-1} \mid x_t)$, culminating in $x_0$, the final synthesized or reconstructed data.
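
The forward and reverse processes listed above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure of any specific paper surveyed here. The data are scalars drawn from a Gaussian with mean `mu` and standard deviation `sigma`, the noise schedule is linear, and the trained network is replaced by a closed-form noise predictor (`oracle_noise`) that exists only because the data distribution is Gaussian. All function names and default values (`linear_beta_schedule`, `forward_sample`, `reverse_sample`, `T = 200`) are hypothetical choices made for the example.

```python
import math
import random


def linear_beta_schedule(T, beta_start=1e-4, beta_end=0.1):
    """Noise schedule beta_1..beta_T; linear spacing is an illustrative choice."""
    step = (beta_end - beta_start) / (T - 1)
    return [beta_start + i * step for i in range(T)]


def cumulative_alphas(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def forward_sample(x0, t, alpha_bars, rng):
    """Forward process: draw x_t given x_0 in closed form (t is 1-indexed)."""
    a_bar = alpha_bars[t - 1]
    eps = rng.gauss(0.0, 1.0)
    return math.sqrt(a_bar) * x0 + math.sqrt(1.0 - a_bar) * eps


def oracle_noise(x_t, t, alpha_bars, mu, sigma):
    """Stand-in for a trained network: E[eps | x_t] when x_0 ~ N(mu, sigma^2)."""
    a_bar = alpha_bars[t - 1]
    var_t = a_bar * sigma ** 2 + (1.0 - a_bar)
    return math.sqrt(1.0 - a_bar) * (x_t - math.sqrt(a_bar) * mu) / var_t


def reverse_sample(betas, alpha_bars, predict_noise, rng):
    """Reverse process: start from x_T ~ N(0, 1) and denoise down to x_0."""
    x = rng.gauss(0.0, 1.0)
    for t in range(len(betas), 0, -1):
        beta, a_bar = betas[t - 1], alpha_bars[t - 1]
        eps_hat = predict_noise(x, t)
        # Mean of the reverse step, written in terms of the predicted noise.
        mean = (x - beta / math.sqrt(1.0 - a_bar) * eps_hat) / math.sqrt(1.0 - beta)
        # No fresh noise is added on the final step (t = 1).
        noise = rng.gauss(0.0, 1.0) if t > 1 else 0.0
        x = mean + math.sqrt(beta) * noise
    return x


# Usage: synthesize samples and check that they cluster around the data mean.
mu, sigma = 2.0, 0.5
rng = random.Random(0)
betas = linear_beta_schedule(200)
alpha_bars = cumulative_alphas(betas)
predict = lambda x, t: oracle_noise(x, t, alpha_bars, mu, sigma)
samples = [reverse_sample(betas, alpha_bars, predict, rng) for _ in range(500)]
sample_mean = sum(samples) / len(samples)
```

In a real DM, `oracle_noise` would be replaced by a neural network trained to predict the noise added by the forward process; the sampling loop itself would keep the same shape.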

2.1. DDPMs

2.2. NCSNs

2.3. SDEs

3. General Applications of DMs

- Image Synthesis: DMs are used to create detailed, high-resolution images from a distribution of noise. They can generate new images or enhance existing ones by improving clarity and resolution, making them particularly useful in fields such as digital art and graphic design [13].

- Text Generation: DMs are capable of producing coherent and contextually relevant text sequences. This makes them suitable for applications such as creating literary content, generating realistic dialogues in virtual assistants, and automating content generation for news articles or creative writing [14].

- Audio Synthesis: DMs can generate clear and realistic audio from noisy signals. This is valuable in music production, where it’s necessary to create new sounds or improve the clarity of recorded audio, as well as in speech synthesis technologies used in various assistive devices [7].

- Healthcare Applications: Although not limited to medical imaging, DMs assist in synthesizing medical data, including Magnetic Resonance Imaging (MRI), Computed Tomography (CT) scans, and other imaging modalities. This ability is vital for training medical professionals, improving diagnostic tools, and developing more precise therapeutic strategies without compromising patient privacy [15].

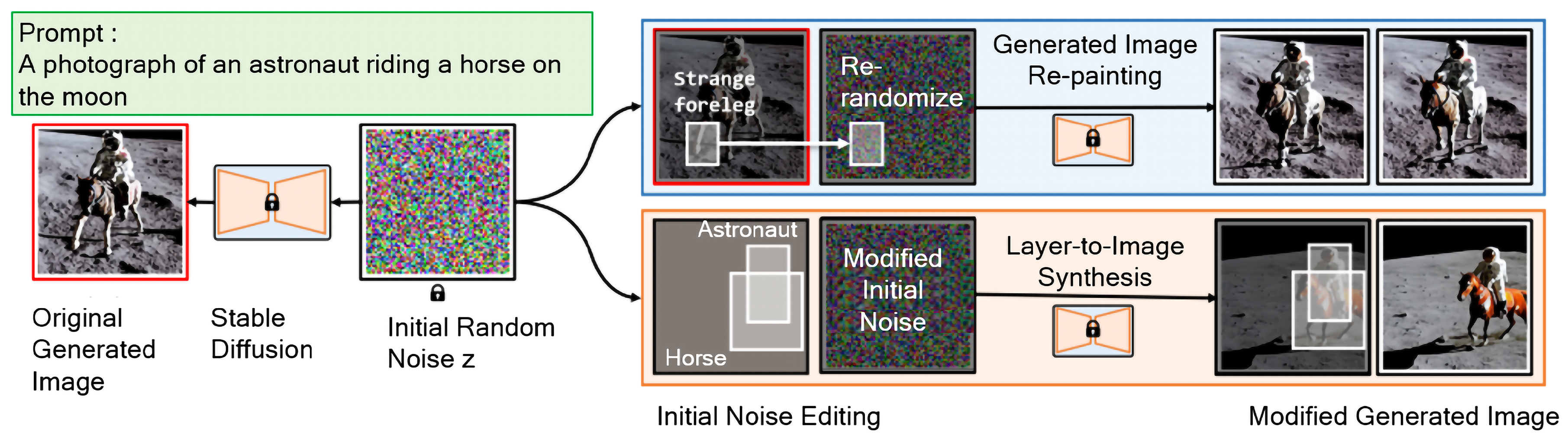

4. Innovations and Experimental Techniques in DMs

5. Media Quality, Authenticity, and Synthesis

6. Image Transformation and Enhancement

6.1. Image-to-Image Transformation

6.2. Image Quality Enhancement and Processing

7. Healthcare and Medical Applications

8. Applications of Diffusion Models in Other Fields

9. Discussion

9.1. Ensuring the Authenticity of Synthesized Media

9.2. Overcoming Challenges in Synthesizing High-Quality Images and Audio

9.3. Optimizing DMs to Reduce Artifacts and Improve Image Quality

9.4. Addressing Computational Efficiency and Scalability Issues in DMs

9.5. Improving DMs for Accurate and Reliable Medical Imaging and Diagnostics

9.6. Expanding the Applicability and Effectiveness of DMs in Diverse Fields

9.7. Mitigating Ethical Considerations and Potential Risks Associated with the Use of DMs

10. Conclusion

Conflicts of Interest

Abbreviations

| ACDM | Autoregressive Cascade Multiscale Diffusion |

| ACDMSR | Accelerated Conditional Diffusion Model for Image Super-Resolution |

| AIoT | Artificial Intelligence of Things |

| BerDiff | Bernoulli Diffusion Model |

| BLIP | Bootstrapped Language-Image Pretraining |

| BuilDiff | Building Diffusion |

| CDDM | Conditional Denoising Diffusion Model |

| CDMs | Classifier-guided Diffusion Models |

| CIDEr | Consensus-based Image Description Evaluation |

| CLE | Controllable Light Enhancement Diffusion |

| CLIP | Contrastive Language-Image Pre-training |

| CLIPSonic | Controlled Language-Image Pretraining Sonic |

| CMD | Conditional Diffusion Models |

| DDIM | Denoising Diffusion Implicit Models |

| DDPMs | Denoising Diffusion Probabilistic Models |

| DeScoD-ECG | Denoising Score-based Diffusion for Electrocardiogram |

| DiffWave | Diffusion Waveform |

| DiffDreamer | Diffusion Dreamer |

| DiffLL | Diffusion Model for Low-Light |

| DMs | Diffusion Models |

| DMSEtext | Diffusion Model for Speech Enhancement text |

| DisC-Diff | Discriminator Consistency Diffusion |

| DSBID | Diffusion-based Stochastic Blind Image Deblurring |

| DSC | Dice Similarity Coefficient |

| DICDNet | Deep Interpretable Convolutional Dictionary Networks |

| EquiDiff | Equivariant Diffusion |

| FID | Fréchet Inception Distance |

| GED | Generalized Energy Distance |

| GNNs | Graph Neural Networks |

| HiFi-Diff | Hierarchical Feature Conditional Diffusion |

| HQS | Hybrid Quality Score |

| ID3PM | Identity Denoising Diffusion Probabilistic Model |

| IS | Inception Score |

| KID | Kernel Inception Distance |

| LEs | Learnable Unauthorized Examples |

| LDMs | Latent Diffusion Models |

| LPIPS | Learned Perceptual Image Patch Similarity |

| MAAT | Metric Anomaly Anticipation |

| MAD | Mean Absolute Deviation |

| MAE | Mean Absolute Error |

| MatFusion | Material Fusion |

| MOS | Mean Opinion Score |

| MPJPE | Mean Per-Joint Position Error |

| NILM | Non-Intrusive Load Monitoring |

| NASDM | Nuclei-Aware Semantic Diffusion Model |

| NIQE | Naturalness Image Quality Evaluator |

| OMOMO | Object Motion Guided Human Motion Synthesis |

| PatchDDM | Patch-based Diffusion Denoising Model |

| PRD | Percent Root Mean Square Difference |

| PSNR | Peak Signal-to-Noise Ratio |

| RGB-D-Fusion | Red-Green-Blue Depth Fusion |

| RNNs | Recurrent Neural Networks |

| RMSE | Root Mean Square Error |

| SAG | Self-Attention Guidance |

| SBDMs | Score-Based Diffusion Models |

| SDEs | Stochastic Differential Equations |

| SDG | Semantic Diffusion Guidance |

| SegDiff | Segmentation Diffusion |

| SketchFFusion | Sketch-Driven Fusion |

| SMOS | Style Similarity MOS |

| SSIM | Structural Similarity Index Measure |

| TFDPM | Temporal and Feature Pattern-based Diffusion Probabilistic Model |

| VDMs | Variational Diffusion Models |

| VTF-GAN | Visible-to-Thermal Facial GAN |

References

- Sohl-Dickstein, J.; Weiss, E.A.; Maheswaranathan, N.; Ganguli, S. Deep Unsupervised Learning using Nonequilibrium Thermodynamics. arXiv 2015, arXiv:1503.03585.

- Ho, J.; Jain, A.; Abbeel, P. Denoising Diffusion Probabilistic Models. Advances in Neural Information Processing Systems, 2020.

- Saharia, C.; Ho, J.; Chan, W.; Fleet, D.J.; Norouzi, M.; Salimans, T. Image Super-Resolution via Iterative Refinement. arXiv 2021, arXiv:2104.07636.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2022, pp. 10684–10695.

- Ramesh, A.; Pavlov, M.; Goh, G.; Gray, S.; Voss, C.; Radford, A.; Chen, M.; Sutskever, I. Zero-Shot Text-to-Image Generation. arXiv 2021, arXiv:2102.12092.

- Austin, J.; Johnson, D.D.; Ho, J.; Tarlow, D.; van den Berg, R. Structured Denoising Diffusion Models in Discrete State-Spaces. Advances in Neural Information Processing Systems, 2021.

- Kong, Z.; Ping, W.; Huang, J.; Zhao, K.; Catanzaro, B. Diffwave: A versatile diffusion model for audio synthesis. arXiv 2020, arXiv:2009.09761.

- Hoogeboom, E.; Cohen, T.; Tomczak, J.M. Equivariant Diffusion Models for Molecule Generation. International Conference on Machine Learning. PMLR, 2022, pp. 8816–8831.

- Song, Y.; Sohl-Dickstein, J.; Kingma, D.P.; Kumar, A.; Ermon, S.; Poole, B. Score-based generative modeling through stochastic differential equations. arXiv 2020, arXiv:2011.13456.

- Song, Y.; Ermon, S. Generative modeling by estimating gradients of the data distribution. Advances in neural information processing systems 2019, 32.

- Anderson, B.D. Reverse-time diffusion equation models. Stochastic Processes and their Applications 1982, 12, 313–326.

- Vincent, P. A connection between score matching and denoising autoencoders. Neural computation 2011, 23, 1661–1674.

- Wang, W.; Bao, J.; Zhou, W.; Chen, D.; Chen, D.; Yuan, L.; Li, H. Semantic image synthesis via diffusion models. arXiv 2022, arXiv:2207.00050.

- Gong, S.; Li, M.; Feng, J.; Wu, Z.; Kong, L. Diffuseq: Sequence to sequence text generation with diffusion models. arXiv 2022, arXiv:2210.08933.

- Kazerouni, A.; Aghdam, E.K.; Heidari, M.; Azad, R.; Fayyaz, M.; Hacihaliloglu, I.; Merhof, D. Diffusion models in medical imaging: A comprehensive survey. Medical Image Analysis 2023, p. 102846.

- Krizhevsky, A.; Hinton, G.; et al. Learning multiple layers of features from tiny images. Technical report, University of Toronto, Toronto, ON, Canada, 2009.

- Yu, F.; Seff, A.; Zhang, Y.; Song, S.; Funkhouser, T.; Xiao, J. LSUN: Construction of a Large-scale Image Dataset using Deep Learning with Humans in the Loop. arXiv 2015, arXiv:1506.03365.

- Liu, Z.; Luo, P.; Wang, X.; Tang, X. Deep Learning Face Attributes in the Wild. Proceedings of the IEEE International Conference on Computer Vision (ICCV) 2015.

- Song, Y.; Ermon, S. Improved techniques for training score-based generative models. Advances in neural information processing systems 2020, 33, 12438–12448.

- Dhariwal, P.; Nichol, A. Diffusion Models Beat GANs on Image Synthesis. Advances in Neural Information Processing Systems 2021.

- Kingma, D.P.; Salimans, T.; Poole, B.; Ho, J. Variational Diffusion Models. arXiv 2021, arXiv:2107.00630.

- Nichol, A.; Dhariwal, P. Improved Denoising Diffusion Probabilistic Models. arXiv 2021, arXiv:2102.09672.

- Kong, Z.; Ping, W.; Huang, J.; Zhao, K.; Catanzaro, B. DiffWave: A Versatile Diffusion Model for Audio Synthesis. arXiv 2021, arXiv:2009.09761.

- Amit, R.; Balaji, Y. SegDiff: Image Segmentation with Diffusion Models. arXiv 2021, arXiv:2106.02477.

- Nichol, A.; Dhariwal, P.; Ramesh, A.; Shyam, P.; Mishkin, P.; McGrew, B.; Sutskever, I.; Chen, M. GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models. arXiv 2021, arXiv:2112.10741.

- Saharia et al. Image Transformers with Autoregressive Models for High-Fidelity Image Synthesis. Journal of Advanced Image Processing 2022.

- Ho, J.; Saharia, C.; Chan, W.; Fleet, D.J.; Norouzi, M.; Salimans, T. Cascaded Diffusion Models for High-Fidelity Image Generation. arXiv 2022, arXiv:2106.15282.

- Ho, J.; Salimans, T.; Gritsenko, A.; Chan, W.; Norouzi, M.; Fleet, D.J. Video Diffusion Models. arXiv 2022, arXiv:2204.03458.

- Li et al. Optimizing Diffusion Models for Image Synthesis. Journal of Computational Imaging 2023.

- Mao, J.; Wang, X.; Aizawa, K. Guided image synthesis via initial image editing in diffusion model. Proceedings of the 31st ACM International Conference on Multimedia, 2023, pp. 5321–5329.

- Whang, J.; Delbracio, M.; Talebi, H.; Saharia, C.; Dimakis, A.G.; Milanfar, P. Deblurring via stochastic refinement. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 16293–16303.

- Chung, H.; Sim, B.; Ye, J.C. Come-closer-diffuse-faster: Accelerating conditional diffusion models for inverse problems through stochastic contraction. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 12413–12422.

- Wang, L.; Yang, S.; Liu, S.; Chen, Y.c. Not All Steps are Created Equal: Selective Diffusion Distillation for Image Manipulation. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 7472–7481.

- Li, J.; Wu, J.; Liu, C.K. Object motion guided human motion synthesis. ACM Transactions on Graphics (TOG) 2023, 42, 1–11.

- Ni, Z.; Wei, L.; Li, J.; Tang, S.; Zhuang, Y.; Tian, Q. Degeneration-tuning: Using scrambled grid shield unwanted concepts from stable diffusion. Proceedings of the 31st ACM International Conference on Multimedia, 2023, pp. 8900–8909.

- Yan, T.; Zhou, T.; Zhan, Y.; Xia, Y. TFDPM: Attack detection for cyber–physical systems with diffusion probabilistic models. Knowledge-Based Systems 2022, 255, 109743.

- Lee, C.; Yang, T.; Chen, Z.; Su, Y.; Lyu, M.R. Maat: Performance Metric Anomaly Anticipation for Cloud Services with Conditional Diffusion. 2023 38th IEEE/ACM International Conference on Automated Software Engineering (ASE). IEEE, 2023, pp. 116–128.

- Chen, K.; Chen, X.; Yu, Z.; Zhu, M.; Yang, H. Equidiff: A conditional equivariant diffusion model for trajectory prediction. 2023 IEEE 26th International Conference on Intelligent Transportation Systems (ITSC). IEEE, 2023, pp. 746–751.

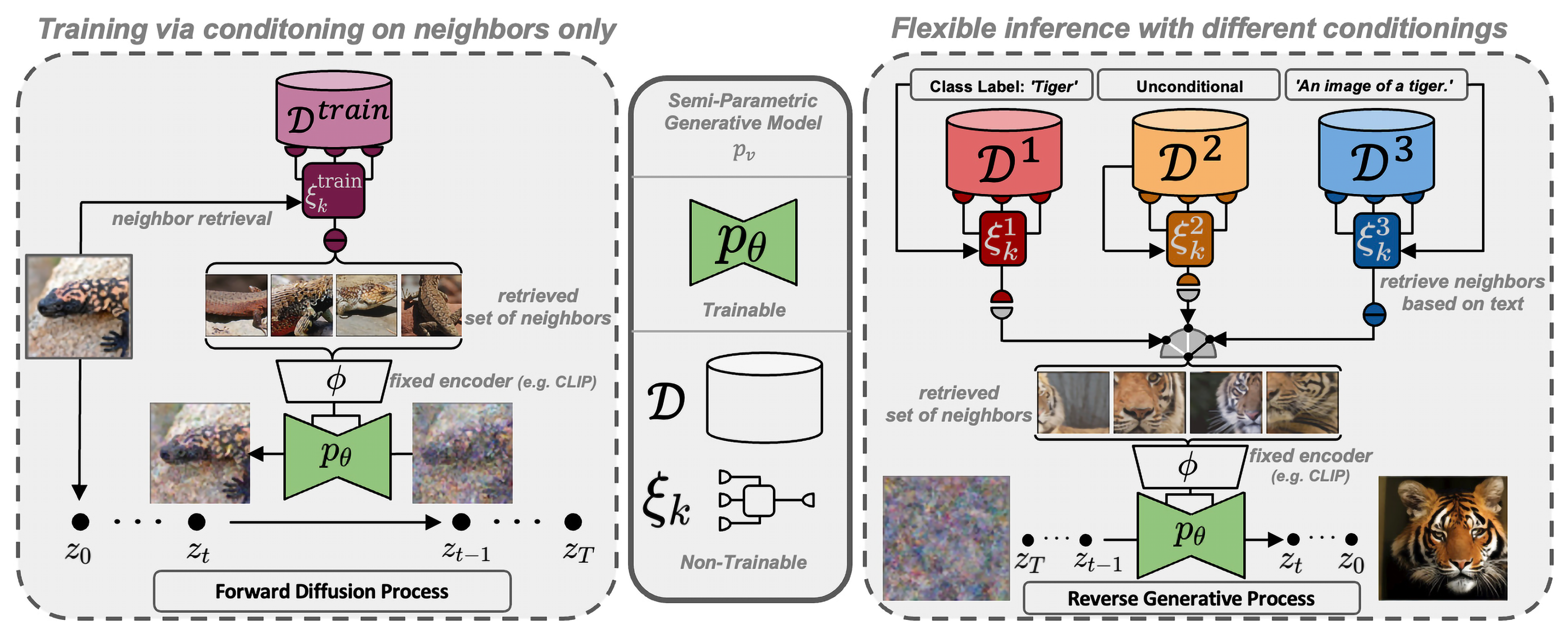

- Blattmann, A.; Rombach, R.; Oktay, K.; Müller, J.; Ommer, B. Retrieval-augmented diffusion models. Advances in Neural Information Processing Systems 2022, 35, 15309–15324.

- Hong, S.; Lee, G.; Jang, W.; Kim, S. Improving sample quality of diffusion models using self-attention guidance. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 7462–7471.

- Ji, L.; Rao, Z.; Pan, S.J.; Lei, C.; Chen, Q. A Diffusion Model with State Estimation for Degradation-Blind Inverse Imaging. Proceedings of the AAAI Conference on Artificial Intelligence, 2024, Vol. 38, pp. 2471–2479.

- Tian, Y.; Liu, W.; Lee, T. Diffusion-Based Mel-Spectrogram Enhancement for Personalized Speech Synthesis with Found Data. 2023 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU). IEEE, 2023, pp. 1–7.

- Jiang, H.; Luo, A.; Fan, H.; Han, S.; Liu, S. Low-light image enhancement with wavelet-based diffusion models. ACM Transactions on Graphics (TOG) 2023, 42, 1–14.

- Dong, H.W.; Liu, X.; Pons, J.; Bhattacharya, G.; Pascual, S.; Serrà, J.; Berg-Kirkpatrick, T.; McAuley, J. CLIPSonic: Text-to-audio synthesis with unlabeled videos and pretrained language-vision models. 2023 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA). IEEE, 2023, pp. 1–5.

- Choi, J.; Kim, S.; Jeong, Y.; Gwon, Y.; Yoon, S. ILVR: Conditioning method for denoising diffusion probabilistic models. arXiv 2021, arXiv:2108.02938.

- Liu et al. More Control with Semantic Diffusion Guidance for Image Synthesis. Journal of Image and Audio Synthesis 2023.

- Cai, S.; Chan, E.R.; Peng, S.; Shahbazi, M.; Obukhov, A.; Van Gool, L.; Wetzstein, G. Diffdreamer: Towards consistent unsupervised single-view scene extrapolation with conditional diffusion models. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 2139–2150.

- Carrillo et al. Interactive Line Art Colorization with Conditional Diffusion Models. Journal of Specialized Techniques and Innovations in Diffusion Models 2023.

- Mao, W.; Han, B.; Wang, Z. SketchFFusion: Sketch-guided image editing with diffusion model. 2023 IEEE International Conference on Image Processing (ICIP). IEEE, 2023, pp. 790–794.

- Luo, J.; Li, Y.; Pan, Y.; Yao, T.; Feng, J.; Chao, H.; Mei, T. Semantic-conditional diffusion networks for image captioning. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 23359–23368.

- Hsu, T.; Sadigh, B.; Bulatov, V.; Zhou, F. Score dynamics: scaling molecular dynamics with picosecond timesteps via conditional diffusion model. arXiv 2023, arXiv:2310.01678.

- Yan, Q.; Hu, T.; Sun, Y.; Tang, H.; Zhu, Y.; Dong, W.; Van Gool, L.; Zhang, Y. Towards high-quality HDR deghosting with conditional diffusion models. IEEE Transactions on Circuits and Systems for Video Technology 2023.

- Peng, W.; Adeli, E.; Bosschieter, T.; Park, S.H.; Zhao, Q.; Pohl, K.M. Generating realistic brain MRIs via a conditional diffusion probabilistic model. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2023, pp. 14–24.

- Yu, J.J.; Forghani, F.; Derpanis, K.G.; Brubaker, M.A. Long-Term Photometric Consistent Novel View Synthesis with Diffusion Models. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 7094–7104.

- Yin, Y.; Xu, D.; Tan, C.; Liu, P.; Zhao, Y.; Wei, Y. Cle diffusion: Controllable light enhancement diffusion model. Proceedings of the 31st ACM International Conference on Multimedia, 2023, pp. 8145–8156.

- Papantoniou, F.P.; Lattas, A.; Moschoglou, S.; Zafeiriou, S. Relightify: Relightable 3d faces from a single image via diffusion models. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 8806–8817.

- Kirch, S.; Olyunina, V.; Ondřej, J.; Pagés, R.; Martin, S.; Pérez-Molina, C. RGB-D-Fusion: Image Conditioned Depth Diffusion of Humanoid Subjects. IEEE Access 2023.

- Mao, Y.; Jiang, L.; Chen, X.; Li, C. Disc-diff: Disentangled conditional diffusion model for multi-contrast mri super-resolution. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2023, pp. 387–397.

- Yang, Z.; Liu, B.; Xiong, Y.; Yi, L.; Wu, G.; Tang, X.; Liu, Z.; Zhou, J.; Zhang, X. DocDiff: Document enhancement via residual diffusion models. Proceedings of the 31st ACM International Conference on Multimedia, 2023, pp. 2795–2806.

- Ordun, C.; Raff, E.; Purushotham, S. When visible-to-thermal facial GAN beats conditional diffusion. 2023 IEEE International Conference on Image Processing (ICIP). IEEE, 2023, pp. 181–185.

- Kansy, M.; Raël, A.; Mignone, G.; Naruniec, J.; Schroers, C.; Gross, M.; Weber, R.M. Controllable Inversion of Black-Box Face Recognition Models via Diffusion. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 3167–3177.

- Yu, J.; Wang, Y.; Zhao, C.; Ghanem, B.; Zhang, J. Freedom: Training-free energy-guided conditional diffusion model. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 23174–23184.

- Bieder, F.; Wolleb, J.; Durrer, A.; Sandkühler, R.; Cattin, P.C. Diffusion models for memory-efficient processing of 3d medical images. arXiv 2023, arXiv:2303.15288.

- Kazerouni, A.; Aghdam, E.K.; Heidari, M.; Azad, R.; Fayyaz, M.; Hacihaliloglu, I.; Merhof, D. Diffusion models for medical image analysis: a comprehensive survey. arXiv 2022, 2211.

- Chen, T.; Wang, C.; Shan, H. Berdiff: Conditional bernoulli diffusion model for medical image segmentation. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2023, pp. 491–501.

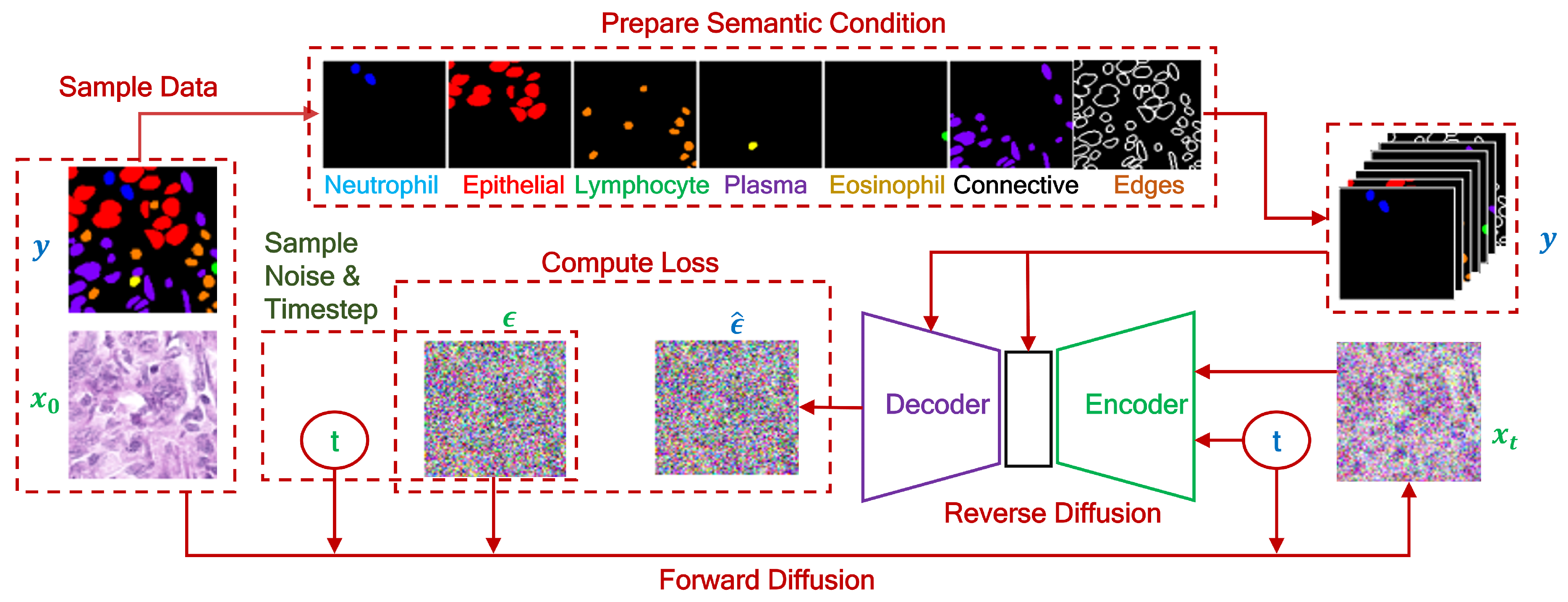

- Shrivastava, A.; Fletcher, P.T. NASDM: nuclei-aware semantic histopathology image generation using diffusion models. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2023, pp. 786–796.

- Wang, X.; Shen, Z.; Song, Z.; Wang, S.; Liu, M.; Zhang, L.; Xuan, K.; Wang, Q. Arbitrary Reduction of MRI Inter-slice Spacing Using Hierarchical Feature Conditional Diffusion. International Workshop on Machine Learning in Medical Imaging. Springer, 2023, pp. 23–32.

- Li, H.; Ditzler, G.; Roveda, J.; Li, A. Descod-ecg: Deep score-based diffusion model for ecg baseline wander and noise removal. IEEE Journal of Biomedical and Health Informatics 2023.

- Finzi, M.A.; Boral, A.; Wilson, A.G.; Sha, F.; Zepeda-Núñez, L. User-defined event sampling and uncertainty quantification in diffusion models for physical dynamical systems. International Conference on Machine Learning. PMLR, 2023, pp. 10136–10152.

- Yang, X.; Li, W.; Zhang, M. Directional diffusion models for chaotic dynamical systems. Chaos: An Interdisciplinary Journal of Nonlinear Science 2024.

- Li, J.; Zhang, R.; Wang, H. Comparison of manifold learning techniques for conformational modeling. Journal of Computational Biology 2023.

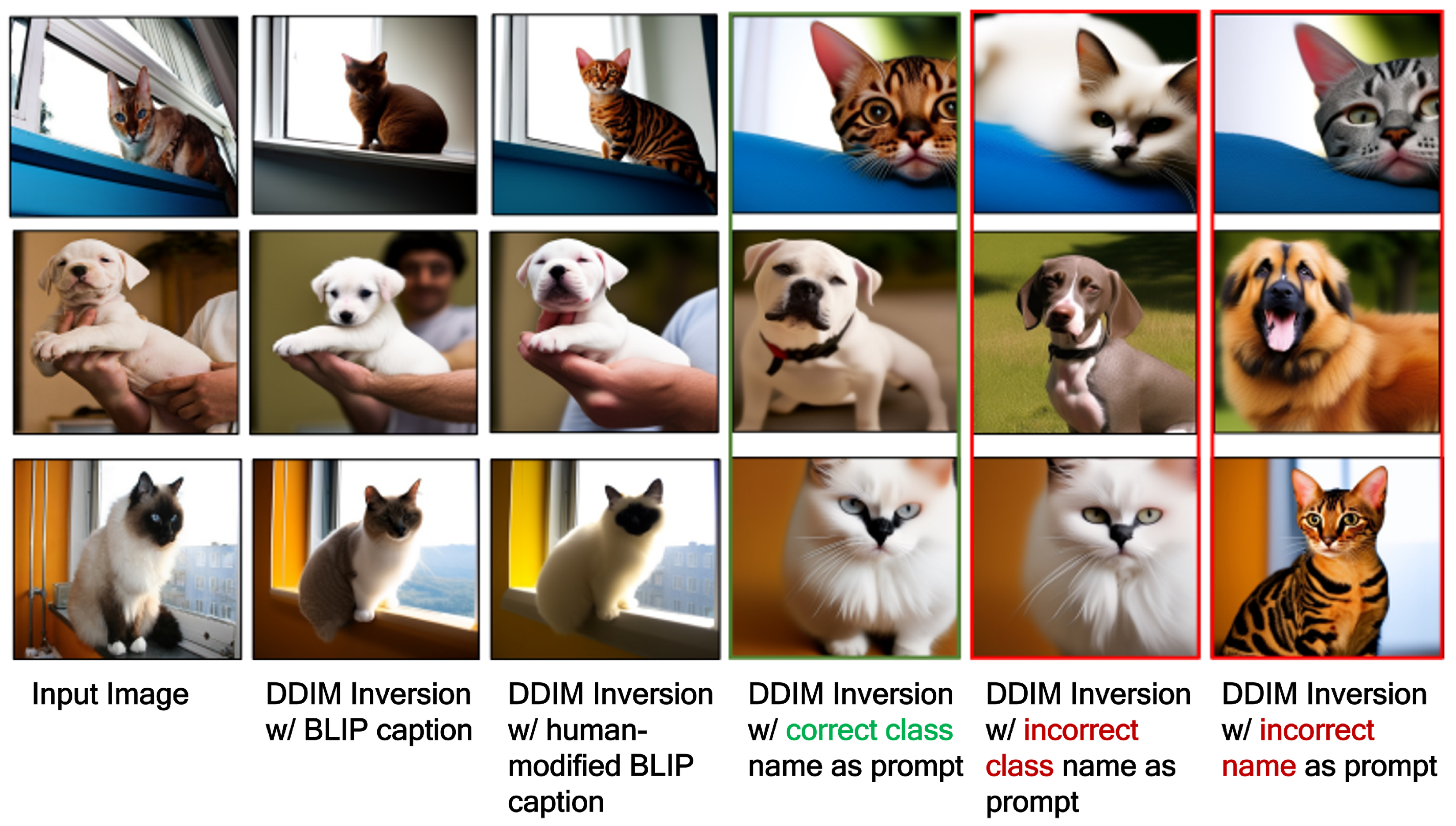

- Li, A.C.; Prabhudesai, M.; Duggal, S.; Brown, E.; Pathak, D. Your diffusion model is secretly a zero-shot classifier. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 2206–2217.

- Zhuang, Y.; Hou, B.; Mathai, T.S.; Mukherjee, P.; Kim, B.; Summers, R.M. Semantic Image Synthesis for Abdominal CT. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2023, pp. 214–224.

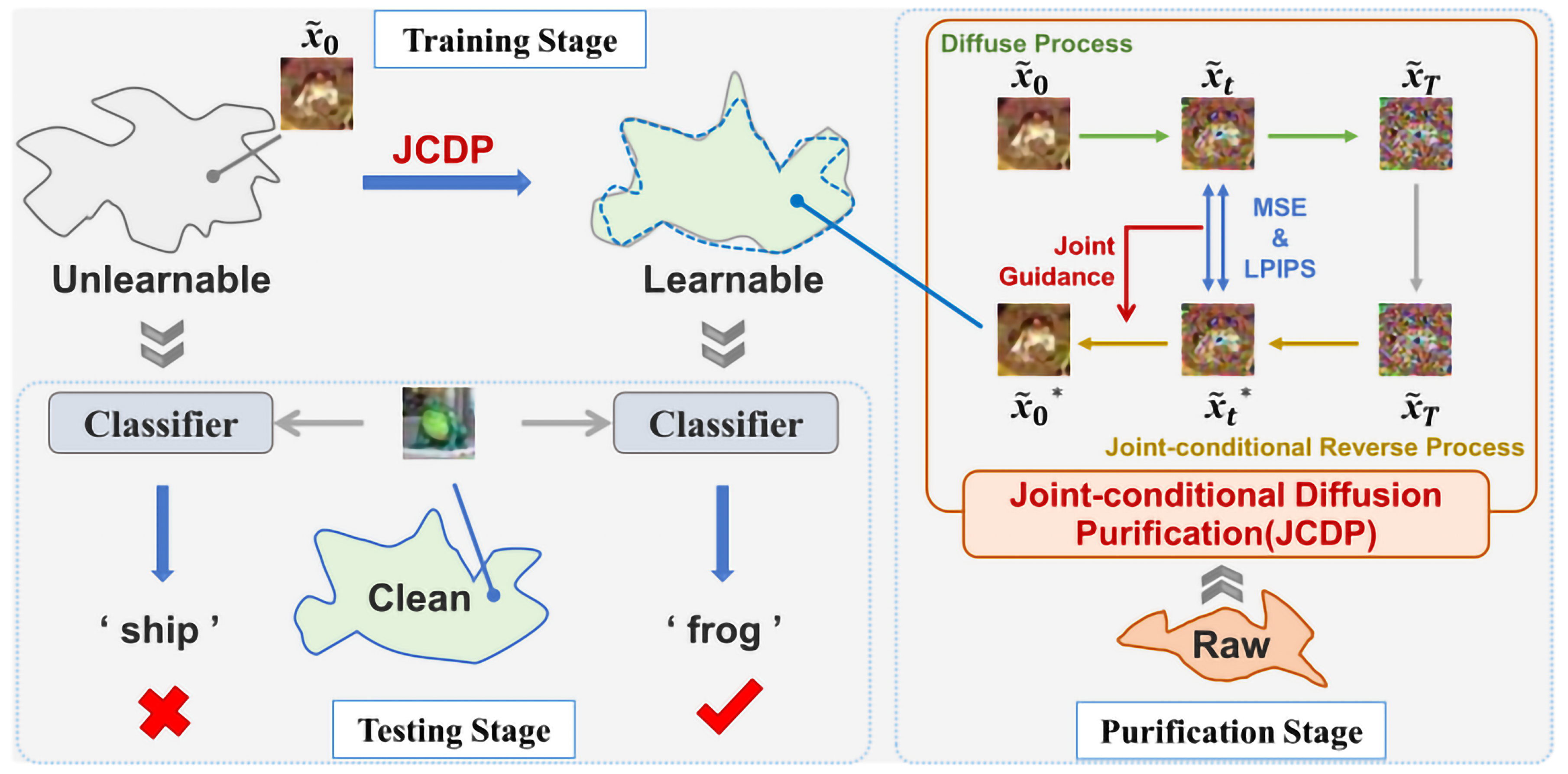

- Jiang, W.; Diao, Y.; Wang, H.; Sun, J.; Wang, M.; Hong, R. Unlearnable examples give a false sense of security: Piercing through unexploitable data with learnable examples. Proceedings of the 31st ACM International Conference on Multimedia, 2023, pp. 8910–8921.

- Wang, X.; López-Tapia, S.; Katsaggelos, A.K. Atmospheric turbulence correction via variational deep diffusion. 2023 IEEE 6th International Conference on Multimedia Information Processing and Retrieval (MIPR). IEEE, 2023, pp. 1–4.

- Sartor, S.; Peers, P. Matfusion: a generative diffusion model for svbrdf capture. SIGGRAPH Asia 2023 Conference Papers, 2023, pp. 1–10.

- Wei, Y.; Vosselman, G.; Yang, M.Y. BuilDiff: 3D Building Shape Generation using Single-Image Conditional Point Cloud Diffusion Models. Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023, pp. 2910–2919.

- Niu, A.; Pham, T.X.; Zhang, K.; Sun, J.; Zhu, Y.; Yan, Q.; Kweon, I.S.; Zhang, Y. ACDMSR: Accelerated conditional diffusion models for single image super-resolution. IEEE Transactions on Broadcasting 2024.

- Sun, R.; Dong, K.; Zhao, J. DiffNILM: a novel framework for non-intrusive load monitoring based on the conditional diffusion model. Sensors 2023, 23, 3540.

- Yang, D.; Liu, S.; Yu, J.; Wang, H.; Weng, C.; Zou, Y. Norespeech: Knowledge distillation based conditional diffusion model for noise-robust expressive tts. arXiv 2022, arXiv:2211.02448.

- Yu, X.; Li, G.; Lou, W.; Liu, S.; Wan, X.; Chen, Y.; Li, H. Diffusion-based data augmentation for nuclei image segmentation. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2023, pp. 592–602.

- Ma, R.; Duan, J.; Kong, F.; Shi, X.; Xu, K. Exposing the fake: Effective diffusion-generated images detection. arXiv 2023, arXiv:2307.06272.

- Zhao, Z.; Duan, J.; Hu, X.; Xu, K.; Wang, C.; Zhang, R.; Du, Z.; Guo, Q.; Chen, Y. Unlearnable examples for diffusion models: Protect data from unauthorized exploitation. arXiv 2023, arXiv:2306.01902.

- Mei, K.; Patel, V. Vidm: Video implicit diffusion models. Proceedings of the AAAI Conference on Artificial Intelligence, 2023, Vol. 37, pp. 9117–9125.

- Mao, J.; Liu, J.; Zhang, W. SketchFusion: A Model for Sketch-Guided Image Editing Using a Conditional Diffusion Model. Journal of Graphics and Image Processing 2023, 12, 123–134.

- Wang, H.; Li, Y.; He, N.; Ma, K.; Meng, D.; Zheng, Y. DICDNet: deep interpretable convolutional dictionary network for metal artifact reduction in CT images. IEEE Transactions on Medical Imaging 2021, 41, 869–880.

- Maass, N.; Maier, A.; Wuerfl, T. Reducing image artifacts, 2018. United States Patent Application 20180192985.

- Filoscia, I.; Alderighi, T.; Giorgi, D.; Malomo, L.; Callieri, M.; Cignoni, P. Optimizing object decomposition to reduce visual artifacts in 3D printing. Computer Graphics Forum. Wiley Online Library, 2020, Vol. 39, pp. 423–434.

- Han, S.; Mao, H.; Dally, W.J. Deep compression: Compressing deep neural networks with pruning, trained quantization and huffman coding. arXiv 2015, arXiv:1510.00149.

- Jacob, B.; Kligys, S.; Chen, B.; Zhu, M.; Tang, M.; Howard, A.; Adam, H.; Kalenichenko, D. Quantization and training of neural networks for efficient integer-arithmetic-only inference. arXiv 2018, arXiv:1712.05877.

- Shin, H.; Park, H.; Cho, K.Y.; Kim, S.K. Medical image synthesis for data augmentation and anonymization using generative adversarial networks. Proceedings of the Medical Imaging Technology Conference 2018.

- Yan, M.; Liu, Y.; Park, Y. RADIA: Protecting Patient Privacy in Radiology Reports. IEEE Journal of Biomedical and Health Informatics 2021.

- Yu et al. Transfer Learning in Medical Imaging. Journal of Biomedical Engineering 2022.

- Chen et al. Incorporating Domain-Specific Knowledge in Diffusion Models for Medical Imaging. Journal of Medical Imaging and Diagnostics 2023.

- Goodell, J.W.; Goutte, C. Toward AI and data analytics for financial inclusion: A review. Journal of Financial Stability 2021.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: synthetic minority over-sampling technique. Journal of artificial intelligence research 2002, 16, 321–357.

- Wang, Y.; Li, X.; Yang, L.; Ma, J.; Li, H. ADDITION: Detecting Adversarial Examples With Image-Dependent Noise Reduction. IEEE Transactions on Dependable and Secure Computing 2023.

- Buolamwini, J.; Gebru, T. Gender shades: Intersectional accuracy disparities in commercial gender classification. Conference on fairness, accountability and transparency. PMLR, 2018, pp. 77–91.

- Ribeiro, M.T.; Singh, S.; Guestrin, C. "Why Should I Trust You?": Explaining the Predictions of Any Classifier. Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining 2016.

- Dwork, C.; Roth, A. The algorithmic foundations of differential privacy. Foundations and Trends® in Theoretical Computer Science 2014, 9, 211–407.

- Floridi, L.; et al. AI4People—An Ethical Framework for a Good AI Society: Opportunities, Risks, Principles, and Recommendations. Minds and Machines 2018.

| Year | Proposed Algorithm | Used Datasets | Applications |

|---|---|---|---|

| 2020 | DDPMs [2] | CIFAR-10 [16], LSUN [17], CelebA [18] | Image generation |

| 2020 | Score-Based DMs [19] | CIFAR-10, CelebA, LSUN | Image generation |

| 2020 | SDEs [9] | CIFAR-10, CelebA, LSUN, FFHQ | Image generation |

| 2021 | Classifier-guided DMs (CDMs) [20] | ImageNet, LSUN, CIFAR-10 | Image generation |

| 2021 | Variational Diffusion Models (VDMs) [21] | CIFAR-10, CelebA, LSUN | Image generation |

| 2021 | Improved DDPMs [22] | CIFAR-10, CelebA, LSUN | Image generation |

| 2021 | Diffusion Waveform (DiffWave) [23] | LJSpeech, VCTK | Audio generation |

| 2021 | Segmentation Diffusion (SegDiff) [24] | Cityscapes, Pascal VOC | Image segmentation |

| 2021 | Guided Language to Image Diffusion for Generation and Editing (GLIDE) [25] | MS-COCO, ImageNet | Text-guided image generation and editing |

| 2022 | Latent Diffusion Models (LDMs) [4] | LAION-400M, CelebA-HQ | Image generation, Text-to-image |

| 2022 | Image Transformers [26] | ImageNet, COCO | Image generation |

| 2022 | Multiscale Diffusion Models [27] | ImageNet, CIFAR-10, LSUN | Image generation |

| 2022 | Video-DDPM [28] | Kinetics-600, UCF-101 | Video generation |

| 2023 | Adaptive Diffusion Models [29] | CIFAR-10, CelebA, FFHQ | Image generation |

| Ref. | Algorithms | Applications | Dataset | Evaluations | Limitations |

|---|---|---|---|---|---|

| [35] | Degeneration-Tuning (DT) for Content Shielding in Stable DMs | Content shielding in text-to-image Diffusion Models using DT to prevent generation of unwanted concepts | COCO 30K | FID post-DT: 13.04, IS post-DT: 38.25 | DT may limit the model’s flexibility in diverse contexts. |

| [36] | TFDPM | Detecting cyber-physical system attacks using TFDPM with Graph Attention Networks for channel data correlation | PUMP, SWAT, WADI | Pr: 0.96, Re: 0.91, F1: 0.91 | Struggles with discrete signal modeling, needs SDE frameworks for better generative capabilities. |

| [37] | Maat: Anomaly Anticipation for Cloud Services | Anomaly anticipation using a two-stage Diffusion Model for cloud services, integrating metric forecasting and anomaly detection | AIOps18, Hades, Yahoo!S5 | Pr: 0.97, Re: 0.91, F1: 0.91 | Limited generalizability and adaptability post-training. |

| [31] | Diffusion-based Stochastic Blind Image Deblurring | Blind image deblurring using Diffusion Models for multiple reconstructions | GoPro | FID: 4.04, KID: 0.98, LPIPS: 0.06, PSNR: 31.66, SSIM: 0.95 | High computational demands, needs optimized sampling or network architecture. |

| [32] | Come-Closer-Diffuse-Faster | Accelerating CMDs for applications like super-resolution and MRI reconstruction | FFHQ, AFHQ, fastMRI | FID varies; PSNR: 33.41 (best MRI case) | Optimal starting values (t0) vary, needs automation for practical deployment. |

| [33] | Selective Diffusion Distillation | Image manipulation balancing fidelity and editability without excessive noise trade-offs | N/A | FID: 6.07, CLIP Similarity: 0.23 | Reliance on correct timestep selection for semantic guidance may limit flexibility. |

| [34] | Object Motion Guided Human Motion Synthesis (OMOMO) | Full-body human motion synthesis guided by object motion using a conditional Diffusion Model | Custom dataset | MPJPE: 12.42, Troot: 18.44, Cprec: 0.82, Crec: 0.70, F1: 0.72 | Limited representation of dexterous hand movements and intermittent contact scenarios. |

| [2] | DDPMs | Image generation using DDPMs | CIFAR-10, LSUN, CelebA | FID: 3.17, IS: 9.46 | High computational cost and slow sampling speed. |

| [19] | Improved Techniques for Training Score-Based Generative Models | Improved image generation using score-based models | CIFAR-10, CelebA, LSUN | FID: 2.87, IS: 9.68 | Training complexity and large computational resources required. |

| [9] | Score-Based Generative Modeling through SDEs | Image generation using SDEs for better quality | CIFAR-10, CelebA, LSUN, FFHQ | FID: 2.92, IS: 9.62 | SDE-based models can be computationally expensive. |

| [20] | Diffusion Models Beat Generative Adversarial Networks (GANs) on Image Synthesis | Image synthesis outperforming GANs using Diffusion Models | ImageNet, LSUN, CIFAR-10 | FID: 2.97, IS: 9.57 | Large model size and slow training times. |

| [21] | VDMs | Image generation using variational Diffusion Models | CIFAR-10, CelebA, LSUN | FID: 3.12, IS: 9.53 | Complex model design and high computational cost. |

| [22] | Improved Denoising Diffusion Probabilistic Models | Enhanced DDPMs for better image quality | CIFAR-10, CelebA, LSUN | FID: 3.05, IS: 9.50 | Requires extensive hyperparameter tuning. |

| [4] | High-Resolution Image Synthesis with Latent Diffusion Models (LDMs) | High-resolution image and text-to-image synthesis | LAION-400M, CelebA-HQ | FID: 1.97, IS: 10.32 | High memory usage and computational cost. |

| [26] | Image Transformers with Autoregressive Models for High-Fidelity Image Synthesis | High-fidelity image synthesis using transformers | ImageNet, COCO | FID: 2.30, IS: 9.95 | Transformer models are computationally intensive. |

| [27] | Cascaded Diffusion Models for High-Fidelity Image Generation | High-fidelity image generation using multiscale Diffusion Models | ImageNet, CIFAR-10, LSUN | FID: 2.15, IS: 9.88 | Cascaded models require extensive computational resources. |

| [29] | Optimizing Diffusion Models for Image Synthesis | Adaptive Diffusion Models for better image synthesis | CIFAR-10, CelebA, FFHQ | FID: 1.89, IS: 10.45 | Adaptive models can be complex and resource-intensive. |

| [23] | DiffWave | Audio generation using Diffusion Models | LJSpeech, VCTK | FID: 3.67, PSNR: 34.10 | High computational cost and slow sampling speed. |

| [28] | Video Diffusion Models (Video-DDPM) | Video generation using Diffusion Models | Kinetics-600, UCF-101 | FID: 3.85, SSIM: 0.92 | High computational demands and slow training times. |

| [24] | SegDiff | Image segmentation using Diffusion Models | Cityscapes, Pascal VOC | FID: 3.50, SSIM: 0.87 | Limited scalability to larger datasets. |

| [25] | GLIDE | Photorealistic image generation and editing with text guidance | MS-COCO, ImageNet | FID: 3.21, IS: 9.67 | Text-guided models require extensive training data. |

| Ref. | Algorithms | Applications | Dataset | Evaluations | Limitations |

|---|---|---|---|---|---|

| [40] | SAG in DDMs | Image generation improvement | ImageNet, LSUN | FID: 2.58, sFID: 4.35 | Needs broader application integration. |

| [41] | Learnable State-Estimator-Based Diffusion Model | Inverse imaging problems (inpainting, deblurring, JPEG restoration) | FFHQ, LSUN-Bedroom | PSNR: 27.98, LPIPS: 0.09, FID: 25.45 | Limited generative capabilities, needs domain adaptation. |

| [51] | Score Dynamics (SD) | Accelerating molecular dynamics simulations | Alanine dipeptide, short alkanes in aqueous solution | Wall-clock speedup up to 180× | Requires large datasets; generalization challenges. |

| [42] | CMDs for Speech Enhancement (DMSEtext) | Speech enhancement for TTS model training | Real-world recordings | MOS Cleanliness: 4.32 ± 0.08, Overall Impression: 4.17 ± 0.06, PER: 17.6% | Needs text conditions for best results. |

| [52] | Conditional Diffusion Model for HDR Reconstruction | HDR image reconstruction from LDR images | Benchmark datasets for HDR imaging | PSNR-µ: 44.11, PSNR-L: 41.73, SSIM-µ: 0.99, SSIM-L: 0.99, HDR-VDP-2: 65.52, LPIPS: 0.01, FID: 6.20 | Slow inference speed; distortion metrics need improvement. |

| [44] | CLIPSonic | Text-to-audio synthesis using unlabeled videos | VGGSound, MUSIC | FAD: CLIPSonic-ZS on MUSIC 19.30, CLIPSonic-PD on MUSIC 13.51; CLAP score: CLIPSonic-ZS on MUSIC 0.28, CLIPSonic-PD on MUSIC 0.25 | Performance drop in zero-shot modality transfer. |

| [46] | SDG | Fine-grained image synthesis with text and image guidance | FFHQ, LSUN | FID: 14.37 (image guidance on FFHQ), 28.38 (text guidance on FFHQ); Top-5 Retrieval Accuracy: 0.742 (image guidance), 0.878 (text guidance) | Potential misuse in image generation. |

| [47] | DiffDreamer: Conditional Diffusion Model for Scene Extrapolation | Unsupervised 3D scene extrapolation | LHQ, ACID | FID on LHQ across step intervals: 34.49 (20 steps), 51.00 (100 steps) | Real-time synthesis not feasible; limited content diversity. |

| [48] | Diffusart: Conditional Diffusion Probabilistic Models for Line Art Colorization | Interactive line art colorization with user guidance | Danbooru2021 | SSIM: 0.81, LPIPS: 0.14, FID: 6.15 | Bias towards white; limits color diversity. |

| [49] | SketchFFusion: A Conditional Diffusion Model for Sketch-guided Image Editing | Sketch-guided image editing for local fine-tuning using generated sketches | CelebA-HQ, COCO-AIGC | FID: 9.07, PSNR: 26.74, SSIM: 0.88 | Supports only binary sketches; limits color editing. |

| [50] | Semantic-Conditional Diffusion Networks for Image Captioning | Image captioning using semantic-driven Diffusion Models | COCO | B@1: 79.0, B@2: 63.4, B@3: 49.1, B@4: 37.3, CIDEr: 131.6 | Lacks real-time processing; needs optimization. |

| [38] | EquiDiff: Deep Generative Model for Vehicle Trajectory Prediction | Trajectory prediction for autonomous vehicles using a deep generative model with SO(2)-equivariant transformer | NGSIM | RMSE for 5s trajectory prediction shows competitive results | Effective short-term; higher errors in long-term predictions. |

| [53] | Efficient MRI Synthesis with Conditional Diffusion Probabilistic Models | Efficient synthesis of 3D brain MRIs using a conditional Diffusion Model | ADNI-1, UCSF, SRI International | MS-SSIM: 78.6% | Focused on T1-weighted MRIs; other MRI types remain to be explored. |

| Ref. | Algorithms | Applications | Dataset | Evaluations | Limitations |

|---|---|---|---|---|---|

| [54] | Autoregressive conditional Diffusion-based Models (ACDM) | NVS from a single image | RealEstate10K, MP3D, CLEVR | LPIPS: 0.33, PSNR: 15.51 on RealEstate10K; LPIPS: 0.50, PSNR: 14.83 on MP3D; FID: 26.76 on RealEstate10K; FID: 73.16 on MP3D | Requires complex geometric consistency and heavy computational resources for extrapolating views. |

| [55] | CLE Diffusion | Low light enhancement | LOL, MIT-Adobe FiveK | PSNR: 29.81, SSIM: 0.97 on MIT-Adobe FiveK; PSNR: 25.51, SSIM: 0.89, LPIPS: 0.16, LI-LPIPS: 0.18 on LOL | Slow inference speed and limited capability in handling complex lighting and blurry scenes. |

| [56] | Diffusion-based inpainting model for 3D facial BRDF reconstruction | Facial texture completion and reflectance reconstruction from a single image | MultiPIE | PSNR: 26.00, SSIM: 0.93 at 0° angle on MultiPIE; Sampling time: 17 sec | Limited by input image quality and potential under-representation of ethnic diversity in training data. |

| [52] | CMDs | HDR reconstruction from multi-exposed LDR images | Benchmark datasets for HDR imaging | PSNR-µ: 22.25, SSIM-µ: 0.84, LPIPS: 0.03 on Hu’s dataset | Slow inference speed due to iterative denoising process. |

| [57] | RGB-D-Fusion diffusion probabilistic models | Depth map generation and super-resolution from monocular images | Custom dataset with ≈ 25,000 RGB-D images from 3D models of people | MSE: 1.48, IoU: 0.99, VLB: 16.95 with UNet3+ model | High computational resources required for training and sampling. |

| [58] | Disentangled CMDs (DisC-Diff) | Multi-contrast MRI super-resolution | IXI dataset and clinical brain MRI dataset | PSNR: 37.77 dB, SSIM: 0.99 on 2× scale in clinical dataset | Requires accurate condition sampling for model precision. |

| Ref. | Algorithms | Applications | Dataset | Evaluations | Limitations |

|---|---|---|---|---|---|

| [59] | DocDiff conditional Diffusion Model | Document image enhancement including deblurring, denoising, and watermark removal | Document Deblurring Dataset | MANIQA: 0.72, MUSIQ: 50.62, DISTS: 0.06, LPIPS: 0.03, PSNR: 23.28, SSIM: 0.95 | May lose high-frequency information, leading to distorted text edges. Relies on the quality of low-frequency content recovery by the Coarse Predictor module. |

| [60] | VTF-GAN | Thermal facial imagery generation for telemedicine | Eurecom and Devcom datasets | FID: 47.35, DBCNN: 34.34%, MSE: 0.88, SPEC: -1.1% for VTF-GAN with Fourier Transform-Guided (FFT-G) | Generation constrained to static environments; performance untested in dynamic, variable conditions affecting thermal emission. |

| [61] | ID3PM | Inversion of pre-trained face recognition models, generating identity-preserving face images | LFW, AgeDB-30, CFP-FP datasets | LFW: 99.20%, AgeDB-30: 94.53%, CFP-FP: 96.13% with ID3PM using InsightFace embeddings | Generation quality may vary with the diversity of embeddings; control over the generation process might need fine-tuning for specific applications. |

| [62] | FreeDoM | Conditional image and latent code generation | Multiple datasets for segmentation maps, sketches, texts | Distance: 1696.1, FID: 53.08 for segmentation maps with FreeDoM | High sampling time cost; struggles with fine-grained control in large data domains; may produce poor results with conflicting conditions. |

| Ref. | Algorithms | Applications | Dataset | Evaluations | Limitations |

|---|---|---|---|---|---|

| [65] | BerDiff: Conditional Bernoulli Diffusion for Medical Image Segmentation | Medical image segmentation using Bernoulli diffusion to produce accurate and diverse segmentation masks | LIDC-IDRI, BRATS 2021 | LIDC-IDRI: GED 0.24, HM-IoU 0.60; BRATS 2021: Dice 89.7 (reported as state of the art) | Limited to binary segmentation; iterative sampling requires significant time. |

| [66] | NASDM: Nuclei-Aware Semantic Tissue Generation Framework | Generative modeling of histopathological images conditioned on semantic instance masks | Colon dataset | FID: 15.7, IS: 2.7, indicating high-quality and semantically accurate synthetic image generation. | Further development is required for varied histopathological settings and end-to-end tissue generation that includes mask synthesis. |

| [67] | Hierarchical Feature Conditional Diffusion (HiFi-Diff) | MR image super-resolution with arbitrary reduction of inter-slice spacing | HCP-1200 dataset | PSNR: 39.50±2.29, SSIM: 0.99 for ×4 SR task | Slow sampling speed, suggesting potential improvements through faster algorithms or knowledge distillation. |

| Ref. | Algorithms | Applications | Dataset | Evaluations | Limitations |

|---|---|---|---|---|---|

| [72] | Diffusion Classifier using text-to-image Diffusion Models | Zero-shot classification using generative models | Standard image classification benchmarks (e.g., ImageNet, CIFAR-10) | Zero-shot classification accuracy on ImageNet: 58.9% | Performance gap in zero-shot recognition compared to SOTA discriminative models. |

| [73] | CMDs for semantic image synthesis | Semantic synthesis for abdominal CT, used in data augmentation | Not specified | FID: 10.32, PSNR: 16.14, SSIM: 0.64, DSC: 95.6% for mask-guided DDPM at 100k iterations | High sampling time and computational cost |

| [74] | Learnable Unauthorized Examples (LEs) using joint-CMDs | Countermeasure to unlearnable examples in Machine Learning models | CIFAR-10, CIFAR-100, SVHN | Test accuracy on CIFAR-10 using LE: 94.0%, CIFAR-100: 67.8%, SVHN: 94.9% | Limited by distribution mismatches |

| [79] | DiffNILM: Diffusion Probabilistic Model for Non-Intrusive Load Monitoring | Non-Intrusive Load Monitoring (NILM) for disaggregating appliance power consumption patterns | REDD and UKDALE datasets | F1-Score: 0.79 for refrigerator on REDD, MAE: 4.54 for microwave on UKDALE | Generated power waveforms are not always sufficiently smooth; computational efficiency is not optimized. |

| [80] | Noise-Robust Expressive Text-to-Speech model (NoreSpeech) | Expressive TTS in noisy environments | Not specified | MOS: 4.11, SMOS: 4.14 for NoreSpeech with T-SSL in noisy conditions | Dependent on the quality of the style teacher model. |

| [81] | Diffusion-based data augmentation for nuclei segmentation | Nuclei segmentation in histopathology image analysis | MoNuSeg and Kumar datasets | Dice score: 0.83, AJI: 0.68 with 100% augmented data on MoNuSeg dataset | Dependent on the quality of synthetic data |

| [75] | AT-VarDiff | Atmospheric turbulence (AT) correction | Comprehensive synthetic atmospheric turbulence dataset | LPIPS: 0.11, FID: 32.69, NIQE: 6.46 | May not generalize well to real-life atmospheric turbulence images. |

| [76] | MatFusion Diffusion Models (unconditional and conditional) | SVBRDF estimation from photographs | Large set of 312,165 synthetic spatially varying material exemplars | RMSE on property maps: 0.04, LPIPS error on renders: 0.21 | Limited by the variation in lighting conditions. |

| [77] | Point cloud Diffusion Models with image conditioning schemes | 3D building generation from images | BuildingNet-SVI and BuildingNL3D datasets | CD: 3.14, EMD: 10.84, F1 score: 21.41 on BuildingNet-SVI | Constrained by specific image viewing angles. |

| [78] | ACDMSR: Accelerated Conditional Diffusion Model for Image Super-Resolution | Enhancing super-resolution using Diffusion Models conditioned on pre-super-resolved images | DIV2K, Set5, Set14, Urban100, BSD100, Manga109 | LPIPS: 0.08, PSNR: 25.95, SSIM: 0.67 | Challenges remain in processing images with more complex degradation patterns. |
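
Several rows in the tables above report PSNR as a reconstruction metric. As a reading aid, the following is a minimal sketch of the standard PSNR definition, 10 · log10(MAX² / MSE), assuming flattened pixel lists and an 8-bit peak value of 255. The function names `mse` and `psnr` and the sample values are illustrative assumptions and are not taken from any of the surveyed works, whose evaluation protocols (color space, cropping, peak value) may differ.

```python
import math


def mse(reference, estimate):
    """Mean squared error between two equally sized, flattened images."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    err = mse(reference, estimate)
    if err == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / err)


# Hypothetical 4-pixel example (values chosen only for illustration).
reference = [0, 64, 128, 255]
estimate = [0, 66, 126, 255]
print(f"MSE:  {mse(reference, estimate):.2f}")
print(f"PSNR: {psnr(reference, estimate):.2f} dB")
```

Higher PSNR means lower pixel-wise error, which is why it is usually read alongside perceptual metrics such as LPIPS or FID in the tables rather than on its own.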

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).