Introduction

The increased use of Generative Artificial Intelligence (GAI) has raised serious concerns regarding its use in academia and research. These include the potential disappearance of genuinely human (non-AI-assisted) academic creations and the tendency of AI to share vague information, fabricate responses, produce discriminatory outlooks and inappropriate language, and promote risky behavior. As such, researchers have sought to create frameworks for the academic and professional use of AI-generated work that take into account proper citation, prompt usage, and more. These frameworks have the potential to allow for the responsible use of AI to increase efficiency and decrease workload for academics and practitioners without compromising the quality of the required output. This paper seeks to introduce a framework for the responsible, context-appropriate use of language processing models such as ChatGPT in academia and beyond.

There have been previous attempts to recognize the importance of creating effective AI interaction including prompt engineering, prompt design (Heston and Khun, 2023; Strobelt et al., 2022), and Promptology (Dobson, 2023). For the purpose of our work grounded in the principles of human-computer interaction, we define Promptology as:

“Promptology is an interdisciplinary field that merges the art of designing effective prompts for Generative AI systems utilizing frameworks and theoretical models. It extends beyond traditional prompt engineering, focusing on the strategic creation of prompts to facilitate accurate and contextually relevant multi-modal AI responses.”

Promptology is emerging as a new branch of Human-Computer Interaction (HCI). It integrates technical skills with a deep understanding of human cognition and language, aiming to optimize the interface between humans and AI across diverse computing environments. In an age where the use of GAI is becoming ubiquitous across domains such as education, business, healthcare, research, specialized tasks, and workplace management (Robert et al., 2020), Promptology represents a critical body of knowledge for harnessing the full potential of these AI systems. Promptology not only bridges the gap between humans and AI, expanding the field of HCI, but also introduces mechanisms to enhance the safety, efficacy, and ethical use of AI systems. A vital aspect of Promptology involves learning to write prompts that are robust and secure, preventing potential misuse or hijacking by malicious actors (Ansari et al., 2022; Trope, 2020). By cultivating the skill of crafting clear and specific prompts, Promptology also aids in the generation of cost-effective AI interactions, reducing unnecessary computing time and energy (Lo, 2023; Svendsen and Garvey, 2023).

The field also contributes to the establishment of best practices in prompt design. It provides a structured approach that users across various sectors can follow to obtain optimal results from their AI systems. An essential component of this approach is the creation of a taxonomy of prompts, which categorizes prompts based on their characteristics and intended uses. The taxonomy serves as a valuable framework, further empowering users to harness the full potential of AI and enhancing the overall utility of GAI.

This paper introduces a foundational approach to incorporating Promptology into academic and research settings, aiming to strike a balance between leveraging the efficiency and workload reduction capabilities of AI and maintaining the integrity and quality of intellectual outputs. Through the establishment of the SPARRO framework, which provides context-appropriate guidelines and best practices in prompt design, this article aims to contribute to the broader discourse on fostering ethical, safe, and productive human-AI collaboration in critical intellectual domains. The paper is structured as follows. The first section provides the background to the emergence of Generative AI and discusses its potential for academia and research. The next discusses the autoethnography method used to conceptualize the SPARRO framework. This is followed by a discussion of the SPARRO framework itself. Before the conclusion, we present a section on the future of prompt design, discussing the advances being made in Promptology.

Background

Generative AI (GAI) models, also commonly referred to as Large Language Models (LLMs), have experienced a meteoric rise in prominence over the past few years. Engineered by leading technology and AI research entities, these models are reshaping the digital landscape, with transformative implications across a multitude of sectors (Javaid et al., 2022). GAIs have moved beyond being mere curiosities of computational linguistics and are now driving significant advancements in fields as diverse as education, healthcare, business, research, and even creative arts (Hajkowicz et al., 2023). Their potential for text generation, comprehension, translation, summarization, and more extends their utility into virtually every domain where language and communication play integral roles.

One of the defining characteristics of GAIs is their interaction mechanism. Unlike traditional software that is governed by explicit commands and rigid interfaces, GAIs operate on the principle of ‘prompts.’ In the context of AI, a prompt is a user-issued instruction or query that guides the AI’s output. Prompts serve as the primary language for communicating with these models, providing an intuitive and flexible way to leverage their capabilities (Ronanki et al., 2024). To be useful, however, prompts must be constructed using the methods employed in prompt engineering. Prompt engineering can be described as the step necessary to transition GAI models from a ‘pre-train, fine-tune’ paradigm requiring explicit, pre-trained commands to a ‘pre-train, prompt, and predict’ paradigm (Liu et al., 2023). An effective prompt or query allows the GAI model to predict the desired user output and generate an appropriate response. The selection of a prompt with sufficient keywords to generate the desired output from a GAI model therefore becomes paramount. A well-crafted prompt can elicit highly accurate, relevant, and even creative outputs from the AI, transforming it from a sophisticated tool into an intellectual partner.

This brings us to the emergent field of ‘Promptology’ (Velásquez-Henao et al., 2023). In a world where AI’s influence is steadily expanding, the ability to efficiently and effectively interact with these systems is becoming an increasingly important skill. Just as we study linguistics to understand the structures and principles of human languages, so too do we need to study Promptology to master the language of AI. Promptology is the study of prompt design. It encompasses the principles, strategies, and techniques for crafting prompts that maximize the utility, efficiency, and safety of interactions with GAIs (Dobson, 2023). Several models are currently in use and in development to facilitate the design of improved prompts in a variety of GAI and other LLM systems, as outlined by Liu et al. (2023). A further necessary distinction is between manual template engineering and automated template learning (Liu et al., 2023). Manual template engineering describes the process of generating prompts through human creativity, reflection, and refinement. Automated template learning describes the automated generation of prompts for target tasks, and can be done through a variety of methods, such as using GAI itself to generate prompts. Depending on the problem at the center of the prompt, manual and automated engineering each have their advantages and disadvantages. Manual prompt engineering allows for a natural approach shaped by human intuition and reasoning, while automated generation allows for faster generation and testing of a variety of prompt options with less human workload (Liu et al., 2023).
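The contrast between the two approaches can be made concrete with a toy sketch in Python. It is not the method of Liu et al. (2023): the template wording, the function names `generate_candidate_templates` and `select_best_template`, and the scoring function `toy_score` are all assumptions introduced here for illustration. In practice, a scorer would measure how well each template performs on the target task, not its length.

```python
from itertools import product

# A manually engineered template: written and refined by a person.
MANUAL_TEMPLATE = "Summarize the following abstract for a nursing student: {text}"


def generate_candidate_templates(verbs, audiences):
    """Enumerate template variants automatically (hypothetical stand-in for template learning)."""
    return [
        f"{verb} the following abstract for {audience}: {{text}}"
        for verb, audience in product(verbs, audiences)
    ]


def select_best_template(candidates, score):
    """Return the candidate with the highest score under a caller-supplied scorer."""
    return max(candidates, key=score)


def toy_score(template):
    # Placeholder only: prefers shorter templates.
    # A real scorer would evaluate task performance on held-out examples.
    return -len(template)


candidates = generate_candidate_templates(
    verbs=["Summarize", "Explain"],
    audiences=["a nursing student", "a clinician"],
)
best = select_best_template(candidates, toy_score)
print(best.format(text="<abstract goes here>"))
```

The manual template appears among the generated candidates, which reflects the trade-off described above: automation explores more options quickly, but a human still has to decide what counts as a good result.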

Prompt engineering is the process of creating, structuring, fine-tuning, and then integrating the instructions that guide LLMs in helping them accomplish specific tasks (Meskó, 2023). Prompts are created with a specific purpose and usage framework in mind. Without prompt engineering, GAI would have no way of extracting relevant information reliably and would often make the GAI challenging to use in specific contexts. Using an example of prompt engineering in healthcare, a prompt could be used to manage administrative responsibilities and would include language that would provide GAI with fields to be entered surrounding medication reminders, the scheduling of appointments, or even simple health recommendations prior to appointments. Many methodologies can be used to achieve quality prompt engineering. While these will vary depending on the context of the task, general aims include being specific to the problem, providing as much context to the problem as possible, experimenting with verbiage, having the GAI take on specific roles, formulating open-ended prompts, and more.
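As an illustration of these aims, the following Python sketch assembles a prompt for the administrative example above by combining a role, background context, a task, and the fields the response should cover. The function name `build_admin_prompt`, its parameters, and the wording of the prompt are assumptions made for illustration; they are not taken from any specific tool or from the cited sources.

```python
def build_admin_prompt(role, context, task, fields):
    """Assemble a prompt from a role, context, a task, and required fields.

    All names and wording here are hypothetical and for illustration only.
    """
    # One bullet per field the response should address.
    field_lines = "\n".join(f"- {name}" for name in fields)
    return (
        f"You are {role}.\n"
        f"Context: {context}\n"
        f"Task: {task}\n"
        f"Include the following fields in your response:\n{field_lines}"
    )


prompt = build_admin_prompt(
    role="an administrative assistant in an outpatient clinic",
    context="Patients receive a reminder message before each visit.",
    task="Draft a short reminder message for an upcoming appointment.",
    fields=[
        "appointment date and time",
        "medication reminders",
        "pre-visit recommendations",
    ],
)
print(prompt)
```

Keeping the role, context, and task as separate arguments makes it easy to experiment with the wording of one element while holding the others fixed.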

The necessity of effective prompt engineering is further exemplified in the case of GAI models that are designed to work with more complex or field-specific data sets. One such example is described by Singhal et al. (2023), who used instruction prompt tuning to improve the performance of Large Language Models (LLMs) on MultiMedQA, a dataset composed of questions similar to those on the US Medical Licensing Exam (USMLE). They found that an LLM refined with prompt tuning towards medical vocabulary performed better on MultiMedQA, demonstrating the importance of domain-specific prompt engineering.

The adage “garbage in, garbage out” is apt here. If the prompts used to interact with an AI system are ill-designed or unclear, the output from the system is likely to be flawed or unhelpful (Velásquez-Henao et al., 2023). This makes the study of Promptology not merely an academic interest but a vital component of practical AI usage. As AI systems continue to evolve and their integration into everyday life deepens, a solid understanding of Promptology will become increasingly indispensable, not just for technologists, AI researchers, and developers, but for anyone looking to tap into the transformative power of AI. Promptology represents a meticulous blend of technical strategy and creative experimentation, essential for developing input texts that coax out optimal performance from AI models. Recent advances have seen the field of prompt engineering shift away from engineering and towards prompt design (Dang et al., 2022).

Academic research involves searching for, interpreting, and drawing conclusions from large swaths of academic text sourced from a variety of places (Esplugas, 2023). This process accounts for a large portion of the time spent reviewing current standards of practice and perspectives for novel research. This review of the data is essential for the development of publications, presentations, and medical and scientific documentation. GAI applications such as ChatGPT, BART, or more domain-specific applications have the potential to be an incredible time-saver for clinicians and researchers (Li et al., 2023). Domain-specific GPT applications launched in recent years, such as BioGPT and PubMedGPT, have the advantage of being trained on a more specific dataset than general models such as ChatGPT. GAIs can generate large portions of text, summarize prompted articles, and edit text. They may also be able to summarize large amounts of data, such as those sourced from clinical trials or from the record of a complex patient (Li et al., 2023). The next section provides an overview of how GAI can be safely integrated into the academic process.

Methodology

Autoethnography is a qualitative research method combining personal narrative with cultural analysis. It uses the researcher’s experiences to explore broader cultural and social contexts, offering insights into how individual experiences reflect and are shaped by larger societal structures and norms (Raab, 2013).

This autoethnographic study documents the development of the SPARRO framework for prompt engineering in generative AI (GAI) within the healthcare and nursing curriculum. The integration of GAI was carried out across five classes taught by professors in Health Service Administration and Nursing. The principal investigator engaged deeply with the process, maintaining detailed journals, conducting informal interviews with professors, and observing online classroom interactions. This approach allowed for reflection on educators’ experiences and the broader educational context, providing a comprehensive understanding of the AI integration process.

Data Collection

Data were gathered from:

Personal Journals: Documenting daily activities, challenges, and reflections.

Interviews: Informal discussions with the five professors to gather insights and feedback.

Classroom Observations: Regular observations to capture interactions between students, professors, and AI tools.

Student Feedback: Anonymous surveys and feedback forms from students.

SPARRO Framework Development

The autoethnographic insights led to the development of the SPARRO framework, addressing specific challenges faced by students and professors:

Strategy: Addressed the need for planning AI’s role in research with a ‘Declaration of Generative AI Use’ to maintain transparency.

Prompt Design: Utilized the CRAFT model (Clarity, Rationale, Audience, Format, Tasks) to create effective prompts tailored to course needs.

Adopting: Ensured AI content aligned with assignment objectives, integrating AI outputs seamlessly with human input.

Reviewing: Included critical assessments of AI content for accuracy and relevance, maintaining educational standards.

Refining: Focused on iterative improvements based on feedback, enhancing content quality.

Optimizing: Ensured originality and academic integrity with plagiarism checkers and reference verification tools.

Ethical Considerations

Transparency was maintained through clear communication, with informed consent obtained from participants. Anonymity and confidentiality were upheld throughout the research process.

Prompt Engineering SPARRO Framework

This paper proposes a structural framework to ethically and safely integrate language processors such as ChatGPT into student education. Critics have suggested that language processors undermine critical thinking and that programs such as ChatGPT are still very prone to error. These discussions have raised the question of whether such tools should be banned (Eke, 2023). However, an overabundance of fear directed toward such technological developments may pose a greater problem by moving us backward in how we process information. Some professors are now opting for pen-and-paper assessments out of fear of AI-generated work, which may introduce inefficiencies when the underlying concerns could be more appropriately addressed via frameworks (Murugesan and Cherukuri, 2023; Xu and Ouyang, 2022).

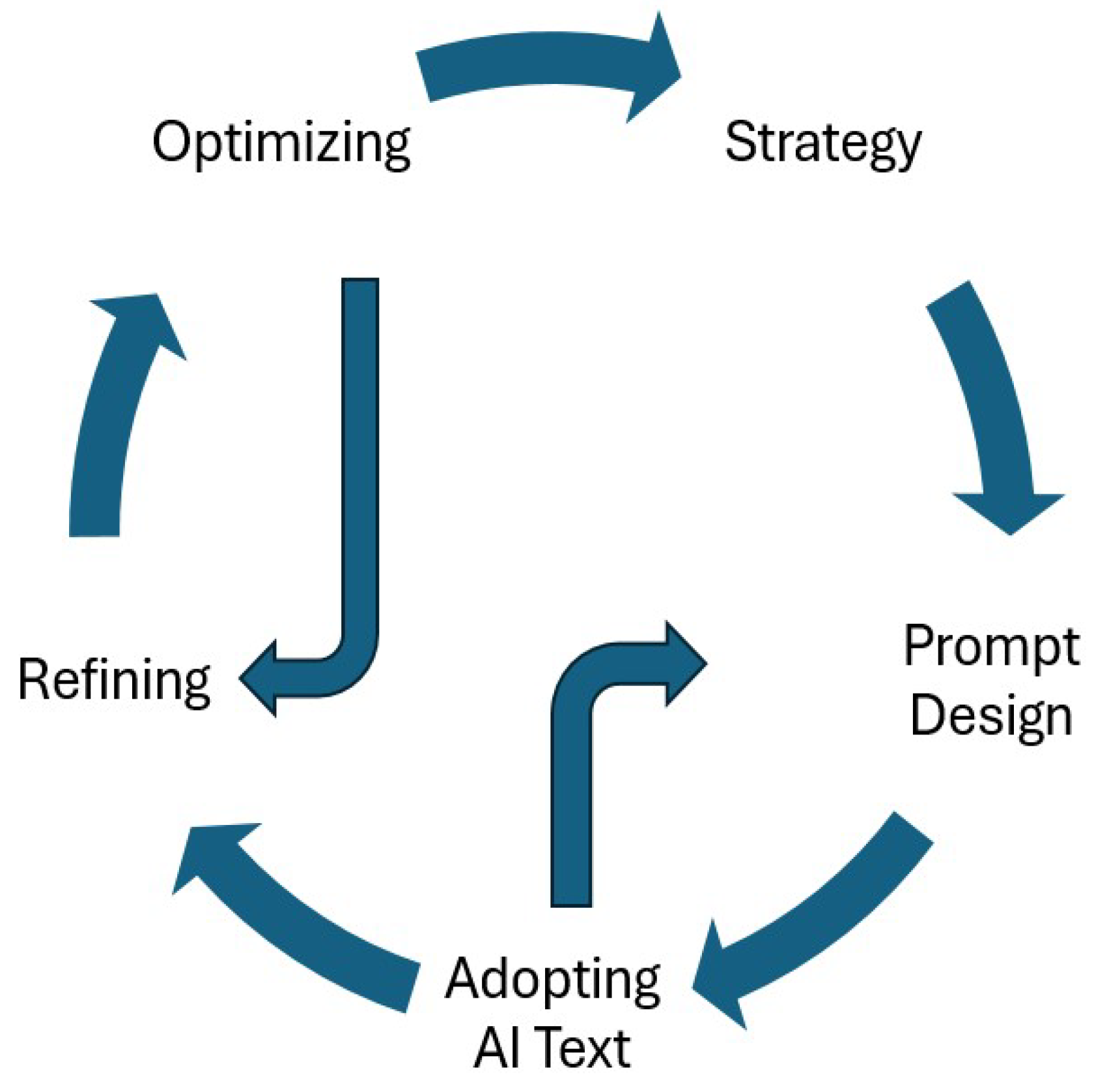

The SPARRO framework (outlined in Table 1 and Figure 1) attempts to guide the accurate, ethical, and reliable use of language processing systems such as ChatGPT in an educational setting. To harness AI responsibly, students must adopt a systematic method that respects academic integrity while leveraging AI’s capabilities. This section introduces the SPARRO framework as a comprehensive approach to facilitate the ethical and effective use of AI in academic settings. Each component (Strategy, Prompt design, Adopting, Reviewing, Refining, and Optimizing) serves as a guideline to ensure the reliability and appropriateness of AI-generated content within scholarly work.

Strategy

The foundational aspect of the SPARRO framework is Strategy, which involves developing a comprehensive plan for integrating AI into research or assignments. This plan includes creating a declaration of use statement, determining the role of AI, its scope, and the boundaries of its use. A well-defined strategy ensures that the application of AI aligns with the learning objectives and respects the ethical standards of academic work. It compels students to consider the AI’s function as a tool rather than a replacement for critical thinking and creativity.

‘Declaration of Generative AI and AI-assisted technologies in the writing process’

During the preparation of this assignment, I used [NAME TOOL / SERVICE] to perform the following [REASON]. After using this tool/service, I reviewed and edited the content as needed and I take full responsibility for the content of the publication.
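For courses that collect declarations electronically, the statement above can be filled in programmatically. The following Python sketch is one possible way to do so; the function name `make_declaration` and the validation rule are assumptions added here, while the template text follows the declaration wording given above.

```python
# Template text follows the declaration above; the placeholders replace
# [NAME TOOL / SERVICE] and [REASON].
DECLARATION_TEMPLATE = (
    "During the preparation of this assignment, I used {tool} to perform the "
    "following {reason}. After using this tool/service, I reviewed and edited "
    "the content as needed and I take full responsibility for the content of "
    "the publication."
)


def make_declaration(tool, reason):
    """Return a completed declaration; reject empty tool names or reasons."""
    if not tool.strip() or not reason.strip():
        raise ValueError("Both the tool name and the reason must be provided.")
    return DECLARATION_TEMPLATE.format(tool=tool.strip(), reason=reason.strip())


print(make_declaration("ChatGPT", "language editing"))
```

Rejecting empty values is a design choice made here so that a declaration cannot be submitted with the placeholders left blank.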

Prompt Design

Prompt design, drawing on the CRAFT model (outlined in Table 2), is crucial for harnessing AI’s potential effectively. It requires clarity in the communication of tasks to AI, providing a rationale for the context, acknowledging the intended audience, specifying the desired format, and delineating the tasks. This component ensures that the prompts generate relevant and precise responses, tailored to the academic task at hand. This is an iterative approach and may require some tweaking of the CRAFT model to achieve a desirable output.

By looking at each component of the CRAFT model and understanding its purpose, both educators and students can better understand what content is being generated from the AI systems.
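The five CRAFT components can be represented as a small data structure so that each part of a prompt stays visible to both educators and students. The Python sketch below is an illustration only: the class name `CraftPrompt`, the field names, and the rendered layout are assumptions, and the example content is invented; the component names themselves come from the CRAFT model.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CraftPrompt:
    """Hypothetical container for the five CRAFT components."""
    clarity: str        # the request, stated plainly
    rationale: str      # why the output is needed (context)
    audience: str       # who will read the output
    output_format: str  # desired shape of the response
    tasks: List[str] = field(default_factory=list)  # concrete steps for the AI

    def render(self) -> str:
        """Render the components as a single prompt string."""
        if not self.tasks:
            raise ValueError("At least one task is required.")
        task_lines = "\n".join(
            f"{i}. {task}" for i, task in enumerate(self.tasks, start=1)
        )
        return (
            f"Request: {self.clarity}\n"
            f"Rationale: {self.rationale}\n"
            f"Audience: {self.audience}\n"
            f"Format: {self.output_format}\n"
            f"Tasks:\n{task_lines}"
        )


example = CraftPrompt(
    clarity="Explain the purpose of informed consent in clinical research.",
    rationale="This supports a discussion post in a health administration course.",
    audience="Undergraduate students new to research ethics.",
    output_format="Two short paragraphs in plain language.",
    tasks=["Define informed consent.", "Give one example of its use."],
)
print(example.render())
```

Because each component is a separate field, iterating on a prompt amounts to changing one field and rendering again, which matches the iterative use of the model described above.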

Adopting

The adoption phase involves the careful integration of AI-generated text into the student’s own work. It emphasizes the importance of maintaining a consistent voice and ensuring that the AI output supports the assignment’s objectives. This step requires a discerning approach to distinguish between the value added by AI and the student’s original thought and analysis.

Reviewing

Reviewing is a critical examination of the AI-generated content. It necessitates a thorough evaluation of accuracy, relevance, and coherence, comparing the AI’s output against academic standards and the assignment’s criteria. It is during this phase that students must engage deeply with the material, identifying any gaps or inaccuracies and considering the argument’s logical flow.

Refining

Refinement is an iterative process, enhancing the language and arguments of the adopted text. It ensures that the content not only meets academic standards but also reflects the student’s intellectual contribution. Refining might involve restructuring arguments, expanding on ideas, and incorporating personal insights to elevate the work’s quality and authenticity.

Optimizing

Finally, Optimizing addresses the originality and integrity of the academic work. Students are encouraged to utilize plagiarism checkers and reference verification tools to ascertain that their work is free of inadvertent plagiarism and that all sources are properly cited. This step is pivotal in maintaining the scholarly value of their work and upholding the principles of academic honesty.
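Part of reference verification can be automated with a simple consistency check between in-text citations and the reference list. The Python sketch below is a rough heuristic written for illustration, not a description of any existing verification tool: the function name `find_unmatched_citations`, the regular expression, and the placeholder authors and titles are all assumptions. It only recognizes author-year citations whose first surname starts with an unaccented capital letter, and it does not check that a cited source actually exists.

```python
import re

# Matches 'Surname, 2023', 'Surname et al., 2023', 'Surname and Other, 2023',
# and the narrative form 'Surname et al. (2023)'. Heuristic only.
CITATION = re.compile(
    r"\b([A-Z][\w-]+)(?: et al\.| and [A-Z][\w-]+)?(?:, | \()(\d{4})"
)


def find_unmatched_citations(body, references):
    """Return (surname, year) pairs cited in body with no matching reference entry."""
    cited = {(m.group(1), m.group(2)) for m in CITATION.finditer(body)}
    unmatched = []
    for surname, year in sorted(cited):
        found = any(
            ref.lstrip("- ").startswith(surname + ",") and f"({year}" in ref
            for ref in references
        )
        if not found:
            unmatched.append((surname, year))
    return unmatched


# Placeholder text and references, invented for this example.
body = (
    "Prompts shape outputs (Doe et al., 2020; Roe, 2021). "
    "Núñez-Vega and Poe (2022) disagree."
)
references = [
    "- Doe, J., & Poe, A. (2020). Placeholder title.",
    "- Roe, R. (2021). Placeholder title.",
    "- Núñez-Vega, M., & Poe, A. (2023). Placeholder title.",
]
print(find_unmatched_citations(body, references))
```

In this invented example the third citation is flagged because its year differs from the year in the reference entry, which is the kind of inadvertent mismatch this step is meant to catch before submission.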

The benefit of the SPARRO model is that it transcends any particular chatbot, tool or AI technology. It is a guidebook that when applied properly will ensure that AI is used accurately, ethically and safely within the educational system. The future of Promptology entails developing new techniques to improve the interaction.

Discussion

The integration of generative AI into the healthcare and nursing curriculum, as examined through an autoethnographic lens, reveals significant insights into the practical and cultural implications of this technological advancement. The development and implementation of the SPARRO framework highlighted several key findings and raised important considerations for the future of AI in education.

Key Findings

Enhanced Engagement and Learning: The use of generative AI, guided by the SPARRO framework, significantly enhanced student engagement and learning outcomes. By tailoring prompts to align with course objectives, students found the material more relevant and engaging, which fostered a deeper understanding of complex topics.

Faculty Adaptation and Challenges: While professors acknowledged the potential of generative AI to transform teaching practices, they also faced challenges in adapting to this new technology. The need for ongoing training and support was evident, highlighting the importance of institutional backing and professional development.

Ethical Considerations: The emphasis on transparency and academic integrity within the SPARRO framework addressed critical ethical concerns. The ‘Declaration of Generative AI Use’ and the integration of plagiarism checkers ensured that AI’s role was clear and that academic standards were maintained.

Iterative Improvement: The iterative nature of the SPARRO framework, particularly the Reviewing and Refining components, proved effective in continuously enhancing the quality of AI-generated content. This iterative process allowed for the content to be fine-tuned based on feedback, ensuring its relevance and accuracy.

Implications for Practice

The findings underscore the potential of generative AI to enrich educational practices when guided by a structured framework like SPARRO. Institutions considering the adoption of AI should invest in comprehensive training for faculty, incorporate ethical guidelines, and support iterative content development to maximize the benefits of AI integration.

Future Research

Our future research will explore the long-term impact of generative AI on learning outcomes and its scalability across different disciplines. Additionally, investigating the experiences of students and faculty in diverse educational settings will provide a broader understanding of the framework’s applicability and effectiveness.

Conclusion

The SPARRO framework offers a structured approach to guide students in the use of AI within academia. By following its components (Strategy, Prompt design, Adopting, Reviewing, Refining, and Optimizing), students can engage with AI tools to augment their learning experience while ensuring academic integrity and the production of work that is both ethically sound and scholastically rigorous. Each component of the SPARRO framework was designed to address specific challenges faced by students and professors, ensuring a structured and ethical approach to integrating generative AI into academic work.

The autoethnographic study of integrating generative AI into healthcare and nursing education through the SPARRO framework offers valuable insights into the transformative potential of AI in academia. By addressing specific challenges faced by students and professors, the framework provides a practical, ethical, and effective approach to enhancing teaching and learning with AI. The study contributes to the growing body of literature on AI in education and underscores the importance of thoughtful, reflective practices in the deployment of new technologies.

References

- Ansari, M. F., Dash, B., Sharma, P., & Yathiraju, N. (2022). The Impact and Limitations of Artificial Intelligence in Cybersecurity: A Literature Review. International Journal of Advanced Research in Computer and Communication Engineering.

- Dang, H., Mecke, L., Lehmann, F., Goller, S., & Buschek, D. (2022). How to prompt? Opportunities and challenges of zero- and few-shot learning for human-AI interaction in creative applications of generative models. arXiv preprint arXiv:2209.01390.

- Denny, P., Leinonen, J., Prather, J., Luxton-Reilly, A., Amarouche, T., Becker, B. A., & Reeves, B. N. (2024, March). Prompt Problems: A new programming exercise for the generative AI era. In Proceedings of the 55th ACM Technical Symposium on Computer Science Education V. 1 (pp. 296-302).

- Dobson, J. E. (2023). On reading and interpreting black box deep neural networks. International Journal of Digital Humanities, 1-19.

- Eke, D. O. (2023). ChatGPT and the rise of generative AI: threat to academic integrity? Journal of Responsible Technology, 13, 100060.

- Esplugas, M. (2023). The use of artificial intelligence (AI) to enhance academic communication, education and research: a balanced approach. Journal of Hand Surgery (European Volume), 48(8), 819-822.

- Hajkowicz, S., Sanderson, C., Karimi, S., Bratanova, A., & Naughtin, C. (2023). Artificial intelligence adoption in the physical sciences, natural sciences, life sciences, social sciences and the arts and humanities: A bibliometric analysis of research publications from 1960-2021. Technology in Society, 74, 102260.

- Heston, T. F., & Khun, C. (2023). Prompt engineering in medical education. International Medical Education, 2(3), 198-205.

- Javaid, M., Haleem, A., Singh, R. P., & Suman, R. (2022). Artificial intelligence applications for industry 4.0: A literature-based study. Journal of Industrial Integration and Management, 7(01), 83-111.

- Li, H., Moon, J. T., Purkayastha, S., Celi, L. A., Trivedi, H., & Gichoya, J. W. (2023). Ethics of large language models in medicine and medical research. The Lancet Digital Health, 5(6), e333-e335.

- Liu, P., Yuan, W., Fu, J., Jiang, Z., Hayashi, H., & Neubig, G. (2023). Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing. ACM Computing Surveys, 55(9), 1-35.

- Lo, L. S. (2023). The CLEAR path: A framework for enhancing information literacy through prompt engineering. The Journal of Academic Librarianship, 49(4), 102720.

- Meskó, B. (2023). Prompt engineering as an important emerging skill for medical professionals: tutorial. Journal of Medical Internet Research, 25, e50638.

- Murugesan, S., & Cherukuri, A. K. (2023). The rise of generative Artificial Intelligence and its impact on education: The promises and perils. Computer, 56(5), 116-121.

- Raab, D. (2013). Transpersonal Approaches to Autoethnographic Research and Writing. Qualitative Report, 18(21).

- Robert, L. P., Pierce, C., Marquis, L., Kim, S., & Alahmad, R. (2020). Designing fair AI for managing employees in organizations: a review, critique, and design agenda. Human–Computer Interaction, 35(5-6), 545-575.

- Ronanki, K., Cabrero-Daniel, B., Horkoff, J., & Berger, C. (2024). Requirements engineering using generative AI: Prompts and prompting patterns. In Generative AI for Effective Software Development (pp. 109-127). Cham: Springer Nature Switzerland.

- Singhal, K., Azizi, S., Tu, T., Mahdavi, S. S., Wei, J., Chung, H. W., ... & Natarajan, V. (2023). Large language models encode clinical knowledge. Nature, 620(7972), 172-180.

- Strobelt, H., Webson, A., Sanh, V., Hoover, B., Beyer, J., Pfister, H., & Rush, A. M. (2022). Interactive and visual prompt engineering for ad-hoc task adaptation with large language models. IEEE Transactions on Visualization and Computer Graphics, 29(1), 1146-1156.

- Svendsen, A., & Garvey, B. (2023). An Outline for an Interrogative/Prompt Library to help improve output quality from Generative-AI Datasets (May 2023).

- Trope, R. L. (2020). “What a Piece of Work is AI” - Security and AI Developments. Business Lawyer, 76, 289-294.

- Velásquez-Henao, J. D., Franco-Cardona, C. J., & Cadavid-Higuita, L. (2023). Prompt Engineering: a methodology for optimizing interactions with AI-Language Models in the field of engineering. Dyna, 90(230), 9-17.

- Xu, W., & Ouyang, F. (2022). A systematic review of AI role in the educational system based on a proposed conceptual framework. Education and Information Technologies, 1-29.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).