Submitted: 25 August 2024

Posted: 26 August 2024

Abstract

Keywords:

1. Introduction

2. Literature Review

2.1. Traditional Segmentation Techniques

2.2. Machine Learning-Based Segmentation Techniques

2.3. Deep Learning-Based Techniques

3. Architecture Comparison of SAM and SAM 2

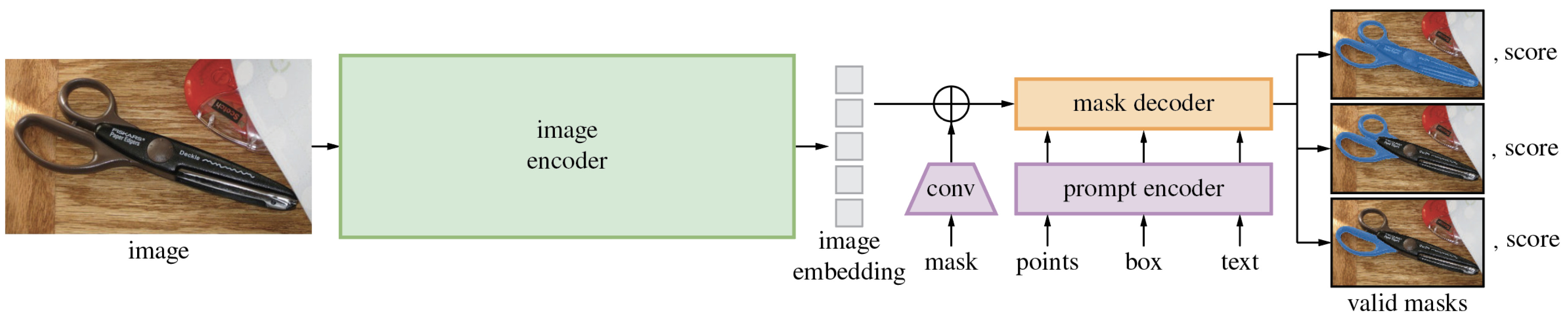

3.1. Architecture of SAM

3.1.1. The Segment Anything Task

3.1.2. Network Design of SAM

- A task: promptable segmentation, which facilitates zero-shot generalization [70].

- A model: an architecture consisting of an image encoder, a prompt encoder, and a mask decoder [70].

- A dataset: SA-1B, which powers the task and the model and contains over 1.1 billion masks [70].
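The data flow between the three model components listed above can be illustrated with a deliberately simplified sketch. This is not SAM's implementation: the functions `toy_image_encoder`, `toy_prompt_encoder`, and `toy_mask_decoder`, the `PointPrompt` type, and the `tolerance` parameter are all hypothetical stand-ins, and intensity thresholding replaces the learned networks. The sketch only shows the assumed structure, in which the image is encoded once and each prompt is then decoded into its own mask.

```python
from dataclasses import dataclass
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities
Mask = List[List[bool]]    # True where a pixel belongs to the segment


@dataclass
class PointPrompt:
    """Hypothetical point prompt: a single click at (row, col)."""
    row: int
    col: int


def toy_image_encoder(image: Image) -> Image:
    """Stand-in for the image encoder.

    The toy 'embedding' is the image rescaled to [0, 1]; a real encoder
    would produce learned features instead.
    """
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    scale = (hi - lo) or 1.0  # avoid division by zero on constant images
    return [[(p - lo) / scale for p in row] for row in image]


def toy_prompt_encoder(prompt: PointPrompt, embedding: Image) -> float:
    """Stand-in for the prompt encoder: map a click to a query value."""
    return embedding[prompt.row][prompt.col]


def toy_mask_decoder(embedding: Image, query: float,
                     tolerance: float = 0.2) -> Mask:
    """Stand-in for the mask decoder.

    Marks every pixel whose embedding value lies within `tolerance`
    of the query value derived from the prompt.
    """
    return [[abs(v - query) <= tolerance for v in row] for row in embedding]


def segment(image: Image, prompts: List[PointPrompt]) -> List[Mask]:
    """Encode the image once, then decode one mask per prompt."""
    embedding = toy_image_encoder(image)
    return [
        toy_mask_decoder(embedding, toy_prompt_encoder(p, embedding))
        for p in prompts
    ]


if __name__ == "__main__":
    image = [
        [0.0, 0.0, 9.0],
        [0.0, 9.0, 9.0],
        [0.0, 0.0, 9.0],
    ]
    # One click on the dark region, one click on the bright region.
    masks = segment(image, [PointPrompt(0, 0), PointPrompt(1, 1)])
    for mask in masks:
        for row in mask:
            print("".join("#" if v else "." for v in row))
        print()
```

Separating the per-image step from the per-prompt step is the design point of the sketch: additional prompts on the same image reuse the stored embedding rather than re-encoding the image.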

Image Encoder

Prompt Encoder

Mask Decoder

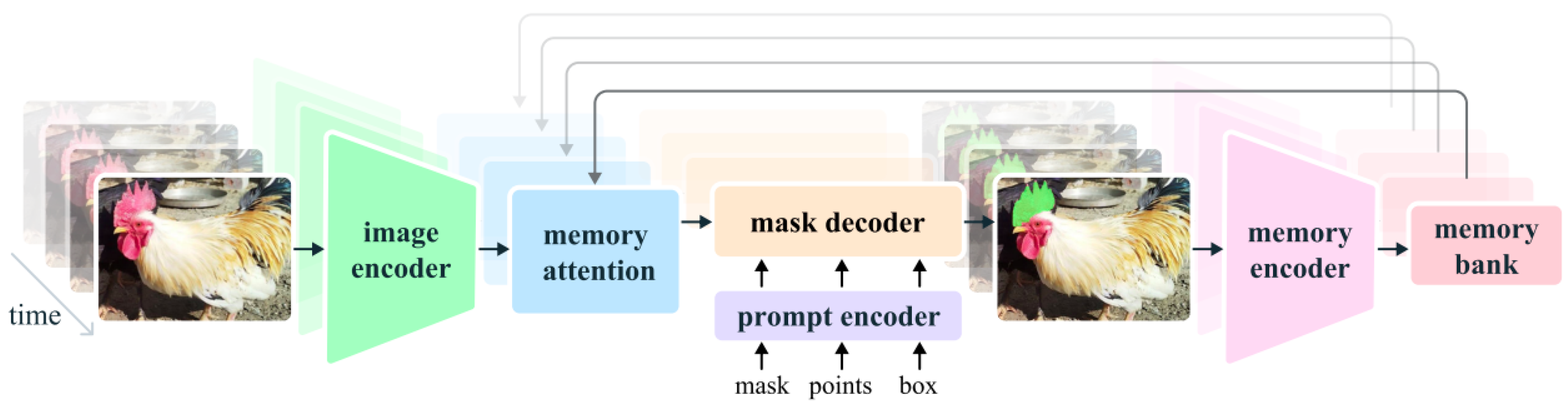

3.2. Architecture of SAM 2

3.2.1. Network Design of SAM 2

Enhanced Encoder

Advanced Decoder

Enhanced Feature Extraction

3.2.2. Enhancements in SAM 2 Architecture

References

- Richard Szeliski. Computer vision: algorithms and applications. Springer Nature, 2022.

- Wenwei Zhang, Jiangmiao Pang, Kai Chen, and Chen Change Loy. K-net: Towards unified image segmentation. Advances in Neural Information Processing Systems, 34:10326–10338, 2021.

- Alexander Kirillov, Yuxin Wu, Kaiming He, and Ross Girshick. Pointrend: Image segmentation as rendering. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 9799–9808, 2020.

- Shervin Minaee, Yuri Boykov, Fatih Porikli, Antonio Plaza, Nasser Kehtarnavaz, and Demetri Terzopoulos. Image segmentation using deep learning: A survey. IEEE transactions on pattern analysis and machine intelligence, 44(7):3523–3542, 2021.

- Stephen Gould, Tianshi Gao, and Daphne Koller. Region-based segmentation and object detection. Advances in neural information processing systems, 22, 2009.

- Alexander Kirillov, Kaiming He, Ross Girshick, Carsten Rother, and Piotr Dollár. Panoptic segmentation. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 9404–9413, 2019.

- Zhuowen Tu, Xiangrong Chen, Alan L Yuille, and Song-Chun Zhu. Image parsing: Unifying segmentation, detection, and recognition. International Journal of computer vision, 63:113–140, 2005.

- Jian Yao, Sanja Fidler, and Raquel Urtasun. Describing the scene as a whole: Joint object detection, scene classification and semantic segmentation. In 2012 IEEE conference on computer vision and pattern recognition, pages 702–709. IEEE, 2012.

- David A Forsyth and Jean Ponce. Computer vision: a modern approach. prentice hall professional technical reference, 2002.

- Nobuyuki Otsu et al. A threshold selection method from gray-level histograms. Automatica, 11(285-296):23–27, 1975.

- Nameirakpam Dhanachandra, Khumanthem Manglem, and Yambem Jina Chanu. Image segmentation using k-means clustering algorithm and subtractive clustering algorithm. Procedia Computer Science, 54:764–771, 2015.

- Richard Nock and Frank Nielsen. Statistical region merging. IEEE Transactions on pattern analysis and machine intelligence, 26(11):1452–1458, 2004.

- Laurent Najman and Michel Schmitt. Watershed of a continuous function. Signal processing, 38(1):99–112, 1994.

- Yuri Boykov, Olga Veksler, and Ramin Zabih. Fast approximate energy minimization via graph cuts. IEEE Transactions on pattern analysis and machine intelligence, 23(11):1222–1239, 2001.

- Michael Kass, Andrew Witkin, and Demetri Terzopoulos. Snakes: Active contour models. International journal of computer vision, 1(4):321–331, 1988.

- Nils Plath, Marc Toussaint, and Shinichi Nakajima. Multi-class image segmentation using conditional random fields and global classification. In Proceedings of the 26th annual international conference on machine learning, pages 817–824, 2009.

- J-L Starck, Michael Elad, and David L Donoho. Image decomposition via the combination of sparse representations and a variational approach. IEEE transactions on image processing, 14(10):1570–1582, 2005.

- Shervin Minaee and Yao Wang. An admm approach to masked signal decomposition using subspace representation. IEEE Transactions on Image Processing, 28(7):3192–3204, 2019.

- Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-net: Convolutional networks for biomedical image segmentation. In Medical image computing and computer-assisted intervention–MICCAI 2015: 18th international conference, Munich, Germany, October 5-9, 2015, proceedings, part III 18, pages 234–241. Springer, 2015.

- Yuhui Yuan, Xilin Chen, and Jingdong Wang. Object-contextual representations for semantic segmentation. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part VI 16, pages 173–190. Springer, 2020.

- Kaiming He, Georgia Gkioxari, Piotr Dollár, and Ross Girshick. Mask r-cnn. In Proceedings of the IEEE international conference on computer vision, pages 2961–2969, 2017.

- Jonathan Long, Evan Shelhamer, and Trevor Darrell. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 3431–3440, 2015.

- Vijay Badrinarayanan, Alex Kendall, and Roberto Cipolla. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE transactions on pattern analysis and machine intelligence, 39(12):2481–2495, 2017.

- Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alexander C Berg, Wan-Yen Lo, et al. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 4015–4026, 2023.

- Tianfei Zhou, Fatih Porikli, David J Crandall, Luc Van Gool, and Wenguan Wang. A survey on deep learning technique for video segmentation. IEEE transactions on pattern analysis and machine intelligence, 45(6):7099–7122, 2022.

- Anestis Papazoglou and Vittorio Ferrari. Fast object segmentation in unconstrained video. In Proceedings of the IEEE international conference on computer vision, pages 1777–1784, 2013.

- Wenguan Wang, Jianbing Shen, and Fatih Porikli. Saliency-aware geodesic video object segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 3395–3402, 2015.

- Chenliang Xu and Jason J Corso. Evaluation of super-voxel methods for early video processing. In 2012 IEEE conference on computer vision and pattern recognition, pages 1202–1209. IEEE, 2012.

- Thomas Brox and Jitendra Malik. Object segmentation by long term analysis of point trajectories. In European conference on computer vision, pages 282–295. Springer, 2010.

- Yong Jae Lee, Jaechul Kim, and Kristen Grauman. Key-segments for video object segmentation. In 2011 International conference on computer vision, pages 1995–2002. IEEE, 2011.

- Matthias Grundmann, Vivek Kwatra, Mei Han, and Irfan Essa. Efficient hierarchical graph-based video segmentation. In 2010 ieee computer society conference on computer vision and pattern recognition, pages 2141–2148. IEEE, 2010.

- Chen-Ping Yu, Hieu Le, Gregory Zelinsky, and Dimitris Samaras. Efficient video segmentation using parametric graph partitioning. In Proceedings of the IEEE International Conference on Computer Vision, pages 3155–3163, 2015.

- Federico Perazzi, Oliver Wang, Markus Gross, and Alexander Sorkine-Hornung. Fully connected object proposals for video segmentation. In Proceedings of the IEEE international conference on computer vision, pages 3227–3234, 2015.

- Vijay Badrinarayanan, Ignas Budvytis, and Roberto Cipolla. Semi-supervised video segmentation using tree structured graphical models. IEEE transactions on pattern analysis and machine intelligence, 35(11):2751–2764, 2013.

- Naveen Shankar Nagaraja, Frank R Schmidt, and Thomas Brox. Video segmentation with just a few strokes. In Proceedings of the IEEE International Conference on Computer Vision, pages 3235–3243, 2015.

- Won-Dong Jang and Chang-Su Kim. Streaming video segmentation via short-term hierarchical segmentation and frame-by-frame markov random field optimization. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11-14, 2016, Proceedings, Part VI 14, pages 599–615. Springer, 2016.

- Buyu Liu and Xuming He. Multiclass semantic video segmentation with object-level active inference. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 4286–4294, 2015.

- Yu Zhang, Zhongyin Guo, Jianqing Wu, Yuan Tian, Haotian Tang, and Xinming Guo. Real-time vehicle detection based on improved yolo v5. Sustainability, 14(19):12274, 2022.

- Chien-Yao Wang, Alexey Bochkovskiy, and Hong-Yuan Mark Liao. Yolov7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 7464–7475, 2023.

- Gang Wang, Yanfei Chen, Pei An, Hanyu Hong, Jinghu Hu, and Tiange Huang. Uav-yolov8: A small-object-detection model based on improved yolov8 for uav aerial photography scenarios. Sensors, 23(16):7190, 2023.

- Rishi Bommasani, Drew A Hudson, Ehsan Adeli, Russ Altman, Simran Arora, Sydney von Arx, Michael S Bernstein, Jeannette Bohg, Antoine Bosselut, Emma Brunskill, et al. On the opportunities and risks of foundation models. arXiv preprint arXiv:2108.07258, 2021.

- Tom Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared D Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, et al. Language models are few-shot learners. Advances in neural information processing systems, 33:1877–1901, 2020.

- Josh Achiam, Steven Adler, Sandhini Agarwal, Lama Ahmad, Ilge Akkaya, Florencia Leoni Aleman, Diogo Almeida, Janko Altenschmidt, Sam Altman, Shyamal Anadkat, et al. Gpt-4 technical report. arXiv preprint arXiv:2303.08774, 2023.

- Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. In International conference on machine learning, pages 8748–8763. PMLR, 2021.

- Xi Chen, Zhiyan Zhao, Yilei Zhang, Manni Duan, Donglian Qi, and Hengshuang Zhao. Focalclick: Towards practical interactive image segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 1300–1309, 2022.

- Qin Liu, Zhenlin Xu, Gedas Bertasius, and Marc Niethammer. Simpleclick: Interactive image segmentation with simple vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 22290–22300, 2023.

- Nikhila Ravi, Valentin Gabeur, Yuan-Ting Hu, Ronghang Hu, Chaitanya Ryali, Tengyu Ma, Haitham Khedr, Roman Rädle, Chloe Rolland, Laura Gustafson, et al. Sam 2: Segment anything in images and videos. arXiv preprint arXiv:2408.00714, 2024.

- Mohamed Abdel-Basset, Victor Chang, and Reda Mohamed. A novel equilibrium optimization algorithm for multi-thresholding image segmentation problems. Neural Computing and Applications, 33:10685–10718, 2021.

- Juan Liao, Yao Wang, Dequan Zhu, Yu Zou, Shun Zhang, and Huiyu Zhou. Automatic segmentation of crop/background based on luminance partition correction and adaptive threshold. IEEE Access, 8:202611–202622, 2020.

- Jonathan T Barron. A generalization of otsu’s method and minimum error thresholding. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part V 16, pages 455–470. Springer, 2020.

- Yong Woon Kim and Addapalli VN Krishna. A study on the effect of canny edge detection on downscaled images. Pattern Recognition and Image Analysis, 30:372–381, 2020.

- Fei Hao, Dashuai Xu, Delin Chen, Yuntao Hu, and Chaohan Zhu. Sobel operator enhancement based on eight-directional convolution and entropy. International Journal of Information Technology, 13(5):1823–1828, 2021.

- Saeed Balochian and Hossein Baloochian. Edge detection on noisy images using prewitt operator and fractional order differentiation. Multimedia Tools and Applications, 81(7):9759–9770, 2022.

- J Dafni Rose, K VijayaKumar, Laxman Singh, and Sudhir Kumar Sharma. Computer-aided diagnosis for breast cancer detection and classification using optimal region growing segmentation with mobilenet model. Concurrent Engineering, 30(2):181–189, 2022.

- Tianyi Zhang, Guosheng Lin, Weide Liu, Jianfei Cai, and Alex Kot. Splitting vs. merging: Mining object regions with discrepancy and intersection loss for weakly supervised semantic segmentation. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXII 16, pages 663–679. Springer, 2020.

- Sandra Jardim, João António, and Carlos Mora. Graphical image region extraction with k-means clustering and watershed. Journal of Imaging, 8(6):163, 2022.

- Yongan Xue, Jinling Zhao, and Mingmei Zhang. A watershed-segmentation-based improved algorithm for extracting cultivated land boundaries. Remote Sensing, 13(5):939, 2021.

- Yangtao Wang, Xi Shen, Yuan Yuan, Yuming Du, Maomao Li, Shell Xu Hu, James L Crowley, and Dominique Vaufreydaz. Tokencut: Segmenting objects in images and videos with self-supervised transformer and normalized cut. IEEE transactions on pattern analysis and machine intelligence, 2023.

- Malti Bansal, Apoorva Goyal, and Apoorva Choudhary. A comparative analysis of k-nearest neighbor, genetic, support vector machine, decision tree, and long short term memory algorithms in machine learning. Decision Analytics Journal, 3:100071, 2022.

- Derek A Pisner and David M Schnyer. Support vector machine. In Machine learning, pages 101–121. Elsevier, 2020.

- Robin Genuer and Jean-Michel Poggi. Random forests. Springer, 2020.

- Patrick Schober and Thomas R Vetter. Logistic regression in medical research. Anesthesia & Analgesia, 132(2):365–366, 2021.

- Neville Kenneth Kitson, Anthony C Constantinou, Zhigao Guo, Yang Liu, and Kiattikun Chobtham. A survey of bayesian network structure learning. Artificial Intelligence Review, 56(8):8721–8814, 2023.

- Bengong Yu and Zhaodi Fan. A comprehensive review of conditional random fields: variants, hybrids and applications. Artificial Intelligence Review, 53(6):4289–4333, 2020.

- Maria Baldeon Calisto and Susana K Lai-Yuen. Adaen-net: An ensemble of adaptive 2d–3d fully convolutional networks for medical image segmentation. Neural Networks, 126:76–94, 2020.

- Nahian Siddique, Sidike Paheding, Colin P Elkin, and Vijay Devabhaktuni. U-net and its variants for medical image segmentation: A review of theory and applications. IEEE access, 9:82031–82057, 2021.

- Karuna Kumari Eerapu, Shyam Lal, and AV Narasimhadhan. O-segnet: Robust encoder and decoder architecture for objects segmentation from aerial imagery data. IEEE Transactions on Emerging Topics in Computational Intelligence, 6(3):556–567, 2021.

- Xiuli Bi, Jinwu Hu, Bin Xiao, Weisheng Li, and Xinbo Gao. Iemask r-cnn: Information-enhanced mask r-cnn. IEEE Transactions on Big Data, 9(2):688–700, 2022.

- Fangfang Liu and Ming Fang. Semantic segmentation of underwater images based on improved deeplab. Journal of Marine Science and Engineering, 8(3):188, 2020.

- Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alexander C Berg, Wan-Yen Lo, et al. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 4015–4026, 2023.

- Lei Ke, Mingqiao Ye, Martin Danelljan, Yu-Wing Tai, Chi-Keung Tang, Fisher Yu, et al. Segment anything in high quality. Advances in Neural Information Processing Systems, 36, 2024.

- Nikhila Ravi, Valentin Gabeur, Yuan-Ting Hu, Ronghang Hu, Chaitanya Ryali, Tengyu Ma, Haitham Khedr, Roman Rädle, Chloe Rolland, Laura Gustafson, et al. Sam 2: Segment anything in images and videos. arXiv preprint arXiv:2408.00714, 2024.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).