Submitted: 03 September 2024

Posted: 05 September 2024


Abstract

Keywords:

Introduction

Materials and Methods

Ethics

Data Collection and Search Strategy

Question Selection and Categorization

JAMA Accountability Analysis

Large Language Model (LLM)

Evaluation of LLM-Chatbot Responses

| DISCERN scoring system | Total score (15–75 points) |
|---|---|
| 1. Are the aims clear? | 1–5 points |
| 2. Does it achieve its aims? | 1–5 points |
| 3. Is it relevant? | 1–5 points |
| 4. Is it clear what sources of information were used to compile the publication (other than the author or producer)? | 1–5 points |
| 5. Is it clear when the information used or reported in the publication was produced? | 1–5 points |
| 6. Is it balanced and unbiased? | 1–5 points |
| 7. Does it provide details of additional sources of support and information? | 1–5 points |
| 8. Does it refer to areas of uncertainty? | 1–5 points |
| 9. Does it describe how each treatment works? | 1–5 points |
| 10. Does it describe the benefits of each treatment? | 1–5 points |
| 11. Does it describe the risks of each treatment? | 1–5 points |
| 12. Does it describe what would happen if no treatment is used? | 1–5 points |
| 13. Does it describe how the treatment choices affect overall quality of life? | 1–5 points |
| 14. Is it clear that there may be more than 1 possible treatment choice? | 1–5 points |
| 15. Does it provide support for shared decision making? | 1–5 points |
| 16. Based on the answers to all of these questions, rate the overall quality of the publication | 1–5 points |

| Global Quality Score | Score |
|---|---|
| Poor quality, very unlikely to be of any use to patients | 1 point |
| Poor quality but some information present, of very limited use to patients | 2 points |
| Suboptimal flow, some information covered but important topics missing, somewhat useful | 3 points |
| Good quality and flow, most important topics covered, useful to patients | 4 points |
| Excellent quality and flow, highly useful to patients | 5 points |

| Readability index | Formula |
|---|---|
| Flesch Reading Ease score (FRE) | 206.835 − 1.015 × (W/S) − 84.6 × (B/W) |
| Flesch–Kincaid Grade Level (FKGL) | 0.39 × (W/S) + 11.8 × (B/W) − 15.59 |
| Gunning FOG Index (GFI) | 0.4 × [(W/S) + 100 × (C*/W)] |
| Coleman–Liau Readability Index (CLI) | (0.0588 × L) − (0.296 × S*) − 15.8 |
| Automated Readability Index (ARI) | 4.71 × (C/W) + 0.5 × (W/S) − 21.43 |
| Simple Measure of Gobbledygook (SMOG) | 1.0430 × √C + 3.1291 |
| Linsear Write Readability Formula (LW) | (ASL + (2 × HDW)) / SL |
| FORCAST Readability Formula (FORCAST) | 20 − (number of single-syllable words × 150) / (number of words × 10) |
| Average Reading Level Consensus Calc (ARLC) | Consensus reading level based on the eight readability formulas above |
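
As a minimal sketch of how three of the formulas in the table above can be evaluated, the snippet below computes FRE, FKGL, and ARI from raw counts. It assumes W = words, S = sentences, B = syllables, and C = characters (for ARI); the `TextCounts` class, the function names, and the sample counts are hypothetical and are not taken from the study or from the readability tool the authors used.

```python
from dataclasses import dataclass


@dataclass
class TextCounts:
    """Raw counts for one text (illustrative container, not from the study)."""
    words: int
    sentences: int
    syllables: int
    characters: int


def flesch_reading_ease(c: TextCounts) -> float:
    # FRE = 206.835 - 1.015 * (W/S) - 84.6 * (B/W)
    return 206.835 - 1.015 * (c.words / c.sentences) - 84.6 * (c.syllables / c.words)


def flesch_kincaid_grade(c: TextCounts) -> float:
    # FKGL = 0.39 * (W/S) + 11.8 * (B/W) - 15.59
    return 0.39 * (c.words / c.sentences) + 11.8 * (c.syllables / c.words) - 15.59


def automated_readability_index(c: TextCounts) -> float:
    # ARI = 4.71 * (C/W) + 0.5 * (W/S) - 21.43
    return 4.71 * (c.characters / c.words) + 0.5 * (c.words / c.sentences) - 21.43


# Hypothetical text: 100 words, 5 sentences, 150 syllables, 470 characters.
sample = TextCounts(words=100, sentences=5, syllables=150, characters=470)

print(f"FRE:  {flesch_reading_ease(sample):.1f}")          # 59.6
print(f"FKGL: {flesch_kincaid_grade(sample):.1f}")         # 9.9
print(f"ARI:  {automated_readability_index(sample):.1f}")  # 10.7
```

A higher FRE indicates easier text, whereas FKGL and ARI approximate a school grade level, so the two kinds of score move in opposite directions as sentences and words get longer.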

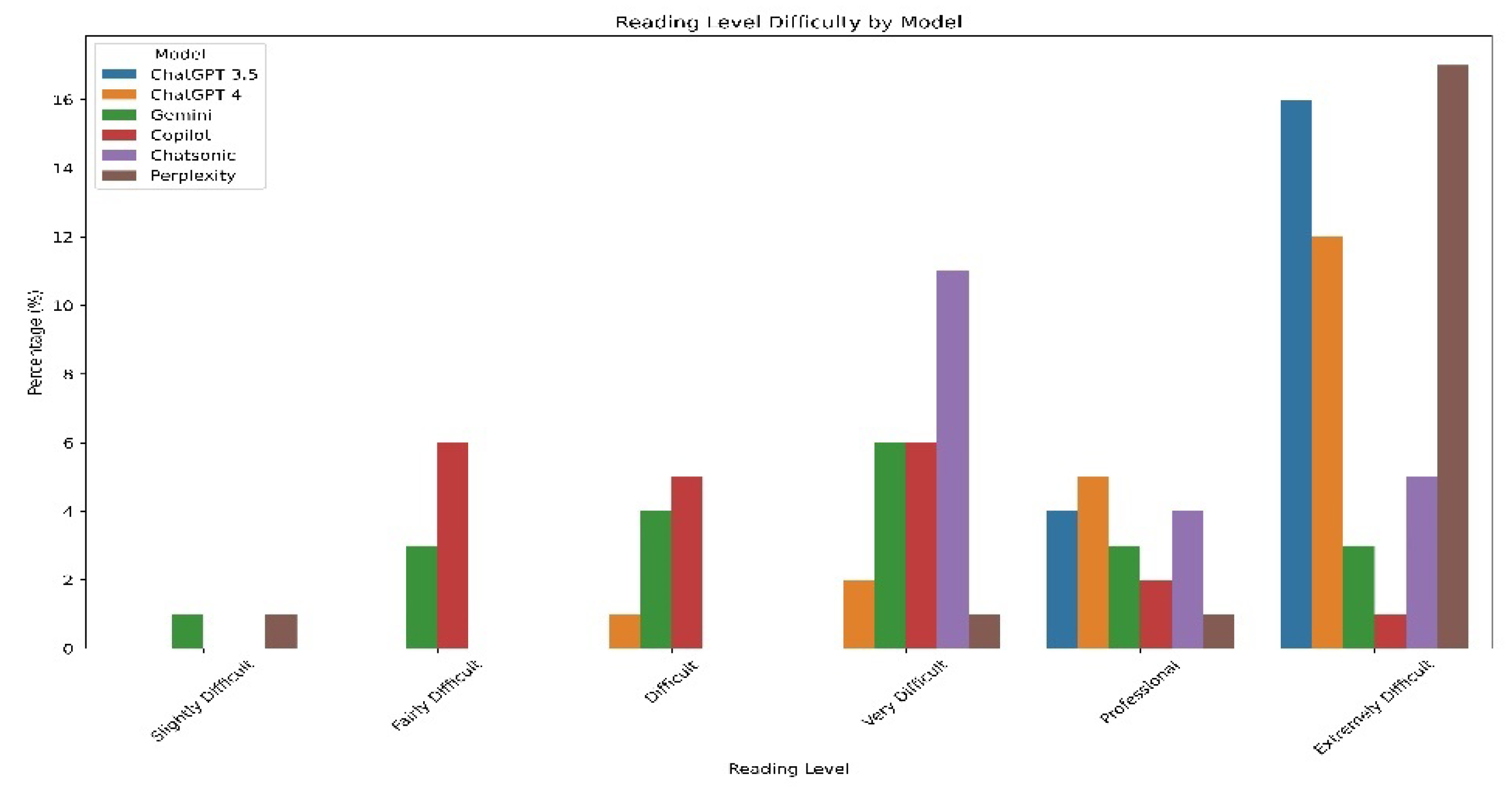

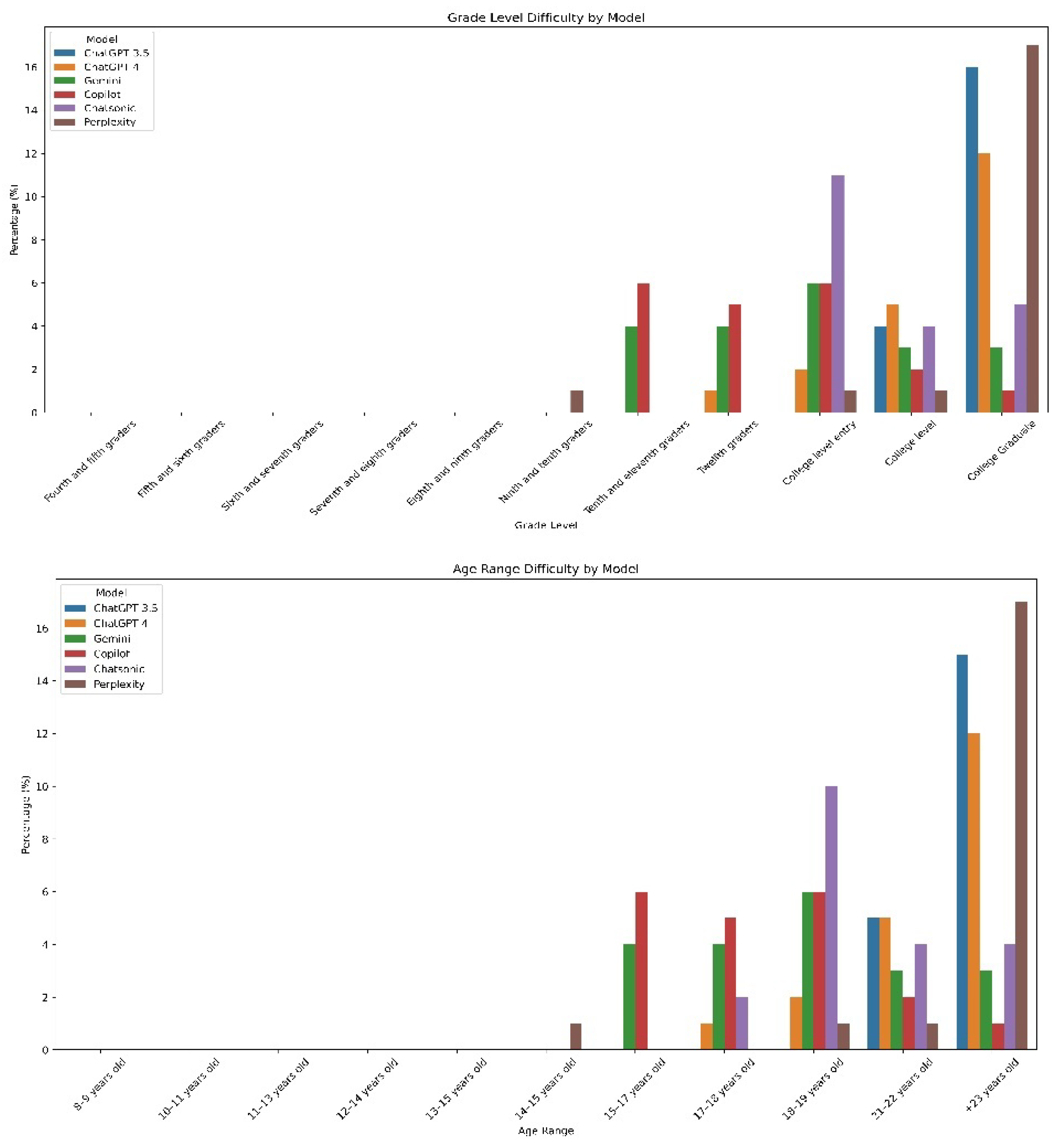

Readability Analysis

Statistical Analysis

Results

Frequently Asked Questions after Google Searches for ‘Keratoconus’

JAMA Accountability Scores for Webpages to Keratoconus-Related FAQs

Average Score for Each Question

Reliability (mDISCERN Score)

Quality (GQS Score)

Readability Indices

Response Length

Score Distributions of mDISCERN Scale and Quality Classification

Discussion

Conclusions

Author Contributions

Funding

Disclosure Statement

| Website Category | Number n (%) | JAMA Benchmarks (Mean score±SD) |
|---|---|---|
| Private practice or independent user | 13 (65%) | 1.5±0.68 |
| Official patient education materials published by a national organization | 7 (35%) | 1.57±0.75 |
| Total | 20 (100%) | 1.5±0.68 |

| JAMA Benchmarks of Website | Score | Number n (%) |
|---|---|---|
| Authorship | 5 | |
| Attribution | 3 | |
| Disclosure | 15 | |
| Currency | 9 | |
| | 4.0 | 0 (0%) |
| | 3.0 | 2 (10%) |
| | 2.0 | 6 (30%) |
| | 1.0 | 12 (60%) |
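
The score distribution in the table above can be checked against the reported overall mean of 1.5±0.68. The following is a small sketch of that arithmetic; it assumes the reported SD is a sample standard deviation, which the table does not state, and the variable names are illustrative.

```python
import statistics

# Number of websites at each total JAMA score, as listed in the table.
score_counts = {4: 0, 3: 2, 2: 6, 1: 12}

# Expand the distribution into one score per website (20 websites in total).
scores = [score for score, n in score_counts.items() for _ in range(n)]

mean_score = statistics.mean(scores)
# Assumption: sample SD (n - 1 denominator); the source does not say which was used.
sd_score = statistics.stdev(scores)

print(f"n = {len(scores)}, mean = {mean_score:.2f}, SD = {sd_score:.3f}")
```

Under that assumption the mean is 1.5 and the SD is about 0.688, which is in line with the reported 1.5±0.68.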

| No | Question | Number of websites n (%) | mDISCERN (Mean ± SD) | GQS score (Mean ± SD) | ARLC (Mean ± SD) |
|---|---|---|---|---|---|
| 1. | What Is Keratoconus? | 14 (70%) | 49.33±4.96 | 3.5±0.55 | 13.17±2.13 |
| 2. | What Are the Symptoms of Keratoconus? | 10 (50%) | 43.5±2.58 | 3±0.00 | 12.33±2.33 |
| 3. | How Do Patients with Keratoconus See? | 3 (15%) | 44.83±3.43 | 3±0.63 | 14.17±2.85 |
| 4. | How Can Keratoconus Affect My Life? | 6 (30%) | 45±3.34 | 3.17±0.41 | 14.5±1.37 |
| 5. | How Common Is Keratoconus? | 7 (35%) | 45.17±3.18 | 3.17±0.75 | 13.67±1.96 |
| 6. | What Causes Keratoconus? | 14 (70%) | 43.5±6.41 | 3±0.63 | 15.5±1.37 |
| 7. | Does Keratoconus Cause Blindness? | 6 (30%) | 47.17±5.74 | 3.5±0.84 | 14.83±1.47 |
| 8. | Can LASIK or RK Surgery Cause Keratoconus? | 5 (25%) | 42.67±3.93 | 3.17±0.75 | 16.33±2.65 |
| 9. | Are There Multiple Forms of Keratoconus? | 4 (20%) | 38.67±4.63 | 2.33±0.52 | 13.67±2.16 |
| 10. | How Is Keratoconus Diagnosed? | 8 (40%) | 42.5±2.42 | 3.17±0.41 | 15.33±1.50 |
| 11. | How Do You Measure the Severity of Keratoconus? | 4 (20%) | 43.33±1.96 | 3.17±0.41 | 15.5±3.39 |
| 12. | How Can I Treat My Keratoconus? | 16 (80%) | 47.5±4.32 | 3.67±0.52 | 13.67±1.63 |
| 13. | What Is the Best Keratoconus Treatment? | 5 (25%) | 46.5±3.56 | 3.33±0.52 | 14±2.09 |
| 14. | How Can I Stop My Keratoconus From Getting Worse? | 6 (30%) | 44.83±4.57 | 3.33±0.52 | 13.67±1.75 |
| 15. | Is Keratoconus Always Progressive? | 9 (45%) | 43±3.34 | 3±0.00 | 13.67±1.21 |
| 16. | Does Keratoconus Cause Eye Pain? | 4 (20%) | 43.83±4.44 | 2.67±0.52 | 13.5±1.51 |
| 17. | Can Keratoconus Go Away On Its Own? | 3 (15%) | 44.5±3.61 | 2.83±0.41 | 14.5±1.64 |
| 18. | Can Keratoconus Cause Dry Eye? | 4 (20%) | 46.67±3.55 | 3.17±0.98 | 14.17±1.72 |
| 19. | What Do I Do If I Think I Have Keratoconus? | 4 (20%) | 45.5±3.27 | 3±0.63 | 13.83±1.72 |
| 20. | What Should Be Considered After Keratoconus Surgery? | 3 (15%) | 46.33±2.87 | 3.17±0.41 | 13.67±2.06 |

| | ChatGPT-3.5 | ChatGPT-4 | Gemini | Copilot | Chatsonic | Perplexity | p-value |
|---|---|---|---|---|---|---|---|
| Reliability | | | | | | | |
| mDISCERN score (Mean ± SD) | 42.96±3.16 | 43.2±2.87 | 46.05±5.12 | 46.95±3.53 | 43.95±2.16 | 45.95±3.53 | <0.05 |
| Quality | | | | | | | |
| GQS (Mean ± SD) | 3.01±0.51 | 3.05±0.44 | 3.3±0.65 | 3.25±0.55 | 3.15±0.67 | 3.06±0.68 | 0.34 |
| Readability indexes | | | | | | | |
| FRE (Mean ± SD) | 21.43±7.10 | 28.85±8.44 | 34.7±8.79 | 29.6±9.04 | 24.4±8.98 | 22.3±12.75 | <0.05 |
| FKGL (Mean ± SD) | 15.41±1.39 | 14.64±1.70 | 12.46±1.73 | 12.04±1.44 | 13.38±1.31 | 15.5±3.02 | <0.05 |
| GFI (Mean ± SD) | 18.52±2.09 | 18.05±2.18 | 15.33±2.03 | 15.21±1.93 | 17.43±1.80 | 18.98±3.79 | <0.05 |
| CLI (Mean ± SD) | 15.85±0.75 | 14.75±1.27 | 14.57±1.51 | 15.29±1.35 | 16.07±1.42 | 15.93±1.75 | <0.05 |
| ARI (Mean ± SD) | 16.07±1.31 | 15.62±1.94 | 13.21±1.91 | 12.18±1.29 | 13.38±1.27 | 16.58±3.16 | <0.05 |
| SMOG (Mean ± SD) | 13.77±1.23 | 13.16±1.51 | 11.11±1.46 | 10.25±1.27 | 11.89±1.11 | 13.69±2.64 | <0.05 |
| LW (Mean ± SD) | 16.33±1.97 | 16.07±3.11 | 11.59±2.78 | 8.2±2.37 | 11.07±2.12 | 16.36±5.33 | <0.05 |
| FORCAST (Mean ± SD) | 12.45±0.52 | 12.08±0.65 | 12.08±0.54 | 12.75±0.67 | 12.61±0.62 | 12.53±0.61 | <0.05 |
| ARLC (Mean ± SD) | 15.65±1.13 | 14.85±1.53 | 12.9±1.61 | 12.35±1.18 | 13.6±1.14 | 15.75±2.55 | <0.05 |
| Response length | | | | | | | |
| Sentences (Mean ± SD) | 13.05±4.21 | 13.3±3.31 | 13±5 | 16±4.69 | 13.35±3.78 | 6.9±4.29 | <0.05 |
| Words (Mean ± SD) | 264.95±78.48 | 285.9±60.76 | 237.05±71.85 | 231.3±50.69 | 283.4±70.53 | 127.8±51.96 | <0.05 |
| Characters (Mean ± SD) | 1802.8±538.68 | 1919.6±425.43 | 1595.15±489.22 | 1585±363.31 | 1985.2±491.80 | 893.6±379.47 | <0.05 |
| Syllables (Mean ± SD) | 510.9±144.98 | 534.6±118.61 | 435.1±129.66 | 442.7±98.51 | 564.05±133.18 | 251.6±106.22 | <0.05 |
| Words/sentence (Mean ± SD) | 19.66±2.69 | 20.43±3.39 | 14.22±1.96 | 11.3±2.52 | 13.65±2.15 | 16.57±5.34 | <0.05 |
| Syllables/word (Mean ± SD) | 1.96±0.06 | 1.89±0.10 | 1.86±0.09 | 1.96±0.12 | 2.01±0.10 | 1.99±0.12 | <0.05 |

| mDISCERN criteria | ChatGPT-3.5 | ChatGPT-4 | Gemini | Copilot | Chatsonic | Perplexity |
|---|---|---|---|---|---|---|
| | n=20 (%) | n=20 (%) | n=20 (%) | n=20 (%) | n=20 (%) | n=20 (%) |
| Excellent (63–75 points) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) |
| Good (51–62 points) | 1 (5%) | 1 (5%) | 6 (30%) | 4 (20%) | 0 (0%) | 5 (25%) |
| Reasonable (39–50 points) | 17 (85%) | 16 (80%) | 12 (60%) | 15 (75%) | 19 (95%) | 15 (75%) |
| Poor (27–38 points) | 2 (10%) | 3 (15%) | 2 (10%) | 1 (5%) | 1 (5%) | 0 (0%) |
| Very poor (15–26 points) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) | 0 (0%) |
| Quality classification | | | | | | |
| Low quality | 2 (10%) | 2 (10%) | 2 (10%) | 1 (5%) | 3 (15%) | 4 (20%) |
| Moderate quality | 15 (75%) | 16 (80%) | 10 (50%) | 13 (65%) | 11 (55%) | 11 (55%) |
| High quality | 3 (15%) | 2 (10%) | 8 (40%) | 6 (30%) | 6 (30%) | 5 (25%) |
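
The mDISCERN bands listed in the table above (15–26, 27–38, 39–50, 51–62, 63–75 points) can be expressed as a simple lookup. The sketch below is illustrative only: the function name, the band list, and the example totals are hypothetical, and it assumes integer total scores as implied by the 1–5 point items.

```python
from collections import Counter

# Lower bound of each band, highest first, taken from the table above.
MDISCERN_BANDS = [
    (63, "Excellent"),
    (51, "Good"),
    (39, "Reasonable"),
    (27, "Poor"),
    (15, "Very poor"),
]


def classify_mdiscern(total: int) -> str:
    """Map a total mDISCERN score (15-75) to its reliability category."""
    if not 15 <= total <= 75:
        raise ValueError(f"mDISCERN total must be between 15 and 75, got {total}")
    for lower_bound, label in MDISCERN_BANDS:
        if total >= lower_bound:
            return label
    raise AssertionError("unreachable: every valid total falls in a band")


# Hypothetical totals for a handful of responses (not data from the study).
example_totals = [44, 52, 38, 47, 61]
distribution = Counter(classify_mdiscern(t) for t in example_totals)
print(dict(distribution))
```

Tallying the categories per chatbot in this way would yield the n (%) counts shown in the upper half of the table.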

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).