1. Introduction

The previous work examined a neural network model featuring six and seven neurons with multiple time delays. Many scholars have become increasingly involved in analysing neural networks because of their broad range of applications in many areas of science, including biological mechanisms, automatic control, brain modelling, sensor technology and computer vision [1,2,3]. Since Hopfield introduced a class of simplified neural networks in 1984, the dynamical features of various kinds of neural networks have been shown to have wide applications in areas such as pattern recognition, process control, artificial intelligence, image processing and medical sciences [4,5,6,7]. Time delays frequently arise from the lag in signal propagation between distinct neurons in artificial neural networks. Accordingly, delayed neural networks are acknowledged to be better models than classical neural networks without time delays for gaining insight into the behaviour of real artificial neural networks. Time delays frequently cause neural networks to become unstable, to exhibit periodic phenomena, to show chaotic features, and more [8,9,10]. There is currently considerable interest in understanding how time delays affect the dynamics of delayed neural networks. Many neural networks with time delays have been built and studied, and many interesting results have been published. As an example, we established a new necessary condition that ensures the existence and global exponential stability of pseudo-almost periodic solutions for delayed quaternion-valued fuzzy cellular neural network models. Hsu and Lin [11] examined the stability of traveling wave solutions for a class of cellular neural network models with distributed delays. Tang et al. [12] addressed the finite-time cluster synchronization problem of fuzzy cellular neural networks. For further details, see [13].

Many scholars in mathematics, neurology and control engineering have focused strongly on the numerous dynamical features of various neural network structures in order to optimize neural networks for human benefit. In many neural network models, time delays result from the delayed feedback of signals transmitted among multiple neurons. As a result, research on delayed neural networks has attracted growing interest, and many significant results on delayed neural structures have been achieved. Wang et al. [14] explored adaptive pinning control and pinning control for achieving fixed-time synchronization of a class of complex-valued BAM neural networks with delays, and Zhao et al. [15] used delayed impulsive control to study the global stabilization of quaternion-valued inertial BAM neural networks with delays. Li and Qin [16] found a sufficient criterion guaranteeing the existence and global exponential stability of periodic solutions of quaternion-valued cellular neural networks with delays. Kong et al. [17] conducted a stability analysis of almost periodic solutions of a class of delayed BAM neural network models with D-operator, and Xu and Zhang [18] examined anti-periodic solutions of Cohen-Grossberg shunting inhibitory neural networks with time-varying delays and impulses. Syed Ali et al. [19] studied the stochastic stability of Markovian-jumping BAM neural network models with time delays. For more comprehensive research in this area, see [20,21].

Although the aforementioned works have resolved many dynamic problems in delayed neural networks, they focus on integer-order models. Many researchers have recently suggested that fractional-order differential equations, which readily describe long-term memory and hereditary effects, are a more appropriate tool for revealing the dynamical behaviour of real-world systems [22]. Fractional calculus is currently used extensively in a wide variety of fields, including financial engineering, neuroscience, electromagnetic radiation, optical and electrical engineering, and biology [23,24,25]. Several significant papers on fractional dynamical systems have appeared recently, including work on fractional-order neural networks with delays. For instance, Wang et al. [26] investigated the global stability of fractional impulsive delayed BAM neural networks. Xiao et al. [27] examined global Mittag-Leffler synchronization of fractional-order octonion-valued BAM neural networks. Xu et al. [28] revealed the impact of leakage delay on the Hopf bifurcation of fractional-order quaternion-valued neural networks. For more information, see [29,30,31].

Hopf bifurcation is a vital dynamical characteristic of delayed dynamical systems. More specifically, delay-induced Hopf bifurcation plays a key role in the optimization and control of neural network systems, and many researchers are therefore extremely interested in it. By analysing delay-induced Hopf bifurcation, researchers can enlarge the stability region and delay the onset of Hopf bifurcation in delayed neural systems. Valuable results on delay-induced Hopf bifurcation of integer-order neural networks have been obtained over the last few decades, but only a few studies address delay-induced Hopf bifurcation in fractional-order neural systems. Li et al. [32] studied delay-induced Hopf bifurcation in fractional-order double-ring structured neural networks. Xu et al. [33] investigated the effect of the sum of different delays on the bifurcation of fractional-order BAM neural networks, and Yuan et al. [34] obtained several new insights into the bifurcation of fractional-order delayed complex-valued neural systems. Wang et al. [35] performed a comprehensive investigation of the Hopf bifurcation of fractional-order BAM neural networks with mixed delays and neurons. Readers are referred to [36,37,38] for further details. Although research on the Hopf bifurcation of fractional delayed neural network models has been carried out, many issues still require further discussion. Motivated by this, the work addresses the following major aspects: (i) examine whether the solutions of a particular type of fractional BAM neural network model with delays exist, are unique, and are bounded; (ii) determine a delay-independent criterion for the stability of the fractional BAM neural network model and the onset of the bifurcation phenomenon; (iii) look for the most effective controllers that use delays to manage the stability region of the fractional BAM neural network model and the resulting bifurcation phenomenon.


The above literature review shows that many researchers have addressed both integer-order and fractional-order problems in delayed neural networks. In these studies, fractional-order models are examined with arbitrarily chosen values of the orders. The present article gives a detailed procedure for calculating the incommensurate fractional orders from the equilibrium points and their corresponding eigenvalues. On the basis of these incommensurate fractional orders, the neural network models are examined and analysed numerically within the calculated stability region. The calculated incommensurate fractional orders help the numerical solution converge rapidly and accurately. The present work may open a new area of research on time-delayed neural network models with incommensurate fractional orders. For the calculated incommensurate fractional orders, the simulated neural network weights are synchronized and stabilized with each other, and the graphical presentation of the synchronized neural network weights depicts stable and converged behaviour.

2. Mathematical Formulation

The extended bidirectional associative memory neural network is described as follows:

Where one pair of coefficients represents the stability of internal neuron activities on the I-layer and J-layer, respectively, and a second pair indicates the connection weights through neurons in the two layers, the I-layer and the J-layer. The neurons on the I-layer receive external inputs together with the outputs of the J-layer neurons passed through activation functions, while the neurons on the J-layer receive external inputs together with the outputs of the I-layer neurons passed through activation functions [39]. Because neural networks are large-scale, complicated, non-linear dynamical systems, most authors consider only small delayed neural networks consisting of two, three, four, five, or six neurons in order to understand the behaviour of larger structures [40].
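For orientation, the description above matches a widely studied form of the delayed BAM network. It is written below with assumed symbols: the decay rates $\mu_i, \nu_j$ (stability of internal neuron activities), connection weights $c_{ji}, d_{ij}$, external inputs $I_i, J_j$, activation functions $f_j, g_i$ and delays $\tau_{ji}, \sigma_{ij}$ are illustrative and need not match the notation of the model above.

```latex
\begin{aligned}
\dot{x}_i(t) &= -\mu_i\, x_i(t) + \sum_{j=1}^{m} c_{ji}\, f_j\bigl(y_j(t-\tau_{ji})\bigr) + I_i, && i = 1,\dots,n,\\
\dot{y}_j(t) &= -\nu_j\, y_j(t) + \sum_{i=1}^{n} d_{ij}\, g_i\bigl(x_i(t-\sigma_{ij})\bigr) + J_j, && j = 1,\dots,m.
\end{aligned}
```

Here $x_i$ are the I-layer states and $y_j$ the J-layer states; each layer is driven only by the delayed outputs of the other layer, which is what makes the memory bidirectional.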

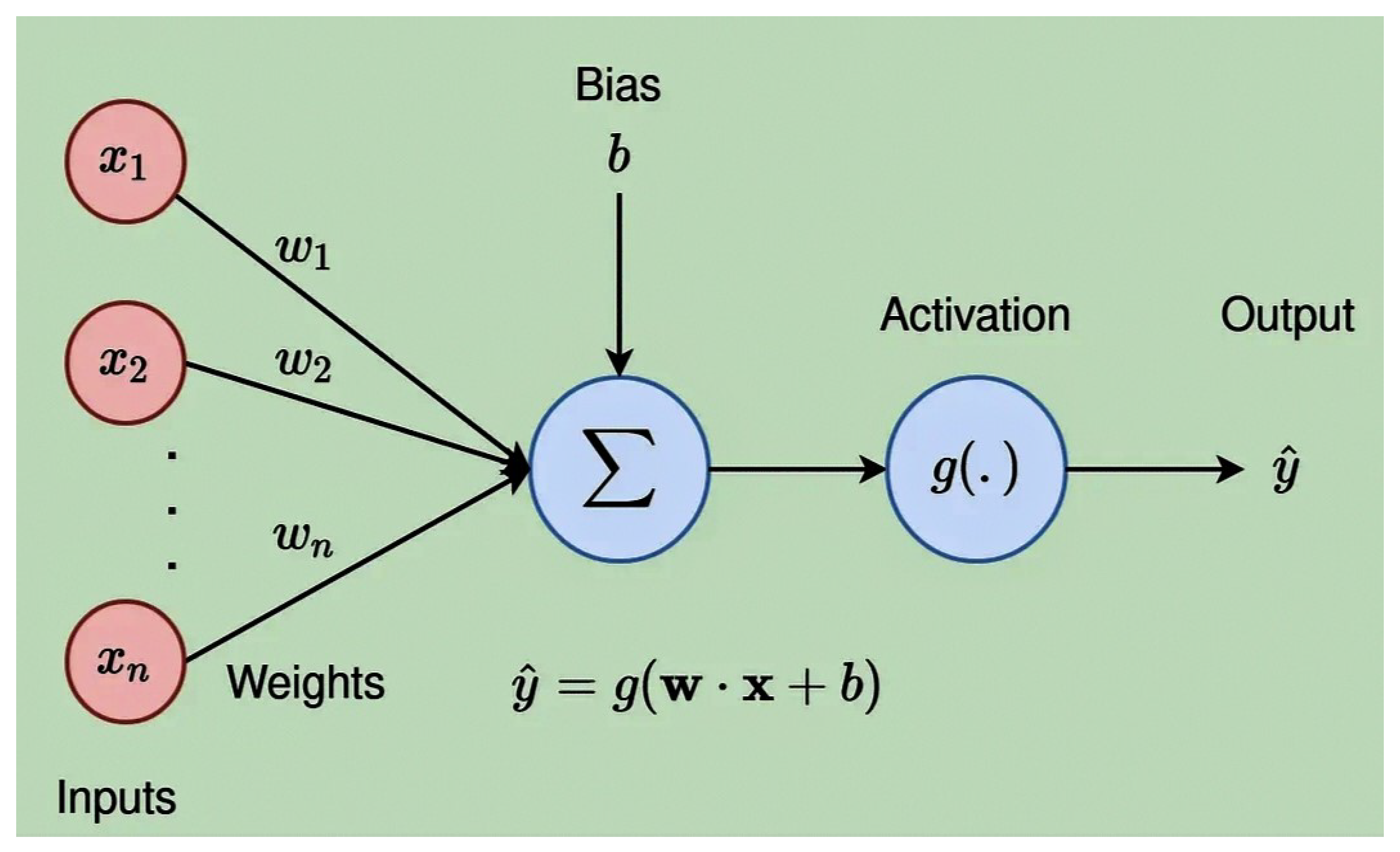

Figure 1.

Schematic diagram


In 2009, Zhang et al. [41] considered the following delayed BAM neural networks:

Where the state variables denote the states of the neurons, a positive coefficient represents the stability of the internal neuron process, and the activation functions are denoted by g and h. Since the remaining coefficients are all real constants, two simplifying assumptions on them can be made in many situations. Zhang et al. [41] derived conditions for the stability and the occurrence of Hopf bifurcation of system (3) from its characteristic equation, and they established the existence of multiple periodic solutions of system (3) using Wu's symmetric Hopf bifurcation theory [42].

Based on the preceding description, system (3) is modified into the fractional-order system (4) in order to describe more accurately the long-term memory of the process among distinct neurons and the global connection of neurons. System (4) can be further modified for incommensurate fractional orders as follows:

Where the orders of the fractional derivatives are the incommensurate fractional orders to be calculated.
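The text does not state which fractional derivative system (5) uses. Assuming the Caputo derivative, which is common for delayed fractional neural networks because it accepts ordinary initial conditions, each equation of (5) involves the operator below for an order $q_i \in (0,1)$; at $q_i = 1$ it reduces to the ordinary derivative.

```latex
{}^{C}\!D^{q_i}_{0,t}\, x(t) = \frac{1}{\Gamma(1-q_i)} \int_{0}^{t} \frac{x'(s)}{(t-s)^{q_i}}\, \mathrm{d}s, \qquad 0 < q_i < 1.
```

The integral runs over the whole past of $x$, weighted by the kernel $(t-s)^{-q_i}$; this non-local weighting is the memory effect that motivates the fractional-order formulation.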

The appropriate initial condition of system (5) is stated as follows:

Where the delay parameter denotes the time delay and the initial value denotes a constant.

3. Stability Analysis

Before discussing the stability of the neural network model, it is necessary to calculate the incommensurate fractional orders of all the state variables. For fixed values of the included parameters, Equation (5) for the neural network model leads to a fractional-order system of the following form:

By choosing values of the involved parameters, the equilibrium points of model (5) are found and the Jacobian matrix is evaluated at each of them (details are omitted for the sake of brevity). On the basis of these equilibrium points, the upper bounds of the incommensurate fractional orders are calculated using the necessary condition for system A to be stable [43], which requires the following condition to be satisfied:

where the first quantity is the incommensurate fractional order and the second is the corresponding eigenvalue of the system.
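As a hedged illustration of this step, the sketch below assumes the per-eigenvalue form of the condition that is commonly used for incommensurate orders, |arg(λ_i)| > q_i π/2, so that the upper bound for the i-th order is (2/π)|arg(λ_i)|, capped at 1. The 2x2 Jacobian is hypothetical (it is not the Jacobian of model (5)), and the function names are illustrative.

```python
import cmath
import math


def eig2x2(a, b, c, d):
    """Eigenvalues of the 2x2 matrix [[a, b], [c, d]] via the quadratic formula."""
    tr = a + d
    det = a * d - b * c
    disc = cmath.sqrt(tr * tr - 4 * det)
    return (tr + disc) / 2, (tr - disc) / 2


def order_upper_bound(lam):
    """Supremum of orders q in (0, 1] satisfying |arg(lam)| > q * pi / 2.

    Assumed per-eigenvalue condition; a negative real eigenvalue (arg = pi)
    imposes no restriction, so the bound is capped at 1.
    """
    return min(2.0 * abs(cmath.phase(lam)) / math.pi, 1.0)


def is_stable(orders, eigenvalues):
    """Check |arg(lambda_i)| > q_i * pi / 2 for every (order, eigenvalue) pair."""
    return all(abs(cmath.phase(lam)) > q * math.pi / 2
               for q, lam in zip(orders, eigenvalues))


# Hypothetical Jacobian at an equilibrium, with eigenvalues 0.1 +/- 1j.
eigs = eig2x2(0.1, -1.0, 1.0, 0.1)
print([order_upper_bound(lam) for lam in eigs])  # both close to 0.9365
print(is_stable([0.9, 0.9], eigs), is_stable([0.95, 0.95], eigs))  # True False
```

An eigenvalue with a small positive real part but a large imaginary part still admits orders below the bound, which is why a fractional-order system can be stable where its integer-order counterpart is not.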

The corresponding incommensurate fractional orders are then determined from these upper bounds. The incommensurate fractional orders that lie in the first Riemann sheet are physically stable, whereas the one that does not lie in the stable region is not physical.

It is possible to improve the reliability and stability of neural networks for a variety of practical purposes. In neural network models, stability often refers to the ability of a network to generate reliable and consistent outputs in response to changing inputs or conditions, and it must be ensured for neural networks to be successfully implemented in many kinds of applications. A few essential points about neural network stability follow. When trained on a given data set, a stable neural network should consistently converge to its solution; a network that fails to converge, or converges to different solutions for identical inputs, can be regarded as unstable. Robustness to changes in input information, noisy environments, or perturbations is another component of stability: a stable network should still provide trustworthy results when the input data are slightly modified or shifted. A stable neural network also generalizes well to new data; it should not be overly sensitive to slight changes in the input features or over-fit the training set. Weight initialization techniques that prevent gradients from exploding or vanishing during training help artificial neural networks become more stable. Stable training also requires selecting an appropriate learning rate, since high learning rates can cause instability, which in turn can result in oscillations or divergence during training. Finally, regularization methods make neural networks more stable and less prone to over-fitting.
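The remark that high learning rates cause oscillation or divergence can be seen in the simplest possible case: gradient descent on the one-dimensional quadratic f(w) = a w^2 / 2. This toy loss is an illustrative assumption, not a model from this work. Each step multiplies w by (1 - lr * a), so the iteration converges only when 0 < lr < 2 / a, and it alternates in sign when lr > 1 / a.

```python
def gradient_descent_quadratic(a, lr, w0=1.0, steps=50):
    """Run gradient descent on f(w) = a * w**2 / 2, whose gradient is a * w."""
    w = w0
    history = [w]
    for _ in range(steps):
        w -= lr * a * w  # each step scales w by (1 - lr * a)
        history.append(w)
    return history


for lr in (0.5, 1.9, 2.5):
    final = gradient_descent_quadratic(a=1.0, lr=lr)[-1]
    print(f"lr={lr}: final w = {final:.3e}")
```

With a = 1, lr = 0.5 converges monotonically, lr = 1.9 converges while oscillating, and lr = 2.5 exceeds 2 / a and diverges.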

Artificial neural networks represent a novel form of dynamical system, and the theory of dynamical systems is powerful because it clarifies the solutions of non-linear dynamical systems. A neural network model is considered stable when it identifies meaningful patterns in the training data and reliably and accurately forecasts unseen data. The model's architecture, training process, and the quantity and quality of the data are a few of the factors that can affect its stability.

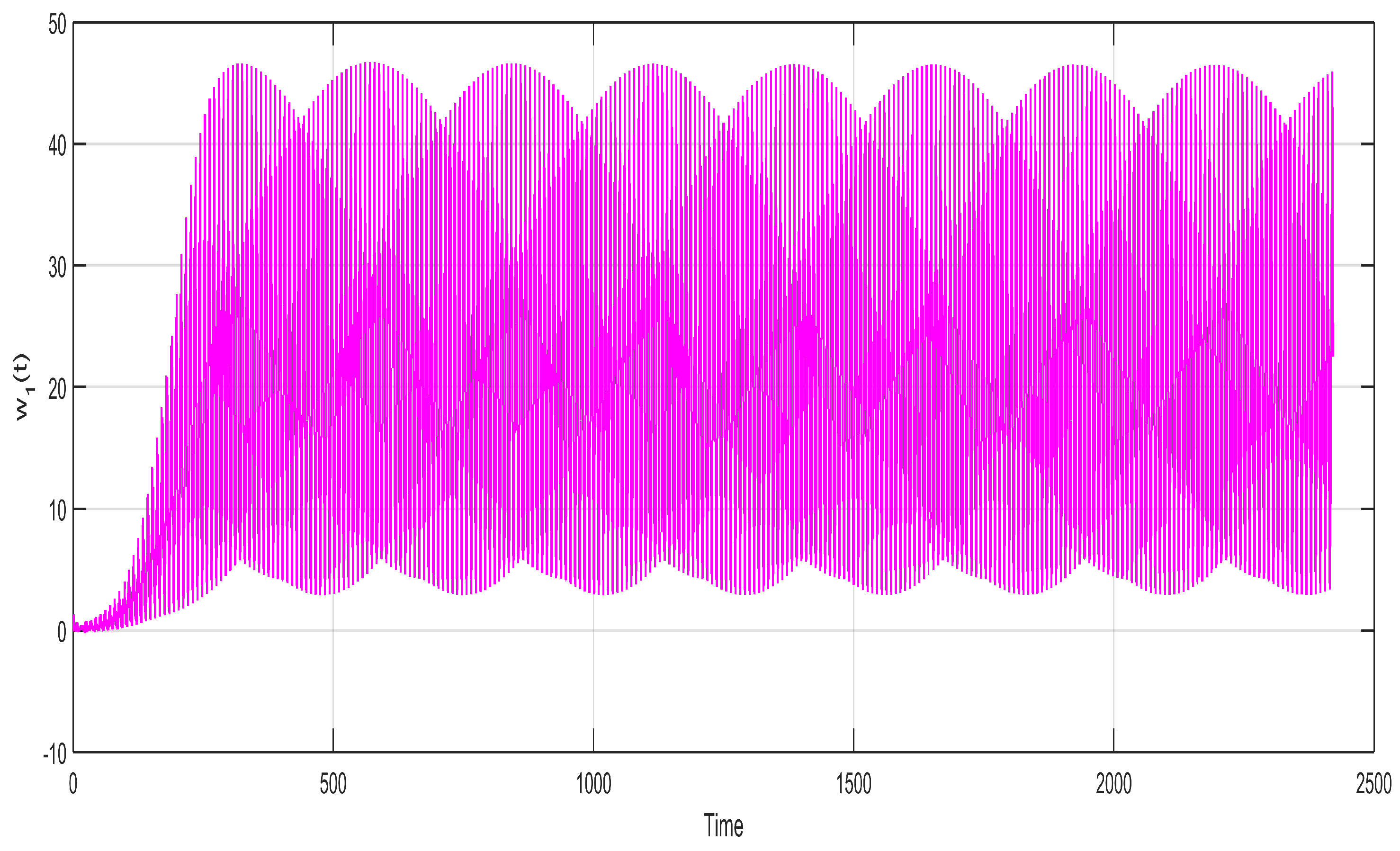

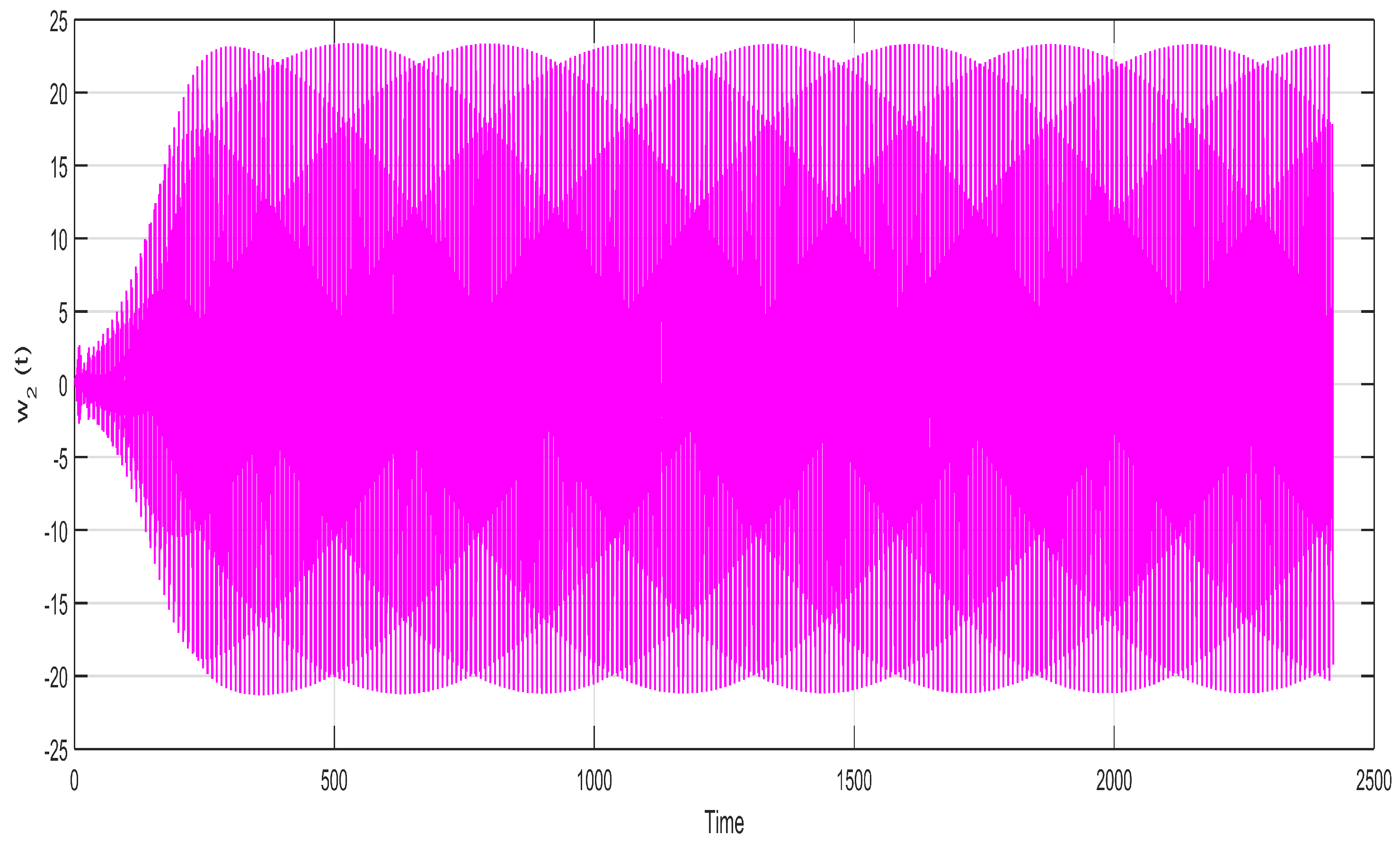

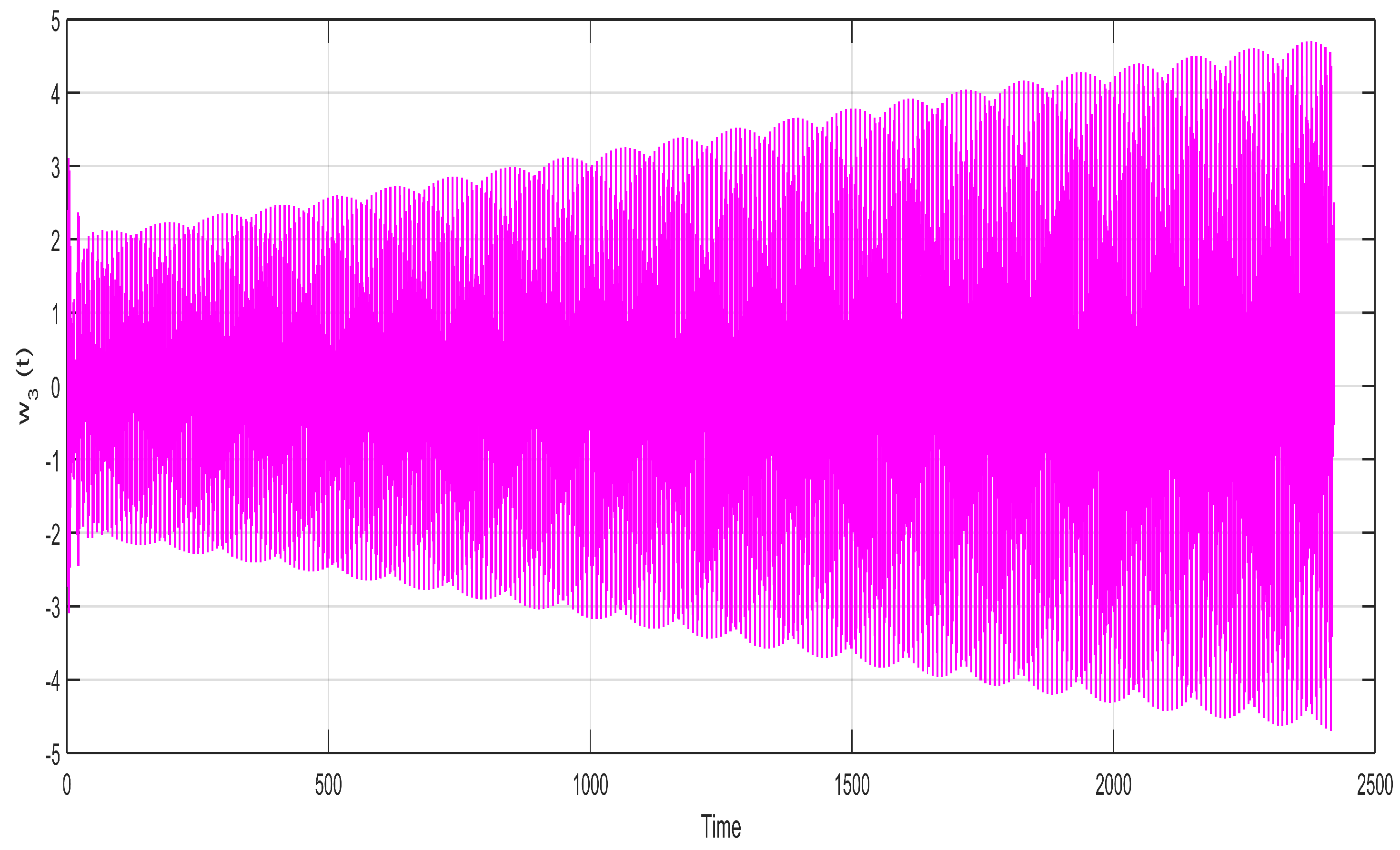

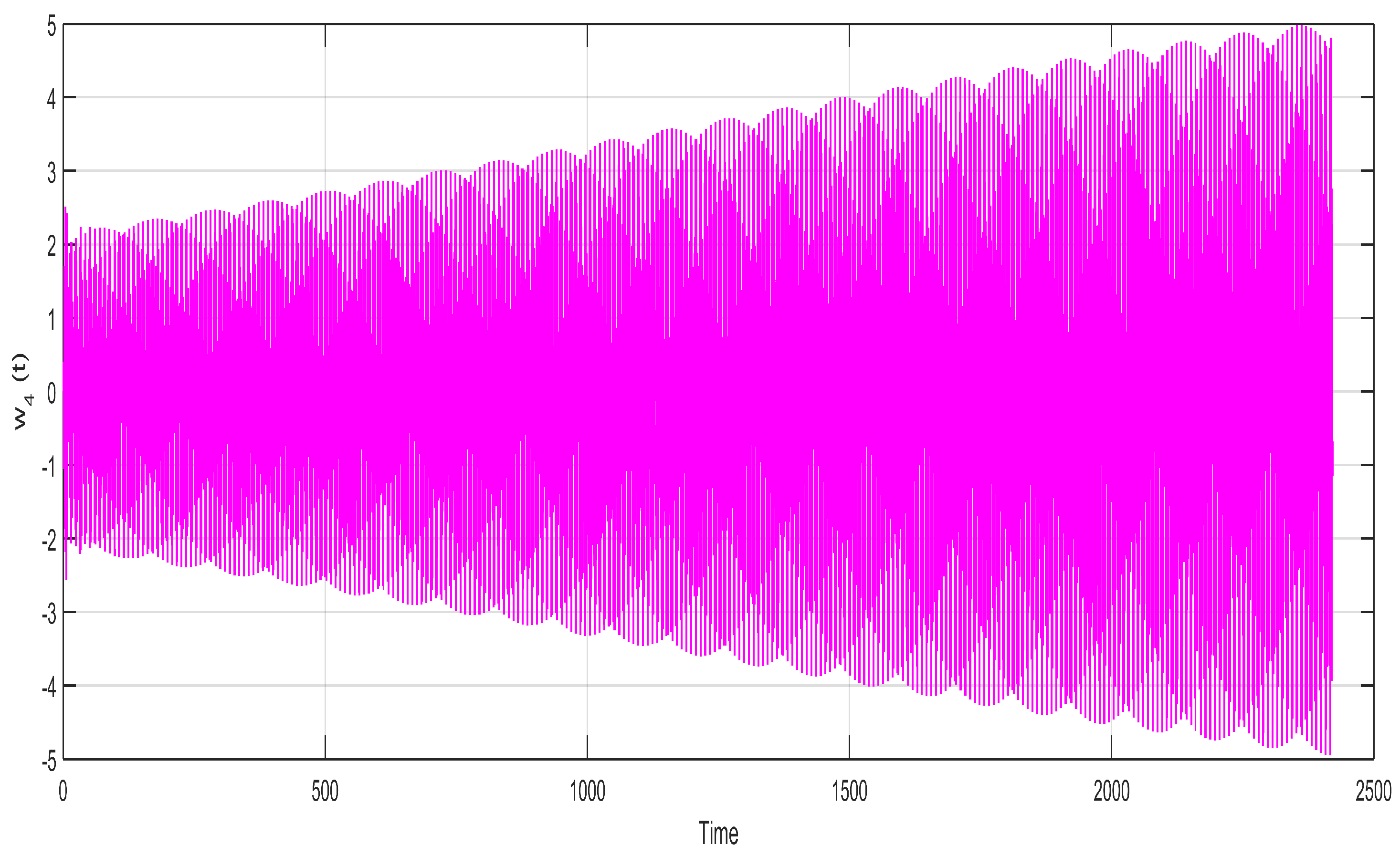

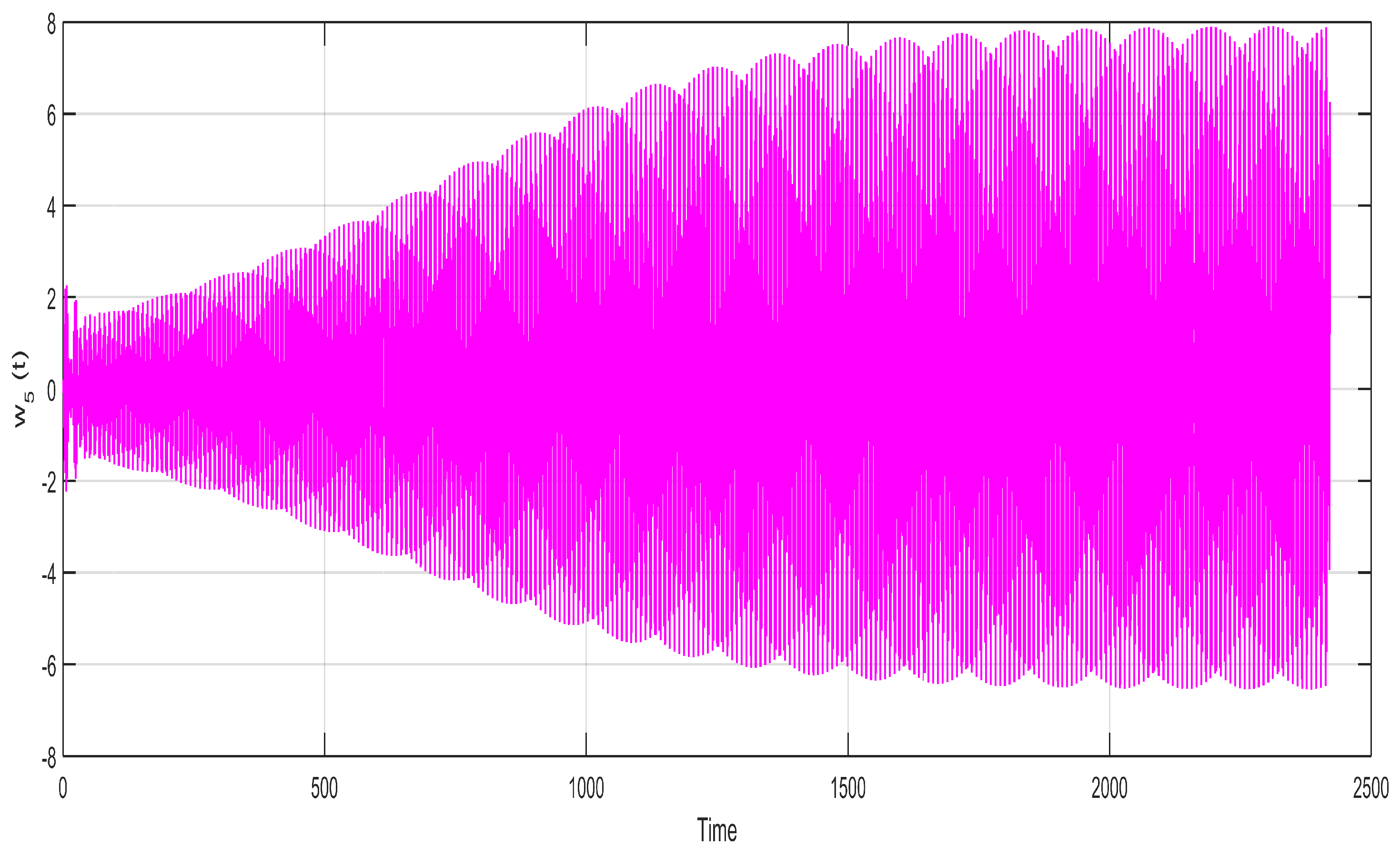

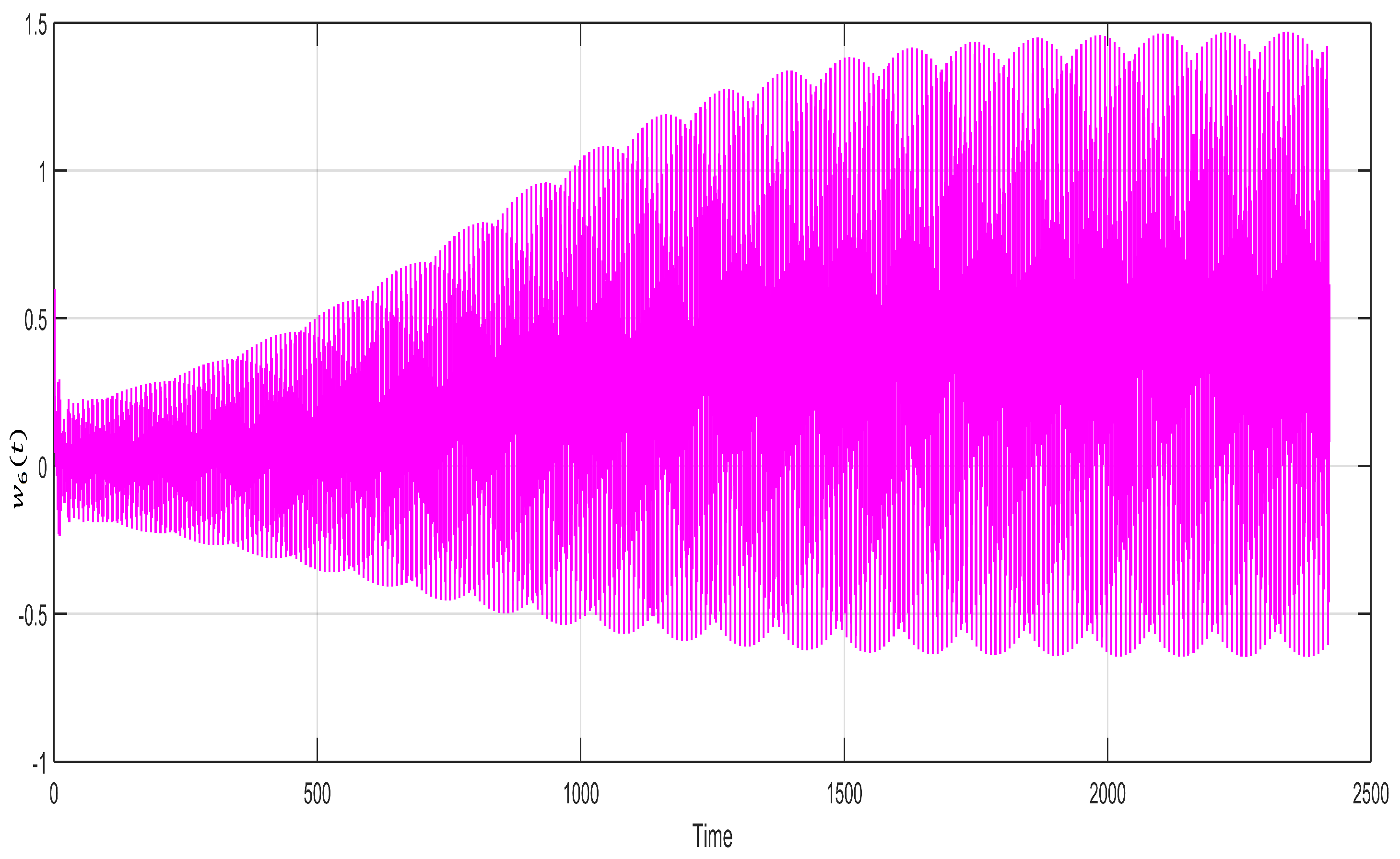

5. Results and Discussion

Figure 2, Figure 3, Figure 4, Figure 5, Figure 6 and Figure 7 show the state variables of the neurons against time t and present a uniform and stable two-layer picture of the neuron states as time passes. This uniform and stable behaviour results from choosing the calculated incommensurate fractional orders within the region of stability, with the system parameters held at fixed values. The figures are drawn to show how the neuron states vary with time. The stability of the fractional neural network model (5) is simulated under the neuron process state, and the simulations of system (5) show that each state variable approaches zero. This suggests that the null equilibrium point of the fractional-order system is locally asymptotically stable, which satisfies system (8). All these graphs are plotted for the calculated incommensurate fractional orders, varied slightly while kept within the stable region.
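The text does not specify the numerical scheme behind these figures. As a hedged sketch, the code below applies an explicit Grünwald-Letnikov scheme (assuming Caputo derivatives, handled by subtracting the initial value) to a hypothetical two-neuron delayed system with incommensurate orders and a constant initial history. The equations, parameter values and function names are illustrative and are not system (5).

```python
import math


def gl_coefficients(q, n):
    """Grünwald-Letnikov weights c_j = (-1)**j * binom(q, j) for j = 0..n."""
    c = [1.0]
    for j in range(1, n + 1):
        c.append((1.0 - (1.0 + q) / j) * c[-1])
    return c


def simulate_delayed_pair(q=(0.9, 0.95), w_xy=0.5, w_yx=0.5, tau=1.0,
                          history=(0.4, -0.3), h=0.05, T=30.0):
    """Explicit GL scheme for the hypothetical system
        D^{q1} x(t) = -x(t) + w_xy * tanh(y(t - tau))
        D^{q2} y(t) = -y(t) + w_yx * tanh(x(t - tau))
    with Caputo derivatives and constant history (x, y) = history for t <= 0.
    """
    n = int(round(T / h))
    lag = int(round(tau / h))
    x0, y0 = history
    cx, cy = gl_coefficients(q[0], n), gl_coefficients(q[1], n)
    xs, ys = [x0], [y0]
    for k in range(1, n + 1):
        i = k - 1 - lag                       # grid index of t_{k-1} - tau
        xd = xs[i] if i >= 0 else x0          # constant history before t = 0
        yd = ys[i] if i >= 0 else y0
        fx = -xs[k - 1] + w_xy * math.tanh(yd)
        fy = -ys[k - 1] + w_yx * math.tanh(xd)
        # Memory terms: weighted sums over the entire past trajectory.
        mx = sum(cx[j] * (xs[k - j] - x0) for j in range(1, k + 1))
        my = sum(cy[j] * (ys[k - j] - y0) for j in range(1, k + 1))
        xs.append(x0 + fx * h ** q[0] - mx)
        ys.append(y0 + fy * h ** q[1] - my)
    return xs, ys


xs, ys = simulate_delayed_pair()
print(xs[-1], ys[-1])  # both small: the states approach the zero equilibrium
```

Because the memory term sums over the whole past, the cost grows quadratically with the number of steps, and the decay toward zero is algebraic rather than exponential, which is typical of fractional-order systems.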

Figure 2, Figure 3, Figure 4, Figure 5, Figure 6 and Figure 7 depict stable and converged trajectories of the different neuron states over time. The neuron states become unstable whenever the incommensurate fractional orders exceed their upper bounds, as calculated in Section 3.

Figure 2.

State variable of a neuron for fixed parameter values and the calculated incommensurate fractional orders.


Figure 3.

State variable of a neuron for fixed parameter values and the calculated incommensurate fractional orders.


Figure 4.

State variable of a neuron for fixed parameter values and the calculated incommensurate fractional orders.


Figure 5.

State variable of a neuron for fixed parameter values and the calculated incommensurate fractional orders.


Figure 6.

State variable of a neuron for fixed parameter values and the calculated incommensurate fractional orders.


Figure 7.

State variable of a neuron for fixed parameter values and the calculated incommensurate fractional orders.


Neurons play an important role in the human brain and in other parts of the body's processing, such as receiving signals from and transmitting signals to the affected body part. Artificial neural networks process information in a way similar to the human brain. The human brain is a very complex organ that performs different tasks simultaneously, whereas ANNs are limited in their ability to perform different functions accurately and precisely at the same time. When a neuron receives information from another neuron in a confined area, its activity level allows the impulse to be transferred to another neuron. A neuron can fire only one signal at a time, but that signal can reach multiple other neurons. Nevertheless, artificial neural networks aim to perform the majority of tasks performed by the human brain.

In neural network models, the variables that describe the state of the network during training are referred to as state variables. These quantities, which include weights, biases, and activations, vary as the network gains experience. During training, they are modified iteratively to reduce the loss function and improve performance, and they are considered stable when they do not vary much over time. A weight is the value carried by the (signal-carrying) connection between two neurons. Like a weight, a bias is also a value; every neuron that is not in the input layer has a bias. The activation of a neuron is the output obtained by applying its activation function to the weighted sum of its inputs plus its bias.
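The roles of weights, biases and activations described above can be made concrete for a single neuron. This sketch assumes a tanh activation for the forward pass and, for the update step, a linear neuron with squared-error loss; these choices and the function names are illustrative and are not taken from the model studied here.

```python
import math


def neuron_output(inputs, weights, bias, activation=math.tanh):
    """Activation of one neuron: activation(sum_i w_i * x_i + b)."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


def sgd_step(inputs, weights, bias, target, lr=0.1):
    """One gradient step for a linear neuron with loss 0.5 * (y - target)**2."""
    y = neuron_output(inputs, weights, bias, activation=lambda z: z)
    err = y - target                                   # dLoss/dy
    new_weights = [w - lr * err * x for w, x in zip(weights, inputs)]
    new_bias = bias - lr * err                         # dLoss/db = err
    return new_weights, new_bias


# Weights and bias are the state variables that training adjusts iteratively.
w, b = [0.0, 0.0], 0.0
for _ in range(100):
    w, b = sgd_step([1.0, 2.0], w, b, target=1.0)
print(neuron_output([1.0, 2.0], w, b, activation=lambda z: z))  # close to 1.0
```

Once the output matches the target, further steps change the weights and bias very little, which is the sense in which the state variables become stable.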

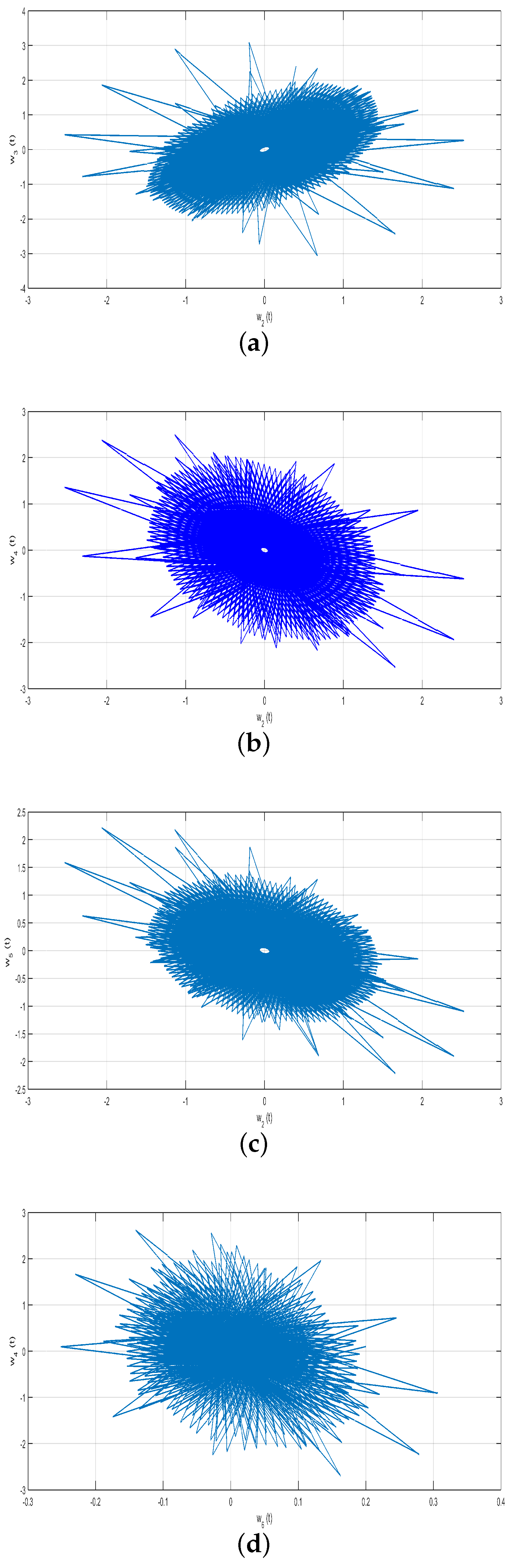

The regions of stability, synchronization, and chaos are classified on the basis of the calculated incommensurate fractional orders through the corresponding eigenvalues and their equilibrium points. An eigenvalue lying outside the stable part of the Riemann sheet region leads to the detection of chaos, whereas physical roots of the incommensurate fractional-order system situated in the stable part of the Riemann sheet region maintain the stability of the system. Synchronization refers to the coordination of different tasks to ensure data consistency and avoid conflicts when accessing common resources, which enables the smooth and effective operation of diverse elements.
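The Riemann-sheet classification can be sketched for rational orders q_i = k_i / m. Substituting s = w^m turns det(diag(s^{q_i}) - J) = 0 into a polynomial in w; the system is asymptotically stable when every root satisfies |arg w| > π/(2m), and roots with |arg w| < π/m lie in the first Riemann sheet and are the physical ones. The example uses a hypothetical 2x2 Jacobian, the helper names are illustrative, and the Durand-Kerner root finder is a swapped-in choice that keeps the sketch dependency-free.

```python
import cmath
import math
from fractions import Fraction


def rational_orders(orders, max_den=100):
    """Write orders q_i = k_i / m with a common denominator m."""
    fracs = [Fraction(q).limit_denominator(max_den) for q in orders]
    m = 1
    for f in fracs:
        m = math.lcm(m, f.denominator)
    return [int(f * m) for f in fracs], m


def poly_mul(p, q):
    """Multiply polynomials stored lowest-degree coefficient first."""
    r = [0j] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            r[i + j] += a * b
    return r


def char_poly_2x2(J, k):
    """det(diag(w**k1, w**k2) - J) for a 2x2 Jacobian J, lowest degree first."""
    def shifted_monomial(power, a):  # coefficients of w**power - a
        p = [0j] * (power + 1)
        p[0] -= a
        p[power] += 1
        return p
    p = poly_mul(shifted_monomial(k[0], J[0][0]), shifted_monomial(k[1], J[1][1]))
    p[0] -= J[0][1] * J[1][0]
    return p


def poly_roots(p, iters=2000, tol=1e-14):
    """All complex roots via the Durand-Kerner (Weierstrass) iteration."""
    coeffs = [c / p[-1] for c in p]  # make the polynomial monic
    n = len(coeffs) - 1

    def evaluate(z):
        acc = 0j
        for c in reversed(coeffs):
            acc = acc * z + c
        return acc

    roots = [(0.4 + 0.9j) ** i for i in range(n)]
    for _ in range(iters):
        delta = 0.0
        for i in range(n):
            denom = 1 + 0j
            for j in range(n):
                if j != i:
                    denom *= roots[i] - roots[j]
            step = evaluate(roots[i]) / denom
            roots[i] -= step
            delta = max(delta, abs(step))
        if delta < tol:
            break
    return roots


def classify(J, orders):
    """Return (stable, physical_roots, m) from the w-plane test with s = w**m."""
    k, m = rational_orders(orders)
    roots = poly_roots(char_poly_2x2(J, k))
    physical = [w for w in roots if abs(cmath.phase(w)) < math.pi / m]
    stable = all(abs(cmath.phase(w)) > math.pi / (2 * m) for w in roots)
    return stable, physical, m


print(classify([[-1.0, 2.0], [-2.0, -1.0]], (0.5, 0.5))[0])  # True
print(classify([[0.5, 0.0], [0.0, -1.0]], (0.5, 0.5))[0])    # False
```

For equal orders q = 1/m' this test reduces to the familiar condition |arg λ| > qπ/2 on the eigenvalues of J; for unequal orders the polynomial degree k_1 + k_2 grows with the common denominator m.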

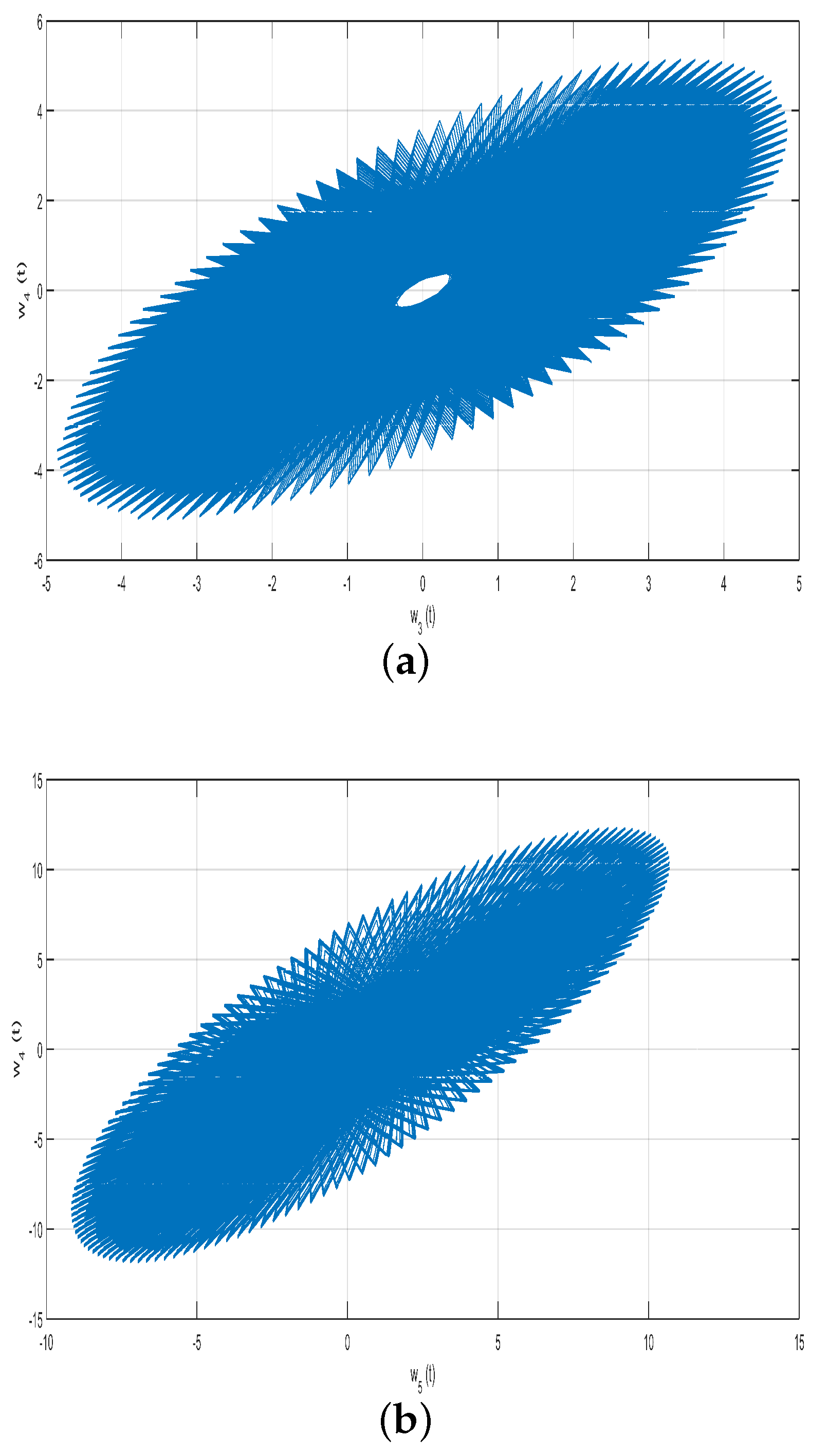

Weight synchronization may refer to algorithms used in machine learning or optimization problems whose objective is to modify the weights assigned to various neuron states in a model. Through this procedure, the relative importance of each neuron state is adjusted to maximize accuracy and performance. Stability and synchronization are two significant dynamical behaviours of delayed neural networks; in particular, synchronization in neural networks can be induced by delay. Under the neuron process state, the synchronization and stability of the fractional neural network model (7) are simulated and depicted in Figure 8 and Figure 9. These simulations are performed for the calculated values of the incommensurate fractional orders. The synchronization, stability, and chaotic effects of the different state variables are shown in Figure 8(a–d).

Figure 8(a) shows a congested stable region within a bounded interval and a chaotic effect beyond this interval for the neuron state synchronized against another state variable. On the other hand, for the synchronized pair of state variables in Figure 8(b), the stable region is slightly less congested and uniformly distributed within the same interval, while the chaotic effect is reduced all around. The enhanced stability in Figure 8(b) is due to the exclusion of two neuron states from the fourth equation of system (7). Excluding these neuron states reduces the time-delay effect compared with the third equation of system (7), and the reduced time delay gives the state variable in Figure 8(b) more relaxation time to stabilize than the state variable in Figure 8(a).

Figure 8(c) shows stable behaviour within an interval and a chaotic effect beyond this interval for the corresponding pair of neuron states. Figure 8(d) loses stability compared with Figure 8(a–c). All the state variables continue to show a chaotic influence around zero in Figure 8 and Figure 9, implying that system (7) is unstable around the null equilibrium point.

Figure 9(a) shows the synchronization between two state variables, which appears very stable: it is relaxed and uniformly distributed with the passage of time. In contrast, Figure 9(b) shows a slight loss of stability for the synchronization of the other pair of state variables, together with increased chaotic behaviour. The enhanced stability in Figure 9(a) is attributed to the extra parameter b associated with the neuron state in the corresponding dynamical equation of system (5).