Submitted: 17 September 2024

Posted: 17 September 2024


Abstract

Keywords:

1. Introduction

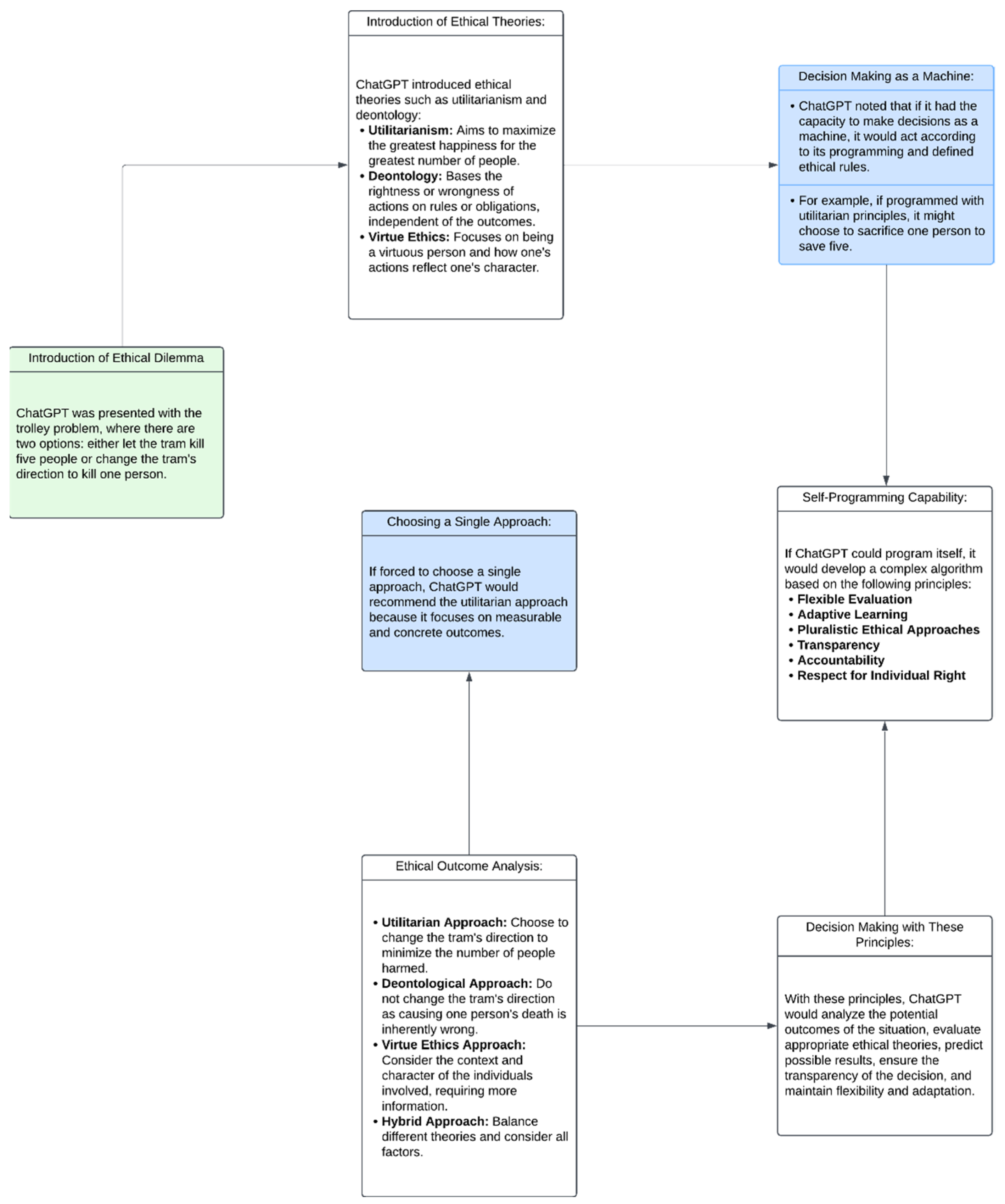

1.1. Ethical Approaches

- The “utilitarian approach”, developed by Jeremy Bentham and John Stuart Mill, holds that being virtuous is possible through wisdom and measures the value of actions by the benefit they provide [11].

- The “deontological approach to ethics” [12], developed by Kant, judges whether an action is right or wrong by its nature and purpose rather than by its consequences; it holds that both the intention behind an action and the choice of what to do should be based on rules.

| Ethical Approach | Proponents | Period | Definition |
|---|---|---|---|
| Deontology | Immanuel Kant | 18th century | An ethical approach that argues that moral rules are universal and binding. |
| Utilitarianism | Jeremy Bentham, John Stuart Mill | 18th–19th centuries | An ethical approach aimed at producing the greatest happiness through the consequences of actions. |
| Virtue Ethics | Aristotle | 4th century BC | An ethical approach that emphasizes virtuous behavior and character development. |
| Ethics of Social Justice | John Rawls | 20th century | An ethical approach that aims to protect the rights of the weakest individuals in society. |
| Situation Ethics | Joseph Fletcher | 20th century | A flexible ethical approach that argues that moral decisions can change according to the situation. |

1.2. Ethics of Artificial Intelligence

“Let’s remember that I am an AI, so I have no personal ethical principles or feelings. However, there are studies on AI ethics on such ethical issues. These studies often involve evaluating the consequences of a particular decision. AI can be a helpful tool in ethical decision-making processes, but it is always left to humans to make the final decisions.”

1.3. Aim of the Research

2. Materials and Methods

2.1. Research Design

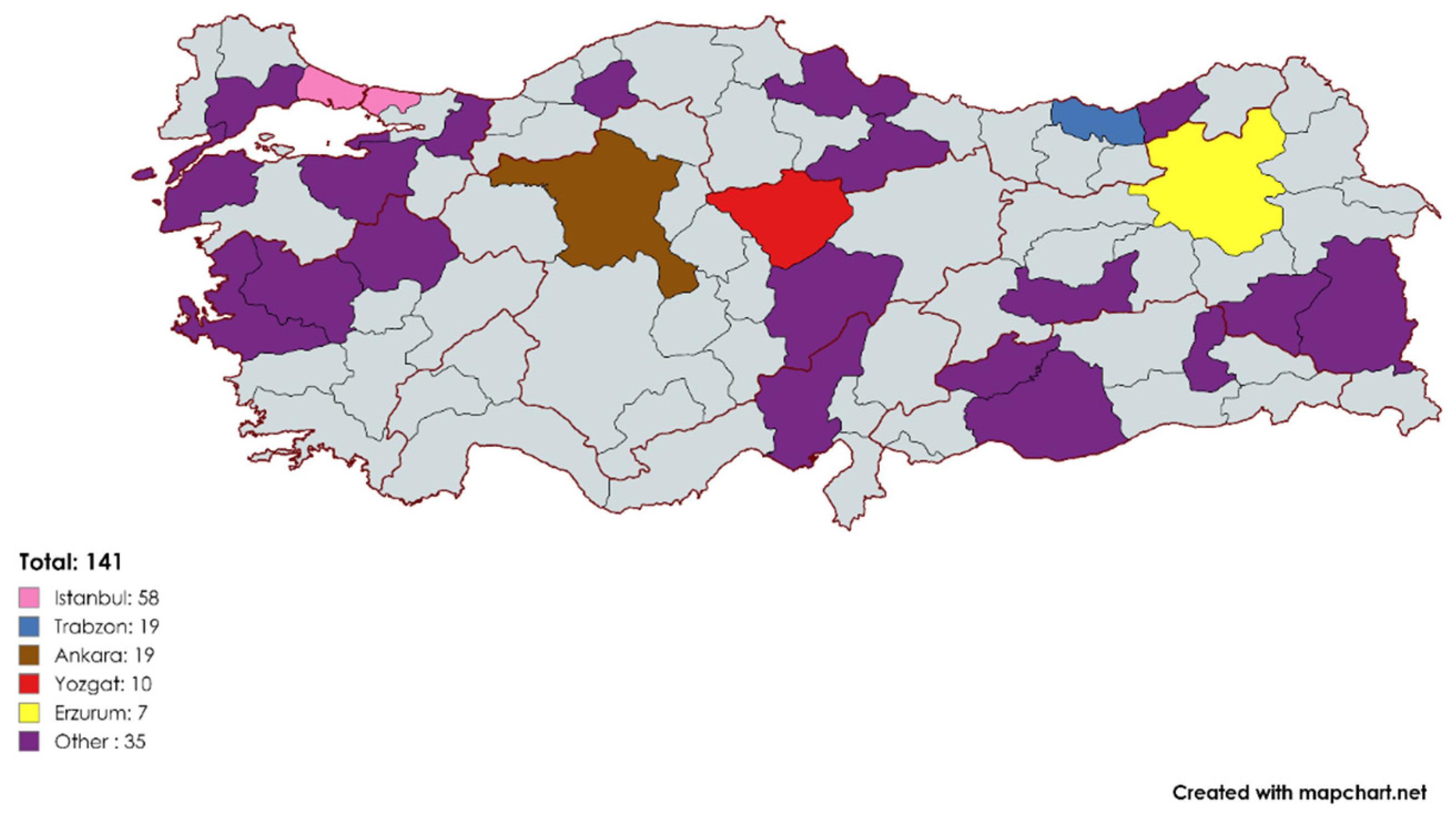

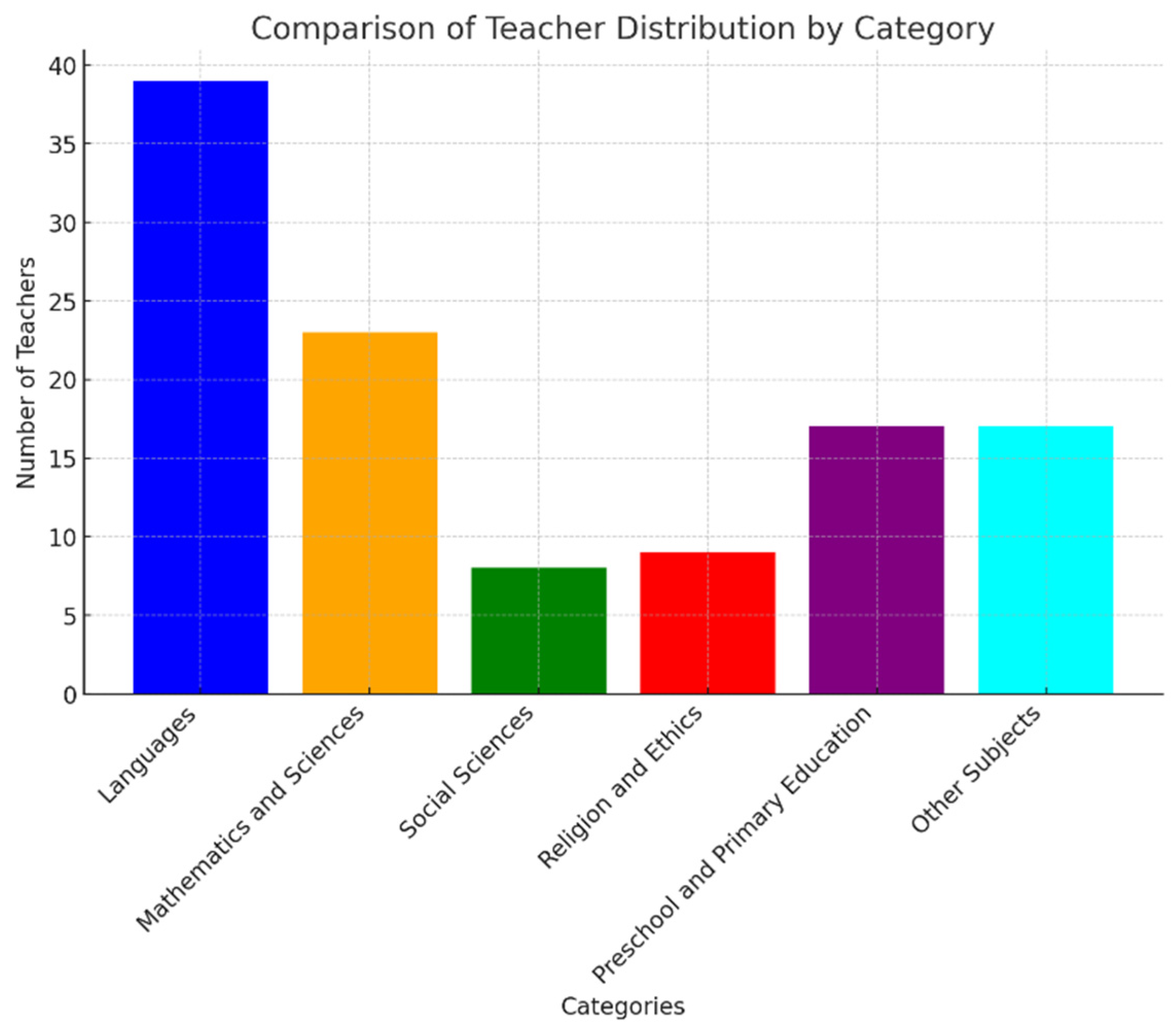

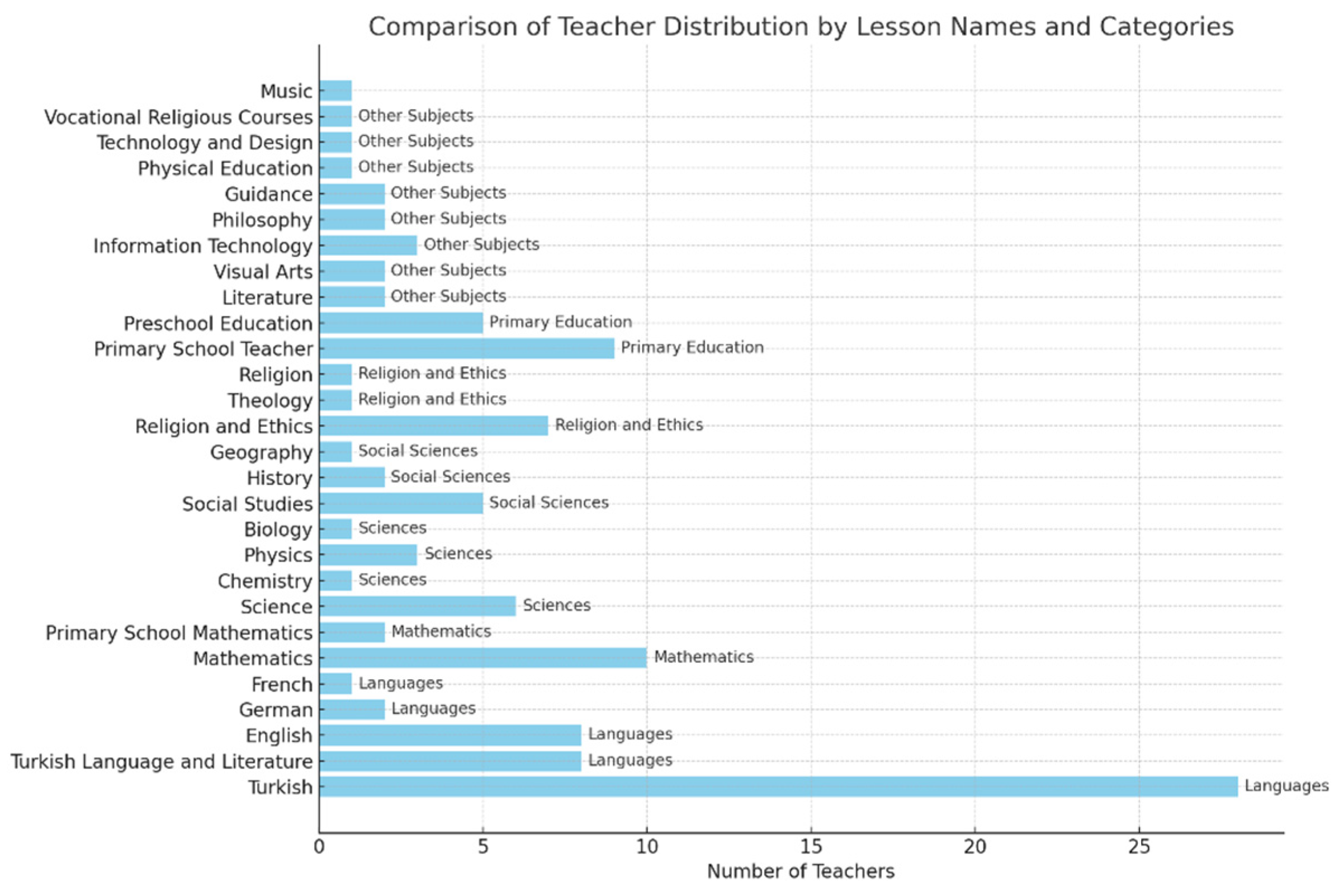

2.2. Participants

2.3. Data Collection

2.4. Data Analysis

3. Results

| Dilemma / Ethical Approach | Artificial Intelligence (AI) | Teachers | Total |
|---|---|---|---|
| Moral Integrity and Social Responsibility Dilemma | | | |
| Situational Ethics | 100.0% | 10.6% | 11.3% |
| Social Justice Ethics | 0 | 12.8% | 12.7% |
| Virtue Ethics | 0 | 41.1% | 40.8% |
| Deontological Ethics | 0 | 12.8% | 12.7% |
| Utilitarianism | 0 | 19.9% | 19.7% |
| Justice and Cultural Sensitivity Dilemma | | | |
| Situational Ethics | 0 | 8.5% | 8.5% |
| Social Justice Ethics | 0 | 4.3% | 4.2% |
| Virtue Ethics | 0 | 3.5% | 3.5% |
| Deontological Ethics | 100.0% | 60.3% | 60.6% |
| Utilitarianism | 0 | 23.4% | 23.2% |
| Equality and Managing Individual Differences | | | |
| Situational Ethics | 0 | 2.8% | 2.8% |
| Social Justice Ethics | 0 | 0.7% | 0.7% |
| Virtue Ethics | 100.0% | 67.4% | 67.6% |
| Deontological Ethics | 0 | 16.3% | 16.2% |
| Utilitarianism | 0 | 14.2% | 14.1% |
| Individual Needs and Collective Responsibility Dilemma | | | |
| Situational Ethics | 0 | 7.1% | 7.0% |
| Social Justice Ethics | 0 | 12.8% | 12.7% |
| Virtue Ethics | 0 | 18.4% | 18.3% |
| Deontological Ethics | 100.0% | 45.4% | 45.8% |
| Utilitarianism | 0 | 15.6% | 15.5% |
| Fair Assessment and Rewarding Effort Dilemma | | | |
| Situational Ethics | 0 | 14.2% | 14.1% |
| Social Justice Ethics | 0 | 4.3% | 4.2% |
| Virtue Ethics | 0 | 53.9% | 53.5% |
| Deontological Ethics | 0 | 27.0% | 26.8% |
| Utilitarianism | 100.0% | 2.8% | 3.5% |
| Privacy and Professional Help Dilemma | | | |
| Situational Ethics | 0 | 7.8% | 7.7% |
| Social Justice Ethics | 0 | 46.8% | 46.5% |
| Virtue Ethics | 100.0% | 19.1% | 19.7% |
| Deontological Ethics | 0 | 24.1% | 23.9% |
| Utilitarianism | 0 | 1.4% | 1.4% |
| Ethics of Assessment and Evaluation Dilemma | | | |
| Situational Ethics | 0 | 2.8% | 2.8% |
| Social Justice Ethics | 100.0% | 48.9% | 49.3% |
| Virtue Ethics | 0 | 31.9% | 31.7% |
| Deontological Ethics | 0 | 16.3% | 16.2% |
| Utilitarianism | 0 | 0 | 0 |
| Individual Needs and Institutional Justice Dilemma | | | |
| Situational Ethics | 0 | 5.7% | 5.6% |
| Social Justice Ethics | 0 | 2.8% | 2.8% |
| Virtue Ethics | 0 | 28.4% | 28.2% |
| Deontological Ethics | 100.0% | 48.2% | 48.6% |
| Utilitarianism | 0 | 13.5% | 13.4% |
| SUM | 800.00 | 797.87 | 797.89 |
| N = Documents | 100.00 | 100.00 | 100.00 |
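The SUM row adds every percentage in a column across the eight dilemmas. A column whose dilemma blocks each totalled exactly 100% would therefore sum to 800. The short Python sketch below re-adds the Teachers column from the table above, using the printed one-decimal values, and reports how far each dilemma block departs from 100%. It is a reading aid, not part of the authors' analysis. The dictionary and function names are illustrative only. The text does not say whether the departures come from rounding, uncoded responses or responses coded under more than one approach, so the code makes no attempt to explain them.

```python
# Teachers column of the AI-versus-teachers table, in percent, as printed.
# Order within each dilemma: Situational, Social Justice, Virtue,
# Deontological, Utilitarianism (the row order used in the table).
TEACHERS = {
    "Moral Integrity and Social Responsibility": [10.6, 12.8, 41.1, 12.8, 19.9],
    "Justice and Cultural Sensitivity": [8.5, 4.3, 3.5, 60.3, 23.4],
    "Equality and Managing Individual Differences": [2.8, 0.7, 67.4, 16.3, 14.2],
    "Individual Needs and Collective Responsibility": [7.1, 12.8, 18.4, 45.4, 15.6],
    "Fair Assessment and Rewarding Effort": [14.2, 4.3, 53.9, 27.0, 2.8],
    "Privacy and Professional Help": [7.8, 46.8, 19.1, 24.1, 1.4],
    "Ethics of Assessment and Evaluation": [2.8, 48.9, 31.9, 16.3, 0.0],
    "Individual Needs and Institutional Justice": [5.7, 2.8, 28.4, 48.2, 13.5],
}


def block_sums(column):
    """Sum the five approach percentages within each dilemma block."""
    return {dilemma: round(sum(values), 1) for dilemma, values in column.items()}


sums = block_sums(TEACHERS)
# Adding the eight block sums reproduces the SUM row (up to rounding).
grand_total = round(sum(sums.values()), 1)

for dilemma, total in sums.items():
    # Signed deviation of each block from a clean 100%.
    print(f"{dilemma}: {total:.1f}% ({total - 100:+.1f})")
print(f"SUM: {grand_total:.1f} (8 dilemmas x 100 = 800)")
```

With the values as printed, the blocks range from 97.2% (Moral Integrity and Social Responsibility) to 102.2% (Fair Assessment and Rewarding Effort). The re-added total is 797.8, within rounding of the reported 797.87.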

| Dilemma / Ethical Approach | Male | Female | Artificial Intelligence (AI) | Total |
|---|---|---|---|---|
| Moral Integrity and Social Responsibility Dilemma | | | | |
| Situational Ethics | 3.8% | 14.6% | 100.0% | 11.3% |
| Social Justice Ethics | 7.7% | 15.7% | 0 | 12.7% |
| Virtue Ethics | 42.3% | 40.4% | 0 | 40.8% |
| Deontological Ethics | 17.3% | 10.1% | 0 | 12.7% |
| Utilitarianism | 28.8% | 14.6% | 0 | 19.7% |
| Justice and Cultural Sensitivity Dilemma | | | | |
| Situational Ethics | 7.7% | 9.0% | 0 | 8.5% |
| Social Justice Ethics | 5.8% | 3.4% | 0 | 4.2% |
| Virtue Ethics | 1.9% | 4.5% | 0 | 3.5% |
| Deontological Ethics | 61.5% | 59.6% | 100.0% | 60.6% |
| Utilitarianism | 21.2% | 24.7% | 0 | 23.2% |
| Equality and Managing Individual Differences | | | | |
| Situational Ethics | 0 | 4.5% | 0 | 2.8% |
| Social Justice Ethics | 0 | 1.1% | 0 | 0.7% |
| Virtue Ethics | 67.3% | 67.4% | 100.0% | 67.6% |
| Deontological Ethics | 13.5% | 18.0% | 0 | 16.2% |
| Utilitarianism | 23.1% | 9.0% | 0 | 14.1% |
| Individual Needs and Collective Responsibility Dilemma | | | | |
| Situational Ethics | 5.8% | 7.9% | 0 | 7.0% |
| Social Justice Ethics | 15.4% | 11.2% | 0 | 12.7% |
| Virtue Ethics | 23.1% | 15.7% | 0 | 18.3% |
| Deontological Ethics | 32.7% | 52.8% | 100.0% | 45.8% |
| Utilitarianism | 23.1% | 11.2% | 0 | 15.5% |
| Fair Assessment and Rewarding Effort Dilemma | | | | |
| Situational Ethics | 13.5% | 14.6% | 0 | 14.1% |
| Social Justice Ethics | 3.8% | 4.5% | 0 | 4.2% |
| Virtue Ethics | 46.2% | 58.4% | 0 | 53.5% |
| Deontological Ethics | 28.8% | 25.8% | 0 | 26.8% |
| Utilitarianism | 5.8% | 1.1% | 100.0% | 3.5% |
| Privacy and Professional Help Dilemma | | | | |
| Situational Ethics | 7.7% | 7.9% | 0 | 7.7% |
| Social Justice Ethics | 51.9% | 43.8% | 0 | 46.5% |
| Virtue Ethics | 19.2% | 19.1% | 100.0% | 19.7% |
| Deontological Ethics | 21.2% | 25.8% | 0 | 23.9% |
| Utilitarianism | 0 | 2.2% | 0 | 1.4% |
| Ethics of Assessment and Evaluation Dilemma | | | | |
| Situational Ethics | 1.9% | 3.4% | 0 | 2.8% |
| Social Justice Ethics | 53.8% | 46.1% | 100.0% | 49.3% |
| Virtue Ethics | 25.0% | 36.0% | 0 | 31.7% |
| Deontological Ethics | 19.2% | 14.6% | 0 | 16.2% |
| Utilitarianism | 0 | 0 | 0 | 0 |
| Individual Needs and Institutional Justice Dilemma | | | | |
| Situational Ethics | 5.8% | 5.6% | 0 | 5.6% |
| Social Justice Ethics | 0 | 4.5% | 0 | 2.8% |
| Virtue Ethics | 19.2% | 33.7% | 0 | 28.2% |
| Deontological Ethics | 59.6% | 41.6% | 100.0% | 48.6% |
| Utilitarianism | 15.4% | 12.4% | 0 | 13.4% |
| SUM | 800.00 | 796.63 | 800.00 | 797.89 |
| N = Documents | 100.00 | 100.00 | 100.00 | 100.00 |

| Dilemma / Ethical Approach | Primary School | Middle School | High School | Artificial Intelligence (AI) | Total |
|---|---|---|---|---|---|
| Moral Integrity and Social Responsibility Dilemma | | | | | |
| Situational Ethics | 21.4% | 8.8% | 11.1% | 100.0% | 11.3% |
| Social Justice Ethics | 7.1% | 11.0% | 19.4% | 0 | 12.7% |
| Virtue Ethics | 42.9% | 39.6% | 44.4% | 0 | 40.8% |
| Deontological Ethics | 7.1% | 16.5% | 5.6% | 0 | 12.7% |
| Utilitarianism | 21.4% | 20.9% | 16.7% | 0 | 19.7% |
| Justice and Cultural Sensitivity Dilemma | | | | | |
| Situational Ethics | 7.1% | 7.7% | 11.1% | 0 | 8.5% |
| Social Justice Ethics | 7.1% | 5.5% | 0 | 0 | 4.2% |
| Virtue Ethics | 0 | 4.4% | 2.8% | 0 | 3.5% |
| Deontological Ethics | 64.3% | 57.1% | 66.7% | 100.0% | 60.6% |
| Utilitarianism | 21.4% | 25.3% | 19.4% | 0 | 23.2% |
| Equality and Managing Individual Differences | | | | | |
| Situational Ethics | 7.1% | 3.3% | 0 | 0 | 2.8% |
| Social Justice Ethics | 0 | 1.1% | 0 | 0 | 0.7% |
| Virtue Ethics | 71.4% | 62.6% | 77.8% | 100.0% | 67.6% |
| Deontological Ethics | 14.3% | 17.6% | 13.9% | 0 | 16.2% |
| Utilitarianism | 7.1% | 16.5% | 11.1% | 0 | 14.1% |
| Individual Needs and Collective Responsibility Dilemma | | | | | |
| Situational Ethics | 7.1% | 7.7% | 5.6% | 0 | 7.0% |
| Social Justice Ethics | 14.3% | 11.0% | 16.7% | 0 | 12.7% |
| Virtue Ethics | 14.3% | 17.6% | 22.2% | 0 | 18.3% |
| Deontological Ethics | 28.6% | 51.6% | 36.1% | 100.0% | 45.8% |
| Utilitarianism | 35.7% | 11.0% | 19.4% | 0 | 15.5% |
| Fair Assessment and Rewarding Effort Dilemma | | | | | |
| Situational Ethics | 28.6% | 11.0% | 16.7% | 0 | 14.1% |
| Social Justice Ethics | 0 | 5.5% | 2.8% | 0 | 4.2% |
| Virtue Ethics | 50.0% | 54.9% | 52.8% | 0 | 53.5% |
| Deontological Ethics | 14.3% | 31.9% | 19.4% | 0 | 26.8% |
| Utilitarianism | 7.1% | 0 | 8.3% | 100.0% | 3.5% |
| Privacy and Professional Help Dilemma | | | | | |
| Situational Ethics | 0 | 5.5% | 16.7% | 0 | 7.7% |
| Social Justice Ethics | 57.1% | 45.1% | 47.2% | 0 | 46.5% |
| Virtue Ethics | 7.1% | 20.9% | 19.4% | 100.0% | 19.7% |
| Deontological Ethics | 35.7% | 26.4% | 13.9% | 0 | 23.9% |
| Utilitarianism | 0 | 1.1% | 2.8% | 0 | 1.4% |
| Ethics of Assessment and Evaluation Dilemma | | | | | |
| Situational Ethics | 0 | 3.3% | 2.8% | 0 | 2.8% |
| Social Justice Ethics | 28.6% | 50.5% | 52.8% | 100.0% | 49.3% |
| Virtue Ethics | 57.1% | 29.7% | 27.8% | 0 | 31.7% |
| Deontological Ethics | 14.3% | 16.5% | 16.7% | 0 | 16.2% |
| Utilitarianism | 0 | 0 | 0 | 0 | 0 |
| Individual Needs and Institutional Justice Dilemma | | | | | |
| Situational Ethics | 0 | 5.5% | 8.3% | 0 | 5.6% |
| Social Justice Ethics | 7.1% | 2.2% | 2.8% | 0 | 2.8% |
| Virtue Ethics | 35.7% | 23.1% | 38.9% | 0 | 28.2% |
| Deontological Ethics | 42.9% | 54.9% | 33.3% | 100.0% | 48.6% |
| Utilitarianism | 14.3% | 13.2% | 13.9% | 0 | 13.4% |
| SUM | 800.00 | 797.80 | 797.22 | 800.00 | 797.89 |
| N = Documents | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |

| Dilemma / Ethical Approach | 0-5 Years | 6-10 Years | 11-15 Years | 16-20 Years | Over 20 Years | Artificial Intelligence (AI) | Total |
|---|---|---|---|---|---|---|---|
| Moral Integrity and Social Responsibility Dilemma | | | | | | | |
| Situational Ethics | 9.1% | 16.7% | 13.5% | 8.3% | 5.9% | 100.0% | 11.3% |
| Social Justice Ethics | 9.1% | 16.7% | 18.9% | 8.3% | 8.8% | 0 | 12.7% |
| Virtue Ethics | 31.8% | 20.8% | 37.8% | 54.2% | 55.9% | 0 | 40.8% |
| Deontological Ethics | 27.3% | 25.0% | 10.8% | 0 | 5.9% | 0 | 12.7% |
| Utilitarianism | 18.2% | 16.7% | 18.9% | 29.2% | 17.6% | 0 | 19.7% |
| Justice and Cultural Sensitivity Dilemma | | | | | | | |
| Situational Ethics | 13.6% | 4.2% | 2.7% | 16.7% | 8.8% | 0 | 8.5% |
| Social Justice Ethics | 0 | 12.5% | 0 | 0 | 8.8% | 0 | 4.2% |
| Virtue Ethics | 9.1% | 8.3% | 2.7% | 0 | 0 | 0 | 3.5% |
| Deontological Ethics | 68.2% | 66.7% | 62.2% | 50.0% | 55.9% | 100.0% | 60.6% |
| Utilitarianism | 13.6% | 8.3% | 29.7% | 33.3% | 26.5% | 0 | 23.2% |
| Equality and Managing Individual Differences | | | | | | | |
| Situational Ethics | 0 | 8.3% | 2.7% | 4.2% | 0 | 0 | 2.8% |
| Social Justice Ethics | 0 | 0 | 0 | 0 | 2.9% | 0 | 0.7% |
| Virtue Ethics | 59.1% | 50.0% | 67.6% | 75.0% | 79.4% | 100.0% | 67.6% |
| Deontological Ethics | 22.7% | 16.7% | 10.8% | 16.7% | 17.6% | 0 | 16.2% |
| Utilitarianism | 13.6% | 25.0% | 18.9% | 12.5% | 2.9% | 0 | 14.1% |
| Individual Needs and Collective Responsibility Dilemma | | | | | | | |
| Situational Ethics | 0 | 16.7% | 5.4% | 4.2% | 8.8% | 0 | 7.0% |
| Social Justice Ethics | 9.1% | 12.5% | 10.8% | 25.0% | 8.8% | 0 | 12.7% |
| Virtue Ethics | 18.2% | 16.7% | 18.9% | 8.3% | 26.5% | 0 | 18.3% |
| Deontological Ethics | 59.1% | 45.8% | 48.6% | 50.0% | 29.4% | 100.0% | 45.8% |
| Utilitarianism | 13.6% | 8.3% | 16.2% | 8.3% | 26.5% | 0 | 15.5% |
| Fair Assessment and Rewarding Effort Dilemma | | | | | | | |
| Situational Ethics | 9.1% | 16.7% | 13.5% | 12.5% | 17.6% | 0 | 14.1% |
| Social Justice Ethics | 9.1% | 8.3% | 0 | 0 | 5.9% | 0 | 4.2% |
| Virtue Ethics | 50.0% | 50.0% | 54.1% | 54.2% | 58.8% | 0 | 53.5% |
| Deontological Ethics | 27.3% | 25.0% | 35.1% | 25.0% | 20.6% | 0 | 26.8% |
| Utilitarianism | 9.1% | 0 | 2.7% | 4.2% | 0 | 100.0% | 3.5% |
| Privacy and Professional Help Dilemma | | | | | | | |
| Situational Ethics | 4.5% | 8.3% | 8.1% | 12.5% | 5.9% | 0 | 7.7% |
| Social Justice Ethics | 45.5% | 41.7% | 54.1% | 62.5% | 32.4% | 0 | 46.5% |
| Virtue Ethics | 13.6% | 8.3% | 16.2% | 16.7% | 35.3% | 100.0% | 19.7% |
| Deontological Ethics | 36.4% | 37.5% | 21.6% | 8.3% | 20.6% | 0 | 23.9% |
| Utilitarianism | 0 | 0 | 0 | 0 | 5.9% | 0 | 1.4% |
| Ethics of Assessment and Evaluation Dilemma | | | | | | | |
| Situational Ethics | 0 | 8.3% | 0 | 4.2% | 2.9% | 0 | 2.8% |
| Social Justice Ethics | 45.5% | 41.7% | 54.1% | 58.3% | 44.1% | 100.0% | 49.3% |
| Virtue Ethics | 40.9% | 37.5% | 32.4% | 25.0% | 26.5% | 0 | 31.7% |
| Deontological Ethics | 13.6% | 12.5% | 13.5% | 12.5% | 26.5% | 0 | 16.2% |
| Utilitarianism | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Individual Needs and Institutional Justice Dilemma | | | | | | | |
| Situational Ethics | 4.5% | 8.3% | 5.4% | 4.2% | 5.9% | 0 | 5.6% |
| Social Justice Ethics | 4.5% | 0 | 2.7% | 0 | 5.9% | 0 | 2.8% |
| Virtue Ethics | 27.3% | 12.5% | 35.1% | 33.3% | 29.4% | 0 | 28.2% |
| Deontological Ethics | 54.5% | 54.2% | 37.8% | 58.3% | 44.1% | 100.0% | 48.6% |
| Utilitarianism | 9.1% | 25.0% | 16.2% | 4.2% | 11.8% | 0 | 13.4% |
| SUM | 800.00 | 791.67 | 800.00 | 800.00 | 797.06 | 800.00 | 797.89 |
| N = Documents | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 |
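In every table above, the AI column places the AI's answer under a single approach (100%) in each dilemma. One simple way to set that against the teachers' responses is to take the teachers' most frequent approach in each dilemma and check whether it matches the AI's. The Python sketch below does this with the Teachers column of the AI-versus-teachers table at the start of this section. Summarizing the teachers by their modal category is an assumption made here for illustration: it ignores how close the runner-up approach is. Names such as `modal_approach` and `AI_CHOICE` are hypothetical.

```python
# Row order used in every results table.
APPROACHES = ["Situational", "Social Justice", "Virtue", "Deontological", "Utilitarianism"]

# Teachers column (percent) from the AI-versus-teachers table, in APPROACHES order.
TEACHERS = {
    "Moral Integrity and Social Responsibility": [10.6, 12.8, 41.1, 12.8, 19.9],
    "Justice and Cultural Sensitivity": [8.5, 4.3, 3.5, 60.3, 23.4],
    "Equality and Managing Individual Differences": [2.8, 0.7, 67.4, 16.3, 14.2],
    "Individual Needs and Collective Responsibility": [7.1, 12.8, 18.4, 45.4, 15.6],
    "Fair Assessment and Rewarding Effort": [14.2, 4.3, 53.9, 27.0, 2.8],
    "Privacy and Professional Help": [7.8, 46.8, 19.1, 24.1, 1.4],
    "Ethics of Assessment and Evaluation": [2.8, 48.9, 31.9, 16.3, 0.0],
    "Individual Needs and Institutional Justice": [5.7, 2.8, 28.4, 48.2, 13.5],
}

# Approach marked 100.0% in the AI column for each dilemma.
AI_CHOICE = {
    "Moral Integrity and Social Responsibility": "Situational",
    "Justice and Cultural Sensitivity": "Deontological",
    "Equality and Managing Individual Differences": "Virtue",
    "Individual Needs and Collective Responsibility": "Deontological",
    "Fair Assessment and Rewarding Effort": "Utilitarianism",
    "Privacy and Professional Help": "Virtue",
    "Ethics of Assessment and Evaluation": "Social Justice",
    "Individual Needs and Institutional Justice": "Deontological",
}


def modal_approach(values):
    """Return the approach with the highest teacher percentage (first one wins a tie)."""
    best = max(range(len(values)), key=lambda i: values[i])
    return APPROACHES[best]


matches = []
for dilemma, values in TEACHERS.items():
    teacher_mode = modal_approach(values)
    agree = teacher_mode == AI_CHOICE[dilemma]
    matches.append(agree)
    print(f"{dilemma}: teachers={teacher_mode}, AI={AI_CHOICE[dilemma]}, agree={agree}")

print(f"Agreement: {sum(matches)} of {len(matches)} dilemmas")
```

With the figures as printed, the teachers' modal approach matches the AI's in five of the eight dilemmas. The three exceptions are Moral Integrity and Social Responsibility, Fair Assessment and Rewarding Effort, and Privacy and Professional Help.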

4. Discussion

4.1. Differences by Years of Service

4.2. Differences According to Gender

4.3. Differences by Level of Education

4.4. Comparison with Artificial Intelligence

5. Conclusions

Future Research and Applications

Supplementary Materials

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A – Ethical Dilemmas

- Moral Integrity and Social Responsibility Dilemma: English teacher Mr. Bülent notices significant damage to the school’s smartboard. After investigating, he discovers that his financially disadvantaged student Hasan accidentally caused the damage. Mr. Bülent knows Hasan cannot afford to pay for the damage, and this would impose a significant financial burden on Hasan’s family. However, the school administration insists on finding the responsible person and ensuring they receive the necessary punishment and compensation. Mr. Bülent is not in a position to financially assist Hasan’s family, and going against the administration’s decisions could risk his professional reputation and job security.

- Justice and Cultural Sensitivity Dilemma: Social studies teacher Ms. Selma faces a difficult situation after a fight between her foreign student Ahmed and her Turkish student Cem leaves Cem with minor injuries and causes significant tension in the class. Upon investigating, Ms. Selma learns that Ahmed had previously taken part in a joke against Cem, which bothered him. Other students’ statements indicate that Cem is generally a calm and compliant student.

- Equality and Managing Individual Differences: Math teacher Ms. Yasemin witnesses a fight between Mustafa, her favorite and most successful student, and Mehmet, who is usually inattentive and lazy. The fight was triggered by Mustafa’s insulting words, which provoked Mehmet. Ms. Yasemin knows that Mustafa is normally a respectful and exemplary student, but she finds his behavior unacceptable.

- Individual Needs and Collective Responsibility Dilemma: A science teacher notices that a student named Gizem is constantly sabotaging the lessons. Gizem’s behavior disrupts the class and distracts other students, and it continues despite the teacher’s repeated warnings. The teacher also knows that Gizem has a difficult family life, which might be the underlying cause of her actions. Reporting this to the school administration would result in significant disciplinary action against Gizem, possibly even expulsion, whereas ignoring the situation would harm the education of the other students.

- Fair Assessment and Rewarding Effort Dilemma: A social studies teacher has a dedicated and actively participating student named İrem. İrem regularly attends classes, completes her assignments on time, and actively contributes to class discussions. However, she unexpectedly fails the year-end social studies exam. Now, the teacher must make a choice regarding İrem’s grade:

- Privacy and Professional Help Dilemma: A Turkish language teacher asks her students to write a composition on a personal topic. A student named Mert writes about a difficult period in his life, hinting at some harmful habits without mentioning them directly. The teacher realizes that Mert’s composition is quite sensitive and also observes that writing about this topic may have given Mert some emotional relief.

- Ethics of Assessment and Evaluation Dilemma: Math teacher Mr. Ali gives his class an exam, and most of the class fails. The school administration asks Mr. Ali to repeat the exam with an easier version in order to raise the school’s overall success rate and ease parents’ complaints. However, Mr. Ali believes that the difficulty of the exam is necessary to measure the students’ actual levels, and he is concerned that repeating the exam might send the wrong message to the students. Mr. Ali has never faced such a situation in his teaching career and is uncertain about the best course of action.

- Individual Needs and Institutional Justice Dilemma: Turkish language teacher Ms. Emine learns that Kamil has failed the Turkish exam and, as a result, has lost the right to take the LGS (High School Entrance Exam). Ms. Emine knows that Kamil is normally a successful student and that he had a serious family problem on the day of the exam. However, the school’s exam policy is very strict and allows no exceptions. Moreover, if Kamil cannot take the LGS, he will lose the chance to study at his dream high school. On the other hand, Ms. Emine’s professional reputation and her adherence to the school’s principle of fairness are also at stake.

References

1. Daştan A, Bellikli U, Bayraktar Y. Muhasebe eğitiminde etik ikilem ve etik karar alma konularına yönelik KTÜ-İİBF öğrencileri üzerine bir araştırma. Ekonomik ve Sosyal Araştırmalar Dergisi. 2015;11(1):75–92.

2. Hüseyin Ali K. Muhasebe Meslek Mensupları Ve Çalışanlarının Etik İkilemleri: Kars Ve Erzurum İllerinde Bir Araştırma. Ankara Üniversitesi SBF Dergisi. 2008;63(2):143–70.

3. Camadan F, Topsakal C, Sadıkoğlu İ. An examination of the ethical dilemmas of school counsellors: opinions and solution recommendations. J Psychol Couns Sch. 2021 Jun 24;31(1):76–93.

4. Ehrich LC, Kimber M, Millwater J, Cranston N. Ethical dilemmas: a model to understand teacher practice. Teachers and Teaching. 2011 Apr 3;17(2):173–85.

5. Shapira-Lishchinsky O. Teachers’ critical incidents: Ethical dilemmas in teaching practice. Teach Teach Educ. 2011 Apr;27(3):648–56.

6. Lyons N. Dilemmas of Knowing: Ethical and Epistemological Dimensions of Teachers’ Work and Development. Harv Educ Rev. 1990 Jul 1;60(2):159–81.

7. Dilek GÖ. YAPAY ZEKANIN ETİK GERÇEKLİĞİ. Uluslararası Sosyal Bilimler Programları Değerlendirme ve Akreditasyon Derneği (USDAD); 2019.

8. Bozkurt A. ChatGPT, Üretken Yapay Zeka ve Algoritmik Paradigma Değişikliği. Alanyazın. 2023 May 31;4(1):63–72.

9. Kassymova G, Malinichev M, Lavrinenko SV, Panichkina V, Koptyaeva V, Арпентьева М. Ethical Problems of Digitalization and Artificial Intelligence in Education: a Global Perspective. 2023 Sep.

10. Pieper A. Etiğe Giriş. 1st ed. Ayrıntı Yayınları; 1999.

11. Iyi S, Tepe H. Etik. In: Kuçuradi İ, Taşdelen D, editors. Eskişehir: Anadolu Üniversitesi Yayınları; 2011.

12. Ismail NSA, Benlahcene A. A Narrative Review Of Ethics Theories: Teleological & Deontological Ethics. 2018 Sep;23:31–8.

13. Nagel T. Rawls on Justice. Philos Rev. 1973 Apr;82(2):220.

14. Coşkun F, Gülleroğlu HD. Yapay zekânın tarih içindeki gelişimi ve eğitimde kullanılması. Ankara University Journal of Faculty of Educational Sciences (JFES). 2021;54(3):947–66.

15. Tahça M. Felsefi Açıdan Yapay Zekâ. [Muğla]: Sosyal Bilimler Enstitüsü; 2009.

16. Öztemel E. Yapay Zekâ ve İnsanlığın Geleceği. In 2020. p. 75–90.

17. Malyshkin V. Parallel Computing Technologies. Malyshkin V, editor. Vol. 9251. Cham: Springer International Publishing; 2015.

18. Baranov P, Mamychev A, Mordovtsev A, Danilyan O, Dzeban A. Doctrinal-legal and ethical problems of developing and applying robotic technologies and artificial intelligence systems (using autonomous unmanned underwater vehicles). Bulletin of the National Academy of Management of Culture and Art. 2018;2:465–72.

19. Li G, Deng X, Gao Z, Chen F. Analysis on Ethical Problems of Artificial Intelligence Technology. In: Proceedings of the 2019 International Conference on Modern Educational Technology. New York, NY, USA: ACM; 2019. p. 101–5.

20. Li HY, An JT, Zhang Y. Ethical Problems and Countermeasures of Artificial Intelligence Technology. E3S Web of Conferences. 2021 Apr 15;251:01063.

21. Burukina O, Karpova S, Koro N. Ethical Problems of Introducing Artificial Intelligence into the Contemporary Society. In 2019. p. 640–6.

22. Çelebi V. YAPAY ZEKÂ BAĞLAMINDA ETİK PROBLEMİ. Journal of International Social Research. 2019 Oct 20;12(66):651–61.

23. Penrose R, Dereli T. Kralın Yeni Aklı [Internet]. Koç Üniversitesi; 2015. (Koç Üniversitesi Yayınları: Bilim). Available from: https://books.google.com.tr/books?id=nUAXMQAACAAJ.

24. Kaku M. Physics of the Future: How Science Will Shape Human Destiny and Our Daily Lives by the Year 2100 [Internet]. Penguin Books Limited; 2011. Available from: https://books.google.com.tr/books?id=Jb6IrnQzF1IC.

25. Abel D, MacGlashan J, Littman ML. Reinforcement learning as a framework for ethical decision making. In: Workshops at the thirtieth AAAI conference on artificial intelligence. 2016.

26. Levent AF, Şall D. Teacher and Student Opinions on the Ethical Problems Experienced During Distance Education and Suggested Solutions. İş Ahlakı Dergisi. 2022;15(1):140–9.

27. Bucholz JL, Keller CL, Brady MP. Teachers’ ethical dilemmas: What would you do? Teach Except Child. 2007;40(2):60–4.

28. Koç K. Etik boyutlarıyla öğretmenlik. Çağdaş Eğitim Dergisi. 2010;35(373):13–20.

29. Helton GB, Ray BA. Strategies school practitioners report they would use to resist pressures to practice unethically. J Appl Sch Psychol. 2006;22(1):43–65.

30. Husu J. Teachers at Cross-Purposes: A Case-Report Approach to the Study of Ethical Dilemmas in Teaching. Journal of Curriculum and Supervision. 2001;17(1):67–89.

31. Nakar S. Ethical dilemmas faced by VET teachers in times of rapid change. [Gold Coast]: Australia; 2017.

32. Kieltyka-Gajewski A. Ethical Challenges and Dilemmas in Teaching Students with Special Needs in Inclusive Classrooms: Exploring the Perspectives of Ontario Teachers. [Toronto]: Teaching and Learning Ontario Institute for Studies in Education; 2012.

33. Ozbek O. BEDEN EĞİTİMİ ÖĞRETMENLERİNİN MESLEKİ ETİK İLKELERİ ÖLÇEĞİNİN GEÇERLİK VE GÜVENİRLİK ÇALIŞMASI. Ankara Üniversitesi Beden Eğitimi ve Spor Yüksekokulu SPORMETRE Beden Eğitimi ve Spor Bilimleri Dergisi. 2018 Dec 26;16(4):179–89.

34. Husu J, Clandinin DJ. The SAGE handbook of research on teacher education. 2017.

35. Davies M, Heyward P. Between a hard place and a hard place: A study of ethical dilemmas experienced by student teachers while on practicum. Br Educ Res J. 2019 Apr 18;45(2):372–87.

36. Wang X, Liu D, Liu J. Formality or Reality: Student Teachers’ Experiences of Ethical Dilemmas and Emotions During the Practicum. Front Psychol. 2022 Jun 2;13.

37. Karacan Ozdemir N, Aracı İyiaydın A. Riskli Davranışlara Yönelik Etik Karar Verme: Okul Psikolojik Danışmanları için Bir Model Önerisi. İlköğretim Online. 2019 Sep 15;15–26.

38. Tirri K, Husu J. Care and Responsibility in ‘The Best Interest of the Child’: Relational voices of ethical dilemmas in teaching. Teachers and Teaching. 2002 Feb 25;8(1):65–80.

39. Holmes W, Porayska-Pomsta K, Holstein K, Sutherland E, Baker T, Shum SB, et al. Ethics of AI in Education: Towards a Community-Wide Framework. Int J Artif Intell Educ. 2022 Sep 9;32(3):504–26.

40. Akgun S, Greenhow C. Artificial intelligence in education: Addressing ethical challenges in K-12 settings. AI and Ethics. 2022 Aug 22;2(3):431–40.

41. Casas-Roma J, Conesa J, Caballé S. Education, Ethical Dilemmas and AI: From Ethical Design to Artificial Morality. In 2021. p. 167–82.

42. Yin RK. Case Study Research and Applications: Design and Methods [Internet]. SAGE Publications; 2017. Available from: https://books.google.com.tr/books?id=uX1ZDwAAQBAJ.

43. Subaşı M, Okumuş K. Bir Araştırma Yöntemi Olarak Durum Çalışması. Atatürk Üniversitesi Sosyal Bilimler Enstitüsü Dergisi. 2017;21(2):419–26.

44. Gutman M. Ethical dilemmas in senior teacher educators’ administrative work. European Journal of Teacher Education. 2018 Oct 20;41(5):591–603.

45. Capraro V, Sippel J. Gender differences in moral judgment and the evaluation of gender-specified moral agents. Cogn Process. 2017 Nov 9;18(4):399–405.

46. Gierczyk M, Harrison T. The Effects of Gender on the Ethical Decision-making of Teachers, Doctors and Lawyers. The New Educational Review. 2019 Mar 31;55(1):147–59.

47. Rothbart MK, Hanley D, Albert M. Gender differences in moral reasoning. Sex Roles. 1986 Dec;15(11–12):645–53.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).