1. Introduction

The growing dominance of generative artificial intelligence (GAI) has led to significant changes in higher education (HE), prompting extensive research into its consequences. This development signifies a profound transformation, with GAI's capabilities being integrated into personalized learning experiences, enhancing faculty skills, and increasing student engagement through innovative tools and technological interfaces. Understanding this process is crucial for two main reasons: it impacts the dynamics of teaching within the educational environment and necessitates a reassessment of academic approaches to equip students with the necessary tools for a future where artificial intelligence (AI) is ubiquitous. Additionally, this evolution underscores the need to rethink and reinvent educational institutions, along with the core competencies that students must develop as they increasingly utilize these technologies.

AI and GAI, although sharing a common objective, cannot be understood as identical concepts. Marvin Minsky defined AI as "the science of getting machines to do things that would require intelligence if done by humans" (Minsky, 1985, as cited in Fjelland, 2020). This broad definition encompasses various fields that aim to mimic human behavior through technology or methods. GAI, for instance, includes systems designed to generate content such as text, images, videos, music, computer code, or combinations of different types of content (Farrelly & Baker, 2023). These systems utilize machine learning techniques, a subset of AI, to train models on input data, enabling them to perform specific tasks.

To grasp the significance of AI in HE, it is crucial to examine the growing academic interest at the intersection of these two fields. In the past two years (2022 – 2023), there has been a marked increase in scholarly focus on this convergence, as demonstrated by the rising number of articles indexed in the Scopus and Web of Science (WoS) databases. This trend is supported by systematic evaluations of AI's use in formal higher education. For example, studies by Bond et al. (2024) and Crompton & Burke (2023) provide a comprehensive analysis of 138 publications selected from a pool of 371 prospective studies conducted between 2016 and 2022. This increase highlights the expanding academic discussion, emphasizing the analysis and prediction of individual behaviors, intelligent teaching systems, evaluation processes, and flexible customization within the higher education context (op. cit.).

The importance of systematic literature reviews (SLRs) in this rapidly evolving discipline cannot be overstated. SLRs enable the synthesis of extensive research into aggregated knowledge, providing clear and practical conclusions. By employing well-established procedures such as Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA), researchers ensure the comprehensive inclusion of all relevant studies while maintaining the integrity of the synthesis process. This method enhances the reliability and reproducibility of findings, thereby establishing a solid foundation for future research and the development of institutional policies (Crompton & Burke, 2023; Bond et al., 2024).

While some studies explore the use of GAI in higher education, there are few articles that provide a systematic and comprehensive literature review on this topic. Additionally, existing reviews generally cover the period up to 2022. Given the significant advancements in AI, particularly GAI, over the past two years, it is crucial to investigate how this technology is shaping higher education and to identify the challenges faced by lecturers, students, and organizations.

Therefore, the main objective of this research was to conduct a systematic review of the empirical scientific literature on the use of GAI in HE published in the last two years. Selected articles were analyzed based on the main problems addressed, research questions and objectives pursued, methodologies employed, and main results obtained. The Research Onion model, developed by Saunders, Lewis, and Thornhill (2007), was used to analyze the methodologies. The review adhered to the PRISMA methodology (Page et al., 2021), and articles were identified and collected using the Scopus and WoS indexing databases.

The paper is structured as follows. Next, we detail the methods used in the selection and review of the papers, including the inclusion and exclusion criteria. Then, we briefly describe the papers’ content, including the categories into which they can be grouped (topics and methodologies used). This is followed by a discussion of the results and a proposal for a future research agenda. The paper ends with the conclusion and the presentation of the limitations of this research.

2. Methods

The research utilized an SLR methodology, which involved a series of structured steps: planning (defining the research questions), conducting (executing the literature search, selecting studies, and synthesizing data), and reporting (writing the report). This process adhered to the PRISMA guidelines as outlined by Page et al. (2021).

During the planning phase, we formulated the Research Question (RQ) based on the background provided in section 1:

RQ: What are the main problems, research questions, objectives pursued, methodologies employed, and key findings obtained in studies on GAI in HE conducted between 2023 and 2024?

The subsequent step involved identifying the search strategy, study selection, and data synthesis. The search strategy included the selection of search terms, literature resources, and the overall search process. Deriving the research question aided in defining the specific search terms. For the eligibility criteria (comprising the inclusion and exclusion criteria for the review and the method of grouping studies for synthesis), we opted to include only articles that described scientific empirical research on the use of GAI in higher education. Within the context of this paper, we define empirical research as investigations where researchers collect data to provide rigorous and objective answers to research questions and hypotheses, excluding articles based on opinions, theories, or beliefs. We used Scopus and WoS as our databases, and the search was conducted in January 2024. The next step was to identify synonyms for the search strings.

The search restrictions considered in Scopus were as follows: title, abstract, and keywords; period: since January 1, 2023; document type: article; source type: journal; language: English; publication stage: final and article in press. The search equation used was: TITLE-ABS-KEY(( "higher education" OR "university" OR "college" OR "HE" OR "HEI" OR "higher education institution") AND ( "generative artificial intelligence" OR "generative ai" OR "GENAI" OR "gai")) AND PUBYEAR > 2022 AND PUBYEAR < 2025 AND ( LIMIT-TO ( DOCTYPE,"ar" ) ) AND ( LIMIT-TO ( SRCTYPE,"j" ) ) AND ( LIMIT-TO ( PUBSTAGE,"final" ) OR LIMIT-TO ( PUBSTAGE,"aip" ) ) AND ( LIMIT-TO ( LANGUAGE,"English" )). As a result, we gathered 91 articles, of which only 87 were available. The search results were documented, and the articles were extracted for further analysis.
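As an illustration only, the core of the search equation above can be assembled from the two term lists, which makes it easier to reuse the same terms across databases. This is a minimal sketch: the function and variable names (`or_group`, `build_core_query`, `CONTEXT_TERMS`, `GAI_TERMS`) are hypothetical and are not part of the procedure reported by the authors; only the terms themselves are taken from the text.

```python
# Term lists copied from the search equation reported in the text.
CONTEXT_TERMS = [
    "higher education", "university", "college",
    "HE", "HEI", "higher education institution",
]
GAI_TERMS = [
    "generative artificial intelligence", "generative ai", "GENAI", "gai",
]


def or_group(terms):
    """Quote each term and join the terms with OR inside parentheses."""
    return "( " + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_core_query(context_terms, gai_terms):
    """Combine both term groups with AND inside a TITLE-ABS-KEY clause."""
    return f"TITLE-ABS-KEY({or_group(context_terms)} AND {or_group(gai_terms)})"


core_query = build_core_query(CONTEXT_TERMS, GAI_TERMS)
print(core_query)
```

The year, document type, source type, publication stage, and language filters listed above would still need to be appended to this clause.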

The search restrictions in WoS were as follows: search by topic, including title, abstract, and keywords; period: since January 1, 2023; document type: article; language: English; publication stage: published within the specified period. The search equation used was: TITLE-ABS-KEY(( "higher education" OR "university" OR "college" OR "HE" OR "HEI" OR "higher education institution") AND ( "generative artificial intelligence" OR "generative ai" OR "GENAI" OR "gai")), with the previously outlined restrictions. As a result, we collected 61 articles. Eight of these articles were unavailable. One further article was excluded because, although its title was in English, the article itself was written in Portuguese. Thus, we considered a total of 52 articles. The search results were documented, and the articles were extracted for further analysis.

The entire process was initially tested by the three researchers, with the final procedure being implemented by one of them. All the articles were compiled into an Excel sheet, where duplicates were identified and removed. This resulted in a final list of 102 articles.
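The duplicate-removal step can be sketched in a few lines. The authors did this in a spreadsheet and do not describe the matching rule, so the rule used here (match on DOI when present, otherwise on a normalized title) is an assumption, as are the record fields `doi` and `title` and the toy records. The final lines check the arithmetic implied by the reported counts.

```python
import re


def record_key(record):
    """Return a comparison key: the DOI if present, else a normalized title.

    Assumption: records are dicts with hypothetical 'doi' and 'title' fields.
    """
    doi = (record.get("doi") or "").strip().lower()
    if doi:
        return ("doi", doi)
    title = re.sub(r"[^a-z0-9]+", " ", record.get("title", "").lower()).strip()
    return ("title", title)


def deduplicate(records):
    """Keep the first occurrence of each record, preserving input order."""
    seen = set()
    unique = []
    for record in records:
        key = record_key(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Toy records for illustration only; these are not articles from the review.
scopus = [
    {"doi": "10.0000/example.1", "title": "Toy Article One"},
    {"doi": "", "title": "Toy article two"},
]
wos = [
    {"doi": "10.0000/EXAMPLE.1", "title": "Toy article one"},
    {"doi": "", "title": "Toy Article Two."},
    {"doi": "", "title": "Toy article three"},
]
merged = deduplicate(scopus + wos)

# Counts reported in the text: 87 available Scopus articles, 52 retained
# WoS articles, and 102 articles after duplicate removal.
SCOPUS_AVAILABLE, WOS_RETAINED, AFTER_DEDUP = 87, 52, 102
duplicates_removed = SCOPUS_AVAILABLE + WOS_RETAINED - AFTER_DEDUP
```

Under these counts, 139 records entered the spreadsheet and 37 duplicates were removed. That figure is derived from the reported totals and is not stated explicitly in the text.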

The next step involved selecting the articles. The complete list was divided into three groups, with each group assigned to a different researcher. Each researcher reviewed their assigned articles, evaluating whether the keywords aligned with the search criteria and whether each article included empirical research. For each article, the researcher provided one of three possible responses: “Yes” for inclusion, “No” for exclusion, or “Yes/No” if there were uncertainties about its inclusion.

The three researchers held another meeting and decided to include all articles marked as “Yes” while excluding those marked as “No”. Articles marked as “Yes/No” were redistributed among the researchers for a second opinion. Additionally, one article that was not available in Scopus or WoS was excluded. At the end of this process, 37 articles were selected and 65 were excluded (see Table 1). These 37 articles constitute the basis for this SLR (see Table 2 for the complete list of references).
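The screening rule described above can be expressed as a small decision function. This is a sketch under a stated assumption: the text does not say how the second opinion on a “Yes/No” article was resolved, so the sketch treats the second reviewer's answer as decisive. The function name `screening_decision` is hypothetical.

```python
from typing import Optional


def screening_decision(first: str, second: Optional[str] = None) -> str:
    """Map reviewer responses to 'include' or 'exclude'.

    Assumption: for an uncertain first response ("Yes/No"), the second
    reviewer's "Yes" or "No" decides the outcome.
    """
    if first == "Yes":
        return "include"
    if first == "No":
        return "exclude"
    if first == "Yes/No":
        if second == "Yes":
            return "include"
        if second == "No":
            return "exclude"
        raise ValueError("a 'Yes/No' article needs a second opinion of 'Yes' or 'No'")
    raise ValueError(f"unknown response: {first!r}")


# Counts reported in the text: the selected and excluded articles should
# add up to the 102 articles that entered the screening step.
SELECTED, EXCLUDED, SCREENED = 37, 65, 102
counts_consistent = SELECTED + EXCLUDED == SCREENED
```

For example, `screening_decision("Yes/No", "No")` returns `"exclude"`, and `counts_consistent` confirms that the reported totals are consistent with one another.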

These articles were published in 25 different journals, with only 7 journals featuring more than one article (Table 3).

The 37 articles were written by 119 different authors, of whom only 5 appear as authors on more than one article (Table 4).

Each researcher independently conducted a grounded theory exercise based on all the previously gathered information, including the articles themselves, to identify potential categories for each article. This process was completed separately by each researcher for all the articles. Subsequently, the results from the three researchers were combined, resulting in the categorization and distribution of the articles shown in Table 5.

Multiple efforts were made to minimize the risk of bias. The procedures of this investigation are thoroughly described and documented to ensure accurate reproducibility of the study. The three researchers conducted the procedures, with certain steps performed independently. The results were then compared and reassessed as needed.

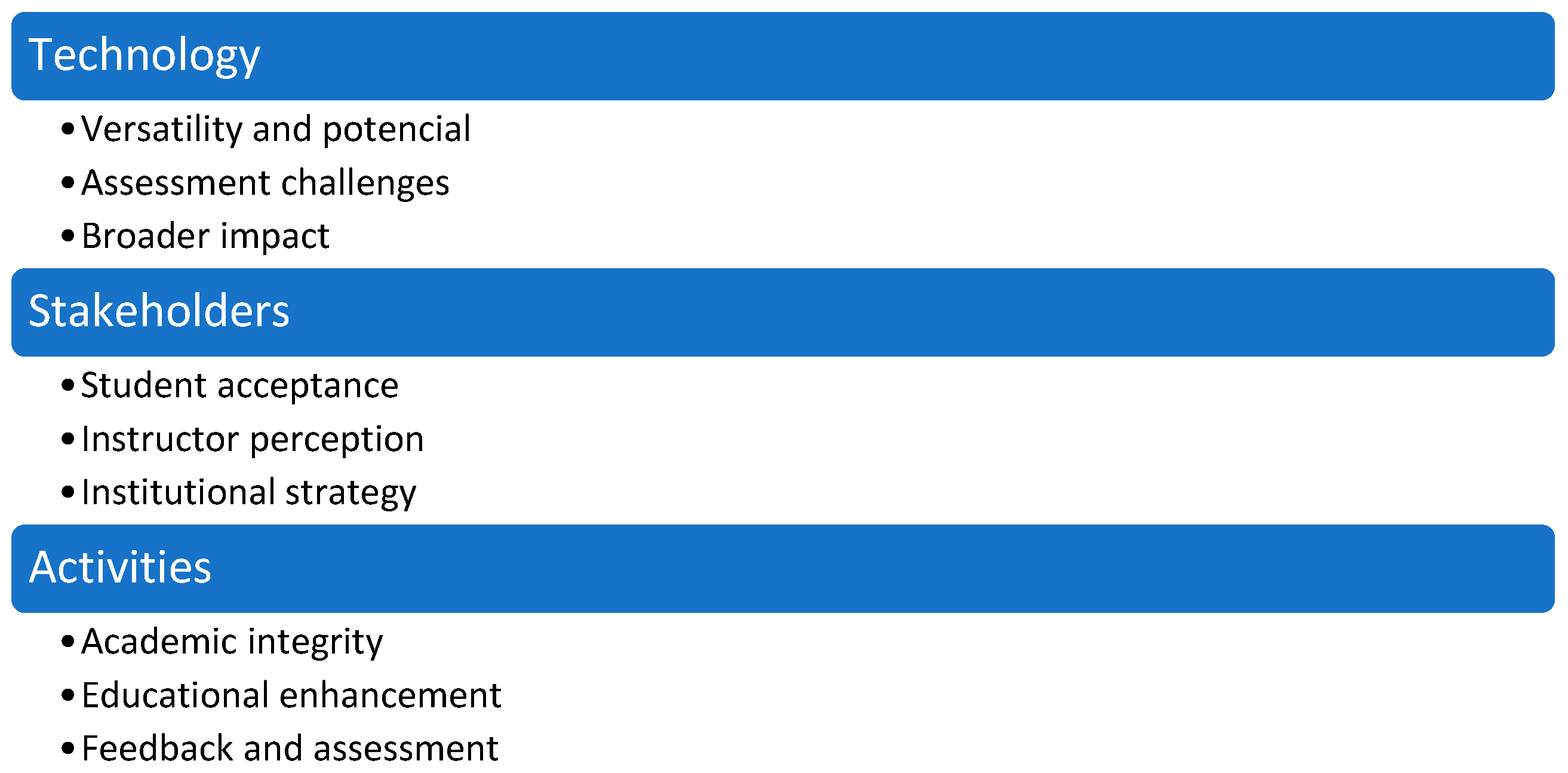

Category A encompasses all studies that focus on the use of the GAI technology as the core of the research. This category contains papers describing research on ChatGPT (sub-category A.1) and those addressing other technologies (sub-category A.2). Category B covers papers that examine the acceptance and perception of GAI from the perspective of different stakeholders, such as students, teachers, researchers, and higher education institutions. Here, the emphasis is on people rather than technology. Category C consists of studies that focus on specific tasks or activities, rather than on technology or people. These tasks include assessment, writing, content analysis, content generation, academic integrity, and feedback. It is important to note that GAI is a transversal aspect uniting all this research. This means that, in some cases, although a paper focuses on a particular stakeholder or activity, the technology factor is still present. However, the categorization was based on the core focus of each paper, even though technology is a common factor among them. Finally, a fourth category was added to encompass the methodology.

3. Results

The findings from the data synthesis are aimed at answering the research question (RQ) and are based on 37 papers, categorized and subcategorized as shown in Table 5 (see previous section). These papers were divided into three main categories, as previously mentioned. The results are presented by category in the following paragraphs.

3.1. The Focus on the Technology – Use of GAI

The following is a comprehensive overview of 15 articles focused on the use of GAI. Each article is analyzed based on the main problems identified, research questions posed, objectives set, and main results achieved. Subsection 3.1.1 presents an analysis of articles specifically addressing ChatGPT (Duong, Vu, & Ngo, 2023; Elkhodr, Gide, Wu, & Darwish, 2023; French, Levi, Maczo, Simonaityte, Triantafyllidis, & Varda, 2023; Michel-Villarreal, Vilalta-Perdomo, Salinas-Navarro, Thierry-Aguilera, & Gerardou, 2023; Nikolic et al., 2023; Popovici, 2023). Subsection 3.1.2 examines articles that take a broader perspective on the use of GAI (Chan, 2023; Chan & Hu, 2023; Chiu, 2024; Jaboob, Hazaimeh, & Al-Ansi, 2024; Lopezosa, Codina, Pont-Sorribes, & Vállez, 2023; Shimizu et al., 2023; Walczak & Cellary, 2023; Watermeyer, Phipps, Lanclos, & Knight, 2023; Yilmaz & Karaoglan Yilmaz, 2023).

3.1.1. The Use of GAI Technology – The Case of ChatGPT

Six articles are summarized here, each examining the application of GAI technology, particularly ChatGPT, in different higher education settings. Despite the varied contexts of ChatGPT usage, these articles collectively address the common challenges and opportunities this technology presents.

In the study by Michel-Villarreal, Vilalta-Perdomo, Salinas-Navarro, Thierry-Aguilera, and Gerardou (2023), the identification of challenges, potentialities and barriers is explicitly outlined in the two research questions it presents (see p. 2 of the article). This study stands out as a particularly interesting case because ChatGPT was used as a data source to help address these research questions. A chat session was conducted with ChatGPT in the format of a semi-structured interview, and the results of the content analysis from this interaction revealed a range of opportunities (5 for students and 2 for teachers), challenges (5), barriers (7) and priorities (6). Some of these findings are noteworthy as they remain underexplored in the literature. For instance, the opportunity of “providing ‘round-the-clock support to students’” is highlighted, which holds significant potential, particularly in distance learning scenarios (p. 10). Additionally, the authors emphasize that “incorporating ChatGPT into the curriculum can introduce innovative and interactive learning experiences” (p. 10), representing a novel opportunity. Furthermore, they identify two opportunities related to the role of teachers: freeing up more of their time by efficiently managing routine tasks, and using these technologies in various research activities, such as “by assisting with literature reviews, data analysis, and generating hypotheses” (p. 10). The primary challenges identified pertain to risks associated with academic integrity and quality control, and the authors suggest a set of principles for the acceptable and responsible use of AI in HE (p. 11). Additionally, strategies for mitigating challenges, including policy development, education, and training, are proposed (p. 13).

French, Levi, Maczo, Simonaityte, Triantafyllidis, and Varda (2023) also explore the impact of integrating OpenAI tools (ChatGPT and Dall-E) in HE, particularly focusing on their incorporation into the curriculum and their influence on student outcomes. The authors facilitated the use of these technologies by students in game development courses. Through 5 case studies, the authors observed a significant impact on students' skills development. The students' outputs “show that they have adopted creative, problem-solving and critical skills to address the task” (p. 16). Additionally, the students exhibited high levels of motivation and engagement with this approach. The authors acknowledge the broader challenge of providing students with access to such technologies, allowing them to make their own judgments about their usage, arguing “that both students and educators need to be flexible, creative, reflective and willing to increase their skills to meet the demands of a future society” (p. 18).

Another article also addresses the use of ChatGPT in a specific HE context, particularly within engineering education (Nikolic et al., 2023). This article focuses on assessment integrity, as indicated by its research question: “How might ChatGPT affect engineering education assessment methods, and how might it be used to facilitate learning?” (p. 560). A group of authors from different universities and engineering disciplines questioned ChatGPT to determine whether its responses corresponded to passable responses. The authors highlight, as a primary finding, the need to reevaluate assessment strategies, as they have accumulated evidence suggesting that ChatGPT can generate passable responses.

Popovici (2023) also addresses the necessity of developing new approaches and strategies for using GAI tools, particularly focusing on the positive use of ChatGPT in HE contexts. Specifically, the author examines the application of ChatGPT within a functional programming course. The author was surprised to find that students were already using ChatGPT to complete assignments and recognized its potential to aid in their learning (p. 1). Subsequently, ChatGPT was treated as if it were a student in order to evaluate its performance in programming tasks and code review. The results indicated that “ChatGPT as a student would receive an approximate score of 7 out of a maximum of 10. Nonetheless, 43% of the accurate solutions provided by ChatGPT are either inefficient or comprise of code that is incomprehensible for the average student” (p. 2). These findings highlight both the potential and the limitations of using ChatGPT in programming tasks and code review.

Elkhodr, Gide, Wu, and Darwish (2023) examine the use of ChatGPT in another HE context, specifically within ICT education. The study aimed to “examine the effectiveness of ChatGPT as an assistive technology at both undergraduate (UG) and postgraduate (PG) ICT levels” (p. 71), and three case studies were conducted with students. In each case study, students were divided into two groups: one group was permitted to use ChatGPT, while the other was not. Subsequently, the groups were interchanged so that each group of students performed the same tasks with and without the assistance of ChatGPT, and they were asked to reflect on their experiences (p. 72). The results indicated that students responded positively to the use of ChatGPT, considering it to be a valuable resource that they would like to continue using in the future.

Duong, Vu, & Ngo (2023) describe a study in which a modified version of the Technology Acceptance Model (TAM) was used “to explain how effort and performance expectancies affect higher education students' intentions and behaviors to use ChatGPT for learning, as well as the moderation effect of knowledge sharing on their ChatGPT-adopted intentions and behaviors” (p. 3). The results of the study show that student behavior is influenced by both effort expectancy and performance expectancy, which is evident in their use of ChatGPT for learning purposes (p. 13).

3.1.2. Exploring the Use of GAI Technology – A Broader Perspective

Nine articles provide a broad perspective on the use of GAI technology across various higher education contexts.

Articles by Lopezosa, Codina, Pont-Sorribes, and Vállez (2023), Shimizu et al. (2023), and Yilmaz & Karaoglan Yilmaz (2023) focus on the use of GAI in specific academic disciplines, demonstrating how GAI tools are used in educational contexts and their impact on specific domains of teaching and learning. The articles by Lopezosa, Codina, Pont-Sorribes, and Vállez (2023), and Yilmaz & Karaoglan Yilmaz (2023) specifically address the issue of integrating AI into journalism and programming education, respectively. In the context of journalism education, Lopezosa, Codina, Pont-Sorribes, and Vállez (2023) aim to “provide an assessment of their impact and potential application in communication faculties” (p. 2). They propose training models based on the perspectives of teachers and researchers concerning the integration of AI technologies in communication faculties and their views on using GAI to “potentially transform the production and consumption of journalism” (p. 5). The results highlight the essential need to integrate AI into the journalism curriculum, although opinions on specific issues vary. A strong consensus has emerged on the ethical issues involved in using GAI tools. Regarding programming education, Yilmaz & Karaoglan Yilmaz (2023) argue that GAI tools can help students to develop skills in various dimensions, such as code creation, motivation, critical thinking, and others. They also note that when challenges are significant, “the use of AI tools such as ChatGPT does not have a significant effect on increasing student motivation” (p. 11).

In turn, Shimizu et al. (2023) discuss the impact of using GAI in medical education, particularly its effects on curriculum reform and the professional development of medical practitioners, with concerns regarding “ethical considerations and decreased reliability of the existing examinations” (p. 1). The authors conducted a SWOT analysis, which identified 169 items grouped into 5 themes: “improvement of teaching and learning, improved access to information, inhibition of the existing learning processes, problems in GAI, and changes in physicians’ professionalism” (p. 4). The analysis revealed positive impacts, such as improvements in the teaching and learning process and access to information, alongside negative impacts, notably teachers' concerns about students' ability to “think independently” and issues related to ethics and authenticity (p. 5). The authors suggest that these aspects be considered in curriculum reform, advocating for an adaptive educational approach.

Another set of articles (Chan, 2023; Walczak & Cellary, 2023; Watermeyer, Phipps, Lanclos, & Knight, 2023) examines the impact of GAI on HE at a macro scale, focusing on policy development, institutional strategies, and broader curricular transformations. Walczak and Cellary (2023) specifically explore “the advantages and potential threats of using GAI in education and necessary changes in curricula” as well as discussing “the need to foster digital literacy and the ethical use of AI” (p. 71). A survey conducted among students revealed that the majority believed “students should be encouraged and taught how to use AI” (p. 90). The article provides a thematic analysis of existing challenges and opportunities for HE institutions. The authors acknowledge the impact that the introduction of GAI has on the world of work, raising questions about the future nature of work and how to prepare students for this reality, emphasizing that human performance is crucial to avoid “significant consequences of incorrect answers made by AI” (p. 92). Among its main conclusions and recommendations, the study highlights the ethical concerns in using GAI tools and the need to critically assess the content they produce.

Watermeyer, Phipps, Lanclos, and Knight (2023) also raise concerns about the labor market, specifically regarding academic labor. The study examines how GAI tools are transforming scholarly work, how these tools aim to alleviate the pressures inherent in the academic environment, and the implications for the future of the academic profession. The authors found that the uncritical use of GAI tools has significant consequences, making academics “less inquisitive, less reflexive, and more narrow and shallow scholars” (p. 14). This introduces new institutional challenges for the future of academic work.

Chan (2023) focuses on developing a framework for policies regarding the use of AI in HE. A survey was conducted among students, teachers, and staff members, which included both quantitative and qualitative components. The results point to several issues that respondents associate with the use of AI technologies such as ChatGPT. For example, the importance of integrating AI into the teaching and learning process is recognized, although there is still little accumulated experience with this use. Additionally, there is “strong agreement that institutions should have plans in place associated with AI technologies” (p. 9). Furthermore, there is no particularly strong opinion about the future of teachers, specifically regarding the possibility that “AI technologies would replace teachers” (p. 9). These and other results justify the need for higher education institutions to develop AI usage policies. The author also highlights several “implications and suggestions” that should be considered in these policies, including areas such as “training”, “ethical use and risk management”, and “fostering a transparent AI environment”, among others (p. 12).

A third group of articles (Chan & Hu, 2023; Chiu, 2024; Jaboob, Hazaimeh, & Al-Ansi, 2024) addresses how GAI affects learning processes, student engagement, and the overall educational experience from the students' perspectives. Chiu (2024) focuses on the students' perspective, as reflected in the research question: “From the perspective of students, how do GAI transform learning outcomes, pedagogies and assessment in higher education?” (p. 4). Based on data collected from students, the study presents a wide range of results grouped into the three areas mentioned in the research question: learning outcomes, pedagogies, and assessment. It also presents implications for practices and policy development organized according to these three areas. Generally, the study suggests the need for higher education to evolve to incorporate the changes arising from AI development, offering a set of recommendations in this regard. It also shows that “students are motivated by the prospect of future employment and desire to develop the skills required for GAI-powered jobs” (p. 8).

The perspective of students is also explored in Jaboob, Hazaimeh, and Al-Ansi (2024), specifically through data collection from students in three Arab countries. The study aimed “to investigate the effects of generative AI techniques and applications on students’ cognitive achievement through student behavior” (p. 1). Various hypotheses were established that relate GAI techniques and GAI applications to their impacts on student behavior and students’ cognitive achievement. The results show that GAI techniques and GAI applications positively impact student behavior and students’ cognitive achievement (p. 8), emphasizing the importance of improving the understanding and implementation of GAI in HE, specifically in areas such as pedagogy, administrative tasks for teachers, the economy surrounding HE systems, and cultural impacts.

Chan and Hu (2023) also focus on students' perceptions of the integration of GAI in higher education, investigating their familiarity with these technologies, their perception of the potential benefits and challenges, and how these technologies can help “enhance teaching and learning outcomes” (p. 3). A survey was conducted among students from six universities in Hong Kong, and the results show that the students have a “good understanding of GAI technologies” (p. 7) and that their attitudes towards these technologies are positive, with a willingness to use them. The results also indicate that students have some concerns about using GAI, such as fears of becoming too reliant on these technologies and the recognition that they may limit their social interactions.

3.2. The Focus on the Stakeholders – Acceptance and Perceptions

The following summary highlights 10 articles that examine the acceptance and perceptions of GAI usage from the perspectives of various stakeholders.

In Yilmaz, Yilmaz, and Ceylan (2023), the authors outlined their objectives and research questions, focusing on the acceptance and perceptions of AI-powered tools among students and professors, and defined their methods on the basis of these objectives. To measure the degree of acceptance among students regarding educational applications, the authors proposed developing a tool based on the Unified Theory of Acceptance and Use of Technology (UTAUT) model. The results “indicated that all items possessed discriminative power” (p. 10) and “the instrument proves to be a valid and reliable scale for evaluating students’ intention to adopt generative AI” (p. 10). Nevertheless, as with any research tool, further studies are necessary to corroborate these findings and ensure the tool’s validity across different populations and contexts.

The objective of the study by Strzelecki and ElArabawy (2024) is to investigate the implications of integrating AI tools, particularly ChatGPT, into higher education contexts. The study highlights benefits of AI chat, such as “reducing task completion time and providing immediate responses to queries, which can bolster academic performance and in turn, foster an intention to utilize such tools” (p. 15). The findings indicated that three variables (performance expectancy, effort expectancy, and social influence) significantly influence behavioral intention. This study suggests that students are more likely to use ChatGPT if they perceive it to be user-friendly and to require less effort. This is particularly true when ChatGPT offers multilingual conversational capabilities and enables the refinement of responses. Furthermore, the results indicate that the acceptance and usage of ChatGPT are positively correlated with the influence of instructors, peers, and administrators who promote this platform to students. The authors noted that ChatGPT is “not adaptive and is not specifically designed for educational purposes” (p. 18).

Chen, Zhuo, and Lin (2023) offer several conclusions regarding the relationship between technology characteristics and performance. Specifically, the article provides practical recommendations for students on the appropriate use of the ChatGPT system during the learning process. Additionally, it offers guidance to developers on enhancing the functionality of the ChatGPT system. The study revealed that overall quality is a “key determinant of performance impact”. To influence the learning process effectively, the platform must support individualized learning for students, necessitating the continuous optimization and customization of features, as well as the provision of timely learning feedback.

The study described by Chergarova, Tomeo, Provost, De la Peña, Ulloa, and Miranda (2023) aimed to evaluate the current usage and readiness to embrace new AI tools among faculty, researchers, and employees in higher education. The analysis covered the AI tools used and their pricing models. To this end, the Technology Readiness Index (TRI) was used. The outcomes demonstrate that most users preferred the cost-free options for AI tools used in creative endeavors and tasks such as idea generation, coding, and presentations. The authors report that “the participants showed enthusiasm for responsible implementation in regard to integrating AI generative tools” (p. 282). Additionally, professors incorporating these technologies “into their teaching practice should undertake a responsible approach”.

Chan and Lee (2023) underscored the significance of integrating digital technology with conventional pedagogical approaches to enhance educational outcomes and facilitate an effective learning experience. Their findings have implications for the formulation of evidence-based guidelines and policies for the integration of GAI, which should aim to cultivate critical thinking and digital literacy skills in students while fostering the responsible use of GAI technologies in higher education.

Essel, Vlachopoulos, Essuman, and Amankwa (2024) aimed to investigate the impact of using ChatGPT on the critical, creative, and reflective thinking skills of university students in Ghana. The findings indicated that the incorporation of ChatGPT significantly influenced critical, reflective, and creative thinking skills, as well as their respective dimensions. Consequently, the study provides guidance for academics, instructional designers and researchers working in the field of educational technology. The authors highlighted the “potential benefits of leveraging the ChatGPT to promote students’ cognitive skills” and noted that “didactic assistance in-class activities can positively impact students’ critical, creative, and reflective thinking skills” (p. 10). Although the study did not assess the outcomes of the learning process or the effectiveness of different teaching methods, it can be concluded that using ChatGPT for in-class tasks can facilitate the development of cognitive abilities.

The study by Rose, Massey, Marshall, and Cardon (2023) aimed to gain insight into how computer science (CS) and information systems (IS) lecturers perceive the impact of new technologies and their anticipated effects on the academic sector. The authors noted that the utilization of these technologies by students allows them to complete their assignments more efficiently. Furthermore, these technologies have the potential to facilitate the identification of coding errors in the workforce. However, there is a growing concern about the rise in plagiarism among students, which could negatively impact the integrity of higher education. The authors also point to the “potential impact of AI chatbots on employment” (p. 185).

The study presented by Chan and Zhou (2023) examines the relationship between student perceptions and their intention to employ GAI in higher education settings. The authors note the importance of “enhancing expectancies for success and fostering positive value beliefs through personalized learning experience and strategies for mitigating GAI risks” (p. 19). The findings indicate a strong correlation between perceived value and intention to use GAI, and a relatively weak inverse correlation between perceived cost and intention to use. The implications of GAI in other domains, such as education, were also examined. It is crucial to evaluate the potential long-term consequences and the ethical challenges that may arise from its widespread adoption.

Greiner, Peisl, Höpfl, and Beese (2023) investigated the potential for AI to be employed as a decision-making agent in semi-structured educational settings, such as thesis assessment. Furthermore, they explored the nature of interactions between AI and its human counterparts. It was observed that students’ acceptance and willingness to adopt GAI are central, highlighting the need for further research in this area. Consequently, this work presents an instrument for measuring students’ perceptions of GAI, which can be employed by researchers in subsequent studies of GAI adoption. The authors also suggest that there is a sufficient foundation to analyze AI-human communication. Additionally, the study provides valuable insights into the potential application of AI within higher education, particularly in the evaluation of academic theses.

The study by Al-Zahrani (2023) examines the impact of GAI tools on researchers and research related to higher education in Saudi Arabia. Results show that participants have positive attitudes and high awareness of GAI in research, recognizing the potential of these tools to transform academic research. However, the importance of adequate training, support, and guidance in the ethical use of GAI emerged as a significant concern, underlining the participants' commitment to responsible research practices and the need to address the potential biases associated with using these tools.

3.3. Focus on Tasks and Activities: Utilizing GAI in Various Situations

Twelve articles are summarized, focusing on the application of GAI in diverse contexts such as assessment, writing, content analysis, content generation, academic integrity, and feedback.

In the study presented by Singh (2023), the author assesses the impact of ChatGPT on scholarly writing practices, with particular attention to potential instances of plagiarism. Furthermore, the relatively under-researched field of GAI and its potential application to educational contexts is discussed, drawing on the views of three professors from South Africa. To understand the impact of such technologies on the teaching and learning process, a comprehensive academic study is essential, encompassing not only universities but also all educational settings. The author notes “that lecturers need to develop their technical skills and learn how to incorporate these kinds of technologies into their classes and adapt how they assess students” (p. 218). Finally, the insights from the professors summarize their views on the impact of ChatGPT on plagiarism within higher education and its effects on scholarly writing.

The study by Farazouli, Cerratto-Pargman, Bolander-Laksov, and McGrath (2023) aimed to investigate the potential impact of emerging technologies, specifically AI chatbots, on the assessment practices employed by university teachers. The empirical observations revealed that while participants were not specifically requested to identify responses written by the chatbot, they were required to provide scores and evaluate the quality of responses during the Turing Test experiment. Results from focus group interviews indicate that participants were consistently mindful of the possibility that their assessment might be influenced by the presence of text generated by ChatGPT. The authors noted, “participants perceived that the evaluation of the responses required them to distinguish between student and chatbot texts” (p. 10). The findings suggest that the generated responses might have been influenced by AI, leading to flawed responses that were similar or identical to those produced by the chatbot and student. Overall, the study aimed to examine teachers’ responses and perceptions regarding emerging technological artifacts such as ChatGPT, with the goal of understanding the implications for their assessment practices in this context.

In their study, Barrett and Pack (2023) examine the potential interactions between an inexperienced or inadequately trained educator or student and a GAI tool, such as ChatGPT. The aim was to inform approaches to GAI integration in educational settings and provide “initial insights into student and teacher perspectives on using GAI in academic writing” (p. 18). A potential drawback of this study is the non-random selection of the sample, which limits the generalization of the findings to a broader population.

De Paoli (2023) presents the results and reflections of an experimental investigation conducted with the LLM GPT 3.5-Turbo to perform an inductive Thematic Analysis (TA). The author states that it “was written as an experiment and as a provocation, largely for social sciences as an audience, but also for computer scientists working on this subject” (p. 18). The experiment compares the results of the research on the ‘gaming’ and ‘teaching’ datasets. The question of whether an AI natural language processing (NLP) model can be used for data analysis arises because this form of analysis depends largely on human interpretation of meaning.

The study by Hammond, Lucas, Hassouna, and Brown (2023) rigorously examined the discourses used by five online paraphrasing websites to justify the use of Automated Paraphrasing Tools (APTs). The aim was to identify appropriate and inappropriate ways these discourses are deployed. The competing discourses were conceptualized using the metaphorical representation of the dichotomy between the sheep and the wolf. Additionally, the metaphor of educators acting as shepherds was employed to illustrate how students may become aware of the claims presented on the APT websites and develop critical language awareness when exposed to such content. Educators can assist students in this regard by acquiring an understanding of how these websites use language to persuade users to circumvent learning activities.

The article by Kelly, Sullivan, and Strampel (2023) provides a novel foundation for enhancing our understanding of how these tools may affect students as they engage in academic pursuits at the university level. The authors observed “that students had relatively low knowledge, experience, and confidence with using GAI”. Additionally, the rapid advent of these resources in late 2022 and early 2023 meant that many students were initially unaware of their existence. The limited timeframe precluded academic teaching staff from considering the emerging challenges and risks associated with GAI and how to incorporate these tools into their teaching and learning practices. The findings indicate that students' self-assessed proficiency in utilizing GAI ethically increases with experience. It is notable that students are more likely to learn about GAI through social media.

The study by Laker and Sena (2023) provides a foundation for future research on the significant impact that AI will have on HE in the coming years. The integration of GAI models, such as ChatGPT, in higher education, particularly in the field of business analytics, offers both potential advantages and inherent limitations. AI has the potential to significantly enhance the learning experience of students by providing code generation and step-by-step instructions for complex tasks. However, it also raises concerns about academic dishonesty, impedes the development of foundational skills, and brings up ethical considerations. The authors obtained insights into the accuracy of the generated content and the potential for detecting its use by students. The study indicates that ChatGPT can offer accurate solutions to certain types of assessments, including straightforward Python quizzes and introductory linear programming problems. It also illustrates how instructors can identify instances where students have used AI tools to assist with their learning, despite explicit instructions not to do so.

Two studies specifically address academic integrity and plagiarism. The study by Perkins, Roe, Postma, McGaughran, and Hickerson (2024) examines the effectiveness of academic staff utilizing the Turnitin Artificial Intelligence (AI) detection tool to identify AI-generated content in university assessments. Experimental submissions were created using ChatGPT, employing prompting techniques designed to minimize the likelihood that the content would be flagged by AI detectors. The results indicate that Turnitin’s AI detection tool has potential for supporting academic staff in detecting AI-generated content. However, the relatively low detection accuracy among participants suggests a need for further training and awareness. The findings demonstrate that the Turnitin AI detection tool is not particularly robust to the use of these adversarial techniques, raising questions regarding the ongoing development and effectiveness of AI detection software.

In turn, the article by Currie and Barry (2023) analyzes the growing challenge of academic integrity in the context of AI algorithms, such as the GPT 3.5-powered ChatGPT chatbot. This issue is particularly evident in nuclear medicine training, which has been impacted by these new technologies. The chatbot “has emerged as an immediate threat to academic and scientific writing” (p. 247). The authors conclude that there is a “limited generative capability to assist student” (p. 253) and note “limitations on depth of insight, breadth of research, and currency of information” (p. 253). Similarly, the use of inadequate written assessment tasks can potentially increase the risk of academic misconduct among students. Although ChatGPT can generate examination answers in real time, its performance is constrained by the superficial nature of the evidence of learning produced by its responses. These limitations, which reduce the risk of students benefiting from cheating, also limit ChatGPT’s potential for improving learning and writing skills.

Alexander, Savvidou, and Alexander (2023) propose a consideration of generative AI language models and their emerging implications for higher education. The study addresses the potential impact on English as a second language teachers' existing professional knowledge and skills in academic writing assessment, as well as the risks that such AI language models could pose to academic integrity and the associated implications for teacher training. In conclusion, the authors noted that there is no “fully reliable way of establishing whether a text was written by a human or generated by an AI” (p. 40), and that “human evaluators’ expectations of AI texts differ from what in reality is generated by ChatGPT” (p. 40).

In their article, Hassoulas, Powell, Roberts, Umla-Runge, Gray, and Coffey (2023) address the responsibility of integrating assessment strategies and broadening the definition of academic misconduct as this new technology emerges. The results suggest that, at present, experienced markers cannot consistently distinguish between student-written scripts and text generated by natural language processing tools, such as ChatGPT. Additionally, the authors confirm that “despite markers suspecting the use of tools such as ChatGPT at times, their suspicions were not proven to be valid on most occasions” (p. 75).

The article by Escalante, Pack, and Barrett (2023) examined the use of GAI as an automatic essay evaluator, incorporating learners’ perspectives. The findings suggest that AI-generated feedback did not lead to greater linguistic progress compared to feedback from human tutors for new language students. The authors note that there is no clear superiority of one feedback method over the other in terms of scores.

3.4. Analysis of the Methodologies Employed

3.4.1. General Analysis

The methodologies employed by the authors of the 37 selected papers, which investigate the integration of AI tools such as ChatGPT across various domains, particularly in education, were analyzed. These methodologies were categorized and examined using the model proposed by Saunders et al. (2007) – The Research Onion. This model provides a comprehensive and visually rich framework for conducting or analyzing methodological research in the social sciences. It provides a structured approach with several layers, each of which must be sequentially examined.

Saunders et al. (2019) divided the model into three levels of decision-making: 1. The two outermost rings encompass research philosophy and research approach; 2. The intermediate level includes research design, which comprises methodological choices, research strategy, and time horizon; and 3. The innermost core consists of tactics, including aspects of data collection and analysis. Each layer of the Research Onion presents choices that researchers must confront, and decisions at each stage influence the overall design and direction of the study.

By aligning the papers within the structured layers of the Research Onion, we aimed to gain a deeper understanding of the methodological choices made across these studies and to provide a comprehensive overview of the trends and focal points in GAI research.

Since the research philosophy adopted by the authors is often not clearly stated in most papers, we chose to focus on three major areas: research approach, research strategies, and data collection and analysis. Research approaches can be either deductive or inductive. In the deductive approach, the researcher formulates a hypothesis based on a preexisting theory and then designs the research approach to test it. This approach is suitable for the positivist paradigm, enabling the statistical testing of expected results to an accepted level of probability. Conversely, the inductive approach allows the researcher to develop a theory rather than adopt a preexisting one.

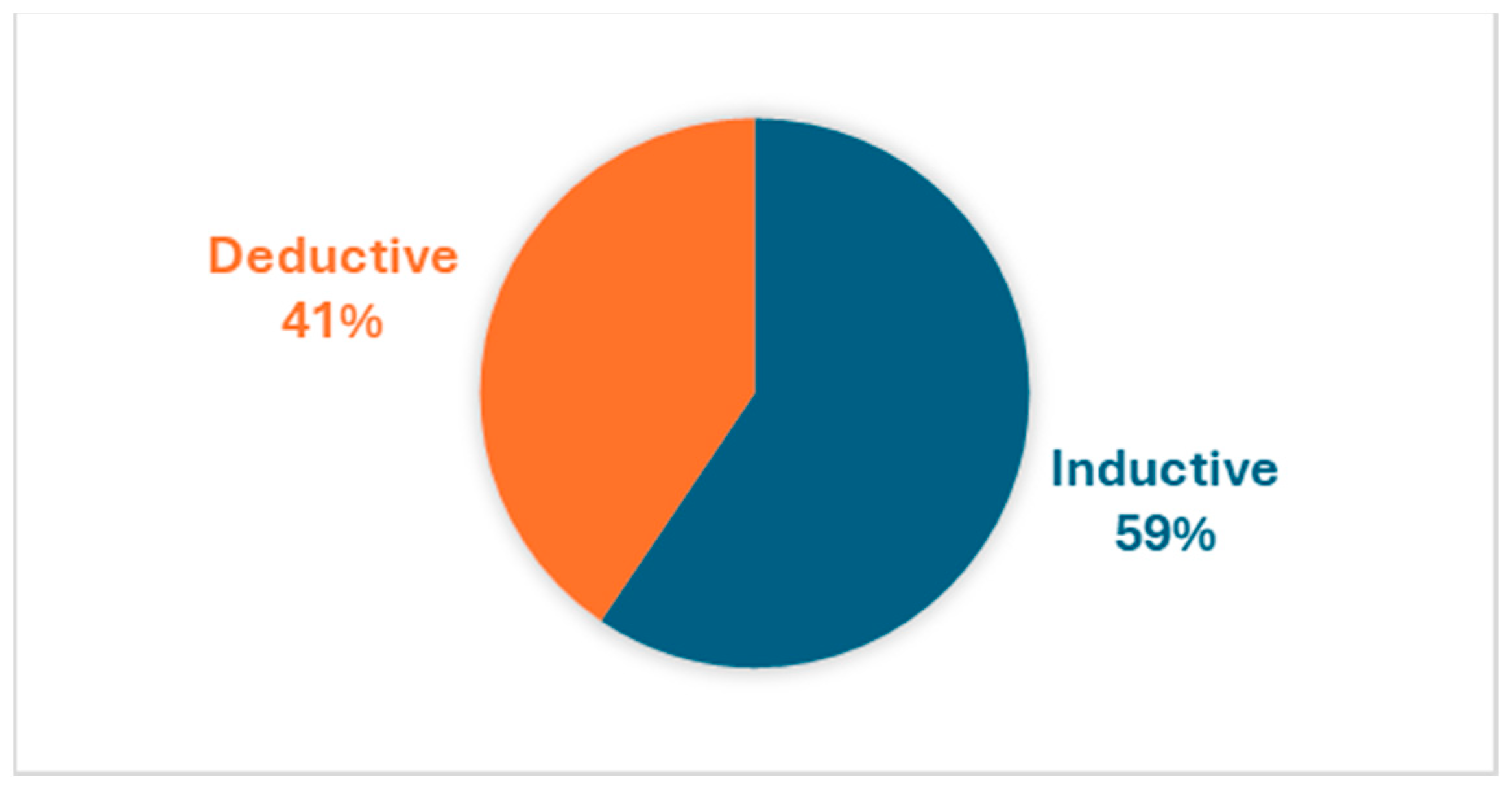

Our analysis reveals that 15 out of 37 papers adopted a deductive approach, while 22 employed an inductive approach. Examples of studies employing the deductive approach include those of Popovici (2023), Yilmaz and Karaoglan Yilmaz (2023), and Greiner, Peisl, Höpfl, and Beese (2023). In contrast, examples of studies using the inductive approach include papers by Walczak and Cellary (2023), French, Levi, Maczo, Simonaityte, Triantafyllidis, and Varda (2023), and Barrett and Pack (2023). Graph 1 illustrates the distribution of the papers according to the research approach employed.
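The proportions behind these counts can be checked with a short calculation. The following is only an illustrative sketch: the two counts come from the text above, while the variable names are hypothetical and chosen for this example.

```python
# Counts as reported in the text: 15 deductive and 22 inductive papers out of 37.
# The dictionary and variable names are illustrative, not taken from the study.
approach_counts = {"deductive": 15, "inductive": 22}

total = sum(approach_counts.values())

# Share of each research approach, as a percentage rounded to one decimal place.
shares = {name: round(100 * n / total, 1) for name, n in approach_counts.items()}

for name, pct in shares.items():
    print(f"{name}: {approach_counts[name]} of {total} papers ({pct}%)")
```

Under these counts, roughly two in five papers follow a deductive approach and three in five an inductive one.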

Graph 1.

Distribution of papers by research approach employed.

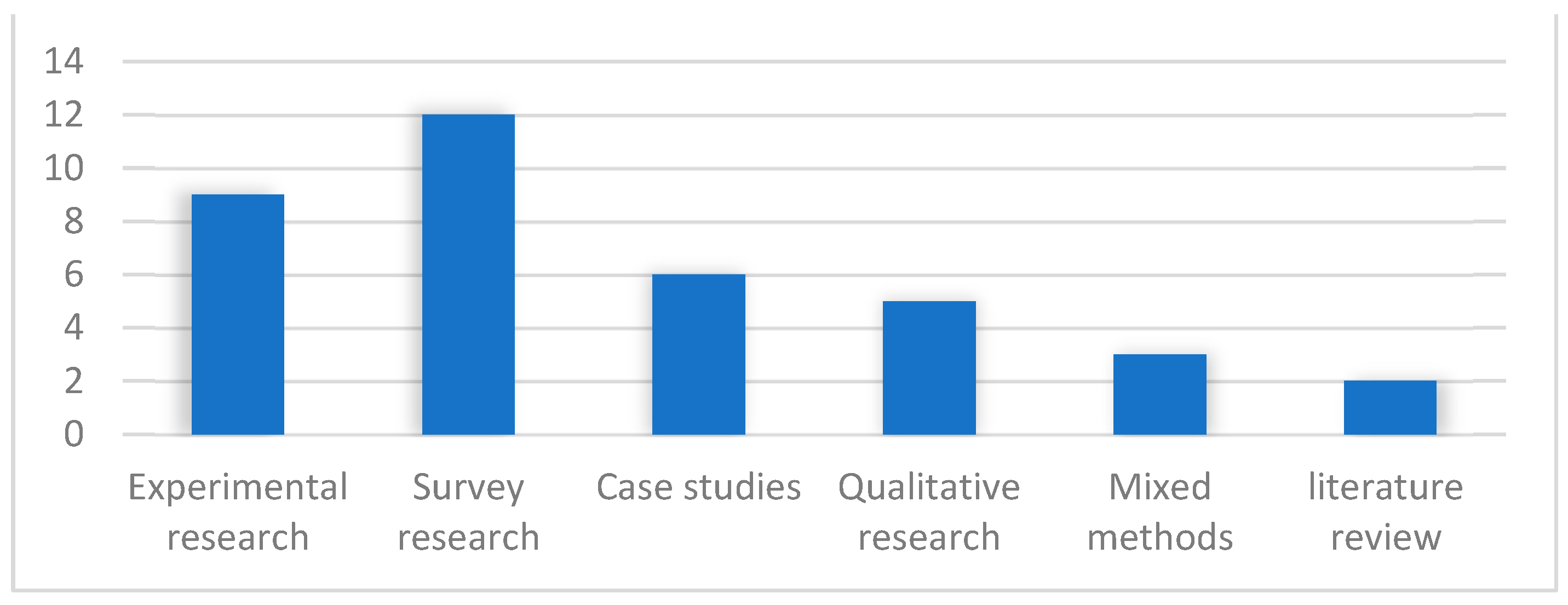

Delving deeper into the Research Onion, the Research Strategies layer reflects the overall operational approach to conducting research. Among the 37 articles analyzed, the most frequently encountered strategies were survey research, followed by experimental research and case studies. The least used were literature reviews and mixed methods, as shown in Graph 2:

Experimental research: researchers aim to study cause-effect relationships between two or more variables. Examples include the works of Alexander, Savvidou, and Alexander (2023), and Strzelecki and ElArabawy (2024).

Survey research: this method involves seeking answers to “what”, “who”, “where”, “how much”, and “how many” types of research questions. Surveys systematically collect data on perceptions or behaviors. Examples include the papers of Yilmaz, Yilmaz, and Ceylan (2023) and Rose, Massey, Marshall and Cardon (2023).

Case studies: researchers conduct in-depth investigations. Examples include the work of Lopezosa, Codina, Pont-Sorribes, and Vállez (2023), as well as Jaboob, Hazaimeh, and Al-Ansi (2024).

Graph 2.

Distribution of papers by research strategies.

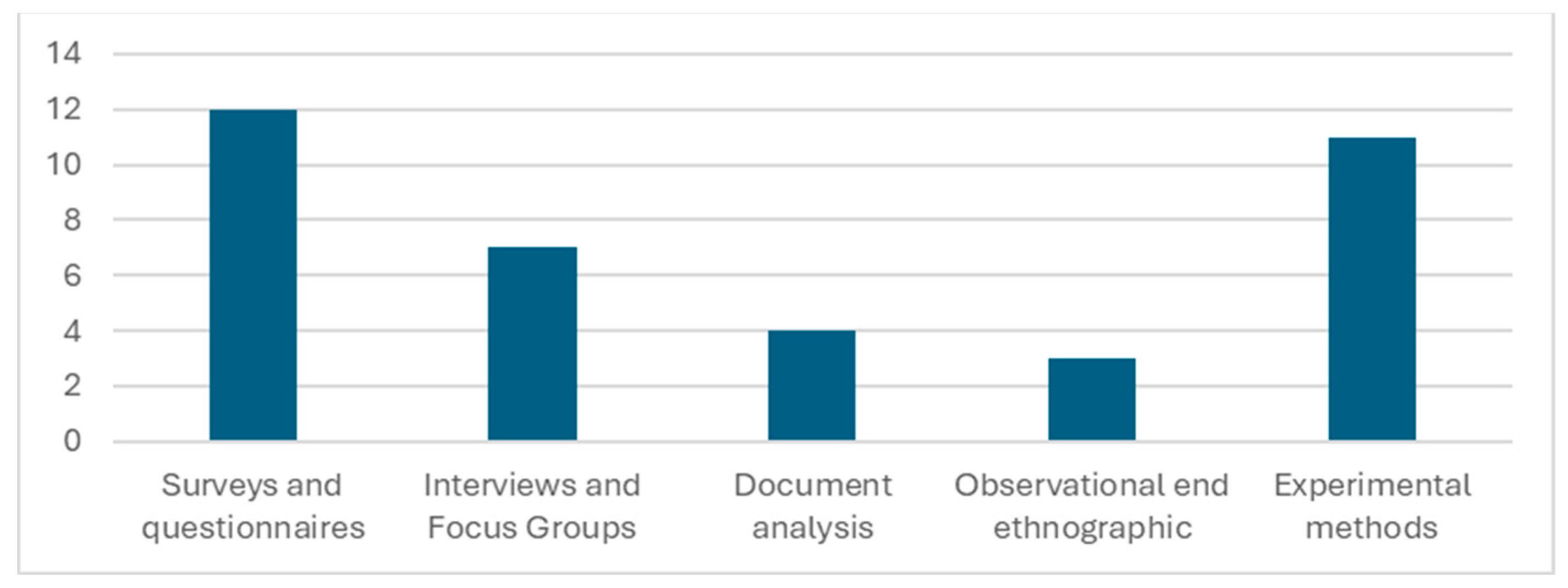

The final layer analyzed was the Data Collection and Analysis methods, which are crucial for understanding how empirical data are gathered. As shown in Graph 3, the most used method is surveys and questionnaires, followed by experimental methods and then interviews and focus groups.

Surveys and questionnaires: according to the analyzed papers, 12 studies gathered quantitative data from broad participant groups. Examples include Elkhodr, Gide, Wu and Darwish (2023), and Perkins, Roe, Postma, McGaughran and Hickerson (2024).

Experimental methods: central to 11 studies, these methods tested specific hypotheses under controlled conditions. Examples include Currie and Barry (2023), and Al-Zahrani (2023).

Interviews and focus groups: Seven studies collected qualitative data. Examples include Singh (2023), and Farazouli, Cerratto-Pargman, Bolander-Laksov and McGrath (2023).

Graph 3.

Distribution of papers by data collection and analysis methods.

The comprehensive classification of the 37 papers reveals trends in the methodological choices made by the researchers. Survey research predominates, being used in 12 studies, compared to experimental research (9), case studies (6), and qualitative approaches (7). This distribution underscores a preference for surveys, likely due to several advantages, such as the ability to generalize findings across a larger population. Additionally, surveys are well-suited for exploratory research aimed at gauging perceptions, attitudes, and behaviors towards GAI technologies. Conversely, while experimental research offers the advantage of isolating variables to establish causal relationships, it was not employed as frequently as it could have been. This may be due to the logistical complexities and higher costs associated with conducting such studies.
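As a quick consistency check on the tallies in the preceding paragraph, the sketch below computes each category's share of the 37 papers. The four counts are those stated in the text; the variable names are hypothetical. The four categories sum to 34, so three papers are not itemized in that paragraph, and the code makes no assumption about how they are classified.

```python
# Tallies as stated in the paragraph above; names are illustrative only.
TOTAL_PAPERS = 37
strategy_counts = {
    "survey research": 12,
    "experimental research": 9,
    "qualitative approaches": 7,
    "case studies": 6,
}

# Papers covered by the four listed categories, and the remainder
# that the paragraph does not break down further.
itemized = sum(strategy_counts.values())
not_itemized = TOTAL_PAPERS - itemized

# Percentage of all 37 papers per category, rounded to one decimal place.
strategy_shares = {
    name: round(100 * n / TOTAL_PAPERS, 1) for name, n in strategy_counts.items()
}

for name, pct in strategy_shares.items():
    print(f"{name}: {strategy_counts[name]} papers ({pct}%)")
print(f"not itemized in the paragraph: {not_itemized} papers")
```

Expressing the counts against the full set of 37 papers, rather than against the 34 itemized ones, keeps the percentages comparable with the other distributions reported in this section.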

The diversity in data collection and analysis methods used across these papers highlights the varying research priorities and objectives. While surveys and questionnaires dominate, ensuring broad coverage and ease of analysis, methods like interviews and focus groups are invaluable for their depth. These qualitative tools are essential for exploring nuances that surveys might overlook.

3.4.2. Thing Ethnography: Adapting Ethnographic Methods for Contemporary Challenges - Analysis of a Case

Qualitative research approaches are continually adapting to address emerging challenges and leverage novel technologies. Thing ethnography exemplifies this transformation by modifying conventional ethnographic techniques to accommodate contemporary limitations, such as restricted access and the need for rapid data gathering. A distinguishing feature of this methodology, especially in its latest implementations, is the integration of artificial intelligence tools, such as ChatGPT, into the ethnographic interview process. This innovative methodology allows researchers to incorporate AI as part of the ethnographic method, providing a distinct perspective on data collection in the digital technology era. Given this novelty, we decided to analyze it in greater depth.

Thing ethnography is a more efficient iteration of classic ethnography, aiming to collect cultural and social knowledge without requiring extensive on-site presence. This approach is particularly advantageous in dynamic contexts marked by rapid technological progress and frequent changes. Thing ethnography empowers researchers to expedite the analysis of human-AI interactions and their impacts by incorporating AI platforms like ChatGPT into their studies.

The research study “Challenges for Higher Education in the Era of Widespread Access to Generative AI” (Walczak & Cellary, 2023) exemplifies this innovative methodology. This research employed ethnography to assess the integration of GAI tools in higher education. An innovative feature of this study was the utilization of ChatGPT for conducting certain ethnographic interviews, thereby gathering data on AI and employing AI as a tool in the data collection process. This approach enabled a comprehensive understanding of AI’s function and its perception among students and instructors, providing a depth of insight that conventional interviews conducted solely with humans may not adequately capture.

Integrating AI, such as ChatGPT, with thing ethnography offers numerous benefits, including enhanced data gathering efficiency and improved data quality. The research study by Walczak and Cellary (2023) exemplifies the effectiveness of Thing Ethnography in rapidly producing comprehensive and meaningful observations that contribute to the development of educational policies and practices regarding AI. This approach extends the conventional boundaries of ethnographic research and adapts them to accommodate the intricacies of digital interaction and AI facilitation.

The integration of AI interviews into thing ethnography marks a methodological advancement in qualitative research. By employing AI as both the subject and tool in ethnographic investigations, researchers can uncover new social and cultural dimensions of interaction in the digital era. This evolving methodology promises to offer insights into the complex relationship between humans and AI, potentially reshaping our understanding of technology and society.

4. Discussion

4.1. Discussion of Results

The integration of GAI in HE has been studied across various dimensions, revealing its multifaceted impact on educational practices, stakeholders, and activities. This discussion synthesizes findings from the 37 selected articles, grouped into three categories: focus on the technology, focus on the stakeholders, and focus on the activities, as outlined in Table 5.

4.1.1. Focus on the Technology – The Use of GAI

The technological capabilities and limitations of GAI tools, particularly ChatGPT, have been central to numerous studies. These tools offer unprecedented opportunities for innovation in education but also pose significant challenges.

ChatGPT's application in higher education is widespread, with studies exploring its role in enhancing student support, teaching efficiency, and research productivity. For example, a study by Michel-Villarreal, Vilalta-Perdomo, Salinas-Navarro, Thierry-Aguilera, and Gerardou (2023) utilized ChatGPT in semi-structured interviews to identify educational challenges and opportunities, revealing significant potential for providing continuous student support and introducing interactive learning experiences. In game development courses, the integration of ChatGPT and Dall-E significantly improved students' creative, problem-solving, and critical skills, demonstrating the tools' ability to foster flexible and adaptive learning (French, Levi, Maczo, Simonaityte, Triantafyllidis, & Varda, 2023).

In specific educational contexts like engineering, ChatGPT's impact on assessment integrity was scrutinized. Researchers found that ChatGPT could generate passable responses to assessment questions, prompting a reevaluation of traditional assessment methods to maintain academic standards (Nikolic et al., 2023). Similarly, in functional programming courses, ChatGPT assisted students with assignments and performance evaluations, highlighting both its potential and limitations (Popovici, 2023).

Beyond ChatGPT, GAI tools have been integrated into various academic disciplines, demonstrating their broad applicability and impact. For instance, in journalism education, GAI tools were seen as transformative, with significant ethical considerations regarding their use (Lopezosa, Codina, Pont-Sorribes, & Vállez, 2023). In programming education, GAI tools enhanced coding skills and critical thinking, though their impact on motivation varied depending on the challenge's complexity (Yilmaz & Karaoglan Yilmaz, 2023). In medical education, the need for curriculum reform and professional development to address ethical concerns and improve teaching and learning processes was emphasized (Shimizu et al., 2023). At the institutional level, GAI tools were recognized for their potential to drive policy development, digital literacy, and ethical AI use, though concerns were raised about their possible negative impact on academic labor, such as making scholars less inquisitive and reflexive (Walczak & Cellary, 2023; Watermeyer, Phipps, Lanclos, & Knight, 2023).

Students' perspectives on GAI technology further enrich this narrative. Research indicated that students were generally familiar with and positively inclined towards GAI tools, although they expressed concerns about over-reliance and social interaction limitations. These studies suggested that higher education must evolve to incorporate AI-driven changes, focusing on preparing students for future employment in AI-powered jobs (Chan & Hu, 2023; Chiu, 2024; Jaboob, Hazaimeh, & Al-Ansi, 2024).

This discussion reflects the multifaceted impact and interconnection of GAI in HE:

1. Versatility and Potential: GAI tools like ChatGPT have demonstrated significant potential across various disciplines, enhancing student support, teaching efficiency, and research productivity. They offer innovative learning experiences and assist in routine educational tasks, thereby freeing up valuable time for educators to focus on complex teaching and research activities (e.g., French et al., 2023; Michel-Villarreal et al., 2023).

2. Assessment Challenges: The use of ChatGPT in educational settings raises concerns about assessment integrity. Studies have shown that ChatGPT can generate passable responses to assessment questions, prompting the need for reevaluating traditional assessment strategies to maintain academic standards (e.g., Nikolic et al., 2023).

3. Broader Impact: Beyond specific applications like ChatGPT, GAI tools have broad applicability and impact across different academic disciplines, including journalism, programming, and medical education. These tools are recognized for their transformative potential, though ethical considerations and the need for curriculum reform are essential (e.g., Lopezosa, Codina, Pont-Sorribes, & Vállez, 2023; Shimizu et al., 2023; Yilmaz & Karaoglan Yilmaz, 2023).

4.1.2. Focus on Stakeholders: Acceptance and Perceptions

The acceptance and perceptions of GAI usage among various stakeholders, including students, teachers, and institutional leaders, are crucial for successful integration. Several studies employed theoretical models to measure acceptance levels and identify influencing factors.

Students' Acceptance: The Unified Theory of Acceptance and Use of Technology (UTAUT) was used for evaluating students' acceptance of GAI. These studies confirmed the tool's validity but recommended further research to ensure its applicability across different contexts (Yilmaz, Yilmaz, & Ceylan, 2023). Key factors influencing students' behavioral intentions towards using ChatGPT included performance expectancy, effort expectancy, and social influence. For instance, user-friendliness and multilingual capabilities of ChatGPT were found to enhance its acceptance (Strzelecki & ElArabawy, 2024).

Instructors' Perceptions: Instructors' perceptions highlighted the practical implications of GAI integration. Research indicates that the overall quality and customization of GAI tools were key determinants of their impact on learning. Continuous optimization and timely feedback were essential to maximize benefits (Chen, Zhuo, & Lin, 2023). Moreover, responsible implementation was emphasized, with educators encouraged to adopt a cautious approach when integrating AI into their teaching practices (Chan & Lee, 2023; Chergarova, Tomeo, Provost, De la Peña, Ulloa, & Miranda, 2023).

Institutional Impact: At the institutional level, GAI tools were recognized for their potential to drive policy development and broader curricular transformations. Surveys and studies suggested that higher education institutions should develop comprehensive plans for AI usage, incorporating ethical guidelines and risk management strategies (Chan, 2023). These institutional strategies are crucial for fostering a supportive environment for AI adoption and addressing potential ethical concerns (Chan & Lee, 2023).

Regarding the stakeholders, the main conclusions can be summarized as follows:

Student Acceptance: The acceptance of GAI tools among students is influenced by factors such as performance expectancy, effort expectancy, and social influence. Studies indicate that user-friendliness and multilingual capabilities of tools like ChatGPT enhance their acceptance. Effective promotion and support from educators and administrators are crucial (e.g., Strzelecki & ElArabawy, 2024; Yilmaz, Yilmaz, & Ceylan, 2023).

Instructor Perceptions: Instructors recognize the practical implications of integrating GAI tools. Key determinants of impact include the overall quality and customization of these tools. Continuous optimization, timely feedback, and responsible implementation are essential for maximizing benefits and addressing potential challenges (e.g., Chen, Zhuo, & Lin, 2023; Chergarova, Tomeo, Provost, De la Peña, Ulloa, & Miranda, 2023).

Institutional Strategies: Higher education institutions need to develop comprehensive plans for AI usage, incorporating ethical guidelines and risk management strategies. Institutional support is vital for fostering a positive environment for AI adoption and addressing concerns about academic labor and ethical use (e.g., Chan, 2023; Watermeyer, Phipps, Lanclos, & Knight, 2023).

4.1.3. Focus on Tasks and Activities: Utilizing GAI in Various Situations

Finally, the practical application of GAI technologies extends across various tasks and activities in higher education, including assessment, writing, content analysis, content generation, academic integrity, and feedback.

The impact of ChatGPT on scholarly writing practices and assessment is a key area of exploration. Concerns about potential plagiarism have prompted calls for educators to develop technical skills and adapt assessment strategies to effectively incorporate AI tools (Farazouli, Cerratto-Pargman, Bolander-Laksov, & McGrath, 2023; Singh, 2023). These studies highlighted the need for clear guidelines to distinguish between human and AI-generated content, thereby ensuring academic integrity.

GAI's role in content analysis and generation is another significant focus. For example, an experimental investigation using GPT-3.5-Turbo for inductive thematic analysis explored whether AI models could effectively interpret data typically analyzed by humans (De Paoli, 2023). Additionally, research on automated paraphrasing tools emphasizes the importance of educators understanding the persuasive language these tools use to develop students' critical language awareness (Hammond, Lucas, Hassouna, & Brown, 2023).

Academic integrity challenges posed by GAI tools are a recurrent theme. Studies examined the impact of GAI across various disciplines, including nuclear medicine training, where ChatGPT's potential to generate superficial examination answers was highlighted (Currie & Barry, 2023). The effectiveness of AI detection tools like Turnitin in identifying AI-generated content was also evaluated, suggesting a need for further training and development to improve detection accuracy (Perkins, Roe, Postma, McGaughran, & Hickerson, 2024).

The implications of GAI tools for providing feedback and enhancing the learning experience have been explored in various studies. For instance, research on the impact of GAI tools on English as a second language teachers and their assessment practices underscored the importance of balancing human and AI-generated feedback (Alexander, Savvidou, & Alexander, 2023). Additionally, discussions on the integration of assessment strategies and the broader definition of academic misconduct revealed that experienced markers often struggled to distinguish between student-written and AI-generated texts (Hassoulas, Powell, Roberts, Umla-Runge, Gray, & Coffey, 2023).

Overall, the discussion suggests that while GAI tools, such as ChatGPT, offer significant opportunities to enhance education, their integration must be carefully managed to address challenges related to academic integrity, ethical use, and the balance between AI assistance and traditional learning methods. By considering the perspectives of technology, stakeholders, and roles, this synthesis provides a comprehensive understanding of the multifaceted implications of GAI in higher education.

Here are the main findings regarding the activities (Figure 1):

1. Academic Integrity: The integration of GAI tools poses challenges related to academic integrity, particularly concerning plagiarism and the authenticity of AI-generated content. Clear guidelines and policies are necessary to ensure academic standards and promote responsible use (e.g., Farazouli, Cerratto-Pargman, Bolander-Laksov, & McGrath, 2023; Perkins, Roe, Postma, McGaughran, & Hickerson, 2024; Singh, 2023).

2. Educational Enhancement: GAI tools can significantly enhance the learning experience by providing support in tasks like content generation, analysis, and feedback. However, balancing AI assistance with traditional learning methods is crucial to ensure comprehensive educational development (e.g., De Paoli, 2023; Hammond, Lucas, Hassouna, & Brown, 2023).

3. Feedback and Assessment: The role of GAI tools in providing feedback and shaping assessment practices is significant. These tools can offer valuable insights and support, though the distinction between human and AI-generated content remains a challenge. Effective integration requires a nuanced approach to feedback and assessment strategies (e.g., Alexander, Savvidou, & Alexander, 2023; Hassoulas, Powell, Roberts, Umla-Runge, Gray, & Coffey, 2023).

4.2. Research Agenda

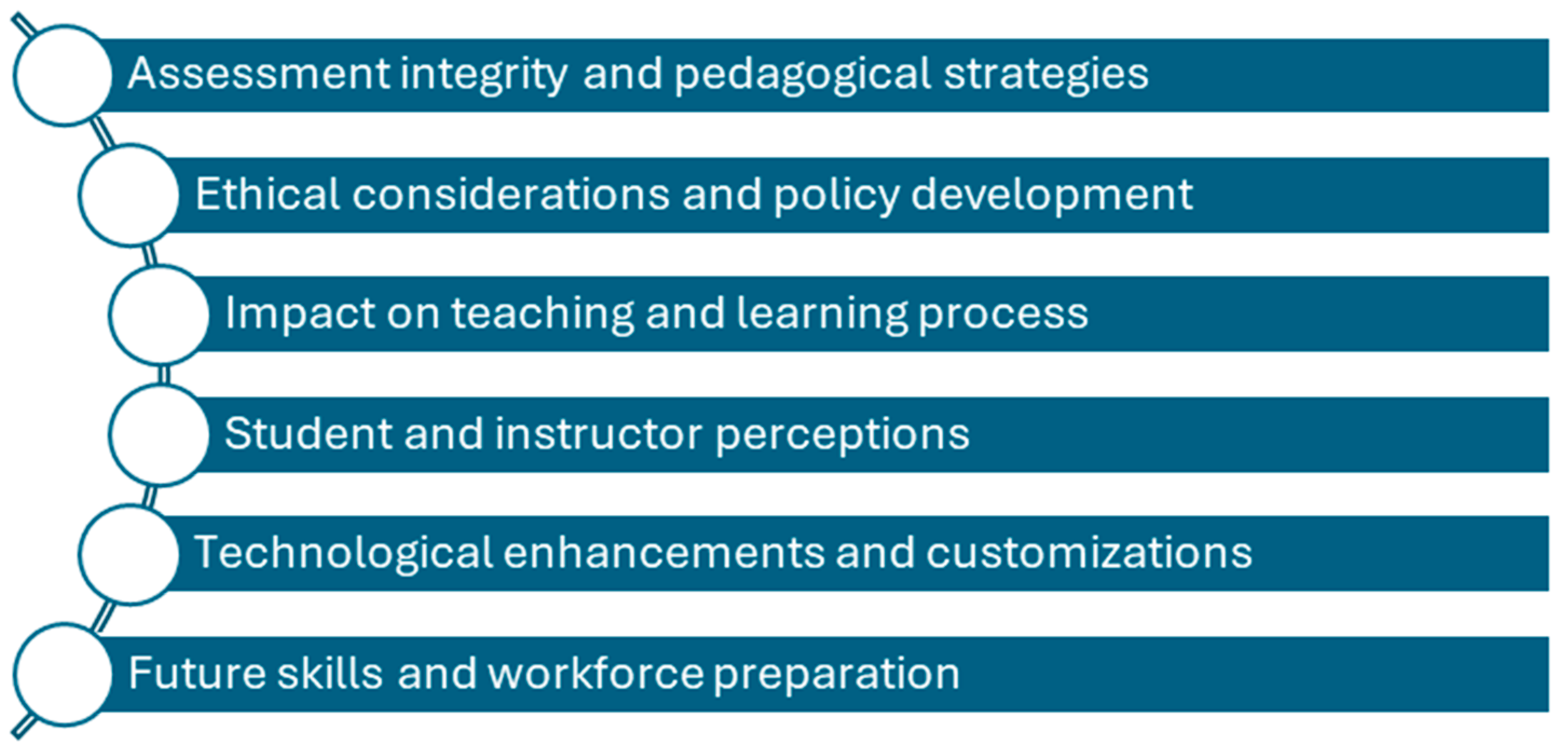

In this section, considering the main findings, we propose a potential research agenda for the future. Based on the research questions, findings, and conclusions of the 37 studies, we identify six key areas where knowledge about GAI and its use in HE needs further development. These areas are (Figure 2):

1. Assessment Integrity and Pedagogical Strategies: it is necessary to develop robust assessment methods and pedagogical strategies that effectively incorporate GAI tools while maintaining academic integrity. For example, we need to understand how traditional assessment strategies can be adapted to account for the capabilities of GAI tools like ChatGPT and identify the most effective pedagogical approaches for integrating GAI tools into various disciplines without compromising academic standards.

2. Ethical Considerations and Policy Development: another area requiring further research is the establishment of ethical guidelines and institutional policies for the responsible use of GAI tools in higher education. Possible research questions include the ethical challenges arising from the use of GAI tools in educational contexts and how higher education institutions can develop and implement policies that promote the ethical use of GAI.

3. Impact on Teaching and Learning Processes: it is essential to investigate how GAI tools influence teaching methodologies, learning outcomes, and student engagement. Questions that require further study include how GAI tools affect student engagement, motivation, and learning outcomes across different disciplines, and which best practices exist for integrating GAI tools into the curriculum to enhance learning.

4. Student and Instructor Perceptions: further research is needed to explore the perceptions of GAI tools among students and teachers. Researchers should investigate the acceptance of these tools and identify factors influencing their adoption. For instance, it is crucial to understand what drives the acceptance and usage of GAI tools among students and instructors, and how perceptions of these tools differ across various demographics and educational contexts.

5. Technological Enhancements and Customization: it is essential to evaluate the effectiveness of various customization and optimization strategies for GAI tools in educational settings. For instance, it is important to understand how GAI tools can be customized to better meet the needs of specific educational contexts and disciplines, and to identify which technological enhancements can improve the usability and effectiveness of these tools.

6. Future Skills and Workforce Preparation: it is crucial to understand the role of GAI tools in preparing students for future employment and developing necessary skills for the evolving job market. Research should focus on identifying the essential skills students need to effectively use GAI tools in their future careers and how higher education curricula can be adapted to incorporate these tools, preparing students for AI-driven job markets.

5. Conclusions

The adoption of GAI is irreversible. Increasingly, students, teachers, and researchers regard this technology as a valuable support for their work, one that affects all aspects of the teaching-learning process, including research. This systematic literature review of 37 articles published between 2023 and 2024 on the use of GAI in HE reveals some concerns.

GAI tools have demonstrated their potential to enhance student support, improve teaching efficiency, and facilitate research activities. They offer innovative and interactive learning experiences while aiding educators in managing routine tasks. However, these advancements necessitate a reevaluation of assessment strategies to maintain academic integrity and ensure the quality of education.

The acceptance and perceptions of GAI tools among students, instructors, and institutional leaders are critical for their successful implementation. Factors such as performance expectancy, effort expectancy, and social influence significantly shape attitudes toward these technologies. Ethical considerations, particularly concerning academic integrity and responsible use, must be addressed through comprehensive policies and guidelines.

Moreover, GAI tools can significantly enhance various educational activities, including assessment, writing, content analysis, and feedback. However, balancing AI assistance with traditional learning methods is crucial. Future research should focus on developing robust assessment methods, ethical guidelines, and effective pedagogical strategies to maximize the benefits of GAI while mitigating potential risks.

This study presents several limitations. Firstly, the articles reviewed only cover the period until the end of January 2024. Therefore, this analysis needs to be updated with studies published after this date. Secondly, the search query used may be considered a limitation, as using different words might identify different studies. Additionally, this SLR is limited to studies conducted in HE. Research done in other contexts or educational levels could provide different results and perspectives.

For future work, it is important to note that the use of GAI in HE is still in its early stages. This presents numerous opportunities for further analysis and exploration across various topics and areas. These include research on pedagogy, assessment, ethics, technology, and the development of future skills needed to remain competitive in the job market.

In conclusion, while GAI tools like ChatGPT offer transformative opportunities for higher education, their integration must be carefully managed. By addressing ethical concerns, fostering stakeholder acceptance, and continuously refining pedagogical approaches, higher education institutions can fully harness the potential of GAI technologies. This approach will not only enhance the educational experience but also prepare students for the evolving demands of an AI-driven future.

Author Contributions

Conceptualization, J.B., A.M. and G.C.; methodology, J.B., A.M. and G.C.; formal analysis, J.B., A.M. and G.C.; writing—original draft preparation, J.B., A.M. and G.C.; writing—review and editing, J.B., A.M. and G.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work is financially supported by national funds through FCT – Foundation for Science and Technology, I.P., under the projects UIDB/05460/2020 and UIDP/05422/2020.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Alexander, K., Savvidou, C., & Alexander, C. (2023). Who wrote this essay? Detecting AI-generated writing in second language education in higher education. Teaching English with Technology, 23(2), 25-43. [CrossRef]

- Al-Zahrani, A. M. (2023). The impact of generative AI tools on researchers and research: Implications for academia in higher education. Innovations in Education and Teaching International. [CrossRef]

- Barrett, A., & Pack, A. (2023). Not quite eye to A.I.: Student and teacher perspectives on the use of generative artificial intelligence in the writing process. International Journal of Educational Technology in Higher Education, 20. [CrossRef]

- Bond, M., Khosravi, H., De Laat, M., et al. (2024). A meta-systematic review of artificial intelligence in higher education: A call for increased ethics, collaboration, and rigour. International Journal of Educational Technology in Higher Education, 21(4). [CrossRef]

- Chan, C. K. Y. (2023). A comprehensive AI policy education framework for university teaching and learning. International Journal of Educational Technology in Higher Education, 20. [CrossRef]

- Chan, C. K. Y., & Hu, W. (2023). Students’ voices on generative AI: perceptions, benefits, and challenges in higher education. International Journal of Educational Technology in Higher Education, 20. [CrossRef]

- Chan, C. K. Y., & Lee, K. K. W. (2023). The AI generation gap: Are Gen Z students more interested in adopting generative AI such as ChatGPT in teaching and learning than their Gen X and millennial generation teachers? Smart Learning Environments, 10(1). [CrossRef]

- Chan, C. K. Y., & Zhou, W. (2023). An expectancy value theory (EVT) based instrument for measuring student perceptions of generative AI. Smart Learning Environments, 10(1). [CrossRef]

- Chen, J., Zhuo, Z., & Lin, J. (2023). Does ChatGPT play a double-edged sword role in the field of higher education? An in-depth exploration of the factors affecting student performance. Sustainability, 15, 16928. [CrossRef]

- Chergarova, V., Tomeo, M., Provost, L., De la Peña, G., Ulloa, A., & Miranda, D. (2023). Case study: Exploring the role of current and potential usage of generative artificial intelligence tools in higher education. Issues in Information Systems, 24(2), 282-292. [CrossRef]

- Chiu, T. K. F. (2024). Future research recommendations for transforming higher education with generative AI. Computers and Education: Artificial Intelligence, 6. [CrossRef]

- Crompton, H., & Burke, D. (2023). Artificial intelligence in higher education: The state of the field. International Journal of Educational Technology in Higher Education, 20(22). [CrossRef]

- Currie, G., & Barry, K. (2023). ChatGPT in nuclear medicine education. Journal of Nuclear Medicine Technology, 51(3), 247-254. [CrossRef]

- De Paoli, S. (2023). Performing an inductive thematic analysis of semi-structured interviews with a large language model: An exploration and provocation on the limits of the approach. Social Science Computer Review. [CrossRef]

- Duong, C. D., Vu, T. N., & Ngo, T. V. N. (2023). Applying a modified technology acceptance model to explain higher education students' usage of ChatGPT: A serial multiple mediation model with knowledge sharing as a moderator. International Journal of Management Education, 21(3), 100883. [CrossRef]

- Elkhodr, M., Gide, E., Wu, R., & Darwish, O. (2023). ICT students’ perceptions towards ChatGPT: An experimental reflective lab analysis. STEM Education, 3(2), 70-88. [CrossRef]

- Escalante, J., Pack, A., & Barrett, A. (2023). AI-generated feedback on writing: insights into efficacy and ENL student preference. International Journal of Educational Technology in Higher Education, 20(1). [CrossRef]

- Essel, H. B., Vlachopoulos, D., Essuman, A. B., & Amankwa, J. O. (2024). ChatGPT effects on cognitive skills of undergraduate students: Receiving instant responses from AI-based conversational large language models (LLMs). Computers and Education: Artificial Intelligence, 6. [CrossRef]