Submitted:

30 September 2024

Posted:

01 October 2024

Abstract

Keywords:

1. Introduction

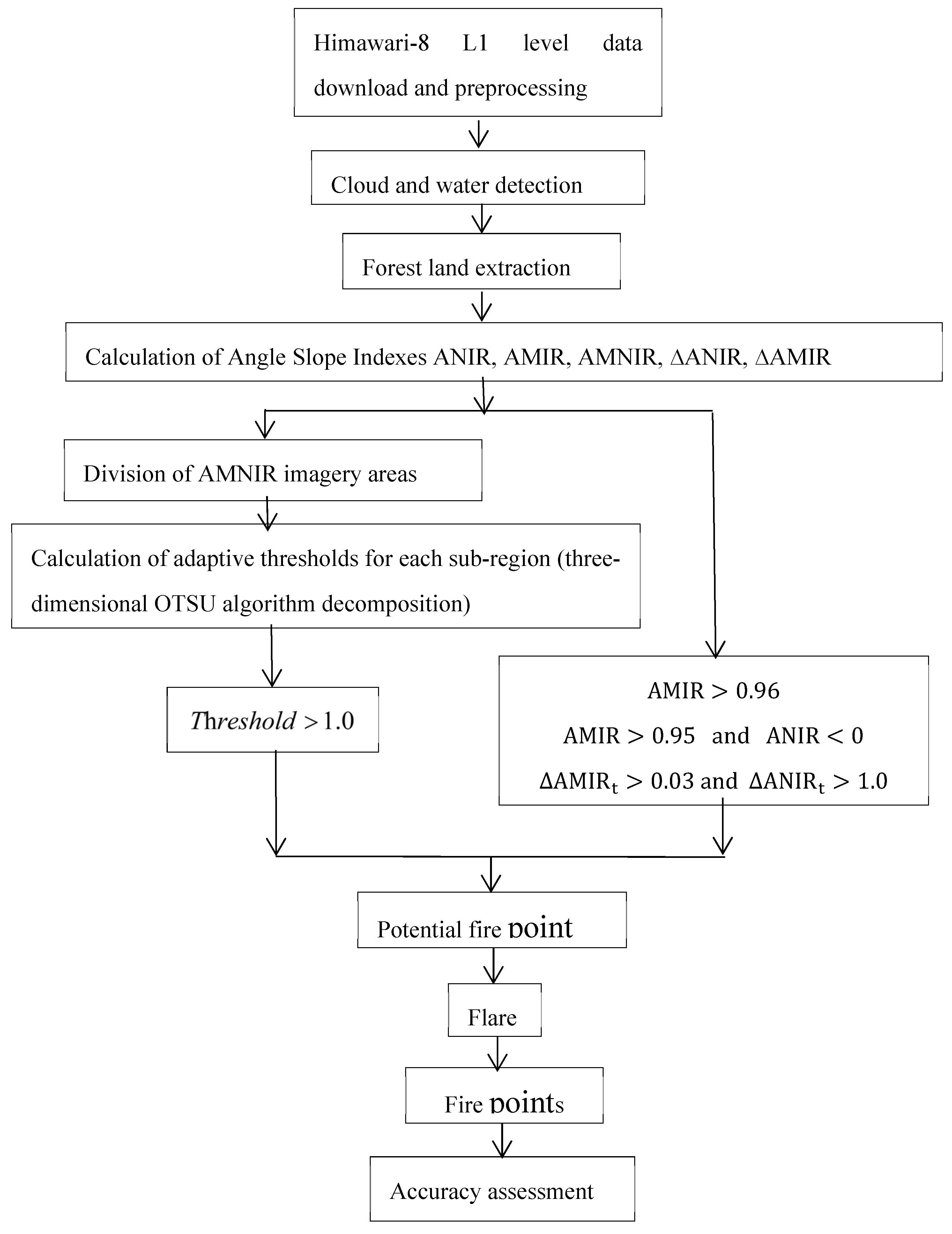

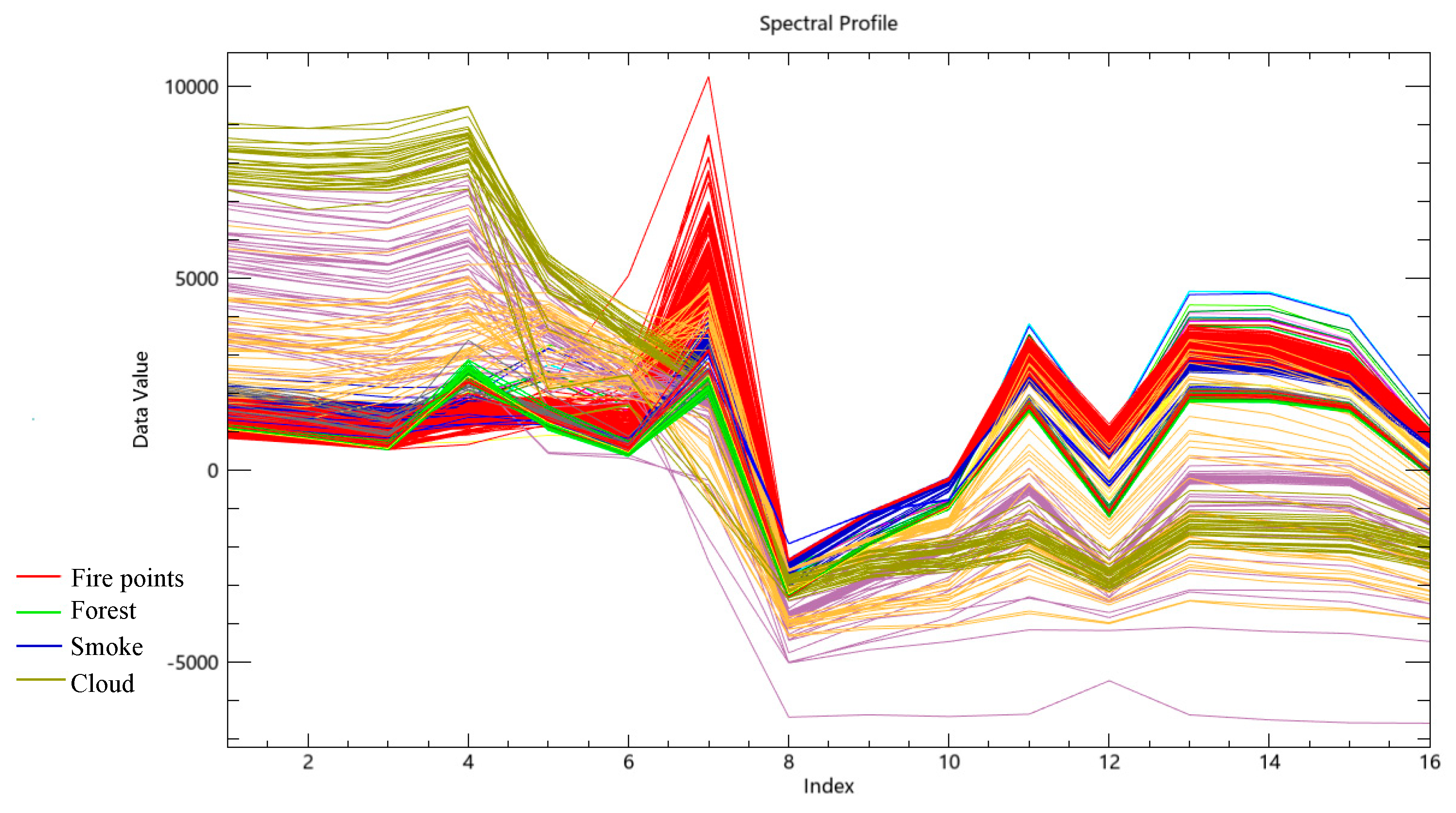

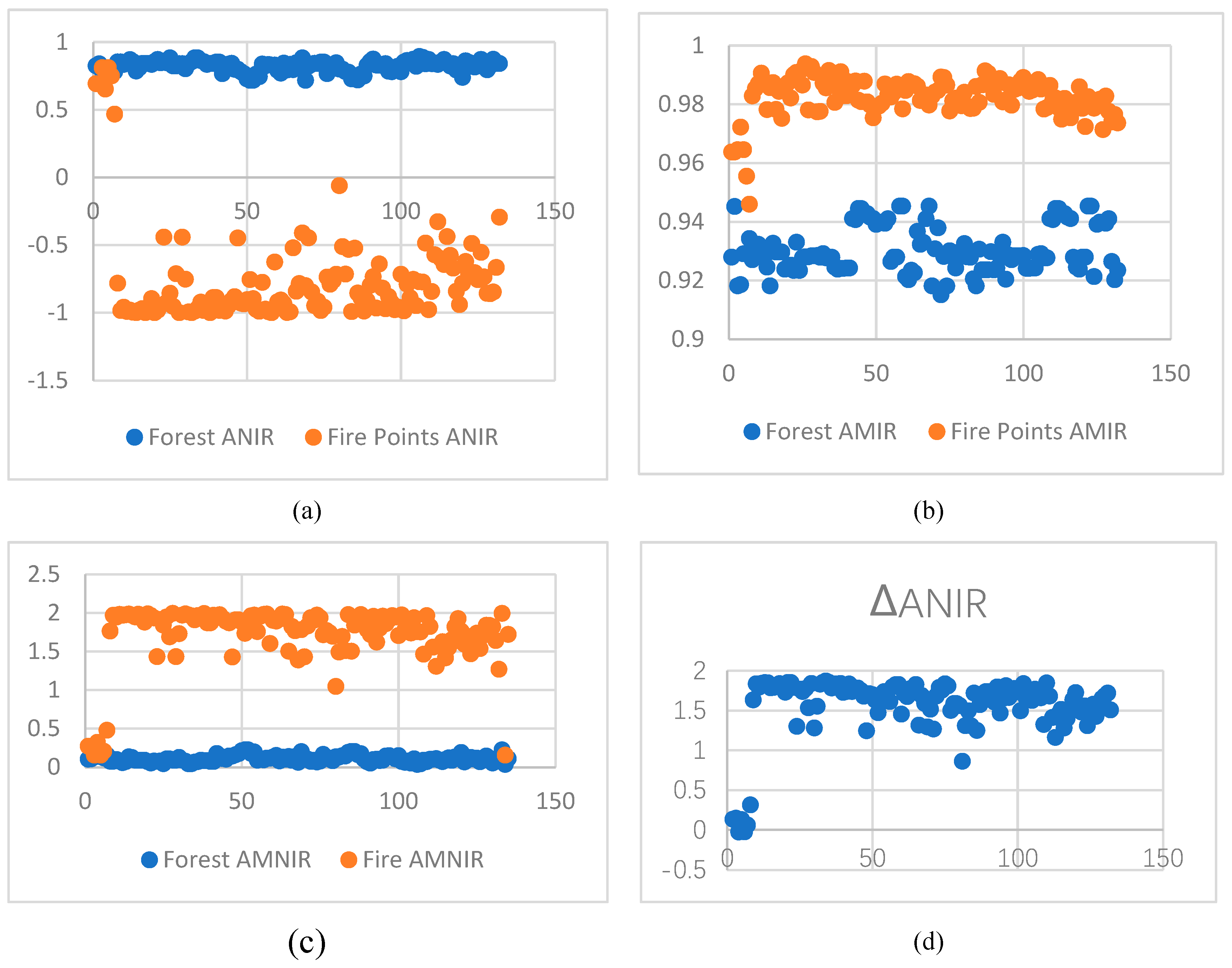

- To address the misjudgments caused by relying solely on changes in infrared band brightness values and single-band separation for forest fire discrimination, three indices were constructed: the Angle slope index based on Mid-infrared (AMIR), the Angle slope index based on Near-infrared (ANIR), and the Angle slope index based on the difference between mid-infrared and near-infrared (AMNIR). These indices integrate the reflectance characteristics of the visible and short-wave infrared bands with the strong inter-band correlations, enabling simultaneous monitoring of forest fire smoke and changes in fuel biomass. This improves both the sensitivity and the accuracy of fire discrimination.

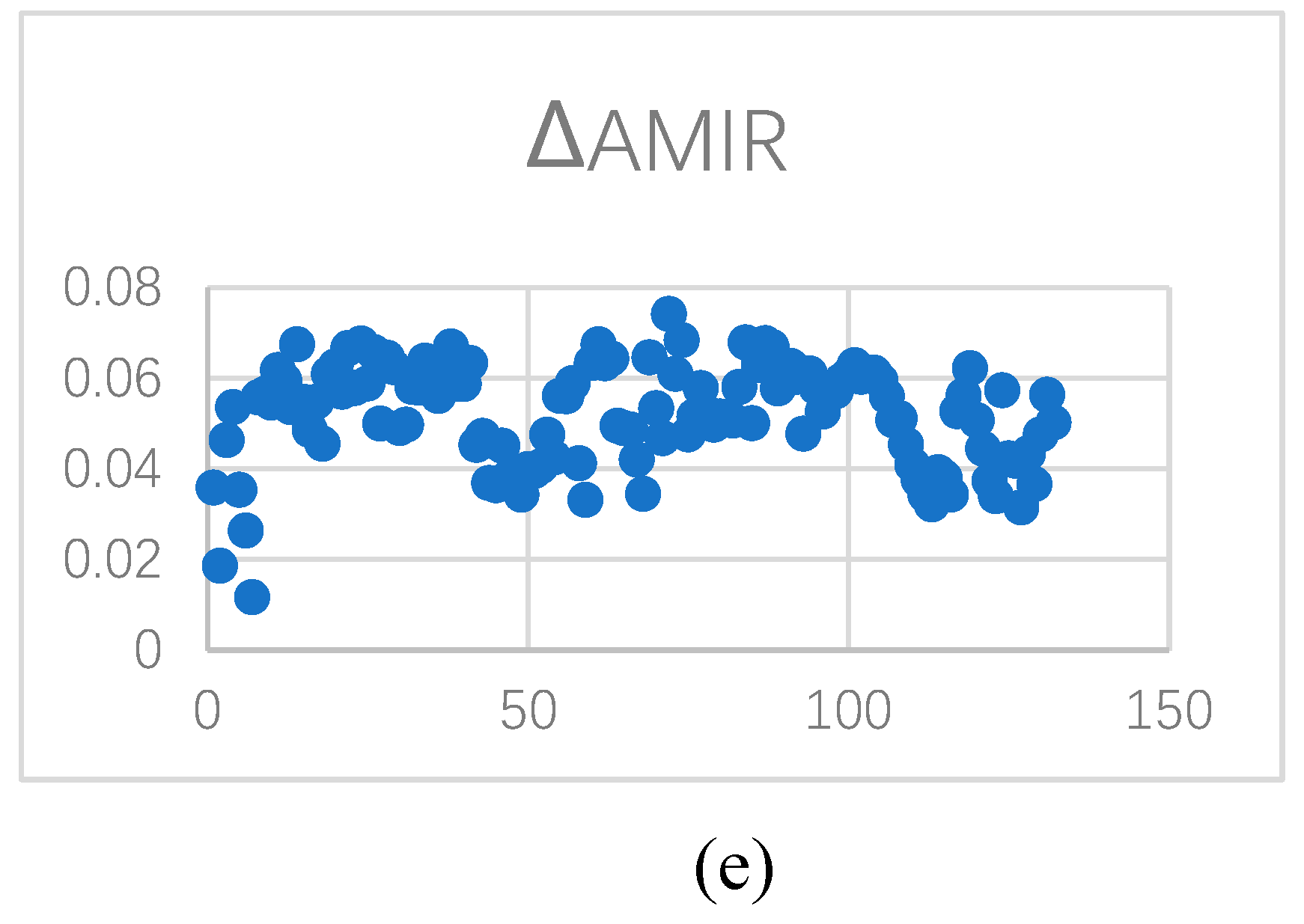

- To address the variation in fire discrimination thresholds across different backgrounds, a decomposed three-dimensional OTSU algorithm was applied to images of the Angle slope difference index AMNIR to calculate fire point discrimination thresholds for each subregion of the study area. This adaptive threshold calculation reduces the rate of missed detections, lowers algorithmic complexity, and improves efficiency, making the method better suited to practical application.

- To address the missed and incorrect detections caused by single-image fire discrimination algorithms, time-series remote sensing images were used to construct the time-series Angle slope difference indices ∆ANIR and ∆AMIR, thus enhancing the accuracy of fire discrimination.

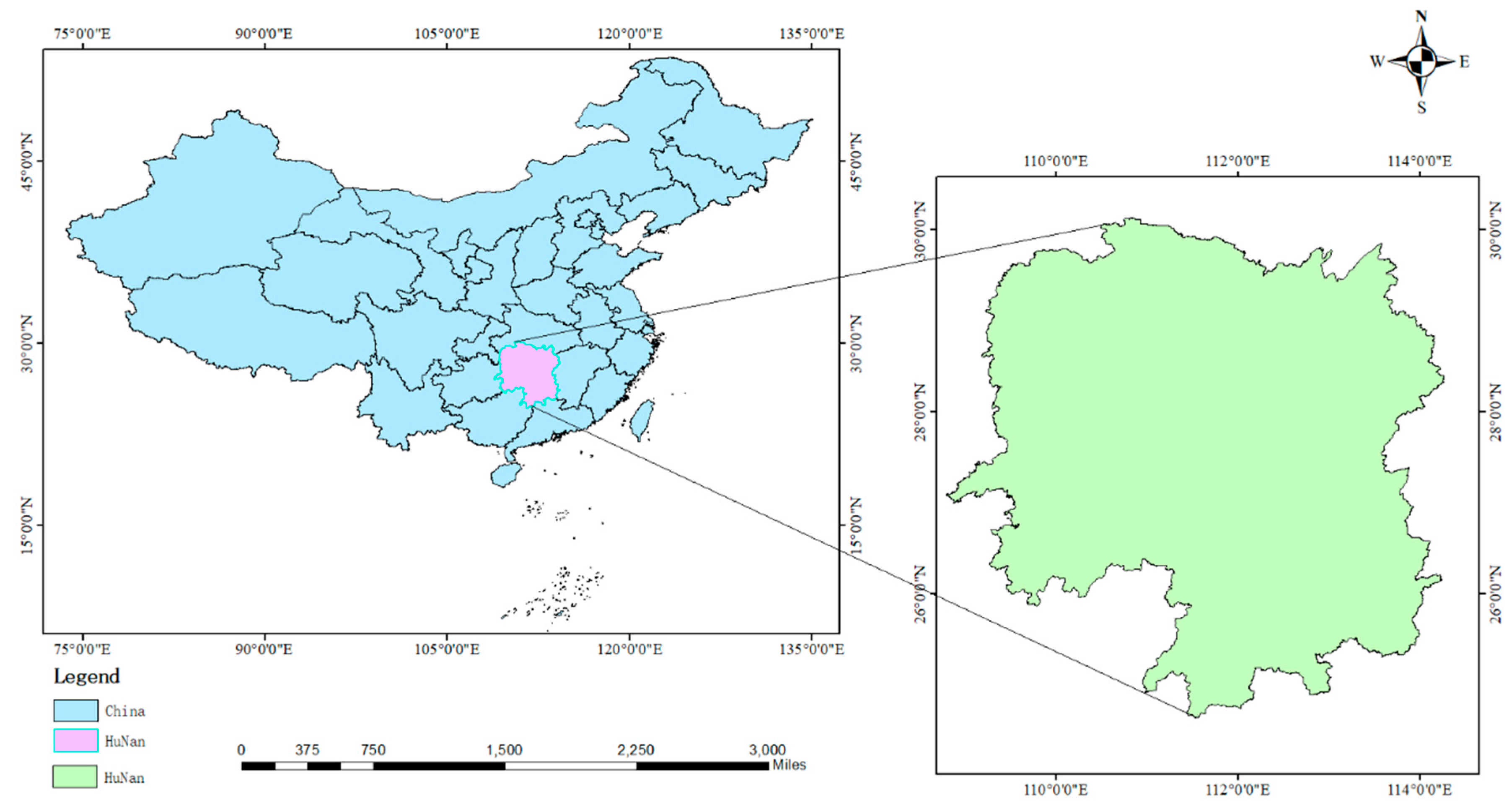

2. Data and Methods

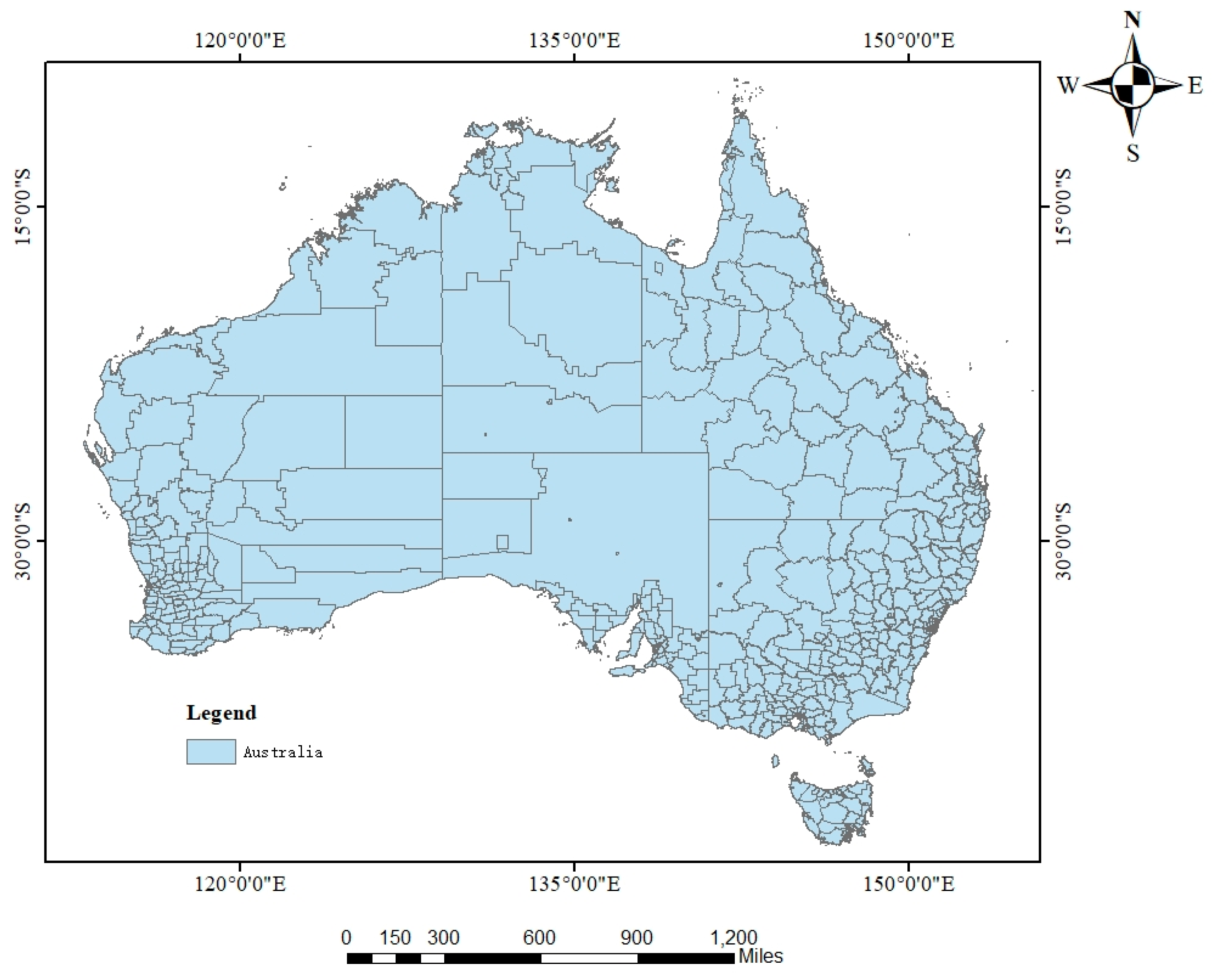

2.1. Data

2.1.1. The Remote Sensing Satellite Sensors and Data Channels

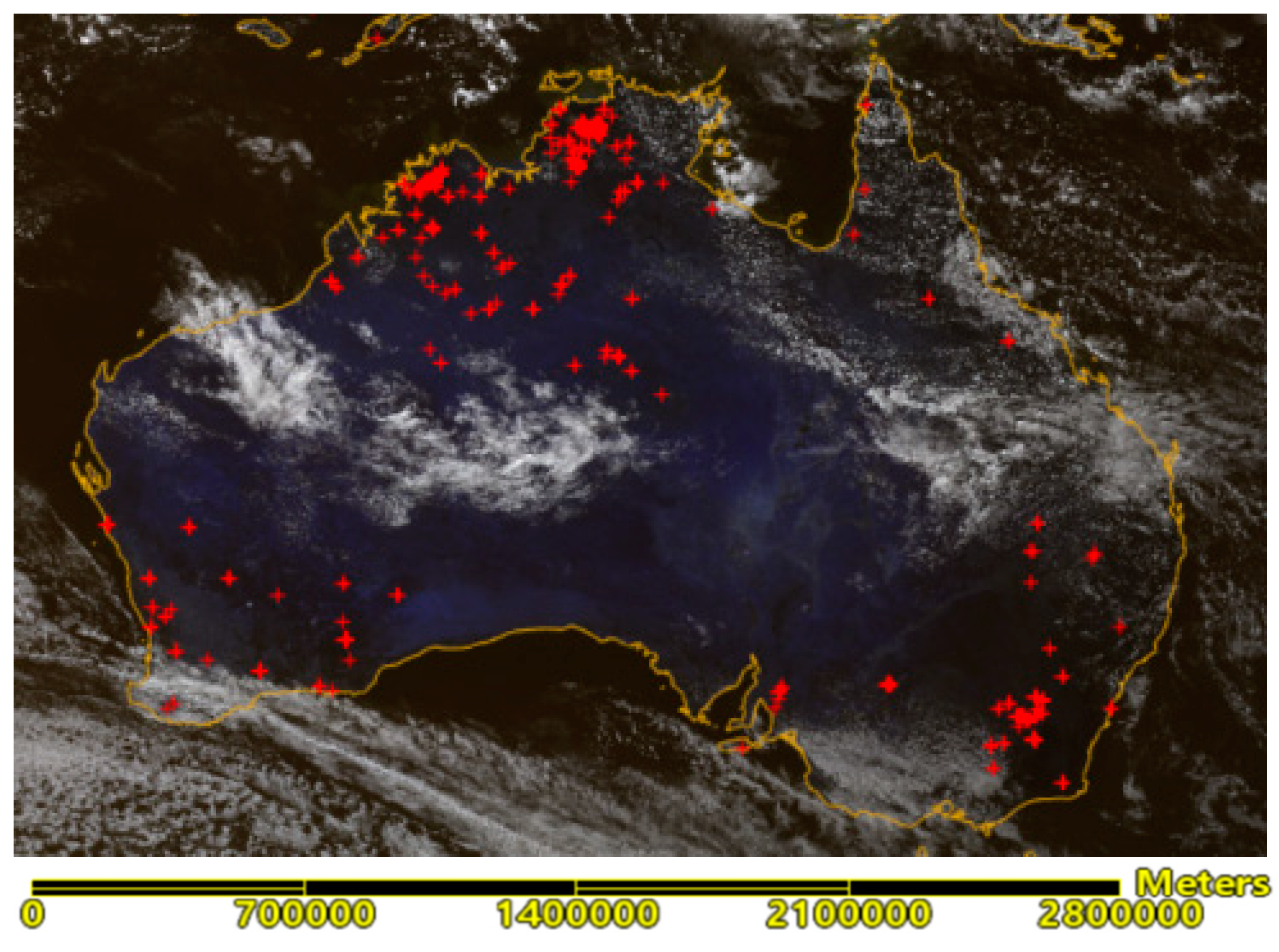

2.1.2. Sample Points Data for Angle Slope Index Threshold Statistics

2.1.3. Forest Fire Ground Actual Data and Land Cover Data

2.2. Method

2.2.1. The Theoretical Basis of Satellite Remote Sensing Fire Point Identification

2.2.2. Forest Fire Discrimination

2.2.2.1. Cloud Detection

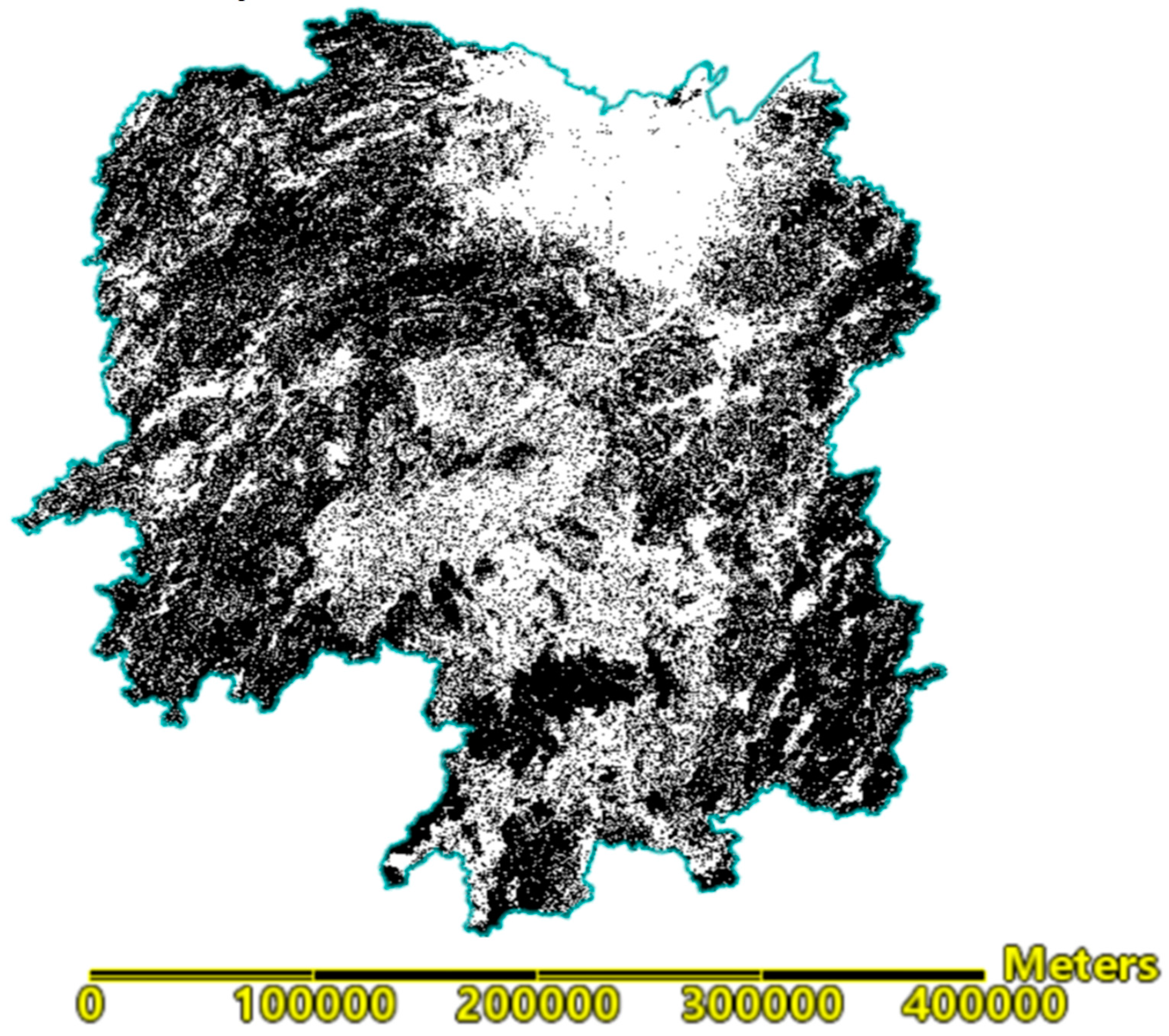

2.2.2.2. Forest Land Discrimination

- Use land cover data: the 2020 Global Land Cover with Fine Classification System product at 30 m resolution, downloaded from the Earth big data science engineering data sharing service system, which is supported by the Institute of Aerospace Information Innovation, Chinese Academy of Sciences.

- Use the Normalized Difference Vegetation Index (NDVI) to confirm:
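NDVI is conventionally computed from red and near-infrared reflectance as (NIR − Red) / (NIR + Red). The sketch below illustrates that standard definition only. It assumes the AHI 0.64 µm channel supplies the red band and the 0.86 µm channel the near-infrared band, and that both have already been resampled to a common grid. The function names and the 0.3 cutoff are hypothetical placeholders, not values taken from this study.

```python
import numpy as np

def ndvi(red, nir):
    """Standard NDVI = (NIR - Red) / (NIR + Red); NaN where NIR + Red == 0."""
    red = np.asarray(red, dtype=float)
    nir = np.asarray(nir, dtype=float)
    total = nir + red
    out = np.full(total.shape, np.nan)
    # Divide only where the denominator is non-zero; other cells stay NaN.
    np.divide(nir - red, total, out=out, where=total != 0)
    return out

def vegetation_mask(red, nir, threshold=0.3):
    """Boolean mask of pixels whose NDVI exceeds a cutoff.

    The default threshold is a hypothetical value for illustration only.
    NaN pixels compare as False and are therefore excluded.
    """
    return ndvi(red, nir) > threshold
```

In practice the cutoff would be tuned per region and season, and the resulting mask intersected with the land cover classification.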

2.2.2.3. Forest Fire Point Discrimination

1. Construction of Angle slope indices and forest fire discrimination;

2. Construction of the time-series Angle slope difference index and forest fire discrimination;

3. Decomposed 3D OTSU adaptive threshold segmentation algorithm.
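As an illustration of the third step, the sketch below shows one common reading of a "decomposed" three-dimensional Otsu threshold. Instead of searching the joint 3D histogram of pixel value, neighbourhood mean and neighbourhood median, it computes an independent one-dimensional Otsu threshold on each of the three features and combines the results. The formulation used in this study is not given in the text, so the window size, the bin count, the rule that all three features must exceed their thresholds, and the assumption that fire pixels take high index values are all assumptions made here. Function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import median_filter, uniform_filter

def otsu_threshold(values, bins=256):
    """1D Otsu: return the bin edge that maximises between-class variance."""
    hist, edges = np.histogram(values, bins=bins)
    p = hist.astype(float) / hist.sum()
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(p)               # probability of the lower class
    m = np.cumsum(p * centers)      # cumulative first moment
    w1 = 1.0 - w0                   # probability of the upper class
    valid = (w0 > 1e-12) & (w1 > 1e-12)
    sigma_b = np.zeros_like(w0)
    sigma_b[valid] = (m[-1] * w0[valid] - m[valid]) ** 2 / (w0[valid] * w1[valid])
    k = int(np.argmax(sigma_b))
    return edges[k + 1]             # values >= this edge fall in the upper class

def decomposed_otsu_3d(index_image, size=3, bins=256):
    """Threshold an index image using three independent 1D Otsu thresholds.

    Assumption: a pixel is flagged only if its value, its neighbourhood mean
    and its neighbourhood median all reach their respective thresholds.
    NaN values are not handled and should be masked out beforehand.
    """
    img = np.asarray(index_image, dtype=float)
    mean = uniform_filter(img, size=size)
    median = median_filter(img, size=size)
    thresholds = tuple(otsu_threshold(f.ravel(), bins) for f in (img, mean, median))
    mask = (img >= thresholds[0]) & (mean >= thresholds[1]) & (median >= thresholds[2])
    return mask, thresholds
```

To obtain region-specific thresholds, `decomposed_otsu_3d` would be called separately on each subregion tile of the index image rather than once on the full scene.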

2.2.3. Flare Removal

2.2.4. Precision Evaluation Method

3. Results

3.1. Angle Slope Index Threshold Statistics

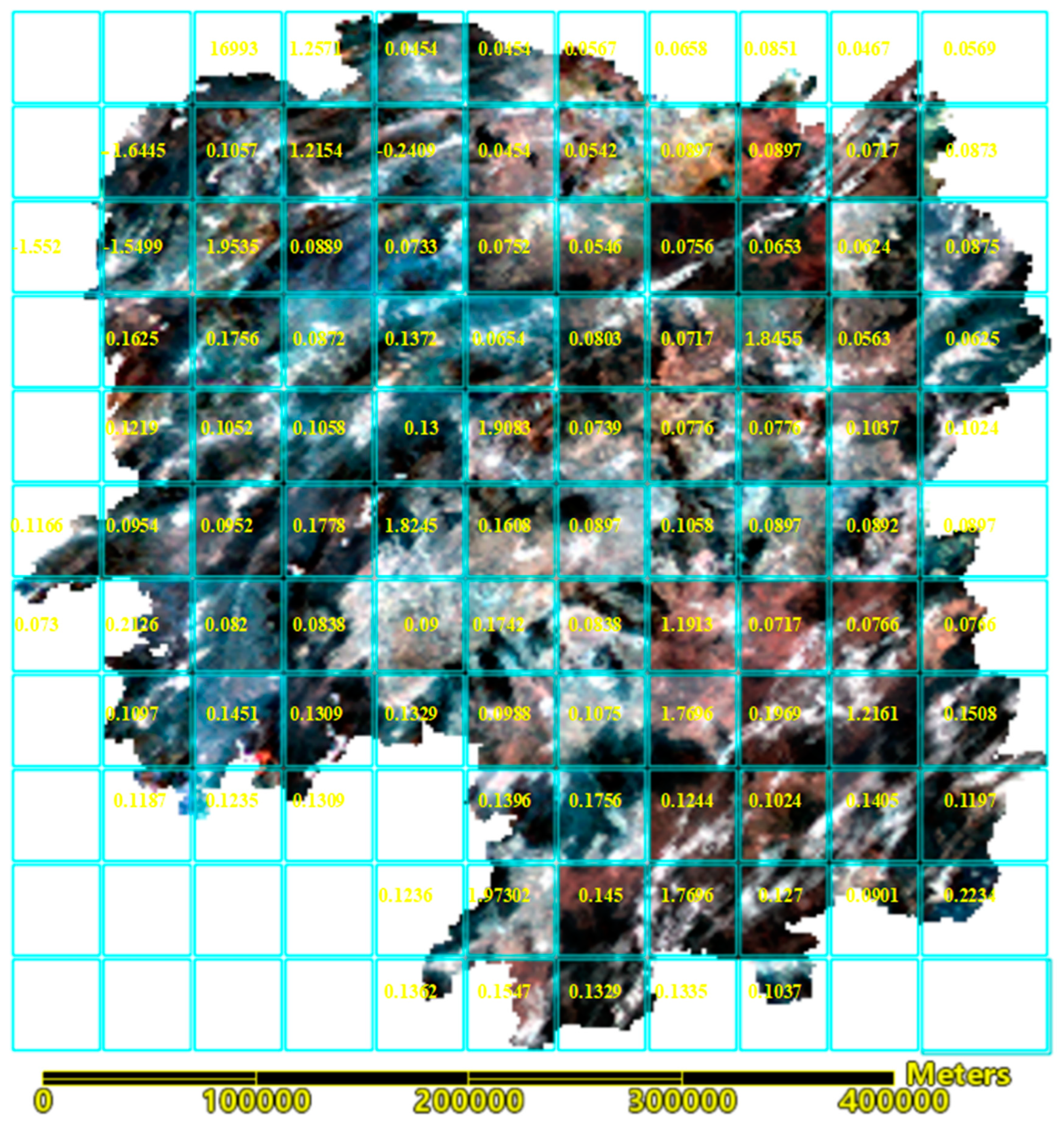

3.2. Forest Fire Identification Precision of Application Case

3.2.1. Forest Fire Identification Precision Based on Angle Slope Index Threshold (ASITR)

3.2.2. Forest Fire Identification Precision Based on the Fusion of Angle Slope Difference Index (

4. Discussion

5. Conclusion

6. Patents

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Satellite | Sensor | Channel No. | Wavelength (µm) | Spatial Resolution (m) |
|---|---|---|---|---|
| Himawari-8 | AHI | 1 | 0.46 | 1000 |
| | | 2 | 0.51 | 1000 |
| | | 3 | 0.64 | 500 |
| | | 4 | 0.86 | 1000 |
| | | 5 | 1.60 | 2000 |
| | | 6 | 2.30 | 2000 |
| | | 7 | 3.90 | 2000 |
| | | 8 | 6.20 | 2000 |
| | | 14 | 11.20 | 2000 |
| | | 16 | 13.30 | 2000 |
| Sentinel-2A | MSI | 2 | 0.49 | 10 |
| | | 3 | 0.56 | 10 |
| | | 4 | 0.665 | 10 |
| | | 8A | 0.865 | 20 |
| | | 11 | 1.61 | 20 |
| | | 12 | 2.19 | 20 |

| Address | Type | Date & Time | Imagery | Level |
|---|---|---|---|---|
| West Australia (South East) | Fire point sample | May 2, 2022, 02:10 and 02:30 | NC_H08_20220502_0200_R21_FLDK.06001_06001.nc | Level 1 |
| | | | NC_H08_20220502_0210_R21_FLDK.06001_06001.nc | Level 1 |
| | | | NC_H08_20220502_0230_R21_FLDK.06001_06001.nc | Level 1 |
| | | | H08_20220502_0200_L3WLF010_FLDK.06001_06001.csv | Level 3 |
| | | | H08_20150727_0800_L2WLF010_FLDK.06001_06001.csv | Level 3 |
| | Forest land sample | March 12, 2022, 02:00 | NC_H08_20220312_0200_R21_FLDK.06001_06001.nc | Level 1 |
| West Australia (West South) | Fire point sample | February x, 2022, 00:00, 00:30, and 01:00 | NC_H08_20220207_0000_R21_FLDK.06001_06001.nc | Level 1 |
| | | | NC_H08_20220207_0030_R21_FLDK.06001_06001.nc | Level 1 |
| | | | NC_H08_20220207_0100_R21_FLDK.06001_06001.nc | Level 1 |
| | | | H08_20220207_0000_L3WLF010_FLDK.06001_06001.csv | Level 3 |
| | | | H08_20220207_0100_L3WLF010_FLDK.06001_06001.csv | Level 3 |
| | Forest land sample | December 17, 2021, 02:10 | NC_H08_20211217_0210_R21_FLDK.06001_06001.nc | Level 1 |
| West Australia (outside Perth) | Fire point sample | February 2, 2022, 03:00, 03:30 and 04:00 | NC_H08_20210202_0300_R21_FLDK.06001_06001.nc | Level 1 |
| | | | NC_H08_20210202_0330_R21_FLDK.06001_06001.nc | Level 1 |
| | | | NC_H08_20210202_0400_R21_FLDK.06001_06001.nc | Level 1 |
| | | | H08_20210202_0300_L3WLF010_FLDK.06001_06001.csv | Level 3 |
| | | | H08_20210202_0400_L3WLF010_FLDK.06001_06001.csv | Level 3 |
| | Forest land sample | December 7, 2020, 03:30 | NC_H08_20201207_0330_R21_FLDK.06001_06001.nc | Level 1 |
| New South Wales | Fire point sample | November 17, 2019, 00:30 and 01:00 | NC_H08_20191117_0030_R21_FLDK.06001_06001.nc | Level 1 |
| | | | NC_H08_20191117_0100_R21_FLDK.06001_06001.nc | Level 1 |
| | | | H08_20191117_0000_L3WLF010_FLDK.06001_06001.csv | Level 3 |
| | | | H08_20191117_0100_L3WLF010_FLDK.06001_06001.csv | Level 3 |
| | Forest land sample | September 11, 2019, 01:00 | NC_H08_20200330_0550_R21_FLDK.06001_06001.nc | Level 1 |

| No. * | Time of the Fire Event | Longitude | Latitude | Extent (ha) |
|---|---|---|---|---|
| 1 | October 6, 2018 at 05:00 UTC | 109°52′ | 27°17′ | 95.88 |
| 2 | September 28, 2019 at 07:20 UTC | 109°36′ | 28°15′ | 34.00 |
| 3 | September 28, 2019 at 05:30 UTC | 109°34′ | 28°12′ | 22.00 |
| 4 | September 28, 2019 at 03:10 UTC | 111°19′ | 29°19′ | 0.87 |
| 5 | March 20, 2020 at 08:00 UTC | 109°23′ | 28°21′ | 8.4 |
| 6 | March 21, 2020 at 08:11 UTC | 112°21′ | 26°19′ | 18.00 |
| 7 | November 8, 2020 at 09:12 UTC | 118°28′ | 27°13′ | 11.00 |
| 8 | January 14, 2021 at 09:10 UTC | 112°27′ | 26°43′ | 20.70 |
| 9 | January 14, 2021 at 07:15 UTC | 112°29′ | 27°8′ | 5.80 |
| 10 | January 14, 2021 at 07:50 UTC | 110°51′ | 26°51′ | 4.50 |
| 11 | January 19, 2021 at 04:30 UTC | 113°48′ | 25°49′ | 18.55 |
| 12 | January 19, 2021 at 06:30 UTC | 113°1′ | 25°41′ | 23.73 |
| 13 | January 19, 2021 at 09:48 UTC | 112°20′ | 26°12′ | 0.20 |
| 14 | January 19, 2021 at 10:23 UTC | 113°53′ | 28°55′ | 0.90 |
| 15 | February 20, 2021 at 09:15 UTC | 113°17′ | 25°38′ | 9.30 |

| Index | Maximum | Minimum | Average | Variance |
|---|---|---|---|---|
| | 1.8702 | -0.0242 | 1.5594 | 0.1540 |
| | 0.0741 | -0.0242 | 0.0517 | 0.0001 |
| | 0.8823 | 0.7165 | 0.8210 | 0.0016 |
| | 0.8094 | -0.9999 | -0.7381 | 0.1506 |
| | 0.9453 | 0.9182 | 0.9308 | 0.0001 |
| | 0.9937 | 0.9460 | 0.9822 | 0.0000 |
| | 0.2234 | 0.0362 | 0.1095 | 0.0017 |
| | 1.9926 | 0.1551 | 1.7108 | 0.0097 |

| No. | Identification | Imagery | Number of ground-truth fires (pcs) | Level |
|---|---|---|---|---|
| 1 | Moment 1 | NC_H08_20210220_0210_R21_FLDK.06001_06001.nc | 12 | Level 1 |
| 2 | Moment 2 | NC_H08_20210119_0150_R21_FLDK.06001_06001.nc | 10 | Level 1 |
| 3 | Moment 3 | NC_H08_20210114_0410_R21_FLDK.06001_06001.nc | 9 | Level 1 |
| 4 | Moment 4 | NC_H08_20201108_0200_R21_FLDK.06001_06001.nc | 5 | Level 1 |
| 5 | Moment 5 | NC_H08_20191031_0610_R21_FLDK.06001_06001.nc | 9 | Level 1 |
| 6 | Moment 6 | NC_H08_20191001_0430_R21_FLDK.06001_06001.nc | 14 | Level 1 |
| 7 | Moment 7 | NC_H08_20190928_0320_R21_FLDK.06001_06001.nc | 8 | Level 1 |
| 8 | Moment 8 | NC_H08_20181006_0410_R21_FLDK.06001_06001.nc | 7 | Level 1 |
| 9 | Moment 9 | NC_H08_20181005_0110_R21_FLDK.06001_06001.nc | 14 | Level 1 |

| No. | Identification | Number of ground-truth fires (pcs) | Number of fires monitored (pcs) | Number of false fires (pcs) | Forest fire identification accuracy | Forest fire identification missed rate | Forest fire identification overall evaluation |
|---|---|---|---|---|---|---|---|
| 1 | Moment 1 | 12 | 10 | 2 | 83.33% | 16.67% | 83.33% |
| 2 | Moment 2 | 10 | 9 | 1 | 90.00% | 10.00% | 90.00% |
| 3 | Moment 3 | 9 | 7 | 2 | 77.78% | 22.22% | 77.78% |
| 4 | Moment 4 | 5 | 4 | 1 | 80.00% | 20.00% | 80.00% |
| 5 | Moment 5 | 9 | 7 | 0 | 100.00% | 22.22% | 87.50% |
| 6 | Moment 6 | 14 | 11 | 1 | 91.67% | 21.43% | 84.62% |
| 7 | Moment 7 | 8 | 7 | 0 | 100.00% | 12.50% | 93.33% |
| 8 | Moment 8 | 7 | 6 | 1 | 85.71% | 14.29% | 85.71% |
| 9 | Moment 9 | 14 | 12 | 2 | 85.71% | 14.29% | 85.71% |
| Ave. | - | - | - | - | 88.25% | 17.07% | 85.33% |

| No. | Identification | Number of ground-truth fires (pcs) | Number of fires monitored (pcs) | Number of false fires (pcs) | Forest fire identification accuracy | Forest fire identification missed rate | Forest fire identification overall evaluation |
|---|---|---|---|---|---|---|---|
| 1 | Moment 1 | 12 | 11 | 3 | 78.57% | 8.33% | 84.62% |
| 2 | Moment 2 | 10 | 9 | 1 | 90.00% | 10.00% | 90.00% |
| 3 | Moment 3 | 9 | 7 | 2 | 77.78% | 22.22% | 77.78% |
| 4 | Moment 4 | 5 | 5 | 2 | 71.43% | 0.00% | 83.33% |
| 5 | Moment 5 | 9 | 8 | 0 | 100.00% | 11.11% | 94.12% |
| 6 | Moment 6 | 14 | 12 | 1 | 92.31% | 14.29% | 88.89% |
| 7 | Moment 7 | 8 | 8 | 2 | 80.00% | 0.00% | 88.89% |
| 8 | Moment 8 | 7 | 7 | 1 | 87.50% | 0.00% | 93.33% |
| 9 | Moment 9 | 14 | 13 | 2 | 86.67% | 7.14% | 89.66% |
| Ave. | - | - | - | - | 86.00% | 8.12% | 87.85% |
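The per-moment percentages in the two tables above are numerically consistent with the following definitions, which are inferred here from the tabulated counts rather than quoted from the text: accuracy = monitored / (monitored + false), missed rate = (ground truth − monitored) / ground truth, and overall = 2 × monitored / (2 × monitored + false + missed). A minimal sketch under that assumption, with a hypothetical function name:

```python
def fire_identification_scores(ground_truth, monitored, false_fires):
    """Return (accuracy, missed_rate, overall) as fractions.

    The formulas are inferred from the tabulated counts, not taken from
    the paper's text. Inputs are assumed to be positive counts.
    """
    missed = ground_truth - monitored
    accuracy = monitored / (monitored + false_fires)
    missed_rate = missed / ground_truth
    overall = 2 * monitored / (2 * monitored + false_fires + missed)
    return accuracy, missed_rate, overall

# Moment 1 of the first table: 12 ground-truth fires, 10 monitored, 2 false.
acc, mis, ove = fire_identification_scores(12, 10, 2)
print(f"{acc:.2%} {mis:.2%} {ove:.2%}")  # 83.33% 16.67% 83.33%
```

Under these definitions the overall column is the harmonic mean of the accuracy and the detection rate (one minus the missed rate), so it penalizes false alarms and missed fires together.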

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).