1. Introduction

As the guardian of the Sonoran Desert, the saguaro cactus (Carnegiea gigantea) plays a vital role as a source of sustenance and shelter for a diverse range of desert wildlife, while holding profound significance within the rich cultural heritage of the Tohono O’odham people [1]. The saguaro stands as the tallest presence within the local ecosystem, typically reaching heights of 14 m and as high as 23 m [2]. It provides shelter for numerous species seeking to evade predators or hunt for prey [3]. Several avian species either nest on its sturdy branches (e.g., Harris’s and red-tailed hawks [4]) or drill cavities inside its pulpy flesh (e.g., gilded flickers and Gila woodpeckers) [5]. Despite the presence of oxalates, certain creatures such as pack rats, jackrabbits, mule deer, and bighorn sheep consume the flesh directly from a standing saguaro, especially during periods of food and water scarcity [6]. Beyond its critical ecological role, the saguaro is intricately intertwined with the religions, ceremonies, cultures, and livelihoods of indigenous peoples such as the Papago (Tohono O’odham) and Pima nations, who have inhabited the region for millennia [5].

Consequently, the saguaro serves as a reliable bioindicator of ecological well-being in the Sonoran Desert, underscoring the need for accurate population monitoring of this keystone species in conservation management [1]. The United States Forestry Service has conducted extensive manual censuses in Saguaro National Park (SNP) every ten years since 1990 to study the saguaro cactus [7]. This endeavor requires a significant commitment of time, resources, and labor: in 2020, 500 volunteers dedicated 750 person-days and over 3,500 hours to map 24,000 saguaros at SNP [8].

An emerging trend is to use affordable unmanned aerial vehicles, commonly known as drones, to monitor agricultural and other natural resources as an alternative to extensive in situ surveying techniques [9]. This approach promises to significantly reduce the time and labor demands of conventional population assessments [10]. One study used aerial imagery collected by the Pima Association of Governments at a spatial resolution of 15.24 cm/pixel [10]. However, it relied on shadow features rather than the actual pixels of saguaros, likely because the saguaros themselves were not resolved well enough in the images. This method can therefore only detect mature saguaros that cast a sufficiently distinct shadow, and its accuracy depends on the prominence and orientation of the shadows as well as on effects from local vegetation, geology, and topographic relief [10]. The authors indicated that identifying saguaros within the obtained images was a challenging task, reporting an overall accuracy of 58% for their technique [10].

Recent advances in artificial intelligence (AI) techniques, particularly automatic object detection from images, offer unprecedented opportunities to address this problem [11,12]. Object detection primarily revolves around two key tasks: object localization or segmentation, which predicts the precise position of an object within an image, and object classification, which determines the category (i.e., class) to which an object belongs [13]. In a classification task, the input is typically an image containing a single object, and the output is a corresponding class label. Conversely, localization methods discern patterns from images that contain one or more objects, each annotated with a bounding box or precise boundary. By integrating these two tasks, it becomes feasible to both locate and classify each instance of an object within an image. Nevertheless, accurately determining the positions of bounding boxes, masks, or boundaries (i.e., precise outlines) for each object is a critical step in this process [14,15]. If too many candidate bounding boxes or boundaries are proposed, training becomes computationally burdensome, while an insufficient number of candidates may result in the identification of “unsatisfactory regions” [13]. Of note, even general object recognition algorithms, such as DALLE-3 [16], cannot achieve adequate performance in recognizing a large population of saguaros in a single picture. They perform particularly poorly on top-view images because saguaros are insufficiently represented in the training datasets. Thus, applying the latest AI techniques to high-resolution top-view images requires further development.

The R-CNN (Regions with Convolutional Neural Network Features) algorithm [17] introduced a novel approach to object detection by combining CNN features with region proposals, leading to substantial improvements in accuracy, object localization, and the overall object detection pipeline [10]. This approach avoided handling an excessive number of candidates by using a selective search algorithm to generate candidates judiciously. Nonetheless, it had certain limitations, such as slow inference due to the need to propose and process each region independently. To address these issues, the Fast R-CNN algorithm was introduced [18], which classifies object proposals efficiently using deep convolutional networks. This approach improves both training and testing speeds while also improving detection accuracy. The successor to Fast R-CNN, Faster R-CNN [19], incorporates a Region Proposal Network (RPN) that shares full-image convolutional features with the detection network. This integration allows for an almost cost-free generation of region proposals [17]. Subsequently, Mask R-CNN [20] was developed on top of Faster R-CNN by adding a parallel output branch that predicts an object mask for each Region of Interest (RoI), alongside the existing branch for bounding box recognition [18]. Mask R-CNN has been used in various applications such as medical image analysis, autonomous driving, and robotics [21,22]. The model is trained end-to-end using a multi-task loss function that combines classification, localization, and mask prediction losses. Mask R-CNN has several advantages over its predecessors, including a higher detection rate, improved instance segmentation performance, the ability to generalize to other tasks, and the potential to estimate the size of the saguaros.
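As a compact reminder of the multi-task loss described above, the original Mask R-CNN formulation sums three per-RoI terms with equal weight. The symbols below follow that formulation and are not otherwise defined in this paper:

```latex
L = L_{cls} + L_{box} + L_{mask}
```

Here, L_cls is the classification loss, L_box is the bounding-box regression loss, and L_mask is the average binary cross-entropy computed over the predicted mask of the ground-truth class.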

In this study, we trained a Mask R-CNN model using the Detectron2 [23] Python package to reliably detect and count saguaro cacti in drone images. The training data were captured by a drone flying around 100 m above the ground, yielding a much higher resolution than previous aerial imagery [10].

2. Materials and Methods

2.1. Data Collection and Preprocessing

Drone images were collected with a DJI Phantom 4 Pro quadcopter between 3 and 4 pm on March 3, 2022, near Sun Ray Park in Phoenix, Arizona (approximately 33.3188° N, 111.9980° W). The aircraft operated at three low altitudes ranging from 381 m to 421 m above mean sea level (AMSL): 486 m (west of the park), 519 m (mostly east of the park), and 507 m (covering the entire park). These flights were designed to test reproducibility across a range of altitudes and the adaptability of the algorithm. The first two flights adjusted for the altitude difference between the peak and the ground, while the last flight used an unadjusted altitude as an alternative data collection method. The aircraft traversed horizontally at its nominal flight altitude with the camera pointed directly below the aircraft (i.e., nadir), or 90 degrees from the direction of travel, to capture imagery.
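To give a sense of the image resolution such flights can provide, the sketch below estimates the nadir ground sampling distance (GSD) from the height above ground. The camera parameters (13.2 mm sensor width, 8.8 mm focal length, 5472-pixel image width) are assumed nominal values for the Phantom 4 Pro camera and are not stated in this paper; flat terrain is also assumed, which does not hold on the slopes of the park.

```python
def ground_sampling_distance_cm(height_m,
                                sensor_width_mm=13.2,   # assumed nominal value
                                focal_length_mm=8.8,    # assumed nominal value
                                image_width_px=5472):   # assumed nominal value
    """Approximate nadir GSD in cm/pixel for a flat scene.

    The ground footprint width is height * sensor_width / focal_length;
    dividing it by the number of pixels across gives metres per pixel.
    """
    gsd_m = (sensor_width_mm * height_m) / (focal_length_mm * image_width_px)
    return gsd_m * 100.0


# Heights above ground mentioned in the text: about 100 m, and 65 to 136 m.
for height in (65, 100, 136):
    gsd = ground_sampling_distance_cm(height)
    print(f"{height} m above ground -> {gsd:.2f} cm/pixel")
```

Under these assumptions, every height in that range gives a GSD of a few centimetres per pixel, well below the 15.24 cm/pixel of the earlier aerial imagery.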

The images were uploaded to and annotated on the Datatorch [24] and Roboflow [25] platforms. Each image was assigned to one team member for manual annotation and to another for verification. The cacti were annotated into three classes: “saguaro” (mature saguaro with arms), “spear” (young saguaro without arms), and “barrel” (which may produce buds that bloom at the top in April) [26]. The “spear” class was created so that training could focus on cacti with arms, since young saguaros and barrel cacti look very similar and both make up a small proportion of the full dataset. We focus on modeling the distinct morphology of mature saguaros with arms and recommend that discrimination between the other two classes be the focus of future studies.

To facilitate model training, various image preprocessing steps were explored, including auto-orientation, horizontal and vertical flip augmentations, resizing, tiling, and auto-contrast filtering.
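As an illustration of two of the steps listed above, the following minimal sketch tiles an image array and applies horizontal and vertical flips using NumPy. It is not the pipeline used in this study (the preprocessing was explored on the annotation platforms); the tile size, the zero padding, and the function names are assumptions made for the example.

```python
import numpy as np


def tile_image(image, tile_size):
    """Split an H x W x C array into non-overlapping square tiles.

    The bottom and right edges are zero-padded so that every tile has
    the same shape (an assumption of this sketch).
    """
    height, width = image.shape[:2]
    pad_h = (-height) % tile_size
    pad_w = (-width) % tile_size
    padded = np.pad(image, ((0, pad_h), (0, pad_w), (0, 0)), mode="constant")
    tiles = []
    for top in range(0, padded.shape[0], tile_size):
        for left in range(0, padded.shape[1], tile_size):
            tiles.append(padded[top:top + tile_size, left:left + tile_size])
    return tiles


def flip_augment(tile):
    """Return the tile plus its horizontal and vertical mirror images."""
    return [tile, np.fliplr(tile), np.flipud(tile)]


# Synthetic stand-in for a drone image (300 x 500 pixels, 3 channels).
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(300, 500, 3), dtype=np.uint8)

tiles = tile_image(image, tile_size=256)
augmented = [aug for tile in tiles for aug in flip_augment(tile)]
print(len(tiles), len(augmented))
```

In a real pipeline, the annotation polygons and bounding boxes must be cropped and mirrored together with the pixels, which this sketch omits.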

After annotation and preprocessing, the resulting dataset was exported into Common Objects in Context (COCO) JSON format [27] for modeling.
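For readers unfamiliar with the COCO layout, the sketch below builds a minimal instance-segmentation record with the three classes used here and counts annotations per class. The file name, image size, category ids, and coordinates are made up for illustration and do not come from the study's dataset.

```python
import json

# Minimal COCO-style structure: images, categories, and annotations.
coco = {
    "images": [
        {"id": 1, "file_name": "tile_0001.jpg", "width": 640, "height": 640}
    ],
    "categories": [
        {"id": 1, "name": "saguaro"},
        {"id": 2, "name": "spear"},
        {"id": 3, "name": "barrel"},
    ],
    "annotations": [
        {
            "id": 1,
            "image_id": 1,
            "category_id": 1,
            "bbox": [100.0, 120.0, 40.0, 60.0],  # [x, y, width, height]
            # Polygon as a flat list of x, y vertex coordinates.
            "segmentation": [[100.0, 120.0, 140.0, 120.0,
                              140.0, 180.0, 100.0, 180.0]],
            "area": 2400.0,
            "iscrowd": 0,
        }
    ],
}


def count_by_class(dataset):
    """Count annotations per category name in a COCO-style dictionary."""
    names = {cat["id"]: cat["name"] for cat in dataset["categories"]}
    counts = {name: 0 for name in names.values()}
    for ann in dataset["annotations"]:
        counts[names[ann["category_id"]]] += 1
    return counts


# Round-trip through JSON text, as an exported file would be read back.
text = json.dumps(coco, indent=2)
print(count_by_class(json.loads(text)))
```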

2.2. Model Training and Evaluation

To detect saguaros in the images, we used the Detectron2 package [23], a state-of-the-art object detection package that does not require a very large sample size. Detectron2 is built on the PyTorch deep learning library [25] and implements various R-CNN models and evaluation tools, including Mask R-CNN [20].
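A minimal sketch of how a Mask R-CNN configuration can be assembled in Detectron2 is shown below. The backbone (`mask_rcnn_R_50_FPN_3x`), the dataset names, and every numeric value in `HYPERPARAMS` are assumptions for illustration; the settings actually used in this study are not reported here. Detectron2 is imported inside the function so that the snippet can be read and loaded without the package installed.

```python
# Hypothetical settings for illustration only; not the values tuned in this study.
HYPERPARAMS = {
    "base_config": "COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml",
    "num_classes": 3,          # saguaro, spear, barrel
    "base_lr": 0.00025,        # learning rate (assumed)
    "max_iter": 3000,          # maximum training iterations (assumed)
    "ims_per_batch": 2,        # images per batch (assumed)
    "score_thresh_test": 0.5,  # minimum confidence kept at inference (assumed)
}


def build_cfg(train_name, val_name, hp=HYPERPARAMS):
    """Return a Detectron2 config for fine-tuning Mask R-CNN.

    train_name and val_name are names of datasets that have already been
    registered with Detectron2 (for example, from COCO JSON exports).
    """
    # Lazy import: requires Detectron2 only when the function is called.
    from detectron2 import model_zoo
    from detectron2.config import get_cfg

    cfg = get_cfg()
    cfg.merge_from_file(model_zoo.get_config_file(hp["base_config"]))
    # Start from COCO-pretrained weights of the same architecture.
    cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url(hp["base_config"])
    cfg.DATASETS.TRAIN = (train_name,)
    cfg.DATASETS.TEST = (val_name,)
    cfg.MODEL.ROI_HEADS.NUM_CLASSES = hp["num_classes"]
    cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = hp["score_thresh_test"]
    cfg.SOLVER.BASE_LR = hp["base_lr"]
    cfg.SOLVER.MAX_ITER = hp["max_iter"]
    cfg.SOLVER.IMS_PER_BATCH = hp["ims_per_batch"]
    return cfg
```

With Detectron2 installed, COCO exports can be registered through `register_coco_instances` from `detectron2.data.datasets`, and the resulting config passed to `DefaultTrainer` from `detectron2.engine`.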

Mask R-CNN was chosen as the base model for object detection because of its ability to model at the pixel level, its speed and performance, and its compatibility with various libraries and platforms. Additionally, its robust documentation made it preferable to alternatives such as YOLOv5 [28].

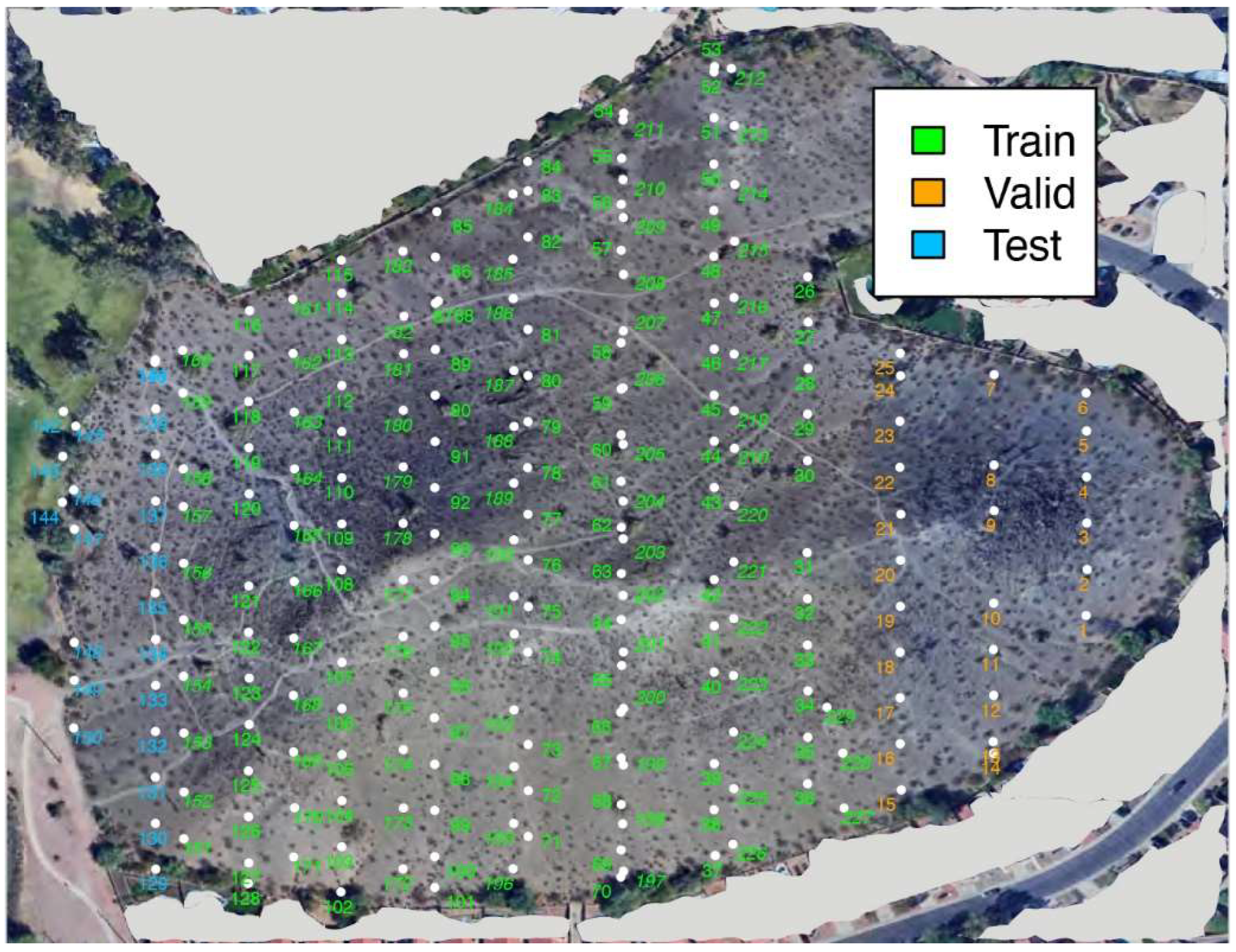

The model was trained, validated, and tested using the processed dataset from the 486 m and 519 m AMSL flights, with approximate sample ratios of 8:1:1, respectively. The training, validation, and independent test sets were separated by geographical location to minimize the overlap of saguaros between them. Default Mask R-CNN training parameters were used initially. Several parameters were then tuned, including the learning rate, the maximum number of iterations, and the Intersection over Union (IoU) threshold [17,29]; default values were kept for all other parameters. Parameter combinations were compared by the mean average precision (mAP) [30] they achieved on the validation set, and the combination with the highest mAP was selected.
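The text does not specify how the geographic separation was implemented. One possible way, sketched below under stated assumptions, is to order images along a spatial axis and cut the ordering into contiguous blocks in an 8:1:1 ratio, so that neighbouring images tend to fall into the same subset. The per-image longitude, the file names, and the function name are hypothetical.

```python
def split_by_longitude(images, ratios=(0.8, 0.1, 0.1)):
    """Split (file_name, longitude) pairs into contiguous west-to-east blocks.

    Returns three lists (train, validation, test) whose sizes follow
    `ratios`; any rounding remainder goes to the test block.
    """
    ordered = sorted(images, key=lambda item: item[1])
    n_train = round(len(ordered) * ratios[0])
    n_val = round(len(ordered) * ratios[1])
    train = ordered[:n_train]
    val = ordered[n_train:n_train + n_val]
    test = ordered[n_train + n_val:]
    return train, val, test


# Twenty synthetic image centres spaced evenly along a west-to-east line.
images = [(f"img_{i:03d}.jpg", -111.9990 + 0.0001 * i) for i in range(20)]
train, val, test = split_by_longitude(images)
print(len(train), len(val), len(test))
```

A contiguous split of this kind reduces, but does not eliminate, the chance that the same saguaro appears in two subsets, since adjacent images at a block boundary can still overlap.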

The final model performance was evaluated on the independent test datasets, which were not used during training or validation. The mAP, sensitivity (recall), precision, and F1 score, which combines sensitivity and precision, were selected as metrics of accuracy for model recognition of saguaros in the images. Following convention, sensitivity was defined as the percentage of correctly predicted saguaros among all annotated saguaros in the test set, and precision as the percentage of correctly predicted saguaros among all predicted saguaros. During the evaluation of the testing dataset, we treated the same saguaro appearing in different images as separate samples or observations, as the images were likely collected from different altitudes, aircraft headings, or camera geometries.
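The definitions above can be written out directly from counts of true positives, false positives, and false negatives. The sketch below uses the standard F1 definition (the harmonic mean of precision and recall); the counts in the example are invented for illustration and are not results from this study.

```python
def detection_metrics(true_positives, false_positives, false_negatives):
    """Return (precision, recall, f1) from detection counts.

    precision = TP / (TP + FP): correct predictions among all predictions.
    recall    = TP / (TP + FN): correct predictions among all annotations.
    f1        = harmonic mean of precision and recall.
    Each ratio falls back to 0.0 when its denominator is zero.
    """
    predicted = true_positives + false_positives
    annotated = true_positives + false_negatives
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / annotated if annotated else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Hypothetical counts: 90 correct detections, 10 spurious, 10 missed.
precision, recall, f1 = detection_metrics(90, 10, 10)
print(f"precision={precision:.3f} recall={recall:.3f} f1={f1:.3f}")
```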

Our code was extended from a preexisting Google Colab notebook [31] that works directly with the Roboflow platform and was created specifically for training models in the Detectron2 ecosystem with customized datasets. It was revised to run within Jupyter Notebooks on a GPU server. An Nvidia RTX 4090 GPU was used for training and testing.

To recap, our methodology encompassed data collection, pre-processing, model selection, choice of hardware and training environment, selection of an object detection library, code execution, data export, model training, evaluation, and post-training analysis to achieve the desired results.

4. Discussion

Our study aimed to enhance saguaro (Carnegiea gigantea) population monitoring using an advanced object detection model, Mask R-CNN, applied to drone images. The saguaro, with its ecological significance and cultural importance, serves as a bioindicator in the Sonoran Desert, emphasizing the need for accurate population assessments. The traditional method of manual censuses, undertaken by the United States Forestry Service, is resource-intensive. Compared with a previous study that developed an automated shadow detection method for mapping mature saguaros and correctly identified 58% of them [10], our approach leverages more affordable automated object detection technologies and higher-resolution nadir images, representing a promising avenue for optimizing population monitoring. Our model achieved an average F1 score of 90.3% across all independent test images, which were taken from 65 to 136 m above ground level, despite being trained on a limited number of training samples.

The integration of Mask R-CNN into our methodology yielded compelling results with a limited set of images. The model demonstrated strong recall, precision, and F1 scores, achieving an average F1 score of 90.3% across independent test images. This robust performance indicates the model’s proficiency in accurately identifying saguaro instances, thereby streamlining the monitoring process. Importantly, our model showed adaptability in scenarios without saguaros. It yielded an average false positive rate of 16.4%, indicating room for future improvement. Such versatility is critical for application in diverse ecological contexts and contributes to the model’s reliability as a monitoring tool.

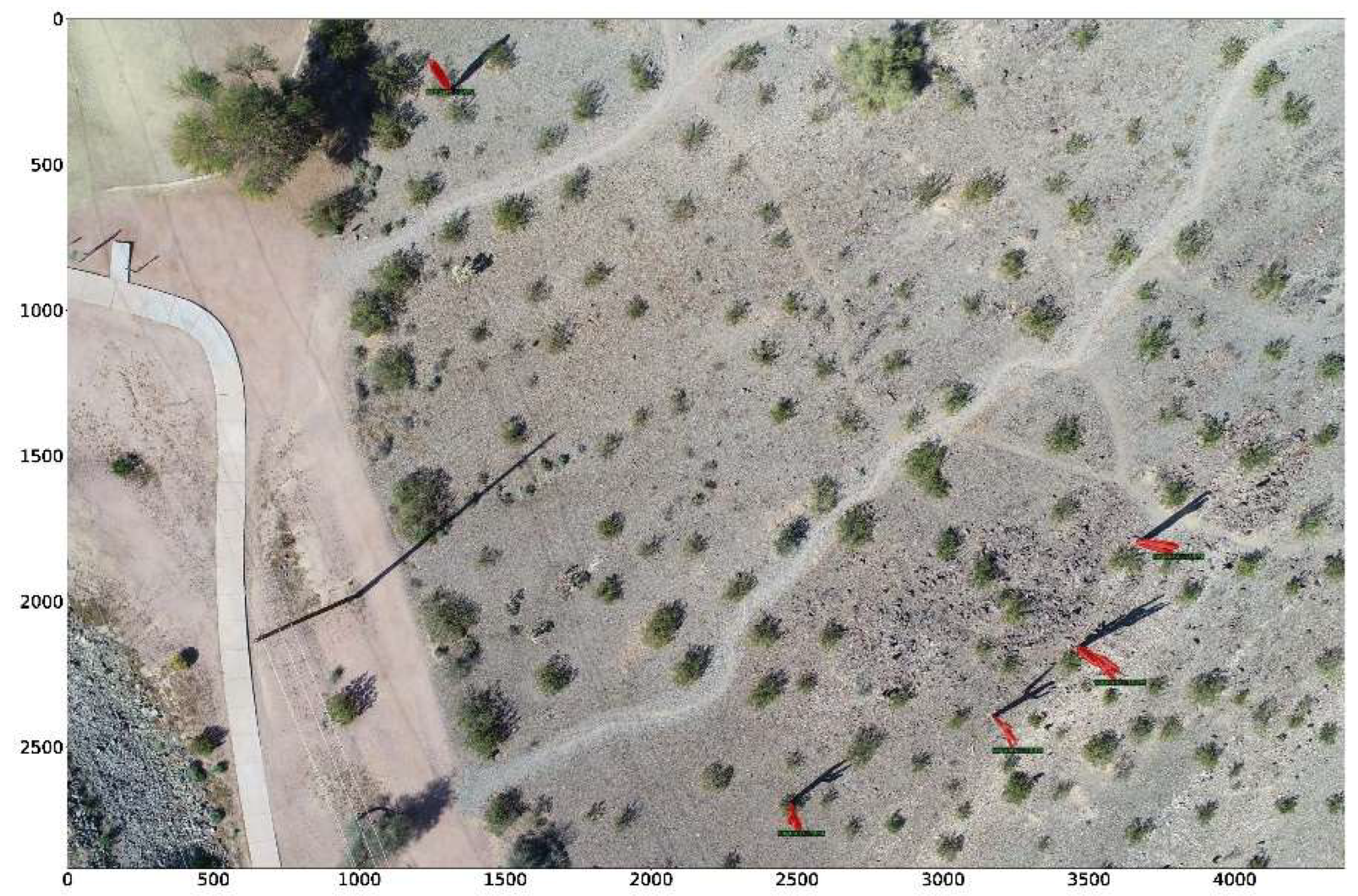

However, our study also highlighted challenges, particularly in Figure 3, where the model incorrectly identified spears and barrels as saguaros. We also experienced challenges in handling partial saguaros located at the edge of an image. This underscores the importance of ongoing refinement that accounts for intricacies in the landscape, such as saguaros spread on the mountains over 100 m, and addresses complex scenarios where false positives can occur. Notably, we tested with a small number of samples and trained with a much larger set. Performance is expected to increase with additional training samples. Additionally, our images were taken from a nadir view using drones, making pattern recognition more challenging than with front-view images or those with shadows. In such cases, inexperienced annotators often struggle to distinguish spear saguaros from barrel cacti when image resolution is insufficient. Future studies should focus on capturing more data to improve the model’s ability to discern subtle differences in areas of dense vegetation, ensuring greater accuracy in challenging environments. With larger datasets, other AI models, such as transformer-based ones, will become applicable.

Author Contributions

Conceptualization, Don Swann, Kamel Didan and Haiquan Li; Data curation, Wenting Luo, Breeze Scott, Jacky Cadogan and Truman Combs; Formal analysis, Wenting Luo and Haiquan Li; Funding acquisition, Haiquan Li; Investigation, Kamel Didan and Haiquan Li; Methodology, Breeze Scott, Jacky Cadogan and Haiquan Li; Project administration, Haiquan Li; Resources, Truman Combs and Kamel Didan; Software, Wenting Luo, Breeze Scott and Jacky Cadogan; Supervision, Kamel Didan and Haiquan Li; Validation, Haiquan Li; Visualization, Wenting Luo; Writing – original draft, Wenting Luo, Breeze Scott and Jacky Cadogan; Writing – review & editing, Truman Combs and Haiquan Li.