1. Introduction

Aviation is considered one of the safest forms of transport, with 2024 regarded as one of the safest years on record [1], owing primarily to the industry's proactive and continuous focus on safety. Aviation safety work increasingly gravitates towards data-driven solutions. Aviation safety data has been used in both reactive and proactive [2,3] approaches to detect risk factors with different kinds of models. Broadly, a reactive approach to safety examines previous accidents and their causal factors to learn from historical data, whereas a proactive approach focuses on building models that predict the probability of risky events, which can then be used to recommend actions.

Natural Language Processing (NLP) is a fast-growing area of Artificial Intelligence (AI) in which computer models are built to analyze text data. In aviation, NLP is currently used in three broad areas: the analysis of safety reports, aviation maintenance, and air traffic control [4]. For reactive safety analysis, the National Transportation Safety Board (NTSB) Report Database¹ is invaluable for learning lessons from past incidents and accidents. It is an openly available database that stores accident data, including text narratives, in both structured and unstructured form. Because manual analysis of these reports is complicated and time-consuming, NLP can serve as a powerful enabler for automatically extracting safety data from accident reports for use in data-driven reactive safety analysis. Models built on these analyses can then be used to improve aviation safety as a whole.

Yang and Huang [5] and Liu [6] conducted detailed reviews of NLP applications in aviation safety and air transportation. Over the last decade, NLP techniques have visibly evolved from word-counting methods such as bag-of-words and term frequency-inverse document frequency (TF-IDF), through long short-term memory (LSTM) networks, to modern context-capturing transformer-based architectures. For instance, Perboli et al. [7] used Word2vec and Doc2vec language representations to measure semantic similarity when identifying human factor causes in aviation. Miyamoto et al. [8] used a bag-of-words approach with TF-IDF to identify operational safety causes behind flight delays in the ASRS database. Zhang et al. [9] used word embeddings and LSTM neural networks for the prognosis of adverse events using the NTSB database.

State-of-the-art NLP models use the transformer architecture with the attention mechanism, which better captures context from textual data [10]. Google AI’s Bidirectional Encoder Representations from Transformers (BERT) is one such transformer-based model; it revolutionized NLP through its innovative pre-training of deep bidirectional representations from unlabeled text [11]. Transformer-based models are increasingly being applied in the aviation domain. Kierszbaum and Lapasset [12] applied the BERT base model to a small subset of Aviation Safety Reporting System (ASRS)² narratives to answer the question “When did the accident happen?”. In another work, Kierszbaum et al. [13] pre-trained a Robustly optimized BERT (RoBERTa) model on a limited ASRS dataset to evaluate its performance on natural language understanding (NLU). More recently, Wang et al. [14] developed AviationGPT on the open-source LLaMA-2 and Mistral architectures, which showed stronger performance on aviation domain-specific NLP tasks.

The present work introduces Aviation-BERT-NER, which builds on the aviation domain-specific BERT model previously developed by Chandra et al. [15]. This tailored variant, Aviation-BERT, incorporated domain-specific data from aviation safety narratives, including those from the NTSB and ASRS, which significantly enhanced its performance in aviation text-mining tasks. Aviation-BERT not only captures the unique lexicon and complexities of aviation texts but also surpasses traditional BERT models in extracting valuable insights from aviation safety reports [15]. For instance, Aviation-BERT-Classifiers show improved performance over the generic English-language version of BERT in identifying occurrence categories for NTSB and ASRS text reports [16]. Aviation-BERT was also shown to outperform generic English language models more than four times its size in expanding the Aviation-Knowledge Graph (Aviation-KG) [17]. In a parallel effort by Jing et al. [17], the Aviation-KG question answering system, first proposed by Agarwal et al. [18], is being expanded to mine and integrate data from structured knowledge graphs, enabling the system to answer complex questions accurately. Developing these knowledge graphs requires a thorough process of mapping subjects, objects, and their interrelations within texts to form structured datasets. However, this process presents its own challenges, particularly in accurately extracting entities and their relationships, which are crucial to the model’s effectiveness.

This is where Named Entity Recognition (NER) becomes invaluable, particularly in niche domains such as aviation. NER is essential for Knowledge Graph Question Answering (KGQA) systems because it precisely identifies and classifies aviation-related named entities, such as airport names, aircraft models, and aviation terms. In doing so, NER can lay a solid foundation for accurately answering fact-based queries in the aviation field [19]. The rest of this paper is organized as follows: Section 2 reviews recent domain-specific NER models, data preparation, and related work, and defines the problem; Section 3 details the data preparation; Section 4 describes the fine-tuning of the Aviation-BERT-NER model; Section 5 discusses the results; and Section 6 concludes the present work.

3. Data Preparation

3.1. Named Entity Data Preparation

Aviation safety reports feature a wide range of named entities and key terms vital for thorough analysis. Based on narratives from the NTSB database, 17 distinct entity types were identified, and the corresponding data were compiled from various sources, as shown in Table 1.

The vocabulary for each named entity derived from external sources included a diverse array of forms, such as full names, codes, and abbreviations, reflecting the complexity found in actual aviation safety reports. The counts of unique entities excluded overlapping terms, such as identical abbreviations and codes. This variability also applied to DATE, TIME, and WAY lists, which included the assorted formats seen in genuine reports. Similarly, quantity-based entities (SPEED, ALTITUDE, DISTANCE, TEMPERATURE, PRESSURE, DURATION, and WEIGHT) were represented with realistic ranges and units. Consistency was maintained across entities like AIRPORT, CITY, STATE, and COUNTRY to ensure alignment in the subsequent step of template-based NER dataset generation.
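As an illustration of how quantity-based entity lists with realistic ranges and varied unit spellings might be assembled, the following Python sketch samples values and units at random. The entity names come from the text above, but the value ranges, unit spellings, and the helper names `sample_quantity` and `build_entity_list` are hypothetical; the source does not specify them.

```python
import random

# Hypothetical ranges and unit spellings for three of the quantity-based
# entities; the actual ranges and formats used for the dataset are not given.
QUANTITY_SPECS = {
    "SPEED": (range(5, 600), ["knots", "kts", "mph"]),
    "ALTITUDE": (range(100, 41000, 100), ["feet", "ft", "ft msl"]),
    "TEMPERATURE": (range(-40, 45), ["°C", "degrees C"]),
}


def sample_quantity(entity_type: str, rng: random.Random) -> str:
    """Return one random quantity string, such as '120 kts', for an entity type."""
    values, units = QUANTITY_SPECS[entity_type]
    return f"{rng.choice(values)} {rng.choice(units)}"


def build_entity_list(entity_type: str, n_samples: int, seed: int = 0) -> list[str]:
    """Draw n_samples strings and keep only the unique ones, in sorted order."""
    rng = random.Random(seed)
    return sorted({sample_quantity(entity_type, rng) for _ in range(n_samples)})


print(build_entity_list("SPEED", 5))
```

Because the generator is seeded, the same lists can be regenerated exactly whenever the template-filling step is rerun.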

3.2. Template Preparation

To generate templates that closely resemble aviation safety reports, NTSB narratives were manually examined; their exact structure was occasionally adopted, and otherwise minor alterations in phrasing and sequence were made to introduce diversity. Named entities within these templates were then replaced with placeholders marked by {}. These placeholders were designed to be randomly filled with named entities from the lists generated in Section 3.1. A few examples of such templates are provided below:

- During a routine surveillance flight {DATE} at {TIME}, a {AIRCRAFT_NAME} ({AIRCRAFT_ICAO}) operated by {AIRLINE_NAME} ({AIRLINE_IATA}) experienced technical difficulties shortly after takeoff from {AIRPORT_NAME} ({AIRPORT_SYMBOL}) in {CITY}, {STATE_NAME}, {COUNTRY_NAME}.
- The pilot of a {MANUFACTURER} {AIRCRAFT_ICAO} noted that the wind was about {SPEED} and favored a {DISTANCE} turf runway.
- An unexpected {WEIGHT} shift prompted {AIRCRAFT_NAME}’s crew to reroute via {TAXIWAY} for an emergency inspection.
- {AIRLINE_NAME} encountered unexpected {TEMPERATURE} fluctuations while cruising at {SPEED}, leading to an unscheduled maintenance check upon landing at {AIRPORT_NAME}.
- Visibility challenges due to a low {TEMPERATURE} at {AIRPORT_NAME} led the {AIRCRAFT_NAME} to modify its course to an alternate {TAXIWAY}, under guidance from air traffic control.

Additionally, ChatGPT 4 was used to create varied sets of modified templates, with half of the final template collection generated by this external model [38]. These templates were generated iteratively using few-shot prompting. The initial prompt included placeholder descriptions for all the entity categories listed in Table 1. The next prompt provided three genuine NTSB narratives to illustrate the structure of aviation safety reports, along with three example templates containing placeholders. Finally, ChatGPT was prompted to create templates with the following instructions:

"Using the information provided for entity placeholders, aviation safety reports, and example templates, generate 10 new templates based on aviation safety data, covering a range of possible incidents and scenarios. Do not add placeholders beyond the list already provided. Templates must be single sentences."

ChatGPT was then prompted to generate further templates in batches of 10, ensuring no repetition of previously generated templates. Each template was reviewed for suitability and added to the collection. To adjust the frequency of specific placeholders, the prompt was modified accordingly. An example of such a prompt is given below:

"Generate 10 more templates. Increase the occurrence of placeholders {DURATION}, {WEIGHT}, and {TEMPERATURE}. Do not repeat any previously generated templates."

This process ultimately yielded 423 distinct templates (manual and ChatGPT-generated combined), each consisting of a single sentence.
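The review of each generated template described above was manual. As a hypothetical aid, a few of the constraints stated in the prompts (only known placeholders, single sentences, no repeats) can be checked automatically before human review. In this Python sketch, the `ALLOWED` set is assembled from the placeholder names that appear in the example templates and prompt above, plus ALTITUDE and PRESSURE from Section 3.1; the complete placeholder list behind Table 1 is an assumption, and `check_template` is an illustrative name.

```python
import re

# Placeholder names seen in this section's example templates and prompt, plus
# ALTITUDE and PRESSURE from Section 3.1; the full Table 1 list is assumed.
ALLOWED = {
    "DATE", "TIME", "AIRCRAFT_NAME", "AIRCRAFT_ICAO", "AIRLINE_NAME",
    "AIRLINE_IATA", "AIRPORT_NAME", "AIRPORT_SYMBOL", "CITY", "STATE_NAME",
    "COUNTRY_NAME", "MANUFACTURER", "TAXIWAY", "SPEED", "ALTITUDE", "DISTANCE",
    "TEMPERATURE", "PRESSURE", "DURATION", "WEIGHT",
}
PLACEHOLDER = re.compile(r"\{([A-Z_]+)\}")


def check_template(template: str, existing: set[str]) -> list[str]:
    """Return the problems found in a candidate template (empty list if none)."""
    problems = []
    names = set(PLACEHOLDER.findall(template))
    if not names:
        problems.append("no placeholders")
    unknown = names - ALLOWED
    if unknown:
        problems.append(f"unknown placeholders: {sorted(unknown)}")
    # Crude single-sentence test: sentence-ending punctuation followed by more text.
    if re.search(r"[.!?]\s+\S", template.strip()):
        problems.append("more than one sentence")
    if template.strip() in existing:
        problems.append("duplicate of an existing template")
    return problems
```

A template that passes these checks would still go through the human suitability review; the checks only filter out obvious violations of the prompt instructions.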

3.3. Labeled Synthetic Dataset Generation for Training

The developed templates were used to construct meaningful sentences by randomly replacing placeholders with named entities. This process was repeated 166 times for each template, resulting in 70,218 sentences (423 templates × 166 repetitions). The precise number of repetitions was determined based on the study outlined in Appendix A, which highlights the flexibility of the template-based approach in generating synthetic training datasets of varying sizes. Entity annotation and BIO tagging were performed simultaneously, with "O" marking words outside the entities of interest, "B" marking the beginning of an entity phrase, and "I" marking the continuation inside an entity phrase.

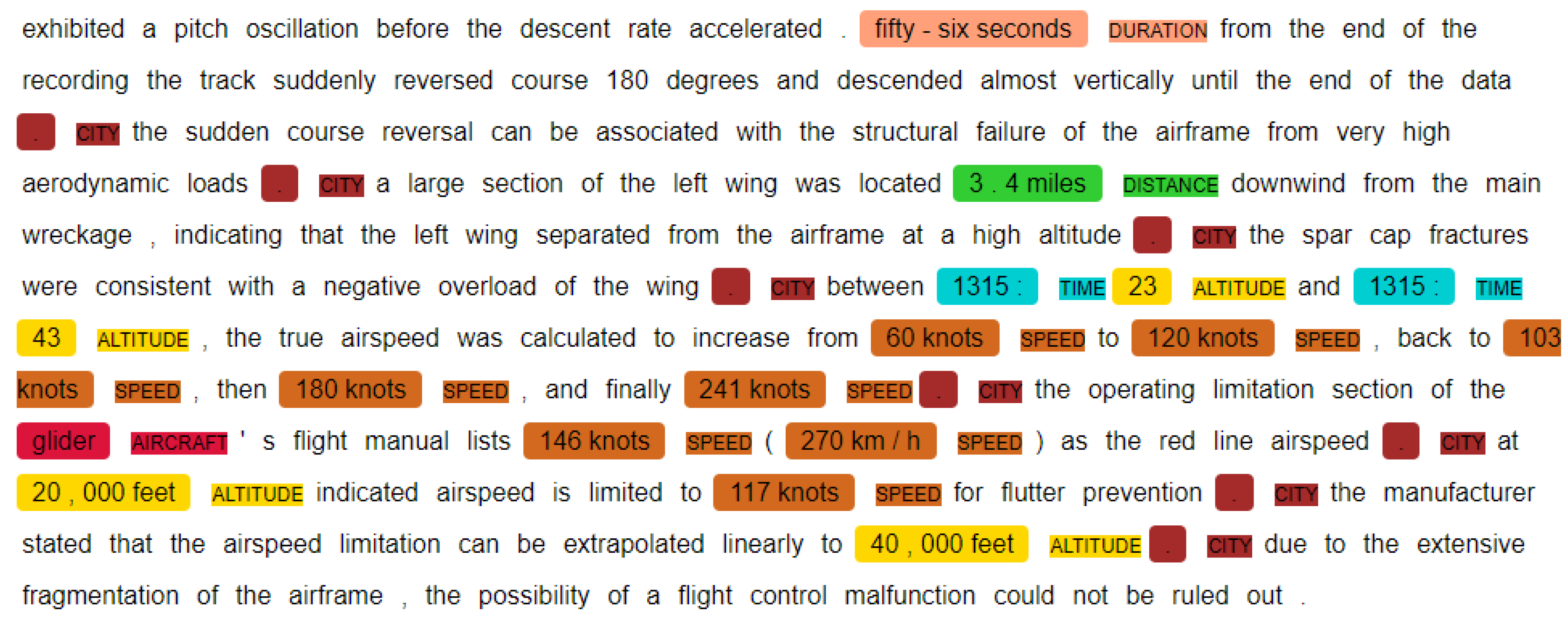

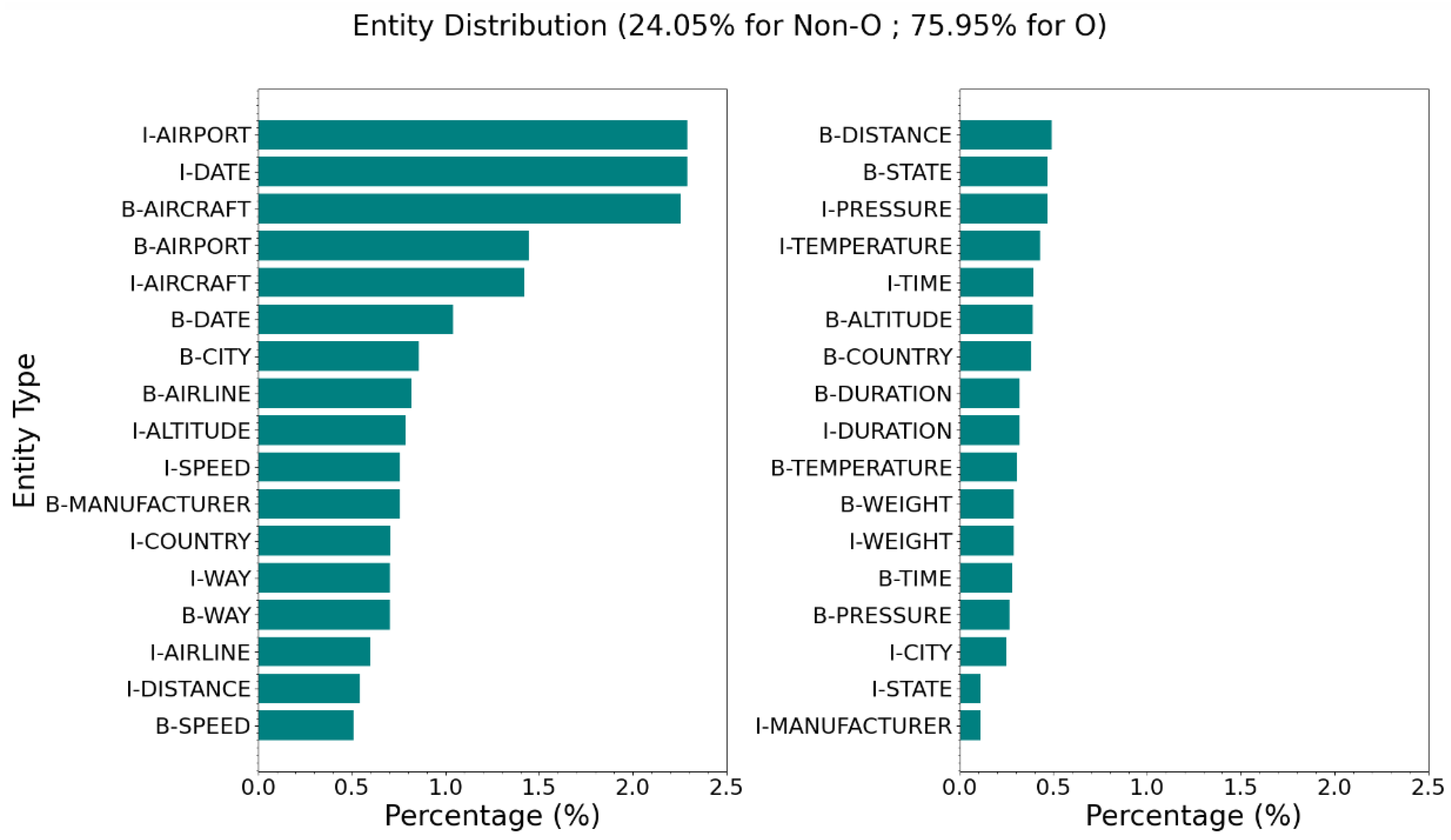

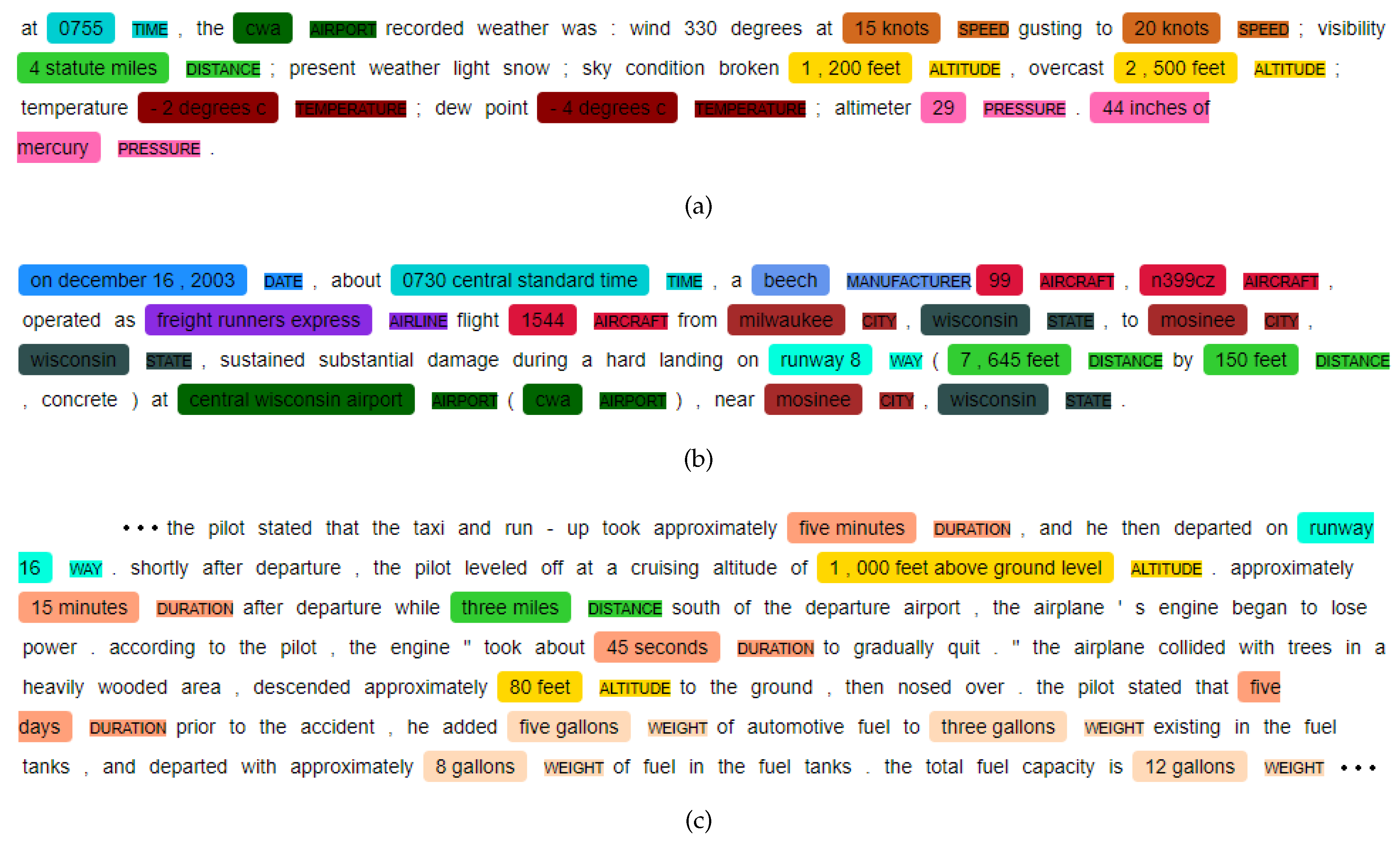

Table 2 provides an example of placeholders replaced with random named entities, along with their corresponding tags. Additionally, Figure 1 shows the distribution of entities across the training dataset, with a total of 2,125,157 entities (including "O").

3.4. Labeled Dataset Generation for Testing

To test performance on real-world data, genuine narratives from the NTSB database were used. As an initial selection step, all narratives in the database were tokenized with the Aviation-BERT tokenizer, and only narratives with a sequence length shorter than 512 tokens were selected. This prevents indexing errors during inference, since Aviation-BERT is based on the BERT-Base-Uncased model, whose maximum allowable sequence length is 512 tokens [11,15]. In total, 108,514 narratives were selected through this process.
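The length filter can be sketched as below. `count_tokens` is a stand-in for the Aviation-BERT tokenizer: with the Hugging Face `transformers` library and a tokenizer loaded through `AutoTokenizer.from_pretrained`, it could be `lambda text: len(tokenizer(text)["input_ids"])`, a count that includes the `[CLS]` and `[SEP]` special tokens. Whether the original selection counted those special tokens is not stated, so the exact boundary is an assumption, as is the name `select_narratives`.

```python
from typing import Callable, Iterable

BERT_MAX_LEN = 512  # maximum sequence length of BERT-Base models


def select_narratives(narratives: Iterable[str],
                      count_tokens: Callable[[str], int],
                      max_len: int = BERT_MAX_LEN) -> list[str]:
    """Keep narratives whose token count is strictly below max_len."""
    return [text for text in narratives if count_tokens(text) < max_len]


# Usage with a whitespace word count standing in for a subword tokenizer.
kept = select_narratives(["engine failure on climb", "word " * 600],
                         lambda text: len(text.split()))
print(len(kept))
```

Passing the counter as a function keeps the filter independent of any particular tokenizer, so the same code works for whitespace counts in testing and subword counts in practice.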

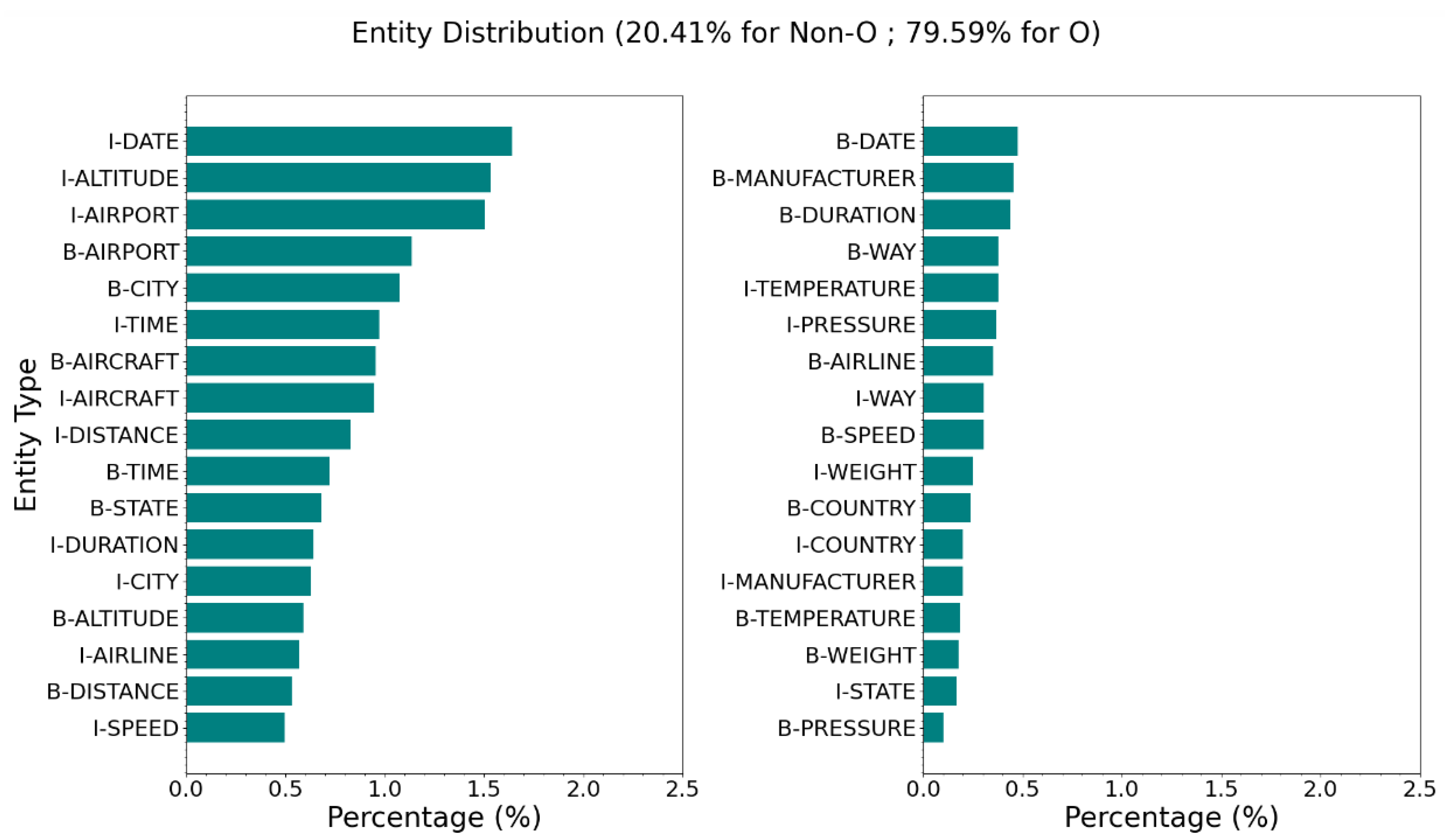

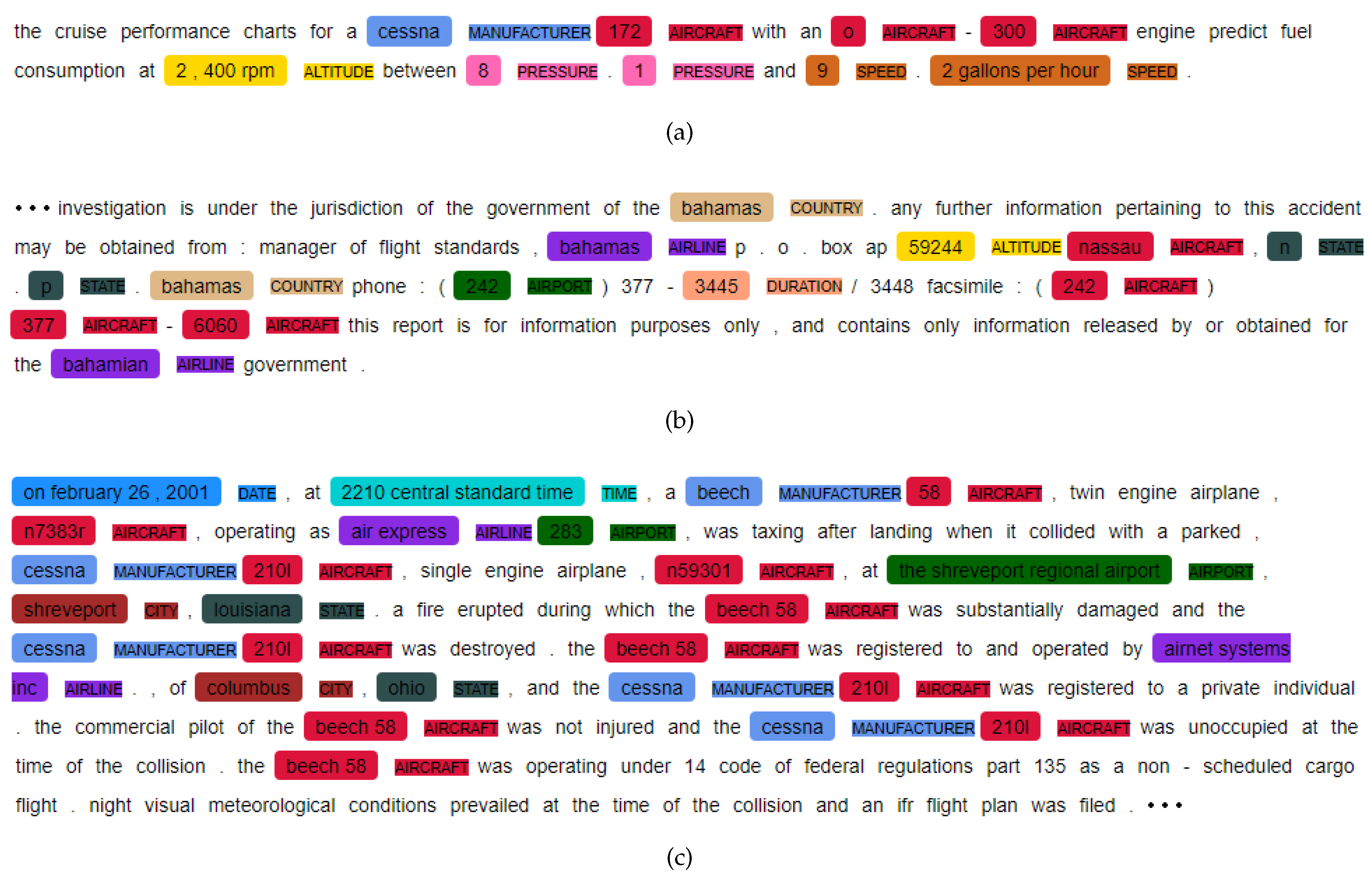

From the 108,514 selected narratives, individual narratives were randomly chosen and qualitatively examined for entities of interest. Narratives with a visibly high number and diversity of entities were shortlisted for the test dataset, while others were discarded. For example, extremely short narratives of one or two sentences containing no words from the entities of interest were not considered as candidates. This random selection continued until 50 narratives had been shortlisted. These narratives were then manually annotated for all entities, yielding a total of 22,021 entities (including "O"). The entity distribution for the test dataset is shown in Figure 2.

A secondary test dataset was constructed from the same 50 narratives to examine the effect of shorter inputs on the model’s performance. For this purpose, the 50 narratives were split into individual sentences without altering the manual entity annotations. This process yielded 848 sentences, allowing the same narratives to be evaluated at the sentence level.
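Because the annotations were kept unchanged, the sentence-level split can be performed directly on the word-level labels. This hypothetical Python sketch assumes that sentence-final punctuation is tokenized as a separate word tagged "O"; it splits only at standalone ".", "?", or "!" tokens, so real narratives with unusual punctuation may need extra rules. The name `split_into_sentences` is illustrative.

```python
SENTENCE_END = {".", "?", "!"}


def split_into_sentences(words: list[str], tags: list[str]) -> list[tuple[list[str], list[str]]]:
    """Split an annotated narrative into sentences, keeping every word's tag."""
    sentences = []
    cur_words, cur_tags = [], []
    for word, tag in zip(words, tags):
        cur_words.append(word)
        cur_tags.append(tag)
        # Only an "O"-tagged terminator ends a sentence, so entities stay whole.
        if tag == "O" and word in SENTENCE_END:
            sentences.append((cur_words, cur_tags))
            cur_words, cur_tags = [], []
    if cur_words:  # trailing text without a final terminator
        sentences.append((cur_words, cur_tags))
    return sentences
```

Since every word and tag is carried over unchanged, the total number of labels across the resulting sentences equals that of the original narrative.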

6. Conclusions

Domain-specific NER models serve an important function in the analysis of textual data. This paper presents a novel approach to training such models by generating synthetic training datasets for improved generalizability, flexibility, and customization, while reducing reliance on manual labeling and facilitating their application to real-world problems.

The Aviation-BERT-NER model enhances the extraction of key terminology from aviation safety reports, such as those found in the NTSB and ASRS databases. A central feature of Aviation-BERT-NER’s approach is its use of templates, designed to reflect the diverse formats and scenarios found in real-world aviation safety narratives. This approach not only supports generalizability but also allows for quick adaptation to evolving aviation terminology and standards. The synthetic training dataset can be tailored to accommodate any number of entities while ensuring balance and diversity. In this work, over 70,000 synthetic sentences were generated for training Aviation-BERT-NER to recognize 17 distinct entities. For testing, real NTSB accident narratives were manually annotated to assess the model’s performance.

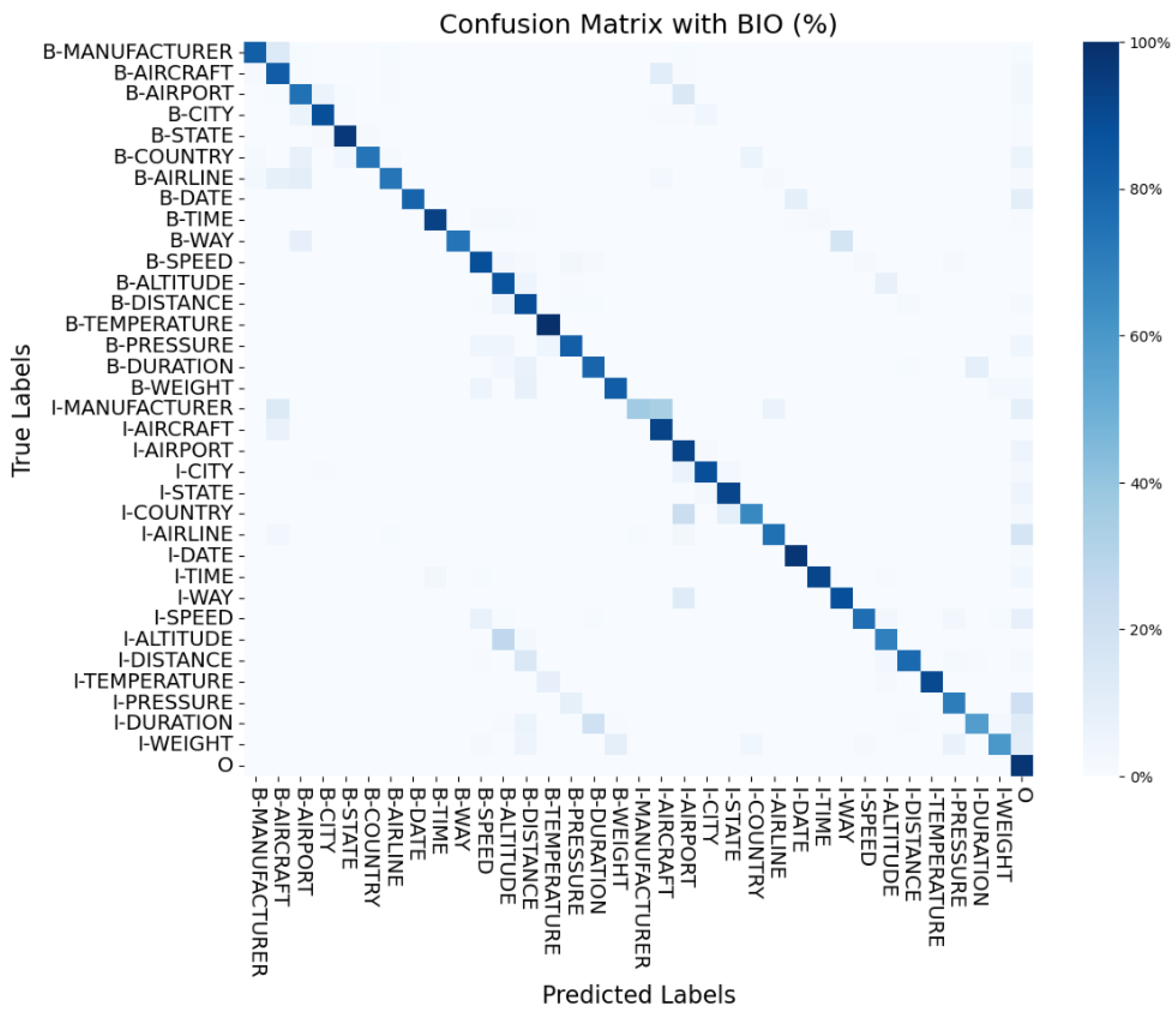

Aviation-BERT-NER addresses common challenges in NER models, such as misclassification, by continuously refining template diversity and strategically enhancing its named entity datasets. This proactive approach to model improvement demonstrates the method’s effectiveness in delivering a robust tool for aviation safety analysis. In benchmarking, Aviation-BERT-NER outperformed other aviation-specific English NER models while handling more than twice as many entity types: it achieved an F1 score of 94.78% on 17 entity types, compared with 93.73% on 7 entity types for Singapore’s spaCy NER model.

However, the model has limitations in handling entities it was not trained to recognize, such as aircraft engine details, telephone numbers, or addresses, which may appear in real-world aviation safety reports. Future work will address these gaps and will integrate Aviation-BERT-NER into an Aviation-KGQA system. This integration aims to enhance the KGQA system’s ability to formulate accurate query responses by leveraging structured knowledge graphs for detailed information retrieval.
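For readers unfamiliar with entity-level scoring, the following sketch computes a micro-averaged F1 score over exact-match BIO spans, the convention used by the CoNLL `conlleval` script. The scoring scheme behind the F1 scores reported above is not stated in this section, so this illustrates one common definition rather than the evaluation actually used; `extract_spans` and `entity_f1` are illustrative names.

```python
def extract_spans(tags):
    """Return the set of (start, end, label) entity spans in a BIO tag sequence."""
    spans, start, label = set(), None, None
    for i, tag in enumerate(list(tags) + ["O"]):  # sentinel closes a trailing entity
        if tag.startswith("I-") and label == tag[2:]:
            continue  # entity continues
        if label is not None:
            spans.add((start, i, label))  # close the open entity
            label = None
        if tag.startswith(("B-", "I-")):
            start, label = i, tag[2:]  # a stray I- also opens a new entity
    return spans


def entity_f1(true_seqs, pred_seqs):
    """Micro-averaged F1 where a prediction counts only if span and label match."""
    tp = fp = fn = 0
    for true_tags, pred_tags in zip(true_seqs, pred_seqs):
        gold, pred = extract_spans(true_tags), extract_spans(pred_tags)
        tp += len(gold & pred)
        fp += len(pred - gold)
        fn += len(gold - pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0
```

Under this definition "O" labels never count toward the score, so a model is credited only for entities whose boundaries and type are both correct.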