“Why may not the world be a sort of republican banquet of this sort, where all the qualities of being respect one another’s personal sacredness, yet sit at the common table of space and time? To me this view seems deeply probable. Things cohere, but the act of cohesion itself implies but few conditions, and leaves the rest of their qualifications indeterminate. ... if we stipulate only a partial community of partially independent powers, we see perfectly why no one part controls the whole view, but each detail must come and be actually given, before, in any special sense, it can be said to be determined at all. This is the moral view, the view that gives to other powers the same freedom it would have itself.”

William James, 1882 [1]

1. Introduction

Science weaves insights and observations, theories and experiments. When Galileo focused a telescope on Jupiter, its orbiting moons dethroned geocentrism, and demanded new theories.

What is an observer? What is an observation? How do observations inform theories?

Classical physics posits an observer that is distinct from, and need not disturb, what it observes. This observer can be safely ignored.

Einstein’s theories of relativity do not ignore the observer. But they identify it with a frame of reference—a system of coordinates and clocks.

In quantum theory the observer is essential, but ill understood. If a physical system is not observed, its state evolves according to Schrödinger’s equation, which is unitary and deterministic. But when the system is observed, its state “collapses”: a complex superposition of eigenstates becomes a single eigenstate. This collapse is nonunitary and random, and that is a problem: observation, being nonunitary, can’t be explained by quantum processes that are unitary. This is a remarkable failure of reductive explanation within quantum theory.

Some attempt to remedy this through the process of decoherence, in which a complex superposition of eigenstates evolves into a real mixture of eigenstates. But it does not evolve into a single eigenstate, and thus fails to model the collapse induced by observation.

Some propose to replace the term “observer” with “measuring apparatus,” which sounds less subjective. Werner Heisenberg, for instance, wrote, “Of course the introduction of the observer must not be misunderstood to imply that some kind of subjective features are to be brought into the description of nature. The observer has, rather, only the function of registering decisions, i.e., processes in space and time, and it does not matter whether the observer is an apparatus or a human being; but the registration, i.e., the transition from the “possible” to the “actual,” is absolutely necessary here and cannot be omitted from the interpretation of quantum theory” [3].

And Asher Peres wrote that observers in quantum physics are “similar to the ubiquitous “observers” who send and receive light signals in special relativity. Obviously, this terminology does not imply the actual presence of human beings. These fictitious physicists may as well be inanimate automata that can perform all the required tasks, if suitably programmed” [2].

This fails to resolve the issue. A measuring apparatus, even if not subjective, must still instantiate a nonlinear collapse, and nonlinearity is the stubborn problem that precludes a reductive account of measurement in quantum theory.

So quantum theory acknowledges the essential role of the observer, but offers no reductive theory of the observer. This has long been a source of consternation. As Frank Wilczek put it in 2006, “The relevant literature is famously contentious and obscure. I believe it will remain so until someone constructs, within the formalism of quantum mechanics, an “observer,” that is, a model entity whose states correspond to a recognizable caricature of conscious awareness … That is a formidable project, extending well-beyond what is conventionally considered physics.”

It is indeed formidable. This measurement problem prompted John Wheeler to suggest a complete rethinking of quantum theory: “It is difficult to escape asking a challenging question. Is the entirety of existence, rather than being built on particles or fields of force or multidimensional geometry, built upon billions upon billions of elementary quantum phenomena, those elementary acts of “observer-participancy,” those most ethereal of all the entities that have been forced upon us by the progress of science?” [24].

Wheeler’s challenge was accepted by his student Chris Fuchs, who developed QBism, an interpretation of quantum theory that promotes observing agents to the limelight [16]. In QBism, quantum states describe beliefs of agents, not their external reality as it is. If an agent says “this system has state ” she asserts nothing about that reality. She means that describes her probabilities for measurement outcomes. When she measures, she updates her belief, from to an eigenstate of the outcome. This “collapse” is no mystery. It is revising belief in light of new data.

What is an observing agent? QBism offers no theory. It simply says that, whatever an observer is, quantum theory describes its beliefs.

Thus Wilczek’s formidable project of modeling the observer remains unfinished. Wilczek reiterated its importance in 2022: “Quantum mechanics has an unusual mechanism since the theory has equations, and to interpret the equations one must make an observation. I believe that, eventually, in order to … fully understand quantum mechanics, we will need to understand that we have that model of consciousness that corresponds to our experience of everyday life, which is fully based on quantum mechanics. At present, I don’t think we have that” [12].

What we have, instead, is remarkable. In the last decade, theorists in high-energy physics have discovered geometric structures, entirely beyond spacetime and quantum theory, called “positive geometries.” These structures dramatically simplify the computation of particle interactions in spacetime, and reveal new insights about these interactions.

This success prompted the European Research Council to fund UNIVERSE+, an international collaboration of researchers that “seeks a new foundation for fundamental physics, ranging from elementary particles to the Big Bang, revealing a hidden world of ideas beyond quantum mechanics and spacetime. Novel geometric objects recently discovered in theoretical physics hint at new mathematical structures. Combinatorics, algebra, and geometry have been connected to particle physics and cosmology in an entirely unexpected way. Leveraging these advances, the team will launch the field of Positive Geometry, as a new mathematical framework for describing the laws of physics” [25].

Incredible. In the last decade, physics has taken its first peak beyond spacetime. And what does it see? Objects with positive geometries. This is ground breaking and exhilarating. It’s also puzzling. Why these objects and geometries? Why no dynamical systems? Why no observers? Who ordered this?

Positive geometries confound us, much as the obelisk in 2001: A Space Odyssey confounded the apes that swarmed it, hooting and pounding without comprehension. Positive geometries feel deep and important, but their deeper meaning eludes us.

Here we propose a deeper meaning. We propose that beyond positive geometries there lies a rich dynamics of “observer-participancy," just as Wheeler suggested. We model it as a dynamics of entities we call conscious agents (CAs). Positive geometries, we propose, describe the asymptotic behavior of CAs.

We briefly review CA theory (CAT) and the formulation of their experience dynamics as Markov kernels. We then introduce a partial order on Markov kernels: if M is a trace of N. Intuitively, M is a trace of N if M describes what you see when you watch the dynamics of N only on that subset of states: : we say that M is supported on that subset of the states.

The trace order, interpreted as logical implication, induces a logic, the trace logic, on the set of all Markov kernels. The trace logic is non-Boolean: it has no greatest kernel and many incomparable kernels. But the trace logic is locally Boolean: for a given N, the set of all M such that forms a Boolean logic.

The trace logic defines a theory of observation. Conscious agent Aobserves conscious agent B iff in the trace logic, where , respectively , is the “experience kernel” of A, respectively B. We express this by saying “”, and call this relation on CAs the “trace order” too. The relation “” is thus a preorder on the set of all CAs: two CAs with the same experience kernel Q will be indistinguishable in trace order. To simplify notation, we will henceforth use the same symbol, A, both for the CA A and for its experience kernel . This definition of trace order is the foundation of the trace theory of observation, and implies that any observer is an integral part of what it observes. Observers are not aloof, objective, and negligible. They are not just a system of coordinates and clocks. They are organically entwined with what they observe. This is a radical departure from standard notions of detached observers with paltry influence.

The trace theory of observation is, as we shall see, an ideal theory: the trace of a kernel demands an infinite sample of its dynamics. We assume that, as with our phenomenal experience, observation occurs via finite sampling of the observed. So we discuss finite sampling of trace chains, and their application to real experiments.

What does the CA A “observe” in a CA B, when the support of A is contained in the state space of B? We posit that A observes what it can of the long-term, or asymptotic dynamics of B. In fact, we show that if B is ergodic then A sees the asymptotic probabilities of states it shares with B. We quantify this using stationary measures. The stationary measure of a kernel P is a probability measure on the states of P satisfying ; describes the long-term probability that the dynamics of P will visit each of its states. We show that the stationary measure of A is the normalized restriction of the stationary measure of B. Thus A “sees” a probability measure that encodes long-term behaviors of B.

So observers are modeled as kernels, and observations yield probability measures. These probability measures express that which the observer can know about the observed: as in QBism’s approach, we take these probabilities to express the beliefs of the observer caused by observation. The set of all probability measures also has a logic, the Lebesgue logic, [7] which too is non-Boolean: it has no greatest kernel and many incomparable kernels. But the Lebesgue logic is again “locally” Boolean: for a given , the set of all such that implies forms a Boolean logic. We show that the map from kernels to stationary measures, i.e., from observation to belief, is a homomorphism from the trace logic to the Lebesgue logic: the logic of observation and the logic of belief mesh perfectly.

We then discuss our progress on projecting the dynamics of CAs onto positive geometries and thence into spacetime. We propose specific correspondences between properties of CAs and properties of particles in spacetime, including mass, energy, momentum and spin. We outline the work that remains to complete the projection and to make predictions testable by scattering experiments with particles.

2. Conscious Agents

We develop the CAT formalism in prior papers [5,33,34]. To keep this paper self contained, we briefly review the motivations and definition of CAs.

A scientific theory asks us to grant certain assumptions. If we grant them, the theory promises in return to explain some phenomena of interest. William of Ockham counsels theory builders to keep assumptions to a minimum. That is sage advice. The bare minimum, however, is never zero. Each theory has assumptions. So no theory explains everything in its domain: no theory explains its assumptions.

One may propose a deeper theory, which explains assumptions of a prior theory. But the new theory has its own, unexplained, assumptions. And so on, forever. Thus science can offer no theory of everything, in the sense of a theory that explains its own assumptions. (This is distinct from what physicists term a "theory of everything," which is one that unifies the known four fundamental forces.)

Since no theory can be a theory of everything, it follows that every theory has a scope and limits. A good theory provides mathematically precise tools to explore its scope. A great theory provides tools to discover its own limits.

Quantum field theory, for instance, which unites quantum mechanics and Einstein’s theory of spacetime, has marvelous scope and application. It also informs us of its limit: spacetime has no operational meaning beyond the Planck scale—roughly centimeters and seconds. This means that the concept of spacetime is not fundamental, and we must look deeper.

That is a key motivation for CAT. Spacetime is not fundamental, so we propose something beyond spacetime. We propose that networks of CAs are prior to, and give rise to, spacetime and objects in it. We call this proposal conscious realism. It says that the world a CA interacts with can be modeled as a network of CAs that includes the CA itself. So we must show how spacetime arises from a network of interacting CAs.

A theory of consciousness must explicate a wide range of phenomena, including qualia, choice, learning, memory, problem solving, intelligence, attention, observation, the self, semantics, comprehension, altered states, morals, levels of awareness, mathematical knowledge, the hard problem of consciousness, and the combination problem of consciousness. Ockham says, "Assume as few of these as possible, and explain the rest."

CAT assumes just four: qualia, choice, action and a sequencing of qualia. It must explain the rest.

Ockham would be proud. But he’s not done with us yet. His counsel governs our next step: choosing a mathematical formalism. His advice: keep it minimal.

To this end, we represent the qualia—the possible experiences—of a CA by a measurable space. Recall that a measurable space is a set X together with a collection of subsets of X that (1) contains X and (2) is closed under complementation and countable union. The reason to use a measurable space is simple. We must, at a minimum, speak of the probability that a CA has some experience. A measurable space is a minimal formalism to permit this.

Of course some qualia may have additional structure. The color red, for instance, appears closer to orange than it does to green. This suggests adding a metric or topology to the measurable space. Fine. This is not precluded by the definition. But we don’t include it in the definition, because it would then assert that qualia must have a metric or topology, and that assertion may be false. What must be true, if we hope to do science, is that we may always speak of the probability of experiences.

For the same reasons, we also represent the possible choices of a CA’s actions and the possible states of the world the CA interacts with (including the CA itself), as measurable spaces.

A CA’s experiences affect its choices, and its choices affect its experiences and the experiences of other CAs. We model these influences with Markov kernels. A finite Markov kernel can be represented by a matrix whose entries (1) are real numbers between 0 and 1 inclusive that (2) sum to 1 in each row. The reason to use a Markov kernel is again simple. We must, at a minimum, use probabilities to describe how qualia affect choices, how the choices affect the agent’s action, and how that action affects the world-state. The rows of a Markov kernel are those probabilities. This is an informal description of a Markov kernel.

We now give a formal definition of a Markov kernel. Let Y be a set. A collection of subsets of Y is called a σ-algebra if it contains Y itself and is closed under countable union and complement. A measurable space is a pair where is a -algebra of subsets of Y. The subsets in are called events. A non-negative measure is a function such that (1) and (2) is -additive, i.e., for any countable collection of pairwise disjoint events, . A probability measure is a non-negative measure that assigns the value 1 to Y. If and are measurable spaces, a kernel from X to Y is a mapping N from into such that: (i) for every , the mapping is a measure on , written as ; (ii) for every , the mapping is a measurable function, denoted by . A kernel N is said to be Markovian if for all .

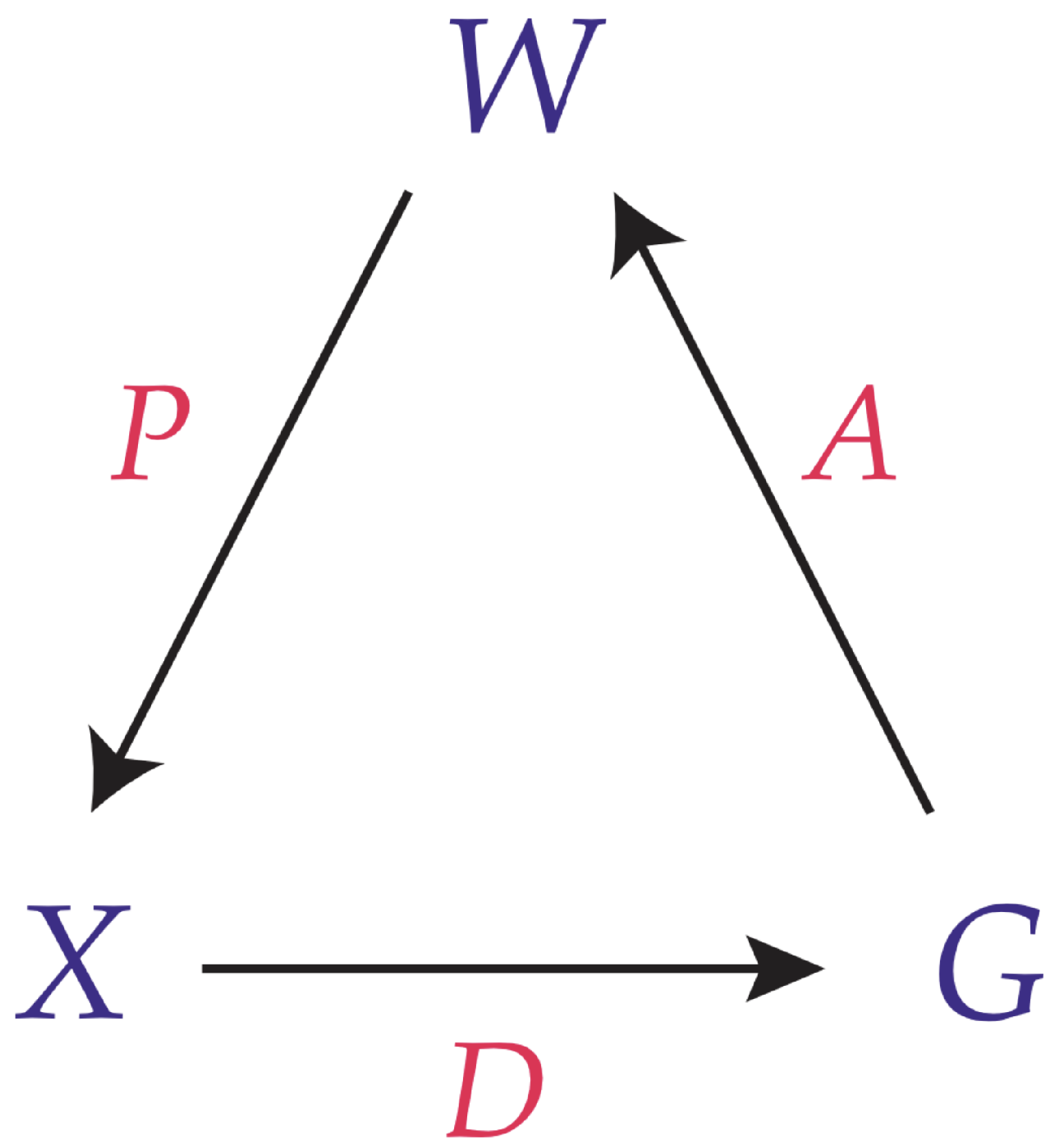

Mathematically, we represent a conscious agent,

C, by a 7-tuple:

where

is a measurable space of qualia,

is a measurable space of actions,

is a measurable space constituting the set of states of the collection of the network of all CAs,

N is an integer that counts the sequence of experiences, and

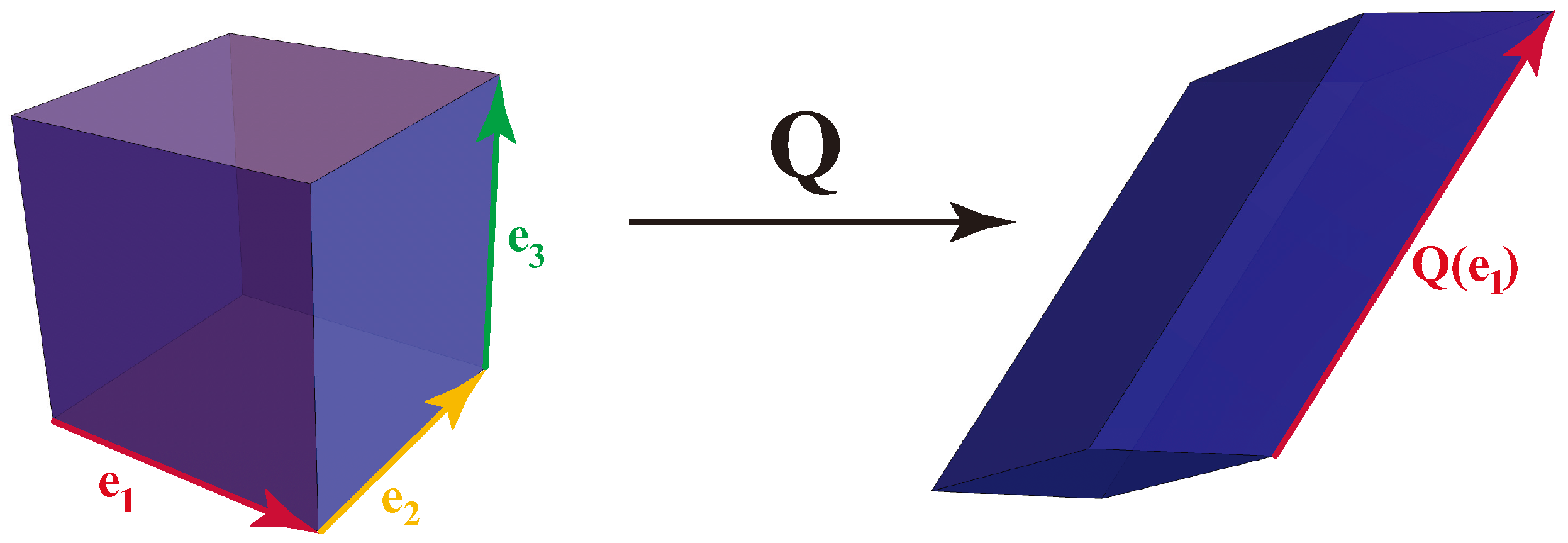

are Markov kernels. This is illustrated in

Figure 1.

This definition embodies the assumptions of the theory. The measurable space denotes that which makes qualia possible. What makes qualia possible? The theory does not say. That is a “miracle” of the theory. Whatever that miracle is, the theory, with a nod to William of Ockham, settles for describing it with a measurable space.

The measurable space denotes that which makes choice possible. What makes choice possible? The theory is silent, but describes it with a measurable space.

The kernel P denotes that which governs which quale is in awareness now. What is the governor? That is beyond the theory, which placates Ockham by describing it with a Markov kernel.

The kernel D denotes that which governs which choice is taken now. No story is offered on what that governor might be.

Similarly, the kernel A denotes that which governs how the choice of this agent affects what is in the awareness of other agents now. That governor is a mystery.

The measurable space , in accordance with conscious realism, denotes the state space of all conscious agents. It inherits all the assumptions above.

These are the assumptions of the theory, the things it posits but cannot explain. This foundation of unexplained postulates is no idiosyncratic disease of CAT. It is endemic to all scientific theories.

We should note a miracle we lack: we need no physical substrate. In this regard, CAT differs fundamentally from the integrated information theory (IIT) of consciousness. Both theories use Markov kernels (called transition probability matrices by IIT). IIT employs them to quantify properties of physical substrates required for consciousness to exist. CAT says consciousness has no physical substrate. The Markov kernels of CAT describe probabilistic relationships among conscious experiences directly, without substrates. CAT heeds the discovery of high-energy physics: spacetime is doomed, and with it any physical substrates inside spacetime. Requiring such substrates is an anachronism. Consciousness has no physical substrates, but it can create physical interfaces. We will refer to such interfaces here, metaphorically, as “headsets.” In doing so, it creates the physics we see, and an infinity of others beyond the confines of our spacetime headset.

If we grant the assumptions of CAT, then it can explain many other things. For instance, networks of interacting CAs are computationally universal [33]: anything that can be computed by Turing machines or neural networks can be computed by CA networks. So we can use CA networks to build models of learning, memory, problem solving, intelligence, attention, observation and the rest of the cognitive laundry list we trotted out earlier.

Let’s try observation.

3. Observation

In 1954 the physicist Wolfgang Pauli reflected on the uncertainty principle in quantum theory and its implication that “the theory predicts only the statistics of the results of an experiment” but not “the individual outcome of a measurement.” He argued that this requires a new theory of observation: “In the new pattern of thought we do not assume any longer the detached observer, occurring in the idealizations of this classical type of theory, but an observer who by his indeterminable effects creates a new situation, theoretically described as a new state of the observed system” [26].

This is echoed in recent work by the physicist Stephen Wolfram: “It’s become an essential thing to understand what we’re like as observers because it seems to be the case that what we’re like as observers determines what laws of physics we perceive there to be.”

Such an observer does not simply register preexisting facts. Instead it “creates a new situation, theoretically described as a new state” and “determines what laws of physics we perceive there to be.” The observer is somehow inseparable from the observed, and its observations create its depictions of the observed.

There is a natural way to capture this insight in the language of conscious agents. To do so, we first define the

qualia kernel of a CA to be the Markov kernel obtained by the product (e.g., matrix multiplication in the finite case) of the

D,

A, and

P kernels of that CA:

is the probability that, given the current experience is

e, the next experience will be

. The Equation (

5) is interpreted in terms of the actions of the kernels on a state or, more generally, on a vector giving state probabilities, by right multiplication: The matrix multiplication is defined by

and, if the current probability of state

e is

, then the new vector of state probabilities is

Note that the sums above are over all action choices, denoted

g, and all world states, denoted

w.

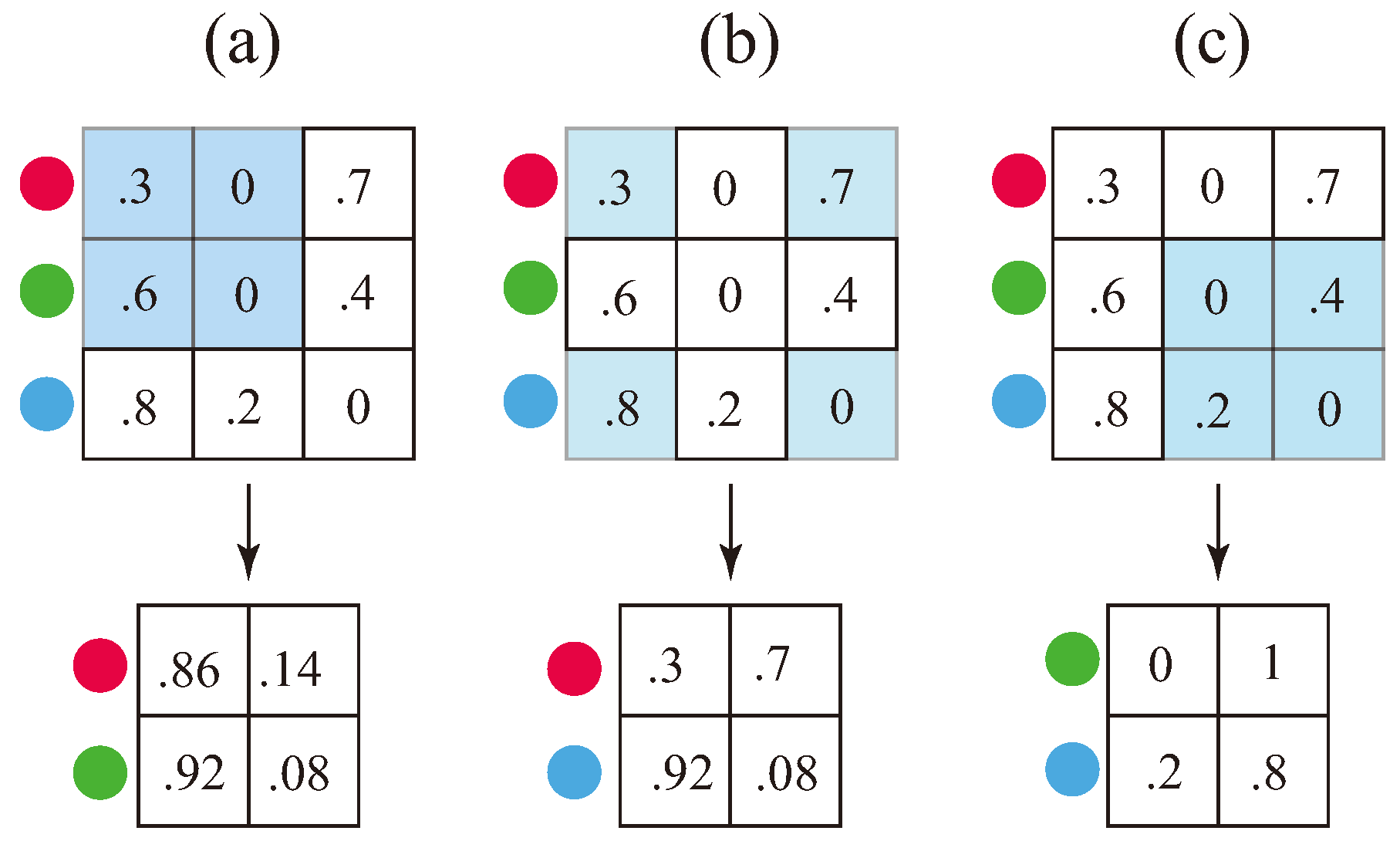

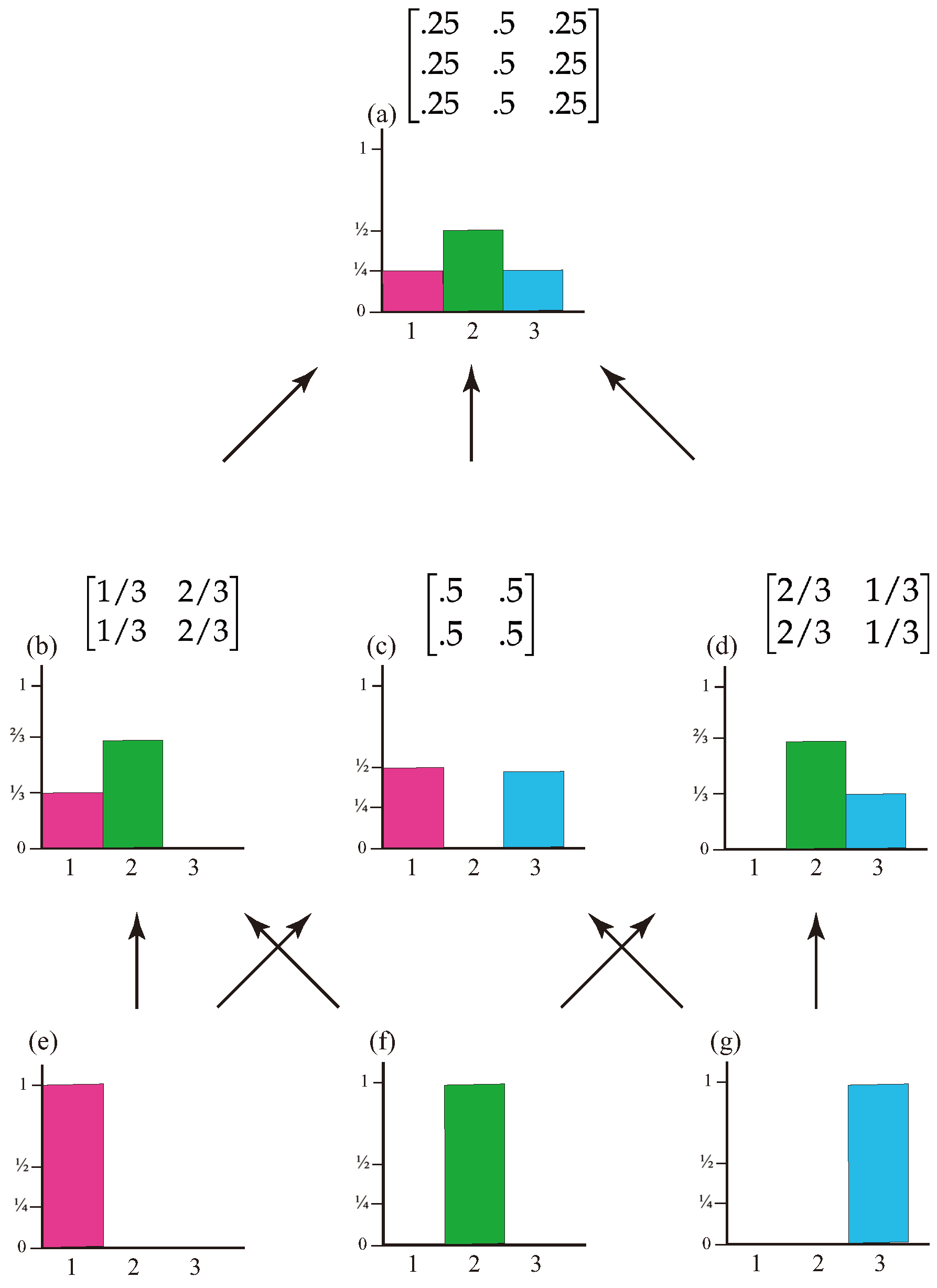

The qualia kernel of a CA describes how its conscious experiences evolve. For instance, for a CA that just sees the colors red, green, and blue, the qualia kernel,

, can be written as a matrix having three rows and three columns, whose entries are real numbers between 0 and 1 inclusive, and whose entries within a row sum to 1. For example,

could be the matrix

The entries in the first row give the probabilities that, if it now sees red, that it will next see red, green, or blue. The second row gives the probabilities that, if it now sees green, that it will next see red, green, or blue. The third row gives the probabilities that, if it now sees blue, that it will next see red, green, or blue.

Suppose I’m looking at the pattern of reds, greens, and blues generated by

, and I decide to attend only when red or green appears; I just ignore blue. Then I will see some pattern of reds and greens generated by

while ignoring blues. I can describe it by writing down a new Markov matrix

that has just two rows and two columns. In this example, the correct matrix is

The entries in the first row give the probabilities that, if I now see red that I will next see red or green. The second row gives the probabilities that, if I now see green that I will next see red or green. The matrix is like a projection of the matrix onto the red and green states. is called the kernel of the trace chain of on the red and green states. We sometimes just say that is the trace of ; this should not be confused with the trace of a matrix, which is the sum of its diagonal elements.

Notice that the probabilities in

differ from those in

. For instance, in

the probability that I see red next if I see red now is 0.2, but in

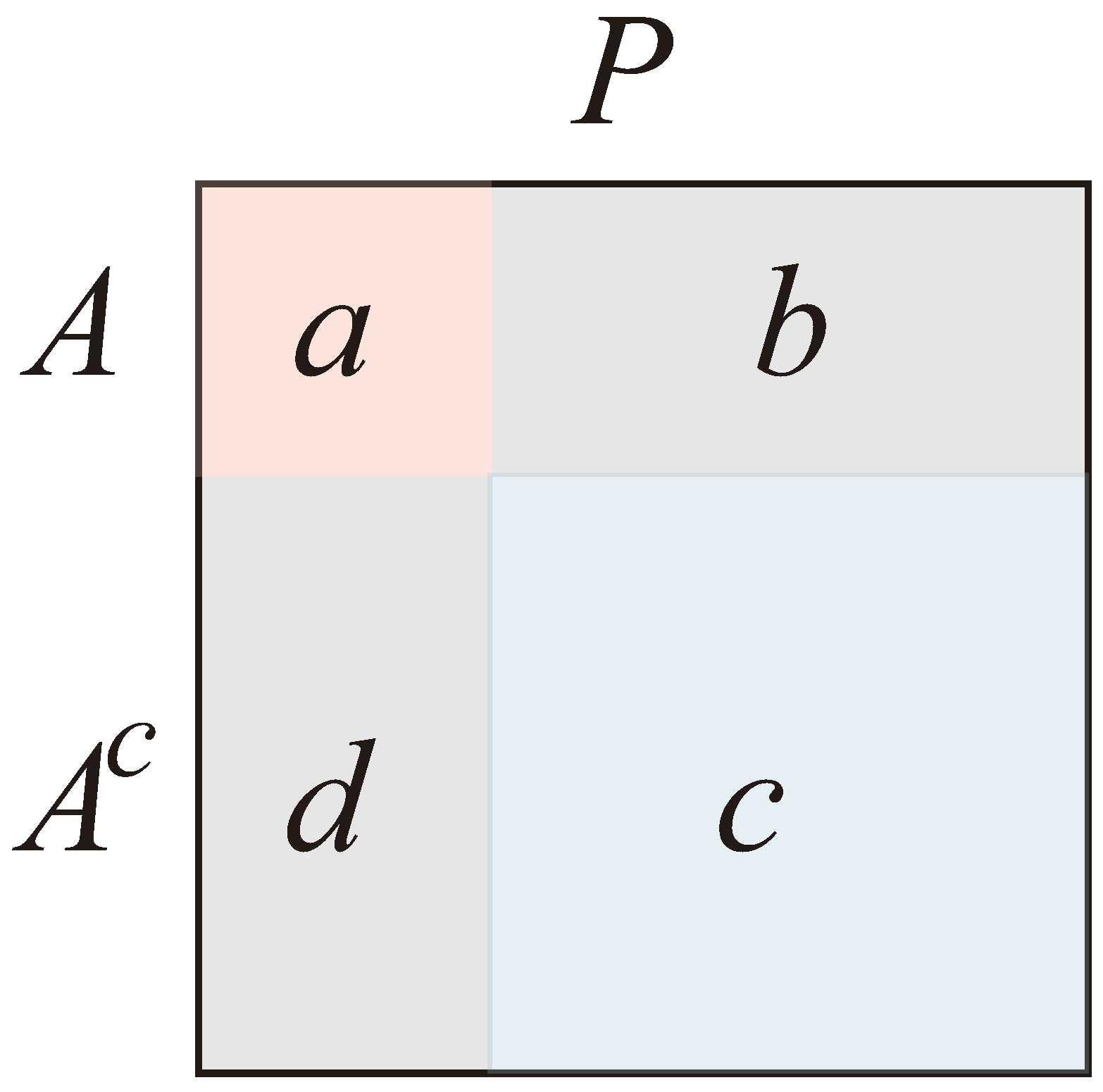

it is 0.4222. The formula for the kernel of a trace chain is nontrivial. It is presented in the following theorem, which uses notation illustrated in

Figure 2.

First we need a couple of technical definitions. A kernel from X to X is simply referred to as a kernel onX. Given a kernel N on X, its support set A is the smallest subset of X such that (i) for any , and (ii) for . We say that N is supported on A (and supported in any larger subset). Intuitively, all the weight of N lies within A.

If the kernel N is both supported on A and satisfies (iii) , , we say that N is semi-Markovian. To keep things simple, we will in the following use the term “Markovian” for semi-Markovian kernels also, trusting that the context will make the distinction clear.

Trace Chain Theorem. (See

Appendix A, Theorem 3.2). Let

P be a Markov kernel on a finite state space, which we denote simply by

. For any subset

, the Markovian kernel,

, for the trace chain of

P on

A, is given by

Here

is multiplication by the indicator function on the set of states

A;

a,

b,

c, and

d are the submatrices of

P shown in

Figure 2; and

I is the identity matrix of the same dimension as

c. For convenience, the picture shows the subset

A of states as the first part of

S, and its complement

as the second. This may not be the case in any actual situation. However the relevant submatrices have their obvious meaning.

The kernel

is supported on

A (and so is actually semiMarkovian). Restricting our attention to the new state space

A, we get a Markovian kernel for a chain on

A. We call this kernel

, the

projection of

P on

A; it is given by

Here

is multiplication by the indicator function on the set of states

A;

a,

b,

c, and

d are the submatrices of

P shown in

Figure 2; and

I is the identity matrix of the same dimension as

c. For convenience, the picture shows the subset

A of states as the first part of

S, and its complement

as the second. This may not be the case in any actual situation. However the relevant submatrices have their obvious meaning.

When P is a kernel, and is the kernel of the trace chain of P on a subset of its states, we will simply say Q is a trace of P. With a slight abuse of notation, we will also say that the projection , as in (12) above, is a trace of P.

Notice that to each Markov kernel we can associate a proposition, i.e., a statement that can be true or false. For instance, to the kernel we associate the proposition "If I see red now, then the probability is 0.4222 that I will see red next, and 0.5778 that I will see green next. If I see green now, then the probability is 0.7071 that I will see red next, and 0.2929 that I will see green next."

Propositions have logical relationships, such as entailment, conjunction, disjunction and negation. For instance, the proposition “Chris is taller than Francis” entails “Francis is shorter than Chris.” The conjunction of “Chris is taller than Francis” with “Francis weighs more than Chris” is “Chris is taller than Francis and Francis weighs more than Chris”. Their disjunction is “Chris is taller than Francis or Francis weighs more than Chris.” And so on.

Logical relationships can be modeled by the mathematics of partially ordered sets: elements of the set are the “propositions” and the order relation, ≤, is “entailment.” So if x and y are elements in the set and then x entails y, also written as . So a partially ordered set is also a logic. If x and y are elements in the set then their least upper bound, if it exists, is denoted and corresponds to disjunction or join; their greatest lower bound, if it exists, is denoted and corresponds to conjunction or meet. A zero element of the logic is an element 0 such that for all x. A unit element is an element 1 such that for all x. If 1 exists then the complement of x, if it exists, is an element such that and . The complement of 1 is 0.

For instance, for some fixed set S, the algebra of all subsets of S forms a boolean logic. The elements of the logic are the subsets. If x and y are subsets of S and then . That is, subset inclusion corresponds to entailment. Union of sets corresponds to ∨. Intersection of sets corresponds to ∧.

There is a class of logics called “orthocomplemented modular lattices” that are considered to be the logics of importance in quantum theory. These are logics in which ∨ and ∧ exist for any two elements, 0 and 1 exist, and the complement of every element x exists. Boolean algebras are in this class, and are characterized by having the property that ∧ is distributive over ∨. However the orthocomplemented modular lattices relevant to quantum mechanics are not distributive, but have the property of “modularity,” which generalizes distributivity [14].

Now, since each Markov kernel is a proposition, the question arises: What is a natural logic on Markov kernels? What ideas should guide us in seeking such a logic?

One central idea, mentioned earlier, is that Markov kernels are propositions about conditional probabilities: If I now see red, then this is the probability that I next see green, and so on.

Another central idea is attention. The states on which a kernel is defined are the only states to which it “attends.”

Putting these two ideas together, we see that a kernel’s proposition about conditional probabilities critically depends on the states to which it attends. If the support states of kernel P are a subset of the states of kernel Q, then P has a smaller focus of attention than Q. But suppose that P and Q are both traces of the same dynamical process. Then the proposition of P must somehow agree with the proposition of Q. How? P must say the same thing Q would say if Q restricted its attention to the same states as P. That is, P must be the kernel of the trace chain of Q on the smaller set. In that case, what P says agrees with what Q says, but Q says more than P does. So in this sense P entails Q. This motivates the following definition.

Definition: Trace Order. If P and Q are Markov kernels, then iff P is a trace of Q.

This leads to the following theorem.

Trace Order Theorem. Let be a measurable space and the set of all Markovian kernels on the measurable sets . The trace order is a partial order on .

To prove that the trace order is indeed a partial order, we must prove that it is reflexive (

), antisymmetric (if

and

then

), and transitive (

, if

and

then

). The proof is given in

Appendix A.

The trace logic is neither a “classical” boolean logic, nor is it an orthocomplemented modular lattice as in quantum theory [14]. It is more general. It has no unit, no greatest element 1. It has no globally defined complement. Although the trace logic is not boolean, it is

locally boolean: For any given kernel

Q, the set of all kernels less than or equal to

Q form a boolean logic. The ∨ and ∧ do not exist for many pairs of elements. Only if

P and

Q share two or more states, and have the same trace on all states they share, can

and

exist. These properties are proven in

Appendix A. We also conjecture that if

then the entropy rate of

P is less than the entropy rate of

Q.

We have defined the trace logic on qualia kernels of CAs. But how does this relate to the stated goal of this section: Create a theory of observation using CAs?

The trace logic yields an ideal theory of observation: if then PobservesQ. This trace theory of observation says that observation is focused attention: P observes Q by comprising, and hence attending to, a subset of states of Q. This subset relation entails that the observer is part of the observed. Thus observer and observed exist only in relation to each other. Indeed, the observer participates in the observed.

But the relation of observer and observed goes deeper. The observed is itself an observer: it observes any kernel greater than it in the trace logic. So the distinction between observer and observed dissolves. John Wheeler envisioned the entirety of existence being built upon billions of elementary acts of observer-participancy. The trace theory of observation gives his vision a formal statement.

We can think of traces as ”spatial windows.” For instance,

Figure 3 shows a

matrix with its three

traces. The spatial window of each trace is highlighted in blue.

Note that the use of the term “spatial” here is not meant to invoke physical space: this is just “space” as the perceptual set of a CA.

The well-known hidden Markov models (HMMs) are similar to trace chains, but with a restriction. An HMM posits one Markovian dynamics on a set of

hidden states, and another dynamics on a set of

observable states called

symbols [23]. Referring to

Figure 2, HMM symbols correspond to states

A on which a trace is taken; hidden states correspond to states

. In an HMM, the hidden states

influence the symbols

A, but not vice versa. This embodies the fiction of objective observation. Trace chains remove this fiction, allowing observer and observed to interact.

The trace theory of observation is an ideal theory, because the definition of trace involves infinite sampling. This is evident in Equations (10)–(12), which sum k from 0 to infinity. So, in order to connect with the observational outcomes corresponding to physical entities, we must consider finite approximations to traces. We can do this by viewing the infinite sequence of steps of a trace chain through finite “temporal” windows. We will refer to these as “sampled” trace chains.

To see how this is done, let’s use a new

matrix, which we will call

Q,

and let’s use the trace of

Q on states 1 and 2, the red and green states. Using (12), this trace is

We first use

Q to randomly generate a

run, a specific temporal sequence. Let’s call it

, a sequence of states 1, 2, 3, where state 1 is red, 2 is green, and 3 is blue. Each run will, of course, generate a different sequence. One example is

From

we can create sampled trace chains on states 1 and 2 as follows. First, delete all 3’s. This leaves the sequence

Then choose a step window. Let’s start with a window of three steps. We partition

into the groups

Now we use each group to create a “sampled matrix.” The first group, (1, 1, 2) starts with a transition from state 1 to state 1, then has a transition from state to 1 to state 2. It has no other transitions. So this corresponds to the sampled matrix

which is not Markov.

The second group, (1,2,2), starts with a transition from state 1 to state 2, then has a transition from state to 2 to state 1. So this corresponds to the sampled matrix

which is Markov.

The third group, (2, 1, 2), starts with a transition from state 2 to state 1, then has a transition from state to 1 to state 2. So this corresponds to the sampled matrix

which is Markov.

We see three important facts from these sampled matrices. First, even though all groups have the same number of steps, the sampled matrices they create can and do differ. Second, the entries in these sampled matrices are dominated by 1’s and 0’s, even though the original matrix has no 1’s or 0’s. Third, the pattern of entries in these sampled matrices little resembles the pattern in the true trace matrix .

All three facts are due to the step size, which is too small to collect enough data to create sampled matrices that closely approximate the true trace matrix . The second and third properties of these sampled matrices are primarily artifacts of the small step size.

If we choose a larger step window of, say, 6 steps, then the first group is (1,1,2,1,2,2), which corresponds to the sampled matrix

which is Markov, and still does not resemble

. If we increase the step window to 14 steps, then the first group is (1,1,2,1,2,2,2,1,2,2,1,1,1,2), which corresponds to the Markovian matrix

which is still far from the correct matrix.

But these examples give a flavor of what happens when we sample with different temporal windows. This will be critical when we propose empirical tests of this theory using data from scattering experiments with subatomic particles. The trace logic and its sampling also comport well with, and offer a non-Boolean extension of, the nested observer windows (NOW) theory of hierarchical consciousness proposed by Riddle and Schooler. [6]

Just as we defined the qualia kernel

of a CA, we can also define its

strategy kernel:

The strategy kernel focuses on the sequence of actions that a CA takes. The set of all strategy kernels also inherits the trace order and trace logic.

Suppose that conscious agent A has qualia kernel and strategy kernel , and conscious agent B has qualia kernel and strategy kernel . Then we can define a preorder on the set of conscious agents by: iff and .

In information theory, memoryless communication channels can be represented by Markov kernels. [22] It would be of interest to extend the trace logic to non-square kernels and so extend the trace logic to the set of all such channels. One can then ask for any pair of channels whether one entails the other, and whether the pair have a meet or join.

The trace theory seems to closely connect to quantum theory, in that the possible outcomes of any experiment are given by the tracing and sampling operations in terms of probabilities. Note also that the trace operation violates an assumption similar to "statistical independence" [35], meaning that the probabilities (after tracing) are not independent of the tracing parameter choices. The expected distribution of trace chains depends on two measurement settings: (1) how many steps we choose to sample and (2) which states we choose to sample.

4. Probabilistic Belief

The trace logic on Markov kernels provides a theory of the observation process. A next question is: How shall we represent possible and actual outcomes of observations?

Given a Markov kernel, P, a natural way to represent its possible outcomes is by its stationary measure, which is a probability measure that satisfies the equation . For the class of irreducible Markov chains, the long-term probability that the Markov chain occupies any state is defined and given by a unique stationary measure.

A probability measure represents probabilistic beliefs, such as the belief that "The probability of the Markov chain being in state 1 is such and such, the probability of being in state 2 is such and such....” This identifies probability measures with propositions, in this case propositions about Markov chains. Once again, whenever we have a set of propositions we can ask about their logic. Is there a natural logic on sets of probability measures? And if so, how is it related to the trace logic? These are the topics of this section.

We briefly review essential terminology. Let be a measurable space and denote by , or just , the collection of non-negative finite measures on . Unless otherwise stated, we will assume this in the sequel that the set Y is finite, or at most denumerable, and that the collection of “measurable sets” is some collection of subsets of Y closed under unions, complements and including the whole space Y itself. consists, then, of functions on Y taking values in the interval . We can write the measure in sequential notation: .

, or just , denotes the collection of probability measures, together with the null measure, on . For , we define and, for , .

We want to define a logic on . To do this, we need to define a partial order on . This partial order must capture the idea that if then the probability measure somehow entails the probability measure .

Here is the intuition behind our definition. The statement , which means “A entails A or B,” is a tautology: If A is true, then is necessarily true. Suppose A and B are statements of probabilities. Say, for instance, we roll a biased die, and A states “1 is twice as likely as 5; 6 cannot happen; 2, 3, and 4 have nonzero probabilities a, b, and c respectively.” Similarly, B states “1 is twice as likely as 6; 5 cannot happen; 2, 3, and 4 have nonzero probabilities a, b, and c respectively.”

We could write A as a probability measure on the six possible outcomes for the roll of a die: . Here m is a factor that normalizes to be a probability. Similarly, we could write B as . Then we expect to find that , where n is a normalizing factor, since this value for agrees with that “1 is twice as likely as 5,” and it agrees with that “1 is twice as likely as 6.”

Another way to say this is that setting the measure to 0 outside the “support” of , i.e., the set of outcomes where it is non-zero, recovers up to a normalizing constant. We say that is a “normalized restriction of to the support of "; this is a consequence of the tautology , and the same is true of on its support. (We note in passing that this use of “restriction” is not quite the standard notion of restricting functions to subsets of their domains.)

Inspired by this intuition, we will, below, define iff is a "normalized restriction" of , and where both and lie in the set , of probability measures together with the “zero” measure.. This is the foundational definition for a logic of probability measures called the Lebesgue logic[7]. The Lebesgue logic includes a “zero” element: this is just the zero measure assigning 0 to each state, so that for any in the logic.

Like the trace logic, the Lebesgue logic is not boolean, nor is it an orthocomplemented modular lattice. It is more general. It also has no “unit,” i.e., no greatest element 1, nor does it have globally defined complements. Although the Lebesgue logic is not boolean, it is locally boolean: For any given probability measure , the set of all probability measures less than form a boolean logic. The ∨ and ∧ do not exist for many pairs of elements. Only if the supports of and share two or more states, and have the same normalized restriction on the intersection of their supports, do and exist. These properties are proven in [7].

The formal definition of the Lebesgue order uses the Lebesgue decomposition theorem for measures. We recall this theorem and then define the order. The theorem uses the notions of absolute continuity and singularity of measures. Intuitively, a measure is absolutely continuous with respect to another measure if wherever says something can’t happen then agrees that it can’t happen. Again, we say that and are (mutually) singular if, intuitively, whatever can happen according to cannot happen according to and vice versa: the supports of the measures and have no nonzero overlap.

Formally, is absolutely continuous with respect to another measure if whenever a set E has measure zero under it also has measure zero under . Measures and are (mutually) singular if there is a set F with , where is the complement of F.

Lebesgue Decomposition Theorem. Given any two measures , , the measure can be written uniquely as the sum of two measures: , where is absolutely continuous with respect to and is singular to .

Note that is, by definition, supported within the support of , while has support in the complement of the support of . Note also that the null measure is both absolutely continuous with and singular to any other measure.

Definition: Lebesgue Order. For

,

, we say that

if

is a normalized restriction of

, i.e.,

Denote by

the set

partially ordered with the Lebesgue order.

Proofs that the Lebesgue order is a partial order, and that if then is a normalized restriction of , are given in [7].

Intuitively, means that both measures have the same shape where is supported, but may also have nonzero content outside of that support.

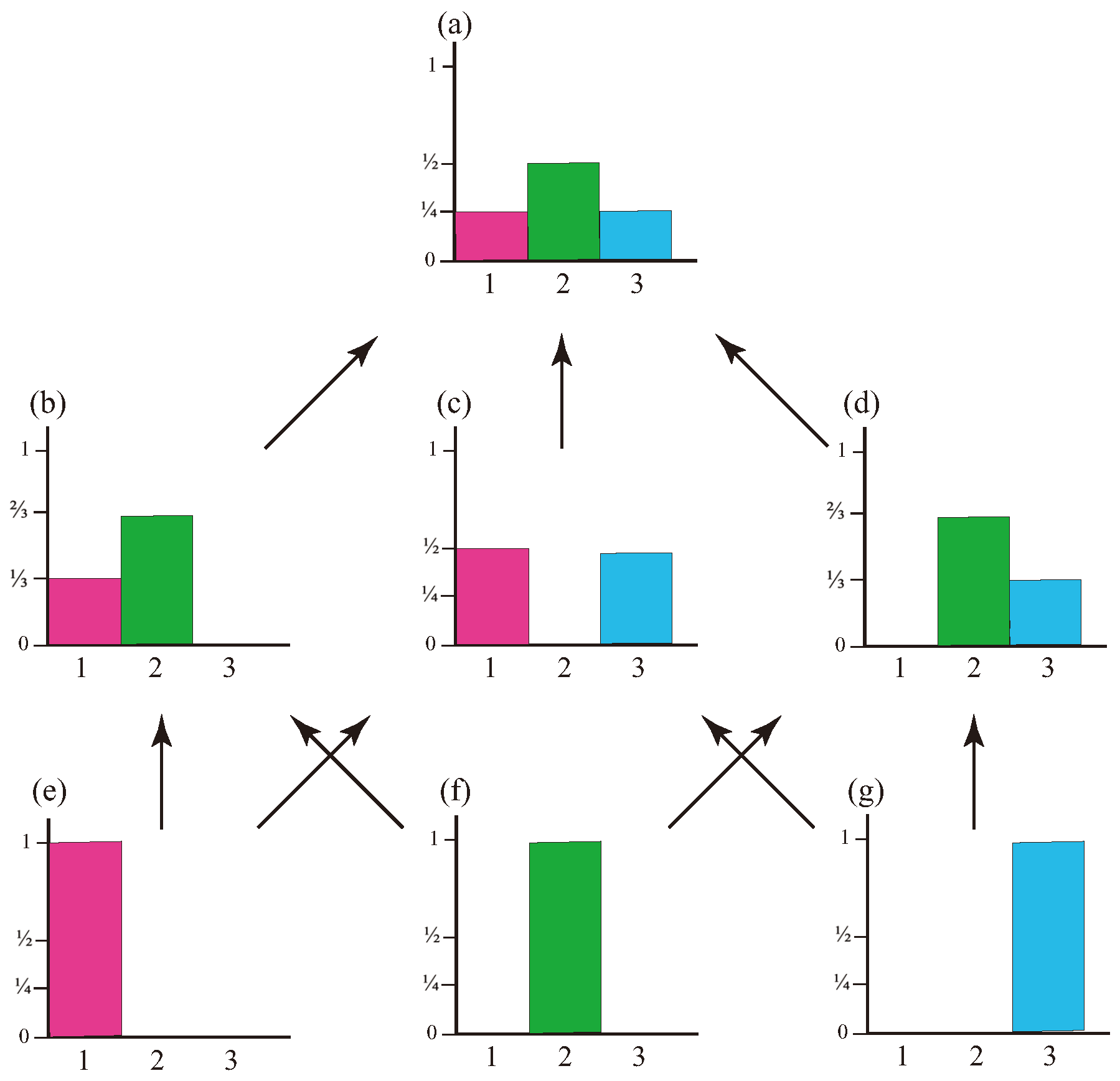

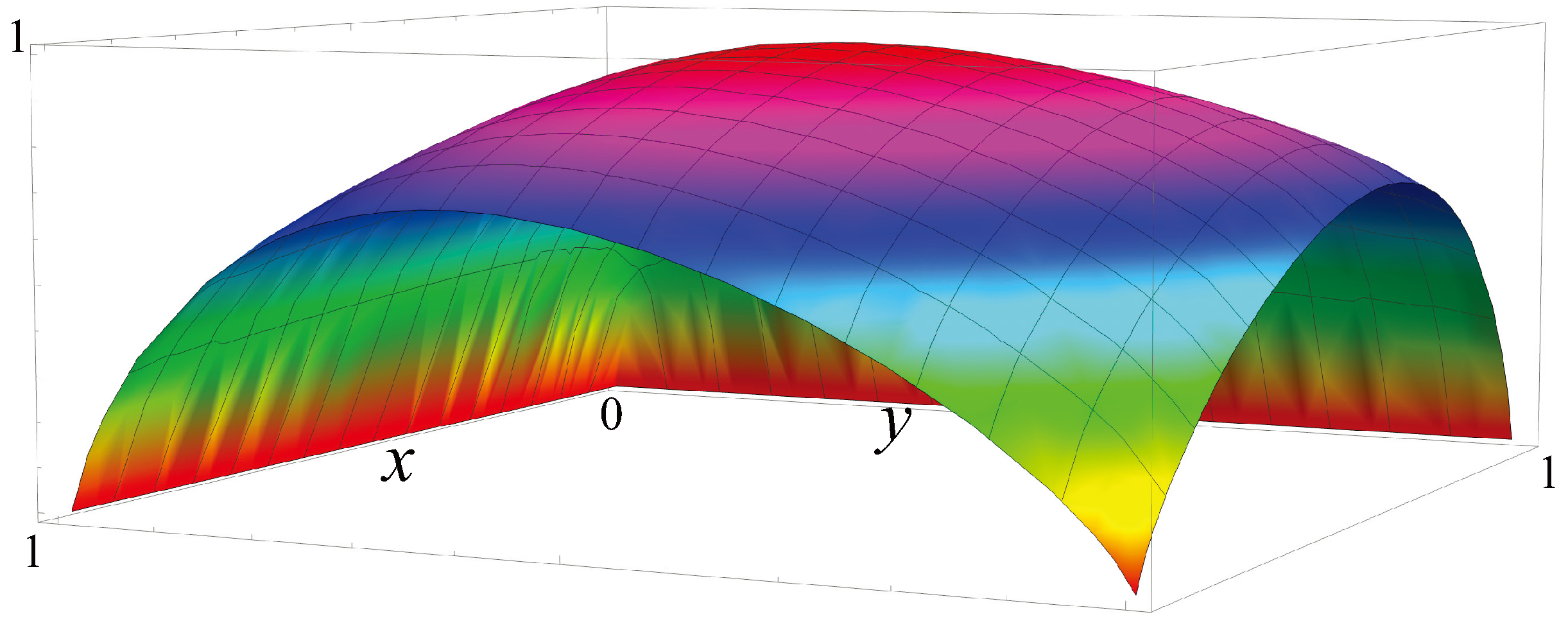

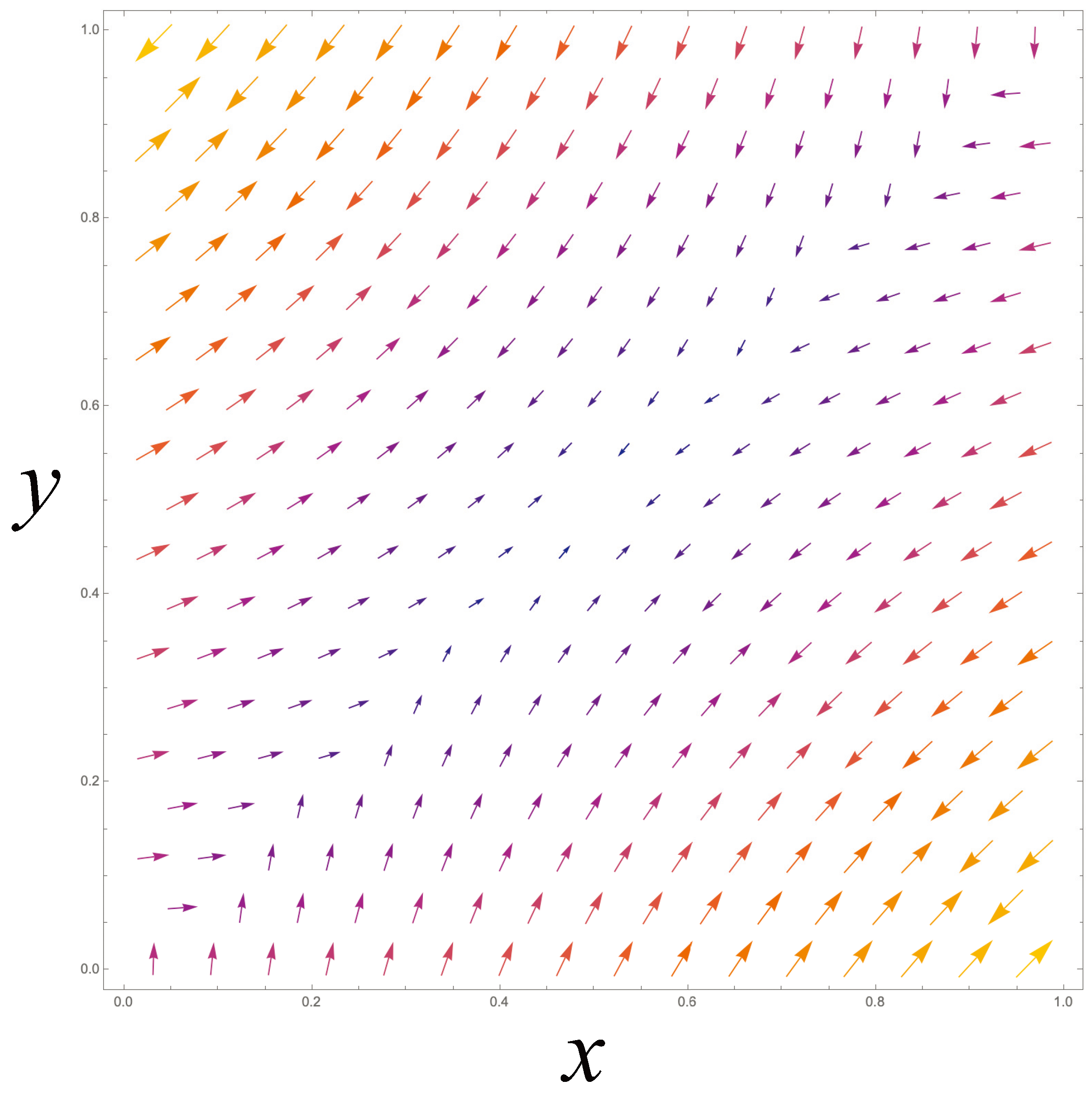

The Lebesgue order is illustrated in

Figure 4, with arrows indicating entailment. So, for instance, the green point measure (or "Dirac" measure) concentrated at 2 and shown in

Figure 4(f) entails the probability measures illustrated in

Figure 4(b,d). Each of

Figure 4(b–d) entails

Figure 4(a).

In

Appendix A.5 we summarize some of the main results from [7], about the existence of the meets and joins, or ANDs and ORs, of two measures under certain conditions, suggestively termed “simultaneous verifiability” and “compatibility.”

There is an interesting relationship between irreducible Markov kernels in the trace logic and their stationary measures in the Lebesgue logic (for a proof, see Theorem 3.9 in the

Appendix A):

Stationary Map Theorem. Let

denote the stationary measure of Markov chain

, and

the stationary measure of

, with

. If

is a trace of

then

is the normalized restriction of

to

D. The proof is in

Appendix A.

Homomorphism From Trace Order To Lebesgue Order. The Stationary Map Theorem entails that if in the trace order then , i.e., that the map taking a Markovian kernel to its stationary measure is a homomorphism from the trace logic to the Lebesgue logic.

Consider, for example, the Markovian kernel

Its stationary measure is

Its traces on states

,

, and

are

with stationary measures

The stationary measures of the trace chains are indeed the appropriate normalized restrictions of the stationary measure of

P. We have the disjunctions

and the conjunctions

,

, and

, where

denotes the Dirac kernel on state

i.

The kernel P and its trace chains are not the only kernels that have exactly these stationary measures. For example, any kernel Q satisfying , for some integer , will have the same stationary measure as P and its traces will have the same stationary measures as the traces for P.

The trace logic and Lebesgue logic are a powerful theory for the combination problem of consciousness. They describe when, and precisely how, conscious observers and their probabilistic beliefs can be combined.

They also provide a formal account of dissociation for idealist theories of consciousness, describing precisely how conscious observers can be dissociated into sub-observers [15]. There are many informal accounts of dissociation, such as Schiller’s in 1906: “Now it is clearly quite easy to push this conception one step further, and to conceive individual minds as arising from the raising of the threshold in a larger mind, in which, though apparently disconnected, they would really all be continuously connected below the limen, so that on lowering it their continuity would again display itself, and mental processes could pass directly from one mind to another. Particular minds, therefore, would be separate and cut off from each other only in their visible or supraliminal parts, much as a row of islands may really be the tops of a submerged mountain chain, and would become continuous if the water-level were sufficiently lowered” [13]. Schiller describes dissociation informally as islands that appear when the water level in a valley is raised. This idea is captured formally in our theory of the trace order, in which smaller Markov kernels appear when a larger kernel gets traced on different subsets of its states and is, further, sampled over finite steps in the chain.

We close this section with a brief recap of the main point. The trace logic on Markov kernels, along with a choice of sampling interval provides a formal theory of observation. In this theory the observer is an integral part of the observed. The outcome of an observation is not an objective description. It is a creative interaction of observer and observed, in which the observer, in deep collaboration with the observed, creates the outcome of the observation.

This comports well with the ideas of physicist Chris Fuchs: “QBism says when an agent reaches out and touches a quantum system—when he performs a quantum measurement—that process gives rise to birth in a nearly literal sense. With the action of the agent upon the system, something new comes into the world that wasn’t there previously: It is the “outcome,” the unpredictable consequence for the very agent who took the action. John Archibald Wheeler said it this way, and we follow suit: “Each elementary quantum phenomenon is an elementary act of ‘fact creation.’” [16] In a “participatory” universe, an observation “co-creates” facts simply by its access being limited (“spatially” or “temporally,” or both) to the whole. Fact-creation happens within the observer’s perceptual space. This does not mean that facts are created in the absence of any underlying structure, rather that “facts” are (co-)created in ignorance of it.

5. The Spacetime Interface: Time, Energy, Position, Momentum

Theories of conscious experiences, like any scientific theories, must make testable predictions. Most current theories of experience are physicalist: they assume that spacetime is fundamental, and that conscious experiences arise from physical substrates in spacetime with the right properties.

It’s estimated that humans distinguish thousands of flavors, millions of colors, billions of smells, and untold numbers of emotions and bodily sensations. This is an ample pool of targets for theories of conscious experiences. One might suppose that crafting a physicalist account of a specific experience is like shooting fish in a barrel. So, how are we doing? How many experiences have physicalist theories explained?

Zero. No physicalist theory explains any specific conscious experience. This is remarkable, because the theorists are determined, brilliant and include winners of the Nobel Prize. The failure is not for lack of effort or intelligence.

It is also striking that these theories are called theories of conscious experience. Suppose I tell a physicist, “I have a theory of particle interactions,” and she replies, “Great! Give an example. How about quark-gluon interactions?” If I respond,“Oh, it can’t explain specific interactions—it’s a general theory,” she could be forgiven for asking, “Why claim you have a theory of particle interactions?”

The theory of conscious agents has an opposite challenge. It claims that a network of agents is fundamental, and that spacetime and its contents are a headset that some agents use to simplify their interactions. This invites the reply, “Great! Give an example. How do agent networks explain a specific process in spacetime, such as quark-gluon interactions?” If we reply, “Oh, the theory can’t explain any specific process,” then we deserve the retort, “Why claim you have a theory? You make no testable predictions.”

There is a critical difference between physicalist and conscious agent theories. The problem for conscious agents appears to be technical and manageable, as we discuss in this section. But the problem for physicalist theories appears to be principled. It simply cannot be solved. This was understood by Leibniz three centuries ago: “It must be confessed, however, that Perception, and that which depends upon it, are inexplicable by mechanical causes, that is to say, by figures and motions. Supposing that there was a machine whose structure produced thought, sensation, and perception, we could conceive of it as increased in size with the same proportions until one was able to enter into its interior, as he would into a mill. Now, on going into it he would find only pieces working upon one another, but never would he find anything to explain Perception. It is accordingly in the simple substance, and not in the composite nor in a machine that the Perception is to be sought. Furthermore, there is nothing besides perceptions and their changes to be found in the simple substance. Additionally, it is in these alone that all the internal activities of the simple substance can consist” [17] .

We want a dynamics of conscious agents beyond spacetime that makes predictions we can test within spacetime. To do this, we must project the dynamics onto spacetime. That is the topic of this section.

Fortunately we have an assist. In the last decade, high-energy theoretical physicists have found structures beyond spacetime, called positive geometries, [32] that dramatically simplify the computation of amplitudes for particle scattering. Positive geometries reveal that the principles of spacetime and quantum theory arise from more fundamental mathematical principles. This gives us a target: Project agent dynamics onto positive geometries. The positive geometries then project onto spacetime.

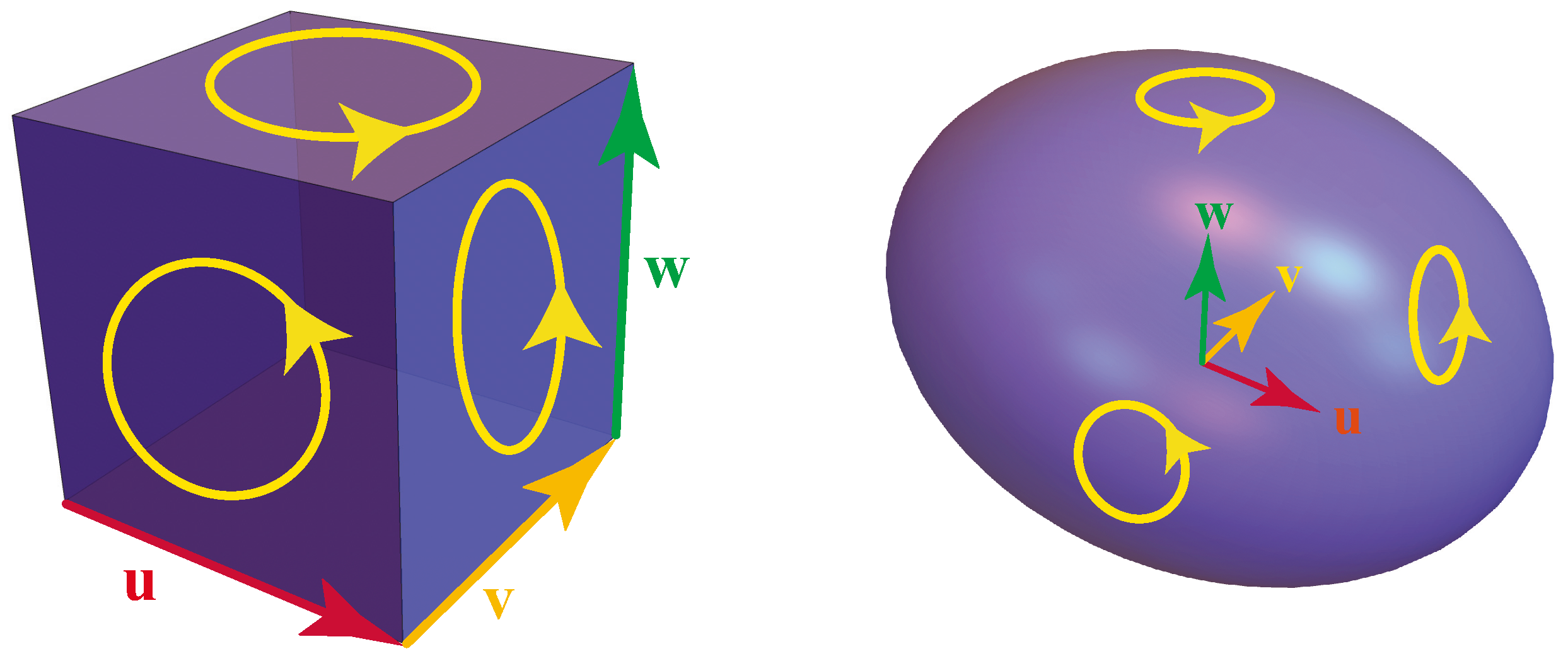

In a previous paper, “Fusions of consciousness,” we took a first step [18]. Physicists had discovered that combinatorial objects called decorated permutations classify positive geometries. We showed that decorated permutations also classify the recurrent communicating classes (RCCs) of Markov chains [18]. Intuitively, an RCC is a set of states that all “talk” to each other: Starting at any state in the class, you eventually get to every other state in the class, and you eventually get back to where you started. This allowed us to propose that particles in spacetime are projections of RCCs of Markov chains [18]. We think of RCCs as arising from a sampled trace chain, so that the communicating class and the particle to which it projects are both results of an observation, and are not taken as an objective reality independent of observation.

This connection is critical. If correct, it entails that properties of particles, such as spin, mass, energy and momentum, are projections of properties of RCCs, an idea we started to explore in our paper “Objects of consciousness” [5]. Here we explore the idea a bit further. To do so, we first introduce the notion of the enhanced chain that is associated to any Markov chain. (Note that in [21] this is called the “space-time” chain; in order to avoid confusion with physical spacetime, we are using the term “enhanced.”) We will then see that harmonic functions of the enhanced chain are identical in form to the quantum wave-functions of free particles. This suggests precise correspondences, which we will describe, between properties of RCCs and the physical properties of position, momentum, time, and energy.

First, some notation. denotes the natural numbers (including 0). Any subset of is considered measurable (we say that has the discrete-algebra). Let be the state space.

Definition: Enhanced Chain. The enhanced chain associated to a Markov chain with kernel

L on state space

E is the Markov chain on the product state space

, with the kernel

given by

where

,

, and

. If the initial measure of the chain on

E is

, then the initial measure of the enhanced chain is

, where

the unit mass at 0:

takes the value 1 for

and is otherwise 0.

Definition: Harmonic function of Markov kernel. Given a Markov kernel P on a state space S, a measurable function g is P-harmonic if it is an eigenfunction of P with eigenvalue 1: for all .

Given a Markov chain, its large-time, or asymptotic behavior can be described in terms of the collection of its

asymptotic sets (intuitively, these are the sets that are measurable for all tail sequences of the chain). A certain general class of chains, including the chains on spaces we consider here, have simple asymptotic behavior: their invariant events consist of a finite number of absorbing sets (these are sets from which the chain never leaves) and each absorbing set itself is partitioned into a finite number of “asymptotic” subsets. We index the absorbing sets with the symbol

and denote the number of partitioning subsets in the

absorbing set by the symbol

. Furthermore, the partitioning subsets of each absorbing set

can be indexed in such a way that, once the chain enters one of them, indexed by say

, then at the next step it moves to the partitioning set with the next higher index

. Then it turns out that there is a correspondence between eigenfunctions of

L and harmonic functions of

Q ( [21], p. 210). Let

where

k is an integer between 1 and

, and

where

is the indicator function of the asymptotic event with index

. Then we have the

Theorem: Harmonic Functions for Enhanced Chains.

is an eigenfunction of

L with eigenvalue

:

Moreover, the function

is

Q-harmonic. The proof is given in [21].

Inserting (35) and (36), the definition (38) becomes

This is identical in form to the wavefunction of a free particle ( [20]

§7.2.3):

where

and

. This leads us to suggest identifying:

,

,

,

, where

. Then the momentum of the particle is

, where

h is Planck’s constant. For a massless particle, its energy is

, where

c is the speed of light, while for a particle of mass

m, its energy is

.

Thus we are identifying

- 1.

A wavefunction of the free particle with a harmonic function g of an enhanced Markov chain of interacting conscious agents;

- 2.

The position basis of the particle with the indicator functions of asymptotic events of the agent dynamics;

- 3.

The position index x with the asymptotic state index ;

- 4.

The time parameter t with the step parameter n;

- 5.

The wavelength and period T with the number of asymptotic events in the asymptotic behavior of the agents; and

- 6.

The momentum p and energy E as functions inversely proportional to .

Note that wavelength and period are identical here, so that in these units the speed of the wave is 1.

Example 1: Q-harmonic Functions and Wavefunctions. Consider the Markovian kernel on four states,

, given by

L has one absorbing set, containing all four states, so

. This absorbing set has 4 asymptotic events, viz., the states

, so

. It has four eigenvalues,

indexed by the integer

k, and four eigenfunctions,

The

Q-harmonic functions are

We can rewrite these in the form

Comparing this with (40) we find that for the corresponding massless spacetime particle has momentum and energy . For , and . For , and . For , and .

In summary, this section proposes that

The momentum and energy of particles in spacetime are projections of the number of asymptotic states of some communicating class of the enhanced dynamics of CAs beyond spacetime.

The position index in spacetime is a projection of an index over the asymptotic states of this CA dynamics.

The time index in spacetime is a projection of the step parameter of this CA dynamics.

We propose a novel understanding of the Heisenberg uncertainty principle, by combining the proposals of this section with our theory of observation based on sampled trace chains. Recall that this principle states that, for position and momentum,

where

denotes the standard deviation in position,

denotes the standard deviation in momentum, and

ℏ is the reduced Planck constant. A similar inequality holds for time and energy.

According to our proposal, momentum is a projection of the number of asymptotic sets in the RCC. To determine this number from a sampled trace chain, we need a long sampled trace; the longer the sample, the more accurate our estimate of the details of the asymptotic sets. But the position is a projection of the current asymptotic of the chain, which requires a short sample: ideally, just one step. Thus the conditions required for accurate measurement of position contradict the conditions required for accurate measurement of momentum. This contradiction, then, is proposed as the source of the uncertainty relation in the theory of conscious agents. Similar remarks hold for time and energy.

6. The Spacetime Interface: Mass and Spin

In the last section we showed how the energy and momentum of a free particle in spacetime can be the projection of a Markovian RCC, and are intimately connected to the number of asymptotic sets in the RCC. What about mass and spin? Can these also be seen as projections of properties of an RCC? That is the topic of this section.

We begin by modifying the RCC of Example 1 from the last section.

Example 2: Q-harmonic Functions and Wavefunctions. Consider the Markovian kernel on eight states,

, given by

L has one absorbing set, containing all 8 states, so

. This absorbing set has 4 asymptotic events, viz., the 4 sets of states

, so

. It has exactly the same form for the 4 eigenvalues, eigenfunctions, Q-harmonic functions, and wavefunctions as Example 1.

So what is the difference? A key difference is that the entropy rate of Example 1 is 0, but the entropy rate of Example 2 is greater than 0. We propose that

and thus that Examples 1 and 2 differ in the masses of their particles.

We briefly review the definition of entropy rate [22]. A stochastic process

is a sequence of random variables. If

is generated by a stationary Markov chain, then asymptotically the joint entropy

grows linearly with

N at rate

, which is called the entropy rate of the process. An

irreducible, or

ergodic chain is one for which there is a non-zero probability of going from any given state to any other state in some finite number of steps. Such chains possess a unique stationary measure.The entropy rate of an ergodic Markov chain with stationary measure

and transition probability

P is given by

This says that the entropy rate of P is the weighted sum of the entropies of the probability measures constituting its rows, where the weighting is the stationary measure of the Markov kernel. Notice that a row of a Markov kernel with a single unit entry (and therefore all other entries 0) has zero entropy. So a Markov kernel all of whose rows are of this type will have a 0 entropy rate. Such kernels constitute the periodic kernels [18]: periodic chains have 0 entropy rate.

So if the physical mass of a particle is a projection of the entropy rate of a communicating class of CAs, then Examples 1 and 2 differ in the mass of the particle they represent. We can continue, by making similar examples whose 4 asymptotic events each contain more and more states, and thus potentially greater entropy rates, i.e., greater masses. As we said before, we think of this communicating class as arising from a sampled trace chain, so that the communicating class and the particle to which it projects are both results of an observation, and are not taken as an objective reality independent of observation.

Why should we posit this connection between mass and entropy rate? Intuitively, the more internal interactions an object has, the less it is affected by outside influences, i.e., the greater its “inertia.” Similarly, the more connections a given state of a communicating class has with its other states, the wider influence it has with other states. If a state has only one connection, then its row in the Markov kernel is all 0’s except for a single 1: the entropy of its row is 0. The more connections a state has, i.e., the more nonzero entries it has in its row, the greater its entropy will tend to be. Another way to say this is that the entropy rate is the minimum expected codeword length per symbol of a stationary stochastic process [22]. Greater entropy rate thus leads to “weightier” descriptions.

There is a physical analogy giving insight into how an intrinsic ‘mass’ of a particle, can arise from the sampling and trace-chaining on a Markovian dynamic by looking at the Langevin Equation for Brownian motion of a pollen particle [37,38]. Considering n interacting entities, one can ‘trace’ the coupled motion of the entire system only on entities, the ignored entities leaving their trace on the resulting dynamics of fewer entities. For the Brownian particle, m is the pollen grain under consideration, incessantly bombarded by and interacting with its environmental molecules. In our language, we can say that the Brownian particles ‘observes’ (i.e. samples) the whole system via the ‘trace-chaining’ operation. The equation of motion for the Brownian particle is then described by the Langevin equation, which is typically characterized by three properties: (i) a fluctuation term representing the initial conditions of the neglected degrees of motion; (ii) a ‘damping’ or dissipation term representing ‘friction’ or viscous drag in the soup of the entities of the environmental bath; and (iii) a memory of previous motion, as feedback from sampling i.e. tracing. Statistical mechanics connects the ‘fluctuation’ to ‘dissipation’ and ‘memory’ via the ‘fluctuation-dissipation’ theorem. Friction implies an inertial ‘mass’ experiencing ‘damping’ or resistance as ‘dissipation’ which is nothing but thermodynamic ‘heat’, connected with the loss of information from the whole system onto the Brownian particle: the ‘entropy flow or rate’ within the tracing/sampling processing process. This supports the conjecture of connecting the ‘entropy rate’ of a sampled trace-chaining to the ‘mass’ of a particle.

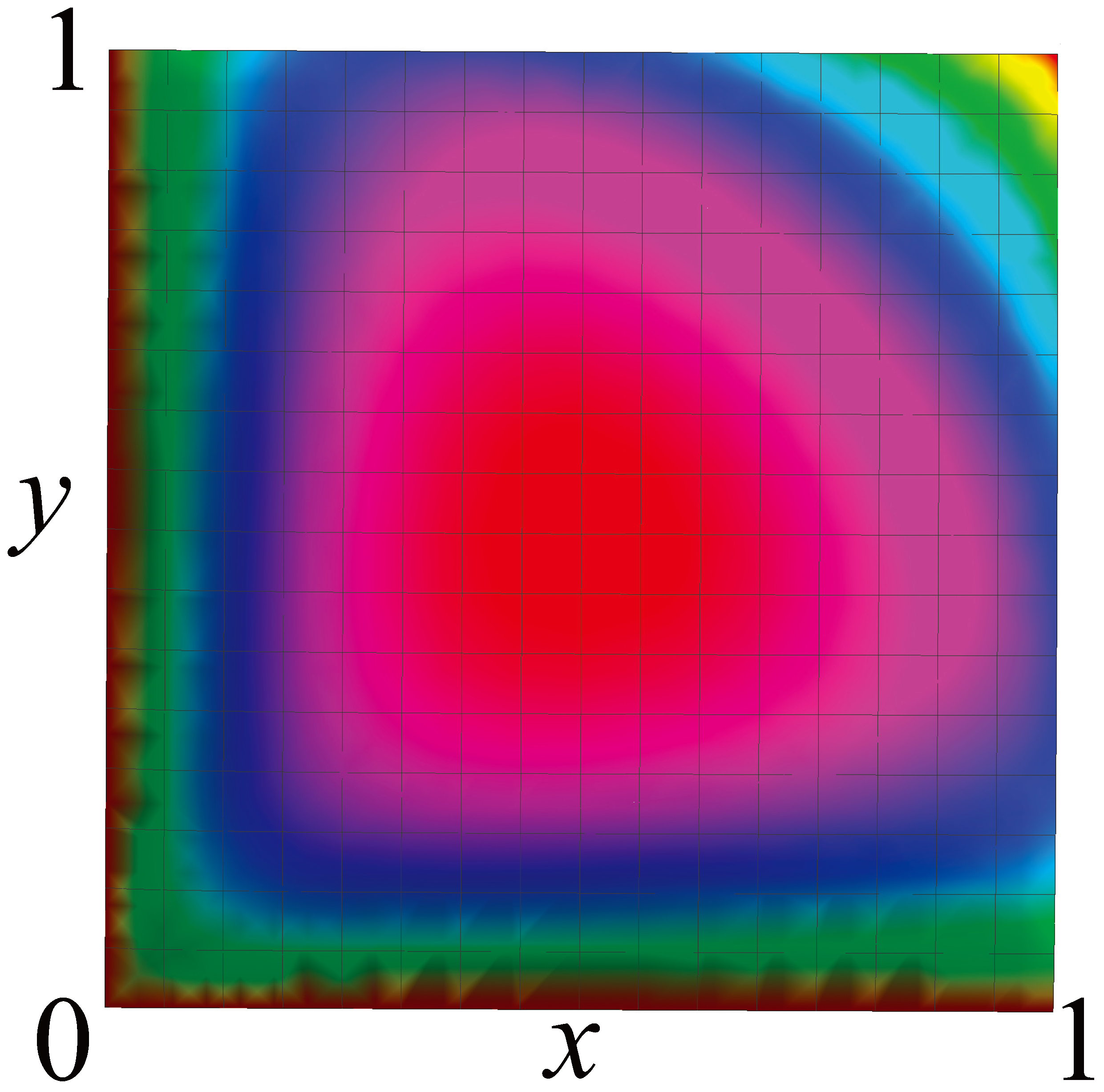

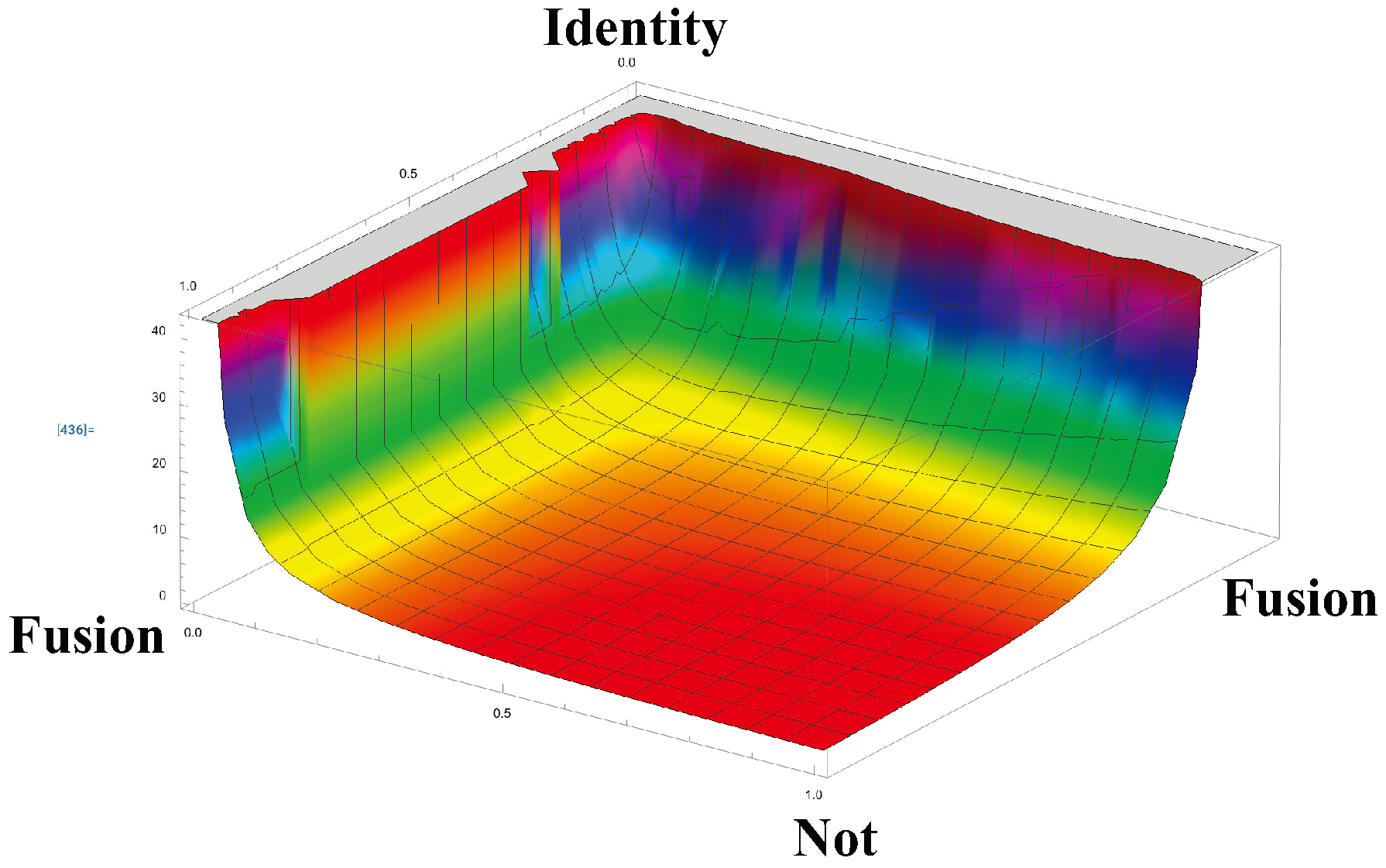

Figure 6 plots the entropy rate for each kernel in the Markov polytope

, the set of all

Markov matrices. The maximum entropy rate in

is 1. The maximum entropy rate for matrices in the Markov polytope

is

. Thus enormous matrices are required to get large masses. For instance, the maximum mass for Markov kernels of dimension

is about 1834, which is roughly the proton-electron mass ratio. Now

is a lot of elementary conscious agents. To compare, the number of particles in the observable universe is roughly

.

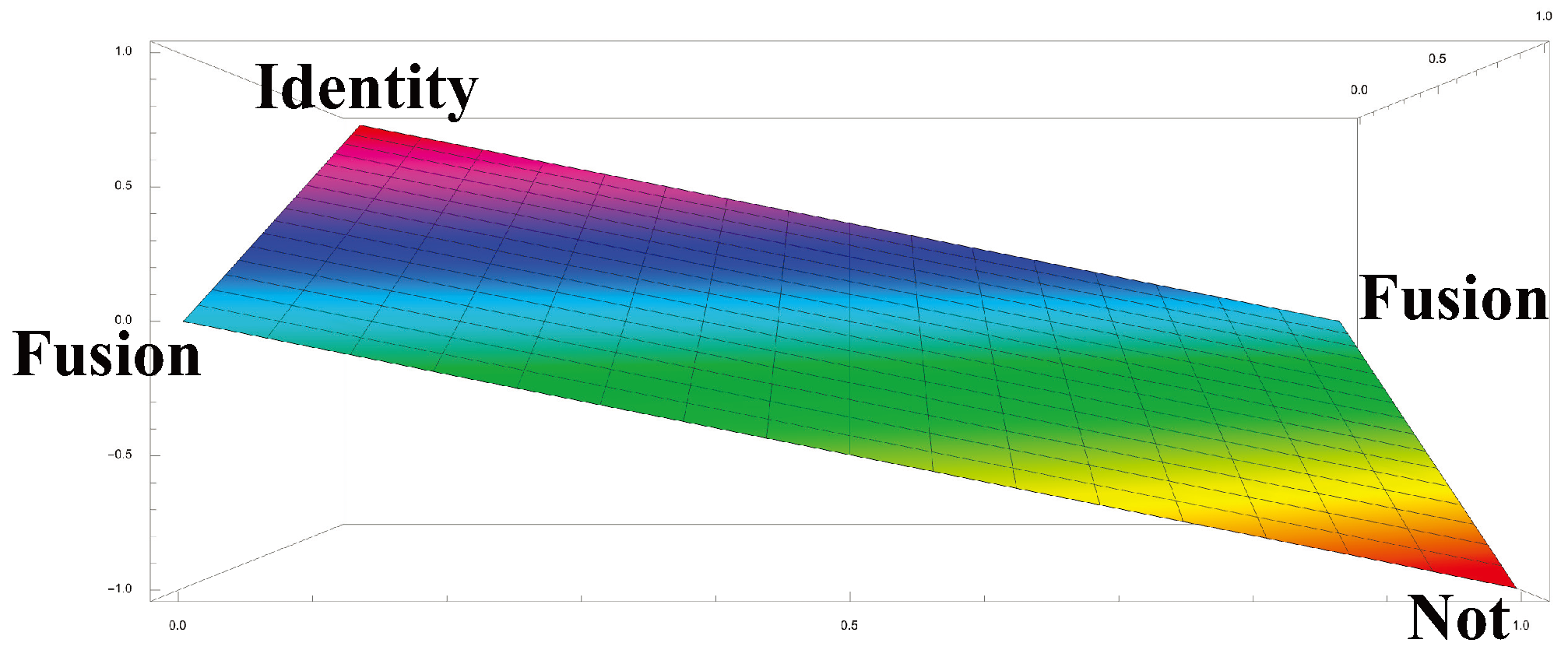

This claimed link between mass and entropy rate of an RCC faces two obvious challenges. First, quantum theory dictates that massless particles have spin 1, with helicities or . (There is the theoretical possibility of a massless spin 2 particle for gravity, but no experimental evidence yet). Second, relativity dictates that massless particles always move at the speed of light. Can entropy rate meet these challenges? Quite nicely, it appears.

To meet the spin challenge, we first need to propose what property of an RCC projects to spin. An obvious candidate is the determinant of the Markov matrix of the RCC, which can take any real value between 1 and

inclusive.

Figure 8 shows the determinants for each matrix in the Markov polytope

. The identity matrix, at the corner labelled

Identity, has determinant 1. The Not matrix

in the corner labelled

Not, has determinant -1. Fusion matrices, which have the form

have deteminant 0, lying on the line between the corners labelled

Fusion, (roughly following the light blue streak). [18] The determinants between this line and the

Identity corner satisfy

, while determinants between this line and the

Not corner satisfy

.

While the determinants can take any real value between 1 and

inclusive, the only possible spin values are 0,

, and

. A natural projection from determinant

d to spin

is

We see from

Figure 6 that the matrices in

with zero entropy rate lie along the lines

and

. However, the only one of these that is irreducible (i.e., has a two-state RCC), is the NOT matrix: a periodic matrix with period 2. All other matrices with zero entropy rate have an absorbing state, so none of them are irreducible. Matrices arbitrarily close to the lines

and

do have a communicating class of two states and an entropy rate close to 0, but never exactly 0. This is reminiscent of neutrino masses, which are close to 0, but not exactly 0. The behavior of these matrices, which contain states that are nearly absorbing, is also reminiscent of neutrino behavior: they rarely interact.

The map is defined not just for matrices of , but also for matrices of , for any n. In each case, the communicating classes of size n that have determinant are periodic with period n, and these are the only matrices with full-size communicating classes having an entropy rate of 0. If n is even, these periodic classes have determinant , whereas if n is odd they have determinant is +1. This may be related to the hyperfine structure of energy states.

Another physical analogy can give insight as to how ‘spin’, or intrinsic angular momentum of a particle, can arise from the sampling of trace-chains in a Markovian dynamic. In the Ising model, which uses magnetic dipoles as a model of interacting ‘spins’, the phase transition from a ‘disordered’ state of magnetization (i.e. randomly distributed spins at high temperature) to a perfectly ‘ordered’ state of magnetization (i.e. aligned spins at low temperature) occurs below a positive, finite temperature (the Curie temperature), but only in dimensions higher than 1. In one dimension the total magnetization or ‘net spin’ continuously and monotonically decreases, as the temperature increases, from 1 (but only at the lowest temperature of absolute zero degrees), to zero at infinite temperature. But in 2- or more dimensions, a ‘disorder-order’ transition takes place at a non-zero temperature, separating a state of total magnetization (perfect spin alignment) from randomly distributed magnetic dipoles (random spin directions).

In statistical mechanics, the thermodynamic variables of a system can be derived from its partition function, which for the experiencing ‘magnetic domain’ of interaction spins has been calculated [39,40,41,42]. Typically, it is the trace of an N-fold product of individual spin-spin transfer matrices and for antisymmetric transfer matrices, it simply turns out to be the power of the highest eigenvalue. In 2- and higher dimensions, where a phase transition is a reality, it is shown to be given by the square root of the determinant of the associated anti-symmetric spin-spin transfer matrix. The interaction between two such magnetic domains can then be described by the product of the corresponding partition functions and (if the interaction between domains is identical in all other aspects) the total partition function is then proportional to the determinant of the associated transfer matrices. Given two magnetic/spin domains where aggregate spins can be all aligned (normalized spin +1) or all anti-aligned (normalized spin -1), this determinant can range between -1 to +1.

Thus ‘interacting spins’ in the Ising model, described by concatenated spin coupling transfer matrices, offers trace of the resultant matrix as a determinant of the associated matrix representing the statistical mechanical ‘partitioning’ between aligned and anti-aligned spin states, supports our conjecture that the determinant of the sampled and trace-chained Markov chain dynamic is a measure, in some sense, of the fundamental ‘spin’ of a particle. We intend to explore this further in our future investigations.

So the proposal that mass is a projection of entropy rate passes the challenge of quantum theory that massless particles have spin 1, with helicities or . How does it do with the challenge of relativity that massless particles travel at the maximum possible speed, the speed of light?

To investigate that challenge, we need to propose a property of Markov kernels that projects to speed. A natural candidate is related to the commute time, between two states of a Markov chain [27]. is the expected time, starting at a, to go to b and return to a. We have , and . This makes look like a distance between a and b, but it is more natural to view as the squared distance, .

We propose:

We briefly review how to compute mean commute times [31]. Let

P be an

ergodic Markovian tp and let

w be its unique stationary measure. Let

W be the

matrix all of whose rows are

w. Set

Then the matrix

M of

expected first passage times, where

is the expected time to start at

i and arrive at

for the first time (with

) is given by

(Recall that, since

P is ergodic, all

.) Then the

expected commute time is given by

The

total expected commute time for a Markov chain governed by

P is then

and has

terms.

Example:

The typical Markov kernel in

is

The domain

E of ergodic

P consists of the interior of the unit square, together with the NOT operator at

. At these points, the stationary measure is

We have

Now (56) and (58) applied to (59) give us the total expected commute time for a Markov chain governed by

P is

Note that in

the total commute time has only one term; for

there will be

terms.

The commute times for Markov chains in

are shown in

Figure 9. The minimum commute time is 2, and is obtained by the Not matrix, the only periodic kernel of period 2 in

.

This example illustrates that when the entropy rate of a CC is 0, the commute times between states of the chain are the shortest possible; i.e., the speed of transition among states of the CC is the maximum speed possible: 1 state per step of the chain. This is achieved by periodic Markov chains, whose entropy rate is 0. Note that such periodic kernels correspond to permutations on the state space that are complete derangements. We make the following conjecture (which holds true in

Figure 9).

Conjecture:

An ergodic Markov chain on n states has a minimal total expected commute time between states if and only if it is periodic with period n.

For periodic CCs, which have zero entropy rate, the expected “speed” of transition between states is maximal, in the sense that the commute time between states is minimal. If we propose that the mass of a particle is a projection of the entropy rate of a CC of CA dynamics, and that the speed of the particle is a projection of the expected speed of transitions between states of that CC, then we satisfy the demand of relativity that massless particles always move at the speed of light, the maximum possible speed. This is encouraging. The proposal that mass is a projection of entropy rate passes the initial challenges posed by quantum theory and relativity.

Suppose, however, that we consider two distinct CCs, say CC1 and CC2, corresponding to two distinct particles, say and . The commute time between any state, i, of CC1 and any state, j, of CC2 is infinite, simply because the probability of getting from i to j is 0. This entails that there is no possible causal interaction between and . In relativistic language we would say that and are separated by a spacelike interval. So clearly we need to generalize our proposal, so that we can also describe particles that are separated by timelike and lightlike intervals.

We still propose that free particles are projections of communicating classes. But particles are not always free. Particles can be bound together, such as when an electron and proton bind to form a hydrogen atom. The strengths of binding vary across combinations of particles. The binding of quarks is so strong that they are said to be “confined.” Quarks are never free particles at normal temperatures, but are always grouped together into hadrons, such as protons, neutrons, and pions. This property of quarks is called “quark confinement.” Only if the temperature exceeds the Hagedorn temperature, about K, do quarks become free, forming a quark-gluon plasma.

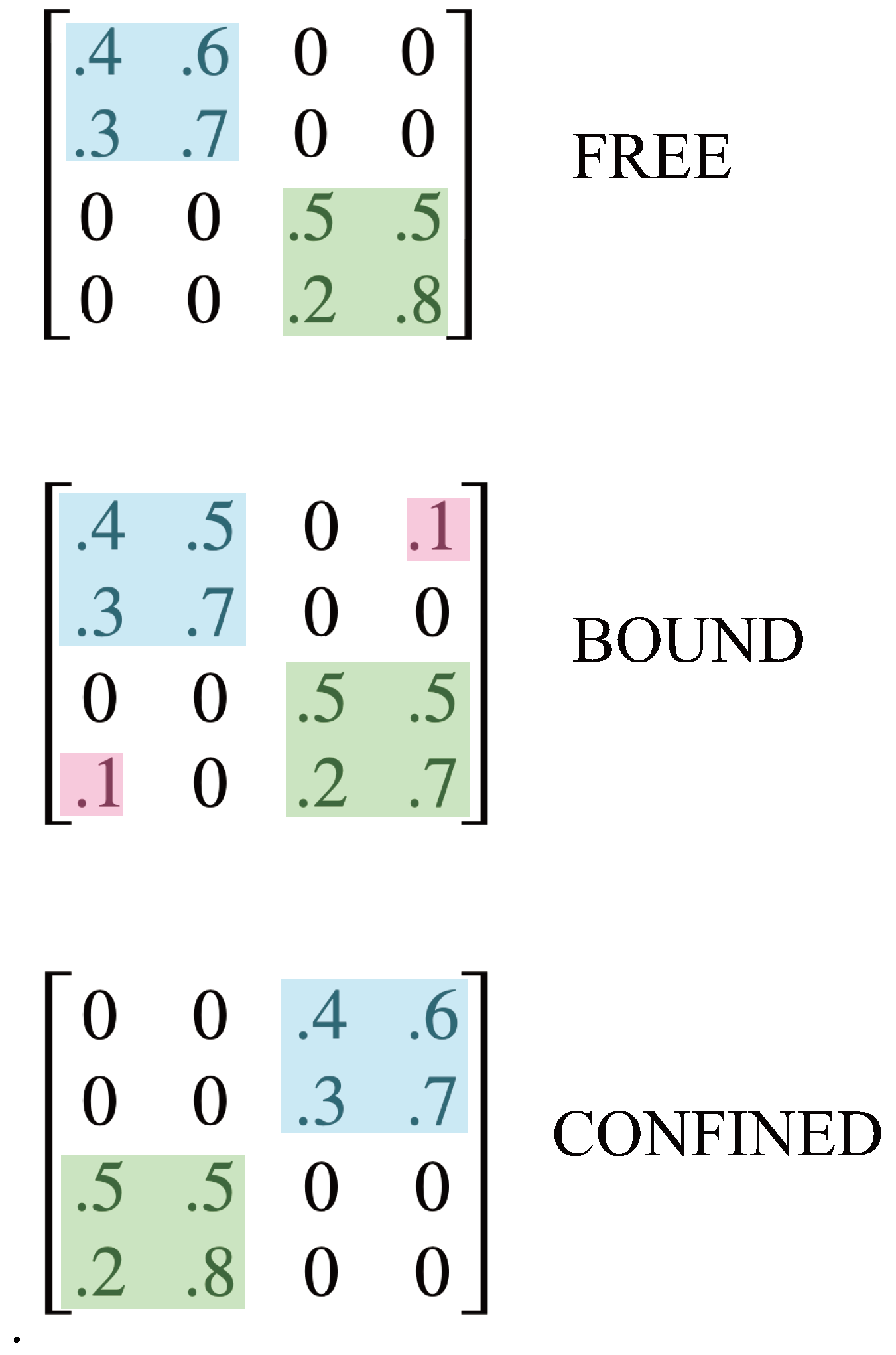

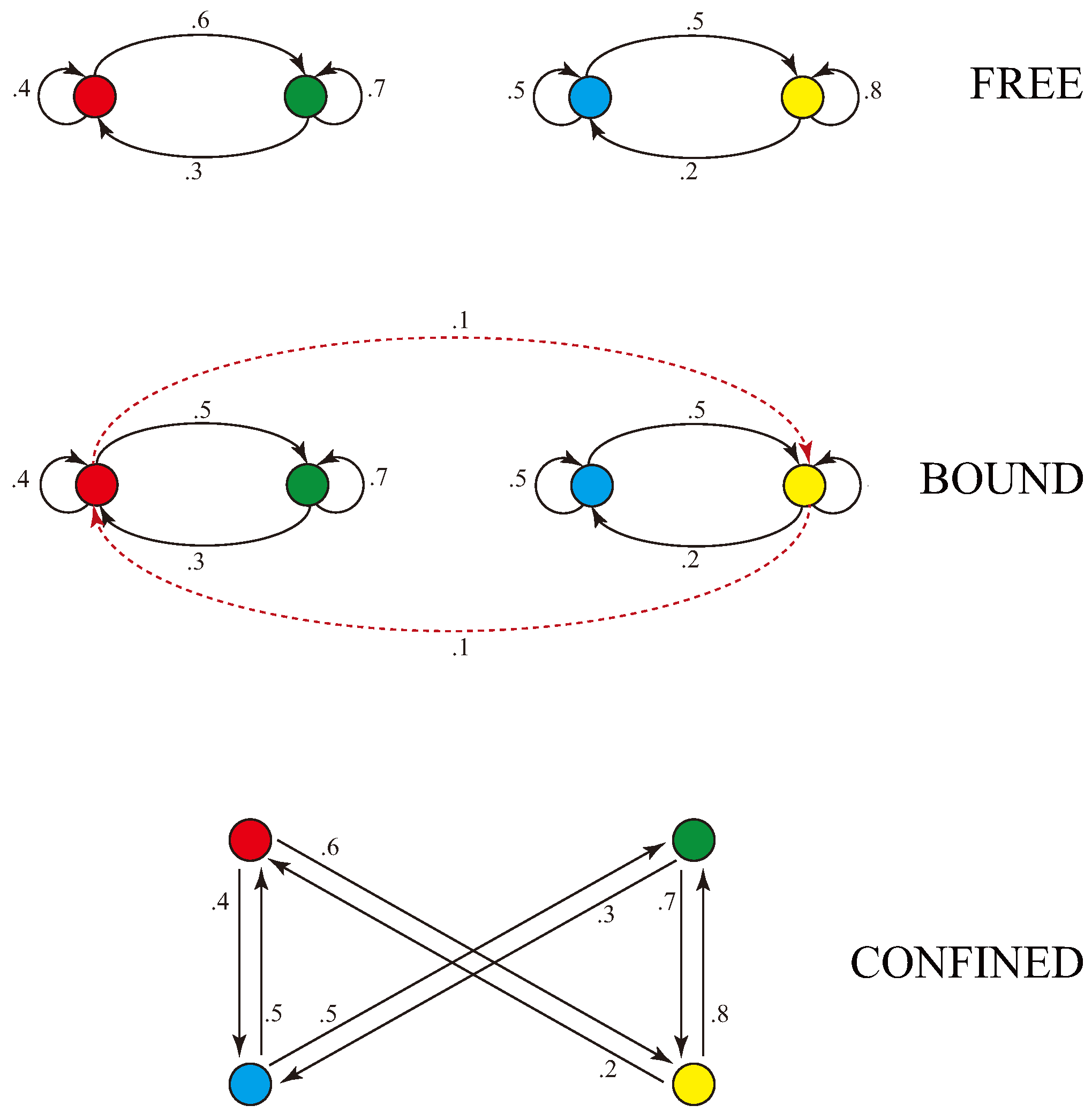

So the relationship between particles can vary on a continuum from free to bound to confined. We can model this variation using Markovian kernels, as illustrated in

Figure 11. The first kernel has two communicating classes, one highlighted in blue and one in green. There is no interaction between the two classes, and so this models free particles. The mass of the blue class is the entropy rate of states 1 and 2; the mass of the green is the entropy rate of states 3 and 4.

The second kernel in the figure has just one communicating class, which consists of all four states of the kernel. However, this kernel is almost identical to the kernel above it, with just the addition of small interaction terms, highlighted in red. So this models the case where the two particles are weakly bound and represent, together, a compound particle. The binding strength between particles can be varied from weak to strong, depending on the size of the interaction terms. We can quantify this by dividing the entropy rate of states 1 and 2 into two parts: the kinetic entropy rate (), which is the part colored blue and corresponds to the mass of the particle, and the potential entropy rate (), corresponding to the binding mass in red. Similarly for states 3 and 4 in green. We will say, suggestively, that a particle is “free” if , “bound” if , and “confined” otherwise.

With stronger binding, particles are confined, as shown in the third kernel. In this kernel the dominant terms are the interaction terms, highlighted in blue and green. In this case the entropy rate of states 1 and 2 is primarily binding mass, as is the entropy rate of states 3 and 4. The mass of the three valence quarks of a proton constitute about 2% of the mass of the proton. We can model this by placing 98% of the entropy rate in the bindings.

A way to model bound particles is to generalize from CCs to

communities, and to propose that bound particles in spacetime are projections of communities in Markov dynamics of CAs. The notion of communities naturally arises as follows. To each Markov matrix we can associate a weighted, directed graph: Each node of the graph represents a state of the Markov chain, and each directed link from a node

A to a node

B is weighted by the corresponding transition probability from the state represented by

A to the state represented by

B [30]. The graph associated to a Markov chain is called its

diagram.

Figure 10 shows the diagram associated to each matrix of

Figure 10. Red, green, blue and yellow nodes denote, respectively, states 1, 2, 3, and 4 of the matrix.

The diagram labelled “free” is a disconnected graph, with two connected components that correspond to the two communicating classes.

The diagram labelled “bound” is a connected graph, and corresponds to one communicating class involving all four states. However it has a natural division into two communities, one composed of the red and green states, and another composed of the blue and yellow states. The community of red and green states is highly interconnected: most of its transition probabilities stay within the group. Similarly, the community of blue and yellow states is highly interconnected. But the connection between the two communities is weak, accounting for a small fraction of the transition probabilities. There are various approaches to segregating a graph into communities, including infomap, spectral clustering, and modularity maximization [30].