Submitted: 23 October 2024

Posted: 24 October 2024

Abstract

Keywords:

1. Introduction

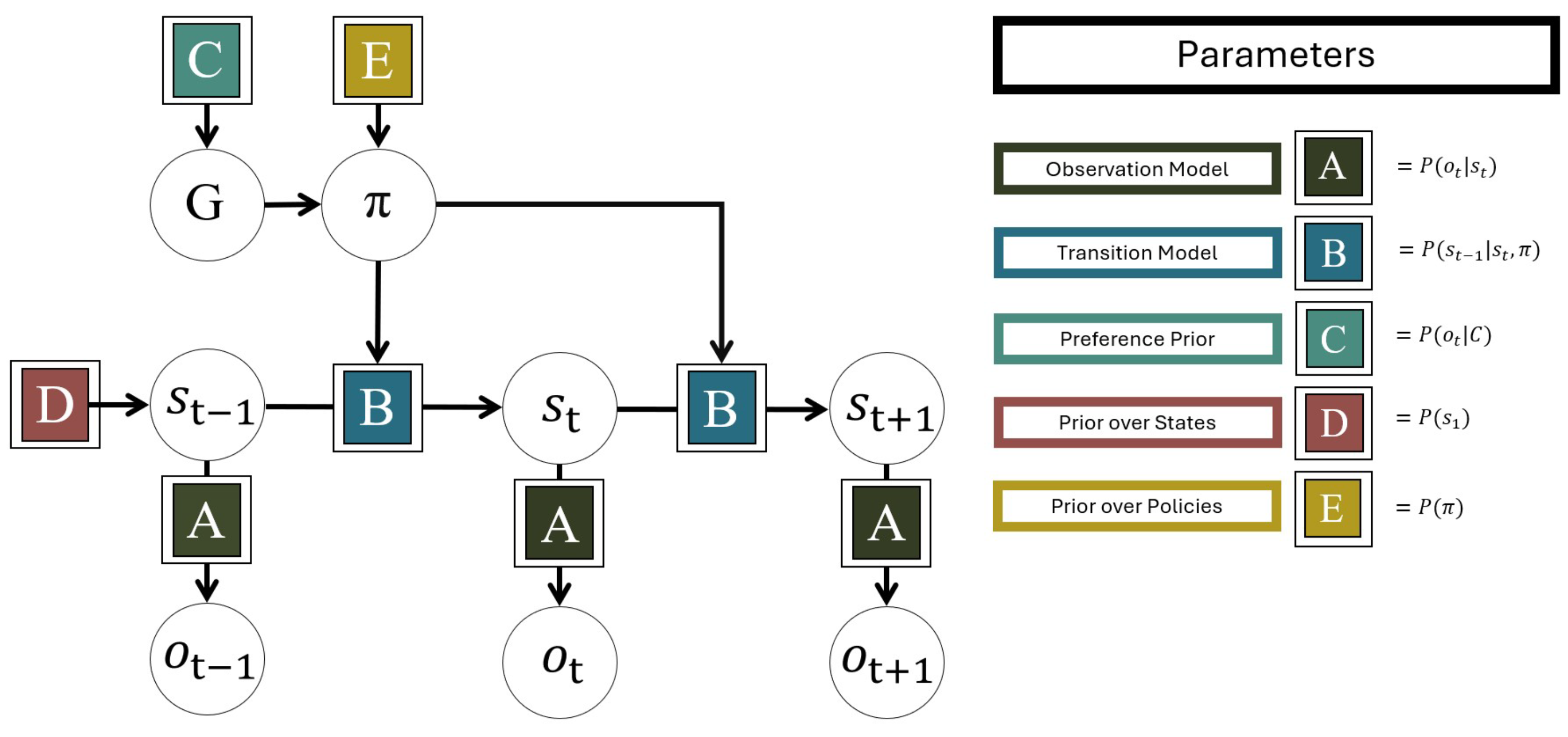

1.1. Active Inference and the Free Energy Principle

1.2. Multi-Agent and Collective Active Inference

2. Materials and Methods

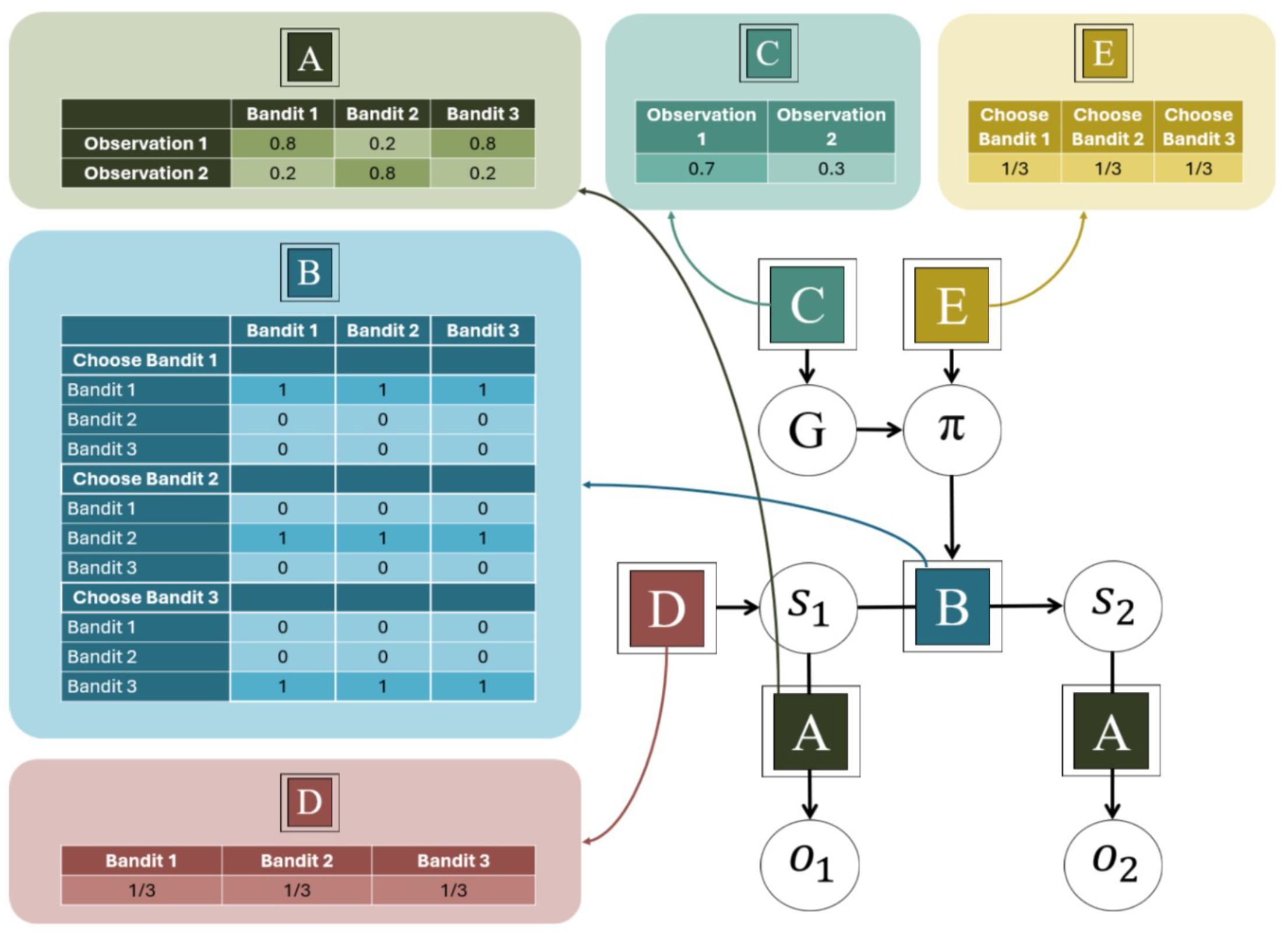

2.1. Active Inference and Multi-Armed Bandits

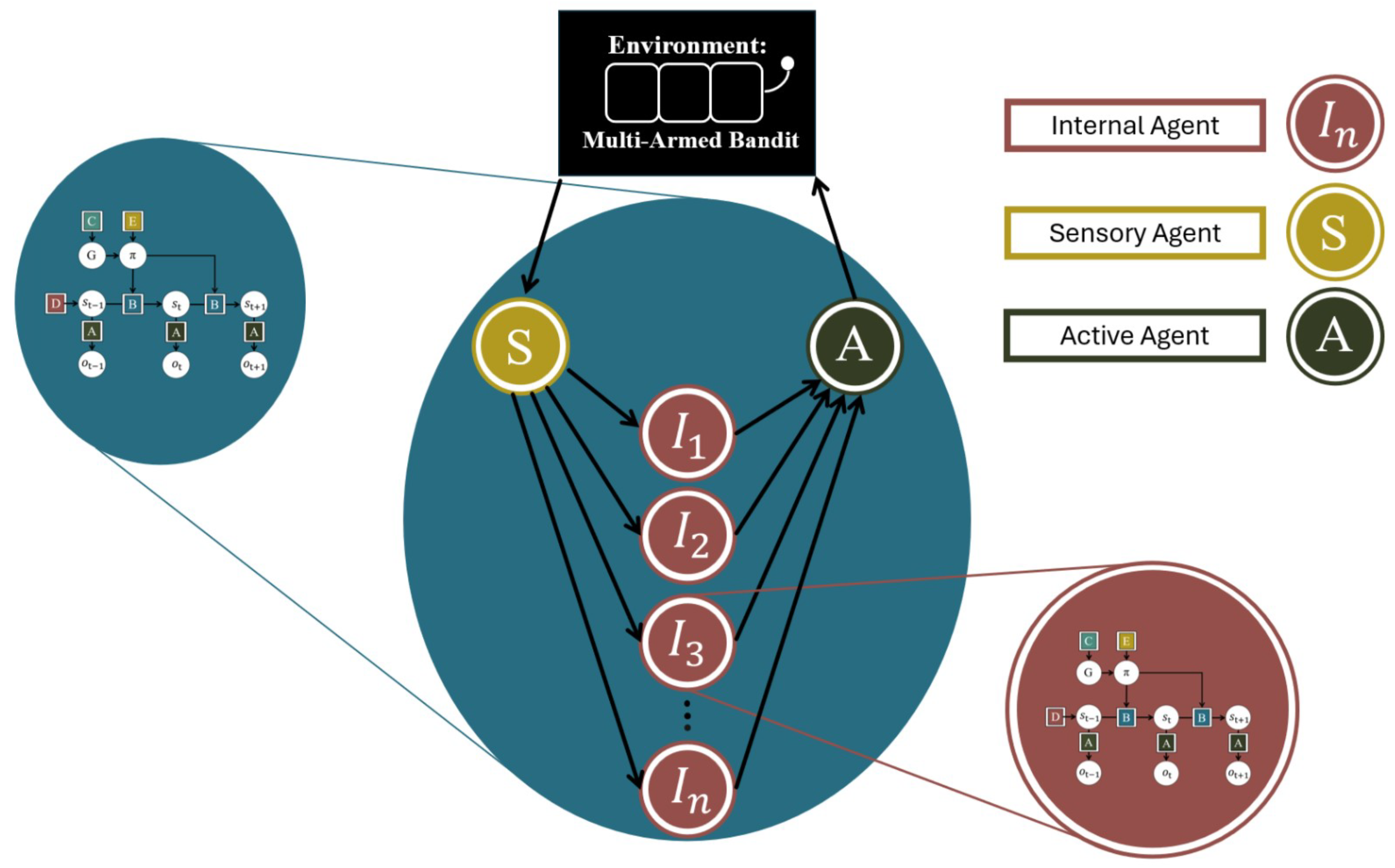

2.2. Cognitive Modelling for Emergent Agents

2.3. Simulation Experiments

3. Results

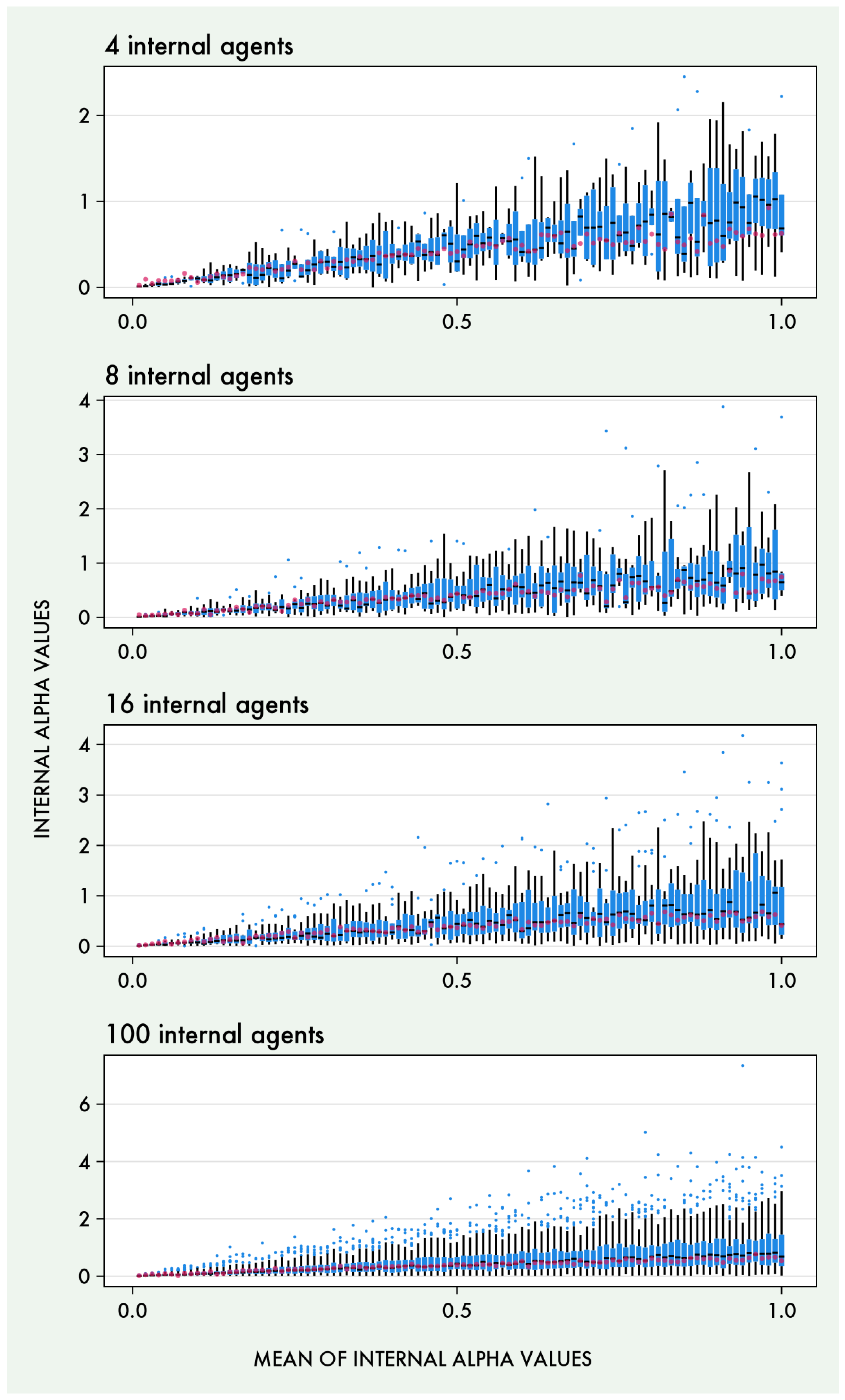

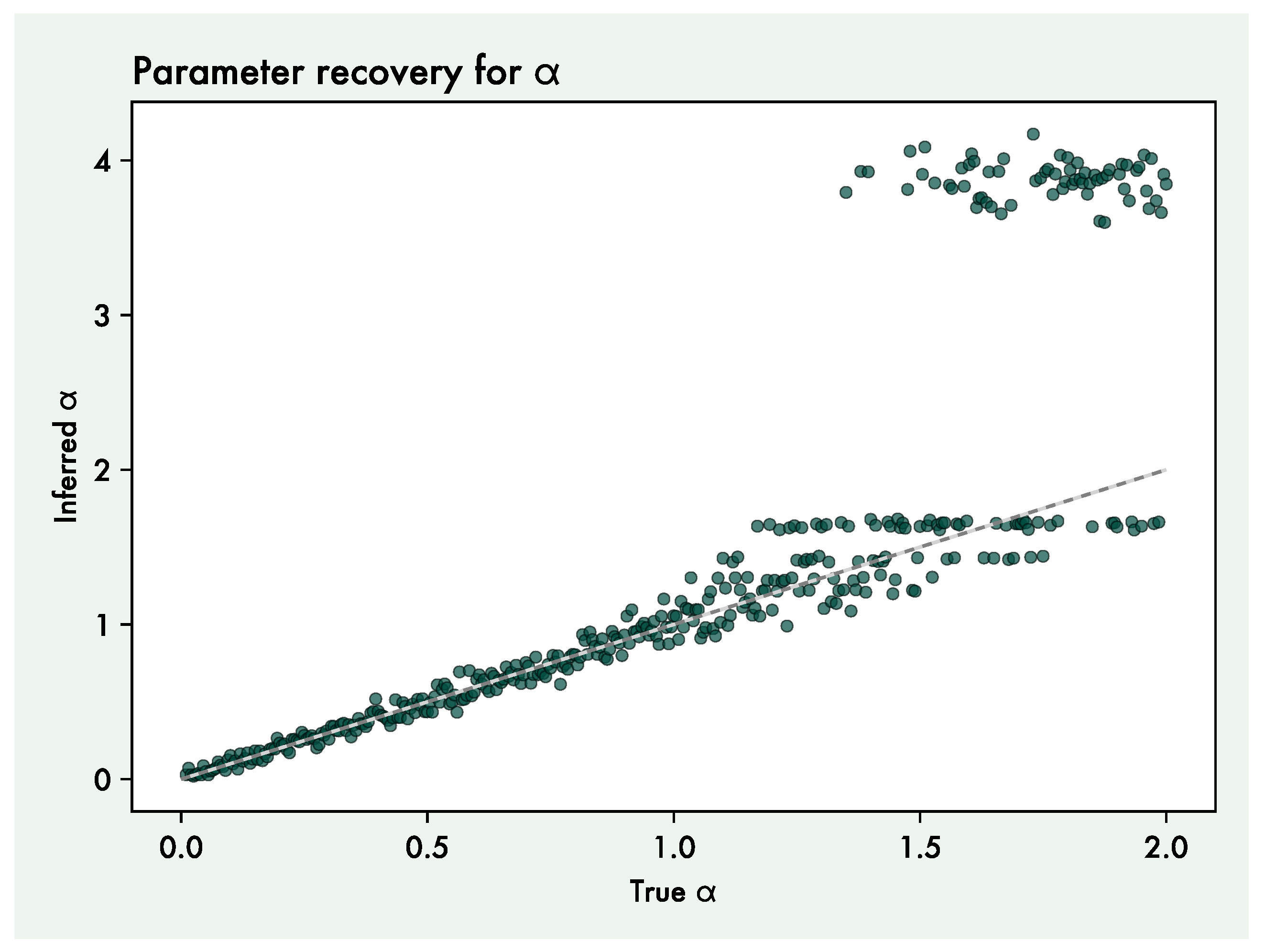

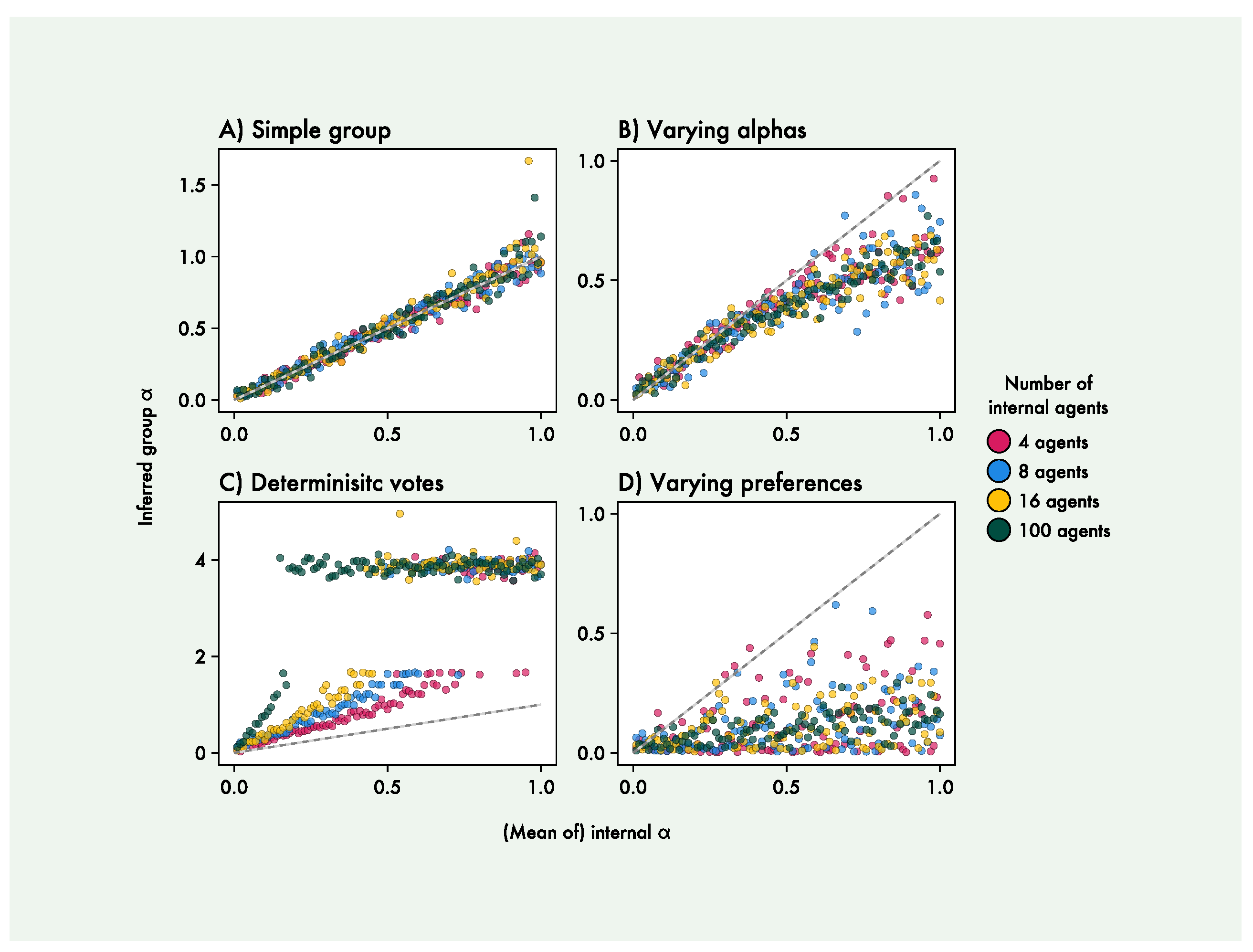

3.1. Parameter Recovery

3.2. Simulation Experiments

4. Discussion

4.1. Applications and Extensions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| FEP | Free Energy Principle |
| POMDP | Partially Observed Markov Decision Process |
| MAB | Multi-Armed Bandit task |

Appendix A

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).