Introduction

Hearing aid users frequently report the substantial mental effort required to communicate in noisy environments [1,2]. Prolonged exertion of listening effort can lead to significant fatigue and may result in disengagement from conversations [3,4,5]. Regular exposure to these challenges increases the risk of developing anxiety due to fears of being misunderstood and may discourage social interaction [6,7], leading to isolation [8], which in turn can accelerate cognitive decline and dementia [9,10]. The hearing-aid industry has invested considerable effort in developing advanced technologies to facilitate communication in difficult acoustic environments [11,12]. The present study aims to assess the effectiveness of directional microphones in improving intelligibility and reducing listening effort within a realistic noisy scenario.

Directional microphones are designed to improve the signal-to-noise ratio (SNR) in loud acoustic scenarios with multiple sound sources, such as noisy cafeterias, restaurants and shopping centres [13]. Directional microphones enhance speech understanding by focusing on sounds coming from a specific direction, typically from in front of the user, while attenuating sounds from other directions. This is achieved through the use of two or more microphones that capture sound at different locations on the hearing aid. The device then processes the differences in timing and intensity between these signals to create a directional response, thereby helping users focus on conversations by minimising interference from surrounding noise sources [14]. Extensive literature demonstrates that directional microphones improve speech-in-noise intelligibility, particularly when the noise originates from directions other than the front of the listener [14,15,16,17].
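As a simplified illustration of how timing differences between two microphones produce a directional response, the sketch below computes the polar magnitude response of a first-order delay-and-subtract (differential) array. It is not the adaptive binaural beamformer evaluated in this study; the 12 mm microphone spacing, the 1 kHz test frequency and the choice of an internal delay equal to the acoustic travel time between the microphones (which yields a cardioid with a null directly behind the listener) are all illustrative assumptions.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s, approximate speed of sound in air at room temperature


def differential_response(theta_deg, freq_hz, spacing_m, internal_delay_s):
    """Magnitude response of a two-microphone delay-and-subtract array.

    A plane wave from angle theta (0 deg = front) reaches the rear microphone
    spacing*cos(theta)/c seconds after the front one. The rear signal is then
    delayed by internal_delay_s and subtracted from the front signal, so the
    output transfer function is 1 - exp(-j * omega * total_delay).
    """
    omega = 2 * math.pi * freq_hz
    acoustic_delay = spacing_m * math.cos(math.radians(theta_deg)) / SPEED_OF_SOUND
    total_delay = internal_delay_s + acoustic_delay
    return abs(1 - cmath.exp(-1j * omega * total_delay))


# Hypothetical parameters: 12 mm spacing, internal delay = d/c (cardioid pattern).
spacing = 0.012
tau = spacing / SPEED_OF_SOUND
for angle in (0, 90, 180):
    level = differential_response(angle, 1000.0, spacing, tau)
    print(f"{angle:3d} deg: {level:.3f}")
```

Sounds from the front pass with the largest gain, sounds from the side are attenuated, and sounds from directly behind are cancelled; this is the basic mechanism by which directional processing improves the SNR when noise arrives from directions other than the front.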

The Ease of Language Understanding (ELU) model is a theoretical framework that explains listening effort by describing how individuals process spoken language in various conditions [18,19,20]. According to the model, when auditory input matches the brain’s stored linguistic representations, understanding occurs effortlessly and automatically. However, when the input is degraded or unclear, such as in noisy environments or with hearing loss, the brain must dedicate additional cognitive resources, such as working memory, to reconstruct the message. This increased cognitive demand is perceived as listening effort. The ELU model therefore advocates a comprehensive assessment of hearing aid benefits that considers not only speech-in-noise performance but also the cognitive resources engaged in speech understanding [18,19,20].

Listening effort can be measured using various methods, each providing unique insights into the cognitive demands of auditory processing [1].

Behavioural measures, such as the dual-task paradigm, assess listening effort by requiring participants to perform a primary listening task alongside a secondary task. Performance on the secondary task reflects the cognitive resources allocated to listening, with poorer performance indicating greater listening effort [21,22].

Physiological measures include techniques such as electroencephalography (EEG), pupillometry, and skin conductance. EEG is used to measure listening effort by analysing brain activity patterns, such as changes in neural oscillations, that reflect the cognitive load associated with processing auditory information in challenging acoustic environments [23,24,25]. Pupillometry, which tracks changes in pupil size, is another widely used measure, with larger pupil dilation indicating greater listening effort [24,26,27,28]. Skin conductance measures changes in sweat gland activity; it provides an index of autonomic nervous system activation and is used to gauge stress and cognitive load during listening tasks [29,30].

Self-reported measures involve subjective ratings where individuals assess their own perceived listening effort, typically using scales or questionnaires. These self-assessments provide valuable insights into personal experiences of listening difficulty, complementing objective measures [31,32,33].

Previous studies investigating the effects of directional microphones on listening effort have often examined them together with other signal processing algorithms aimed at selectively reducing noise components [34]. Recent literature presents mixed findings, highlighting the complexity of these technologies across different listening environments. Hornsby (2013) found no reduction in listening effort or mental fatigue when using directional microphones and noise reduction in hearing-impaired adults at SNRs producing 75% intelligibility in speech-shaped cafeteria babble [4]. Wu et al. (2014) examined hearing aid amplification and directional technology in two dual-task paradigms and found that, although speech recognition improved, listening effort did not decrease in older adults [35]. This contrasts with findings in younger populations, a difference likely attributable to age-related declines in cognitive processing that make older adults less responsive to hearing aid technology in complex environments, where listening effort remains high despite improved speech recognition. Desjardins (2016) explored the individual and combined effects of noise reduction and directional microphones at SNRs producing 50% intelligibility, showing that listening effort was reduced with directional microphones alone or in combination with noise reduction, but not with noise reduction alone [16]. Bernarding et al. (2017) found that, relative to omnidirectional microphones, directional microphones and noise reduction enhanced intelligibility and reduced listening effort in 6-talker babble at SNRs producing 50% intelligibility, measured using self-reports and EEG biomarkers in the 7.68 Hz frequency band (alpha-theta border) [33]. Winneke et al. (2020) assessed wide versus narrow directional microphones in diffuse cafeteria noise and found that narrow directional microphones reduced both subjective listening effort and alpha power in neurophysiological measures [36]. These studies collectively suggest that while directional microphones and noise reduction can lower listening effort, their benefits are context-dependent and require further investigation in real-world listening environments.

This paper investigates the isolated effect of an adaptive binaural beamformer in a commercially available hearing aid on speech-in-noise intelligibility and listening effort. The study was conducted in a realistic Ambisonics cafeteria noise environment and used behavioural measures via a dual task, neurophysiological measures based on alpha power, and self-reported measures.

Methods

Ethics

The study was conducted at the National Acoustic Laboratories (NAL, Sydney, Australia) following methodologies in accordance with the Ethical Principles of the World Medical Association (Declaration of Helsinki) for medical research involving human subjects. The study protocols were approved by the Hearing Australia Human Research Ethics Committee (Ref. AHHREC2019-12).

Participants

Potential candidates for the study were recruited from the NAL Research Participants Database (a registry of individuals who consented to be invited to participate in NAL research) and from among clients of Hearing Australia (a government-funded hearing service provider).

The study involved 20 participants (9 females, 19–81 years old, mean ± SD = 69.0 ± 18.8 years old), who met the five inclusion criteria: (1) age >18 years, (2) native English speakers, (3) absence of cognitive impairment, indicated by scoring above 85% on the Montreal Cognitive Assessment (MoCA) [37], (4) more than 2 years of bilateral hearing aid use, and (5) bilateral downward-sloping hearing loss characterised by ≥30 dB hearing loss at 500 Hz, ≤100 dB hearing loss at 3000 Hz, steepness ≤20 dB/oct, and symmetry differences ≤15 dB between the left and right 4-frequency average hearing loss (500, 1000, 2000, and 4000 Hz). Participants were compensated for their time at the conclusion of the study.
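The audiometric part of inclusion criterion (5) can be expressed as a simple check on each ear’s audiogram. The sketch below is only illustrative: the audiograms are hypothetical, and because the text does not say how steepness was computed, the function `max_slope_db_per_octave` assumes it is the largest threshold increase per octave between adjacent tested frequencies.

```python
import math

FOUR_FA_FREQS = (500, 1000, 2000, 4000)


def four_freq_average(thresholds):
    """4-frequency average hearing loss (dB HL) at 500, 1000, 2000 and 4000 Hz."""
    return sum(thresholds[f] for f in FOUR_FA_FREQS) / len(FOUR_FA_FREQS)


def max_slope_db_per_octave(thresholds):
    """Largest threshold increase per octave between adjacent tested frequencies.

    ASSUMPTION: the study does not define how steepness was computed; this is
    one plausible definition, used for illustration only.
    """
    freqs = sorted(thresholds)
    return max((thresholds[hi] - thresholds[lo]) / math.log2(hi / lo)
               for lo, hi in zip(freqs, freqs[1:]))


def meets_audiometric_criteria(left, right):
    """Criterion (5): >=30 dB at 500 Hz, <=100 dB at 3000 Hz, <=20 dB/oct, 4FA asymmetry <=15 dB."""
    for ear in (left, right):
        if ear[500] < 30 or ear[3000] > 100:
            return False
        if max_slope_db_per_octave(ear) > 20:
            return False
    return abs(four_freq_average(left) - four_freq_average(right)) <= 15


# Hypothetical audiograms (dB HL), for illustration only.
left_ear = {250: 25, 500: 30, 1000: 40, 2000: 50, 3000: 55, 4000: 60, 6000: 65, 8000: 70}
right_ear = {f: t + 5 for f, t in left_ear.items()}
print(four_freq_average(left_ear), four_freq_average(right_ear))
print(meets_audiometric_criteria(left_ear, right_ear))
```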

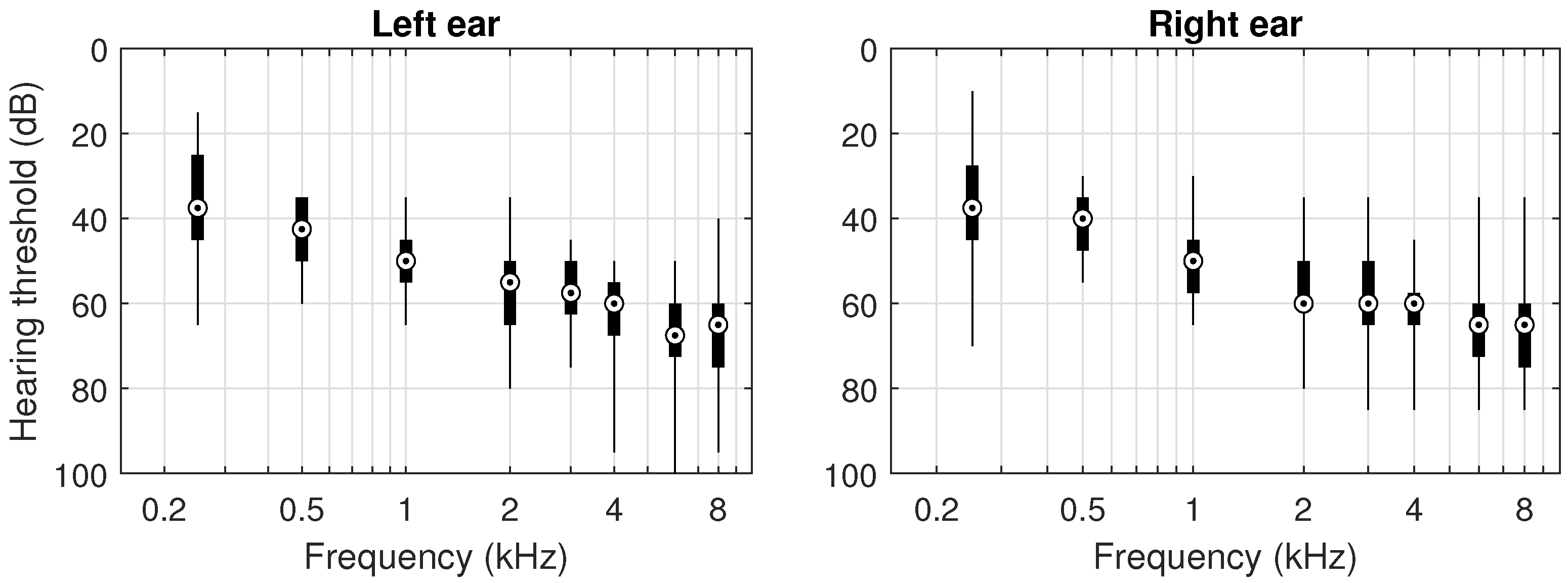

Air-conduction pure-tone audiometry was conducted using an AC40 audiometer (Interacoustics A/S, Middelfart, Denmark).

Figure 1 shows the quartile distributions of the participants’ pure-tone hearing thresholds at frequencies from 250 to 8000 Hz. Individual participant characteristics, including age, gender, first language, years of hearing aid use, MoCA score, and pure-tone audiometric thresholds at 250, 500, 1000, 2000, 3000, 4000, 6000, and 8000 Hz, are detailed in Section 1 of Appendix A in the online Supplementary Materials.

Dual Task Paradigm

Listening effort was measured via a dual-task paradigm, in which participants performed two tasks simultaneously. The primary task involved repeating a sentence presented in background noise. This noise consisted of realistic cafeteria sounds obtained from the Ambisonics Recording of Typical Environments (ARTE) database [38], presented at 65 dB sound pressure level (SPL) from an array of 41 loudspeakers arranged spherically in five rows. Additionally, two distractors were positioned at ±67° azimuth to evaluate how effectively the adaptive binaural beamformer suppressed nearby noise sources. These distractors were Australian female talkers delivering speech segments from real conversations, each presented at 65 dB SPL. The total background noise level was therefore approximately 70 dB SPL, the incoherent sum of three 65 dB SPL sources.
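The approximate 70 dB SPL total follows from adding the three sources on an energy basis. The sketch below assumes the cafeteria noise and the two distractors are mutually uncorrelated, so their intensities (not their decibel values) add.

```python
import math


def incoherent_sum_db(levels_db):
    """Combine uncorrelated sources: L_total = 10 * log10(sum(10 ** (L_i / 10)))."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))


cafeteria_spl = 65.0            # ARTE cafeteria noise, dB SPL
distractor_spl = [65.0, 65.0]   # two distractor talkers at +/-67 deg, dB SPL
total = incoherent_sum_db([cafeteria_spl] + distractor_spl)
print(f"{total:.1f} dB SPL")
```

Three equal uncorrelated sources raise the level by 10·log10(3), about 4.8 dB, above a single source.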

The target speech was the Australian version of the Matrix test [39]. This test uses a closed set of 10 words for each of the categories Name + Verb + Quantity + Adjective + Object to form sentences with identical syntax but unpredictable content (e.g., Peter likes six red toys). Words were 500 ms long with a 100 ms interval between them and were delivered from a loudspeaker in front of the participant. The speech level was adjusted for each participant to the SNRs required to achieve 80% and 95% intelligibility in an aided condition, so that all participants were tested at the same intelligibility levels (speech reception thresholds, SRTs). The SRT-80 and SRT-95 were estimated for each participant from a psychometric function fitted to intelligibility scores over a range of SNRs from +15 dB to -15 dB. Detailed methodologies for estimating these SRTs are provided in Section 2 of Appendix A in the online Supplementary Materials, which also lists the individual SRTs of each participant. The SRT-80 and SRT-95 averaged across participants were +0.1 dB and +4.6 dB, respectively.
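The idea of reading SRT-80 and SRT-95 off a fitted psychometric function can be sketched as follows. This assumes a two-parameter logistic function and a brute-force least-squares fit; the study’s actual fitting procedure is described in its Appendix A and may differ. The scores below are synthetic, generated from a known curve, and are not study data.

```python
import math


def logistic(snr_db, srt50_db, slope):
    """Proportion of words correct at a given SNR (logistic psychometric function)."""
    return 1.0 / (1.0 + math.exp(-slope * (snr_db - srt50_db)))


def snr_for_target(p_target, srt50_db, slope):
    """Invert the logistic: SNR at which the proportion correct equals p_target."""
    return srt50_db + math.log(p_target / (1.0 - p_target)) / slope


def fit_logistic(snrs, scores):
    """Least-squares fit by brute-force grid search (illustrative, not efficient)."""
    best = None
    for i in range(-200, 201):            # SRT-50 candidates: -20.0 ... +20.0 dB
        srt50 = i * 0.1
        for j in range(1, 101):           # slope candidates: 0.02 ... 2.00 per dB
            slope = j * 0.02
            err = sum((logistic(s, srt50, slope) - p) ** 2 for s, p in zip(snrs, scores))
            if best is None or err < best[0]:
                best = (err, srt50, slope)
    return best[1], best[2]


# Synthetic scores from a known curve (SRT-50 = -4 dB, slope = 0.4 per dB),
# so the fit should recover those values. Hypothetical, not study data.
snrs = [-15, -10, -5, 0, 5, 10, 15]
scores = [logistic(s, -4.0, 0.4) for s in snrs]

srt50, slope = fit_logistic(snrs, scores)
srt80 = snr_for_target(0.80, srt50, slope)
srt95 = snr_for_target(0.95, srt50, slope)
print(f"SRT-80 = {srt80:+.1f} dB, SRT-95 = {srt95:+.1f} dB")
```

In practice a gradient-based or maximum-likelihood fit would replace the grid search; the inversion step is the same.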

The secondary task involved a visual component triggered by the auditory stimulus of the primary task. Two large vertical rectangles were projected on an acoustically transparent screen in front of the participant. When the auditory stimulus began, a black circle appeared randomly in the centre of either the left or right rectangle. Participants were instructed to press the keyboard arrow key pointing towards the circle if the first word of the auditory stimulus was a male name, or the arrow key pointing away from the circle if it was a female name. Two seconds after the auditory stimulus ended (retention period), the black circle disappeared from the screen, and the participant repeated the words they had understood. Panel (a) of Figure 2 illustrates the structure of an example trial. Panel (b) depicts a black circle in the centre of the left vertical rectangle. Given that the auditory stimulus in this example begins with a male name (Peter), the correct response is to press the left arrow key, highlighted with a grey background, as it points towards the circle.
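The response rule of the secondary task can be written as a small function. This is an illustrative sketch only; the function name and the string labels for sides and keys are hypothetical, and whether the first word is a male name is passed in rather than looked up from the Matrix-test word list.

```python
def correct_key(circle_side, first_word_is_male):
    """Return the arrow key ('left' or 'right') that is the correct secondary-task response.

    Male name: press the arrow pointing towards the circle.
    Female name: press the arrow pointing away from the circle.
    """
    if circle_side not in ("left", "right"):
        raise ValueError("circle_side must be 'left' or 'right'")
    if first_word_is_male:
        return circle_side
    return "right" if circle_side == "left" else "left"


# Figure 2 example: circle in the left rectangle, sentence starts with 'Peter'.
print(correct_key("left", True))
```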

Speech intelligibility was assessed by manually marking the correctly identified words. Participants’ listening effort was measured using three methods:

behaviourally, through the reaction time from sentence onset to key press [21];

neurophysiologically, through alpha power [25,40] recorded via a 64-channel SynAmps-RT NeuroScan electroencephalography (EEG) recording system (Compumedics Limited, Abbotsford, Australia) at a sampling rate of 1 kHz; and

self-reported, with participants rating their perceived effort on a 7-point scale (i.e., no effort, very little, little, moderate, considerable, much, and extreme effort) [31] after every five sentences.

The dual-task paradigm was implemented in MATLAB (The Mathworks Inc., Natick, MA), using functions from the ‘Statistics and Machine Learning’, ‘Signal Processing’ and ‘Optimization’ toolboxes, along with the ‘Psychophysics Toolbox Version 3’ extension [41,42,43].

Preparatory & Experimental Sessions

The study comprised two preparatory sessions and one experimental session. In the first preparatory session, participants (i) received an overview of the study’s rationale and methods and signed a consent form; (ii) underwent a hearing assessment with otoscopy and air-conduction audiometry; (iii) completed the Montreal Cognitive Assessment (MoCA) to screen for cognitive impairment [37]; and (iv) had impressions taken for two sets of slim-tip earmolds: one vented, for the acclimatisation period, and one with occluded vents, for the experimental session.

Participants were scheduled for a second preparatory session after their earmolds were received at NAL facilities. This session included practice with the dual-task methodology, in which participants (i) read the test instructions (see Section 3 of Appendix A in the online Supplementary Materials); (ii) practised the test procedure without time constraints by marking responses on a printed document (also available in the same appendix); and (iii) practised a simplified version of the dual-task test delivered on a laptop through headphones, first in quiet (target speech only) and then with background noise. This structured approach ensured that participants were well prepared to perform the full dual-task test during the experimental session.

In the second preparatory session, participants were also fitted with Phonak Audéo M90-312 hearing aids (Sonova AG, Stafa, Switzerland) using Phonak Target 7.0.5 software and vented SlimTip earmolds. The hearing aids were adjusted to meet the NAL-NL2 target at 65 dB, as verified by real-ear measurement using Aurical FreeFit (Natus Medical Inc., Middleton, WI), and individual feedback tests were conducted. Participants were then sent home with these devices for a 4-week acclimatisation period, during which the hearing aids defaulted to an automatic program that adjusted hearing aid settings according to the listening environment. To emulate a fitting with typical settings, all hearing aid features were left enabled at their default values. Frequency lowering was also permitted but could be disabled at the participant’s discretion. In addition to the automatic program, two manually selectable programs were made available to the listener: (i) Quasi-omnidirectional (Q-Omni) and (ii) Directional microphone (DM). Both manual programs used the same settings as the default automatic program for speech in noise but differed in their microphone modes: Q-Omni employed a quasi-omnidirectional microphone strategy that simulates the natural directionality of the ear, whereas DM used an adaptive binaural microphone system providing a highly directional listening beam. During the 4-week acclimatisation period, participants were encouraged to try the two manual programs in loud acoustic scenarios to familiarise themselves with the sound. These two manual programs were used in the experimental session. Compared to Q-Omni, acoustic measures showed that DM provided a +5.6 dB improvement in the articulation index-weighted directivity index [44] and a +4.8 dB advantage in the articulation index SNR [44] (details available in Section 4 of Appendix A in the online Supplementary Materials).
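An articulation index-weighted directivity index is commonly computed as a band-importance-weighted average of per-band directivity indices, AI-DI = Σᵢ wᵢ·DIᵢ with Σᵢ wᵢ = 1. The sketch below shows that arithmetic only. The octave bands, importance weights and DI values are hypothetical placeholders, not standardised band-importance values and not the measurements reported in the study’s Appendix A.

```python
def ai_weighted_di(band_di_db, band_importance):
    """Band-importance-weighted directivity index: sum over bands of w_i * DI_i (dB).

    band_importance maps band centre frequency (Hz) to a weight; weights must sum to 1.
    """
    if abs(sum(band_importance.values()) - 1.0) > 1e-6:
        raise ValueError("band-importance weights must sum to 1")
    return sum(weight * band_di_db[freq] for freq, weight in band_importance.items())


# Hypothetical octave-band weights and per-band DI values (dB), for illustration only.
weights = {500: 0.20, 1000: 0.25, 2000: 0.30, 4000: 0.25}
di_q_omni = {500: 1.0, 1000: 1.5, 2000: 2.0, 4000: 2.5}
di_dm = {500: 5.0, 1000: 7.0, 2000: 8.0, 4000: 7.5}

benefit = ai_weighted_di(di_dm, weights) - ai_weighted_di(di_q_omni, weights)
print(f"AI-DI benefit of DM over Q-Omni: {benefit:+.2f} dB")
```

Weighting by band importance emphasises the frequency regions that contribute most to speech intelligibility, which is why an AI-weighted DI is more relevant to speech understanding than an unweighted average.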

Participants attended the experimental session, held in the anechoic chamber of the Australian Hearing Hub (Sydney, Australia), after completing their acclimatisation period. This session involved: (i) estimating the SNRs for 80% and 95% speech-in-noise intelligibility (SRT-80 and SRT-95) with hearing aids in Q-Omni mode, as detailed in Section 2 of Appendix A in the online Supplementary Materials; (ii) practising the dual-task test in quiet (without background noise); and (iii) conducting the dual-task test under four conditions: SRT-80 and SRT-95 in both the Q-Omni and DM hearing aid programs. Each condition was tested four times, resulting in a total of 16 blocks. Each block comprised 20 sentences, amounting to 80 sentences per condition. The order of conditions was pseudo-randomised by presenting the four conditions in four successive rounds, with their sequence randomly varied within each round. For example: [SRT-95 (Q-Omni) – SRT-80 (Q-Omni) – SRT-80 (DM) – SRT-95 (DM)] – [SRT-80 (DM) – SRT-95 (Q-Omni) – SRT-80 (Q-Omni) – SRT-95 (DM)] – [SRT-80 (Q-Omni) – SRT-95 (Q-Omni) – SRT-95 (DM) – SRT-80 (DM)] – [SRT-80 (DM) – SRT-95 (Q-Omni) – SRT-95 (DM) – SRT-80 (Q-Omni)]. This pseudo-randomisation aimed to balance learning effects across the test conditions. During the experimental session, hearing aids were fitted with occluded-vent earmolds to enhance the effectiveness of directional microphones [45], and manual programs were used to select the desired hearing aid settings.
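The block order in the example above can be generated by shuffling the four conditions independently in each of four rounds, so that every condition appears exactly once per round and four times overall. This is a sketch under that assumption; the study does not describe the code it used, and the `seed` parameter is added here only for reproducibility.

```python
import random

CONDITIONS = ["SRT-80 (Q-Omni)", "SRT-80 (DM)", "SRT-95 (Q-Omni)", "SRT-95 (DM)"]


def block_order(n_rounds=4, seed=None):
    """Return n_rounds rounds, each an independent random permutation of the four conditions."""
    rng = random.Random(seed)
    rounds = []
    for _ in range(n_rounds):
        current = CONDITIONS[:]   # copy so the master list is not reordered
        rng.shuffle(current)
        rounds.append(current)
    return rounds


order = block_order(seed=1)
for i, current in enumerate(order, start=1):
    print(f"Round {i}: " + " - ".join(current))
```

Keeping every condition within each round means that a condition cannot cluster at the start or end of the session, which limits how much learning or fatigue can bias one condition over another.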

Data Analysis

Speech-in-Noise Intelligibility, Reaction Time, and Self-Reported Effort

Data analysis was conducted in MATLAB using functions from the ‘Statistics and Machine Learning’ toolbox. The DM effect relative to Q-Omni was characterised at the two evaluated SRTs via a series of generalised linear mixed-effects (GLME) models [46]. Each model used one of speech-in-noise intelligibility, reaction time, or self-reported effort as the response variable; the hearing-aid program (Q-Omni or DM) and run order were included as predictor variables; and participants were treated as a random effect. Lilliefors tests indicated that none of the response variables were normally distributed [47], which justified the use of GLME models. GLME models also account for repeated measures and are robust to missing and unbalanced data [48]. Run order was included as a predictor to model any potential learning effect during the test.

Given that speech-in-noise intelligibility scores and self-reported measures consisted of nonnegative integers, the GLME models employed a Poisson distribution family with a natural logarithmic link function, i.e., $\eta = g(\mu) = \ln(\mu)$, and an exponential inverse link function, i.e., $\mu = g^{-1}(\eta) = e^{\eta}$, where $\mu$ is the expected response and $\eta$ the linear predictor [48]. Reaction times were deemed valid only if the first word of the auditory stimulus was correctly understood, thus excluding unreliable estimates of listening effort due to intelligibility issues. Based on the reaction time distributions across participants, reaction times below 400 ms were considered unreliable and were discarded from the analysis. As reaction times are positive continuous values, a Gamma distribution family was used with

as link function, and

as inverse link function [48].
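The two reaction-time validity rules stated above (first word correctly understood; reaction time of at least 400 ms) can be applied as a simple filter before model fitting. The `Trial` record and its field names are hypothetical, and the example trials are made up for illustration.

```python
from dataclasses import dataclass

MIN_VALID_RT_MS = 400  # reaction times below this were discarded in the study


@dataclass
class Trial:
    reaction_time_ms: float    # time from sentence onset to key press
    first_word_correct: bool   # whether the first word (the name) was repeated correctly


def valid_reaction_times(trials):
    """Keep reaction times only if the first word was understood and RT >= 400 ms."""
    return [t.reaction_time_ms for t in trials
            if t.first_word_correct and t.reaction_time_ms >= MIN_VALID_RT_MS]


# Hypothetical trials, for illustration only.
trials = [Trial(1500, True), Trial(350, True), Trial(1700, False), Trial(1620, True)]
print(valid_reaction_times(trials))
```

Filtering on the first word matters because the key press depends on identifying that word; a wrong or missing name would make the reaction time reflect guessing rather than listening effort.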

Appendix B in the online Supplementary Materials provides the raw data for speech-in-noise intelligibility, reaction time, and self-reported measures of effort, along with custom MATLAB scripts for re-generating figures and performing the statistical analyses presented in the Results section.

Neurophysiological Measures

Recorded EEG signals were processed using MATLAB, employing functions from the FieldTrip [49] and EEGLAB [50] toolboxes. Participant #P06 was excluded due to a technical issue with the triggers, resulting in a final sample size of 19 participants.

Participants’ EEG signals were processed in each test condition as follows: (i) data loading; (ii) visual identification of noisy channels; (iii) re-referencing to the common ground, excluding noisy channels; (iv) interpolation of noisy channels using data from neighbouring channels; (v) segmentation of the data into 9-s trials; (vi) estimation of independent components via Independent Component Analysis; (vii) visual identification of components related to eye blinks and saccades; (viii) recomposition of the data excluding eye-activity components; (ix) high-pass filtering of the EEG signals with a 1 Hz cutoff frequency; (x) identification of noisy trials based on absolute values exceeding

V; (xi) interpolation of noisy trials from neighbouring channels if fewer than 10 noisy channels were present, otherwise rejection of the entire trial; and (xii) power spectrum estimation through time-frequency analysis using a 5-cycle Morlet wavelet within the 0–30 Hz range. Section 5 of Appendix A in the online Supplementary Materials provides the MATLAB script used for processing the EEG files from a selected participant, detailing each methodological step.
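Step (xii) can be illustrated with a from-scratch complex Morlet wavelet transform. This is not FieldTrip’s implementation; it is a minimal sketch assuming a 5-cycle wavelet whose Gaussian envelope has σₜ = 5/(2πf), truncated at ±3σₜ and normalised to unit sum. The 250 Hz sampling rate (lower than the study’s 1 kHz, to keep the example fast) and the synthetic 10 Hz test signal are hypothetical.

```python
import cmath
import math


def morlet_power(signal, fs, freq, n_cycles=5):
    """Time-resolved power at one frequency using a complex Morlet wavelet.

    The Gaussian envelope has sigma_t = n_cycles / (2*pi*freq), is truncated at
    +/-3 sigma_t and normalised to unit sum, so a unit-amplitude cosine at `freq`
    yields a power of about 0.25, i.e. (1/2)**2.
    """
    sigma_t = n_cycles / (2 * math.pi * freq)
    half_len = int(math.ceil(3 * sigma_t * fs))
    times = [k / fs for k in range(-half_len, half_len + 1)]
    envelope = [math.exp(-t * t / (2 * sigma_t ** 2)) for t in times]
    norm = sum(envelope)
    # Conjugated wavelet, so the sum below is a sliding inner product with the signal.
    kernel = [e / norm * cmath.exp(-2j * math.pi * freq * t) for e, t in zip(envelope, times)]

    n = len(signal)
    power = []
    for centre in range(n):
        acc = 0j
        for k, w in enumerate(kernel):
            idx = centre + k - half_len          # sample at time offset times[k]
            if 0 <= idx < n:                     # implicit zero-padding outside the data
                acc += signal[idx] * w
        power.append(abs(acc) ** 2)
    return power


fs = 250                                   # Hz, hypothetical sampling rate
signal = [math.cos(2 * math.pi * 10 * k / fs) for k in range(2 * fs)]  # 2 s of 10 Hz
middle = slice(fs // 2, 3 * fs // 2)       # central 1 s, away from edge effects


def mean_power(freq):
    values = morlet_power(signal, fs, freq)[middle]
    return sum(values) / len(values)


alpha_power = sum(mean_power(f) for f in range(8, 13)) / 5   # 8-12 Hz band, 1 Hz steps
print(f"mean 8-12 Hz power: {alpha_power:.3f}, power at 20 Hz: {mean_power(20):.4f}")
```

With a fixed number of cycles, the wavelet is shorter at higher frequencies, trading frequency resolution for time resolution; five cycles is a common compromise for alpha-band analyses.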

Differences in brain activity between Q-Omni and DM were investigated at the two evaluated SRTs across the time intervals [-1.0 – 0.0] s, [0.0 – 2.0] s, [2.0 – 3.5] s, and [3.5 – 5.0] s. These time intervals were chosen based on the power spectra averaged across participants and electrodes. A cluster-based permutation test was used for statistical analysis to correct for multiple comparisons [51]. This test: (i) estimated the effect magnitude in each channel using a two-sided paired-samples t-statistic on the power of the alpha frequency band (8–12 Hz) between the two hearing aid programs; (ii) identified groups of neighbouring EEG channels (i.e., clusters) whose differences exceeded a 0.05 significance threshold; (iii) constructed a reference distribution via Monte Carlo simulation, repeatedly permuting the data at random, identifying clusters, and calculating cluster t-statistics for each permutation; and (iv) determined the statistical significance of the observed clusters by comparing their t-statistics against the reference distribution. Section 6 of Appendix A in the online Supplementary Materials provides the MATLAB script used for the statistical analysis.
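The logic of a cluster-based permutation test can be sketched in a few functions. This is a simplified illustration, not FieldTrip’s implementation, and it rests on several assumptions: channels are placed on a line with only index neighbours treated as adjacent (real analyses use a 2-D sensor neighbourhood), the cluster-forming threshold of 2.10 approximates the two-sided 0.05 critical t for 18 degrees of freedom (19 participants), and the null distribution uses the largest absolute cluster sum per permutation rather than separate positive and negative tails. The data are synthetic.

```python
import math
import random


def paired_t(diffs):
    """One-sample t-statistic of paired differences (equivalent to a paired t-test)."""
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    return 0.0 if var == 0 else mean / math.sqrt(var / n)


def channel_t_values(diffs_by_subject):
    """diffs_by_subject[s][c]: alpha power (Q-Omni minus DM) for subject s, channel c."""
    n_channels = len(diffs_by_subject[0])
    return [paired_t([row[c] for row in diffs_by_subject]) for c in range(n_channels)]


def cluster_sums(t_values, threshold):
    """Sum t over runs of adjacent channels beyond +threshold or -threshold.

    ASSUMPTION: channels lie on a line and only index neighbours are adjacent.
    """
    sums, current, current_sign = [], 0.0, 0
    for t in t_values:
        sign = 1 if t > threshold else (-1 if t < -threshold else 0)
        if sign != 0 and sign == current_sign:
            current += t
            continue
        if current_sign != 0:
            sums.append(current)
        current, current_sign = (t, sign) if sign != 0 else (0.0, 0)
    if current_sign != 0:
        sums.append(current)
    return sums


def cluster_permutation_test(diffs_by_subject, threshold=2.10, n_perm=1000, seed=0):
    """Return (cluster t-sum, p-value) for each observed cluster.

    Randomly flipping the sign of a subject's differences is equivalent to swapping
    the Q-Omni and DM labels within that subject. Using the largest absolute cluster
    sum of each permutation as the null statistic controls for multiple comparisons.
    """
    rng = random.Random(seed)
    observed = cluster_sums(channel_t_values(diffs_by_subject), threshold)
    null_max = []
    for _ in range(n_perm):
        flipped = []
        for row in diffs_by_subject:
            sign = rng.choice((1, -1))
            flipped.append([sign * d for d in row])
        perm = cluster_sums(channel_t_values(flipped), threshold)
        null_max.append(max((abs(c) for c in perm), default=0.0))
    return [(c, (1 + sum(m >= abs(c) for m in null_max)) / (n_perm + 1)) for c in observed]


# Synthetic data: 19 subjects x 8 channels, with a true effect on channels 2-4 only.
gen = random.Random(42)
data = [[(2.0 if 2 <= ch <= 4 else 0.0) + gen.gauss(0.0, 0.5) for ch in range(8)]
        for _ in range(19)]
for c_sum, p in cluster_permutation_test(data):
    print(f"cluster t-sum = {c_sum:+.2f}, p = {p:.3f}")
```

Because significance is assessed at the cluster level, a single test replaces one test per channel, which is how the method controls the family-wise error rate across the 64 EEG channels.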

Results

Speech-in-Noise Intelligibility

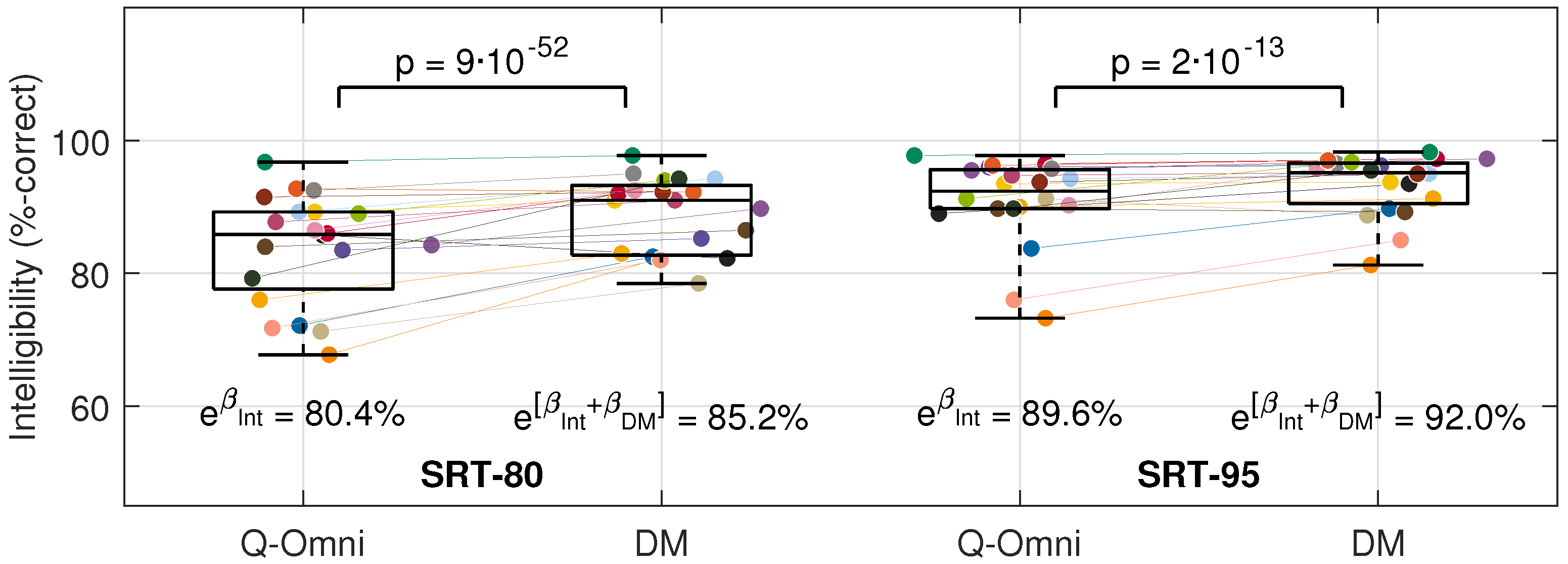

Figure 3 shows the mean speech-in-noise intelligibility scores per participant across the four testing conditions. The GLME models in Table 1 indicate that at SRT-80, intelligibility improved from 80.4% with Q-Omni (estimated as

) to 85.2% with DM (

, estimated as

); and at SRT-95, from 89.6% with Q-Omni to 92.0% with DM (

). Additionally, these models demonstrated a statistically significant learning effect in both SRTs. At SRT-80, relative to the first run, intelligibility improved by 2.8% in the second run (

, estimated as

), 4.6% in the third run (

), and 5.6% in the fourth run (

). At SRT-95, compared to the first run, intelligibility improved by 1.8% in the third run (

), and 2.1% in the fourth run (

).
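Under the log link used for the Poisson models, effects are multiplicative on the response scale: the DM coefficient corresponds to the ratio of predicted DM and Q-Omni scores. As a worked check, the sketch below back-calculates the log-scale DM effect implied by the rounded predicted percentages reported above. These values are derived from the reported means, not the fitted coefficients in Table 1; a ratio is the same whether scores are expressed as words correct or as percentages.

```python
import math


def log_link(mu):
    """Link function of the Poisson models: eta = ln(mu)."""
    return math.log(mu)


def inverse_log_link(eta):
    """Inverse link: mu = exp(eta)."""
    return math.exp(eta)


# Model-predicted intelligibility (%) reported in the text: (Q-Omni, DM).
predicted = {"SRT-80": (80.4, 85.2), "SRT-95": (89.6, 92.0)}

for srt, (q_omni, dm) in predicted.items():
    beta_dm = log_link(dm) - log_link(q_omni)   # implied DM effect on the log scale
    ratio = inverse_log_link(beta_dm)            # multiplicative effect of DM
    print(f"{srt}: beta_DM ~ {beta_dm:.4f}, ratio ~ {ratio:.4f}, "
          f"gain = {dm - q_omni:.1f} percentage points")
```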

Reaction Time

Figure 4 shows the individual reaction time data in the four testing conditions. Results from the GLME models presented in Table 2 show that the predicted reaction times for SRT-80 [Q-Omni], SRT-80 [DM], SRT-95 [Q-Omni] and SRT-95 [DM] were 1677 ms (estimated as

), 1624 ms (estimated as

), 1527 ms, and 1490 ms, respectively. The DM effect over Q-Omni was found to be statistically significant, both at SRT-80 (

) and SRT-95 (

).

Table 2 also shows a statistically significant learning effect in the two SRTs. At SRT-80, compared to the first run, reaction times were 255 ms shorter in the second run (

), calculated as

, 309 ms shorter in the third run (

), and 289 ms shorter in the fourth run (

). At SRT-95, relative to the first run, reaction times were 143 ms shorter in the second run (

), 208 ms shorter in the third run (

), and 270 ms shorter in the fourth run (

).

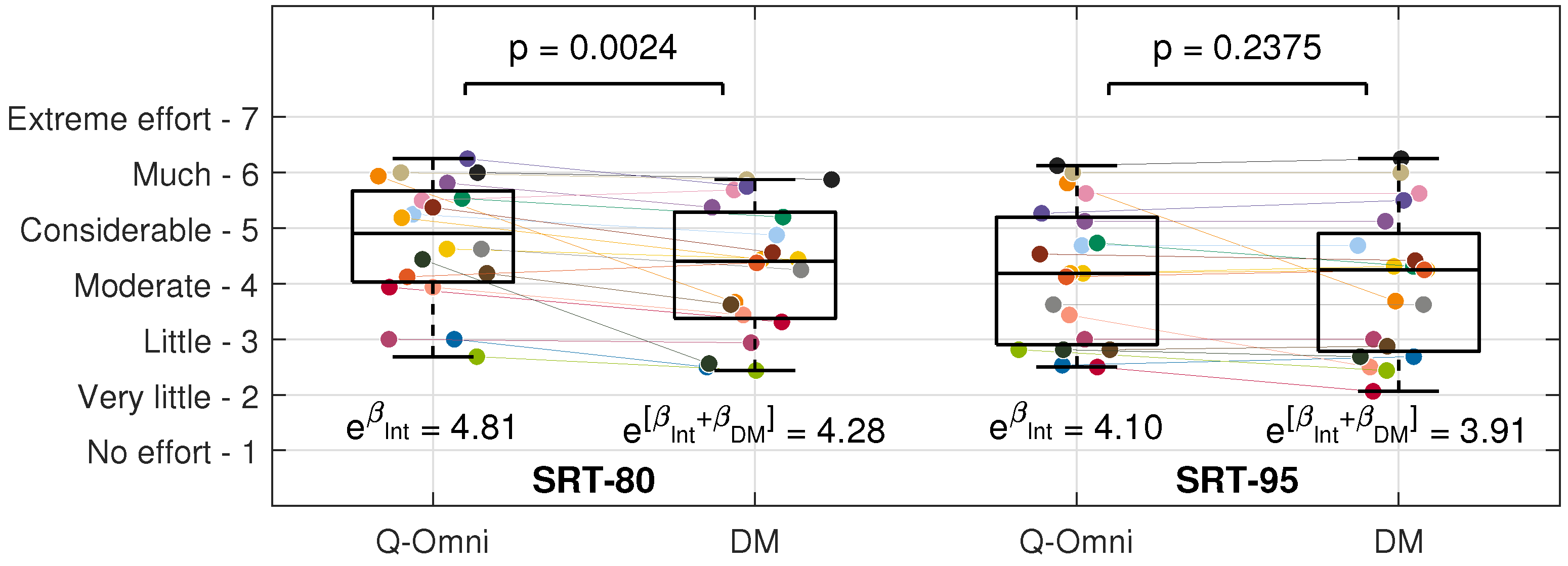

Self-Reported Effort

Figure 5 shows the mean self-reported effort per participant for each test condition, along with the predicted scores and p-values resulting from the GLME model presented in Table 3. On a scale from 1 (no effort) to 7 (extreme effort), participants reported 0.53 units less effort with DM compared to Q-Omni at SRT-80 (

). At SRT-95, DM resulted in 0.19 units less effort than Q-Omni, though this was not statistically significant (

). No learning effect was observed in this measure.

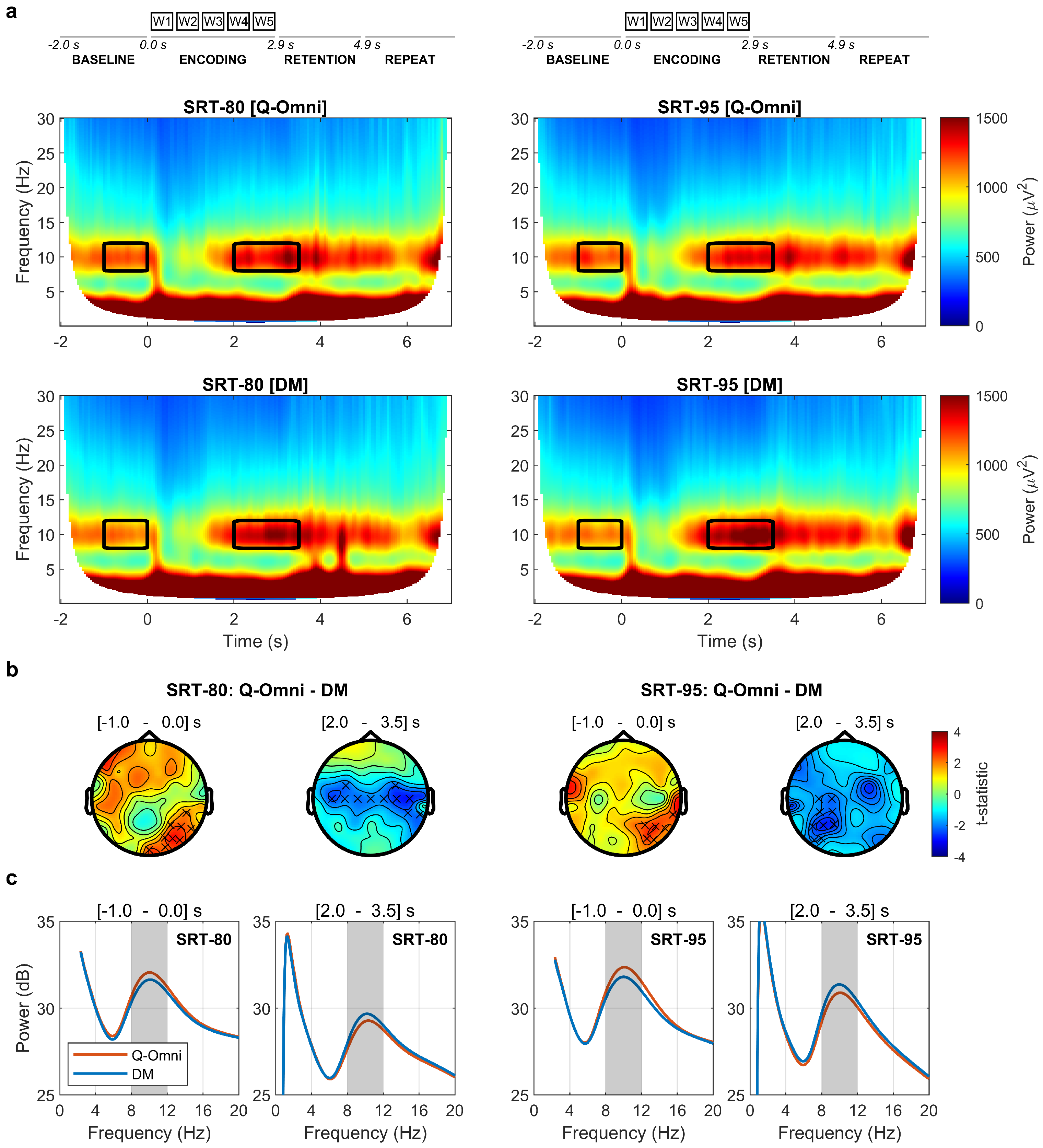

Neurophysiological Measures

Figure 6a presents the spectrogram averaged across participants and electrodes for the Q-Omni (top) and DM (bottom) hearing aid programs under the SRT-80 (left) and SRT-95 (right) conditions. A trial structure diagram is shown at the top. Brain activation patterns are consistent across all conditions, showing prominent alpha power during the baseline, in the final portion of encoding, and during the retention period. The black rectangles indicate the time intervals in which Q-Omni and DM exhibited statistically significant differences in alpha power for each SRT. No significant differences were observed in the [0.0 – 2.0] s or [3.5 – 5.0] s intervals for either SRT condition. Individual spectrograms for each participant are provided in Section 8 of Appendix A in the online Supplementary Materials. These figures show consistent brain activation patterns across participants but substantial individual variability in alpha power magnitude.

Figure 6b presents topographic maps of the t-statistic distribution across the scalp from the cluster-based permutation analysis of absolute alpha power differences between Q-Omni and DM in the [-1.0 – 0.0] s and [2.0 – 3.5] s intervals for SRT-80 and SRT-95. Crosses denote EEG channels within statistically significant clusters. Results indicate a consistent effect of DM over Q-Omni at both SRTs. In the [-1.0 – 0.0] s interval, DM showed decreased alpha power (positive t-statistic values) in the right parietal-occipital region for both SRT-80 (cluster t-statistic = 20.98,

) and SRT-95 (cluster t-statistic = 14.18,

). In the [2.0 – 3.5] s interval, DM resulted in increased alpha power (negative t-statistic values) in centro-temporal areas for SRT-80 (cluster t-statistic = -25.05,

) and in the left centro-parietal region for SRT-95 (cluster t-statistic = -20.09,

).

Figure 6c shows the power spectrum averaged across participants and EEG channels within each cluster for the [-1.0 – 0.0] s and [2.0 – 3.5] s intervals at both SRTs. Consistent with the topographic maps in Panel ‘b’, these plots show decreased alpha power with DM in the [-1.0 – 0.0] s interval and increased alpha power in the [2.0 – 3.5] s interval at both SRTs.

Discussion

This study aimed to investigate the effect of an adaptive binaural beamformer in a commercially available hearing aid on speech intelligibility in noise and listening effort. The research was conducted in a realistic Ambisonics-simulated cafeteria noise environment, utilising for the first time simultaneous measures of behavioural dual-task performance, neurophysiological alpha power monitoring, and self-reported data. By integrating these methods, we captured both objective and subjective aspects of auditory processing. Behavioural measures, such as dual-task performance, provided direct evidence of reduced cognitive load, while neurophysiological data (specifically alpha power) offered insights into the underlying brain activity associated with listening effort. Self-reports complemented these by capturing participants’ perceptions of effort. This multi-faceted approach allowed for a comprehensive evaluation of how hearing aids with directionality alleviate listening challenges in complex auditory environments.

Results show that adaptive binaural beamforming improves speech intelligibility in noisy environments. This aligns with previous research showing that directional microphones enhance speech perception by improving the SNR [15,26,35,36,52]. The binaural beamformer directs focus towards frontal speech signals while suppressing noise from other directions, thereby creating a more favourable listening environment. This directional focus enables hearing aid users to converse more effectively in noisy conditions, particularly in diffuse sound environments such as a busy cafeteria.

The effect of directional microphones on listening effort was more complex. Behavioural dual-task performance revealed a reduction in listening effort, evidenced by faster reaction times when the hearing aid’s directionality was active. This reduction is consistent with the Ease of Language Understanding (ELU) model [18,19,20], which suggests that a lower cognitive load allows for better performance in secondary tasks [21]. Neurophysiological measures further supported this finding, showing a reduction in baseline alpha power and an increase during the later portion of encoding when the beamformer was engaged. The reproducibility of this brain activation pattern across the two SRT conditions reinforces its reliability. The observed pattern likely reflects the dual roles of alpha-band oscillations: inhibition and information processing [25]. On one hand, pre-stimulus alpha, associated with inhibition [40], was higher in the Q-Omni program, suggesting that more cognitive resources were allocated to suppressing the louder background noise in that condition, and thus indicating greater listening effort with Q-Omni than with DM. On the other hand, the increased alpha power during the later encoding phase in the DM condition likely reflects a greater working memory load from retaining more words as a result of improved intelligibility [53,54,55,56]. Although participants’ subjective ratings of listening effort were lower with DM in both SRT conditions, the effect was small and not statistically significant at SRT-95. Taken together, the behavioural, neurophysiological, and self-reported data support a positive impact of directional microphone technology on listening effort in challenging acoustic environments.

The findings of this study hold important clinical implications, particularly regarding how clinicians assess and manage listening effort. While self-reported measures are commonly used, this study highlights the potential of more objective measures, such as dual-task behavioural measures, to reveal effort reductions that may not be consciously perceived. In this study, significant effects of directional microphones were observed in behavioural and neurophysiological measures at SRT-95, but these were not reflected in self-reports. Clinicians should be aware that directional microphones may reduce listening effort even when patients do not report significant changes. It is therefore essential for audiologists to inform patients about the benefits of these technologies, even if the effects are not immediately obvious, in order to manage expectations and enhance satisfaction, ultimately improving clinical outcomes.

Interestingly, a statistically significant learning effect was observed in both speech intelligibility and reaction time over multiple dual-task runs, but not in self-reported listening effort. This discrepancy between objective performance improvements and subjective effort aligns with previous studies suggesting that repeated exposure to difficult listening conditions can lead to perceptual learning, improving intelligibility and reaction times [57,58,59]. However, self-reported effort may remain unchanged, as such assessments are influenced more by cognitive load, emotional state, and individual differences than by task mastery [1,60]. This highlights the complexity of measuring listening effort and the importance of combining objective and subjective assessments for a comprehensive evaluation, as subjective reports may not always reflect objective improvements [3].

We also observed that the effects of DM were more pronounced at the lower SRT. This was reflected in greater improvements in speech-in-noise intelligibility (a 4.8% increase at SRT-80 compared to 2.4% at SRT-95), larger reductions in reaction times (53 ms at SRT-80 compared to 37 ms at SRT-95), greater self-reported benefits (a 0.53-point reduction on a 7-point scale at SRT-80 compared to 0.19 at SRT-95), and more distinct brain activation differences (cluster t-statistics of 20.98 and -25.05 during pre-stimulus and encoding at SRT-80, versus 14.18 and -20.09 at SRT-95). This pattern aligns with existing research, which shows that directional microphones and noise reduction systems are most effective in environments with lower SNRs [26,32,52,61]. These findings suggest that DM systems offer greater benefit in more challenging listening conditions, particularly where auditory processing demands are higher. By selecting SRTs of 80% and 95%, we also ensured that intelligibility was high enough for participants to engage meaningfully with the dual-task paradigm, minimising potential confounds related to motivation. Excessively low SNRs could lead to a lack of participant engagement, diminishing the perceived benefits of the hearing aid features [62,63]. Therefore, it is possible that at extremely low SNRs, the benefits of directionality may plateau, as the cognitive demand may surpass the technology’s capacity to assist.
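To make the claim that DM effects were larger at the lower SRT more concrete, the group-level effects quoted above can be expressed as SRT-80/SRT-95 ratios. The Python sketch below only reuses the values reported in this paragraph; the helper name `benefit_ratio` is hypothetical, and treating the 7-point effort scale as running from 1 to 7 (a range of 6 points) is an assumption used solely to express the rating change as a share of the scale range. The EEG cluster statistics are left out because cluster t-statistics are not on a scale where ratios are meaningful.

```python
# Reported DM benefits (DM on vs. off) at the two intelligibility targets,
# copied from the Discussion as (SRT-80 value, SRT-95 value).
reported = {
    "intelligibility gain (%)": (4.8, 2.4),
    "reaction-time reduction (ms)": (53.0, 37.0),
    "self-reported effort reduction (points)": (0.53, 0.19),
}


def benefit_ratio(srt80: float, srt95: float) -> float:
    """Hypothetical helper: how many times larger the DM benefit is at SRT-80 than at SRT-95."""
    return srt80 / srt95


ratios = {name: round(benefit_ratio(*pair), 2) for name, pair in reported.items()}

# Assumption: the 7-point effort scale is labelled 1..7, so its full range is 6 points.
SCALE_RANGE = 7 - 1
rating_pct_of_range = {
    srt: round(100 * points / SCALE_RANGE, 1)
    for srt, points in zip(("SRT-80", "SRT-95"),
                           reported["self-reported effort reduction (points)"])
}

for name, ratio in ratios.items():
    print(f"{name}: {ratio}x larger at SRT-80 than at SRT-95")
print("Self-reported reduction as % of scale range:", rating_pct_of_range)
```

Read this way, the DM benefit at SRT-80 is about twice as large for intelligibility, roughly 1.4 times as large for reaction time, and close to three times as large for self-reported effort (about 8.8% versus 3.2% of the scale range). These ratios are descriptive only and carry no information about statistical significance.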

A key strength of this study lies in the use of a realistic Ambisonics-simulated cafeteria noise environment at relatively high SNRs, which closely mimics the complex auditory landscapes that hearing aid users encounter in daily life. Previous studies have often relied on simplified laboratory settings that do not fully capture the spatial and temporal dynamics of real-world listening situations [16,32,52,61]. By simulating a realistic environment, this study provides insights that are more applicable to everyday listening conditions, thus enhancing the ecological validity of the findings. However, the effectiveness of hearing aid technologies may vary across different environments and user populations. While this study focused on cafeteria noise, further research should explore the generalisability of these findings to other challenging listening contexts, such as fluctuating outdoor noise or environments with significant reverberation. Methodological improvements to increase ecological validity could include using more realistic speech stimuli in the primary task, such as the Everyday Conversational Sentences in Noise (ECO-SiN) test [64], which presents natural conversation in real-world background noise, and employing Ecological Momentary Assessment (EMA) methods to assess self-reported DM benefits in a broad range of everyday listening environments [65,66].

Conclusions

This study provides robust evidence that hearing aids incorporating adaptive binaural beamforming significantly enhance speech intelligibility and reduce listening effort in noisy environments, particularly under challenging conditions with lower signal-to-noise ratios. The combination of behavioural, neurophysiological, and self-reported data offers a comprehensive assessment of these benefits. Objective measures, such as faster reaction times and changes in alpha power, indicate a clear reduction in cognitive load when these hearing aid features are activated. However, subjective ratings of listening effort did not fully reflect the extent of these improvements, underscoring the need for both objective and subjective measures in assessing listening effort. Self-reports alone may not capture the nuanced reductions in cognitive load observed through behavioural and neurophysiological data. The use of a realistic Ambisonics-simulated cafeteria environment further strengthens the ecological validity of the findings, offering insights applicable to everyday listening challenges faced by hearing aid users. Clinically, this study highlights the value of directional microphones in improving listening outcomes, while emphasising that patient expectations should be carefully managed, given that subjective perceptions of benefit may not always align with measurable improvements in performance. Ultimately, these findings support the adoption of advanced hearing aid technologies to alleviate listening effort, particularly in complex auditory environments.

Supplementary Materials

The following supporting information can be downloaded at the website of this paper posted on Preprints.org.

Author Contributions

J.T.V., J.M., N.C.H., and B.E. conceptualised and designed the experiments. J.T.V. and A.W. implemented the methodologies. A.W. recruited participants and collected data. J.T.V. and J.M. analysed data. J.T.V., J.M., and B.E. managed the administrative elements of the project. J.T.V. and B.E. secured the funding. J.T.V. wrote the original draft of the manuscript. All authors reviewed and approved the final version of the manuscript.

Data Availability Statement

All raw data, processed data, programming scripts, and other research materials supporting the findings of this study are available upon reasonable request. Correspondence and requests for materials should be addressed to J.T.V.

Acknowledgments

The authors gratefully acknowledge the following contributions: Dr. Elizabeth F. Beach and Dr. Bram Van Dun (National Acoustic Laboratories, Sydney, Australia) for their supervision during the early stages of this research; Mr. James Galloway and Mr. Greg Stewart (NAL) for their work in collecting and analysing acoustic measures; Dr. Ronny Ibrahim (NAL) for technical support with EEG data analysis; Mr. Mark Seeto (NAL) for general statistical guidance; Dr. Kelly Miles (NAL) for assistance with implementing dual-task methodologies; and Mr. Paul Jevelle (NAL) for data collection during initial pilot studies. The authors also appreciate the valuable contributions from collaborators from Sonova AG (Stäfa, Switzerland), including Dr. Stefan Launer, Dr. Mathias Latzel, Dr. Matthias Keller, Dr. Charlotte Vercammen, Ms. Juliane Raether, and Dr. Peter Derleth. This research was supported by the Ramón y Cajal Fellowship (RYC-2022-037875-I) awarded to Dr. Joaquín T. Valderrama by the Spanish Ministry of Science and Innovation (MCIU/AEI/10.13039/501100011033) and the European Social Fund Plus (FSE+); by Sonova AG (Stäfa, Switzerland); and by the Australian Government Department of Health.

Conflicts of Interest

N.C.H. is employed by Sonova AG. He declares that this affiliation did not influence the study design, data collection and analysis, decision to publish, or preparation of the manuscript. Sonova AG provided funding for this work but had no additional role in the study design, data collection and analysis, decision to publish, or preparation of the manuscript. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- McGarrigle, R.; Munro, K.J.; Dawes, P.; Stewart, A.J.; Moore, D.R.; Barry, J.G.; Amitay, S. Listening effort and fatigue: What exactly are we measuring? A British Society of Audiology Cognition in Hearing Special Interest Group ‘white paper’. International Journal of Audiology 2014, 53, 433–445.

- Eckert, M.A.; Teubner-Rhodes, S.; Vaden Jr, K.I. Is listening in noise worth it? The neurobiology of speech perception in challenging listening conditions. Ear and Hearing 2017, 38, 725–732.

- Pichora-Fuller, M.K.; Kramer, S.E.; Eckert, M.A.; Edwards, B.; Hornsby, B.W.Y.; Humes, L.E.; Lemke, U.; Lunner, T.; Matthen, M.; Mackersie, C.L.; Naylor, G.; Phillips, N.A.; Richter, M.; Rudner, M.; Sommers, M.S.; Tremblay, K.L.; Wingfield, A. Hearing impairment and cognitive energy: The framework for understanding effortful listening (FUEL). Ear and Hearing 2016, 37, 5S–27S.

- Hornsby, B.W.Y. The effects of hearing aid use on listening effort and mental fatigue associated with sustained speech processing demands. Ear and Hearing 2013, 34, 523–534.

- Hornsby, B.W.Y.; Naylor, G.; Bess, F.H. A taxonomy of fatigue concepts and their relation to hearing loss. Ear and Hearing 2016, 37, 136S–144S.

- Contrera, K.J.; Betz, J.; Deal, J.; Deal, J.S.; Choi, J.S.; Ayonayon, H.N.; Harris, T.; Helzner, E.; Martin, K.R.; Mehta, K.; Pratt, S.; Rubin, S.M.; Satterfield, S.; Yaffe, K.; Simonsick, E.M.; Lin, F.R. Association of hearing impairment and anxiety in older adults. Journal of Aging and Health 2017, 29, 172–184.

- Mealings, K.; Yeend, I.; Valderrama, J.T.; Gilliver, M.; Pang, J.; Heeris, J.; Jackson, P. Discovering the Unmet Needs of People With Difficulties Understanding Speech in Noise and a Normal or Near-Normal Audiogram. American Journal of Audiology 2020, 29, 329–355.

- Mick, P.; Kawachi, I.; Lin, F.R. The association between hearing loss and social isolation in older adults. Otolaryngology–Head and Neck Surgery 2014, 150, 378–384.

- Livingston, G.; Huntley, J.; Sommerlad, A.; Ames, D.; Ballard, C.; Banerjee, S.; Brayne, C.; Burns, A.; Cohen-Mansfield, J.; Cooper, C.; Costafreda, S.G.; Dias, A.; Fox, N.; Gitlin, L.N.; Howard, R.; Kales, H.C.; Kivimäki, M.; Larson, E.B.; Ogunniyi, A.; Orgeta, V.; Ritchie, K.; Rockwood, K.; Sampson, E.L.; Samus, Q.; Schneider, L.S.; Selbæk, G.; Teri, L.; Mukadam, N. Dementia prevention, intervention, and care: 2020 report of the Lancet Commission. Lancet 2020, 396, 413–446.

- Lin, F.R.; Yaffe, K.; Xia, J.; Xue, Q.; Harris, T.B.; Purchase-Helzner, E.; Satterfield, S.; Ayonayon, H.N.; Ferrucci, L.; Simonsick, E.M.; Health ABC Study Group. Hearing loss and cognitive decline in older adults. JAMA Internal Medicine 2013, 173, 293–299.

- Alexander, J.M. Hearing aid technology to improve speech intelligibility in noise. Seminars in Hearing 2021, 42, 175–185.

- Edwards, B. Emerging technologies, market segments, and MarkeTrak 10 insights in hearing health technology. Seminars in Hearing 2020, 41, 37–54.

- Edwards, B. Beyond amplification: Signal processing techniques for improving speech intelligibility in noise with hearing aids. Seminars in Hearing 2000, 21, 137–156.

- Ricketts, T.A. Directional hearing aids. Trends in Amplification 2001, 5, 139–176.

- Bentler, R.A. Effectiveness of directional microphones and noise reduction schemes in hearing aids: A systematic review of the evidence. Journal of the American Academy of Audiology 2005, 16, 473–484.

- Desjardins, J.L. The effects of hearing aid directional microphone and noise reduction processing on listening effort in older adults with hearing loss. Journal of the American Academy of Audiology 2016, 27, 29–41.

- Valente, M.; Fabry, D.A.; Potts, L.G. Recognition of speech in noise with hearing aids using dual microphones. Journal of the American Academy of Audiology 1995, 6, 440–449.

- Rönnberg, J.; Lunner, T.; Zekveld, A.; Sörqvist, P.; Danielsson, H.; Lyxell, B.; Dahlström, O.; Signoret, C.; Stenfelt, S.; Pichora-Fuller, M.K.; Rudner, M. The Ease of Language Understanding (ELU) model: Theoretical, empirical, and clinical advances. Frontiers in Systems Neuroscience 2013, 7, 31.

- Rönnberg, J.; Holmer, E.; Rudner, M. Cognitive hearing science and ease of language understanding. International Journal of Audiology 2019, 58, 247–261.

- Rönnberg, J.; Signoret, C.; Andin, J.; Holmer, E. The cognitive hearing science perspective on perceiving, understanding, and remembering language: The ELU model. Frontiers in Psychology 2022, 13, 967260.

- Gagné, J.P.; Besser, J.; Lemke, U. Behavioral assessment of listening effort using a dual-task paradigm: A review. Trends in Hearing 2017, 21, 2331216516687287.

- Schiller, I.S.; Breuer, C.; Aspöck, L.; Ehret, J.; Bönsch, A.; Kuhlen, T.W.; Fels, J.; Schlittmeier, S.J. A lecturer’s voice quality and its effect on memory, listening effort, and perception in a VR environment. Scientific Reports 2024, 14, 12407.

- Dimitrijevic, A.; Smith, M.L.; Kadis, D.S.; Moore, D.R. Neural indices of listening effort in noisy environments. Scientific Reports 2019, 9, 11278.

- Ala, T.S.; Graversen, C.; Wendt, D.; Alickovic, E.; Whitmer, W.M.; Lunner, T. An exploratory study of EEG alpha oscillation and pupil dilation in hearing-aid users during effortful listening to continuous speech. PLoS One 2020, 15, e0235782.

- Klimesch, W. Alpha-band oscillations, attention, and controlled access to stored information. Trends in Cognitive Sciences 2012, 16, 606–617.

- Wendt, D.; Hietkamp, R.K.; Lunner, T. Impact of noise and noise reduction on processing effort: A pupillometry study. Ear and Hearing 2017, 38, 690–700.

- Ohlenforst, B.; Zekveld, A.A.; Lunner, T.; Wendt, D.; Naylor, G.; Wang, Y.; Versfeld, N.J.; Kramer, S.E. Impact of stimulus-related factors and hearing impairment on listening effort as indicated by pupil dilation. Hearing Research 2017, 351, 68–79.

- Zhang, Y.; Callejón-Leblic, M.A.; Picazo-Reina, A.M.; Blanco-Trejo, S.; Patou, F.; Sánchez-Gómez, S. Impact of SNR, peripheral auditory sensitivity, and central cognitive profile on the psychometric relation between pupillary response and speech performance in CI users. Frontiers in Neuroscience 2023, 17, 1307777.

- Zekveld, A.A.; Kramer, S.E.; Festen, J.M. Pupil response as an indication of effortful listening: The influence of sentence intelligibility. Ear and Hearing 2010, 31, 480–490.

- Mackersie, C.L.; Cones, H. Subjective and psychophysiological indexes of listening effort in a competing-talker task. Journal of the American Academy of Audiology 2011, 22, 113–122.

- Schulte, M. Listening effort scaling and preference rating for hearing aid evaluation. Workshop Hearing Screening and Technology, 2009.

- Desjardins, J.L.; Doherty, K.A. The effect of hearing aid noise reduction on listening effort in hearing-impaired adults. Ear and Hearing 2014, 35, 600–610.

- Bernarding, C.; Strauss, D.J.; Hannemann, R.; Seidler, H.; Corona-Strauss, F.I. Neurodynamic evaluation of hearing aid features using EEG correlates of listening effort. Cognitive Neurodynamics 2017, 11, 203–215.

- Bentler, R.A.; Chiou, L.K. Digital noise reduction: An overview. Trends in Amplification 2006, 10, 67–82.

- Wu, Y.H.; Aksan, N.; Rizzo, M.; Stangl, E.; Zhang, X.; Bentler, R. Measuring listening effort: Driving simulator versus simple dual-task paradigm. Ear and Hearing 2014, 35, 623–632.

- Winneke, A.H.; Schulte, M.; Vormann, M.; Latzel, M. Effect of directional microphone technology in hearing aids on neural correlates of listening and memory effort: An electroencephalographic study. Trends in Hearing 2020, 24, 2331216520948410.

- Nasreddine, Z.S.; Phillips, N.A.; Bédirian, V.; Charbonneau, S.; Whitehead, V.; Collin, I.; Cummings, J.L.; Chertkow, H. The Montreal Cognitive Assessment, MoCA: A brief screening tool for mild cognitive impairment. Journal of the American Geriatrics Society 2005, 53, 695–699.

- Weisser, A.; Buchholz, J.M.; Oreinos, C.; Badajoz-Davila, J.; Galloway, J.; Beechey, T.; Keidser, G. The Ambisonic Recordings of Typical Environments (ARTE) Database. Acta Acustica united with Acustica 2019, 105, 695–713.

- Kelly, H.; Lin, G.; Sankaran, N.; Xia, J.; Kalluri, S.; Carlile, S. Development and evaluation of a mixed gender, multi-talker matrix sentence test in Australian English. International Journal of Audiology 2017, 56, 85–91.

- Alhanbali, S.; Munro, K.J.; Dawes, P.; Perugia, E.; Millman, R.E. Associations between pre-stimulus alpha power, hearing level and performance in a digits-in-noise task. International Journal of Audiology 2022, 61, 197–204.

- Brainard, D.H. The Psychophysics Toolbox. Spatial Vision 1997, 10, 433–436.

- Pelli, D.G. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision 1997, 10, 437–442.

- Kleiner, M.; Brainard, D.; Pelli, D. What’s new in psychtoolbox-3. Perception ECVP ‘07 Abstract Supplement 2007, 36, 14.

- Killion, M.; Schulien, R.; Christensen, L.; Fabry, D.; Revit, L.; Niquette, P.; Chung, K. Real-world performance of an ITE directional microphone. The Hearing Journal 1998, 51, 24–39, https://www.etymotic.com/wp-content/uploads/2021/05/erl-0038-1998.pdf.

- Winkler, A.; Latzel, M.; Holube, I. Open versus closed hearing-aid fittings: A literature review of both fitting approaches. Trends in Hearing 2016, 20, 2331216516631741.

- Bolker, B.M.; Brooks, M.E.; Clark, C.J.; Geange, S.W.; Poulsen, J.R.; Stevens, M.H.H.; White, J.S. Generalized linear mixed models: A practical guide for ecology and evolution. Trends in Ecology & Evolution 2009, 24, 127–135.

- Lilliefors, H.W. On the Kolmogorov-Smirnov test for normality with mean and variance unknown. Journal of the American Statistical Association 1967, 62, 399–402.

- McCullagh, P.; Nelder, J.A. Generalized Linear Models. 2nd Edition; Chapman & Hall / CRC Press: London, 1989.

- Oostenveld, R.; Fries, P.; Maris, E.; Schoffelen, J.M. FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Computational Intelligence and Neuroscience 2011, 2011, 156869.

- Delorme, A.; Makeig, S. EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods 2004, 134, 9–21.

- Maris, E.; Oostenveld, R. Nonparametric statistical testing of EEG- and MEG-data. Journal of Neuroscience Methods 2007, 164, 177–190.

- Ohlenforst, B.; Wendt, D.; Kramer, S.E.; Naylor, G.; Zekveld, A.A.; Lunner, T. Impact of SNR, masker type and noise reduction processing on sentence recognition performance and listening effort as indicated by the pupil dilation response. Hearing Research 2018, 365, 90–99.

- Jensen, O.; Gelfand, J.; Kounios, J.; Lisman, J.E. Oscillations in the alpha band (9–12 Hz) increase with memory load during retention in a short-term memory task. Cerebral Cortex 2002, 12, 877–882.

- Tuladhar, A.M.; ter Huurne, N.; Schoffelen, J.M.; Maris, E.; Oostenveld, R.; Jensen, O. Parieto-occipital sources account for the increase in alpha activity with working memory load. Human Brain Mapping 2007, 28, 785–792.

- Meltzer, J.A.; Zaveri, H.P.; Goncharova, I.I.; Distasio, M.M.; Papademetris, X.; Spencer, S.S.; Spencer, D.D.; Constable, R.T. Effects of working memory load on oscillatory power in human intracranial EEG. Cerebral Cortex 2008, 18, 1843–1855.

- Obleser, J.; Wöstmann, M.; Hellbernd, N.; Wilsch, A.; Maess, B. Adverse listening conditions and memory load drive a common alpha oscillatory network. The Journal of Neuroscience 2012, 32, 12376–12383.

- Zhang, X.; Samuel, A.G. Perceptual learning of speech under optimal and adverse conditions. Journal of Experimental Psychology: Human Perception and Performance 2014, 40, 200–217.

- Hervais-Adelman, A.; Davis, M.H.; Johnsrude, I.S.; Carlyon, R.P. Perceptual learning of noise vocoded words: Effects of feedback and lexicality. Journal of Experimental Psychology: Human Perception and Performance 2008, 34, 460–474.

- Dosher, B.A.; Lu, Z.L. Perceptual learning: Learning, memory, and models. In The Oxford Handbook of Human Memory, Two Volume Pack: Foundations and Applications; Kahana, M.J., Wagner, A.D., Eds.; Oxford University Press: Oxford, United Kingdom, 2024.

- Picou, E.M.; Ricketts, T.A.; Hornsby, B.W.Y. Visual cues and listening effort: Individual variability. Journal of Speech, Language, and Hearing Research 2011, 54, 1416–1430.

- Sarampalis, A.; Kalluri, S.; Edwards, B.; Hafter, E. Objective measures of listening effort: Effects of background noise and noise reduction. Journal of Speech, Language, and Hearing Research 2009, 52, 1230–1240.

- Picou, E.M.; Ricketts, T.A. Increasing motivation changes subjective reports of listening effort and choice of coping strategy. International Journal of Audiology 2014, 53, 418–426.

- Carolan, P.J.; Heinrich, A.; Munro, K.J.; Millman, R.E. Quantifying the effects of motivation on listening effort: A systematic review and meta-analysis. Trends in Hearing 2022, 26, 23312165211059982.

- Miles, K.M.; Keidser, G.; Freeston, K.; Beechey, T.; Best, V.; Buchholz, J.M. Development of the everyday conversational sentences in noise test. The Journal of the Acoustical Society of America 2020, 147, 1562–1576.

- Mealings, K.; Valderrama, J.T.; Mejia, J.; Yeend, I.; Beach, E.F.; Edwards, B. Hearing aids reduce self-perceived difficulties in noise for listeners with normal audiograms. Ear and Hearing 2024, 45, 151–163.

- Valderrama, J.T.; Mejia, J.; Wong, A.; Chong-White, N.; Edwards, B. The value of headphone accommodations in Apple Airpods Pro for managing speech-in-noise hearing difficulties of individuals with normal audiograms. International Journal of Audiology 2024, 63, 447–457.

- Valderrama, J.T.; Jevelle, P.; Beechey, T.; Miles, K.; Bardy, F. Towards a combined behavioural and physiological measure of listening effort. 5th International Conference on Cognitive Hearing Science for Communication (CHS-COM), 2019.

- Valderrama, J.T.; Mejia, J.; Mealings, K.; Yeend, I.; Sun, V.; Beach, E.F.; Edwards, B. The use of binaural beamforming to reduce listening effort. 45th Association for Research in Otolaryngology (ARO) Annual Midwinter Meeting, 2022.

- Valderrama, J.T.; Mejia, J.; Wong, A.; Herbert, N.; Edwards, B. Reducing listening effort in a realistic sound environment using directional microphones: Insights from behavioural, neurophysiological and self-reported data. 7th International Conference on Cognitive Hearing Science for Communication (CHS-COM), 2024.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).