Submitted: 28 October 2024

Posted: 30 October 2024

Abstract

Keywords:

I. Introduction

II. Purpose of the Study

III. Related Work

A. Data Preparation Techniques

B. Addressing Class Imbalance

C. ML Techniques for Churn Prediction

D. Ensemble Learning Techniques

E. Hybrid Learning Approaches

F. Rule-Based and Social Network Analysis Approaches

G. Applications in Various Sectors

IV. Method

A. Training and Validation Process

B. Evaluation Metrics

V. Results

A. Setup

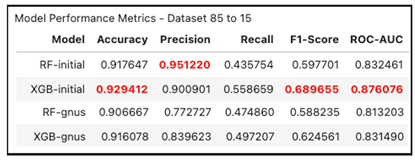

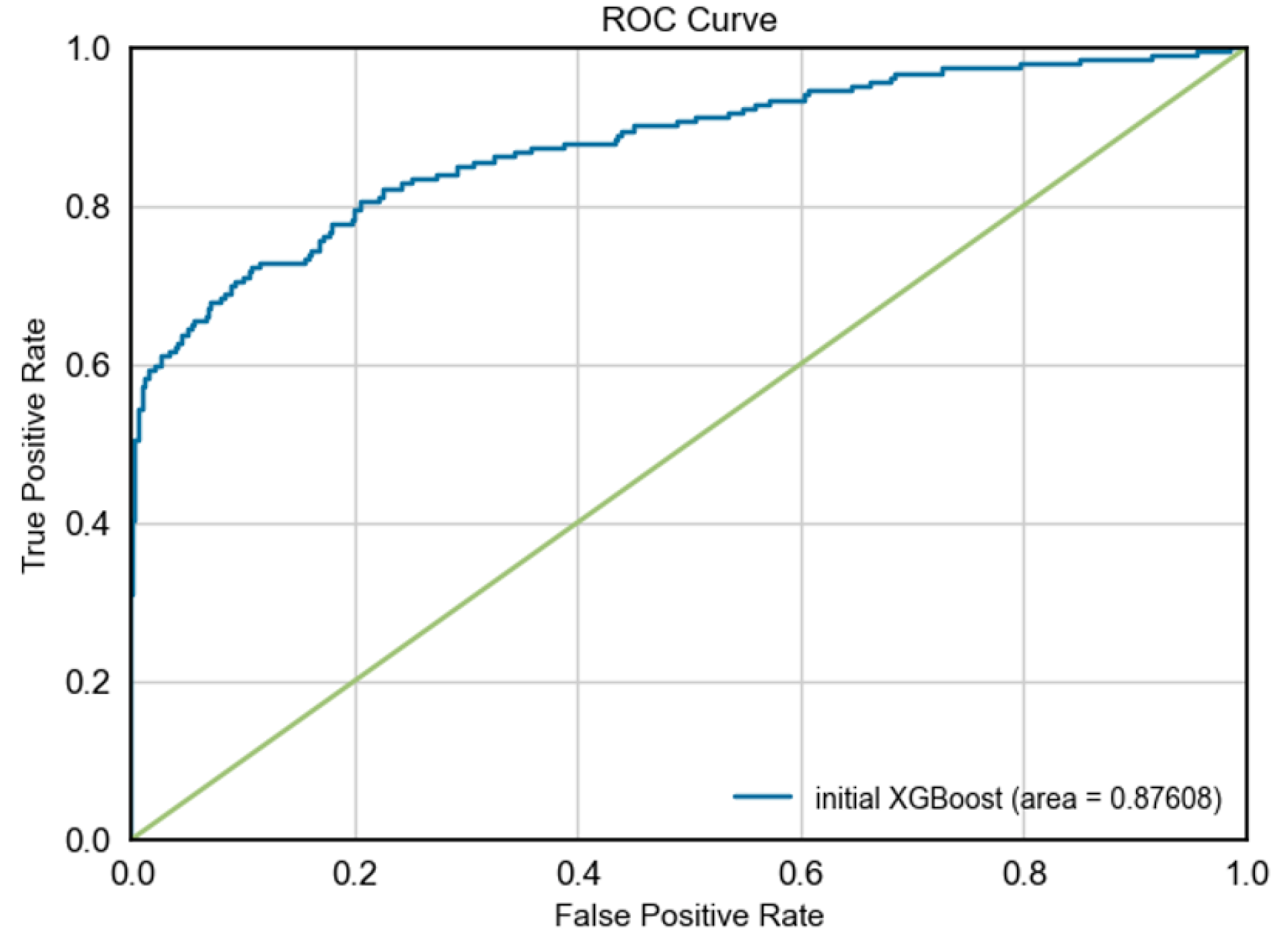

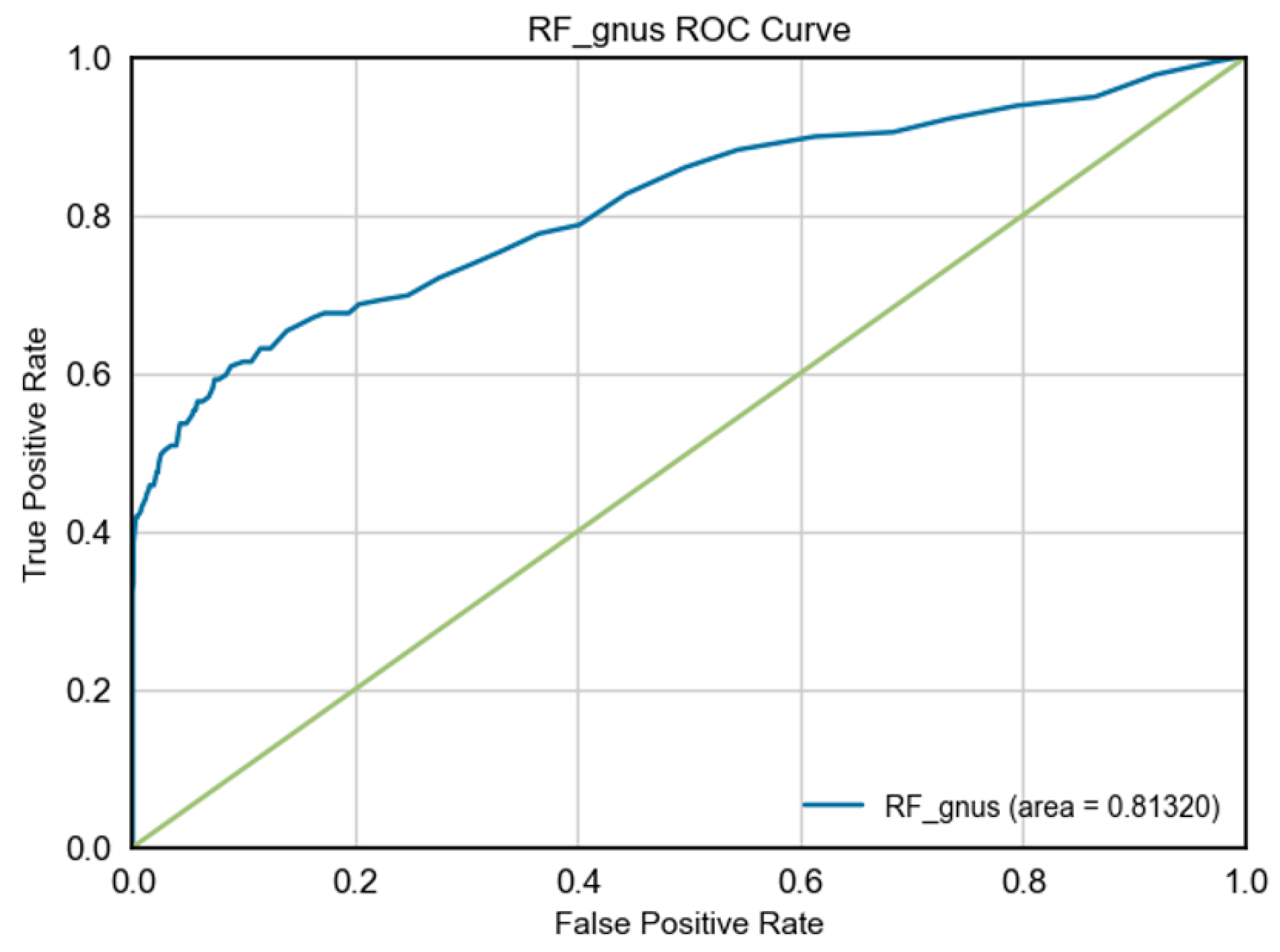

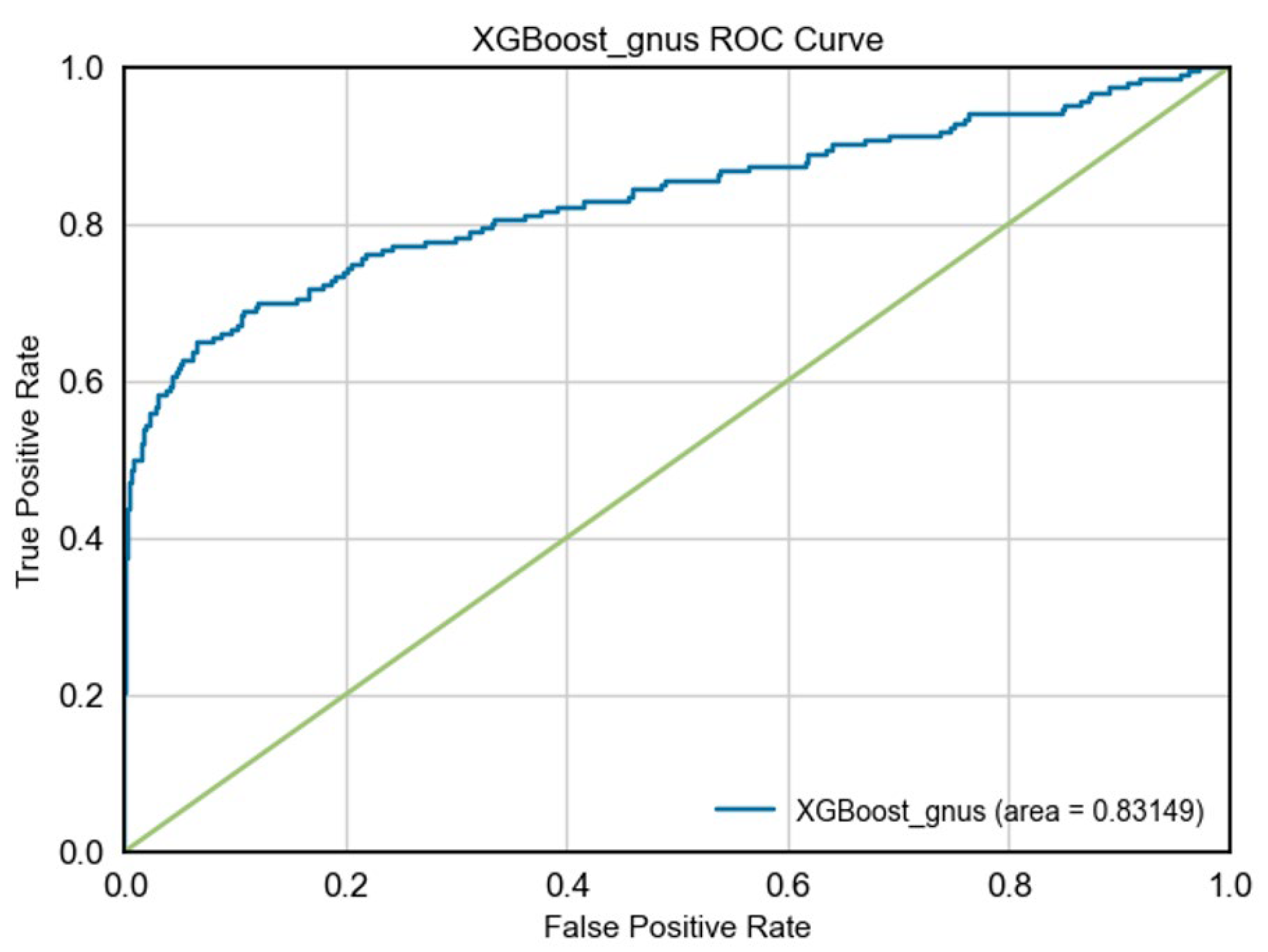

B. Results

Conclusions

References

- Vafeiadis, Thanasis & Diamantaras, Kostas & Sarigiannidis, G. & Chatzisavvas, Konstantinos. (2015). A Comparison of Machine Learning Techniques for Customer Churn Prediction. Simulation Modelling Practice and Theory. 55. 10.1016/j.simpat.2015.03.003.

- Ahmad, A.K., Jafar, A. & Aljoumaa, K. Customer churn prediction in telecom using machine learning in the big data platform. J Big Data 6, 28 (2019). https://doi.org/10.1186/s40537-019-0191-6.

- Kristof Coussement, Stefan Lessmann, Geert Verstraeten, A comparative analysis of data preparation algorithms for customer churn prediction: A case study in the telecommunication industry, Decision Support Systems, Volume 95, 2017, Pages 27-36, ISSN 0167-9236. https://doi.org/10.1016/j.dss.2016.11.007.

- Adnan Amin, Babar Shah, Asad Masood Khattak, Fernando Joaquim Lopes Moreira, Gohar Ali, Alvaro Rocha, Sajid Anwar, Cross-company customer churn prediction in telecommunication: A comparison of data transformation methods, International Journal of Information Management, Volume 46, 2019, Pages 304-319, ISSN 0268-4012. https://doi.org/10.1016/j.ijinfomgt.2018.08.015.

- D. Do, P. Huynh, P. Vo, and T. Vu, "Customer churn prediction in an internet service provider," 2017 IEEE International Conference on Big Data (Big Data), Boston, MA, USA, 2017, pp. 3928-3933.

- J. Burez, D. Van den Poel, Handling class imbalance in customer churn prediction, Expert Systems with Applications, Volume 36, Issue 3, Part 1, 2009, Pages 4626-4636, ISSN 0957-4174. https://doi.org/10.1016/j.eswa.2008.05.027.

- Yaya Xie, Xiu Li, E.W.T. Ngai, Weiyun Ying, Customer churn prediction using improved balanced random forests, Expert Systems with Applications, Volume 36, Issue 3, Part 1, 2009, Pages 5445-5449, ISSN 0957-4174. https://doi.org/10.1016/j.eswa.2008.06.121.

- Jadhav, Rahul & Pawar, Usharani. (2011). Churn Prediction in Telecommunication Using Data Mining Technology. International Journal of Advanced Computer Sciences and Applications. 2. 10.14569/IJACSA.2011.020204.

- T. Vafeiadis, K.I. Diamantaras, G. Sarigiannidis, K.Ch. Chatzisavvas, A comparison of machine learning techniques for customer churn prediction, Simulation Modelling Practice and Theory, Volume 55, 2015, Pages 1-9, ISSN 1569-190X. https://doi.org/10.1016/j.simpat.2015.03.003.

- Wouter Verbeke, David Martens, Christophe Mues, Bart Baesens, Building comprehensible customer churn prediction models with advanced rule induction techniques, Expert Systems with Applications, Volume 38, Issue 3, 2011, Pages 2354-2364, ISSN 0957-4174. https://doi.org/10.1016/j.eswa.2010.08.023.

- Ahmed, Mahreen & Afzal, Hammad & Siddiqi, Imran & Amjad, Muhammad & Khurshid, Khawar. (2020). Exploring nested ensemble learners using overproduction and choosing an approach for churn prediction in the telecom industry. Neural Computing and Applications. 32. 10.1007/s00521-018-3678-8.

- Kimura, Takuma. (2022). Customer Churn Prediction with Hybrid Resampling and Ensemble Learning. 1-23.

- Arno De Caigny, Kristof Coussement, Koen W. De Bock, A new hybrid classification algorithm for customer churn prediction based on logistic regression and decision trees, European Journal of Operational Research, Volume 269, Issue 2, 2018, Pages 760-772, ISSN 0377-2217, https://doi.org/10.1016/j.ejor.2018.02.009.

- Adnan Amin, Feras Al-Obeidat, Babar Shah, Awais Adnan, Jonathan Loo, Sajid Anwar, Customer churn prediction in telecommunication industry using data certainty, Journal of Business Research, Volume 94, 2019, Pages 290-301, ISSN 0148-2963. https://doi.org/10.1016/j.jbusres.2018.03.003.

- Xiaohang Zhang, Ji Zhu, Shuhua Xu, Yan Wan, Predicting customer churn through interpersonal influence, Knowledge-Based Systems, Volume 28, 2012, Pages 97-104, ISSN 0950-7051. https://doi.org/10.1016/j.knosys.2011.12.005.

- Wouter Verbeke, David Martens, Bart Baesens, Social network analysis for customer churn prediction, Applied Soft Computing, Volume 14, Part C, 2014, Pages 431-446, ISSN 1568-4946. https://doi.org/10.1016/j.asoc.2013.09.017.

- L. Bin, S. Peiji, and L. Juan, "Customer Churn Prediction Based on the Decision Tree in Personal Handyphone System Service," 2007 International Conference on Service Systems and Service Management, Chengdu, China, 2007, pp. 1-5.

- Jennifer Karlberg, Maja Axén. (2020). Binary Classification for Predicting Customer Churn. Department of Mathematics and Mathematical Statistics at Umeå University.

- Shaaban, Essam & Helmy, Yehia & Khedr, Ayman & Nasr, Mona. (2012). A Proposed Churn Prediction Model. International Journal of Engineering Research and Applications (IJERA). 2. 693-697.

- Imani, Mehdi, et al. "The Impact of SMOTE and ADASYN on Random Forest and Advanced Gradient Boosting Techniques in Telecom Customer Churn Prediction." 2024 10th International Conference on Web Research (ICWR). IEEE, 2024.

- Imani, Mehdi, and Hamid Reza Arabnia. "Hyperparameter optimization and combined data sampling techniques in machine learning for customer churn prediction: a comparative analysis." Technologies 11.6 (2023): 167. https://doi.org/10.3390/technologies11060167.

- Rinichristy, “Customer Churn Prediction 2020,” Kaggle, Dec. 12, 2022. https://www.kaggle.com/code/rinichristy/customer-churn-prediction-2020.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).