Submitted:

29 October 2024

Posted:

30 October 2024


Abstract

Keywords:

1. Introduction

- RT1: Tabular reinforcement learning will lead to more explainable policies while maintaining similar model performance to deep reinforcement learning methods when the training is done with non-noisy rewards.

- RT2: Noisy rewards in tabular reinforcement learning algorithms will lead to worse model performance in terms of collected rewards and make explanations of performed actions less interpretable compared to tabular reinforcement learning algorithms trained on non-noisy rewards.

- RQ1: How can reinforcement learning methods help to improve critical infrastructure management in the long term by ensuring accountability, resilience and adaptability?

2. State of the Art & Related Work

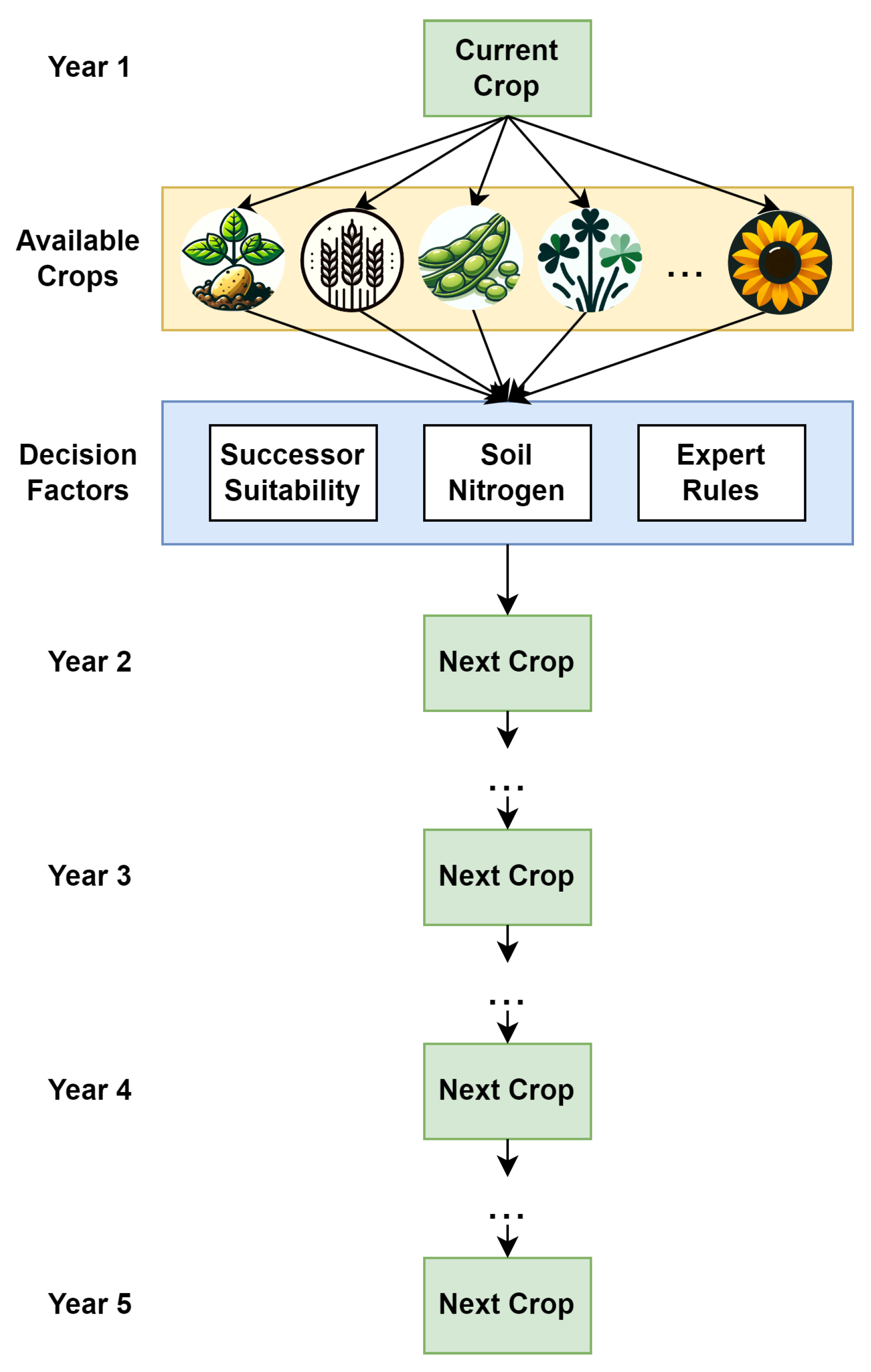

3. Methodology

3.1. Definitions

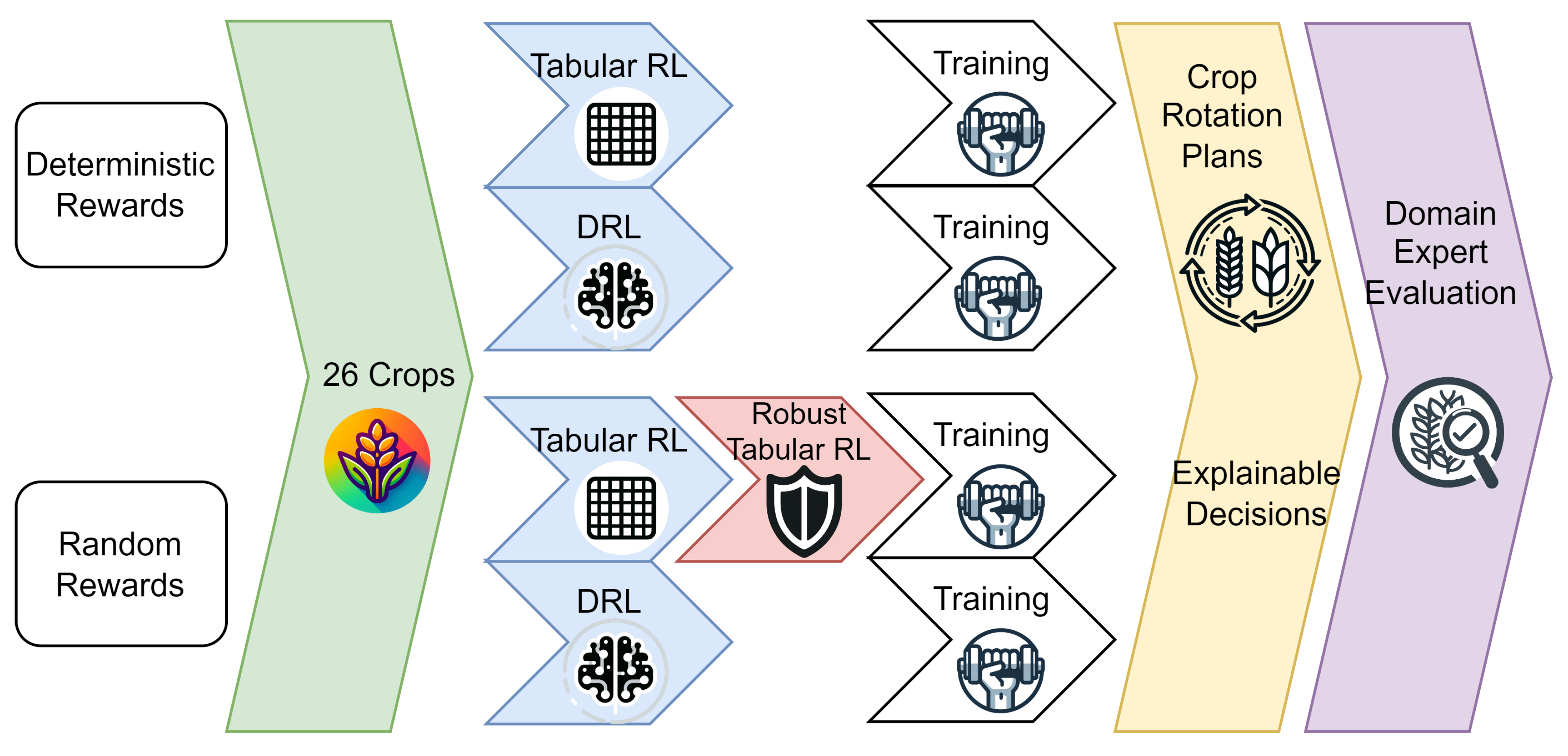

3.2. Reinforcement Learning Methods

3.3. Tabular Reinforcement Learning Methods

- Model-based vs. model-free techniques [27]. In model-based methods, rewards for state-action pairs visited during training are stored as memories, which enables tracing the learning behaviour but comes at a higher computational cost.

- On-policy vs. off-policy reinforcement learning [27]. The two variants differ in how the Q-value for each state-action pair is updated. In an on-policy setting, the Q-value update is based on the action the agent actually takes next, under the assumption that the current policy will be followed in the future. In contrast, an off-policy agent updates its Q-values as if it followed a greedy policy, even though it does not.

- A basic 1-step tabular Q-learning method that is off-policy and model-free. This is one of the simplest versions of reinforcement learning and is, therefore, easy to implement. However, it has no internal memory, which might lead to poor results when training on random rewards.
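The difference between the two update styles can be made concrete with a small sketch. The code below is an illustration only, not the implementation used in this work: the function names, the nested-list Q-table, and the values of the discount factor `gamma` and step size `alpha` are assumptions chosen for the example.

```python
def sarsa_target(q, reward, next_state, next_action, gamma=0.9):
    """On-policy target: bootstraps from the action the policy actually takes next."""
    return reward + gamma * q[next_state][next_action]


def q_learning_target(q, reward, next_state, gamma=0.9):
    """Off-policy target: bootstraps from the greedy action, whatever is taken next."""
    return reward + gamma * max(q[next_state])


def td_update(q, state, action, target, alpha=0.1):
    """Move q[state][action] a fraction alpha towards the target (in place)."""
    q[state][action] += alpha * (target - q[state][action])
    return q[state][action]


# Toy Q-table with two states and two actions (illustrative values).
q = [[0.0, 0.0], [1.0, 5.0]]

# The agent explores and picks the non-greedy action 0 in the next state.
on_policy = sarsa_target(q, reward=2.0, next_state=1, next_action=0)
off_policy = q_learning_target(q, reward=2.0, next_state=1)
```

With an exploratory next action, the on-policy target is lower than the off-policy one, because only the latter assumes that the best action will be taken.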

- DynaQ is an off-policy and model-based algorithm that is more advanced than the 1-step tabular Q-learning as it combines learning and planning by maintaining a model of the environment. The model, which can essentially be seen as memory, is useful for deriving explainable policies and can ensure good learning results even when rewards for the same state-action pair vary over the course of training. The reason for this is that, in addition to the exploration and exploitation phases, the algorithm contains a planning step that can be adapted to achieve good learning results. Maintaining and updating the model increases the computational cost compared to the 1-step tabular Q-learning.

- The Expected SARSA algorithm will be tested as an on-policy alternative. This agent is a model-free variation of the frequently used SARSA algorithm that updates its Q-values based on the average Q-values of future actions. The idea behind choosing Expected SARSA over its basic version was that fluctuations based on perturbed rewards might be mitigated by selecting actions based on the expected value.
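As an illustration of how Expected SARSA averages over future actions, the following sketch computes its update target. It assumes an epsilon-greedy behaviour policy, which is a common choice but is not confirmed by the text above; the function names and the values of `gamma` and `epsilon` are likewise hypothetical.

```python
def epsilon_greedy_probs(q_values, epsilon):
    """Action probabilities of an epsilon-greedy policy (assumed policy form)."""
    n = len(q_values)
    best = max(range(n), key=lambda a: q_values[a])
    probs = [epsilon / n] * n
    probs[best] += 1.0 - epsilon
    return probs


def expected_sarsa_target(q_next, reward, gamma=0.9, epsilon=0.1):
    """Target = reward + gamma * policy-weighted average of the next Q-values."""
    probs = epsilon_greedy_probs(q_next, epsilon)
    expected_q = sum(p * q for p, q in zip(probs, q_next))
    return reward + gamma * expected_q


# Next-state Q-values for two actions (illustrative numbers).
target = expected_sarsa_target([1.0, 3.0], reward=1.0, gamma=0.5, epsilon=0.2)
```

Because the target is an average over all next actions rather than the value of one sampled action, a single unusual action choice has less influence on the update.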

3.4. Markov Property

- ?

- ?

- 26 x 26

- 676 x 26

- 17,576 x 26

- 456,976 x 26

- 26 x 26

- 578 x 26

- 11,648 x 26

- 206,002 x 26
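The first four sizes listed above agree with powers of 26, which is what one would get if a state encodes the last k crops out of 26 and there are 26 actions. That reading is an assumption, since the column headers did not survive. The second group of sizes is smaller and is not reproduced here, because it is not clear from the text which sequences were excluded. A minimal sketch of the arithmetic:

```python
N_CROPS = 26  # assumption: read off the "26 x 26" entry above


def q_table_shape(history_length, n_crops=N_CROPS):
    """Upper bound on (states, actions) if a state is the last `history_length` crops."""
    return (n_crops ** history_length, n_crops)


# Table shapes for histories of one to four crops.
shapes = [q_table_shape(k) for k in range(1, 5)]
```

The exponential growth in the number of rows shows why longer crop histories quickly become expensive for tabular methods.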

3.5. Robustifying Tabular Algorithms

- In the weight w, the fraction gets taken to the power of , thus punishing small differences between and less, compared to choosing a larger value in the exponent while still having a reasonably fast decay for larger differences of and

- The factor is bound to the interval , which ensures the importance of the Q-values in the decision-making, as bounds of 0 and 1 would almost exclusively base the decisions on the number of observations.

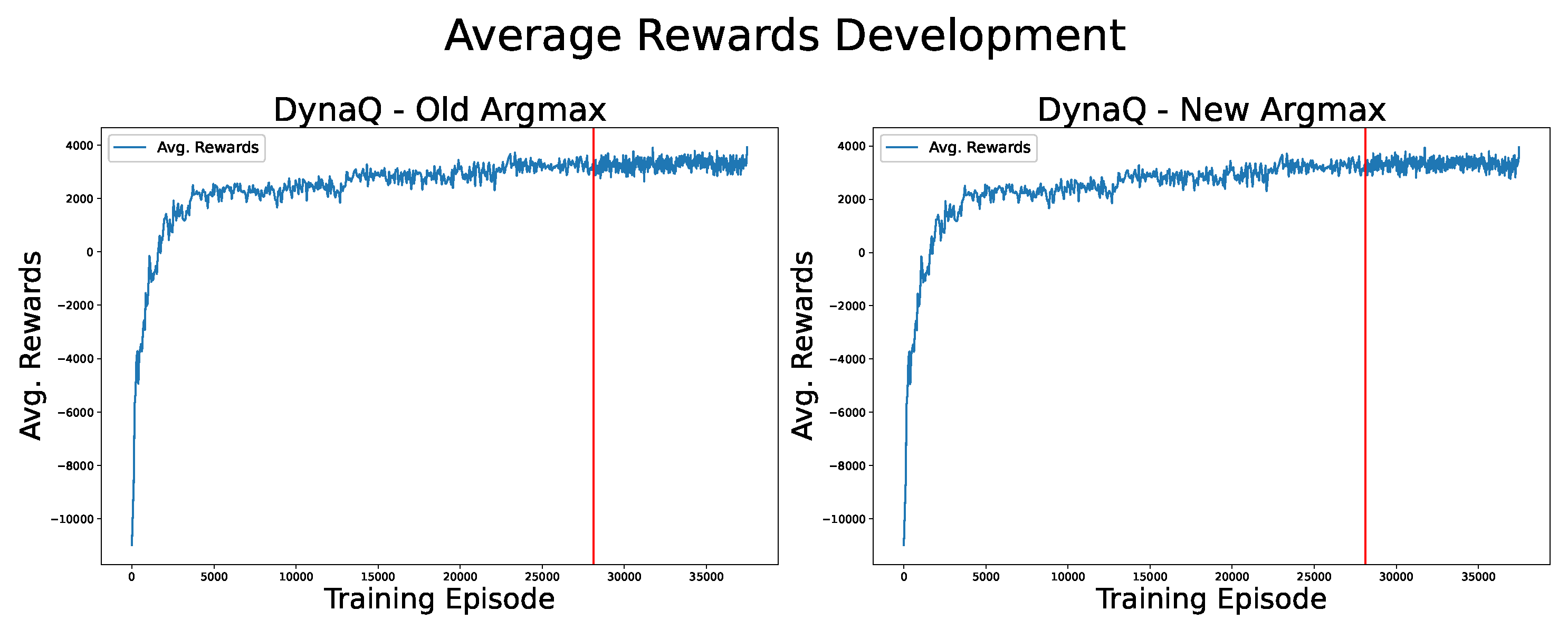

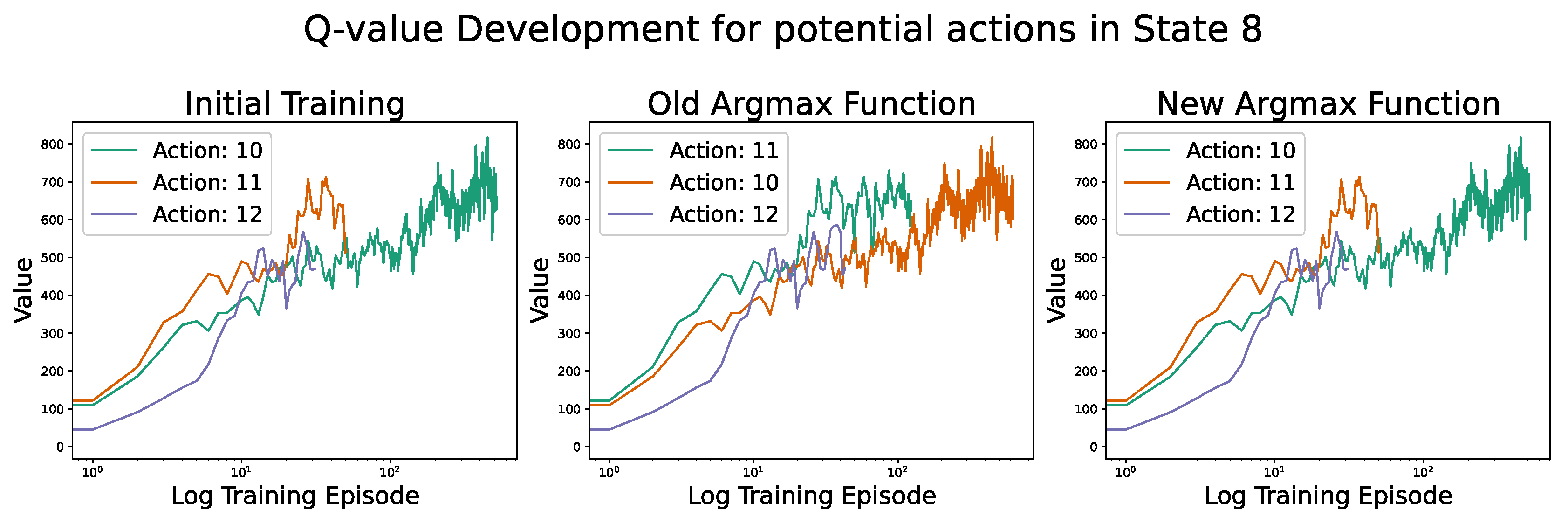

- The new argmax function was only used after 75 per cent of the training was done on the regular argmax function because the new argmax still favours more frequently updated decisions. This hinders training initially as exploration is more important, but later in the process, the new argmax helps to improve over regular exploitation.
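The switching schedule described above can be sketched as follows. The new argmax itself is not reproduced, since its formula is not recoverable from this section; it is passed in as a callable, and every name in the code (`select_greedy_action`, `robust_argmax`, `switch_fraction`) is hypothetical.

```python
def regular_argmax(q_values):
    """Index of the largest Q-value (first one wins on ties)."""
    return max(range(len(q_values)), key=lambda a: q_values[a])


def select_greedy_action(q_values, step, total_steps, robust_argmax,
                         switch_fraction=0.75):
    """Use the regular argmax early in training and the supplied one afterwards."""
    if step < switch_fraction * total_steps:
        return regular_argmax(q_values)
    return robust_argmax(q_values)


# Stand-in for the new argmax, used only to show the switch; NOT the paper's rule.
def stand_in_argmax(q_values):
    return 0


early = select_greedy_action([1.0, 3.0, 2.0], step=10, total_steps=100,
                             robust_argmax=stand_in_argmax)
late = select_greedy_action([1.0, 3.0, 2.0], step=80, total_steps=100,
                            robust_argmax=stand_in_argmax)
```

Keeping the selection rule as a parameter makes it straightforward to compare different switch points without touching the training loop.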

3.6. Robust Planning

- Firstly, one way to ensure that all rewards to the same state-action pair are of equal importance is to collect all of these rewards and, in the planning phase, randomly select one of them to be used in the planning step.

- Secondly, another way of thinking about these rewards and giving equal importance to each of them is to construct an estimator for the mean of the underlying distribution and use this value as a target in the planning phase. Implementing this estimator is simple, as the average of the observed rewards is an unbiased estimator of the true mean of the reward distribution. Using the unweighted average also puts equal importance on each observed reward.
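The two planning strategies above can be sketched with a small reward model that keeps every observed reward per state-action pair. This is a minimal illustration under assumptions: the class name `RewardModel`, its methods, and the crop names and reward values in the example are hypothetical and not taken from the implementation used in this work.

```python
import random
from collections import defaultdict


class RewardModel:
    """Stores all rewards observed for each (state, action) pair."""

    def __init__(self):
        self.rewards = defaultdict(list)

    def observe(self, state, action, reward):
        self.rewards[(state, action)].append(reward)

    def sample_target(self, state, action, rng=random):
        """Strategy 1: pick one stored reward uniformly at random."""
        return rng.choice(self.rewards[(state, action)])

    def mean_target(self, state, action):
        """Strategy 2: use the unweighted average of the stored rewards."""
        observed = self.rewards[(state, action)]
        return sum(observed) / len(observed)


model = RewardModel()
for r in (10.0, 20.0, 30.0):  # three noisy rewards for the same pair
    model.observe("wheat", "maize", r)

sampled = model.sample_target("wheat", "maize", rng=random.Random(0))
averaged = model.mean_target("wheat", "maize")
```

Both targets treat each observation equally; the sampled target keeps the variability of the rewards in the planning step, while the averaged target smooths it out.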

3.7. Deep Reinforcement Learning Method

3.8. Setting the Random Rewards

4. Experimental Design and Setup

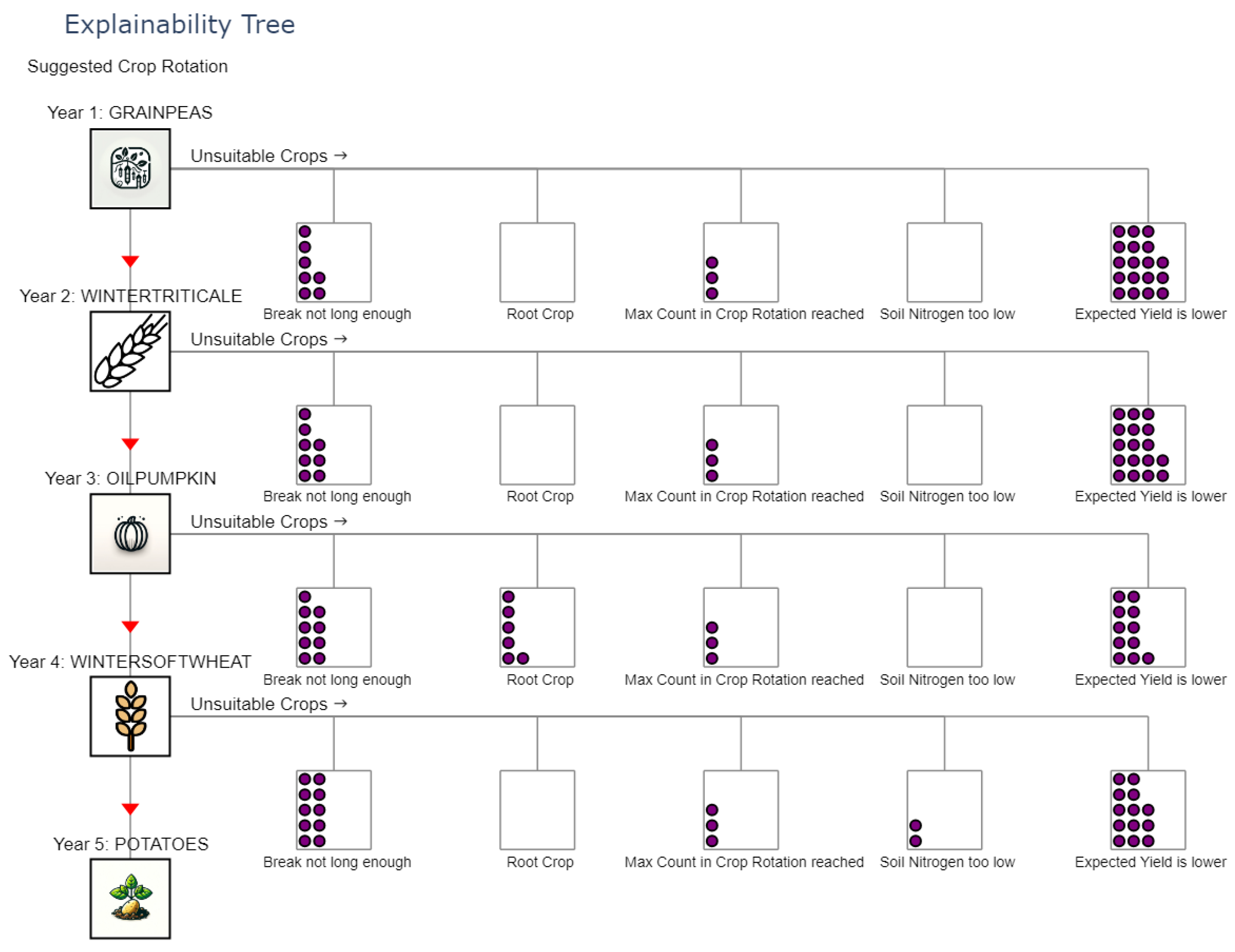

- Soil nitrogen levels must not fall below 0

- There is a long enough break before planting the same crop again

- There cannot be two root crops in direct succession

- A crop can only occur a fixed number of times within a crop rotation plan
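The four constraints listed above could be checked along the following lines. This is a sketch under assumptions: the function name, the way the nitrogen level is passed in, and the concrete thresholds (`min_break`, `max_occurrences`) and crop names are all hypothetical placeholders, not values from the experimental setup.

```python
def is_valid_next_crop(history, candidate, nitrogen_after, root_crops,
                       min_break, max_occurrences):
    """Return True if planting `candidate` after `history` satisfies all four rules."""
    # 1. Soil nitrogen must not fall below 0.
    if nitrogen_after < 0:
        return False
    # 2. The same crop needs a break of at least `min_break` seasons.
    recent = history[-min_break:] if min_break > 0 else []
    if candidate in recent:
        return False
    # 3. No two root crops in direct succession.
    if history and history[-1] in root_crops and candidate in root_crops:
        return False
    # 4. A crop may occur only a fixed number of times in the plan.
    if history.count(candidate) >= max_occurrences:
        return False
    return True


ROOT_CROPS = {"potato", "sugar beet"}  # hypothetical root-crop set

ok = is_valid_next_crop(["potato", "wheat"], "sugar beet", nitrogen_after=10.0,
                        root_crops=ROOT_CROPS, min_break=2, max_occurrences=2)
```

Such a check can be used to mask invalid actions before the agent selects the next crop, or to penalise invalid choices through the reward.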

5. Results

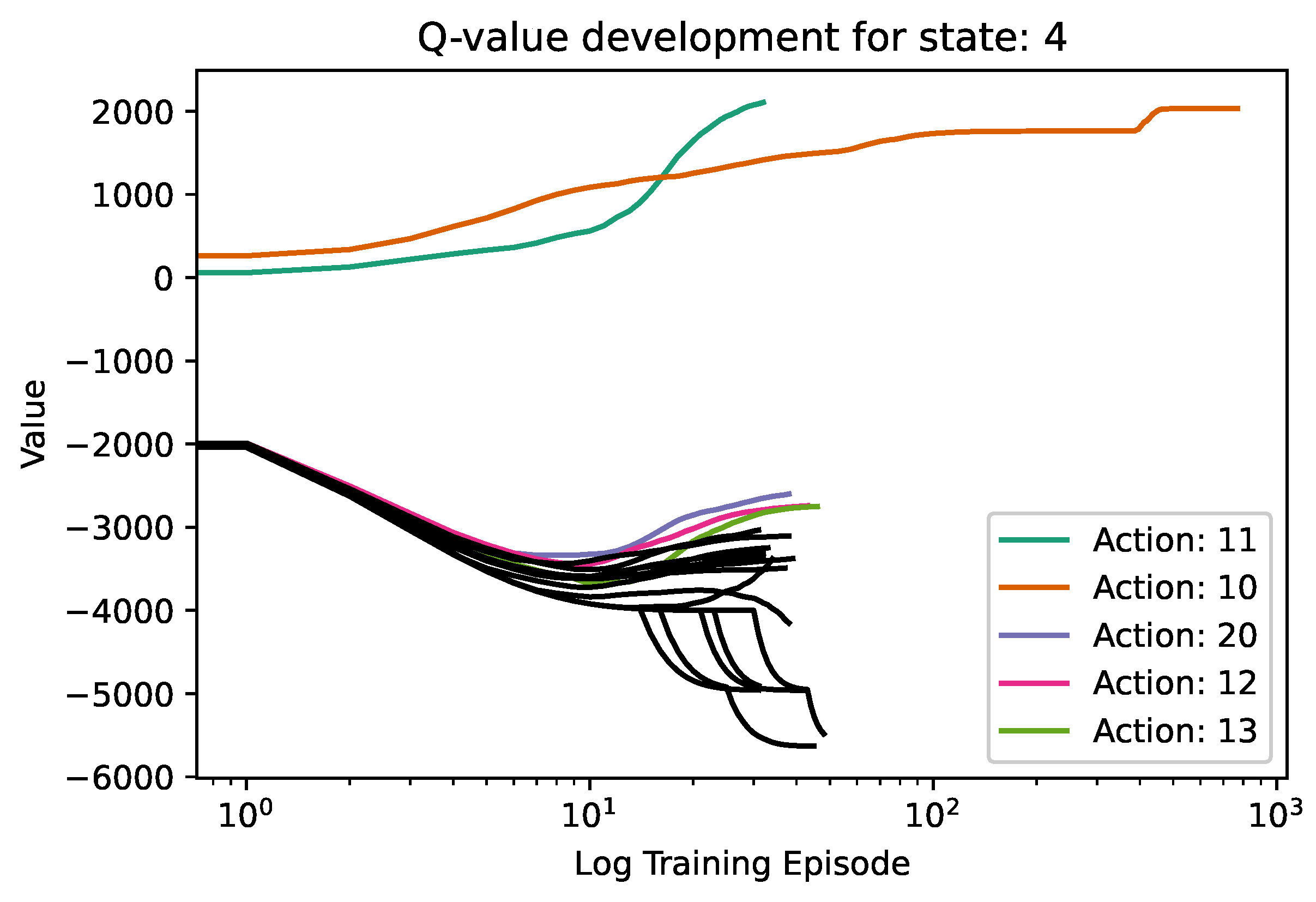

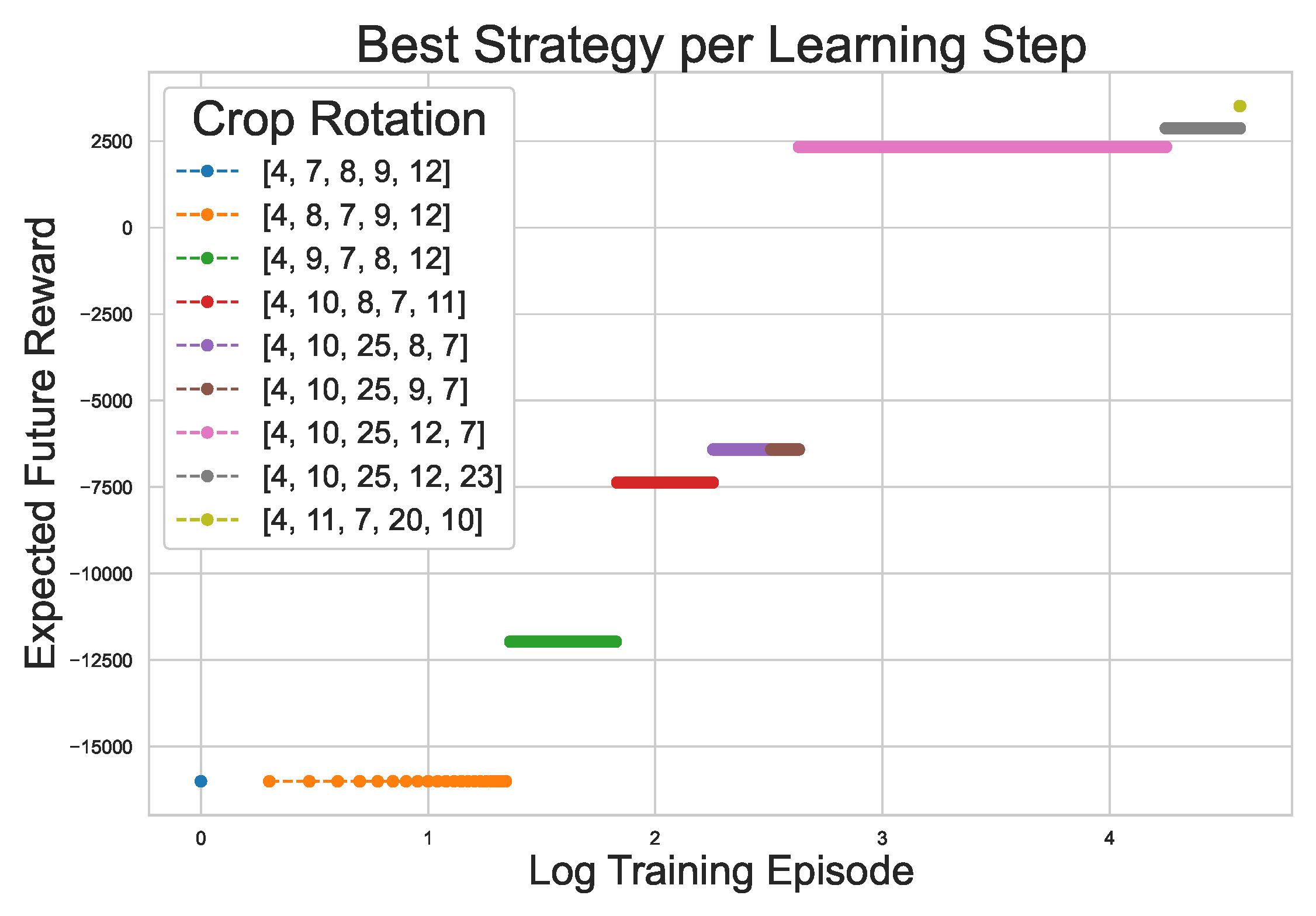

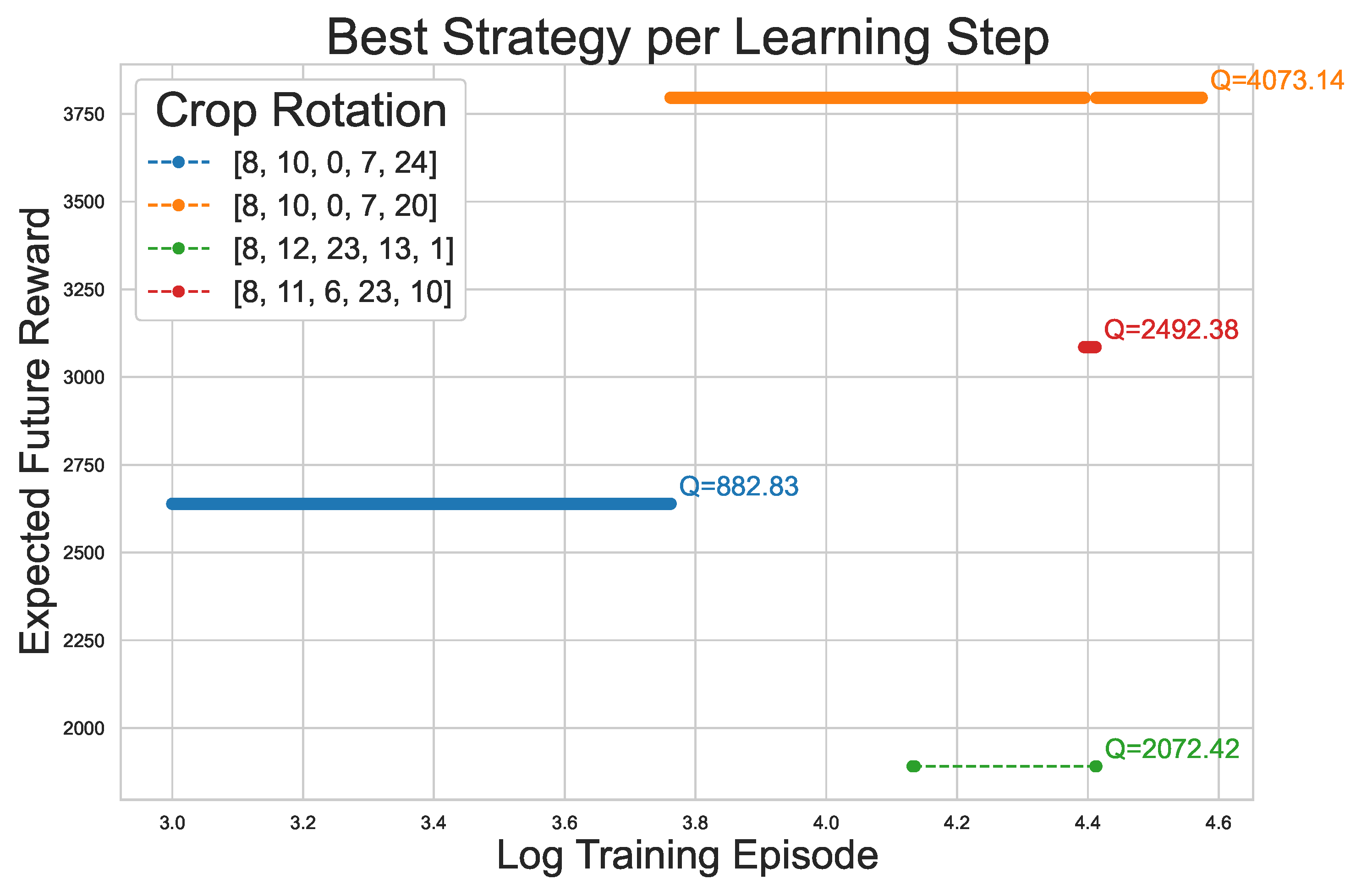

5.1. DRL vs. Tabular RL: Non-Random Rewards

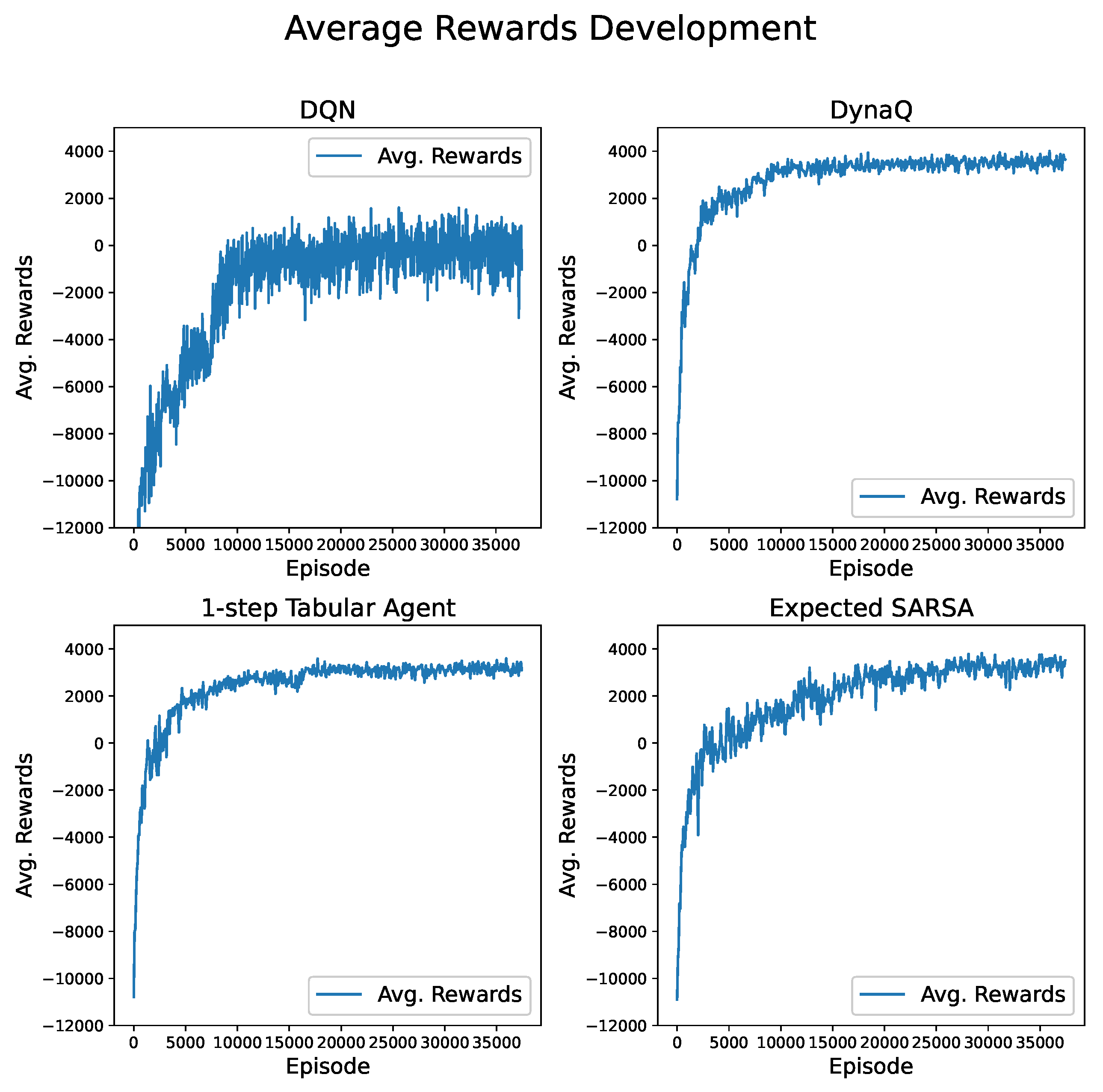

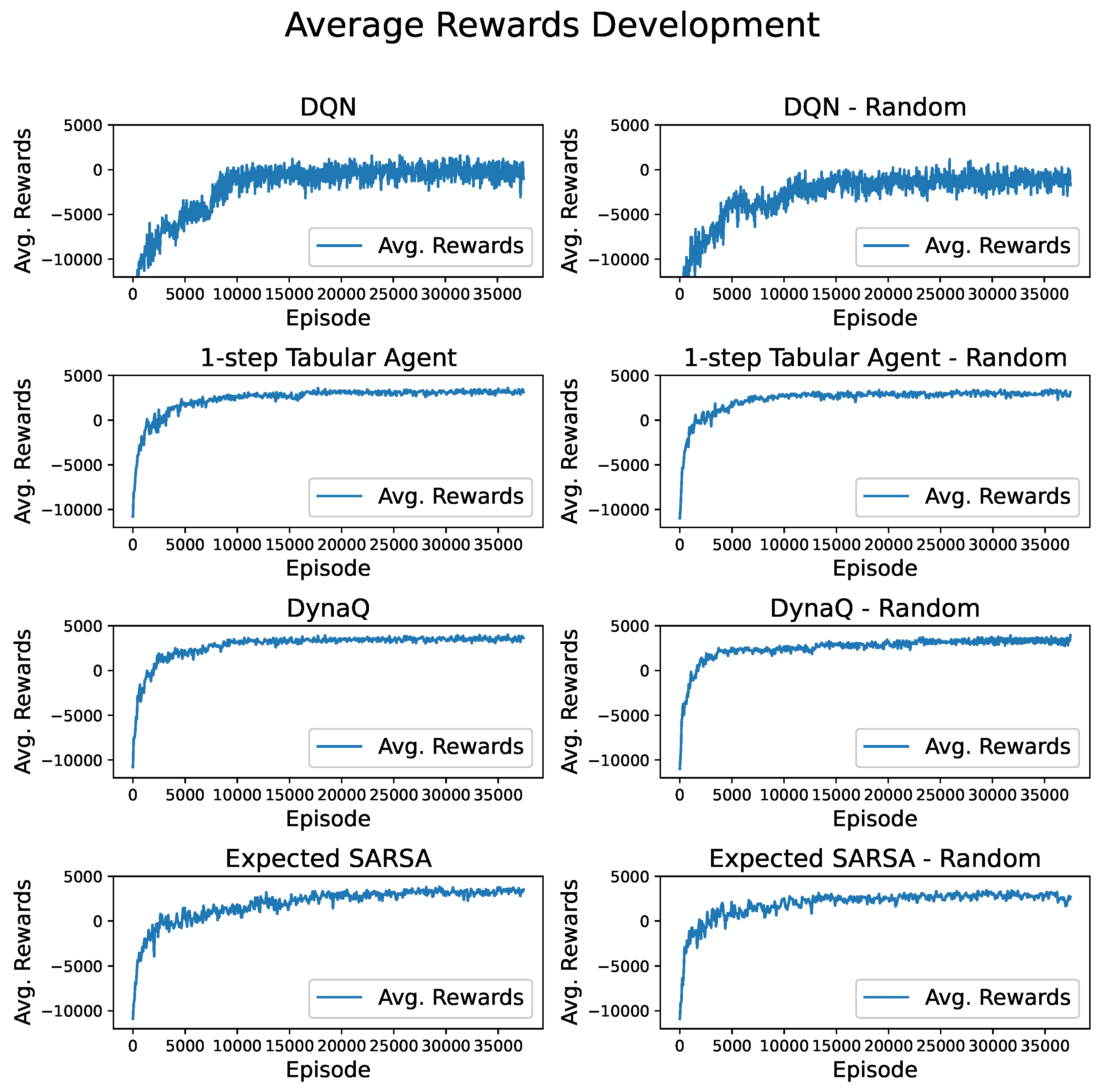

5.1.1. Overall Rewards During Training

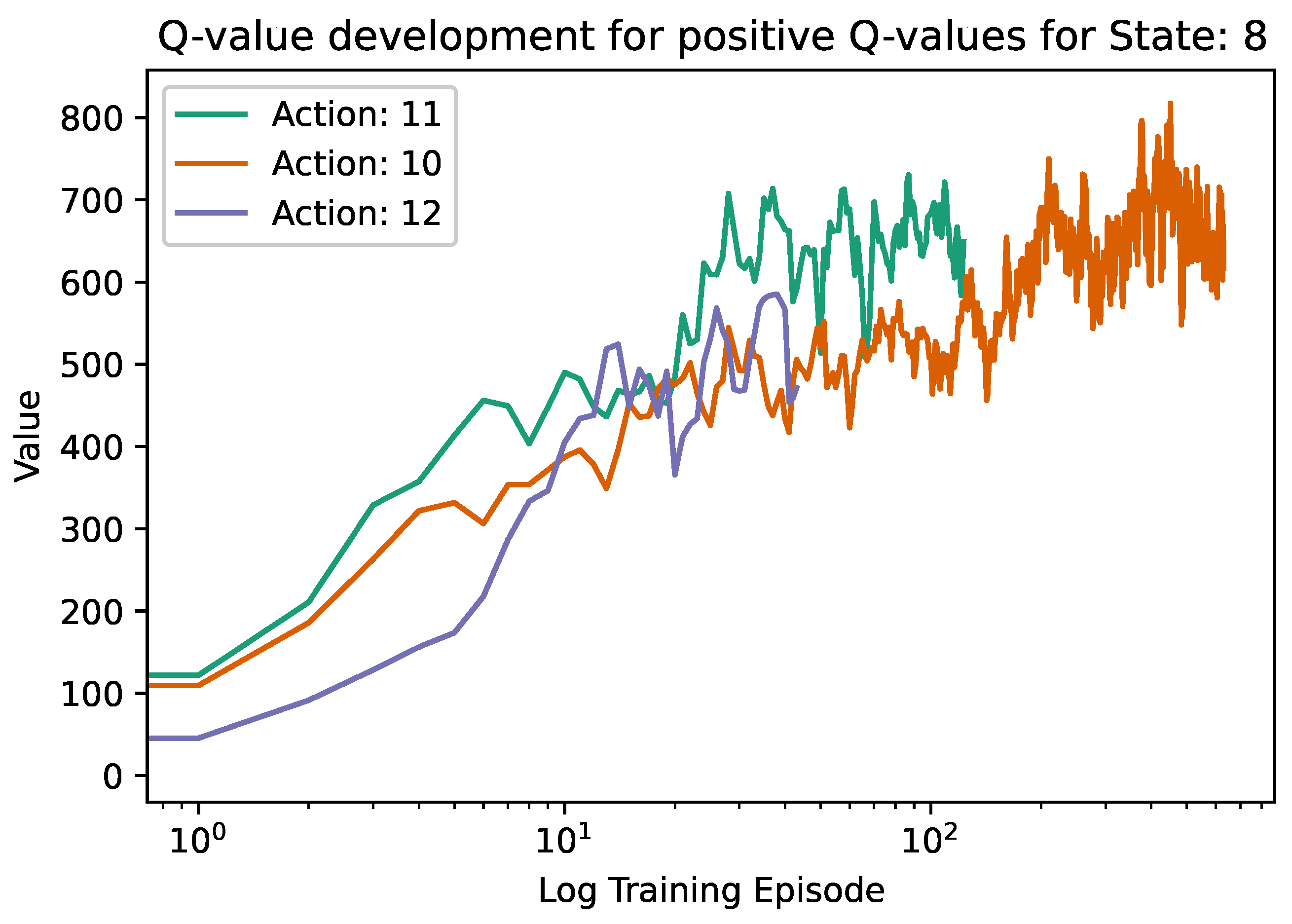

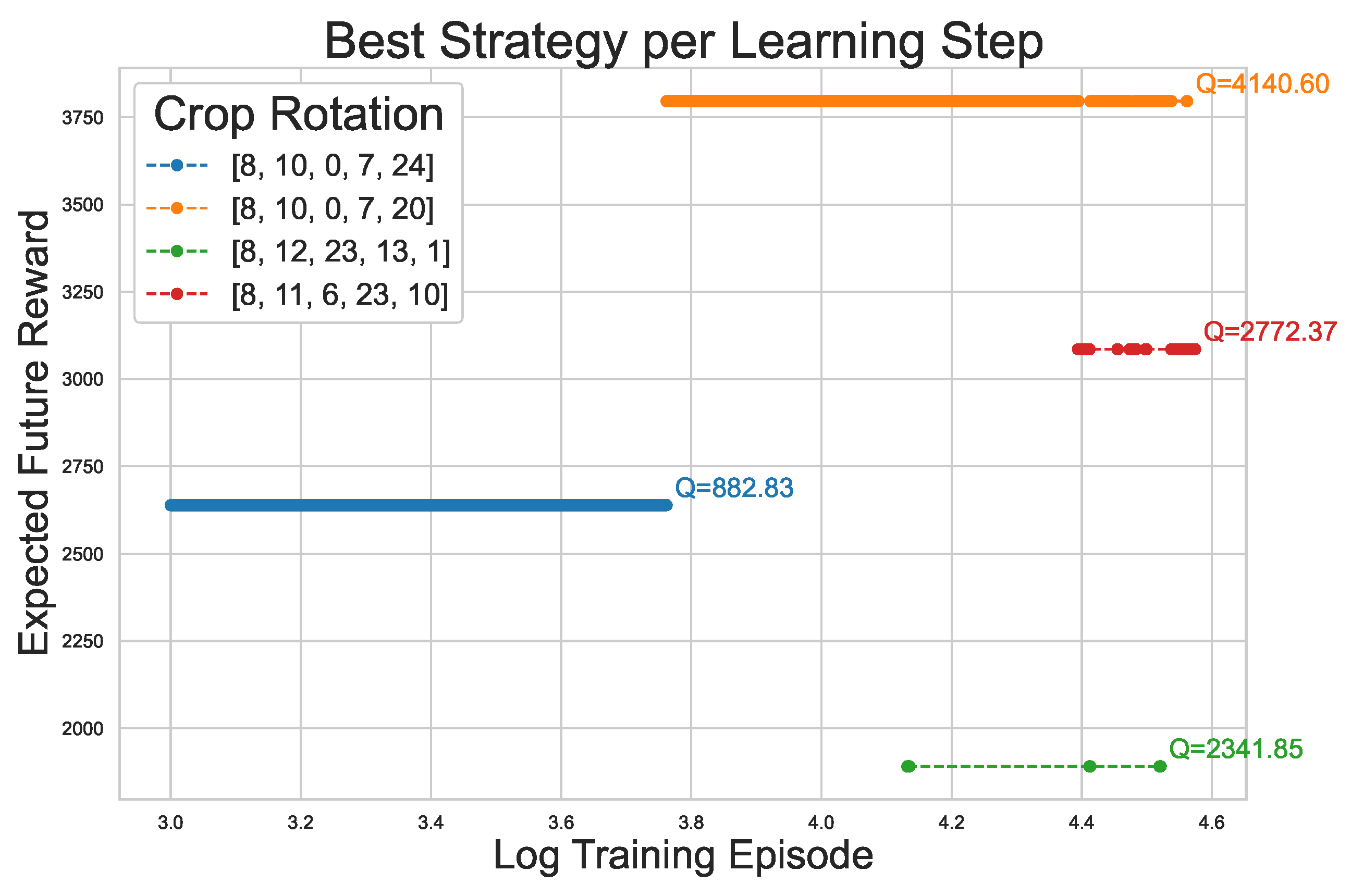

5.1.2. Explainability for non-Random Rewards

5.2. DRL vs. Tabular RL: Random Rewards

5.3. Overall Rewards During Training

5.3.1. Update Argmax for Exploration

5.3.2. Differing Planning Strategies for DynaQ

5.4. Robust Random Reward learning vs. non-Random Reward Learning

5.4.1. Summary

5.5. Domain Expert Evaluation

6. Discussion

- A more accurate real-world representation of crop yields, modelled by randomised rewards in the learning process, decreases the quality of crop rotation policies learned by reinforcement learning agents.

- Robustification measures guide the agents towards overcoming some of the negative influences of the noisy rewards and, therefore, lead to better and more resilient crop rotation plans.

- The interviewed domain experts overall favoured the crop rotation plans obtained from the noisy reward training, indicating that accounting for external factors that influence crop yields improves the quality of the crop rotation plans.

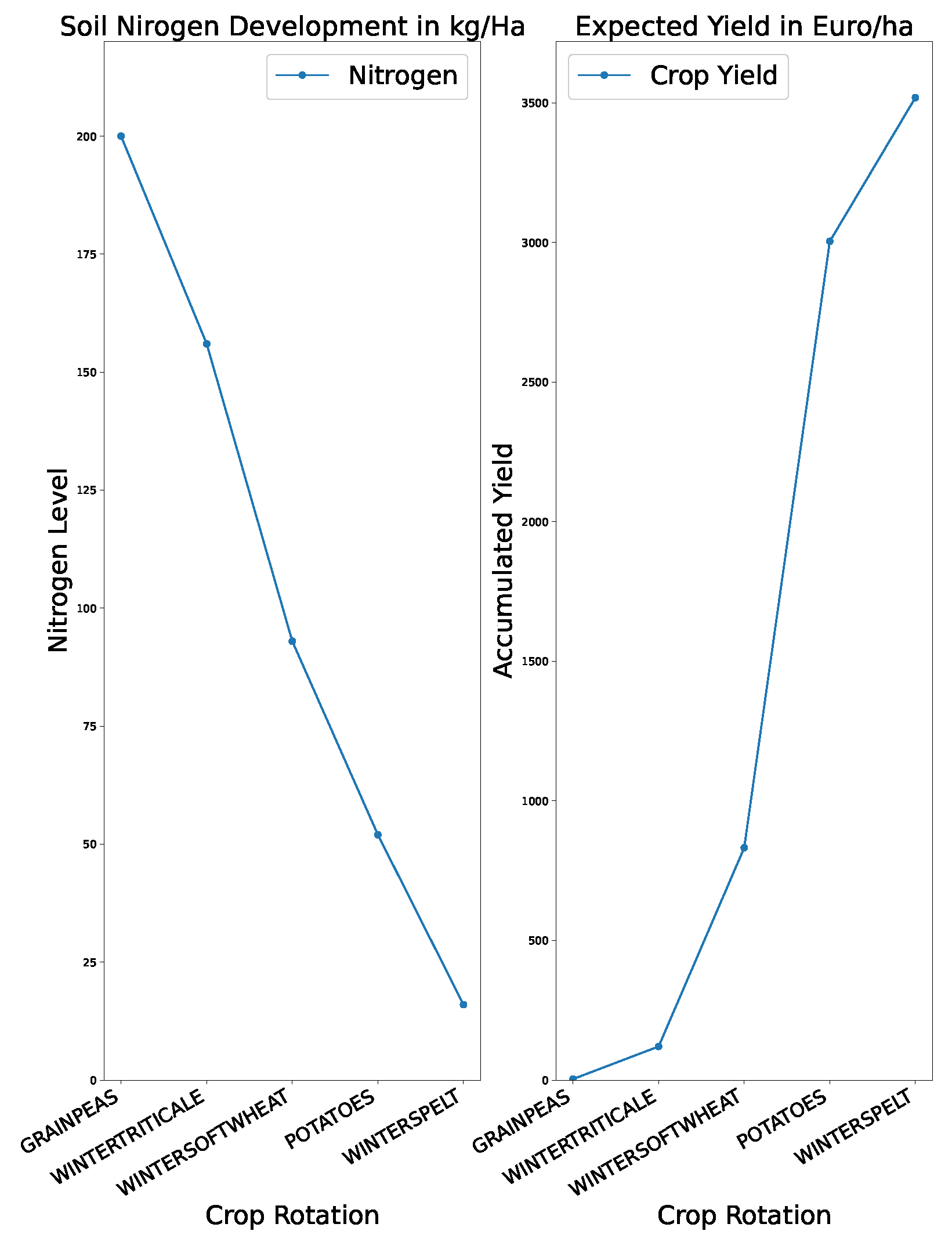

- Explanatory graphics, such as the ones presented in this work, are deemed necessary by the domain experts to understand why a crop rotation plan was suggested and what effects following that plan has on the income and the field.

7. Conclusion & Future Research

Acknowledgments

Appendix A

- Arbeiten Sie aktuell mit wechselnden Fruchtfolgen? JA □ NEIN □

- Verwenden Sie bereits bestimmte Programme oder künstliche Intelligenz (KI) zur Unterstützung bei der Fruchtfolgeplanung? JA □ NEIN □

- (a) Wenn ja, welche Programme oder KI verwenden Sie?

- (b) Wenn ja, wird bei dem Programm oder der KI der Entscheidungsprozess erklärt?

- (c) Wenn nein, was sind die Gründe, warum Sie keine KI oder andere Software verwenden?

- Das erwartete Einkommen pro Hektar für eine Pflanze verändert sich nicht von Jahr zu Jahr = Risiko nicht berücksichtigt

- Das erwartete Einkommen pro Hektar für eine Pflanze unterliegt gewissen Schwankungen, die z.B. durch das Wetter hervorgerufen werden können = Risiko berücksichtigt

- Können Sie aus den folgenden Grafiken erkennen, warum das Modell zu seinen Entscheidungen gekommen ist? Können Sie die Folgen und Risiken abschätzen, die der Anbau einer Pflanze hätte? (Anmerkung: Hier wurden den Experten Grafik 7 und Grafik 8 gezeigt) JA □ NEIN □

- Hätten Sie mehr Vertrauen in Fruchtfolgepläne, die von einer KI vorgeschlagen werden, wenn erklärende Grafiken, wie die gerade gezeigten, verwendet werden, um den Entscheidungsprozess nachvollziehbar zu machen? JA □ NEIN □ Warum?

- Welche Bedenken haben Sie beim Einsatz von KI in der Fruchtfolgeplanung?

- Welche Möglichkeiten sehen Sie beim Einsatz von KI in der Fruchtfolgeplanung?

- Do you currently work with changing crop rotations? YES □ NO □

- Do you already use any programs or artificial intelligence (AI) tools to support your crop rotation planning? YES □ NO □

- (a) If yes, which programs or AI tools do you use?

- (b) If yes, does the program or AI tool focus on explaining the decision-making process?

- (c) If no, what are the reasons that you do not use any software or AI tool?

- The expected income per hectare for a crop does not change from year to year = Risk not considered

- The expected income per hectare for a crop is subject to fluctuations, which might arise, for example, due to weather patterns = Risk considered

- Can you recognise from the following diagrams why the model came to its decisions? Can you estimate the consequences and risks of growing a plant? (Note: Here, we showed Figure 7 and Figure 8 to the domain experts) YES □ NO □

- Would you have more confidence in crop rotation plans proposed by an AI if explanatory graphics, such as those just shown, were used to make the decision-making process comprehensible? YES □ NO □ Why?

- What concerns do you have about the use of AI in crop rotation planning?

- What opportunities do you see for the use of AI in crop rotation planning?

References

- Adam, S., Busoniu, L., & Babuska, R. (2012). Experience Replay for Real-Time Reinforcement Learning Control. IEEE Transactions On Systems, Man, And Cybernetics, Part C (Applications And Reviews).

- Abounadi, J., Bertsekas, D., & Borkar, V. (2001). Learning Algorithms for Markov Decision Processes with Average Cost. SIAM Journal On Control And Optimization, 40, 681–698.

- Bellemare, M., Dabney, W., & Munos, R. (2017). A Distributional Perspective on Reinforcement Learning. Proceedings Of The 34th International Conference On Machine Learning, 70, 449–458. https://proceedings.mlr.press/v70/bellemare17a.html

- Bellman, R. Dynamic Programming. Science, 153, 34–37. https://www.science.org/doi/abs/10.1126/science.153.3731.34

- Botín-Sanabria, D., Mihaita, A., Peimbert-García, R., Ramírez-Moreno, M., Ramírez-Mendoza, R., & Lozoya-Santos, J. Digital Twin Technology Challenges and Applications: A Comprehensive Review. Remote Sensing, 14. https://www.mdpi.com/2072-4292/14/6/1335

- Brucherseifer, E., Winter, H., Mentges, A., Mühlhäuser, M., & Hellmann, M. (2021). Digital Twin Technology Challenges and Applications: A Comprehensive Review. At - Automatisierungstechnik, 69, 1062–1080.

- Burgos, D., & Ivanov, D. (2021). Food retail supply chain resilience and the COVID-19 pandemic: A digital twin-based impact analysis and improvement directions. Transportation Research Part E: Logistics And Transportation Review, 152, 102412.

- Casella, G., & George, E. (1992). Explaining the Gibbs Sampler. The American Statistician, 46, 167–174. https://www.tandfonline.com/doi/abs/10.1080/00031305.1992.10475878

- Fenz, S., Neubauer, T., Friedel, J., & Wohlmuth, M. (2023). AI- and data-driven crop rotation planning. Computers And Electronics In Agriculture, 212, 108160. https://www.sciencedirect.com/science/article/pii/S0168169923005483

- Fenz, S., Neubauer, T., Heurix, J., Friedel, J., & Wohlmuth, M. (2023). AI- and data-driven pre-crop values and crop rotation matrices. European Journal Of Agronomy, 150, 126949. https://www.sciencedirect.com/science/article/pii/S1161030123002174

- Goldenits, G., Mallinger, K., Raubitzek, S., & Neubauer, T. (2024). Current applications and potential future directions of reinforcement learning-based Digital Twins in agriculture.

- Grieves, M., & Vickers, J. (2017). Digital Twin: Mitigating Unpredictable, Undesirable Emergent Behavior in Complex Systems. Transdisciplinary Perspectives On Complex Systems: New Findings And Approaches, pp. 85–113.

- Karr, A. (1990). Chapter 2 Markov processes. Stochastic Models, 2, 95–123. https://www.sciencedirect.com/science/article/pii/

- Kolmogorov, A. (1951). The Kolmogorov-Smirnov Test for Goodness of Fit. Journal Of The American Statistical Association, 46, 68–78.

- Li, Y. (2018). Deep Reinforcement Learning: An Overview.

- Lobell, D., & Gourdji, S. (2012). The Influence of Climate Change on Global Crop Productivity. Plant Physiology, 160, 1686–1697.

- Malhi, G., Kaur, M., & Kaushik, P. (2021). Impact of Climate Change on Agriculture and Its Mitigation Strategies: A Review. Sustainability, 13.

- Manschadi, A., Eitzinger, J., Breisch, M., Fuchs, W., Neubauer, T., & Soltani, A. (2021). Full Parameterisation Matters for the Best Performance of Crop Models: Inter-comparison of a Simple and a Detailed Maize Model. International Journal Of Plant Production, 15, 61–78.

- Manschadi, A., Palka, M., Fuchs, W., Neubauer, T., Eitzinger, J., Oberforster, M., & Soltani, A. (2022). Performance of the SSM-iCrop model for predicting growth and nitrogen dynamics in winter wheat. European Journal Of Agronomy, 135, 126487. https://www.sciencedirect.com/science/article/pii/S1161030122000351

- Milani, S., Topin, N., Veloso, M., & Fang, F. (2022). A Survey of Explainable Reinforcement Learning.

- Mohler, C. (2009). Crop rotation on organic farms: a planning manual. Natural Resource, Agriculture.

- Neubauer, T., Bauer, A., Heurix, J., Iwersen, M., Mallinger, K., Manschadi, A., Purcell, W., & Rauber, A. (2024). Nachhaltige Digitale Zwillinge in der Landwirtschaft. Zeitschrift Für Hochschulentwicklung.

- Purcell, W., & Neubauer, T. (2023). Digital Twins in Agriculture: A State-of-the-art review. Smart Agricultural Technology, 3, 100094. https://www.sciencedirect.com/science/article/pii/S2772375522000594 (accessed 2024-01-11).

- Shi, C., Wan, R., Song, R., Lu, W., & Leng, L. (2020). Does the Markov Decision Process Fit the Data: Testing for the Markov Property in Sequential Decision Making. Proceedings Of The 37th International Conference On Machine Learning, 119, 8807–8817.

- Shukla, B., Fan, I., & Jennions, I. (2020). Opportunities for Explainable Artificial Intelligence in Aerospace Predictive Maintenance.

- Silver, D., Hubert, T., Schrittwieser, J., Antonoglou, I., Lai, M., Guez, A., Lanctot, M., Sifre, L., Kumaran, D., Graepel, T., Lillicrap, T., Simonyan, K., & Hassabis, D. (2017). Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm.

- Sutton, R., & Barto, A. (2018). Reinforcement learning: An introduction. MIT Press.

- Pourroostaei Ardakani, S., & Cheshmehzangi, A. (2021). Reinforcement Learning-Enabled UAV Itinerary Planning for Remote Sensing Applications in Smart Farming. Telecom, 2, 255–270.

- Wang, J., Liu, Y., & Li, B. (2020). Reinforcement Learning with Perturbed Rewards. Proceedings Of The AAAI Conference On Artificial Intelligence, 34, 6202–6209. https://ojs.aaai.org/index.php/AAAI/article/view/6086

- Wang, Y., Velasquez, A., Atia, G., Prater-Bennette, A., & Zou, S. (2023). Model-Free Robust Average-Reward Reinforcement Learning. Proceedings Of The 40th International Conference On Machine Learning, 202, 36431–36469. https://proceedings.mlr.press/v202/wang23am.html

- World Bank Group. (2024). Population Estimates And Projections. https://databank.worldbank.org/source/population-estimates-and-projections (accessed 2024-01-10).

- Xu, M., Liu, Z., Huang, P., Ding, W., Cen, Z., Li, B., & Zhao, D. (2022). Trustworthy Reinforcement Learning Against Intrinsic Vulnerabilities: Robustness, Safety, and Generalizability.

| | On-policy | Off-policy |
|---|---|---|
| Model based | Model-Based Policy Iteration; Monte Carlo Tree Search (MCTS) | DynaQ |
| Model free | SARSA (on-policy) | n-step Q-learning; Q-learning with function approx. |

| | DQN | 1-Step Q-Learning | DynaQ | Expected SARSA |
|---|---|---|---|---|
| Number of best Strategies | 1 | 7 | 15 | 18 |
| Reward Best Strategy | 3573 (12) | 4759 (1) | 5337 (4) | 5447 (1) |
| Reward 2nd Best Strategy | 3515 (3) | 4645 (1) | 4722 (2) | 5337 (1) |
| Reward 3rd Best Strategy | -602 (2) | 4604 (1) | 4645 (1) | 5140 (1) |

| | Non-Random Better | Equal | Random Better |
|---|---|---|---|
| 1-step Q-learning | 11 | 5 | 10 |
| DynaQ | 11 | 8 | 7 |
| Expected SARSA | 15 | 4 | 7 |

| | Median Reward | Mean Reward | SD Reward |
|---|---|---|---|
| Regular Argmax | 3286 | 3283 | 204 |
| New Argmax | 3316 | 3316 | 192 |

| | Non-Random Rewards | Random Rewards |
|---|---|---|
| 1-step Q-learning | 3188 | 3022 |
| DynaQ (Ensemble) | 3550 | 3740 |
| Expected SARSA | 3172 | 2473 |

| | Non-Random Better | Equal | Random Better |
|---|---|---|---|
| 1-step Q-learning | 9 | 6 | 11 |
| DynaQ (Ensemble) | 5 | 10 | 11 |
| Expected SARSA | 16 | 6 | 4 |

| | Starting Crop | Preference | | |
|---|---|---|---|---|
| | | Non-Random | Random | None |
| Non-random scores higher | Spring Barley | 1 | 2 | |
| | Grain Maize | 1 | 1 | 1 |
| | Winter Rye | 2 | 1 | |
| Random scores higher | Grain Peas | 0 | 3 | |
| | Summer Oat | 0 | 2 | 1 |
| Equal Score | Potato | - | - | - |

| | Starting Crop | Economical Risk | | | | |
|---|---|---|---|---|---|---|
| | | Not Risky at All | Not Risky | Acceptable | Risky | Very Risky |
| Non-random scores higher | Spring Barley | - | 1 | 2 | - | - |
| | Grain Maize | 1 | 1 | - | - | 1 |
| | Winter Rye | - | 1 | 2 | - | - |
| Random scores higher | Grain Peas | - | 1 | 2 | - | - |
| | Summer Oat | - | 1 | 1 | 1 | - |
| Equal Score | Potato | - | 1 | 2 | - | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).