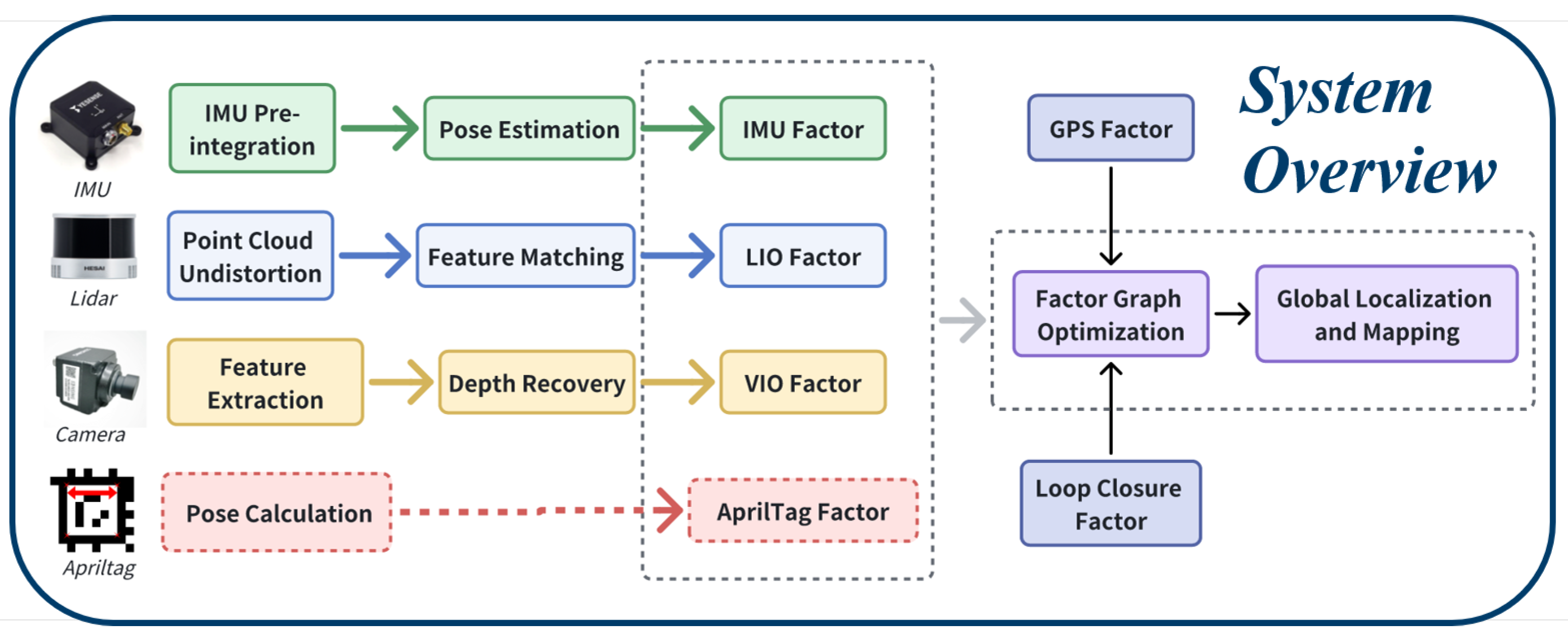

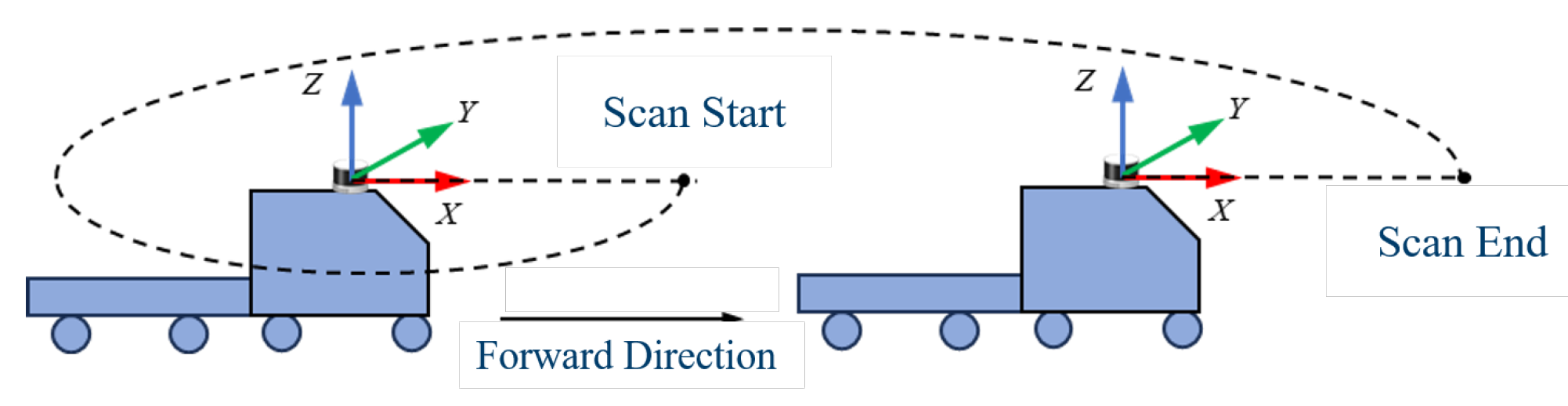

This section introduces the testing of the multi-sensor fusion localization method in real-world scenarios. Multi-sensor brackets were installed on both a test cart and a heavy truck for the experiments. Data was collected from locations with different characteristics, followed by localization evaluation and visualization of the mapping results to analyze the proposed method.

B.Field Work Scenario Positioning Test and Analysis

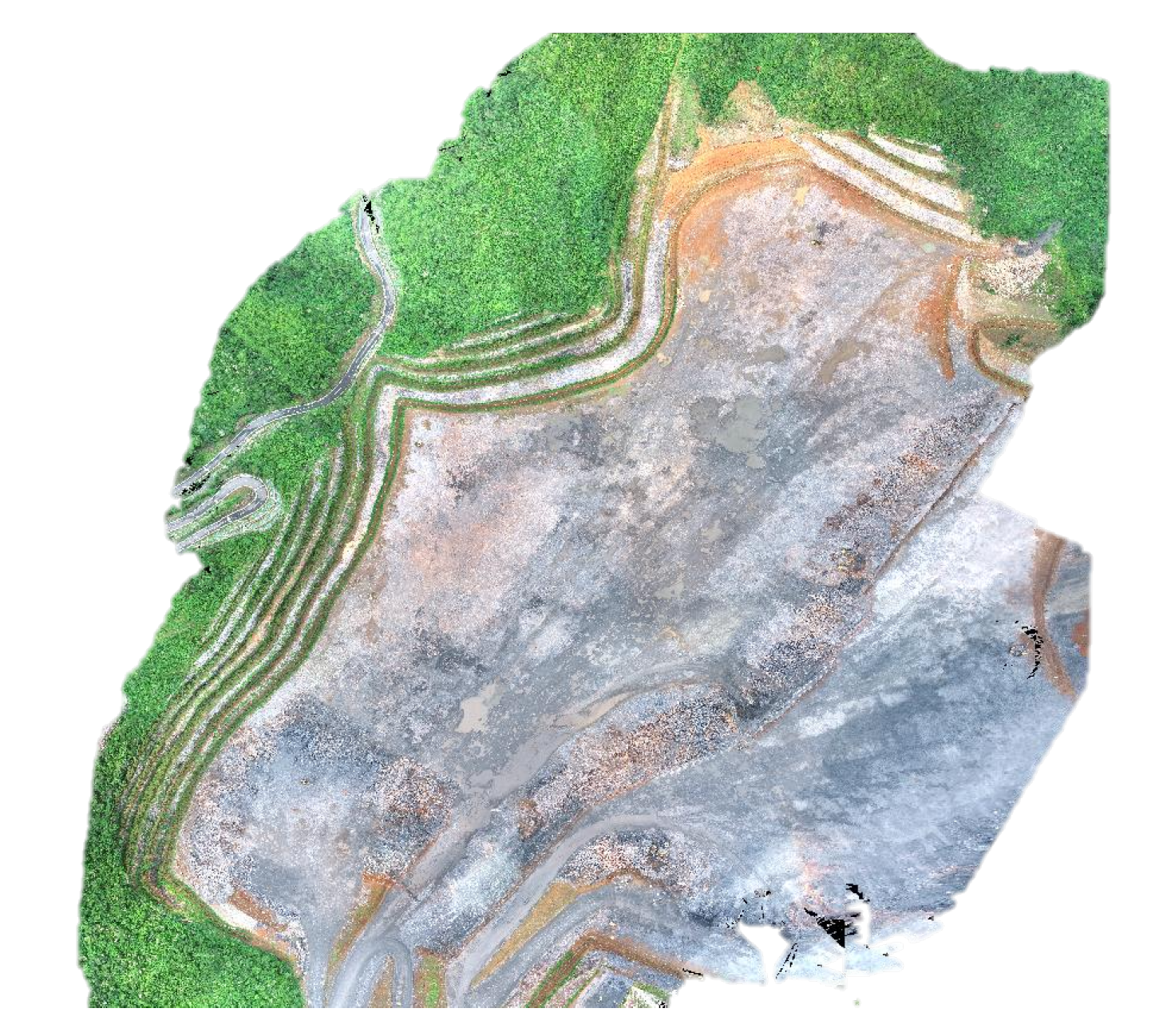

In this section, we use a self-collected dataset from a mining site, with the satellite image of the mining test area shown in

Figure 10. Our fusion localization method is applied to the self-collected dataset.

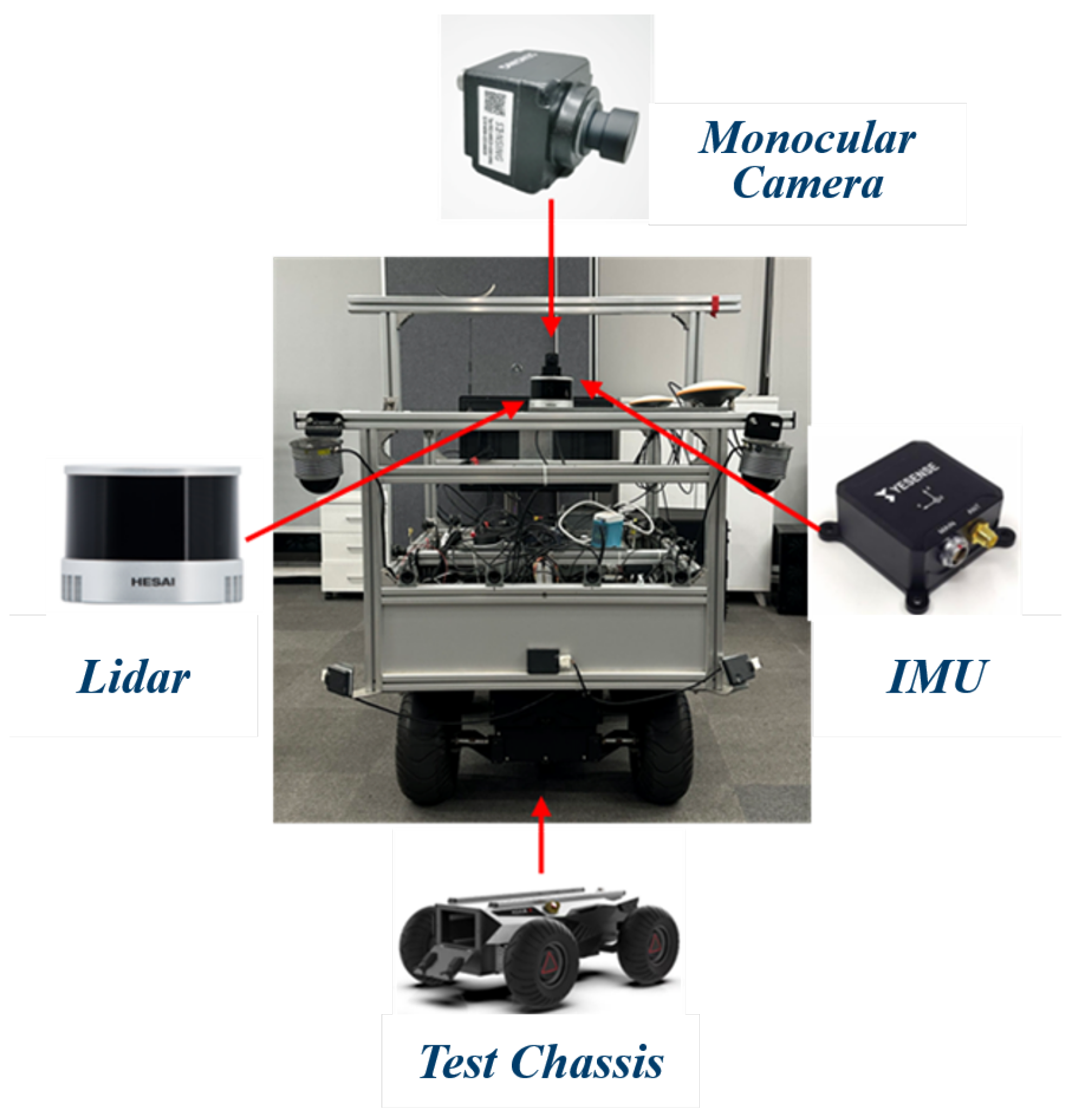

The autonomous driving positioning test utilizes an 8×4 Dongfeng dump heavy truck, which can be applied in working environments such as mines, ports, and industrial parks, capable of unloading cargo. This heavy truck is designed to be equipped with three types of sensors for multi-sensor fusion positioning, including one monocular camera, one LiDAR, and one IMU inertial navigation gyroscope, as shown in the left of

Figure 11. The model of the monocular camera is

SENSING GSML2, the LiDAR model is

HESAI XT32, and the IMU gyroscope model is

YESENSE YIS500-A.

In harsh working environments, adverse factors such as flying debris from blasting, rain erosion, and other environmental impacts pose challenges. Additionally, issues related to sensor heat dissipation, blind spots in data collection, and equipment stability must be considered. Therefore, this paper presents a multi-sensor support frame designed with an integrated safety enclosure to ensure the structural stability of the multi-sensor fusion setup. The structural design is illustrated in the right of

Figure 11.

We conducted tests on the proposed positioning algorithm , the LVI-SAM [

26] and the LIO-SAM [

24] algorithm using datasets collected from the field, including both circling and turning maneuvers around the mining site. The positioning trajectories were outputted, and we utilized the EVO [

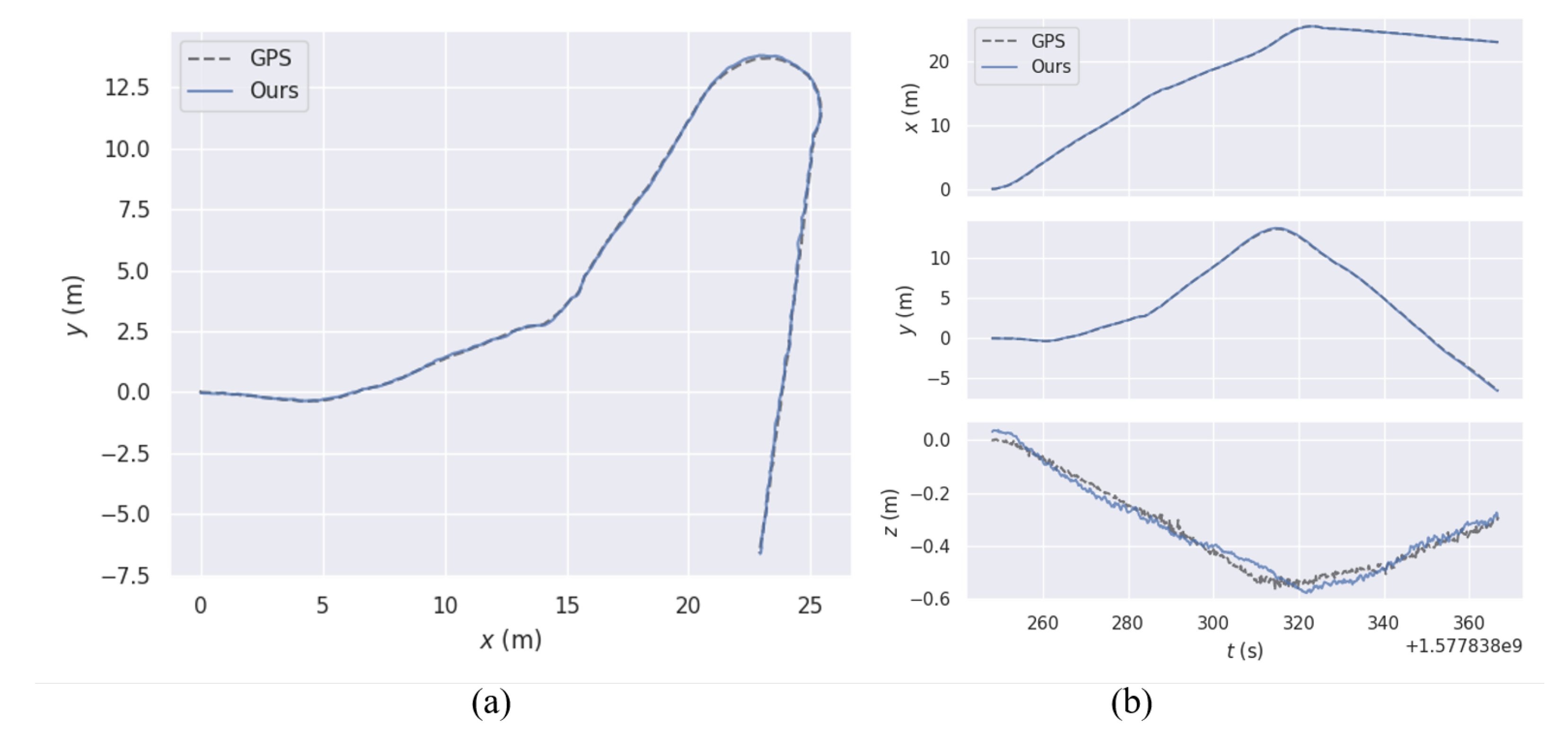

35] tool to compare these trajectories with the GPS ground truth data from the datasets. The EVO tool also provided insights into the position errors along the x, y, and z axes for both algorithms across the two datasets. In

Figure 12, (a) illustrates the trajectory errors of different algorithms for the heavy truck circling dataset, while (b) displays the xyz axis trajectory errors of different algorithms for the same dataset.

At the same time, we compared the running speeds of the LIO-SAM algorithm, LVI-SAM algorithm, and our proposed algorithm on the mining self-collected dataset, as shown in

Table 3. The table shows that the fusion localization method incorporating the iKD-Tree has a significant improvement in runtime speed compared to LIO-SAM, with an increase of over 33 percentage.

The quantitative analysis of the test results for the proposed positioning method and the LIO-SAM algorithm on the heavy truck’s surrounding driving dataset, performed using EVO, is summarized in

Table 4, which presents four types of errors.

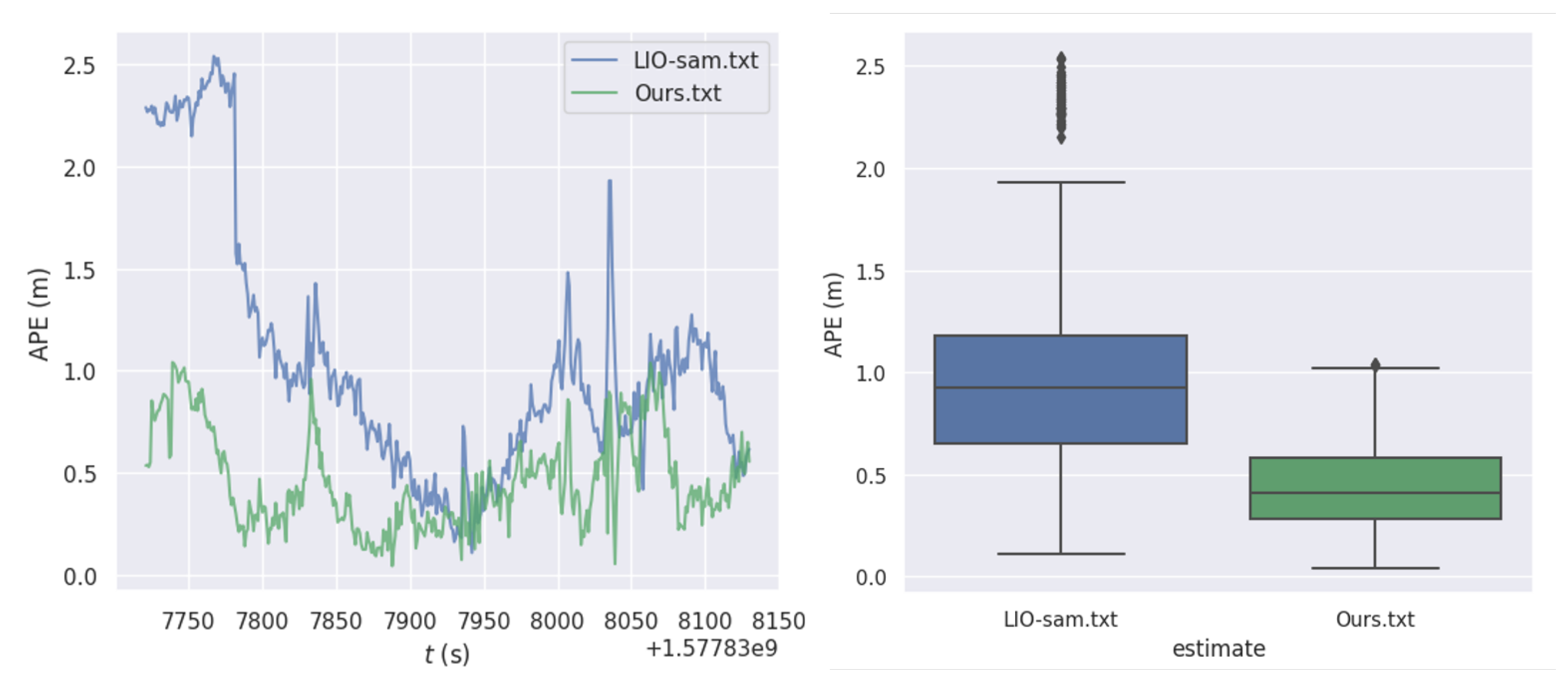

Figure 13 illustrates the statistical results of the quantitative analysis, where (a) compares the absolute pose trajectory errors of the two algorithms in the heavy truck surrounding driving scenario. The errors for both algorithms are relatively stable, indicating that the multi-sensor fusion positioning with the addition of a monocular camera shows superior performance. (b) displays the box plot of the absolute errors. From the figure, it is evident that for the heavy truck surrounding driving scenario, the absolute errors of the proposed positioning method are generally lower than those of the LIO-SAM algorithm and are significantly more stable.

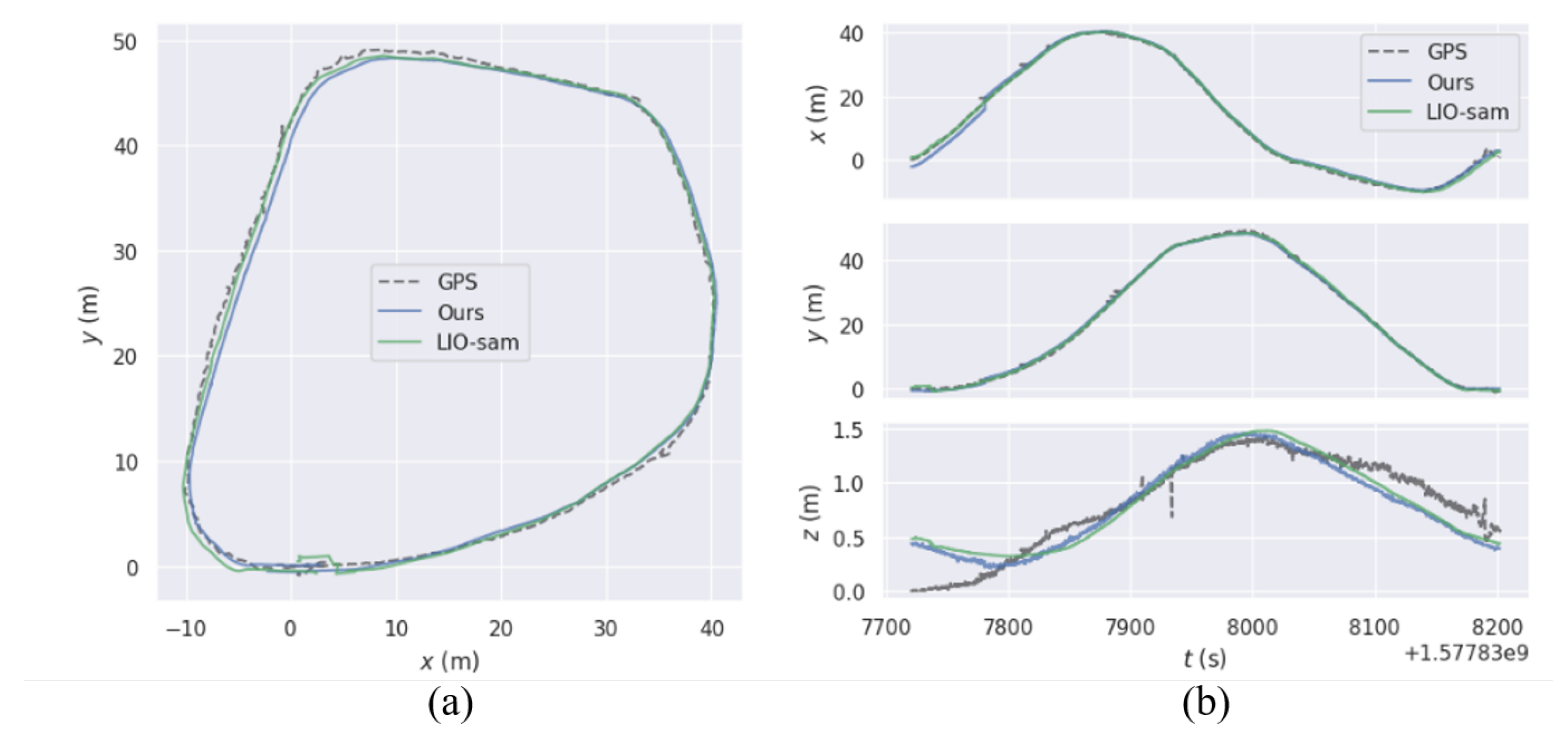

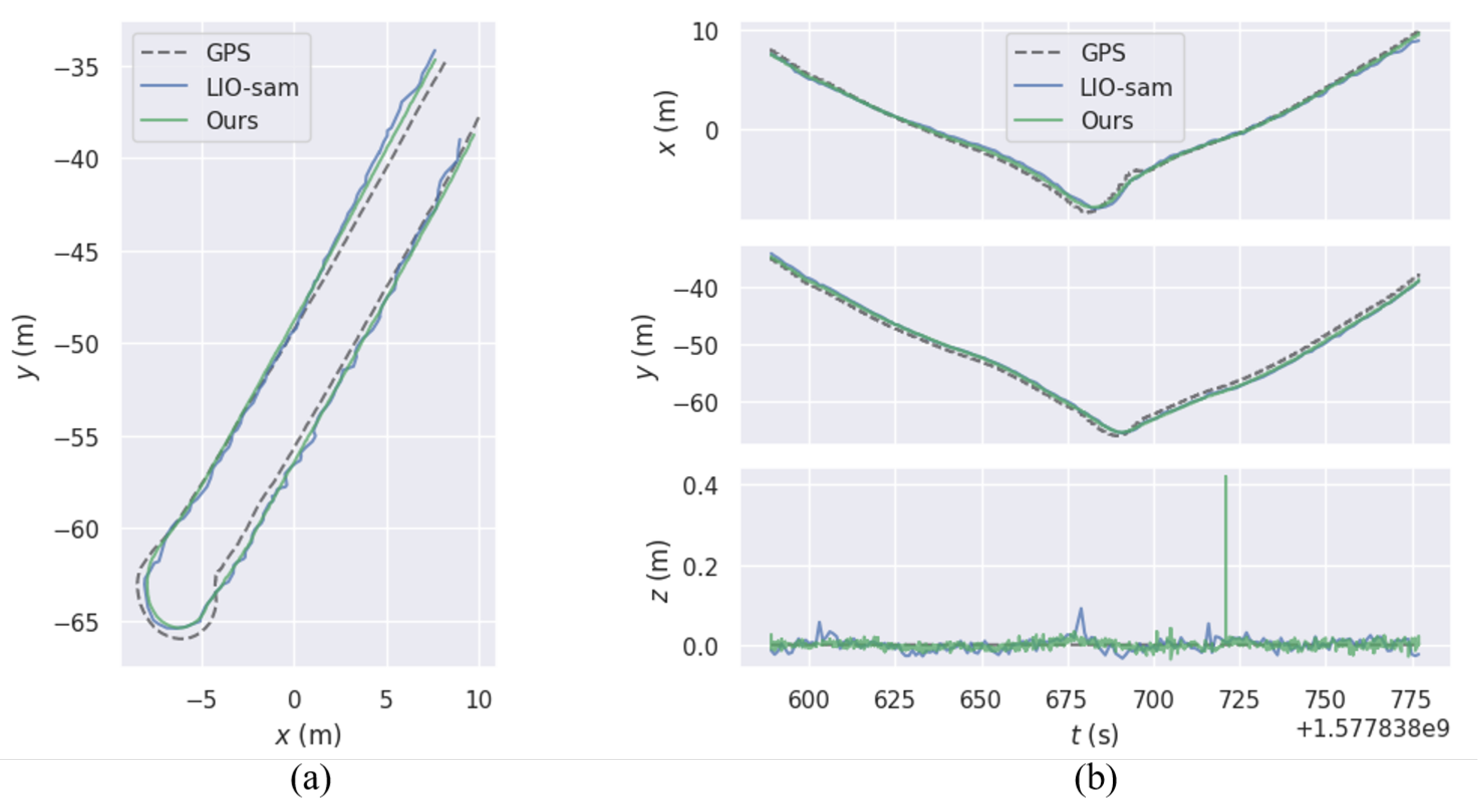

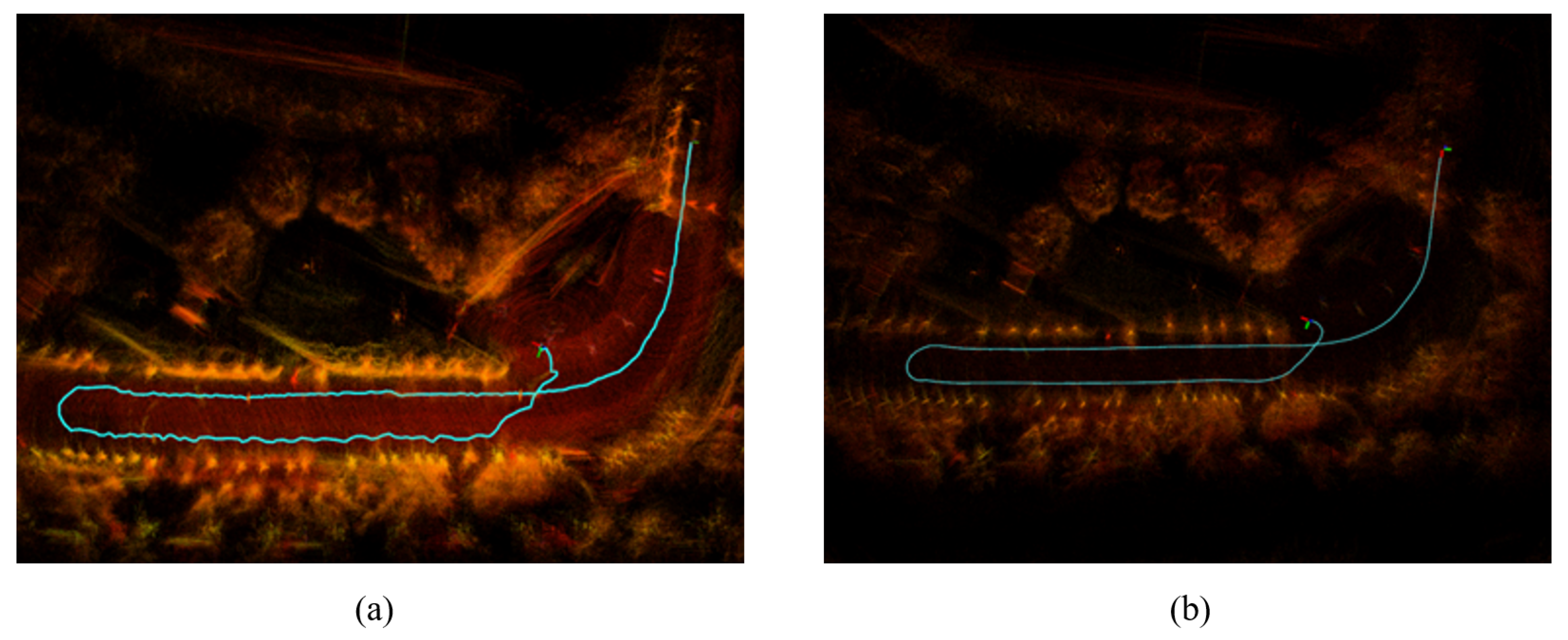

For the U-turn driving dataset, we performed trajectory evaluations using both algorithms. In

Figure 14, (a) shows the trajectory error for the U-turn dataset, while (b) illustrates the trajectory errors along the x, y, and z axes.

Table 5 provides a quantitative analysis of the trajectory error.

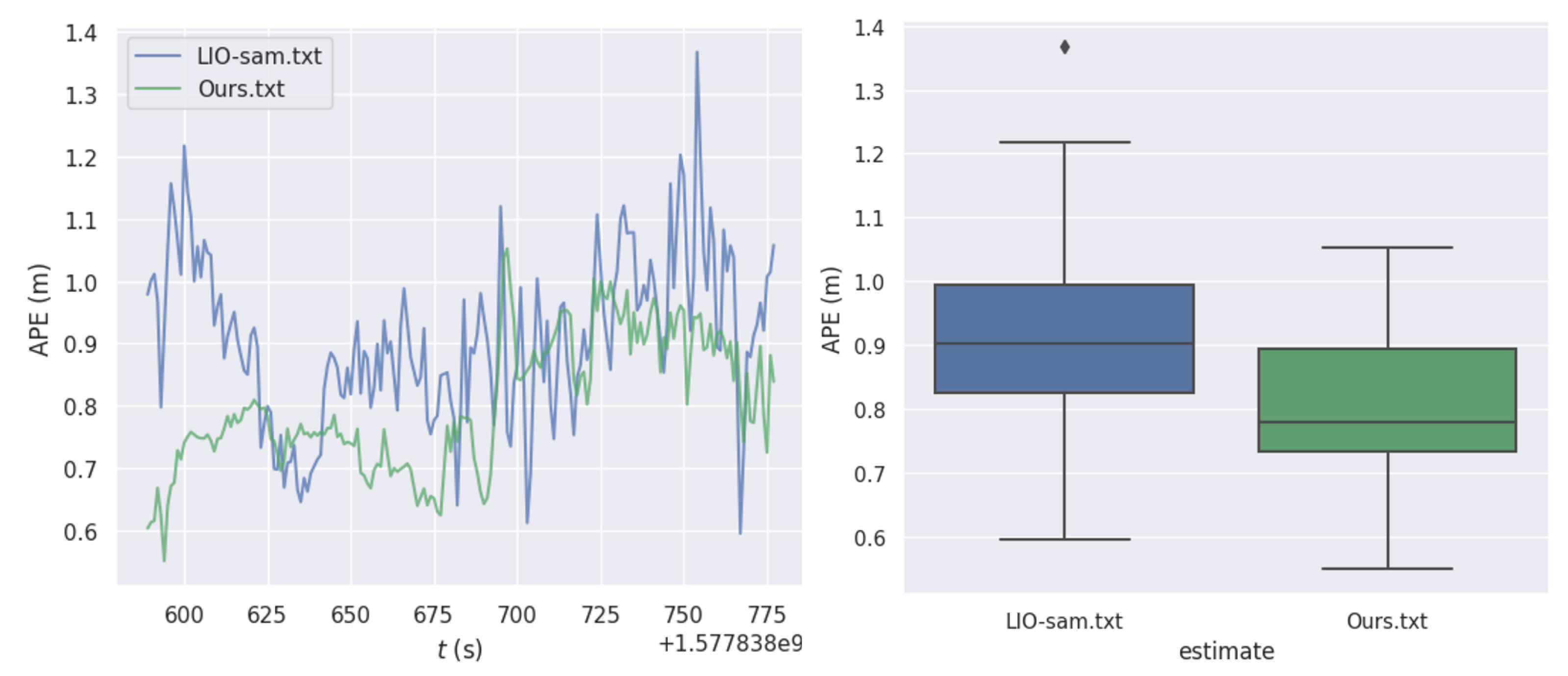

Figure 15 presents statistical graphs of the quantitative analysis: (a) compares the absolute pose trajectory errors for the two algorithms in the U-turn scenario; (b) shows box plots of absolute trajectory errors for each algorithm in the same scenario.

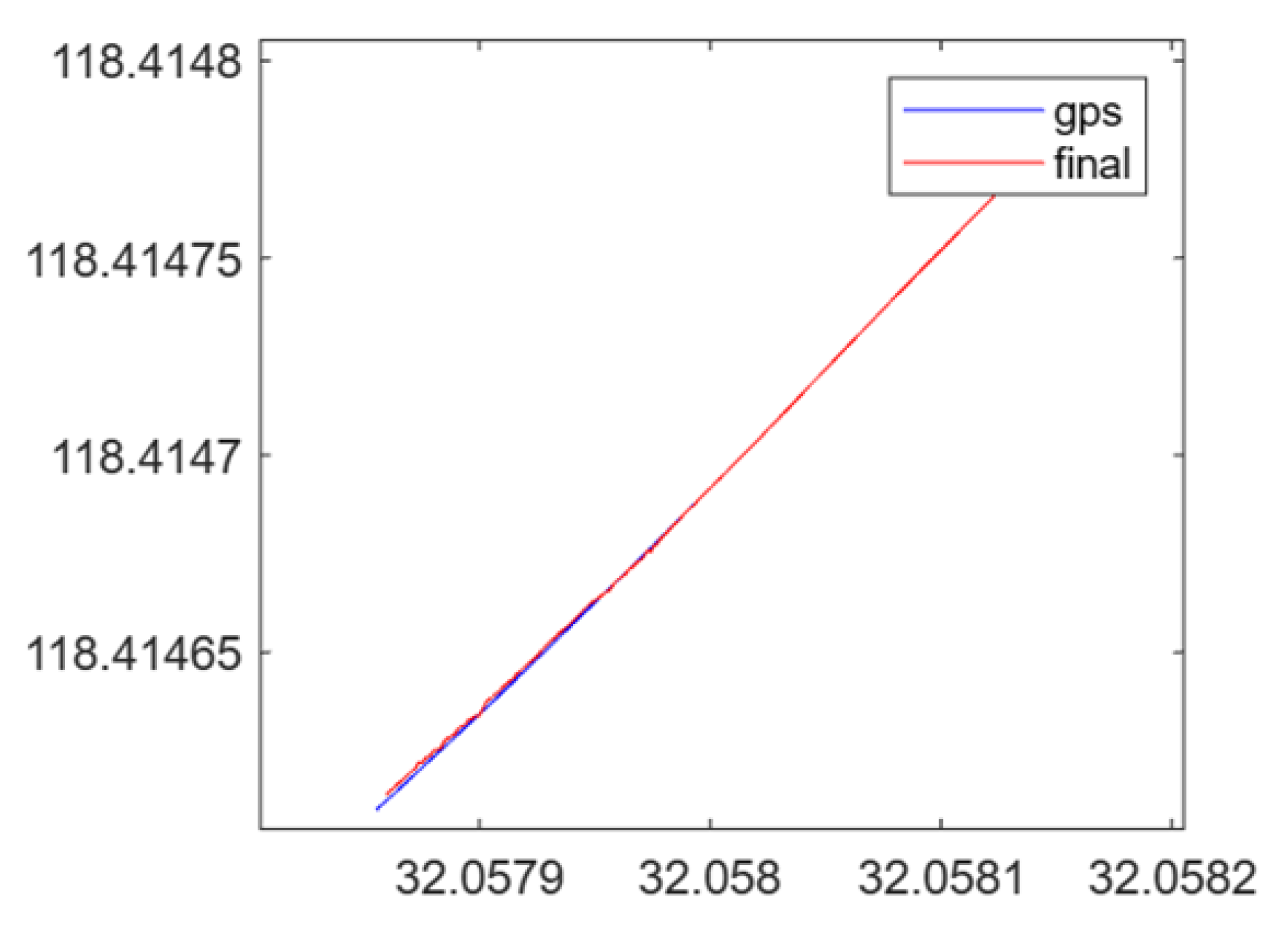

In the heavy truck circular driving dataset, there is some path drift in the GPS ground truth trajectory, mainly caused by signal fluctuation due to occlusions from mining site buildings and surrounding vegetation. In contrast, the path output by our fusion localization algorithm is nearly unaffected by such environmental obstructions.

When evaluating accuracy in the two scenarios—circular and turning driving—the root mean square error (RMSE) of absolute pose trajectory for our localization algorithm is reduced by 61.84 percentage and 34.05 percentage, respectively, compared to LIO-SAM. This improvement is attributed to the noise filtering method in our approach, which applies EMD and fractal Gaussian noise filtering to the IMU output. This process effectively reduces low-frequency colored noise in the IMU signal, enhances the signal-to-noise ratio, and improves the accuracy of both the LiDAR-inertial odometry and visual-inertial odometry in our method.

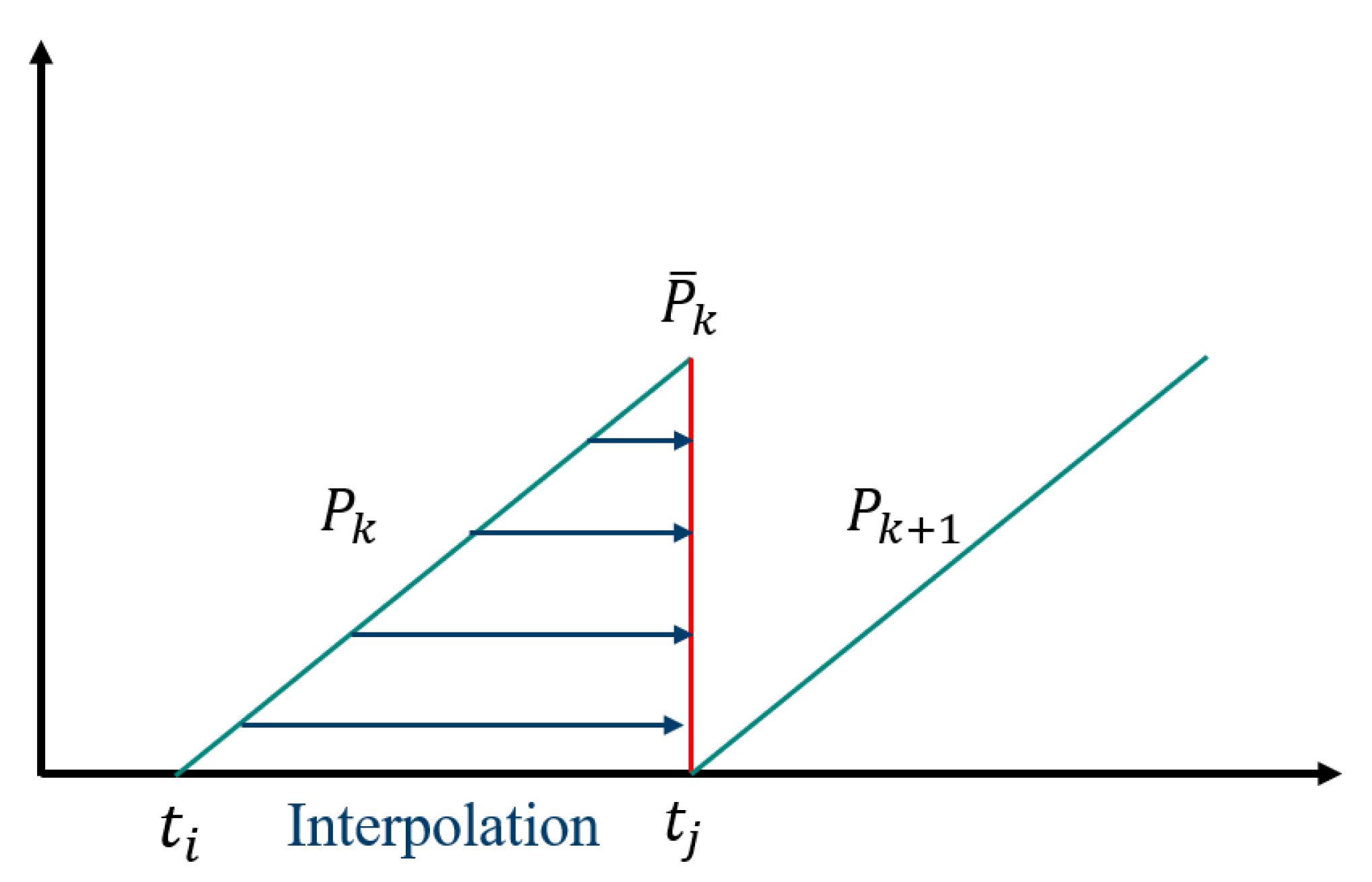

At the same time, we also tested the beneficial effects of incorporating AprilTag-assisted localization in sections with significant bumps and without GPS signals, with the results shown in

Figure 16. After adding the AprilTag localization factor, our algorithm demonstrated significant improvements in positioning accuracy and final error during long-duration travel without GPS.

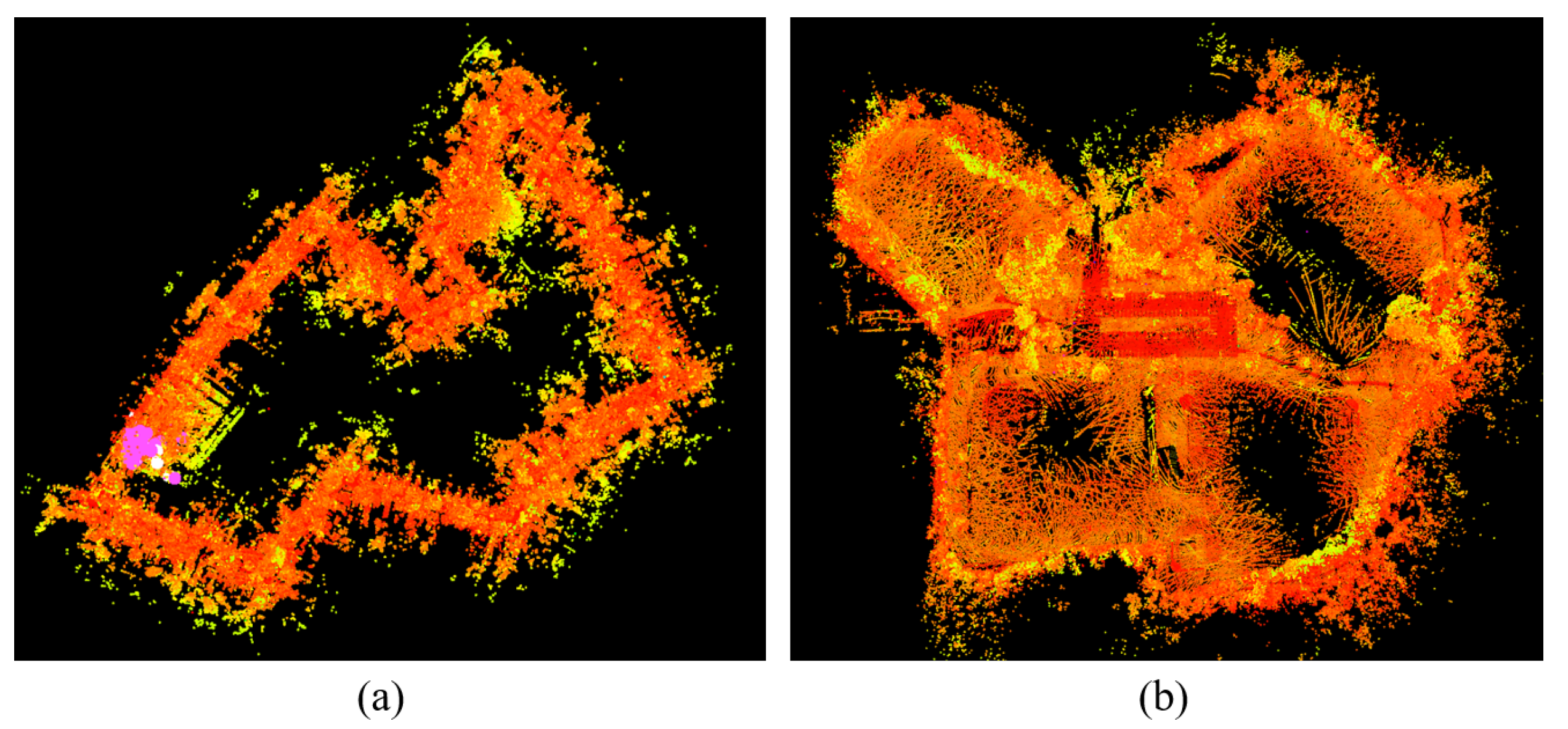

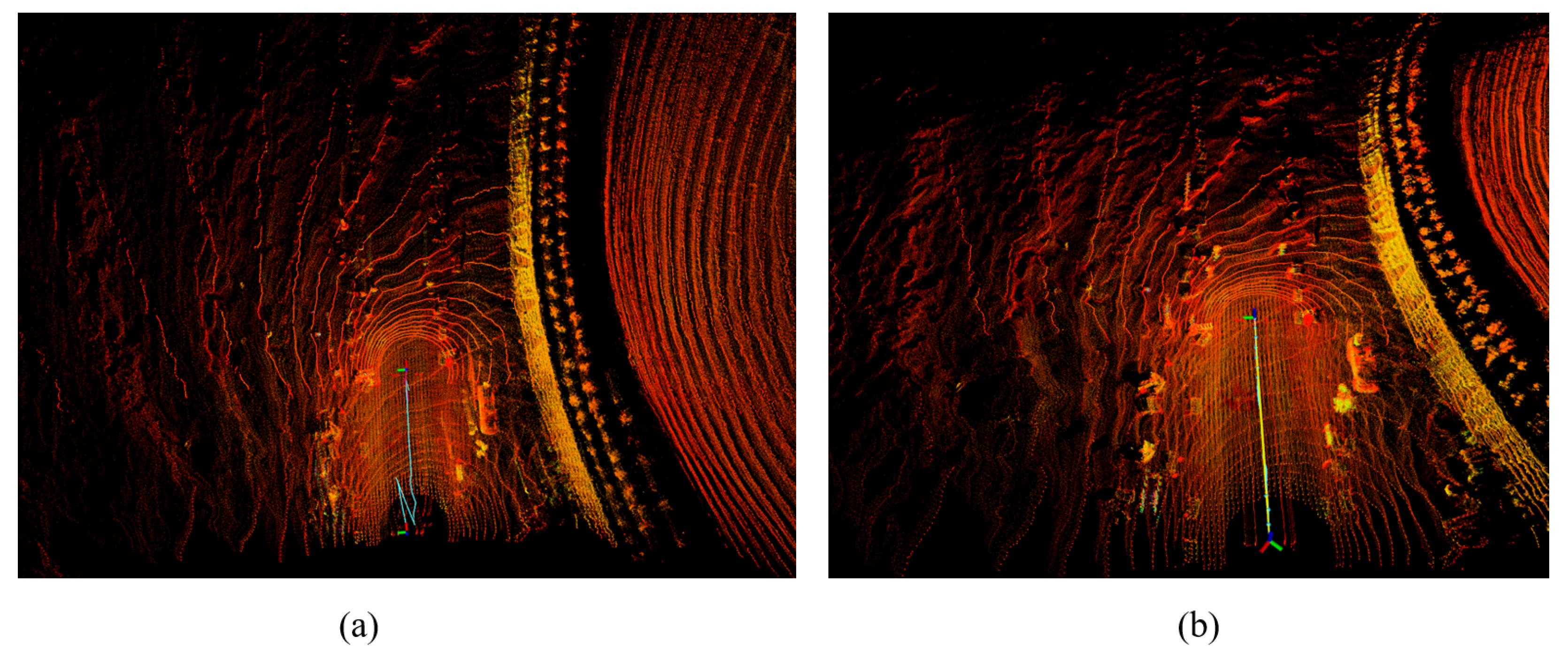

Figure 17 shows the test results of our algorithm compared to LIO-SAM in a complete mining scene. It is evident that our algorithm is smoother and more accurate, exhibiting less drift.

To demonstrate the effectiveness of our algorithm during long-duration travel, we tested our algorithm at different driving distances in a mining scenario (with a maximum speed of 30 km/h for the truck). We recorded the maximum error between the odometry produced by our algorithm and the actual GPS across varying distances, and compared it with LIO-SAM and LVI-SAM. The results are shown in

Table 6.The results from the table indicate that the odometry information produced by our algorithm has the smallest error compared to the actual GPS values. Additionally, due to the scarcity of visual information in the mining site, the LVI-SAM algorithm was significantly affected during actual use.

The experimental results above indicate that our localization method produces lower errors, resulting in a more stable positioning path with minimal drift. The integration of monocular cameras for multi-sensor fusion localization contributes to achieving a more stable error pattern, while GPS signals and AprilTag-assisted localization help our system operate reliably in long-distance travel scenarios and bumpy road segments. This supports the advantages and adaptability of our method based on real-world testing in various environments.