Submitted: 30 October 2024

Posted: 31 October 2024

Abstract

Keywords:

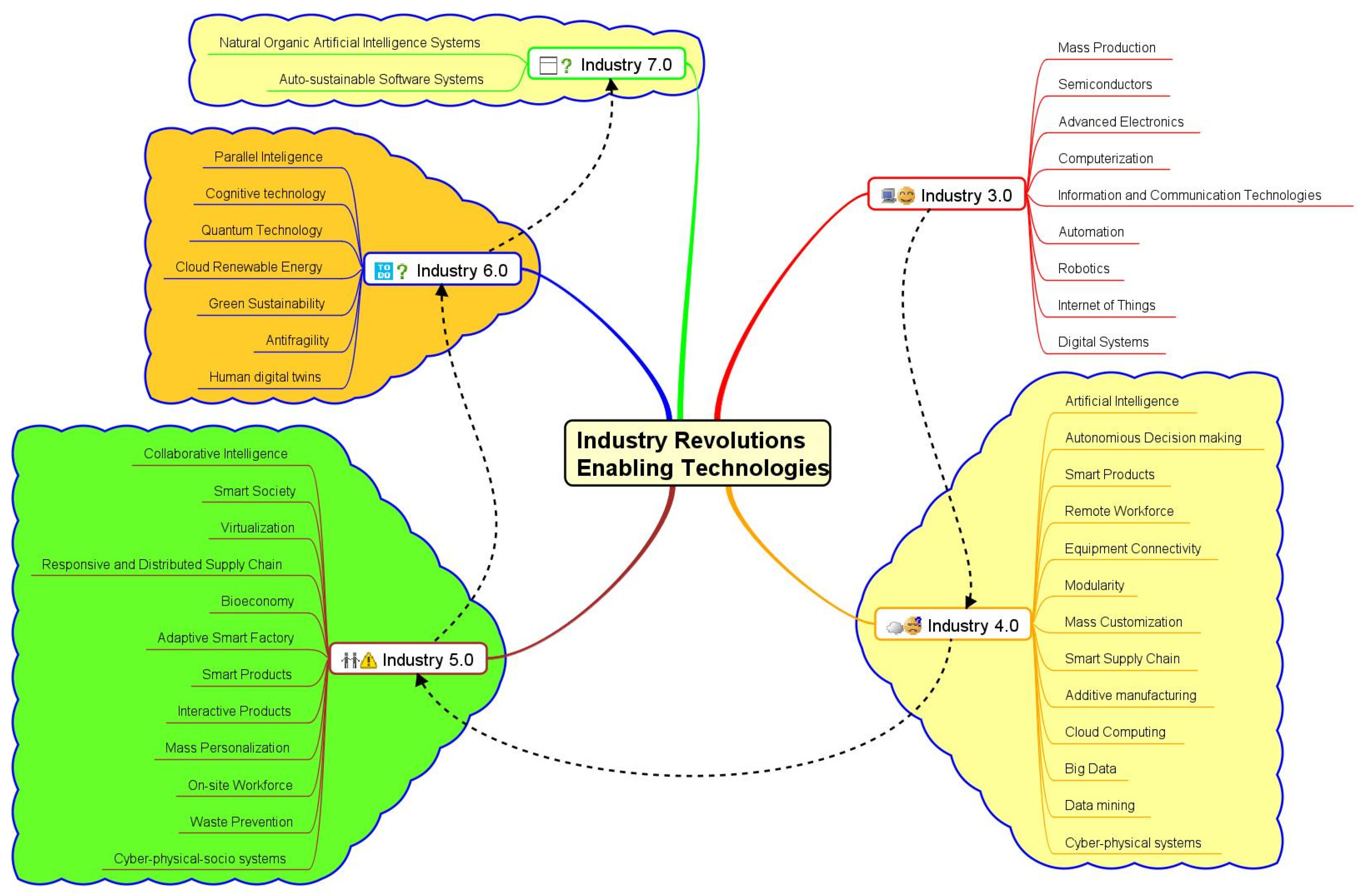

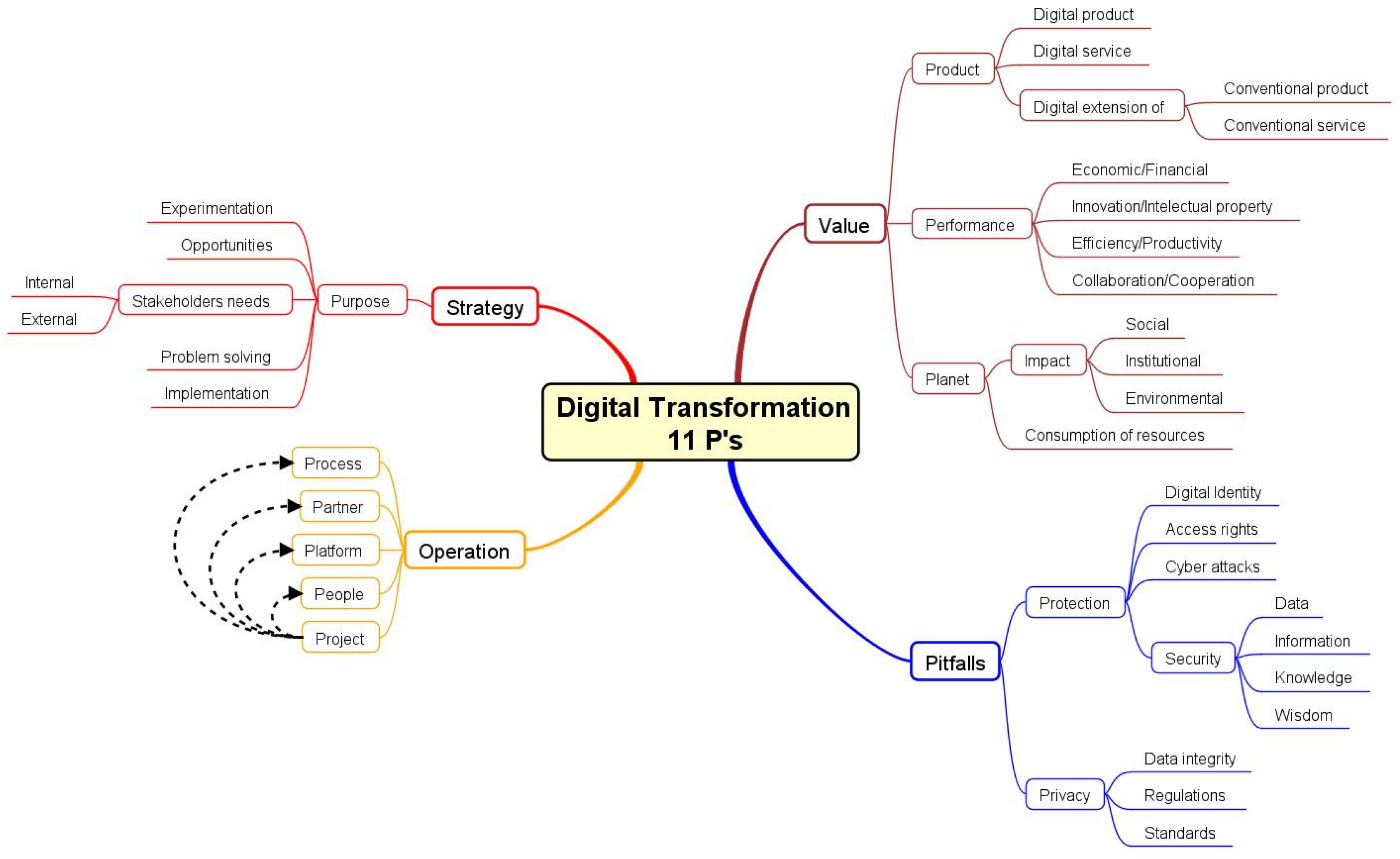

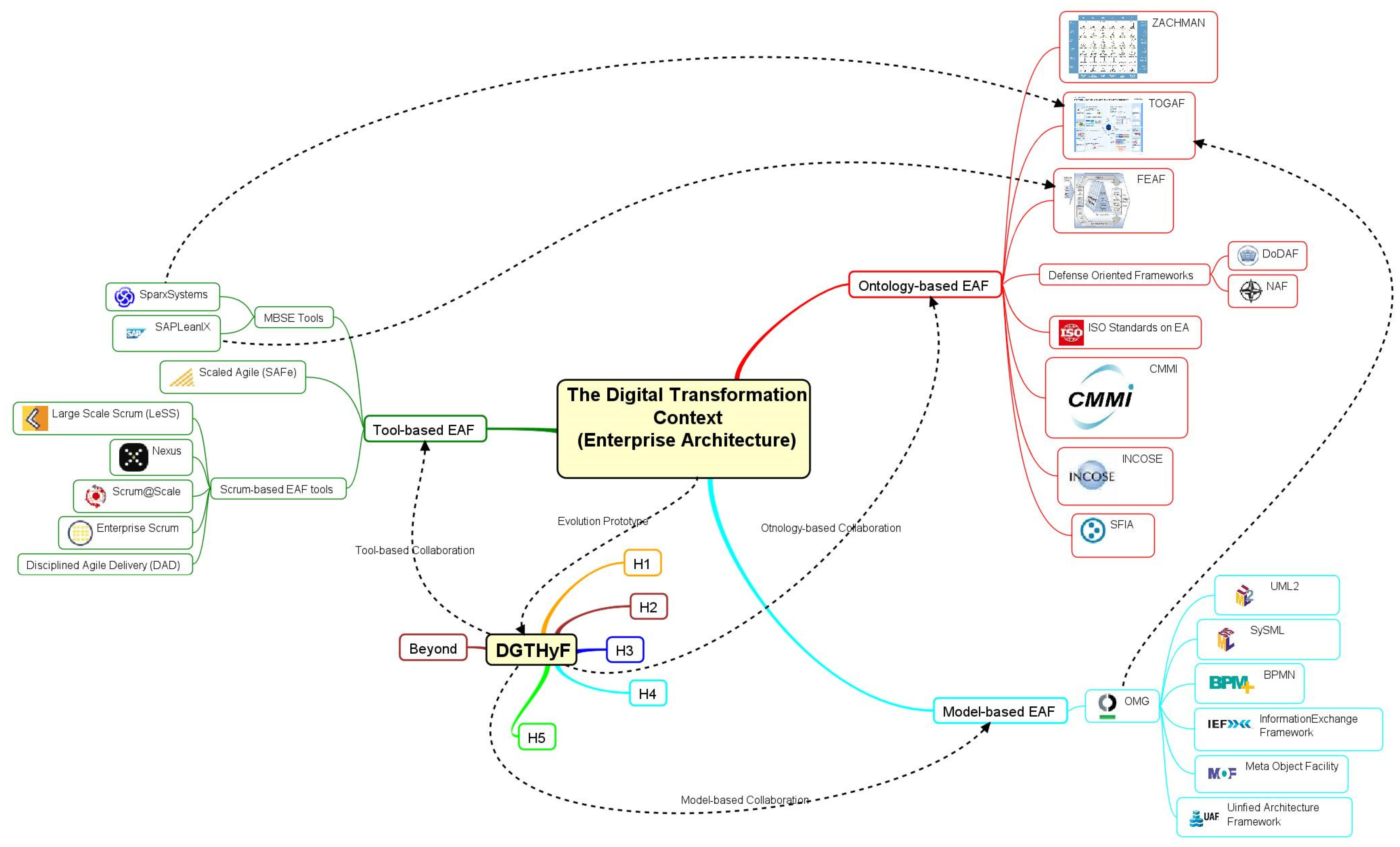

1. Introduction

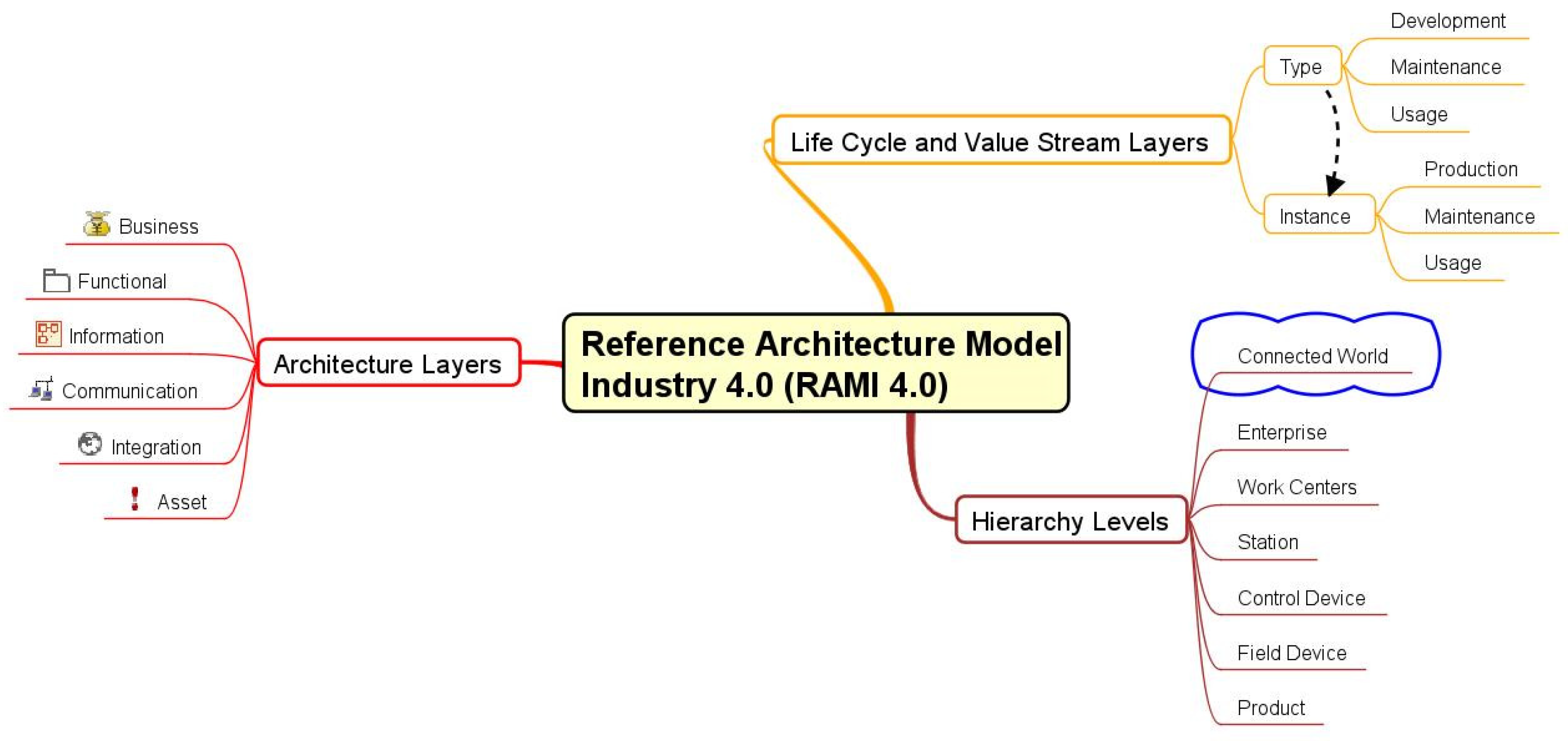

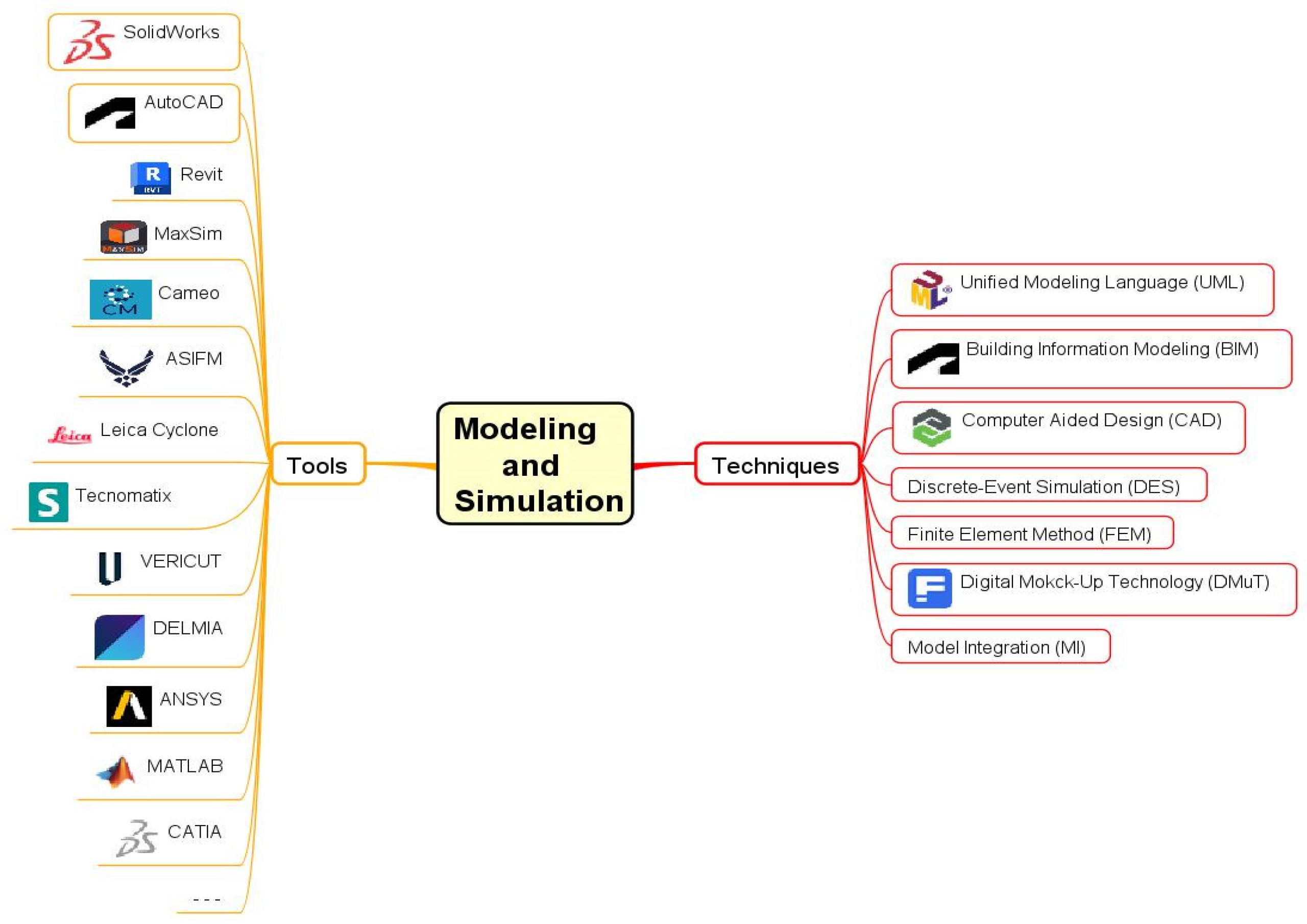

2. Materials and Methods

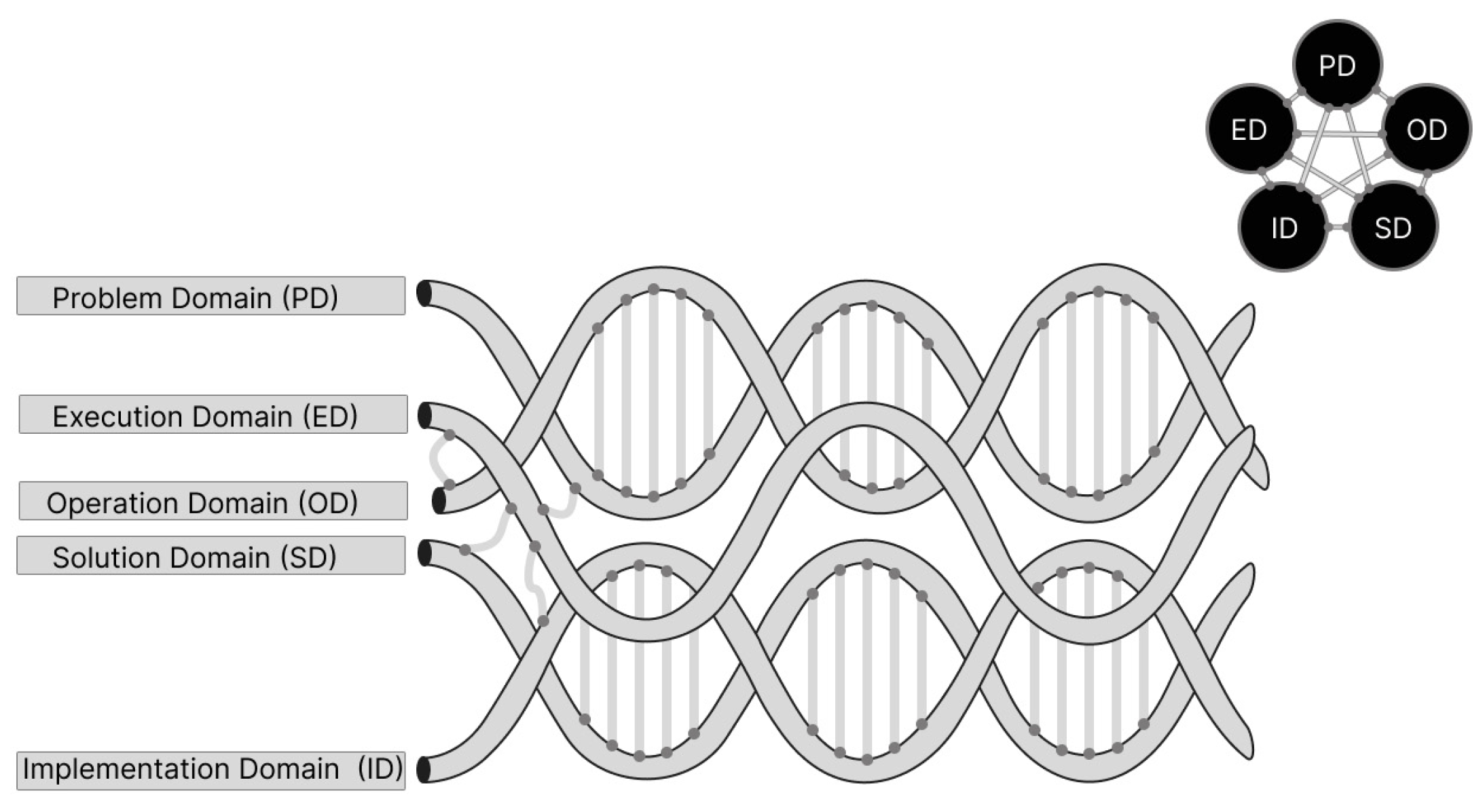

2.1. The Foundation of Hypothesis 1 (H1)—Integrated Tailorable Life Cycle Models

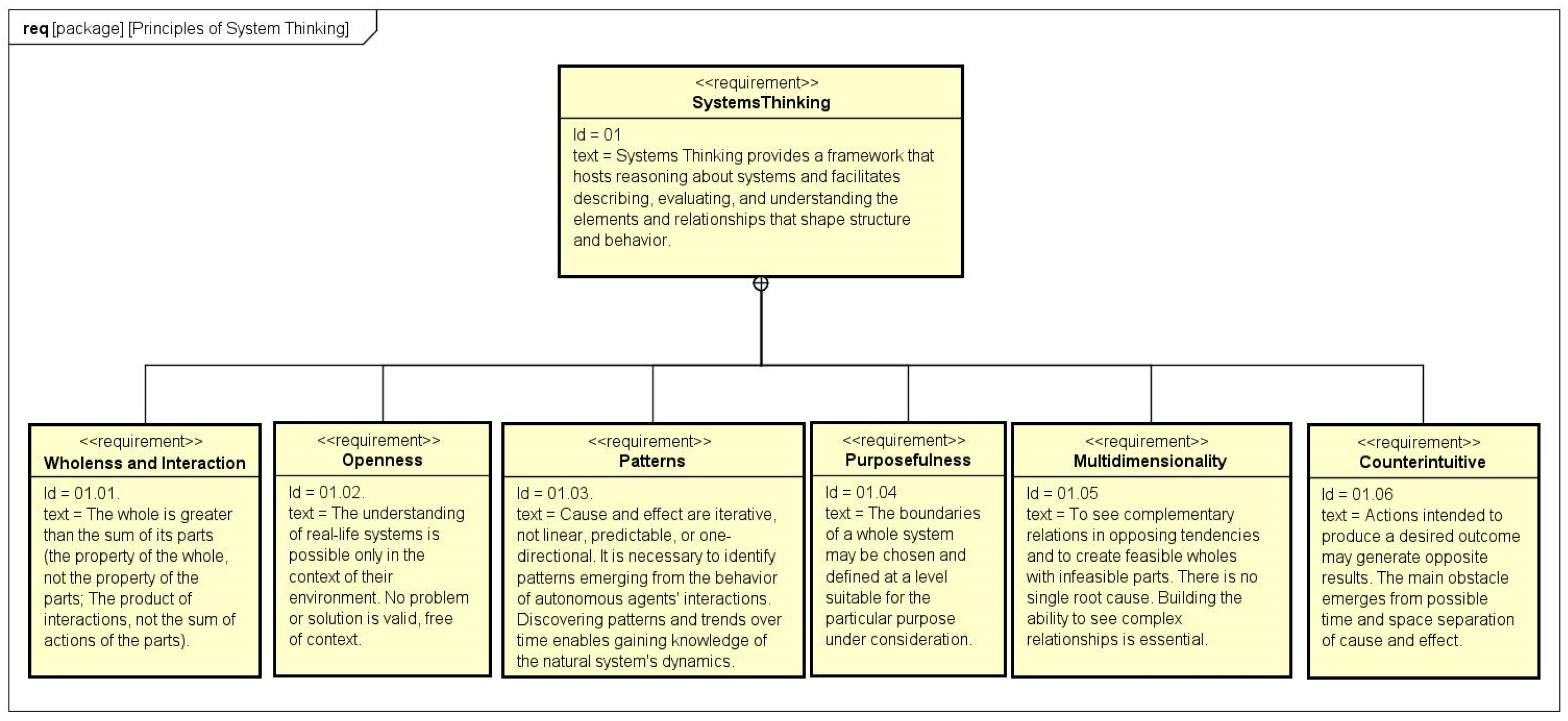

2.1.1. The Role of Systems Engineering (SyEng)

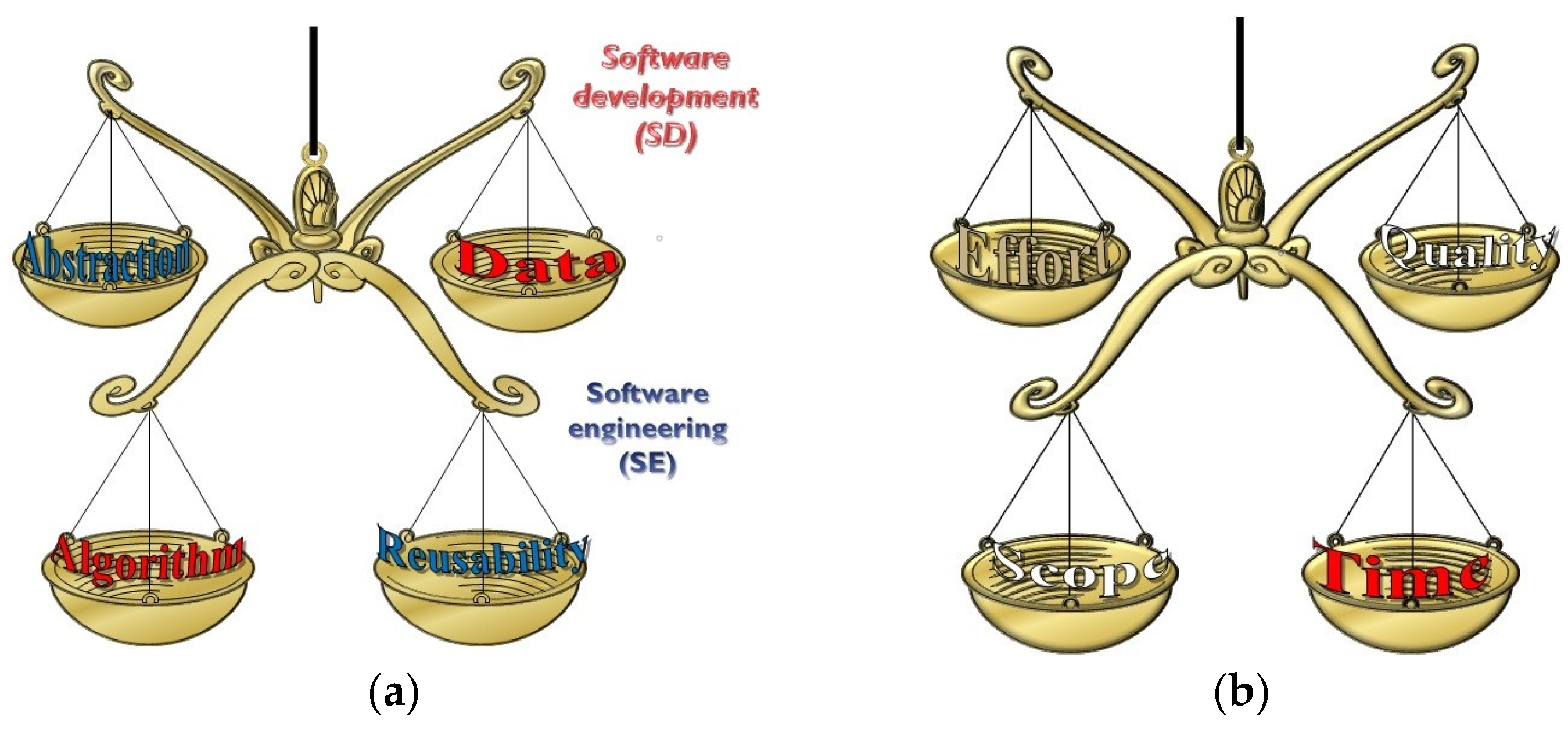

2.1.2. The Role of Software Engineering (SwEng)

2.1.3. The Role of Operations Engineering (OpEng)

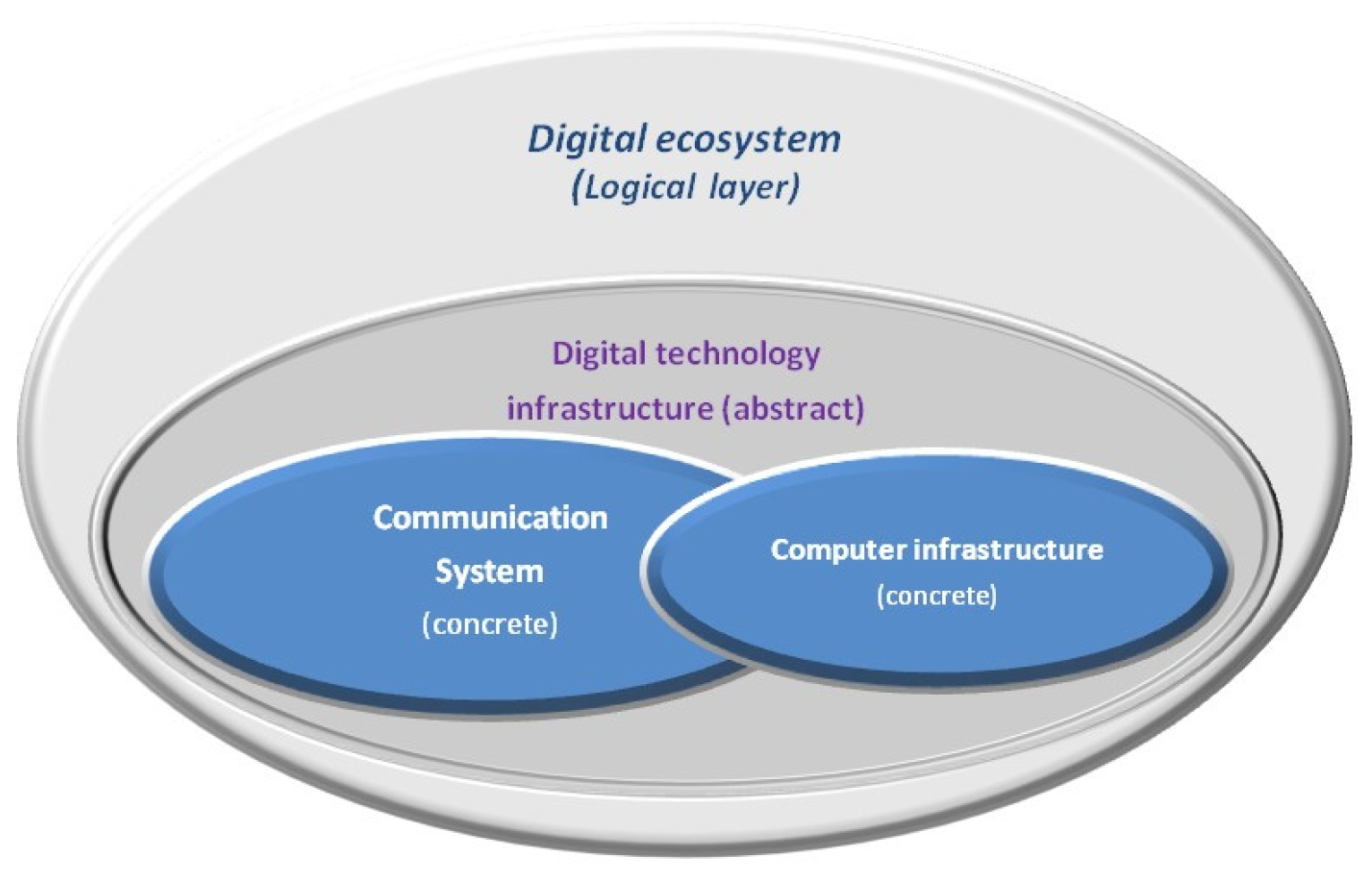

2.2. The Foundation of Additional Hypotheses (H2 to H5)

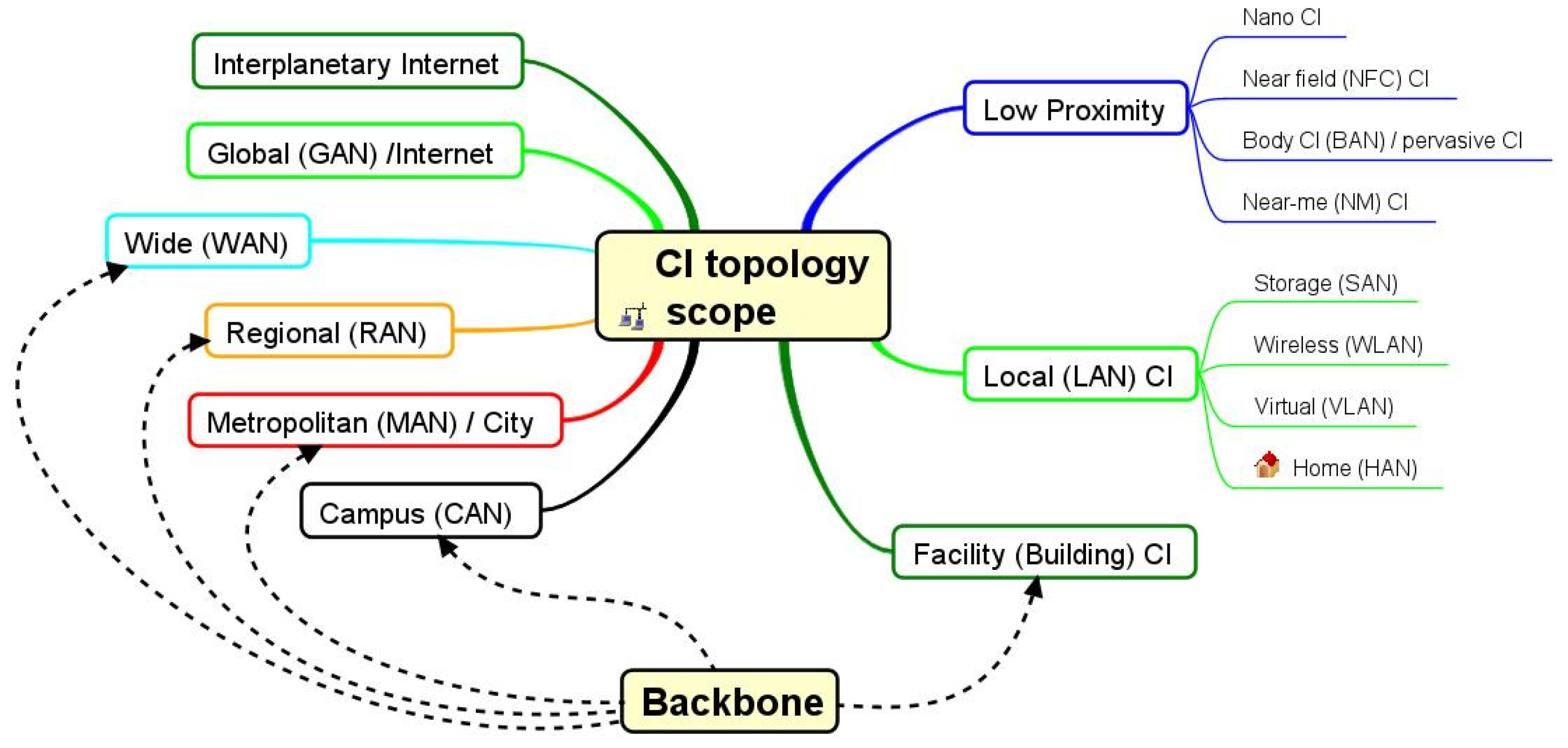

2.2.1. The Collaboration/Cooperation of Heterogeneously Staged Components (Systems)—(H2)

2.2.2. The Executable Virtualization Ability—(H3)

2.2.3. Hiding the Data-Layer Complexity—(H4)

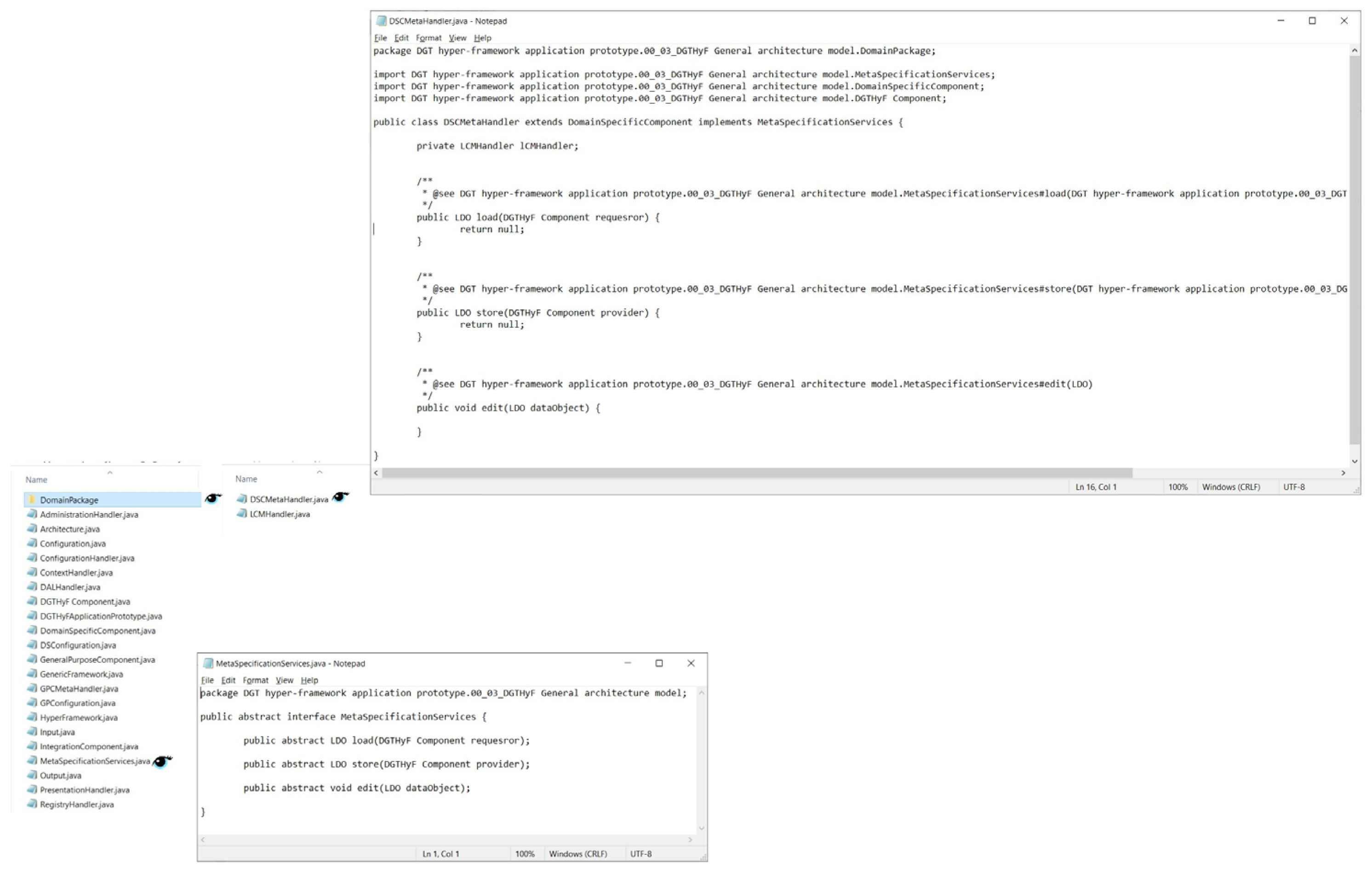

2.2.4. Meta-Specification Driven Generative Support—(H5)

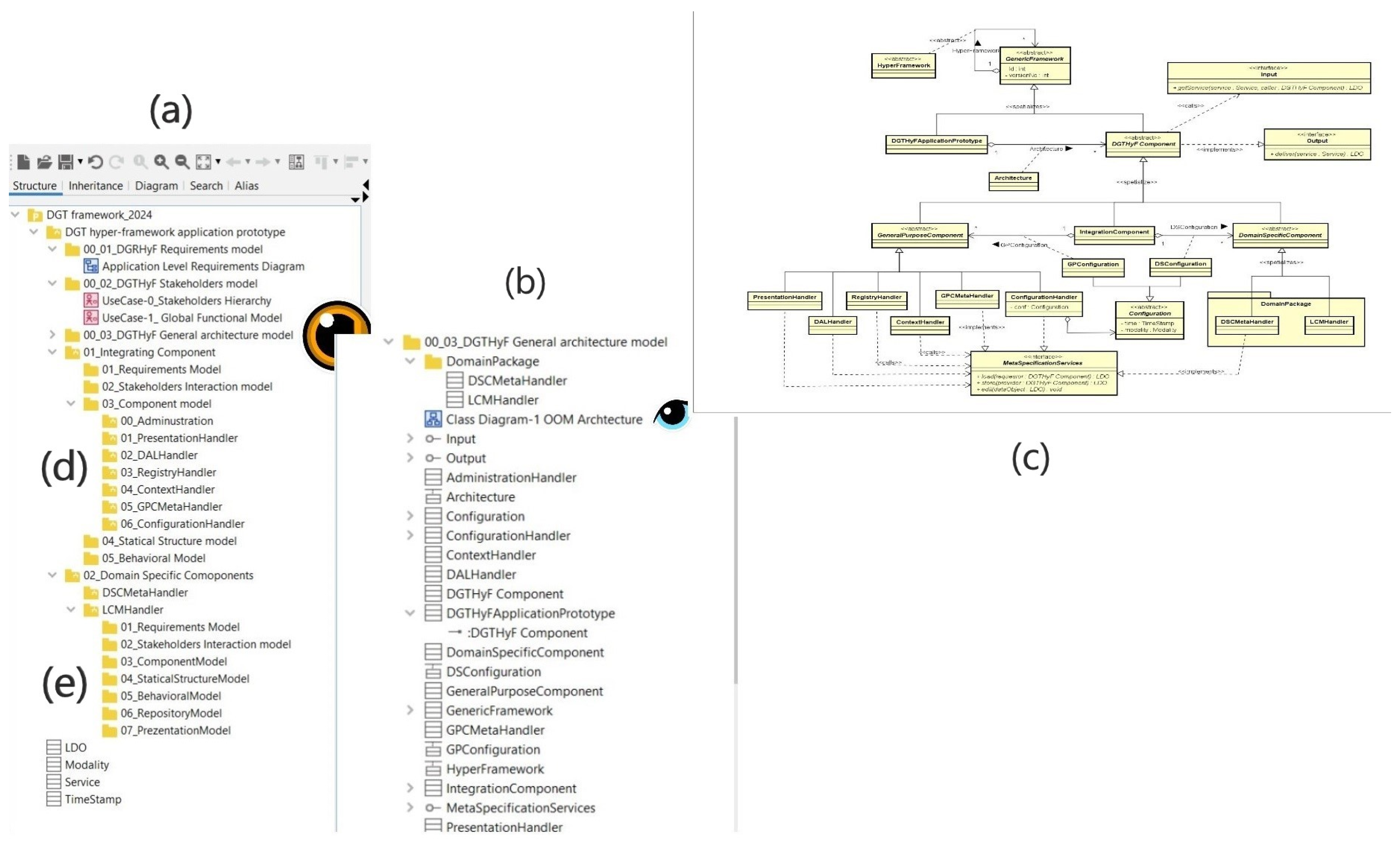

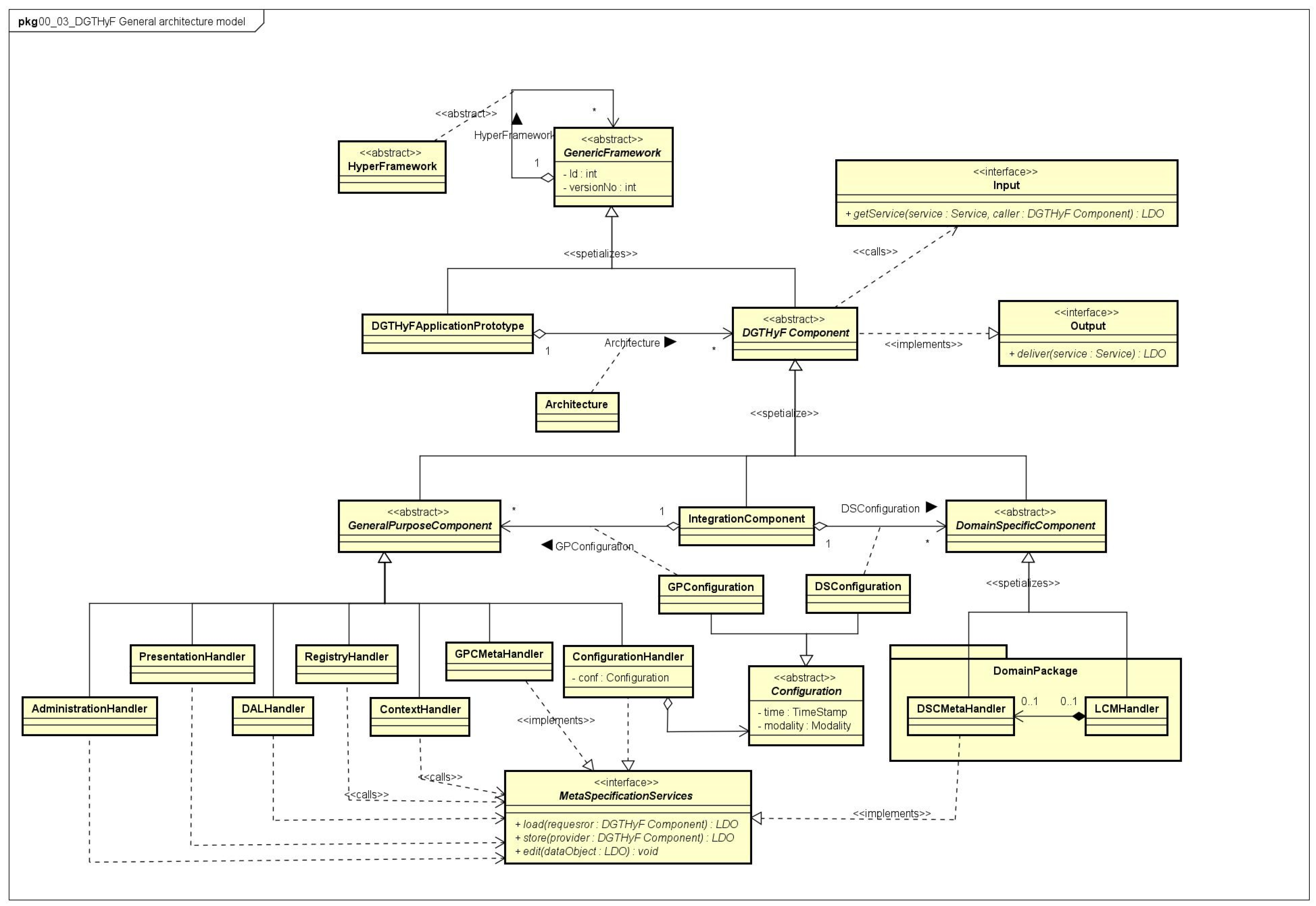

3. Results

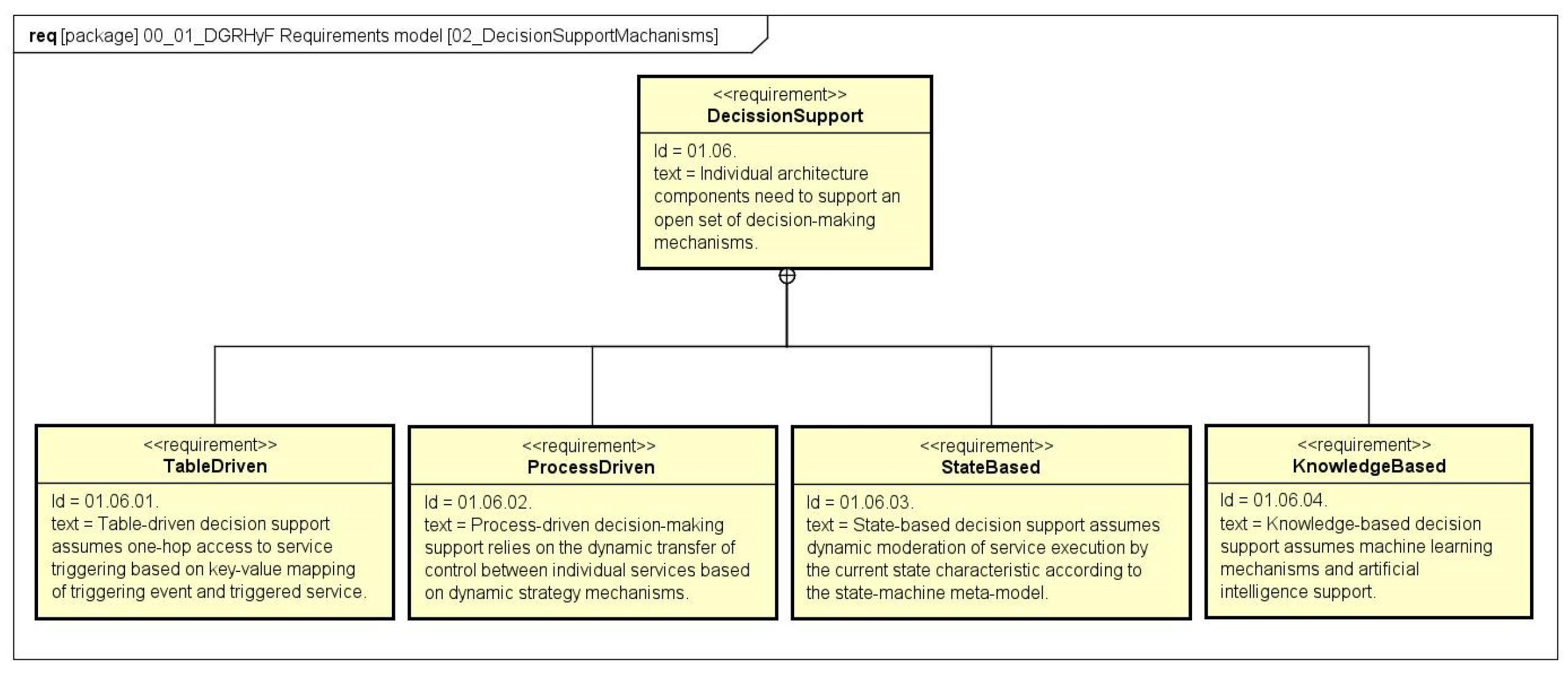

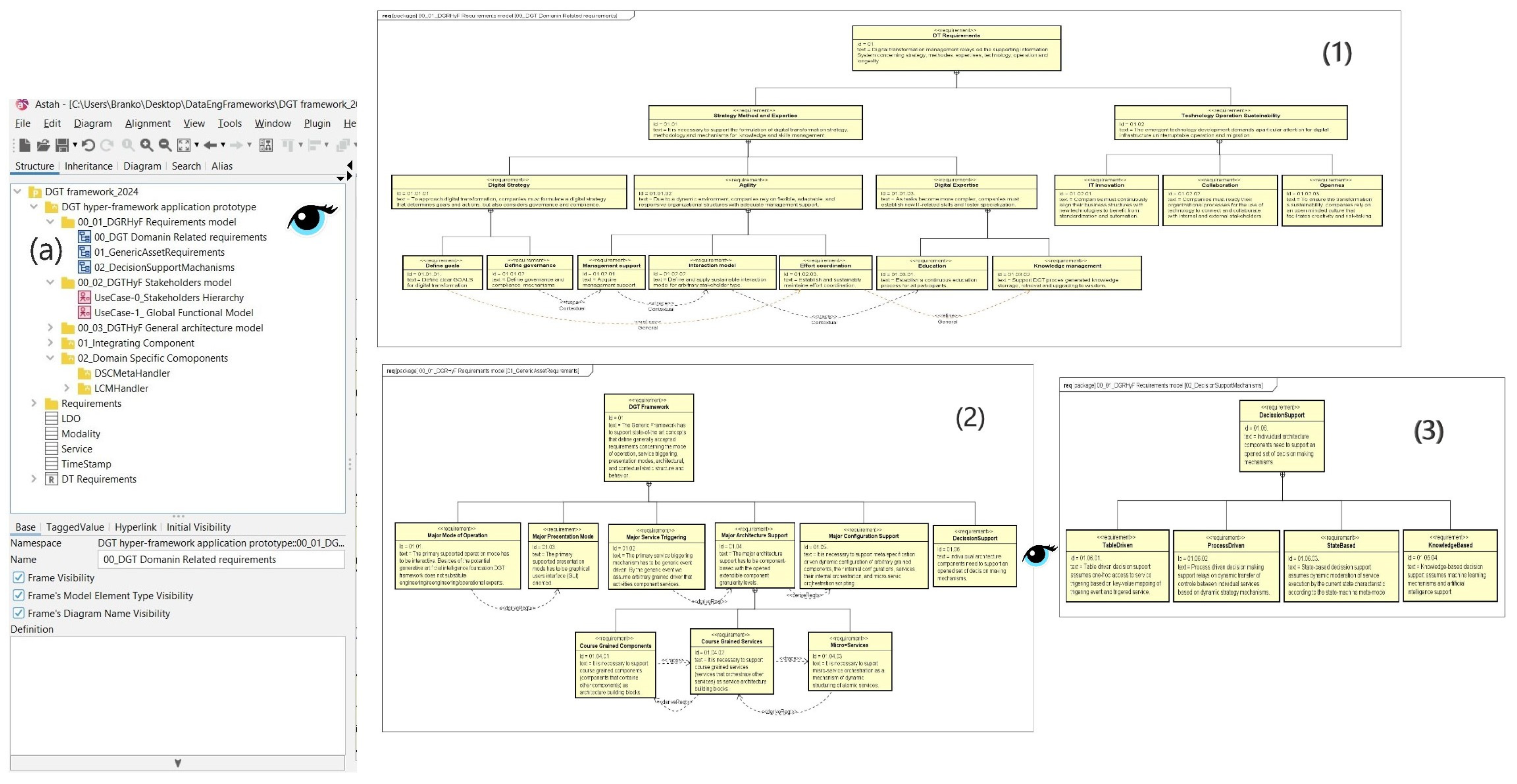

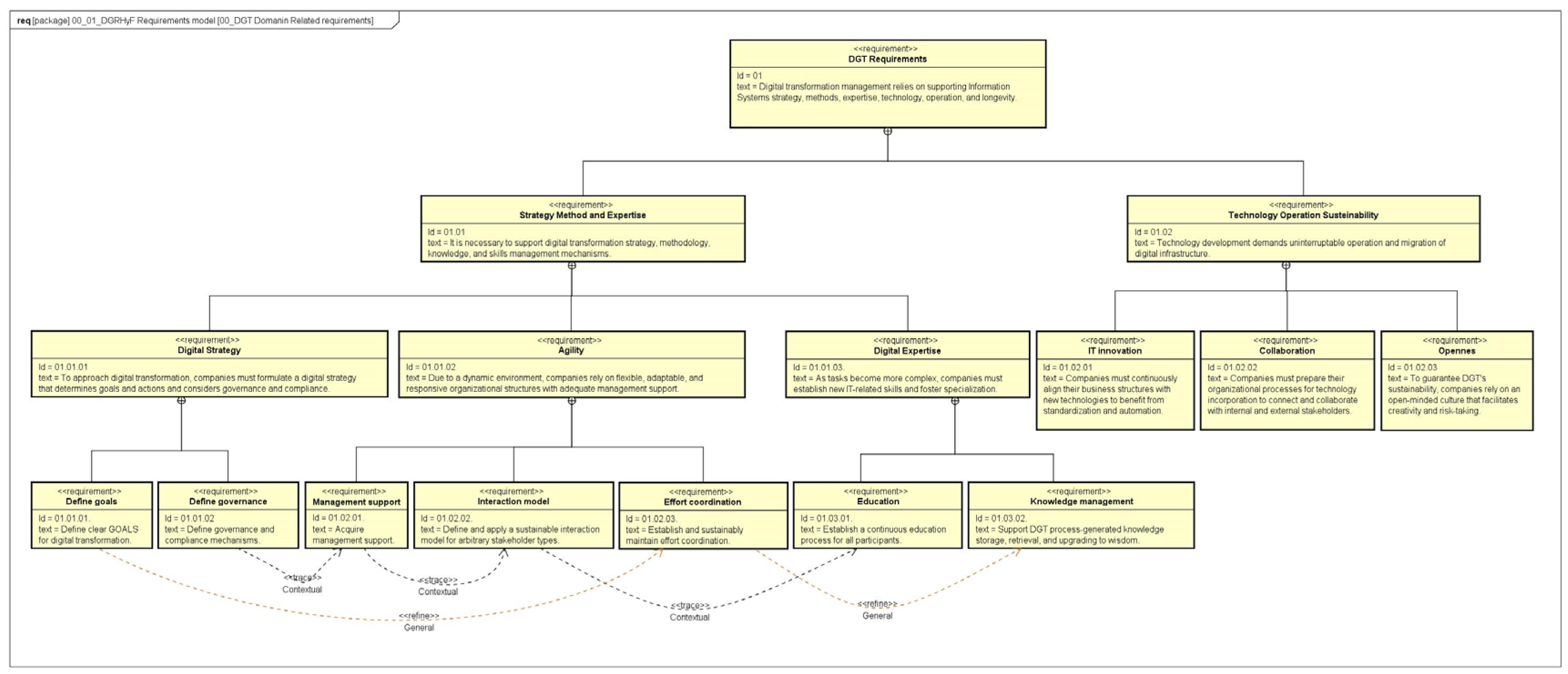

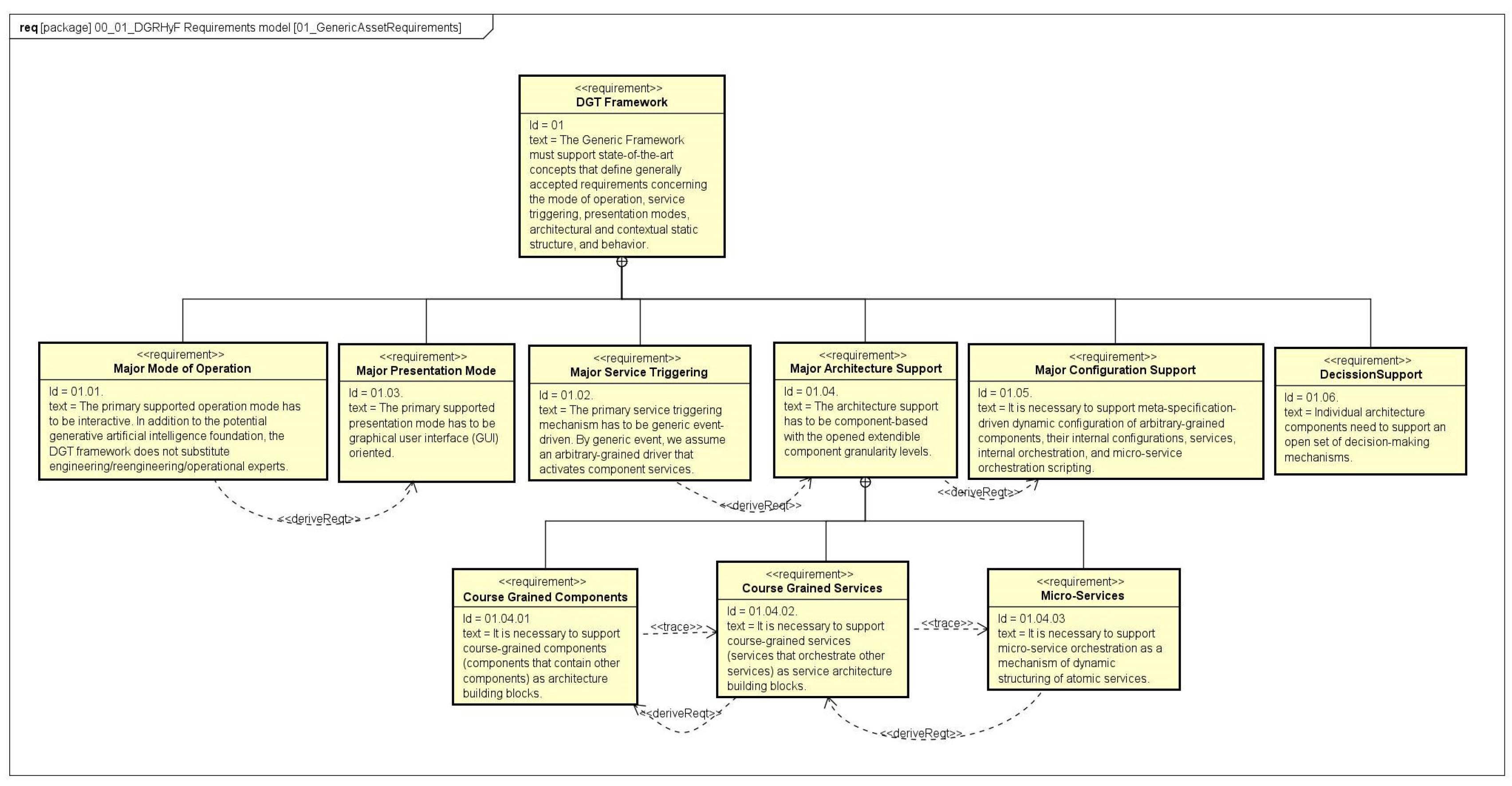

3.1. Multi-Staged Hyper-Framework Requirements Model

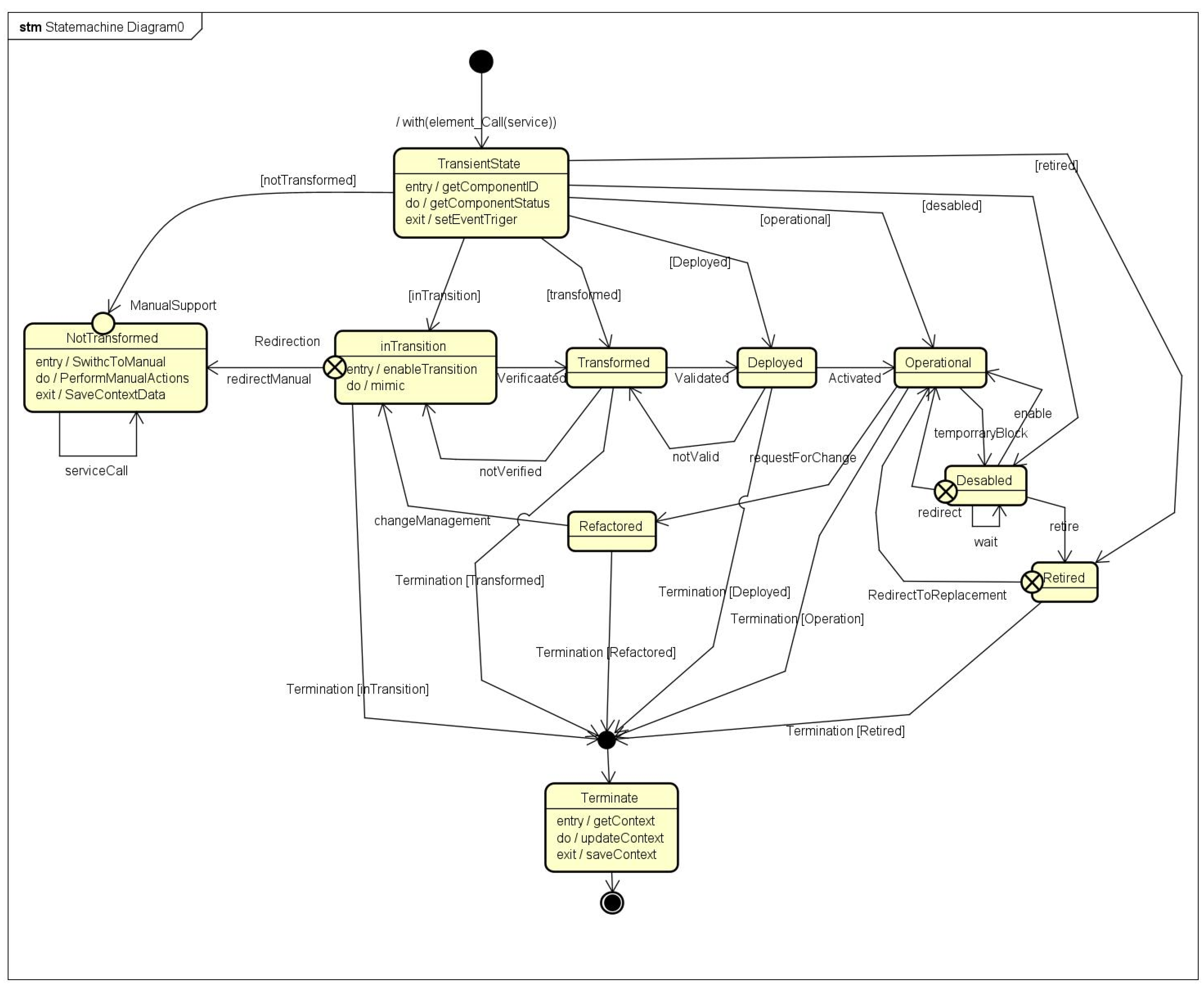

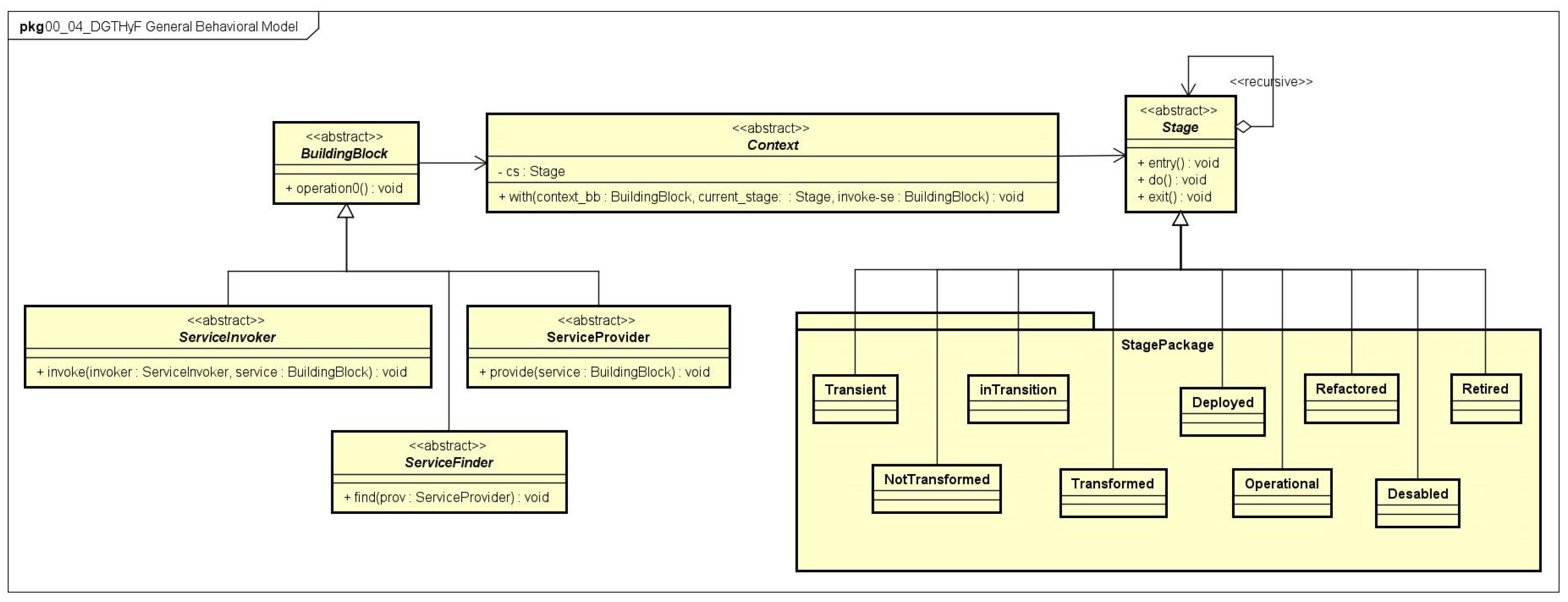

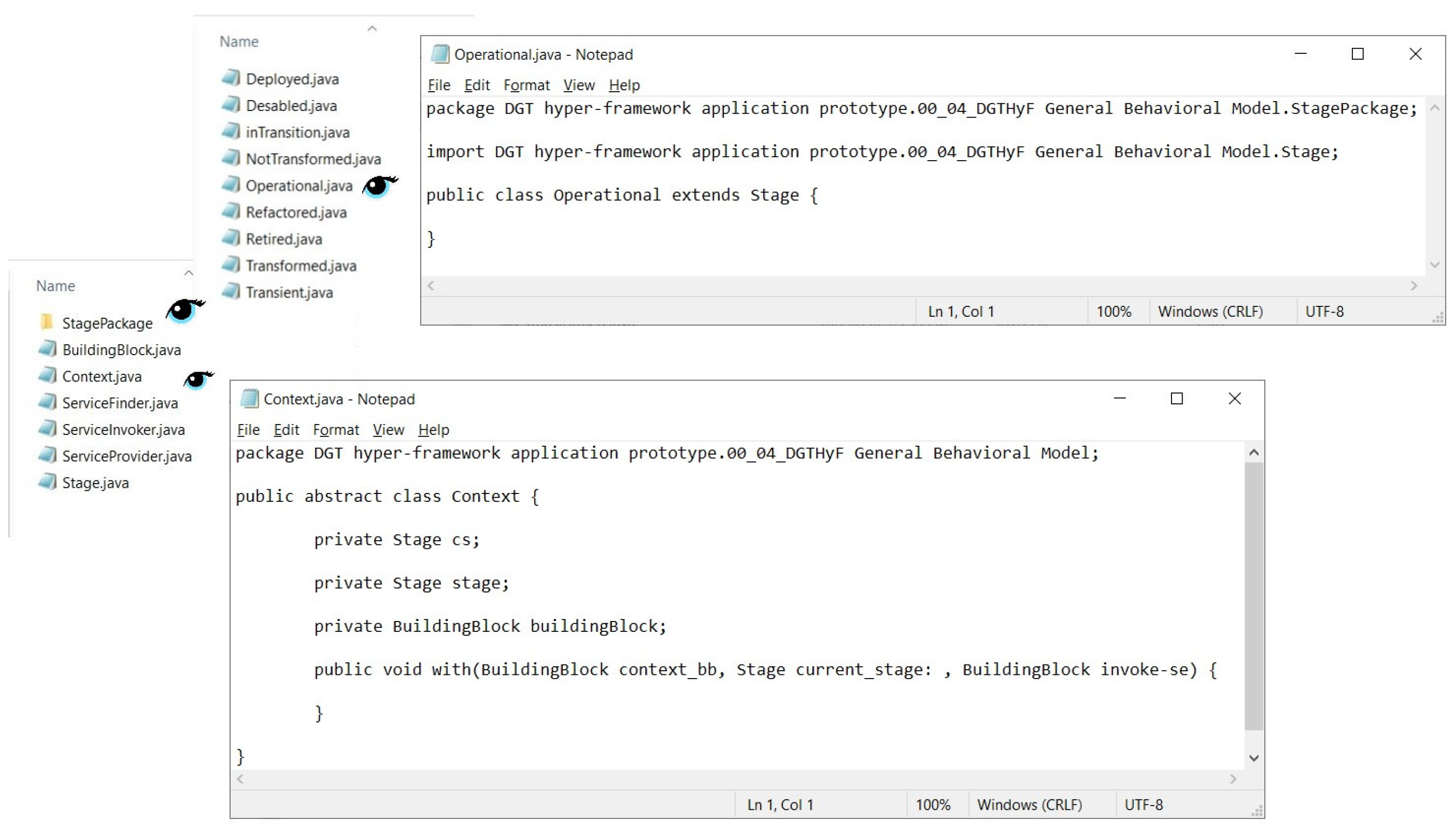

3.2. DGTHyF Generic Behavioral Model

3.3. DGT Life Cycle Model Handling Component

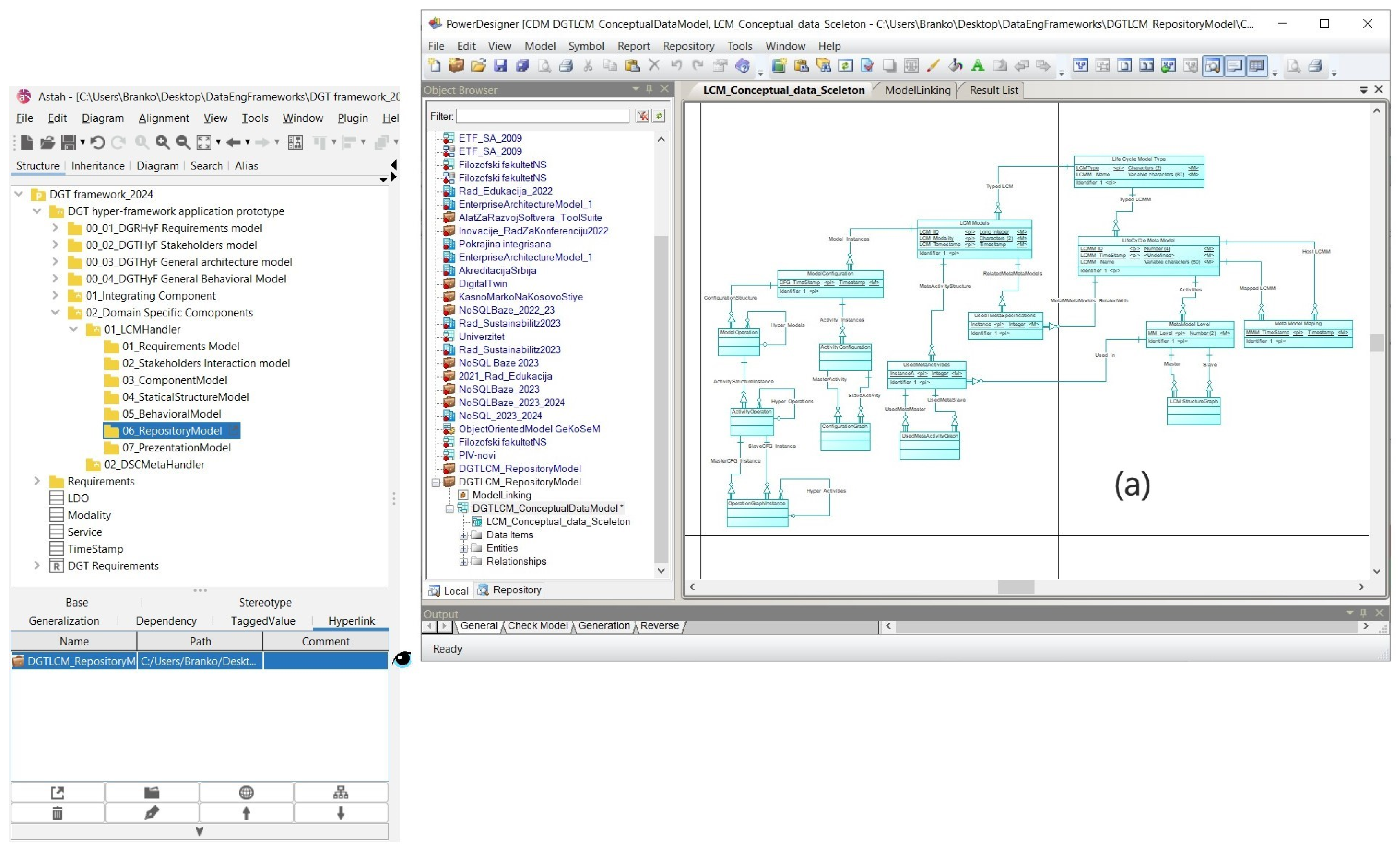

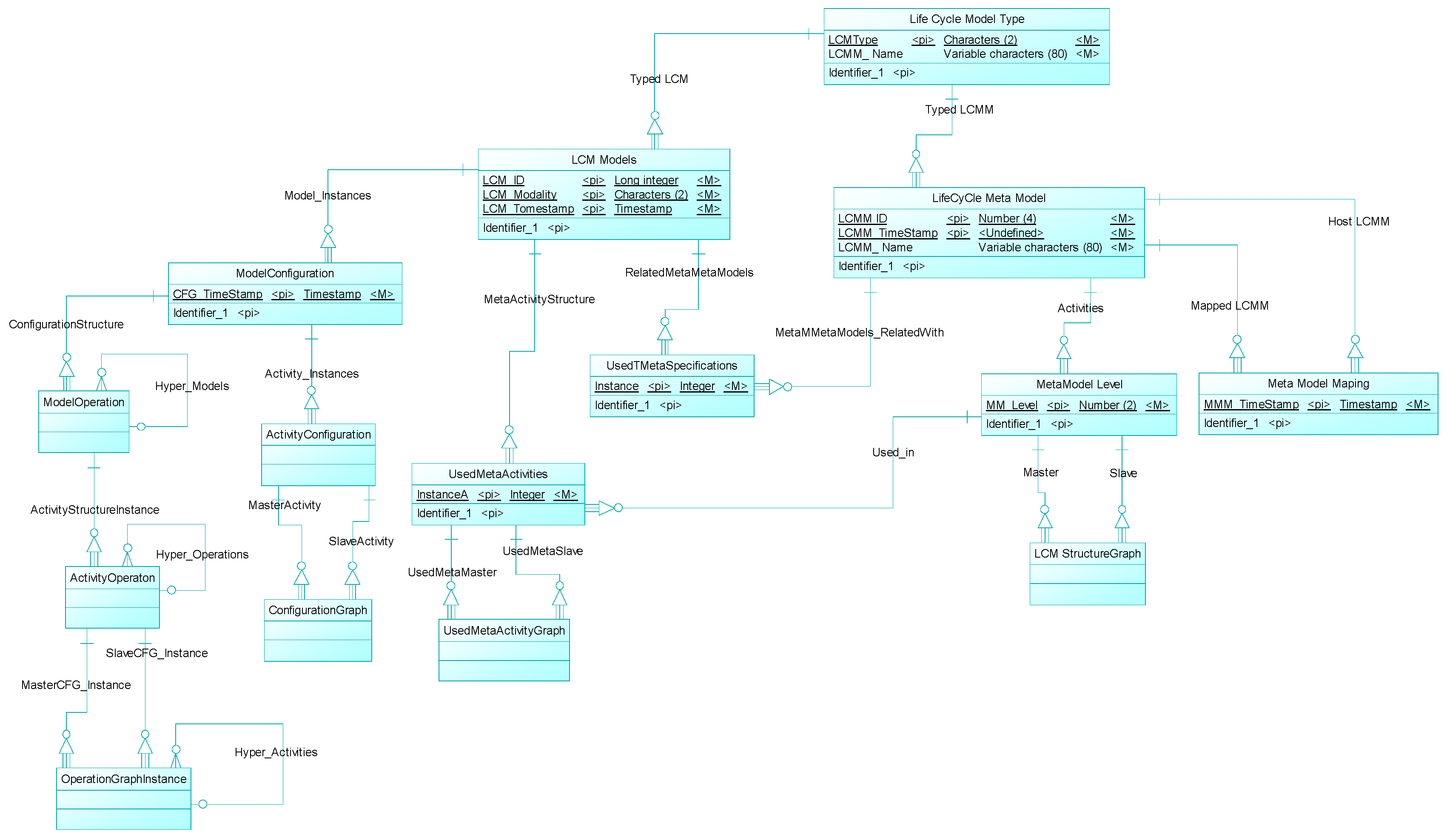

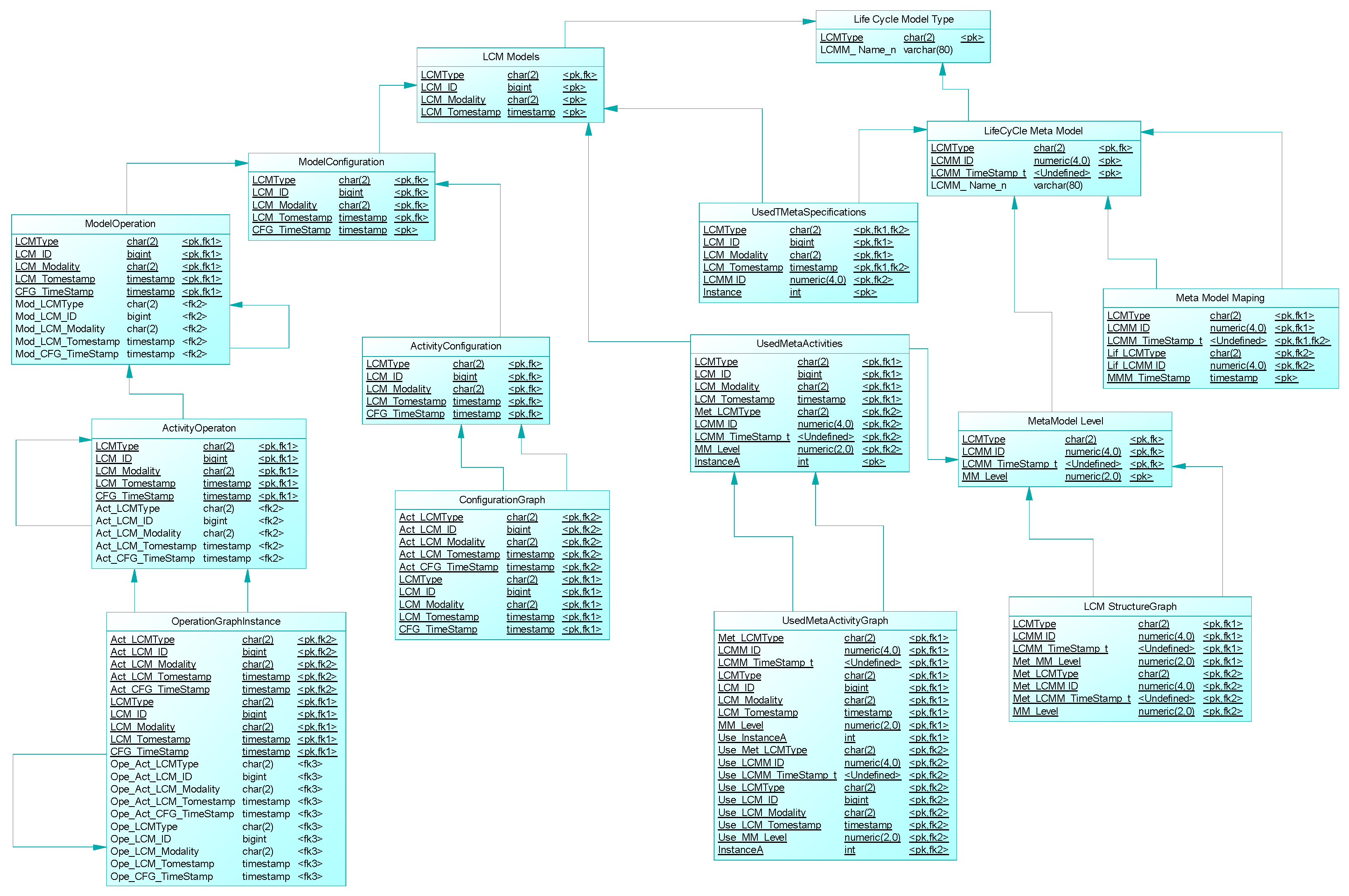

4. Discussion and Related Work

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Sample Data Definition Language Script Generated from the Physical Model (Figure 22)

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).