CNNs are designed for processing and analyzing visual data, especially images. Owing to their efficiency in extracting features from images, CNNs have been widely applied across various fields, consistently demonstrating high performance [31,87]. In the context of smart agriculture, CNNs have proven particularly valuable, supporting decision-making by analyzing complex agricultural data. When trained on large datasets, CNNs learn intricate patterns within images and generalize to new, unseen data, making them well suited to a range of agricultural applications.

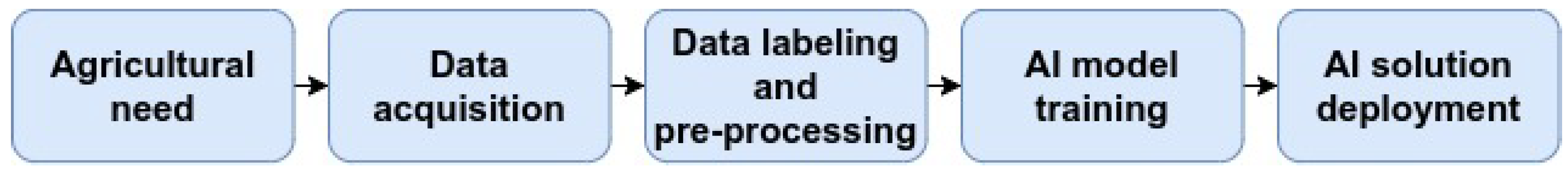

Figure 1 illustrates the general pipeline used in smart agriculture, highlighting the various stages of the process. Depending on the specific agricultural need, data is first acquired, preprocessed, and labeled; an AI model is then trained to address the agricultural problem. Their ability to efficiently analyze visual data has made CNNs widely used across a range of agricultural applications (Section 3). This section analyzes these key applications.

5.1. Weed Detection

Weeds are undesirable plants in agriculture that compete with crops of interest for essential resources such as water, sunlight, and nutrients, and some types of weeds are toxic. They can also include remnants of the previous year’s crops that re-emerge alongside the current crop. If left unmanaged, they can significantly reduce crop growth and yields. Weed invasions are usually treated with herbicides and, more recently, with advanced techniques such as laser treatment [88]. Precise herbicide application or laser treatment can rely on computer vision, and more specifically on CNNs, for accurate weed detection. Weed detection is the identification and localization of weeds in agricultural fields, which CNNs can achieve through different approaches (Table 4).

Image segmentation and object detection as main approaches. A major challenge in weed detection is distinguishing weeds from crops, especially in complex environments where they may overlap or share similar visual features (e.g. color or spectral signatures). Two common machine learning approaches address this challenge: image segmentation and object detection. In image segmentation, each pixel in the image is classified as weed or crop [47,48,78]. For example, Kamath et al. [47] showed that CNNs are efficient for weed segmentation in paddy crops, achieving a 90% weighted mean IoU with the PSPNet architecture. Espejo-Garcia et al. [78] showed that using transfer learning to segment weeds from crops accelerated training and improved performance. Specifically, the authors trained a SegNet model to segment weeds from carrots and then transferred the acquired knowledge to segment weeds from onions, and vice versa. Asad and Bais [48] achieved a frequency-weighted mean IoU of 98% for weed segmentation in canola fields using SegNet, but noted difficulties in detecting weeds that overlapped with crop leaves, as well as confusion with plant stems due to their high visual similarity.
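The IoU-based scores reported above can be computed from a pixel-level confusion matrix. The following is a minimal plain-Python sketch, assuming integer class labels per pixel (here a hypothetical coding 0 = soil, 1 = crop, 2 = weed) and the frequency-weighted variant of the weighted mean; it is not the evaluation code of [47] or [48], and toolkits differ in how they treat classes absent from both maps (here they are skipped in the mean).

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Pixel counts: rows are ground-truth classes, columns are predicted classes."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def iou_scores(cm):
    """Return (per-class IoU, mean IoU, frequency-weighted IoU) from a confusion matrix."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    ious, freqs = [], []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                         # class-c pixels predicted as another class
        fp = sum(cm[r][c] for r in range(n)) - tp    # other pixels predicted as class c
        union = tp + fp + fn
        ious.append(tp / union if union else float("nan"))
        freqs.append(sum(cm[c]) / total)             # share of ground-truth pixels in class c
    present = [iou for iou in ious if iou == iou]    # NaN != NaN: skip classes absent everywhere
    mean_iou = sum(present) / len(present)
    fw_iou = sum(f * iou for f, iou in zip(freqs, ious) if f > 0)
    return ious, mean_iou, fw_iou


# Hypothetical flattened label maps: 0 = soil, 1 = crop, 2 = weed.
truth = [0, 0, 0, 0, 1, 1, 2, 2]
pred = [0, 0, 0, 1, 1, 1, 2, 0]
per_class, miou, fwiou = iou_scores(confusion_matrix(truth, pred, 3))
print(per_class, miou, fwiou)
```

Because the frequency-weighted score weights each class by its pixel share, it is dominated by large classes such as soil and can stay high even when a small weed class is segmented poorly.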

On the other hand, object detection approaches aim to localize individual weeds or plants within a field using bounding boxes [36,59,76,89,90,91]. Zhang et al. [36] proposed a weed localization and identification method based on object detection using Faster-RCNN. The authors integrated a Convolutional Block Attention Module, which improves the efficiency of CNN feature extraction, achieving 99% accuracy at detecting and localizing several types of weeds and soybean seedlings. Chen et al. [76] used a local attention mechanism to effectively detect weeds in a sesame field, outperforming other models such as Fast-RCNN, SSD, and YOLOv4 with a mean average precision of 96% and real-time detection speed. Jabir et al. [59] compared the performance of YOLO, Faster-RCNN, Detectron2, and EfficientDet at weed detection and found that YOLOv5 is a fast and accurate model that could be integrated into embedded systems for weed detection. To address the challenges of complex environments, such as overlapping and small weeds, Wu et al. [89] improved the YOLOv4 model by modifying its backbone to include a hierarchical residual module, which improves small object detection. YOLO models are widely used in weed detection because of their speed and efficiency in real-time detection, and the latest version, YOLOv11, further improves accuracy and processing speed [86,92,93,94]. Gao et al. [90] used both synthetic and real images of weeds in sugar beet fields to train a CNN model capable of weed detection under complex conditions, e.g. variations in plant appearance, illumination changes, foliage occlusion, and different growth stages. Generating synthetic images by cropping, zooming, flipping, and adjusting the brightness of real images allowed the model to generalize better to real conditions.
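The augmentation strategy described for Gao et al. [90] can be sketched with a few image operations. The code below is a minimal plain-Python illustration on a grayscale image stored as nested lists, assuming nearest-neighbour resizing and the crop fraction and brightness range shown (both hypothetical choices); the actual pipeline and parameters in [90] may differ. For object detection, bounding-box coordinates would also need to be cropped, scaled, and flipped consistently, which this sketch omits.

```python
import random


def hflip(img):
    """Mirror each row (left-right flip)."""
    return [row[::-1] for row in img]


def adjust_brightness(img, factor):
    """Scale 8-bit intensities by `factor` and clip to [0, 255]."""
    return [[min(255, max(0, round(v * factor))) for v in row] for row in img]


def crop(img, top, left, height, width):
    """Cut out a height x width window whose top-left corner is (top, left)."""
    return [row[left:left + width] for row in img[top:top + height]]


def zoom(img, out_h, out_w):
    """Resize with nearest-neighbour sampling."""
    in_h, in_w = len(img), len(img[0])
    return [[img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def random_augment(img, rng, crop_frac=0.8, brightness=(0.7, 1.3)):
    """Random crop, zoom back to the original size, optional flip, brightness change."""
    h, w = len(img), len(img[0])
    ch, cw = max(1, int(h * crop_frac)), max(1, int(w * crop_frac))
    top, left = rng.randint(0, h - ch), rng.randint(0, w - cw)
    out = zoom(crop(img, top, left, ch, cw), h, w)
    if rng.random() < 0.5:
        out = hflip(out)
    return adjust_brightness(out, rng.uniform(*brightness))


# Hypothetical 10 x 10 grayscale image; each call yields a new synthetic variant.
image = [[(r * 10 + c) * 2 for c in range(10)] for r in range(10)]
rng = random.Random(0)
variants = [random_augment(image, rng) for _ in range(4)]
```

Seeding the generator makes the synthetic set reproducible, which helps when comparing models trained on the same augmented data.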

Multispectral data and vegetation indexes with RGB for enhanced weed detection. While many studies used RGB images for weed detection or segmentation, others have explored other data types, such as multispectral images or vegetation indexes [40,95,96,97,98,99]. Sahin et al. [96] compared different combinations of RGB, near-infrared (NIR), and Normalized Difference Vegetation Index (NDVI) [82] channels as inputs to a UNet for weed segmentation. They concluded that the Green, NDVI, and NIR channels, filtered with an edge-preserving Gaussian bilateral filter, were the best input to their model. Moazzam et al. [97] showed that combining NIR with RGB images performs better than using either one separately for weed segmentation.
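NDVI [82] is computed per pixel from the red and NIR reflectances as (NIR - Red) / (NIR + Red), and can then be stacked with other bands as an extra input channel. The sketch below builds a Green/NDVI/NIR input like the combination favoured by Sahin et al. [96], using hypothetical reflectance values; it omits the bilateral filtering step and is not the authors' preprocessing code.

```python
def ndvi(nir, red, eps=1e-8):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red); eps guards against division by zero."""
    return [[(n - r) / (n + r + eps) for n, r in zip(nir_row, red_row)]
            for nir_row, red_row in zip(nir, red)]


def stack_channels(*bands):
    """Combine same-sized 2D bands into an H x W x C nested list (channels last)."""
    height, width = len(bands[0]), len(bands[0][0])
    return [[[band[i][j] for band in bands] for j in range(width)]
            for i in range(height)]


# Hypothetical 2 x 2 reflectance patches in [0, 1].
green = [[0.10, 0.12], [0.08, 0.30]]
red = [[0.05, 0.06], [0.04, 0.25]]
nir = [[0.50, 0.45], [0.55, 0.30]]
x = stack_channels(green, ndvi(nir, red), nir)   # Green, NDVI, NIR input
print(len(x), len(x[0]), len(x[0][0]))
```

Vegetated pixels (high NIR, low red) get NDVI values near 1, while soil and residue stay near 0, which is what makes the index a useful extra channel for separating plants from background.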

Data acquisition methods using UAVs, UGVs, and handheld cameras. Different types of data are acquired with sophisticated platforms, including UAVs [41,98,99,100,101,102], UGVs [39,50,103,104], and handheld cameras [105,106,107]. Ong et al. [100] compared the performance of a CNN with an RF-based classifier for weed detection using UAVs. To acquire data, the UAV was equipped with a 20-megapixel camera that records 4K video at 60 frames per second. RGB images were captured in JPEG format at 2 m above ground level. The CNN model outperformed the RF classifier, achieving an accuracy of 92% while being less sensitive to class imbalance in the dataset. Gallo et al. [101] demonstrated that acceptable and realistic accuracy can be achieved using high-resolution RGB images captured from UAVs at 65 m above ground level. The YOLOv7 model they employed outperformed other models while also achieving real-time detection speed. Haq [102] compared several machine learning and statistical methods for weed detection using RGB UAV images taken at 4 m above ground level, finding that CNNs outperformed traditional methods such as SVM, RF, DT, and AdaBoost, with an accuracy of 99%. Osorio et al. [41] used a UAV equipped with a multispectral camera at 2 m above ground level. The images were captured in four spectral bands: green (500 nm), red (660 nm), red edge (745 nm), and NIR (790 nm). The authors also used NDVI as a background estimator to help isolate vegetation from the background. Among the compared models, YOLOv3 and Mask-RCNN outperformed SVM, with F1-scores reaching 94%.
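Flight altitude (2 m, 4 m, and 65 m in the studies above) determines how much ground each pixel covers. Under a simple pinhole-camera assumption with a nadir view over flat ground, the ground sampling distance (GSD) follows from sensor width, focal length, image width, and altitude. The camera parameters below are hypothetical values chosen for illustration, not those of the cameras used in [41,100,101,102].

```python
def ground_sampling_distance_cm(sensor_width_mm, focal_length_mm, image_width_px, altitude_m):
    """Ground distance covered by one pixel, in cm (nadir view, flat terrain, pinhole model)."""
    footprint_width_m = altitude_m * sensor_width_mm / focal_length_mm  # ground width of the frame
    return 100.0 * footprint_width_m / image_width_px


# Hypothetical camera: 13.2 mm sensor width, 8.8 mm focal length, 5472 px image width.
for altitude in (2, 4, 65):
    gsd = ground_sampling_distance_cm(13.2, 8.8, 5472, altitude)
    print(f"{altitude:>3} m -> {gsd:.3f} cm/pixel")
```

With these assumed parameters, a pixel covers roughly 0.05 cm of ground at 2 m but roughly 1.8 cm at 65 m, so small weed seedlings span far fewer pixels at high altitude.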

UGVs, or agricultural robots as some authors refer to them, have also been used for weed detection [39,40,50,104]. Quan et al. [39] used a field robot to detect maize seedlings and weeds in a maize field under different weather conditions (e.g. sunny, rainy, and cloudy). A camera mounted on the robot captured RGB images of the field at different shooting angles (0°, 30°, and 75°). Using a Faster-RCNN model, the system achieved 97% precision in detecting maize seedlings among weeds. Rasti et al. [104] captured RGB field images using a camera mounted on a UGV at 1 m above ground and found that integrating a scattering transform into a CNN could enhance the model’s performance. Suh et al. [50] captured field images with a camera mounted on a UGV at a height of 1 m and a 0° shooting angle. The study compared several CNNs pretrained on ImageNet for transfer learning and obtained the highest accuracy, 98%, with an AlexNet-based model. Lottes et al. [40] used a field robot that captures RGB and NIR images. The authors achieved F1-scores above 93% for weed segmentation using a CNN autoencoder with spatio-temporal fusion.

Cameras and sensors are not exclusively mounted on unmanned vehicles; they can also be used as handheld devices. For example, several authors [91,105,106] used digital cameras to capture field images at different times of day and under different weather conditions. Chen et al. [107] used mobile phones to build their image datasets for weed detection. Farooq et al. [95] used two multispectral cameras to detect several types of weeds: the first captures 16 bands between 460 and 630 nm, and the second captures 4 bands (green, red, red edge, and NIR).

5.2. Disease Detection

Plant disease detection is the process of detecting or identifying diseases in plants. Diseases impair crop health, leading to reduced yields and lower-quality crops. Left untreated, crop diseases may spread quickly through fields, directly affecting food safety and agricultural production. By analyzing images of plants, CNNs can identify disease symptoms, enabling fast, targeted treatment as part of crop management (Table 5).

Image classification for effective plant disease detection. In the literature, plant disease detection is mainly addressed as image classification, where models are trained to assign RGB images to categories such as healthy or diseased [51,56,85,108,109,110,111,112,113,114]. For instance, Thakur et al. [85] proposed a lightweight CNN architecture with 6 million parameters, based on VGG and Inception, that classifies plant diseases. They evaluated the model separately on five datasets covering more than 100 crop diseases. The datasets consist of RGB images of leaves from several crops that are either healthy or affected by various diseases. The model performed consistently well, reaching an accuracy of 99%. Kalbande and Patil [108] proposed a novel CNN model that combines several pooling techniques, including average pooling, max pooling, and global max pooling. Mixing these pooling techniques aims to achieve a "smoothing to sharpening" approach, in which average pooling and max pooling are applied to smooth the features extracted by convolutional layers, and global max pooling is then applied to sharpen them. The method was applied to classify images of diseased and healthy tomato leaves, reaching an accuracy of 95%. Panshul et al. [109] compared a CNN model with other machine learning and statistical algorithms (RF, SVM, Naive Bayes, Gradient Boosting, DT, KNN, and Multilayer Perceptron (MLP)) for disease classification in potato plants. The models were trained and tested on potato leaf images, and the CNN outperformed the other methods with 98% accuracy. Zhong et al. [110] proposed a lightweight CNN model suitable for embedded systems. The model uses Phish modules and light residual modules, which improve feature extraction while reducing model size. It outperformed other CNN models such as ResNet and VGG at tomato disease classification, achieving 99% accuracy while being lighter. Kaya and Gürsoy [111] proposed a novel deep learning method to identify plant diseases. Their approach fuses RGB images of plant leaves with versions of the same images in which the background has been removed. Evaluated on 54,000 leaf images spanning 38 classes, the model achieved 98% accuracy, outperforming state-of-the-art techniques. Furthermore, Ahad et al. [56] compared six state-of-the-art CNNs (DenseNet121, InceptionV3, MobileNetV2, ResNeXt101, ResNet152, and SEResNeXt101) for rice disease classification. Transfer learning from ImageNet significantly improved classification accuracy, which reached 98% with SEResNeXt101. Similarly, Pajjuri et al. [51] compared AlexNet, GoogLeNet, VGG16, and ResNet50V2 for plant disease classification. VGG16 performed best, reaching an accuracy of 98%.
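The "smoothing to sharpening" idea of Kalbande and Patil [108] can be illustrated on a single feature-map channel. The sketch below assumes a particular ordering (2×2 average pooling, then 2×2 max pooling, then global max pooling) and is written in plain Python; in the actual model these operations sit between convolutional layers and act on every channel, and the exact arrangement may differ.

```python
def pool2d(fmap, size, reduce):
    """Non-overlapping size x size pooling (stride = size); ragged edges are dropped."""
    h = len(fmap) // size * size
    w = len(fmap[0]) // size * size
    return [[reduce([fmap[i + di][j + dj] for di in range(size) for dj in range(size)])
             for j in range(0, w, size)]
            for i in range(0, h, size)]


def mean(values):
    return sum(values) / len(values)


def smooth_then_sharpen(fmap):
    """Hypothetical ordering: average pool, then max pool, then global max pool."""
    smoothed = pool2d(fmap, 2, mean)          # average pooling: damps isolated noisy activations
    reduced = pool2d(smoothed, 2, max)        # max pooling: keeps the strongest local response
    return max(max(row) for row in reduced)   # global max pooling: one value per channel


# One hypothetical 4 x 4 channel of a convolutional feature map.
fmap = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
print(pool2d(fmap, 2, mean), smooth_then_sharpen(fmap))
```

Averaging first means a single spurious peak cannot dominate the later max operations, while the final global max still keeps the strongest (smoothed) response in the channel.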

Disease detection as image segmentation or object detection. While most studies approach plant disease detection as a classification problem, others treat it as an image segmentation problem [68,115,116,117]. For instance, Sharmila et al. [115] and Prashanth et al. [68] used a Mask-RCNN model to segment leaf images. The model successfully separated pixels showing disease symptoms from healthy leaf areas, achieving high performance. Shoaib et al. [83] used image segmentation as a preprocessing step before plant disease identification. The authors trained a UNet model to create a segmentation mask that isolates leaves from the background. The isolated leaf images are then passed to an InceptionV1 CNN, which achieves 99% accuracy in determining whether the leaf is healthy or diseased. Kaur et al. [116] proposed a CNN model for tomato leaf disease segmentation. Their model successfully classified pixels showing plant diseases, e.g. early or late blight, achieving an accuracy of 98%. Similarly, Sharma and Sethi [117] used CNN-based segmentation of wheat leaves to classify potential diseases.
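Once a segmentation network has produced binary masks, two simple operations follow: zeroing the background before passing the leaf to a classifier (the preprocessing role described for Shoaib et al. [83]) and summarizing a lesion mask. The sketch below assumes the masks already exist (here written by hand rather than predicted by a UNet or Mask-RCNN), and the lesion fraction is a hypothetical illustrative summary, not a metric reported by the cited studies.

```python
def apply_mask(image, mask, fill=0):
    """Keep pixels where mask is 1 (leaf); replace background pixels with `fill`."""
    return [[px if m else fill for px, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask)]


def lesion_fraction(leaf_mask, lesion_mask):
    """Share of leaf pixels that a segmentation model labels as symptomatic."""
    leaf_pixels = sum(m for row in leaf_mask for m in row)
    lesion_pixels = sum(1 for leaf_row, lesion_row in zip(leaf_mask, lesion_mask)
                        for on_leaf, symptomatic in zip(leaf_row, lesion_row)
                        if on_leaf and symptomatic)
    return lesion_pixels / leaf_pixels if leaf_pixels else 0.0


# Hypothetical 3 x 3 grayscale leaf image and binary masks.
image = [[120, 130, 15], [110, 90, 12], [10, 11, 14]]
leaf = [[1, 1, 0], [1, 1, 0], [0, 0, 0]]
lesion = [[0, 1, 0], [0, 0, 0], [0, 0, 0]]
leaf_only = apply_mask(image, leaf)   # background zeroed before classification
print(leaf_only, lesion_fraction(leaf, lesion))
```

For RGB images, each pixel would be a tuple and `fill` would be `(0, 0, 0)`; the masking logic is unchanged.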

Some authors have also proposed object detection methods based on UAV imagery [42,49,53]. Liang et al. [53] proposed a CNN model based on the CenterNet architecture that detects diseases and insect pests in a forest. Applied to aerial images of a forest, the method showed high accuracy and real-time speed, outperforming state-of-the-art methods. Wu et al. [49] used the YOLOv3 model with the complete IoU (CIoU) loss function, a bounding-box regression loss designed for object detection. The authors trained the model on drone images of healthy and diseased pine trees to detect the diseased trees. The results showed an accuracy of 95%, with an average processing time of less than 0.5 s. Sangaiah et al. [42] employed a YOLO implementation for rice leaf disease detection from UAV images. The proposed model is lightweight and can be deployed on UAVs while maintaining high performance, reaching 86% mean average precision (mAP).
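The CIoU loss used by Wu et al. [49] extends 1 - IoU with a normalized centre-distance penalty and an aspect-ratio consistency term, so that non-overlapping or misshapen boxes still receive an informative training signal. The sketch below evaluates the standard CIoU formula for one pair of axis-aligned boxes in plain Python; it computes the loss value only (no gradients), assumes boxes in (x1, y1, x2, y2) form with positive width and height, and is not the implementation used in [49].

```python
import math


def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2) with x2 > x1 and y2 > y1."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def ciou_loss(pred, gt):
    """Complete-IoU loss: 1 - IoU + centre-distance penalty + aspect-ratio penalty."""
    iou = box_iou(pred, gt)
    # Squared distance between the two box centres.
    dx = (pred[0] + pred[2]) / 2 - (gt[0] + gt[2]) / 2
    dy = (pred[1] + pred[3]) / 2 - (gt[1] + gt[3]) / 2
    rho2 = dx ** 2 + dy ** 2
    # Squared diagonal of the smallest box enclosing both boxes.
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    c2 = cw ** 2 + ch ** 2
    # Aspect-ratio consistency term v and its trade-off weight alpha.
    v = (4 / math.pi ** 2) * (math.atan((gt[2] - gt[0]) / (gt[3] - gt[1]))
                              - math.atan((pred[2] - pred[0]) / (pred[3] - pred[1]))) ** 2
    alpha = v / (1 - iou + v) if v > 0 else 0.0
    return 1 - iou + rho2 / c2 + alpha * v


# Hypothetical tree-crown boxes in pixels.
gt_box = (0, 0, 2, 2)
print(ciou_loss((0, 0, 2, 2), gt_box), ciou_loss((1, 0, 3, 2), gt_box))
```

A perfect prediction gives a loss of 0; the shifted box is penalized both for its lower overlap and for the distance between centres.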

Beyond RGB images. RGB data is the most frequently used data type for plant disease detection in the literature because of the visual nature of symptoms. Moreover, acquiring RGB images is inexpensive and requires little equipment. A significant portion of research has therefore focused on analyzing RGB leaf images. Nevertheless, recent studies have used non-visible imagery for plant disease detection, especially at early stages, before symptoms become visible [118,119,120]. Duan et al. [118] proposed a CNN model for early detection of blight using multispectral imaging. Blight in pepper leaves could be detected 36 hours before visible symptoms appeared. The CNN model achieved an accuracy of 91%, demonstrating the feasibility of multispectral imaging for early disease detection. De Silva and Brown [119] compared several deep learning techniques for detecting tomato diseases in multispectral images. Combining visible and NIR wavelengths achieved the highest accuracy, reaching 93%. The study also showed that Vision Transformers (ViTs) outperformed CNNs, hybrid ViTs, and Swin Transformers at this task. Reyes-Hung et al. [120] discussed the use of YOLO-based object detection methods to classify crop stress in multispectral images of potato crops. This study highlights the importance of non-visible light, especially NIR and red edge, for detecting plant diseases.

5.3. Crop Classification

Crop classification is the identification and categorization of different crop types. It is useful for enhancing agricultural management, e.g. mapping crop distribution, planning crop rotations, monitoring land use, or informing policymaking. In the literature, crop classification problems are often solved with CNNs using different approaches (Table 6).

Classifying crop images. Image classification approaches assign an entire image to a class based on the crop it contains [123,124,125]. Gill et al. [123] proposed a CNN-RNN-LSTM model to classify field images of wheat, assigning each image to a wheat variety class with an accuracy reaching 95%. Kaya et al. [124] compared transfer learning and fine-tuning strategies for CNNs to classify leaf images of different crops. Transfer learning provided the best outcomes, reaching 99% classification accuracy. Lu et al. [125] proposed a six-layer CNN architecture to classify fruit images into 9 classes. Their CNN model outperformed SVM, genetic algorithms, and feedforward neural networks, reaching 91% accuracy.

Satellite-based remote sensing approaches. Crop classification is not limited to leaf or fruit images; it can also be performed at larger scales, for example on satellite images [45,126,127,128,129,130]. Yao et al. [45] used Sentinel-2 satellite image time series acquired on 5 dates to detect tea plantations. The authors proposed a hybrid architecture combining CNNs and Recurrent Neural Networks (RNNs) and compared its performance with methods such as SVM, RF, CNN, and RNN. This RCNN model was trained for image segmentation, detecting the pixels that show tea plantations, and outperformed the other methods with an IoU of 79%. Rasheed and Mahmood [126] used Sentinel-2 time series to distinguish rice crops from other classes (built-up, crops, rangeland, trees, and water) without the need for in-situ surveys. NDVI was used in addition to the multispectral Sentinel-2 inputs. The proposed CNN approach was compared with classical machine learning methods (RF, SVM, and classification and regression trees) and with deep learning models (Swin Transformer, HRNet, 2D-CNN, and Long Short-Term Memory (LSTM)). Deep learning approaches outperformed traditional machine learning ones, and the proposed approach achieved the highest performance overall, reaching 93% accuracy. Kou et al. [127] used Sentinel-2 multi-temporal satellite images as input features and ground labels from a survey as targets for crop classification. Their proposed CNN outperformed RF and generalized well over temporal data. Farmonov et al. [128] used hyperspectral images acquired by the DESIS spectrometer mounted on the International Space Station. While DESIS images have 235 spectral bands between 400 and 1000 nm, only 29 bands were selected based on their importance. The authors proposed a method based on wavelet transforms, spectral attention, and CNNs to classify pixels into several agricultural crops. Zhao et al. [130] used Sentinel-1 synthetic-aperture radar (SAR) time series for early crop classification, comparing different models that take time series of VH- and VV-polarized backscatter data as inputs. The 1D-CNN outperformed RF, LSTM, and GRU-RNN, showing that it is effective at classifying crops at an early stage from SAR satellite imagery.
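A 1D-CNN of the kind compared by Zhao et al. [130] slides learned kernels along the time axis of each input channel, here the VH and VV backscatter series. The sketch below shows one convolutional layer (computed as cross-correlation, as in deep learning frameworks), a ReLU, and global average pooling in plain Python, with hypothetical weights and backscatter values; training, deeper stacks, and the final classifier are omitted, and it does not reproduce the architecture of [130].

```python
def conv1d(x, weights, bias):
    """Valid 1D convolution over time.

    x:       C input channels, each a list of T values
    weights: F filters, each a list of C kernels of length K
    bias:    F values
    returns: F output channels of length T - K + 1
    """
    k = len(weights[0][0])
    t_out = len(x[0]) - k + 1
    out = []
    for f, filt in enumerate(weights):
        row = []
        for t in range(t_out):
            s = bias[f]
            for c, kern in enumerate(filt):
                s += sum(kern[i] * x[c][t + i] for i in range(k))
            row.append(s)
        out.append(row)
    return out


def relu(feature_maps):
    return [[max(0.0, v) for v in row] for row in feature_maps]


def global_avg_pool(feature_maps):
    """Average each filter's response over time: one feature per filter."""
    return [sum(row) / len(row) for row in feature_maps]


# Hypothetical dB backscatter over 6 acquisition dates: channel 0 = VH, channel 1 = VV.
series = [[-18.0, -17.5, -16.0, -14.0, -13.5, -13.0],
          [-12.0, -11.8, -11.0, -10.0, -9.8, -9.5]]
# One hypothetical kernel-size-2 filter that responds to VH rising between dates.
weights = [[[-1.0, 1.0], [0.0, 0.0]]]
features = global_avg_pool(relu(conv1d(series, weights, [0.0])))
print(features)
```

Because the same kernel is applied at every date, the layer detects temporal patterns (such as backscatter rising as a canopy develops) regardless of exactly when they occur in the series.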

Fusion of satellite and UAV data. Satellite images are often combined with UAV imagery for crop classification [131,132,133]. Yin et al. [131] proposed a ViT model based on 3D convolutional attention modules for crop classification using multi-temporal SAR data from UAV and satellite (RADARSAT2) sources. The attention modules consist of a polarization module and a temporal-spatial module that learn features from the temporal and polarized (SAR) data. The proposed model outperformed CNNs such as ResNet and 3DResNet, reaching 98% and 91% accuracy on UAVSAR and RADARSAT2 data, respectively. Li et al. [132] proposed a CNN model to segment crop parcels or objects in remote sensing time series. The time series combines SAR data acquired by UAVSAR and multispectral images acquired by RapidEye. Labels were obtained from the United States Department of Agriculture, which provides a wide range of agricultural data. The authors showed that their method outperformed other techniques.

Applications of UAVs in crop classification. UAV imagery is also used on its own in many crop classification studies [52,134,135]. Pandey and Jain [52] proposed an intelligent system based on CNNs and UAV imagery for crop identification and classification. An RGB camera mounted on a UAV flying 100 m above ground captured the images. The authors compared the accuracy of the proposed CNN with machine learning methods such as RF and SVM, and with CNNs such as AlexNet, VGG, and ResNet. Galodha et al. [134] used a UAV equipped with a terrestrial hyperspectral spectroradiometer to capture high-resolution images of different crops. The authors compared several CNNs with different numbers of layers and kernel sizes; CNNs with 3 or 5 layers achieved almost identical accuracy (87%) when 7×7 kernels were used. Kwak et al. [135] proposed a hybrid CNN-RF model for early crop mapping with limited input data. The CNN-RF model outperformed standalone CNNs and RFs by combining the strengths of both: CNN feature extraction and RF classification.
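One way to read the layer and kernel comparison of Galodha et al. [134] is through the receptive field, the input neighbourhood that influences one output unit. For n stacked stride-1 convolutions with k×k kernels it grows as 1 + n(k - 1) pixels per side. The sketch below computes it in plain Python, assuming stride 1 and no pooling by default, which may not match the networks evaluated in [134].

```python
def receptive_field(num_layers, kernel_size, stride=1):
    """Receptive field (input pixels per side) of stacked conv layers with equal kernel and stride."""
    rf, jump = 1, 1
    for _ in range(num_layers):
        rf += (kernel_size - 1) * jump  # each layer widens the field by (k - 1) input steps
        jump *= stride                  # distance between neighbouring outputs, in input pixels
    return rf


for layers in (3, 5):
    for k in (3, 7):
        print(f"{layers} layers, {k}x{k} kernels -> {receptive_field(layers, k)} px")
```

Under these assumptions, three 7×7 layers cover a 19×19 pixel neighbourhood and five cover 31×31, compared with 7×7 for three 3×3 layers.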

Crop classification has benefited significantly from remote sensing data, especially multispectral, hyperspectral, and radar data. Advanced technologies, such as sensors on UAVs and satellites, provide temporal data that CNNs analyze to enhance crop monitoring and accurately identify crops.

5.4. Water Management

Water management focuses on optimizing water usage by monitoring irrigation, moisture levels, and potential droughts. By assessing the state of water resources, effective strategies can be developed to use water efficiently. Depending on water availability, general plant characteristics such as color, shape, or curvature vary significantly. Several CNN approaches exploit these observable changes to address this topic, supporting sustainable agricultural practices in the long term (Table 7).

Color and spectral data for plant water stress detection. Many studies use image classification to determine from color images whether plants suffer from water stress [37,72,136,137,138]. Kamarudin et al. [136] proposed a lightweight CNN based on an attention module for water stress detection. They considered images of plants subjected to different water treatments, ranging from full irrigation to water deprivation. Their method outperformed state-of-the-art models, reaching 87% classification accuracy. Gupta et al. [72] and Azimi et al. [137] used images of chickpea plants subjected to three different watering treatments. Gupta et al. [72] found that ResNet-18 achieved 86% accuracy, while Azimi et al. [137] achieved 98% accuracy using a hybrid CNN-LSTM model. Hendrawan et al. [37] compared four CNN models (SqueezeNet, GoogLeNet, ResNet50, and AlexNet) at identifying water stress in moss cultures. They used RGB images of moss that received different water treatments (dry, semi-dry, wet, and soak). The ResNet50 model achieved the best accuracy, reaching 87%. Zhuang et al. [138] used images of maize plants that were watered differently. The authors used a CNN feature extractor followed by an SVM classifier to classify images into categories ranging from drought-stressed to well-watered. Their method achieved a balanced trade-off between classification time and accuracy.

Color images are well suited to detecting water stress in plants that show observable symptoms, such as changes in color or curvature. However, other authors used multispectral and hyperspectral images to identify water stress earlier, when symptoms are less observable [61,139,140,141]. Kuo et al. [139] used a hyperspectral spectrometer on tomato seedlings to detect early drought stress in the absence of visible changes. The authors proposed a 1D-CNN based on ResNet’s residual block, combined with Grad-CAM, that achieved 96% accuracy, outperforming other methods while also minimizing computation and data collection costs. Spišić et al. [140] analyzed multispectral readings to detect water stress in maize canopies. SVM, 1D-CNN, and MLP achieved comparable performance, with trade-offs between performance and detection speed. Kamarudin and Ismail [61] compared several lightweight CNN models for drought stress identification in RGB and NIR images of plants that went through different water treatments. Among MobileNet, MobileNetV2, NASNet Mobile, and EfficientNet, EfficientNet achieved the best performance, reaching an accuracy of 88%. Zhang et al. [141] also compared different algorithms for detecting water stress in tomato plants using the visible and NIR spectrum and cloud computing. The MLP and the one-vs-rest classifier outperformed 1D-CNNs at processing 1D spectral data.

Complementary data sources. Other studies used thermal and weather data for water stress detection because of their correlation with factors such as temperature, humidity, soil moisture, and evapotranspiration rates [142,143,144,145]. Li et al. [142] used thermal imagery acquired with a thermal camera, along with RGB images, of rice leaves with different levels of water stress. They demonstrated that using CNNs to extract features from background temperature, in addition to plant thermal images, improved classification accuracy. According to the authors, this is due to the importance of air temperature, which is directly related to plant temperature. Sobayo et al. [143] used thermal imagery to estimate soil moisture. They proposed a CNN-based regression model that generalized well over three farm areas while outperforming traditional neural networks. Nagappan et al. [144] used weather data to estimate evapotranspiration for irrigation scheduling, with inputs including wind speed and maximum/minimum temperature, and evapotranspiration as labels. The authors demonstrated the effectiveness of a 1D-CNN for analyzing 1D time series data. Afzaal et al. [145] compared several techniques for groundwater estimation using stream level, stream flow, precipitation, relative humidity, mean temperature, evapotranspiration, heat degree days, and dew point temperature. The study suggested that artificial neural networks, MLP, LSTM, and CNNs were efficient at groundwater estimation, with MLP and CNN slightly outperforming the other algorithms. Vegetation indexes were also used to assist in water stress identification [140,146,147,148]. For instance, Chaudhari et al. [148] reported that good results are more common when NDVI is used for drought prediction. Similarly, Spišić et al. [140] and Ge et al. [147] used NDVI for water deficit detection and soil moisture estimation, respectively.
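Feeding weather time series to a 1D-CNN, as in Nagappan et al. [144], first requires slicing the daily record into fixed-length windows paired with a target. The sketch below is a minimal plain-Python version under assumed choices (a 3-day window, with the label taken on the last day each window covers); the feature names and values are hypothetical, not data from [144].

```python
def make_windows(features, targets, window):
    """Slice a daily record into (window of feature vectors, target) training pairs.

    features: per-day feature vectors, e.g. [wind_speed, t_max, t_min]
    targets:  per-day values to estimate, e.g. evapotranspiration
    The label is the target on the last day covered by each window.
    """
    if len(features) != len(targets):
        raise ValueError("features and targets must have the same length")
    xs, ys = [], []
    for end in range(window - 1, len(features)):
        xs.append(features[end - window + 1:end + 1])
        ys.append(targets[end])
    return xs, ys


# Hypothetical daily records: [wind_speed_m_s, t_max_C, t_min_C] and ET in mm/day.
weather = [[2.1, 31.0, 18.0], [1.8, 32.5, 19.2], [2.5, 30.1, 17.5],
           [3.0, 29.0, 16.8], [2.2, 33.4, 20.1]]
et = [4.8, 5.2, 4.6, 4.4, 5.6]
X, y = make_windows(weather, et, window=3)
# Each window is days x features; transpose to features x days for a 1D convolution.
X_channels_first = [[list(col) for col in zip(*win)] for win in X]
```

Windows overlap by design, so a short record still yields several training pairs; a chronological train/test split avoids leaking future days into training.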

Satellite imagery for water stress and soil moisture monitoring. While fixed optical cameras and weather variables are widely used in the literature, satellites have also proven beneficial [146,147,149,150,151,152]. Liu et al. [146] combined Sentinel-1 radar and Sentinel-2 optical satellite data to retrieve soil moisture in farmland areas. As inputs to the compared algorithms, the authors used dual-polarization radar (VH, VV), elevation, local incidence angle, and polarization decomposition features (H, A, α) from Sentinel-1, together with several vegetation indexes (NDVI, Modified Soil Adjusted Vegetation Index (MSAVI), and Difference Vegetation Index (DVI) [82]) computed from Sentinel-2’s red and NIR bands. The study showed that a regression CNN outperformed support vector regression (SVR) and generalized regression neural networks, and that MSAVI had the strongest correlation with soil moisture content, followed by NDVI and then DVI, because MSAVI is influenced by both vegetation and soil. Bazzi et al. [149] used both Sentinel-1 and Sentinel-2 time series for water management tasks in agriculture. Using Sentinel-1 VV and VH polarizations, Sentinel-2 red and NIR bands, and the derived NDVI, the authors compared different algorithms for mapping irrigated areas and found that the CNN approach achieved 94% accuracy, outperforming RF. Ge et al. [147] compared several algorithms for estimating soil moisture from satellite observations. Their data included radar data from the SMOS and ASCAT satellites, as well as NDVI from the MODIS NDVI product MYD13C1. In this study, CNNs performed better than traditional neural networks at soil moisture retrieval from temporal satellite observations. Hu et al. [150] used microwave data from the Aqua satellite for soil moisture retrieval through regression. Again, a regression CNN performed better than SVR while also being significantly faster.
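The three indexes compared by Liu et al. [146] are all computed from the same red and NIR reflectances. The sketch below uses the standard definitions of NDVI and DVI and the widely used closed form of MSAVI (often called MSAVI2); whether [146] used exactly this MSAVI variant is an assumption, and the pixel values are hypothetical. The soil-adjustment built into MSAVI is what makes it respond to both vegetation and soil.

```python
import math


def dvi(nir, red):
    """Difference Vegetation Index: NIR - Red."""
    return nir - red


def ndvi(nir, red):
    """Normalized Difference Vegetation Index."""
    return (nir - red) / (nir + red)


def msavi(nir, red):
    """MSAVI in its common closed form (often called MSAVI2); reflectances in [0, 1]."""
    return (2 * nir + 1 - math.sqrt((2 * nir + 1) ** 2 - 8 * (nir - red))) / 2


# Hypothetical pixels: dense canopy, and sparse canopy over bright soil.
for nir_r, red_r in [(0.50, 0.05), (0.30, 0.20)]:
    print(f"NDVI={ndvi(nir_r, red_r):.3f}  MSAVI={msavi(nir_r, red_r):.3f}  "
          f"DVI={dvi(nir_r, red_r):.3f}")
```

All three indexes are 0 for a pixel whose NIR and red reflectances are equal, as for bare soil with a flat spectrum; they diverge as vegetation cover increases.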

UAV approaches for soil moisture and water stress detection. UAVs have also been used in various water management applications [66,153]. For instance, Wu et al. [153] proposed a method based on UAV remote sensing and deep learning for soil moisture estimation in drip-irrigated fields. Kumar et al. [66] employed a UAV-based technique using RGB images for water stress identification. The authors proposed a framework to identify different levels of water stress in a maize field, using RGB images captured by a UAV-mounted camera to train different models. The proposed CNN outperformed models such as ResNet50, VGG19, and InceptionV3, achieving 93% accuracy. The authors used multimodal and multitemporal UAV imaging capturing RGB, multispectral, and thermal infrared wavelengths, and showed that a CNN-LSTM model is more accurate than CNN and LSTM models.

In conclusion, water management in agriculture encompasses a wide range of applications, especially drought and water stress detection, irrigation mapping, and soil moisture estimation. Studies consistently show that CNN methods can support effective water management using different data types acquired from a variety of sources at different temporal and spatial resolutions.

5.5. Yield Prediction

Yield prediction refers to forecasting the quantity of crops that will be harvested from a field at the end of its growing season. It is an important aspect of agriculture that helps farmers plan and make decisions regarding resources, supplies, and market strategies. With the emergence of AI and advanced computer vision technologies, research has focused on methods that correlate past yield values with image data (Table 8).

Advances in crop yield prediction from multimodal data through regression. Predicting or estimating crop yield is often framed as a regression problem because the predicted values are scalar [154,155,156,157,158,159]. Mia et al. [154] compared different setups for yield prediction, using CNN-based methods with UAV multispectral imagery combined with monthly, weekly, or no weather data. The best results were obtained with weekly weather data that included precipitation, global solar radiation, temperature, average relative humidity, average wind speed, and vapor pressure. Tanabe et al. [157] used UAV multispectral imagery for winter wheat yield prediction and showed that CNN models outperform conventional regression algorithms such as linear regression. They also demonstrated that using multitemporal data from different growth stages may not improve prediction accuracy if the CNN is effectively implemented: imagery from the heading stage alone was sufficient for accurate predictions. Morales et al. [155] also used regression CNNs for winter wheat yield prediction. The authors used remote sensing data that included nitrogen rate, precipitation, slope, elevation, topographic position index, terrain aspect, and Sentinel-1 backscatter coefficients. They showed that the proposed CNN outperformed other techniques such as Bayesian multiple linear regression, standard multiple linear regression, RF, feedforward networks with AdaBoost, and a stacked autoencoder. Terliksiz and Altilar [156] used deep learning to extract features from MODIS multispectral data and land surface temperature time series. The proposed model concatenated the features extracted by a CNN branch from the multispectral and temperature data with those extracted by an LSTM branch from past yield data. This method is simple and efficient for crop yield prediction from multimodal and multitemporal data. Zhou et al. [158] used a CNN-LSTM model for rice yield prediction. Its input time series included MODIS remote sensing data, several vegetation indices (Enhanced Vegetation Index (EVI) and Soil Adjusted Vegetation Index (SAVI) [82,160]), Gross Primary Productivity, temperature data, spatial heterogeneity, and historical yield data. The proposed model consisted of a CNN block for spatial feature extraction followed by an LSTM block for temporal feature extraction, and it outperformed other CNN and LSTM methods. In another hybrid approach, Saini et al. [159] proposed a CNN-LSTM method for yield prediction in which a CNN block extracts relevant spatial features and a bidirectional LSTM captures phenological information. The proposed method outperformed comparable methods from other studies.
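The CNN-then-LSTM pattern described for [158] and [159] can be made concrete with a small forward pass in NumPy. In this sketch, a convolutional block turns each acquisition date into a feature vector, an LSTM accumulates those vectors over time, and a linear head outputs one scalar yield value. The layer sizes, the ReLU plus global-average-pooling step, the unidirectional LSTM (Saini et al. use a bidirectional one), and the helper names are all illustrative assumptions, not details from those studies. The weights are random and untrained, so the printed number is meaningless. In practice the model would be built and trained in a deep learning framework.

```python
import numpy as np

def conv2d_valid(image, kernels):
    """Naive 'valid' 2D cross-correlation, as computed by CNN conv layers.
    image: (C, H, W); kernels: (F, C, kh, kw) -> (F, H-kh+1, W-kw+1)."""
    f, _, kh, kw = kernels.shape
    _, h, w = image.shape
    out = np.empty((f, h - kh + 1, w - kw + 1))
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            patch = image[:, i:i + kh, j:j + kw]
            out[:, i, j] = np.tensordot(kernels, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h, c, W, U, b):
    """One LSTM step; gate pre-activations stacked as [input, forget, output, candidate]."""
    n = h.shape[0]
    z = W @ x + U @ h + b
    i, f, o = sigmoid(z[:n]), sigmoid(z[n:2 * n]), sigmoid(z[2 * n:3 * n])
    g = np.tanh(z[3 * n:])
    c = f * c + i * g
    return o * np.tanh(c), c

def init_params(rng, channels, filters=8, hidden=16, k=3):
    """Random (untrained) weights; sizes are arbitrary illustrative choices."""
    s = 0.1
    return {
        "kernels": rng.normal(0, s, (filters, channels, k, k)),
        "W": rng.normal(0, s, (4 * hidden, filters)),
        "U": rng.normal(0, s, (4 * hidden, hidden)),
        "b": np.zeros(4 * hidden),
        "w_out": rng.normal(0, s, hidden),
        "b_out": 0.0,
    }

def cnn_lstm_predict(sequence, p):
    """sequence: (T, C, H, W) image time series of one field -> scalar yield."""
    hidden = p["U"].shape[1]
    h, c = np.zeros(hidden), np.zeros(hidden)
    for image in sequence:
        # CNN block: convolution + ReLU + global average pooling -> spatial features
        feat = np.maximum(conv2d_valid(image, p["kernels"]), 0.0).mean(axis=(1, 2))
        # LSTM block: carries information across acquisition dates
        h, c = lstm_step(feat, h, c, p["W"], p["U"], p["b"])
    # Regression head: a single scalar, since yield prediction is a regression task
    return float(p["w_out"] @ h + p["b_out"])

rng = np.random.default_rng(0)
params = init_params(rng, channels=4)   # e.g. 4 spectral bands per date
series = rng.random((6, 4, 16, 16))     # 6 dates of synthetic 16x16 patches
print(cnn_lstm_predict(series, params))
```

The CNN weights are shared across dates, so the LSTM sees a sequence of comparable feature vectors. This separation of spatial and temporal processing is the property these hybrid models rely on.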

Object detection and image segmentation for image-based yield prediction. While regression is well suited to crop yield prediction, some studies took different approaches. For instance, object detection methods were used to detect individual crops or fruits in RGB images. These approaches make it possible to estimate yield by counting fruits and identifying their maturity level [161,162]. CNN models were also trained to detect crop heads and fruits, e.g., wheat heads, cotton bolls, and apples, and thereby estimate crop yield [163,164,165]. Maji et al. [43] proposed a combined object detection and image segmentation approach to yield prediction. The method predicts wheat yield by first detecting wheat spikes with bounding boxes and then classifying wheat pixels. They reported a mAP of 97%, overcoming difficult conditions such as overlapping spikes and background interference.
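The counting-based estimation mentioned above (counting detected fruits and identifying their maturity level) reduces to simple arithmetic once a detector has run. The sketch below assumes the detector outputs `(label, score)` pairs with maturity-class labels. It keeps detections above a confidence threshold and multiplies each class count by an assumed mean mass per fruit. The labels, the threshold, and the per-fruit masses are hypothetical values; real studies calibrate such factors against harvested samples.

```python
from collections import Counter

def estimate_yield(detections, mean_mass_g, min_score=0.5):
    """Count-based yield estimate from object detections.

    detections: iterable of (label, score) pairs, e.g. maturity classes.
    mean_mass_g: hypothetical mean mass per fruit (grams) for each label;
    a detected label missing from this mapping raises KeyError.
    Returns (counts per label, estimated kg per label, total kg).
    """
    counts = Counter(label for label, score in detections if score >= min_score)
    per_class_kg = {label: n * mean_mass_g[label] / 1000 for label, n in counts.items()}
    return counts, per_class_kg, sum(per_class_kg.values())

# Hypothetical detector output for one image and assumed fruit masses
dets = [("ripe", 0.9), ("ripe", 0.8), ("unripe", 0.7), ("ripe", 0.3)]
counts, per_class, total = estimate_yield(dets, {"ripe": 150, "unripe": 120})
print(dict(counts), per_class, total)
```

The low-confidence detection (`0.3`) is discarded, so only two ripe fruits and one unripe fruit contribute to the estimate.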

Using semantic segmentation, Ilyas and Kim [46] proposed a CNN architecture for strawberry yield prediction. Their method classified strawberry pixels into three maturity classes, enabling yield prediction for each class. Their approach outperformed several DeepLab architectures, achieving a mean IoU of 80%. Yang et al. [38] showed that it is possible to estimate the yield of corn plants based on their growth stage. The authors proposed a CNN-based method that classifies hyperspectral images of corn plants in the field into five growth stages. Classification accuracy reached 75% when combining color and spectral information.
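Mean IoU, the metric reported for the strawberry segmentation above, averages the per-class ratio of intersection to union between the predicted and reference masks. A minimal NumPy sketch follows. The integer class labels are hypothetical. Skipping classes that are absent from both masks is one convention among several, and this choice is assumed here.

```python
import numpy as np

def mean_iou(y_true, y_pred, num_classes):
    """Mean intersection-over-union over classes 0..num_classes-1.

    Classes absent from both masks are skipped rather than scored,
    so they do not inflate or deflate the average.
    """
    ious = []
    for k in range(num_classes):
        t = y_true == k
        p = y_pred == k
        union = np.logical_or(t, p).sum()
        if union == 0:
            continue
        ious.append(np.logical_and(t, p).sum() / union)
    return float(np.mean(ious))

# Toy 2x3 masks with three hypothetical classes (e.g. maturity levels 0, 1, 2)
y_true = np.array([[0, 0, 1], [1, 2, 2]])
y_pred = np.array([[0, 1, 1], [1, 2, 0]])
print(mean_iou(y_true, y_pred, num_classes=3))  # per-class IoU 1/3, 2/3, 1/2 -> 0.5
```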

Aerial crop yield prediction using UAVs. To predict yield efficiently, many studies benefit from a complete view of the field. UAVs are therefore very common in this domain, as they provide an aerial view of the plants [38,57,79,154,157,162,163,166]. Bhadra et al. [57] proposed an end-to-end 3D-CNN that uses multi-temporal UAV-based color images for soybean yield prediction. They demonstrated that 3D DenseNet outperformed 3D VGG and 3D ResNet. Increasing the spatio-temporal resolution did not necessarily improve model performance; instead, it added model complexity. Yu et al. [166] compared CNNs with several machine learning algorithms for maize biomass estimation from drone images. A UAV equipped with both a digital camera and a multispectral camera was used to acquire data from the field. CNNs outperformed traditional models, and combining multispectral with RGB data gave the best results. Li et al. [79] used UAV imaging and CNNs to estimate cotton yield from a low altitude (5 m). The authors used a SegNet model to segment cotton boll pixels and then applied linear regression to the segmentation result to obtain the yield. The proposed model outperformed SVM and RF.
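The two-step procedure attributed to Li et al. [79] (segment boll pixels, then regress yield on the segmentation result) can be sketched as follows. The source does not say exactly which quantity was regressed. This sketch therefore assumes the per-plot fraction of pixels labeled as boll, and it uses synthetic plot data purely to show the mechanics of an ordinary least-squares line fit.

```python
import numpy as np

def boll_fraction(mask):
    """Fraction of pixels labeled cotton boll (nonzero) in a binary segmentation mask."""
    return float(np.asarray(mask, dtype=bool).mean())

def fit_linear_yield(fractions, yields):
    """Least-squares fit of yield = a * fraction + b; returns (a, b)."""
    a, b = np.polyfit(fractions, yields, deg=1)  # coefficients, highest degree first
    return float(a), float(b)

def predict_yield(mask, a, b):
    """Apply the fitted line to a new plot's segmentation mask."""
    return a * boll_fraction(mask) + b

# Synthetic calibration plots: boll fractions from segmentation vs. measured yield
fractions = np.array([0.10, 0.20, 0.30, 0.40])
yields = 2.0 * fractions + 1.0  # hypothetical units, e.g. kg per plot
a, b = fit_linear_yield(fractions, yields)
print(predict_yield(np.array([[1, 0], [0, 0]]), a, b))  # fraction 0.25
```

A linear model on top of the segmentation keeps the yield step interpretable, while the CNN handles the hard part of separating bolls from leaves and soil.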

Large-scale crop yield prediction with satellite data. Satellite imagery has also been used in crop yield prediction studies, offering a broad view of fields over long periods [155,156,158,167,168,169,170,171,172,173,174,175,176,177]. For example, Fernandez-Beltran et al. [170] used monthly Sentinel-2 multispectral images with climate and soil data to estimate rice yields. The authors proposed a 3D-CNN that can extract temporal, spatial, and multispectral features from images; it proved effective at yield estimation and outperformed state-of-the-art 2D and 3D-CNNs. Qiao et al. [172] also proposed a 3D-CNN for crop yield prediction from satellite time series. They used a multispectral dataset (MOD09A1) and a thermal dataset (MYD11A2) from MODIS, and the proposed method outperformed competing methods such as LSTM, SVM, RF, DT, and 2D-CNNs. 3D-CNNs are often used to process temporal image stacks, especially in satellite remote sensing, where data are acquired periodically and historical records are available [169,175,178]. Other studies used hybrid CNN models for yield prediction from satellite images. These models combine CNN and LSTM modules, making them efficient at processing spatial and temporal data [171,173,176]. Further studies compared different algorithms and models for yield prediction from satellite data. For instance, Huber et al. [167] compared XGBoost, CNN, and CNN-LSTM for yield prediction. The study used time series of MODIS multispectral (MOD09A1) and thermal (MOD11A2) data, along with meteorological variables (precipitation and vapor pressure). The results showed that XGBoost can be efficient at yield prediction, outperforming state-of-the-art deep learning methods. Kang et al. [174] compared Lasso, SVR, RF, XGBoost, LSTM, and CNN for maize yield prediction using remote sensing time series. XGBoost outperformed the other algorithms, especially LSTM and CNN, when the dataset involved a small feature space.
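A 3D-CNN differs from a 2D-CNN in that its kernels also slide along the time axis. This lets a single filter respond to how a pixel neighborhood changes between acquisitions, which is why such models suit periodic satellite time series. The naive NumPy sketch below implements one "valid" 3D cross-correlation over an input of shape (bands, dates, height, width), summing over bands as a conv layer sums over input channels. The function name and shapes are illustrative assumptions; real models stack many such filters with nonlinearities and are trained in a deep learning framework.

```python
import numpy as np

def conv3d_valid(volume, kernel):
    """Naive 'valid' 3D cross-correlation for one output filter.

    volume: (C, T, H, W), i.e. bands x dates x rows x cols.
    kernel: (C, kt, kh, kw); it spans all bands and slides over time and space.
    Returns an array of shape (T-kt+1, H-kh+1, W-kw+1).
    """
    c, t, h, w = volume.shape
    kc, kt, kh, kw = kernel.shape
    if kc != c:
        raise ValueError("kernel must span all input bands")
    out = np.empty((t - kt + 1, h - kh + 1, w - kw + 1))
    for a in range(out.shape[0]):          # position along time
        for i in range(out.shape[1]):      # position along rows
            for j in range(out.shape[2]):  # position along columns
                window = volume[:, a:a + kt, i:i + kh, j:j + kw]
                out[a, i, j] = np.sum(window * kernel)
    return out

# A kernel of [-1, 1] along time responds to change between consecutive dates:
# here every pixel's value grows by 1 per date, so every output equals 1.
series = np.arange(4.0).reshape(1, 4, 1, 1) * np.ones((1, 4, 2, 2))
temporal_diff = np.array([-1.0, 1.0]).reshape(1, 2, 1, 1)
print(conv3d_valid(series, temporal_diff))
```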