Submitted: 18 November 2024

Posted: 19 November 2024


Abstract

Keywords:

1. Introduction

2. Related Work

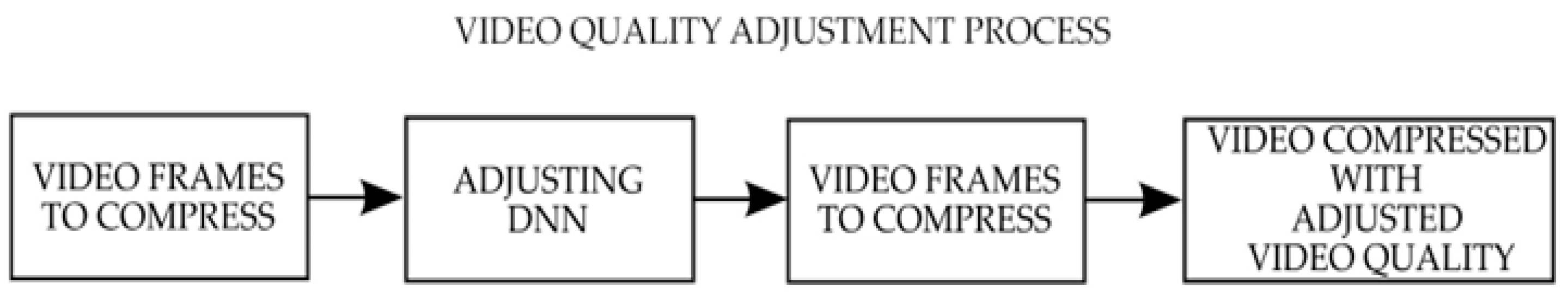

3. Concept of the Proposed Method

3.1. General Concept of the Proposed Method

3.2. HEVC Compression Adjustment Learning Process

3.3. Neural Network Architecture

4. Results

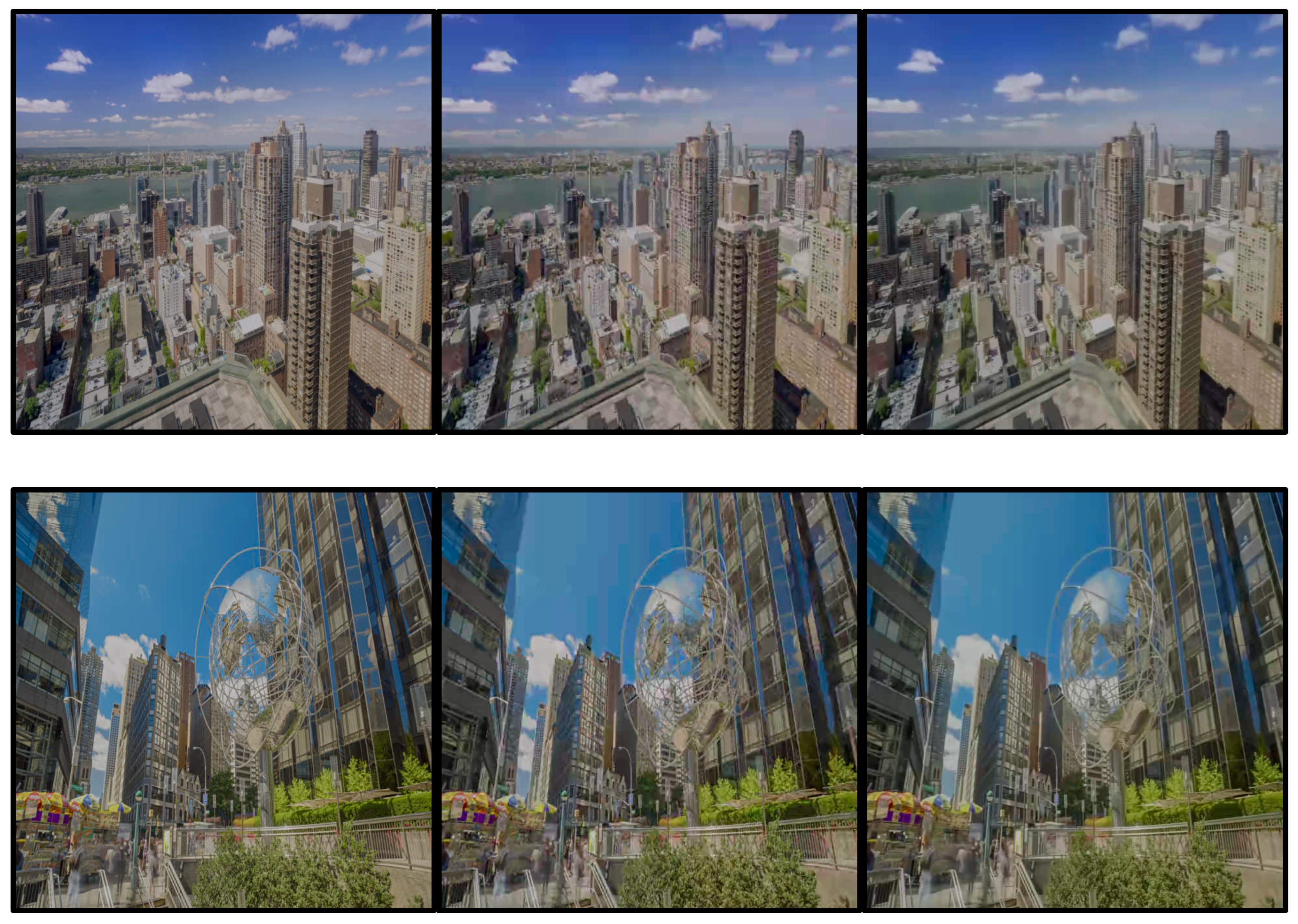

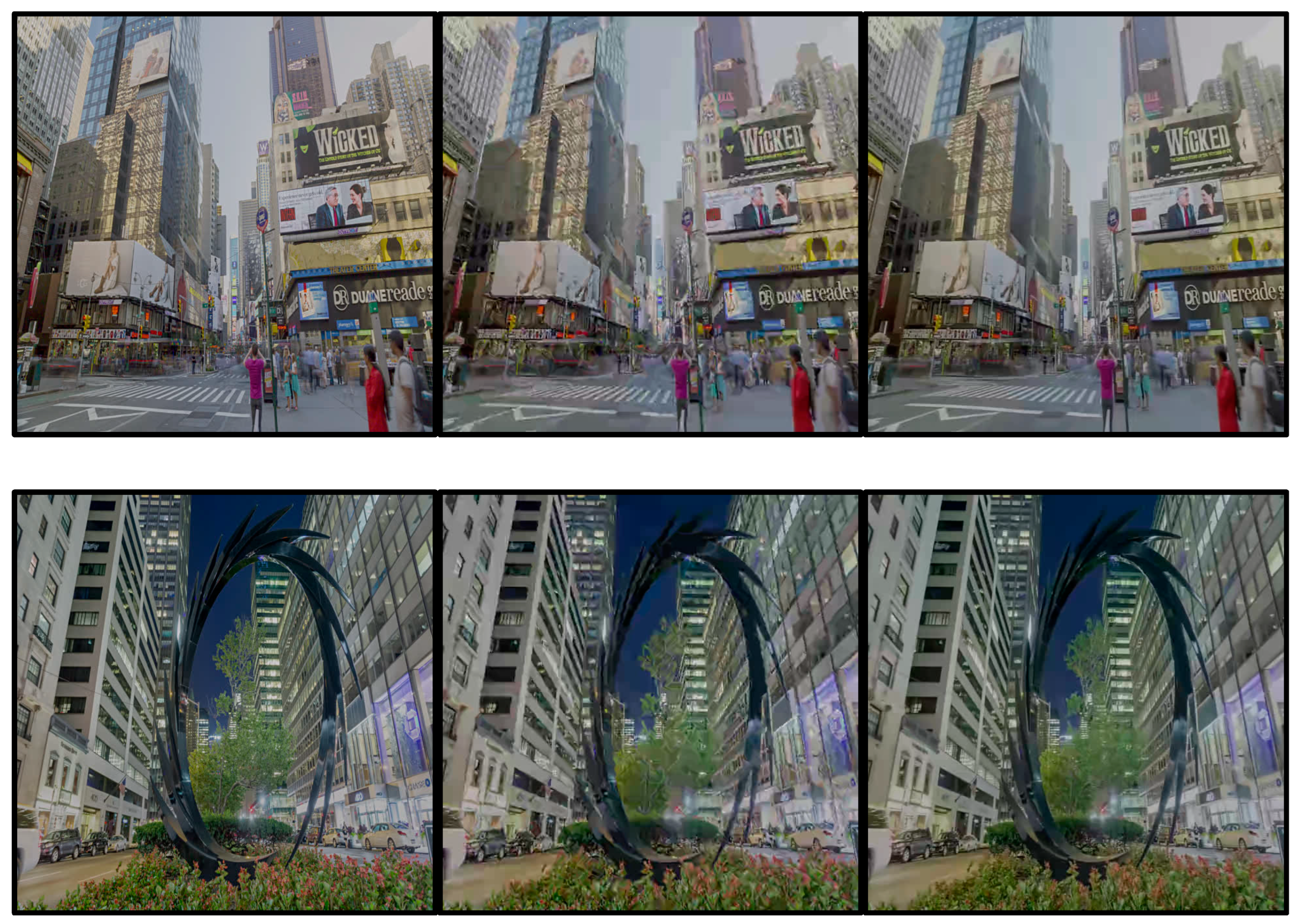

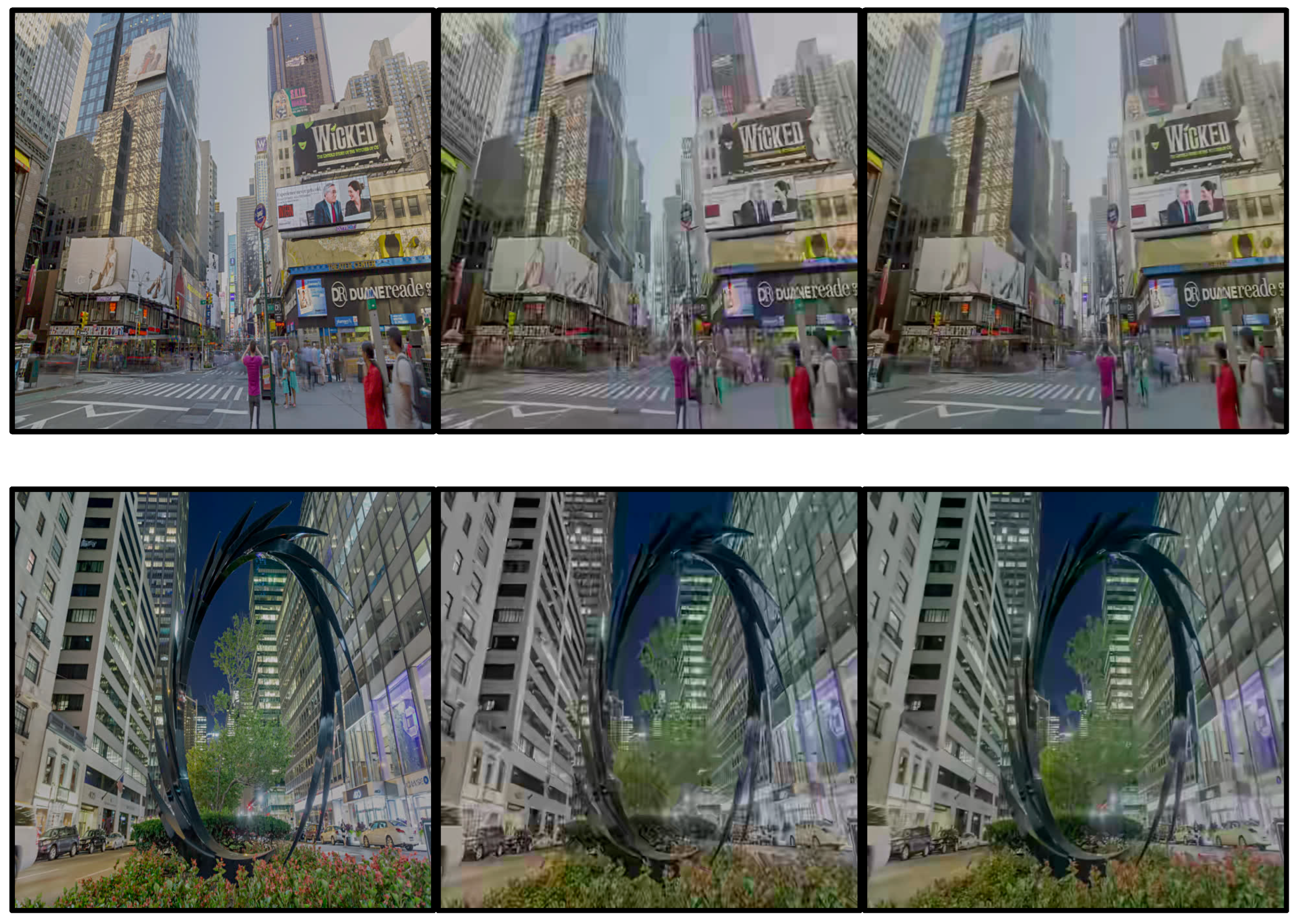

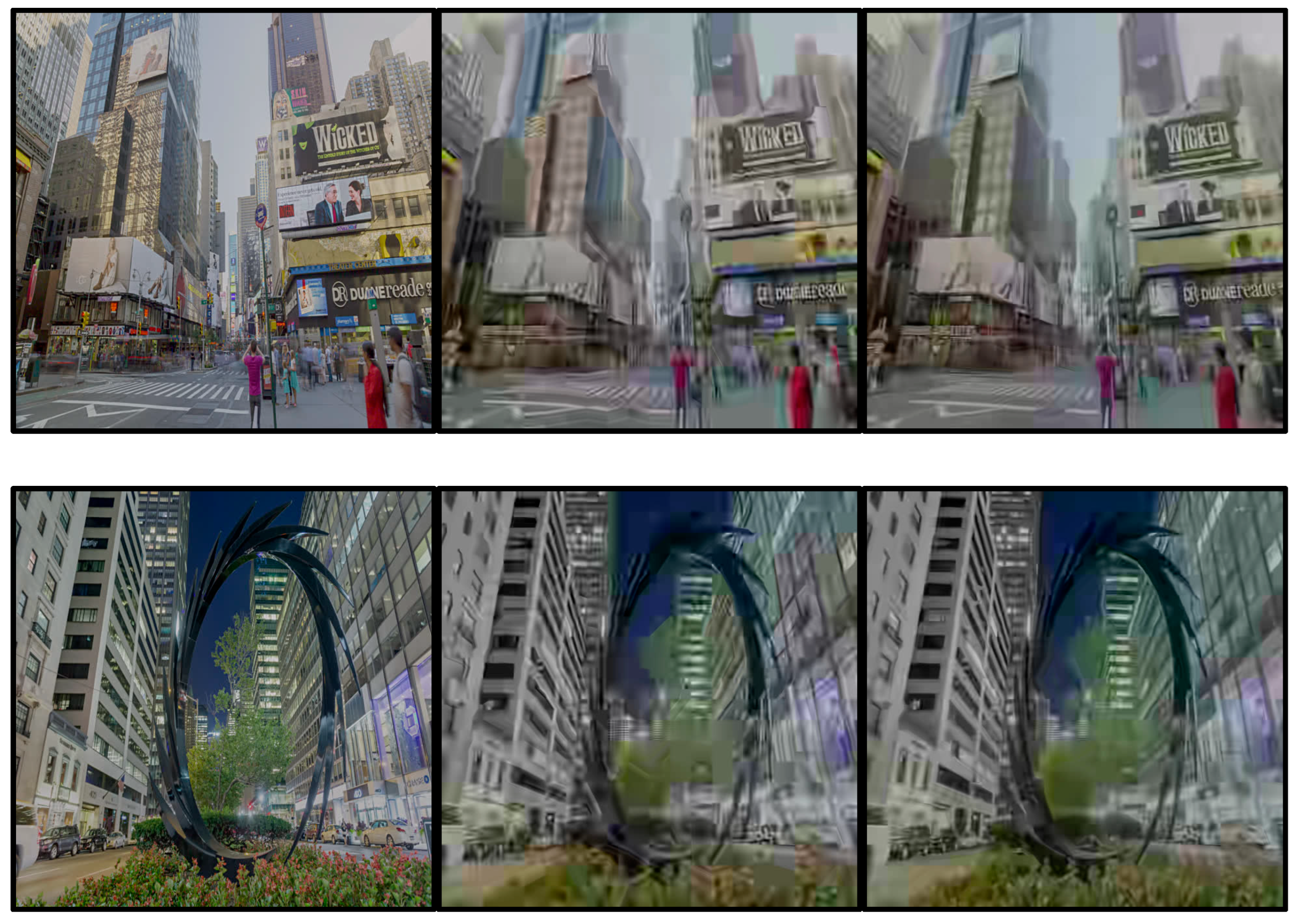

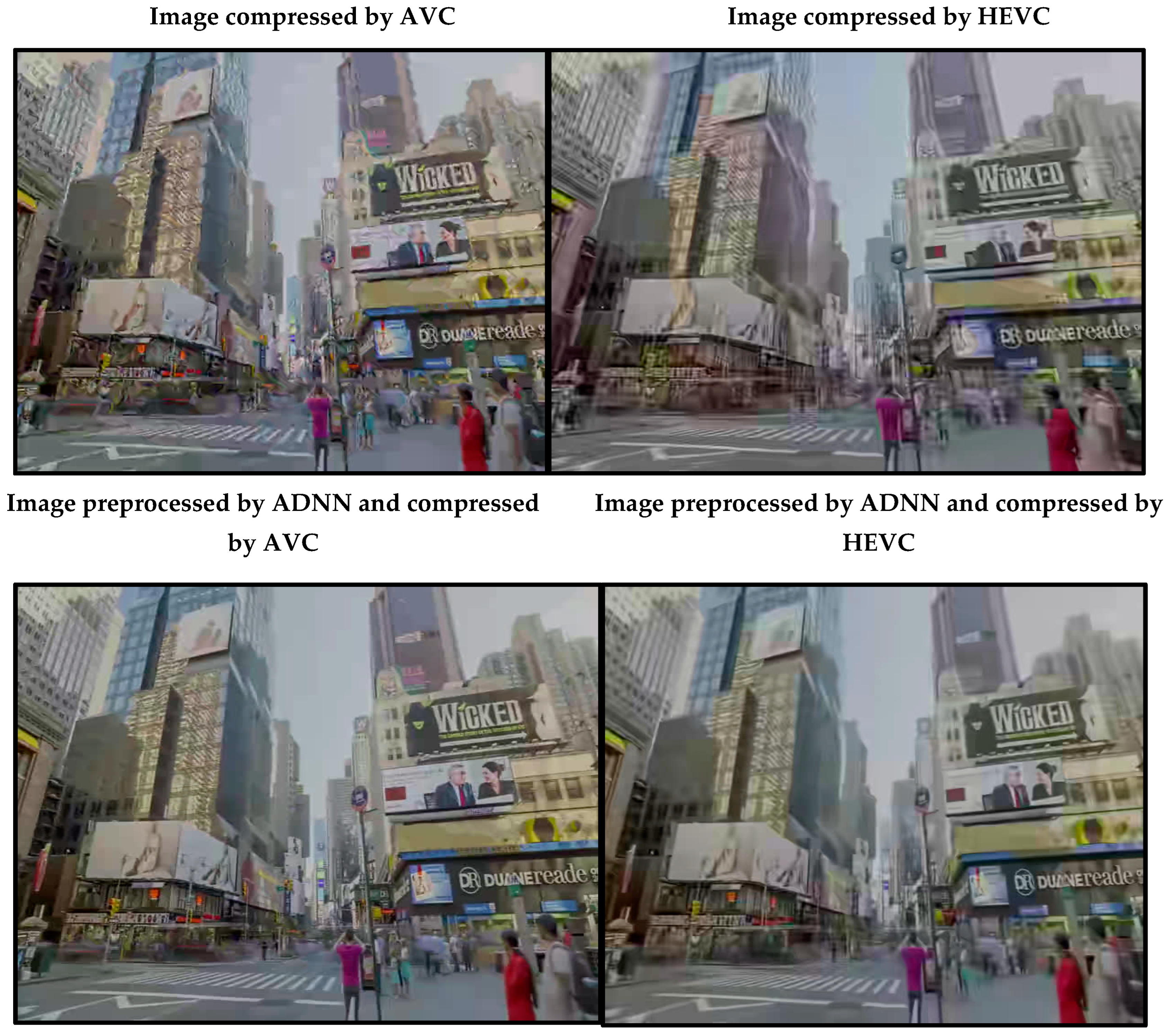

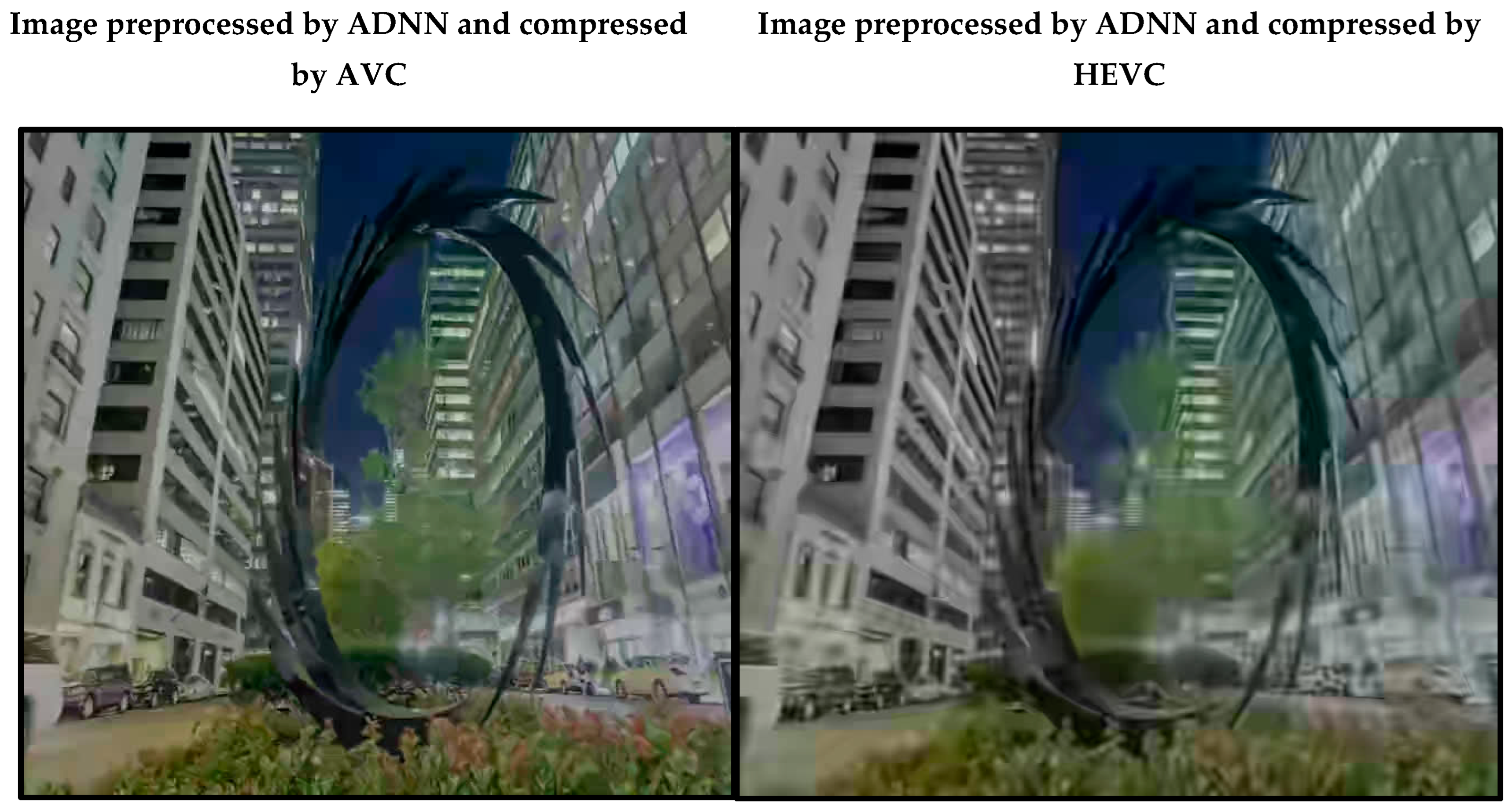

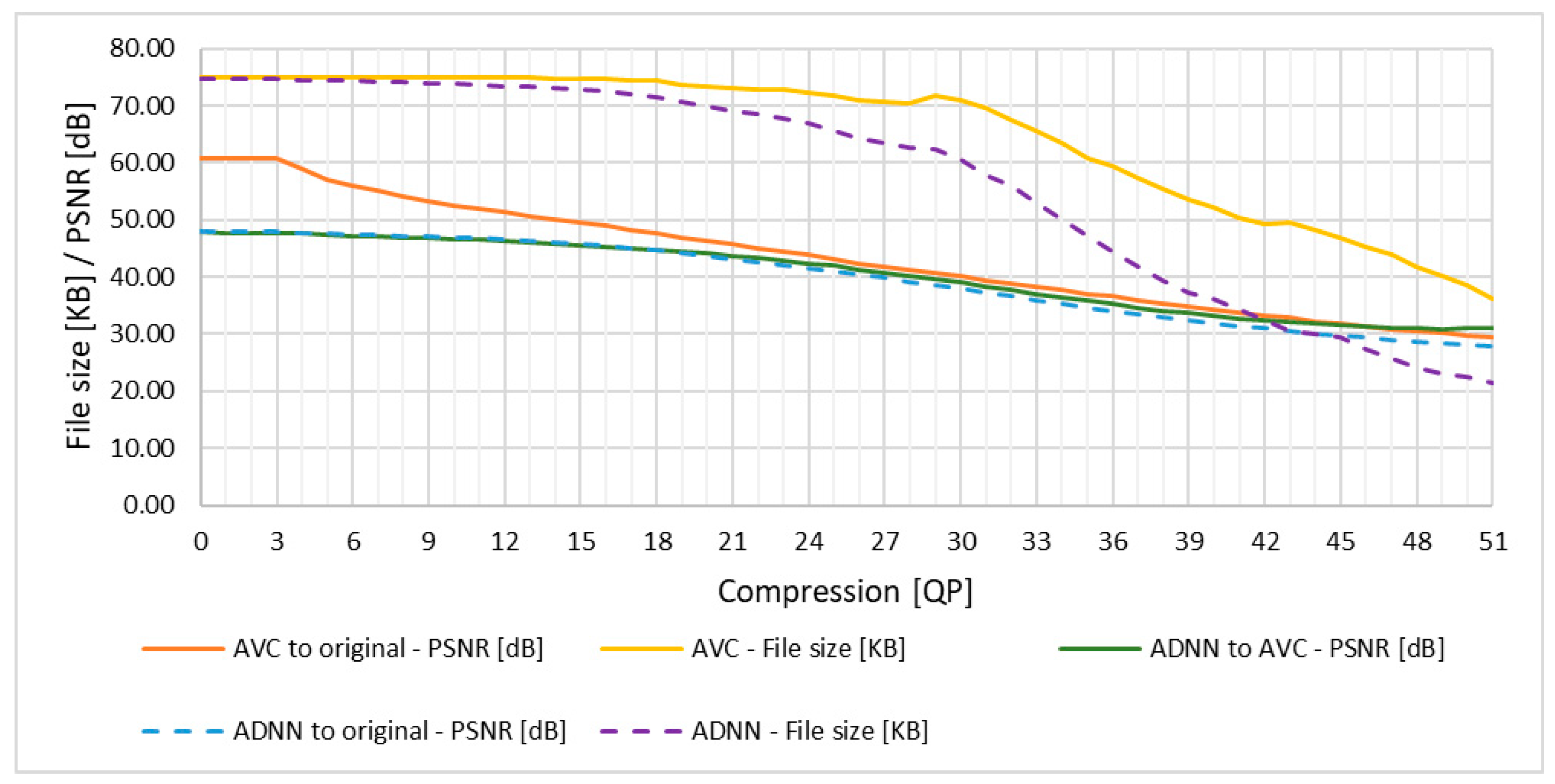

4.1. AVC Compression Enhancement Research Results

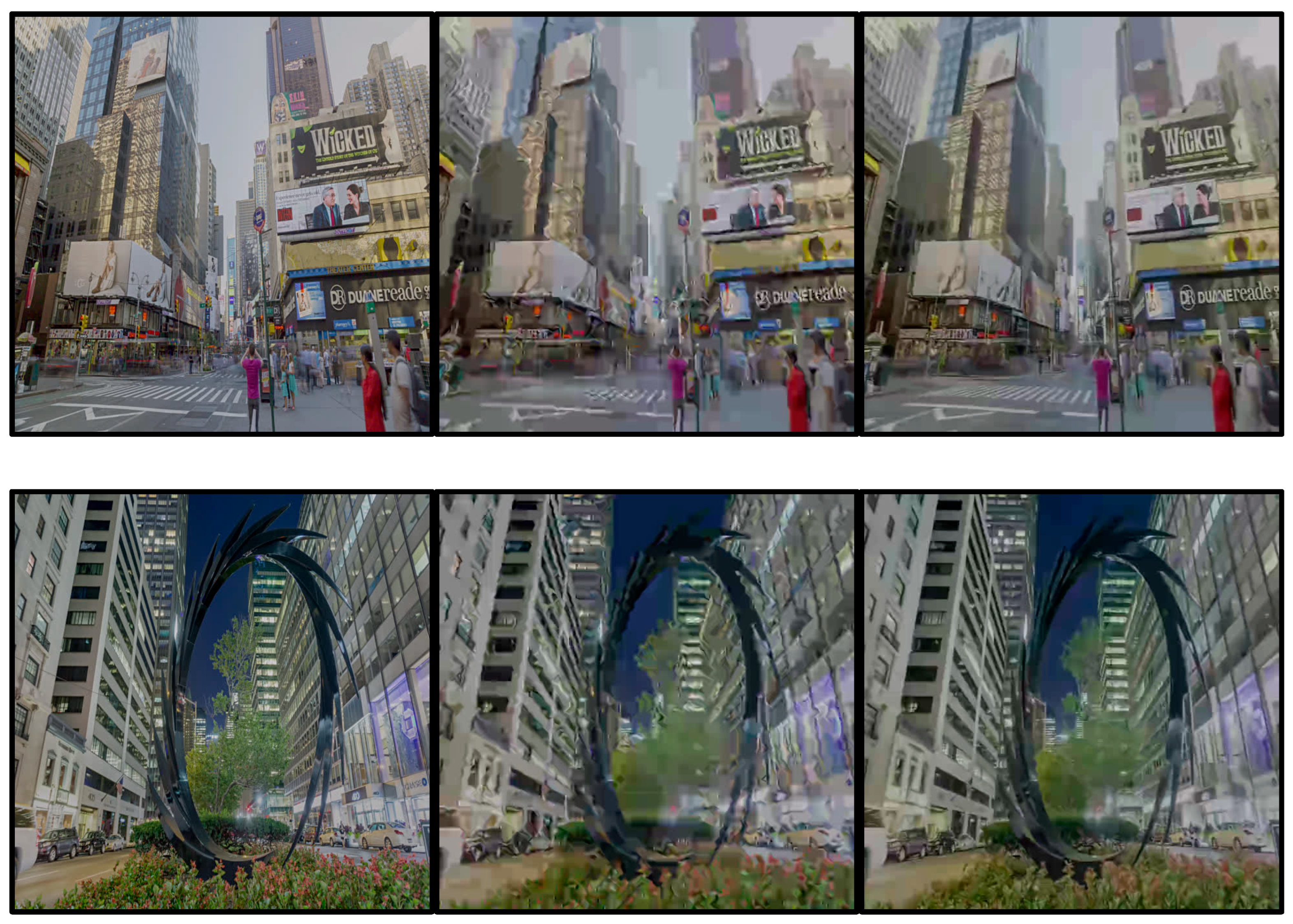

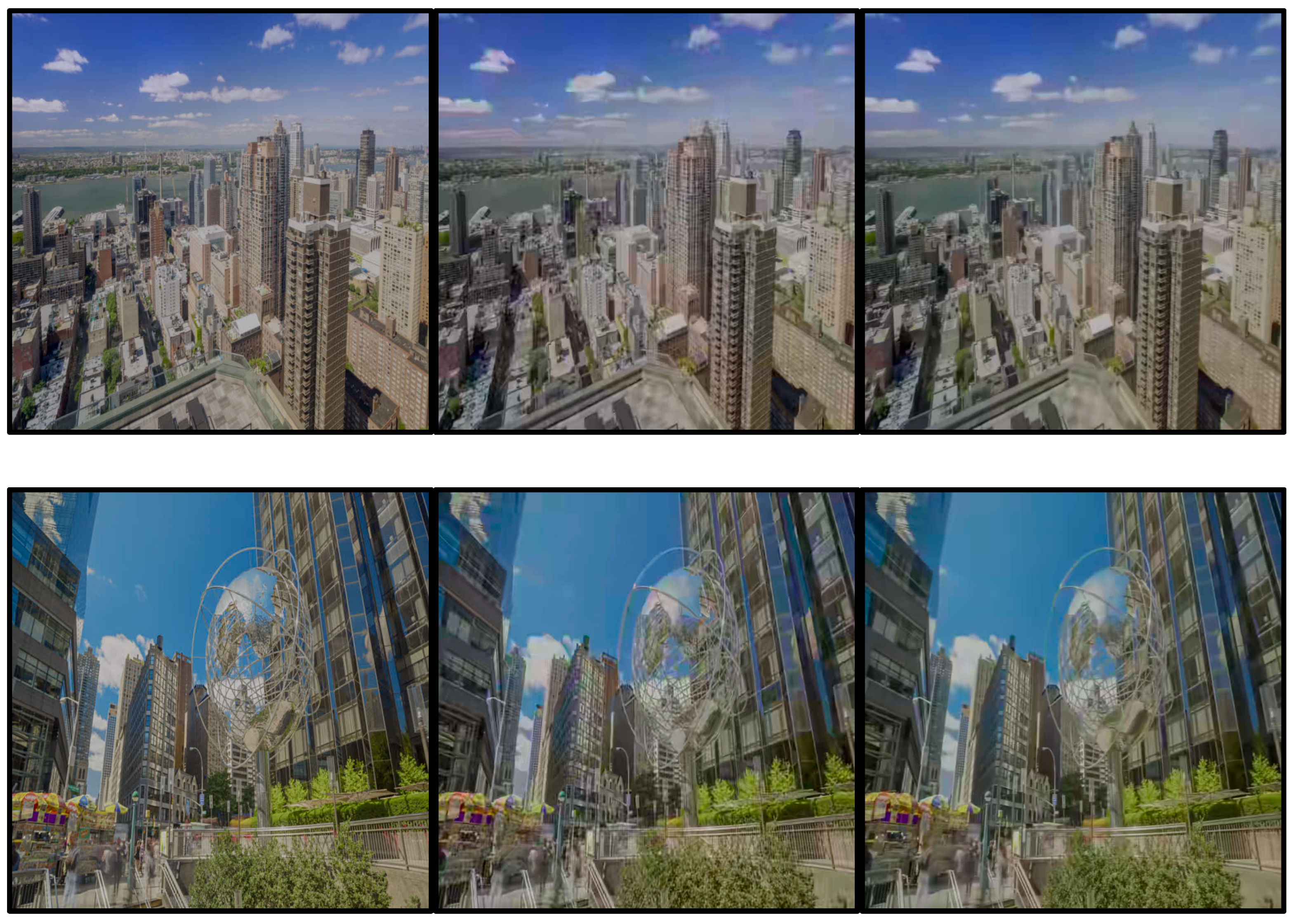

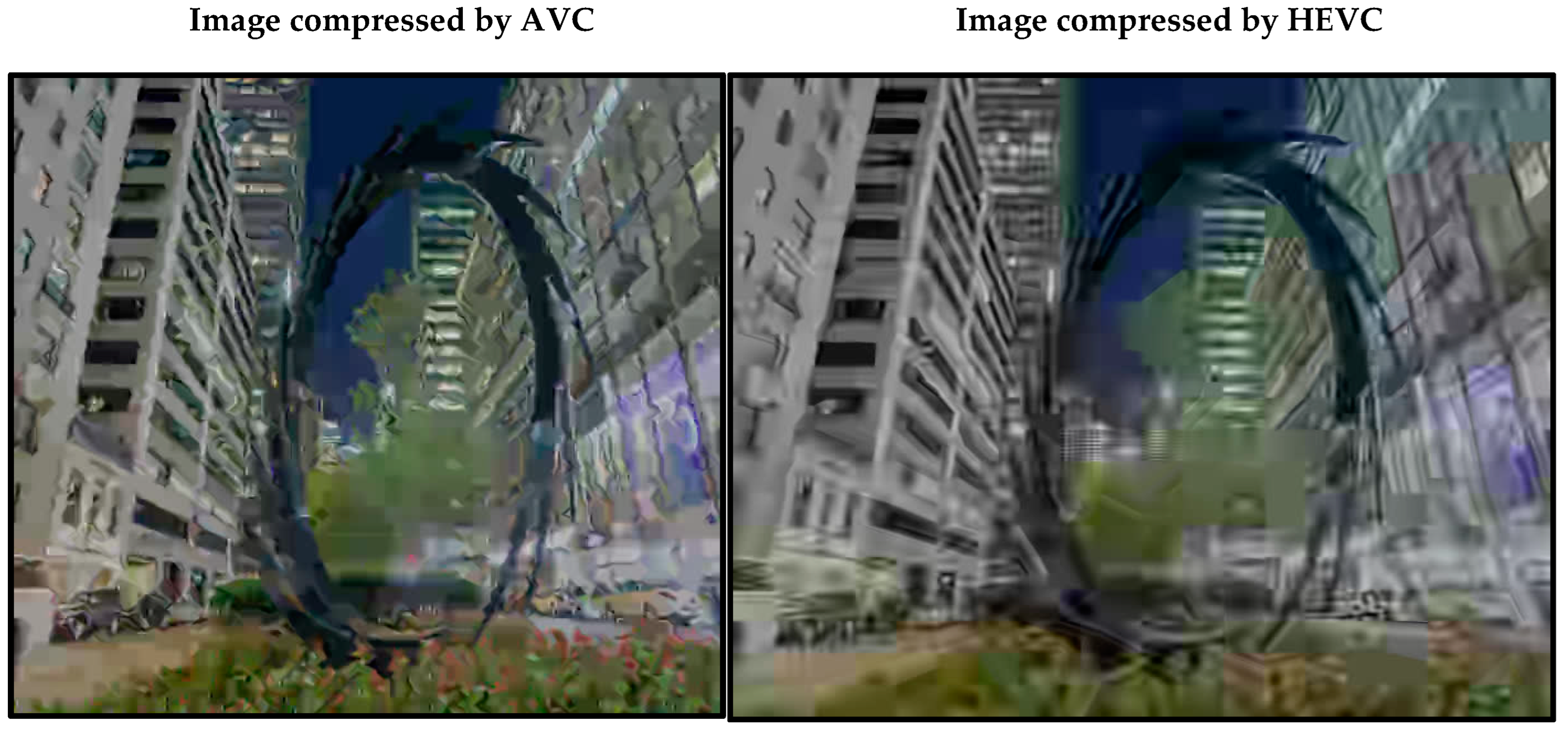

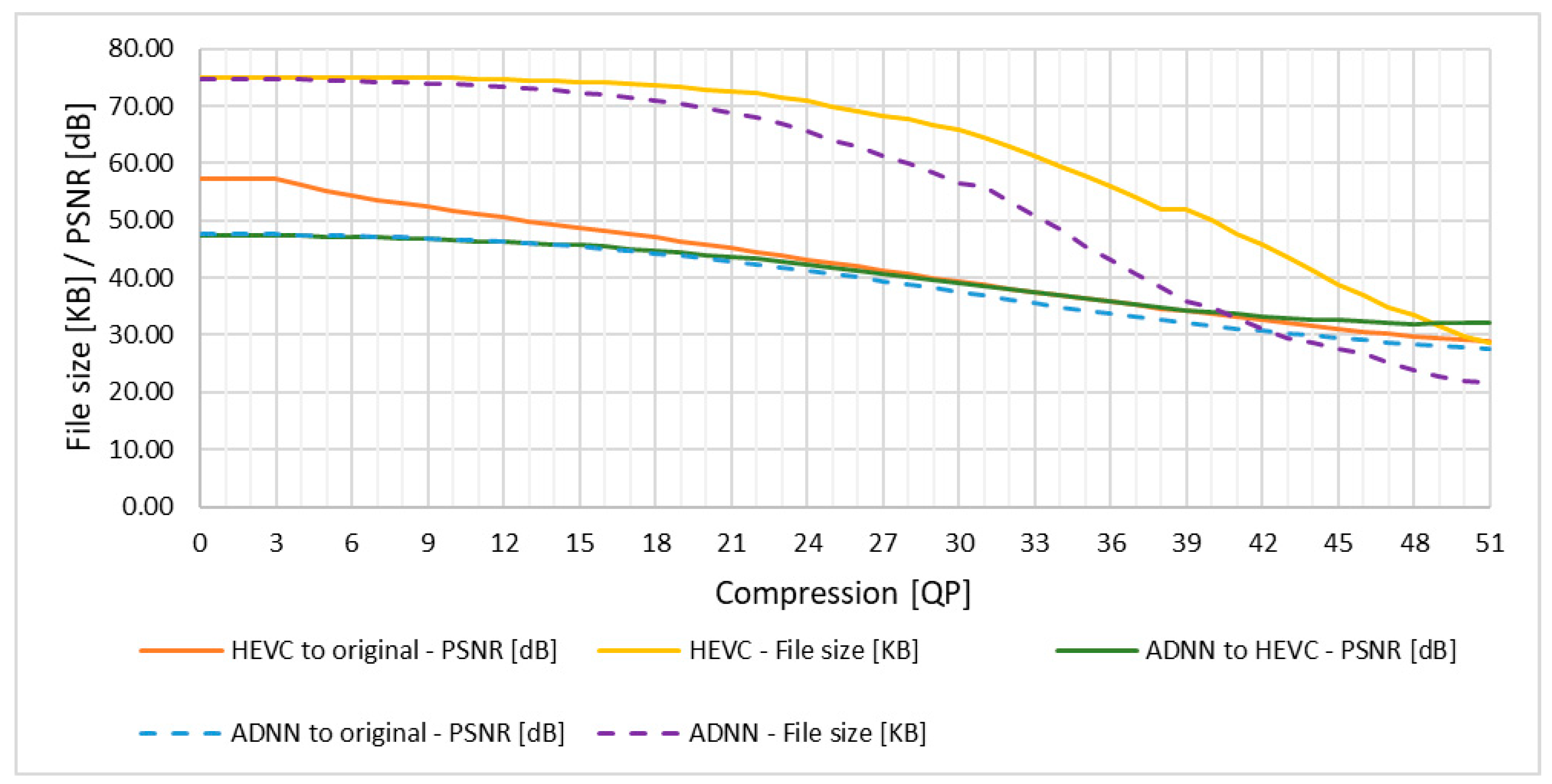

4.2. HEVC Compression Enhancement Research Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

References

- Schiopu, I.; Munteanu, A. Deep Learning Post-Filtering Using Multi-Head Attention and Multiresolution Feature Fusion for Image and Intra-Video Quality Enhancement. Sensors 2022, 22, 1353. [Google Scholar] [CrossRef]

- Jiang, X.; Song, T.; Zhu, D.; Katayama, T.; Wang, L. Quality-Oriented Perceptual HEVC Based on the Spatiotemporal Saliency Detection Model. Entropy 2019, 21, 165. [Google Scholar] [CrossRef] [PubMed]

- Wang, J.; Teng, G.; An, P. Video Super-Resolution Based on Generative Adversarial Network and Edge Enhancement. Electronics 2021, 10, 459. [Google Scholar] [CrossRef]

- Schiopu, I.; Munteanu, A. Attention Networks for the Quality Enhancement of Light Field Images. Sensors 2021, 21, 3246. [Google Scholar] [CrossRef] [PubMed]

- Si, L.; Wang, Z.; Xu, R.; Tan, C.; Liu, X.; Xu, J. Image Enhancement for Surveillance Video of Coal Mining Face Based on Single-Scale Retinex Algorithm Combined with Bilateral Filtering. Symmetry 2017, 9, 93. [Google Scholar] [CrossRef]

- Lopez-Vazquez, V.; Lopez-Guede, J.M.; Marini, S.; Fanelli, E.; Johnsen, E.; Aguzzi, J. Video Image Enhancement and Machine Learning Pipeline for Underwater Animal Detection and Classification at Cabled Observatories. Sensors 2020, 20, 726. [Google Scholar] [CrossRef]

- Caballero, J.; Ledig, C.; Aitken, A.; Acosta, A.; Totz, J.; Wang, Z.; Shi, W. Real-time video super-resolution with spatio-temporal networks and motion compensation. IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 21–26 July 2017, pp. 2848–2857. [CrossRef]

- Huang, Y.; Wang, W.; Wang, L. Video super-resolution via bidirectional recurrent convolutional networks. IEEE Transactions on Pattern Analysis and Machine Intelligence 2017, 40, 1015–1028. [Google Scholar] [CrossRef] [PubMed]

- Isobe, T.; Li, S.; Jia, X.; Yuan, S.; Slabaugh, G.; Xu, C.; Li, Y.; Wang, S.; Tian, Q. Video super-resolution with temporal group attention. IEEE Conference on Computer Vision and Pattern Recognition, Seattle, USA, 13–19 June 2020, pp. 7717–7727. [CrossRef]

- Kim, J.; Jung, Y.J. Multi-Stage Network for Event-Based Video Deblurring with Residual Hint Attention. Sensors 2023, 23, 2880. [Google Scholar] [CrossRef] [PubMed]

- Jiang, S.; Zhang, J.; Wang, W.; Wang, Y. Automatic Inspection of Bridge Bolts Using Unmanned Aerial Vision and Adaptive Scale Unification-Based Deep Learning. Remote Sens. 2023, 15, 328. [Google Scholar] [CrossRef]

- Li, J.; Gong, W.; Li, W. Combining Motion Compensation with Spatiotemporal Constraint for Video Deblurring. Sensors 2018, 18, 1774. [Google Scholar] [CrossRef]

- Mahdaoui, A.E.; Ouahabi, A.; Moulay, M.S. Image Denoising Using a Compressive Sensing Approach Based on Regularization Constraints. Sensors 2022, 22, 2199. [Google Scholar] [CrossRef]

- Lee, S.-Y.; Rhee, C.E. Motion Estimation-Assisted Denoising for an Efficient Combination with an HEVC Encoder. Sensors 2019, 19, 895. [Google Scholar] [CrossRef]

- Lee, M.S.; Park, S.W.; Kang, M.G. Denoising Algorithm for CFA Image Sensors Considering Inter-Channel Correlation. Sensors 2017, 17, 1236. [Google Scholar] [CrossRef]

- Chan, S.H.; Elgendy, O.A.; Wang, X. Images from Bits: Non-Iterative Image Reconstruction for Quanta Image Sensors. Sensors 2016, 16, 1961. [Google Scholar] [CrossRef]

- Min, X.; Zhai, G.; Gu, K.; Yang, X.; Guan, X. Objective Quality Evaluation of Dehazed Images. IEEE Transactions on Intelligent Transportation Systems 2019, 20, 2879–2892. [Google Scholar] [CrossRef]

- Min, X.; Zhai, G.; Gu, K.; Zhu, Y.; Zhou, J.; Guo, G.; Yang, X.; Guan, X.; Zhang, W. Quality Evaluation of Image Dehazing Methods Using Synthetic Hazy Images. IEEE Transactions on Multimedia 2019, 21, 2319–2333. [Google Scholar] [CrossRef]

- Li, Y.; Zhu, H.; He, L.; Wang, D.; Shi, J.; Wang, J. Video Super-Resolution with Regional Focus for Recurrent Network. Appl. Sci. 2023, 13, 526. [Google Scholar] [CrossRef]

- Shang, F.; Liu, H.; Ma, W.; Liu, Y.; Jiao, L.; Shang, F.; Wang, L.; Zhou, Z. Lightweight Super-Resolution with Self-Calibrated Convolution for Panoramic Videos. Sensors 2023, 23, 392. [Google Scholar] [CrossRef] [PubMed]

- Lee, Y.; Cho, S.; Jun, D. Video Super-Resolution Method Using Deformable Convolution-Based Alignment Network. Sensors 2022, 22, 8476. [Google Scholar] [CrossRef]

- Choi, J.; Oh, T.-H. Joint Video Super-Resolution and Frame Interpolation via Permutation Invariance. Sensors 2023, 23, 2529. [Google Scholar] [CrossRef] [PubMed]

- Tian, Y.; Zhang, Y.; Fu, Y.; Xu, C. TDAN: Temporally-deformable alignment network for video super-resolution. IEEE Conference on Computer Vision and Pattern Recognition, Seattle, USA, 13–19 June 2020, pp. 3357–3366. [CrossRef]

- Wang, X.; Chan, K.C.; Yu, K.; Dong, C.; Loy, C.C. EDVR: Video restoration with enhanced deformable convolutional networks. IEEE Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, USA, 16–17 June 2019, pp. 1954–1963. [CrossRef]

- Zhou, S.; Zhang, J.; Pan, J.; Xie, H.; Zuo, W.; Ren, J. Spatio-temporal filter adaptive network for video deblurring. IEEE International Conference on Computer Vision, Seoul, Korea (South), 27 October–2 November 2019, pp. 2482–2491. [CrossRef]

- Dong, C.; Deng, Y.; Loy, C.C.; Tang, X. Compression artifacts reduction by a deep convolutional network. IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015, pp. 576–584. [CrossRef]

- Xing, Q.; Xu, M.; Li, T.; Guan, Z. Early exit or not: Resource-efficient blind quality enhancement for compressed images. In European Conference on Computer Vision, Glasgow, Scotland, 23–28 August 2020, pp. 275–292. [CrossRef]

- Yang, R.; Xu, M.; Wang, Z. Decoder-side HEVC quality enhancement with scalable convolutional neural network. In 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017, pp. 817–822. [CrossRef]

- Kaczyński, M.; Piotrowski, Z. Lustrzany Kodek Video Bazujący na Głębokiej Sieci Neuronowej. Przegląd Telekomunikacyjny - Wiadomości Telekomunikacyjne 2024, 4, 395–398. [Google Scholar] [CrossRef]

- Chan, K.C.K.; Wang, X.; Yu, K.; Dong, C.; Loy, C.C. BasicVSR: The search for essential components in video super-resolution and beyond. IEEE Conference on Computer Vision and Pattern Recognition, Nashville, USA, 20–25 June 2021, pp. 4945–4954. [CrossRef]

- Chan, K.C.K.; Zhou, S.; Xu, X.; Loy, C.C. BasicVSR++: Improving video super-resolution with enhanced propagation and alignment. IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 18–24 June 2022, pp. 5962–5971. [CrossRef]

- Lin, J.; Huang, Y.; Wang, L. FDAN: Flow-guided deformable alignment network for video super-resolution. 2021. [CrossRef]

- Son, H.; Lee, J.; Lee, J.; Cho, S.; Lee, S. Recurrent video deblurring with blur-invariant motion estimation and pixel volumes. ACM Transactions on Graphics 2021, 40, 1–18. [Google Scholar] [CrossRef]

- Zhong, Z.; Gao, Y.; Zheng, Y.; Zheng, B. Efficient spatio-temporal recurrent neural network for video deblurring. In European Conference on Computer Vision, Glasgow, Scotland, 23–28 August 2020, pp. 191–207. [CrossRef]

- Bistroń, M.; Piotrowski, Z. Efficient Video Watermarking Algorithm Based on Convolutional Neural Networks with Entropy-Based Information Mapper. Entropy 2023, 25, 284. [Google Scholar] [CrossRef]

- Walczyna, T.; Piotrowski, Z. Fast Fake: Easy-to-Train Face Swap Model. Appl. Sci. 2024, 14, 2149. [Google Scholar] [CrossRef]

- Jiang, L.; Wang, N.; Dang, Q.; Liu, R.; Lai, B. PP-MSVSR: multi-stage video super-resolution, 2021. [CrossRef]

- Mallik, B.; Sheikh-Akbari, A.; Bagheri Zadeh, P.; Al-Majeed, S. HEVC Based Frame Interleaved Coding Technique for Stereo and Multi-View Videos. Information 2022, 13, 554. [Google Scholar] [CrossRef]

- Zhou, M.; Wei, X.; Kwong, S.; Jia, W.; Fang, B. Rate Control Method Based on Deep Reinforcement Learning for Dynamic Video Sequences in HEVC. IEEE Transactions on Multimedia 2021, 23, 1106–1121. [Google Scholar] [CrossRef]

- Jacovi, A.; Hadash, G.; Kermany, E.; Carmeli, B.; Lavi, O.; Kour, G.; Berant, J. Neural Network Gradient-Based Learning of Black-Box Function Interfaces. 2019. [CrossRef]

- Sarafian, E.; Sinay, M.; Louzoun, Y.; Agmon, N.; Kraus, S. Explicit Gradient Learning for Black-Box Optimization. Proceedings of the 37th International Conference on Machine Learning in Proceedings of Machine Learning Research 2020, 119, 8480–8490. [Google Scholar]

- Grathwohl, W.; Choi, D.; Wu, Y.; Roeder, G.; Duvenaud, D. Backpropagation through the Void: Optimizing Control Variates for Black-Box Gradient Estimation. 2017. [CrossRef]

- Kaczyński, M.; Piotrowski, Z. High-Quality Video Watermarking Based on Deep Neural Networks and Adjustable Subsquares Properties Algorithm. Sensors 2022, 22, 5376. [Google Scholar] [CrossRef]

- Kaczyński, M.; Piotrowski, Z.; Pietrow, D. High-Quality Video Watermarking Based on Deep Neural Networks for Video with HEVC Compression. Sensors 2022, 22, 7552. [Google Scholar] [CrossRef]

- Lu, G.; Ouyang, W.; Xu, D.; Zhang, X.; Cai, C.; Gao, Z. DVC: An End-to-end Deep Video Compression Framework. 2018. [CrossRef]

- Chen, M.; Goodall, T.; Patney, A.; Bovik, A. C. Learning to Compress Videos without Computing Motion. In Signal Processing: Image Communication, 2022. [CrossRef]

- Cui, W.; Zhang, T.; Zhang, S.; Jiang, F.; Zuo, W.; Wan, Z.; Zhao, D. Convolutional Neural Networks Based Intra Prediction for HEVC. In 2017 Data Compression Conference (DCC), USA, 2017, pp. 436–436. [CrossRef]

- Laude, T.; Ostermann, J. Deep Learning Based Intra Prediction Mode Decision for HEVC. In 2016 Picture Coding Symposium (PCS), Germany, 2016, pp. 1–5. [CrossRef]

- Shimizu, J.; Cheng, Z.; Sun, H.; Takeuchi, M.; Katto, J. HEVC Video Coding with Deep Learning Based Frame Interpolation. In 2020 IEEE 9th Global Conference on Consumer Electronics (GCCE), Japan, 2020, pp. 433–434. [CrossRef]

- Bao, W.; Lai, W.; Ma, C.; Zhang, X.; Gao, Z.; Yang, M. Depth Aware Video Frame Interpolation. IEEE Conference on Computer Vision and Pattern Recognition, 15–20 June 2019. [CrossRef]

- Jiang, H.; Sun, D.; Jampani, V.; Yang, M.; Miller, E.; Kautz, J. Super SloMo: High Quality Estimation of Multiple Intermediate Frames for Video Interpolation. IEEE CVPR 2018. [CrossRef]

- Çetinkaya, E.; Amirpour, H.; Ghanbari, M.; Timmerer, C. CTU Depth Decision Algorithms for HEVC: A Survey. Signal Processing: Image Communication 2021, 99, 116442. [Google Scholar] [CrossRef]

- Zhai, G.; Min, X. Perceptual image quality assessment: a survey. Science China Information Sciences 2020, 63. [Google Scholar] [CrossRef]

- Wang, Z.; Bovik, A.C.; Sheikh, H.R. Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 2004, 13, 600–612. [Google Scholar] [CrossRef] [PubMed]

- Min, X.; Gu, K.; Zhai, G.; Yang, X.; Zhang, W.; Le Callet, P.; Chen, C.W. Screen content quality assessment: Overview, benchmark, and beyond. ACM Computing Surveys 2021, 54, 1–36. [Google Scholar] [CrossRef]

- Min, X.; Zhai, G.; Zhou, J.; Farias, M. C. Q.; Bovik, A. C. Study of Subjective and Objective Quality Assessment of Audio-Visual Signals. IEEE Transactions on Image Processing 2020, 29, 6054–6068. [Google Scholar] [CrossRef]

- Sheikh, H. R.; Bovik, A. C. Image information and visual quality. IEEE Transactions on Image Processing 2006, 15, 430–444. [Google Scholar] [CrossRef]

- Bjontegaard, G. Improvements of the BD-PSNR Model. In ITU-T SG 16, VCEG-AI11. 2008, pp. 1–2. Available online: https://www.itu.int/wftp3/av-arch/video-site/1707_Tor/VCEG-BD04-v1.doc (accessed on 11 November 2024).

- Senzaki, K. BD-PSNR/Rate Computation Tool for Five Data Points. In ITU-T SG 16 WP 3 and ISO/IEC JTC 1/SC 29/WG 11, JCTVC-B055. 2010, pp. 1–3. Available online: https://www.itu.int/wftp3/av-arch/JCTVC-site/2010_07_B_Geneva/JCTVC-B055.doc (accessed on 11 November 2024).

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; Berg, A. C.; Fei-Fei, L. ImageNet Large Scale Visual Recognition Challenge. International Journal of Computer Vision 2015, 115, 211–252. [Google Scholar] [CrossRef]

- LG: New York HDR. Available online: https://4kmedia.org/lg-new-york-hdr-uhd-4k-demo/ (accessed on 11 November 2024).

- Khoreva, A.; Rohrbach, A.; Schiele, B. Video object segmentation with language referring expressions. In Asian Conference on Computer Vision. 2018. [Google Scholar] [CrossRef]

- Nah, S.; Kim, T.H.; Lee, K.M. Deep multi-scale convolutional neural network for dynamic scene deblurring. In IEEE Conference on Computer Vision and Pattern Recognition. 2017. [Google Scholar] [CrossRef]

- Nah, S.; Baik, S.; Hong, S.; Moon, G.; Son, S.; Timofte, R.; Lee, K.M. NTIRE 2019 challenge on video deblurring and super-resolution: Dataset and study. In IEEE Conference on Computer Vision and Pattern Recognition Workshops. 2019. [Google Scholar]

- Xue, T.; Chen, B.; Wu, J.; Wei, D.; Freeman, W. Video enhancement with task-oriented flow. International Journal of Computer Vision. 2019. [Google Scholar] [CrossRef]

| Layer Number | Layer Type |
|---|---|
| 1 | DepthwiseConv2D(Input) |
| 2 | BatchNormalization(1) |
| 3 | Conv2D(2) |
| 4 | BatchNormalization(3) |

| Layer Number | Layer Type |
|---|---|
| 1 | DepthwiseConv2D(Input) |
| 2 | BatchNormalization(1) |
| 3 | Conv2DTranspose(2) |
| 4 | BatchNormalization(3) |

| Layer Number | Layer Type | Parameters |
|---|---|---|
| 1 | CBL(Input image) | activation function: GELU; filter number: 156 (this set of properties is referred to below as Basic Parameters); kernel size: 3 × 3 |
| 2 | CBL(1) | Basic Parameters; kernel size: 4 × 4 |
| 3 | Multiply(2, Input QP value) | - |
| 4 | Concatenate((2, 3), axis = 3) | - |
| 5 | BatchNormalization(4) | - |
| 6 | CBL(5) | Basic Parameters; kernel size: 4 × 4 |
| 7 | Concatenate((1, 6), axis = 3) | - |
| 8 | BatchNormalization(7) | - |
| 9 | TCBL(8) | Basic Parameters; kernel size: 4 × 4 |
| 10 | TCBL(8) | Basic Parameters; kernel size: 4 × 4 |
| 11 | Concatenate((9, 10), axis = 3) | - |
| 12 | BatchNormalization(11) | - |
| 13 | CBL(12) | Basic Parameters; kernel size: 4 × 4 |
| 14 | CBL(12) | Basic Parameters; kernel size: 4 × 4 |
| 15 | Concatenate((13, 14), axis = 3) | - |
| 16 | BatchNormalization(15) | - |
| 17 | CBL(16) | Basic Parameters; kernel size: 4 × 4 |
| 18 | CBL(17) | Basic Parameters; kernel size: 3 × 3 |
| 19 | Conv2D(18) | activation function: LeakyReLU; kernel size: 1 |
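The layer table above can be read as a small directed graph, and tracing the channel width through it is a quick way to check the wiring. The sketch below is only an illustration under several assumptions that the source does not state: that every CBL and TCBL block outputs 156 channels (the "filter number"), that the QP input broadcasts in `Multiply` without changing the channel count, and that the final `Conv2D` produces 3 channels to match the (128, 128, 3) input. The names `LAYERS` and `trace_channels` are hypothetical.

```python
# Layer table transcribed from the source: (layer number, layer type, inputs).
# Input 0 stands for the input image; "qp" stands for the input QP value.
LAYERS = [
    (1, "CBL", [0]),
    (2, "CBL", [1]),
    (3, "Multiply", [2, "qp"]),
    (4, "Concatenate", [2, 3]),
    (5, "BatchNormalization", [4]),
    (6, "CBL", [5]),
    (7, "Concatenate", [1, 6]),
    (8, "BatchNormalization", [7]),
    (9, "TCBL", [8]),
    (10, "TCBL", [8]),
    (11, "Concatenate", [9, 10]),
    (12, "BatchNormalization", [11]),
    (13, "CBL", [12]),
    (14, "CBL", [12]),
    (15, "Concatenate", [13, 14]),
    (16, "BatchNormalization", [15]),
    (17, "CBL", [16]),
    (18, "CBL", [17]),
    (19, "Conv2D", [18]),
]

FILTERS = 156        # "filter number: 156" from the Basic Parameters
INPUT_CHANNELS = 3   # last axis of the (128, 128, 3) input tensor
OUTPUT_CHANNELS = 3  # ASSUMPTION: the final Conv2D restores a 3-channel frame


def trace_channels(layers):
    """Return {layer number: channel count}, checking that inputs exist."""
    channels = {0: INPUT_CHANNELS}
    for number, kind, inputs in layers:
        tensor_inputs = [i for i in inputs if i != "qp"]
        for i in tensor_inputs:
            if i not in channels:
                raise ValueError(f"layer {number} uses undefined layer {i}")
        if kind in ("CBL", "TCBL"):
            channels[number] = FILTERS  # ASSUMPTION: block output width
        elif kind == "Concatenate":
            # Concatenation along the channel axis (axis = 3) sums the widths.
            channels[number] = sum(channels[i] for i in tensor_inputs)
        elif kind in ("Multiply", "BatchNormalization"):
            # Element-wise operations keep the width of their tensor input.
            channels[number] = channels[tensor_inputs[0]]
        elif kind == "Conv2D":
            channels[number] = OUTPUT_CHANNELS
        else:
            raise ValueError(f"unknown layer type: {kind}")
    return channels


CHANNELS = trace_channels(LAYERS)
print(CHANNELS[4], CHANNELS[18], CHANNELS[19])
```

Under these assumptions every `Concatenate` layer yields 312 channels, which the following block reduces back to 156.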

| Input Tensor Size | Number of Parameters | Size on Disk | Processing Time for a Single Tensor [ms] |
|---|---|---|---|
| (128, 128, 3) | 5,342,421 | 62.80 MB | 52.33 |

| Frame # | Type | QP 0 | QP 16 | QP 23 | QP 28 | QP 31 | QP 41 | QP 51 |
|---|---|---|---|---|---|---|---|---|
| 200, 69.30 KB¹ | Proposed method to AVC | PSNR²: 48.36, SSIM³: 0.999 | PSNR: 45.93, SSIM: 0.993 | PSNR: 43.71, SSIM: 0.988 | PSNR: 41.07, SSIM: 0.981 | PSNR: 39.03, SSIM: 0.971 | PSNR: 33.83, SSIM: 0.922 | PSNR: 32.79, SSIM: 0.909 |
| | Proposed method to original image | PSNR: 48.36, SSIM: 0.999, 68.91 KB⁴ | PSNR: 45.88, SSIM: 0.991, 66.30 KB | PSNR: 42.87, SSIM: 0.976, 61.37 KB | PSNR: 40.11, SSIM: 0.962, 58.45 KB | PSNR: 38.08, SSIM: 0.950, 51.33 KB | PSNR: 32.36, SSIM: 0.881, 27.93 KB | PSNR: 29.03, SSIM: 0.801, 18.96 KB |
| | AVC to original image | PSNR: 60.76, SSIM: 0.999, 69.30 KB | PSNR: 49.30, SSIM: 0.994, 68.77 KB | PSNR: 45.07, SSIM: 0.983, 66.27 KB | PSNR: 42.09, SSIM: 0.973, 65.78 KB | PSNR: 40.27, SSIM: 0.965, 62.60 KB | PSNR: 34.56, SSIM: 0.925, 44.32 KB | PSNR: 30.60, SSIM: 0.852, 27.94 KB |
| 400, 77.34 KB | Proposed method to AVC | PSNR: 47.97, SSIM: 0.999 | PSNR: 45.17, SSIM: 0.993 | PSNR: 42.47, SSIM: 0.983 | PSNR: 39.94, SSIM: 0.972 | PSNR: 38.09, SSIM: 0.962 | PSNR: 32.30, SSIM: 0.887 | PSNR: 31.30, SSIM: 0.843 |
| | Proposed method to original image | PSNR: 47.97, SSIM: 0.999, 77.02 KB | PSNR: 45.25, SSIM: 0.991, 74.90 KB | PSNR: 41.76, SSIM: 0.973, 70.24 KB | PSNR: 38.74, SSIM: 0.949, 63.90 KB | PSNR: 36.86, SSIM: 0.933, 60.19 KB | PSNR: 30.88, SSIM: 0.827, 36.36 KB | PSNR: 27.64, SSIM: 0.704, 20.43 KB |
| | AVC to original image | PSNR: 60.87, SSIM: 0.999, 77.34 KB | PSNR: 48.66, SSIM: 0.995, 77.13 KB | PSNR: 44.00, SSIM: 0.983, 75.45 KB | PSNR: 40.72, SSIM: 0.967, 72.19 KB | PSNR: 39.02, SSIM: 0.958, 72.22 KB | PSNR: 33.31, SSIM: 0.903, 52.13 KB | PSNR: 29.13, SSIM: 0.802, 37.74 KB |
| 600, 74.51 KB | Proposed method to AVC | PSNR: 48.14, SSIM: 0.999 | PSNR: 45.29, SSIM: 0.992 | PSNR: 42.77, SSIM: 0.985 | PSNR: 40.22, SSIM: 0.975 | PSNR: 38.39, SSIM: 0.966 | PSNR: 32.60, SSIM: 0.909 | PSNR: 30.43, SSIM: 0.859 |
| | Proposed method to original image | PSNR: 48.14, SSIM: 0.999, 74.12 KB | PSNR: 45.27, SSIM: 0.990, 71.81 KB | PSNR: 41.88, SSIM: 0.972, 67.26 KB | PSNR: 39.00, SSIM: 0.951, 61.99 KB | PSNR: 37.16, SSIM: 0.937, 57.69 KB | PSNR: 31.24, SSIM: 0.855, 36.96 KB | PSNR: 27.43, SSIM: 0.744, 23.29 KB |
| | AVC to original image | PSNR: 61.10, SSIM: 0.999, 74.51 KB | PSNR: 48.58, SSIM: 0.993, 74.16 KB | PSNR: 44.03, SSIM: 0.981, 72.28 KB | PSNR: 40.96, SSIM: 0.967, 69.57 KB | PSNR: 39.27, SSIM: 0.958, 68.94 KB | PSNR: 33.59, SSIM: 0.912, 50.47 KB | PSNR: 29.14, SSIM: 0.830, 39.40 KB |
| 800, 79.19 KB | Proposed method to AVC | PSNR: 47.67, SSIM: 0.999 | PSNR: 45.12, SSIM: 0.994 | PSNR: 42.43, SSIM: 0.985 | PSNR: 39.93, SSIM: 0.972 | PSNR: 38.13, SSIM: 0.961 | PSNR: 32.39, SSIM: 0.904 | PSNR: 30.12, SSIM: 0.850 |
| | Proposed method to original image | PSNR: 47.67, SSIM: 0.999, 78.93 KB | PSNR: 45.33, SSIM: 0.993, 76.91 KB | PSNR: 41.97, SSIM: 0.976, 72.63 KB | PSNR: 38.89, SSIM: 0.952, 66.07 KB | PSNR: 37.06, SSIM: 0.936, 62.59 KB | PSNR: 31.10, SSIM: 0.851, 36.17 KB | PSNR: 27.29, SSIM: 0.737, 22.84 KB |
| | AVC to original image | PSNR: 60.86, SSIM: 0.999, 79.19 KB | PSNR: 49.07, SSIM: 0.996, 78.90 KB | PSNR: 44.39, SSIM: 0.985, 77.18 KB | PSNR: 41.03, SSIM: 0.971, 74.24 KB | PSNR: 39.27, SSIM: 0.962, 74.27 KB | PSNR: 33.36, SSIM: 0.910, 54.56 KB | PSNR: 28.87, SSIM: 0.823, 39.38 KB |
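The source does not spell out how the PSNR figures in these tables were computed. As a point of reference, the sketch below implements the standard definition, PSNR = 10·log10(MAX² / MSE), on flat lists of pixel values. The function name `psnr` and the default peak value of 255 (8-bit samples) are assumptions made for illustration, not details taken from the paper.

```python
import math


def psnr(reference, distorted, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists.

    ASSUMPTION: 8-bit samples (peak value 255); the source does not state
    the bit depth or colour space used for its measurements.
    """
    if len(reference) != len(distorted) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all samples.
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10.0 * math.log10(max_value ** 2 / mse)


# Two samples with errors of 2 and 4 grey levels give MSE = 10.
print(round(psnr([10, 20], [12, 16]), 2))
```

On this scale a uniform error of one grey level corresponds to about 48.13 dB, which gives a rough feel for the 47 to 48 dB values reported for the proposed method at QP 0.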

| Dataset | Type | QP 1 | QP 16 | QP 23 | QP 28 | QP 31 | QP 41 | QP 51 |
|---|---|---|---|---|---|---|---|---|
| DAVIS [62] | ADNN to AVC | PSNR¹: 47.77, SSIM²: 0.998 | PSNR: 45.45, SSIM: 0.993 | PSNR: 42.71, SSIM: 0.986 | PSNR: 39.93, SSIM: 0.975 | PSNR: 38.02, SSIM: 0.964 | PSNR: 33.20, SSIM: 0.914 | PSNR: 30.42, SSIM: 0.872 |
| | ADNN to original image | PSNR: 47.90, SSIM: 0.998 | PSNR: 45.48, SSIM: 0.991 | PSNR: 42.14, SSIM: 0.977 | PSNR: 38.99, SSIM: 0.958 | PSNR: 37.00, SSIM: 0.943 | PSNR: 31.60, SSIM: 0.868 | PSNR: 27.75, SSIM: 0.778 |
| | AVC to original image | PSNR: 61.30, SSIM: 0.999 | PSNR: 48.98, SSIM: 0.991 | PSNR: 44.23, SSIM: 0.984 | PSNR: 40.93, SSIM: 0.972 | PSNR: 39.04, SSIM: 0.964 | PSNR: 33.45, SSIM: 0.918 | PSNR: 29.42, SSIM: 0.853 |
| GoPro [63] | ADNN to AVC | PSNR: 46.56, SSIM: 0.998 | PSNR: 44.49, SSIM: 0.993 | PSNR: 41.84, SSIM: 0.985 | PSNR: 39.18, SSIM: 0.973 | PSNR: 37.36, SSIM: 0.961 | PSNR: 31.33, SSIM: 0.883 | PSNR: 27.90, SSIM: 0.792 |
| | ADNN to original image | PSNR: 46.68, SSIM: 0.998 | PSNR: 44.77, SSIM: 0.993 | PSNR: 41.59, SSIM: 0.979 | PSNR: 38.40, SSIM: 0.956 | PSNR: 36.46, SSIM: 0.938 | PSNR: 30.14, SSIM: 0.832 | PSNR: 25.82, SSIM: 0.702 |
| | AVC to original image | PSNR: 61.06, SSIM: 0.999 | PSNR: 48.88, SSIM: 0.995 | PSNR: 43.98, SSIM: 0.986 | PSNR: 40.44, SSIM: 0.973 | PSNR: 38.56, SSIM: 0.965 | PSNR: 32.42, SSIM: 0.916 | PSNR: 27.74, SSIM: 0.845 |
| REDS [64] | ADNN to AVC | PSNR: 46.74, SSIM: 0.998 | PSNR: 44.35, SSIM: 0.992 | PSNR: 41.40, SSIM: 0.982 | PSNR: 38.88, SSIM: 0.968 | PSNR: 37.29, SSIM: 0.957 | PSNR: 31.42, SSIM: 0.888 | PSNR: 27.59, SSIM: 0.787 |
| | ADNN to original image | PSNR: 46.87, SSIM: 0.998 | PSNR: 44.58, SSIM: 0.992 | PSNR: 40.93, SSIM: 0.974 | PSNR: 37.75, SSIM: 0.945 | PSNR: 36.04, SSIM: 0.925 | PSNR: 30.15, SSIM: 0.825 | PSNR: 25.65, SSIM: 0.693 |
| | AVC to original image | PSNR: 61.06, SSIM: 0.999 | PSNR: 48.39, SSIM: 0.994 | PSNR: 43.21, SSIM: 0.983 | PSNR: 39.71, SSIM: 0.966 | PSNR: 37.98, SSIM: 0.956 | PSNR: 32.23, SSIM: 0.904 | PSNR: 27.55, SSIM: 0.838 |
| Vimeo-90K-T [65] | ADNN to AVC | PSNR: 48.57, SSIM: 0.998 | PSNR: 46.58, SSIM: 0.994 | PSNR: 44.52, SSIM: 0.989 | PSNR: 42.25, SSIM: 0.981 | PSNR: 40.65, SSIM: 0.974 | PSNR: 34.87, SSIM: 0.930 | PSNR: 30.33, SSIM: 0.871 |
| | ADNN to original image | PSNR: 48.70, SSIM: 0.998 | PSNR: 46.81, SSIM: 0.994 | PSNR: 43.98, SSIM: 0.985 | PSNR: 41.18, SSIM: 0.970 | PSNR: 39.52, SSIM: 0.959 | PSNR: 33.77, SSIM: 0.897 | PSNR: 28.72, SSIM: 0.811 |
| | AVC to original image | PSNR: 61.28, SSIM: 0.999 | PSNR: 50.50, SSIM: 0.996 | PSNR: 46.37, SSIM: 0.990 | PSNR: 43.28, SSIM: 0.981 | PSNR: 41.71, SSIM: 0.976 | PSNR: 36.26, SSIM: 0.946 | PSNR: 31.11, SSIM: 0.901 |

| QP | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| File size¹ AVC | 75.09 | 75.05 | 75.04 | 75.04 | 75.04 | 74.98 | 74.96 | 74.96 | 74.90 | 74.95 | 74.97 | 74.86 | 74.87 |
| File size ADNN | 74.75 | 74.66 | 74.63 | 74.61 | 74.56 | 74.42 | 74.34 | 74.26 | 74.07 | 74.01 | 73.88 | 73.68 | 73.48 |
| PSNR² AVC | 60.90 | 60.90 | 60.90 | 60.90 | 58.80 | 57.15 | 56.00 | 55.11 | 54.14 | 53.29 | 52.58 | 51.91 | 51.34 |
| PSNR ADNN | 48.04 | 47.87 | 47.87 | 47.86 | 47.75 | 47.63 | 47.51 | 47.42 | 47.23 | 47.12 | 46.93 | 46.74 | 46.56 |

| QP | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 | 24 | 25 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| File size AVC | 74.88 | 74.83 | 74.74 | 74.74 | 74.55 | 74.36 | 73.70 | 73.30 | 73.03 | 72.73 | 72.80 | 72.39 | 71.63 |
| File size ADNN | 73.29 | 73.08 | 72.75 | 72.48 | 71.97 | 71.48 | 70.62 | 69.83 | 69.11 | 68.54 | 67.88 | 66.84 | 65.50 |
| PSNR AVC | 50.70 | 50.11 | 49.51 | 48.90 | 48.28 | 47.71 | 46.91 | 46.30 | 45.69 | 45.08 | 44.37 | 43.81 | 43.10 |
| PSNR ADNN | 46.33 | 46.05 | 45.78 | 45.43 | 45.07 | 44.71 | 44.07 | 43.61 | 43.19 | 42.66 | 42.12 | 41.62 | 41.00 |

| QP | 26 | 27 | 28 | 29 | 30 | 31 | 32 | 33 | 34 | 35 | 36 | 37 | 38 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| File size AVC | 71.00 | 70.56 | 70.45 | 71.74 | 70.88 | 69.51 | 67.37 | 65.56 | 63.49 | 60.88 | 59.48 | 57.29 | 55.42 |
| File size ADNN | 64.25 | 63.33 | 62.60 | 62.40 | 60.61 | 57.95 | 56.10 | 52.99 | 50.13 | 47.06 | 44.43 | 41.86 | 39.30 |
| PSNR AVC | 42.42 | 41.77 | 41.20 | 40.71 | 40.13 | 39.46 | 38.75 | 38.31 | 37.75 | 36.99 | 36.60 | 35.93 | 35.40 |
| PSNR ADNN | 40.38 | 39.77 | 39.19 | 38.61 | 38.03 | 37.29 | 36.58 | 35.98 | 35.38 | 34.65 | 34.12 | 33.49 | 32.94 |

| QP | 39 | 40 | 41 | 42 | 43 | 44 | 45 | 46 | 47 | 48 | 49 | 50 | 51 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| File size AVC | 53.53 | 52.09 | 50.37 | 49.27 | 49.50 | 48.18 | 46.77 | 45.19 | 43.83 | 41.74 | 40.08 | 38.54 | 36.12 |
| File size ADNN | 37.30 | 36.28 | 34.36 | 32.50 | 30.47 | 29.92 | 29.37 | 27.45 | 25.76 | 24.18 | 23.02 | 22.60 | 21.38 |
| PSNR AVC | 34.83 | 34.39 | 33.71 | 33.32 | 32.81 | 32.24 | 31.83 | 31.43 | 30.90 | 30.56 | 30.16 | 29.78 | 29.44 |
| PSNR ADNN | 32.39 | 31.92 | 31.40 | 30.96 | 30.51 | 30.08 | 29.69 | 29.33 | 28.93 | 28.63 | 28.34 | 28.11 | 27.85 |

| QP AVC | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| QP ADNN | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| ∇PSNR¹ | -12.86 | -12.86 | -12.86 | -12.86 | -10.77 | -9.11 | -7.97 | -7.07 | -6.11 | -5.25 | -4.55 | -3.88 | -3.31 |
| ∇File size² | -0.34 | -0.30 | -0.29 | -0.29 | -0.29 | -0.23 | -0.21 | -0.21 | -0.15 | -0.20 | -0.22 | -0.11 | -0.13 |

| QP AVC | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 | 24 | 25 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| QP ADNN | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 18 |
| ∇PSNR | -2.66 | -2.08 | -1.47 | -0.87 | -0.24 | 0.32 | 1.13 | 1.73 | 2.35 | 2.96 | 3.66 | 4.22 | 1.60 |
| ∇File size | -0.13 | -0.08 | 0.01 | 0.01 | 0.20 | 0.38 | 1.05 | 1.45 | 1.72 | 2.01 | 1.95 | 2.36 | -0.15 |

| QP AVC | 26 | 27 | 28 | 29 | 30 | 31 | 32 | 33 | 34 | 35 | 36 | 37 | 38 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| QP ADNN | 19 | 20 | 20 | 20 | 20 | 21 | 24 | 25 | 27 | 30 | 31 | 32 | 33 |
| ∇PSNR | 1.65 | 1.85 | 2.41 | 2.90 | 3.48 | 3.74 | 2.87 | 2.69 | 2.02 | 1.04 | 0.69 | 0.65 | 0.58 |
| ∇File size | -0.39 | -0.73 | -0.61 | -1.91 | -1.05 | -0.40 | -0.53 | -0.06 | -0.16 | -0.28 | -1.53 | -1.20 | -2.43 |

| QP AVC | 39 | 40 | 41 | 42 | 43 | 44 | 45 | 46 | 47 | 48 | 49 | 50 | 51 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| QP ADNN | 33 | 34 | 34 | 35 | 35 | 35 | 36 | 36 | 37 | 38 | 38 | 39 | 41 |
| ∇PSNR | 1.15 | 0.99 | 1.68 | 1.33 | 1.84 | 2.41 | 2.29 | 2.69 | 2.59 | 2.38 | 2.78 | 2.61 | 1.96 |
| ∇File size | -0.54 | -1.96 | -0.24 | -2.22 | -2.44 | -1.13 | -2.34 | -0.76 | -1.97 | -2.44 | -0.78 | -1.24 | -1.76 |
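The footnotes defining ∇PSNR and ∇File size are not present in this text. The tabulated values are consistent with reading each as "ADNN value at the paired ADNN QP minus AVC value at the AVC QP". The sketch below checks that reading on two QP pairings, using figures copied from the per-QP AVC table; the dictionary and function names are hypothetical, and the interpretation itself is an inference rather than something the source states.

```python
# QP -> (file size, PSNR), copied from the per-QP AVC comparison table.
AVC = {25: (71.63, 43.10), 51: (36.12, 29.44)}
ADNN = {18: (71.48, 44.71), 41: (34.36, 31.40)}


def deltas(avc_qp, adnn_qp):
    """Return (delta PSNR, delta file size) for one AVC/ADNN QP pairing.

    ASSUMPTION: both deltas are defined as ADNN minus AVC, which is
    inferred from the table rather than stated in the source.
    """
    avc_size, avc_psnr = AVC[avc_qp]
    adnn_size, adnn_psnr = ADNN[adnn_qp]
    return round(adnn_psnr - avc_psnr, 2), round(adnn_size - avc_size, 2)


print(deltas(25, 18))  # table lists 1.60 and -0.15 for this pairing
print(deltas(51, 41))  # table lists 1.96 and -1.76 for this pairing
```

For the first pairing the computed PSNR difference is 1.61 rather than the tabulated 1.60, which would be expected if the table was derived from unrounded measurements. A positive ∇PSNR together with a negative ∇File size means the proposed method gives higher quality in a smaller file than AVC at that operating point.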

| Frame # | Type | QP 0 | QP 16 | QP 23 | QP 28 | QP 31 | QP 41 | QP 51 |
|---|---|---|---|---|---|---|---|---|
| 200, 69.30 KB¹ | Proposed method to HEVC | PSNR²: 47.76, SSIM³: 0.999 | PSNR: 46.05, SSIM: 0.994 | PSNR: 43.64, SSIM: 0.986 | PSNR: 40.99, SSIM: 0.977 | PSNR: 39.33, SSIM: 0.971 | PSNR: 34.83, SSIM: 0.941 | PSNR: 33.13, SSIM: 0.920 |
| | Proposed method to original image | PSNR: 47.94, SSIM: 0.999, 68.91 KB⁴ | PSNR: 45.63, SSIM: 0.989, 65.95 KB | PSNR: 42.71, SSIM: 0.974, 60.80 KB | PSNR: 39.72, SSIM: 0.957, 54.52 KB | PSNR: 37.72, SSIM: 0.944, 48.44 KB | PSNR: 32.19, SSIM: 0.877, 26.94 KB | PSNR: 28.91, SSIM: 0.799, 19.81 KB |
| | HEVC to original image | PSNR: 57.29, SSIM: 0.999, 69.31 KB | PSNR: 48.82, SSIM: 0.993, 68.17 KB | PSNR: 44.80, SSIM: 0.981, 65.13 KB | PSNR: 41.65, SSIM: 0.968, 61.99 KB | PSNR: 39.75, SSIM: 0.957, 58.50 KB | PSNR: 34.21, SSIM: 0.912, 40.94 KB | PSNR: 30.19, SSIM: 0.839, 24.23 KB |
| 400, 77.34 KB | Proposed method to HEVC | PSNR: 47.34, SSIM: 0.999 | PSNR: 45.14, SSIM: 0.993 | PSNR: 42.41, SSIM: 0.982 | PSNR: 39.83, SSIM: 0.968 | PSNR: 38.04, SSIM: 0.958 | PSNR: 33.08, SSIM: 0.907 | PSNR: 32.47, SSIM: 0.894 |
| | Proposed method to original image | PSNR: 47.58, SSIM: 0.999, 76.95 KB | PSNR: 44.79, SSIM: 0.989, 74.37 KB | PSNR: 41.40, SSIM: 0.970, 69.11 KB | PSNR: 38.37, SSIM: 0.945, 61.97 KB | PSNR: 36.39, SSIM: 0.924, 62.95 KB | PSNR: 30.57, SSIM: 0.811, 34.48 KB | PSNR: 27.49, SSIM: 0.696, 20.82 KB |
| | HEVC to original image | PSNR: 57.21, SSIM: 0.999, 77.38 KB | PSNR: 47.87, SSIM: 0.993, 76.49 KB | PSNR: 43.40, SSIM: 0.979, 74.07 KB | PSNR: 40.19, SSIM: 0.962, 69.83 KB | PSNR: 38.26, SSIM: 0.945, 66.85 KB | PSNR: 32.53, SSIM: 0.865, 53.41 KB | PSNR: 28.46, SSIM: 0.746, 27.69 KB |
| 600, 74.51 KB | Proposed method to HEVC | PSNR: 47.49, SSIM: 0.999 | PSNR: 45.34, SSIM: 0.992 | PSNR: 42.72, SSIM: 0.983 | PSNR: 40.22, SSIM: 0.972 | PSNR: 38.51, SSIM: 0.964 | PSNR: 33.36, SSIM: 0.925 | PSNR: 31.67, SSIM: 0.900 |
| | Proposed method to original image | PSNR: 47.73, SSIM: 0.999, 74.11 KB | PSNR: 44.85, SSIM: 0.987, 71.17 KB | PSNR: 41.56, SSIM: 0.969, 66.21 KB | PSNR: 38.64, SSIM: 0.947, 59.67 KB | PSNR: 36.74, SSIM: 0.930, 54.20 KB | PSNR: 30.91, SSIM: 0.843, 35.55 KB | PSNR: 27.28, SSIM: 0.738, 22.73 KB |
| | HEVC to original image | PSNR: 57.31, SSIM: 0.999, 74.55 KB | PSNR: 47.83, SSIM: 0.991, 73.37 KB | PSNR: 43.51, SSIM: 0.978, 70.86 KB | PSNR: 40.43, SSIM: 0.961, 66.96 KB | PSNR: 38.60, SSIM: 0.947, 64.00 KB | PSNR: 32.92, SSIM: 0.883, 47.27 KB | PSNR: 28.47, SSIM: 0.786, 30.62 KB |
| 800, 79.19 KB | Proposed method to HEVC | PSNR: 47.12, SSIM: 0.999 | PSNR: 45.15, SSIM: 0.994 | PSNR: 42.45, SSIM: 0.982 | PSNR: 39.88, SSIM: 0.968 | PSNR: 38.23, SSIM: 0.959 | PSNR: 33.38, SSIM: 0.934 | PSNR: 31.62, SSIM: 0.902 |
| | Proposed method to original image | PSNR: 47.31, SSIM: 0.999, 78.94 KB | PSNR: 45.01, SSIM: 0.991, 76.38 KB | PSNR: 41.63, SSIM: 0.973, 71.22 KB | PSNR: 38.54, SSIM: 0.947, 64.06 KB | PSNR: 36.57, SSIM: 0.927, 58.04 KB | PSNR: 30.85, SSIM: 0.843, 35.07 KB | PSNR: 27.19, SSIM: 0.735, 23.01 KB |
| | HEVC to original image | PSNR: 57.13, SSIM: 0.999, 79.13 KB | PSNR: 48.39, SSIM: 0.994, 78.35 KB | PSNR: 43.78, SSIM: 0.981, 75.86 KB | PSNR: 40.44, SSIM: 0.964, 71.88 KB | PSNR: 38.45, SSIM: 0.948, 68.48 KB | PSNR: 32.71, SSIM: 0.879, 49.23 KB | PSNR: 28.31, SSIM: 0.784, 32.28 KB |

| Dataset | Type | QP 1 | QP 16 | QP 23 | QP 28 | QP 31 | QP 41 | QP 51 |
|---|---|---|---|---|---|---|---|---|
| DAVIS [62] | ADNN to HEVC | PSNR¹: 47.47, SSIM²: 0.997 | PSNR: 45.40, SSIM: 0.992 | PSNR: 42.54, SSIM: 0.984 | PSNR: 39.89, SSIM: 0.973 | PSNR: 38.26, SSIM: 0.964 | PSNR: 33.98, SSIM: 0.930 | PSNR: 32.00, SSIM: 0.915 |
| | ADNN to original image | PSNR: 47.67, SSIM: 0.997 | PSNR: 45.10, SSIM: 0.989 | PSNR: 41.78, SSIM: 0.975 | PSNR: 38.59, SSIM: 0.954 | PSNR: 36.60, SSIM: 0.936 | PSNR: 31.23, SSIM: 0.855 | PSNR: 27.62, SSIM: 0.768 |
| | HEVC to original image | PSNR: 57.64, SSIM: 0.999 | PSNR: 48.30, SSIM: 0.992 | PSNR: 43.75, SSIM: 0.981 | PSNR: 40.41, SSIM: 0.967 | PSNR: 38.42, SSIM: 0.954 | PSNR: 32.78, SSIM: 0.889 | PSNR: 28.72, SSIM: 0.803 |
| GoPro [63] | ADNN to HEVC | PSNR: 46.36, SSIM: 0.997 | PSNR: 44.50, SSIM: 0.993 | PSNR: 41.75, SSIM: 0.984 | PSNR: 38.97, SSIM: 0.967 | PSNR: 37.18, SSIM: 0.953 | PSNR: 32.17, SSIM: 0.909 | PSNR: 29.95, SSIM: 0.895 |
| | ADNN to original image | PSNR: 46.53, SSIM: 0.998 | PSNR: 44.50, SSIM: 0.992 | PSNR: 41.25, SSIM: 0.977 | PSNR: 37.99, SSIM: 0.950 | PSNR: 35.86, SSIM: 0.926 | PSNR: 29.59, SSIM: 0.803 | PSNR: 25.61, SSIM: 0.683 |
| | HEVC to original image | PSNR: 57.56, SSIM: 0.999 | PSNR: 48.37, SSIM: 0.994 | PSNR: 43.49, SSIM: 0.984 | PSNR: 39.90, SSIM: 0.967 | PSNR: 37.71, SSIM: 0.951 | PSNR: 31.25, SSIM: 0.847 | PSNR: 26.83, SSIM: 0.737 |
| REDS [64] | ADNN to HEVC | PSNR: 46.48, SSIM: 0.997 | PSNR: 44.22, SSIM: 0.992 | PSNR: 41.34, SSIM: 0.981 | PSNR: 38.77, SSIM: 0.964 | PSNR: 37.18, SSIM: 0.951 | PSNR: 32.04, SSIM: 0.906 | PSNR: 29.76, SSIM: 0.892 |
| | ADNN to original image | PSNR: 46.69, SSIM: 0.998 | PSNR: 44.10, SSIM: 0.990 | PSNR: 40.49, SSIM: 0.970 | PSNR: 37.41, SSIM: 0.940 | PSNR: 35.53, SSIM: 0.914 | PSNR: 29.51, SSIM: 0.793 | PSNR: 25.41, SSIM: 0.674 |
| | HEVC to original image | PSNR: 57.38, SSIM: 0.999 | PSNR: 47.50, SSIM: 0.992 | PSNR: 42.57, SSIM: 0.980 | PSNR: 39.71, SSIM: 0.960 | PSNR: 37.17, SSIM: 0.942 | PSNR: 31.07, SSIM: 0.838 | PSNR: 26.57, SSIM: 0.762 |
| Vimeo-90K-T [65] | ADNN to HEVC | PSNR: 48.40, SSIM: 0.998 | PSNR: 46.66, SSIM: 0.994 | PSNR: 44.33, SSIM: 0.988 | PSNR: 41.93, SSIM: 0.978 | PSNR: 40.29, SSIM: 0.969 | PSNR: 35.11, SSIM: 0.939 | PSNR: 31.49, SSIM: 0.921 |
| | ADNN to original image | PSNR: 48.54, SSIM: 0.998 | PSNR: 46.61, SSIM: 0.993 | PSNR: 43.64, SSIM: 0.983 | PSNR: 40.81, SSIM: 0.966 | PSNR: 38.97, SSIM: 0.951 | PSNR: 33.04, SSIM: 0.874 | PSNR: 28.35, SSIM: 0.793 |
| | HEVC to original image | PSNR: 58.70, SSIM: 0.999 | PSNR: 50.18, SSIM: 0.995 | PSNR: 45.91, SSIM: 0.988 | PSNR: 42.75, SSIM: 0.977 | PSNR: 40.85, SSIM: 0.967 | PSNR: 34.76, SSIM: 0.903 | PSNR: 29.83, SSIM: 0.828 |

| QP | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| File size¹ HEVC | 75.09 | 75.09 | 75.09 | 75.09 | 75.08 | 75.08 | 75.07 | 75.07 | 75.04 | 74.97 | 74.95 | 74.81 | 74.72 |
| File size ADNN | 74.73 | 74.70 | 74.66 | 74.66 | 74.60 | 74.54 | 74.45 | 74.26 | 74.19 | 73.95 | 73.82 | 73.58 | 73.33 |
| PSNR² HEVC | 57.24 | 57.24 | 57.24 | 57.24 | 56.16 | 55.16 | 54.34 | 53.62 | 52.95 | 52.37 | 51.74 | 51.14 | 50.58 |
| PSNR ADNN | 47.64 | 47.63 | 47.62 | 47.62 | 47.52 | 47.41 | 47.31 | 47.20 | 47.00 | 46.85 | 46.66 | 46.46 | 46.25 |

| QP | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 | 24 | 25 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| File size HEVC | 74.49 | 74.42 | 74.21 | 74.10 | 73.92 | 73.69 | 73.40 | 72.95 | 72.57 | 72.19 | 71.48 | 70.91 | 69.92 |
| File size ADNN | 73.02 | 72.72 | 72.29 | 71.97 | 71.50 | 70.99 | 70.32 | 69.59 | 68.78 | 67.91 | 66.84 | 65.59 | 64.12 |
| PSNR HEVC | 49.91 | 49.36 | 48.81 | 48.23 | 47.62 | 47.05 | 46.43 | 45.79 | 45.18 | 44.53 | 43.87 | 43.25 | 42.61 |
| PSNR ADNN | 45.98 | 45.68 | 45.41 | 45.07 | 44.69 | 44.31 | 43.88 | 43.39 | 42.89 | 42.37 | 41.83 | 41.27 | 40.69 |

| QP | 26 | 27 | 28 | 29 | 30 | 31 | 32 | 33 | 34 | 35 | 36 | 37 | 38 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| File size HEVC | 68.98 | 68.30 | 67.67 | 66.68 | 65.89 | 64.46 | 62.91 | 61.34 | 59.42 | 57.82 | 56.02 | 54.09 | 52.07 |
| File size ADNN | 62.83 | 61.43 | 60.06 | 58.42 | 56.52 | 55.91 | 53.36 | 50.98 | 48.37 | 45.64 | 43.21 | 40.62 | 38.24 |
| PSNR HEVC | 41.95 | 41.33 | 40.68 | 40.01 | 39.39 | 38.77 | 38.14 | 37.57 | 36.95 | 36.34 | 35.79 | 35.22 | 34.67 |
| PSNR ADNN | 40.08 | 39.47 | 38.82 | 38.17 | 37.51 | 36.86 | 36.19 | 35.57 | 34.94 | 34.34 | 33.72 | 33.15 | 32.61 |

| QP | 39 | 40 | 41 | 42 | 43 | 44 | 45 | 46 | 47 | 48 | 49 | 50 | 51 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| File size HEVC | 51.93 | 50.00 | 47.71 | 45.74 | 43.67 | 41.34 | 38.89 | 36.88 | 34.68 | 33.60 | 31.57 | 29.72 | 28.71 |
| File size ADNN | 35.88 | 34.88 | 33.01 | 31.03 | 29.37 | 28.71 | 27.61 | 26.74 | 25.20 | 23.78 | 22.67 | 22.06 | 21.59 |
| PSNR HEVC | 34.16 | 33.64 | 33.09 | 32.58 | 32.09 | 31.59 | 31.14 | 30.65 | 30.19 | 29.80 | 29.44 | 29.14 | 28.86 |
| PSNR ADNN | 32.09 | 31.61 | 31.13 | 30.69 | 30.26 | 29.88 | 29.50 | 29.09 | 28.72 | 28.39 | 28.12 | 27.92 | 27.72 |

| QP HEVC | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| QP ADNN | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| ∇PSNR¹ | -9.60 | -9.60 | -9.60 | -9.60 | -8.52 | -7.52 | -6.70 | -5.98 | -5.31 | -4.73 | -4.10 | -3.50 | -2.94 |
| ∇File size² | -0.36 | -0.36 | -0.36 | -0.36 | -0.35 | -0.35 | -0.34 | -0.34 | -0.31 | -0.24 | -0.22 | -0.08 | 0.01 |

| QP HEVC | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 | 24 | 25 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| QP ADNN | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 18 | 19 | 20 |
| ∇PSNR | -2.27 | -1.72 | -1.17 | -0.59 | 0.02 | 0.59 | 1.22 | 1.85 | 2.46 | 3.11 | 0.44 | 0.63 | 0.78 |
| ∇File size | 0.24 | 0.31 | 0.52 | 0.63 | 0.81 | 1.04 | 1.33 | 1.78 | 2.16 | 2.54 | -0.49 | -0.59 | -0.33 |

| QP HEVC | 26 | 27 | 28 | 29 | 30 | 31 | 32 | 33 | 34 | 35 | 36 | 37 | 38 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| QP ADNN | 21 | 22 | 22 | 23 | 24 | 25 | 26 | 28 | 29 | 30 | 31 | 32 | 33 |
| ∇PSNR | 0.95 | 1.04 | 1.69 | 1.82 | 1.88 | 1.93 | 1.94 | 1.25 | 1.22 | 1.17 | 1.07 | 0.97 | 0.90 |
| ∇File size | -0.20 | -0.39 | -0.83 | -1.09 | -0.30 | -0.34 | -0.09 | -1.28 | -1.00 | -1.30 | -0.11 | -0.73 | -1.09 |

| QP HEVC | 39 | 40 | 41 | 42 | 43 | 44 | 45 | 46 | 47 | 48 | 49 | 50 | 51 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| QP ADNN | 33 | 34 | 35 | 35 | 36 | 37 | 38 | 39 | 41 | 41 | 42 | 43 | 45 |
| ∇PSNR | 1.41 | 1.31 | 1.24 | 1.76 | 1.64 | 1.56 | 1.48 | 1.44 | 0.95 | 1.33 | 1.25 | 1.12 | 0.64 |
| ∇File size | -0.95 | -1.63 | -2.08 | -0.10 | -0.46 | -0.71 | -0.65 | -1.01 | -1.67 | -0.59 | -0.54 | -0.35 | -1.09 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).