1. Introduction

Laboratory medicine plays a fundamental role in physicians' decision-making by providing test results that guide diagnosis and treatment [1–3]. However, laboratory reports often present raw numerical data with little to no contextual interpretation, leaving the task of interpretation to clinicians [4]. Moreover, patients frequently receive their laboratory results with limited explanation beyond an indication of whether values fall within or outside the reference range.

Laboratory reporting comments are a crucial communication tool between the laboratory, the report holder, and the requesting physician. These comments, formulated in various ways, are generally aimed at clarifying interpretative aspects and suggesting possible diagnostic implications. In most of these applications, the reporting comment represents a kind of diagnostic complement necessary to increase the meaning and clinical use of a single result or, more frequently, of multiple results that are linked by pathophysiological interrelationships.

Without adequate guidance, patients frequently turn to online resources, such as search engines or AI-based tools like ChatGPT, to interpret their results [5,6]. This often creates more confusion and leads to incorrect information [7]. Many recent studies have integrated clinical data into post-analytical interpretations [8,9], leading to inaccurate conclusions (or hallucinations) due to the complexity of clinical information [10,11]. In contrast, our approach focuses on interpreting laboratory test results in isolation, ensuring that the conversational chatbot remains focused on explaining the data presented in the report without attempting to diagnose or evaluate specific clinical conditions.

This study aims to bridge the gap between patients and primary care physicians by offering clear, AI-guided explanations of laboratory test results [12,13], improving patient understanding without straying into diagnostic territory [14,15]. We have developed, trained, and validated a virtual conversational chatbot capable of interpreting a wide range of blood chemistry parameters, making information more accessible and meaningful for patients while avoiding the common pitfalls associated with integrating complex clinical data.

2. Materials and Methods

2.1. Clinical Chemistry Analysis Reports

The goal was to identify laboratory parameters relevant to the patient-focused scenario. After discussions, consensus was reached on a core set of laboratory tests that included the following: red blood cells (RBC), hemoglobin (Hb), hematocrit (HCT), mean corpuscular volume (MCV), mean corpuscular hemoglobin (MCH), mean corpuscular hemoglobin concentration (MCHC), white blood cells (WBC), complete blood count (CBC) with differential (leukocyte subsets), gamma-glutamyl transferase (GGT), glucose, total cholesterol, high-density lipoprotein (HDL), low-density lipoprotein (LDL), creatinine, aspartate aminotransferase (AST), alanine aminotransferase (ALT), and total bilirubin. In addition to this core set, a second group of tests was identified, including ferritin, prostate-specific antigen (PSA), thyroid-stimulating hormone (TSH), free thyroxine (FT4), alkaline phosphatase, activated partial thromboplastin time (aPTT), prothrombin time (PT), glycated hemoglobin (HbA1c), estimated glomerular filtration rate (eGFR), blood urea nitrogen (BUN), and protein electrophoresis (total proteins, albumin, alpha 1, alpha 2, beta 1, beta 2, gamma, and A/G ratio).

Laboratory A employed the Roche cobas® 8000 modular analyzer series for clinical chemistry and immunoassay testing, and the Sebia CAPILLARYS 3 system for protein electrophoresis [15,16]. Laboratory B utilized the Abbott Architect ci16200 integrated system and the DiaSorin LIAISON® XL for specialized immunoassay testing. This heterogeneity in analytical platforms provided an opportunity to assess the AI system's robustness in handling results reported with different manufacturers' reference ranges and measurement units [2,20].
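To make the platform-dependence concrete, the following is a minimal Python sketch of how a result can be flagged against the reference interval printed on its own report, and how values reported in SI units can be brought to conventional units before comparison. It is an illustration only, not the pipeline used in this study: the `Result` class, the function names, and the reference limits in the usage example are hypothetical, and only three analytes are covered. The conversion factors are the standard molar-mass-based ones (glucose 18.016 mg/dL per mmol/L, cholesterol 38.67 mg/dL per mmol/L, creatinine 88.4 µmol/L per mg/dL).

```python
from dataclasses import dataclass

# Standard conversion factors from SI to conventional units.
# Assumption: only these three analytes are illustrated here.
TO_CONVENTIONAL = {
    ("glucose", "mmol/L"): ("mg/dL", 18.016),
    ("total_cholesterol", "mmol/L"): ("mg/dL", 38.67),
    ("creatinine", "umol/L"): ("mg/dL", 1 / 88.4),
}


@dataclass(frozen=True)
class Result:
    """One analyte as printed on a report (hypothetical structure)."""
    analyte: str
    value: float
    unit: str
    ref_low: float   # lower reference limit printed on the report
    ref_high: float  # upper reference limit printed on the report


def flag(result: Result) -> str:
    """Classify a value against the interval from its own report."""
    if result.value < result.ref_low:
        return "low"
    if result.value > result.ref_high:
        return "high"
    return "within range"


def to_conventional(result: Result) -> Result:
    """Convert value and limits together; unknown pairs pass through unchanged."""
    key = (result.analyte, result.unit)
    if key not in TO_CONVENTIONAL:
        return result
    unit, factor = TO_CONVENTIONAL[key]
    return Result(
        result.analyte,
        result.value * factor,
        unit,
        result.ref_low * factor,
        result.ref_high * factor,
    )


# Usage with illustrative (not study-derived) reference limits.
lab_a = Result("glucose", 55.0, "mg/dL", 70.0, 100.0)
lab_b = to_conventional(Result("glucose", 5.0, "mmol/L", 3.9, 5.6))
print(flag(lab_a), flag(lab_b), lab_b.unit)
```

Because the value and its limits are converted with the same factor, the flag is unchanged by conversion; the interval always travels with the result rather than being looked up from a single global table.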

2.2. Prompts and Claude AI chatbot

The prompts, which are brief sets of instructions designed to guide the chatbot's responses, were crafted following best practices established in recent literature [5,16,17] to reduce the likelihood of "hallucinations" (i.e., irrelevant or inaccurate responses) and to avoid overly simplistic recommendations, such as advising the user to consult a doctor.
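As an illustration of what such a constrained prompt might look like, the sketch below assembles a set of instructions and a report into a single prompt string. The wording of `SYSTEM_INSTRUCTIONS` and the `build_prompt` helper are hypothetical and are not the prompts used in this study; they only encode the constraints described above (explain the reported values, do not diagnose, do not invent values, and do not reduce the answer to a generic referral).

```python
# Hypothetical instruction text; NOT the prompt used in the study.
SYSTEM_INSTRUCTIONS = (
    "You explain laboratory reports to patients in plain language.\n"
    "Rules:\n"
    "1. Explain only the analytes and values that appear in the report.\n"
    "2. Compare each value with the reference interval printed next to it.\n"
    "3. Do not diagnose or evaluate specific clinical conditions.\n"
    "4. Do not invent values, units, or reference intervals.\n"
    "5. Do not reduce the answer to a generic 'consult your doctor'."
)


def build_prompt(report_text: str) -> str:
    """Combine the fixed instructions with the text of one report."""
    return f"{SYSTEM_INSTRUCTIONS}\n\nLaboratory report:\n{report_text.strip()}"


# Usage with a single illustrative line of a report.
print(build_prompt("Glucose 55 mg/dL (reference 70-100)"))
```

Keeping the instructions in one constant makes it straightforward to resubmit the same report with an identical prompt when checking the consistency of the responses.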

The system was tested using 30 laboratory reports from two different Italian laboratories, encompassing various biochemical parameters and measurement standards [2,12]. The laboratories utilized different analytical platforms and methodologies, allowing us to evaluate the chatbot's ability to interpret results across diverse instrumental settings.

2.3. Interpretation Accuracy of Claude AI Chatbot

The interpretation accuracy of the Claude AI chatbot was evaluated through a peer review process involving three independent medical reviewers with extensive experience in laboratory medicine [4,8]. Each reviewer independently assessed the chatbot's interpretations without knowledge of the others' evaluations, using a standardized scoring system to evaluate the accuracy, completeness, and clinical relevance of the generated interpretations [12,19].
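Agreement among three blinded reviewers can be summarized with a chance-corrected statistic. The sketch below computes Fleiss' kappa in plain Python. The choice of Fleiss' kappa is an assumption made for illustration, since the agreement statistic is not specified here, and the category labels in the usage example are hypothetical rather than study data.

```python
from collections import Counter


def fleiss_kappa(ratings: list[list[str]]) -> float:
    """Fleiss' kappa for items each rated by the same number of raters.

    ratings[i] holds the category labels given to item i by each rater.
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(row) != n_raters for row in ratings):
        raise ValueError("each item needs the same number (>= 2) of ratings")

    counts = [Counter(row) for row in ratings]
    categories = sorted({label for row in ratings for label in row})

    # Observed agreement: mean proportion of agreeing rater pairs per item.
    per_item = [
        (sum(c[cat] ** 2 for cat in categories) - n_raters)
        / (n_raters * (n_raters - 1))
        for c in counts
    ]
    p_observed = sum(per_item) / n_items

    # Expected agreement from the overall category proportions.
    proportions = [
        sum(c[cat] for c in counts) / (n_items * n_raters) for cat in categories
    ]
    p_expected = sum(p ** 2 for p in proportions)

    if p_expected == 1.0:  # every rating falls in a single category
        return 1.0
    return (p_observed - p_expected) / (1 - p_expected)


# Usage: three reviewers, four interpretations, hypothetical labels.
example = [
    ["accurate", "accurate", "accurate"],
    ["accurate", "accurate", "partial"],
    ["partial", "partial", "partial"],
    ["accurate", "accurate", "accurate"],
]
print(round(fleiss_kappa(example), 3))
```

A value near 1 indicates agreement well above chance, whereas values near or below 0 indicate agreement no better than chance.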

The reviewers evaluated several key aspects:

Technical accuracy of result interpretation

Appropriateness of reference range contextualization

Clarity and accessibility of language for patient understanding

Consistency of interpretations across different analytical platforms

Identification of clinically significant patterns and interrelationships

This structured validation process allowed us to quantify the reliability and consistency of the AI interpretations across different laboratory settings and instrumental platforms [2,15]. The peer review process was particularly crucial in validating the chatbot's ability to maintain accuracy while translating technical data into patient-friendly explanations [6,7].

Importantly, the reviewers also assessed the chatbot's performance in handling platform-specific variations in reference ranges and units of measurement [16,17]. This evaluation was essential given that different analytical platforms may produce slightly different reference intervals for the same analyte, requiring the AI system to contextualize results appropriately based on the specific methodology used [15,19].

To ensure standardization of the review process, the medical reviewers used a detailed evaluation rubric that included specific criteria for assessing:

Accuracy of numerical value interpretation

Appropriate contextualization of platform-specific reference ranges

Correct identification of out-of-range values

Proper handling of unit conversions when necessary

Consistency in interpretation across different analytical systems [8,12,20]

The evaluation process was conducted over a three-month period, allowing sufficient time for thorough assessment of each report and its corresponding AI interpretation. This comprehensive validation approach helped establish the reliability and clinical utility of the AI system across different laboratory settings and analytical platforms [2,4,15].

3. Results

In this study, we used 30 laboratory reports covering a variety of laboratory medicine tests. These reports included clinical biochemistry panels, protein electrophoresis profiles, electrolyte levels, and urinalysis results. The data came from two different laboratories, each using distinct equipment and varying units of measurement. This diversity allowed us to evaluate the virtual conversational chatbot's ability to handle heterogeneous data formats and measurement standards.

Each report was uploaded to the chatbot in PDF format, and the AI was tasked with interpreting the results. To assess the reliability of the model's output, the same prompts were repeated at different times to verify the consistency of the interpretations provided. By using reports from different laboratories with different instrumentation, we aimed to test the chatbot's robustness in handling a wide range of laboratory data and to check that its interpretations remained accurate regardless of the source or format of the input.
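One simple way to quantify the consistency of repeated runs is to compare the set of analytes that each run flags as abnormal. The sketch below uses the mean pairwise Jaccard similarity for this purpose. This metric and the helper names are assumptions made for illustration, not the procedure used in this study, and the run contents in the usage example are hypothetical.

```python
from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap of two sets: |a & b| / |a | b| (defined as 1.0 if both are empty)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def run_consistency(runs: list[set[str]]) -> float:
    """Mean Jaccard similarity over all pairs of repeated runs."""
    pairs = list(combinations(runs, 2))
    if not pairs:  # zero or one run: nothing to compare
        return 1.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Usage: analytes flagged as abnormal in three repeated runs on one report.
runs = [
    {"glucose", "HbA1c", "ferritin"},
    {"glucose", "HbA1c", "ferritin"},
    {"glucose", "HbA1c"},
]
print(round(run_consistency(runs), 3))
```

A score of 1.0 means every run flagged exactly the same analytes; lower scores indicate that at least one run omitted or added a flag.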

Here, we present five emblematic analysis reports used to challenge the Claude-powered AI chatbot. The complete clinical chemistry analysis reports for these cases are presented in Table 1.

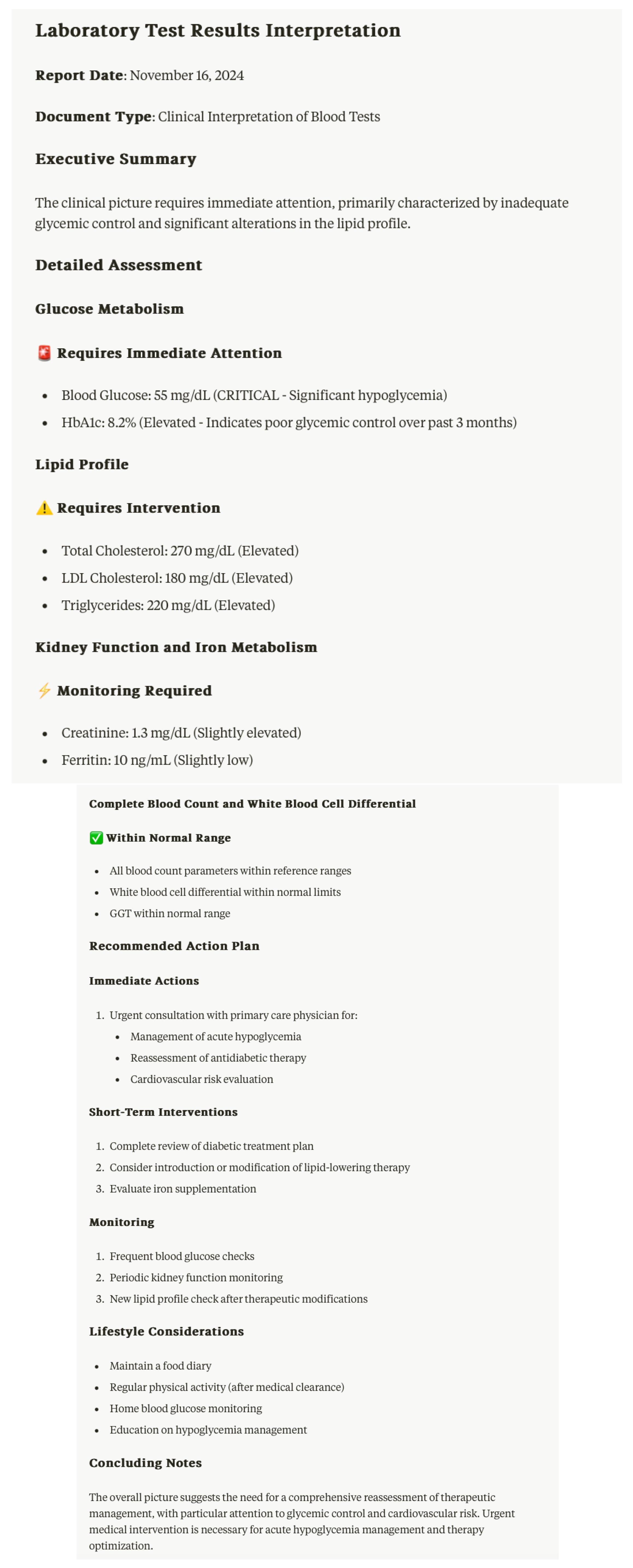

3.1.1. Case #1: Diabetic Patient with Critical Glucose Levels (Figure 1)

Patient Profile: Male, 65 years

Key Findings:

Critical hypoglycemia (55 mg/dL) ↓

HbA1c (8.2%) ↑

Dyslipidemia with total cholesterol (270 mg/dL) ↑

Creatinine (1.3 mg/dL) ↑

Ferritin (10 ng/mL) ↓

Potential Interpretation Pitfalls:

Focusing solely on the critical glucose without noting the contradictory HbA1c

Overlooking the combined cardiovascular risk factors

Missing the relationship between elevated creatinine and diabetes management

AI ChatBot interpretations:

3.1.2. Case #2a: Iron and Folate Deficiency

Patient profile: female, 35 years

Key Findings:

Serum iron (27 mcg/dL) ↓

Folic acid (2.4 ng/mL) ↓

Homocysteine (43 µmol/L) ↑

Vitamin D3 (24 ng/L) ↓

Potential interpretation pitfalls:

Focusing on individual deficiencies without considering their interrelations

Overlooking cardiovascular risk from elevated homocysteine

Missing the potential underlying malabsorption syndrome

AI ChatBot interpretations:

While some parameters are optimal (particularly the lipid profile), there are several areas requiring immediate medical attention, especially the critically elevated homocysteine and multiple nutritional deficiencies. The combination of these findings suggests the need for a comprehensive medical evaluation.

3.1.3. Case #2b: Follow-Up Analysis

Patient profile: same female, 35 years

Key Findings:

Hypochromic microcytic anemia

Serum iron (26.6 mcg/dL) ↓ (persistent)

Homocysteine (16.2 µmol/L) ↑

Potential interpretation pitfalls:

Failing to connect with previous results

Missing the progression of anemia

Overlooking the persistent elevated homocysteine despite normal folate

AI ChatBot interpretations:

The primary concern is iron deficiency anemia (confirmed at the second sampling) requiring prompt attention. While other blood components are normal, the combination of low iron studies and anemia suggests the need for comprehensive evaluation and treatment.

3.1.4. Case #3: Complex Metabolic Profile (Table 4)

Patient profile: male, 54 years

Key Findings:

Ferritin (424 ng/mL) ↑

Total cholesterol (233 mg/dL) ↑

Normal liver function tests

Urine pH ↓

Potential interpretation pitfalls:

Missing the connection between elevated ferritin and potential inflammation

Overlooking the need for iron overload assessment

Failing to consider metabolic syndrome indicators

AI ChatBot interpretations:

Overall, these results indicate generally good health with a few areas requiring attention. The primary focus should be on cholesterol management and monitoring ferritin levels. The excellent inflammatory markers and normal organ function tests are very positive indicators.

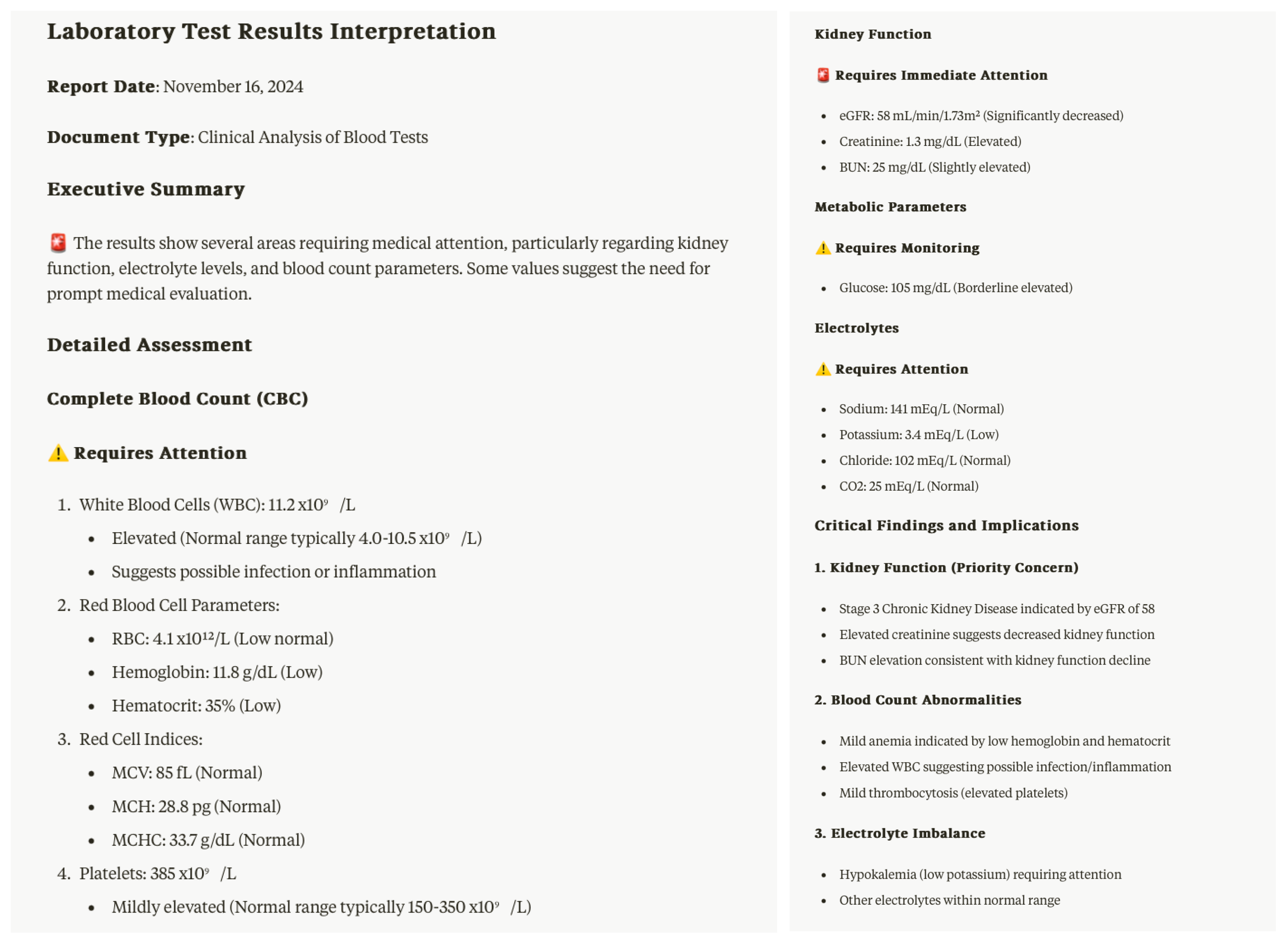

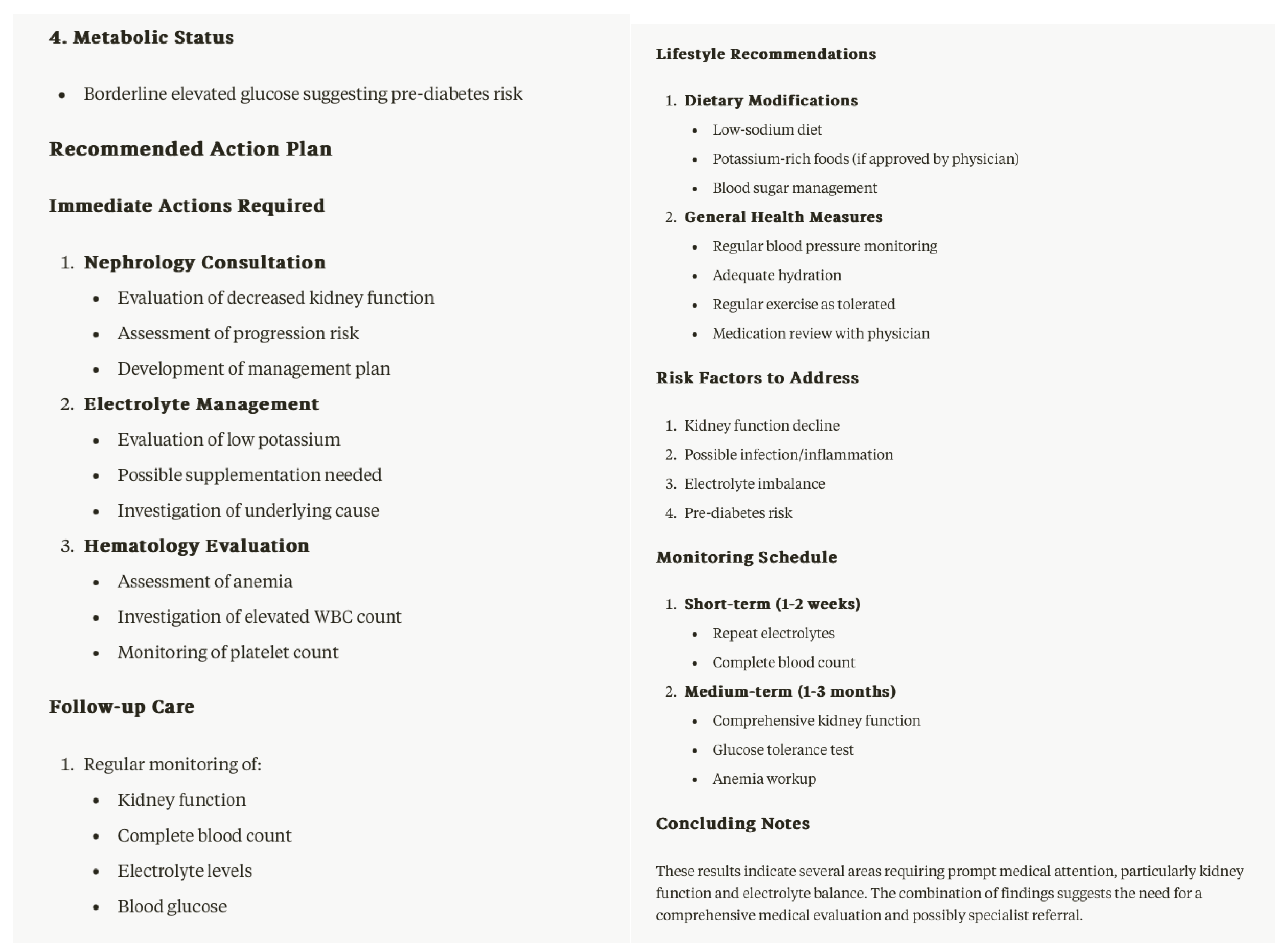

3.1.5. Case #4: Complex Metabolic Profile (Figure 2)

Patient profile: female, 50 years

Key Findings:

WBC: 11.2 x10^9/L ↑

RBC: 4.1 x10^12/L ↓

Hemoglobin: 11.8 g/dL ↓

Hematocrit: 35% ↓

BUN: 25 mg/dL ↑

Creatinine: 1.3 mg/dL ↑

eGFR: 58 mL/min ↓

Potential interpretation pitfalls:

Missing the connection between kidney function and electrolytes

Missing mild anemia

AI ChatBot interpretations:

The results show several areas requiring medical attention, particularly regarding kidney function, electrolyte levels, and blood count parameters. Some values suggest the need for prompt medical evaluation.

4. Discussion

Our study demonstrates the remarkable potential of AI systems, particularly the Claude-based conversational chatbot, in transforming how laboratory results are interpreted and communicated [18,19]. This success stems from several key factors that address current challenges in laboratory reporting practices while opening new possibilities for patient care [20].

The controlled training environment of our AI model proved crucial in achieving consistent and reliable interpretations, completely eliminating the risk of hallucinations, while maintaining strict adherence to privacy and security requirements [11,18]. This approach is particularly relevant given our finding that while 96% of Italian laboratories use interpretative comments, most lack standardized procedures within their teams. The implementation of high-quality, domain-specific prompts enabled the model to provide accurate interpretations of laboratory parameters without venturing into broader clinical assessments, effectively addressing the current inconsistencies in laboratory reporting practices.

The success of this AI-based interpretation system points toward a transformative opportunity in laboratory medicine. Currently, laboratory reports are primarily designed for healthcare professionals, often presenting complex numerical data and specialized terminology that patients find difficult to comprehend. Our research suggests that integrating AI interpretation systems could enable laboratories to automatically generate patient-friendly versions of reports alongside traditional technical ones. This dual-reporting approach would include clear explanations of each parameter, intuitive graphical representations of results within reference ranges, and contextual information about general health implications, all while carefully avoiding diagnostic conclusions.
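As a rough illustration of the kind of graphical representation envisaged for a patient-friendly report, the sketch below renders a text bar in which the reference interval occupies the middle of the axis and the patient's value is marked with an asterisk. The `range_bar` function and its layout are hypothetical and do not describe an implemented feature of the system; the reference interval in the usage example is illustrative.

```python
def range_bar(value: float, low: float, high: float, width: int = 20) -> str:
    """Draw a one-line bar: '=' inside the reference interval, '-' outside, '*' at the value.

    The axis extends half an interval width beyond each reference limit;
    values beyond the axis are pinned to the nearest end.
    """
    if high <= low or width < 2:
        raise ValueError("need high > low and width >= 2")

    span = high - low
    axis_min = low - span / 2
    axis_max = high + span / 2

    def axis_value(i: int) -> float:
        # Axis position represented by cell i.
        return axis_min + (i / (width - 1)) * (axis_max - axis_min)

    cells = ["=" if low <= axis_value(i) <= high else "-" for i in range(width)]

    position = (value - axis_min) / (axis_max - axis_min)
    position = min(max(position, 0.0), 1.0)  # pin out-of-axis values to the ends
    cells[round(position * (width - 1))] = "*"
    return "".join(cells)


# Usage: the glucose value of Case #1 against an illustrative 70-100 mg/dL interval.
print("Glucose", range_bar(55, 70, 100))
```

A marker in the dashed region on either side shows at a glance that a value lies outside the reference interval, without any statement about its clinical meaning.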

The implementation of such an AI-guided interpretation system offers multiple advantages across the healthcare ecosystem [2,5]. For patients, it provides unprecedented access to understandable information about their health status [4], fostering greater engagement in their healthcare journey. This improved comprehension can significantly reduce anxiety related to test results and decrease reliance on potentially misleading online sources. Healthcare professionals benefit from this system as well, as it allows them to focus their expertise on more complex aspects of patient care, such as developing comprehensive treatment plans and discussing nuanced clinical implications.

Perhaps most significantly, this system has the potential to transform the doctor-patient relationship. When patients arrive at consultations with a better understanding of their laboratory results, discussions can become more productive and focused on treatment decisions rather than basic result interpretation. This enhanced communication pathway creates a more collaborative healthcare environment, potentially leading to improved health outcomes through better-informed decision-making and increased patient compliance with treatment plans.

The evolution toward AI-assisted laboratory reporting represents a significant step forward in patient-centered healthcare delivery. By bridging the gap between technical medical data and patient understanding, while maintaining high standards of accuracy and reliability, this approach addresses multiple challenges in current laboratory medicine. The system not only streamlines healthcare delivery but also promotes a more effective and collaborative healthcare environment, ultimately contributing to better health outcomes through improved communication and patient engagement.

Our findings suggest that the future of laboratory medicine lies in this harmonious integration of AI technology with traditional reporting systems, creating a more accessible, efficient, and patient-centered approach to healthcare delivery. This evolution in laboratory reporting could serve as a model for other areas of healthcare where technical information needs to be effectively communicated to patients while maintaining professional standards and accuracy.

5. Conclusions

This evolution in laboratory reporting, made possible by artificial intelligence, represents a significant step toward more patient-centered medicine [3,15] and could contribute to improving health literacy in the general population. Future studies should explore the practical implementation of these evolved reporting systems [6,8] and their impact on patient satisfaction, treatment adherence, and overall health outcomes.

Our study not only demonstrates the potential of carefully designed AI models to improve patients' understanding of laboratory results but also paves the way for a potential revolution in laboratory reporting systems. As AI continues to evolve, it promises to bridge communication gaps in healthcare, ultimately contributing to improved patient outcomes and more efficient healthcare delivery.

Author Contributions

Conceptualization, FB; methodology, FD; writing (original draft preparation), FD and FB; writing (review and editing), FD and FB; supervision, FB. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Lee, P.; Bubeck, S.; Petro, J. Benefits, Limits, and Risks of GPT-4 as an AI Chatbot for Medicine. N. Engl. J. Med. 2023, 388, 1233–1239.

2. Herman, D.S.; Rhoads, D.D.; Schulz, W.L.; Durant, T.J.S. Artificial Intelligence and Mapping a New Direction in Laboratory Medicine: A Review. Clin. Chem. 2021, 67, 1466–1482.

3. Naugler, C.; Church, D.L. Automation and artificial intelligence in the clinical laboratory. Crit. Rev. Clin. Lab. Sci. 2019, 56, 98–110.

4. Zhang, Z.; Citardi, D.; Xing, A.; Luo, X.; Lu, Y.; He, Z. Patient Challenges and Needs in Comprehending Laboratory Test Results: Mixed Methods Study. J. Med. Internet Res. 2020, 22, e18725.

5. Yang, H.S.; Wang, F.; Greenblatt, M.B.; Huang, S.X.; Zhang, Y. AI Chatbots in Clinical Laboratory Medicine: Foundations and Trends. Clin. Chem. 2023, 69, 1238–1246.

6. Thirunavukarasu, A.J.; Ting, D.S.J.; Elangovan, K.; Gutierrez, L.; Tan, T.F. Large language models in medicine. Nat. Med. 2023, 29, 1930–1940.

7. Zhavoronkov, A. Caution with AI-generated content in biomedicine. Nat. Med. 2023, 29, 532.

8. Steimetz, E.; Minkowitz, J.; Gabutan, E.C.; Ngichabe, J.; Attia, H.; Hershkop, M.; Ozay, F.; Hanna, M.G.; Gupta, R. Use of Artificial Intelligence Chatbots in Interpretation of Pathology Reports. JAMA Netw. Open 2024, 7, e2412767.

9. Cabitza, F.; Banfi, G. Machine learning in laboratory medicine: waiting for the flood? Clin. Chem. Lab. Med. 2018, 56, 516–524.

10. van Dis, E.A.M.; Bollen, J.; Zuidema, W.; van Rooij, R.; Bockting, C.L. ChatGPT: five priorities for research. Nature 2023, 614, 224–226.

11. Pennestrì, F.; Banfi, G. Artificial intelligence in laboratory medicine: fundamental ethical issues and normative key-points. Clin. Chem. Lab. Med. 2022, 60, 1867–1874.

12. Ali, S.R.; Dobbs, T.D.; Hutchings, H.A.; Whitaker, I.S. Using ChatGPT to write patient clinic letters. Lancet Digit. Health 2023, 5, e179–e181.

13. Ayers, J.W.; Poliak, A.; Dredze, M.; Leas, E.C.; Zhu, Z.; Kelley, J.B.; Faix, D.J.; Goodman, A.M.; Longhurst, C.A.; Hogarth, M.; et al. Comparing Physician and Artificial Intelligence Chatbot Responses to Patient Questions Posted to a Public Social Media Forum. JAMA Intern. Med. 2023, 183, 589–596.

14. Carobene, A.; Cabitza, F.; Bernardini, S.; Gopalan, R.; Lennerz, J.K.; Weir, C.; et al. Where is laboratory medicine headed in the next decade? Clin. Chem. Lab. Med. 2023, 61, 535–543.

15. Zhou, Q.; Qi, S.; Xiao, B.; Li, Q.; Sun, Z.; Li, L. [Artificial intelligence empowers laboratory medicine in Industry 4.0]. 2020, 40, 287–296.

16. Patel, S.B.; Lam, K. ChatGPT: the future of discharge summaries? Lancet Digit. Health 2023, 5, e107–e108.

17. Kung, T.H.; Cheatham, M.; Medenilla, A.; Sillos, C.; De Leon, L.; Elepaño, C.; Madriaga, M.; Aggabao, R.; Diaz-Candido, G.; Maningo, J.; et al. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLOS Digit. Health 2023, 2, e0000198.

18. Marks, M.; Haupt, C.E. AI Chatbots, Health Privacy, and Challenges to HIPAA Compliance. JAMA 2023, 330, 309–310.

19. Munoz-Zuluaga, C.; Zhao, Z.; Wang, F.; Greenblatt, M.B.; Yang, H.S. Assessing the Accuracy and Clinical Utility of ChatGPT in Laboratory Medicine. Clin. Chem. 2023, 69, 939–940.

20. Chen, S.; Kann, B.H.; Foote, M.B.; Aerts, H.J.W.L.; Savova, G.K.; Mak, R.H.; Bitterman, D.S. Use of Artificial Intelligence Chatbots for Cancer Treatment Information. JAMA Oncol. 2023, 9, 1459–1462.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).