Submitted: 16 December 2024

Posted: 17 December 2024


Abstract

Keywords:

1. Introduction

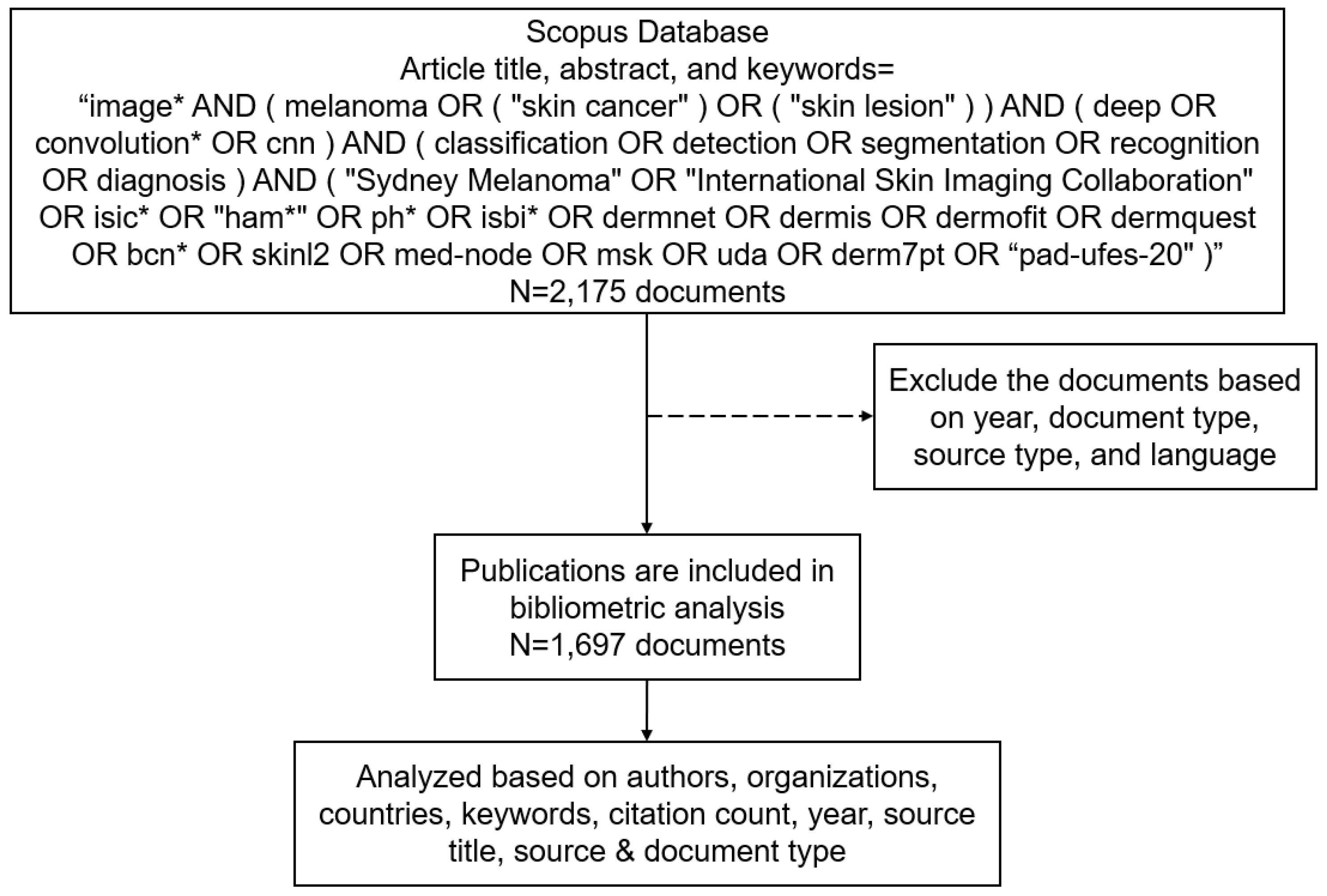

2. Material and Methods

2.1. Data Collection

2.2. Data Exclusion

2.3. Data Analysis

3. Results

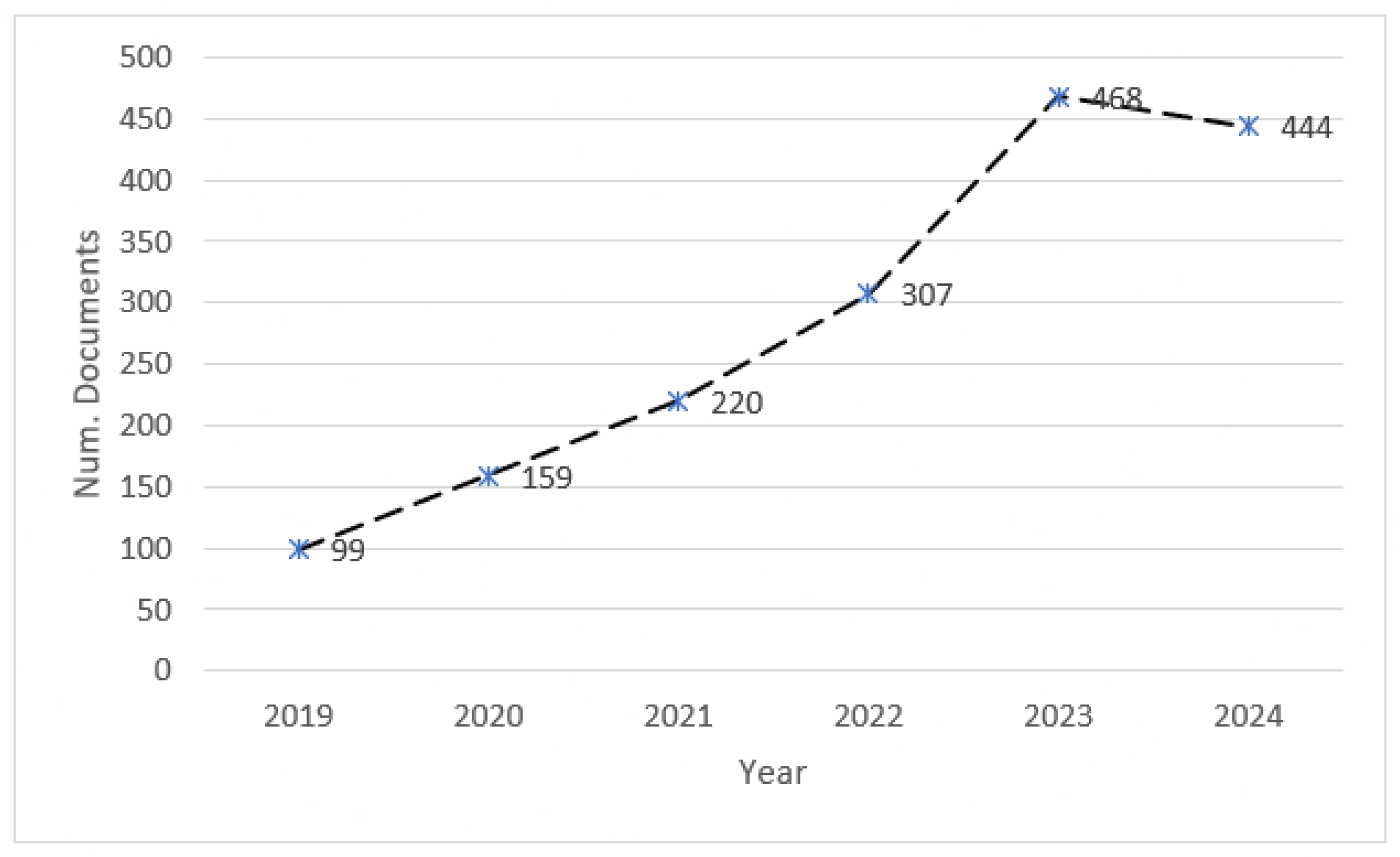

3.1. Publication Trends

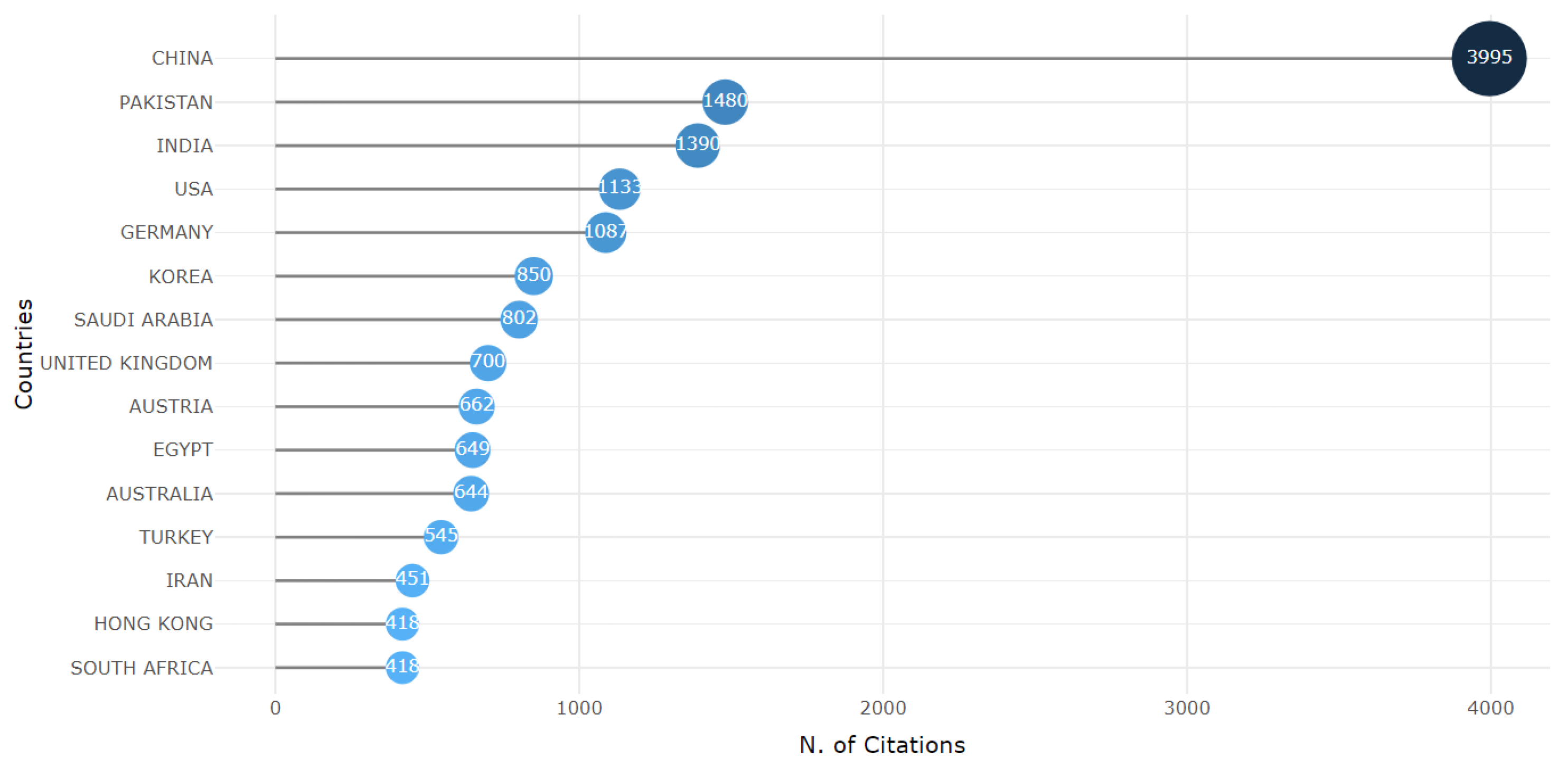

3.2. Country Distribution

3.3. Author Analysis

3.4. References

3.4.1. Advancements in Semi-Supervised Learning for Medical Image Segmentation

| No | Organization | #Docs |
|---|---|---|
| 1 | Skin Cancer Unit, German Cancer Research Center (DKFZ), Heidelberg, Germany | 7 |
| 2 | Dept. of Dermatology, Heidelberg University, Mannheim, Germany | 6 |
| 3 | Dept. of Dermatology, University Hospital Essen, Essen, Germany | 6 |
| 4 | Chitkara University Institute of Engineering and Technology, Chitkara University, Punjab, India | 5 |
| 5 | Dept. of Dermatology, University Hospital Regensburg, Regensburg, Germany | 5 |

3.4.2. State-of-the-Art Performance in Segmentation and Classification

3.4.3. Ensemble and Attention-Based Methods Enhancing Accuracy

3.4.4. Impact Beyond Skin Lesions

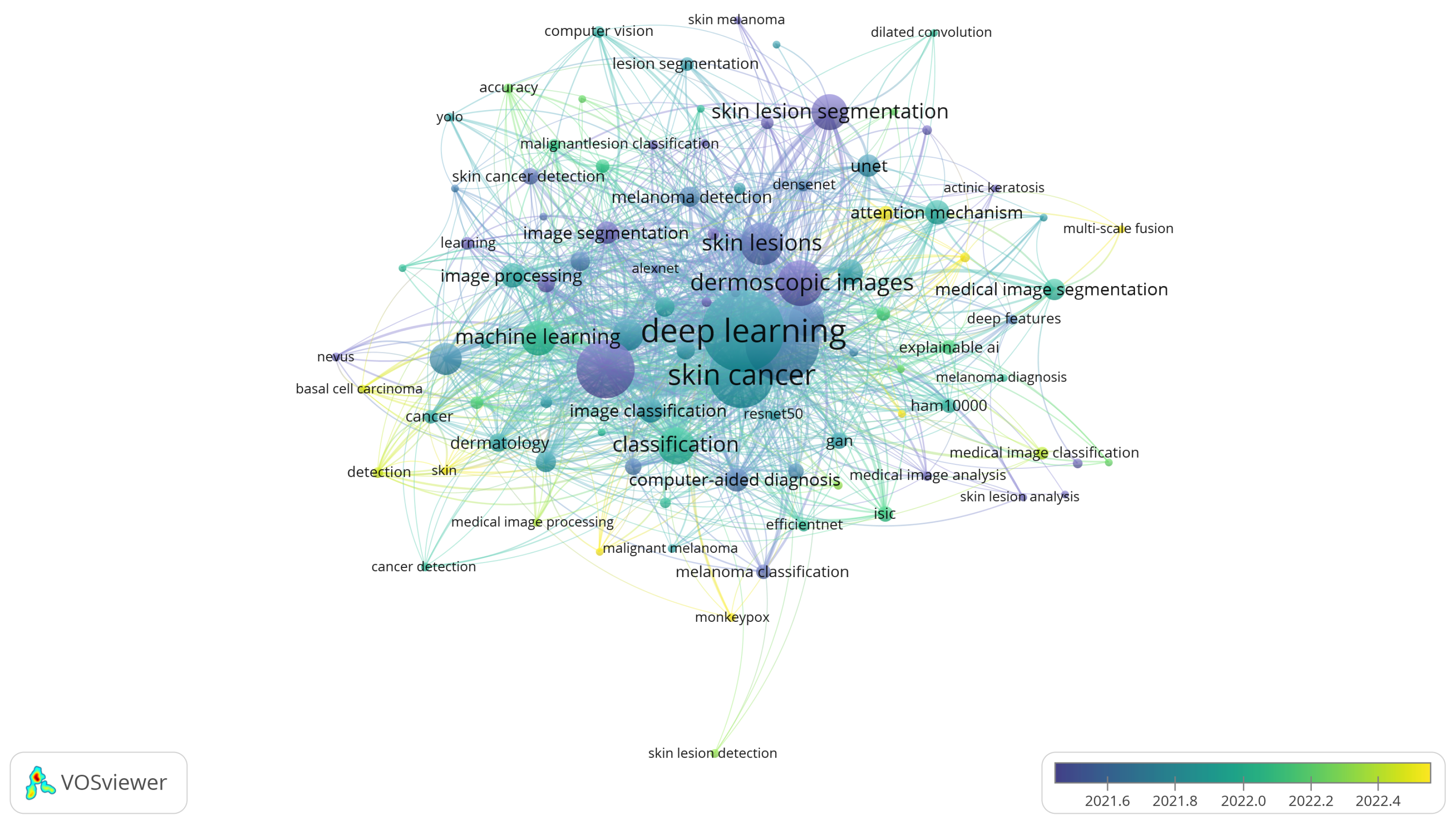

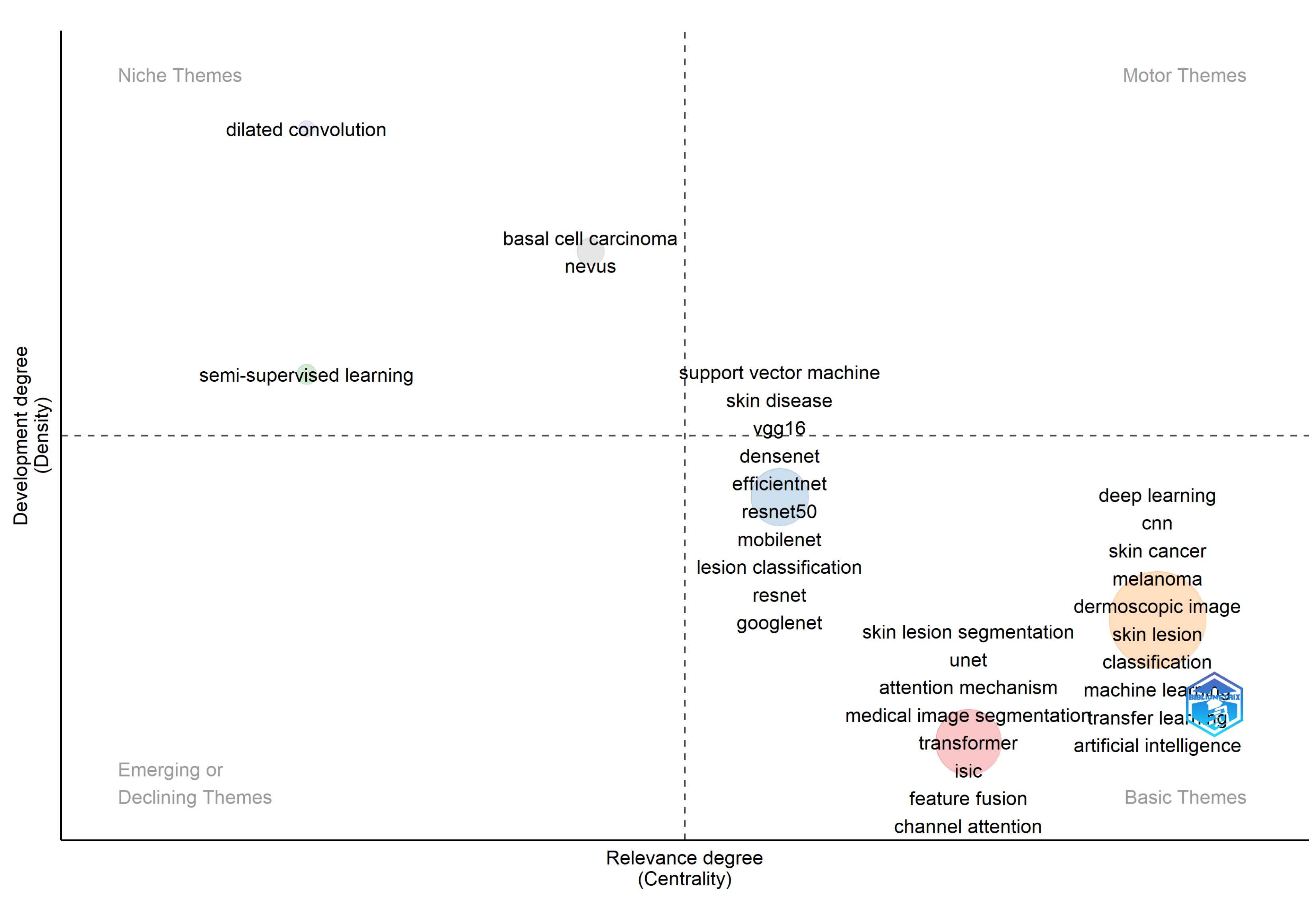

3.5. Keywords and Research Trends

4. Conclusions

References

- Furriel, B.C.R.S.; Oliveira, B.D.; Prôa, R.; Paiva, J.Q.; Loureiro, R.M.; Calixto, W.P.; Reis, M.R.C.; Giavina-Bianchi, M. Artificial intelligence for skin cancer detection and classification for clinical environment: a systematic review. Frontiers in Medicine 2024, 10, 1305954. [Google Scholar] [CrossRef] [PubMed]

- Brancaccio, G.; Balato, A.; Malvehy, J.; Puig, S.; Argenziano, G.; Kittler, H. Artificial Intelligence in Skin Cancer Diagnosis: A Reality Check. Journal of Investigative Dermatology 2024, 144, 492–499. [Google Scholar] [CrossRef] [PubMed]

- Wei, M.L.; Tada, M.; So, A.; Torres, R. Artificial intelligence and skin cancer. Frontiers in Medicine 2024, 11, 1331895. [Google Scholar] [CrossRef] [PubMed]

- Celebi, M.E.; Codella, N.; Halpern, A. Dermoscopy Image Analysis: Overview and Future Directions. IEEE Journal of Biomedical and Health Informatics 2019, 23, 474–478. [Google Scholar] [CrossRef]

- Stafford, H.; Buell, J.; Chiang, E.; Ramesh, U.; Migden, M.; Nagarajan, P.; Amit, M.; Yaniv, D. Non-Melanoma Skin Cancer Detection in the Age of Advanced Technology: A Review. Cancers 2023, 15, 3094. [Google Scholar] [CrossRef]

- Debelee, T.G. Skin Lesion Classification and Detection Using Machine Learning Techniques: A Systematic Review. Diagnostics 2023, 13. [Google Scholar] [CrossRef]

- Chu, Y.S.; An, H.G.; Oh, B.H.; Yang, S. Artificial Intelligence in Cutaneous Oncology. Frontiers in Medicine 2020, 7, 318. [Google Scholar] [CrossRef]

- Choy, S.P.; Kim, B.J.; Paolino, A.; Tan, W.R.; Lim, S.M.L.; Seo, J.; Tan, S.P.; Francis, L.; Tsakok, T.; Simpson, M.; Barker, J.N.W.N.; Lynch, M.D.; Corbett, M.S.; Smith, C.H.; Mahil, S.K. Systematic review of deep learning image analyses for the diagnosis and monitoring of skin disease. npj Digital Medicine 2023, 6, 180. [Google Scholar] [CrossRef]

- Hauser, K.; Kurz, A.; Haggenmüller, S.; Maron, R.C.; Von Kalle, C.; Utikal, J.S.; Meier, F.; Hobelsberger, S.; Gellrich, F.F.; Sergon, M.; Hauschild, A.; French, L.E.; Heinzerling, L.; Schlager, J.G.; Ghoreschi, K.; Schlaak, M.; Hilke, F.J.; Poch, G.; Kutzner, H.; Berking, C.; Heppt, M.V.; Erdmann, M.; Haferkamp, S.; Schadendorf, D.; Sondermann, W.; Goebeler, M.; Schilling, B.; Kather, J.N.; Fröhling, S.; Lipka, D.B.; Hekler, A.; Krieghoff-Henning, E.; Brinker, T.J. Explainable artificial intelligence in skin cancer recognition: A systematic review. European Journal of Cancer 2022, 167, 54–69. [Google Scholar] [CrossRef]

- Takiddin, A.; Schneider, J.; Yang, Y.; Abd-Alrazaq, A.; Househ, M. Artificial Intelligence for Skin Cancer Detection: Scoping Review. Journal of Medical Internet Research 2021, 23, e22934. [Google Scholar] [CrossRef]

- Khattar, S.; Kaur, R. Computer assisted diagnosis of skin cancer: A survey and future recommendations. Computers and Electrical Engineering 2022, 104, 108431. [Google Scholar] [CrossRef]

- Painuli, D.; Bhardwaj, S.; Köse, U. Recent advancement in cancer diagnosis using machine learning and deep learning techniques: A comprehensive review. Computers in Biology and Medicine 2022, 146, 105580. [Google Scholar] [CrossRef] [PubMed]

- Brinker, T.J.; Hekler, A.; Enk, A.H.; Von Kalle, C. Enhanced classifier training to improve precision of a convolutional neural network to identify images of skin lesions. PLOS ONE 2019, 14, e0218713. [Google Scholar] [CrossRef] [PubMed]

- Maron, R.C.; Hekler, A.; Krieghoff-Henning, E.; Schmitt, M.; Schlager, J.G.; Utikal, J.S.; Brinker, T.J. Reducing the Impact of Confounding Factors on Skin Cancer Classification via Image Segmentation: Technical Model Study. Journal of Medical Internet Research 2021, 23, e21695. [Google Scholar] [CrossRef]

- Schneider, L.; Wies, C.; Krieghoff-Henning, E.I.; Bucher, T.C.; Utikal, J.S.; Schadendorf, D.; Brinker, T.J. Multimodal integration of image, epigenetic and clinical data to predict BRAF mutation status in melanoma. European Journal of Cancer 2023, 183, 131–138. [Google Scholar] [CrossRef]

- Brinker, T.J.; Hekler, A.; Hauschild, A.; Berking, C.; Schilling, B.; Enk, A.H.; Haferkamp, S.; Karoglan, A.; Von Kalle, C.; Weichenthal, M.; Sattler, E.; Schadendorf, D.; Gaiser, M.R.; Klode, J.; Utikal, J.S. Comparing artificial intelligence algorithms to 157 German dermatologists: the melanoma classification benchmark. European Journal of Cancer 2019, 111, 30–37. [Google Scholar] [CrossRef]

- Brinker, T.J.; Hekler, A.; Enk, A.H.; Klode, J.; Hauschild, A.; Berking, C.; Schilling, B.; Haferkamp, S.; Schadendorf, D.; Holland-Letz, T.; Utikal, J.S.; Von Kalle, C.; Ludwig-Peitsch, W.; Sirokay, J.; Heinzerling, L.; Albrecht, M.; Baratella, K.; Bischof, L.; Chorti, E.; Dith, A.; Drusio, C.; Giese, N.; Gratsias, E.; Griewank, K.; Hallasch, S.; Hanhart, Z.; Herz, S.; Hohaus, K.; Jansen, P.; Jockenhöfer, F.; Kanaki, T.; Knispel, S.; Leonhard, K.; Martaki, A.; Matei, L.; Matull, J.; Olischewski, A.; Petri, M.; Placke, J.M.; Raub, S.; Salva, K.; Schlott, S.; Sody, E.; Steingrube, N.; Stoffels, I.; Ugurel, S.; Zaremba, A.; Gebhardt, C.; Booken, N.; Christolouka, M.; Buder-Bakhaya, K.; Bokor-Billmann, T.; Enk, A.; Gholam, P.; Hänßle, H.; Salzmann, M.; Schäfer, S.; Schäkel, K.; Schank, T.; Bohne, A.S.; Deffaa, S.; Drerup, K.; Egberts, F.; Erkens, A.S.; Ewald, B.; Falkvoll, S.; Gerdes, S.; Harde, V.; Hauschild, A.; Jost, M.; Kosova, K.; Messinger, L.; Metzner, M.; Morrison, K.; Motamedi, R.; Pinczker, A.; Rosenthal, A.; Scheller, N.; Schwarz, T.; Stölzl, D.; Thielking, F.; Tomaschewski, E.; Wehkamp, U.; Weichenthal, M.; Wiedow, O.; Bär, C.M.; Bender-Säbelkampf, S.; Horbrügger, M.; Karoglan, A.; Kraas, L.; Faulhaber, J.; Geraud, C.; Guo, Z.; Koch, P.; Linke, M.; Maurier, N.; Müller, V.; Thomas, B.; Utikal, J.S.; Alamri, A.S.M.; Baczako, A.; Berking, C.; Betke, M.; Haas, C.; Hartmann, D.; Heppt, M.V.; Kilian, K.; Krammer, S.; Lapczynski, N.L.; Mastnik, S.; Nasifoglu, S.; Ruini, C.; Sattler, E.; Schlaak, M.; Wolff, H.; Achatz, B.; Bergbreiter, A.; Drexler, K.; Ettinger, M.; Haferkamp, S.; Halupczok, A.; Hegemann, M.; Dinauer, V.; Maagk, M.; Mickler, M.; Philipp, B.; Wilm, A.; Wittmann, C.; Gesierich, A.; Glutsch, V.; Kahlert, K.; Kerstan, A.; Schilling, B.; Schrüfer, P. Deep learning outperformed 136 of 157 dermatologists in a head-to-head dermoscopic melanoma image classification task. European Journal of Cancer 2019, 113, 47–54. [Google Scholar] [CrossRef]

- Brinker, T.J.; Hekler, A.; Enk, A.H.; Klode, J.; Hauschild, A.; Berking, C.; Schilling, B.; Haferkamp, S.; Schadendorf, D.; Fröhling, S.; Utikal, J.S.; Von Kalle, C.; Ludwig-Peitsch, W.; Sirokay, J.; Heinzerling, L.; Albrecht, M.; Baratella, K.; Bischof, L.; Chorti, E.; Dith, A.; Drusio, C.; Giese, N.; Gratsias, E.; Griewank, K.; Hallasch, S.; Hanhart, Z.; Herz, S.; Hohaus, K.; Jansen, P.; Jockenhöfer, F.; Kanaki, T.; Knispel, S.; Leonhard, K.; Martaki, A.; Matei, L.; Matull, J.; Olischewski, A.; Petri, M.; Placke, J.M.; Raub, S.; Salva, K.; Schlott, S.; Sody, E.; Steingrube, N.; Stoffels, I.; Ugurel, S.; Sondermann, W.; Zaremba, A.; Gebhardt, C.; Booken, N.; Christolouka, M.; Buder-Bakhaya, K.; Bokor-Billmann, T.; Enk, A.; Gholam, P.; Hänßle, H.; Salzmann, M.; Schäfer, S.; Schäkel, K.; Schank, T.; Bohne, A.S.; Deffaa, S.; Drerup, K.; Egberts, F.; Erkens, A.S.; Ewald, B.; Falkvoll, S.; Gerdes, S.; Harde, V.; Hauschild, A.; Jost, M.; Kosova, K.; Messinger, L.; Metzner, M.; Morrison, K.; Motamedi, R.; Pinczker, A.; Rosenthal, A.; Scheller, N.; Schwarz, T.; Stölzl, D.; Thielking, F.; Tomaschewski, E.; Wehkamp, U.; Weichenthal, M.; Wiedow, O.; Bär, C.M.; Bender-Säbelkampf, S.; Horbrügger, M.; Karoglan, A.; Kraas, L.; Faulhaber, J.; Geraud, C.; Guo, Z.; Koch, P.; Linke, M.; Maurier, N.; Müller, V.; Thomas, B.; Utikal, J.S.; Alamri, A.S.M.; Baczako, A.; Berking, C.; Betke, M.; Haas, C.; Hartmann, D.; Heppt, M.V.; Kilian, K.; Krammer, S.; Lapczynski, N.L.; Mastnik, S.; Nasifoglu, S.; Ruini, C.; Sattler, E.; Schlaak, M.; Wolff, H.; Achatz, B.; Bergbreiter, A.; Drexler, K.; Ettinger, M.; Haferkamp, S.; Halupczok, A.; Hegemann, M.; Dinauer, V.; Maagk, M.; Mickler, M.; Philipp, B.; Wilm, A.; Wittmann, C.; Gesierich, A.; Glutsch, V.; Kahlert, K.; Kerstan, A.; Schilling, B.; Schrüfer, P. A convolutional neural network trained with dermoscopic images performed on par with 145 dermatologists in a clinical melanoma image classification task. European Journal of Cancer 2019, 111, 148–154. [Google Scholar] [CrossRef]

- Attique Khan, M.; Sharif, M.; Akram, T.; Kadry, S.; Hsu, C.H. A two-stream deep neural network-based intelligent system for complex skin cancer types classification. International Journal of Intelligent Systems 2022, 37, 10621–10649. [Google Scholar] [CrossRef]

- Zahoor, S.; Lali, I.U.; Khan, M.A.; Javed, K.; Mehmood, W. Breast cancer detection and classification using traditional computer vision techniques: A comprehensive review. Current Medical Imaging 2020, 16, 1187–1200. [Google Scholar] [CrossRef]

- Malik, S.; Akram, T.; Awais, M.; Khan, M.A.; Hadjouni, M.; Elmannai, H.; Alasiry, A.; Marzougui, M.; Tariq, U. An Improved Skin Lesion Boundary Estimation for Enhanced-Intensity Images Using Hybrid Metaheuristics. Diagnostics 2023, 13. [Google Scholar] [CrossRef] [PubMed]

- Saba, T.; Khan, M.A.; Rehman, A.; Marie-Sainte, S.L. Region Extraction and Classification of Skin Cancer: A Heterogeneous framework of Deep CNN Features Fusion and Reduction. Journal of Medical Systems 2019, 43. [Google Scholar] [CrossRef] [PubMed]

- Bibi, S.; Khan, M.A.; Shah, J.H.; Damaševičius, R.; Alasiry, A.; Marzougui, M.; Alhaisoni, M.; Masood, A. MSRNet: Multiclass Skin Lesion Recognition Using Additional Residual Block Based Fine-Tuned Deep Models Information Fusion and Best Feature Selection. Diagnostics 2023, 13. [Google Scholar] [CrossRef] [PubMed]

- Arshad, M.; Khan, M.A.; Tariq, U.; Armghan, A.; Alenezi, F.; Younus Javed, M.; Aslam, S.M.; Kadry, S. A Computer-Aided Diagnosis System Using Deep Learning for Multiclass Skin Lesion Classification. Computational Intelligence and Neuroscience 2021, 2021. [Google Scholar] [CrossRef]

- Nawaz, M.; Nazir, T.; Khan, M.A.; Alhaisoni, M.; Kim, J.Y.; Nam, Y. MSeg-Net: A Melanoma Mole Segmentation Network Using CornerNet and Fuzzy K-Means Clustering. Computational and Mathematical Methods in Medicine 2022, 2022. [Google Scholar] [CrossRef]

- Nawaz, M.; Nazir, T.; Masood, M.; Ali, F.; Khan, M.A.; Tariq, U.; Sahar, N.; Damaševičius, R. Melanoma segmentation: A framework of improved DenseNet77 and UNET convolutional neural network. International Journal of Imaging Systems and Technology 2022, 32, 2137–2153. [Google Scholar] [CrossRef]

- Hussain, M.; Khan, M.A.; Damaševičius, R.; Alasiry, A.; Marzougui, M.; Alhaisoni, M.; Masood, A. SkinNet-INIO: Multiclass Skin Lesion Localization and Classification Using Fusion-Assisted Deep Neural Networks and Improved Nature-Inspired Optimization Algorithm. Diagnostics 2023, 13. [Google Scholar] [CrossRef]

- Ahmad, N.; Shah, J.H.; Khan, M.A.; Baili, J.; Ansari, G.J.; Tariq, U.; Kim, Y.J.; Cha, J.H. A novel framework of multiclass skin lesion recognition from dermoscopic images using deep learning and explainable AI. Frontiers in Oncology 2023, 13. [Google Scholar] [CrossRef]

- Iqbal, A.; Sharif, M.; Khan, M.A.; Nisar, W.; Alhaisoni, M. FF-UNet: a U-Shaped Deep Convolutional Neural Network for Multimodal Biomedical Image Segmentation. Cognitive Computation 2022, 14, 1287–1302. [Google Scholar] [CrossRef]

- Khan, M.A.; Muhammad, K.; Sharif, M.; Akram, T.; Albuquerque, V.H.C.D. Multi-Class Skin Lesion Detection and Classification via Teledermatology. IEEE Journal of Biomedical and Health Informatics 2021, 25, 4267–4275. [Google Scholar] [CrossRef]

- Khan, M.A.; Sharif, M.; Akram, T.; Damaševičius, R.; Maskeliūnas, R. Skin lesion segmentation and multiclass classification using deep learning features and improved moth flame optimization. Diagnostics 2021, 11. [Google Scholar] [CrossRef] [PubMed]

- Khan, M.A.; Akram, T.; Zhang, Y.D.; Sharif, M. Attributes based skin lesion detection and recognition: A mask RCNN and transfer learning-based deep learning framework. Pattern Recognition Letters 2021, 143, 58–66. [Google Scholar] [CrossRef]

- Khan, M.A.; Akram, T.; Sharif, M.; Javed, K.; Rashid, M.; Bukhari, S.A.C. An integrated framework of skin lesion detection and recognition through saliency method and optimal deep neural network features selection. Neural Computing and Applications 2020, 32, 15929–15948. [Google Scholar] [CrossRef]

- Khan, M.A.; Sharif, M.; Akram, T.; Bukhari, S.A.C.; Nayak, R.S. Developed Newton-Raphson based deep features selection framework for skin lesion recognition. Pattern Recognition Letters 2020, 129, 293–303. [Google Scholar] [CrossRef]

- Afza, F.; Sharif, M.; Khan, M.A.; Tariq, U.; Yong, H.S.; Cha, J. Multiclass Skin Lesion Classification Using Hybrid Deep Features Selection and Extreme Learning Machine. Sensors 2022, 22. [Google Scholar] [CrossRef]

- Afza, F.; Sharif, M.; Mittal, M.; Khan, M.A.; Jude Hemanth, D. A hierarchical three-step superpixels and deep learning framework for skin lesion classification. Methods 2022, 202, 88–102. [Google Scholar] [CrossRef]

- Khan, M.A.; Akram, T.; Zhang, Y.D.; Alhaisoni, M.; Al Hejaili, A.; Shaban, K.A.; Tariq, U.; Zayyan, M.H. SkinNet-ENDO: Multiclass skin lesion recognition using deep neural network and Entropy-Normal distribution optimization algorithm with ELM. International Journal of Imaging Systems and Technology 2023, 33, 1275–1292. [Google Scholar] [CrossRef]

- Khan, M.A.; Akram, T.; Sharif, M.; Kadry, S.; Nam, Y. Computer Decision Support System for Skin Cancer Localization and Classification. Computers, Materials and Continua 2021, 68, 1041–1064. [Google Scholar] [CrossRef]

- Malik, S.; Akram, T.; Ashraf, I.; Rafiullah, M.; Ullah, M.; Tanveer, J. A Hybrid Preprocessor DE-ABC for Efficient Skin-Lesion Segmentation with Improved Contrast. Diagnostics 2022, 12. [Google Scholar] [CrossRef]

- Malik, S.; Islam, S.M.R.; Akram, T.; Naqvi, S.R.; Alghamdi, N.S.; Baryannis, G. A novel hybrid meta-heuristic contrast stretching technique for improved skin lesion segmentation. Computers in Biology and Medicine 2022, 151. [Google Scholar] [CrossRef]

- Akram, T.; Lodhi, H.M.J.; Naqvi, S.R.; Naeem, S.; Alhaisoni, M.; Ali, M.; Haider, S.A.; Qadri, N.N. A multilevel features selection framework for skin lesion classification. Human-centric Computing and Information Sciences 2020, 10. [Google Scholar] [CrossRef]

- Anjum, M.A.; Amin, J.; Sharif, M.; Khan, H.U.; Malik, M.S.A.; Kadry, S. Deep Semantic Segmentation and Multi-Class Skin Lesion Classification Based on Convolutional Neural Network. IEEE Access 2020, 8, 129668–129678. [Google Scholar] [CrossRef]

- Kaur, R.; Gholamhosseini, H.; Sinha, R. Synthetic Images Generation Using Conditional Generative Adversarial Network for Skin Cancer Classification. 2021, Vol. 2021-December, pp. 381–386. [CrossRef]

- Ali, A.A.; Taha, R.E.; Kaur, R.; Afifi, S.M. Multi-Class Classification of Melanoma on an Edge Device. 2023, pp. 46–51. [CrossRef]

- Dawod, M.I.; Taha, R.; Kaur, R.; Afifi, S.M. Real-time Classification of Skin Cancer on an Edge Device. 2023, pp. 184–191. [CrossRef]

- Kaur, R.; Gholamhosseini, H.; Sinha, R.; Lindén, M. Melanoma Classification Using a Novel Deep Convolutional Neural Network with Dermoscopic Images. Sensors 2022, 22. [Google Scholar] [CrossRef] [PubMed]

- Kaur, R.; GholamHosseini, H.; Sinha, R. Hairlines removal and low contrast enhancement of melanoma skin images using convolutional neural network with aggregation of contextual information. Biomedical Signal Processing and Control 2022, 76. [Google Scholar] [CrossRef]

- Kaur, R.; GholamHosseini, H.; Sinha, R.; Lindén, M. Automatic lesion segmentation using atrous convolutional deep neural networks in dermoscopic skin cancer images. BMC Medical Imaging 2022, 22. [Google Scholar] [CrossRef]

- Kaur, R.; Hosseini, H.G.; Sinha, R. Lesion Border Detection of Skin Cancer Images Using Deep Fully Convolutional Neural Network with Customized Weights. 2021, pp. 3035–3038. [CrossRef]

- Kaur, R.; GholamHosseini, H.; Sinha, R. Skin lesion segmentation using an improved framework of encoder-decoder based convolutional neural network. International Journal of Imaging Systems and Technology 2022, 32, 1143–1158. [Google Scholar] [CrossRef]

- Kaur, R.; Gholamhosseini, H. Analyzing the Impact of Image Denoising and Segmentation on Melanoma Classification Using Convolutional Neural Networks. 2023. [CrossRef]

- Fogelberg, K.; Chamarthi, S.; Maron, R.C.; Niebling, J.; Brinker, T.J. Domain shifts in dermoscopic skin cancer datasets: Evaluation of essential limitations for clinical translation. New Biotechnology 2023, 76, 106–117. [Google Scholar] [CrossRef]

- Bibi, A.; Khan, M.A.; Javed, M.Y.; Tariq, U.; Kang, B.G.; Nam, Y.; Mostafa, R.R.; Sakr, R.H. Skin lesion segmentation and classification using conventional and deep learning based framework. Computers, Materials and Continua 2022, 71, 2477–2495. [Google Scholar] [CrossRef]

- Kalyani, K.; Althubiti, S.A.; Ahmed, M.A.; Lydia, E.L.; Kadry, S.; Han, N.; Nam, Y. Arithmetic Optimization with Ensemble Deep Transfer Learning Based Melanoma Classification. Computers, Materials and Continua 2023, 75, 149–164. [Google Scholar] [CrossRef]

- Kadry, S.; Taniar, D.; Damasevicius, R.; Rajinikanth, V.; Lawal, I.A. Extraction of Abnormal Skin Lesion from Dermoscopy Image using VGG-SegNet. 2021. [CrossRef]

- Cheng, X.; Kadry, S.; Meqdad, M.N.; Crespo, R.G. CNN supported framework for automatic extraction and evaluation of dermoscopy images. Journal of Supercomputing 2022, 78, 17114–17131. [Google Scholar] [CrossRef]

- Jiang, Y.; Dong, J.; Cheng, T.; Zhang, Y.; Lin, X.; Liang, J. iU-Net: a hybrid structured network with a novel feature fusion approach for medical image segmentation. BioData Mining 2023, 16. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Z.; Jiang, Y.; Qiao, H.; Wang, M.; Yan, W.; Chen, J. SIL-Net: A Semi-Isotropic L-shaped network for dermoscopic image segmentation. Computers in Biology and Medicine 2022, 150. [Google Scholar] [CrossRef] [PubMed]

- Jiang, Y.; Qiao, H.; Zhang, Z.; Wang, M.; Yan, W.; Chen, J. MDSC-Net: A multi-scale depthwise separable convolutional neural network for skin lesion segmentation. IET Image Processing 2023, 17, 3713–3727. [Google Scholar] [CrossRef]

- Jiang, Y.; Cao, S.; Tao, S.; Zhang, H. Skin Lesion Segmentation Based on Multi-Scale Attention Convolutional Neural Network. IEEE Access 2020, 8, 122811–122825. [Google Scholar] [CrossRef]

- Jiang, Y.; Dong, J.; Zhang, Y.; Cheng, T.; Lin, X.; Liang, J. PCF-Net: Position and context information fusion attention convolutional neural network for skin lesion segmentation. Heliyon 2023, 9. [Google Scholar] [CrossRef]

- Jiang, Y.; Cheng, T.; Dong, J.; Liang, J.; Zhang, Y.; Lin, X.; Yao, H. Dermoscopic image segmentation based on Pyramid Residual Attention Module. PLoS ONE 2022, 17. [Google Scholar] [CrossRef]

- Jiang, Y.; Wang, M.; Zhang, Z.; Qiao, H.; Yan, W.; Chen, J. CTDS-Net: CNN-Transformer Fusion Network for Dermoscopic Image Segmentation. 2023, pp. 141–150. [CrossRef]

- Maron, R.C.; Haggenmüller, S.; von Kalle, C.; Utikal, J.S.; Meier, F.; Gellrich, F.F.; Hauschild, A.; French, L.E.; Schlaak, M.; Ghoreschi, K.; Kutzner, H.; Heppt, M.V.; Haferkamp, S.; Sondermann, W.; Schadendorf, D.; Schilling, B.; Hekler, A.; Krieghoff-Henning, E.; Kather, J.N.; Fröhling, S.; Lipka, D.B.; Brinker, T.J. Robustness of convolutional neural networks in recognition of pigmented skin lesions. European Journal of Cancer 2021, 145, 81–91. [Google Scholar] [CrossRef]

- Li, X.; Yu, L.; Chen, H.; Fu, C.W.; Xing, L.; Heng, P.A. Transformation-Consistent Self-Ensembling Model for Semisupervised Medical Image Segmentation. IEEE Transactions on Neural Networks and Learning Systems 2021, 32, 523–534. [Google Scholar] [CrossRef]

- Xie, Y.; Zhang, J.; Xia, Y.; Shen, C. A Mutual Bootstrapping Model for Automated Skin Lesion Segmentation and Classification. IEEE Transactions on Medical Imaging 2020, 39, 2482–2493. [Google Scholar] [CrossRef]

- Wu, H.; Chen, S.; Chen, G.; Wang, W.; Lei, B.; Wen, Z. FAT-Net: Feature adaptive transformers for automated skin lesion segmentation. Medical Image Analysis 2022, 76, 102327. [Google Scholar] [CrossRef]

- Hosny, K.M.; Kassem, M.A.; Foaud, M.M. Classification of skin lesions using transfer learning and augmentation with Alex-net. PLOS ONE 2019, 14, e0217293. [Google Scholar] [CrossRef] [PubMed]

- Zhang, J.; Xie, Y.; Xia, Y.; Shen, C. Attention Residual Learning for Skin Lesion Classification. IEEE Transactions on Medical Imaging 2019, 38, 2092–2103. [Google Scholar] [CrossRef] [PubMed]

- Gu, R.; Wang, G.; Song, T.; Huang, R.; Aertsen, M.; Deprest, J.; Ourselin, S.; Vercauteren, T.; Zhang, S. CA-Net: Comprehensive Attention Convolutional Neural Networks for Explainable Medical Image Segmentation. IEEE Transactions on Medical Imaging 2021, 40, 699–711. [Google Scholar] [CrossRef] [PubMed]

- Al-masni, M.A.; Kim, D.H.; Kim, T.S. Multiple skin lesions diagnostics via integrated deep convolutional networks for segmentation and classification. Computer Methods and Programs in Biomedicine 2020, 190, 105351. [Google Scholar] [CrossRef]

- Panayides, A.S.; Amini, A.; Filipovic, N.D.; Sharma, A.; Tsaftaris, S.A.; Young, A.; Foran, D.; Do, N.; Golemati, S.; Kurc, T.; Huang, K.; Nikita, K.S.; Veasey, B.P.; Zervakis, M.; Saltz, J.H.; Pattichis, C.S. AI in Medical Imaging Informatics: Current Challenges and Future Directions. IEEE Journal of Biomedical and Health Informatics 2020, 24, 1837–1857. [Google Scholar] [CrossRef]

- Goyal, M.; Oakley, A.; Bansal, P.; Dancey, D.; Yap, M.H. Skin Lesion Segmentation in Dermoscopic Images With Ensemble Deep Learning Methods. IEEE Access 2020, 8, 4171–4181. [Google Scholar] [CrossRef]

| No | Document Type | #Docs |
|---|---|---|
| 1 | Article | 1,098 |
| 2 | Conference paper | 556 |
| 3 | Review | 41 |
| 4 | Letter | 1 |
| 5 | Note | 1 |
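
The document-type counts can be turned into proportions with a few lines of arithmetic. The sketch below is illustrative only and assumes the five listed types make up the whole retrieved corpus, which the table itself does not state; `DOC_TYPES` and `type_shares` are hypothetical names.

```python
# Counts copied from the document-type table.
# Assumption: these five types cover every retrieved record.
DOC_TYPES = {
    "Article": 1098,
    "Conference paper": 556,
    "Review": 41,
    "Letter": 1,
    "Note": 1,
}


def type_shares(counts: dict[str, int]) -> dict[str, float]:
    """Return each document type's fraction of the summed total."""
    total = sum(counts.values())
    return {doc_type: n / total for doc_type, n in counts.items()}


if __name__ == "__main__":
    print(f"Total records: {sum(DOC_TYPES.values())}")
    for doc_type, share in type_shares(DOC_TYPES).items():
        # The '%' format spec multiplies by 100 and appends a percent sign.
        print(f"{doc_type:<17} {share:7.2%}")
```

Under that assumption the listed types sum to 1,697 records, of which roughly 64.7% are journal articles, 32.8% conference papers and 2.4% reviews.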

| No | Source | #Docs |
|---|---|---|
| 1 | IEEE Access (IEEE) | 40 |
| 2 | Computers in Biology and Medicine (Elsevier) | 35 |
| 3 | Diagnostics (MDPI) | 34 |
| 4 | Multimedia Tools and Applications (Springer) | 29 |
| 5 | Biomedical Signal Processing and Control (Elsevier) | 25 |
| 6 | Computer Methods and Programs in Biomedicine (Elsevier) | 23 |
| 7 | Sensors (MDPI) | 16 |
| 8 | Cancers (MDPI) | 14 |
| 9 | International Journal of Imaging Systems and Technology (John Wiley and Sons Inc.) | 14 |
| 10 | Applied Sciences (MDPI) | 13 |
| 11 | Medical Image Analysis (Elsevier) | 13 |
| 12 | Expert Systems with Applications (Elsevier) | 12 |
| 13 | Computers, Materials and Continua (Tech Science Press) | 12 |
| 14 | IEEE Journal of Biomedical and Health Informatics (IEEE) | 12 |
| 15 | Frontiers in Medicine (Frontiers Media) | 9 |

| No | Country | #Docs |
|---|---|---|
| 1 | India | 316 |
| 2 | China | 275 |
| 3 | United States | 155 |
| 4 | Saudi Arabia | 87 |
| 5 | Pakistan | 84 |
| 6 | United Kingdom | 66 |
| 7 | South Korea | 49 |
| 8 | Egypt | 45 |
| 9 | Germany | 45 |
| 10 | Canada | 41 |
| 11 | Turkey | 40 |
| 12 | Australia | 39 |
| 13 | Bangladesh | 38 |
| 14 | Spain | 34 |
| 15 | Italy | 32 |
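
A cumulative share shows how concentrated the country output is. This is a minimal sketch; `COUNTRIES` and `cumulative_shares` are hypothetical names. It assumes the counts are per-country attributions (in typical database exports a paper with co-authors from two countries is counted once for each), and it covers only the fifteen countries listed, so the denominator is the 1,346 listed attributions rather than the number of papers.

```python
from itertools import accumulate

# (country, #Docs) pairs copied from the country table, already sorted by count.
# Assumption: a multi-country paper contributes to every affiliated country.
COUNTRIES = [
    ("India", 316), ("China", 275), ("United States", 155),
    ("Saudi Arabia", 87), ("Pakistan", 84), ("United Kingdom", 66),
    ("South Korea", 49), ("Egypt", 45), ("Germany", 45),
    ("Canada", 41), ("Turkey", 40), ("Australia", 39),
    ("Bangladesh", 38), ("Spain", 34), ("Italy", 32),
]


def cumulative_shares(rows: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Fraction of the listed counts covered by the first k countries, for each k."""
    total = sum(n for _, n in rows)
    running = accumulate(n for _, n in rows)  # running totals 316, 591, 746, ...
    return [(name, c / total) for (name, _), c in zip(rows, running)]


if __name__ == "__main__":
    for name, share in cumulative_shares(COUNTRIES):
        print(f"{name:<15} {share:6.1%}")
```

Run on the table values, India, China and the United States together account for about 55.4% of the listed attributions.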

| No | Author | #Docs | Documents | Country |
|---|---|---|---|---|
| 1 | Khan, M.A. | 20 | [19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36] | Pakistan |
| 2 | Akram, T. | 12 | [19,21,30,31,32,33,34,37,38,39,40,41] | Pakistan |
| 3 | Sharif, M. | 9 | [29,30,31,32,33,34,35,38,42] | Pakistan |
| 4 | Kaur, R. | 9 | [43,44,45,46,47,48,49,50,51] | New Zealand |
| 5 | Brinker, T.J. | 9 | [9,13,14,15,16,17,18,52] | Germany |
| 6 | Utikal, J.S. | 7 | [9,14,15,16,17,18] | Germany |
| 7 | Tariq, U. | 7 | [21,24,26,28,35,37,53] | Saudi Arabia |
| 8 | Kadry, S. | 7 | [19,24,38,42,54,55,56] | Norway |
| 9 | Jiang, Y. | 7 | [57,58,59,60,61,62,63] | China |
| 10 | Hekler, A. | 7 | [9,13,14,16,17,18,64] | Germany |

| No | Title | Year | Source | #Cit |
|---|---|---|---|---|
| 1 | Attention Residual Learning for Skin Lesion Classification [69] | 2019 | IEEE Transactions on Medical Imaging | 370 |
| 2 | CA-Net: Comprehensive Attention Convolutional Neural Networks for Explainable Medical Image Segmentation [70] | 2021 | IEEE Transactions on Medical Imaging | 343 |
| 3 | Deep Learning Outperformed 136 of 157 Dermatologists in a Head-to-Head Dermoscopic Melanoma Image Classification Task [17] | 2019 | European Journal of Cancer | 287 |
| 4 | Transformation-Consistent Self-Ensembling Model for Semisupervised Medical Image Segmentation [65] | 2021 | IEEE Transactions on Neural Networks and Learning Systems | 247 |
| 5 | Classification of Skin Lesions Using Transfer Learning and Augmentation with Alex-Net [68] | 2019 | PLoS ONE | 223 |
| 6 | Multiple Skin Lesions Diagnostics via Integrated Deep Convolutional Networks for Segmentation and Classification [71] | 2020 | Computer Methods and Programs in Biomedicine | 221 |
| 7 | A Mutual Bootstrapping Model for Automated Skin Lesion Segmentation and Classification [66] | 2020 | IEEE Transactions on Medical Imaging | 218 |
| 8 | AI in Medical Imaging Informatics: Current Challenges and Future Directions [72] | 2020 | IEEE Journal of Biomedical and Health Informatics | 205 |
| 9 | FAT-Net: Feature Adaptive Transformers for Automated Skin Lesion Segmentation [67] | 2022 | Medical Image Analysis | 198 |
| 10 | Skin Lesion Segmentation in Dermoscopic Images with Ensemble Deep Learning Methods [73] | 2020 | IEEE Access | 189 |
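
Raw citation totals favour older papers. One common normalization divides each total by the number of years the paper has been available. The sketch below assumes citations were counted in 2024 (the preprint's posting year) and includes the publication year itself in the window; the section does not state the actual citation window, so `REFERENCE_YEAR` and the `Paper` class are illustrative assumptions.

```python
from dataclasses import dataclass

# Assumption: citation counts were collected in 2024 (the preprint's posting year).
REFERENCE_YEAR = 2024


@dataclass(frozen=True)
class Paper:
    label: str
    year: int
    citations: int

    def citations_per_year(self, ref_year: int = REFERENCE_YEAR) -> float:
        # Include the publication year, so a paper from ref_year has a 1-year window.
        return self.citations / (ref_year - self.year + 1)


# Year and #Cit columns copied from the top-cited table; labels are abbreviated.
TOP_CITED = [
    Paper("Attention Residual Learning [69]", 2019, 370),
    Paper("CA-Net [70]", 2021, 343),
    Paper("Deep learning vs. 157 dermatologists [17]", 2019, 287),
    Paper("Transformation-consistent self-ensembling [65]", 2021, 247),
    Paper("AlexNet transfer learning [68]", 2019, 223),
    Paper("Integrated segmentation/classification [71]", 2020, 221),
    Paper("Mutual bootstrapping [66]", 2020, 218),
    Paper("AI in medical imaging informatics [72]", 2020, 205),
    Paper("FAT-Net [67]", 2022, 198),
    Paper("Ensemble segmentation [73]", 2020, 189),
]

if __name__ == "__main__":
    # Re-rank by citations per year instead of raw totals.
    ranked = sorted(TOP_CITED, key=lambda p: p.citations_per_year(), reverse=True)
    for p in ranked:
        print(f"{p.citations_per_year():6.2f}  {p.label}")
```

Under this assumption CA-Net [70] (about 85.8 citations per year) and FAT-Net [67] (66.0) rank ahead of Attention Residual Learning [69] (about 61.7), which leads on raw citations.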

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).