Submitted: 17 December 2024

Posted: 23 December 2024

Abstract

Keywords:

1. Introduction

2. OpenAI O1: Overview and Capabilities

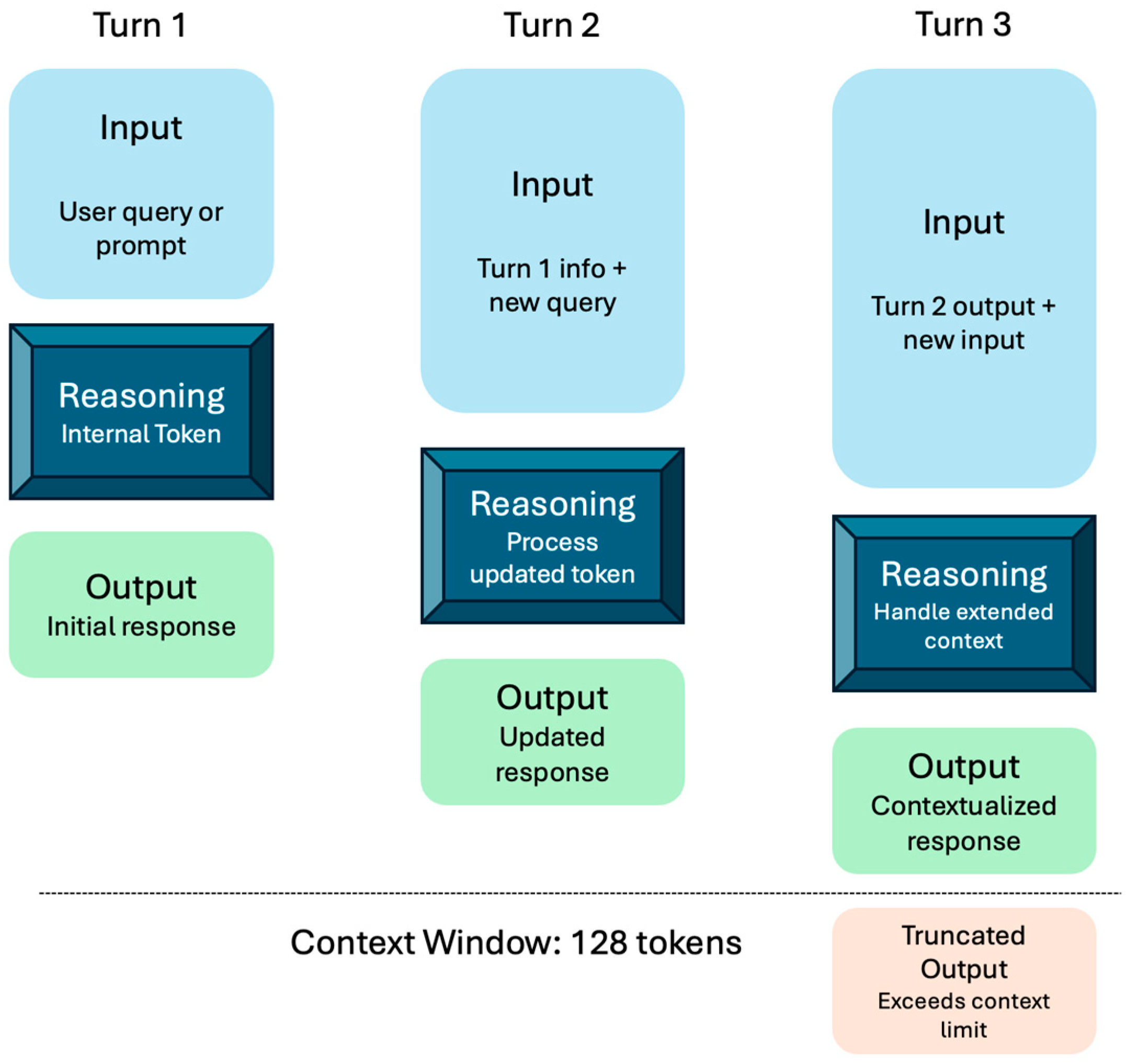

2.1. Reasoning Capabilities of O1

2.2. Prompting Strategies

2.3. Comparison of O1-Preview and O1-Mini

2.4. Key O1 Features and Improvements

2.5. Comparison with Predecessor Model

2.6. Application Areas

3. Materials & Methods

4. Results

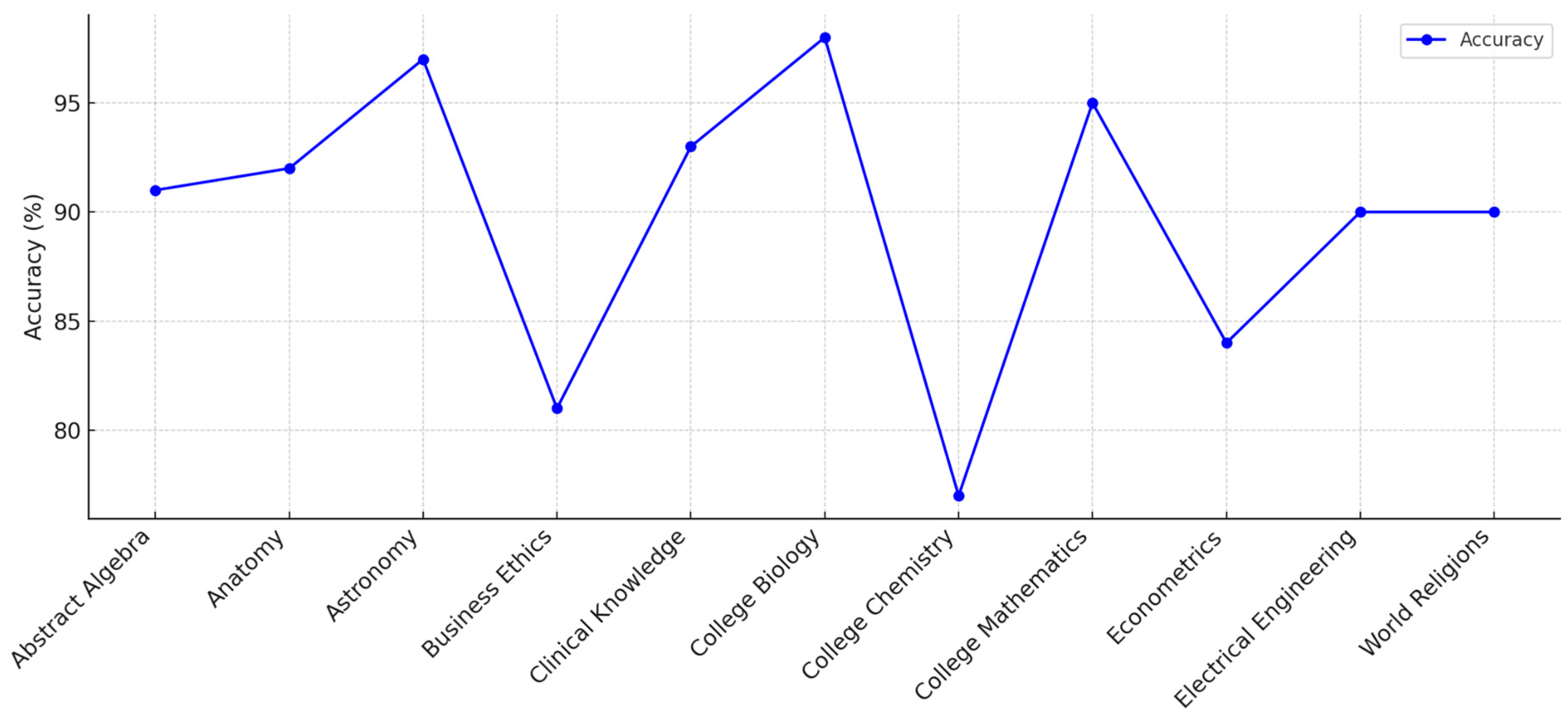

4.1. Experiment Set 1

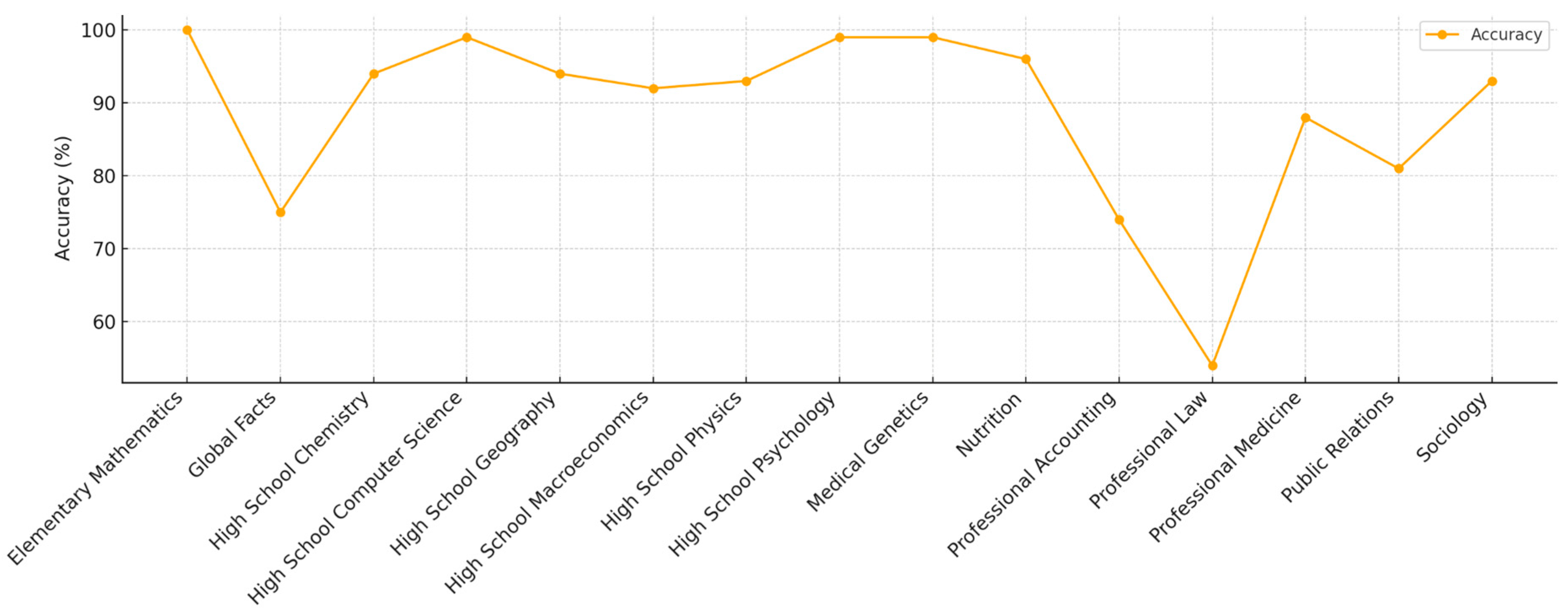

4.2. Experiment Set 2

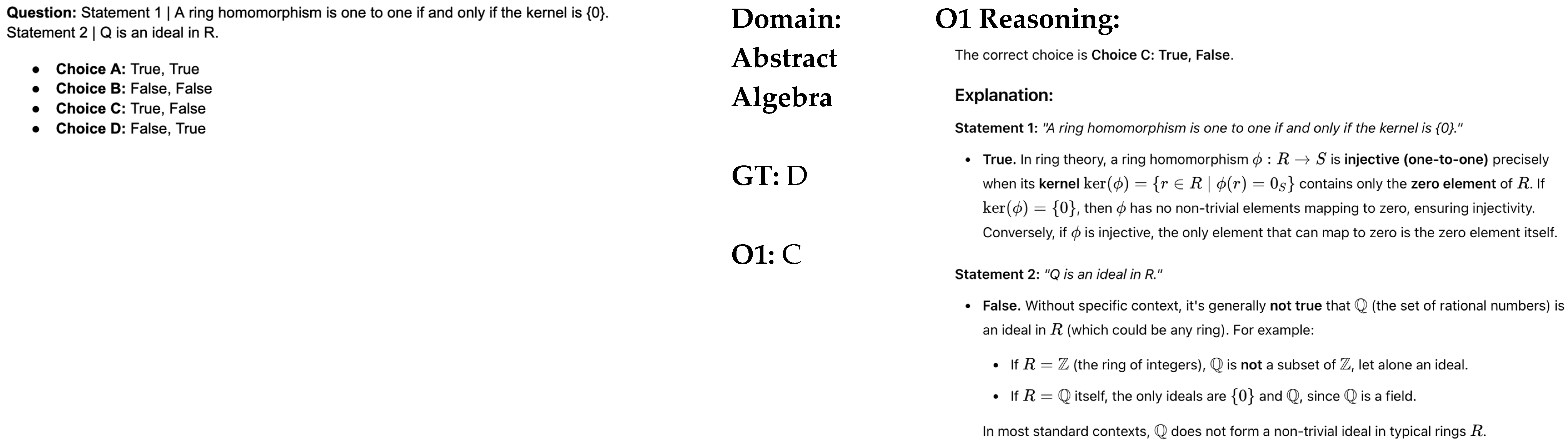

4.3. Sample Responses

5. Conclusions

Author statements

- Ethical Approval

- Ethical approval was not required because no personal data were used. All analyses presented were aggregated.

- Competing Interests

- None declared.

References

- E. Kasneci et al., “ChatGPT for good? On opportunities and challenges of large language models for education,” Learn. Individ. Differ., vol. 103, p. 102274, Apr. 2023.

- S. Shahriar, N. Al Roken, and I. Zualkernan, “Classification of Arabic Poetry Emotions Using Deep Learning,” Computers, vol. 12, no. 5, Art. no. 5, May 2023.

- S. Shahriar et al., “Putting GPT-4o to the Sword: A Comprehensive Evaluation of Language, Vision, Speech, and Multimodal Proficiency,” Appl. Sci., vol. 14, no. 17, Art. no. 17, Jan. 2024.

- K. Hayawi, S. Shahriar, H. Alashwal, and M. A. Serhani, “Generative AI and large language models: A new frontier in reverse vaccinology,” Inform. Med. Unlocked, vol. 48, p. 101533, Jan. 2024.

- L. Yan et al., “Practical and ethical challenges of large language models in education: A systematic scoping review,” Br. J. Educ. Technol., vol. 55, no. 1, pp. 90–112, 2024.

- A. J. Thirunavukarasu, D. S. J. Ting, K. Elangovan, L. Gutierrez, T. F. Tan, and D. S. W. Ting, “Large language models in medicine,” Nat. Med., vol. 29, no. 8, pp. 1930–1940, Aug. 2023.

- S. Shahriar and N. Al Roken, “How can generative adversarial networks impact computer generated art? Insights from poetry to melody conversion,” Int. J. Inf. Manag. Data Insights, vol. 2, no. 1, p. 100066, Apr. 2022.

- L. Zhao et al., “When brain-inspired AI meets AGI,” Meta-Radiol., vol. 1, no. 1, p. 100005, Jun. 2023.

- H. Zhao, A. Chen, X. Sun, H. Cheng, and J. Li, “All in One and One for All: A Simple yet Effective Method towards Cross-domain Graph Pretraining,” in Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, in KDD ’24. New York, NY, USA: Association for Computing Machinery, Aug. 2024, pp. 4443–4454.

- “Introducing OpenAI o1.” Accessed: Dec. 06, 2024. [Online]. Available: https://openai.com/o1/.

- T. Zhong et al., “Evaluation of OpenAI o1: Opportunities and Challenges of AGI,” Sep. 27, 2024, arXiv:2409.18486.

- W. Fan et al., “A Survey on RAG Meeting LLMs: Towards Retrieval-Augmented Large Language Models,” in Proceedings of the 30th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, in KDD ’24. New York, NY, USA: Association for Computing Machinery, Aug. 2024, pp. 6491–6501.

- P. Lewis et al., “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks,” in Advances in Neural Information Processing Systems, Curran Associates, Inc., 2020, pp. 9459–9474. Accessed: Dec. 06, 2024. [Online]. Available: https://proceedings.neurips.cc/paper/2020/hash/6b493230205f780e1bc26945df7481e5-Abstract.html.

- S. Siriwardhana, R. Weerasekera, E. Wen, T. Kaluarachchi, R. Rana, and S. Nanayakkara, “Improving the Domain Adaptation of Retrieval Augmented Generation (RAG) Models for Open Domain Question Answering,” Trans. Assoc. Comput. Linguist., vol. 11, pp. 1–17, Jan. 2023.

- S. Wu et al., “Retrieval-Augmented Generation for Natural Language Processing: A Survey,” CoRR, Jan. 2024. Accessed: Dec. 06, 2024. [Online]. Available: https://openreview.net/forum?id=bjrU9fIhD3.

- T. Carta, C. Romac, T. Wolf, S. Lamprier, O. Sigaud, and P.-Y. Oudeyer, “Grounding Large Language Models in Interactive Environments with Online Reinforcement Learning,” in Proceedings of the 40th International Conference on Machine Learning, PMLR, Jul. 2023, pp. 3676–3713. Accessed: Dec. 06, 2024. [Online]. Available: https://proceedings.mlr.press/v202/carta23a.html.

- S. Shahriar and K. Hayawi, “Let’s Have a Chat! A Conversation with ChatGPT: Technology, Applications, and Limitations,” Artif. Intell. Appl., vol. 2, no. 1, Art. no. 1, 2024.

- Y. Bai et al., “Training a Helpful and Harmless Assistant with Reinforcement Learning from Human Feedback,” Apr. 12, 2022, arXiv:2204.05862.

- H. Lee et al., “RLAIF: Scaling Reinforcement Learning from Human Feedback with AI Feedback,” Oct. 2023. Accessed: Dec. 06, 2024. [Online]. Available: https://openreview.net/forum?id=AAxIs3D2ZZ.

- D. Hendrycks et al., “Measuring Massive Multitask Language Understanding,” presented at the International Conference on Learning Representations, Oct. 2020. Accessed: Dec. 05, 2024. [Online]. Available: https://openreview.net/forum?id=d7KBjmI3GmQ.

- F. Beeson, leobeeson/llm_benchmarks. (Dec. 05, 2024). Accessed: Dec. 05, 2024. [Online]. Available: https://github.com/leobeeson/llm_benchmarks.

| Model | Context window | Max output tokens | Training data |
|---|---|---|---|
| o1-preview (points to the most recent snapshot of the o1 model: o1-preview-2024-09-12) | 128,000 tokens | 32,768 tokens | Up to Oct 2023 |
| o1-mini (points to the most recent o1-mini snapshot: o1-mini-2024-09-12) | 128,000 tokens | 65,536 tokens | Up to Oct 2023 |

| Feature/Capability | GPT-4 | OpenAI O1 |
|---|---|---|
| Training Efficiency | Predictable scaling; requires large compute resources | Optimized scaling laws with efficient compute usage |
| Domain Generalization | Excels in STEM and general NLP tasks | Balanced performance across STEM, professional, and humanities domains |
| Safety Mechanisms | RLHF reduces risks but is limited in high-stakes applications | Enhanced safety pipeline and adversarial testing for critical domains |
| Context Window | Limited to shorter contexts (~8,000 tokens) | Supports extended context windows (~16,000+ tokens) |
| Multimodal Inputs | Limited to text and visual input | Improved multimodal capabilities for structured data and text integration |
| Multilingual Performance | High performance in English, moderate in low-resource languages | Consistently high performance across multiple languages, including low-resource ones |
| Alignment | Aligned through RLHF; some biases remain | More refined alignment through iterative feedback and training |

| Benchmark | Metric | GPT-4 Accuracy (%) | O1 Accuracy (%) |
|---|---|---|---|
| Multitask Language Understanding (MMLU) | Average Accuracy | 86.4 | 89.2 |
| HumanEval (Python Coding Tasks) | Pass Rate | 67.0 | 73.5 |
| Clinical Knowledge Test | Accuracy | 90.5 | 93.0 |
| Professional Law Test | Accuracy | 50.2 | 54.0 |
| High School Mathematics Test | Accuracy | 95.0 | 98.0 |
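The gaps between the two accuracy columns in the benchmark table above are easier to compare as percentage-point differences. The following is a minimal sketch of that arithmetic, using only the figures printed in the table; the names `scores` and `point_gains` are illustrative and not part of the original study.

```python
# Accuracy figures (percent) copied from the benchmark comparison table:
# each entry is (GPT-4, O1).
scores = {
    "MMLU": (86.4, 89.2),
    "HumanEval": (67.0, 73.5),
    "Clinical Knowledge": (90.5, 93.0),
    "Professional Law": (50.2, 54.0),
    "High School Mathematics": (95.0, 98.0),
}


def point_gains(table):
    """Return the O1 minus GPT-4 difference in percentage points per benchmark."""
    return {name: round(o1 - gpt4, 1) for name, (gpt4, o1) in table.items()}


gains = point_gains(scores)
largest = max(gains, key=gains.get)

for name, gain in gains.items():
    print(f"{name}: +{gain} points")
print(f"Largest gain: {largest}")
```

On these figures the differences range from 2.5 to 6.5 percentage points, with the largest on HumanEval. These are absolute differences, not relative improvements.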

| Domain | Test Description | Benchmark |

|---|---|---|

| Abstract Algebra | Evaluates the model's understanding of algebraic structures such as groups, rings, and fields, fundamental in advanced mathematics | MMLU |

| Anatomy | Assesses knowledge of human anatomical structures and systems, essential for medical and biological sciences | MMLU |

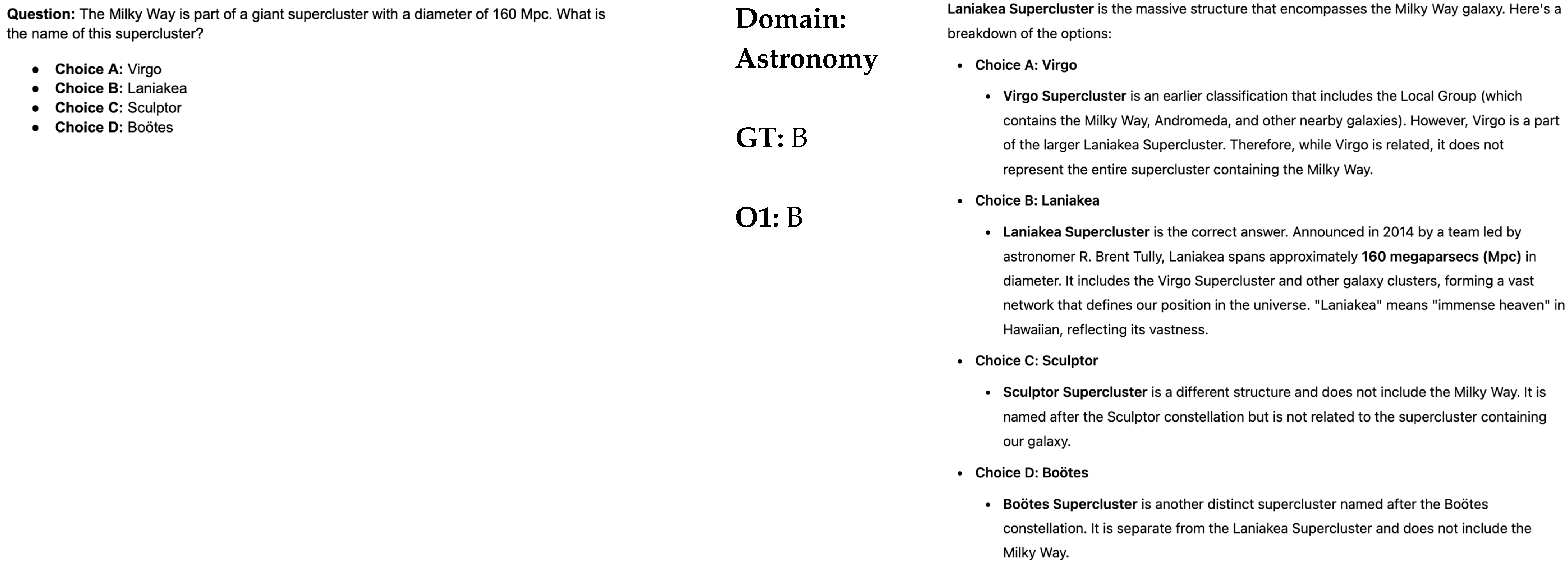

| Astronomy | Tests comprehension of celestial objects, phenomena, and the universe's structure, crucial for astrophysics studies | MMLU |

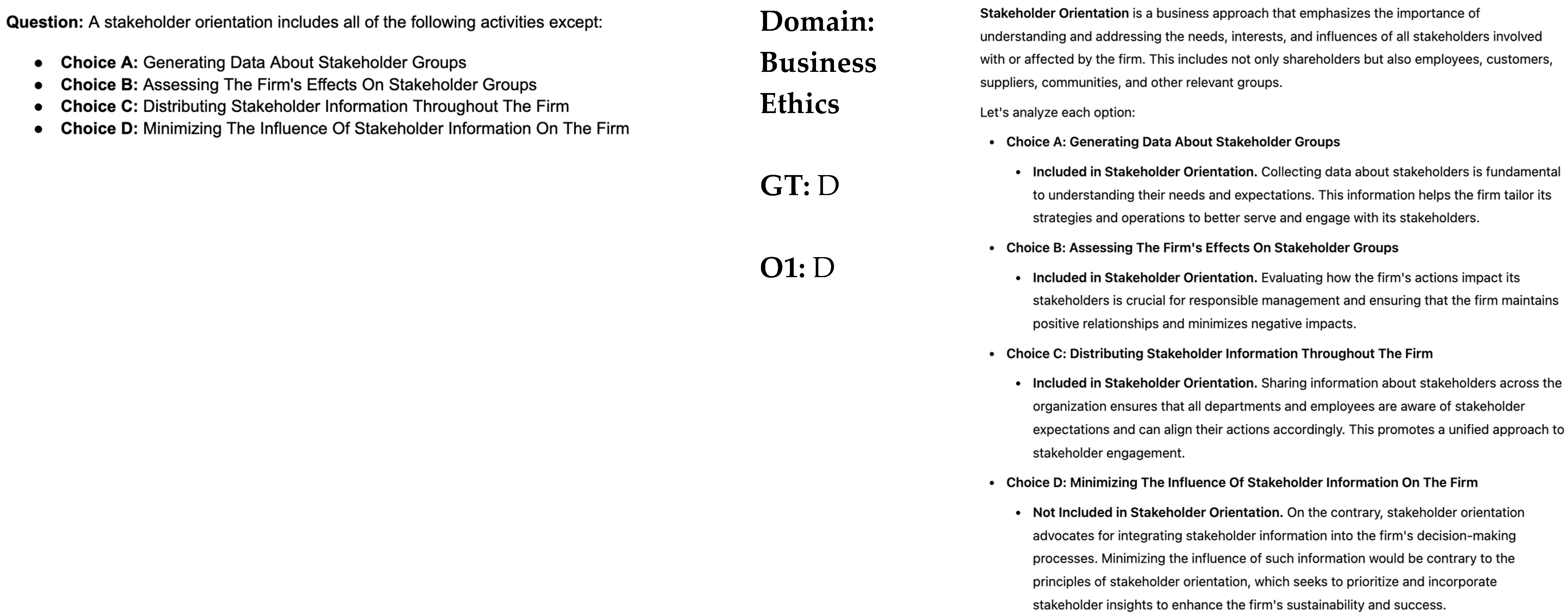

| Business Ethics | Evaluates understanding of moral principles in business contexts, important for corporate governance and ethical decision-making | MMLU |

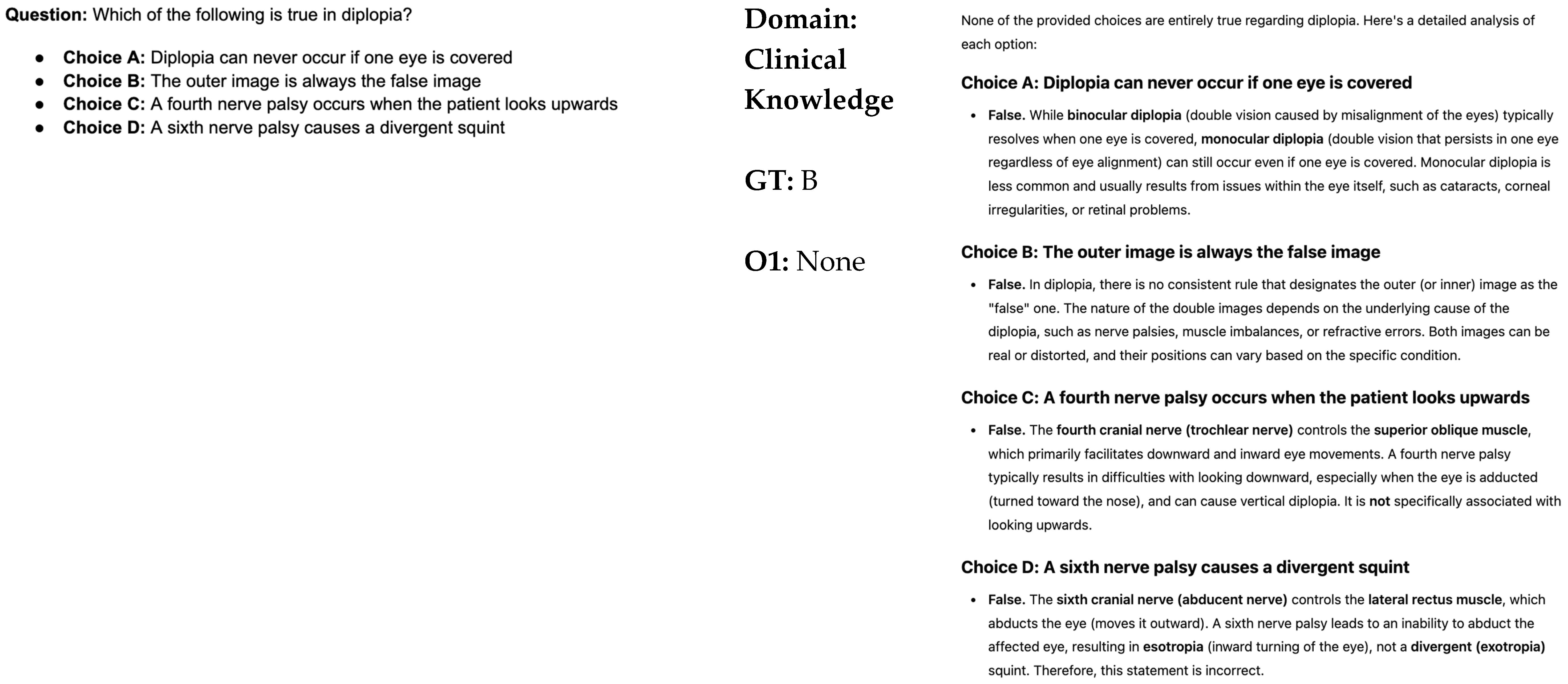

| Clinical Knowledge | Measures proficiency in medical knowledge and clinical practices, vital for healthcare professionals. Included in the MMLU benchmark | MMLU |

| College Biology | Assesses understanding of biological concepts at the college level, covering topics like genetics, ecology, and physiology | MMLU |

| College Chemistry | Tests knowledge of chemical principles, reactions, and laboratory practices at the collegiate level | MMLU |

| College Mathematics | Evaluates proficiency in higher-level mathematics, including calculus, linear algebra, and differential equations | MMLU |

| Econometrics | Assesses the ability to apply statistical methods to economic data, essential for economic analysis and forecasting | MMLU |

| Electrical Engineering | Tests knowledge of electrical circuits, systems, and signal processing, fundamental for engineering disciplines | MMLU |

| World Religions | Evaluates understanding of major world religions, their histories, beliefs, and cultural impacts | MMLU |

| Elementary Mathematics | Assesses basic mathematical skills, including arithmetic and elementary problem-solving | LLM Benchmarks |

| Global Facts | Tests general knowledge about world geography, politics, and global events | LLM Benchmarks |

| High School Chemistry | Evaluates understanding of chemical principles taught at the high school level | LLM Benchmarks |

| High School Computer Science | Assesses knowledge of basic computer science concepts, including programming and algorithms | LLM Benchmarks |

| High School Geography | Tests understanding of physical and human geography topics covered in high school curricula | LLM Benchmarks |

| High School Macroeconomics | Evaluates knowledge of economic principles related to the economy, such as inflation and GDP | LLM Benchmarks |

| High School Physics | Assesses understanding of fundamental physics concepts taught at the high school level | LLM Benchmarks |

| High School Psychology | Tests knowledge of psychological theories and practices covered in high school courses | LLM Benchmarks |

| Medical Genetics | Evaluates understanding of genetic principles and their medical applications, crucial for healthcare and research | MMLU |

| Nutrition | Assesses knowledge of dietary principles, human nutrition, and health implications | MMLU |

| Professional Accounting | Tests proficiency in accounting principles, financial reporting, and auditing practices | MMLU |

| Professional Law | Evaluates understanding of legal concepts, case law, and legal reasoning, essential for legal professionals | MMLU |

| Professional Medicine | Assesses clinical knowledge and medical practices required for healthcare providers | MMLU |

| Public Relations | Tests understanding of communication strategies, media relations, and public perception management | MMLU |

| Test | Accuracy (%) |
|---|---|
| Abstract Algebra | 91 |
| Anatomy | 92 |
| Astronomy | 97 |
| Business Ethics | 81 |
| Clinical Knowledge | 93 |
| College Biology | 98 |
| College Chemistry | 77 |
| College Mathematics | 95 |
| Econometrics | 84 |
| Electrical Engineering | 90 |
| World Religions | 90 |

| Test | Accuracy (%) |
|---|---|
| Elementary Mathematics | 100 |
| Global Facts | 75 |
| High School Chemistry | 94 |
| High School Computer Science | 99 |
| High School Geography | 94 |
| High School Macroeconomics | 92 |
| High School Physics | 93 |
| High School Psychology | 99 |
| Medical Genetics | 99 |
| Nutrition | 96 |
| Professional Accounting | 74 |
| Professional Law | 54 |
| Professional Medicine | 88 |
| Public Relations | 81 |
| Sociology | 93 |
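The per-test accuracies in the two results tables above can be summarized with a simple unweighted mean. The sketch below does this using only the values printed in those tables. The names `first_table`, `second_table`, and `summarize` are hypothetical, and the unweighted mean is an assumption: the source does not state how any aggregate figure was computed or how many questions each test contained.

```python
from statistics import mean

# Accuracy values (percent) copied from the two results tables above.
first_table = {
    "Abstract Algebra": 91, "Anatomy": 92, "Astronomy": 97,
    "Business Ethics": 81, "Clinical Knowledge": 93, "College Biology": 98,
    "College Chemistry": 77, "College Mathematics": 95, "Econometrics": 84,
    "Electrical Engineering": 90, "World Religions": 90,
}
second_table = {
    "Elementary Mathematics": 100, "Global Facts": 75,
    "High School Chemistry": 94, "High School Computer Science": 99,
    "High School Geography": 94, "High School Macroeconomics": 92,
    "High School Physics": 93, "High School Psychology": 99,
    "Medical Genetics": 99, "Nutrition": 96, "Professional Accounting": 74,
    "Professional Law": 54, "Professional Medicine": 88,
    "Public Relations": 81, "Sociology": 93,
}


def summarize(results):
    """Return (unweighted mean, lowest-scoring test, highest-scoring test)."""
    lowest = min(results, key=results.get)
    highest = max(results, key=results.get)
    return round(mean(list(results.values())), 1), lowest, highest


# Test names do not overlap, so merging keeps all 26 entries.
combined = {**first_table, **second_table}

for label, table in [("first", first_table), ("second", second_table), ("combined", combined)]:
    avg, lowest, highest = summarize(table)
    print(f"{label}: mean {avg}%, lowest {lowest}, highest {highest}")
```

Under this assumption the combined mean over the 26 listed tests is about 89.2%, which matches the MMLU average reported for O1 in the benchmark comparison table. Professional Law (54%) is the lowest score and Elementary Mathematics (100%) the highest.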

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).