Submitted: 24 March 2025

Posted: 26 March 2025

Abstract

Keywords:

1. Introduction

1.1. Background and Importance of Disease Detection in Horticulture:

1.2. Overview of Machine Learning (ML) and Deep Learning (DL) Applications:

1.3. Challenges and Research Gaps:

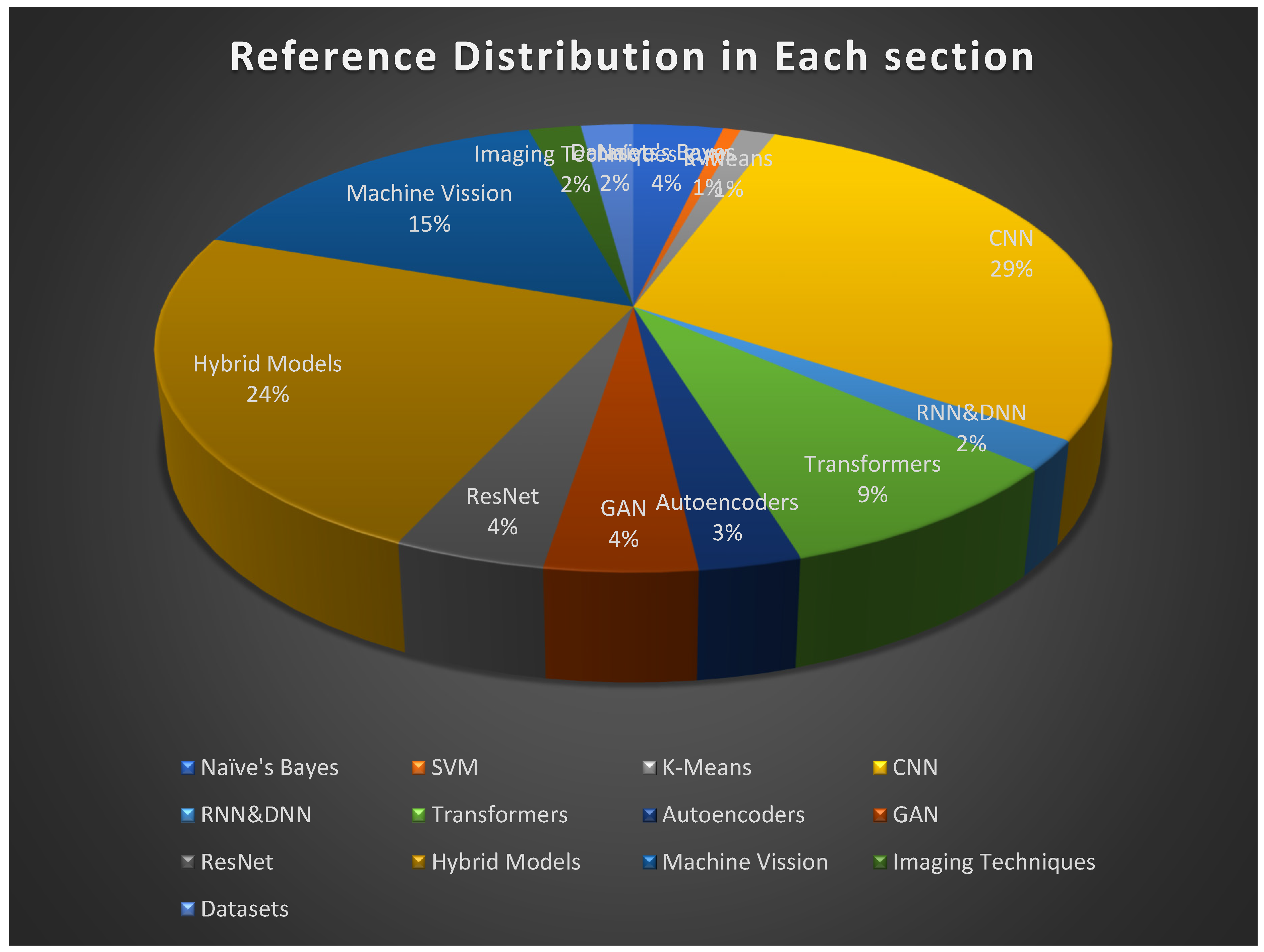

2. Machine Learning and Deep Learning Techniques for Disease Detection

2.1. Traditional Machine Learning Techniques:

2.1.1. Naive Bayes:

2.1.2. SVM (Support Vector Machine):

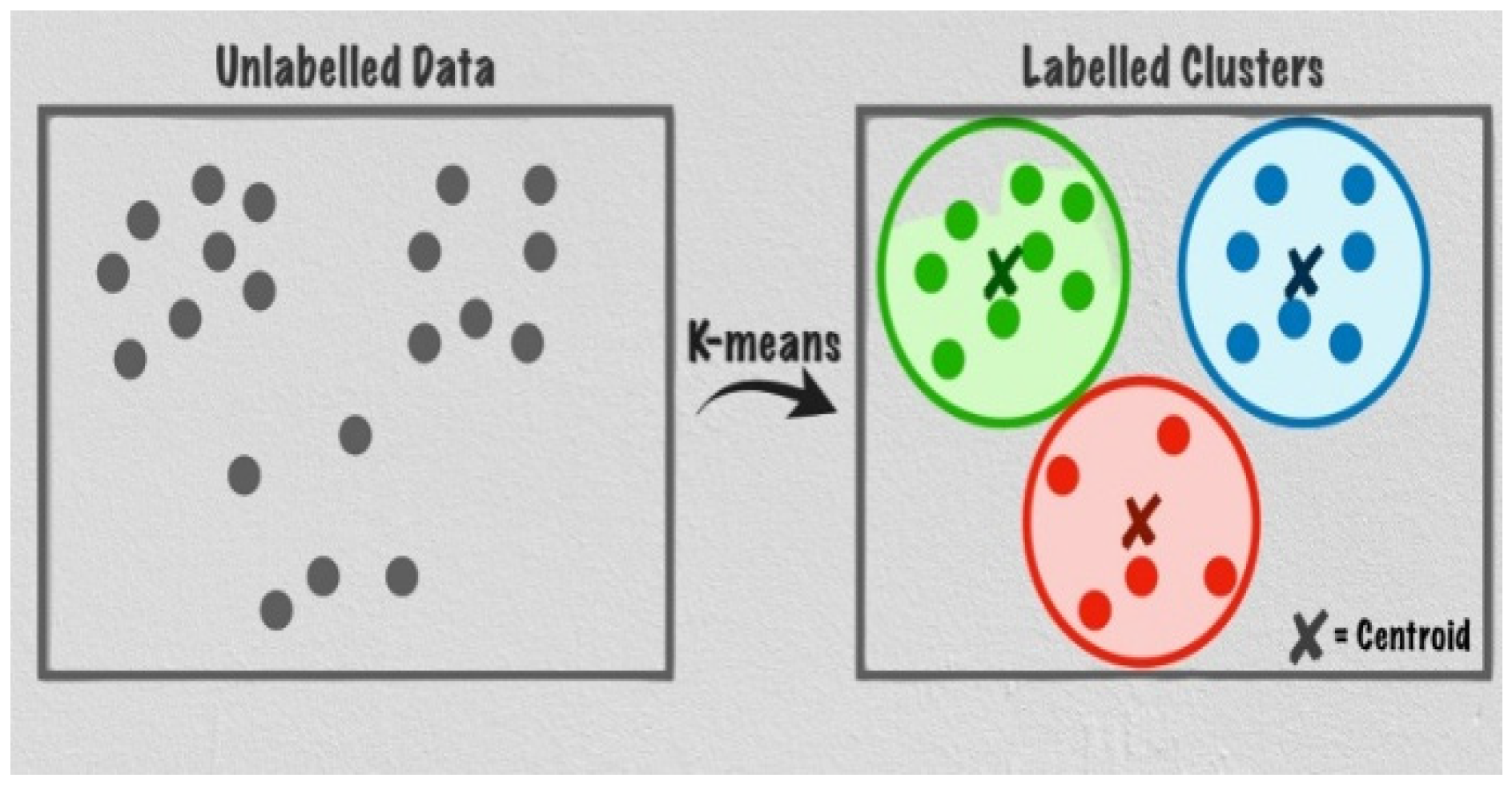

2.1.3. K-Means Clustering

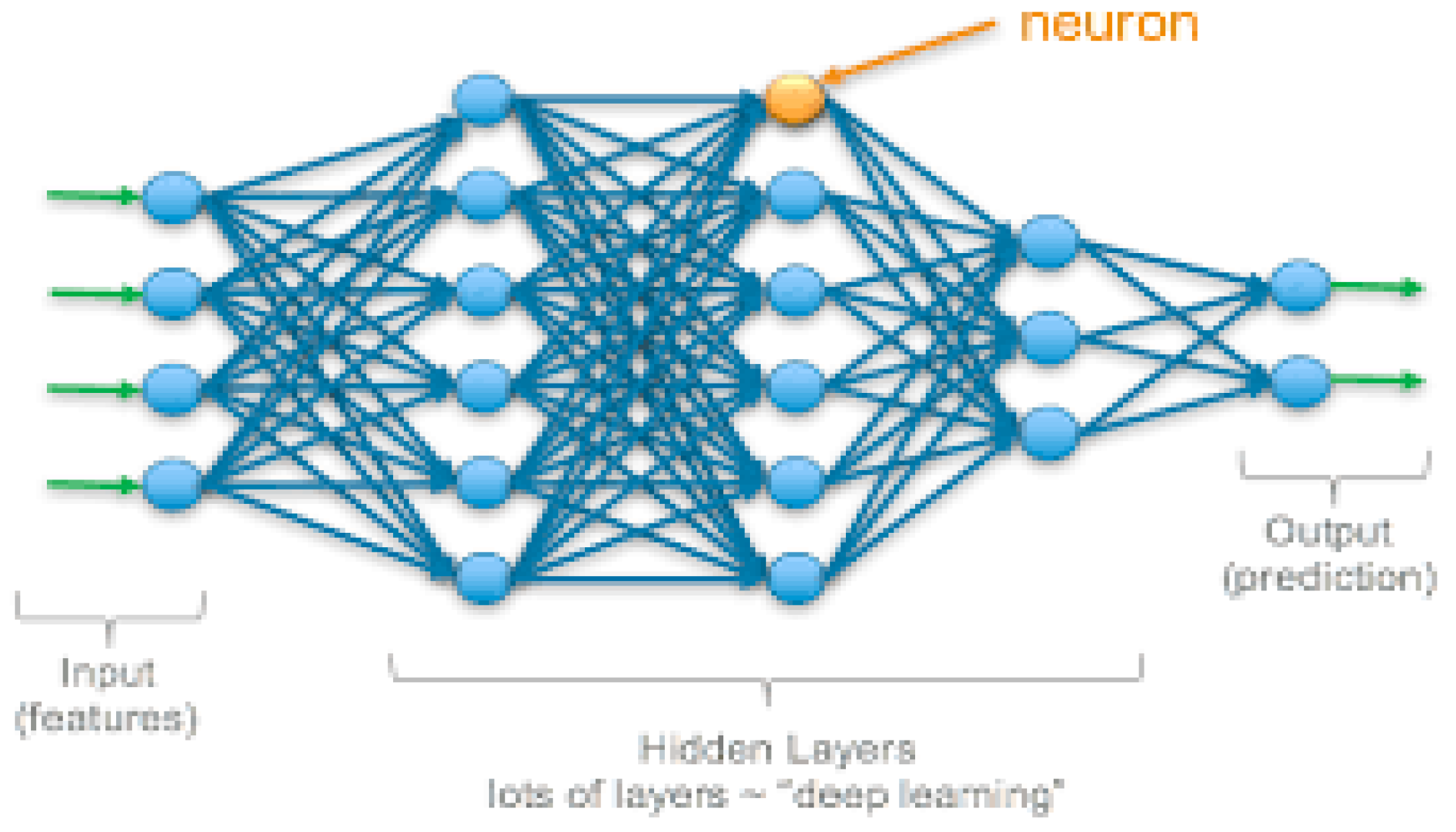

2.2. Deep Learning:

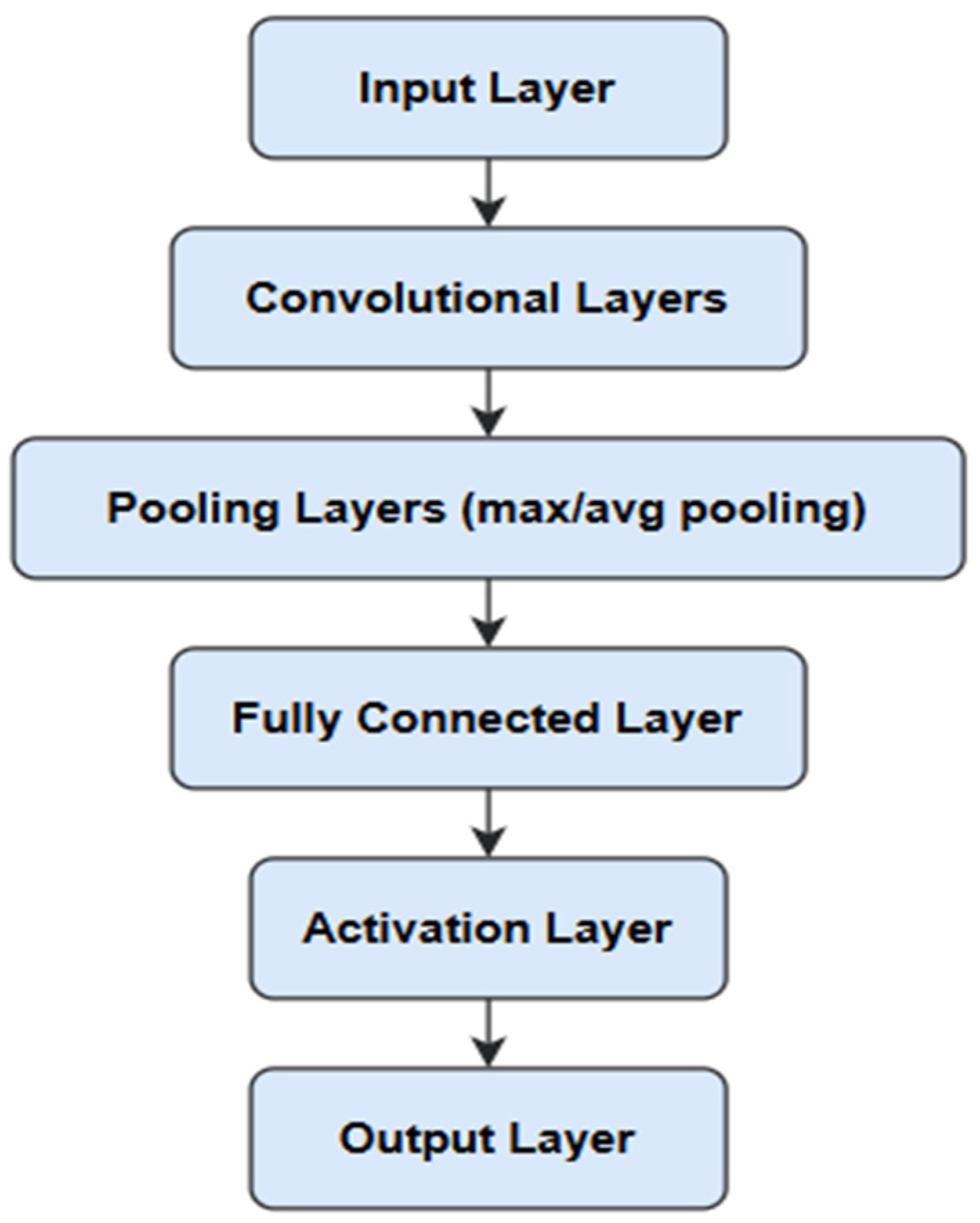

2.2.1. CNN (Convolutional Neural Network):

2.2.2. RNN (Recurrent Neural Network) & DNN (Deep Neural Network):

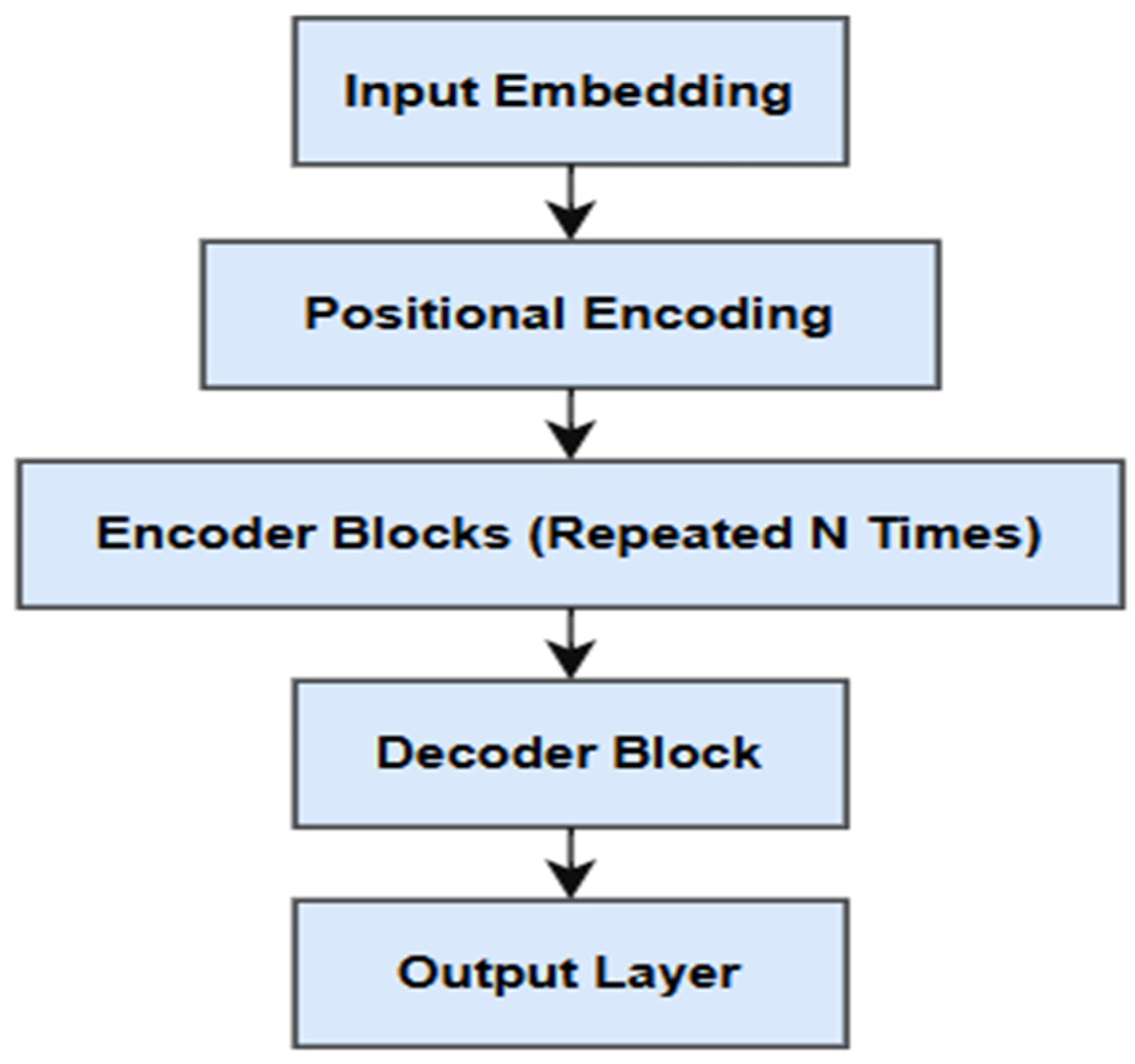

2.2.3. Transformers:

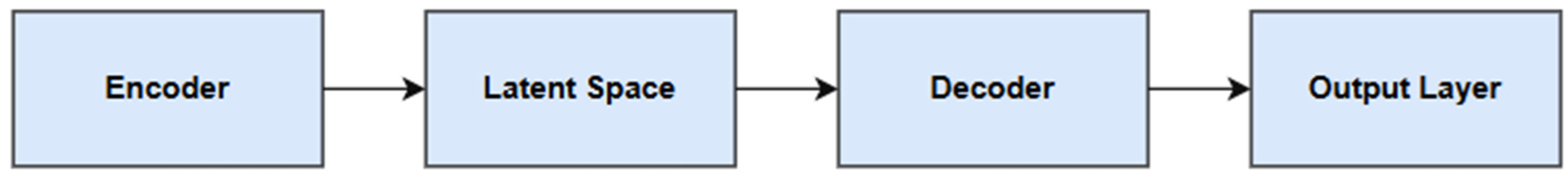

2.2.4. Autoencoders:

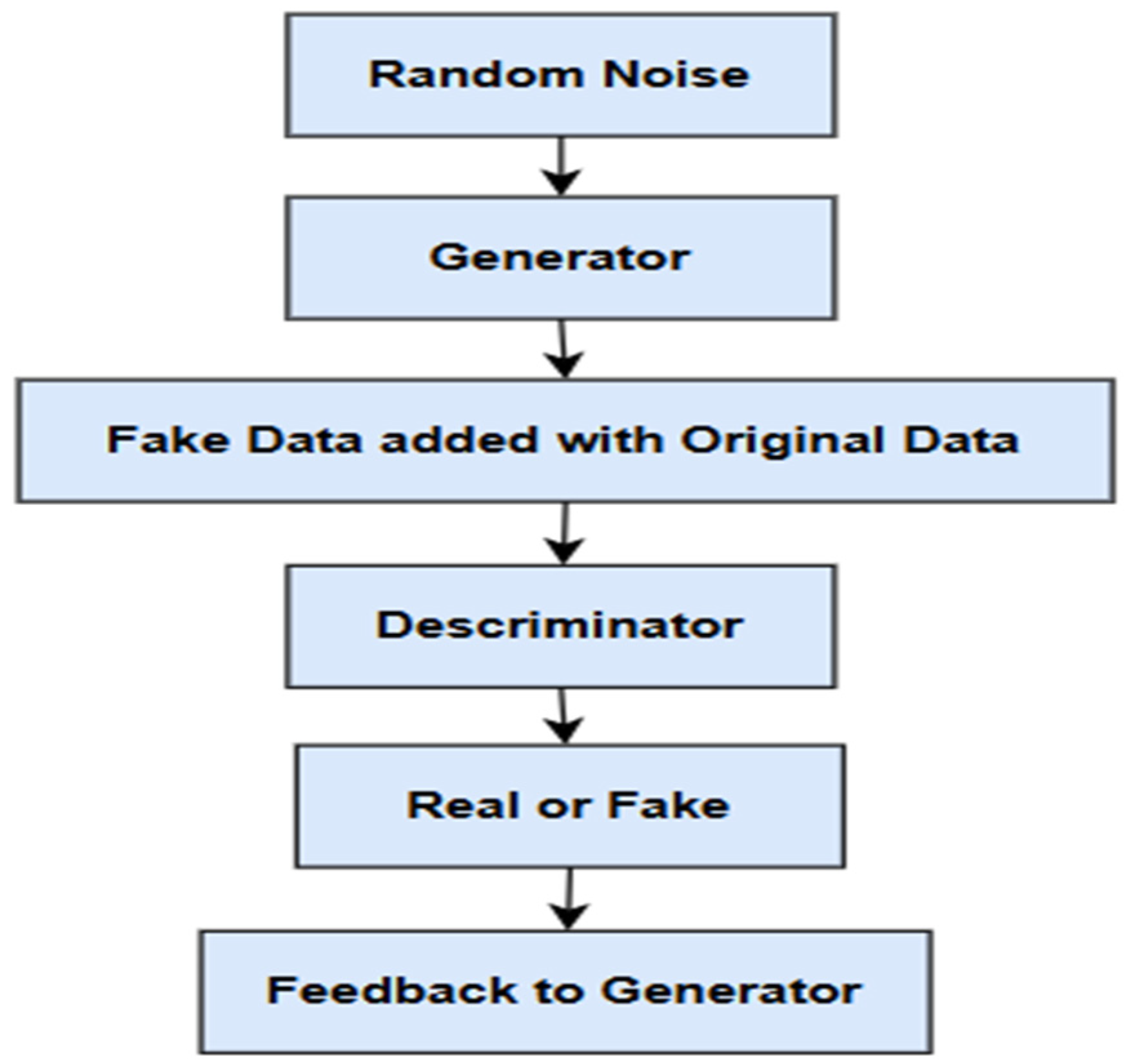

2.2.5. GAN (Generative Adversarial Network):

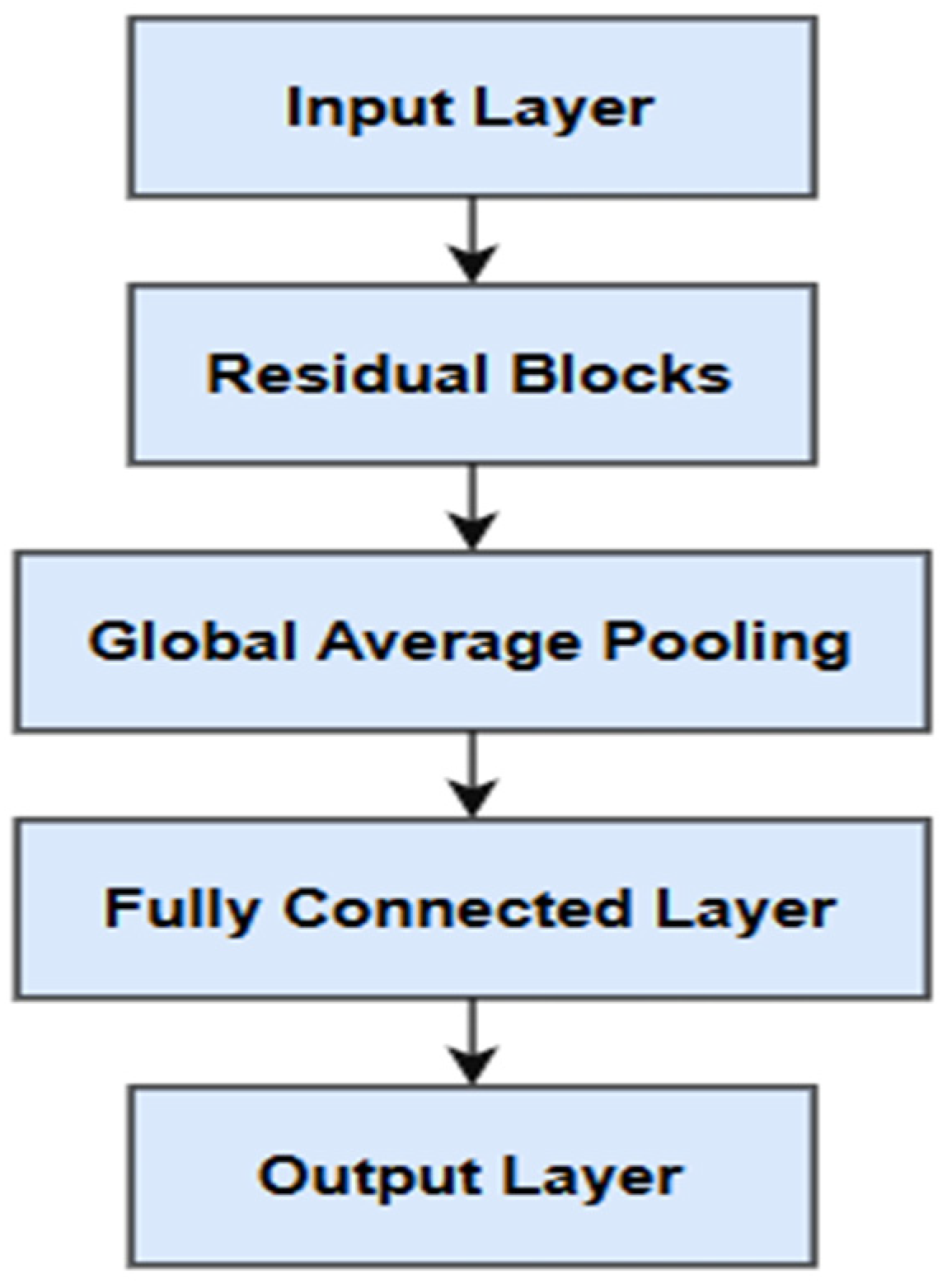

2.2.6. ResNet:

3. Hybrid Models:

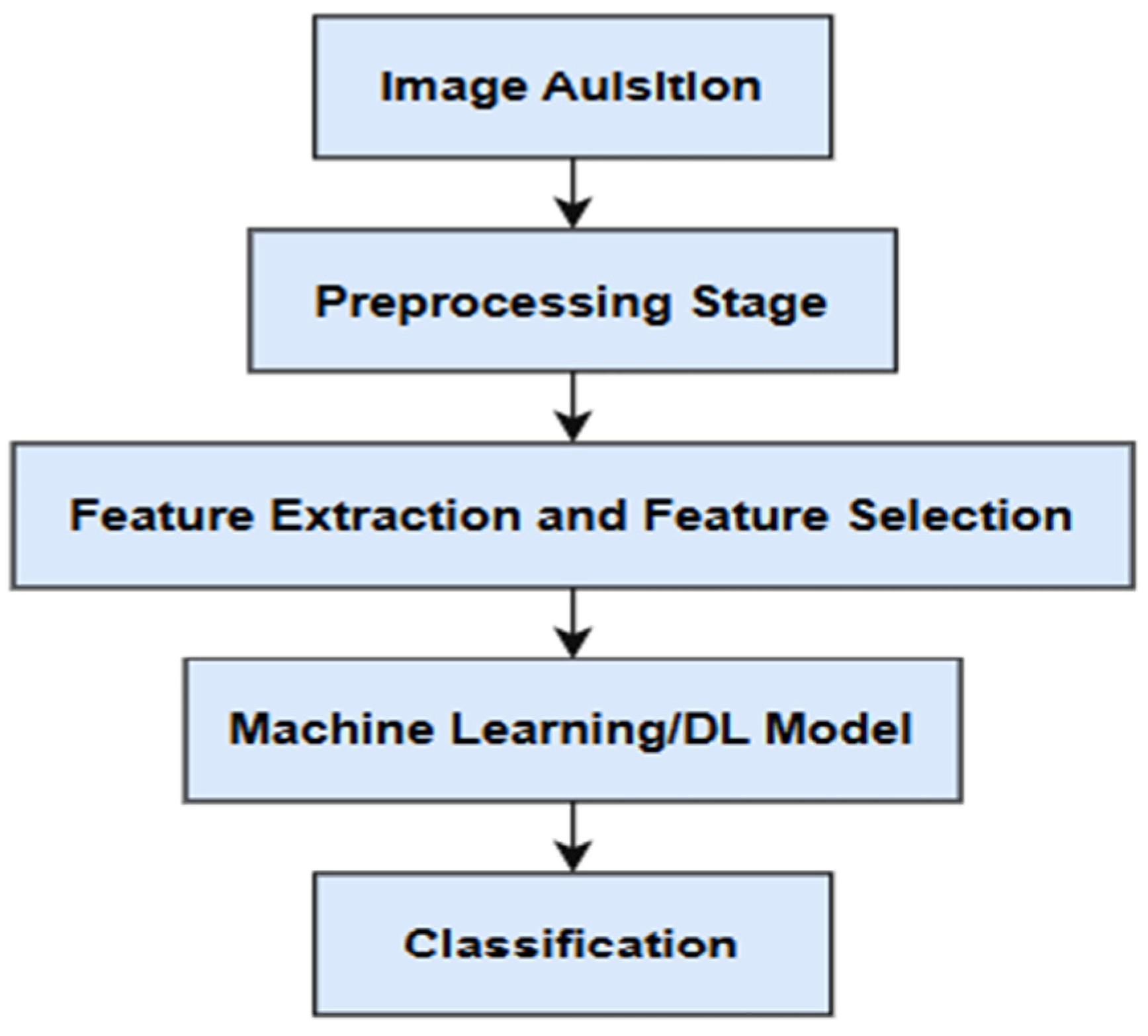

4. Machine Vision and Image Processing:

5. Applications of Advanced Imaging Techniques:

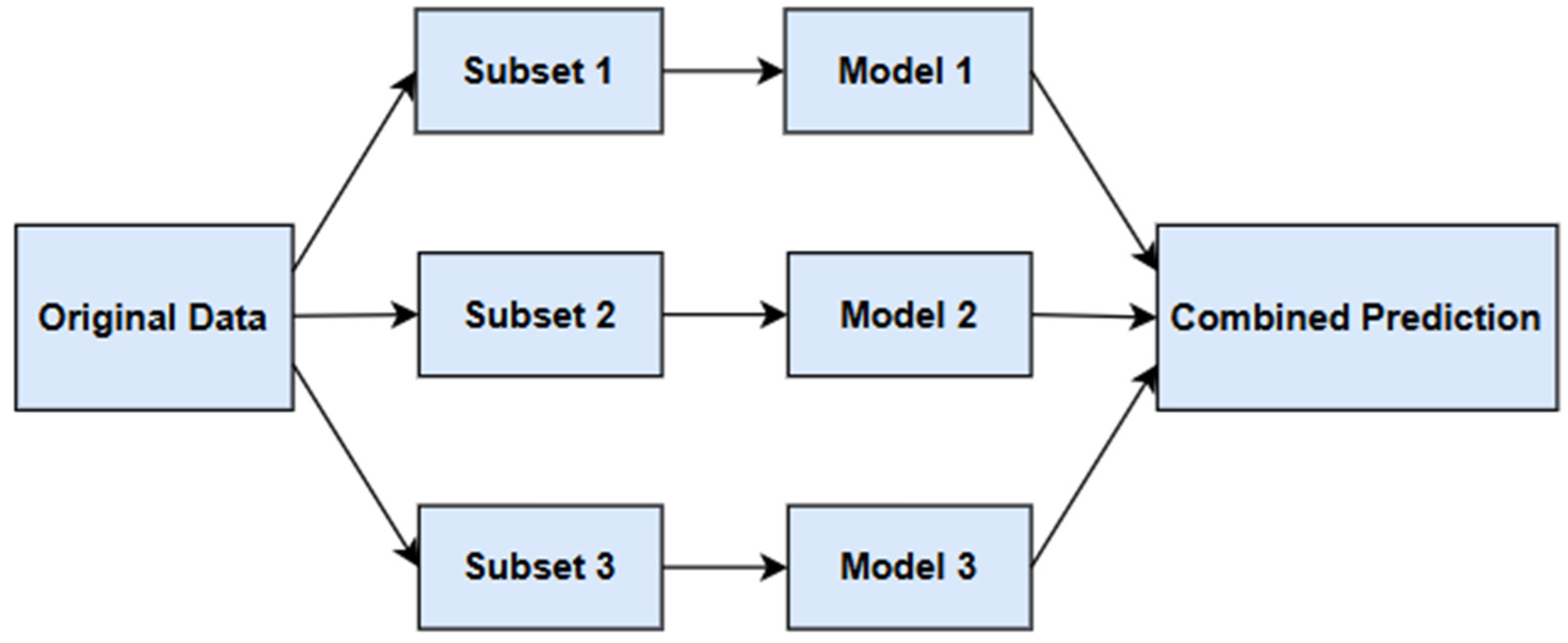

6. Ensemble Learning

7. Data- and Case Study-Based Research

8. Future Trends and Research Directions:

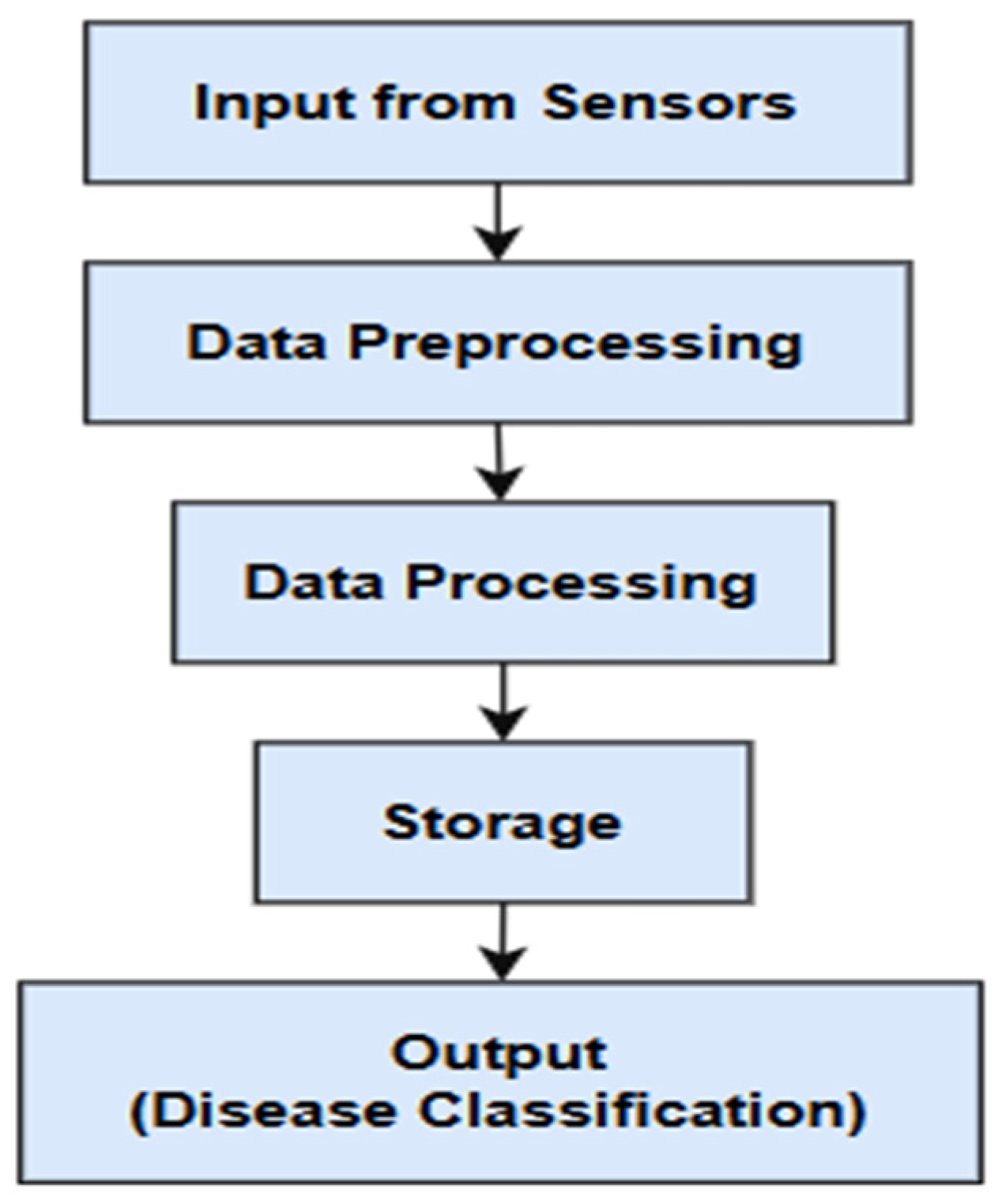

8.1. The Convergence of IoT with Edge Computing:

8.2. Lightweight Models for Resource-Constrained Environments:

8.3. Sustainable Agriculture Applications:

8.4. Explainable AI for Better Decision-Making:

| Challenge | Impact on Detection | Proposed Solutions | References |
|---|---|---|---|
| Imbalanced Datasets | Poor generalization of models | GAN-based data augmentation | [Zeng et al.] |
| Model Interpretability | Lack of trust in predictions | Incorporation of Explainable AI techniques | [Dhiman et al.] |
| High Computational Costs | Limited deployment on edge devices | Development of lightweight models | [Iftikhar et al.] |
| Similar Symptoms Across Diseases | Misclassification of diseases | Advanced imaging and hybrid models | [Li et al.] |

9. Conclusion

References

- Dubey, S.R.; Jalal, A.S. Detection and classification of apple fruit diseases using complete local binary patterns. IEEE 2012, 978-0-7695-4872-2/12.

- de Moraes, J.L.; de Oliveira Neto, J.; Badue, C.; Oliveira-Santos, T.; de Souza, A.F. Yolo-Papaya: A Papaya Fruit Disease Detector and Classifier Using CNNs and Convolutional Block Attention Modules. Electronics 2023, 12, 2202. [Google Scholar] [CrossRef]

- Sumanto, Sumanto & Sugiarti, Yuni & Supriyatna, Adi & Carolina, Irmawati & Amin, Ruhul & Yani, Ahmad. (2021). Model Naïve Bayes Classifiers For Detection Apple Diseases. 1-4. [CrossRef]

- Sari, Wahyuni & Kurniawati, Yulia Ery & Santosa, Paulus. (2020). Papaya Disease Detection Using Fuzzy Naïve Bayes Classifier. 42-47. [CrossRef]

- Huddar, P.D.; Sujatha, S. Fruits and leaf disease detection using Naive Bayes algorithm. International Journal of Engineering Research in Electronics and Communication Engineering (IJERECE) 2017, 4, 89. [Google Scholar]

- Yasmeen, U., Khan, M. A., Tariq, U., Khan, J. A., Yar, M. A. E., Hanif, C. A., ... & Nam, Y. (2021). Citrus diseases recognition using deep improved genetic algorithm. Comput. Mater. Contin, 71(2).

- Dharmasiri, S.B.D.H.; Jayalal, S. (2019, March). Passion fruit disease detection using image processing. In 2019 International Research Conference on Smart Computing and Systems Engineering (SCSE) (pp. 126–133). IEEE.

- Awate, A.; Deshmankar, D.; Amrutkar, G.; Bagul, U.; Sonavane, S. “Fruit disease detection using color, texture analysis and ANN,” 2015 International Conference on Green Computing and Internet of Things (ICGCIoT), Greater Noida, India, 2015, pp. 970–975. [CrossRef]

- Dubey, S.R.; Dixit, P.; Singh, N.; Gupta, J.P. Infected fruit part detection using K-means clustering segmentation technique. International Journal of Artificial Intelligence and Interactive Multimedia 2013, 2. [Google Scholar]

- Ashok, V.; Vinod, D.S. (2021). A Novel Fusion of Deep Learning and Android Application for Real-Time Mango Fruits Disease Detection. In: Satapathy, S.; Bhateja, V.; Janakiramaiah, B.; Chen, YW. (eds) Intelligent System Design. Advances in Intelligent Systems and Computing, vol 1171. Springer, Singapore. [CrossRef]

- Syed-Ab-Rahman, S.F.; Hesamian, M.H. & Prasad, M. Citrus disease detection and classification using end-to-end anchor-based deep learning model. Appl Intell 2022, 52, 927–938. [Google Scholar] [CrossRef]

- Uğuz, Sinan & Şikaroğlu, Gülhan & Yağız, Abdullah. (2022). Disease detection and physical disorders classification for citrus fruit images using convolutional neural network. Journal of Food Measurement and Characterization. [CrossRef]

- Khan, M.A.; Akram, T.; Sharif, M.; Awais, M.; Javed, K.; Ali, H.; Saba, T. CCDF: Automatic system for segmentation and recognition of fruit crops diseases based on correlation coefficient and deep CNN features. Computers and electronics in agriculture 2018, 155, 220–236. [Google Scholar]

- Janakiramaiah, B.; Kalyani, G.; Prasad, L.V.; Karuna, A.; Krishna, M. Intelligent system for leaf disease detection using capsule networks for horticulture. Journal of Intelligent & Fuzzy Systems 2021, 41, 6697–6713. [Google Scholar]

- Liu, J.; Wang, X. Tomato Diseases and Pests Detection Based on Improved Yolo V3 Convolutional Neural Network. Frontiers in Plant Science 2020, 11. [Google Scholar] [CrossRef]

- Malathy, S.; Karthiga, R.R.; Swetha, K.; Preethi, G. (2021, January). Disease detection in fruits using image processing. In 2021 6th International Conference on Inventive Computation Technologies (ICICT) (pp. 747–752). IEEE.

- Dhiman, P.; Kaur, A.; Hamid, Y.; Alabdulkreem, E.; Elmannai, H.; Ababneh, N. Smart disease detection system for citrus fruits using deep learning with edge computing. Sustainability 2023, 15, 4576. [Google Scholar] [CrossRef]

- Dhiman, P.; Manoharan, P.; Lilhore, U.K.; et al. PFDI: a precise fruit disease identification model based on context data fusion with faster-CNN in edge computing environment. EURASIP J. Adv. Signal Process. 2023, 72 (2023). [Google Scholar] [CrossRef]

- Kavya, P.; Nischitha, S.; Nivedita, N.S.; Prabhu, A. (2024, June). Deep Analysis: Apple Fruit Disease Detection Using Deep Learning. In 2024 IEEE International Conference on Information Technology, Electronics and Intelligent Communication Systems (ICITEICS) (pp. 1–9). IEEE.

- Azgomi, H.; Haredasht, F.R.; Motlagh, M.R.S. Diagnosis of some apple fruit diseases by using image processing and artificial neural network. Food Control 2023, 145, 109484. [Google Scholar]

- Pathmanaban, P.; Gnanavel, B.K.; Anandan, S.S. Guava fruit (Psidium guajava) damage and disease detection using deep convolutional neural networks and thermal imaging. The Imaging Science Journal 2022, 70, 102–116. [Google Scholar]

- Gupta, S.; Tripathi, A.K. Fruit and vegetable disease detection and classification: Recent trends, challenges, and future opportunities. Engineering Applications of Artificial Intelligence 2024, 133, 108260. [Google Scholar]

- Yadav, P.K.; Burks, T.; Frederick, Q.; Qin, J.; Kim, M.; Ritenour, M.A. Citrus disease detection using convolution neural network generated features and Softmax classifier on hyperspectral image data. Frontiers in Plant Science 2022, 13, 1043712. [Google Scholar]

- Basri, H.; Syarif, I.; Sukaridhoto, S.; Falah, M.F. Intelligent system for automatic classification of fruit defect using faster region-based convolutional neural network (faster r-CNN). Jurnal Ilmiah Kursor 2019, 10. [Google Scholar]

- Kazi, S. Fruit grading, disease detection, and an image processing strategy. Journal of Image Processing and Artificial Intelligence 2023, 9, 17–34. [Google Scholar]

- Hashan, A.M.; Rahman, S.M.T.; Islam, R.M.R.U.; Avinash, K.; Shekhor, S.; Iftakhairul, S.M. (2023, December). Smart Horticulture Based on Image Processing: Guava Fruit Disease Identification. In 2023 IEEE 21st Student Conference on Research and Development (SCOReD) (pp. 270–274). IEEE.

- K. Nageswararao, A.S.L. Apple Disease Detection Using Convolutional Neural Networks. International Journal of Intelligent Systems and Applications in Engineering 2024, 12(21s), 466–470. Retrieved from https://ijisae.org/index.php/IJISAE/article/view/5442.

- Haruna, A.A.; Badi, I.A.; Muhammad, L.J.; Abuobieda, A.; Altamimi, A. (2023, January). CNN-LSTM learning approach for classification of foliar disease of apple. In 2023 1st International Conference on Advanced Innovations in Smart Cities (ICAISC) (pp. 1–6). IEEE.

- Naik, B.N.; Malmathanraj, R.; Palanisamy, P. Detection and classification of chilli leaf disease using a squeeze-and-excitation-based CNN model. Ecological Informatics 2022, 69, 101663. [Google Scholar]

- Vishnoi, V.K.; Kumar, K.; Kumar, B.; Mohan, S.; Khan, A.A. Detection of apple plant diseases using leaf images through convolutional neural network. IEEE Access 2022, 11, 6594–6609. [Google Scholar]

- Barman, U.; Choudhury, R.D.; Sahu, D.; Barman, G.G. Comparison of convolution neural networks for smartphone image based real time classification of citrus leaf disease. Computers and Electronics in Agriculture 2020, 177, 105661. [Google Scholar]

- Jung, D.H.; Kim, J.D.; Kim, H.Y.; Lee, T.S.; Kim, H.S.; Park, S.H. A hyperspectral data 3D convolutional neural network classification model for diagnosis of gray mold disease in strawberry leaves. Frontiers in Plant Science 2022, 13, 837020. [Google Scholar]

- Iftikhar, S.; Khattak, H.A.; Saadat, A.; Ameer, Z.; Zakarya, M. Efficient fruit disease diagnosis on resource-constrained agriculture devices. Journal of the Saudi Society of Agricultural Sciences 2020.

- Yadav, D.; Yadav, A.K. A Novel Convolutional Neural Network Based Model for Recognition and Classification of Apple Leaf Diseases. Traitement du Signal 2020, 37. [Google Scholar]

- Mehta, S.; Singh, G.; Bhale, Y.A. (2024). Precision Agriculture: An Augmented Datasets and CNN Model-Based Approach to Diagnose Diseases in Fruits and Vegetable Crops. Simulation Techniques of Digital Twin in Real-Time Applications: Design Modeling and Implementation, 215-242.

- Nirgude, V.; Rathi, S. Improving the accuracy of real field pomegranate fruit diseases detection and visualisation using convolution neural networks and grad-CAM. International Journal of Data Analysis Techniques and Strategies 2023, 15, 57–75. [Google Scholar]

- Alhazmi, S. (2023). Different Stages of Watermelon Diseases Detection Using Optimized CNN. In Soft Computing: Theories and Applications: Proceedings of SoCTA 2022 (pp. 121–133). Singapore: Springer Nature Singapore.

- Sajitha, P.; Andrushia, A.D. (2022, April). Banana Fruit Disease Detection and Categorization Utilizing Graph Convolution Neural Network (GCNN). In 2022 6th International Conference on Devices, Circuits and Systems (ICDCS) (pp. 130–134). IEEE.

- Lanjewar, M.G.; Parab, J.S. CNN and transfer learning methods with augmentation for citrus leaf diseases detection using PaaS cloud on mobile. Multimedia Tools and Applications 2024, 83, 31733–31758. [Google Scholar]

- Shrestha, G.; Deepsikha; Das, M.; Dey, N. “Plant Disease Detection Using CNN,” 2020 IEEE Applied Signal Processing Conference (ASPCON), Kolkata, India, 2020, pp. 109–113. [CrossRef]

- Manzoor, E.S.; Malhotra, R.; Bhat, R.; Shekhar, S. (2024, July). Apple Detection: A CNN Approach for Diseases Detection. In 2024 Second International Conference on Advances in Information Technology (ICAIT) (Vol. 1, pp. 1–5). IEEE.

- Mitkal, P.S.; Jagadale, A. Grading of pomegranate fruit using CNN. age 2023, 3. [Google Scholar]

- Ahmad, J.; Jan, B.; Farman, H.; Ahmad, W.; Ullah, A. Disease detection in plum using convolutional neural network under true field conditions. Sensors 2020, 20, 5569. [Google Scholar] [CrossRef]

- Mostafa, A.M.; Kumar, S.A.; Meraj, T.; Rauf, H.T.; Alnuaim, A.A.; Alkhayyal, M.A. Guava disease detection using deep convolutional neural networks: A case study of guava plants. Applied Sciences 2021, 12, 239. [Google Scholar]

- Rana, H.S.; Manjunatha, N.; Pokhare, S.S.; Marathe, R.A.; Rajan, J. (2024, October). Convolutional Neural Network Based Approach for Automatic Detection of Diseases from Pomegranate Plants. In 2024 IEEE International Conference on Distributed Computing, VLSI, Electrical Circuits and Robotics (DISCOVER) (pp. 66–72). IEEE.

- Suji, A.; Gopi, R.; Danalakshmi, D.; Govindasamy, R. (2024, July). An Automatic Detection of Citrus Fruits and Leaves Diseases Using CNN. In 2024 2nd International Conference on Sustainable Computing and Smart Systems (ICSCSS) (pp. 1552–1557). IEEE.

- Kumar, V.; Banerjee, D.; Chauhan, R.; Rawat, R.S.; Gill, K.S. (2023, December). Revolutionizing Pear Tree Disease Detection: An In-Depth Investigation into CNN-Based Approaches. In 2023 Global Conference on Information Technologies and Communications (GCITC) (pp. 1–6). IEEE.

- Assunção, E.; Diniz, C.; Gaspar, P.D.; Proença, H. Decision-making support system for fruit diseases classification using deep learning. 2020 International Conference on Decision Aid Sciences and Application (DASA) 2020, 652–656. [Google Scholar] [CrossRef]

- Krishna, R.; Prema, K.V. (2023). Constructing and Optimising RNN models to predict fruit rot disease incidence in areca nut crop based on weather parameters. IEEE Access.

- Rathnayake, G.; Rupasinghe, S.; Weerathunga, I.; Akalanka, E.D.K.S.; Sankalana, P.; Zoysa, A.K.T.D. Diseases Detection and Quality Detection of Guava Fruits and Leaves Using Image Processing. International Research Journal of Innovations in Engineering and Technology 2023, 7, 511. [Google Scholar]

- Dhiman, P.; Kukreja, V.; Manoharan, P.; Kaur, A.; Kamruzzaman, M.M.; Dhaou, I.B.; Iwendi, C. A novel deep learning model for detection of severity level of the disease in citrus fruits. Electronics 2022, 11, 495. [Google Scholar] [CrossRef]

- Aghamohammadesmaeilketabforoosh, K.; Nikan, S.; Antonini, G.; Pearce, J.M. Optimizing Strawberry Disease and Quality Detection with Vision Transformers and Attention-Based Convolutional Neural Networks. Foods 2024, 13, 1869. [Google Scholar] [CrossRef] [PubMed]

- Parmar, M.; Degadwala, S. Deep learning for accurate papaya disease identification using vision transformers. International Journal of Scientific Research in Computer Science, Engineering and Information Technology 2024, 10. [Google Scholar] [CrossRef]

- Zala, S.; Goyal, V.; Sharma, S.; Shukla, A. Transformer based fruits disease classification. Multimedia Tools and Applications 2024, 1–21. [Google Scholar]

- Nguyen, H.T.; Tran, T.D.; Nguyen, T.T.; Pham, N.M.; Nguyen Ly, P.H.; Luong, H.H. Strawberry disease identification with vision transformer-based models. Multimedia Tools and Applications 2024, 1–26. [Google Scholar]

- Chen, K.; Lang, J.; Li, J.; Chen, D.; Wang, X.; Zhou, J.; Liu, X.; Song, Y.; Dong, M. Integration of Image and Sensor Data for Improved Disease Detection in Peach Trees Using Deep Learning Techniques. Agriculture 2024, 14, 797. [Google Scholar] [CrossRef]

- Shereesha, M.; Hemavathy, C.; Teja, H.; Reddy, G.M.; Kumar, B.V.; Sunitha, G. (2023). Precision Mango Farming: Using Compact Convolutional Transformer for Disease Detection. In A. Abraham, A. Bajaj, N. Gandhi, A.M. Madureira, & C. Kahraman (Eds.), Innovations in Bio-Inspired Computing and Applications (Vol. 649, pp. 623–633). Lecture Notes in Networks and Systems. Springer, Cham. [CrossRef]

- Christakakis, P.; Giakoumoglou, N.; Kapetas, D.; Tzovaras, D.; Pechlivani, E.M. Vision Transformers in Optimization of AI-Based Early Detection of Botrytis cinerea. AI 2024, 5, 1301–1323. [Google Scholar] [CrossRef]

- Tiwari, R.G.; Misra, A.; Maheshwari, H.; Agarwal, A.K.; Sharma, M. (2024, August). Hybrid Transformer-CNN Model for Automated Diagnosis of Pomegranate Fruit Diseases. In 2024 10th International Conference on Electrical Energy Systems (ICEES) (pp. 1–5). IEEE.

- Li, X.; Chen, X.; Yang, J.; Li, S. Transformer helps identify kiwifruit diseases in complex natural environments. Computers and Electronics in Agriculture 2022, 200, 107258. [Google Scholar]

- Liu, Y.; Song, Y.; Ye, R.; Zhu, S.; Huang, Y.; Chen, T.; ... & Lv, C. High-Precision Tomato Disease Detection Using NanoSegmenter Based on Transformer and Lightweighting. Plants 2023, 12, 2559. [Google Scholar]

- Alshammari, H.; Gasmi, K.; Ben Ltaifa, I.; Krichen, M.; Ben Ammar, L.; Mahmood, M.A. Olive disease classification based on vision transformer and CNN models. Computational Intelligence and Neuroscience 2022, 2022, 3998193. [Google Scholar]

- Liu, Y.; Yu, Q.; Geng, S. Real-time and lightweight detection of grape diseases based on Fusion Transformer YOLO. Frontiers in Plant Science 2024, 15, 1269423. [Google Scholar] [CrossRef]

- Durairaj, V.; Surianarayanan, C. Disease detection in plant leaves using segmentation and autoencoder techniques. Malaya Journal 2020. [CrossRef] [PubMed]

- Pardede, H.F.; Suryawati, E.; Sustika, R.; Zilvan, V. (2018, November). Unsupervised convolutional autoencoder-based feature learning for automatic detection of plant diseases. In 2018 international conference on computer, control, informatics and its applications (IC3INA) (pp. 158–162). IEEE.

- Boukhris, A.; Jilali, A.; Asri, H. Deep Learning and Machine Learning Based Method for Crop Disease Detection and Identification Using Autoencoder and Neural Network. Revue d’Intelligence Artificielle 2024, 38. [Google Scholar] [CrossRef]

- Huddar, S.; Prabhushetty, K.; Jakati, J.; Havaldar, R.; Sirdeshpande, N. Deep autoencoder based image enhancement approach with hybrid feature extraction for plant disease detection using supervised classification. International Journal of Electrical & Computer Engineering (2088-8708) 2024, 14. [Google Scholar]

- Alshammari, K.; Alshammari, R.; Alshammari, A.; et al. An improved pear disease classification approach using cycle generative adversarial network. Sci Rep 2024, 14, 6680. [Google Scholar] [CrossRef]

- Zeng, Q.; Ma, X.; Cheng, B.; Zhou, E.; Pang, W. Gans-based data augmentation for citrus disease severity detection using deep learning. IEEE Access 2020, 8, 172882–172891. [Google Scholar] [CrossRef]

- Xiao, D.; Zeng, R.; Liu, Y.; Huang, Y.; Liu, J.; Feng, J.; Zhang, X. Citrus greening disease recognition algorithm based on classification network using TRL-GAN. Computers and Electronics in Agriculture 2022, 200, 107206. [Google Scholar] [CrossRef]

- Ghadekar, P.; Shaikh, U.; Ner, R.; Patil, S.; Nimase, O.; Shinde, T. (2023, November). Early Phase Detection of Bacterial Blight in Pomegranate Using GAN Versus Ensemble Learning. In International Conference on Data Science, Computation and Security (pp. 125–138). Singapore: Springer Nature Singapore.

- Noguchi, K.; Takemura, Y.; Tominaga, M.; Ishii, K. “Mandarin Orange Anomaly Detection with Cycle-GAN,” 2024 IEEE/SICE International Symposium on System Integration (SII), Ha Long, Vietnam, 2024, pp. 644–649. [CrossRef]

- Chen, S.H.; Lai, Y.W.; Kuo, C.L.; Lo, C.Y.; Lin, Y.S.; Lin, Y.R.; ... & Tsai, C.C. A surface defect detection system for golden diamond pineapple based on CycleGAN and YOLOv4. Journal of King Saud University-Computer and Information Sciences 2022, 34, 8041–8053. [Google Scholar]

- Wu, J.; Abolghasemi, V.; Anisi, M.H.; Dar, U.; Ivanov, A.; Newenham, C. “Strawberry Disease Detection Through an Advanced Squeeze-and-Excitation Deep Learning Model,” in IEEE Transactions on AgriFood Electronics, vol. 2, no. 2, pp. 259–267, Sept.-Oct. 2024. [CrossRef]

- Senthilkumar, C.; Kamarasan, M. An effective citrus disease detection and classification using deep learning based inception resnet V2 model. Turkish Journal of Computer and Mathematics Education 2021, 12, 2283–2296. [Google Scholar]

- Kumar, S.; Pal, S.; Singh, V.P.; Jaiswal, P. Performance evaluation of ResNet model for classification of tomato plant disease. Epidemiologic Methods 2023, 12, 20210044. [Google Scholar] [CrossRef]

- Li, X.; Rai, L. (2020, November). Apple leaf disease identification and classification using resnet models. In 2020 IEEE 3rd International Conference on Electronic Information and Communication Technology (ICEICT) (pp. 738–742). IEEE.

- Mohinani, H.; Chugh, V.; Kaw, S.; Yerawar, O.; Dokare, I. (2022, February). Vegetable and fruit leaf diseases detection using ResNet. In 2022 Interdisciplinary Research in Technology and Management (IRTM) (pp. 1–7). IEEE.

- Upadhyay, L.; Saxena, A. Evaluation of Enhanced Resnet-50 Based Deep Learning Classifier for Tomato Leaf Disease Detection and Classification. Journal of Electrical Systems 2024, 20(3s), 2270–2282. [Google Scholar]

- Jadhav, S.; Gandhi, S.; Joshi, P.; Choudhary, V. Banana Crop Disease Detection Using Deep Learning Approach. *International Journal for Research in Applied Science and Engineering Technology* 2023, *11*(5), 2061–2066. [CrossRef]

- Arora, D.; Mehta, K.; Kumar, A.; Lamba, S. (2024, March). Evaluating Watermelon Mosaic Virus Seriousness with Hybrid RNN and Random Forest Model: A Five-Degree Approach. In 2024 11th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions)(ICRITO) (pp. 1–5). IEEE.

- Pydipati, R.; Burks, T.F.; Lee, W.S. Statistical and neural network classifiers for citrus disease detection using machine vision. Transactions of the ASAE 2005, 48, 2007–2014. [Google Scholar] [CrossRef]

- Palei, S.; Behera, S.K.; Sethy, P.K. A systematic review of citrus disease perceptions and fruit grading using machine vision. Procedia Computer Science 2023, 218, 2504–2519. [Google Scholar] [CrossRef]

- Agarwal, A.; Sarkar, A.; Dubey, A.K. (2019). Computer vision-based fruit disease detection and classification. In Smart Innovations in Communication and Computational Sciences: Proceedings of ICSICCS-2018 (pp. 105–115). Springer Singapore.

- Doh, B.; Zhang, D.; Shen, Y.; Hussain, F.; Doh, R.F.; Ayepah, K. (2019, September). Automatic citrus fruit disease detection by phenotyping using machine learning. In 2019 25th International conference on automation and computing (ICAC) (pp. 1–5). IEEE.

- Deng, F.; Mao, W.; Zeng, Z.; Zeng, H.; Wei, B. Multiple diseases and pests detection based on federated learning and improved faster R-CNN. IEEE Transactions on Instrumentation and Measurement 2022, 71, 1–11. [Google Scholar] [CrossRef]

- Banerjee, D.; Kukreja, V.; Hariharan, S.; Jain, V. (2023, April). Enhancing Mango Fruit Disease Severity Assessment with CNN and SVM-Based Classification. In 2023 IEEE 8th International Conference for Convergence in Technology (I2CT) (pp. 1–6). IEEE.

- Vasumathi, M.T.; Kamarasan, M. An effective pomegranate fruit classification based on CNN-LSTM deep learning models. Indian Journal of Science and Technology 2021, 14, 1310–1319. [Google Scholar] [CrossRef]

- Majid, A.; Khan, M.A.; Alhaisoni, M.; E. yar, M.A.; Tariq, U. et al. An integrated deep learning framework for fruits diseases classification. Computers Materials & Continua 2022, 71, 1387–1402. [CrossRef]

- Masuda, K.; Suzuki, M.; Baba, K.; Takeshita, K.; Suzuki, T.; Sugiura, M.; ... & Akagi, T. Noninvasive diagnosis of seedless fruit using deep learning in persimmon. The Horticulture Journal 2021, 90, 172–180. [Google Scholar]

- Nyarko, B.N.E.; Bin, W.; Jinzhi, Z.; Odoom, J. (2023). Tomato fruit disease detection based on improved single shot detection algorithm. Journal of Plant Protection Research.

- Gill, H.S.; Murugesan, G.; Khehra, B.S.; Sajja, G.S.; Gupta, G.; Bhatt, A. Fruit recognition from images using deep learning applications. Multimedia Tools and Applications 2022, 81, 33269–33290. [Google Scholar] [CrossRef]

- Le, A.T.; Shakiba, M.; Ardekani, I. Tomato disease detection with lightweight recurrent and convolutional deep learning models for sustainable and smart agriculture. Frontiers in Sustainability 2024, 5, 1383182. [Google Scholar] [CrossRef]

- Latif, G.; Alghazo, J.; Ben Brahim, G.; Alnujaidi, K. (n.d.). Dates fruit disease recognition using machine learning. Prince Mohammad Bin Fahd University.

- Gupta, R.; Kaur, M.; Garg, N.; Shankar, H.; Ahmed, S. (2023, May). Lemon Diseases Detection and Classification using Hybrid CNN-SVM Model. In 2023 Third International Conference on Secure Cyber Computing and Communication (ICSCCC) (pp. 326–331). IEEE.

- Alekhya, J.L.; Nithin, P.S.; Enosh, P.; Devika, Y. (2024, August). Mango Fruit Disease Detection by Integrating MobileNetV2 and Long Short-Term Memory. In 2024 International Conference on Electrical Electronics and Computing Technologies (ICEECT) (Vol. 1, pp. 1–6). IEEE.

- Khattak, A.; Asghar, M.U.; Batool, U.; Asghar, M.Z.; Ullah, H.; Al-Rakhami, M.; Gumaei, A. Automatic detection of citrus fruit and leaves diseases using deep neural network model. IEEE access 2021, 9, 112942–112954. [Google Scholar] [CrossRef]

- Mohanapriya, S.; Efshiba, V.; Natesan, P. (2021, September). Identification of Fruit Disease Using Instance Segmentation. In 2021 Third International Conference on Inventive Research in Computing Applications (ICIRCA) (pp. 1779–1787). IEEE.

- Sundaramoorthi, K.; Kamarasan, M. (2024, May). Integrating Sparrow Search Algorithm with Deep Learning for Tomato Fruit Disease Detection and Classification. In 2024 4th International Conference on Pervasive Computing and Social Networking (ICPCSN) (pp. 184–190). IEEE.

- Seetharaman, K.; Mahendran, T. Detection of Disease in Banana Fruit using Gabor Based Binary Patterns with Convolution Recurrent Neural Network. Turkish Online Journal of Qualitative Inquiry 2021, 12. [Google Scholar]

- Xue, G.; Liu, S.; Ma, Y. A hybrid deep learning-based fruit classification using attention model and convolution autoencoder. Complex & Intelligent Systems 2020, 1–11. [Google Scholar]

- Tewari, V.; Azeem, N.A.; Sharma, S. Automatic guava disease detection using different deep learning approaches. Multimedia Tools and Applications 2024, 83, 9973–9996. [Google Scholar]

- Sankaran, S.; Subbiah, D.; Chokkalingam, B.S. CitrusDiseaseNet: An integrated approach for automated citrus disease detection using deep learning and kernel extreme learning machine. Earth Science Informatics 2024, 1–18. [Google Scholar]

- Yang, D.; Wang, F.; Hu, Y.; Lan, Y.; Deng, X. Citrus huanglongbing detection based on multi-modal feature fusion learning. Frontiers in plant science 2021, 12, 809506. [Google Scholar]

- Said, A.G.; Joshi, B. Advanced multimodal thermal imaging for high-precision fruit disease segmentation and classification. Journal of Autonomous Intelligence 2024, 7(5), 1618.

- Tian, Y.; Yang, G.; Wang, Z.; Li, E.; Liang, Z. Detection of apple lesions in orchards based on deep learning methods of CycleGAN and YOLOV3-dense. Journal of Sensors 2019, 2019, 7630926. [Google Scholar]

- Si, J.; Kim, S. Chili Pepper Disease Diagnosis via Image Reconstruction Using GrabCut and Generative Adversarial Serial Autoencoder. arXiv 2023, arXiv:2306.12057. [Google Scholar]

- Samajpati, B.J.; Degadwala, S.D. (2016, April). Hybrid approach for apple fruit diseases detection and classification using random forest classifier. In 2016 International conference on communication and signal processing (ICCSP) (pp. 1015–1019). IEEE.

- Nandi, R.N.; Palash, A.H.; Siddique, N.; Zilani, M.G. (2023). Device-friendly guava fruit and leaf disease detection using deep learning. In M. S. Satu, M.A. Moni, M.S. Kaiser, & M. S. Arefin (Eds.), Machine intelligence and emerging technologies (Vol. 490, pp. 55–66). Lecture Notes of the Institute for Computer Sciences, Social Informatics, and Telecommunications Engineering. Springer. [CrossRef]

- Chug, A.; Bhatia, A.; Singh, A.P.; Singh, D. A novel framework for image-based plant disease detection using hybrid deep learning approach. Soft Computing 2023, 27, 13613–13638. [Google Scholar]

- H. B. Patel and N. J. Patil, “Enhanced CNN for Fruit Disease Detection and Grading Classification Using SSDAE-SVM for Postharvest Fruits,” in IEEE Sensors Journal, vol. 24, no. 5, pp. 6719–6732, 1 March 2024. [CrossRef]

- Dharmasiri, S.B.D.H.; Jayalal, S. “Passion Fruit Disease Detection using Image Processing,” 2019 International Research Conference on Smart Computing and Systems Engineering (SCSE), Colombo, Sri Lanka, 2019, pp. 126–133. [CrossRef]

- Darwin Laura, Elsa Pilar Urrutia, Franklin Salazar, Jeanette Ureña, Rodrigo Moreno, Gustavo Machado, Maria Cazorla-Logroño, Santiago Altamirano. Aerial remote sensing system to control pathogens and diseases in broccoli crops with the use of artificial vision. Smart Agricultural Technology, Volume 10, 2025, 100739, ISSN 2772-3755. [CrossRef]

- Mahmud, M.S.; Zaman, Q.U.; Esau, T.J.; Price, G.W.; Prithiviraj, B. Development of an artificial cloud lighting condition system using machine vision for strawberry powdery mildew disease detection. Computers and electronics in agriculture 2019, 158, 219–225. [Google Scholar]

- Abd El-aziz, A.A.; Darwish, A.; Oliva, D.; Hassanien, A.E. (2020). Machine Learning for Apple Fruit Diseases Classification System. In: Hassanien, AE.; Azar, A.; Gaber, T.; Oliva, D.; Tolba, F. (eds) Proceedings of the International Conference on Artificial Intelligence and Computer Vision (AICV2020). AICV 2020. Advances in Intelligent Systems and Computing, vol 1153. Springer, Cham. [CrossRef]

- Habib, M.T.; Majumder, A.; Jakaria, A.Z.M.; Akter, M.; Uddin, M.S.; Ahmed, F. Machine vision based papaya disease recognition. Journal of King Saud University-Computer and Information Sciences 2020, 32, 300–309. [Google Scholar]

- Soltani Firouz, M.; Sardari, H. Defect detection in fruit and vegetables by using machine vision systems and image processing. Food Engineering Reviews 2022, 14, 353–379. [Google Scholar]

- Mehra, Tanvi, Vinay Kumar, and Pragya Gupta. “Maturity and disease detection in tomato using computer vision.” In 2016 Fourth international conference on parallel, distributed and grid computing (PDGC), pp. 399–403. IEEE, 2016.

- Athiraja, A.; Vijayakumar, P. RETRACTED ARTICLE: Banana disease diagnosis using computer vision and machine learning methods. Journal of Ambient Intelligence and Humanized Computing 2021, 12, 6537–6556. [Google Scholar]

- Mahmud, M.S.; Zaman, Q.U.; Esau, T.J.; Chang, Y.K.; Price, G.W.; Prithiviraj, B. Real-time detection of strawberry powdery mildew disease using a mobile machine vision system. Agronomy 2020, 10, 1027. [Google Scholar] [CrossRef]

- Hadipour-Rokni, R.; Asli-Ardeh, E.A.; Jahanbakhshi, A.; Sabzi, S. Intelligent detection of citrus fruit pests using machine vision system and convolutional neural network through transfer learning technique. Computers in Biology and Medicine 2023, 155, 106611. [Google Scholar] [CrossRef] [PubMed]

- Mia, M.R.; Mia, M.J.; Majumder, A.; Supriya, S.; Habib, M.T. Computer vision based local fruit recognition. Int. J. Eng. Adv. Technol 2019, 9, 2810–2820. [Google Scholar]

- Habib, M.T.; Mia, M.R.; Mia, M.J.; Uddin, M.S.; Ahmed, F. (2020). A computer vision approach for jackfruit disease recognition. In Proceedings of International Joint Conference on Computational Intelligence: IJCCI 2019 (pp. 343–353). Springer Singapore.

- Al Haque, A.F.; Hafiz, R.; Hakim, M.A.; Islam, G.R. (2019, December). A computer vision system for guava disease detection and recommend curative solution using deep learning approach. In 2019 22nd International Conference on Computer and Information Technology (ICCIT) (pp. 1–6). IEEE.

- Bhange, M.; Hingoliwala, H.A. Smart farming: Pomegranate disease detection using image processing. Procedia computer science 2015, 58, 280–288. [Google Scholar]

- Nithya, R.; Santhi, B.; Manikandan, R.; Rahimi, M.; Gandomi, A.H. Computer vision system for mango fruit defect detection using deep convolutional neural network. Foods 2022, 11, 3483. [Google Scholar] [CrossRef]

- Abbaspour-Gilandeh, Y.; Aghabara, A.; Davari, M.; Maja, J.M. Feasibility of using computer vision and artificial intelligence techniques in detection of some apple pests and diseases. Applied Sciences 2022, 12, 906. [Google Scholar]

- Habib, M.T.; Arif, M.A.I.; Shorif, S.B.; Uddin, M.S.; Ahmed, F. Machine vision-based fruit and vegetable disease recognition: A review. Computer Vision and Machine Learning in Agriculture 2021, 143–157. [Google Scholar]

- Deshpande, T.; Sengupta, S.; Raghuvanshi, K.S. Grading & identification of disease in pomegranate leaf and fruit. International Journal of Computer Science and Information Technologies 2014, 5, 4638–4645. [Google Scholar]

- Kamala, K.L.; Alex, S.A. “Apple Fruit Disease Detection for Hydroponic plants using Leading edge Technology Machine Learning and Image Processing,” 2021 2nd International Conference on Smart Electronics and Communication (ICOSEC), Trichy, India, 2021, pp. 820–825. [CrossRef]

- Durmuş, H.; Güneş, E.O.; Kırcı, M. Disease detection on the leaves of the tomato plants by using deep learning. 2017 6th International Conference on Agro-Geoinformatics 2017, 1–5. [Google Scholar] [CrossRef]

- Qin, J.; Burks, T.; Ritenour, M.; Bonn, W.G. Detection of citrus canker using hyperspectral reflectance imaging with spectral information divergence. Journal of Food Engineering 2009, 93(2), 183–191. [CrossRef]

- Jain, R.; Singla, P.; Sharma, R.; Kukreja, V.; Singh, R. (2023, April). Detection of Guava Fruit Disease through a Unified Deep Learning Approach for Multi-classification. In 2023 IEEE International Conference on Contemporary Computing and Communications (InC4) (Vol. 1, pp. 1–5). IEEE.

- Bulanon, D.; Burks, T.; Alchanatis, V. A Multispectral Imaging Analysis for Enhancing Citrus Fruit Detection. Environmental Control in Biology 2010, 48(2), 81–91. [CrossRef]

- Rauf, H.T.; Saleem, B.A.; Lali, M.I.U.; Khan, M.A.; Sharif, M.; Bukhari, S.A.C. A citrus fruits and leaves dataset for detection and classification of citrus diseases through machine learning. Data in Brief 2019, 26, 104340. [Google Scholar] [PubMed]

- Mahendran, T.; Seetharaman, K. (2023, January). Feature extraction and classification based on pixel in banana fruit for disease detection using neural networks. In 2023 Third International Conference on Advances in Electrical, Computing, Communication and Sustainable Technologies (ICAECT) (pp. 1–7). IEEE.

- Gehlot, M.; Saxena, R.K.; Gandhi, G.C. “Tomato-Village”: a dataset for end-to-end tomato disease detection in a real-world environment. Multimedia Systems 2023, 29, 3305–3328. [Google Scholar] [CrossRef]

- Albanese, A.; Nardello, M.; Brunelli, D. “Automated Pest Detection With DNN on the Edge for Precision Agriculture,” in IEEE Journal on Emerging and Selected Topics in Circuits and Systems, vol. 11, no. 3, pp. 458–467, Sept. 2021. [CrossRef]

- Rumy, S.M.S.H.; Hossain, M.I.A.; Jahan, F.; Tanvin, T. “An IoT based System with Edge Intelligence for Rice Leaf Disease Detection using Machine Learning,” 2021 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS), Toronto, ON, Canada, 2021, pp. 1–6. [CrossRef]

- Tsai, Y.-H.; Hsu, T.-C. (2024). An effective deep neural network in edge computing enabled Internet of Things for plant diseases monitoring. Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision Workshops, 695–704. Hsuan Chuang University. https://openaccess.thecvf.com/WACV2024W_paperlist.

- Kalbande, K.; Patil, W.; Deshmukh, A.; Joshi, S.; Titarmare, A.S.; Patil, S.C. Novel Edge Device System for Plant Disease Detection with Deep Learning Approach. International Journal of Intelligent Systems and Applications in Engineering 2024, 12, 610–618. [Google Scholar]

- Khan, A.T.; Jensen, S.M.; Khan, A.R.; Li, S. Plant disease detection model for edge computing devices. Frontiers in Plant Science 2023, 14. [Google Scholar] [CrossRef]

- Kim, J.; Chang, S.; Kwak, N. PQK: Model compression via pruning, quantization, and knowledge distillation. arXiv 2021, arXiv:2106.14681. [Google Scholar] [CrossRef]

- Liang, T.; Glossner, J.; Wang, L.; Shi, S.; Zhang, X. Pruning and quantization for deep neural network acceleration: A survey. Journal of Systems Architecture 2021, 117, 102137. [Google Scholar] [CrossRef]

- Li, G.; Wang, Y.; Zhao, Q.; Yuan, P.; Chang, B. PMVT: A lightweight vision transformer for plant disease identification on mobile devices. Frontiers in Plant Science 2023, 14, 1256773. [Google Scholar] [CrossRef]

- Borhani, Y.; Khoramdel, J.; Najafi, E. A deep learning based approach for automated plant disease classification using vision transformer. Sci Rep 2022, 12, 11554. [Google Scholar] [CrossRef]

- Guan, H.; Fu, C.; Zhang, G.; Li, K.; Wang, P.; Zhu, Z. A lightweight model for efficient identification of plant diseases and pests based on deep learning. Frontiers in Plant Science 2023, 14, 1227011. [Google Scholar] [CrossRef]

- Delfani, P.; Thuraga, V.; Banerjee, B.; et al. Integrative approaches in modern agriculture: IoT, ML and AI for disease forecasting amidst climate change. Precision Agric 2024, 25, 2589–2613. [Google Scholar] [CrossRef]

- Egon, A.; Bell, C. (n.d.). AI in agriculture: Revolutionizing crop monitoring and disease management through precision technology. ResearchGate. Retrieved from https://www.researchgate.net/publication/385940131_AI_IN_AGRICULTURE_REVOLUTIONIZING_CROP_MONITORING_AND_DISEASE_MANAGEMENT_THROUGH_PRECISION_TECHNOLOGY.

- Kaur, A.; et al. “Artificial Intelligence Driven Smart Farming for Accurate Detection of Potato Diseases: A Systematic Review,” in IEEE Access, vol. 12, pp. 193902–193922, 2024. [CrossRef]

- Jafar, A.; Bibi, N.; Naqvi, R.A.; Sadeghi-Niaraki, A.; Jeong, D. Revolutionizing agriculture with artificial intelligence: plant disease detection methods, applications, and their limitations. Frontiers in Plant Science 2024, 15, 1356260. [CrossRef] [PubMed]

- Arulmurugan, S.; Bharathkumar, V.; Gokulachandru, S.; Yusuf, M.M. “Plant Guard: AI-Enhanced Plant Diseases Detection for Sustainable Agriculture,” 2024 International Conference on Inventive Computation Technologies (ICICT), Lalitpur, Nepal, 2024, pp. 726–730. [CrossRef]

- Tariq, M.; Ali, U.; Abbas, S.; Hassan, S.; Naqvi, R.A.; Khan, M.A.; Jeong, D. Corn leaf disease: Insightful diagnosis using VGG16 empowered by explainable AI. Frontiers in Plant Science 2024, 15. [Google Scholar] [CrossRef]

- Sagar, S.; Javed, M.; Doermann, D.S. (n.d.). Leaf-based plant disease detection and explainable AI. Indian Institute of Information Technology, Allahabad, & University at Buffalo, NY, USA.

- Mahmud, T.; et al. “Explainable AI for Tomato Leaf Disease Detection: Insights into Model Interpretability,” 2023 26th International Conference on Computer and Information Technology (ICCIT), Cox’s Bazar, Bangladesh, 2023, pp. 1–6. [CrossRef]

- Khandaker, M.A.A.; Raha, Z.S.; Islam, S.; Muhammad, T. (2025). Explainable AI-Enhanced Deep Learning for Pumpkin Leaf Disease Detection: A Comparative Analysis of CNN Architectures. ArXiv. Retrieved from https://arxiv.org/abs/2501.05449.

- Ashoka, S.B.; Pramodha, M.; Muaad, A.Y.; Nyange, R. (2024). Explainable AI-based framework for banana disease detection. Research Square. [CrossRef]

- Khan, Z.A.; Waqar, M.; Cheema, K.M.; Bakar Mahmood, A.A.; Ain, Q.; Chaudhary, N.I.; Alshehri, A.; Alshamrani, S.S.; Zahoor Raja, M.A. EA-CNN: Enhanced attention-CNN with explainable AI for fruit and vegetable classification. Heliyon 2024, 10, e40820. [CrossRef] [PubMed]

- Dubey, S.R.; Jalal, A.S. (2014). Adapted approach for fruit disease identification using images. arXiv.

- Alhwaiti, Y.; Ishaq, M.; Siddiqi, M.H.; Waqas, M.; Alruwaili, M.; Alanazi, S.; Khan, A.; Khan, F. (2024). Early detection of late blight tomato disease using histogram oriented gradient based support vector machine. arXiv. https://arxiv.org/abs/2306.08326.

- Alagu, S. (2020). Apple Fruit disease detection using Multiclass SVM classifier and IP Webcam APP.

- Dewliya, S.; Singh, M.P. (2015). Detection and classification for apple fruit diseases using support vector machine and chain code.

- Anu, S.; Nisha, T.; Ramya, R.; Rizuvana, M. Fruit Disease Detection Using GLCM And SVM Classifier. International Journal of Scientific Research in Computer Science, Engineering and Information Technology 2019, 365–371. [CrossRef]

- Sanath Rao, U.; Swathi, R.; Sanjana, V.; Arpitha, L.; Chandrasekhar, K.; Chinmayi; Naik, P.K. Deep learning precision farming: Grapes and mango leaf disease detection by transfer learning. Global Transitions Proceedings 2021, 2, 535–544. [CrossRef]

- Dananjayan, S.; Tang, Y.; Zhuang, J.; Hou, C.; Luo, S. Assessment of state-of-the-art deep learning-based citrus disease detection techniques using annotated optical leaf images. Computers and Electronics in Agriculture 2022, 193, 106658. [Google Scholar] [CrossRef]

- Ali, H.; Lali, M.I.; Nawaz, M.Z.; Sharif, M.; Saleem, B.A. Symptom-based automated detection of citrus diseases using color histogram and textural descriptors. Computers and Electronics in Agriculture 2017, 138, 92–104. [Google Scholar] [CrossRef]

- Zhang, S.; Wu, X.; You, Z.; Zhang, L. Leaf image-based cucumber disease recognition using sparse representation classification. Computers and Electronics in Agriculture 2017, 134, 135–141. [Google Scholar] [CrossRef]

- Lamani, S.B. (2018). Pomegranate fruits disease classification with K-means clustering. International Journal for Research Trends and Innovation, 3(3), 74–79. https://www.ijrti.org/papers/IJRTI1803012.pdf.

- Doh, B.; Zhang, D.; Shen, Y.; Hussain, F.; Doh, R.F.; Ayepah, K. “Automatic Citrus Fruit Disease Detection by Phenotyping Using Machine Learning,” 2019 25th International Conference on Automation and Computing (ICAC), Lancaster, UK, pp. 1–5. [CrossRef]

- Tiwari, R.; Chahande, M. (2021). Apple Fruit Disease Detection and Classification Using K-Means Clustering Method. In: Das, S.; Mohanty, M.N. (eds) Advances in Intelligent Computing and Communication. Lecture Notes in Networks and Systems, vol 202. Springer, Singapore. [CrossRef]

- Devi, P.K. and Rathamani, “Image Segmentation K-Means Clustering Algorithm for Fruit Disease Detection Image Processing,” 2020 4th International Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 2020, pp. 861–865. [CrossRef]

- Shin, J.; Chang, Y.K.; Heung, B.; Nguyen-Quang, T.; Price, G.W.; Al-Mallahi, A. A deep learning approach for RGB image-based powdery mildew disease detection on strawberry leaves. Computers and Electronics in Agriculture 2021, 183, 106042. [Google Scholar] [CrossRef]

- Yadav, S.; Sengar, N.; Singh, A.; Singh, A.; Dutta, M.K. Identification of disease using deep learning and evaluation of bacteriosis in peach leaf. Ecological Informatics 2021, 61, 101247. [Google Scholar] [CrossRef]

- Momeny, M.; Jahanbakhshi, A.; Hadipour-Rokni, R.; Zhang, Y.-D.; Neshat, A.A.; Ampatzidis, Y. Detection of citrus black spot disease and ripeness level in orange fruit using learning-to-augment incorporated deep networks. Ecological Informatics 2022, 72, 101829. [Google Scholar] [CrossRef]

- Zhu, D.; Xie, L.; Chen, B.; Tan, J.; Deng, R.; Zheng, Y.; Hu, Q.; Mustafa, R.; Chen, W.; Yi, S.; Yung, K.; IP, A.W.H. Knowledge graph and deep learning-based pest detection and identification system for fruit quality. Internet of Things 2023, 21, 100649. [Google Scholar] [CrossRef]

- Saleem, M.; Arif, K.M.; Potgieter, J. A performance-optimized deep learning-based plant disease detection approach for horticultural crops of New Zealand. IEEE Access 2022, 10, 3201104. [Google Scholar] [CrossRef]

- James, J.A.; Manching, H.K.; Mattia, M.R.; Bowman, K.D.; Hulse-Kemp, A.M.; Beksi, W.J. CitDet: A benchmark dataset for citrus fruit detection. arXiv 2023, arXiv:2309.05645. [Google Scholar] [CrossRef]

- Wise, K.; Wedding, T.; Selby-Pham, J. Application of automated image colour analyses for the early-prediction of strawberry development and quality. Scientia Horticulturae 2022, 305, 111316. [Google Scholar] [CrossRef]

- Hasan, R.I.; Alzubaidi, L.; Yusuf, S.M.; Rahim, M.S.M. Automated masks generation for coffee and apple leaf infected with single or multiple diseases-based color analysis approaches. Informatics in Medicine Unlocked 2021, 27, 100837. [Google Scholar] [CrossRef]

- Ganesh, P.; Volle, K.; Burks, T.F.; Mehta, S.S. DeepOrange: Mask R-CNN-based orange detection and segmentation. IFAC PapersOnLine 2019, 52, 70–75. [Google Scholar] [CrossRef]

- Abdulridha, J.; Ampatzidis, Y.; Roberts, P.; Kakarla, S.C. Detecting powdery mildew disease in squash at different stages using UAV-based hyperspectral imaging and artificial intelligence. Biosystems Engineering 2020, 197, 48–60. [Google Scholar] [CrossRef]

- Qin, J.; Burks, T.F.; Kim, M.S.; Chao, K.; Ritenour, M.A. Citrus canker detection using hyperspectral reflectance imaging and PCA-based image classification method. Sensing and Instrumentation for Food Quality and Safety 2008, 2, 168–177. [Google Scholar] [CrossRef]

- Bagheri, N.; Mohamadi-Monavar, H.; Azizi, A.; Ghasemi, A. Detection of Fire Blight disease in pear trees by hyperspectral data. European Journal of Remote Sensing 2018, 51, 1–10. [Google Scholar] [CrossRef]

- Zhao, X.; Burks, T.F.; Qin, J.; Ritenour, M.A. Effect of fruit harvest time on citrus canker detection using hyperspectral reflectance imaging. Sensing and Instrumentation for Food Quality and Safety 2010, 4, 126–135. [Google Scholar]

- Lorente, D.; Aleixos, N.; Gómez-Sanchis, J.; Cubero, S.; García-Navarrete, O.L.; Blasco, J. Recent advances and applications of hyperspectral imaging for fruit and vegetable quality assessment. Food and Bioprocess Technology 2012, 5, 1121–1142. [Google Scholar]

- Min, D.; Zhao, J.; Bodner, G.; Ali, M.; Li, F.; Zhang, X.; Rewald, B. Early decay detection in fruit by hyperspectral imaging–Principles and application potential. Food Control 2023, 152, 109830. [Google Scholar] [CrossRef]

- Sighicelli, M.; Colao, F.; Lai, A.; Patsaeva, S. (2008, February). Monitoring post-harvest orange fruit disease by fluorescence and reflectance hyperspectral imaging. In I International Symposium on Horticulture in Europe 817 (pp. 277–284).

- Jung, D.H.; Kim, J.D.; Kim, H.Y.; Lee, T.S.; Kim, H.S.; Park, S.H. A hyperspectral data 3D convolutional neural network classification model for diagnosis of gray mold disease in strawberry leaves. Frontiers in Plant Science 2022, 13, 837020. [Google Scholar] [PubMed]

- Mehl, P.M.; Chen, Y.R.; Kim, M.S.; Chan, D.E. Development of hyperspectral imaging technique for the detection of apple surface defects and contaminations. Journal of Food Engineering 2004, 61, 67–81. [Google Scholar]

- Pujari, J.D.; Yakkundimath, R.; Byadgi, A.S. (2014, December). Identification and classification of fungal disease affected on agriculture/horticulture crops using image processing techniques. In 2014 IEEE International Conference on Computational Intelligence and Computing Research (pp. 1–4). IEEE.

- Genangeli, A.; Allasia, G.; Bindi, M.; Cantini, C.; Cavaliere, A.; Genesio, L.; ...; Gioli, B. A novel hyperspectral method to detect moldy core in apple fruits. Sensors 2022, 22, 4479. [Google Scholar]

- Qin, J.; Burks, T.F.; Zhao, X.; Niphadkar, N.; Ritenour, M.A. Multispectral detection of citrus canker using hyperspectral band selection. Transactions of the ASABE 2011, 54, 2331–2341. [Google Scholar]

- Pansy, D.L.; Murali, M. UAV hyperspectral remote sensor images for mango plant disease and pest identification using MD-FCM and XCS-RBFNN. Environmental Monitoring and Assessment 2023, 195, 1120. [Google Scholar]

- Fernández, C.I.; Leblon, B.; Wang, J.; Haddadi, A.; Wang, K. Detecting infected cucumber plants with close-range multispectral imagery. Remote Sensing 2021, 13, 2948. [Google Scholar]

- Haider, I.; Khan, M.A.; Nazir, M.; Kim, T.; Cha, J.-H. An artificial intelligence-based framework for fruits disease recognition using deep learning. Computer Systems Science & Engineering 2024, 48, 1–15. [Google Scholar] [CrossRef]

- Li, J.; Zhu, Z.; Liu, H.; Su, Y.; Deng, L. Strawberry R-CNN: Recognition and counting model of strawberry based on improved Faster R-CNN. Ecological Informatics 2023, 75, 102210. [Google Scholar] [CrossRef]

- Li, H.; Jin, Y.; Zhong, J.; Zhao, R. A fruit tree disease diagnosis model based on stacking ensemble learning. Complexity 2021, 2021, 6868592. [Google Scholar]

- Mehmood, A.; Ahmad, M.; Ilyas, Q.M. On precision agriculture: enhanced automated fruit disease identification and classification using a new ensemble classification method. Agriculture 2023, 13, 500. [Google Scholar] [CrossRef]

- Yousuf, A.; Khan, U. Ensemble classifier for plant disease detection. International Journal of Computer Science and Mobile Computing 2021, 10, 14–22. [Google Scholar]

- Javidan, S.M.; Banakar, A.; Vakilian, K.A.; Ampatzidis, Y. Tomato leaf diseases classification using image processing and weighted ensemble learning. Agronomy Journal 2024, 116, 1029–1049. [Google Scholar]

- Nader, A.; Khafagy, M.H.; Hussien, S.A. Grape leaves diseases classification using ensemble learning and transfer learning. Int. J. Adv. Comput. Sci. Appl 2022, 13, 563–571. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).