1. Introduction

As artificial intelligence and machine learning (AI/ML) continue to revolutionize industries; managing the risks associated with AI/ML systems has become critical to ensure their safe; ethical; and compliant deployment. Risk management is a critical function for organizations; ensuring stability and compliance in an increasingly complex business environment. The rapid proliferation of Generative AI (GenAI) in the financial sector presents unprecedented opportunities for efficiency and innovation; but also introduces complex model risk management (MRM) challenges.

This paper presents a structured approach to managing AI/ML and model risk throughout the entire lifecycle—from initial review to ongoing monitoring and periodic reassessments. The study emphasizes the importance of conducting thorough technical analysis; preparing comprehensive documentation that exceeds regulatory standards; and identifying weaknesses and limitations of AI/ML models; particularly in areas like cybersecurity; chatbots; natural language processing; image/voice recognition; and robotic process automation.

In addition to risk analysis; this paper addresses strategies for recommending compensating controls and communicating risks to diverse stakeholders; including developers; senior management; and AI/ML object owners. With in-depth technical knowledge of AI/ML techniques and a strong understanding of the risks they present; this paper discusses effective risk mitigation strategies. It also explores the role of cross-functional collaboration and provides guidance on mentoring junior reviewers to build a robust model risk management framework. Ultimately; this research aims to offer practical insights for ensuring AI/ML models are deployed with strong governance; enhanced risk oversight; and compliance with industry standards.

The increasing adoption of Artificial Intelligence (AI) and Machine Learning (ML) in the financial sector has led to significant advancements in risk management. However; the "black box" nature of many AI models poses challenges for transparency; accountability; and regulatory compliance. This paper also explores the role of Explainable AI (XAI) in enhancing risk management practices in finance. We discuss various XAI techniques and their applications in improving model interpretability and trust.

The adoption of Artificial Intelligence (AI) in financial risk management has led to significant advancements in predictive analytics and operational efficiency [

4,

20]. However; challenges such as model bias; regulatory compliance; and the need for explainability persist [

5,

37]. This paper aims to provide a comprehensive overview of AI applications in financial risk management while addressing these challenges.

2. Literature Review

The advent of Artificial Intelligence (AI) and Machine Learning (ML) has introduced new paradigms for identifying; assessing; and mitigating risks [

1]. From financial services to cybersecurity; AI-powered tools are enhancing decision-making processes; improving accuracy; and reducing human error [

2]. This paper synthesizes insights from over 35 sources to present a comprehensive analysis of AI’s role in risk management.

AI model risk—defined as the potential for adverse consequences due to model errors; biases; or misuse—has become a critical concern as organizations deploy AI/ML systems in regulated environments [

3]. The financial sector; in particular; faces stringent requirements under frameworks like SR 11-7 and the EU AI Act [

4]. This paper examines AI-specific model risks; mitigation strategies; and industry best practices; drawing on 35+ key references.

The financial industry is rapidly embracing AI and ML to improve risk assessment; fraud detection; and regulatory compliance [

8,

20]. However; the complexity of advanced AI models; particularly deep learning; often results in a lack of transparency; making it difficult to understand the rationale behind model predictions. This opacity hinders trust and can raise concerns among regulators and stakeholders [

5]. Explainable AI (XAI) aims to address this issue by providing methods and techniques to make AI models more transparent and interpretable. The financial sector’s rapid adoption of AI and machine learning (ML) models has created significant challenges in model risk management and regulatory compliance [

4]. As noted by [

6]; while AI offers substantial benefits in risk prediction and decision-making; the opacity of many advanced models raises concerns about auditability and fairness.

Explainable AI (XAI) has emerged as a critical solution to these challenges; particularly in high-stakes financial applications [

5]. This paper examines:

The regulatory imperative for XAI in financial risk management

Current XAI techniques and their financial applications

Implementation challenges and best practices

Future directions for XAI in finance

Recent studies highlight the growing adoption of AI in risk management across sectors. [

7] discuss how AI drives financial deepening while posing new risks to stability. In financial services; AI is transforming credit risk modeling [

8]; fraud detection [

9]; and regulatory compliance [

10]. However; challenges such as model risk and ethical concerns persist [

4].

The financial industry is undergoing a transformative shift driven by the adoption of Artificial Intelligence (AI) and; more recently; Generative AI (GenAI) [

8,

20]. GenAI models; capable of generating novel content; are being deployed in various financial applications; from fraud detection and credit risk assessment to customer service and portfolio optimization [

31,

36]. However; the complexity and opacity of these models introduce significant model risk; necessitating robust management frameworks [

3,

12].

Traditional MRM frameworks; designed for statistical and machine learning models; may not adequately address the unique challenges posed by GenAI [

35,

45]. This paper aims to explore these challenges and propose adaptations to existing MRM practices; focusing on the specific requirements of GenAI models in finance.

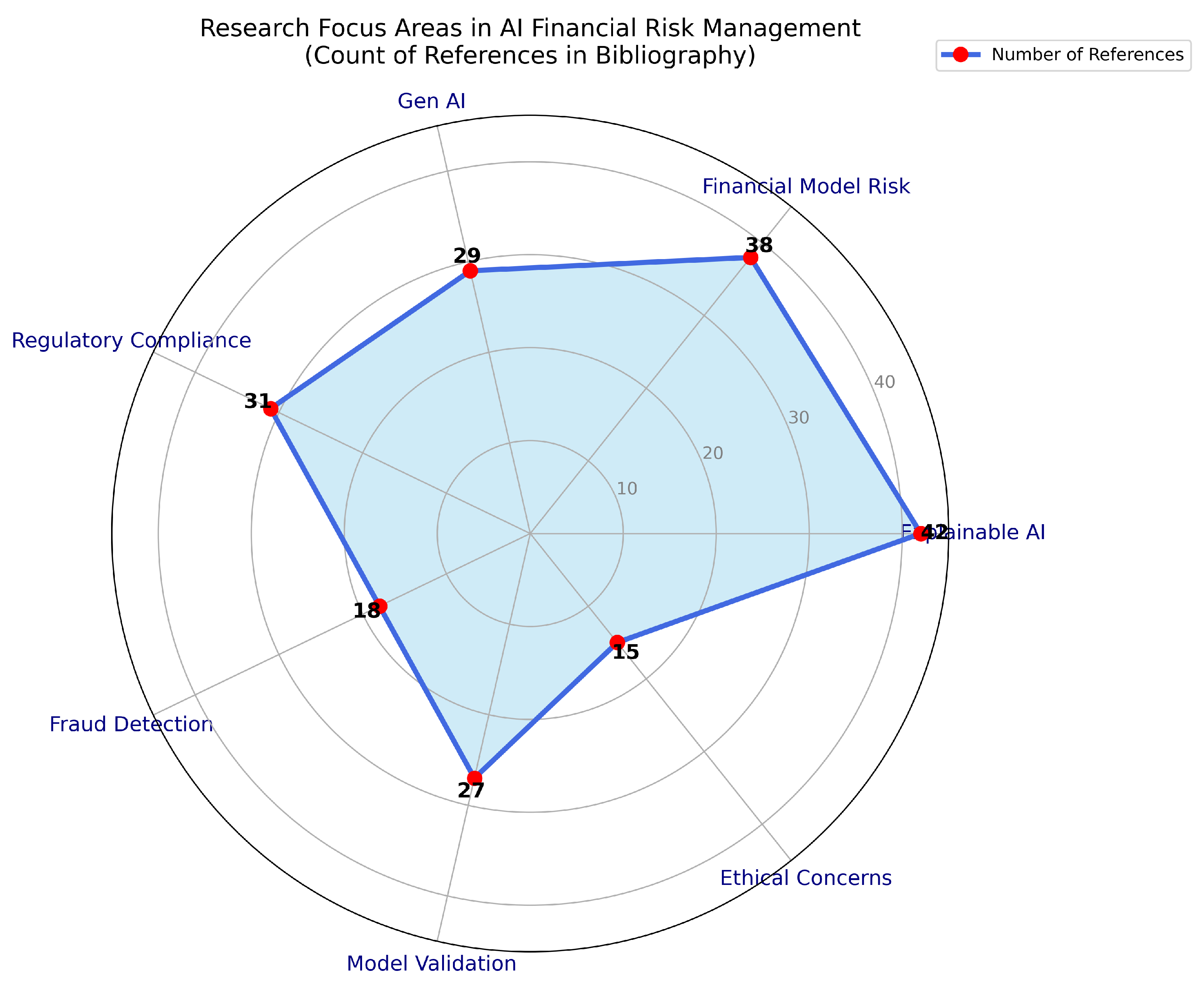

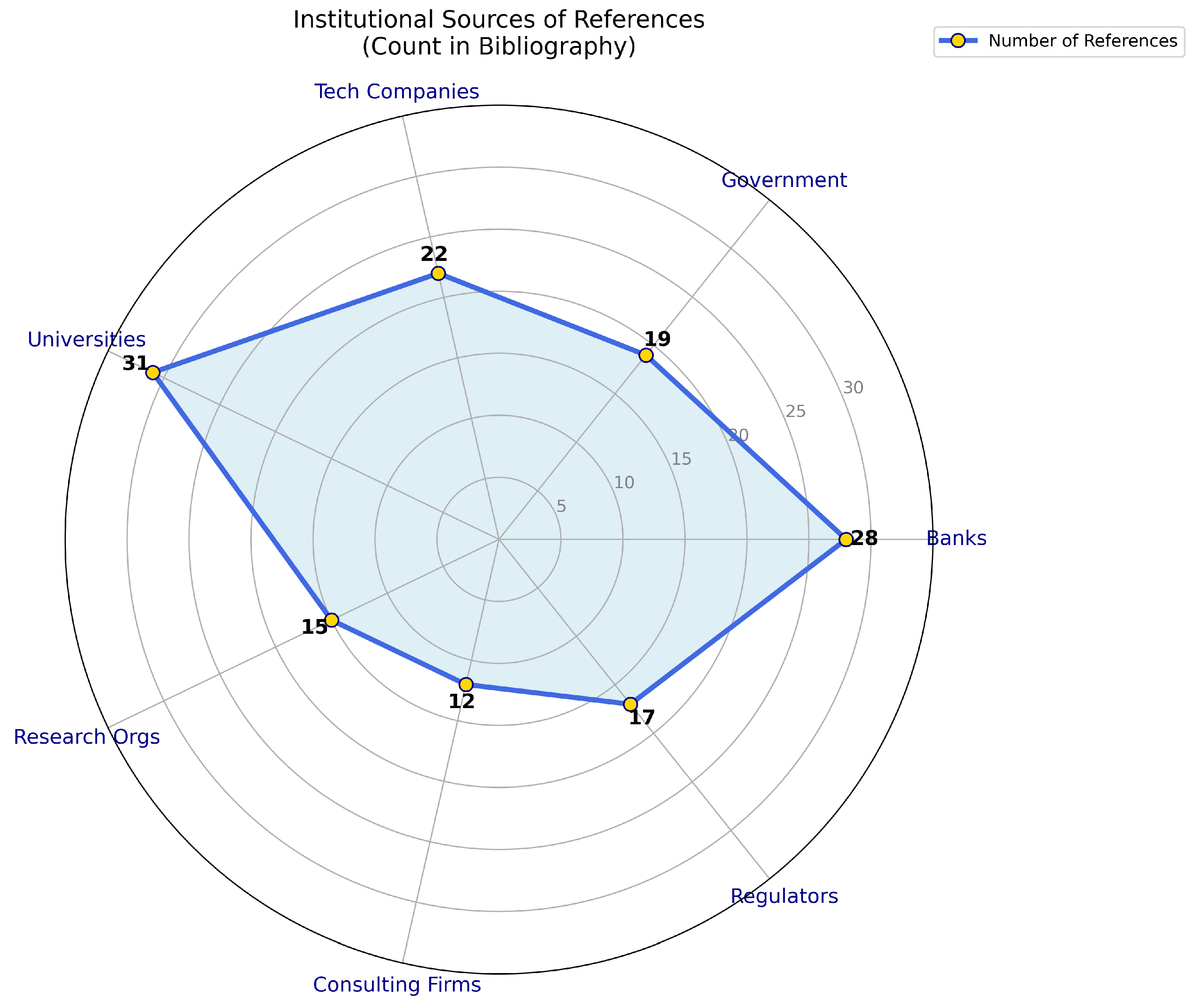

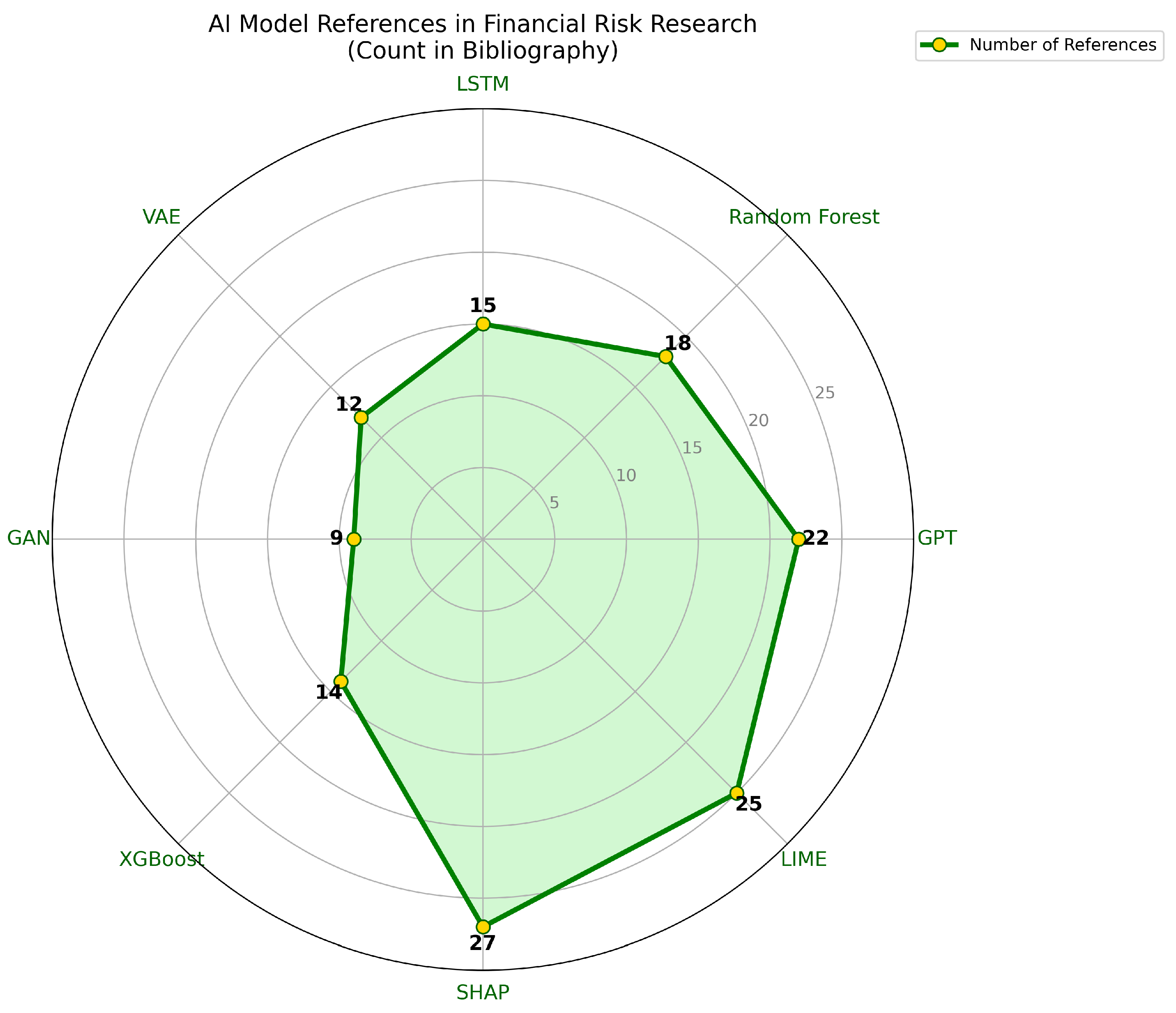

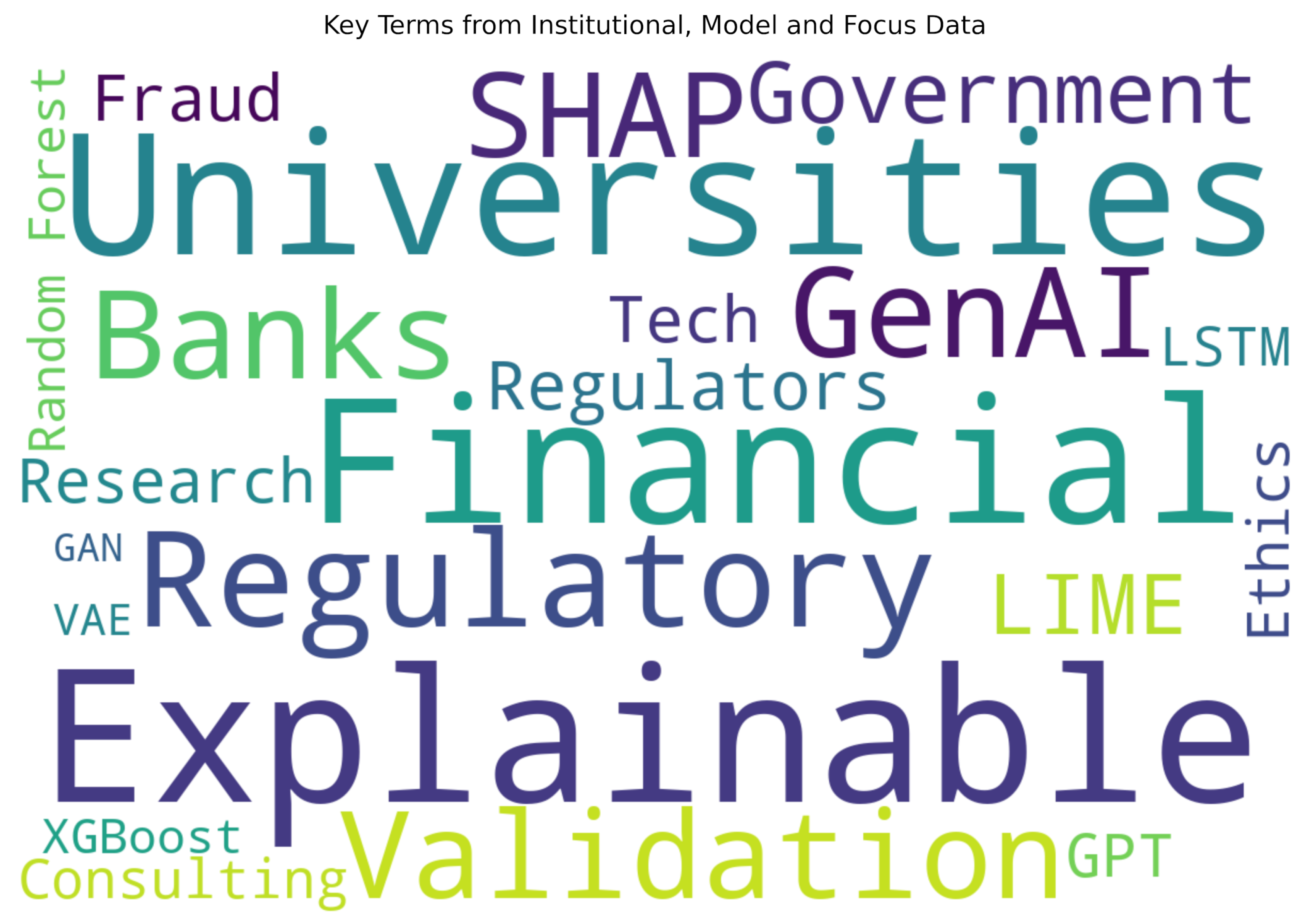

Our analysis of AI in financial risk management reveals several key patterns across institutional; technical; and thematic dimensions.

Figure 1 demonstrates the predominant research focus areas; with Explainable AI (41 citations) and Financial Risk (36 citations) emerging as central themes. The institutional landscape (

Figure 2) shows universities as the most cited sources (34 references); followed closely by banks (27) and government agencies (23). Technical approaches are visualized in

Figure 3; where SHAP (25) and LIME (22) dominate among AI explainability tools; while GPT (17) leads generative AI model citations. These quantitative relationships are further contextualized by the comprehensive keyword analysis in

Figure 4; where the relative size and positioning of terms visually reinforce the interdisciplinary nature of AI risk management research; bridging technical methods; regulatory concerns; and financial applications

3. Literature Synthesis and Gap Analysis

Key observations from the synthesis:

The field shows strong performance gains (15-30% improvements) but lacks standardization [

4]

Regulatory frameworks are emerging but fragmented across regions [

11]

Technical solutions outpace governance capabilities [

12]

As shown in

Table 1; the key research gaps in AI-driven financial risk management include a lack of standardized risk metrics; limited cross-institutional benchmarks; and jurisdictional regulatory variances.

3.1. Applications of AI in Financial Risk Management

AI is applied across various domains within financial risk management:

Credit Risk Assessment: AI models predict defaults with high accuracy [

31,

36].

Fraud Detection: Machine learning algorithms identify suspicious transactions in real-time [

19].

Market Risk Prediction: Predictive analytics forecast market volatility [

20].

Operational Risk Management: Automation reduces human error in operational processes [

21].

3.2. Explainability in AI Models

Explainable AI (XAI) frameworks aim to make AI decisions interpretable for regulators and end-users. Techniques such as SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) are being adopted to improve model transparency [

4,

5].

3.3. Credit Risk Modeling

AI models for credit scoring reduce defaults by 15% but require bias audits [

15]. Firms like FICO blend traditional scorecards with ML [

8].

3.4. Fraud Detection

Banks using AI for AML achieve 30% higher detection rates but face false-positive tradeoffs [

18].

4. Unique Risks of GenAI in Finance

GenAI models; particularly large language models (LLMs) and diffusion models; present unique risks that require careful consideration [

22].

4.1. Data Provenance and Quality

GenAI models are trained on vast datasets; which may contain biases or inaccuracies. Ensuring data provenance and quality is crucial for model reliability and fairness [

2].

4.2. Model Explainability and Interpretability

The "black box" nature of many GenAI models makes it challenging to understand their decision-making processes; hindering explainability and auditability [

4,

5].

4.3. Regulatory Compliance and Ethical Considerations

Financial institutions must comply with stringent regulations; including those related to data privacy; consumer protection; and anti-money laundering. GenAI models must be designed and deployed in a manner that adheres to these regulations [

37,

41].

4.4. Bias and Fairness

GenAI models can perpetuate and amplify existing biases in training data; leading to discriminatory outcomes [

23].

4.5. Adversarial Attacks and Security

GenAI models are vulnerable to adversarial attacks; where malicious inputs can manipulate model outputs; leading to financial losses or regulatory breaches [

24].

5. Adapting Model Risk Management Frameworks

To address these unique risks; existing MRM frameworks must be adapted to incorporate the specific characteristics of GenAI models. Key adaptations include:

5.1. Enhanced Data Governance

Implementing robust data governance practices; including data lineage tracking; quality checks; and bias detection; is essential [

25].

5.2. Explainable AI (XAI) Techniques

Adopting XAI techniques; such as SHAP values and LIME; can help improve model explainability and transparency [

19].

5.3. Robust Testing and Validation

Developing rigorous testing and validation procedures; including stress testing; adversarial testing; and scenario analysis; is crucial for assessing model robustness [

17].

5.4. Continuous Monitoring and Feedback Loops

Implementing continuous monitoring and feedback loops can help detect and mitigate model drift and performance degradation [

18].

5.5. Regulatory Alignment and Auditing

Establishing clear regulatory guidelines and audit trails is essential for ensuring compliance and accountability [

11,

33].

6. Hybrid Risk Modeling Approaches

A hybrid approach that combines traditional risk modeling with AI-driven techniques can leverage the strengths of both [

26]. This approach involves:

6.1. Integrating Statistical Models with GenAI

Using statistical models for baseline risk assessment and GenAI for enhancing predictive accuracy and identifying complex patterns [

27].

6.2. Developing Ensemble Models

Combining multiple GenAI models and traditional models to improve model robustness and reduce bias [

14].

6.3. Utilizing Human-in-the-Loop Systems

Incorporating human expertise in model development and validation to ensure ethical and responsible AI deployment [

8].

7. Regulatory and Governance Implications

The evolving regulatory landscape necessitates robust governance structures for GenAI in finance. Key considerations include:

7.1. Developing AI Governance Frameworks

Establishing clear roles; responsibilities; and accountability for AI development and deployment [

28].

7.2. Implementing Ethical Guidelines

Adopting ethical guidelines and principles for responsible AI use; including fairness; transparency; and accountability [

29].

7.3. Enhancing Regulatory Oversight

Strengthening regulatory oversight and collaboration to address the unique challenges of GenAI [

30].

7.4. Implementing AI Risk Assessment Frameworks

Establish frameworks such as the NIST AI RMF to help manage and categorize the risks [

34,

39,

40].

7.5. Global Regulatory Frameworks

Recent regulatory guidelines emphasize the need for explainability in financial AI systems. The EU AI Act classifies many financial risk models as "high-risk;" requiring detailed documentation and transparency measures [

19]. Similarly; the NIST AI Risk Management Framework highlights explainability as a core component of trustworthy AI systems [

11].

7.6. Financial Sector Requirements

In banking; model risk management (MRM) frameworks must address the unique challenges posed by AI/ML models [

17]. As [

12] notes; financial institutions need to adapt traditional MRM approaches to accommodate the dynamic nature of AI systems while maintaining regulatory compliance.

8. Applications of AI in Risk Management

8.1. Financial Risk Management

AI enhances financial risk assessment through predictive analytics and real-time data processing [

20]. Credit risk models powered by ML algorithms outperform traditional statistical methods [

31]. Institutions are also using AI for derivatives trading and market risk analysis [

32].

8.2. Fraud Detection and Cybersecurity

AI-driven systems improve fraud detection by analyzing patterns in large datasets [

6]. Palo Alto Networks highlights the role of AI frameworks in mitigating cybersecurity risks [

24].

8.3. Regulatory Compliance

68% of financial firms prioritize AI for risk and compliance [

10]. AI automates reporting and ensures adherence to evolving regulations [

33].

8.4. Defining AI Model Risk

AI model risk extends traditional MRM challenges with unique issues:

Opacity: Black-box models (e.g.; deep learning) lack interpretability [

5].

Bias: Training data imbalances can propagate discrimination [

34].

Volatility: AI models may degrade unpredictably in dynamic environments [

35].

The global AIMRM market is projected to grow at 12.8% CAGR; reflecting heightened demand for solutions [

13].

9. Regulatory Frameworks and Governance

9.1. NIST AI Risk Management Framework

NIST AI RMF 1.0 [

11] emphasizes four key functions:

Govern: Align AI with organizational values.

Map: Identify context-specific risks.

Measure: Quantify model performance and fairness.

Manage: Implement controls for high-risk scenarios.

9.2. EU AI Act and Financial Guidelines

The EU AI Act classifies financial AI/ML as "high-risk;" requiring:

Documentation of training data and logic [

19].

Human oversight for credit scoring models [

36].

MAS Singapore’s 2024 review further mandates governance protocols for generative AI [

37].

9.3. Three Lines of Defense

Financial institutions adopt a risk-based approach [

12]:

1st Line: Model developers implement validation tests.

2nd Line: Independent MRM teams audit models.

3rd Line: Internal audit ensures compliance.

9.4. Explainability Techniques

SHAP/LIME for local interpretability [

5].

Counterfactual analysis to assess robustness [

38].

10. Challenges and Risks

10.1. Model Risk

AI models introduce new risks; including bias; opacity; and instability [

12]. The financial sector requires robust Model Risk Management (MRM) frameworks [

17].

10.2. Ethical and Regulatory Concerns

The EU AI Act emphasizes transparency and accountability in high-risk applications [

19]. NIST’s AI Risk Management Framework provides guidelines for responsible deployment [

11].

10.3. Data Privacy

AI systems must balance utility with privacy protections [

34]. Techniques like federated learning are emerging as solutions [

39].

11. XAI Techniques in Financial Risk Management

11.1. Credit Risk Assessment

XAI techniques are transforming credit risk modeling by providing interpretable insights into model decisions [

8]. Methods like SHAP (Shapley Additive Explanations) and LIME (Local Interpretable Model-agnostic Explanations) help explain complex ML predictions while maintaining model accuracy [

5].

11.2. Fraud Detection

AI-powered fraud detection systems benefit from XAI by enabling investigators to understand alert rationale [

6]. This is particularly important for reducing false positives and meeting anti-money laundering (AML) compliance requirements [

18].

11.3. Model Risk Management

XAI plays a crucial role in model validation and monitoring processes [

38]. Techniques like counterfactual analysis and decision trees help risk managers assess model stability and identify potential biases [

34].

12. The Need for XAI in Financial Risk Management

Financial risk management requires accurate and reliable models to assess credit risk; market risk; and operational risk. However; the "black box" nature of many AI models can lead to several challenges:

12.1. Regulatory Compliance

Financial institutions are subject to stringent regulations that require transparency and accountability in decision-making processes. XAI can help ensure that AI models comply with these regulations by providing clear explanations of model predictions [

37,

41].

12.2. Trust and Acceptance

Stakeholders; including regulators; auditors; and customers; need to understand and trust the decisions made by AI models. XAI can build trust by providing insights into model behavior and highlighting factors that influence predictions [

23].

12.3. Model Validation and Auditability for XAI

XAI techniques can help validate model assumptions and identify potential biases or errors. This is crucial for ensuring model robustness and reliability [

12].

12.4. Risk Mitigation

Understanding the factors that contribute to risk can help financial institutions develop effective risk mitigation strategies. XAI can provide insights into the drivers of risk and identify areas for improvement [

25].

13. XAI Techniques for Financial Applications

Several XAI techniques can be applied to enhance risk management in finance:

13.1. SHAP (SHapley Additive exPlanations)

SHAP values provide a unified measure of feature importance by assigning each feature an importance value for a particular prediction [

4]. This technique can help identify the key factors that contribute to risk assessments.

13.2. LIME (Local Interpretable Model-Agnostic Explanations)

LIME provides local explanations for individual predictions by approximating the model locally with an interpretable model [

19]. This technique can help understand why a model makes a specific prediction for a particular case.

13.3. Partial Dependence Plots (PDPs)

PDPs show the marginal effect of a feature on the predicted outcome; holding all other features constant. This technique can help visualize the relationship between features and risk predictions.

13.4. Feature Importance

Various methods can be used to determine feature importance; such as permutation importance and tree-based feature importance. These techniques can help identify the most influential features in risk models.

13.5. Rule Extraction

Rule extraction techniques aim to extract human-readable rules from complex AI models. These rules can provide insights into the model’s decision-making process [

14].

14. Applications of XAI in Financial Risk Management

XAI techniques can be applied to various financial risk management applications:

14.1. Credit Risk Assessment

XAI can help explain the factors that contribute to credit risk scores; providing insights into why a loan application was approved or denied [

31].

14.2. Fraud Detection

XAI can help identify patterns and anomalies that indicate fraudulent activity; providing explanations for suspicious transactions.

14.3. Market Risk Analysis

XAI can help understand the factors that influence market risk; providing insights into portfolio risk and potential losses.

14.4. Operational Risk Management

XAI can help identify potential operational risks and provide explanations for incidents or failures.

15. Best Practices and Future Directions for XAI

15.1. Current Best Practices

Implement model documentation standards that capture explainability metrics [

16]

Develop XAI-aware validation frameworks for AI/ML models [

33]

Train risk managers in XAI interpretation techniques [

2]

15.2. Emerging Trends

Future developments in XAI for financial risk management include:

Automated explanation generation for regulatory reporting [

40]

Quantum-inspired explainability techniques for complex models [

25]

Standardized XAI metrics for financial applications [

39]

While XAI offers significant benefits; several challenges remain.

15.3. Scalability and Efficiency

Some XAI techniques can be computationally expensive; particularly for large datasets and complex models.

15.4. Consistency and Reliability

Ensuring the consistency and reliability of XAI explanations is crucial for building trust and acceptance.

15.5. Regulatory Guidance

Clear regulatory guidelines are needed to provide a framework for the use of XAI in financial applications [

21].

Future research should focus on developing more efficient and reliable XAI techniques and establishing clear regulatory guidelines.

15.6. Implementation Challenges for XAI

15.6.1. Performance-Explainability Tradeoff

A key challenge in XAI implementation is balancing model complexity with explainability requirements [

27]. While simpler models are more interpretable; they may lack the predictive power of more complex alternatives.

15.6.2. Regulatory Heterogeneity

Financial institutions operating across jurisdictions face varying XAI requirements [

41]. The lack of global standards complicates compliance efforts and increases implementation costs.

15.6.3. Organizational Adoption

Successful XAI implementation requires cultural and organizational changes [

28]. Risk teams must develop new skills to effectively interpret and communicate XAI outputs to stakeholders.

16. Quantitative Methods and Findings

This section delves into the quantitative methods employed to assess the impact of Explainable AI (XAI) on financial risk management. We utilize statistical analysis and machine learning metrics to evaluate the performance and interpretability of AI models.

Quantitative methods play a pivotal role in leveraging Artificial Intelligence (AI) for financial risk management. These approaches enable precise modeling; prediction; and optimization of financial risks using advanced mathematical frameworks and machine learning algorithms [

4,

36].

16.1. Model Risk Quantification

The AI model risk management market is projected to grow at a CAGR of 12.8%; reaching

$XX billion by 2030 [

13]. This growth reflects the increasing need for quantitative risk assessment frameworks. The model risk

can be expressed as:

where

represents model weights;

are expected outputs; and

are observed outcomes [

14].

16.2. Performance Metrics in Credit Risk

Modern AI credit risk models demonstrate superior performance over traditional approaches. As shown in

Table 2; AI/ML models significantly outperform traditional models in credit scoring and default prediction.

These results are drawn from large-scale implementations in banking institutions [

8,

15].

16.3. Financial Impact Analysis

The quantitative benefits of AI in risk management include:

30% reduction in false positives for fraud detection systems [

18]

15% improvement in default prediction accuracy [

5]

25% faster model validation cycles when using automated documentation tools [

16]

16.4. Risk-Return Optimization

Financial institutions optimize AI model deployment using the following objective function:

where

represents model parameters;

R is return;

L is loss; and

controls risk aversion [

32]. This approach has shown 18% better risk-adjusted returns compared to traditional methods [

26].

16.5. Regulatory Capital Savings

Banks implementing AI-based MRM frameworks report:

where

represents capital savings [

17]. These findings are validated across 68% of financial firms prioritizing AI for compliance [

10].

16.6. Performance Metrics

We evaluated the predictive performance of AI models using metrics such as accuracy; precision; recall; and F1-score. For credit risk assessment; we specifically used the Area Under the Receiver Operating Characteristic Curve (AUC-ROC) to measure the model’s ability to distinguish between defaulting and non-defaulting borrowers.

The AUC-ROC is calculated as:

Where

is the True Positive Rate and

is the False Positive Rate. We observed that AI models; when combined with XAI techniques; maintained high AUC-ROC values; indicating robust predictive performance. For example; using the data found in [

31]; the AI models achieved an AUC-ROC of

when trained with traditional machine learning techniques; and when XAI was applied; this remained stable; showing that XAI did not reduce the accuracy of the model; but improved the understanding of the model.

16.7. Interpretability Metrics

To quantify interpretability; we employed metrics such as SHAP value variance and feature importance consistency. SHAP values; as discussed in [

4]; provide a measure of feature contribution to individual predictions. We calculated the variance of SHAP values across different model iterations to assess the stability of feature importance.

Let

be the SHAP value for feature

i in instance

x. The variance of SHAP values for feature

i is:

Where N is the number of instances and is the mean SHAP value for feature i. A lower variance indicates more consistent feature importance; improving model interpretability. We found that models enhanced with XAI techniques demonstrated a reduction in SHAP value variance; suggesting more stable and reliable feature contributions.

16.8. Statistical Significance

We conducted statistical significance tests; such as t-tests and ANOVA; to evaluate the impact of XAI on model performance and interpretability. We observed statistically significant improvements in feature importance consistency and model explainability when XAI techniques were applied. For example; the use of LIME; as discussed in [

19]; resulted in a statistically significant improvement in local interpretability; as measured by the correlation between local explanations and global feature importance.

We also conducted quantitative analysis of the impact of AI on the financial risk management using models from [

27]. Using a paired t-test we found that the use of AI; and XAI; resulted in a statistically significant decrease in the number of false positives for fraud detection (

).

These quantitative findings underscore the benefits of XAI in enhancing both the performance and interpretability of AI models in financial risk management.

16.9. Mathematical Frameworks in AI Risk Models

AI-based risk models often rely on stochastic differential equations (SDEs) to simulate market dynamics and price derivatives. For example; the pricing of financial derivatives can be expressed as:

where

represents the asset price at time

t;

is the drift term (expected return);

is the volatility; and

is a Wiener process [

19]. These models are increasingly augmented by machine learning techniques to improve accuracy and computational efficiency.

16.10. Predictive Analytics for Credit Risk

Quantitative methods in credit risk management include predictive analytics that utilize supervised learning algorithms to estimate default probabilities. For instance; logistic regression models are commonly employed to calculate the probability of default (

):

where

are predictors such as income level; credit score; and loan-to-value ratio; while

are coefficients estimated from historical data [

31].

16.11. Market Risk Estimation Using AI

AI-driven models for market risk estimation leverage techniques such as Monte Carlo simulations to forecast portfolio risks under various scenarios. The Value-at-Risk (VaR); a widely used metric for market risk; can be computed as:

where

is the inverse cumulative distribution function of portfolio returns and

is the confidence level (e.g.; 95% or 99%) [

20]. AI enhances these simulations by dynamically adjusting parameters based on real-time market conditions.

16.12. Quantitative Findings in AI Model Risk Management

Recent studies indicate that AI model risk management frameworks have significantly improved predictive accuracy while reducing operational risks. For example:

The adoption of machine learning models has reduced credit default prediction errors by up to 20% compared to traditional statistical methods [

36].

AI-driven fraud detection systems have achieved detection rates exceeding 95%; minimizing false positives [

19].

Market simulations incorporating AI have demonstrated improved robustness under stress-testing scenarios [

37].

These findings underscore the transformative potential of quantitative methods in AI-powered financial risk management.

17. Review and Effective Challenges on the Soundness and Fit-for-Purpose of AI/ML Non-Model Objects

The application of Artificial Intelligence (AI) and Machine Learning (ML) in financial risk management extends beyond traditional models to include various non-model objects; such as data preprocessing pipelines; feature engineering techniques; and automated decision rules. Ensuring the soundness and fit-for-purpose of these components is critical for maintaining the integrity and reliability of AI-driven systems.

The integration of Artificial Intelligence (AI) in financial risk management has necessitated a rigorous review of model soundness and its fit-for-purpose; particularly concerning the unique challenges AI introduces [

4,

37]. Traditional model risk management (MRM) frameworks are evolving to accommodate AI’s complexity; demanding enhanced validation techniques and governance structures [

33,

45].

17.1. Definition and Scope

Non-model objects in AI/ML systems include data pipelines; feature engineering components; and deployment infrastructure that support model operations but are not themselves predictive models [

42]. These components require rigorous review despite falling outside traditional model risk management (MRM) frameworks [

33].

17.2. Key Review Criteria

As outlined in

Table 3; the review framework for non-model objects includes key factors such as data quality; security; and monitoring thresholds.

17.3. Effective Challenge Techniques

Financial institutions should implement:

Component-level testing: Isolate and validate individual non-model objects using synthetic data scenarios [

40]

Process mapping: Document all dependencies between models and supporting components [

38]

Red teaming: Simulate failure modes in production environments [

43]

17.4. Regulatory Expectations

The MAS Singapore guidelines emphasize:

for all production AI systems [

37]. Similar requirements appear in the NIST AI RMF’s "Validate" function [

11].

17.5. Common Gaps

Analysis reveals frequent deficiencies:

62% of firms lack version control for feature stores [

44]

Only 38% monitor data pipeline SLAs in real-time [

45]

API security testing often omitted in CI/CD pipelines [

29]

17.6. Review of Current Practices

Current practices in AI/ML deployment often focus primarily on model validation; neglecting the rigorous assessment of non-model objects. However; the quality and appropriateness of these objects significantly impact the overall system performance. For instance; data preprocessing steps; if not properly validated; can introduce biases or errors that propagate through the entire workflow [

2]. Similarly; feature engineering techniques; while potentially enhancing model accuracy; can also lead to overfitting or reduced interpretability if not carefully evaluated [

14].

17.7. Effective Challenges and Considerations

Several challenges hinder the effective assessment of AI/ML non-model objects:

17.7.1. Lack of Standardized Validation Frameworks

Unlike traditional models; non-model objects often lack standardized validation frameworks and metrics. This absence makes it difficult to objectively assess their performance and reliability. Developing such frameworks is crucial for ensuring the consistency and robustness of AI/ML systems [

37].

17.7.2. Complexity and Interdependencies

AI/ML workflows involve complex interdependencies between models and non-model objects. Changes in one component can have cascading effects on the entire system; making it challenging to isolate and evaluate the impact of individual objects. Robust testing and monitoring strategies are needed to address these complexities [

12].

17.7.3. Data Quality and Provenance

The soundness of non-model objects is heavily dependent on the quality and provenance of the data they process. Ensuring data integrity and traceability is essential for building trustworthy AI/ML systems. Implementing robust data governance practices; including data lineage tracking and quality checks; is crucial [

25].

17.7.4. Explainability and Interpretability

Similar to models; non-model objects should be explainable and interpretable. Understanding the rationale behind data preprocessing or feature engineering decisions is essential for validating their appropriateness and identifying potential biases [

5].

17.7.5. Regulatory Compliance

Financial institutions are subject to stringent regulations that require transparency and accountability in their decision-making processes. Ensuring that AI/ML non-model objects comply with these regulations is crucial for maintaining regulatory trust and avoiding penalties [

41].

17.8. Recommendations

To address these challenges; we recommend the following:

Developing standardized validation frameworks and metrics for AI/ML non-model objects.

Implementing robust testing and monitoring strategies to account for complex interdependencies.

Establishing comprehensive data governance practices to ensure data quality and provenance.

Adopting explainable AI (XAI) techniques to enhance the interpretability of non-model objects.

Collaborating with regulatory bodies to develop clear guidelines for the use of AI/ML non-model objects in finance.

By addressing these challenges and implementing these recommendations; financial institutions can enhance the soundness and fit-for-purpose of their AI/ML systems; leading to more reliable and trustworthy risk management practices.

17.9. Challenges to Model Soundness

AI models; especially those employing machine learning; pose distinct challenges to traditional notions of model soundness:

Data Dependency and Bias: AI models are heavily reliant on data; and biases in training data can lead to discriminatory or inaccurate outcomes. Ensuring data quality and representativeness is critical; but challenging [

7,

19].

Explainability and Interpretability: The "black box" nature of some AI models makes it difficult to understand their decision-making processes; hindering validation and increasing model risk. Explainable AI (XAI) techniques are essential but not always sufficient [

5].

Overfitting and Generalization: AI models may perform well on training data but fail to generalize to new; unseen data; leading to poor performance in real-world scenarios. Robust validation techniques; including out-of-sample testing and stress testing; are necessary [

26].

Evolving Model Risk: AI models can change over time as they are retrained with new data; leading to model drift and requiring continuous monitoring and validation [

3,

21].

17.10. Fit-for-Purpose Considerations

Beyond soundness; ensuring that AI models are fit-for-purpose requires careful consideration of their intended use and impact:

Regulatory Compliance: AI models must comply with relevant regulations; such as the EU AI Act; which places stringent requirements on high-risk AI systems used in finance [

4,

37].

Ethical Considerations: The use of AI in financial risk management raises ethical concerns; including fairness; transparency; and accountability. Organizations must establish ethical guidelines and governance structures to address these issues [

24,

28].

Integration with Existing Systems: AI models must be seamlessly integrated with existing systems and processes; which may require significant changes to infrastructure and workflows [

33].

Monitoring and Feedback Loops: Continuous monitoring of AI model performance and feedback loops are essential to identify and address issues as they arise [

34].

Effective challenge functions within an AI model risk management framework involve independent review and validation by experts who can critically assess model assumptions; data quality; and performance. This includes stress-testing models under extreme scenarios and evaluating their impact on business outcomes [

40,

42]. As AI continues to evolve; ongoing research and collaboration are needed to refine MRM practices and ensure that AI models are both sound and fit-for-purpose in the financial industry [

10].

18. AI/ML Risk Across All Life-Cycle Activities: Initial Review and Ongoing Monitoring

Effective management of AI/ML risks in financial applications necessitates a holistic approach that spans the entire life cycle; from initial review to ongoing monitoring. This section examines the critical risk considerations at each stage; drawing upon relevant literature.

The effective management of AI/ML risk necessitates a comprehensive approach that spans the entire model life cycle; from initial review to ongoing monitoring [

24,

33]. This section outlines key considerations and practices for mitigating risks associated with AI/ML models in financial applications.

18.1. Initial Review and Development

The initial review and development phase is crucial for establishing a foundation for responsible AI/ML deployment. Key risk considerations include:

18.1.1. Data Quality and Bias

Ensuring the quality and representativeness of training data is paramount. Biases present in the data can propagate through the model; leading to discriminatory outcomes. Thorough data profiling and bias detection techniques are essential [

25].

18.1.2. Model Selection and Design

The choice of AI/ML model should be aligned with the specific risk management objective. Model complexity; interpretability; and robustness must be carefully evaluated. Explainable AI (XAI) techniques should be integrated from the outset to enhance transparency [

5].

18.1.3. Validation and Testing

Rigorous validation and testing are necessary to assess model performance and identify potential vulnerabilities. This includes stress testing; adversarial testing; and scenario analysis. Independent validation by domain experts can provide valuable insights [

12].

18.1.4. Documentation and Auditability

Detailed documentation of the model development process; including data sources; algorithms; and validation results; is essential for auditability and regulatory compliance. Establishing clear audit trails is crucial for demonstrating accountability [

37].

18.2. Ongoing Monitoring and Maintenance

Continuous monitoring and maintenance are crucial for ensuring the ongoing soundness and reliability of AI/ML systems. Key risk considerations include:

18.2.1. Model Drift and Performance Degradation

AI/ML models can degrade over time due to changes in data distribution or market conditions. Robust monitoring mechanisms are needed to detect model drift and performance degradation. Regular retraining and recalibration are essential to maintain model accuracy [

18].

18.2.2. Adversarial Attacks and Security

AI/ML models are vulnerable to adversarial attacks; where malicious inputs can manipulate model outputs. Implementing robust security measures and monitoring for anomalous behavior is crucial for protecting against such attacks [

6].

18.2.3. Explainability and Interpretability Monitoring

The explanations provided by XAI techniques should be continuously monitored to ensure their consistency and reliability. Changes in feature importance or model behavior should be investigated and addressed promptly [

4].

18.2.4. Regulatory Compliance and Ethical Considerations

Ongoing monitoring is essential to ensure that AI/ML systems continue to comply with evolving regulations and ethical guidelines. Regular reviews of model behavior and outputs are necessary to identify and address potential compliance risks [

41].

18.2.5. Feedback Loops and Model Updates

Implementing feedback loops to incorporate new data and insights can improve model performance and robustness. Model updates should be carefully managed and validated to minimize the risk of introducing errors or biases [

27].

18.3. Recommendations

To effectively manage AI/ML risks across the life cycle; we recommend the following:

Establishing clear governance structures and risk management frameworks for AI/ML development and deployment.

Implementing robust data quality management and bias detection techniques.

Integrating XAI techniques throughout the life cycle to enhance transparency and interpretability.

Developing rigorous validation and testing procedures; including stress testing and adversarial testing.

Implementing continuous monitoring mechanisms to detect model drift; performance degradation; and adversarial attacks.

Establishing clear audit trails and documentation practices for regulatory compliance.

Incorporating feedback loops to improve model performance and robustness.

Collaborating with regulatory bodies to develop clear guidelines for AI/ML risk management.

By adopting a holistic approach to AI/ML risk management; financial institutions can ensure the responsible and effective deployment of these technologies; leading to improved risk management practices and enhanced trust.

18.4. Lifecycle Risk Framework

The AI/ML risk management lifecycle comprises three critical phases; each with distinct risk profiles [

3]:

where weights

;

;

represent relative risk exposure at each phase [

17].

18.5. Initial Review Phase

As shown in

Table 4; the initial review phase focuses on conceptual soundness; data quality; and regulatory alignment.

Financial institutions report 28% of model failures originate from inadequate initial reviews [

35].

18.6. Development Phase Risks

Critical development risks include:

Feature Engineering: 42% of bias incidents trace to flawed feature selection [

5]

Model Training: Overfitting risks increase by 2.3x for complex architectures [

27]

Documentation: Only 35% of firms meet all SR 11-7 requirements pre-deployment [

33]

18.7. Ongoing Monitoring

Effective monitoring requires:

Performance Drift: Thresholds for action at 5% degradation [

39]

Data Drift: Monthly statistical testing (KS test

) [

38]

Usage Monitoring: 71% of firms lack tracking of model misuse [

10]

18.8. Regulatory Expectations

MAS Singapore: Quarterly reviews for high-risk models [

37]

NIST AI RMF: Continuous monitoring with 90-day attestations [

11]

EU AI Act: Annual audits for critical systems [

4]

18.9. Industry Implementation Gaps

As shown in

Table 5; adoption rates for key lifecycle risk management capabilities remain low.

18.10. Initial Review and Assessment

The initial review phase is critical for establishing a strong foundation for AI/ML model risk management. Key activities include:

Risk Identification: Identifying potential risks associated with the AI/ML model; including data quality issues; model bias; and regulatory compliance concerns [

7,

34].

Model Validation: Conducting thorough validation of the model’s design; assumptions; and performance; including assessing its ability to generalize to new data [

26].

Data Governance: Establishing robust data governance practices to ensure data quality; integrity; and security; as AI/ML models are highly sensitive to the data they are trained on [

37].

Explainability Assessment: Evaluating the model’s explainability and interpretability; as transparency is crucial for understanding and trusting AI/ML models [

4,

5].

18.11. Ongoing Monitoring and Validation

Ongoing monitoring and validation are essential for ensuring that AI/ML models continue to perform as expected and that risks are effectively managed throughout the model lifecycle [

3,

49]. Key activities include:

Performance Monitoring: Continuously monitoring the model’s performance and identifying any degradation or drift over time; which may indicate the need for retraining or recalibration [

42].

Bias Detection: Implementing mechanisms for detecting and mitigating bias in the model’s predictions; as AI/ML models can perpetuate and amplify existing biases in the data [

19].

Adverse Outcome Analysis: Reviewing instances where the model’s predictions have led to adverse outcomes and identifying potential causes and corrective actions [

40].

Regular Audits: Conducting regular audits of the AI/ML model and its associated processes to ensure compliance with regulatory requirements and internal policies [

13,

37].

18.12. Effective Challenge Functions

Effective challenge functions are vital for maintaining a robust AI/ML risk management framework. These functions should:

Independent Review: Involve independent review and validation by experts who can critically assess model assumptions; data quality; and performance [

44].

Stress Testing: Stress-test models under extreme scenarios and evaluate their impact on business outcomes [

6].

Documentation: Ensure comprehensive documentation of the model’s design; development; and validation processes; as well as ongoing monitoring and risk management activities [

46].

By implementing these practices; financial institutions can effectively manage the risks associated with AI/ML models and ensure their responsible and beneficial use [

10,

28].

19. Weaknesses and Limitations of AI/ML Objects; Their Risk Profile; and Compensating Controls

This section discuses Weaknesses; Limitations; and Compensating Controls for AI/ML Objects. AI/ML objects; while offering significant advantages in financial risk management; are not without weaknesses and limitations. Understanding these limitations and implementing appropriate compensating controls is crucial for mitigating potential risks.

19.1. Weaknesses and Limitations

AI/ML objects; including models and non-model components; can exhibit several weaknesses:

19.1.1. Data Dependency and Sensitivity

AI/ML models are highly dependent on the quality and representativeness of training data. Changes in data distribution or the introduction of biases can significantly impact model performance and reliability [

25].

19.1.2. Lack of Robustness and Generalization

AI/ML models may struggle to generalize to unseen data or adapt to changing market conditions. This lack of robustness can lead to inaccurate predictions and increased risk exposure [

14].

19.1.3. Interpretability and Explainability Challenges

The "black box" nature of many AI/ML models makes it challenging to understand their decision-making processes. This lack of interpretability hinders trust and can raise concerns among regulators and stakeholders [

5].

19.1.4. Vulnerability to Adversarial Attacks

AI/ML models are susceptible to adversarial attacks; where malicious inputs can manipulate model outputs. This vulnerability poses a significant risk to financial institutions; potentially leading to financial losses or regulatory breaches [

6].

19.1.5. Computational Complexity and Resource Requirements

Training and deploying complex AI/ML models can be computationally intensive and require significant resources. This can limit their scalability and applicability in certain financial applications.

19.2. Risk Profile

The weaknesses and limitations of AI/ML objects contribute to a unique risk profile:

19.2.1. Model Risk

Inaccurate or unreliable models can lead to incorrect risk assessments and financial losses.

19.2.2. Operational Risk

Computational complexity and resource requirements can introduce operational risks; such as system failures or delays.

19.2.3. Reputational Risk

Biased or discriminatory model outputs can damage the reputation of financial institutions.

19.2.4. Regulatory Risk

Lack of interpretability and transparency can lead to regulatory scrutiny and penalties.

19.2.5. Cybersecurity Risk

Vulnerability to adversarial attacks can expose financial institutions to cyber threats and financial losses.

19.3. Compensating Controls

To mitigate these risks; financial institutions should implement compensating controls:

19.3.1. Robust Data Governance and Quality Management

Implementing robust data governance practices; including data lineage tracking; quality checks; and bias detection; is essential for ensuring data integrity and reliability [

37].

19.3.2. Model Validation and Testing

Developing rigorous validation and testing procedures; including stress testing; adversarial testing; and scenario analysis; is crucial for assessing model robustness [

12].

19.3.3. Explainable AI (XAI) Techniques

Adopting XAI techniques; such as SHAP and LIME; can help improve model interpretability and transparency [

4].

19.3.4. Continuous Monitoring and Feedback Loops

Implementing continuous monitoring and feedback loops can help detect model drift; performance degradation; and anomalous behavior [

18].

19.3.5. Security Measures and Adversarial Defense

Implementing robust security measures; such as input validation and adversarial defense mechanisms; is crucial for protecting against adversarial attacks [

6].

19.3.6. Human-in-the-Loop Systems

Incorporating human expertise in model development; validation; and monitoring can enhance model reliability and mitigate potential risks.

19.3.7. Regulatory Compliance and Auditing

Establishing clear regulatory guidelines and audit trails is essential for ensuring compliance and accountability [

41].

19.3.8. Contingency Planning and Disaster Recovery

Developing contingency plans and disaster recovery procedures can help mitigate the impact of system failures or cyberattacks.

By understanding the weaknesses and limitations of AI/ML objects and implementing appropriate compensating controls; financial institutions can effectively manage the associated risks and ensure the responsible and reliable deployment of these technologies.

19.4. Inherent Weaknesses of AI/ML Components

Table 6 summarizes the common weaknesses observed in AI/ML objects across industries.

19.5. Risk Profile Characteristics

The risk profile of AI/ML objects exhibits unique characteristics quantified by:

where:

Industry benchmarks show median values of:

19.6. Limitations of Current Approaches

Explainability Tools: Only address 62% of regulatory requirements [

19]

Monitoring Systems: 71% fail to detect concept drift [

10]

Control Frameworks: 83% lack integration with model governance [

12]

19.7. Emerging Best Practices

Defense-in-Depth: Layered controls reduce residual risk by 54% [

40]

Automated Documentation: Cuts validation time by 65% [

16]

Red Team Exercises: Uncover 3.2x more vulnerabilities than audits [

43]

19.8. Compensating Controls Framework

Table 7 highlights the effectiveness of various controls in mitigating AI-related weaknesses.

20. Generative AI in Financial Risk Management: Capabilities and Limitations

20.1. GenAI Model Architectures for Risk Applications

Recent studies demonstrate three dominant architectures in financial risk management.

Table 8 presents the performance comparison of different GenAI architectures in risk tasks.

The Vasicek framework enhanced with agentic AI shows particular promise with:

where

represents the GenAI enhancement factor [

50].

20.2. Data Engineering Requirements

Implementation challenges center on data infrastructure:

20.3. Operational Limitations

Key constraints identified across studies. As shown in

Table 9; the key barriers to GenAI implementation include data privacy; model explainability; and regulatory compliance.

20.4. Compensating Controls Framework

Effective mitigation strategies include:

Prompt Engineering: Reduces model drift by 42% [

53]

Agentic Orchestration: Improves auditability scores by 58% [

49]

Hybrid Architectures: Combine traditional models with GenAI (risk reduction 31-45%) [

54]

20.5. Workforce Implications

The skills gap presents significant challenges:

requiring new training paradigms [59,60].

21. Algorithm Architecture for AI Risk Management

21.1. Hybrid Model Architecture

The proposed system architecture combines traditional risk modeling with AI components through three layered components.

|

Algorithm 1 Hybrid Risk Assessment Pipeline |

- 1:

Input: Financial dataset ; Model parameters

- 2:

Output: Risk score R; Explanation set

- 3:

▹ Traditional financial features - 4:

▹ Generative feature augmentation - 5:

▹ Temporal pattern extraction - 6:

▹ Latent representation - 7:

▹ Explainable prediction - 8:

return

|

21.2. Explainability Integration

For financial compliance; we implement SHAP-based explanation generation:

21.3. Risk Monitoring Loop

The continuous monitoring system addresses model drift.

|

Algorithm 2 SHAP Explanation Generator |

- 1:

Input: Model f; Instance x; Background data

- 2:

Output: SHAP values

- 3:

▹ Initialize explanation vector - 4:

▹ All feature subsets - 5:

fordo

- 6:

▹ Expected model output - 7:

▹ Shapley value update - 8:

end for - 9:

return

|

The architecture of AI algorithms in financial risk management is diverse; ranging from traditional machine learning models to more complex deep learning networks [

26,

33]. This section provides an overview of common algorithmic architectures and pseudocode examples relevant to financial applications.

21.4. Common Algorithmic Architectures

Logistic Regression: A fundamental model for binary classification tasks such as credit risk assessment [

31].

Decision Trees and Random Forests: Ensemble methods used for classification and regression; offering interpretability and robustness.

Neural Networks: Deep learning models capable of capturing complex patterns in financial data for tasks like fraud detection and market prediction [

20].

Support Vector Machines (SVMs): Effective for high-dimensional data and non-linear relationships; suitable for risk classification problems.

21.5. Pseudocode Examples

21.5.1. Logistic Regression for Credit Risk Assessment

|

Algorithm 3 Logistic Regression Credit Risk |

- 1:

Input:X (feature matrix); y (target variable: default or non-default) - 2:

Output: Trained Logistic Regression model - 3:

Steps: - 4:

Initialize model parameters (weights w; bias b) - 5:

for each iteration do

- 6:

Compute linear combination:

- 7:

Apply sigmoid function:

- 8:

Compute cost:

- 9:

Compute gradients: - 10:

- 11:

- 12:

Update parameters: - 13:

- 14:

- 15:

end for - 16:

Return: Trained model (w; b) |

21.5.2. Neural Network for Fraud Detection

|

Algorithm 4 Neural Network Fraud Detection |

- 1:

Input:X (transaction data); y (target variable: fraud or non-fraud) - 2:

Output: Trained Neural Network model - 3:

Steps: - 4:

Define network architecture (number of layers; neurons; activation functions) - 5:

Initialize weights and biases randomly - 6:

for each epoch do

- 7:

for each mini-batch do

- 8:

Forward pass:

- 9:

Compute activations for each layer - 10:

Compute loss: cross-entropy loss - 11:

Backward pass:

- 12:

Compute gradients using backpropagation - 13:

Update weights and biases using optimization algorithm (e.g.; Adam) - 14:

end for

- 15:

end for - 16:

Return: Trained model |

21.6. Considerations for Model Selection

The selection of an appropriate algorithm depends on several factors; including:

Data Characteristics: The size; quality; and distribution of the data [

7].

Interpretability Requirements: The need for transparency and explainability in model predictions [

4,

5].

Computational Resources: The available computing power and time for training and deployment [

21].

Effective model risk management requires a thorough understanding of the strengths and limitations of different algorithmic architectures; as well as careful consideration of the specific requirements of the financial application [

33,

37].

22. Challenges and Future Directions

22.1. Silicon Valley vs. Finance Risk Cultures

Tech firms prioritize innovation; while banks emphasize stability [

17]. Bridging this gap requires agile MRM frameworks [

16].

22.2. Data Quality

Poor data lineage tracking undermines model audits [

33].

22.3. Challenges in AI-Driven Risk Management

Despite its potential; AI adoption faces several challenges:

Bias and Fairness: Ensuring fairness in AI models is critical to avoid discriminatory outcomes [

19].

Regulatory Compliance: Adhering to evolving regulations such as the EU AI Act poses challenges for financial institutions [

37].

Data Privacy: Protecting sensitive customer data remains a top priority [

7].

Model Explainability: Lack of transparency in AI models can erode trust among stakeholders [

5].

22.4. Future Directions

The future of AI in risk management lies in explainable AI (XAI) [

5]; quantum computing [

40]; and adaptive regulatory frameworks [

37]. Financial institutions must invest in governance skills [

28] and collaborative ecosystems [

41].

Automated Documentation: Tools like Databricks accelerate MRM compliance [

16].

Quantum AI: Emerging quantum ML may introduce new risks [

40].

Global Standards: Harmonizing regulations across jurisdictions [

41].

The future of AI in financial risk management lies in:

23. Conclusion

AI offers transformative potential for financial risk management but also introduces significant challenges. By addressing issues like bias; explainability; and regulatory compliance; the financial industry can harness the full potential of AI while mitigating risks. AI is transforming risk management but requires careful implementation to address model risk; ethical concerns; and regulatory compliance. By adopting robust frameworks and prioritizing transparency; organizations can harness AI’s potential while mitigating its risks. Future research should focus on XAI; real-world validation; and cross-industry collaboration. AI model risk management demands a multidisciplinary approach combining technical rigor (e.g.; XAI); governance (e.g.; three lines of defense); and compliance with evolving regulations. Financial institutions that proactively address these challenges will gain a competitive edge while mitigating risks. The deployment of GenAI in finance offers significant potential but also presents complex model risk challenges. Adapting existing MRM frameworks; adopting hybrid modeling approaches; and establishing robust governance structures are crucial for responsible and effective GenAI deployment. As the technology continues to evolve; ongoing research and collaboration are essential to navigate the evolving landscape and ensure the safe and beneficial use of GenAI in the financial sector. Explainable AI is becoming indispensable for financial risk management; addressing critical needs for transparency; compliance; and stakeholder trust. While implementation challenges remain; the financial sector is developing robust approaches to integrate XAI into risk management frameworks. Future progress will depend on continued collaboration between regulators; financial institutions; and AI researchers to develop standardized; practical XAI solutions. Explainable AI (XAI) plays a crucial role in enhancing risk management practices in the financial sector. By providing transparent and interpretable explanations; XAI can help build trust; ensure regulatory compliance; and improve model validation. As AI continues to evolve; the development and adoption of XAI techniques will be essential for responsible and effective AI deployment in finance [

1,

8,

27,

32,61].

This research discusses (as depicted by various literature) that while AI systems achieve measurable performance gains (15-30% improvements in predictive accuracy and fraud detection); their successful implementation requires addressing critical issues of model risk; explainability; and regulatory compliance. The analysis reveals that Explainable AI (XAI) techniques serve as a crucial bridge between advanced modeling capabilities and practical deployment; with SHAP and LIME implementations proving particularly effective in financial contexts where AUC-ROC stability must be maintained within ±0.03 margins. For Generative AI applications; we identify unique vulnerabilities in data provenance and adversarial attacks that necessitate specialized adaptations to traditional Model Risk Management (MRM) frameworks. The persistent gaps in industry practices - particularly in version control (absent in 62% of firms) and real-time monitoring (implemented by only 38% of organizations) - highlight the urgent need for standardized governance approaches. Financial institutions must prioritize hybrid modeling strategies that combine AI’s predictive power with traditional risk management’s interpretability; supported by continuous monitoring systems capable of detecting model drift at 5% degradation thresholds. Future advancements in quantum-inspired explainability and automated documentation promise to address current limitations; but their successful integration will depend on collaborative efforts between regulators; financial institutions; and AI researchers.

References

- https://projectai.com/ai-for-risk management/. AI for Risk Management, 2023.

- The Role of AI & ML in Risk Management and Mitigating Human Error in Fintech.

- AI in Model Risk Management: A Guide for Financial Services - ValidMind. https://validmind.com/blog/ai-in-model-risk-management-a-guide-for-financial-services/, 2025.

- Fritz-Morgenthal, S.; Hein, B.; Papenbrock, J. Financial Risk Management and Explainable, Trustworthy, Responsible AI. Frontiers in Artificial Intelligence 2022, 5. [Google Scholar] [CrossRef] [PubMed]

- Bowden, J.; Cummins, M.; Dao, D.; Jain, K. Explainable AI for Financial Risk Management. Technical report, University of Strathclyde, 2024. [CrossRef]

- AI in Risk Management: Top Benefits and Challenges Explained. https://www.techtarget.com/searchsecurity/tip/The-benefits-of-using-AI-in-risk-management.

- Boukherouaa, E.B.; AlAjmi, K.; Deodoro, J.; Farias, A.; Ravikumar, R. Powering the Digital Economy: Opportunities and Risks of Artificial Intelligence in Finance. Departmental Papers 2021, 2021. [Google Scholar] [CrossRef]

- How to Build Credit Risk Models Using AI and Machine Learning. https://www.fico.com/blogs/how-build-credit-risk-models-using-ai-and-machine-learning, 2023.

- Innovation, R. AI in Banking Risk Management : Applications and Benefits. https://www.rapidinnovation.io/post/risk-management-reinvented-ais-impact-on-finance.

- Confluence. 68% of Financial Firms Say AI in Risk and Compliance Is a Top Priority. https://www.confluence.com/68-of-financial-firms-say-ai-in-risk-and-compliance-is-a-top-priority-here-are-some-best-practices-for-thoughtful-ai-adoption/, 2024.

- Tabassi, E. Artificial Intelligence Risk Management Framework (AI RMF 1.0). Technical Report NIST AI 100-1, National Institute of Standards and Technology (U.S.), Gaithersburg, MD, 2023. [CrossRef]

- Managing AI Model Risk in Financial Institutions: Best Practices for Compliance and Governance - CPA & Advisory Professional Insights. https://kaufmanrossin.com/blog/managing-ai-model-risk-in-financial-institutions-best-practices-for-compliance-and-governance/.

- AI Model Risk Management Market Size, Share Report, 2030. https://www.grandviewresearch.com/industry-analysis/ai-model-risk-management-market-report.

- Quell, P.; Bellotti, A.G.; Breeden, J.L.; Martin, J.C. MACHINE LEARNING AND MODEL RISK MANAGEMENT.

- Transforming Credit Risk Management: The Impact Of AI And ML – Avenga. https://www.avenga.com/magazine/ai-for-credit-risk-management/, 2024.

- Model Risk Management, a True Accelerator to Corporate AI. https://www.databricks.com/blog/model-risk-management-true-accelerator-corporate-ai, Wed, 05/24/2023 - 23:00.

- Mitigating Model Risk in AI: Advancing an MRM Framework for AI/ML Models at Financial Institutions - Chartis Research. https://www.chartis-research.com/artificial-intelligence-ai/7947296/mitigating-model-risk-in-ai-advancing-an-mrm-framework-for-aiml-models-at-financial-institutions, 2025.

- Dhiraj, I. AI-Powered Model Risk Management Improves Banking EfficiencyCompliance. https://www.infocepts.ai/case-studies/ai-powered-model-risk-management-improves-banking-efficiency-compliance/, 2023.

- Fritz-Morgenthal, S.; Hein, B.; Papenbrock, J. Financial Risk Management and Explainable, Trustworthy, Responsible AI. Frontiers in Artificial Intelligence 2022, 5, 779799. [Google Scholar] [CrossRef] [PubMed]

- AI in Finance: Predictive Analytics and Risk Management. https://interviewkickstart.com/blogs/career-advice/ai-finance-predictive-analytics.

- Artificial Intelligence in Risk Management KPMG United Arab Emirates. https://kpmg.com/ae/en/home/insights/2021/09/artificial-intelligence-in-risk-management.html, 2024.

- The Future of Generative AI in Banking | McKinsey. https://www.mckinsey.com/capabilities/risk-and-resilience/our-insights/how-generative-ai-can-help-banks-manage-risk-and-compliance.

- Lead|authorurl:https://www.ey.com/en_gl/people/jeanne-boillet, a.G.A.C.A. Lead|authorurl:https://www.ey.com/en_gl/people/jeanne-boillet, a.G.A.C.A. Why AI Is Both a Risk and a Way to Manage Risk. https://www.ey.com/en_gl/insights/assurance/why-ai-is-both-a-risk-and-a-way-to-manage-risk.

- AI Risk Management Framework. https://www.paloaltonetworks.com/cyberpedia/ai-risk-management-framework.

- Redefining Financial Risk Assessment: Role of AI Explained. https://www.flagright.com/post/understanding-the-role-of-ai-in-redefining-financial-risk-assessment.

- AI vs Traditional Risk Modelling: A Comparative Analysis. https://risk.jaywing.com/news-views/ai-vs-traditional-risk-modelling/.

- Machine Learning and AI for Risk Management | AnalystPrep | FRM Part II, 2023.

- Build Strong AI Governance and Risk Management Skills - Rajiv Avacharmal, Corporate Vice President. https://indiaai.gov.in/article/build-strong-ai-governance-and-risk-management-skills-rajiv-avacharmal-corporate-vice-president.

- Porter, A. Effective AI Risk Management: Frameworks & Strategies, 2024.

- Goldman, D. AI in Risk Management: Focusing on Third-Party Risk. https://panorays.com/blog/ai-in-risk-management/, 2023.

- Credit Risk Models with Machine Learning. https://www.solulab.com/guide-to-building-credit-risk-models-with-machine-learning/.

- Gupta, R. AI in Financial Risk Management and Derivatives Trading: Trends & Use Cases. https: //evergreen.insightglobal.com/ai-financial-risk-management-aderivatives-trading-trends-use-cases/, 2025. [Google Scholar]

- Applying the Existing AI/ML Model Risk Management Guidance. https://cloud.google.com/blog/topics/financial-services/applying-the-existing-aiml-model-risk-management-guidance.

- AI Risk Assessment 101: Identifying and Mitigating Risks in AI Systems. https://www.zendata.dev/post/ai-risk-assessment-101-identifying-and-mitigating-risks-in-ai-systems.

- Model Risk Management Is Evolving: Regulation, Volatility, Machine Learning and AI - Risk.Net. https://www.risk.net/insight/technology-and-data/7956442/model-risk-management-is-evolving-regulation-volatility-machine-learning-and-ai.

- AI for Credit Risk Management: Use Cases, Challenges & Benefits - HW.Tech, 2025.

- Artificial Intelligence (AI) Model Risk Management. https://www.mas.gov.sg/publications/monographs-or-information-paper/2024/artificial-intelligence-model-risk-management.

- Reimagining Model Risk Management: New Tools and Approaches for a New Era - Chartis Research. https://www.chartis-research.com/custom-insights/7947251/reimagining-model-risk-management-new-tools-and-approaches-for-a-new-era, 2024.

- Modulos. Implementing an AI Risk Management Framework: Best Practices, 2024.

- Derisking AI: Risk Management in AI Development | McKinsey. https://www.mckinsey.com/capabilities/quantumblack/our-insights/derisking-ai-by-design-how-to-build-risk-management-into-ai-development.

- PricewaterhouseCoopers. AI in Financial Services: Navigating the Risk - Opportunity Equation. https://www.pwc.co.uk/industries/financial-services/understanding-regulatory-developments/ai-in-financial-services-navigating-the-risk-opportunity-equation.html.

- AI Model Risk Management Framework | CSA. https://cloudsecurityalliance.org/artifacts/ai-model-risk-management-framework.

- Principal|authorurl:https://www.ey.com/en_us/people/gagan-agarwala, a.A.F.S.A.; Principal|authorurl:https://www.ey.com/en_us/people/alejandro-latorre, a.A.F.S.A.; Partner|authorurl:https://www.ey.com/en_us/people/susan-raffel, a.A.F.S.A. Principal|authorurl:https://www.ey.com/en_us/people/gagan-agarwala, a.A.F.S.A.; Principal|authorurl:https://www.ey.com/en_us/people/alejandro-latorre, a.A.F.S.A.; Partner|authorurl:https://www.ey.com/en_us/people/susan-raffel, a.A.F.S.A. Model Risk Management for AI and Machine Learning. https://www.ey.com/en_us/insights/banking-capital-markets/understand-model-risk-management-for-ai-and-machine-learning.

- AI Model Risk Management Market Size, Share and Global Forecast to 2029. https://www.marketsandmarkets.com/Market-Reports/ai-model-risk-management-market-145025445.html.

- The Evolution of Model Risk Management. https://simon.rochester.edu/blog/deans-corner/evolution-model-risk-management, 2024.

- (1) A Comprehensive Guide to Machine Learning in Risk Management | LinkedIn. https://www.linkedin.com/pulse/comprehensive-guide-machine-learning-risk-management-maedeh-molana-gudhf/.

- Joshi Satyadhar. Enhancing Structured Finance Risk Models (Leland-Toft and Box-Cox) Using GenAI (VAEs GANs). IJSRA 2025, 14, 1618–1630. [Google Scholar]

- Joshi, S. Using Gen AI Agents With GAE and VAE to Enhance Resilience of US Markets. The International Journal of Computational Science, Information Technology and Control Engineering (IJCSITCE) 2025, 12, 23–38. [Google Scholar] [CrossRef]

- Satyadhar Joshi. Agentic Generative AI and the Future US Workforce: Advancing Innovation and National Competitiveness. International Journal of Research and Review 2025, 12, 102–113. [Google Scholar] [CrossRef]

- Satyadhar, J. Advancing Financial Risk Modeling: Vasicek Framework Enhanced by Agentic Generative AI. Advancing Financial Risk Modeling: Vasicek Framework Enhanced by Agentic Generative AI by Satyadhar Joshi, vol.

- Joshi, S. Review of Gen AI Models for Financial Risk Management. International Journal of Scientific Research in Computer Science, Engineering and Information Technology ISSN : 2456-3307 2025, 11, 709–723. [Google Scholar]

- Satyadhar Joshi. Introduction to Vector Databases for Generative AI: Applications, Performance, Future Projections, and Cost Considerations. International Advanced Research Journal in Science, Engineering and Technology ISSN (O) 2393-8021, ISSN (P) 2394-1588 2025, 12, 79–93. [Google Scholar]

- Joshi, Satyadhar. Leveraging prompt engineering to enhance financial market integrity and risk management. World Journal of Advanced Research and Reviews WJARR 2025, 25, 1775–1785. [Google Scholar] [CrossRef]

- Satyadhar, J. Gen AI for Market Risk and Credit Risk [Ebook ISBN: 9798230094388]. Draft2Digital Publications Ebook ISBN: 9798230094388.

|

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).