Submitted: 28 March 2025

Posted: 31 March 2025

Abstract

Keywords:

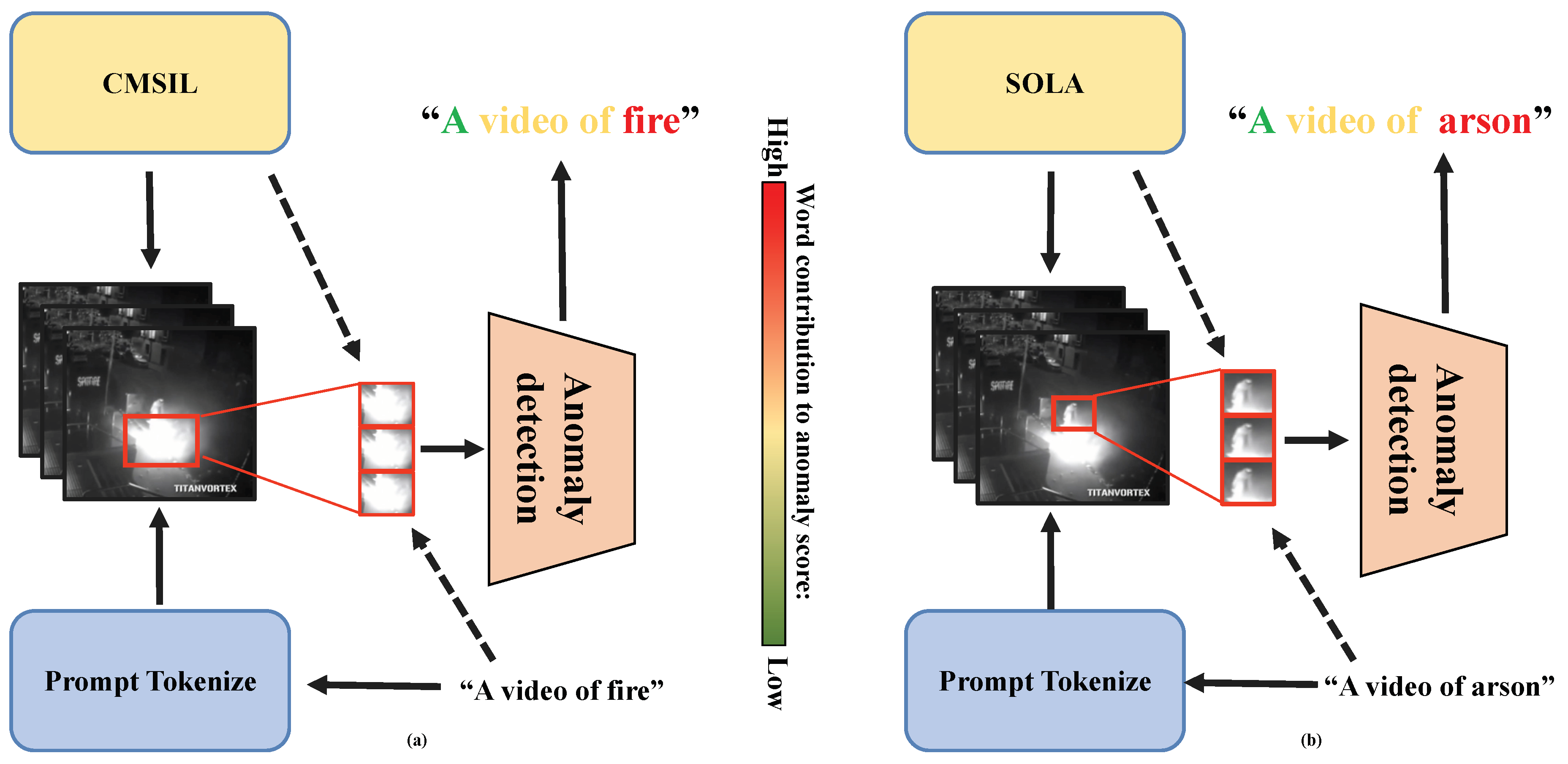

1. Introduction

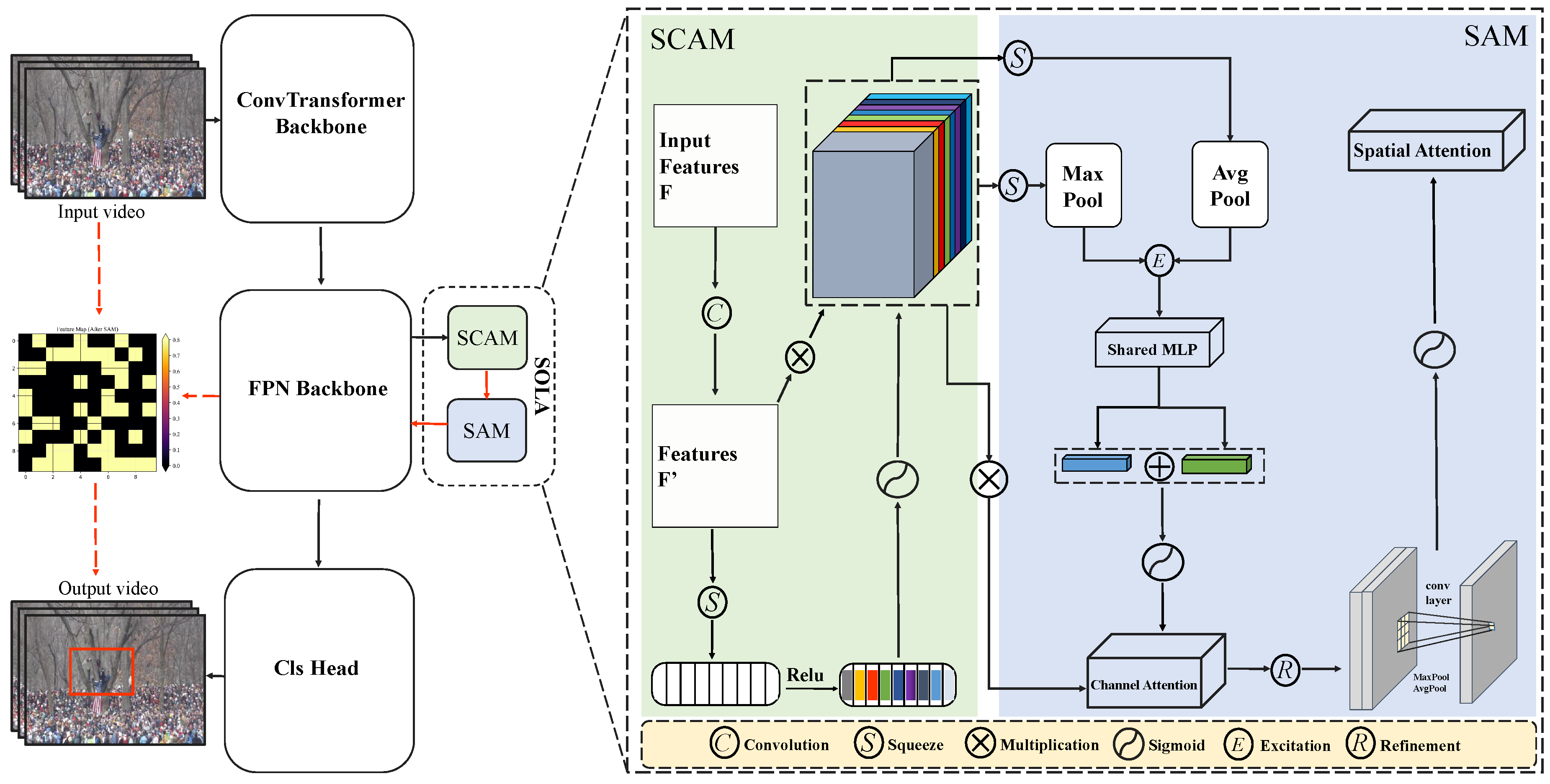

- We integrate SAM into the FPN structure of the CMSIL model, which improves the detection of small targets and local anomalies and overcomes limitations of traditional methods.

- We integrate SAM into multi-scale feature fusion, which makes the model more robust in complex backgrounds and mitigates the impact of background noise on anomaly detection accuracy.

- We conduct extensive experiments on two challenging datasets, XD-Violence and UCF-Crime. The results show that the proposed method outperforms several state-of-the-art approaches.

2. Related Work

2.1. Video Anomaly Detection

2.2. Small-Object Anomaly Detection

3. Proposed Method

3.1. Overview

3.2. Small-Object Context-Aware Attention Module

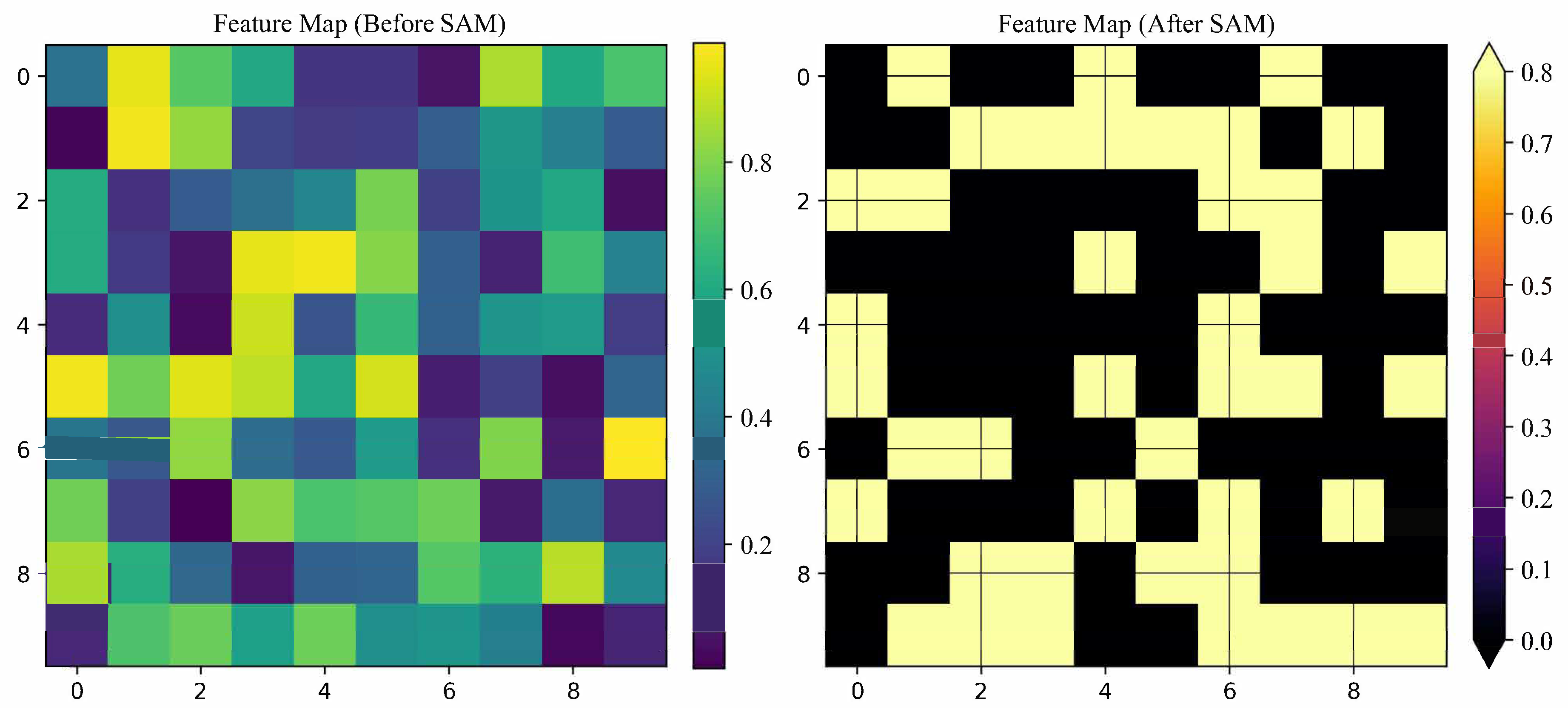

3.3. Small-Object Attention Module

3.4. Loss Function

4. Experimental Results

4.1. Datasets

4.2. Evaluation Metrics
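
The result tables in this paper report AP (%) on XD-Violence and AUC (%) on UCF-Crime. As a minimal sketch, assuming binary frame-level labels (1 = anomalous) and per-frame anomaly scores, the two metrics can be computed as below. The function names and the toy values are illustrative assumptions and are not taken from the paper's evaluation code.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve, computed as the fraction of
    (positive, negative) pairs that the scores rank correctly."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    # A tie between a positive and a negative score counts as half a win.
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Average precision: mean of the precision values measured at the
    rank of each positive sample, with samples sorted by descending score.
    Tied scores are not treated specially in this simple version."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits = 0
    precision_sum = 0.0
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            hits += 1
            precision_sum += hits / rank
    if hits == 0:
        raise ValueError("need at least one positive label")
    return precision_sum / hits


# Toy example (hypothetical values): six frames, three of them anomalous.
toy_labels = [0, 0, 1, 1, 0, 1]
toy_scores = [0.10, 0.40, 0.35, 0.80, 0.20, 0.70]

print(f"AUC (%): {100 * roc_auc(toy_labels, toy_scores):.2f}")           # 88.89
print(f"AP (%):  {100 * average_precision(toy_labels, toy_scores):.2f}")  # 91.67
```

AUC depends only on how anomalous frames are ranked relative to normal ones, while AP weights the top of the ranking more heavily, so the two metrics can move differently under the same change to the model.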

4.3. Implementation Details

4.4. Comparison with State-of-the-art Methods

4.4.1. Comparisons on XD-Violence

4.4.2. Comparisons on UCF-Crime

4.5. Ablation Studies and Analysis

4.6. Visualization

5. Conclusions

Author Contributions

Data Availability Statement

Conflicts of Interest

References

- Ahn, S.; Jo, Y.; Lee, K.; Kwon, S.; Hong, I.; Park, S. AnyAnomaly: Zero-Shot Customizable Video Anomaly Detection with LVLM. arXiv preprint arXiv:2503.04504 2025.

- Ma, J.; Wang, J.; Luo, J.; Yu, P.; Zhou, G. Sherlock: Towards Multi-scene Video Abnormal Event Extraction and Localization via a Global-local Spatial-sensitive LLM. arXiv preprint arXiv:2502.18863 2025.

- Li, Z.; Zhao, M.; Yang, X.; Liu, Y.; Sheng, J.; Zeng, X.; Wang, T.; Wu, K.; Jiang, Y.G. STNMamba: Mamba-based Spatial-Temporal Normality Learning for Video Anomaly Detection. arXiv preprint arXiv:2412.20084 2024.

- Xu, A.; Wang, H.; Ding, P.; Gui, J. Dual Conditioned Motion Diffusion for Pose-Based Video Anomaly Detection. arXiv preprint arXiv:2412.17210 2024.

- Zhang, H.; Xu, X.; Wang, X.; Zuo, J.; Huang, X.; Gao, C.; Zhang, S.; Yu, L.; Sang, N. Holmes-vau: Towards long-term video anomaly understanding at any granularity. arXiv preprint arXiv:2412.06171 2024.

- Tan, X.; Wang, H.; Geng, X. Frequency-Guided Diffusion Model with Perturbation Training for Skeleton-Based Video Anomaly Detection. arXiv preprint arXiv:2412.03044 2024.

- Ye, M.; Liu, W.; He, P. Vera: Explainable video anomaly detection via verbalized learning of vision-language models. arXiv preprint arXiv:2412.01095 2024.

- Bao, Q.; Liu, F.; Liu, Y.; Jiao, L.; Liu, X.; Li, L. Hierarchical scene normality-binding modeling for anomaly detection in surveillance videos. In Proceedings of the 30th ACM International Conference on Multimedia, 2022, pp. 6103–6112.

- Kaneko, Y.; Miah, A.S.M.; Hassan, N.; Lee, H.S.; Jang, S.W.; Shin, J. Multimodal Attention-Enhanced Feature Fusion-based Weekly Supervised Anomaly Violence Detection. arXiv preprint arXiv:2409.11223 2024.

- Almahadin, G.; Subburaj, M.; Hiari, M.; Sathasivam Singaram, S.; Kolla, B.P.; Dadheech, P.; Vibhute, A.D.; Sengan, S. Enhancing video anomaly detection using spatio-temporal autoencoders and convolutional LSTM networks. SN Computer Science 2024, 5, 190.

- Hasan, M.; Choi, J.; Neumann, J.; Roy-Chowdhury, A.K.; Davis, L.S. Learning temporal regularity in video sequences. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 733–742.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05). IEEE, 2005, Vol. 1, pp. 886–893.

- Dalal, N.; Triggs, B.; Schmid, C. Human detection using oriented histograms of flow and appearance. In Proceedings of the Computer Vision–ECCV 2006: 9th European Conference on Computer Vision, Graz, Austria, May 7-13, 2006. Proceedings, Part II 9. Springer, 2006, pp. 428–441.

- Nejad, S.S. Weakly-Supervised Anomaly Detection in Surveillance Videos Based on Two-Stream I3D Convolution Network. Master’s thesis, The University of Western Ontario (Canada), 2023.

- Liu, Z.; Nie, Y.; Long, C.; Zhang, Q.; Li, G. A hybrid video anomaly detection framework via memory-augmented flow reconstruction and flow-guided frame prediction. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 13588–13597.

- Li, D.; Nie, X.; Gong, R.; Lin, X.; Yu, H. Multi-branch GAN-based abnormal events detection via context learning in surveillance videos. IEEE Transactions on Circuits and Systems for Video Technology 2023, 34, 3439–3450.

- Sun, S.; Gong, X. Hierarchical semantic contrast for scene-aware video anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 22846–22856.

- Li, S.; Liu, F.; Jiao, L. Self-training multi-sequence learning with transformer for weakly supervised video anomaly detection. In Proceedings of the AAAI Conference on Artificial Intelligence, 2022, Vol. 36, pp. 1395–1403.

- Ni, B.; Peng, H.; Chen, M.; Zhang, S.; Meng, G.; Fu, J.; Xiang, S.; Ling, H. Expanding language-image pretrained models for general video recognition. In Proceedings of the European Conference on Computer Vision. Springer, 2022, pp. 1–18.

- Ju, C.; Han, T.; Zheng, K.; Zhang, Y.; Xie, W. Prompting visual-language models for efficient video understanding. In Proceedings of the European Conference on Computer Vision. Springer, 2022, pp. 105–124.

- Qian, Z.; Tan, J.; Ou, Z.; Wang, H. CLIP-Driven Multi-Scale Instance Learning for Weakly Supervised Video Anomaly Detection. In Proceedings of the 2024 IEEE International Conference on Multimedia and Expo (ICME). IEEE, 2024, pp. 1–6.

- Goyal, K.; Singhai, J. Review of background subtraction methods using Gaussian mixture model for video surveillance systems. Artificial Intelligence Review 2018, 50, 241–259.

- Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3D convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, 2015, pp. 4489–4497.

- Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.c. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. Advances in neural information processing systems 2015, 28.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 2020.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning. PMLR, 2021, pp. 8748–8763.

- Shen, F.; Shu, X.; Du, X.; Tang, J. Pedestrian-specific Bipartite-aware Similarity Learning for Text-based Person Retrieval. In Proceedings of the 31st ACM International Conference on Multimedia, 2023.

- Shen, F.; Tang, J. IMAGPose: A Unified Conditional Framework for Pose-Guided Person Generation. In Proceedings of the Thirty-eighth Annual Conference on Neural Information Processing Systems, 2024.

- Shen, F.; Jiang, X.; He, X.; Ye, H.; Wang, C.; Du, X.; Li, Z.; Tang, J. IMAGDressing-v1: Customizable Virtual Dressing. arXiv preprint arXiv:2407.12705 2024.

- Shen, F.; Ye, H.; Liu, S.; Zhang, J.; Wang, C.; Han, X.; Yang, W. Boosting Consistency in Story Visualization with Rich-Contextual Conditional Diffusion Models. arXiv preprint arXiv:2407.02482 2024.

- Shen, F.; Wang, C.; Gao, J.; Guo, Q.; Dang, J.; Tang, J.; Chua, T.S. Long-Term TalkingFace Generation via Motion-Prior Conditional Diffusion Model. arXiv preprint arXiv:2502.09533 2025.

- Brox, T.; Bruhn, A.; Papenberg, N.; Weickert, J. High accuracy optical flow estimation based on a theory for warping. In Proceedings of the Computer Vision-ECCV 2004: 8th European Conference on Computer Vision, Prague, Czech Republic, May 11-14, 2004. Proceedings, Part IV 8. Springer, 2004, pp. 25–36.

- Liu, W.; Luo, W.; Lian, D.; Gao, S. Future frame prediction for anomaly detection–a new baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 6536–6545.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 2117–2125.

- Zhang, M.; Wang, J.; Qi, Q.; Sun, H.; Zhuang, Z.; Ren, P.; Ma, R.; Liao, J. Multi-scale video anomaly detection by multi-grained spatio-temporal representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024, pp. 17385–17394.

- Li, H.; Zhang, R.; Pan, Y.; Ren, J.; Shen, F. LR-FPN: Enhancing Remote Sensing Object Detection with Location Refined Feature Pyramid Network. arXiv preprint arXiv:2404.01614 2024.

- Weng, W.; Wei, M.; Ren, J.; Shen, F. Enhancing Aerial Object Detection with Selective Frequency Interaction Network. IEEE Transactions on Artificial Intelligence 2024, 1, 1–12.

- Shen, F.; Ye, H.; Zhang, J.; Wang, C.; Han, X.; Yang, W. Advancing pose-guided image synthesis with progressive conditional diffusion models. arXiv preprint arXiv:2310.06313 2023.

- Sultani, W.; Chen, C.; Shah, M. Real-world anomaly detection in surveillance videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 6479–6488.

- Zhou, H.; Yu, J.; Yang, W. Dual memory units with uncertainty regulation for weakly supervised video anomaly detection. In Proceedings of the AAAI Conference on Artificial Intelligence, 2023, Vol. 37, pp. 3769–3777.

- Balntas, V.; Riba, E.; Ponsa, D.; Mikolajczyk, K. Learning local feature descriptors with triplets and shallow convolutional neural networks. In Proceedings of the BMVC, 2016, Vol. 1, p. 3.

- Kendall, A.; Gal, Y.; Cipolla, R. Multi-task learning using uncertainty to weigh losses for scene geometry and semantics. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 7482–7491.

| Method | Feature | AP (%) on XD-Violence | AUC (%) on UCF-Crime |
|---|---|---|---|
| P. Wu et al. (ECCV’20) [2] | I3D-RGB | - | 82.44 |
| RTFM (ICCV’21) [4] | I3D-RGB | 77.81 | 84.03 |
| CRFD (TIP’21) [20] | I3D-RGB | 75.90 | 84.89 |
| MSL (AAAI’22) [9] | I3D-RGB | 78.28 | - |
| MGFN (AAAI’23) [22] | I3D-RGB | 79.19 | 86.98 |
| AFR (Ours) | I3D-RGB | 81.74 | 92.73 |

| Baseline | SAM | AP (%) on XD-Violence | AUC (%) on UCF-Crime |
|---|---|---|---|
| ✓ | | 80.91 | 87.57 |
| ✓ | ✓ | 81.74 | 92.73 |

| Baseline | SAM-CA | SAM-SA | AP (%) on XD-Violence | AUC (%) on UCF-Crime |
|---|---|---|---|---|
| ✓ | | | 80.91 | 87.57 |
| ✓ | ✓ | | 79.02 | 84.11 |
| ✓ | | ✓ | 80.88 | 85.64 |
| ✓ | ✓ | ✓ | 81.74 | 92.73 |

| Baseline | SAM | NonLocal | AP (%) on XD-Violence | AUC (%) on UCF-Crime |
|---|---|---|---|---|
| ✓ | | | 80.91 | 87.57 |
| ✓ | | ✓ | 78.19 | 84.47 |
| ✓ | ✓ | | 81.74 | 92.73 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).