Submitted: 05 April 2025

Posted: 08 April 2025

Abstract

Keywords:

1. Introduction

2. Related Work

2.1. Vision Transformers and Token Representations

2.2. Efficient Transformer Inference

2.3. Token Pruning and Dropping

2.4. Token Merging and Aggregation

2.5. Patch Selection and Redundancy Reduction

2.6. Saliency-Based and Attention-Based Importance Estimation

2.7. Token Importance Estimation Without Supervision

2.8. Interpretability and Visual Explanation of Transformers

2.9. Summary

3. Method

3.1. Overview

3.2. Vision Transformers and Patch Tokenization

3.3. Redundancy Estimation via Patch Similarity

3.4. Patch Pruning Strategy

3.5. Spatial Diversity Preservation

3.6. Transformer Integration

- Pre-transformer pruning: Apply pruning once, before the first transformer layer. This yields maximum speedup by reducing the length of the sequence throughout the entire model.

- Layer-wise progressive pruning: Perform pruning after selected transformer layers, allowing gradual reduction in token count. This supports better feature refinement and is more compatible with deeper ViTs.
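The trade-off between the two integration modes can be illustrated by counting how many patch tokens each layer would process. The sketch below is only an illustration: the helper name `token_schedule`, the pruning positions, and the keep ratios are assumptions and are not taken from the paper; 196 patches assumes a ViT-B/16 operating on a $224 \times 224$ input.

```python
def token_schedule(num_patches, num_layers, keep_ratio, prune_layers):
    """Return the number of patch tokens entering each transformer layer.

    prune_layers: 0-based indices of layers before which pruning is applied.
    keep_ratio:   fraction of patch tokens kept at each pruning step
                  (a fixed ratio is an assumption made for this sketch).
    """
    counts = []
    n = num_patches
    for layer in range(num_layers):
        if layer in prune_layers:
            n = max(1, int(round(n * keep_ratio)))
        counts.append(n)
    return counts


# Pre-transformer pruning: one pruning step before the first layer.
pre = token_schedule(196, 12, keep_ratio=0.7, prune_layers={0})

# Layer-wise progressive pruning: milder steps before layers 3, 6, and 9.
prog = token_schedule(196, 12, keep_ratio=0.9, prune_layers={3, 6, 9})

# Self-attention cost grows with the square of the token count, so the
# sum of squares gives a rough proxy for total attention work.
attention_proxy = {
    "pre-transformer": sum(n * n for n in pre),
    "progressive": sum(n * n for n in prog),
}
print(pre)
print(prog)
print(attention_proxy)
```

Under these assumed settings the pre-transformer schedule processes fewer tokens in every layer, while the progressive schedule keeps all tokens available to the early layers, which matches the qualitative trade-off described above.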

3.7. Computational Complexity

3.8. Training-Free and Model-Agnostic Design

3.9. Summary

- Compute patch embeddings from the input image using the ViT patch projection layer.

- Calculate pairwise patch similarities using cosine similarity.

- Compute redundancy scores for each patch based on average similarity.

- Prune the most redundant patches based on a fixed or adaptive threshold.

- Retain the class token and remaining patch tokens for transformer processing.
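The steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions rather than the authors' implementation: the function name `prune_redundant_patches`, the exclusion of self-similarity from the average, and the fixed pruning ratio are choices made here, and random vectors stand in for the output of the ViT patch projection layer.

```python
import numpy as np


def prune_redundant_patches(tokens, prune_ratio=0.3):
    """Drop the most redundant patch tokens.

    tokens: array of shape (1 + N, D); row 0 is the class token and
            rows 1..N are patch embeddings.
    Returns (kept_tokens, kept_patch_indices).
    """
    cls_token, patches = tokens[:1], tokens[1:]
    n = patches.shape[0]

    # Pairwise cosine similarity between patch embeddings.
    normed = patches / (np.linalg.norm(patches, axis=1, keepdims=True) + 1e-8)
    sim = normed @ normed.T

    # Redundancy score: average similarity to all other patches
    # (excluding self-similarity is an assumption of this sketch).
    redundancy = (sim.sum(axis=1) - np.diag(sim)) / max(n - 1, 1)

    # Keep the least redundant patches and restore their spatial order.
    num_keep = n - int(n * prune_ratio)
    keep = np.sort(np.argsort(redundancy, kind="stable")[:num_keep])

    # The class token is always retained.
    return np.concatenate([cls_token, patches[keep]], axis=0), keep


# Stand-in for ViT-B/16 embeddings: 1 class token + 196 patches, D = 768.
rng = np.random.default_rng(0)
tokens = rng.standard_normal((197, 768))
kept, kept_idx = prune_redundant_patches(tokens, prune_ratio=0.3)
print(kept.shape)
```

The returned indices can be used to gather the matching positional embeddings before the remaining tokens are passed to the transformer.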

4. Experiments

4.1. Experimental Setup

4.1.1. Datasets

- ImageNet-1K [65]: A large-scale classification dataset with over 1.2M training images and 50K validation images across 1000 categories.

- CIFAR-100 [81]: A smaller but challenging dataset containing 100 fine-grained classes with 50K training and 10K test images of size $32 \times 32$.

- Oxford Flowers-102 [82]: A fine-grained dataset with 102 flower categories, used to evaluate robustness under limited data and subtle visual variations.

4.1.2. Models

- ViT-B/16 [17]: A baseline Vision Transformer with 12 transformer blocks, hidden size 768, and patch size $16 \times 16$.

- DeiT-Small [20]: A data-efficient variant with a smaller footprint, trained with stronger augmentations and knowledge distillation.

- Swin-Tiny [21]: A hierarchical transformer with local window-based attention, used to evaluate generalization to architectures beyond the plain ViT.

4.1.3. Implementation Details

4.2. Baselines

- No Pruning: Full model inference with all patch tokens retained.

- Random Pruning: Randomly prune the same number of tokens as our method to measure the impact of informed pruning.

- Attention Rollout [66]: Use attention maps to select top-k patches with highest cumulative attention to the class token.

- DynamicViT [67]: A learnable gating mechanism that drops tokens dynamically during inference.

- Token Merging (ToMe) [38]: Merge similar tokens with bipartite soft matching inside each transformer block; it can be applied to a pretrained model without retraining.
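For reference, the two simplest baselines can be sketched as follows. The rollout function follows the commonly described recipe (average the heads, add the identity to account for residual connections, re-normalize the rows, and multiply across layers); the exact variant used in the experiments is not specified here, so the function names, the equal 0.5 weighting, and the random attention maps are assumptions.

```python
import numpy as np


def attention_rollout(attentions):
    """attentions: list of (heads, T, T) row-stochastic maps, first layer first."""
    num_tokens = attentions[0].shape[-1]
    rollout = np.eye(num_tokens)
    for attn in attentions:
        a = attn.mean(axis=0)                   # average over heads
        a = 0.5 * a + 0.5 * np.eye(num_tokens)  # model the residual connection
        a = a / a.sum(axis=-1, keepdims=True)   # keep rows normalized
        rollout = a @ rollout                   # accumulate across layers
    return rollout


def select_topk_patches(attentions, k):
    """Indices of the k patches with the highest rollout score for the class token."""
    scores = attention_rollout(attentions)[0, 1:]  # class-token row, patches only
    return np.sort(np.argsort(-scores, kind="stable")[:k])


def random_keep_indices(num_patches, num_keep, seed=0):
    """Random Pruning baseline: keep a uniformly random subset of patches."""
    rng = np.random.default_rng(seed)
    return np.sort(rng.choice(num_patches, size=num_keep, replace=False))


# Toy input: 3 layers, 2 heads, 1 class token + 4 patches.
rng = np.random.default_rng(0)
logits = rng.standard_normal((3, 2, 5, 5))
attn = np.exp(logits)
attn /= attn.sum(axis=-1, keepdims=True)
layers = [attn[i] for i in range(3)]

rolled = attention_rollout(layers)
topk = select_topk_patches(layers, k=2)
rand = random_keep_indices(num_patches=4, num_keep=2)
print(topk, rand)
```

Both selectors return sorted patch indices, so they can be swapped in for the similarity-based selection when comparing methods at the same pruning ratio.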

4.3. Main Results

4.4. Ablation Studies

4.4.1. Similarity Metric

4.4.2. Spatial Diversity Regularization

4.4.3. Pruning Location

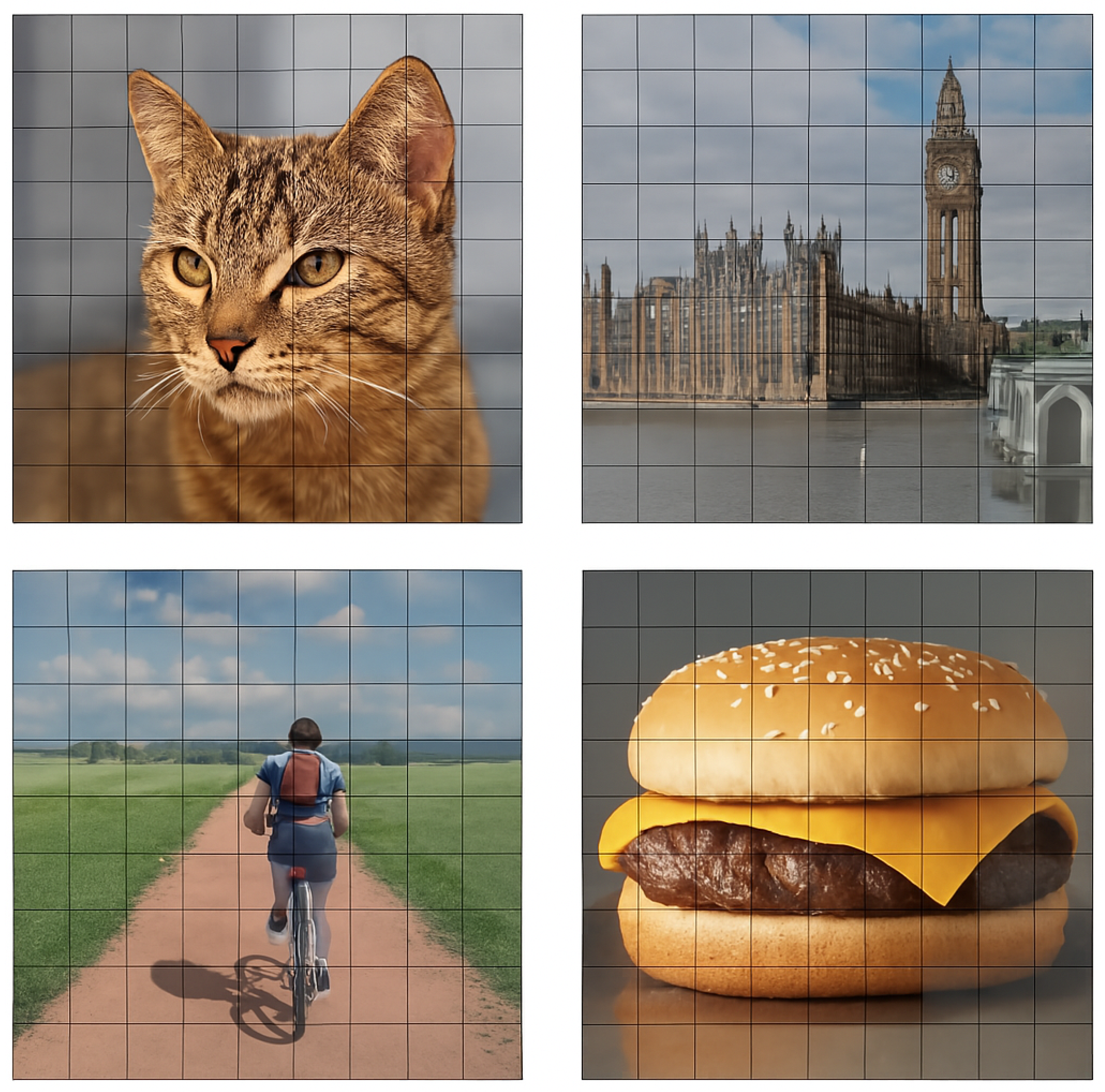

4.5. Qualitative Results

4.6. Inference Speed and Memory

4.7. Summary of Findings

- Similarity-based patch pruning is effective across multiple datasets and architectures.

- At a pruning ratio of 0.30, our method reaches 80.6% accuracy at 12.7 GFLOPs, compared with 80.1% for ToMe and 79.5% for Attention Rollout, while being significantly simpler.

- The method scales well with higher pruning ratios and is compatible with pretrained models.

- Both quantitative and qualitative evaluations confirm that retained patches capture essential information.

5. Discussion

5.1. Interpretability and Simplicity

5.2. Generalizability and Transferability

5.3. Trade-offs and Limitations

- Static Pruning: Since the pruning decision is made once based on similarity in the input embeddings, the model cannot adaptively revise its token importance throughout the transformer layers. This can be suboptimal for tasks that require dynamic token interactions.

- Global Similarity Bias: Computing average similarity over all patches may penalize patches that are similar to many others but still carry semantically critical information (e.g., repetitive object parts). Incorporating task-awareness or class sensitivity could mitigate this issue.

- Lack of Supervision: The method does not leverage label or task information. While this is a strength in terms of generality, it may also limit optimality for task-specific importance scoring, such as in segmentation or detection.

- Computational Cost of Similarity Matrix: For very high-resolution images with many patches (e.g., or larger), computing the full similarity matrix can become a bottleneck. However, approximate nearest neighbor search or clustering-based approaches can reduce this cost substantially.
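The quadratic growth of the similarity matrix can be checked with simple arithmetic. The sketch below assumes the ViT-B/16 settings listed in the experimental setup (patch size 16, embedding dimension 768) and float32 storage; the input resolutions are illustrative choices made here, and `similarity_cost` is a hypothetical helper.

```python
def similarity_cost(image_size, patch_size=16, dim=768, bytes_per_value=4):
    """Rough cost of a dense N x N cosine-similarity matrix over patch embeddings."""
    n = (image_size // patch_size) ** 2
    return {
        "patches": n,
        "pair_entries": n * n,
        "mult_adds": n * n * dim,  # one D-dimensional dot product per pair
        "matrix_mib": n * n * bytes_per_value / 2**20,
    }


# Illustrative resolutions only; they are not taken from the paper.
costs = {size: similarity_cost(size) for size in (224, 512, 1024)}
for size, cost in costs.items():
    print(size, cost)
```

Doubling the image side length quadruples the number of patches and multiplies the number of pairwise entries by sixteen, which is why approximate or clustered similarity becomes attractive at high resolution.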

5.4. Extensions and Future Work

- Adaptive Thresholding: Rather than pruning a fixed ratio of patches, future work could explore dynamic thresholds based on image content complexity or entropy.

- Learnable Similarity Functions: While cosine similarity is simple and effective, learning a similarity function jointly with the transformer could offer greater pruning precision.

- Multi-Stage Pruning: Combining patch similarity pruning with progressive token merging during transformer layers may yield additional efficiency gains.

- Task-Aware Pruning: Incorporating weak supervision or attention-weighted similarity scores could adapt the method for downstream tasks such as object detection, segmentation, or image captioning.

- Uncertainty Estimation: Introducing confidence scores or uncertainty quantification could help decide when not to prune certain ambiguous or borderline patches.

5.5. Broader Impact

5.6. Summary

6. Conclusions

References

- Nicolas Carion, Francisco Massa, Gabriel Synnaeve, et al. End-to-end object detection with transformers. arXiv preprint arXiv:2005.12872, 2020.

- Leonid Boytsov and Eric Nyberg. Flexible retrieval with NMSLIB and FlexNeuART. In Proceedings of Second Workshop for NLP Open Source Software (NLP-OSS), pages 32–43, Online, November 2020. Association for Computational Linguistics.

- Ting Liu, Liangtao Shi, Richang Hong, Yue Hu, Quanjun Yin, and Linfeng Zhang. Multi-stage vision token dropping: Towards efficient multimodal large language model. arXiv preprint arXiv:2411.10803, 2024.

- Rodrigo Nogueira, Zhiying Jiang, and Jimmy Lin. Document ranking with a pretrained sequence-to-sequence model, 2020.

- Ehud D Karnin. A simple procedure for pruning back-propagation trained neural networks. IEEE transactions on neural networks, 1990.

- Linke Ouyang, Yuan Qu, Hongbin Zhou, Jiawei Zhu, Rui Zhang, Qunshu Lin, Bin Wang, Zhiyuan Zhao, Man Jiang, Xiaomeng Zhao, Jin Shi, Fan Wu, Pei Chu, Minghao Liu, Zhenxiang Li, Chao Xu, Bo Zhang, Botian Shi, Zhongying Tu, and Conghui He. Omnidocbench: Benchmarking diverse pdf document parsing with comprehensive annotations, 2024.

- Gyuwan Kim and Kyunghyun Cho. Length-adaptive transformer: Train once with length drop, use anytime with search. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 6501–6511, Online, August 2021. Association for Computational Linguistics.

- Yi Tay, Mostafa Dehghani, Dara Bahri, and Donald Metzler. Efficient transformers: A survey. ACM Computing Surveys, 55(6):1–28, 2022.

- Yanyu Li, Ju Hu, Yang Wen, Georgios Evangelidis, Kamyar Salahi, Yanzhi Wang, Sergey Tulyakov, and Jian Ren. Rethinking vision transformers for mobilenet size and speed. arXiv preprint arXiv:2212.08059, 2022.

- Salman Khan, Muzammal Naseer, Munawar Hayat, Syed Waqas Zamir, Fahad Shahbaz Khan, and Mubarak Shah. Transformers in vision: A survey. ACM Computing Surveys (CSUR), 54(10s):1–41, 2022.

- Liu Yang, Qingyao Ai, Jiafeng Guo, and W. Bruce Croft. aNMM: Ranking short answer texts with attention-based neural matching model. CoRR, abs/1801.01641, 2018.

- Anonymous. Frobnication tutorial, 2024. Supplied as supplemental material tr.pdf.

- Kai Hui, Andrew Yates, Klaus Berberich, and Gerard de Melo. A position-aware deep model for relevance matching in information retrieval. CoRR, abs/1704.03940, 2017.

- Xiao Bi, Deli Chen, Guanting Chen, Shanhuang Chen, Damai Dai, Chengqi Deng, Honghui Ding, Kai Dong, Qiushi Du, Zhe Fu, et al. Deepseek LLM: Scaling open-source language models with longtermism. arXiv preprint arXiv:2401.02954, 2024.

- Shunyu Yao, Dian Yu, Jeffrey Zhao, Izhak Shafran, Tom Griffiths, Yuan Cao, and Karthik Narasimhan. Tree of thoughts: Deliberate problem solving with large language models. Advances in Neural Information Processing Systems, 36, 2023.

- Gemini Team, Rohan Anil, Sebastian Borgeaud, Yonghui Wu, Jean-Baptiste Alayrac, Jiahui Yu, Radu Soricut, Johan Schalkwyk, Andrew M Dai, Anja Hauth, et al. Gemini: a family of highly capable multimodal models. arXiv preprint arXiv:2312.11805, 2023.

- Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- Vera Boteva, Demian Gholipour, Artem Sokolov, and Stefan Riezler. A full-text learning to rank dataset for medical information retrieval. In Proceedings of the European Conference on Information Retrieval (ECIR). Springer, 2016.

- Yijiang Liu, Huanrui Yang, Zhen Dong, Kurt Keutzer, Li Du, and Shanghang Zhang. Noisyquant: Noisy bias-enhanced post-training activation quantization for vision transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 20321–20330, 2023.

- Hugo Touvron, Matthieu Cord, Matthijs Douze, Francisco Massa, Alexandre Sablayrolles, and Hervé Jégou. Training data-efficient image transformers & distillation through attention. In International conference on machine learning, pages 10347–10357. PMLR, 2021.

- Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, and Baining Guo. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF international conference on computer vision, pages 10012–10022, 2021.

- Sucheng Ren, Zhengqi Gao, Tianyu Hua, Zihui Xue, Yonglong Tian, Shengfeng He, and Hang Zhao. Co-advise: Cross inductive bias distillation. In Proceedings of the IEEE/CVF Conference on computer vision and pattern recognition, pages 16773–16782, 2022.

- Zhuyun Dai and Jamie Callan. Deeper text understanding for IR with contextual neural language modeling. CoRR, abs/1905.09217, 2019.

- Yanwei Li, Chengyao Wang, and Jiaya Jia. LLaMA-VID: An image is worth 2 tokens in large language models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024.

- Sinong Wang, Belinda Z Li, Madian Khabsa, Han Fang, and Hao Ma. Linformer: Self-attention with linear complexity. arXiv preprint arXiv:2006.04768, 2020.

- Yassine Zniyed, Thanh Phuong Nguyen, et al. Enhanced network compression through tensor decompositions and pruning. IEEE Transactions on Neural Networks and Learning Systems.

- Ralf Herbrich, Thore Graepel, and Klaus Obermayer. Large margin rank boundaries for ordinal regression. 88, 01 2000.

- Shilong Liu, Zhaoyang Zeng, Tianhe Ren, Feng Li, Hao Zhang, Jie Yang, Qing Jiang, Chunyuan Li, Jianwei Yang, Hang Su, et al. Grounding dino: Marrying dino with grounded pre-training for open-set object detection. In European Conference on Computer Vision, pages 38–55. Springer, 2025.

- Manzil Zaheer, Guru Guruganesh, Kumar Avinava Dubey, Joshua Ainslie, Chris Alberti, Santiago Ontanon, Philip Pham, Anirudh Ravula, Qifan Wang, Li Yang, and Amr Ahmed. Big bird: Transformers for longer sequences. In H. Larochelle, M. Ranzato, R. Hadsell, M.F. Balcan, and H. Lin, editors, Advances in Neural Information Processing Systems, volume 33, pages 17283–17297. Curran Associates, Inc., 2020.

- Keshav Santhanam, Omar Khattab, Jon Saad-Falcon, Christopher Potts, and Matei Zaharia. Colbertv2: Effective and efficient retrieval via lightweight late interaction, 2021.

- Junhua Mao, Jonathan Huang, Alexander Toshev, Oana Camburu, Alan Yuille, and Kevin Murphy. Generation and comprehension of unambiguous object descriptions, 2016.

- Byungseok Roh, JaeWoong Shin, Wuhyun Shin, et al. Sparse DETR: Efficient end-to-end object detection with learnable sparsity. arXiv preprint arXiv:2111.14330, 2021.

- Yongming Rao, Wenliang Zhao, Benlin Liu, Jiwen Lu, Jie Zhou, and Cho-Jui Hsieh. DynamicViT: Efficient vision transformers with dynamic token sparsification. Advances in Neural Information Processing Systems, 34:13937–13949, 2021.

- Xun Wang, Haozhi Zhang, Weilin Huang, and Matthew R Scott. Cross-batch memory for embedding learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 6388–6397, 2020.

- Zhuyun Dai and Jamie Callan. Context-aware sentence/passage term importance estimation for first stage retrieval. arXiv preprint arXiv:1910.10687, 2019.

- Daniël Rennings, Felipe Moraes, and Claudia Hauff. An Axiomatic Approach to Diagnosing Neural IR Models. In Leif Azzopardi, Benno Stein, Norbert Fuhr, Philipp Mayr, Claudia Hauff, and Djoerd Hiemstra, editors, Advances in Information Retrieval, Lecture Notes in Computer Science, pages 489–503, Cham, 2019. Springer International Publishing.

- Baolin Peng, Chunyuan Li, Pengcheng He, Michel Galley, and Jianfeng Gao. Instruction tuning with GPT-4. arXiv preprint arXiv:2304.03277, 2023.

- Daniel Bolya, Cheng-Yang Fu, Xiaoliang Dai, Peizhao Zhang, Christoph Feichtenhofer, and Judy Hoffman. Token merging: Your ViT but faster. In International Conference on Learning Representations, 2023.

- Wenyu Lv, Shangliang Xu, Yian Zhao, Guanzhong Wang, Jinman Wei, Cheng Cui, Yuning Du, Qingqing Dang, and Yi Liu. DETRs beat YOLOs on real-time object detection. arXiv preprint arXiv:2304.08069, 2023.

- Weihao Yu, Mi Luo, Pan Zhou, Chenyang Si, Yichen Zhou, Xinchao Wang, Jiashi Feng, and Shuicheng Yan. Metaformer is actually what you need for vision. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 10819–10829, 2022.

- Y Liu, M Ott, N Goyal, et al. RoBERTa: A robustly optimized BERT pretraining approach. arXiv preprint arXiv:1907.11692, 2019.

- Adam Berger and John Lafferty. Information retrieval as statistical translation. In Proceedings of the 22nd Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, SIGIR ’99, pages 222–229, New York, NY, USA, 1999. Association for Computing Machinery.

- Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. RoBERTa: A robustly optimized BERT pretraining approach. arXiv preprint arXiv:1907.11692, 2019.

- Van Dang, Michael Bendersky, and W. Bruce Croft. Two-stage learning to rank for information retrieval. In Proceedings of the 35th European Conference on Advances in Information Retrieval, ECIR’13, pages 423–434, Berlin, Heidelberg, 2013. Springer-Verlag.

- Xinyu Ma, Jiafeng Guo, Ruqing Zhang, Yixing Fan, Xiang Ji, and Xueqi Cheng. Prop: Pre-training with representative words prediction for ad-hoc retrieval, 2020.

- Yassine Zniyed, Thanh Phuong Nguyen, et al. Efficient tensor decomposition-based filter pruning. Neural Networks, 0639.

- Y Liang, C Ge, Z Tong, Y Song, P Xie, et al. Not all patches are what you need: Expediting vision transformers via token reorganizations. In ICLR, 2022.

- Qizhe Zhang, Aosong Cheng, Ming Lu, Zhiyong Zhuo, Minqi Wang, Jiajun Cao, Shaobo Guo, Qi She, and Shanghang Zhang. [cls] attention is all you need for training-free visual token pruning: Make vlm inference faster, 2024.

- Google Research. Vision transformer. https://github.com/google-research/vision_transformer/, 2023.

- Angela Fan, Edouard Grave, and Armand Joulin. Reducing transformer depth on demand with structured dropout. In International Conference on Learning Representations, 2020.

- Xiangcheng Liu, Tianyi Wu, and Guodong Guo. Adaptive sparse ViT: Towards learnable adaptive token pruning by fully exploiting self-attention. arXiv preprint arXiv:2209.13802, 2022.

- Elena Voita, David Talbot, Fedor Moiseev, et al. Analyzing multi-head self-attention: Specialized heads do the heavy lifting, the rest can be pruned. arXiv preprint arXiv:1905.09418, 2019.

- Lee Xiong, Chenyan Xiong, Ye Li, Kwok-Fung Tang, Jialin Liu, Paul Bennett, Junaid Ahmed, and Arnold Overwijk. Approximate nearest neighbor negative contrastive learning for dense text retrieval, 2020.

- Angela Fan, Pierre Stock, Benjamin Graham, et al. Training with quantization noise for extreme model compression. arXiv preprint arXiv:2004.07320, 2020.

- Huanrui Yang, Hongxu Yin, Maying Shen, Pavlo Molchanov, Hai Li, and Jan Kautz. Global vision transformer pruning with hessian-aware saliency. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 18547–18557, 2023.

- Jinnian Zhang, Houwen Peng, Kan Wu, Mengchen Liu, Bin Xiao, Jianlong Fu, and Lu Yuan. Minivit: Compressing vision transformers with weight multiplexing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 12145–12154, 2022.

- Ilya O Tolstikhin, Neil Houlsby, Alexander Kolesnikov, Lucas Beyer, Xiaohua Zhai, Thomas Unterthiner, Jessica Yung, Andreas Steiner, Daniel Keysers, Jakob Uszkoreit, et al. MLP-Mixer: An all-MLP architecture for vision. Advances in Neural Information Processing Systems, 34:24261–24272, 2021.

- Chris J.C. Burges. From RankNet to LambdaRank to LambdaMART: An overview. Technical report, June 2010.

- Mike Taylor, John Guiver, Stephen Robertson, and Tom Minka. SoftRank: Optimising non-smooth rank metrics. February 2008.

- Shaohan Huang, Li Dong, Wenhui Wang, Yaru Hao, Saksham Singhal, Shuming Ma, Tengchao Lv, Lei Cui, Owais Khan Mohammed, Barun Patra, Qiang Liu, Kriti Aggarwal, Zewen Chi, Johan Bjorck, Vishrav Chaudhary, Subhojit Som, Xia Song, and Furu Wei. Language is not all you need: Aligning perception with language models, 2023.

- Yufei Xu, Jing Zhang, Qiming Zhang, et al. ViTPose: Simple vision transformer baselines for human pose estimation. arXiv preprint arXiv:2204.12484, 2022.

- Luyu Gao, Zhuyun Dai, Tongfei Chen, Zhen Fan, Benjamin Van Durme, and Jamie Callan. Complementing lexical retrieval with semantic residual embedding, 2020.

- Yuliang Liu, Zhang Li, Mingxin Huang, Biao Yang, Wenwen Yu, Chunyuan Li, Xu-Cheng Yin, Cheng-Lin Liu, Lianwen Jin, and Xiang Bai. OCRBench: on the hidden mystery of OCR in large multimodal models. Science China Information Sciences, 67(12):220102, 2024.

- Rodrigo Nogueira. From doc2query to doctttttquery. 2019.

- Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Li Fei-Fei. ImageNet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 248–255, June 2009.

- F. Rosenblatt. The perceptron: A probabilistic model for information storage and organization in the brain. Psychological Review, 1958.

- Kaleel Mahmood, Rigel Mahmood, and Marten Van Dijk. On the robustness of vision transformers to adversarial examples. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 7838–7847, 2021.

| Method | Pruning Ratio | Accuracy (%) | FLOPs (G) | Speedup |
|---|---|---|---|---|
| No Pruning | 0.00 | 81.8 | 17.6 | |
| Random Pruning | 0.30 | 77.2 | 13.1 | |
| Attention Rollout | 0.30 | 79.5 | 13.1 | |
| ToMe | 0.30 | 80.1 | 12.8 | |
| Ours (Similarity) | 0.30 | 80.6 | 12.7 | |
| Ours (Similarity) | 0.50 | 79.3 | 9.2 | |
| Ours (Similarity) | 0.60 | 77.9 | 7.6 | |
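The Speedup column carries no values in this version of the table. As a rough guide only, the theoretical FLOPs reduction and the accuracy change relative to the unpruned model can be derived from the reported numbers, as in the sketch below. These ratios are simple arithmetic on the table entries and should not be read as the measured wall-clock speedups, which may differ.

```python
# (method, pruning ratio, accuracy %, GFLOPs) copied from the results table.
rows = [
    ("No Pruning", 0.00, 81.8, 17.6),
    ("Random Pruning", 0.30, 77.2, 13.1),
    ("Attention Rollout", 0.30, 79.5, 13.1),
    ("ToMe", 0.30, 80.1, 12.8),
    ("Ours (Similarity)", 0.30, 80.6, 12.7),
    ("Ours (Similarity)", 0.50, 79.3, 9.2),
    ("Ours (Similarity)", 0.60, 77.9, 7.6),
]

base_acc, base_flops = rows[0][2], rows[0][3]

summary = []
for method, ratio, acc, gflops in rows:
    summary.append({
        "method": method,
        "pruning_ratio": ratio,
        "flops_reduction": base_flops / gflops,  # theoretical, not measured
        "accuracy_drop": base_acc - acc,         # percentage points
    })

for entry in summary:
    print(f"{entry['method']:<18} {entry['pruning_ratio']:.2f} "
          f"{entry['flops_reduction']:.2f}x {entry['accuracy_drop']:.1f}")
```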

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).