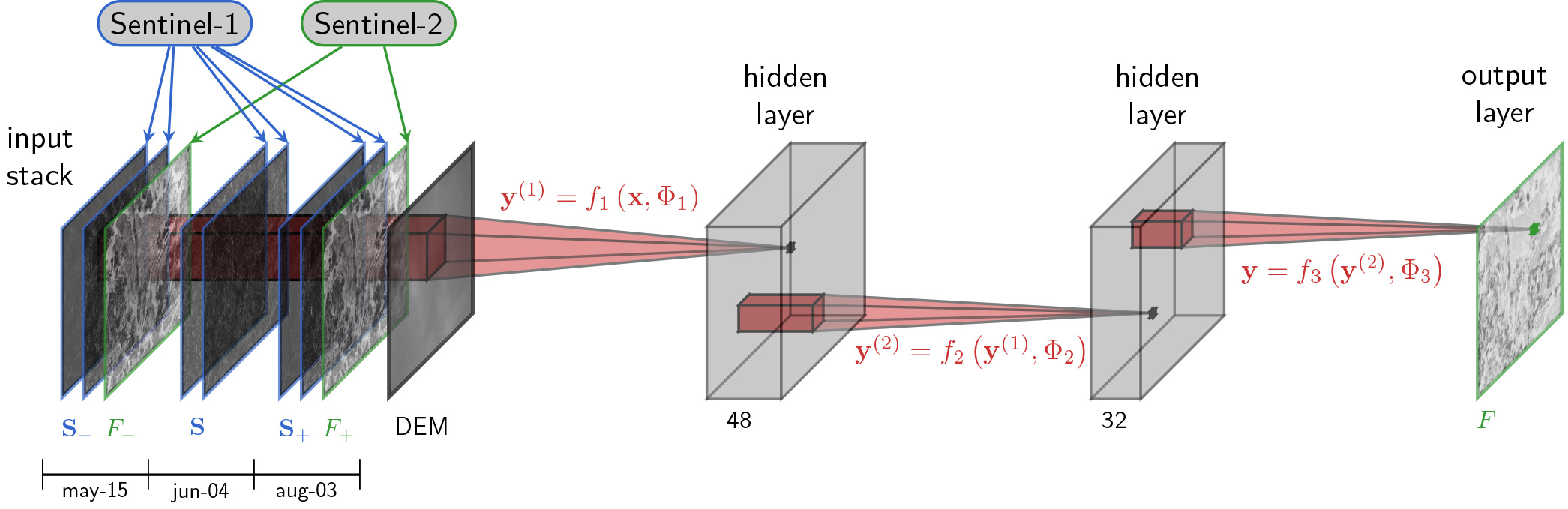

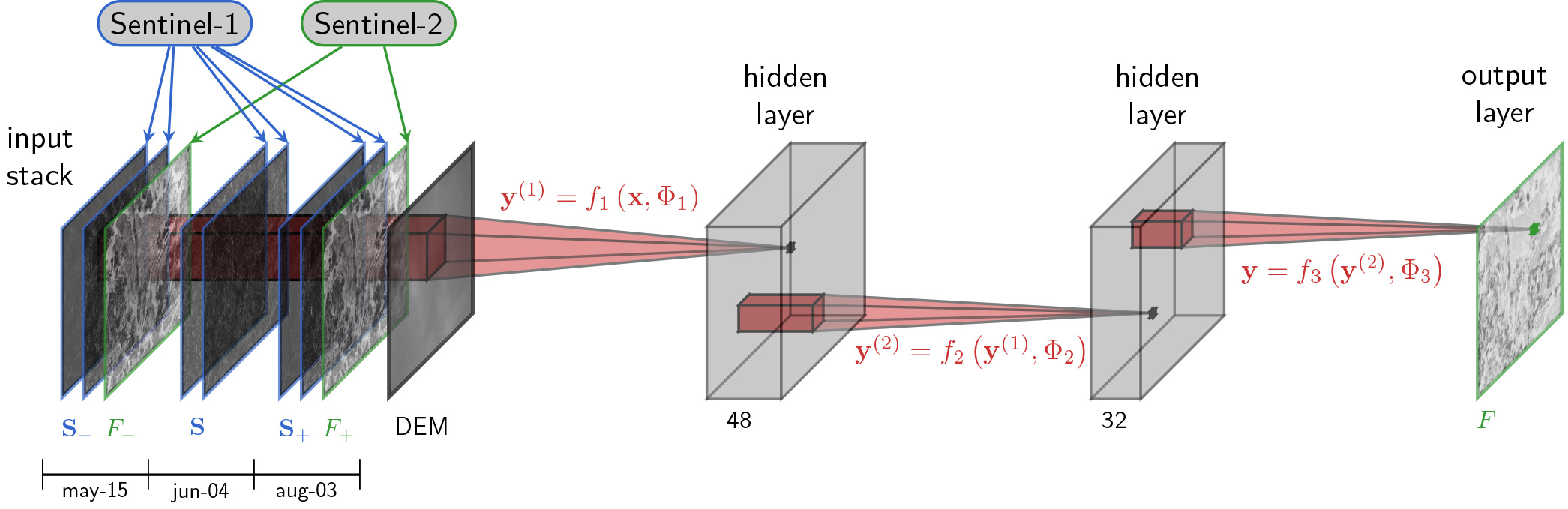

Sensitivity to weather conditions, and especially to clouds, severely limits the use of optical remote sensing for Earth monitoring applications. A possible alternative is to resort to weather-insensitive synthetic aperture radar (SAR) images. However, in many real-world applications, critical decisions are based on informative spectral features, such as water, vegetation, or soil indices, which cannot be extracted from SAR images. In the absence of optical sources, these data must be estimated. The current practice is to perform linear interpolation between data available at temporally close time instants. In this work, we propose to estimate missing spectral features through data fusion and deep learning. Several sources of information are taken into account (optical sequences, SAR sequences, and a digital elevation model, DEM) so as to exploit both temporal and cross-sensor dependencies. Based on these data and a tiny cloud-free fraction of the target image, a compact convolutional neural network (CNN) is trained to perform the desired estimation. To validate the proposed approach, we focus on the estimation of the normalized difference vegetation index (NDVI), using coupled Sentinel-1 and Sentinel-2 time series acquired over an agricultural region of Burkina Faso from May to November 2016. Several fusion schemes are considered: causal and non-causal, single-sensor and joint-sensor, corresponding to different operating conditions. Experimental results are very promising, showing a significant gain over baseline methods according to all performance indicators.
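To make the estimation target and the baseline concrete, the sketch below computes NDVI pixel-wise as (NIR − Red) / (NIR + Red) and implements the linear temporal interpolation baseline between two cloud-free acquisitions. It is a minimal illustration, not the authors' code: the function names `ndvi` and `interpolate_ndvi`, the `eps` guard, and the use of day numbers as time stamps are assumptions made here. For Sentinel-2, NIR and Red are commonly taken from bands B8 and B4. Note that this baseline is non-causal, since it needs an acquisition *after* the target date.

```python
import numpy as np


def ndvi(nir, red, eps=1e-8):
    """Pixel-wise NDVI = (NIR - Red) / (NIR + Red).

    `eps` (an assumption of this sketch) avoids division by zero
    on pixels where both reflectances are 0.
    """
    nir = np.asarray(nir, dtype=np.float64)
    red = np.asarray(red, dtype=np.float64)
    return (nir - red) / (nir + red + eps)


def interpolate_ndvi(t, t_before, ndvi_before, t_after, ndvi_after):
    """Linear temporal interpolation between two cloud-free dates.

    Times are plain numbers (e.g. day of year, a hypothetical convention).
    The estimate at time t is a convex combination of the two maps,
    weighted by temporal distance.
    """
    if t_after <= t_before or not (t_before <= t <= t_after):
        raise ValueError("need t_before <= t <= t_after and t_before < t_after")
    w = (t - t_before) / (t_after - t_before)  # 0 at t_before, 1 at t_after
    return (1.0 - w) * np.asarray(ndvi_before) + w * np.asarray(ndvi_after)


if __name__ == "__main__":
    # Toy 1x2 reflectance patch: one vegetated pixel, one bare pixel.
    print(ndvi([[0.4, 0.3]], [[0.1, 0.3]]))
    # Cloudy target date (day 12) between clear acquisitions on days 10 and 20.
    print(interpolate_ndvi(12, 10, np.array([0.2, 0.5]), 20, np.array([0.6, 0.5])))
```

The proposed CNN replaces this fixed weighting with a learned mapping that can also draw on SAR and DEM inputs; the interpolation function here only reproduces the kind of baseline it is compared against.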