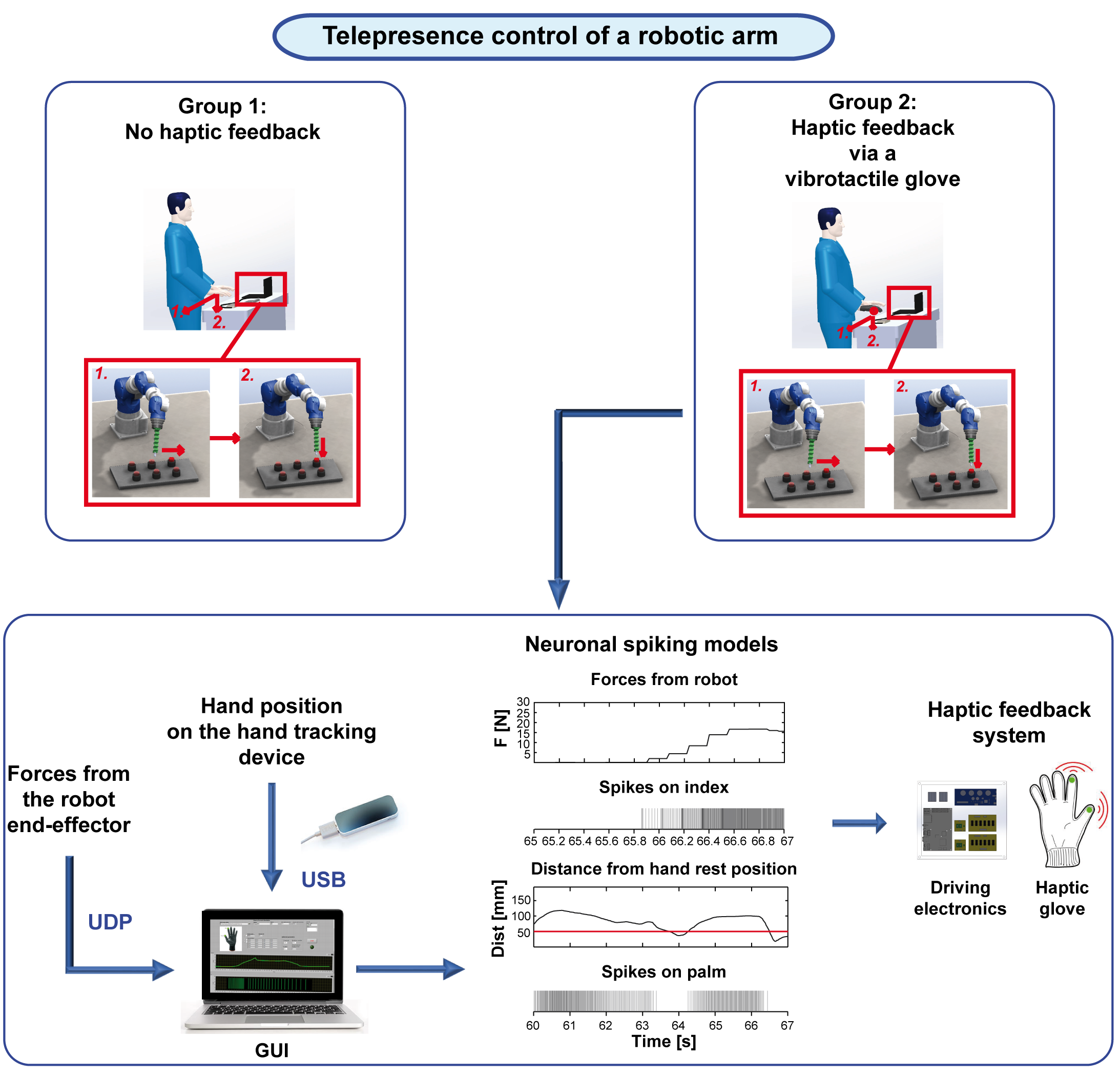

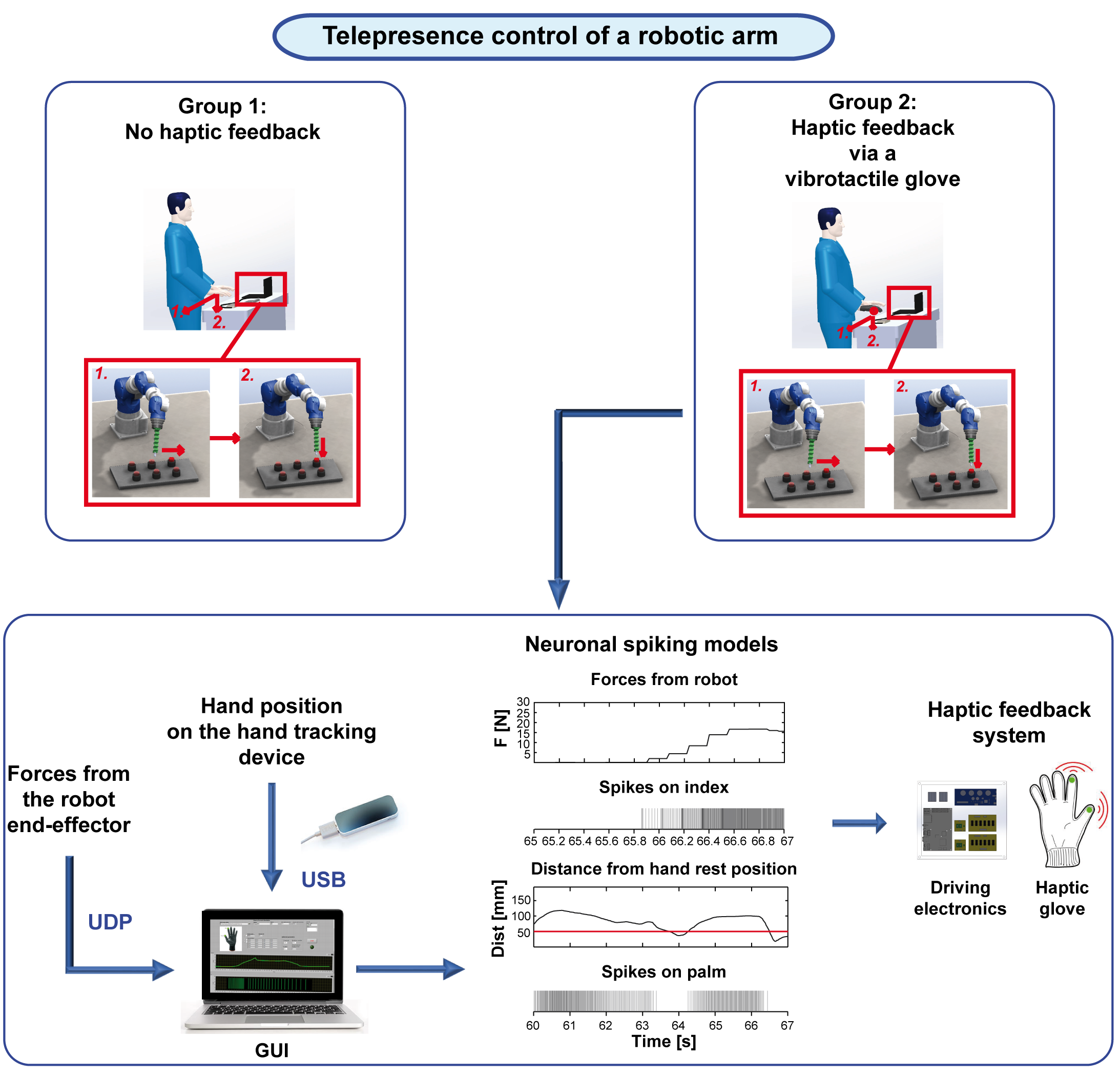

Research on bidirectional human-machine interfaces will enable smooth interaction with robotic platforms in contexts ranging from industry to telemedicine and rescue. This paper introduces a bidirectional communication system that achieves multisensory telepresence during the gestural control of an industrial robotic arm. We complement the gesture-based control with a tactile-feedback strategy grounded in a spiking artificial neuron model: force and motion from the robot are converted into neuromorphic haptic stimuli delivered to the user's hand through a vibrotactile glove. Untrained participants performed an experimental task benchmarking a pick-and-place operation, in which the robot end-effector was used to sequentially press six buttons illuminated in a random order; the task was executed both without and with tactile feedback for comparison. The results demonstrated the reliability of the hand-tracking strategy developed for controlling the robotic arm, and the effectiveness of a spiking neuron model for encoding hand displacement and exerted forces, promoting a fluid embodiment of the haptic interface and control strategy. The main contribution of this paper is a robotic arm under gesture-based remote control with multisensory telepresence, demonstrating for the first time that a spiking haptic interface can effectively deliver to the skin surface a sequence of stimuli that emulates the neural code of the underlying mechanoreceptors.
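The abstract does not state which spiking neuron model or parameters are used, so the following is only a minimal illustrative sketch of how a continuous signal such as normalized force can be turned into spike times. It assumes a leaky integrate-and-fire (LIF) neuron integrated with forward Euler; the function name `lif_encode` and the values of `dt`, `tau`, `gain` and `v_threshold` are hypothetical choices, not the authors' settings. In such a scheme, each spike could plausibly trigger one short pulse on a vibrotactile motor of the glove (also an assumption).

```python
from typing import List, Sequence


def lif_encode(
    signal: Sequence[float],
    dt: float = 0.001,
    tau: float = 0.02,
    gain: float = 100.0,
    v_threshold: float = 1.0,
    v_reset: float = 0.0,
) -> List[float]:
    """Hypothetical LIF encoder: map a sampled stimulus to spike times (s).

    Membrane dynamics (assumed): dv/dt = -v / tau + gain * I(t),
    where I(t) is the normalized stimulus (e.g. force) at each sample.
    All parameter values are illustrative, not taken from the paper.
    """
    v = v_reset
    spikes: List[float] = []
    for k, current in enumerate(signal):
        # Forward-Euler update of the membrane potential
        v += dt * (-v / tau + gain * current)
        if v >= v_threshold:
            # Record the spike time and reset the membrane potential
            spikes.append(k * dt)
            v = v_reset
    return spikes


if __name__ == "__main__":
    # One second of constant stimulus at two hypothetical force levels
    light = lif_encode([1.0] * 1000)
    firm = lif_encode([2.0] * 1000)
    print(len(light), len(firm))  # a firmer press yields a higher spike rate
```

With these assumed parameters the steady-state potential is `gain * I * tau`, so the neuron fires only when the normalized stimulus exceeds `v_threshold / (gain * tau) = 0.5`; above that level the firing rate grows with stimulus intensity, which is the property a rate-based haptic code relies on.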