1. Introduction

Time-domain data science is a rapidly growing field, with problems ranging from "physics-based", time-dependent modeling of driving processes to the model-independent forecasting of transients. Traditionally, the physical and environmental sciences, including meteorology, solar physics and astrophysics, have been fields where observational data are modeled with a theoretical or phenomenological, physics-based model. For forecasting, statistical techniques such as the Kalman filter and autoregressive models have been used, with data assimilation encompassing the suite of cutting-edge methods used in meteorology. With ever-increasing volumes, velocities and varieties of time-domain data from contemporaneous observations, fast and precise forecasting is becoming increasingly important. Auto-generated real-time alerts in particular are a challenge, given the sheer volume and velocity of the data flow. This challenge is especially pronounced in astronomy, which has some of the highest data volumes and velocities. With the next generation of observatories, such as CTA in gamma rays (e.g., [1,2,3]), SKA in radio (e.g., [4,5,6]) and LSST in optical (e.g., [7]), this approach is impractical and in some situations impossible to implement. LSST is expected to produce a very large number of alerts per night. SKA will receive a few petabytes (PB) of raw data per second from the telescopes, and the data and signal processors will have to handle a few terabytes per second. This will translate into a very high rate of transient signals. At such rates and volumes, we need automated, fast, efficient and intelligent systems to process and interpret such alerts. Probabilistic forecasts of a future episode, based on current and/or past observations at different wavelengths, would constitute such alerts. Target of Opportunity (ToO) observations stand to benefit from machine intelligence in this respect. Traditionally, ToO observations are triggered through MoU agreements between collaborations running different telescopes, Astronomer's Telegrams, or virtual observatory events (VOEvents)¹, which are automatically parsed, as well as through preplanned observations coordinated by representatives of different telescope collaborations.

Furthermore, the variety of transient signals makes it inevitable to use machine learning techniques (e.g., [7,8,9,10]) to detect complex patterns and classes of signals. In particular, deep learning methods are especially relevant for capturing the complex variability behaviour of astronomical signals [11,12]. The vast majority of existing efforts focus on classifying transients from a population. While one can broadly classify the variability in lightcurves, or in general any time-series, into stochastic, (quasi-)periodic, secular and transient, the exact pattern of variations can be quite complex both to model and to predict. That is why it is equally important to focus on individual lightcurves and to test the ability of machine/deep learning methods to capture the complexity and individuality of lightcurves, as well as their capability to generalise to a variety of lightcurves. In this work we focus on the former, with a view to building towards the latter.

Compared with astronomical signals, including the more elaborate datasets of solar outbursts (flares and coronal mass ejections, or CMEs), meteorological datasets such as atmospheric or oceanographic data are vastly more complex. Not only do they have spatio-temporal features (true also for solar data), but the resolution required to make impactful predictions is significantly finer. This poses a challenge not only for the data-science techniques but also for the underlying (numerical/physical) models. In numerical weather prediction, for instance, convective processes in the atmosphere often require numerical schemes for the physical models that are separate from the coarse-grained global circulation models, or GCMs (e.g., [13])². Thus meteorological data and the requirements of meteorological forecasting present greater challenges than astronomical forecasting. Furthermore, the principal approaches of numerical weather prediction centre around data assimilation [14], which involves optimally combining observed information with the theoretical (most likely numerical) model, including for operations at weather and climate prediction centres such as the Met Office and the European Centre for Medium-Range Weather Forecasts (ECMWF). Therefore, for testing basic features of machine learning/deep learning methods, astronomical datasets may have an advantage. Hence we focus on them here, in the hope that some of the fundamental insights, or indeed challenges, hold true more generally for more complicated scenarios.

Astrophysical variability is often in the low signal-to-noise regime, which makes forecasting more challenging. Some of the most important forecasting problems for astronomical time-series concern transient flares and quasi-periodic oscillations buried under stochastic signals. Note that the stochastic or noisy component is actually a combination of background noise, due either to instrumental or observational backgrounds, and a genuine astrophysical component related to stochastic processes in the transient source. This constitutes an interesting forecasting challenge. Prediction of noisy time-series is certainly not limited to astronomy; important applications appear in finance [15], meteorology [16], neuroscience, etc. As explained in Lee Giles and Lawrence [17], forecasting from limited sample sizes in a low signal-to-noise, highly non-stationary and non-linear setting is, in general, enormously challenging. For this most general scenario, Lee Giles and Lawrence [17] prescribe a combination of symbolic representation with a self-organising map and grammatical inference with recurrent neural networks (RNNs) [18]. For astrophysical signals, while we may be in the high-noise regime, the non-linearity and non-stationarity can be assumed to be weak in most typical cases. Therefore, we simply use RNNs. While we do not wish to draw conclusions or see patterns and trends by overfitting unwanted noise in the lightcurves, we do wish to study outbursts that emerge on the short timescales of the noise spectrum. Indeed, a key question in the variability of active galactic nuclei (AGNs) is how to distinguish between statistical fluctuations, which in extreme cases lead to occasional outbursts, and special events that are isolated from these underlying fluctuations and thus drawn from a different physical process or, equivalently, a different distribution. An agnostic learning algorithm should be able to predict the former but not the latter. To do this, we must broadly characterise the response of the RNN architecture to the noise characteristics. This is clearly relevant for predicting, in advance, a well-defined transient episode such as an outburst or flaring episode, or a potential quasi-periodic signal, in real astronomical observations.

A key part of time-domain astronomy involves setting up multiwavelength observational campaigns. These are either pre-planned medium- to long-term observations or target of opportunity observations (ToOs), often triggered by an episode of transient activity at one wavelength. In either case, a key ingredient is the ability to use existing observations to predict future ones. These existing observations are either simply past observations at the same wavelength or, more interestingly, observations at another wavelength. The latter presumes a physical model that generates multiwavelength emission with temporal lags between different wavebands. This presents a forecasting problem well suited to the application of RNNs, and of deep learning in general. Most applications of machine learning in transient astronomy focus on the classification of transients (either by source or by variability type) [19]. Here we look at time-domain forecasting along the lines of that in finance, econometrics, numerical weather prediction (NWP), etc. Specifically, multiwavelength transient astronomy may be viewed as a multivariate forecasting problem.

One of the keys to such forecasting is understanding, as well as we can, the performance of the algorithm in the presence of different types of temporal structure. We quantify this performance in terms of the training and test losses.
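As a concrete illustration of how such performance can be quantified, the sketch below splits a series chronologically into an earlier training segment and a later test segment, computes both losses, and reports their ratio, the test-to-train loss ratio used throughout this paper. Using the mean squared error as the loss, and the function names, are our assumptions for illustration.

```python
import numpy as np

def mse(y_true, y_pred):
    """Mean squared error between observed and predicted values (assumed loss)."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean((y_true - y_pred) ** 2))

def chronological_split(series, train_fraction):
    """Split a time-series into an earlier training segment and a later test segment.
    There is no shuffling: the test segment always lies in the 'future'."""
    series = np.asarray(series, dtype=float)
    n_train = int(len(series) * train_fraction)
    return series[:n_train], series[n_train:]

def loss_ratio(train_true, train_pred, test_true, test_pred):
    """Test loss divided by training loss. Values well above 1 suggest the model
    fits the past much better than it forecasts the held-out future."""
    return mse(test_true, test_pred) / mse(train_true, train_pred)
```

A ratio close to unity indicates that the forecast generalises to unseen epochs about as well as it fits the training epochs, which is why it serves as a compact performance measure in the sensitivity tests.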

There are several predictive frameworks for time-series, based on machine learning and otherwise. A number of time-series models are in use across domains³, such as Holt-Winters [20], ARIMA and SARIMA [21], GARCH [22], etc. Autoregressive Integrated Moving Average (ARIMA) models are among the most popular for forecasting in several domains, with the "Integrated" part dealing with large-scale trends. For detecting features and patterns, supervised machine learning algorithms such as Random Forest [23] and Gradient Boosting Machine [24] can be used. Viewing the task as a regression problem, one can use Lasso regression, neural networks, etc. In this paper, we do not attempt to provide a comprehensive survey of the various time-series forecasting methods and their efficacy in modest signal-to-noise domains like astronomy. Rather, we focus on one of the most popular methods, which has yielded success for long-term time-series data. In this respect, recurrent neural networks (RNNs) are an excellent candidate [18].
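To make the contrast with classical models concrete, the sketch below fits a pure autoregressive AR(p) model, the simplest member of the ARIMA family (no differencing and no moving-average terms), by ordinary least squares, and produces a one-step-ahead forecast. It is a minimal NumPy illustration under these simplifying assumptions, not the estimator of any particular statistics package.

```python
import numpy as np

def fit_ar(series, p):
    """Fit x_t = c + a_1 x_{t-1} + ... + a_p x_{t-p} + e_t by least squares.
    Returns the intercept c and the coefficients [a_1, ..., a_p]."""
    x = np.asarray(series, dtype=float)
    n = len(x)
    # Column k holds lag k+1, aligned with the targets x[p:].
    lags = np.column_stack([x[p - k - 1 : n - k - 1] for k in range(p)])
    design = np.column_stack([np.ones(n - p), lags])
    target = x[p:]
    coeffs, *_ = np.linalg.lstsq(design, target, rcond=None)
    return coeffs[0], coeffs[1:]

def forecast_ar(series, intercept, coeffs):
    """One-step-ahead forecast from the last p observations."""
    p = len(coeffs)
    recent = np.asarray(series, dtype=float)[-p:][::-1]  # most recent value first
    return float(intercept + coeffs @ recent)
```

Such a model must be told its structure (here, the order p) in advance, which is the kind of assumed generating mechanism that agnostic deep learning methods avoid.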

RNNs, like other neural networks, can handle complex, non-linear dependencies. But unlike feed-forward networks, their "directed cyclic" structure, which allows the output at any stage to be fed back into the network at the next stage, makes them suitable for time-series work. In particular, we focus on Long Short-Term Memory (LSTM) RNN architectures [25], which are capable of keeping track of long-term dependencies. This is clearly important in astronomy, given the large dynamic range of timescales of the underlying physical processes as well as of the observations. To this end, we test with artificial datasets that do not have a very complex temporal structure. More significantly, we use artificial datasets with characteristics typically found in long-term astronomical observations, and of which we have some a priori knowledge.
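For readers less familiar with the gating mechanism, the sketch below implements one time step of an LSTM cell in NumPy (forget, input and output gates plus a candidate state) and loops it over a series so that the output and internal state are carried forward. It follows the standard formulation with a forget gate; the stacked weight layout, gate order, names and random initialisation are our assumptions. It illustrates the mechanism only and is not the network used in this paper, which would be built with a deep learning library.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM step. W: (4H, n_in), U: (4H, H), b: (4H,).
    Assumed gate order in the stacked arrays: forget, input, output, candidate."""
    z = W @ x_t + U @ h_prev + b
    f, i, o, g = np.split(z, 4)
    f = sigmoid(f)   # forget gate: how much of the previous state to keep
    i = sigmoid(i)   # input gate: how much of the candidate to write
    o = sigmoid(o)   # output gate: how much of the state to expose
    g = np.tanh(g)   # candidate state from current input and previous output
    c_t = f * c_prev + i * g
    h_t = o * np.tanh(c_t)
    return h_t, c_t

def run_lstm(series, n_hidden=4, seed=0):
    """Pass a univariate series through one randomly initialised LSTM cell,
    carrying (h, c) from step to step: the recurrent loop."""
    rng = np.random.default_rng(seed)
    W = rng.normal(scale=0.1, size=(4 * n_hidden, 1))
    U = rng.normal(scale=0.1, size=(4 * n_hidden, n_hidden))
    b = np.zeros(4 * n_hidden)
    h = np.zeros(n_hidden)
    c = np.zeros(n_hidden)
    outputs = []
    for value in series:
        h, c = lstm_step(np.array([value]), h, c, W, U, b)
        outputs.append(h)
    return np.array(outputs)
```

The additive update of the internal state, gated by the forget gate, is what lets information persist over many steps, which is the property that makes LSTMs attractive for long-term dependencies.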

We divide the work into the following sections. In Section 2, we review the core architecture of the RNN-LSTM used. Section 3 presents the science case of multiwavelength forecasting that motivates the methodological work. The so-called sensitivity tests are presented in two subsections of Section 4. In Section 4.1, we examine the effects of dataset characteristics, namely gaps and complexity in terms of Fourier components, on performance. In Section 4.2, we examine the dependence on the neural network (hyper)parameters. In Section 5, we apply what we have learned to the example of real-time statistical forecasting of a transient flare as it develops. Finally, we present our conclusions in Section 6.

3. Motivation: Towards Multivariate (Multiwavelength) Forecasting

From the astronomy point of view, a very interesting application is forecasting a transient at one wavelength using the transient behaviour at another wavelength. This is, in practice, how multiwavelength target of opportunity observations (ToOs) are conducted. In other fields, this can be applied more generally to forecasting a transient driven by multiple (driver) variables. Flares measured by observatories or telescopes at one wavelength are used to trigger observations at another, provided they exceed a certain threshold. Usually the threshold is based on a simple, predetermined estimate, from historical data, of what constitutes a "high" or "active" state (e.g., [27,28]). In certain cases, for instance for polarisation changes [29], multiple statistically significant threshold criteria are used. However, due to the stochastic nature of flares, there is an unavoidable arbitrariness in the definition of a flaring state that would lead to a trigger. Increasingly, this approach to conducting target of opportunity observations will need to be automated and made as statistically robust and consistent as possible. Large-scale observatories such as CTA and SKA will produce tremendous data volumes and velocities, too high to conduct entire multiwavelength campaigns with complete or even partial manual triggering. One approach, therefore, is to use agnostic methods and algorithms, as provided by machine learning and deep learning. Even for the automatic alerts generated at present, the speed and accuracy with which triggering thresholds must be computed make machine learning techniques well suited to the task. This argument extends to multi-messenger observations. Multiwavelength or multi-messenger observations require multivariate forecasting. As a result, we need systems for multivariate forecasting ready for the next generation of observatories.
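The conventional threshold approach described above can be summarised in a few lines. The sketch below derives a trigger threshold from historical data as the median plus k times a robust scatter estimate (the median absolute deviation, scaled to approximate a Gaussian standard deviation) and flags new observations that exceed it. The statistic, the value k = 3 and the function names are illustrative assumptions; actual campaigns use their own, often source-specific, criteria.

```python
import numpy as np

def trigger_threshold(historical_flux, k=3.0):
    """Threshold for a 'high' state: median + k * robust standard deviation.
    The MAD is scaled by 1.4826 so that it estimates sigma for Gaussian data."""
    flux = np.asarray(historical_flux, dtype=float)
    median = np.median(flux)
    mad = np.median(np.abs(flux - median))
    return median + k * 1.4826 * mad

def triggers(new_flux, threshold):
    """Indices of new observations that would trigger a ToO alert."""
    return np.flatnonzero(np.asarray(new_flux, dtype=float) > threshold)
```

The arbitrariness noted in the text is visible here: changing k, or the choice of statistic, shifts which epochs are flagged as a flaring state.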

There are several traditional statistical methods of multivariate time-series forecasting that compete with machine learning (ML) and deep learning (DL) methods (e.g., [30,31]). These traditional methods typically need an underlying generating mechanism for the time-series. This is not the case for agnostic machine learning or deep learning algorithms, as explained in Han [31]. Also, traditional time-series methods such as autoregressive models or Gaussian processes may fail in the presence of features at multiple timescales, from very short (under a minute in astronomy) to very long (multiple years), unlike certain advanced deep learning models such as that of Lai et al. [32]. However, the latter are quite complex, often hard to interpret, and seem like "black boxes" [33,34]. For deep learning algorithms, this complexity is by design: it is meant to match the complexity of the features to be forecast, and is hence inherent in their design and objectives. However, if the sensitivity of forecasts to the various components of such a "black box" is studied, then such algorithms could potentially lead to reliable forecasts. So, for multivariate forecasts to succeed, we first need to understand the sensitivities of univariate forecasting. As the needs for accuracy on the one hand and for speed and limited computational resources on the other are in conflict, one needs to learn the optimal trade-off.

As an example, we use two simulated lightcurves, treated as if they were observations at two separate wavelengths, to predict a third one. The lightcurves have power-law noise, as is typical of astronomical lightcurves, with a power spectral density given by

$$P(f) \propto f^{-\beta}.$$

The first lightcurve, shown in green in Figure 3, is a red noise lightcurve comprising 1000 time steps. The second one is also a red noise lightcurve (i.e. $\beta = 2$), with a transient flare introduced at time step 200, shown in blue in the figure. The transient flare has an exponential shape. The third lightcurve, which we intend to predict, also has a red noise character but has a transient flare at time step (epoch) 800. As seen in Figure 3, this flare is predicted by the LSTM network, as shown in orange. For this forecast, a system of 3 LSTM layers with 30 units and a dense layer was used, with a lookback of 1 and a fixed test-to-train ratio. The methodology for multivariate forecasting used here is motivated by a demonstration of predicting Google stock prices with RNN-LSTMs published on Medium⁶.
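Power-law noise lightcurves of this kind are commonly simulated with the method of Timmer and König (1995): random Fourier amplitudes are drawn with variance proportional to the target power spectrum, $P(f) \propto f^{-\beta}$, and transformed back to the time domain. The sketch below is a minimal NumPy version of that method; the text does not state which generator was used, and the normalisation to zero mean and unit variance is our choice.

```python
import numpy as np

def powerlaw_noise(n, beta, dt=1.0, seed=None):
    """Simulate an evenly sampled lightcurve with P(f) ~ f**(-beta)
    (Timmer & Koenig 1995). beta = 1: pink (flicker) noise; beta = 2: red noise."""
    rng = np.random.default_rng(seed)
    freqs = np.fft.rfftfreq(n, d=dt)          # 0, 1/(n dt), ..., Nyquist
    spectrum = np.zeros(len(freqs), dtype=complex)
    power = freqs[1:] ** (-beta)              # skip f = 0, which only sets the mean
    scale = np.sqrt(power / 2.0)
    spectrum[1:] = scale * (rng.normal(size=len(power)) + 1j * rng.normal(size=len(power)))
    if n % 2 == 0:
        # For a real signal the Nyquist term must be real.
        spectrum[-1] = scale[-1] * rng.normal()
    flux = np.fft.irfft(spectrum, n=n)
    return (flux - flux.mean()) / flux.std()  # zero mean, unit variance
```

For example, `powerlaw_noise(1000, 2.0, seed=1)` gives a 1000-step red noise lightcurve of the length used in the multivariate example; `beta=1.0` gives pink noise.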

This example shows that by training on an outburst in an earlier segment of the lightcurve, or equivalently in a lightcurve at another wavelength, we can use LSTMs to forecast a future transient, provided it is statistically similar to the training flare and is not extremely narrow. On the other hand, neither a transient without precedent nor an extremely narrow one (with a width comparable to the typical variability timescales) will be forecast with this method. A straightforward way to test this is to swap the lightcurves (i.e. use the later flare for training) or to train on input lightcurves without a flare; naturally, neither can predict a future flare. In principle, therefore, this could discriminate between flares that arise from the original distribution of variations in the source and special, isolated episodes. LSTMs could thus potentially be used to discriminate between these two scenarios; however, this study will form part of a dedicated future paper. The example of multiwavelength forecasting presented here makes certain choices for the hyperparameters that give reasonable results. However, to make optimal choices, we first need to understand the sensitivities to these hyperparameters for different datasets. As the process of forecasting is complex, we need to conduct these sensitivity tests on the simpler univariate case. Hence, we test the sensitivity of the prediction accuracy to network architecture parameters, including the RNN hyperparameters, and to certain properties of the data or time-series. This is done with a view to identifying which choices can alter the forecast dramatically and which leave it unaffected. Generalisation of results is a big challenge in forecasting with deep learning. So while this process does not lead to a fine calibration of the forecasting RNN-LSTM, it can be a guide to building more robust and reliable frameworks.
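The multivariate set-up of the example above (two input lightcurves, one target lightcurve, a lookback of 1) can be made explicit through the way the data are arranged for an LSTM. The sketch below turns the inputs and the target into supervised samples with shape (samples, lookback, features), the (samples, timesteps, features) layout expected by LSTM layers in common libraries such as Keras. The function name, and the convention that the inputs at the preceding lookback steps predict the target at the next step, are our assumptions.

```python
import numpy as np

def make_windows(inputs, target, lookback=1):
    """Arrange multivariate time-series for one-step-ahead forecasting.

    inputs: shape (n_steps, n_features), e.g. lightcurves at other wavelengths
    target: shape (n_steps,), the lightcurve to be forecast
    Returns X of shape (n_steps - lookback, lookback, n_features) and y of shape
    (n_steps - lookback,): X[k] holds inputs at steps k .. k+lookback-1 and
    y[k] is the target at step k+lookback.
    """
    inputs = np.asarray(inputs, dtype=float)
    target = np.asarray(target, dtype=float)
    if inputs.ndim == 1:
        inputs = inputs[:, None]              # single input lightcurve
    n_samples = len(target) - lookback
    X = np.stack([inputs[k : k + lookback] for k in range(n_samples)])
    y = target[lookback:]
    return X, y
```

With `lookback=1`, each prediction sees only the values of the input lightcurves one step earlier, so any memory of the training flare has to be carried by the learned weights rather than by a long input window.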

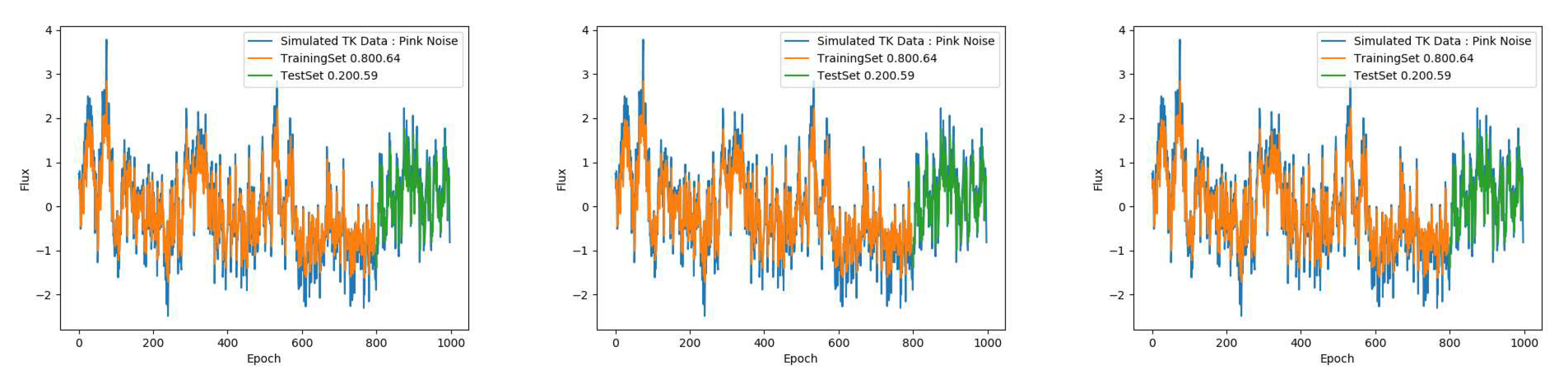

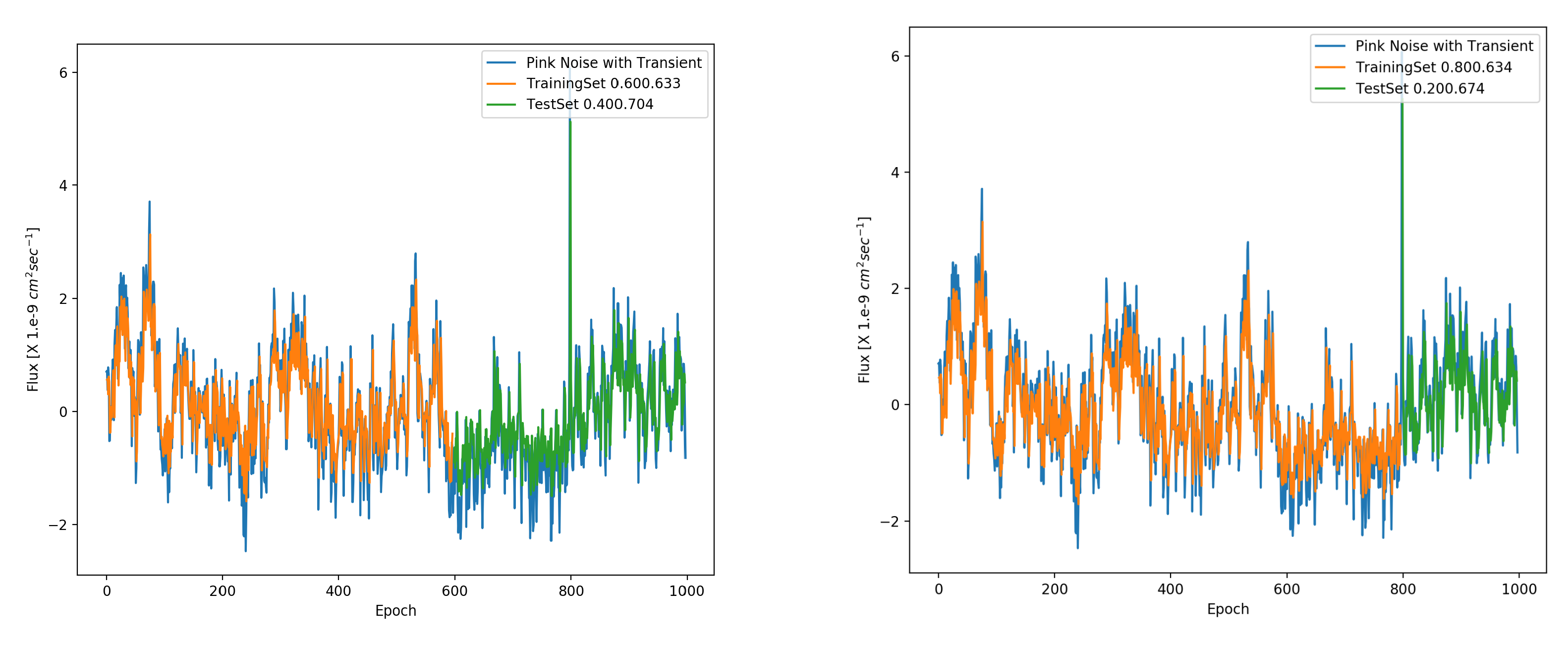

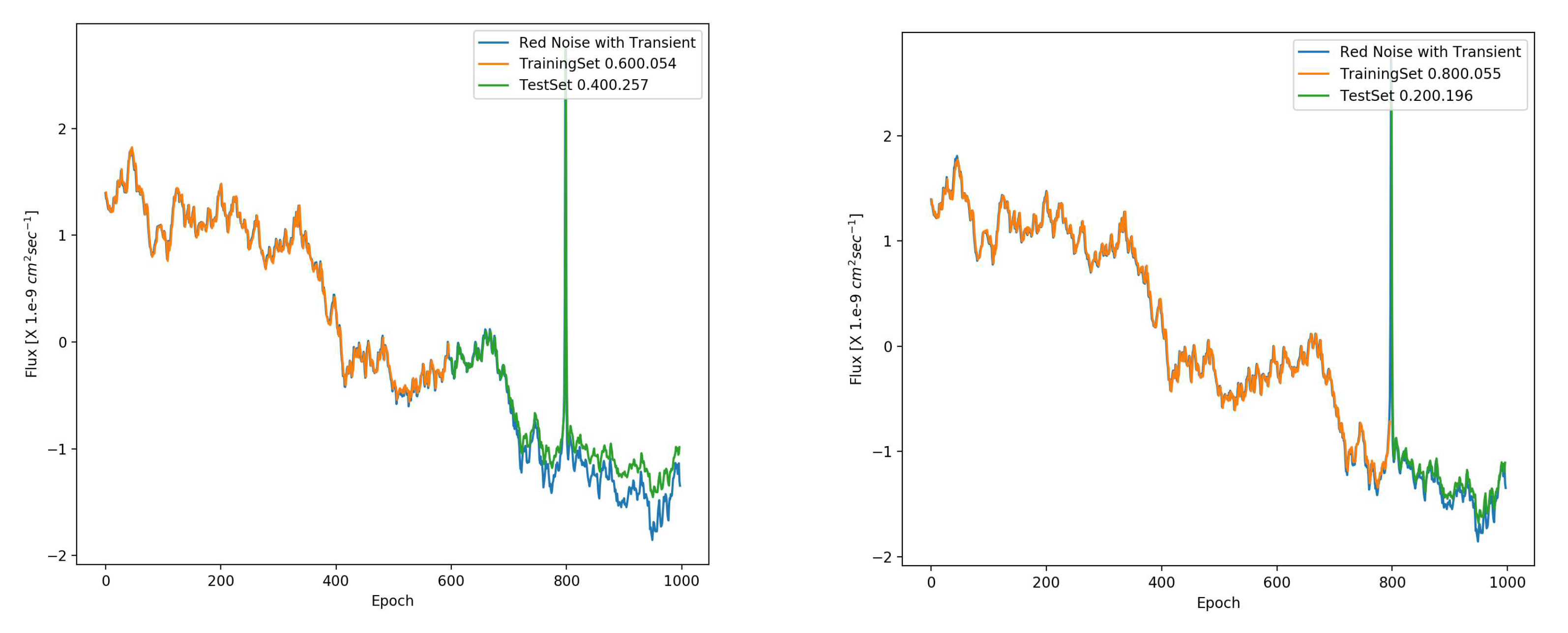

5. Detection of a Flare or Transient

Having characterised the performance of the LSTM network with pink and red noise for different parameters, we can now test the detectability of a transient as it develops. This is relevant to the scenario in which an initial "quick look analysis" suggests that a flare is developing and we prolong the observation. We again simulate pink and red noise lightcurves, but only a single realisation this time. To each we add a sharp transient characterised by an exponential function whose width is approximately a hundredth of the total length of the lightcurve. We position the flare peak, t0, along the lightcurve at a point chosen to be close to the typical ratio of train to test data. The amplitude is set to 2 times the maximum flux value of the noise, to make it a significant outburst.
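The injection of the transient can be sketched as follows. The exact exponential profile is not reproduced in this text, so we assume a two-sided exponential, $A\,e^{-|t - t_0|/\tau}$, as one plausible form; following the description above, $\tau$ is set to a hundredth of the lightcurve length and $A$ to twice the maximum of the noise. The helper name and the example value of t0 are illustrative.

```python
import numpy as np

def add_flare(flux, t0, width_fraction=0.01, amplitude_factor=2.0):
    """Add an exponential flare (assumed two-sided profile) to a lightcurve.

    flux: evenly sampled noise lightcurve
    t0: index of the flare peak
    width_fraction: e-folding time tau as a fraction of the lightcurve length
    amplitude_factor: peak amplitude in units of the maximum noise flux
    """
    flux = np.asarray(flux, dtype=float)
    t = np.arange(len(flux))
    tau = width_fraction * len(flux)
    amplitude = amplitude_factor * flux.max()
    flare = amplitude * np.exp(-np.abs(t - t0) / tau)
    return flux + flare, flare
```

For instance, with a 1000-step lightcurve and a hypothetical 70/30 train/test split, calling `add_flare(flux, 700)` places the peak close to the start of the test segment, mirroring the choice of t0 described above.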

The results for transient detection are shown in Figure 14 and Figure 15 for pink and red noise, respectively. We find that for pink noise, the prediction of the same transient is far superior to that for the red noise case. This is what we would expect from the simulation tests in the previous sections. The LSTMs perform rather poorly in the presence of red noise and give an imprecise prediction, not only for the transient but for the entire forecast. In absolute terms, one would expect the stateless LSTMs to fail at predicting a sharp, significant transient in either scenario, due to the lack of long-term correlation. However, given the finite timescale of the flare and the normalisation, we do find that the LSTMs have decent predictive power, as the chosen batch size captures correlations on these timescales. The closer the train/test split is to the outburst, the better the performance, giving a very good test-to-train loss ratio for pink noise; this deteriorates for a split further from the outburst.

6. Discussion and Conclusions

Sensitivity tests are critical for complex algorithms, to test the stability of predictions and the tendency of certain types of datasets to yield certain types of results. Ever-increasing volumes of time-domain data in astronomy at different wavelengths, with CTA in gamma rays (e.g., [1,2,3]), SKA in radio (e.g., [4,5,6]) and LSST in optical (e.g., [7]), have ushered in a new, data-driven era in observational astronomy. This includes a variety of variable objects, ranging from Galactic compact binaries and extragalactic sources such as AGNs and GRBs to the mysterious Fast Radio Bursts (FRBs). In this era, multiwavelength campaigns will have to scale with the volumes of data, with a high degree of automated and optimised or "intelligent" decision making, whether for the classification of transients or for follow-up observations. This will inevitably increase the importance of the forecasting element. Hence exhaustive efforts are needed to test the forecasting algorithms that are likely to be used. In this context, RNN-LSTM models are likely to be a popular choice. Astronomical lightcurves, i.e. time-series generated by an astrophysical system, are a priori expected to be less complex than those in other domains. However, the combination of interesting quasi-periodic signals and outbursts with stochastic astrophysical signals and background noise makes astronomical forecasting a non-trivial challenge. In several multiwavelength and, increasingly, multi-messenger observations, an episode of increased flux measured in one waveband by one telescope or observatory triggers other facilities in different wavebands to observe the same source position. This comes essentially from the idea that physical processes leave imprints on different parts of the electromagnetic spectrum with time lags. Coordinated or synchronous observations aim, amongst other things, at identifying and quantifying the driving physical processes in terms of the observed lags. To facilitate these coordinated observations, precise, fast and reliable forecasting is key, and here machine learning algorithms such as RNN-LSTMs can be very useful. It is therefore worthwhile to test them, using simulated lightcurves, against the types of time-series that may be encountered.

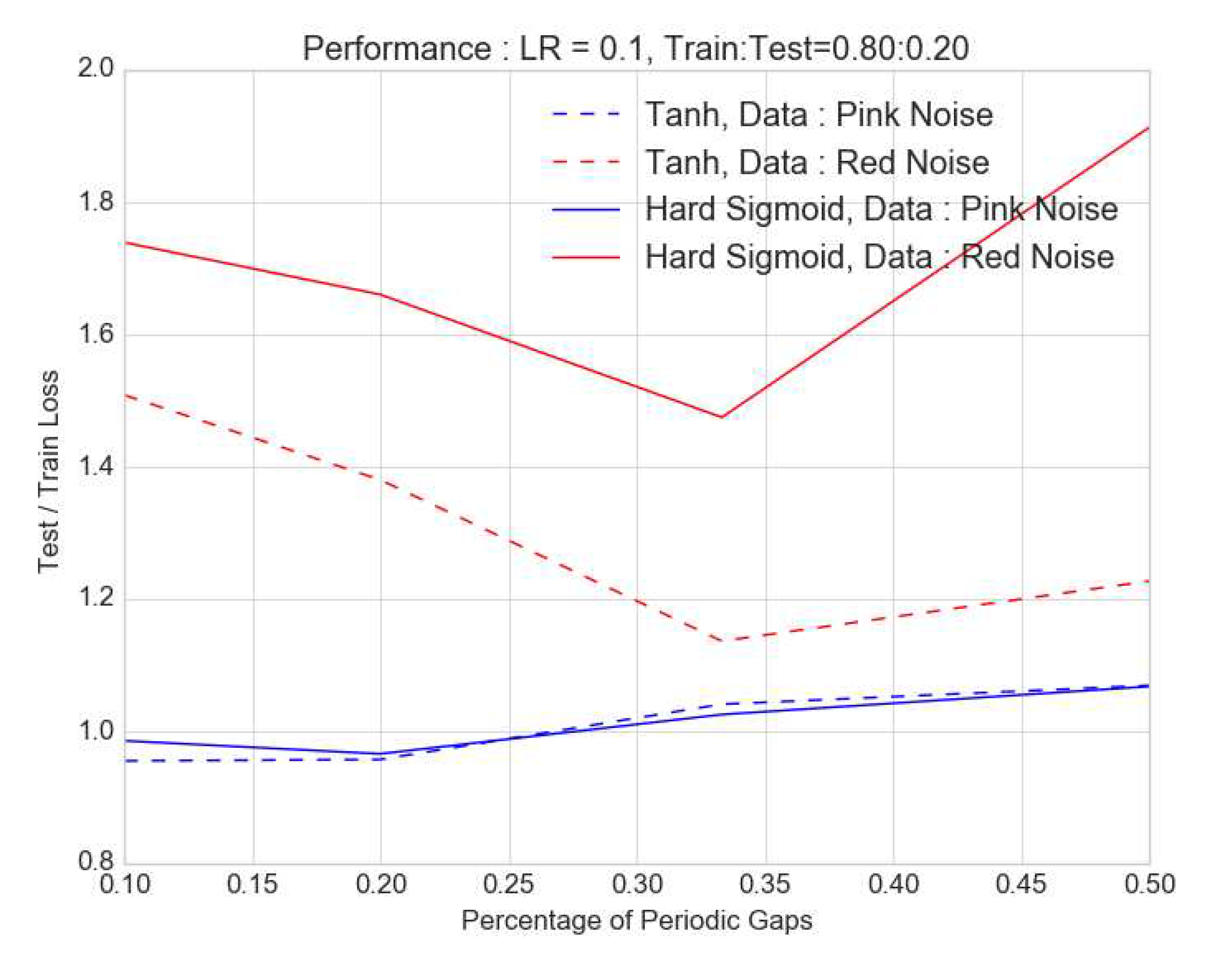

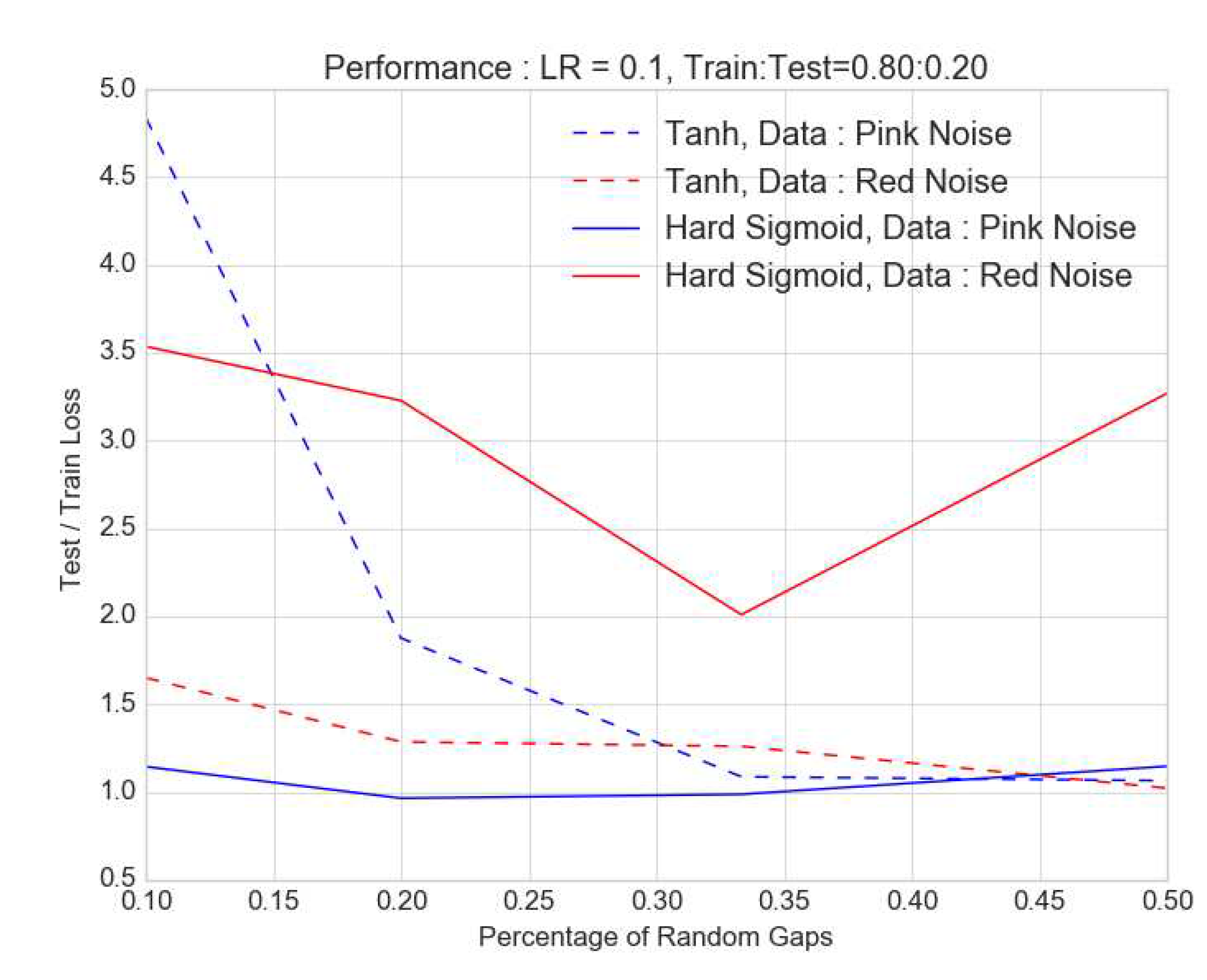

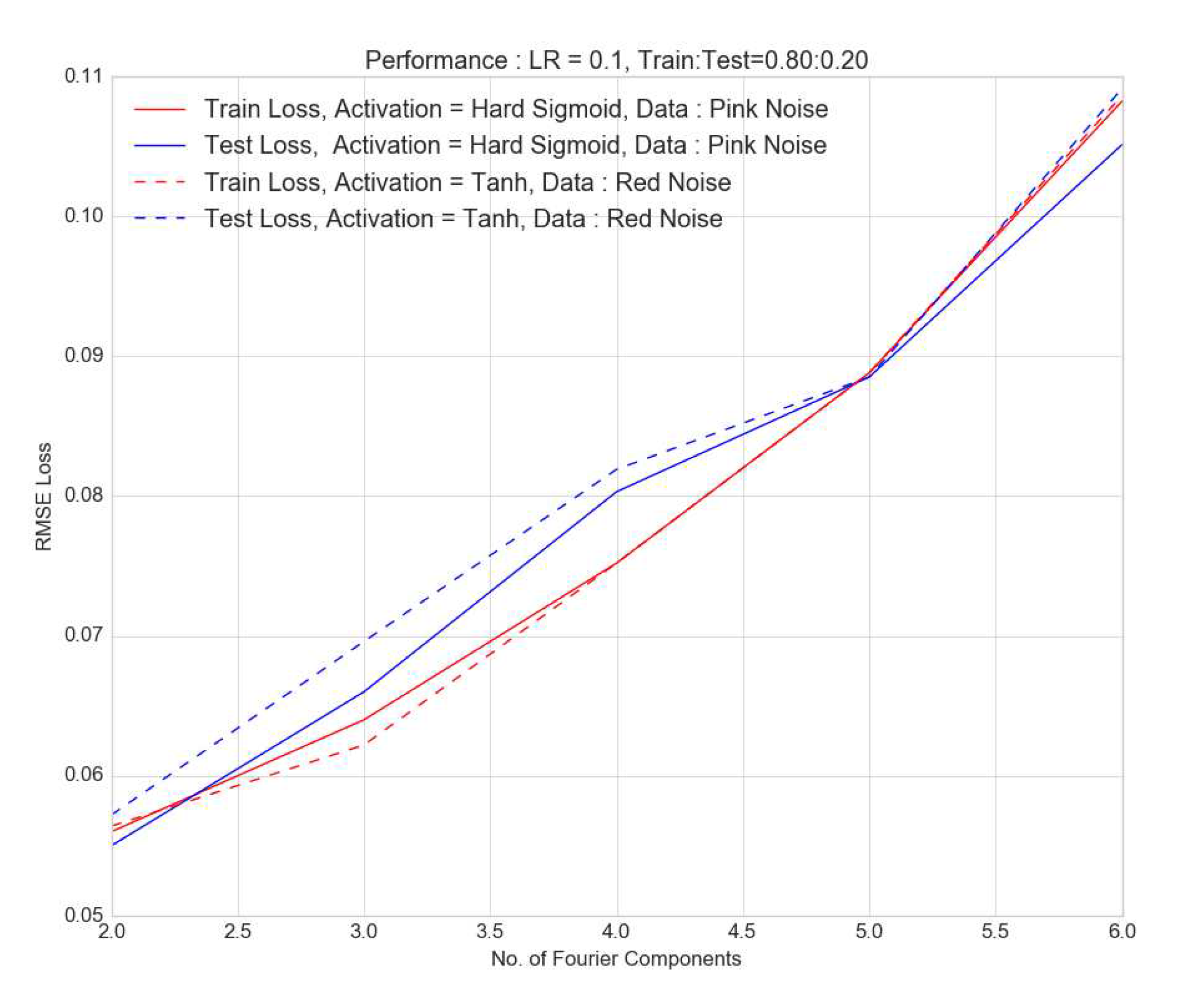

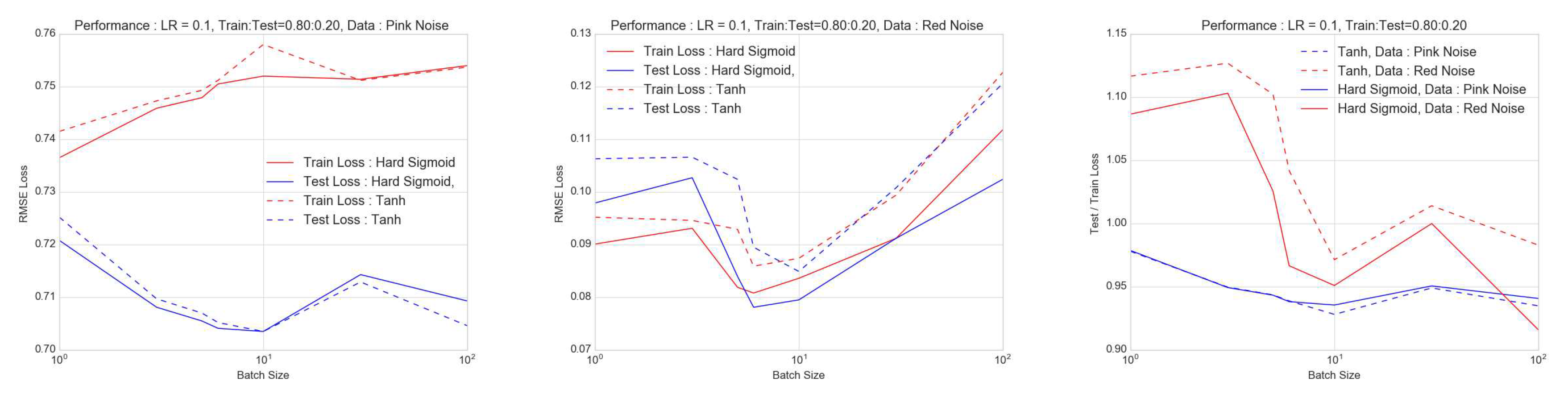

In testing with simulated lightcurves, we perform two types of tests. The first is sensitivity to properties or characteristics of the data. The second is sensitivity to elements of the forecasting machinery for different datasets. Under characteristics of the data, we look at incompleteness, i.e. gaps in lightcurves, which we routinely encounter in astronomical observations. We simulate purely periodic and random gaps and vary the percentage of missing data points, computing the training and test losses as measures of performance. We find that red noise lightcurves are generally more affected by the presence of both periodic and random gaps than pink noise lightcurves. Another general outcome seems to be the presence of a sweet spot for the fraction of gaps at around 20%, where the test-to-train loss ratio tends to be closest to unity and thereby optimal. One possible implication is that the gaps could, to an extent, reduce the complexity and thereby improve the performance of a particular forecasting architecture. An alternative explanation is that as we move from pink to red noise, i.e. from a power spectral density index of 1.0 to 2.0, the longer timescales become more significant than the shorter ones on which the gaps are introduced. This leads to an initial improvement with increasing gaps, until the extent of the gaps is large enough to start hampering predictability. These two effects are degenerate. A stronger conclusion would require a comparison with other machine learning algorithms, or indeed other forecasting methods, which we leave for a future paper. The complexity measured in terms of the number of Fourier components has a more monotonic effect: as the number of Fourier components increases, the performance tends to deteriorate for both pink and red noise.
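The data manipulations behind these tests can be sketched in a few lines of NumPy. Below, complexity is controlled by the number of sinusoidal (Fourier) components, periodic gaps remove a contiguous block from every cycle of fixed length, and random gaps remove randomly chosen epochs; in both cases the missing data fraction (MDF) is set directly. A linear-interpolation imputation is included as one simple example of the imputation route discussed in the conclusions. The period and amplitude ranges, the one-block-per-cycle gap scheme, the interpolation choice and all function names are illustrative assumptions, not necessarily the recipe behind the figures.

```python
import numpy as np

def fourier_signal(n, n_components, seed=None):
    """Sum of n_components sinusoids with random periods, phases and amplitudes.
    More components give a more complex time-series."""
    rng = np.random.default_rng(seed)
    t = np.arange(n)
    periods = rng.uniform(10, n / 2, size=n_components)
    phases = rng.uniform(0, 2 * np.pi, size=n_components)
    amps = rng.uniform(0.5, 1.5, size=n_components)
    signal = np.zeros(n)
    for a, p, ph in zip(amps, periods, phases):
        signal += a * np.sin(2 * np.pi * t / p + ph)
    return signal

def random_gap_mask(n, mdf, seed=None):
    """Boolean mask (True = observed) with a fraction mdf of epochs removed at random."""
    rng = np.random.default_rng(seed)
    mask = np.ones(n, dtype=bool)
    missing = rng.choice(n, size=int(round(mdf * n)), replace=False)
    mask[missing] = False
    return mask

def periodic_gap_mask(n, mdf, cycle=100):
    """Boolean mask removing the last mdf * cycle epochs of every cycle,
    mimicking e.g. seasonal visibility gaps."""
    phase = np.arange(n) % cycle
    return phase < cycle - int(round(mdf * cycle))

def impute_linear(flux, observed):
    """Fill unobserved epochs by linear interpolation between observed neighbours.
    np.interp holds the edge values constant beyond the first/last observation."""
    flux = np.asarray(flux, dtype=float)
    observed = np.asarray(observed, dtype=bool)
    t = np.arange(len(flux))
    filled = flux.copy()
    filled[~observed] = np.interp(t[~observed], t[observed], flux[observed])
    return filled
```

Linear imputation bridges each gap with a straight line that carries no variability, so it is only sensible for a moderate MDF; for large gaps it would itself distort the temporal structure the LSTM is meant to learn.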

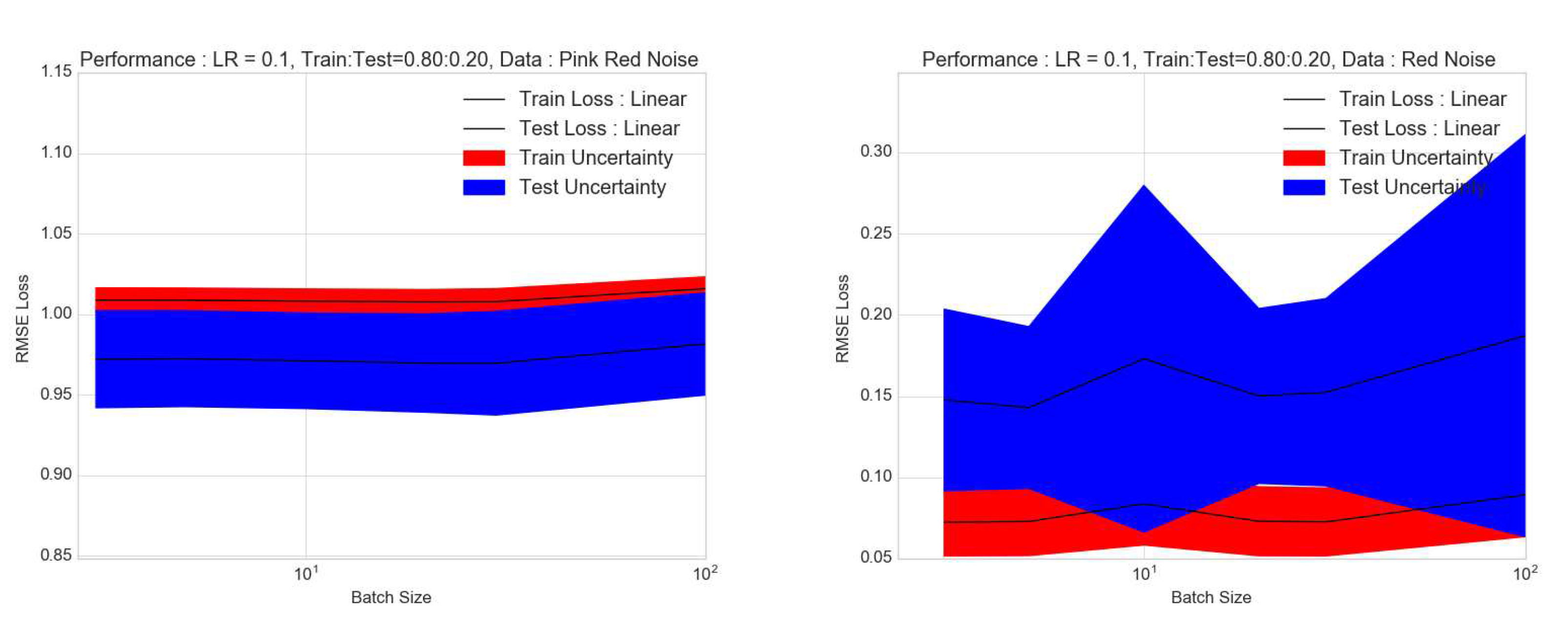

Among the elements of the forecasting machinery or architecture, the most important appears to be the batch size. Once again, there seems to be a sweet spot, at a batch size of around 10, for performance in terms of both the absolute values of the losses and the ratio of test to train losses. The performance for a learning rate of 0.1 and the chosen train-to-test ratio at first glance seems to be slightly better for red noise, with a hint of overfitting for pink noise, as the test-to-train loss ratio drops below 1.0 in some cases. This is shown in Figure 12. However, this difference is not significant. More significant is that this ratio, and hence the performance, is more stable for pink noise. This is confirmed by the uncertainty calculation in Figure 13, which shows a larger uncertainty for red noise. A possible, even likely, reason is that in the stateless mode in which we operate the LSTMs, correlations on timescales longer than a batch are not accounted for. With greater power at longer timescales in red noise than in pink noise, the LSTMs should perform worse on red noise. This would also imply that if the stateful mode is used, the performance on the red noise lightcurves should improve more than that on the pink noise lightcurves. Once again, a comparison with other forecasting models is needed; this is beyond the scope of this paper and will be addressed in a future one. The main conclusions from this study are summarised below:

Fast, precise and automated multiwavelength transient forecasting will become increasingly important for planning observational campaigns in the era of big astronomical data, with multiple facilities such as SKA, LSST and CTA. This is a natural application of multivariate forecasting with RNN-LSTMs. Hence, both methodological and astrophysical studies of transients with deep learning methods are of great relevance, as others have also noted (e.g., [11,12]).

Given a precedent for a transient or flare in historical or current lightcurves, we can statistically predict the occurrence of a flare in the future, provided it is not a special episode unrelated to the parent distribution or process driving the variability. This also suggests that RNN-LSTMs could potentially be used to discriminate between flares from different distributions. This would require dedicated investigations, which will be the subject of future papers.

Deep learning methods in general are prone to overfitting in the presence of noise; this is naturally a concern extending to applications beyond astrophysics (e.g., financial predictions [40,41] and meteorological forecasts [42]). This makes transient detection especially difficult. In astrophysics, flares are transients that can emerge from the underlying astrophysical noise. Furthermore, the quality of the forecast depends upon the properties of this noise. For instance, we find that noise with steeper or "redder" power spectra makes transient detection less precise and more challenging. In other words, greater power and correlations on longer timescales make it harder to forecast flares well. This is consistent with other challenges that accompany sources with steep power spectral densities. For instance, one would need to investigate the direct effects on these predictions of the underlying distribution(s) and of the non-stationary behaviour of variations in sources with power spectral densities steeper than an index of unity, i.e. steeper than flicker noise [39]. The response of this type of architecture to different types of noise, especially with respect to overfitting, deserves its own study and will be the subject of future investigations.

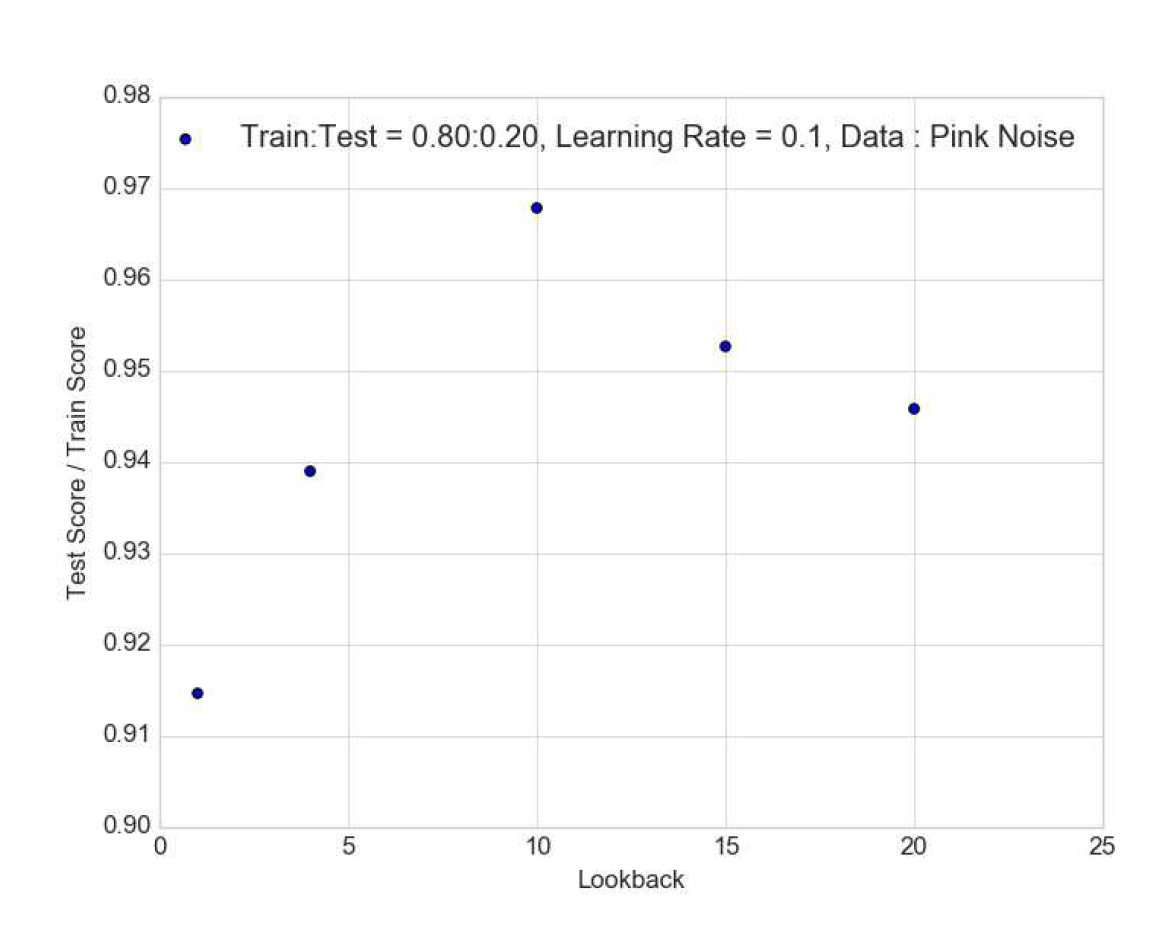

The sensitivity of univariate forecasting, in terms of the losses or scores, depends upon the complexity of the data as well as the structure of the neural network, particularly the batch size. The sensitivity to data characteristics varies in different ways. The losses increase monotonically with complexity, measured in terms of the number of Fourier components. The sensitivity to incompleteness is less straightforward and depends on a number of aspects, such as the underlying distribution (PDF), the LSTM architecture and parameters (such as the activation) and the noise properties (PSD). In general, however, the sensitivity is greater for redder noise. There are multiple approaches to tackling missing data; certain types of imputation can help for a moderate missing data fraction (MDF) [43]. Alternatively, one could encode the effects in terms of additional uncertainty (statistical) and bias (systematic) terms, as we do for batch size. The dependence on the "structural parameters" of the network is in general neither straightforward nor monotonic. This makes it challenging to arrive at an optimal network in the most general case. However, the current study shows that the batch size is amongst the most crucial "structural parameters". For long-term monitoring, a batch size of 10-30 gives good results. For other parts of the architecture, a possible approach is pre-training LSTM models with realistic simulations (e.g., [38,39]). Yet another alternative is to use historical data for this pre-training, which is feasible because forecasting systems can be tied to individual facilities at specific wavelengths.

The results show that, while RNN-LSTMs are in general powerful for forecasting time-series, there is enough complexity that careful attention must be paid to the selection of architecture parameters for different types of noise. This is vital for noisy time-series forecasting. Generative methods (or time-series simulations) can provide an excellent way of testing sensitivities to facilitate such selection, and possibly pre-selection, for forecasting algorithms to be deployed in actual observational astronomy campaigns. Clearly, the power spectral density and probability distribution function of lightcurves from past long-term campaigns are an incredibly useful guide in constructing the forecasting machinery. Multiwavelength lightcurves will need multivariate forecasting; a detailed study of this will be the topic of a future paper.
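The recurring argument that redder noise places more of its power on long timescales, beyond the correlations a stateless LSTM can exploit within a batch, can be checked with a short calculation. For a power-law spectrum $P(f) \propto f^{-\beta}$ sampled at the Fourier frequencies of an N-point lightcurve, the sketch below computes the fraction of the total power at frequencies below 1/L, i.e. on timescales longer than L steps. Treating L as a proxy for the batch-limited correlation length follows the reasoning in the text; N = 1000 and L = 10 are illustrative values.

```python
import numpy as np

def long_timescale_power_fraction(n, beta, timescale):
    """Fraction of the variance of an n-point power-law (P ~ f**-beta) lightcurve
    carried by Fourier frequencies below 1/timescale (in units of the sampling step)."""
    freqs = np.fft.rfftfreq(n)[1:]            # positive Fourier frequencies
    power = freqs ** (-beta)
    return float(power[freqs < 1.0 / timescale].sum() / power.sum())

for beta, name in [(1.0, "pink"), (2.0, "red")]:
    frac = long_timescale_power_fraction(1000, beta, timescale=10)
    print(f"{name} noise (beta={beta}): {frac:.2f} of the power on timescales > 10 steps")
```

For these values, roughly three quarters of the pink noise power but almost all of the red noise power lies on timescales longer than 10 steps, consistent with red noise being harder for a model that only sees short-range correlations.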

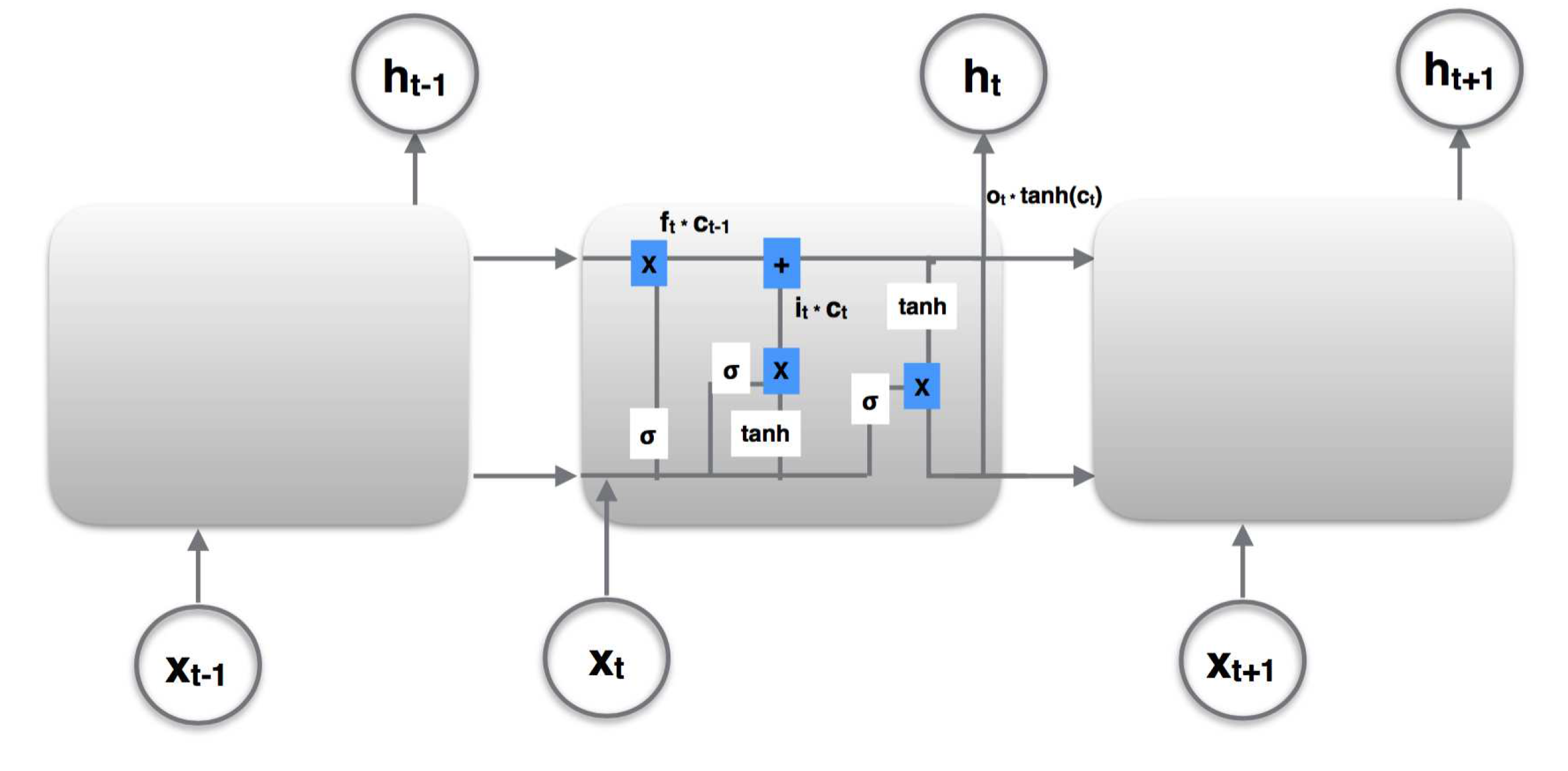

Figure 1.

The figure shows the basic structure of an RNN-LSTM block within a segment of the network, with the time axis unrolled. The output from the previous block is combined with the input at the current stage to produce the output at this stage. The "forget gate" determines whether the previous internal state is passed through or forgotten. The input gate determines the internal state at the current stage, and the output gate determines how much of this current state is passed on to the output.


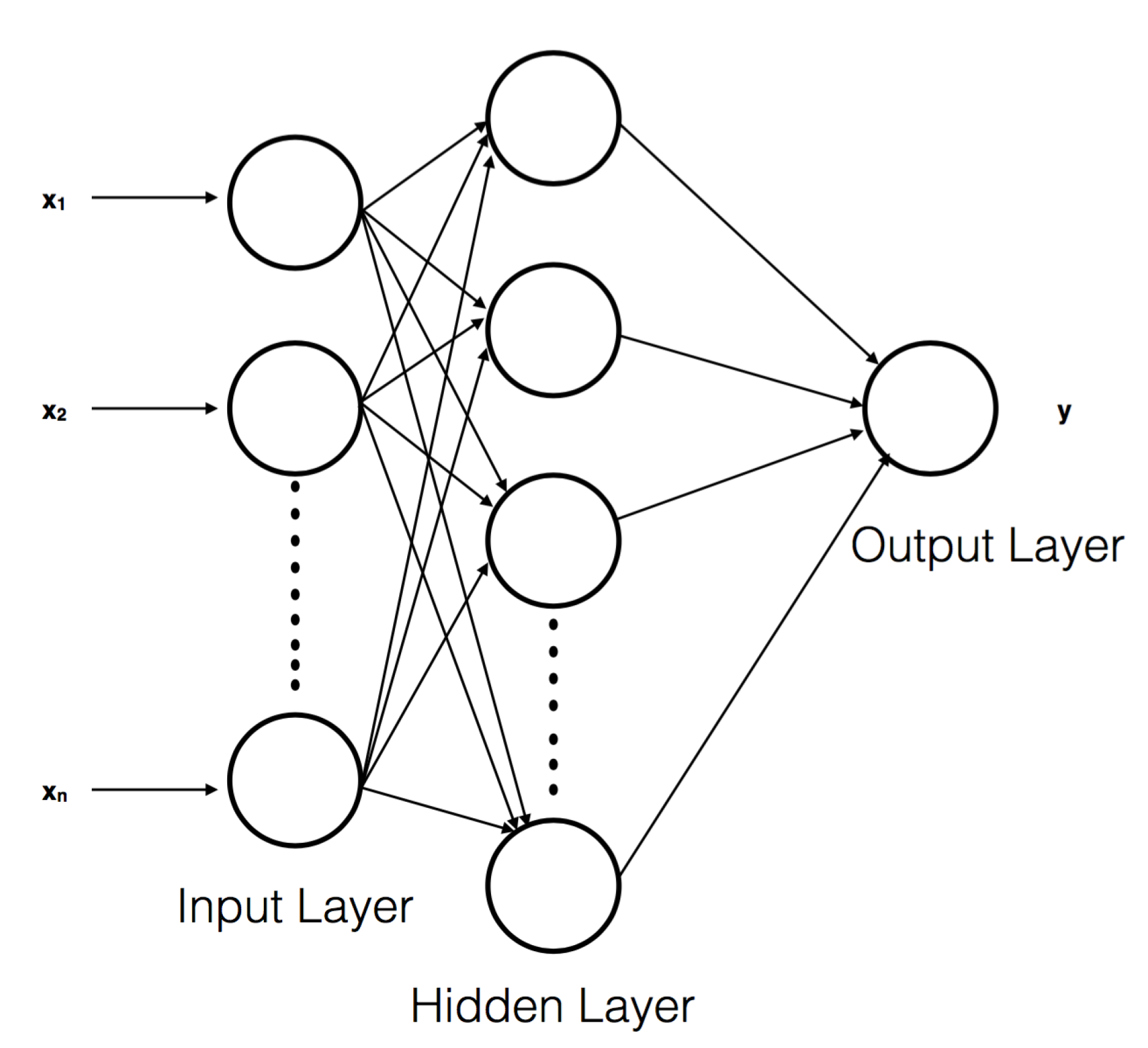

Figure 2.

The figure shows the basic structure of an RNN. It is essentially composed of input and output layers, comprising blocks that represent the input and output data. In between, there are one or more hidden layers consisting of blocks that perform the actual "fitting of the model".


Figure 3.

The figure shows an example of multivariate forecasting with an RNN-LSTM. Here, two input lightcurves, one purely red noise (green) and the other red noise plus a transient (blue), are used to predict a flare in a third lightcurve (orange). These lightcurves are artificially generated and scaled in amplitude for presentation purposes. This illustrates that if the training data contain flare-like features similar to the ones we wish to forecast, such flares can be predicted in test data or future lightcurves.

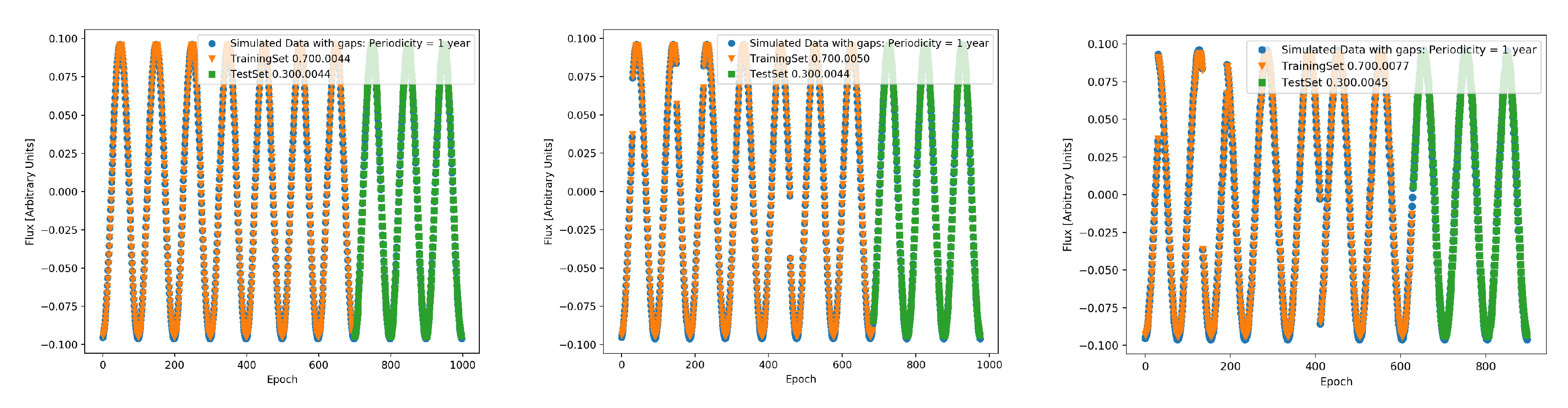

Figure 4.

Top: The figure shows the prediction of a strictly periodic signal, with a period of one year, by an RNN with LSTM. This is a trivial forecasting case, since the time series is exactly known. Center and Bottom: The signal is again the annual periodicity, but with missing data. In each case, the ratio of the test to train dataset size is given next to the score. As shown, this affects the prediction only slightly.
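
The setup behind these panels can be outlined as follows: a sinusoid with a one-year period sampled daily, a fraction of the samples removed in regularly spaced gaps, and a chronological train/test split. The daily sampling, the gap layout (one block removed at the start of every fixed-length cycle) and the helper names are assumptions for illustration; the caption does not specify them.

```python
import numpy as np


def annual_signal(n_days):
    """Strictly periodic signal with a period of one year (365.25 days)."""
    t = np.arange(n_days, dtype=float)
    return t, np.sin(2 * np.pi * t / 365.25)


def periodic_gap_mask(n, gap_fraction, cycle=100):
    """True where data are kept; a block of gap_fraction * cycle samples is
    removed at the start of every `cycle` samples (assumed gap layout)."""
    gap_len = int(round(gap_fraction * cycle))
    return (np.arange(n) % cycle) >= gap_len


def chronological_split(x, y, train_fraction):
    """Split without shuffling, so the test set lies strictly after the training set."""
    n_train = int(len(x) * train_fraction)
    return (x[:n_train], y[:n_train]), (x[n_train:], y[n_train:])


t, y = annual_signal(3 * 365)
keep = periodic_gap_mask(len(t), gap_fraction=0.2)
t_obs, y_obs = t[keep], y[keep]
(train_t, train_y), (test_t, test_y) = chronological_split(t_obs, y_obs, 0.67)
```

Shuffling before splitting would leak future information into the training set, which is why the split here keeps the time order.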

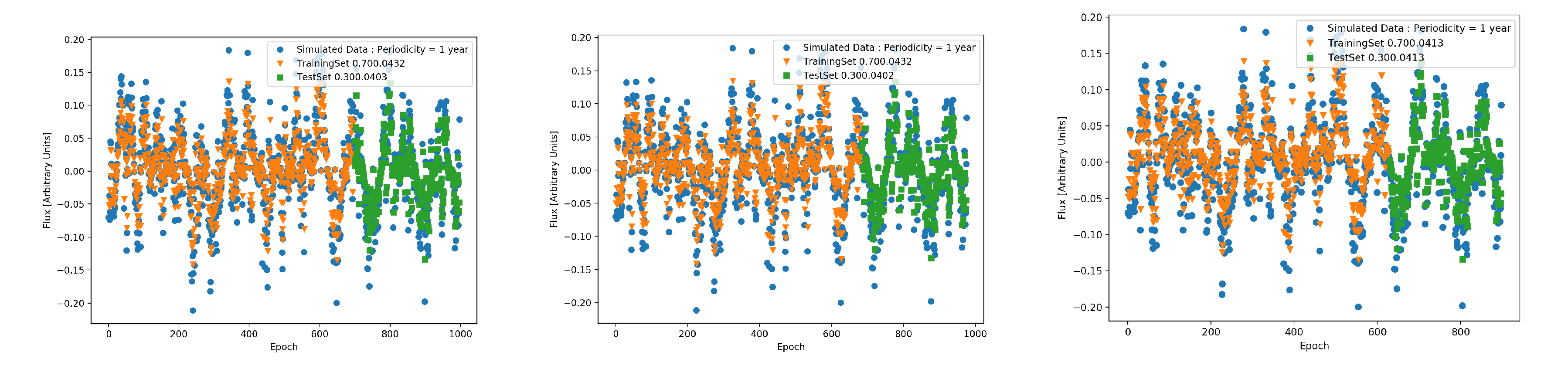

Figure 5.

Top: The figure shows the prediction, by an RNN with LSTM, of the annual periodic signal modulating a stochastic pink-noise signal. While not as trivial as the strictly periodic case, the prediction is still strong. Center: The same signal with missing data () is clearly harder to predict accurately. Bottom: With of missing data, the prediction appears exactly precise, yet this is misleading.
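
One common way to synthesise such stochastic signals, and one consistent with the reference to the number of Fourier components in Figure 8, is to sum sinusoids at the Fourier frequencies with power-law amplitudes A(f) ∝ f^(-β/2) and random phases: β = 1 gives pink noise and β = 2 red noise. The sketch below assumes this recipe and a multiplicative modulation by the annual signal; the construction used in the paper may differ, and `power_law_noise` and `fitted_slope` are hypothetical helpers.

```python
import numpy as np


def power_law_noise(n, n_components, beta, rng):
    """Sum of sinusoids at Fourier frequencies k/n (k = 1..n_components) with
    amplitudes f**(-beta/2) and random phases; beta=1 pink, beta=2 red.
    Assumes n_components < n/2 so every component sits on its own FFT bin."""
    t = np.arange(n)
    f = np.arange(1, n_components + 1) / n        # cycles per sample
    amp = f ** (-beta / 2.0)
    phase = rng.uniform(0.0, 2.0 * np.pi, size=n_components)
    x = (amp[:, None] * np.cos(2.0 * np.pi * f[:, None] * t + phase[:, None])).sum(axis=0)
    return x / x.std()                            # normalise to unit variance


def fitted_slope(x, n_components):
    """Log-log slope of the periodogram over the injected Fourier bins."""
    power = np.abs(np.fft.rfft(x)) ** 2
    k = np.arange(1, n_components + 1)
    return np.polyfit(np.log(k), np.log(power[k]), 1)[0]


rng = np.random.default_rng(42)
n_days, n_components = 4 * 365, 200
pink = power_law_noise(n_days, n_components, beta=1.0, rng=rng)
red = power_law_noise(n_days, n_components, beta=2.0, rng=rng)

# Annual periodicity modulating the pink noise (multiplicative form assumed).
t = np.arange(n_days)
modulated = (1.0 + 0.5 * np.sin(2.0 * np.pi * t / 365.25)) * pink
```

Because each component falls exactly on an FFT bin, the periodogram recovers the spectral index: `fitted_slope` returns -1 for the pink series and -2 for the red one, up to rounding.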

Figure 6.

The ratio of the test to train loss is shown as a function of the percentage of periodic gaps in the data for pink (blue) and red (red) noise. This is shown for hard sigmoid (solid) and tanh (dashed) activations, the latter being the optimal scenario. For gap fractions exceeding , the accuracy gets worse as the proportion of missing data increases. This deterioration is less pronounced for pink noise.
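
The quantity on the vertical axis is straightforward to compute once a model has produced predictions for both sets. A sketch assuming RMSE as the loss (the loss named in Figure 10); a ratio near 1 means the model performs about as well on unseen data as on the training data, while a ratio well above 1 signals degraded test performance. `rmse` and `loss_ratio` are illustrative names.

```python
import numpy as np


def rmse(y_true, y_pred):
    """Root-mean-square error between observations and predictions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def loss_ratio(train_true, train_pred, test_true, test_pred):
    """Test-to-train loss ratio; values near 1 indicate comparable performance."""
    return rmse(test_true, test_pred) / rmse(train_true, train_pred)


# Toy numbers: training residuals of +/-0.1 and test residuals of +/-0.2.
train_true = np.zeros(4)
train_pred = np.array([0.1, -0.1, 0.1, -0.1])
test_true = np.zeros(4)
test_pred = np.array([0.2, -0.2, 0.2, -0.2])
ratio = loss_ratio(train_true, train_pred, test_true, test_pred)
```

In this toy case the test residuals are twice the training residuals, so the ratio is 2.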

Figure 7.

As in Figure 6, the ratio of the test to train loss is shown as a function of the percentage of random gaps in the data for pink (blue) and red (red) noise; once again the activation functions are hard sigmoid (solid) and tanh (dashed). For gap fractions exceeding , the accuracy gets worse as the proportion of missing data increases. This deterioration is less pronounced for pink noise.
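
In contrast to periodic gaps, random gaps can be simulated by dropping a chosen fraction of samples uniformly at random. A minimal sketch, assuming that single samples (rather than contiguous blocks) go missing; `random_gap_mask` is a hypothetical helper.

```python
import numpy as np


def random_gap_mask(n, gap_fraction, rng):
    """Boolean mask (True = kept) with round(gap_fraction * n) samples
    removed at randomly chosen positions."""
    n_missing = int(round(gap_fraction * n))
    missing = rng.choice(n, size=n_missing, replace=False)
    mask = np.ones(n, dtype=bool)
    mask[missing] = False
    return mask


rng = np.random.default_rng(7)
t = np.arange(1000, dtype=float)
y = np.sin(2 * np.pi * t / 365.25)
mask = random_gap_mask(len(t), gap_fraction=0.3, rng=rng)
t_obs, y_obs = t[mask], y[mask]   # irregularly sampled series fed to the model
```

Sampling without replacement guarantees that the realised gap fraction equals the requested one, which keeps the horizontal axis of this figure exact.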

Figure 8.

The test (blue) and train (red) losses are shown as a function of the number of Fourier components in the pink noise signal; the activation functions are hard sigmoid (solid) and tanh (dashed). As the complexity, i.e. the number of Fourier components, increases, both the train and test losses increase, since predictions become harder to make.

Figure 9.

The ratio of test to train loss as a function of lookback is shown for the pink noise case. The train and test losses are closest, with the ratio nearest to 1, for a lookback of 10.

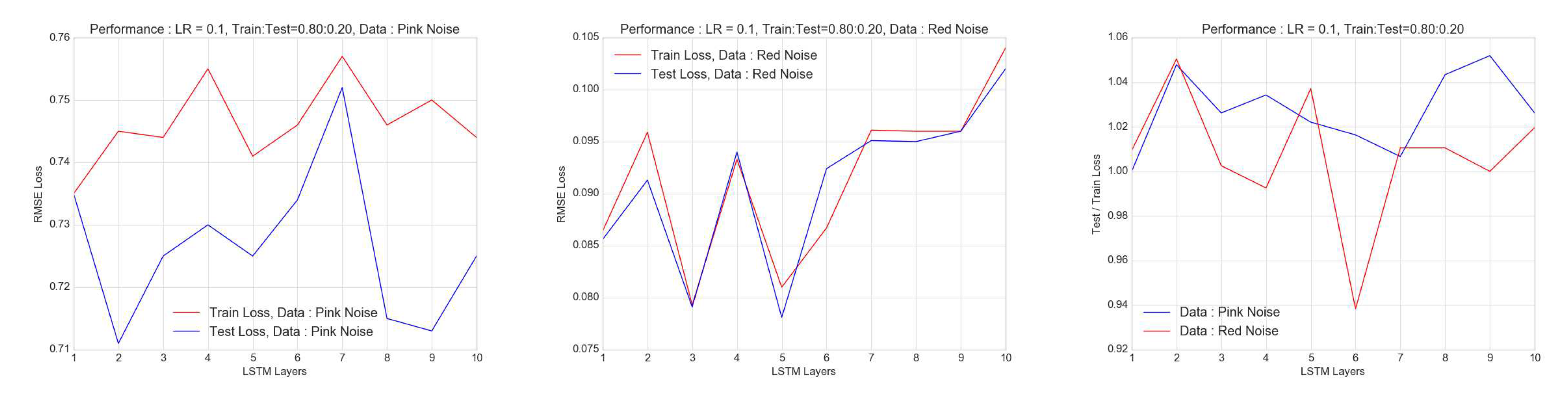

Figure 10.

The dependence of the RMSE loss of the pink (left) and red (right) noise processes on the number of layers is shown for a learning rate of 0.1 and a train to test ratio of . While there is some sensitivity to the number of identical RNN-LSTM layers, there is neither a trend nor a "sweet spot" for this number. This suggests that adding layers does not improve performance dramatically. In general, the performance is better or more consistent for pink noise.

Figure 11.

The dependence of the prediction accuracy of the pink noise process on the learning rate is shown for a batch size of 1 and a train to test ratio of . The learning rate does not significantly affect the test and train scores, only the rate of convergence.
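
A toy illustration of this behaviour (not the paper's experiment): plain gradient descent on a one-dimensional quadratic loss reaches the same minimum for different learning rates, but the number of steps required differs. The loss function, starting point and tolerance are arbitrary assumptions.

```python
def steps_to_converge(lr, w0=0.0, target=3.0, tol=1e-6, max_steps=100_000):
    """Gradient descent on L(w) = (w - target)**2; return (steps taken, final w)."""
    w = w0
    for step in range(1, max_steps + 1):
        grad = 2.0 * (w - target)   # dL/dw
        w -= lr * grad
        if abs(w - target) < tol:
            return step, w
    return max_steps, w


results = {lr: steps_to_converge(lr) for lr in (0.001, 0.01, 0.1)}
```

Each step shrinks the error by a factor of (1 - 2·lr), so the final value is the same for all three learning rates while the step count falls as lr grows. For lr at or above 1 this toy problem no longer converges, so the insensitivity holds only within a stable range of learning rates.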

Figure 12.

The dependence of the training and test losses of the pink and red noise processes on the batch size is shown for a train to test ratio of .
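
Batch size sets how many samples contribute to each gradient update, and therefore how many updates occur per epoch. A minimal NumPy sketch of mini-batch stochastic gradient descent on a toy linear model with mean-squared-error loss; the model, data and hyperparameters are illustrative assumptions and not the RNN-LSTM setup of the paper.

```python
import numpy as np


def minibatch_sgd(X, y, batch_size, lr=0.05, epochs=200, seed=0):
    """Fit y ~ X @ w by mini-batch SGD on the mean squared error.
    Returns the fitted weights and the number of parameter updates made."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    w = np.zeros(d)
    updates = 0
    for _ in range(epochs):
        order = rng.permutation(n)              # reshuffle every epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            residual = X[idx] @ w - y[idx]
            grad = 2.0 * X[idx].T @ residual / len(idx)
            w -= lr * grad
            updates += 1
    return w, updates


rng = np.random.default_rng(1)
X = rng.normal(size=(120, 2))
w_true = np.array([1.5, -0.5])
y = X @ w_true                                  # noiseless toy targets
fits = {bs: minibatch_sgd(X, y, bs) for bs in (1, 30, 120)}
```

With 120 samples, a batch size of 1 gives 120 noisy updates per epoch, while a batch size of 120 gives a single full-batch update; the trade-off between update count and gradient noise is what a batch-size scan probes.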

Figure 13.

The uncertainty on the effect of batch size is calculated by repeating the exercise in Figure 12 over 100 simulations of pink and red noise. The uncertainty on the test loss is shown in blue, whereas that on the training loss is shown in red. This shows an optimal batch size of , with a hint of overfitting for pink noise.
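
The uncertainty bands come from repeating the same experiment on independently simulated noise realisations. A sketch of the bookkeeping, assuming the per-simulation losses are collected into an array of shape (n_simulations, n_batch_sizes) and summarised by the mean and sample standard deviation; the batch sizes and loss values below are synthetic placeholders, not results from the paper.

```python
import numpy as np


def summarise(losses, batch_sizes):
    """Mean, standard deviation and standard error of the loss per batch size.

    losses : array (n_simulations, n_batch_sizes), one row per simulated lightcurve
    """
    losses = np.asarray(losses, dtype=float)
    n_sim = losses.shape[0]
    mean = losses.mean(axis=0)
    std = losses.std(axis=0, ddof=1)            # sample standard deviation
    sem = std / np.sqrt(n_sim)                  # standard error of the mean
    return {bs: (m, s, e) for bs, m, s, e in zip(batch_sizes, mean, std, sem)}


def best_batch_size(summary):
    """Batch size with the lowest mean loss."""
    return min(summary, key=lambda bs: summary[bs][0])


# Placeholder losses for 3 simulations x 3 batch sizes (illustrative numbers only).
batch_sizes = [1, 10, 100]
test_losses = np.array([[0.30, 0.20, 0.25],
                        [0.32, 0.22, 0.27],
                        [0.34, 0.24, 0.29]])
summary = summarise(test_losses, batch_sizes)
```

Running the same summary separately on the training losses gives the second set of bands; an optimum is only meaningful if its mean loss is lower than its neighbours by more than the spread across simulations.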

Figure 14.

The figure shows the detection of a transient in the presence of pink noise. On the left the train to test split is , and on the right it is . The LSTM with batch size 30 performs well, with its predictions improving closer to the exponentially shaped transient.
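
The transient is described only as exponentially shaped. A sketch assuming an exponential rise to the peak followed by an exponential decay, added to a noise series; the peak time, amplitude and timescales are arbitrary illustrative choices, `exponential_flare` is a hypothetical helper, and white Gaussian noise stands in for the pink or red noise used in the figures.

```python
import numpy as np


def exponential_flare(t, t_peak, amplitude, tau_rise, tau_decay):
    """Exponential rise up to t_peak, then exponential decay.
    Clipping the exponents keeps each factor at most 1, so nothing overflows."""
    t = np.asarray(t, dtype=float)
    rise = np.exp(np.minimum(t - t_peak, 0.0) / tau_rise)     # 1 after the peak
    decay = np.exp(-np.maximum(t - t_peak, 0.0) / tau_decay)  # 1 before the peak
    return amplitude * rise * decay


t = np.arange(500, dtype=float)
flare = exponential_flare(t, t_peak=400.0, amplitude=5.0, tau_rise=5.0, tau_decay=20.0)
rng = np.random.default_rng(3)
noise = rng.normal(scale=0.5, size=t.size)   # white-noise stand-in for pink/red noise
lightcurve = noise + flare
```

Using separate rise and decay timescales lets the same helper produce both symmetric and fast-rise, slow-decay transients.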

Figure 15.

Same as Figure 14, but with red noise. The LSTM with batch size 30 performs rather poorly, showing the effect of the noise.
